Fault Detection and Diagnosis in Air-Handling Unit (AHU) Using Improved Hybrid 1D Convolutional Neural Network

Abstract

1. Introduction

- A 13-layer CNN-LSTM model is proposed for AHU FDD in HVAC systems; it uses batch normalization for faster training and learns features autonomously;

- Evaluate the CNN-LSTM model against machine-learning models (GB, RF) and neural-network models (DRNN, DNN);

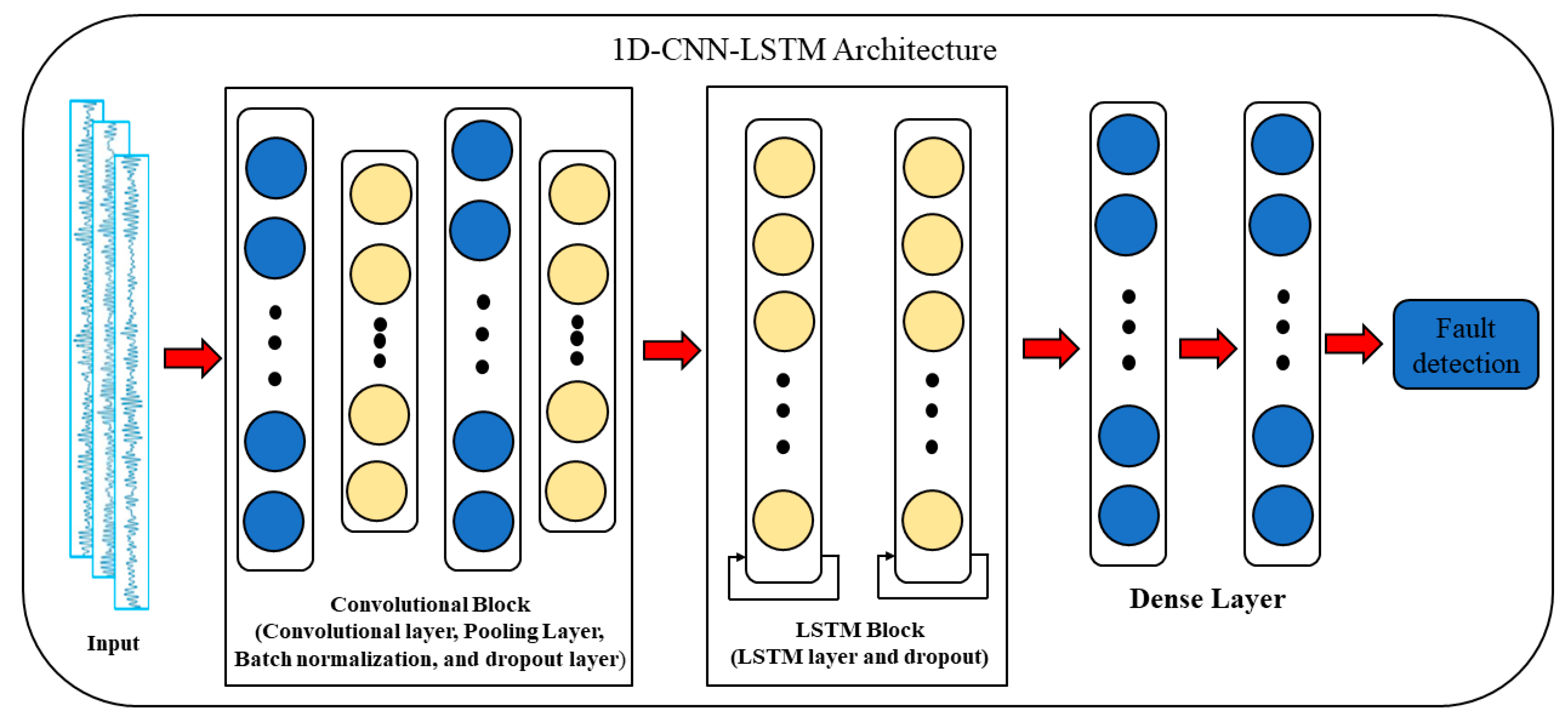

- Create a hybrid model (1D-CNN-LSTM) that leverages the best features of the CNN and LSTM methods;

- The hybrid model offers a comprehensive approach to FDD learning;

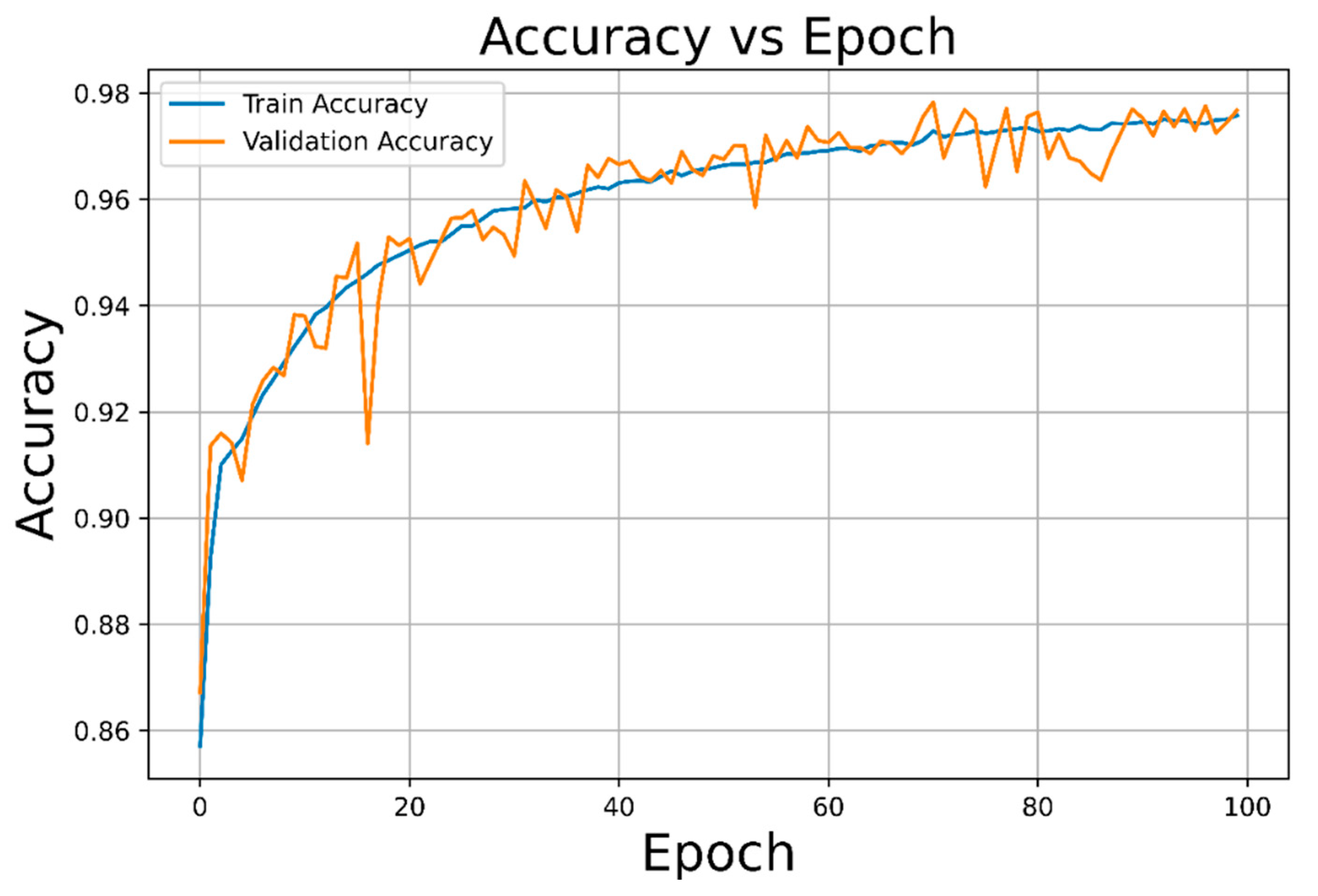

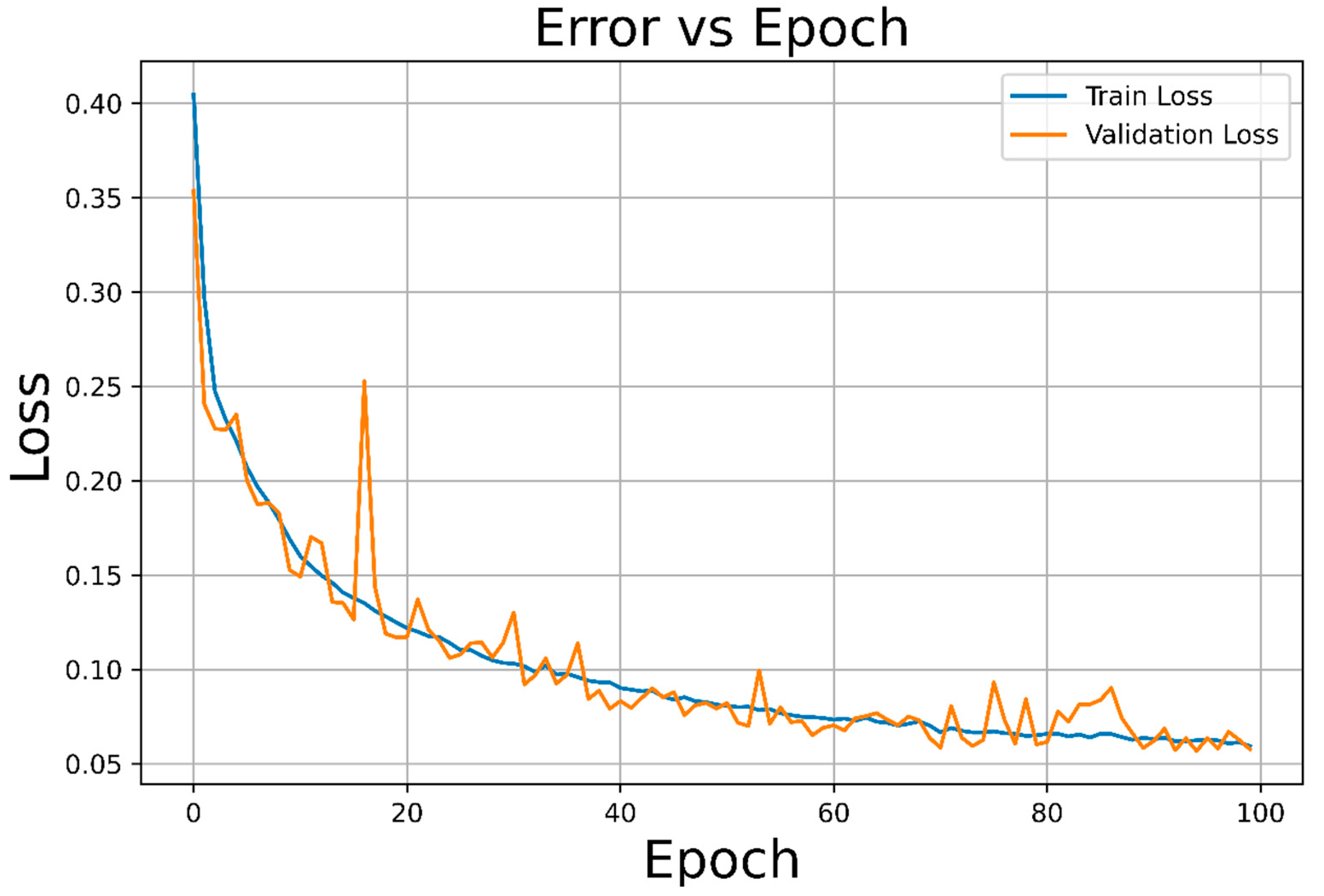

- According to the experimental results, the proposed model outperforms the baseline models in recall, accuracy, and precision.

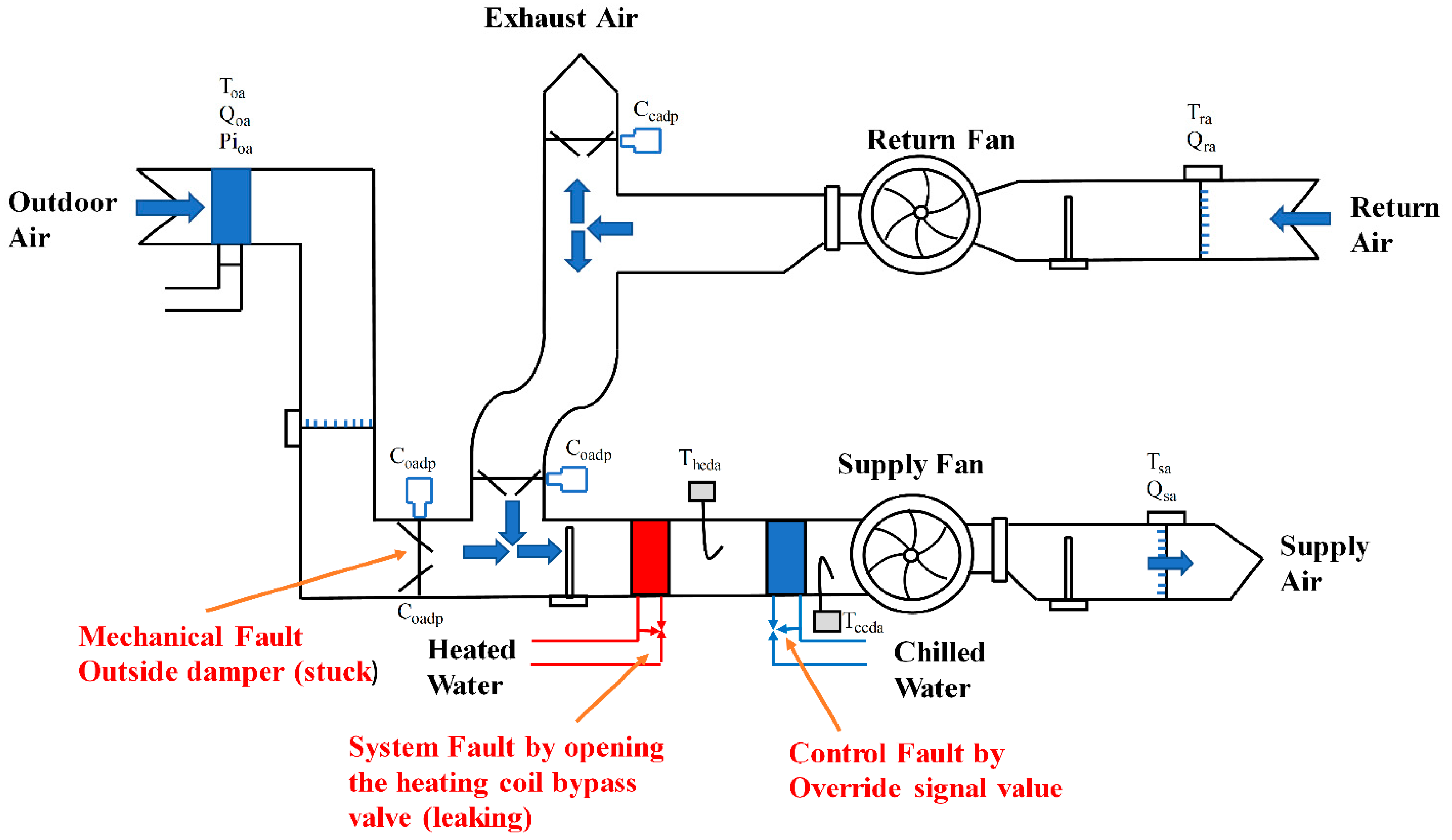

2. Data-Driven Method

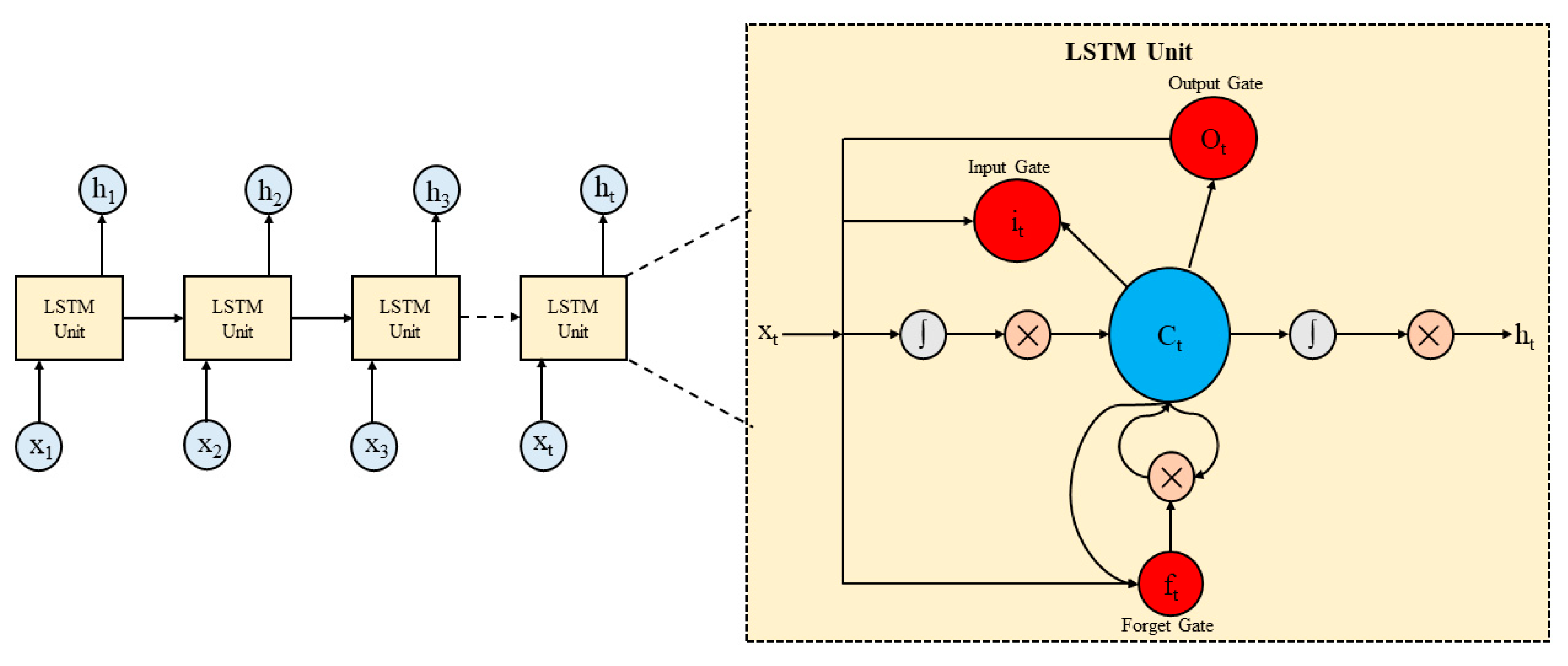

2.1. Long Short-Term Memory (LSTM)

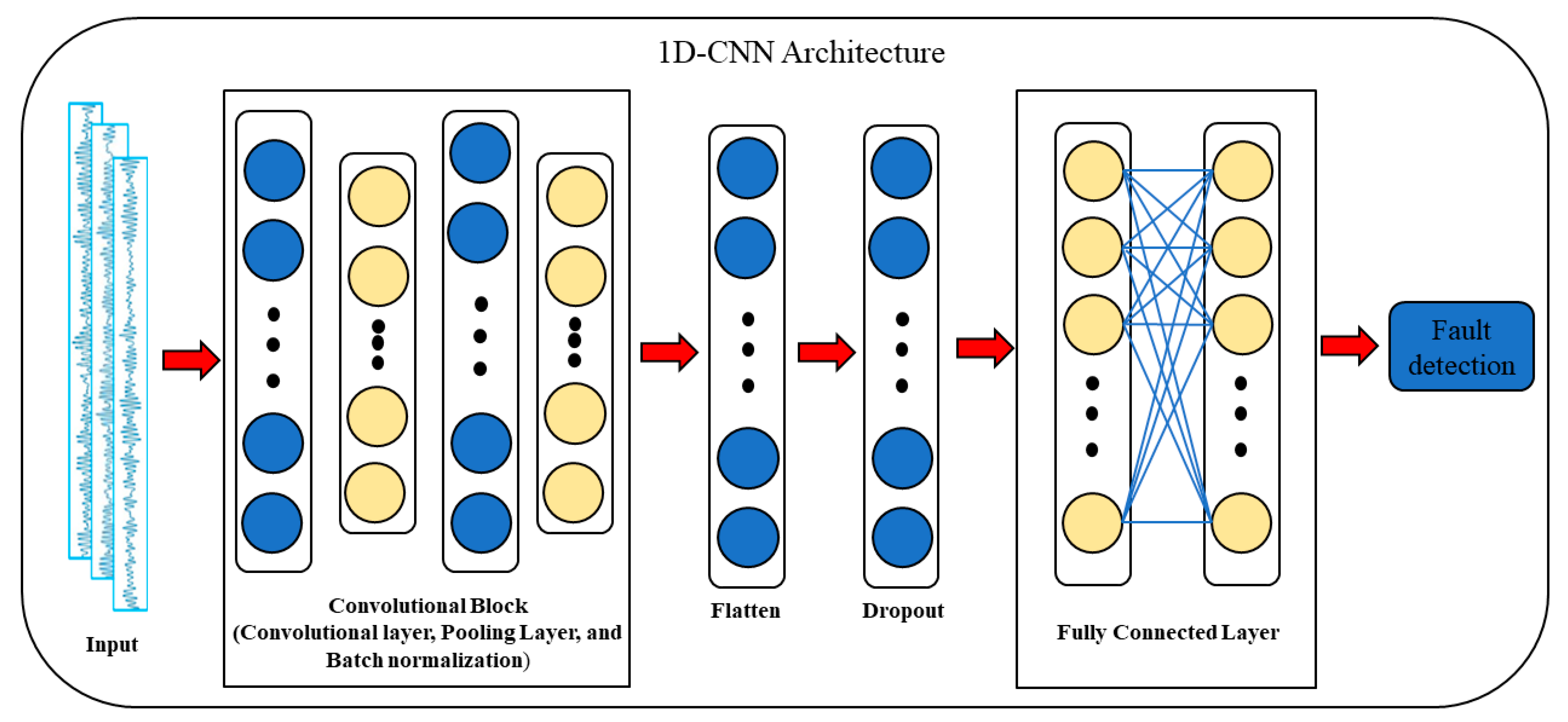

2.2. Convolutional Neural Network (CNN)

- (1) Convolutional Layer

- (2) Pooling Layer

- (3) Fully Connected Layer
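The three layer types listed above can be illustrated with a minimal pure-Python sketch on a toy one-dimensional signal. The function names, the toy signal, the kernel, and the weights are all hypothetical choices made for illustration; they are not the paper's implementation or its trained parameters.

```python
def conv1d_valid(signal, kernel, bias=0.0):
    """Slide the kernel over the signal without padding (cross-correlation)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in values]


def max_pool1d(values, pool_size=2):
    """Keep the maximum of each non-overlapping window; a trailing partial window is dropped."""
    return [
        max(values[i:i + pool_size])
        for i in range(0, len(values) - pool_size + 1, pool_size)
    ]


def dense(values, weights, biases):
    """Fully connected layer: one weighted sum of all inputs per output unit."""
    return [
        sum(w * v for w, v in zip(row, values)) + b
        for row, b in zip(weights, biases)
    ]


# Toy sensor trace (hypothetical values).
signal = [1.0, 2.0, 4.0, 7.0, 11.0, 16.0, 22.0]

# (1) Convolutional layer: this kernel responds to the local slope.
features = relu(conv1d_valid(signal, kernel=[-1.0, 0.0, 1.0]))  # [3.0, 5.0, 7.0, 9.0, 11.0]

# (2) Pooling layer: halves the sequence length.
pooled = max_pool1d(features, pool_size=2)  # [5.0, 9.0]

# (3) Fully connected layer: mixes all pooled features into one output.
score = dense(pooled, weights=[[0.5, 0.5]], biases=[0.0])  # [7.0]
```

Note how a length-7 input with a length-3 kernel yields 5 features, and pooling with size 2 yields 2 values; the same length arithmetic applies to any unpadded 1D convolution followed by non-overlapping pooling.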

2.3. The Proposed Model

3. Data Analysis and Results

4. Comparison with the State-of-the-Art Methods

4.1. Robustness Analysis

- Gaussian noise with μ = 0, σ = 0.05;

- Random missing values replaced with the column mean (up to 10%).
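The two perturbations above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the features are already scaled so that σ = 0.05 is meaningful, that missing cells are chosen uniformly at random, and that the column mean is computed from the remaining observed values. The function names `add_gaussian_noise` and `mask_and_impute` are hypothetical.

```python
import random
from statistics import fmean


def add_gaussian_noise(rows, mu=0.0, sigma=0.05, seed=0):
    """Return a copy of `rows` with N(mu, sigma^2) noise added to every value."""
    rng = random.Random(seed)
    return [[x + rng.gauss(mu, sigma) for x in row] for row in rows]


def mask_and_impute(rows, missing_rate=0.10, seed=0):
    """Mark a fixed fraction of cells as missing, then fill each with its column mean.

    Assumption: the mean is taken over the cells of that column that were
    NOT marked missing.
    """
    rng = random.Random(seed)
    n_rows, n_cols = len(rows), len(rows[0])
    n_missing = int(missing_rate * n_rows * n_cols)
    missing = set(rng.sample(range(n_rows * n_cols), n_missing))

    out = [list(row) for row in rows]
    for j in range(n_cols):
        observed = [rows[i][j] for i in range(n_rows) if i * n_cols + j not in missing]
        # Fallback for the unlikely case that a whole column was masked.
        col_mean = fmean(observed) if observed else 0.0
        for i in range(n_rows):
            if i * n_cols + j in missing:
                out[i][j] = col_mean
    return out


# Toy feature matrix: 50 samples x 4 features (hypothetical values).
data = [[float(i + j) for j in range(4)] for i in range(50)]
noisy = add_gaussian_noise(data, sigma=0.05)
imputed = mask_and_impute(data, missing_rate=0.10)
```

Sampling an exact number of cells keeps the missing fraction at or below the stated 10% cap, which a per-cell coin flip would not guarantee.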

4.2. Shortcomings and Limitations

- Interpretability: While the model performs well, it lacks transparency. Future work may explore explainable AI methods (e.g., SHAP, LIME).

- Computation Cost: The hybrid architecture has a higher training time and memory requirements compared to simpler ML models.

- Limited Fault Diversity: The performance is strong on the existing dataset, but may not generalize to systems with different AHU configurations without retraining.

- Data Dependency: The model requires large, labeled datasets. Rare fault types may be underrepresented, which lowers the recall for minority classes.

- Because many air quality data parameters can affect the FDD accuracy and efficiency, data-driven approaches are needed to quickly identify the key components and build single and hybrid models for comparison. A single model contains just one DL model, whereas a hybrid model combines the best aspects of both kinds. The results show that the hybrid model detects faults in the HVAC system more accurately than the single models. Thus, the hybrid model should be used for multi-feature data.

- Almost every model has advantages and disadvantages. In the CNN-LSTM hybrid model, the CNN extracts the relevant features from the existing AHU system data, and the LSTM produces the FDD output. The findings indicate that the proposed model halves the training time while increasing the FDD accuracy.

5. Conclusions

- The hybrid ML architecture proposed herein combines the benefits of LSTM and 1D CNN algorithms to detect the faults in an HVAC system, thereby enhancing the system efficacy.

- The proposed model offers rapid convergence, autonomous feature learning, and enhanced learning capabilities, in addition to being more computationally efficient than the other models.

- The dropout layers in the model facilitate feature extraction and noise reduction, providing crucial information to the LSTM layers.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Santamouris, M.; Vasilakopoulou, K. Present and future energy consumption of buildings: Challenges and opportunities towards decarbonisation. e-Prime—Adv. Electr. Eng. Electron. Energy 2021, 1, 100002.

- Che, W.W.; Tso, C.Y.; Sun, L.; Ip, D.Y.; Lee, H.; Chao, C.Y.; Lau, A.K. Energy consumption, indoor thermal comfort and air quality in a commercial office with retrofitted heat, ventilation and air conditioning (HVAC) system. Energy Build. 2019, 201, 202–215.

- Nižetić, S.; Djilali, N.; Papadopoulos, A.; Rodrigues, J.J. Smart technologies for promotion of energy efficiency, utilization of sustainable resources and waste management. J. Clean. Prod. 2019, 231, 565–591.

- Chen, J.; Zhang, L.; Li, Y.; Shi, Y.; Gao, X.; Hu, Y. A review of computing-based automated fault detection and diagnosis of heating, ventilation and air conditioning systems. Renew. Sustain. Energy Rev. 2022, 161, 112395.

- Shi, Z.; O’Brien, W. Development and implementation of automated fault detection and diagnostics for building systems: A review. Autom. Constr. 2019, 104, 215–229.

- Li, W.; Li, H.; Gu, S.; Chen, T. Process fault diagnosis with model-and knowledge-based approaches: Advances and opportunities. Control Eng. Pract. 2020, 105, 104637.

- Zhou, X.; Du, H.; Xue, S.; Ma, Z. Recent advances in data mining and machine learning for enhanced building energy management. Energy 2024, 307, 132636.

- Zhao, Y.; Li, T.; Zhang, X.; Zhang, C. Artificial intelligence-based fault detection and diagnosis methods for building energy systems: Advantages, challenges and the future. Renew. Sustain. Energy Rev. 2019, 109, 85–101.

- Trizoglou, P.; Liu, X.; Lin, Z. Fault detection by an ensemble framework of Extreme Gradient Boosting (XGBoost) in the operation of offshore wind turbines. Renew. Energy 2021, 179, 945–962.

- Zhang, L.; Wen, J.; Li, Y.; Chen, J.; Ye, Y.; Fu, Y.; Livingood, W. A review of machine learning in building load prediction. Appl. Energy 2021, 285, 116452.

- Martinez-Viol, V.; Urbano, E.M.; Kampouropoulos, K.; Delgado-Prieto, M.; Romeral, L. Support vector machine based novelty detection and FDD framework applied to building AHU systems. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1749–1754.

- Mirnaghi, M.S.; Haghighat, F. Fault detection and diagnosis of large-scale HVAC systems in buildings using data-driven methods: A comprehensive review. Energy Build. 2020, 229, 110492.

- Chen, Z.; O’Neill, Z.; Wen, J.; Pradhan, O.; Yang, T.; Lu, X.; Lin, G.; Miyata, S.; Lee, S.; Shen, C.; et al. A review of data-driven fault detection and diagnostics for building HVAC systems. Appl. Energy 2023, 339, 121030.

- Yun, W.-S.; Hong, W.-H.; Seo, H. A data-driven fault detection and diagnosis scheme for air handling units in building HVAC systems considering undefined states. J. Build. Eng. 2021, 35, 102111.

- Van Every, P.M.; Rodriguez, M.; Jones, C.B.; Mammoli, A.A.; Martínez-Ramón, M. Advanced detection of HVAC faults using unsupervised SVM novelty detection and Gaussian process models. Energy Build. 2017, 149, 216–224.

- Bezyan, Y.; Nasiri, F.; Nik-Bakht, M. A Feature Selection Approach for Unsupervised Steady-State Chiller Fault Detection. In Multiphysics and Multiscale Building Physics, Proceedings of the 9th International Building Physics Conference (IBPC 2024), Toronto, ON, Canada, 25–27 July 2024; International Association of Building Physics; Springer: Singapore, 2025; pp. 148–153.

- Yan, K.; Chong, A.; Mo, Y. Generative adversarial network for fault detection diagnosis of chillers. Build. Environ. 2020, 172, 106698.

- Pule, M.; Matsebe, O.; Samikannu, R. Application of PCA and SVM in fault detection and diagnosis of bearings with varying speed. Math. Probl. Eng. 2022, 2022, 5266054.

- Li, Y.; O’Neill, Z. A critical review of fault modeling of HVAC systems in buildings. Build. Simul. 2018, 11, 953–975.

- Yang, X.; Chen, J.; Gu, X.; He, R.; Wang, J. Sensitivity analysis of scalable data on three PCA related fault detection methods considering data window and thermal load matching strategies. Expert Syst. Appl. 2023, 234, 121024.

- Zebari, R.; Abdulazeez, A.; Zeebaree, D.; Zebari, D.; Saeed, J. A comprehensive review of dimensionality reduction techniques for feature selection and feature extraction. J. Appl. Sci. Technol. Trends 2020, 1, 56–70.

- Abid, A.; Khan, M.T.; Iqbal, J. A review on fault detection and diagnosis techniques: Basics and beyond. Artif. Intell. Rev. 2021, 54, 3639–3664.

- Taheri, S.; Ahmadi, A.; Mohammadi-Ivatloo, B.; Asadi, S. Fault detection diagnostic for HVAC systems via deep learning algorithms. Energy Build. 2021, 250, 111275.

- Masdoua, Y.; Boukhnifer, M.; Adjallah, K.H. Fault detection and diagnosis in AHU system with data driven approaches. In Proceedings of the 2022 8th International Conference on Control, Decision and Information Technologies (CoDIT), Istanbul, Turkey, 17–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1375–1380.

- Lee, K.-P.; Wu, B.-H.; Peng, S.-L. Deep-learning-based fault detection and diagnosis of air-handling units. Build. Environ. 2019, 157, 24–33.

- Gao, Z.-W.; Xiang, Y.; Lu, S.; Liu, Y. An optimized updating adaptive federated learning for pumping units collaborative diagnosis with label heterogeneity and communication redundancy. Eng. Appl. Artif. Intell. 2025, 152, 110724.

- Mehta, M.; Chen, S.; Tang, H.; Shao, C. A federated learning approach to mixed fault diagnosis in rotating machinery. J. Manuf. Syst. 2023, 68, 687–694.

- Prince; Hati, A.S. Convolutional neural network-long short term memory optimization for accurate prediction of airflow in a ventilation system. Expert Syst. Appl. 2022, 195, 116618.

- Furia, C.A.; Mandrioli, D.; Morzenti, A.; Rossi, M. Modeling Time in Computing; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

- Liu, X.; Tang, Z.; Yang, B. Predicting Network Attacks with CNN by Constructing Images from NetFlow Data. In Proceedings of the 2019 IEEE 5th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing, (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), Washington, DC, USA, 27–29 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 61–66.

- Yalçın, M.E.; Ayhan, T.; Yeniçeri, R. Artificial Neural Network Models. In Reconfigurable Cellular Neural Networks and Their Applications; Springer: Cham, Switzerland, 2020; pp. 5–22.

- Lee, K.B.; Cheon, S.; Kim, C.O. A convolutional neural network for fault classification and diagnosis in semiconductor manufacturing processes. IEEE Trans. Semicond. Manuf. 2017, 30, 135–142.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; NIPS: Kolkata, India, 2012; pp. 1097–1105.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Wu, H.; Gu, X. Towards dropout training for convolutional neural networks. Neural Netw. 2015, 71, 1–10.

- Cuéllar, M.P.; Delgado, M.; Pegalajar, M. An application of non-linear programming to train recurrent neural networks in time series prediction problems. In Enterprise Information Systems VII, Proceedings of the Seventh International Conference on Enterprise Information Systems (ICEIS 2005), Miami, FL, USA, 24–28 May 2005; Springer: Berlin/Heidelberg, Germany, 2007; pp. 95–102.

- Li, S. Development and validation of a dynamic air handling unit model, Part I. ASHRAE Trans. 2010, 116, 57–73.

- Cassel, M.; Lima, F. Evaluating one-hot encoding finite state machines for SEU reliability in SRAM-based FPGAs. In Proceedings of the 12th IEEE International On-Line Testing Symposium (IOLTS’06), Lake Como, Italy, 10–12 July 2006; IEEE: Piscataway, NJ, USA, 2006; p. 6.

- Granderson, J.; Lin, G.; Harding, A.; Im, P.; Chen, Y. Building fault detection data to aid diagnostic algorithm creation and performance testing. Sci. Data 2020, 7, 65.

- Ahmadi, A.; Nabipour, M.; Mohammadi-Ivatloo, B.; Amani, A.M.; Rho, S.; Piran, M.J. Long-term wind power forecasting using tree-based learning algorithms. IEEE Access 2020, 8, 151511–151522.

| Layer | Output Shape | Param # |
|---|---|---|
| conv1d (Conv1D) | (None, 14, 64) | 256 |
| max_pooling1d (MaxPooling1D) | (None, 7, 64) | 0 |
| conv1d_1 (Conv1D) | (None, 5, 128) | 24,704 |
| max_pooling1d_1 (MaxPooling1D) | (None, 2, 128) | 0 |
| lstm (LSTM) | (None, 50) | 35,800 |
| flatten (Flatten) | (None, 50) | 0 |
| dense (Dense) | (None, 100) | 5,100 |
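The output shapes and parameter counts in the layer summary above can be checked with the standard counting formulas for Conv1D, LSTM, and Dense layers. The sketch below assumes an input of 16 time steps with 1 channel, kernel size 3, no padding, and pool size 2. These hyperparameters are inferred from the table, not stated in it; a kernel size of 1 on a 14-step, 3-channel input would produce the same first row. The helper names are hypothetical.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Weights (kernel_size x in_channels x filters) plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    """One weight per input-output pair plus one bias per output unit."""
    return input_dim * units + units


def layer_summary(steps=16, channels=1, kernel=3, pool=2):
    """Rebuild (name, output shape, parameter count) rows under the assumed settings."""
    rows = []
    steps = steps - kernel + 1            # unpadded convolution shortens the sequence
    rows.append(("conv1d", (steps, 64), conv1d_params(kernel, channels, 64)))
    steps //= pool                        # non-overlapping max pooling
    rows.append(("max_pooling1d", (steps, 64), 0))
    steps = steps - kernel + 1
    rows.append(("conv1d_1", (steps, 128), conv1d_params(kernel, 64, 128)))
    steps //= pool
    rows.append(("max_pooling1d_1", (steps, 128), 0))
    rows.append(("lstm", (50,), lstm_params(128, 50)))
    rows.append(("flatten", (50,), 0))
    rows.append(("dense", (100,), dense_params(50, 100)))
    return rows


for name, shape, params in layer_summary():
    print(f"{name:<16} {str(shape):<10} {params:>7,}")
```

Under these assumptions the computed counts (256, 24,704, 35,800, and 5,100) agree with the table, which suggests both convolutions use a kernel size of 3 and the LSTM has 50 units fed by 128 features per step.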

| Precision | Recall | F1-Score | Accuracy |
|---|---|---|---|
| 0.9844 | 0.98857 | 0.98648 | 0.97 |
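As a quick consistency check, the F1-score in the table above follows from the reported precision and recall through the usual harmonic-mean definition. The sketch below is only that arithmetic; it assumes the table reports a single aggregated precision and recall, and the function name is hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1 = f1_score(0.9844, 0.98857)
print(round(f1, 5))  # 0.98648
```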

| Model | Precision | Recall | Accuracy |
|---|---|---|---|
| Gradient Boost [41] | 0.79 | 0.81 | 0.79 |
| Random forests [24] | 0.81 | 0.89 | 0.83 |
| DRNN [23] | 0.89 | 0.92 | 0.91 |
| DNN [25] | 0.79 | 0.95 | 0.95 |
| 1D-CNN | 0.874 | 0.865 | 0.892 |
| Proposed model | 0.9844 | 0.98857 | 0.97 |

| Model | Clean Data (%) | Gaussian Noise (%) | Missing Values (%) |
|---|---|---|---|
| Proposed Model | 97 | 96.72 | 91.3 |
| Baseline 1D-CNN | 89.2 | 83.7 | 81.6 |
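The robustness figures above are easier to compare as drops relative to the clean-data result. The sketch below computes those drops in percentage points, treating the proposed model's clean-data entry as 97% so that all values share one scale. The dictionary layout and function name are hypothetical.

```python
# Accuracy in percent, taken from the robustness table.
results = {
    "Proposed Model": {"clean": 97.0, "gaussian_noise": 96.72, "missing_values": 91.3},
    "Baseline 1D-CNN": {"clean": 89.2, "gaussian_noise": 83.7, "missing_values": 81.6},
}


def accuracy_drops(scores):
    """Return the drop from clean-data accuracy, in percentage points, per perturbation."""
    return {
        name: round(scores["clean"] - value, 2)
        for name, value in scores.items()
        if name != "clean"
    }


drops = {model: accuracy_drops(scores) for model, scores in results.items()}
for model, d in drops.items():
    print(model, d)
```

On these numbers the proposed model loses about 0.28 points under Gaussian noise and 5.7 points under mean-imputed missing values, against 5.5 and 7.6 points for the baseline 1D-CNN.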

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Prince; Yoon, B.; Kumar, P. Fault Detection and Diagnosis in Air-Handling Unit (AHU) Using Improved Hybrid 1D Convolutional Neural Network. Systems 2025, 13, 330. https://doi.org/10.3390/systems13050330
