Abstract

Scientific, accurate, and interpretable carbon price forecasts provide critical support for addressing climate change, achieving low-carbon goals, and informing policy-making and corporate decision-making in energy and environmental markets. However, existing studies mainly focus on deterministic forecasting and show clear limitations in data feature diversity, model interpretability, and uncertainty quantification. To fill these gaps, this study constructs an interpretable hybrid system for carbon market price prediction by combining feature screening algorithms, deep learning models, and interpretable explanatory analysis methods. Specifically, this study first uses the Boruta algorithm to screen important variables from twenty-one multi-source structured and unstructured influencing factors across five dimensions that affect carbon prices. Next, this study proposes a hybrid architecture of a bidirectional temporal convolutional network and the Informer model, in which the bidirectional temporal convolutional network extracts local spatio-temporal dependent features, while Informer captures the global features of long sequences through a connection mechanism, thus realizing staged feature extraction. Then, to improve the interpretability of the model and quantify uncertainty, this study introduces Shapley additive explanations to analyze feature contributions in the prediction process and uses the Monte Carlo dropout method to achieve interval prediction. Finally, the empirical results for China’s Guangdong and Shanghai carbon markets show that the proposed model significantly outperforms the benchmark models and that the coverage probability of the obtained prediction intervals exceeds the nominal confidence level. The Shapley additive explanation analysis reveals regionally heterogeneous drivers. In addition, the proposed model is further validated on the European carbon market and the U.S. natural gas market, where it also demonstrates excellent prediction performance, indicating good robustness and applicability.

1. Introduction

Carbon emissions from fossil fuels contribute to global warming [], which poses substantial challenges to environmental conservation, economic stability, and social equity. To address this issue, the European Union launched the world’s first large-scale carbon market, the European Union Emissions Trading System (EU-ETS), in 2005, employing a cap-and-trade mechanism []. Carbon trading has been increasingly adopted by various countries and regions as an effective mechanism to control carbon emissions. As a responsible major economy, China has been expanding its carbon emissions trading pilot programs since 2011 in Beijing, Shanghai, Tianjin, Chongqing, Shenzhen, and provinces including Hubei, Guangdong, and Fujian [,]. The carbon price serves as the core economic tool of carbon markets, incentivizing corporate emissions reductions. Therefore, accurate carbon price forecasting is crucial for governments to formulate evidence-based policies and for enterprises to participate effectively. However, the nonlinearity and complexity of carbon markets pose significant forecasting challenges [].

Currently, carbon price prediction research primarily covers two aspects: the analysis of influencing factors and the improvement of prediction models. Before the advent of deep learning, econometric models were commonly employed for univariate carbon price prediction based solely on historical data. However, deeper analysis of the data characteristics revealed that carbon prices exhibit nonlinear and non-stationary properties [], making it difficult to achieve accurate predictions using historical data alone. As the superiority of machine learning (ML) techniques has been demonstrated across various domains, artificial intelligence (AI)-based models leveraging ML methods have proven effective in capturing nonlinear features []. Consequently, an increasing number of scholars have integrated ML approaches with external factors to enhance carbon price prediction accuracy [].

Regarding influencing factor analysis, existing carbon price forecasting studies primarily focus on structural determinants, including macroeconomic indicators, energy price fluctuations, and international carbon market linkages, whereas the systematic incorporation and analysis of unstructured data sources remains a notable research gap [,]. On the one hand, numerous scholars emphasize the substantial influence of energy prices on carbon price fluctuations [,]. Wang and Guo (2018) [] identified that oil and natural gas prices exert significant influences on carbon price dynamics. Wang et al. (2023) [] further dissected the transmission mechanisms through which energy prices influence carbon prices. On the other hand, in the macroeconomic context, stock prices have become a crucial factor influencing carbon prices []. Shi et al. (2023) [] found that macroeconomic fluctuations significantly impact carbon prices in their study of China’s carbon market. Song and Liu (2024) [] employed a vector autoregression model to study the Chinese market, revealing that stock prices significantly influence carbon prices. In addition, monetary policy tools such as interest rates and government bonds exert a substantial influence on carbon market prices [,]. Carbon price fluctuations may also be influenced by environmental factors [,]. Zhou et al. (2024) [] found that environmental variables such as air quality not only influence short-term fluctuations in carbon prices but also participate in the long-term price formation of carbon markets. With the advancement of big data technologies, internet search behaviors have increasingly become a crucial component of online information, and unstructured data are playing an ever more significant role in predictive analytics. Zhao et al. (2025) [] found that combining Baidu search index data with energy prices and economic factors can significantly improve the accuracy of carbon price forecasting.
Zhou and Du (2025) [] proposed a multivariate event-enhanced pre-trained large language model (LLM) that integrates unstructured text data through sentiment analysis, demonstrating an exceptional predictive accuracy and robustness in the Hubei and Shanghai carbon markets.

As for the carbon price forecasting models, they can be broadly categorized into three approaches: traditional statistical models, AI models, and hybrid models []. Traditional statistical models have been widely employed in early carbon price prediction studies [,]. However, these models often rely on linear assumptions, making it challenging to handle the nonlinear and highly volatile characteristics of carbon prices. In AI models, long short-term memory (LSTM) [], convolutional neural networks (CNNs) [], and temporal convolutional networks (TCNs) [] have gained increasing attention in recent years. These models can autonomously learn complex carbon price patterns and effectively capture long-term dependencies in time series data. Nevertheless, they also face challenges including overfitting, insufficient interpretability, and parameter randomness []. To address these challenges, researchers have developed hybrid prediction models by integrating intelligent algorithms []. Duan et al. (2024) [] employed the whale optimization algorithm (WOA) to optimize the parameters of the extreme gradient boosting (XGBoost) model. Building on this, researchers have explored integrating feature selection methods and decomposition techniques to further improve prediction accuracy []. Liu et al. (2024) [] combined multi-stage decomposition with interval multilayer perceptron models enhanced by a sparrow search algorithm to obtain final carbon price predictions. Although the aforementioned models have achieved certain research progress, most of them are based on a single prediction model. Given that carbon prices are influenced by multiple factors and exhibit significant variations across different markets, it is difficult for a single prediction model to fit all carbon price components []. 
Consequently, hybrid models comprising multiple prediction techniques can fully integrate the advantages of each constituent model through strategic combination, leveraging their respective strengths in handling different data characteristics to achieve more robust and adaptable prediction outcomes []. Cui and Niu (2024) [] proposed a hybrid prediction framework integrating an attention–temporal convolutional network–bidirectional gated recurrent unit and LSTM for a model ensemble, with the entropy method assigning weights to their predictions, demonstrating a superior prediction performance. Zhao et al. (2025) [] developed an adaptive multi-factor integrated hybrid model based on periodic reconstruction and random forest techniques, demonstrating an outstanding predictive performance in empirical studies. Moreover, for complex hybrid models, the emergence of interpretability enhances transparency by visualizing decision-making principles, aiding users in understanding model logic and enabling stakeholders to grasp the key factors driving predictions, which is now widely applied in fields such as crude oil price and electricity load forecasting [,,].

Inspired by the preceding discussion, this study combines feature screening algorithms, deep learning models, and interpretable explanatory analytics to construct an interpretable hybrid system for carbon market price prediction. First, multidimensional data sources, including macroeconomic indicators and the Baidu Index, are considered. The Boruta feature selection algorithm is then employed to construct a feature subspace, ensuring the inclusion of the most relevant predictive variables. Next, this study proposes a hybrid architecture of BiTCN and the Informer model, where BiTCN is used to extract local spatio-temporal dependent features, while the Informer captures the global features of the long sequences through the connectivity mechanism, which enables phased feature extraction and improves the prediction accuracy. Then, in order to improve the interpretability of the model and quantify the uncertainty, this study introduces the Shapley additive explanations (SHAP) to analyze the feature contributions in the prediction process and the Monte Carlo dropout method to achieve interval prediction, which provides a more interpretable prediction framework. Moreover, the contributions of this study are summarized below. (1) Previous studies have largely focused solely on structured data, neglecting unstructured information such as market sentiment. This study integrates twenty-one influential factors, including environmental variables such as weather and air quality, as well as macroeconomic indicators such as government bond yields and stock prices. Additionally, it also incorporates non-structural data, such as the Baidu Index, which can reflect real-time market sentiment and public attention, thereby enhancing the accuracy and timeliness of the predictions. (2) Traditional methods often employ linear ensembles, which struggle to uncover synergies between different models and provide only point predictions lacking interpretability analysis. 
This paper proposes a hybrid model that combines the strengths of multiple deep learning models. It preserves the original features without complex data decomposition, significantly improving prediction accuracy and enabling interval predictions. Simultaneously, it achieves interpretable predictive analysis using the SHAP method. (3) Previous studies lacked cross-regional, multi-scenario experimental validation. This paper conducts comparative experiments across multiple carbon markets, including Shanghai, Guangdong, and the EU. The results demonstrate the model’s significant predictive advantages and robustness across diverse market environments.

2. Methods

2.1. Framework of the Proposed Hybrid System

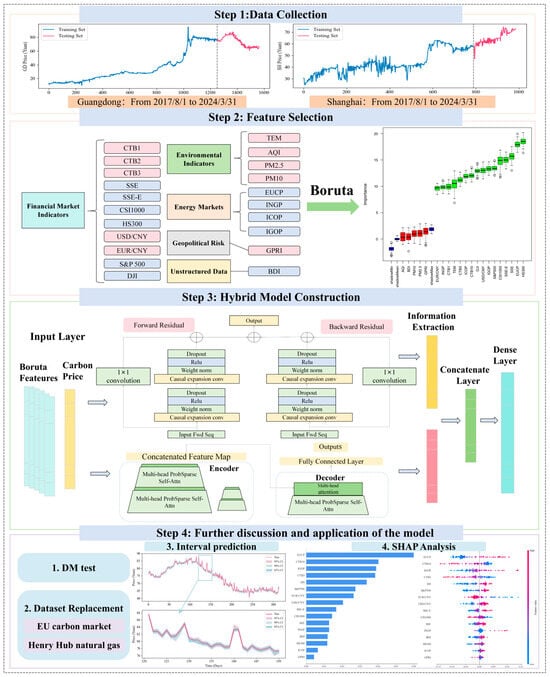

This study builds a hybrid system for carbon price prediction tasks across multiple markets. As presented in Figure 1, it consists of four stages: data collection, feature selection, hybrid model construction, and further discussion and application of the model.

Figure 1.

The detailed prediction framework of this study.

- (1) Data collection: Daily carbon price data from the Guangdong and Shanghai carbon emissions trading markets are selected for empirical analysis, covering the period from 1 August 2017 to 31 March 2024.

- (2) Feature selection: The Boruta method is first applied to reduce the dimensionality of the 21 commonly used carbon price influencing factors across five dimensions, which encompass both structured and unstructured data. Subsequently, Experiment I is conducted to validate the effectiveness of the Boruta method in enhancing prediction accuracy.

- (3) Hybrid model construction: This step first constructs common baseline models (LSTM, GRU, TCN, and Transformer) and their enhanced variants (BiLSTM, BiGRU, BiTCN, and Informer). Through Experiment II, the two best-performing models, i.e., Informer and BiTCN, are selected via comparative analysis. Subsequently, the BiTCN and Informer models are combined to build a hybrid model, namely BiTCN-Informer. Finally, Experiment III is conducted to validate the predictive performance of the proposed hybrid model through comparative analysis.

- (4) Further discussion and application of the model: Statistical hypothesis testing and experiments with additional datasets are employed to further validate the predictive efficacy of the proposed model. In addition, the SHAP method is introduced to elucidate the predictive mechanisms of the hybrid model, while Monte Carlo dropout is used to quantify uncertainty and enable interval prediction. Together, these analyses demonstrate the practical value of the hybrid model, whose insights can support corporate and governmental decision-making.

2.2. Boruta Algorithm

Boruta is a wrapper-based feature selection algorithm that builds upon the random forest model []. It employs the Z-score as its benchmark criterion, aiming to identify all features that contribute significantly to the response variable. The core idea of Boruta is to introduce randomness into the system and assess the importance of each feature by comparing it with its randomly permuted counterpart, known as “shadow features.” This approach effectively mitigates misleading effects caused by correlations and stochastic fluctuations, ensuring a more reliable selection of influential factors. The Boruta algorithm follows an iterative process that can be summarized as follows:

- (1) Shadow feature construction: Duplicate the original features and randomly shuffle their values to create “shadow features”, which serve as a baseline for comparison.

- (2) Random forest-based voting: Train a random forest model on the augmented dataset and compute the feature importance scores. Features that consistently outperform the best-scoring shadow feature are retained, while those with lower importance are removed.

- (3) Convergence and feature selection: The process repeats until every feature is classified as “important” or “unimportant”, or until the iteration limit is reached, in which case the undecided features remain “tentatively important”.
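The shadow-feature voting loop described above can be illustrated with a minimal sketch. To stay dependency-light, it swaps in the absolute Pearson correlation as a stand-in importance score in place of random forest importance, and it uses a simple hit-ratio threshold instead of a statistical test, so it is not the Boruta algorithm itself. The function name `shadow_vote` and the parameters `n_iter` and `hit_ratio` are hypothetical.

```python
import numpy as np

def shadow_vote(X, y, n_iter=50, hit_ratio=0.9, seed=0):
    """Keep features that beat the best shadow feature in most iterations.

    Assumption: importance is |Pearson correlation| with y, a stand-in for
    the random forest importance used by Boruta.
    """
    rng = np.random.default_rng(seed)
    n, p = X.shape
    hits = np.zeros(p, dtype=int)

    def importance(A):
        Ac = A - A.mean(axis=0)
        yc = y - y.mean()
        return np.abs(Ac.T @ yc) / (np.linalg.norm(Ac, axis=0) * np.linalg.norm(yc))

    for _ in range(n_iter):
        # Shadow features: each original column shuffled independently
        shadows = np.column_stack([rng.permutation(X[:, j]) for j in range(p)])
        imp = importance(np.hstack([X, shadows]))
        # A feature scores a "hit" when it beats the best shadow feature
        hits += imp[:p] > imp[p:].max()
    return hits / n_iter >= hit_ratio

# Synthetic example: only the first two columns drive the target
rng = np.random.default_rng(42)
X = rng.normal(size=(300, 5))
y = 2.0 * X[:, 0] - 1.0 * X[:, 1] + 0.1 * rng.normal(size=300)
keep = shadow_vote(X, y)
```

In this toy setting the two informative columns are retained because their importance exceeds that of every shuffled copy in each iteration.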

2.3. The Hybrid BiTCN-Informer Model

This study proposes a hybrid model for carbon price prediction, called BiTCN-Informer, where the Informer serves as the primary model and BiTCN complements the Informer’s long-term forecasting capability by extracting local features.

2.3.1. Bidirectional Temporal Convolutional Network (BiTCN)

The BiTCN is an advanced deep learning architecture proposed in 2023 [], which is designed to enhance long time series prediction. It is structured into three main components: bidirectional temporal blocks, the feature fusion layer, and residual connections.

- (1) Bidirectional temporal blocks

BiTCN retains dilated convolution as its core operation. The backward convolution, an important component of the bidirectional temporal blocks, introduces an additional pathway that enables the network to incorporate both historical dependencies and future covariates. The forward and backward dilated convolutions in BiTCN are formulated as follows:

$$\overrightarrow{F}(t) = \sum_{i=0}^{k-1} \overrightarrow{w}_i \, x_{t - d \cdot i} \tag{1}$$

$$\overleftarrow{F}(t) = \sum_{i=0}^{k-1} \overleftarrow{w}_i \, x_{t + d \cdot i} \tag{2}$$

where $\overrightarrow{F}(t)$ and $\overleftarrow{F}(t)$ represent the forward and backward outputs of BiTCN, $\overrightarrow{w}_i$ and $\overleftarrow{w}_i$ denote the forward and backward elements in the convolution kernel, $k$ is the kernel size, $x$ is the input, and $d$ is the dilation factor. Moreover, Batch Normalization and the ReLU activation function are applied to stabilize training and enhance feature representation.
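To make the two directions concrete, the following is a minimal sketch of a dilated convolution over a single series, assuming zero padding at the boundaries. The function name `dilated_conv` and the `direction` argument are hypothetical and not taken from the BiTCN implementation.

```python
import numpy as np

def dilated_conv(x, w, d, direction="forward"):
    """Dilated 1-D convolution with zero padding.

    forward:  y[t] = sum_i w[i] * x[t - d*i]  (looks into the past)
    backward: y[t] = sum_i w[i] * x[t + d*i]  (looks into the future)
    """
    x = np.asarray(x, dtype=float)
    T = len(x)
    y = np.zeros(T)
    sign = -1 if direction == "forward" else 1
    for t in range(T):
        for i, w_i in enumerate(w):
            j = t + sign * d * i
            if 0 <= j < T:  # indices outside the series count as zero
                y[t] += w_i * x[j]
    return y

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
w = [1.0, 1.0]  # kernel size k = 2
fwd = dilated_conv(x, w, d=2, direction="forward")   # [1, 2, 4, 6, 8]
bwd = dilated_conv(x, w, d=2, direction="backward")  # [4, 6, 8, 4, 5]
```

With a dilation of 2, the forward output at each step adds the value two steps earlier, while the backward output adds the value two steps later.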

- (2) Feature fusion layer

The feature fusion layer aims to integrate forward and backward outputs into a unified representation. This layer ensures that the model effectively leverages information from both temporal perspectives, enhancing feature extraction and improving predictive performance. The fused output can be calculated by Equation (3).

- (3) Residual connections

The main effect of residual connections is to stabilize training and facilitate gradient flow, linking the input directly to the output of each layer. This prevents vanishing gradients in deep networks and helps preserve essential features from earlier layers. The final output is mathematically expressed as follows:

2.3.2. Staged Feature Extraction

Because single models often lack the capability to extract complex features, this study proposes a connection mechanism that links the two models to achieve hierarchical feature extraction. This mechanism employs a linear projection to adapt dimensions between the models. Through the connection mechanism, the local features extracted by BiTCN are augmented with the long-term dependencies identified by Informer, which reduces noise and effectively improves the global modeling ability of Informer [,]. First, as Equation (5) shows, the BiTCN output features are projected to the Informer input dimension via a linear transformation:

$$H_{proj} = H_{BiTCN} W_p + b_p \tag{5}$$

where $H_{BiTCN} \in \mathbb{R}^{B \times T \times D}$ (B: batch size, T: time steps, D: feature dimension) is the BiTCN output and $W_p$ and $b_p$ are learnable parameters for dimension adaptation.

Then, the bidirectional features from BiTCN are fused with global dependencies from Informer:

where LayerNorm stabilizes the gradient flow through residual connections and represents the resulting fusion feature.
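The connection mechanism can be sketched roughly as a linear projection followed by a normalized residual sum. This is only an illustration under stated assumptions: the additive fusion form, the array sizes, and all variable names are hypothetical, and random arrays stand in for the BiTCN and Informer outputs.

```python
import numpy as np

def layer_norm(h, eps=1e-5):
    """Normalize the last axis to zero mean and unit variance (no learned scale or shift)."""
    mean = h.mean(axis=-1, keepdims=True)
    var = h.var(axis=-1, keepdims=True)
    return (h - mean) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
B, T, D, d_model = 2, 8, 6, 16                 # hypothetical sizes

h_bitcn = rng.normal(size=(B, T, D))           # stand-in for the BiTCN output
W_p = rng.normal(size=(D, d_model)) * 0.1      # learnable in a real model
b_p = np.zeros(d_model)

h_proj = h_bitcn @ W_p + b_p                   # linear projection to the Informer dimension
h_global = rng.normal(size=(B, T, d_model))    # stand-in for Informer's global features
h_fused = layer_norm(h_proj + h_global)        # assumed residual-style fusion
```

The projection changes only the last dimension, so the batch and time axes pass through unchanged to the Informer side.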

2.3.3. Informer

Informer is an advanced time series prediction model derived from Transformer, specifically designed to handle long-sequence prediction tasks efficiently []. While the standard Transformer model suffers from quadratic complexity $O(L^2)$ in self-attention, Informer introduces three key improvements, ProbSparse self-attention, self-attention distilling, and a generative style decoder, significantly reducing computational cost and improving prediction accuracy. Informer excels in carbon price prediction, significantly outperforming LSTMs and TCNs []. The architecture of Informer consists of an encoder–decoder structure, where the encoder extracts long-range dependencies with sparse self-attention, and the decoder generates future sequences non-autoregressively. The following sections provide a detailed description of each component.

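- (1) ProbSparse self-attention

The standard self-attention is defined as follows:

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{QK^{\top}}{\sqrt{d}}\right)V \tag{7}$$

where $Q$, $K$, and $V$ are the query, key, and value matrices; and $\sqrt{d}$ is the scaling factor.

ProbSparse self-attention identifies dominant queries through a sparsity measurement to reduce the quadratic complexity. For the i-th query $q_i$, the divergence between its attention distribution and a uniform distribution is quantified by Kullback–Leibler (KL) divergence:

$$KL(q \,\|\, p) = \ln \sum_{l=1}^{L_K} e^{q_i k_l^{\top}/\sqrt{d}} - \frac{1}{L_K}\sum_{j=1}^{L_K} \frac{q_i k_j^{\top}}{\sqrt{d}} - \ln L_K \tag{8}$$

where $k_j$ is the j-th key and $L_K$ is the number of keys. Dropping the constant term $\ln L_K$, the sparsity score is simplified as follows:

$$M(q_i, K) = \ln \sum_{j=1}^{L_K} e^{q_i k_j^{\top}/\sqrt{d}} - \frac{1}{L_K}\sum_{j=1}^{L_K} \frac{q_i k_j^{\top}}{\sqrt{d}} \tag{9}$$

Only the top $u$ queries with the highest sparsity scores are retained, forming a sparse query matrix $\bar{Q}$. The ProbSparse attention is then computed as follows:

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{\bar{Q}K^{\top}}{\sqrt{d}}\right)V \tag{10}$$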
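The query selection step can be illustrated with a small NumPy sketch. For clarity it scores every query against all keys, so it does not reproduce the complexity savings of the sampled version, and it assumes that non-selected queries fall back to the mean of the values. The function names and the parameter `u` are illustrative.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def sparsity_scores(Q, K):
    """Per-query score: log-sum-exp of the scaled dot products minus their mean."""
    d = Q.shape[-1]
    s = Q @ K.T / np.sqrt(d)                          # (L_Q, L_K)
    m = s.max(axis=-1, keepdims=True)
    lse = (m + np.log(np.exp(s - m).sum(axis=-1, keepdims=True))).squeeze(-1)
    return lse - s.mean(axis=-1)

def probsparse_attention(Q, K, V, u):
    """Full attention for the top-u queries; mean of V for the rest (assumption)."""
    d = Q.shape[-1]
    top = np.argsort(sparsity_scores(Q, K))[-u:]
    out = np.tile(V.mean(axis=0), (Q.shape[0], 1))
    out[top] = softmax(Q[top] @ K.T / np.sqrt(d)) @ V
    return out, top

rng = np.random.default_rng(0)
K = rng.normal(size=(6, 4))
V = rng.normal(size=(6, 3))
Q = rng.normal(size=(5, 4))
Q[0] = 0.0                                            # a query with uniform attention
scores = sparsity_scores(Q, K)
out, top = probsparse_attention(Q, K, V, u=2)
```

A query whose attention is uniform attains the minimum possible score, $\ln L_K$, so it is never among the dominant queries when more peaked ones exist.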

- (2) Self-attention distilling in encoder

The encoder employs a distilling operation that hierarchically compresses features and removes redundant combinations. After each ProbSparse self-attention layer, a distillation step is applied:

$$X_{j+1} = \mathrm{MaxPool}\left(\mathrm{ELU}\left(\mathrm{Conv1d}\left([X_j]_{AB}\right)\right)\right) \tag{11}$$

where $[\cdot]_{AB}$ denotes the attention block of the j-th layer, Conv1d uses a kernel width of 3, and MaxPool downsamples the sequence by a stride of 2. This operation reduces the temporal dimension by half at each layer. Multiple encoder stacks with progressively halved inputs are concatenated to preserve multi-scale features, enhancing robustness for long sequences.
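The halving effect of the distilling step can be checked with a short sketch. The max-pooling kernel size of 3 and padding of 1 are assumptions (the text only specifies the stride), the attention block is omitted, and all function names are hypothetical.

```python
import numpy as np

def elu(x):
    return np.where(x > 0, x, np.exp(np.minimum(x, 0.0)) - 1.0)

def conv1d_same(x, w):
    """x: (T, C_in), w: (3, C_in, C_out); zero padding keeps the length T."""
    T = x.shape[0]
    xp = np.pad(x, ((1, 1), (0, 0)))
    return np.stack([sum(xp[t + i] @ w[i] for i in range(3)) for t in range(T)])

def maxpool_stride2(x):
    """Kernel 3, stride 2, padding 1 along time: T -> (T - 1) // 2 + 1."""
    T = x.shape[0]
    xp = np.pad(x, ((1, 1), (0, 0)), constant_values=-np.inf)
    return np.stack([xp[t:t + 3].max(axis=0) for t in range(0, T, 2)])

def distill(x, w):
    """Conv1d -> ELU -> MaxPool, applied to the output of an attention block."""
    return maxpool_stride2(elu(conv1d_same(x, w)))

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))            # 8 time steps, 4 channels
w = rng.normal(size=(3, 4, 4)) * 0.5   # hypothetical convolution weights
x1 = distill(x, w)                     # 4 time steps
x2 = distill(x1, w)                    # 2 time steps
```

Each application halves the time axis while leaving the channel dimension unchanged.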

- (3) Generative style decoder

Traditional autoregressive decoders suffer from slow inference and error accumulation. Instead, the Informer adopts a non-autoregressive generative decoder that predicts the entire output sequence in a single forward pass. The decoder input combines a start token (historical sequence segment $X_{token}$) and a placeholder $X_0$ for target timestamps:

$$X_{de} = \mathrm{Concat}(X_{token}, X_0) \in \mathbb{R}^{(L_{token} + L_y) \times d_{model}} \tag{12}$$

where $L_{token}$ and $L_y$ are the lengths of the start token and the target sequence, respectively. Masked multi-head attention ensures each position attends only to prior positions. The decoder leverages the encoder’s distilled features to generate predictions in one step, eliminating step-by-step latency and error propagation.
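As a small illustration of how such a decoder input might be assembled, the sketch below concatenates the most recent observations with a zero placeholder for the horizon to be predicted. The names `label_len` and `pred_len` are assumptions for the start-token length and the prediction length.

```python
import numpy as np

def build_decoder_input(history, label_len, pred_len):
    """Concatenate the last `label_len` steps with zeros for the `pred_len` targets."""
    start_token = history[-label_len:]                      # known recent segment
    placeholder = np.zeros((pred_len, history.shape[1]))    # slots to be predicted
    return np.concatenate([start_token, placeholder], axis=0)

history = np.arange(20.0).reshape(10, 2)   # 10 time steps, 2 features
dec_in = build_decoder_input(history, label_len=4, pred_len=3)
```

The decoder then fills all placeholder positions in one forward pass instead of generating them step by step.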

2.4. Shapley Additive Explanations (SHAP)

SHAP originates from the concept of Shapley values in game theory [] and has been widely adopted to interpret machine learning model predictions [], providing interpretability for complex black-box models. SHAP is valuable in carbon price prediction because it reveals the nonlinear influences and interaction effects of carbon price drivers through global and local explanations []. The Shapley value for feature i is computed as follows:

$$\phi_i = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!} \left[ v(S \cup \{i\}) - v(S) \right] \tag{13}$$

where $\phi_i$ represents the Shapley value of feature i, indicating its average marginal contribution to the model output; N is the set of all features and S is a subset of N that does not include i; v(S) denotes the model’s prediction when only the feature subset S is used; $\frac{|S|!\,(|N| - |S| - 1)!}{|N|!}$ assigns a fair weighting to different subsets; and $v(S \cup \{i\}) - v(S)$ calculates the marginal contribution of feature i to the model output.
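For a handful of features the Shapley value can be computed exactly by enumerating subsets, which makes the weighting scheme easy to verify. The sketch below uses a toy value function with hypothetical numbers. Practical SHAP implementations rely on approximations, because the number of subsets grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(features, v):
    """Exact Shapley values by enumerating every subset S that excludes feature i."""
    n = len(features)
    phi = {}
    for i in features:
        others = [f for f in features if f != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                S = frozenset(subset)
                total += weight * (v(S | {i}) - v(S))   # weighted marginal contribution
        phi[i] = total
    return phi

def v(S):
    """Toy value function: additive effects plus one interaction (hypothetical numbers)."""
    base = {"EUCP": 2.0, "CTB10": 1.0, "BDI": 0.5}
    out = sum(base[f] for f in S)
    if {"EUCP", "BDI"} <= S:
        out += 1.0   # interaction shared between EUCP and BDI
    return out

names = ["EUCP", "CTB10", "BDI"]
phi = shapley_values(names, v)
```

Here the interaction term is split equally between the two interacting features, and the values sum to the difference between the full-model output and the empty-set output.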

2.5. Monte Carlo Dropout

Monte Carlo dropout is a variational inference technique that approximates a Bayesian neural network (BNN) by keeping dropout layers active during both training and inference []. By sampling multiple stochastic forward passes, it estimates the predictive distribution and quantifies uncertainty [,]. Monte Carlo dropout provides a practical Bayesian approach for interval prediction, which balances computational efficiency and theoretical rigor, making it widely applicable in time series prediction. The uncertainty is quantified through the predictive distribution, which is computed as in Equation (14):

$$p(y^{*} \mid x^{*}, \mathcal{D}) \approx \frac{1}{S} \sum_{s=1}^{S} p(y^{*} \mid x^{*}, \theta_s) \tag{14}$$

where $y^{*}$ is the prediction for a new input $x^{*}$ given the training data $\mathcal{D}$, S is the number of dropout samples, and $\theta_s$ denotes the parameters under the s-th dropout mask. The prediction interval is then constructed as follows:

$$\left[\mu - Z\sigma,\ \mu + Z\sigma\right] \tag{15}$$

where $\mu$ and $\sigma$ are the mean and standard deviation across samples. Z = 1.96 corresponds to a 95% confidence level under a Gaussian assumption.
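The sampling procedure can be sketched with a tiny two-layer network in NumPy. The network, its random weights, and the parameter names `p_drop` and `n_samples` are purely illustrative stand-ins for the trained BiTCN-Informer model.

```python
import numpy as np

def mc_dropout_interval(x, W1, W2, p_drop=0.2, n_samples=200, z=1.96, seed=0):
    """Run stochastic forward passes with dropout on and return (mean, lower, upper)."""
    rng = np.random.default_rng(seed)
    preds = []
    for _ in range(n_samples):
        h = np.maximum(x @ W1, 0.0)                # hidden layer with ReLU
        mask = rng.random(h.shape) >= p_drop       # a fresh dropout mask per pass
        h = h * mask / (1.0 - p_drop)              # inverted dropout scaling
        preds.append(h @ W2)
    preds = np.stack(preds)                        # (n_samples, N, 1)
    mu = preds.mean(axis=0)
    sigma = preds.std(axis=0)
    return mu, mu - z * sigma, mu + z * sigma

rng = np.random.default_rng(1)
x = rng.normal(size=(5, 3))                        # 5 inputs with 3 features each
W1 = rng.normal(size=(3, 8))
W2 = rng.normal(size=(8, 1))
mu, lower, upper = mc_dropout_interval(x, W1, W2)
mu0, lower0, upper0 = mc_dropout_interval(x, W1, W2, p_drop=0.0)
```

With dropout switched off, every pass returns the same prediction and the interval collapses to a point, which shows that the interval width is driven entirely by the dropout masks.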

3. Data Sources

This section primarily introduces the data sources for this study, including carbon prices and their influencing factors, and presents the results of the feature selection.

3.1. Carbon Prices

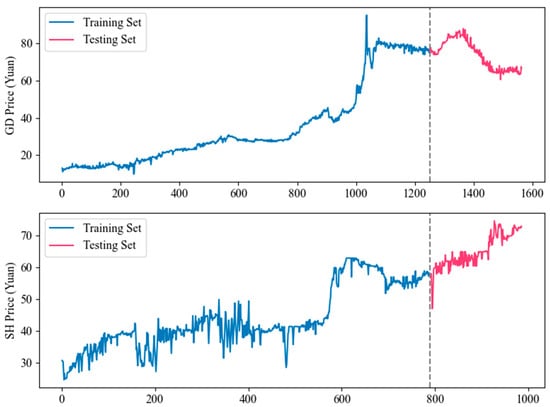

Two daily carbon price datasets from Guangdong and Shanghai carbon markets, named GD and SH, are selected as study cases to test the prediction performance of the proposed model. Each dataset spans 1 August 2017 to 31 March 2024, with the first 80% allocated as the training set and the remaining 20% as the testing set. Figure 2 shows the trend changes in the two carbon price series.

Figure 2.

The trend changes in GD and SH datasets.

3.2. Influencing Factors

Based on the existing related literature [,,,], this study collects the influencing factors of carbon price from the following five dimensions. Among these, the environmental indicator data originate from the China Stock Market & Accounting Research Database (https://data.csmar.com/), while other data come from the Investing website (https://cn.investing.com/). Table 1 summarizes the influencing factors of the GD and SH datasets.

Table 1.

Features used in datasets GD and SH and their abbreviations.

- (1) Environmental indicators: Environmental indicators are among the most commonly used variables in carbon price prediction. Researchers tend to select either temperature or air quality as the primary variable to investigate the environmental impact on carbon prices. Four environmental indicators are chosen in this study: temperature (TEM), air quality index (AQI), and PM2.5 and PM10 concentrations.

- (2) Energy markets: Studies have often shown that energy commodity prices have a large impact on carbon prices. In addition to the most commonly used EU-ETS carbon price (EUCP), we take the international market prices of natural gas (INGP), crude oil (ICOG), and gasoline (IGOP) to study their impact on China’s carbon price.

- (3) Financial market indicators: This study selects a broad set of financial market indicators as candidate features, which can be roughly divided into three categories: first, the bond market, including China’s 1-year, 5-year, and 10-year government bonds (CTB1, CTB5, and CTB10); second, the exchange rate market, including the exchange rates of the US dollar and the euro against the CNY; third, the stock market, including the Shanghai Composite Index (SSE), Shanghai Stock Exchange Energy Sector Index (SSE-E), China Securities Index 1000 (CSI1000), Shanghai and Shenzhen 300 Index (HS300), Shanghai Composite Energy, S&P 500 Index (S&P500), and Dow Jones Index (DJI).

- (4) Geopolitical risk: The Geopolitical Risk Index (GPRI) is a widely recognized quantitative indicator designed to capture the daily fluctuations of global geopolitical risks, offering a data-driven approach to studying geopolitical developments.

- (5) Unstructured data: Studies have shown that integrating unstructured data, such as the Baidu Index (BDI), with structured data enhances prediction accuracy. The daily Baidu search volumes of 30 keywords related to carbon prices, such as “carbon sinks”, “carbon neutrality”, “low carbon”, “energy conservation”, and “new energy”, are collected (from https://index.baidu.com/ by crawlers, accessed on 1 May 2024). Principal component analysis (PCA) is then applied, and the first principal component is extracted as the daily BDI time series.
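The reduction of many keyword series to a single index can be sketched with a singular value decomposition. The example runs on synthetic data and standardizes each keyword before extracting the component, which is an assumption rather than a documented step of this study. The function name is hypothetical.

```python
import numpy as np

def first_principal_component(X):
    """X: (n_days, n_keywords). Returns the PC1 scores and their explained variance ratio."""
    Xc = X - X.mean(axis=0)
    Xs = Xc / Xc.std(axis=0)                  # standardize each keyword (assumption)
    _, s, Vt = np.linalg.svd(Xs, full_matrices=False)
    scores = Xs @ Vt[0]                       # projection onto the first component
    ratio = s[0] ** 2 / np.sum(s ** 2)
    return scores, ratio

# Synthetic search volumes: 30 keywords sharing one attention trend plus noise
rng = np.random.default_rng(0)
trend = np.linspace(0.0, 1.0, 100)
weights = rng.uniform(1.0, 2.0, size=30)
X = trend[:, None] * weights + 0.05 * rng.normal(size=(100, 30))
bdi, ratio = first_principal_component(X)
```

Because the sign of a principal component is arbitrary, the resulting index may need to be flipped so that higher values correspond to more search attention.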

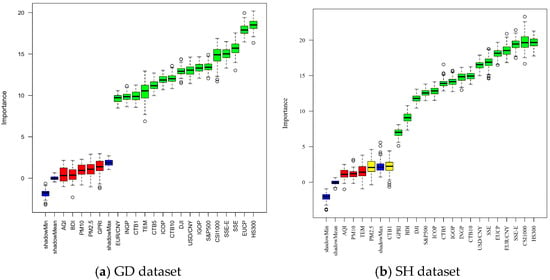

3.3. Feature Selection Results

In the Boruta method, a feature is typically rejected when its importance is lower than the maximum importance of all shadow features [,]. After inputting GD and SH datasets into Boruta, five features are identified as “unimportant”. Figure 3 displays the importance of each feature in the GD and SH datasets. Finally, the prediction models are populated with sixteen important features from both datasets, in conjunction with their carbon price series. Table 2 shows the selected influencing factors of two datasets.

Figure 3.

The importance of each feature in GD and SH datasets.

Table 2.

The feature selecting results from Boruta.

4. Experimental Results and Analysis

This section presents the experimental results and analysis of this study. In addition to the conventional point predictions for the GD and SH datasets, this study also implements interval prediction using the Bayesian method introduced earlier. First, this section outlines the evaluation metrics used for both point and interval predictions. Subsequently, three comparative experiments are conducted for point predictions. Finally, interval prediction is performed based on the point forecasting results.

4.1. Performance Measures

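Four performance measures, including the mean absolute error (MAE), mean absolute percentage error (MAPE), root mean square error (RMSE), and Theil inequality coefficient (TIC), are adopted to evaluate the predictive performances of models [].

$$\mathrm{MAE} = \frac{1}{N}\sum_{t=1}^{N} \left| y_t - \hat{y}_t \right|$$

$$\mathrm{MAPE} = \frac{1}{N}\sum_{t=1}^{N} \left| \frac{y_t - \hat{y}_t}{y_t} \right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{t=1}^{N} \left( y_t - \hat{y}_t \right)^2}$$

$$\mathrm{TIC} = \frac{\sqrt{\frac{1}{N}\sum_{t=1}^{N} \left( y_t - \hat{y}_t \right)^2}}{\sqrt{\frac{1}{N}\sum_{t=1}^{N} y_t^2} + \sqrt{\frac{1}{N}\sum_{t=1}^{N} \hat{y}_t^2}}$$

where $y_t$ and $\hat{y}_t$ represent the t-th actual and predicted values and N is the number of test samples.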
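The four point-prediction measures can be computed in a few lines. The sketch below uses the common textbook definitions, with MAPE reported as a fraction rather than a percentage, and runs on made-up numbers. The function name is hypothetical.

```python
from math import sqrt

def point_metrics(y_true, y_pred):
    """Return MAE, MAPE (as a fraction), RMSE, and TIC for two equal-length sequences."""
    n = len(y_true)
    errors = [a - p for a, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mape = sum(abs(e / a) for e, a in zip(errors, y_true)) / n
    rmse = sqrt(sum(e * e for e in errors) / n)
    tic = rmse / (sqrt(sum(a * a for a in y_true) / n)
                  + sqrt(sum(p * p for p in y_pred) / n))
    return {"MAE": mae, "MAPE": mape, "RMSE": rmse, "TIC": tic}

# Made-up prices for illustration only
metrics = point_metrics([50.0, 60.0, 80.0], [52.0, 57.0, 80.0])
```

TIC is bounded between 0 and 1, with values closer to 0 indicating a better fit.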

As for the interval prediction, three performance criteria, including the prediction interval coverage probability (PICP), prediction interval normalized average width (PINAW), and coverage width-based criterion (CWC), are used in this study [].

$$\mathrm{PICP} = \frac{1}{N}\sum_{t=1}^{N} c_t$$

$$\mathrm{PINAW} = \frac{1}{N R}\sum_{t=1}^{N} \left( U_t - L_t \right)$$

$$\mathrm{CWC} = \mathrm{PINAW}\left( 1 + \gamma(\mathrm{PICP})\, e^{-\eta\,(\mathrm{PICP} - \mu)} \right)$$

where $L_t$ and $U_t$ are the lower and upper limits of the t-th prediction interval, and $R$ is the range of the actual values. $c_t$ is an indicator function: if $y_t$ is within the prediction interval, then $c_t = 1$; otherwise, it is 0. $\gamma(\mathrm{PICP})$ is also an indicator function: if PICP is lower than μ = (1 − α) %, then γ(PICP) = 1; otherwise, it is 0. η is a penalty parameter that controls how strongly the difference between PICP and μ is amplified.
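The interval criteria can be sketched in the same spirit. The code below assumes that PINAW is normalized by the range of the actual values and that CWC takes the common exponential-penalty form. The default values `mu=0.95` and `eta=50.0` are hypothetical, not settings reported in this study.

```python
from math import exp

def interval_metrics(y_true, lower, upper, mu=0.95, eta=50.0):
    """Return PICP, PINAW, and CWC for a set of prediction intervals."""
    n = len(y_true)
    covered = [1 if lo <= y <= up else 0 for y, lo, up in zip(y_true, lower, upper)]
    picp = sum(covered) / n
    value_range = max(y_true) - min(y_true)        # normalizing range (assumption)
    pinaw = sum(up - lo for lo, up in zip(lower, upper)) / (n * value_range)
    gamma = 1 if picp < mu else 0                  # penalize only under-coverage
    cwc = pinaw * (1 + gamma * exp(-eta * (picp - mu)))
    return {"PICP": picp, "PINAW": pinaw, "CWC": cwc}

y = [10.0, 12.0, 14.0, 16.0]
# One of the four intervals misses its target, so coverage is 0.75
under = interval_metrics(y, [9.0, 11.0, 15.0, 15.0], [11.0, 13.0, 17.0, 17.0])
# All intervals cover their targets, so no penalty is applied
full = interval_metrics(y, [9.0, 11.0, 13.0, 15.0], [11.0, 13.0, 15.0, 17.0])
```

When coverage falls below the nominal level, the exponential term inflates CWC sharply. Otherwise CWC reduces to PINAW, so narrower intervals are preferred.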

4.2. Parameter Setting

All experiments are executed using Python 3.8 on a Windows 11 system with a 64-bit 3.20 GHz AMD Ryzen 7 5800H CPU and 16 GB RAM. The data normalization is implemented using the MinMaxScaler function. The parameter settings of the proposed model are shown in Table 3.

Table 3.

The hyperparameter settings of the proposed model.
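The chronological 80/20 split and the min-max normalization used in this study can be sketched as follows. The sketch fits the scaling bounds on the training portion only, which is a common precaution against information leakage and an assumption here, and it uses plain NumPy in place of the MinMaxScaler function. The function name is hypothetical.

```python
import numpy as np

def split_and_scale(series, train_ratio=0.8):
    """Split chronologically, then min-max scale using training-set bounds only."""
    series = np.asarray(series, dtype=float)
    n_train = int(len(series) * train_ratio)
    train, test = series[:n_train], series[n_train:]
    lo, hi = train.min(), train.max()

    def scale(a):
        return (a - lo) / (hi - lo)

    return scale(train), scale(test), (lo, hi)

prices = np.arange(10.0)                 # placeholder series, not real carbon prices
train_s, test_s, bounds = split_and_scale(prices)
```

Test values can fall outside the interval [0, 1] when prices move beyond the range seen during training.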

4.3. Point Prediction

In this study, three comparative point prediction experiments are designed on the GD and SH datasets. The details are presented in the following subsections.

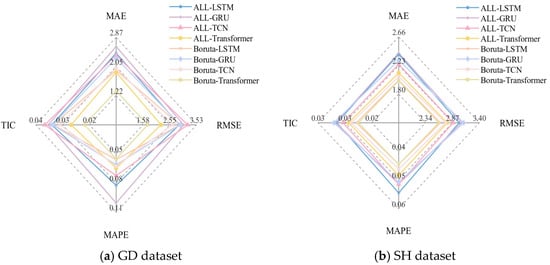

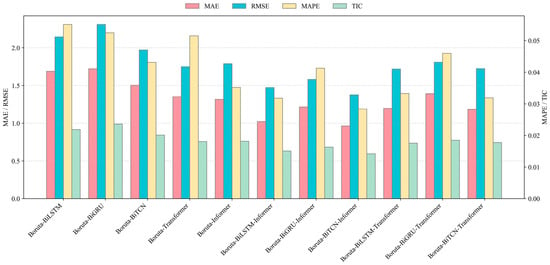

4.3.1. Experiment I: Comparisons of Single Models with and Without Boruta Algorithm

Experiment I aims to validate the impact of the Boruta feature selection algorithm on the model performance. It compares the prediction performance differences between models using all variables and those using Boruta-filtered features, further exploring the interference effects of redundant features in multi-source data. First, four common models, including LSTM, GRU, TCN, and Transformer, are selected for experiments using the GD and SH datasets. Subsequently, all-variable models and models with Boruta-selected variables are constructed to compare and analyze their predictive performance. Figure 4 and Table 4 show the prediction results of these models.

Figure 4.

The point prediction results in Experiment I using GD and SH datasets.

Table 4.

Results of the comparison before and after selecting features using Boruta.

- (1) For the GD dataset, a model using all variables as inputs is generally less accurate than the same model using the features selected by the Boruta method. For instance, the MAE, RMSE, MAPE, and TIC values of the ALL-LSTM model are 2.4261, 2.9045, 0.0790, and 0.0301, respectively, indicating a clearly lower overall prediction accuracy than that of the LSTM model with Boruta feature selection, i.e., Boruta-LSTM. The same conclusion holds for the vast majority of the other models, demonstrating the positive impact of the Boruta algorithm on model prediction performance.

- (2) For the SH dataset, the improvement in model performance after variable selection using the Boruta algorithm can also be observed. For instance, the MAE of the ALL-LSTM model is 2.3988, while that of the Boruta-LSTM model is 2.0081, further validating Boruta’s effectiveness in enhancing predictive performance. In comparison, the Boruta-Transformer model demonstrates the best performance among all models, exhibiting lower prediction errors than the other comparison models.

Based on the results of Experiment I and the above analysis, the following conclusions can be drawn: (1) After the unimportant features are removed by Boruta, the prediction performance of the models generally improves. This indicates that feature selection effectively removes weakly correlated variables and improves prediction accuracy. (2) Among the four common time series prediction models, Transformer exhibits the best prediction performance, as its attention mechanism efficiently captures complex dependencies among multiple features.

4.3.2. Experiment II: Comparisons of Benchmark Models

The purpose of Experiment II is to compare the performances of different benchmark models based on the Boruta algorithm in the carbon price prediction task to identify the optimal predictive model. The baseline models include LSTM, GRU, TCN, BiLSTM, BiGRU, BiTCN, Transformer, and Informer. Table 5 presents the prediction error results for these models.

Table 5.

Results of the comparison between the benchmark models.

- (1)

- For the GD dataset, Boruta-Informer achieves an MAE of 1.3171, clearly lower than those of Boruta-LSTM (1.9362), Boruta-GRU (2.2868), and the other models. Across the other evaluation metrics, Boruta-Informer also shows lower prediction errors than the competing models. For instance, its MAPE is only 0.0352, lower than those of models such as Boruta-LSTM (0.0521) and Boruta-GRU (0.0575), and its TIC is 0.0181, which is also relatively low. These results show that Boruta-Informer has a small average error and a small deviation between the predicted and actual values when forecasting carbon market prices. By comparison, Boruta-BiTCN achieves an MAE of 1.5041, an RMSE of 1.9718, an MAPE of 0.0431, and a TIC of 0.0201, indicating a slightly inferior predictive performance to that of the Boruta-Informer model.

- (2)

- For the SH dataset, the Boruta-Informer model obtains MAE, RMSE, MAPE, and TIC values of 1.7424, 2.5141, 0.0365, and 0.0256, respectively, and remains the best single model in terms of MAE and MAPE. Similarly, the Boruta-BiTCN model performs strongly on the Shanghai dataset, with corresponding MAE, RMSE, MAPE, and TIC values of 1.8486, 2.4820, 0.0412, and 0.0251, respectively; it ranks second to Boruta-Informer on MAE and MAPE while reporting slightly lower RMSE and TIC values.

- (3)

- Furthermore, comparing unidirectional and bidirectional deep learning models shows that bidirectional mechanisms generally improve prediction. Taking the GD dataset as an example, Boruta-BiLSTM achieves an MAE of 1.6912 (versus 1.9362 for Boruta-LSTM), an RMSE of 2.1446 (versus 2.9223), and a TIC of 0.0219 (versus 0.0296); the reported MAPE of 0.0551 is the exception, as it is not lower than Boruta-LSTM’s 0.0521. Moreover, the Boruta-BiGRU and Boruta-BiTCN models also outperform their respective unidirectional counterparts. Similar results are obtained for the Shanghai data.

Based on the analysis and discussion, the following conclusions can be drawn: (1) Among the single deep learning models, Boruta-Informer performs best across multiple metrics, followed by Boruta-BiTCN. This is primarily because Informer and BiTCN demonstrate strong feature capture and predictive capabilities when handling multivariate time series forecasting. (2) Deep learning models with bidirectional mechanisms (e.g., BiLSTM, BiGRU, and BiTCN) generally outperform their unidirectional counterparts. This advantage likely stems from the bidirectional mechanisms’ ability to learn sequence information simultaneously from both forward and backward directions, enabling a more comprehensive extraction of sequence features and thereby enhancing prediction accuracy.

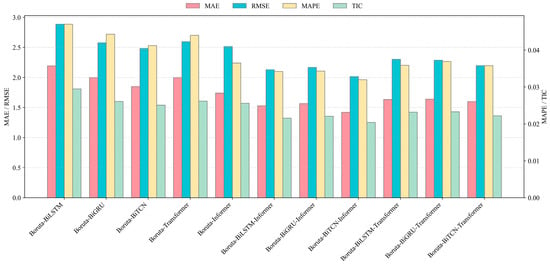

4.3.3. Experiment III: Comparisons of Hybrid Models

Based on the discussion and analysis from Experiment II, to further enhance prediction accuracy, this experiment constructs a hybrid prediction model by integrating the complementary strengths of the Boruta algorithm, BiTCN, and the Informer model. Additionally, several comparative models are also constructed in this experiment. Figure 5 and Figure 6, along with Table 6, present the prediction error results for these models.

Figure 5.

The point prediction results in Experiment III using GD dataset.

Figure 6.

The point prediction results in Experiment III using SH dataset.

Table 6.

Results of the comparison between the benchmark models and the hybrid models.

- (1)

- For the GD dataset, the proposed Boruta-BiTCN-Informer model demonstrates significantly lower prediction errors than the other benchmark models, in particular outperforming the single deep learning models based on the Boruta algorithm across all evaluation metrics. For instance, in terms of RMSE, Boruta-BiTCN-Informer achieves a value of 1.3788, whereas single models like Boruta-BiTCN record an RMSE of 1.9718. Additionally, Boruta-BiTCN-Informer’s MAPE value is 0.0284, substantially lower than the MAPE values of the other single models. Moreover, for the SH dataset, the Boruta-BiTCN-Informer model also demonstrates an outstanding predictive performance, with an MAE of 1.4213, lower than those of the benchmark models. The RMSE and MAPE values of the proposed model are 2.0154 and 0.0320, respectively, demonstrating a higher predictive precision compared to the other models.

- (2)

- Comparing hybrid models built upon the same single model (e.g., BiLSTM) shows that the hybrid models based on Informer generally outperform those using Transformer. For instance, on the GD dataset, Boruta-BiLSTM-Informer achieves an MAE of 1.0221, lower than the 1.1975 of Boruta-BiLSTM-Transformer. Similar conclusions can be drawn from the model performance comparisons on the SH dataset.

The results of Experiment III show the following: (1) Hybrid models generally perform better than single benchmark models. By combining different models, the hybrid models can make use of the advantages of each model, thereby improving the overall prediction performance. (2) The proposed Boruta-BiTCN-Informer model performs best on different datasets, with a high prediction accuracy. It can effectively capture the characteristics of carbon price time series data and make more accurate predictions. (3) The hybrid models with Informer as the main model have a better performance than those based on Transformer. This indicates that Informer has stronger feature-capturing and prediction capabilities, providing superior support for the model.

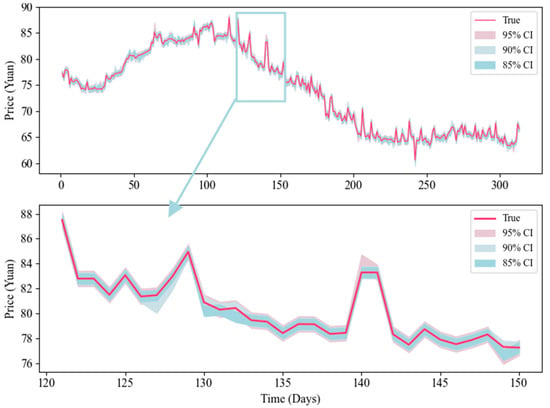

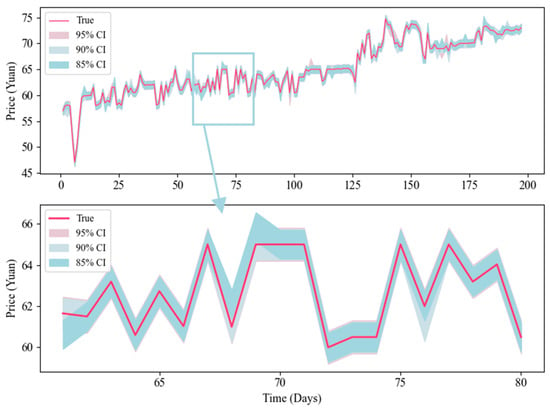

4.4. Interval Prediction

In the preceding subsections, the superior point prediction performance of the proposed model has been validated. However, in practical applications, point predictions alone are of limited use because they do not convey the uncertainty of the forecast, whereas interval predictions do. Therefore, this study additionally uses the Monte Carlo dropout method from Bayesian deep learning to achieve interval prediction. Table 7, Figure 7, and Figure 8 present the interval prediction results for the two datasets. The results indicate that, under different confidence levels, the PICP values obtained by the model are higher than the corresponding confidence levels, while the PINAW values remain very low. This indicates that the proposed interval prediction method is effective, yielding intervals that are both reliable and narrow. This conclusion is further supported by the CWC metric. Furthermore, comparing the prediction results across the two datasets reveals that the model performs better on the GD dataset than on the SH dataset, exhibiting higher PICP values and smaller PINAW values.
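The idea behind Monte Carlo dropout can be illustrated with a toy example: dropout stays active at inference time, the network is evaluated many times, and the spread of the outputs yields a prediction interval. The sketch below is only an illustration under stated assumptions. It uses a tiny hand-written ReLU network with hypothetical weights rather than the BiTCN-Informer model, and the dropout rate, number of passes, and empirical-quantile interval are illustrative choices, not the settings used in this study.

```python
import random

def forward_with_dropout(x, w_hidden, w_out, drop_rate, rng):
    """One stochastic forward pass of a tiny ReLU network with dropout kept ON."""
    keep = 1.0 - drop_rate
    output = 0.0
    for w_h, w_o in zip(w_hidden, w_out):
        hidden = max(0.0, sum(wi * xi for wi, xi in zip(w_h, x)))  # ReLU unit
        if rng.random() < keep:                  # unit survives this pass
            output += w_o * hidden / keep        # inverted-dropout rescaling
    return output

def mc_dropout_interval(x, w_hidden, w_out, drop_rate=0.2,
                        passes=500, level=0.95, seed=0):
    """Return (lower, mean, upper) from repeated stochastic forward passes."""
    rng = random.Random(seed)
    samples = sorted(
        forward_with_dropout(x, w_hidden, w_out, drop_rate, rng)
        for _ in range(passes)
    )
    alpha = 1.0 - level
    lower = samples[int((alpha / 2) * (passes - 1))]       # empirical lower quantile
    upper = samples[int((1 - alpha / 2) * (passes - 1))]   # empirical upper quantile
    mean = sum(samples) / passes
    return lower, mean, upper

# Hypothetical weights and input, for illustration only.
w_hidden = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.6]]
w_out = [1.0, 2.0, 1.5]
x = [1.0, 2.0]

lower, mean, upper = mc_dropout_interval(x, w_hidden, w_out)
```

With more stochastic passes the empirical quantiles stabilize; in a real deep learning framework the same effect is obtained by leaving the dropout layers in training mode during prediction.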

Table 7.

Interval prediction results of Boruta-BiTCN-Informer for GD and SH datasets.

Figure 7.

The interval prediction results of Boruta-BiTCN-Informer using GD dataset.

Figure 8.

The interval prediction results of Boruta-BiTCN-Informer using SH dataset.
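The interval metrics discussed in this subsection can be computed as sketched below. PICP and PINAW follow their usual definitions (coverage rate and range-normalized mean width). The CWC form shown here, with an exponential penalty applied only when coverage falls below the nominal level, is one common variant, and the penalty parameter `eta` is an assumed value; the exact definition used in this study may differ. All numbers are hypothetical.

```python
import math

def picp(actual, lower, upper):
    """Prediction interval coverage probability: share of actual values inside the interval."""
    covered = sum(1 for y, lo, hi in zip(actual, lower, upper) if lo <= y <= hi)
    return covered / len(actual)

def pinaw(actual, lower, upper):
    """Prediction interval normalized average width: mean width divided by the target range."""
    value_range = max(actual) - min(actual)
    mean_width = sum(hi - lo for lo, hi in zip(lower, upper)) / len(actual)
    return mean_width / value_range

def cwc(actual, lower, upper, nominal, eta=50.0):
    """Coverage width-based criterion (assumed variant): width penalized for under-coverage."""
    coverage = picp(actual, lower, upper)
    width = pinaw(actual, lower, upper)
    penalty = math.exp(-eta * (coverage - nominal)) if coverage < nominal else 0.0
    return width * (1.0 + penalty)

# Hypothetical prices and interval bounds, for illustration only.
actual = [70.0, 72.0, 71.0, 69.0, 73.0]
lower = [68.5, 70.5, 70.0, 69.5, 71.5]
upper = [71.5, 73.5, 72.0, 71.5, 74.5]

coverage = picp(actual, lower, upper)
width = pinaw(actual, lower, upper)
cwc_met = cwc(actual, lower, upper, nominal=0.75)     # coverage meets the nominal level
cwc_missed = cwc(actual, lower, upper, nominal=0.90)  # coverage falls short
```

A good interval forecast has a PICP at or above the nominal confidence level together with a small PINAW, which is the pattern reported in Table 7.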

5. Further Discussions

This section provides further discussion. First, the superiority of the proposed model is verified again using the Diebold–Mariano (DM) test. Then, the European Union carbon emission trading price and natural gas price datasets are adopted to verify the robustness of the proposed model. Finally, the interpretability analysis of the model predictions is performed using the SHAP method.

5.1. Diebold–Mariano (DM) Test

In this study, the DM test proposed by Diebold and Mariano in 1995 [] is used to validate the superiority of the proposed model. The DM test determines whether the predictive performances of two models differ significantly at a given significance level α. Models are compared according to the DM value. If the absolute value of the DM value is greater than Zα/2, the null hypothesis that there is no significant difference in the predictive performance of the two models is rejected. Otherwise, it is considered that there is no significant difference. Table 8 presents the DM test values between the proposed model and the comparison models.

Table 8.

Results of the DM test between the proposed model and comparison models.

As demonstrated in Table 8, except for the Boruta-BiLSTM-Informer model, all DM values exceed Z0.05/2 = 1.96. This indicates that, at the 0.05 significance level, the proposed model exhibits a significant difference in predictive performance relative to the other models. Furthermore, the vast majority of DM values also exceed Z0.01/2 = 2.58, further demonstrating that the Boruta-BiTCN-Informer model exhibits a significantly superior predictive performance compared to the benchmark models at the 0.01 significance level. In summary, the DM test results confirm that the proposed model’s predictive performance differs significantly from that of the comparison models, with markedly superior predictive capabilities in carbon price forecasting tasks.
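The decision rule above can be sketched as follows. This is a minimal version of the DM statistic, assuming one-step-ahead forecasts and a squared-error loss, so that the long-run variance reduces to the sample variance of the loss differential; the loss function and horizon used for Table 8 are not stated here and may differ. The forecast errors are hypothetical.

```python
import math
from statistics import NormalDist

def dm_test(actual, pred_a, pred_b):
    """Diebold-Mariano statistic and two-sided p-value (squared-error loss, horizon 1)."""
    # Loss differential: negative values favor model A.
    d = [(y - a) ** 2 - (y - b) ** 2 for y, a, b in zip(actual, pred_a, pred_b)]
    n = len(d)
    mean_d = sum(d) / n
    var_d = sum((di - mean_d) ** 2 for di in d) / n   # lag-0 autocovariance
    stat = mean_d / math.sqrt(var_d / n)
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(stat)))
    return stat, p_value

# Hypothetical prices and forecast errors, for illustration only.
actual = [70.0, 72.0, 71.0, 69.0, 73.0, 74.0, 72.5, 71.5]
errors_a = [0.2, -0.3, 0.1, 0.4, -0.2, 0.3, -0.1, 0.2]   # smaller errors
errors_b = [1.0, -1.5, 0.8, 1.2, -0.9, 1.4, -1.1, 0.7]   # larger errors
pred_a = [y - e for y, e in zip(actual, errors_a)]
pred_b = [y - e for y, e in zip(actual, errors_b)]

stat, p_value = dm_test(actual, pred_a, pred_b)
significant_at_5pct = abs(stat) > 1.96
significant_at_1pct = abs(stat) > 2.58
```

For multi-step forecasts, the variance term would also need the autocovariances of the loss differential up to lag h − 1.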

5.2. Further Study of the Model Performance Based on Other Datasets

To further validate the applicability and robustness of the proposed model, this study selects the European carbon trading price (EU) and the natural gas price from Henry Hub (NG) as supplementary datasets to test the model [,]. The experimental results are shown in Table 9.

Table 9.

The prediction results of the developed models and benchmarks using EU and NG.

For the EU dataset, the Boruta-BiTCN-Informer model achieves an MAE value of 1.6336, lower than those of the other models. Similarly, across the other model evaluation metrics, the proposed model also demonstrates significantly lower prediction errors than the comparison models. This conclusion can also be validated using the NG dataset. For instance, the proposed Boruta-BiTCN-Informer model achieves an MAPE value of 0.0435, significantly smaller than the 0.0561 recorded by Boruta-BiLSTM-Transformer. Overall, the proposed Boruta-BiTCN-Informer model demonstrates a robust performance and strong prediction stability across all datasets. This also validates that the hybrid model framework proposed in this study is not only applicable to carbon price forecasting but also holds promise for other energy price prediction tasks, providing a reference for future research.

5.3. Interpretability Analysis

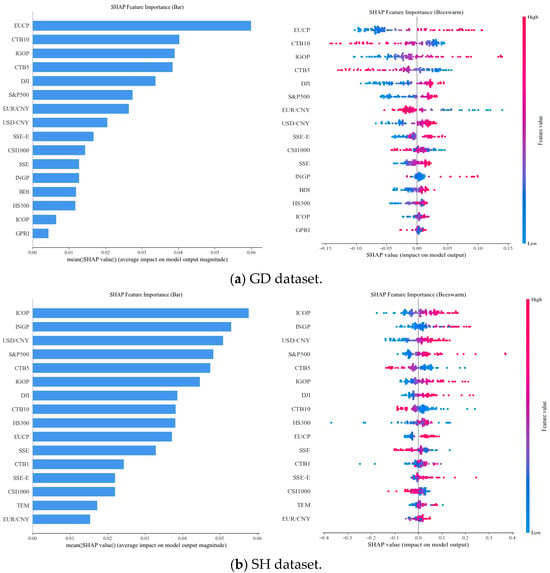

This study also adopts the SHAP method to quantitatively assess the impact of input features on carbon price predictions, thereby further elucidating the decision-making process of the BiTCN-Informer model. Figure 9 presents the corresponding SHAP analysis results of the proposed model when predicting carbon prices in Guangdong and Shanghai. As illustrated in Figure 9, taking Shanghai carbon price prediction as an example, the left-hand bar chart displays the global feature importance ranking based on the mean absolute SHAP value, where the length of each horizontal bar indicates the contribution of that feature to the model’s prediction. The right-hand SHAP summary plot provides a more granular feature impact analysis. Each point in this plot represents one sample, with the x-axis denoting SHAP values. Positive SHAP values indicate a positive contribution of the corresponding feature to the carbon price prediction, while negative SHAP values indicate a negative contribution. Additionally, the color of each point represents the magnitude of the feature value, ranging from red (high value) to blue (low value).
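The global ranking by mean absolute SHAP value can be made concrete with a toy example. The sketch below computes exact Shapley values by enumerating feature orderings, which is feasible only for a handful of features; practical SHAP implementations for deep models rely on approximations instead. The linear toy model, its weights, the zero baseline, and the sample values are all hypothetical, and the feature names are used purely as labels.

```python
from itertools import permutations

def shapley_values(model, x, baseline):
    """Exact Shapley values of `model` at `x`, relative to `baseline`, via all orderings."""
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = x[i]               # switch feature i from baseline to actual value
            value = model(current)
            phi[i] += value - previous      # marginal contribution in this ordering
            previous = value
    return [p / len(orders) for p in phi]

def mean_abs_importance(shap_rows, names):
    """Rank features by the mean absolute Shapley value across samples."""
    n = len(shap_rows)
    scores = {
        name: sum(abs(row[j]) for row in shap_rows) / n
        for j, name in enumerate(names)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical toy model and samples, for illustration only.
def toy_model(features):
    return 0.6 * features[0] + 0.3 * features[1] - 0.1 * features[2]

names = ["EUCP", "ICOP", "EUR/CNY"]
samples = [[1.0, 0.5, 2.0], [-0.5, 1.0, 0.0]]
baseline = [0.0, 0.0, 0.0]

shap_rows = [shapley_values(toy_model, row, baseline) for row in samples]
ranking = mean_abs_importance(shap_rows, names)
```

The per-sample values in `shap_rows` correspond to the points of a summary plot, while `ranking` corresponds to the bar chart. For each sample, the Shapley values sum to the difference between the model output and the baseline output.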

Figure 9.

The SHAP analysis using GD and SH datasets.

From the bar chart, in the Guangdong carbon trading market, the five most influential factors driving carbon price fluctuations are the EU-ETS carbon price, China Treasury Bond (10-year), international crude oil price, China Treasury Bond (5-year), and the Dow Jones Index. Notably, the EU-ETS carbon price exerts a significantly stronger impact than the other factors, surpassing the second-ranked feature by a SHAP value margin of 0.01. This pronounced influence is likely attributed to Guangdong’s export-oriented economic structure. As China’s largest export hub, Guangdong accommodates numerous energy-intensive manufacturing enterprises whose carbon cost accounting is directly influenced by the EU Carbon Border Adjustment Mechanism (CBAM). Consequently, the EU-ETS carbon price transmits cross-regional policy spillover effects to the Guangdong carbon market, reinforcing its susceptibility to external regulatory and market dynamics. In contrast, the Shanghai carbon trading market exhibits a different set of key drivers. The five most influential factors are the international crude oil price, international natural gas price, USD/CNY exchange rate, S&P 500 Index, and China Treasury Bond (5-year). This indicates that energy market prices and financial market indicators play a dominant role in shaping carbon price dynamics in Shanghai. A plausible explanation is Shanghai’s status as a global financial hub, where market participants are more inclined to incorporate global energy supply–demand shifts and cross-border capital flows into their carbon asset pricing strategies.

A deeper examination of the SHAP summary plot reveals a notable heterogeneity in the impact of the EUR/CNY exchange rate across these two markets. In Shanghai, a higher EUR/CNY value is associated with positive SHAP values, indicating a positive correlation between the exchange rate and carbon prices. Conversely, in the Guangdong market, an increase in EUR/CNY corresponds to negative SHAP values, suggesting that the exchange rate exerts a suppressive effect on carbon price increases in Guangdong. These findings demonstrate that the depreciation of the Chinese yuan (CNY) relative to the euro (EUR) exerts heterogeneous impacts across different carbon markets. In the Shanghai market, participants predominantly focus on the transmission mechanism of exchange rate fluctuations, foreign capital flows, and carbon asset allocation. Concurrently, CNY depreciation may elevate imported energy costs or enhance market liquidity. Conversely, in the Guangdong market, participants prioritize the trade cost transmission pathway. An appreciation of the EUR amplifies carbon compliance costs transferred by EU importers, incentivizing local enterprises to adopt quota reservation strategies to suppress short-term carbon prices.

Based on the above analysis, different decision-makers may formulate rules or schemes for specific application scenarios: (1) Export-oriented, high-energy-consuming enterprises in Guangdong (e.g., home appliance manufacturers) may procure annual carbon allowances in advance to lock in compliance costs when the SHAP value of EUCP exceeds a specific threshold (e.g., 0.04) over a continuous period. When quoting export orders, they may convert the SHAP contribution from EUCP into a cost coefficient to hedge against carbon cost volatility. (2) Energy-dependent enterprises in Shanghai (e.g., steel producers) may prioritize low-energy production lines to reduce current-period carbon emissions when the SHAP value of INGP exceeds a specific threshold (e.g., 0.03). During carbon asset inventory, they may consider the heterogeneous impact of USD/CNY (positive in the Shanghai market) and modestly increase carbon allowance holdings when the CNY depreciates. (3) The newly established national carbon market coordination department may set distinct weightings for different regions: Guangdong market allowances should reference the SHAP value from the EUCP (0.3–0.4), while Shanghai market allowances should reference the SHAP value from energy/financial indicators (0.25–0.35), thereby avoiding the bias inherent in uniform rules. It may also monitor the SHAP values for ICOP and USD/CNY and concurrently implement short-term exchange rate stabilization measures to mitigate carbon price volatility.
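A rule of the form "the SHAP value exceeds a threshold over a continuous period" can be expressed as a simple run-length check. The sketch below is one possible reading of such a rule. The function name, the three-observation window, and the daily SHAP series are hypothetical; only the 0.04 threshold is taken from the example above.

```python
def sustained_exceedance(values, threshold, min_run):
    """Return the indices at which `values` has been above `threshold`
    for at least `min_run` consecutive observations."""
    run = 0
    signals = []
    for index, value in enumerate(values):
        run = run + 1 if value > threshold else 0   # reset the run on any dip
        if run >= min_run:
            signals.append(index)
    return signals

# Hypothetical daily SHAP values of EUCP, for illustration only.
eucp_shap = [0.02, 0.05, 0.045, 0.041, 0.03, 0.06, 0.05]

# Flag days on which EUCP has exceeded 0.04 for three consecutive observations.
procurement_signals = sustained_exceedance(eucp_shap, threshold=0.04, min_run=3)
```

In this series, only the fourth observation (index 3) closes a run of three consecutive exceedances; the later run is interrupted after two observations and does not trigger a signal.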

6. Conclusions and Future Outlook

This study constructs an interpretable hybrid carbon price prediction system integrating feature selection, deep learning, and interpretability analysis methods. An extensive experimental analysis and discussion demonstrate the proposed model’s superior predictive performance, significantly enhancing carbon price forecasting accuracy while providing interpretability references. The specific conclusions are as follows.

- (1)

- The proposed Boruta-BiTCN-Informer model demonstrates an outstanding predictive performance and high robustness across diverse market environments. In the Guangdong carbon market, the model achieves a mean absolute error of only 0.9642, representing a 26.7937% reduction compared to the Boruta-Informer model and an average decrease of 27.3809% relative to all benchmark models. Similarly, in the Shanghai carbon market, the proposed model achieves a mean absolute percentage error of 0.0320, representing a 12.3288% reduction compared to Boruta-Informer and an average decrease of 16.9507% relative to all comparison models, fully demonstrating its superior performance in carbon price forecasting.

- (2)

- Regarding model interpretability and practicality, this study employs the SHAP method to reveal nonlinear interactions among carbon price drivers. The findings indicate that energy prices dominate carbon price fluctuations in the Shanghai carbon market, while EU carbon prices exert a significant influence on the Guangdong carbon market. Furthermore, the proposed model maintains its advantage in both the EU carbon market and the U.S. Henry Hub natural gas market. For instance, in the EU market, the proposed model achieves an MAE of 1.6336, representing an 11.0240% reduction compared to the Boruta-Informer model. In the natural gas market, the MAE improvement over the Boruta-Informer model reaches 30.7937%. Moreover, the interval prediction capability of the proposed model provides practical risk boundaries, offering robust support for enterprises and decision-makers in formulating strategic plans within uncertain market environments.

- (3)

- From the perspective of methodological innovation, the bidirectional architecture of BiTCN effectively mitigates the feature loss problem in traditional hybrid models, and the sparse attention mechanism of the Informer model optimizes the long-sequence modeling process. The synergy of the two not only provides an efficient solution for carbon price prediction but also serves as a reference for predicting other energy commodity prices.
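The percentage reductions quoted in conclusion (1) follow from the relative error reduction between the Boruta-Informer baseline and the proposed model. The short check below uses only values reported in this paper (MAE of 1.3171 and 0.9642 for Guangdong; MAPE of 0.0365 and 0.0320 for Shanghai); the function name is an illustrative choice.

```python
def relative_reduction(baseline_error, proposed_error):
    """Percentage reduction of an error metric relative to a baseline model."""
    return (baseline_error - proposed_error) / baseline_error * 100.0

# Guangdong: MAE of Boruta-Informer vs. Boruta-BiTCN-Informer.
gd_mae_reduction = relative_reduction(1.3171, 0.9642)

# Shanghai: MAPE of Boruta-Informer vs. Boruta-BiTCN-Informer.
sh_mape_reduction = relative_reduction(0.0365, 0.0320)
```

Rounded to four decimal places, these reproduce the reported 26.7937% and 12.3288% reductions.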

Overall, the proposed model in this study demonstrates an exceptional predictive performance, significantly enhancing the accuracy of carbon market price forecasting. It also provides a methodological framework for reference in the field of time series forecasting. Future research will further explore the dynamic changes in carbon market price characteristics, focusing on cutting-edge deep learning technology research, thereby providing information references for carbon market development and decision-making.

Author Contributions

P.D.: conceptualization, software, methodology, writing—original draft preparation, funding acquisition. X.Z.: investigation, software, writing—review and editing. T.C.: visualization, writing—reviewing and editing. W.Y.: supervision, writing—review and editing, formal analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Humanities and Social Science Fund of the Ministry of Education of China (22YJCZH028) and the Basic Research Program of Jiangsu (No. BK20251593).

Data Availability Statement

The data presented in this study are available at https://cn.investing.com (accessed on 1 May 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Nathaniel, S.P.; Yalçiner, K.; Bekun, F.V. Assessing the Environmental Sustainability Corridor: Linking Natural Resources, Renewable Energy, Human Capital, and Ecological Footprint in BRICS. Resour. Policy 2021, 70, 101924.

- Dutta, A. Modeling and Forecasting the Volatility of Carbon Emission Market: The Role of Outliers, Time-Varying Jumps and Oil Price Risk. J. Clean. Prod. 2016, 172, 2773–2781.

- Weng, Q.; Xu, H. A Review of China’s Carbon Trading Market. Renew. Sustain. Energy Rev. 2018, 91, 613–619.

- Hao, Y.; Tian, C. A Hybrid Framework for Carbon Trading Price Forecasting: The Role of Multiple Influence Factor. J. Clean. Prod. 2020, 262, 120378.

- Mao, Y.; Yu, X. A Hybrid Forecasting Approach for China’s National Carbon Emission Allowance Prices with Balanced Accuracy and Interpretability. J. Environ. Manag. 2024, 351, 119873.

- Lin, B.; Zhang, C. Forecasting carbon price in the European carbon market: The role of structural changes. Process Saf. Environ. Prot. 2022, 166, 341–354.

- Wang, J.; Zhuang, Z.; Gao, D. An enhanced hybrid model based on multiple influencing factors and divide-conquer strategy for carbon price prediction. Omega 2023, 120, 102922.

- Yu, Y.; Song, X.; Zhou, G.; Liu, L.; Pan, M.; Zhao, T. DKWM-XLSTM: A Carbon Trading Price Prediction Model Considering Multiple Influencing Factors. Entropy 2025, 27, 817.

- Jiang, M.; Che, J.; Li, S.; Hu, K.; Xu, Y. Incorporating key features from structured and unstructured data for enhanced carbon trading price forecasting with interpretability analysis. Appl. Energy 2025, 382, 125301.

- Zhong, W.; Yue, W.; Haoran, W.; Nan, T.; Shuyue, W. Integrating fast iterative filtering and ensemble neural network structure with attention mechanism for carbon price forecasting. Complex Intell. Syst. 2025, 11, 6.

- Huang, J.; Yuee, G.; Li, C.; Yu, X.; Xiong, W. A Hybrid Attention-Enabled Multivariate Information Fusion for Carbon Price Forecasting. Renew. Energy 2025, 256, 124246.

- Pu, R.Y.; Liang, Q.M.; Wei, Y.M.; Yan, S.Y.; Wang, X.Y.; Li, D.H.; Yi, C.; Ji, C.J. Impact of the China’s New Energy Market on Carbon Price Fluctuation Risk: Evidence from Seven Pilot Carbon Markets. Energy Strategy Rev. 2025, 59, 101718.

- Wang, Y.; Guo, Z. The dynamic spillover between carbon and energy markets: New evidence. Energy 2018, 149, 24–33.

- Wang, R.; Zhao, X.; Wu, K.; Peng, S.; Cheng, S. Examination of the transmission mechanism of energy prices influencing carbon prices: An analysis of mediating effects based on demand heterogeneity. Environ. Sci. Pollut. Res. 2023, 30, 59567–59578.

- Millischer, L.; Evdokimova, T.; Fernandez, O. The carrot and the stock: In search of stock-market incentives for decarbonization. Energy Econ. 2023, 120, 106615.

- Shi, C.; Zeng, Q.; Zhi, J.; Na, X.; Cheng, S. A study on the response of carbon emission rights price to energy price macroeconomy and weather conditions. Environ. Sci. Pollut. Res. 2023, 30, 33833–33848.

- Song, Y.; Liu, Y. Empirical analysis of the relationship between carbon trading price and stock price of high carbon emitting firms based on VAR model-evidence from Chinese listed companies. Environ. Sci. Pollut. Res. Int. 2024, 31, 1146–1157.

- Li, B.; Wang, H.; Ye, Y. Is Carbon Price Uncertainty Priced in the Corporate Bond Yield Spreads? Evidence from Chinese Corporate Bond Markets. Int. Rev. Financ. Anal. 2025, 107, 104618.

- Yan, L.; Han, W. The Complexity of Connection between Green Bonds and Carbon Markets: New Evidence from China. Financ. Res. Lett. 2025, 84, 107790.

- Li, H.; Huang, X.; Zhou, D.; Guo, L. The dynamic linkages among crude oil price, climate change and carbon price in China. Energy Strategy Rev. 2023, 48, 101123.

- Zhou, K.; Li, Y. Influencing factors and fluctuation characteristics of China’s carbon emission trading price. Phys. A Stat. Mech. its Appl. 2019, 524, 459–474.

- Zhou, Y.; Jin, C.; Ren, K.; Gao, S.; Yu, Y. TFR: A Temporal Feature-Refined Multi-Stage Carbon Price Forecasting. Energy Sci. Eng. 2024, 13, 611–625.

- Zhao, Y.; Wang, J.; Wang, S.; Zheng, J.; Lv, M. Using explainable deep learning to improve decision quality: Evidence from carbon trading market. Omega 2025, 133, 103281.

- Zhou, M.; Du, P. Multivariate events enhanced pre-trained large language model for carbon price forecasting. Energy 2025, 336, 138377.

- Wang, J.; Dong, J.; Zhang, X.; Li, Y. A multiple feature fusion-based intelligent optimization ensemble model for carbon price forecasting. Process Saf. Environ. Prot. 2024, 187, 1558–1575.

- Byun, S.J.; Cho, H. Forecasting carbon futures volatility using GARCH models with energy volatilities. Energy Econ. 2013, 40, 207–221.

- Sheng, C.; Wang, G.; Geng, Y.; Chen, L. The correlation analysis of futures pricing mechanism in China’s carbon financial market. Sustainability 2020, 12, 7317.

- Zhu, J.; He, Y.; Yang, X.; Yang, S. Ultra-short-term wind power probabilistic forecasting based on an evolutionary non-crossing multi-output quantile regression deep neural network. Energy Convers. Manag. 2024, 301, 118062.

- Huang, W.; Wang, H.; Qin, H.; Wei, Y.; Chevallier, J. Convolutional neural network forecasting of European Union allowances futures using a novel unconstrained transformation method. Energy Econ. 2022, 110, 106049.

- Cao, Y.; Zha, D.; Wang, Q.; Wen, L. Probabilistic carbon price prediction with quantile temporal convolutional network considering uncertain factors. J. Environ. Manag. 2023, 342, 118137.

- He, Y.; Wang, Y. Short-term wind power prediction based on EEMD–LASSO–QRNN model. Appl. Soft Comput. 2021, 105, 107288.

- Tian, C.; Hao, Y. Point and interval forecasting for carbon price based on an improved analysis-forecast system. Appl. Math. Model. 2020, 79, 126–144.

- Duan, Y.; Zhang, J.; Wang, X.; Feng, M.; Ma, L. Forecasting carbon price using signal processing technology and extreme gradient boosting optimized by the whale optimization algorithm. Energy Sci. Eng. 2024, 12, 810–834.

- Zhang, K.; Yang, X.; Wang, T.; Thé, J.; Tan, Z.; Yu, H. Multi-step carbon price forecasting using a hybrid model based on multivariate decomposition strategy and deep learning algorithms. J. Clean. Prod. 2023, 405, 136959.

- Liu, S.; Xie, G.; Wang, Z.; Wang, S. A secondary decomposition-ensemble framework for interval carbon price forecasting. Appl. Energy 2024, 359, 122613.

- Zheng, G.; Li, K.; Yue, X.; Zhang, Y. A multifactor hybrid model for carbon price interval prediction based on decomposition-integration framework. J. Environ. Manag. 2024, 363, 121273.

- Hao, Y.; Wang, X.; Wang, J.; Yang, W. A novel interval-valued carbon price analysis and forecasting system based on multi-objective ensemble strategy for carbon trading market. Expert Syst. Appl. 2024, 244, 122912.

- Cui, X.; Niu, D. Carbon price point–interval forecasting based on two-layer decomposition and deep learning combined model using weight assignment. J. Clean. Prod. 2024, 483, 144124.

- Zhao, S.; Wang, Y.; Deng, J.; Li, Z.; Deng, G.; Chen, Z.; Li, Y. An adaptive multi-factor integrated forecasting model based on periodic reconstruction and random forest for carbon price. Appl. Soft Comput. 2025, 177, 113274.

- Xiao, Y.; Hu, X.; Lin, Y.; Lu, Y.; Jing, R.; Zhao, Y. Interpretable Short-Term Electricity Load Forecasting Considering Small Sample Heatwaves. Appl. Energy 2025, 398, 126417.

- Liu, Y.; Zhang, K.; Chen, S.; Qian, X.; Zhou, Y.; Zhan, X.; Chen, J. Interpretable Prediction of Short-Term Load Curve for Extreme Scenarios Based on Mixture of Experts Model. Electr. Power Syst. Res. 2025, 247, 111730.

- Wu, H.; Gao, X.Z.; Li, Z.; Heng, J.N.; Du, P. Audiovisual-Cognition-Inspired Network with Explainability for Oil Price Forecasting. Appl. Soft Comput. 2025, 186, 114093.

- Kursa, M.B.; Rudnicki, W.R. Feature selection with the boruta package. J. Stat. Softw. 2010, 36, 1–13.

- Sprangers, O.; Schelter, S.; de Rijke, M. Parameter-efficient deep probabilistic forecasting. Int. J. Forecast. 2023, 39, 332–345.

- Wang, J.; He, J.; Feng, C.; Feng, L.; Li, Y. Stock index prediction and uncertainty analysis using multi-scale nonlinear ensemble paradigm of optimal feature extraction, two-stage deep learning and Gaussian process regression. Appl. Soft Comput. 2021, 113, 107898.

- Qin, C.; Huang, G.; Yu, H.; Wu, R.; Tao, J.; Liu, C. Geological information prediction for shield machine using an enhanced multi-head self-attention convolution neural network with two-stage feature extraction. Geosci. Front. 2023, 14, 101519.

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. In Proceedings of the 35th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; pp. 11106–11115.

- Wang, Y.; Wang, Z.; Luo, Y. A hybrid carbon price forecasting model combining time series clustering and data augmentation. Energy 2024, 308, 132929.

- Štrumbelj, E.; Kononenko, I. Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 2014, 41, 647–665.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4766–4775.

- Lei, H.; Xue, M.; Liu, H.; Ye, J. Unveiling the driving patterns of carbon prices through an explainable machine learning framework: Evidence from Chinese emission trading schemes. J. Clean. Prod. 2024, 438, 140697.

- Gal, Y.; Ghahramani, Z. Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 1651–1660.

- Seoh, R. Qualitative analysis of monte carlo dropout. arXiv 2020, arXiv:2007.01720.

- Goel, P.; Chen, L. On the robustness of Monte Carlo dropout trained with noisy labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Nashville, TN, USA, 19–25 June 2021; pp. 2219–2228.

- Amiri, M.; Pourghasemi, H.R.; Ghanbarian, G.A.; Afzali, S.F. Assessment of the importance of gully erosion effective factors using Boruta algorithm and its spatial modeling and mapping using three machine learning algorithms. Geoderma 2019, 340, 55–69.

- Chen, J.; Ying, Z.; Zhang, C.; Balezentis, T. Forecasting tourism demand with search engine data: A hybrid CNN-BiLSTM model based on Boruta feature selection. Inf. Process. Manag. 2024, 61, 103699.

- Li, J.; Liu, D. Carbon price forecasting based on secondary decomposition and feature screening. Energy 2023, 278, 127783.

- Zhang, X.; Zong, Y.; Du, P.; Wang, S.; Wang, J. Framework for multivariate carbon price forecasting: A novel hybrid model. J. Environ. Manag. 2024, 369, 122275.

- Diebold, F.X.; Mariano, R.S. Comparing predictive accuracy. J. Bus. Econ. Stat. 2002, 20, 134–144.

- Liu, S.; Li, M.; Yang, K.; Wei, Y.; Wang, S. From forecasting to trading: A multimodal-data-driven approach to reversing carbon market losses. Energy Econ. 2025, 144, 108350.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).