1. Introduction

This paper addresses a two-dimensional (2D) or three-dimensional (3D) elliptic interface problem for the Poisson equation with a smooth function:

It is subject to the Dirichlet boundary condition, given as follows:

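The domain $\Omega$ is assumed to be rectangular and is partitioned into subdomains $\Omega^-$ and $\Omega^+$ by an interface $\Gamma$. We denote the solution in each subdomain by $u^-$ and $u^+$. Across the interface $\Gamma$, the solution exhibits jump discontinuities governed by the following jump conditions:

where the notation $[\cdot]$ denotes the difference between the limiting values of a quantity as $\Gamma$ is approached from $\Omega^+$ and from $\Omega^-$ (i.e., the value from the $\Omega^+$ side minus the value from the $\Omega^-$ side). The term $\partial_{\mathbf{n}} u$ represents the normal derivative $\mathbf{n} \cdot \nabla u$, where $\mathbf{n}$ is the unit normal vector pointing from $\Omega^-$ to $\Omega^+$. The source term $f$ is piecewise continuous, with $f = f^-$ in $\Omega^-$ and $f = f^+$ in $\Omega^+$.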

Interface problems pose significant challenges for traditional numerical methods because the solution loses regularity across the interface. To address this issue and recover numerical accuracy near the interface, various methods have been developed that incorporate the jump conditions given in (3) and (4) into the numerical discretization. These approaches enable the design of accurate and robust numerical algorithms, including the coupling interface method [1], the piecewise-polynomial interface method [2], the kernel-free integral equation method [3], the finite volume method [4], and the immersed finite element method (IFEM) [5,6].

Over the past few decades, finite difference methods on Cartesian grids have been studied intensively for elliptic interface problems. Notable examples include the immersed interface method (IIM) [7,8,9], the ghost fluid method (GFM) [10], and the matched interface and boundary (MIB) method [11,12]. Building on the MIB methods, the augmented matched interface and boundary (AMIB) method has recently been developed for two- and three-dimensional elliptic interface problems [13,14,15]. In the AMIB method, finite difference discretizations near an irregular interface are corrected by Cartesian derivative jumps defined on the interface, which are reconstructed via additional approximations. Moreover, these jumps are treated as auxiliary variables in an augmented system, so that they can be solved for together with the unknown solution in an iterative procedure. By using fast Poisson solvers, such as the fast Fourier transform (FFT) [13,14] and the multigrid method [15], to invert the discrete Laplacian operator, the AMIB method is highly efficient and achieves fourth-order accuracy for both the solution and its gradient. Nontrivial developments in the AMIB method [14,16] have addressed sharply curved interfaces. Nevertheless, the fourth-order AMIB method requires the interface to satisfy a certain degree of smoothness, so it may not be applicable to less regular interfaces involving geometric singularities.

In recent years, the application of neural networks, both shallow and deep, to elliptic interface problems has gained significant attention in the scientific computing community. Unlike traditional grid-based methods, neural networks are inherently mesh-free, which makes them well suited to complex interfaces and irregular domains. Prominent approaches such as the Deep Ritz method [17] and physics-informed neural networks (PINNs) [18] have been widely used for solving partial differential equations (PDEs) with smooth solutions. Building on these foundations, various neural network models have been developed specifically for elliptic interface problems [19,20,21,22,23,24]. While many of these methods use deep network architectures, recent advances have demonstrated that shallow networks can also address interface problems effectively, for example, the discontinuity capturing shallow neural network (DCSNN) [25] and the cusp-capturing PINNs [22]. Despite their promise, these mesh-free neural network approaches still face challenges in ensuring stability and achieving robust convergence rates. To address these limitations, a hybrid method combining neural network-based machine learning with a traditional FFT Poisson solver was developed in [26]. This approach incorporates the interface conditions into a learned function while using regular finite difference discretizations, achieving second-order accuracy and handling complex geometries effectively.

In this work, we propose a novel fourth-order hybrid method, referred to as the corrected hybrid method, for solving the Poisson interface problem (1)–(3). This method combines neural network learning with corrected finite difference schemes, integrating the machine learning framework of the hybrid method [26] with the corrected finite difference discretization and high-order fast Poisson solvers of the AMIB method [14]. The corrected hybrid method retains the advantages of the hybrid method, such as implementation simplicity and the ability to handle complex interface geometries, while preserving the high-order accuracy of the AMIB method.

In the AMIB method, the standard fourth-order finite difference scheme for the Laplacian operator is corrected using Cartesian derivative jumps, which require additional approximation treatments. To simplify this process, the corrected hybrid method employs neural networks to predict these derivative jumps directly. This approach reduces implementation complexity while maintaining accuracy. Unlike the original hybrid method, which uses shallow neural networks to approximate solution components with second-order accuracy, the corrected hybrid method utilizes neural networks to predict high-order jump quantities. These predictions are incorporated into a corrected finite difference scheme, enabling fourth-order accuracy.
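To make the idea of a jump-corrected stencil concrete, here is a minimal 1D sketch. It is not the paper's scheme (11)–(14): the interface location `ALPHA`, the test functions on each side, and the helper names are hypothetical, and the Cartesian derivative jumps are computed analytically rather than predicted by a neural network. Stencil values that lie across the interface are shifted back to the near side by a Taylor expansion of the jump about the interface, truncated at order `kmax`.

```python
import math

# Hypothetical 1D setup (not from the paper): interface at ALPHA,
# u^-(x) = sin(x) for x < ALPHA and u^+(x) = exp(2x) for x >= ALPHA.
ALPHA = 0.3141


def u_grid(x):
    """Piecewise (discontinuous) solution sampled at a grid point."""
    return math.sin(x) if x < ALPHA else math.exp(2.0 * x)


def jump(k):
    """k-th Cartesian derivative jump [u^(k)] = (u^+)^(k) - (u^-)^(k) at ALPHA."""
    # d^k/dx^k exp(2x) = 2^k exp(2x);  d^k/dx^k sin(x) = sin(x + k*pi/2)
    return 2.0**k * math.exp(2.0 * ALPHA) - math.sin(ALPHA + k * math.pi / 2.0)


def corrected_uxx(xi, h, kmax=5):
    """Fourth-order 5-point approximation of (u^-)''(xi) for a node xi < ALPHA.

    Stencil values on the + side are mapped back to the - side by subtracting
    a Taylor expansion of the jump about ALPHA (orders 0..kmax).
    """
    weights = {-2: -1.0, -1: 16.0, 0: -30.0, 1: 16.0, 2: -1.0}
    total = 0.0
    for offset, w in weights.items():
        xj = xi + offset * h
        value = u_grid(xj)
        if xj >= ALPHA:  # stencil point across the interface: apply correction
            value -= sum(jump(k) * (xj - ALPHA) ** k / math.factorial(k)
                         for k in range(kmax + 1))
        total += w * value
    return total / (12.0 * h * h)


if __name__ == "__main__":
    for h in (0.05, 0.025, 0.0125):
        xi = ALPHA - 0.4 * h  # node just left of the interface
        err = abs(corrected_uxx(xi, h) - (-math.sin(xi)))
        print(f"h = {h:<7} error = {err:.3e}")
```

Halving h should reduce the error by roughly a factor of 16 in this sketch. With `kmax` below 5, the neglected jump terms (divided by h² in the stencil) would be expected to lower the observed order, which is why high-order jumps are needed for fourth-order accuracy.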

It is important to emphasize that the objective of the corrected hybrid method is not to replace traditional numerical methods such as the AMIB method or the IIM, or to compete with them in every respect. Instead, the goal is to provide an alternative approach that is particularly advantageous for its high accuracy and ease of implementation when addressing Poisson interface problems with non-homogeneous jump conditions. By leveraging fast Poisson solvers and machine learning, the corrected hybrid method achieves both computational efficiency and high-order accuracy. Moreover, the use of the corrected hybrid method for solving an open interface problem is also explored.

The rest of the paper is organized as follows. In Section 2, a high-order hybrid method is proposed to solve elliptic interface problems, and the incorporation of FFT and multigrid solvers is discussed for elliptic equations with constant and variable coefficients. Section 3 presents numerical results demonstrating the performance of the proposed algorithm in 2D and 3D. In Section 4, the corrected hybrid method is generalized to solve an open interface problem. Finally, the paper ends with a summary in Section 5.

3. Numerical Results

In this section, we analyze the accuracy of the proposed corrected hybrid method in solving two- and three-dimensional Poisson interface problems. For comparison, we also examine the performance of the second-order hybrid method [26]. Additionally, we evaluate the ray-casting AMIB scheme introduced in [14,15], in which Cartesian derivative jumps are constructed using fictitious values generated by the ray-casting MIB scheme. For both the AMIB method and the proposed corrected hybrid method, either the fourth-order FFT solver or the geometric multigrid method is employed to invert the discrete matrix when solving the resulting linear system (16).

In each test, the neural network function is represented by a shallow network with a sigmoid activation function. This network has only a single hidden layer, which keeps the architecture simple and efficient: only a few hundred parameters need to be trained in all numerical examples. A few hundred training points are randomly sampled along the interface to capture its features. However, for more complex problems, particularly three-dimensional cases or those involving complex geometric features, a larger number of training points may be necessary to maintain accuracy. The source code accompanying this manuscript is available on GitHub (visited on 22 March 2025): https://github.com/szhao-ua/fourth-order-hybrid-method.
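For illustration, the single-hidden-layer sigmoid network described above can be sketched as follows. The class name, the Gaussian initialization, and the input dimension and width used in the example are assumptions (a width of 40 matches the hidden layer used in Examples 1 and 5), and training is omitted. Because the corrected schemes need derivatives of the learned function, the sketch evaluates first and second partial derivatives in closed form using σ' = σ(1 − σ) and σ'' = σ'(1 − 2σ).

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class ShallowNet:
    """Hypothetical single-hidden-layer sigmoid network

        D(x) = sum_j w_j * sigmoid(a_j . x + b_j) + c,

    used only to illustrate the architecture; sizes and initialization are assumptions.
    """

    def __init__(self, dim, width, seed=0):
        rng = random.Random(seed)
        self.a = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(width)]
        self.b = [rng.gauss(0.0, 1.0) for _ in range(width)]
        self.w = [rng.gauss(0.0, 1.0) for _ in range(width)]
        self.c = 0.0

    def num_params(self):
        width, dim = len(self.a), len(self.a[0])
        # hidden weights + hidden biases + output weights + output bias
        return width * dim + width + width + 1

    def value_and_derivs(self, x, axis=0):
        """Return D(x) and its first and second partial derivatives along `axis`."""
        d0 = d1 = d2 = 0.0
        for a_j, b_j, w_j in zip(self.a, self.b, self.w):
            s = sigmoid(sum(ak * xk for ak, xk in zip(a_j, x)) + b_j)
            ds = s * (1.0 - s)          # sigma'(z)
            dds = ds * (1.0 - 2.0 * s)  # sigma''(z)
            d0 += w_j * s
            d1 += w_j * ds * a_j[axis]
            d2 += w_j * dds * a_j[axis] ** 2
        return d0 + self.c, d1, d2


if __name__ == "__main__":
    net = ShallowNet(dim=2, width=40)
    print(net.num_params())  # 161
    print(net.value_and_derivs([0.3, -0.2], axis=0))
```

A 2D network with 40 hidden neurons has 40·2 + 40 + 40 + 1 = 161 trainable parameters, which is of the order quoted above. Each additional derivative along an input direction contributes another factor of the corresponding input weight, which is one way prediction errors in higher derivatives can be amplified.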

The accuracy of approximating solution gradients (fluxes) is explored through several numerical examples. The discrete gradient is computed using a fourth-order corrected central difference scheme, which combines the computed solution with the Cartesian derivative jumps predicted by the neural network. For instance, the standard fourth-order central difference for the x component of the gradient, denoted here by $\delta_x u_{i,j}$, is given by

$$\delta_x u_{i,j} = \frac{u_{i-2,j} - 8u_{i-1,j} + 8u_{i+1,j} - u_{i+2,j}}{12h}.$$

The corrected gradients are then computed as follows:

The correction of the fourth-order central differences for the gradient in all corner cases is addressed in detail in Theorems 1–4 of [15].

For simplicity, the computational domain $\Omega$ is assumed to be either a square (2D) or a cube (3D), uniformly partitioned into $N$ subintervals in each direction with mesh size $h$. For 2D problems, the accuracy and convergence of the numerical solutions are assessed by measuring errors in the maximum norm, defined as

$$\|u - u_h\|_\infty = \max_{i,j} \left| u(x_i, y_j) - u_h(x_i, y_j) \right|,$$

where $u$ and $u_h$ are the analytical and numerical solutions, respectively. Error norms for the components of the gradient are defined analogously using the same maximum norm. These definitions extend naturally to 3D problems.
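The maximum-norm error and the observed convergence order log₂(e_h / e_{h/2}) can be computed as in the short sketch below. It is a hypothetical check on a smooth, interface-free test function (an assumption, chosen so that the standard fourth-order central difference applies without any correction), and the function names are illustrative.

```python
import math


def central_diff_x(f, x, y, h):
    """Standard fourth-order central difference approximation of df/dx at (x, y)."""
    return (f(x - 2 * h, y) - 8 * f(x - h, y) + 8 * f(x + h, y) - f(x + 2 * h, y)) / (12 * h)


def max_norm_error(exact, approx, n, lo=-1.0, hi=1.0):
    """max_{i,j} |exact(x_i, y_j) - approx(x_i, y_j, h)| on an (n+1) x (n+1) uniform grid."""
    h = (hi - lo) / n
    err = 0.0
    for i in range(n + 1):
        for j in range(n + 1):
            x, y = lo + i * h, lo + j * h
            err = max(err, abs(exact(x, y) - approx(x, y, h)))
    return err


def observed_order(e_coarse, e_fine, refinement=2.0):
    """Observed convergence order from errors on two grids related by `refinement`."""
    return math.log(e_coarse / e_fine) / math.log(refinement)


if __name__ == "__main__":
    # Smooth, single-phase test function (assumption). It is evaluated directly,
    # so stencils near the boundary may sample points outside the grid.
    f = lambda x, y: math.sin(x) * math.cos(y)
    fx = lambda x, y: math.cos(x) * math.cos(y)
    approx = lambda x, y, h: central_diff_x(f, x, y, h)
    errors = [max_norm_error(fx, approx, n) for n in (16, 32, 64)]
    for e_c, e_f in zip(errors, errors[1:]):
        print(f"{e_c:.3e} -> {e_f:.3e}, order {observed_order(e_c, e_f):.2f}")
```

For this test function the leading truncation term is −(h⁴/30)∂⁵f/∂x⁵, so the printed orders should be close to 4.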

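The successive error, which measures the difference between the solutions on two successive levels of grid refinement, is defined as

$$\|u_h - u_{h/2}\|_\infty = \max_{i,j} \left| u_h(x_i, y_j) - u_{h/2}(x_i, y_j) \right|,$$

where $u_h$ and $u_{h/2}$ are the numerical solutions on the coarse and finer grids, respectively, and the maximum is taken over the nodes $(x_i, y_j)$ of the coarse grid.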
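When no exact solution is available, as in Example 4 below, the same order estimate can be applied to successive errors. The sketch below assumes nested 1D grids with refinement factor 2 (the paper works in 2D and 3D) and uses a hypothetical `model_solution` that adds an artificial O(h⁴) perturbation to a known function in place of real solver output.

```python
import math


def successive_error(u_coarse, u_fine):
    """Max-norm difference between grid functions on nested 1D grids.

    u_coarse holds n+1 nodal values (mesh size h) and u_fine holds 2n+1 values
    (mesh size h/2); coarse node i coincides with fine node 2i.
    """
    if len(u_fine) != 2 * (len(u_coarse) - 1) + 1:
        raise ValueError("grids must be nested with refinement factor 2")
    return max(abs(uc - u_fine[2 * i]) for i, uc in enumerate(u_coarse))


def model_solution(n):
    """Hypothetical stand-in for solver output on [0, 1] with n intervals:
    the exact value sin(pi x) plus an artificial O(h^4) error h^4 cos(x)."""
    h = 1.0 / n
    return [math.sin(math.pi * i * h) + h**4 * math.cos(i * h) for i in range(n + 1)]


if __name__ == "__main__":
    ns = [8, 16, 32, 64]
    errs = [successive_error(model_solution(n), model_solution(2 * n)) for n in ns[:-1]]
    for e_c, e_f in zip(errs, errs[1:]):
        print(f"successive errors {e_c:.3e}, {e_f:.3e}: order {math.log2(e_c / e_f):.2f}")
```

If the error behaves like Ch⁴, the successive error behaves like Ch⁴ − C(h/2)⁴ = (15/16)Ch⁴, so the ratio of consecutive successive errors still approaches 16 and the observed order is 4.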

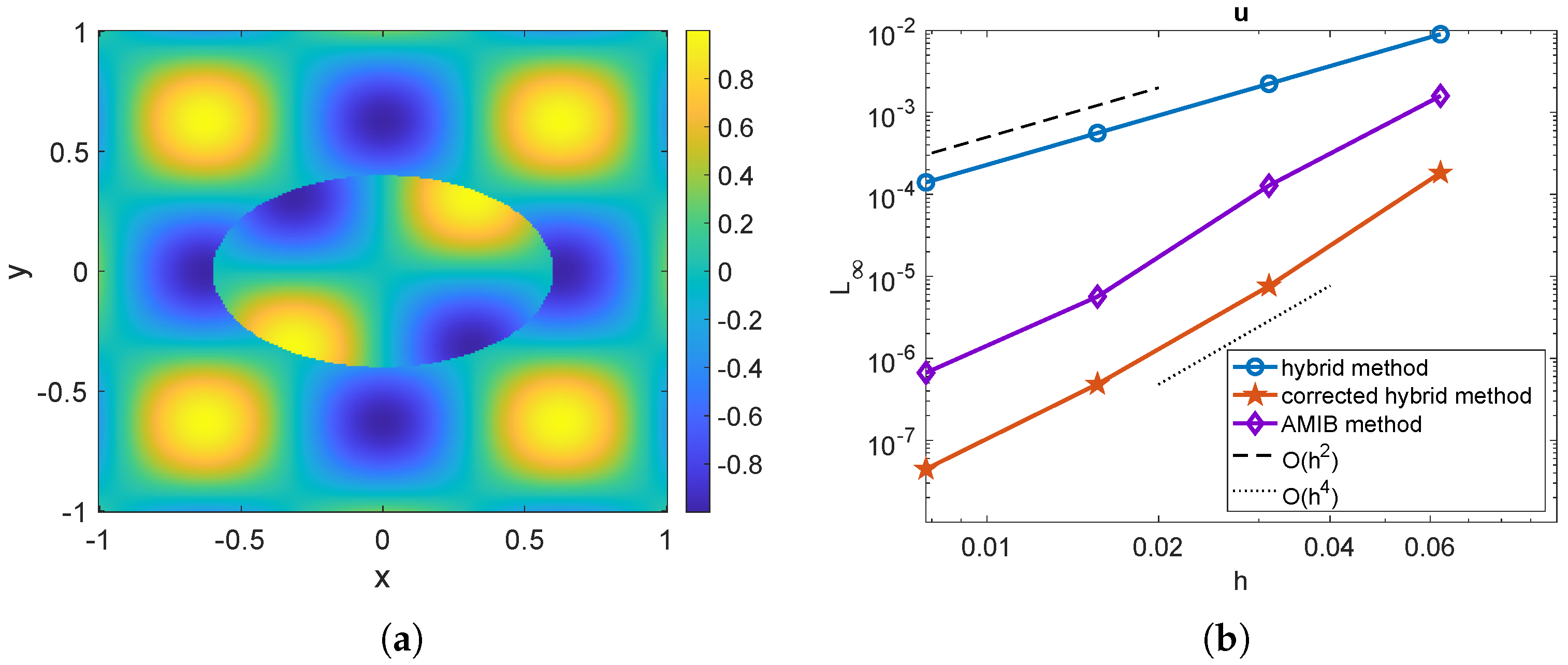

Example 1. We begin by solving a two-dimensional Poisson interface problem and comparing the results of the proposed corrected hybrid method with those of the AMIB method [15] and the original hybrid method [26]. The problem is defined on a square domain $\Omega$, with the embedded interface $\Gamma$ given by an ellipse. The exact solution is prescribed analytically, from which the corresponding right-hand side $f$ and the jump conditions used in the loss function are calculated. In this example, the geometric multigrid method is used to invert the matrix in both the proposed scheme and the AMIB scheme [15]. The same neural network setup is employed for both the present and the original hybrid methods: the network has 40 neurons in its hidden layer and is trained on 200 randomly sampled points on the interface $\Gamma$. Training terminates when the stopping condition is met or when the maximum number of iterations (1000 epochs) is reached.
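Interface training points such as the 200 points used here can be drawn with a few lines of code. The sketch below assumes an axis-aligned ellipse x²/a² + y²/b² = 1 with hypothetical semi-axes a = 0.5 and b = 0.3 (the ellipse parameters of Example 1 are not reproduced here). It also returns unit normals, which the normal-derivative jump condition needs; under the assumption that the interior of the ellipse is Ω⁻, these normals point from Ω⁻ to Ω⁺.

```python
import math
import random


def sample_ellipse(n_points, a=0.5, b=0.3, seed=0):
    """Randomly sample points on x^2/a^2 + y^2/b^2 = 1 with outward unit normals.

    The angle parameter is drawn uniformly, so points are not uniformly
    spaced in arc length.
    """
    rng = random.Random(seed)
    points, normals = [], []
    for _ in range(n_points):
        theta = rng.uniform(0.0, 2.0 * math.pi)
        x, y = a * math.cos(theta), b * math.sin(theta)
        # Gradient of x^2/a^2 + y^2/b^2 (up to a factor 2) points outward.
        nx, ny = x / a**2, y / b**2
        length = math.hypot(nx, ny)
        points.append((x, y))
        normals.append((nx / length, ny / length))
    return points, normals


if __name__ == "__main__":
    pts, nrm = sample_ellipse(200)
    print(len(pts), pts[0], nrm[0])
```

Drawing the angle uniformly clusters points near the ends of the major axis in terms of arc length; an arc-length parameterization or rejection sampling would be needed for uniform coverage along the curve.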

The numerical solution for a mesh size of

is displayed in

Figure 1a. In

Figure 1b, we present the mesh refinement study, showing the maximum norm error

as a function of the mesh size

h. The results indicate that the original hybrid method (solid blue line with circular markers) achieves a second-order convergence rate, whereas both the proposed hybrid method (solid red line with pentagram markers) and the AMIB method (solid purple line with diamond markers) achieve a fourth-order convergence rate. In the present hybrid method, the computed solution is used to determine

by applying the corrected finite difference scheme (

17)–(20) with the predicted Cartesian derivative jumps. The gradients are also tested among these three methods, shown in

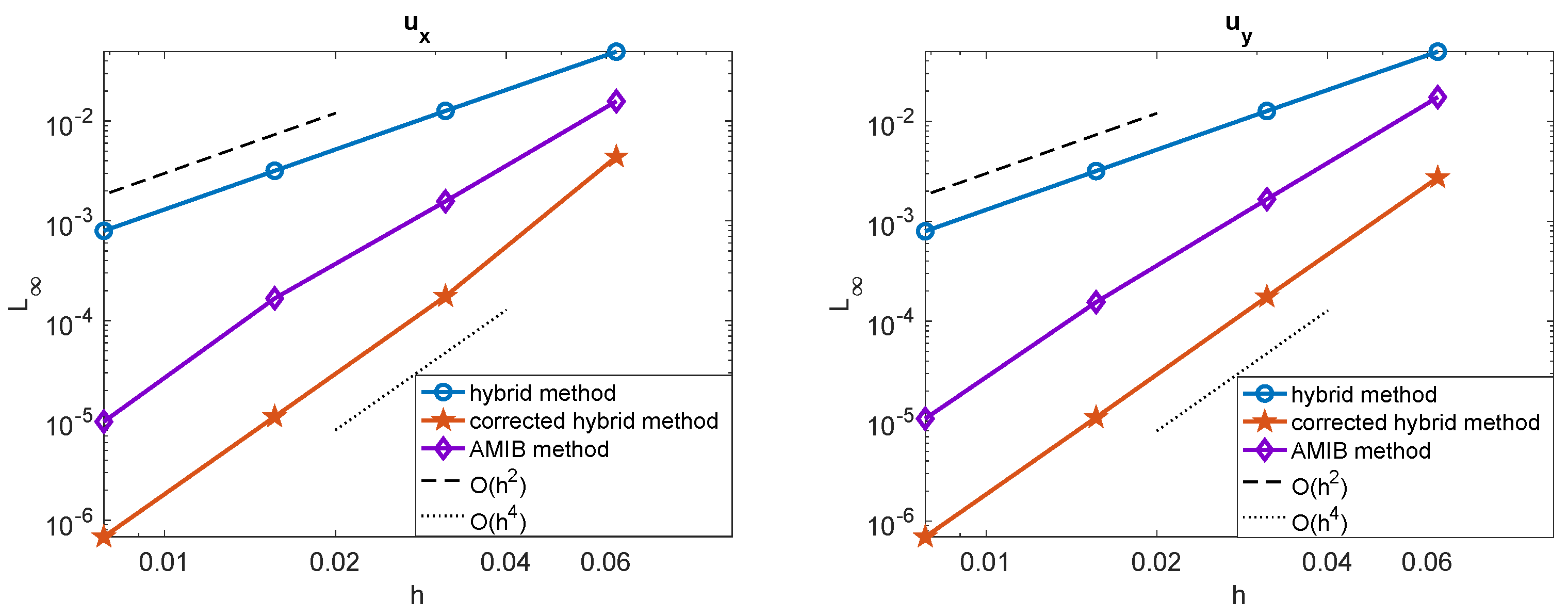

Figure 2. The maximum errors of the gradients computed using the original hybrid method attain a second-order convergence rate, while the proposed hybrid method and the AMIB method achieve fourth-order convergence in the gradients.

Next, we evaluate the performance of the proposed corrected method in solving the PDE (1) with a prescribed variable coefficient. The maximum errors of the solutions and gradients obtained by the AMIB method and the corrected hybrid method are compared in Figure 3 and Figure 4. The results demonstrate that both methods achieve fourth-order convergence for both the solutions and the gradients.

The numerical error arises from two primary sources: the neural network approximation and the finite difference approximation. Although detailed results are not presented here, the final loss value is small and corresponds to a comparably small predictive error for the target function D. However, the predictive error for higher-order (k-th) derivatives of D can grow exponentially with k, as seen in (10), and these derivatives are used in the corrected finite difference schemes (11)–(14). To maintain fourth-order accuracy, the predictive error of the correction terms in (11)–(14) must be smaller than the local truncation error. This indicates that the error is dominated by the neural network approximation when the mesh size h is very small. To verify this observation, we conducted additional numerical tests with finer meshes. As expected, further mesh refinement did not significantly reduce the overall error, confirming that the neural network approximation is the limiting factor. A deeper or wider neural network architecture may achieve smaller predictive errors on finer meshes.

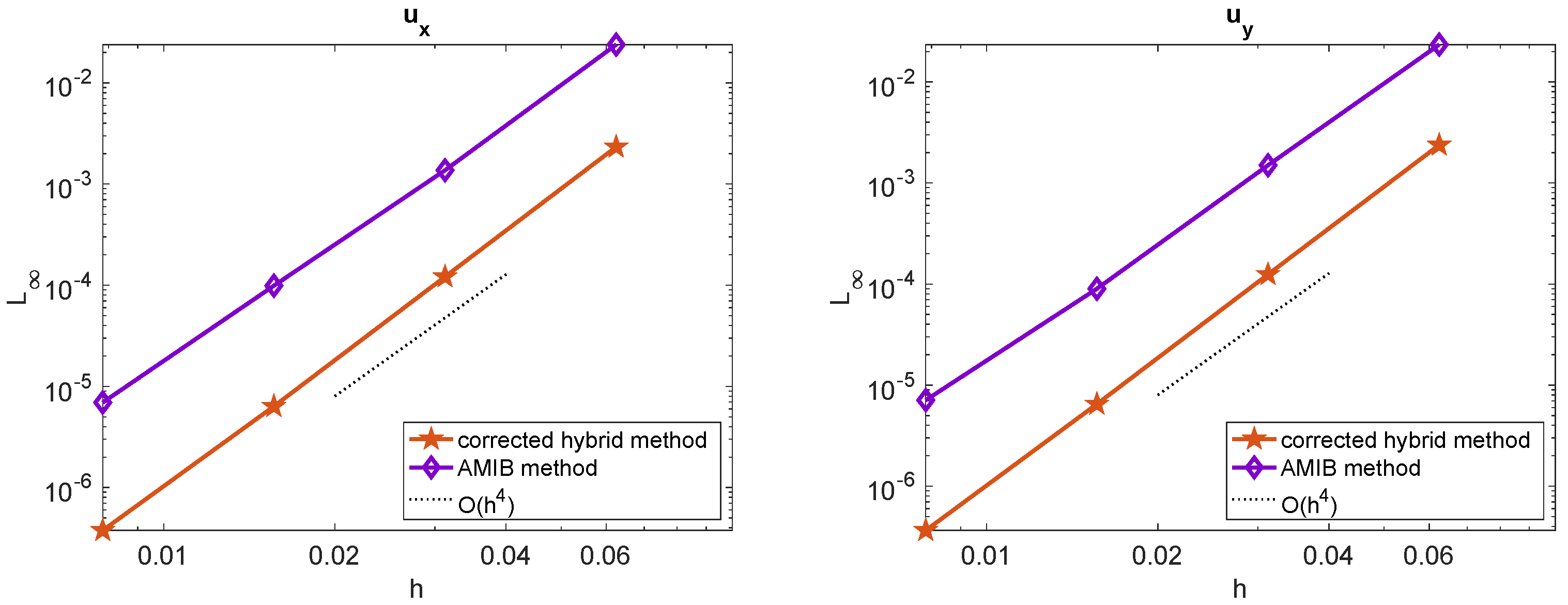

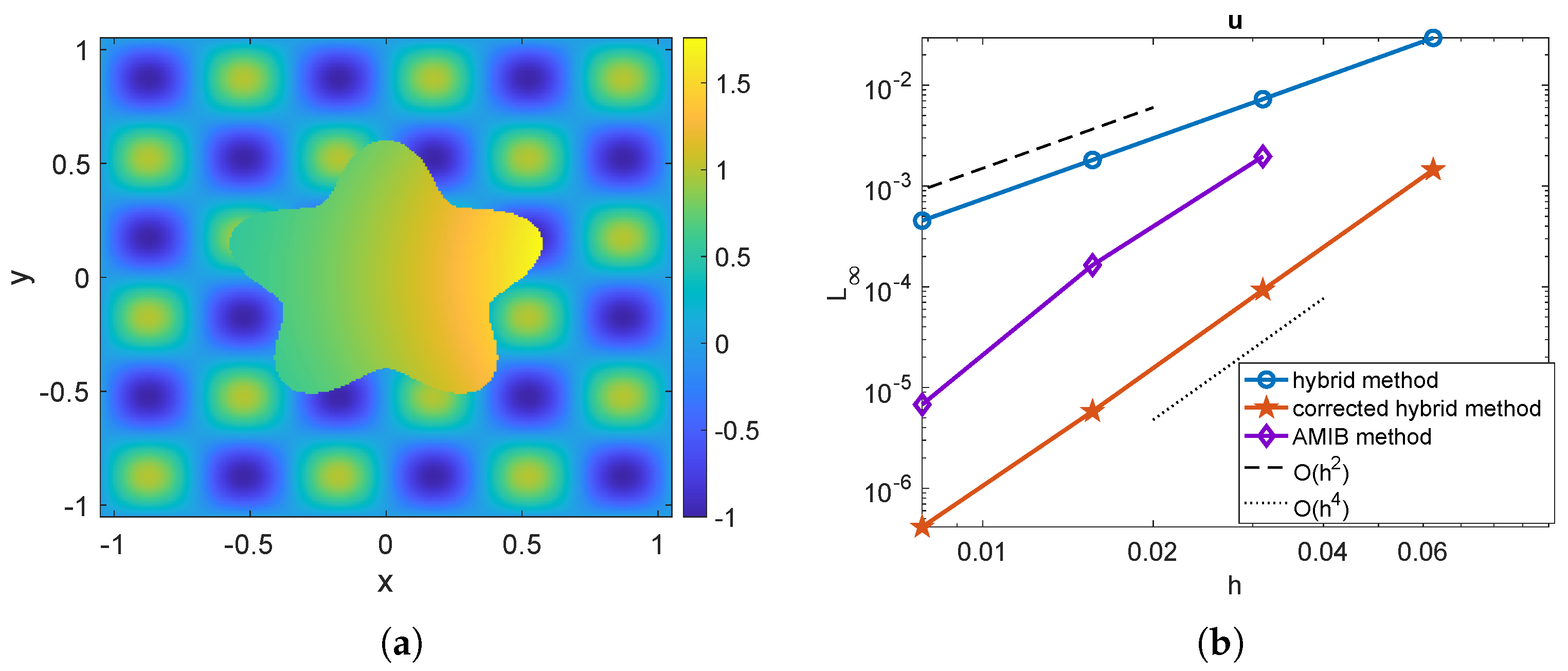

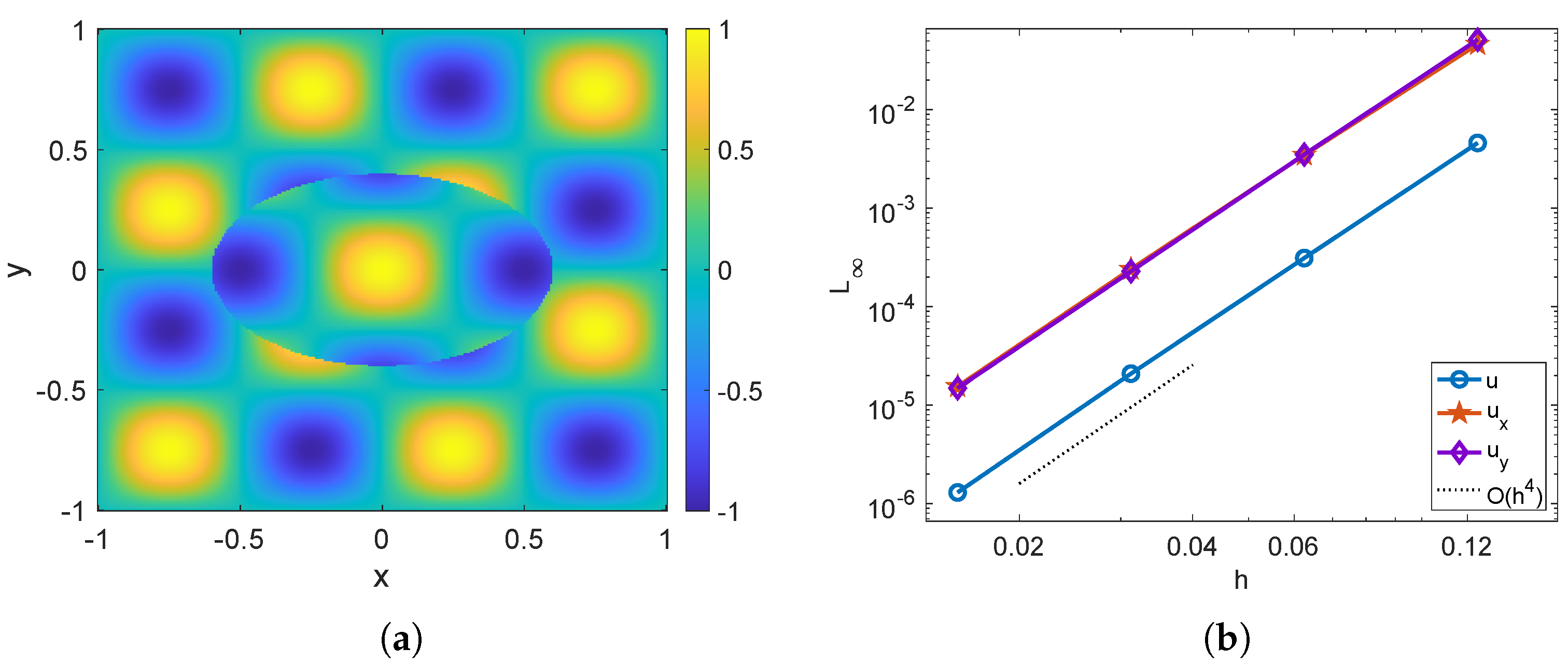

Example 2. The second example concerns the Poisson equation and compares the performance of the three methods on a square domain $\Omega$, with the embedded interface given by a five-headed curve. The exact solution is chosen analytically, from which the corresponding jump information used in the loss function is calculated. A periodic boundary condition is imposed, so the FFT is employed to invert the matrix in both the proposed corrected hybrid scheme and the AMIB scheme [14]. The same neural network setup as in Example 1 is employed. Figure 5a shows the numerical solution of the proposed scheme on one of the test meshes; the discontinuity across the interface is captured sharply. The mesh refinement results for the numerical solution and its gradient are again compared among the three methods in Figure 5b and Figure 6, respectively. The original hybrid method achieves second-order convergence for both the solution and the gradient, whereas both the proposed hybrid method and the AMIB method achieve fourth-order convergence for both.

In Figure 5b and Figure 6, the AMIB method fails to produce numerical solutions and gradients on a coarse grid, whereas the corrected hybrid method remains robust. This discrepancy arises because, on coarse meshes, the AMIB method has difficulty generating fictitious values and constructing accurate Cartesian derivative jumps. In contrast, the corrected hybrid method bypasses this difficulty through its mesh-free neural network component, which keeps it stable and accurate even on coarser grids.

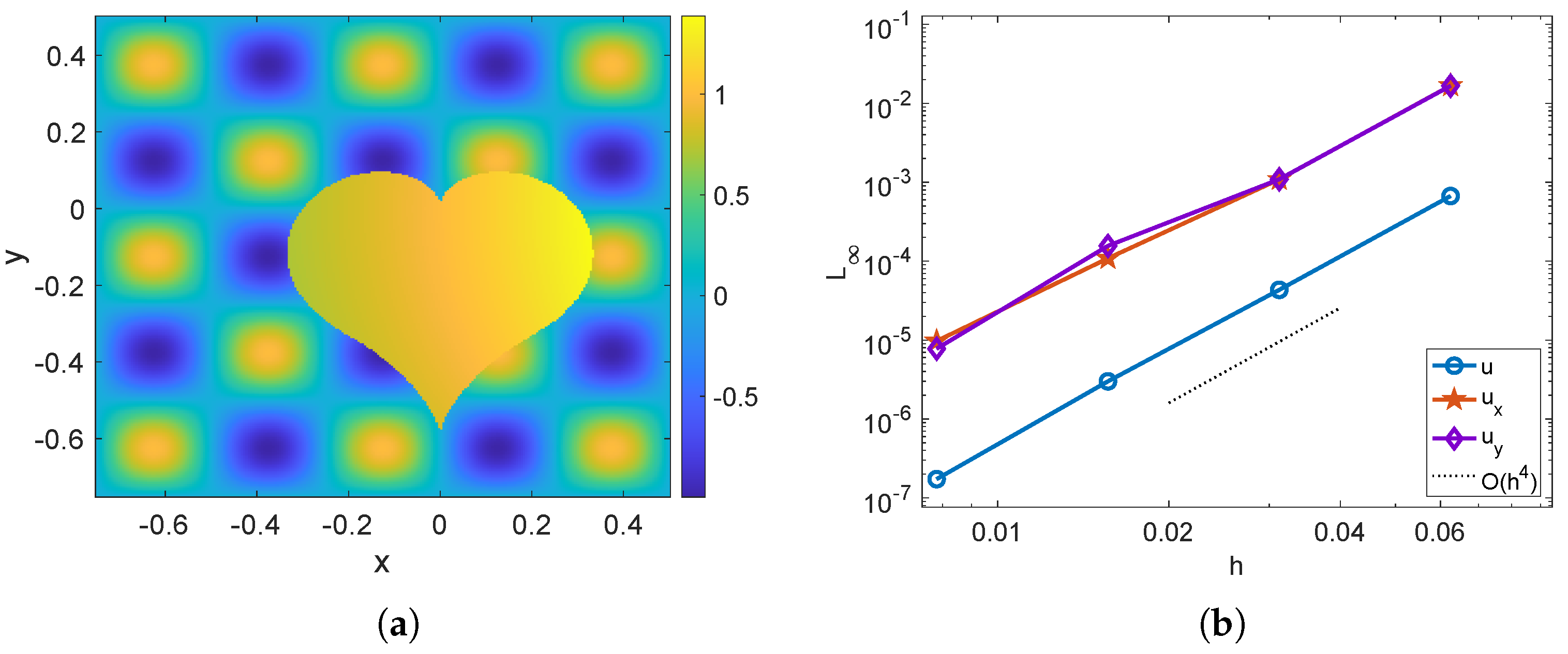

Example 3. In this example, we consider a complex geometry with geometric singularities, which is beyond the capability of the AMIB methods [13,14,15,16]. The problem is defined on a square domain $\Omega$, with the embedded interface given by a heart-shaped curve. The heart-shaped interface has singular points at the origin and at the bottom tip, which make it computationally challenging. The analytical solution and setup are identical to those in Example 2, except for a modified parameter setting. Figure 7a shows the numerical solution on one of the test meshes, demonstrating a sharp discontinuity across the interface. Figure 7b presents the mesh refinement results for the numerical solution and its gradient: the proposed method exhibits fourth-order convergence for both.

Example 4. To assess the reliability of the hybrid method, we consider a case in which the exact solution is unavailable. The problem is defined on a square domain $\Omega$, with the interface $\Gamma$ embedded as an ellipse. The right-hand side function of the Poisson interface problem is prescribed explicitly, and a Dirichlet boundary condition is imposed along the boundary of $\Omega$. As the solution is unknown, the fourth-order geometric multigrid method is employed in the corrected hybrid method to invert the discrete matrix. Jump conditions are prescribed on the interface $\Gamma$, where $\mathbf{n}$ denotes the outward unit normal of $\Gamma$, pointing from $\Omega^-$ to $\Omega^+$. For this test, the neural network is configured with 150 neurons in a single hidden layer and is trained on 300 randomly sampled points along the interface $\Gamma$. Since the exact solution is unavailable, we estimate the maximum error by computing the successive error, where $u_h$ denotes the solution obtained with grid size $h$. Figure 8a displays the numerical solution $u$ at the finest resolution, and Figure 8b presents the successive errors in the maximum norm for $u$ and its gradient. As anticipated, all quantities exhibit fourth-order convergence.

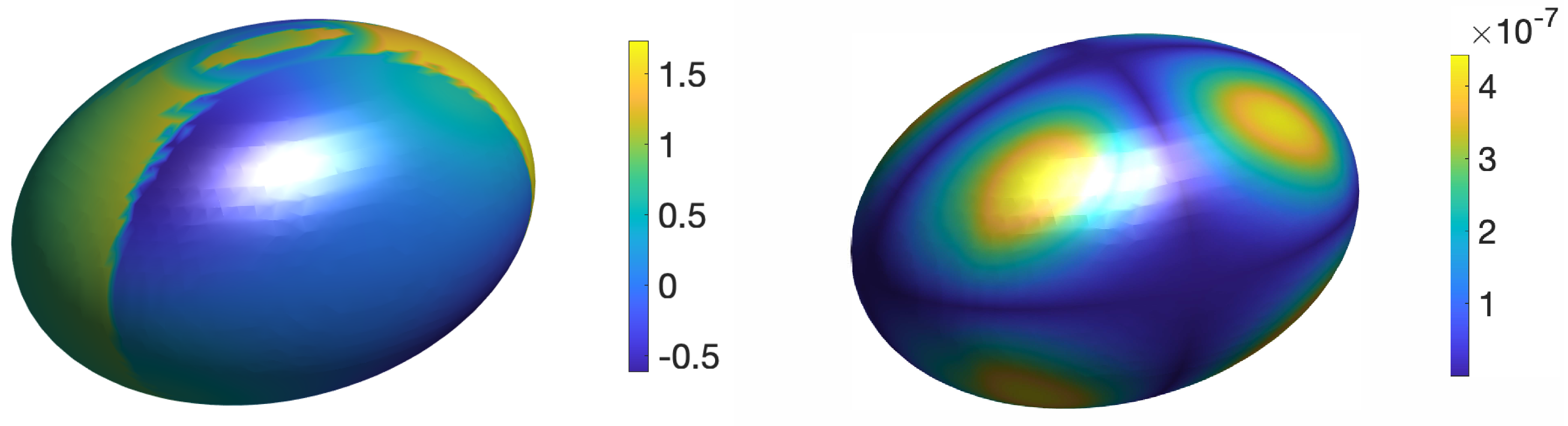

Example 5. We consider a three-dimensional Poisson interface problem of the form (1), where the interface is an ellipsoid embedded in a cubic domain $\Omega$. The exact solution is given analytically, so the corresponding right-hand side $f$ and the jump information are derived accordingly. We use the same shallow network structure as in the 2D examples, with a single hidden layer of 40 neurons, trained on 200 points randomly sampled on the interface to learn the neural network function.

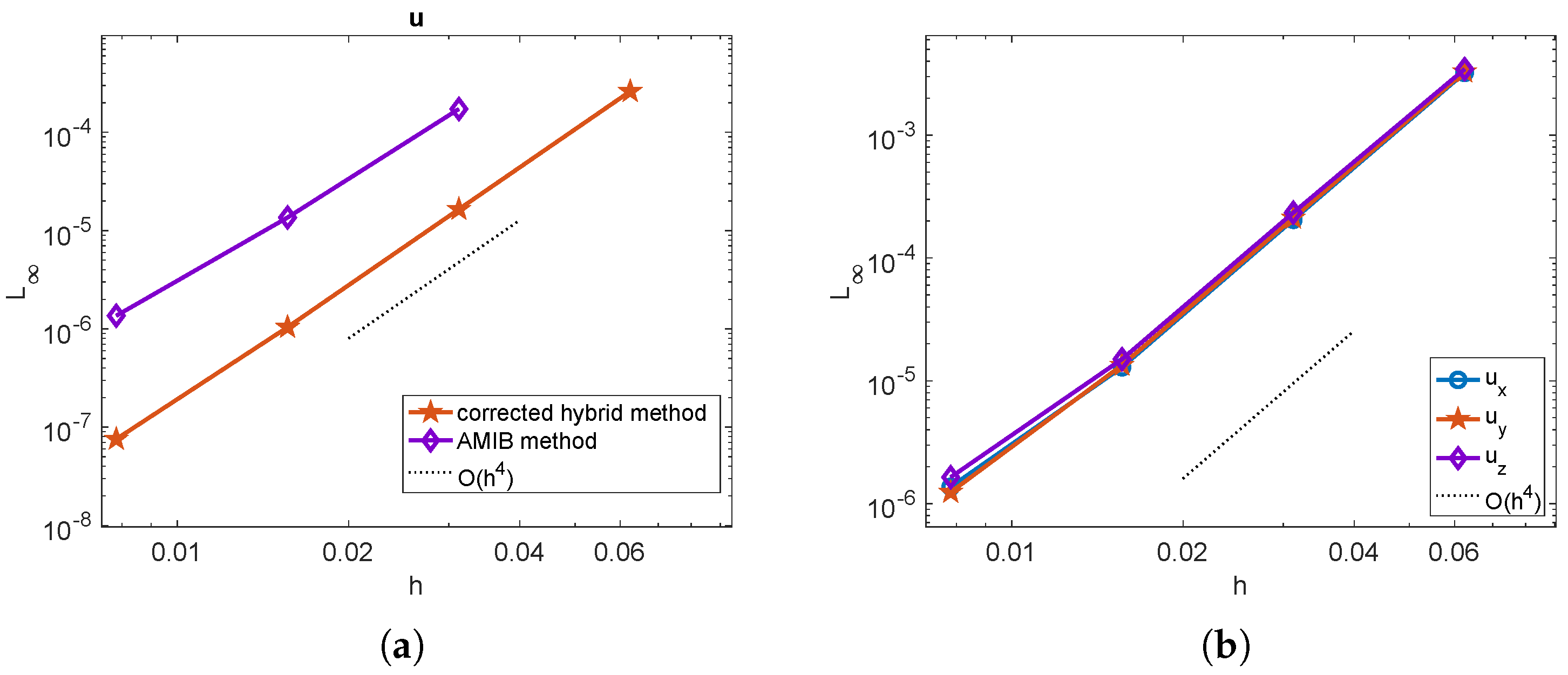

The anti-symmetry property is satisfied at the boundary, allowing the use of the 3D FFT solver in both the corrected hybrid method and the 3D AMIB solver [14]. The numerical solutions and the maximum errors of the proposed corrected hybrid method are visualized in Figure 9, where the solutions and errors are mapped onto the ellipsoid surface $\Gamma$ from both the interior and the exterior.

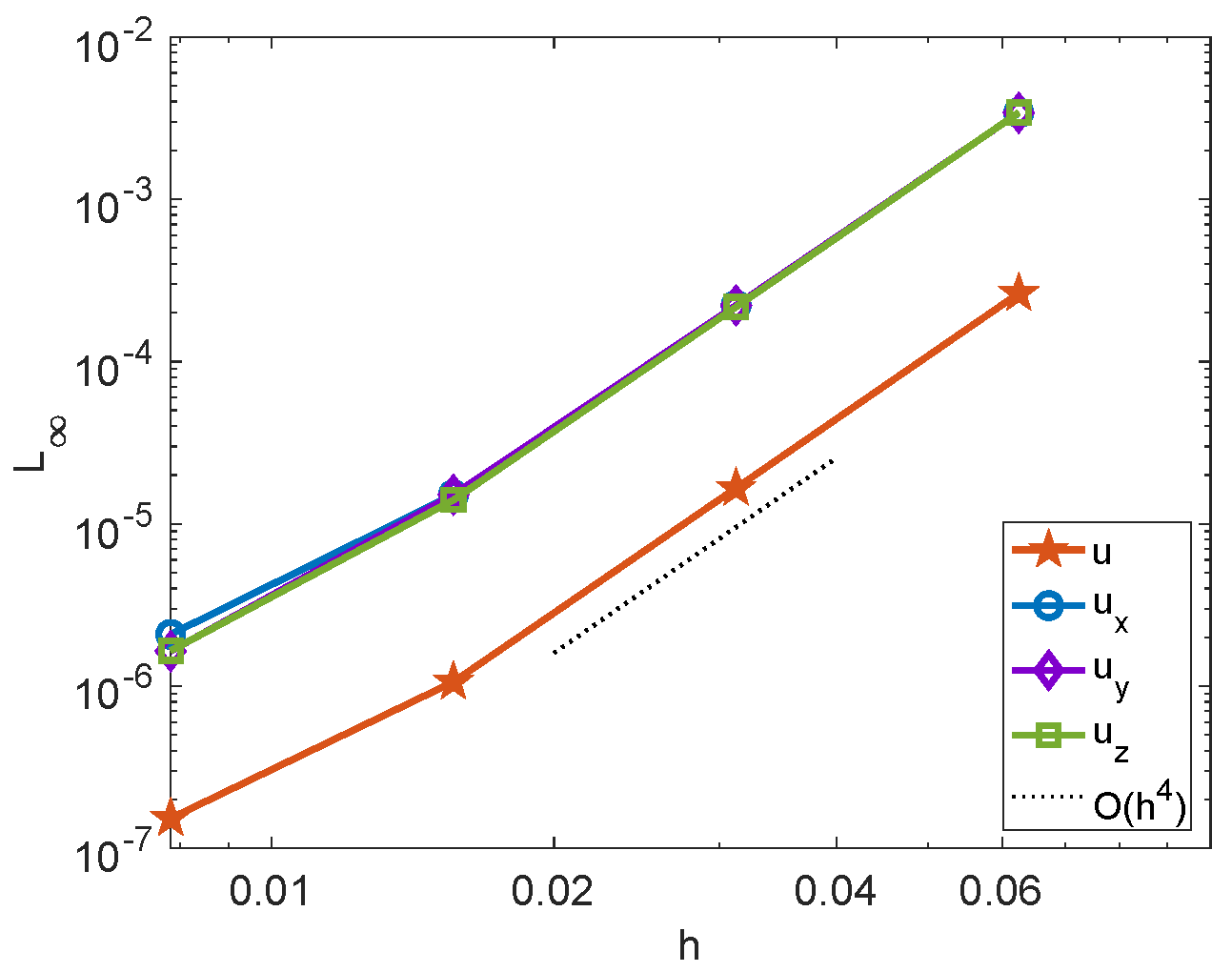

Figure 10a presents the mesh refinement results, demonstrating that both the corrected hybrid method and the 3D AMIB solver [14] achieve fourth-order accuracy for the numerical solution. Notably, the proposed hybrid method is more accurate than the AMIB solver in this test. The corrected hybrid method uses a neural network to predict Cartesian derivative jumps in a straightforward, mesh-free manner, which makes it robust and effective for complex interface geometries, especially in 3D. As in the 2D case, the AMIB solver fails for this 3D problem on a coarse mesh, whereas the corrected hybrid method remains robust. Additionally, Figure 10b shows that the gradient of the proposed hybrid method also achieves fourth-order convergence.

Example 6. We next solve a three-dimensional Poisson interface problem with geometric singularities. The interface is an apple-shaped surface embedded in a cubic domain $\Omega$. The same neural network architecture and analytical solution as in Example 5 are employed. The apple-shaped interface has a singularity at the origin, which poses notable computational difficulties. The numerical solution and the corresponding error of the corrected hybrid method on one of the test meshes are shown in Figure 11, and the mesh refinement results for the numerical solution and its gradient are presented in Figure 12. These results demonstrate that the proposed corrected hybrid method achieves fourth-order convergence for both the solution and its gradient, even in the presence of geometric singularities.

4. Elliptic Interface Problem with Open Interface

To further explore the potential of the proposed corrected hybrid method, we consider an elliptic interface problem with an open interface. Unlike a closed interface, an open interface extends to the boundary of the computational domain (see Figure 13a), which introduces additional challenges for both neural network and numerical methods. In particular, open interface problems have not previously been studied with the original hybrid method [26] or the fourth-order AMIB method [13,14,15].

Since the proposed corrected hybrid method relies on a neural network trained to approximate the difference function D, we first examine the mathematical definition of D for interface problems more closely. In Equations (4) and (5), we assume that the governing equation can be extended over a small band containing all points within a certain distance d of $\Gamma$. Thus, the third jump condition for D in Equation (6) is valid not only on the interface $\Gamma$; it can be extended to this band as follows:

We note that in Equation (21), $f^+$ and $f^-$ are unavailable in $\Omega^-$ and $\Omega^+$, respectively, according to the original elliptic interface problem (1)–(3). In the present study, we assume that the analytical expressions of $f^+$ and $f^-$ are given, so that they can be extended to the other side of the interface $\Gamma$. When only nodal values of $f^+$ and $f^-$ are known, these values can be extrapolated to the other side of $\Gamma$. Such approximations are highly accurate because the bandwidth d is assumed to be small.
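When only nodal values are available, one simple way to extend them across the interface is one-sided polynomial extrapolation along a grid line. The sketch below uses Lagrange extrapolation; this is an assumed choice for illustration, since the text does not specify the extrapolation formula, and the node positions and the stand-in source values in the example are hypothetical.

```python
import math


def lagrange_extrapolate(xs, fs, x):
    """Evaluate the Lagrange polynomial through the points (xs[i], fs[i]) at x.

    x may lie outside [min(xs), max(xs)], which turns interpolation into
    extrapolation; accuracy degrades quickly as x moves away from the nodes.
    """
    total = 0.0
    for i, (xi, fi) in enumerate(zip(xs, fs)):
        weight = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                weight *= (x - xj) / (xi - xj)
        total += fi * weight
    return total


if __name__ == "__main__":
    # Hypothetical 1D grid line: nodes where f^+ is known lie at x >= 0.1,
    # and f^+ is needed at a band point x = 0.08 on the other side.
    nodes = [0.1, 0.15, 0.2, 0.25, 0.3]
    f_plus = [math.exp(x) for x in nodes]  # stand-in for known nodal values
    approx = lagrange_extrapolate(nodes, f_plus, 0.08)
    print(approx, abs(approx - math.exp(0.08)))
```

The interpolation remainder contains the product of the distances from the target point to the nodes, so keeping the band thin keeps the extrapolation distance, and hence this product, small.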

For a closed interface $\Gamma$, the three jump conditions given in Equation (6) over $\Gamma$ provide sufficient constraints for training D, since D is implicitly influenced by neighboring points from all directions around the interface. Note that the interface information enforced in the loss function (7) is defined over a lower-dimensional set ($\Gamma$ is one-dimensional in 2D problems). Such information is self-contained only when $\Gamma$ is closed. In the case of an open interface, however, the three jump conditions no longer provide sufficient constraints in all directions near the interface, because information is lost at the open ends.

For open interface problems, we propose using Equation (21) in place of the third jump condition in Equation (6) when predicting D. This provides more numerical stability in approximating higher-order derivatives of D. In fact, this treatment follows the basic principle of finite difference stencils: high-order derivative approximations are more accurate when computed over multiple points rather than at a single location. To approximate D, training data are collected from points on the interface $\Gamma$ and from points in the small band around $\Gamma$, where the target values are the two prescribed jump values at the interface points and the right-hand side of Equation (21) at the band points. The neural network is trained by minimizing the following MSE loss function:

To minimize the loss function, we employ the Levenberg–Marquardt (LM) method. Once the neural network function is obtained, the subsequent computational procedure is the same as in the corrected hybrid method.
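As a rough illustration of the Levenberg–Marquardt iteration, here is a generic, self-contained sketch. In the setting above, the residual vector would stack the interface jump mismatches and the band residuals of Equation (21) as functions of the network parameters; here a toy two-parameter exponential fit stands in for that. The damping schedule (divide by 10 on success, multiply by 10 on failure), the forward-difference Jacobian, and the stopping rule are assumptions, not the paper's settings.

```python
import math


def solve_dense(A, b):
    """Solve a small dense system A x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def levenberg_marquardt(residuals, theta0, mu=1e-3, max_iter=200, tol=1e-20):
    """Minimize sum_i r_i(theta)^2 with a basic Levenberg-Marquardt iteration.

    `residuals(theta)` returns a list of residuals; the Jacobian is approximated
    by forward differences. Stops when the loss drops below `tol`, when the
    damping becomes huge, or after `max_iter` iterations.
    """
    theta = list(theta0)
    r = residuals(theta)
    loss = sum(ri * ri for ri in r)
    for _ in range(max_iter):
        if loss < tol or mu > 1e12:
            break
        n = len(theta)
        # Jacobian columns: cols[k][i] ~ d r_i / d theta_k
        cols = []
        for k in range(n):
            step = 1e-7 * max(1.0, abs(theta[k]))
            shifted = theta[:]
            shifted[k] += step
            cols.append([(rs - ri) / step for rs, ri in zip(residuals(shifted), r)])
        # Damped normal equations: (J^T J + mu I) delta = -J^T r
        A = [[sum(p * q for p, q in zip(cols[i], cols[j])) + (mu if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        g = [-sum(p * ri for p, ri in zip(cols[i], r)) for i in range(n)]
        delta = solve_dense(A, g)
        trial = [t + d for t, d in zip(theta, delta)]
        r_trial = residuals(trial)
        loss_trial = sum(ri * ri for ri in r_trial)
        if loss_trial < loss:
            # Accept the step and reduce damping (closer to Gauss-Newton).
            theta, r, loss = trial, r_trial, loss_trial
            mu = max(mu * 0.1, 1e-15)
        else:
            # Reject the step and increase damping (closer to gradient descent).
            mu *= 10.0
    return theta, loss


if __name__ == "__main__":
    xs = [0.1 * i for i in range(11)]
    ys = [2.0 * math.exp(0.5 * x) for x in xs]
    theta, loss = levenberg_marquardt(
        lambda th: [th[0] * math.exp(th[1] * x) - y for x, y in zip(xs, ys)], [1.0, 0.0])
    print(theta, loss)
```

The damping parameter lets the method interpolate between gradient descent (large damping, robust far from a minimum) and Gauss–Newton (small damping, fast near a minimum), which is why LM is a common choice for small least-squares training problems such as a shallow network with a few hundred parameters.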

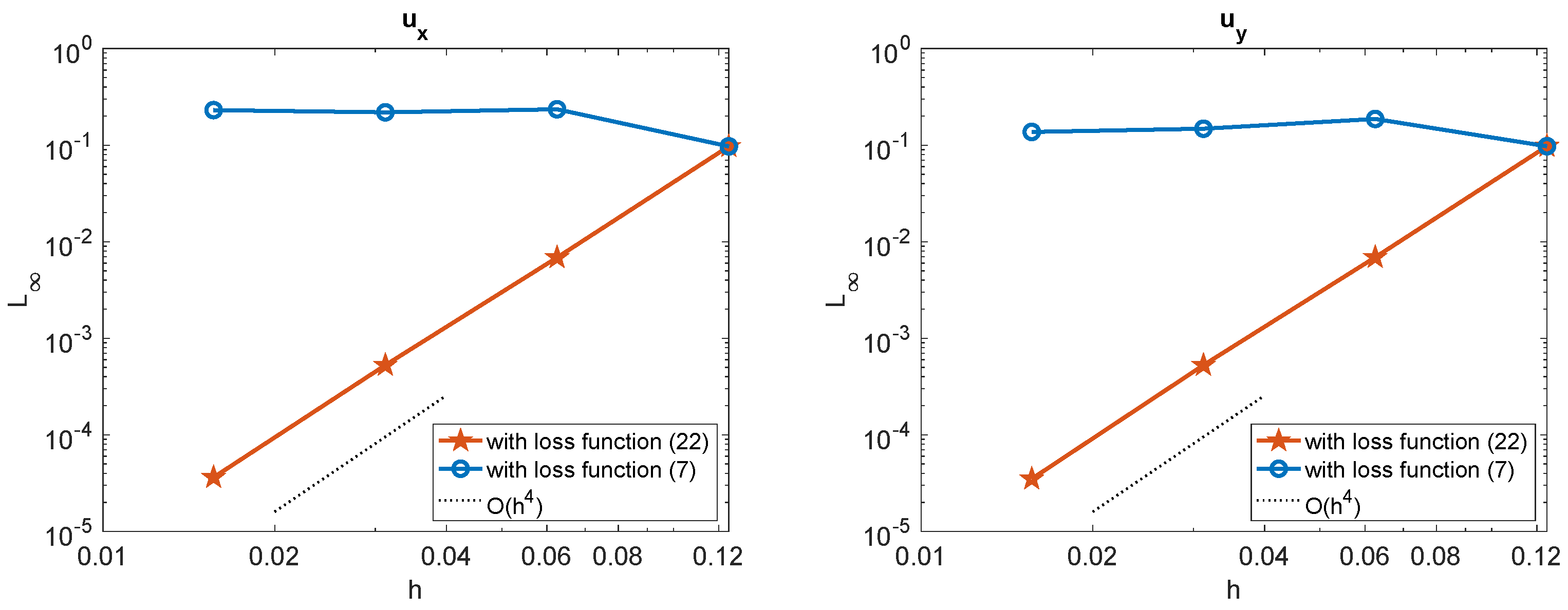

Example 7. Consider an open interface given by an explicit curve equation on the domain $\Omega$ (see Figure 13a). The exact solution is taken from Example 2. For the neural network training, we employ a shallow network and collect training points both on the interface and in the small band around it. The solution of the corrected hybrid method, with the neural network trained using the loss function (22), is plotted in Figure 13a. The performance of the corrected hybrid method is evaluated by comparing the maximum errors of the solutions and gradients obtained with the loss functions (7) and (22); these comparisons are presented in Figure 13b and Figure 14, respectively. Using 40 neurons in the hidden layer, we observe that the loss function (7), with the third constraint applied directly on the interface, leads to numerically unstable high-order Cartesian derivative jumps and, consequently, an inaccurate solution. In contrast, when the loss function (22) is used to train D and the third constraint is applied in the small band, the solution and gradient achieve fourth-order convergence. This example demonstrates that the corrected hybrid method is effective for elliptic interface problems with open interfaces.

However, extending the original hybrid method [26] to open interfaces might be challenging. Because the open interface extends to the boundary of the domain, the information around the interface is incomplete. The missing information at the open ends makes it difficult to apply the jump conditions accurately, since no neighboring region beyond the ends of the interface supplies the necessary constraints. Additionally, the region around the open interface may not have a well-defined structure for enforcing the smoothness conditions of the regular solution.