iSight: A Smart Clothing Management System to Empower Blind and Visually Impaired Individuals

Abstract

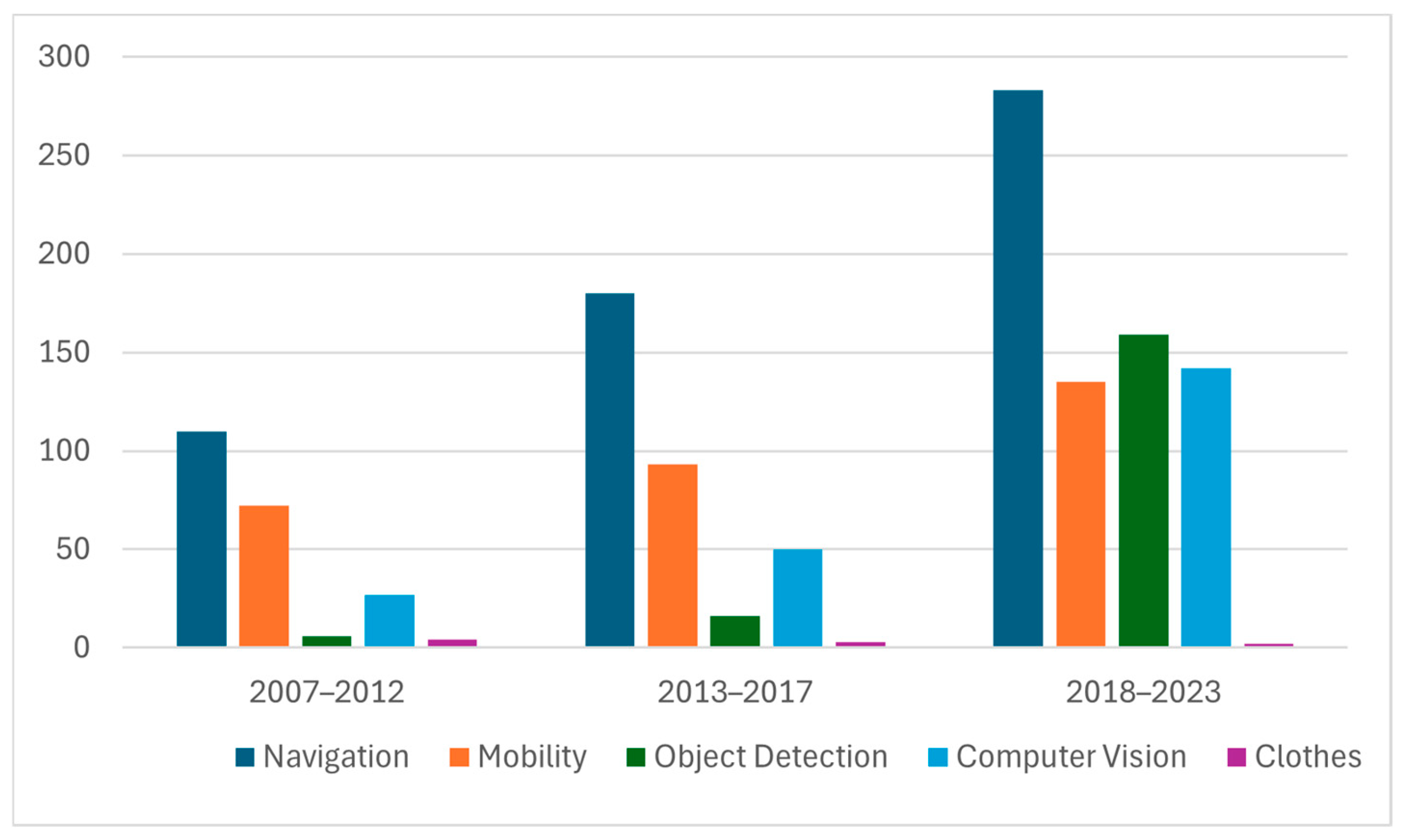

1. Introduction

1.1. Limitations of Existing Assistive Technology

1.2. Gaps in Computer Vision Approaches for Clothing Analysis

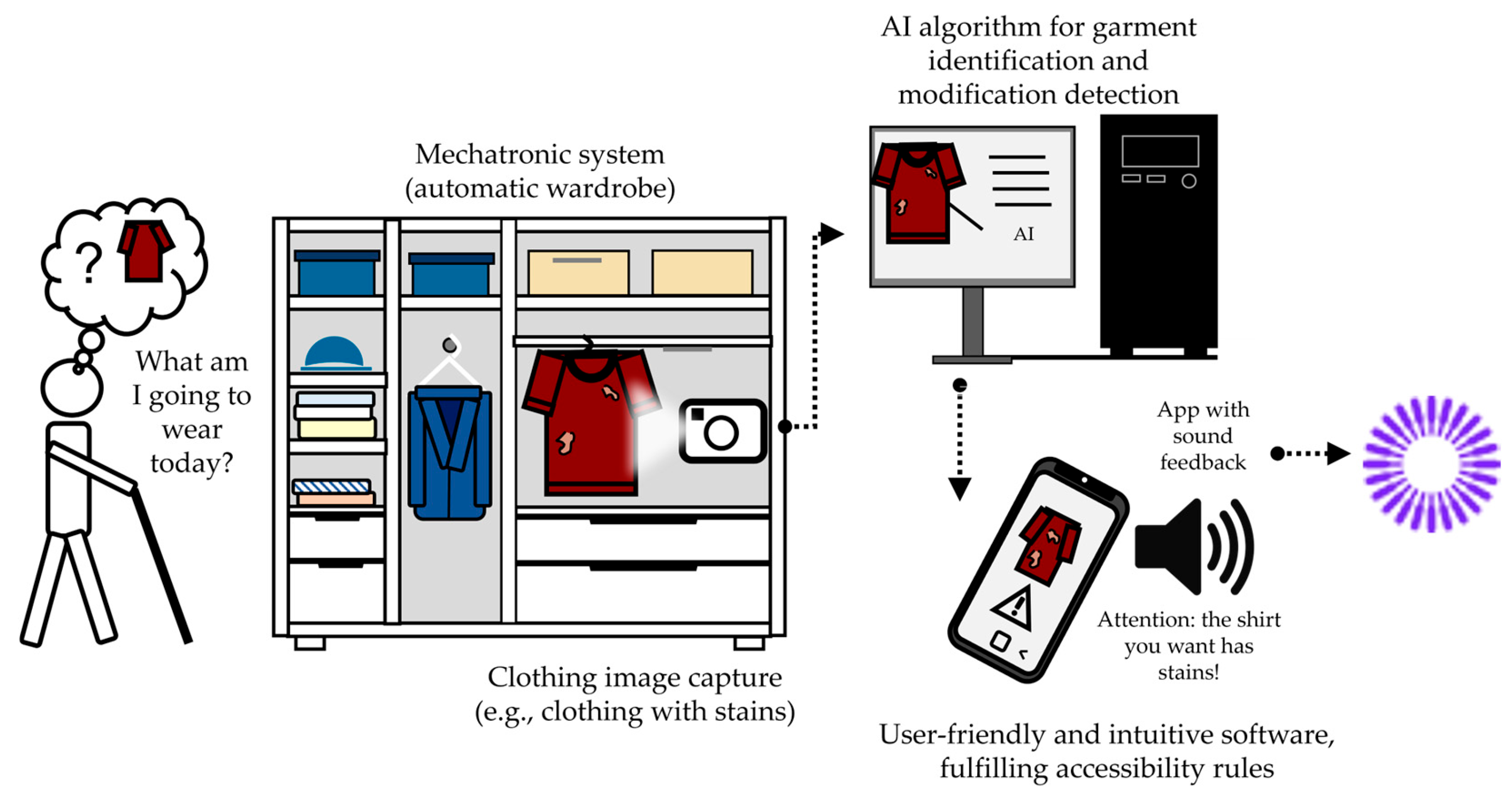

1.3. Proposed Approach

1.4. Paper Structure

2. Methodology

2.1. System Overview and Components

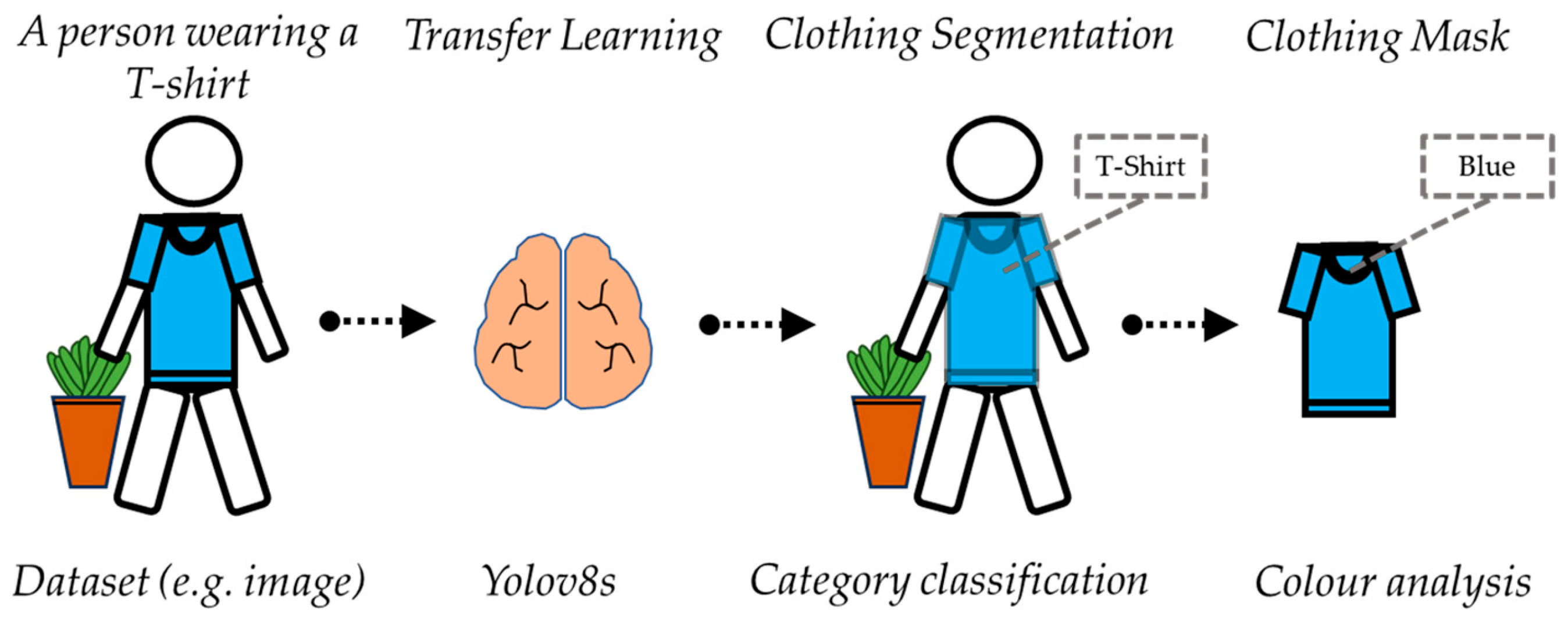

2.2. Development of Deep Learning Algorithms

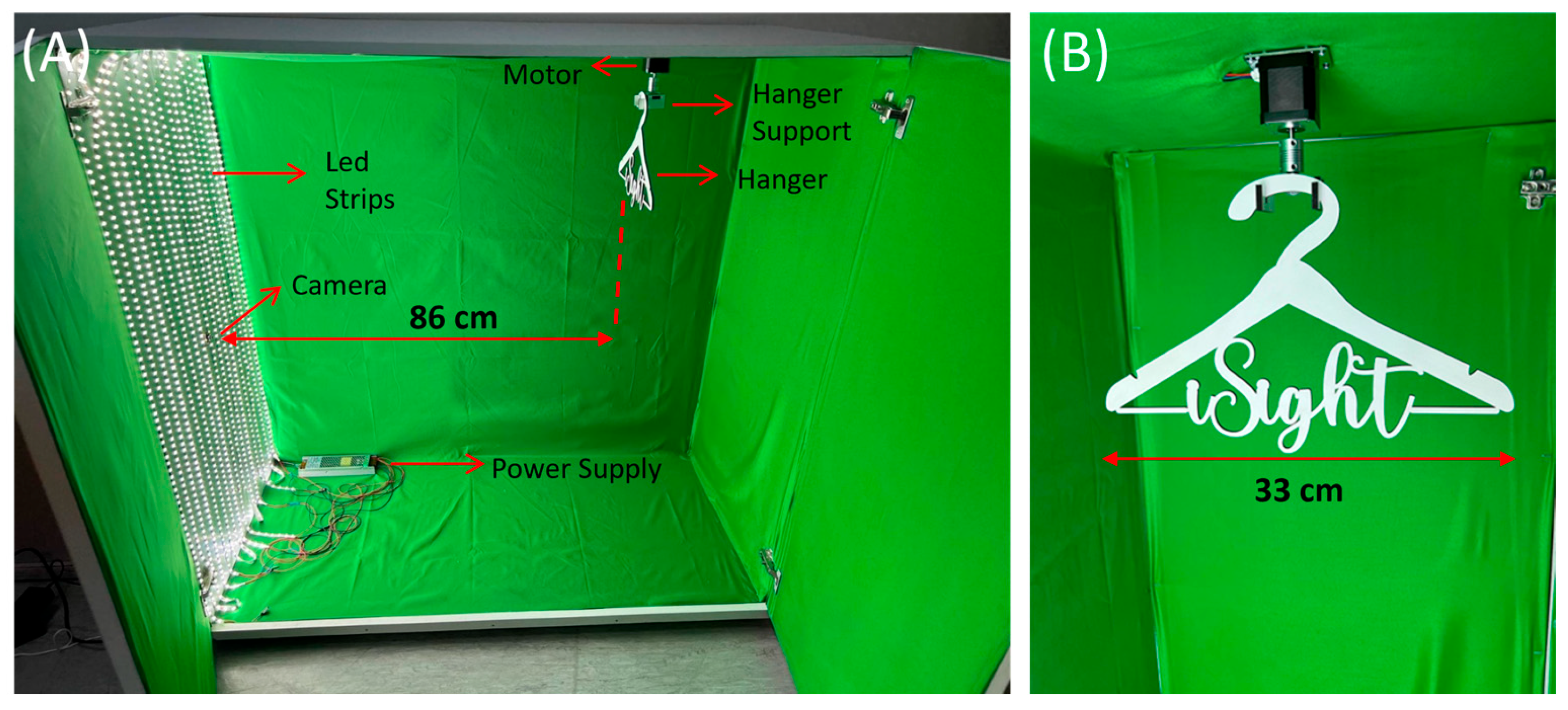

2.3. Smart Wardrobe Design

- Camera Module: The Raspberry Pi Camera Module V3 captures high-resolution images with a wide field of view, enabling detailed garment analysis.

- Stepper Motor: A NEMA 17 stepper motor, controlled by an A4988 motor driver, rotates each garment in precise increments so that its full surface can be imaged.

- LED Lighting: Uniform illumination is achieved through LED strips, enhancing the visibility of garment details.

- NFC Reader: An ITEAD PN532 NFC module reads tags affixed to garments, associating each item with a unique identifier.

- Controller: A Raspberry Pi 4 Model B serves as the system’s computational hub, managing hardware operations and interfacing with the mobile application.
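The way these components cooperate during a scan can be sketched as a simple capture-and-rotate loop. This is a minimal illustration, not the authors' firmware: the function names `plan_rotation` and `scan_garment` are hypothetical, the 200 full steps per revolution is an assumption (typical of 1.8° stepper motors, not stated in the text), and the motor and camera are passed in as plain callables so the sketch runs without any hardware attached.

```python
from typing import Callable, List


def plan_rotation(views: int, steps_per_rev: int = 200, microsteps: int = 1) -> List[int]:
    """Split one full revolution into `views` moves, expressed in driver pulses.

    steps_per_rev=200 is an assumed value (1.8 degree motor); microsteps is the
    microstepping factor configured on the driver (1 = full steps).
    """
    if views <= 0:
        raise ValueError("views must be positive")
    total = steps_per_rev * microsteps
    base, extra = divmod(total, views)
    # Spread the remainder over the first `extra` moves so that the moves
    # add up to exactly one revolution.
    return [base + (1 if i < extra else 0) for i in range(views)]


def scan_garment(
    views: int,
    step_motor: Callable[[int], None],
    capture: Callable[[], bytes],
    steps_per_rev: int = 200,
    microsteps: int = 1,
) -> List[bytes]:
    """Capture one image per view, rotating the garment between captures.

    `step_motor(n)` is a hypothetical hook that sends n step pulses to the
    driver; `capture()` is a hypothetical hook that returns one encoded image.
    """
    images: List[bytes] = []
    for pulses in plan_rotation(views, steps_per_rev, microsteps):
        images.append(capture())  # photograph the face currently in view
        step_motor(pulses)        # then advance to the next face
    return images


if __name__ == "__main__":
    moved: List[int] = []
    frames = scan_garment(4, step_motor=moved.append, capture=lambda: b"frame")
    print(len(frames), moved)
```

Keeping the hardware behind two callables means the same loop can be exercised with stubs during development and wired to the real driver and camera on the controller.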

2.4. Mobile Application Development

- NFC Tag Reading: Users can identify garments by scanning NFC tags attached to them, facilitating quick and accurate retrieval of clothing details.

- Garment Management: The application enables users to add, edit, and organize clothing items into categories such as tops, bottoms, and footwear.

- AI-Powered Analysis: Users can classify garments, detect colours, and identify defects through AI-based inference.
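The features listed above suggest a small data model in which each NFC tag identifier maps to one garment record. The sketch below is illustrative only: the `Garment` and `Wardrobe` classes, their field names, and the `describe` helper are assumptions made for this example and are not taken from the iSight application.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Garment:
    tag_uid: str                      # identifier read from the NFC tag
    name: str
    category: str                     # e.g. "tops", "bottoms", "footwear"
    colour: Optional[str] = None      # filled in by colour detection
    defects: List[str] = field(default_factory=list)  # e.g. ["stain"]


class Wardrobe:
    """In-memory registry keyed by NFC tag identifier."""

    def __init__(self) -> None:
        self._by_tag: Dict[str, Garment] = {}

    def add(self, garment: Garment) -> None:
        if garment.tag_uid in self._by_tag:
            raise ValueError(f"tag {garment.tag_uid} is already registered")
        self._by_tag[garment.tag_uid] = garment

    def lookup(self, tag_uid: str) -> Optional[Garment]:
        """Return the garment for a scanned tag, or None if it is unknown."""
        return self._by_tag.get(tag_uid)

    def edit(self, tag_uid: str, **changes) -> Garment:
        garment = self._by_tag[tag_uid]
        for key, value in changes.items():
            if key == "tag_uid" or not hasattr(garment, key):
                raise AttributeError(f"cannot edit field {key!r}")
            setattr(garment, key, value)
        return garment

    def by_category(self, category: str) -> List[Garment]:
        return [g for g in self._by_tag.values() if g.category == category]


def describe(garment: Garment) -> str:
    """Build a short sentence suitable for a screen reader to speak."""
    parts = [garment.name, f"category {garment.category}"]
    if garment.colour:
        parts.append(f"colour {garment.colour}")
    if garment.defects:
        parts.append("defects: " + ", ".join(garment.defects))
    else:
        parts.append("no defects recorded")
    return ", ".join(parts)


if __name__ == "__main__":
    wardrobe = Wardrobe()
    wardrobe.add(Garment("04A1", "Blue polo", "tops", colour="blue"))
    print(describe(wardrobe.lookup("04A1")))
```

Keying the registry on the tag identifier keeps retrieval to a single dictionary lookup after a scan, and the results of AI-based analysis can be written back to the same record through `edit`.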

3. iSight Evaluation

3.1. Ethical Considerations

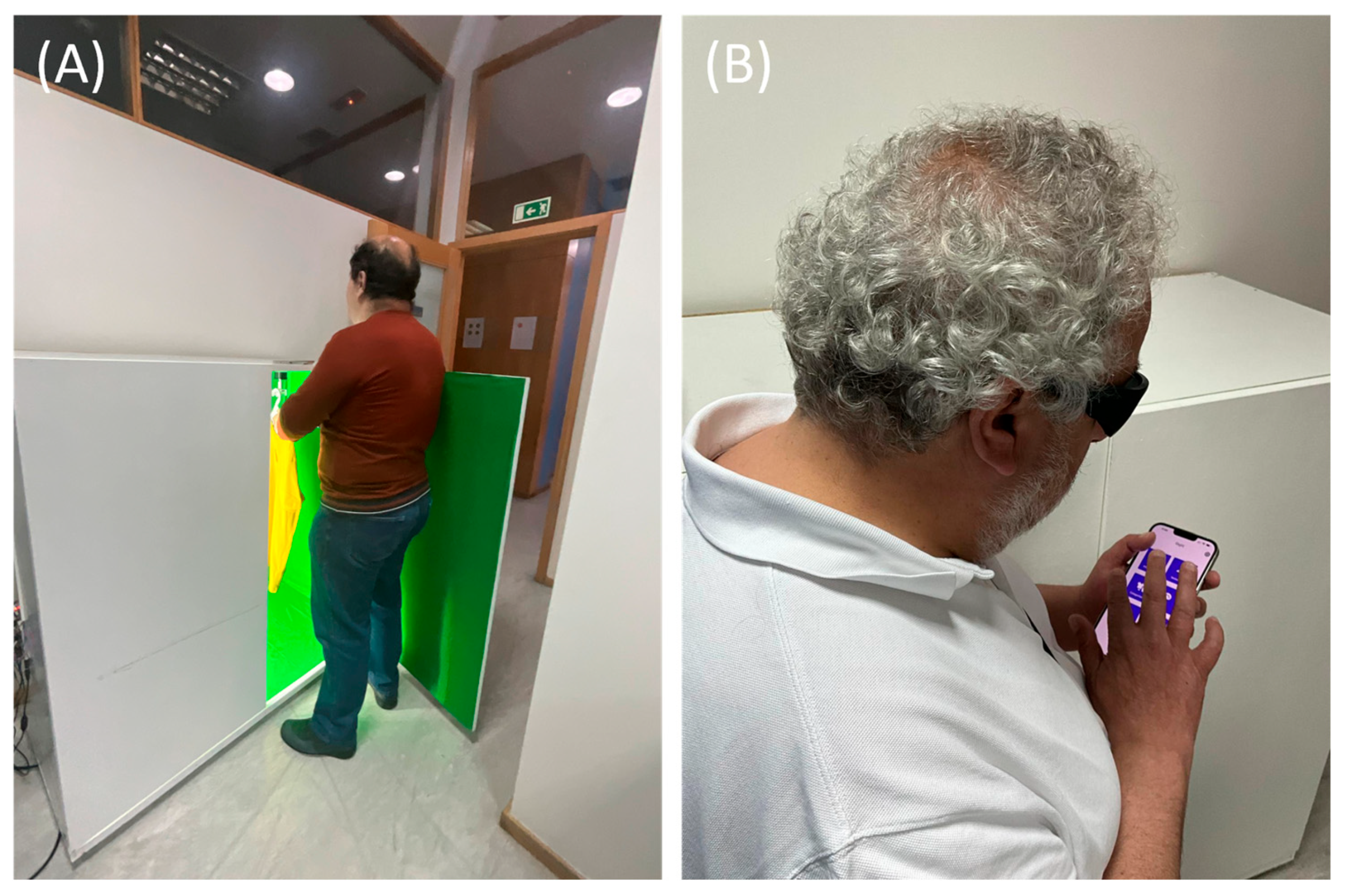

3.2. Testing Protocol

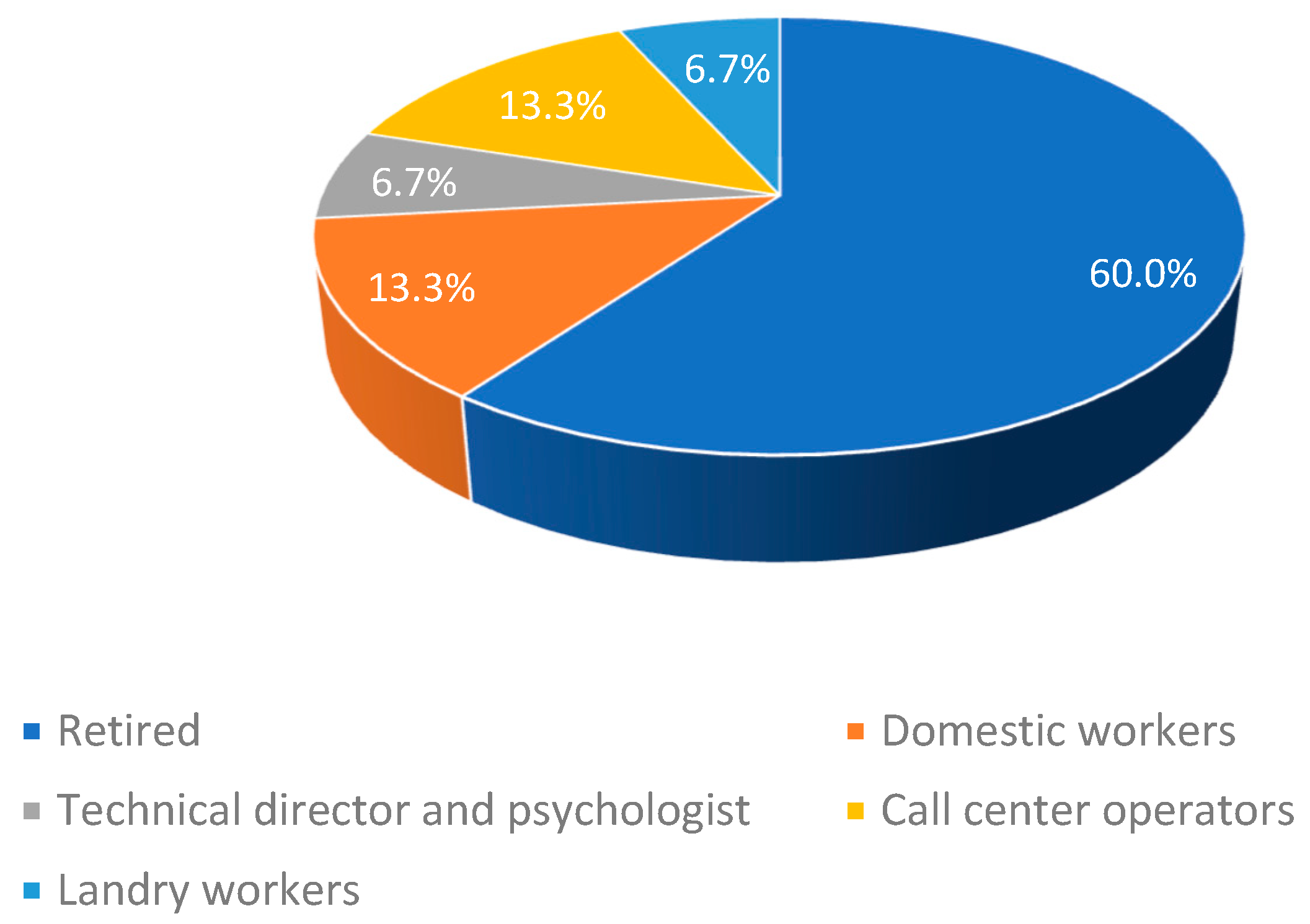

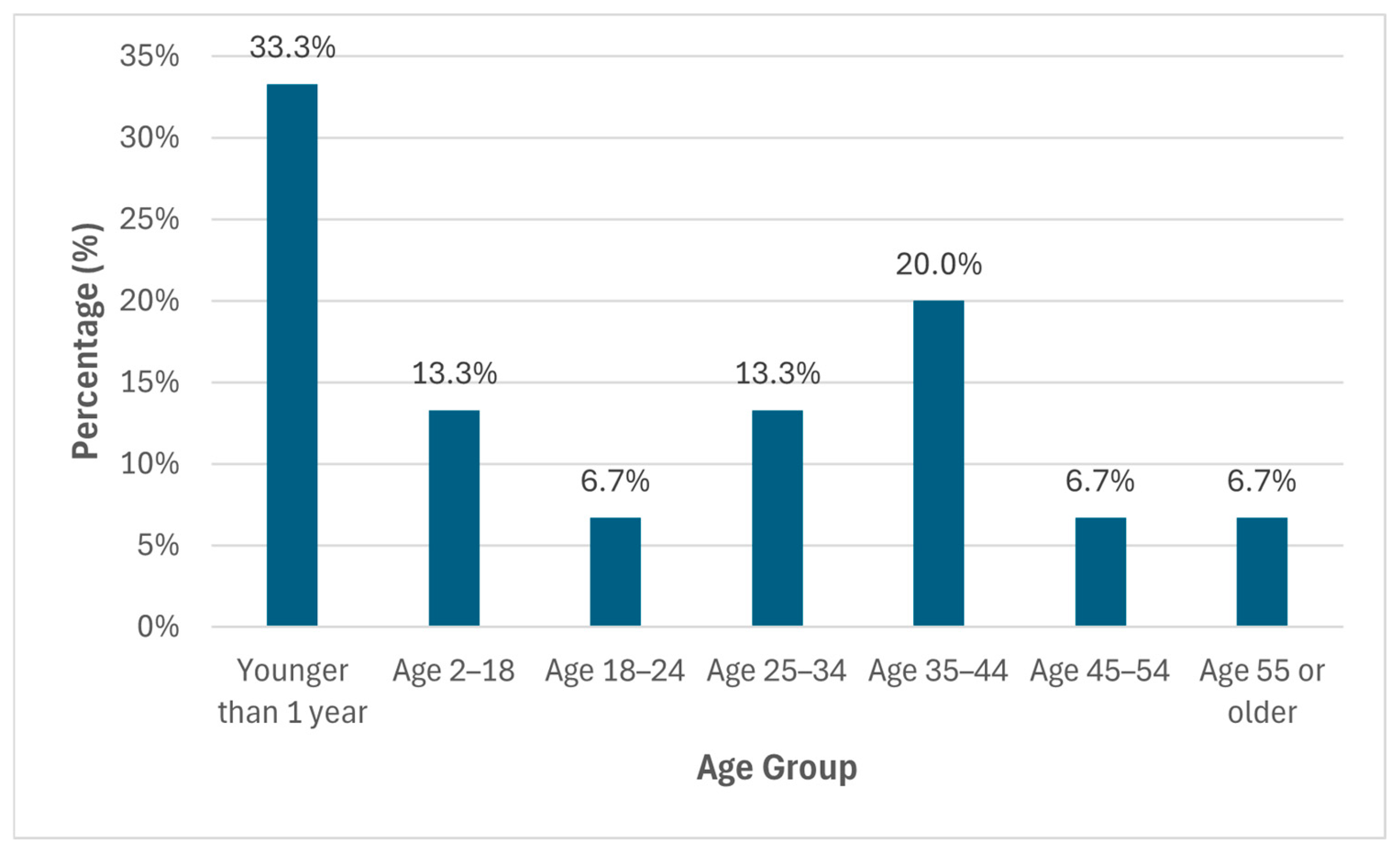

3.3. Sample Characterization Based on Questionnaire Data

- I. Sample Characterization

- II. Type of Visual Impairment

- III. Technology Use and Familiarity

- IV. Accessibility of the iSight Mobile Application

- V. Usability of the iSight Prototype

- VI. Perceived Importance and Impact on Quality of Life

3.4. Statistical Analysis and Relevant Findings

4. Discussion

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AADVDB | Association of Support for the Visually Impaired of Braga District |
| ACAPO | Association of the Blind and Amblyopes of Portugal |
| AI | Artificial Intelligence |
| CEICSH | Ethics Committee for Research in Social and Human Sciences |
| HSV | Hue Saturation Value |
| NFC | Near Field Communication |
| RFID | Radio Frequency Identification |
| RNID | Regulamento Nacional de Interoperabilidade Digital |
| SDG | Sustainable Development Goal |
| WHO | World Health Organization |


| Category | Precision | Recall | AP at IoU = 0.50 | AP |
|---|---|---|---|---|
| all | 0.941 | 0.896 | 0.965 | 0.949 |
| Dress | 0.849 | 0.917 | 0.941 | 0.906 |
| Jacket | 1 | 0.911 | 0.99 | 0.955 |
| Pants | 0.989 | 0.875 | 0.97 | 0.96 |
| Polo | 0.953 | 0.837 | 0.96 | 0.952 |
| Shirt | 0.863 | 0.875 | 0.925 | 0.916 |
| Shoes | 0.996 | 1 | 0.995 | 0.995 |
| Shorts | 0.833 | 0.833 | 0.964 | 0.955 |
| T-shirt | 0.917 | 0.917 | 0.971 | 0.954 |
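The precision and recall values reported per category can be combined into a single F1 score, the harmonic mean of the two. The snippet below is a worked illustration only: F1 is not reported in the source, so the numbers it produces are derived here from the tabulated precision and recall, and the names `f1_score`, `REPORTED`, and `F1` are chosen for this example.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the results table.
REPORTED = {
    "all": (0.941, 0.896),
    "Dress": (0.849, 0.917),
    "Jacket": (1.0, 0.911),
    "Pants": (0.989, 0.875),
    "Polo": (0.953, 0.837),
    "Shirt": (0.863, 0.875),
    "Shoes": (0.996, 1.0),
    "Shorts": (0.833, 0.833),
    "T-shirt": (0.917, 0.917),
}

# Derived values, rounded to three decimals like the table entries.
F1 = {name: round(f1_score(p, r), 3) for name, (p, r) in REPORTED.items()}

if __name__ == "__main__":
    for name, value in F1.items():
        print(f"{name:8s} F1 = {value:.3f}")
```

For the "all" row this gives 2 × 0.941 × 0.896 / (0.941 + 0.896) ≈ 0.918, which sits between the reported precision and recall, as a harmonic mean must.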

| Test/Analysis | Hypothesis | Result Summary | Significance Level * |
|---|---|---|---|
| Fisher’s Exact Test | D1.1: Ease of Navigation vs. Satisfaction | Not significant (p = 0.101) | Not significant |
| Fisher’s Exact Test | D1.2: Ease of Use vs. Satisfaction | Not significant (p = 0.608) | Not significant |
| Spearman’s Rank Correlation | D1.1: Ease of Navigation vs. Satisfaction | Moderate positive correlation, not significant (ρ = 0.500, p = 0.058) | Not significant |
| Spearman’s Rank Correlation | D1.2: Ease of Use vs. Satisfaction | Weak positive correlation, not significant (ρ = 0.189, p = 0.500) | Not significant |
| Mann–Whitney U Test | D2.1: Ease of Navigation vs. Comfort with Technology | Significant (U = 11.00, p = 0.044) | Significant |
| Mann–Whitney U Test | D2.2: Overall Experience vs. Comfort with Technology | Significant (U = 12.00, p = 0.047) | Significant |
| Spearman’s Rank Correlation | D2.1: Comfort with Technology vs. Ease of Navigation | Moderate positive correlation, significant (ρ = 0.572, p = 0.026) | Significant |
| Spearman’s Rank Correlation | D2.2: Comfort with Technology vs. Overall Experience | Strong positive correlation, significant (ρ = 0.627, p = 0.012) | Significant |
| Spearman’s Rank Correlation | D3: Identifying Colours and Identifying Categories | Moderate positive association, significant (ρ = 0.612, p = 0.015) | Significant |
| Spearman’s Rank Correlation | D3: Identifying Colours and Detecting Stains | Weak positive association, not significant (ρ = 0.294, p = 0.287) | Not significant |
| Spearman’s Rank Correlation | D3: Identifying NFC Tags and Detecting Stains | Weak positive association, not significant (ρ = 0.423, p = 0.116) | Not significant |
| Spearman’s Rank Correlation | D4: Comfort with Technology vs. Frequency of Technology Use | Moderate positive correlation, not significant (ρ = 0.488, p = 0.065) | Not significant |
| Spearman’s Rank Correlation | D5: iSight Functionality vs. Increased Confidence, Self-esteem, Well-being, and Independence | Strong positive correlation, significant (ρ = 0.700, p = 0.004) | Significant |
| Fisher’s Exact Test | D5: iSight Functionality vs. Increased Confidence, Self-esteem, Well-being, and Independence | Significant (p = 0.017) | Significant |

\* “Not significant” denotes no correlation/significance, while “Significant” denotes substantial impact/correlation.
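Most rows in the table report Spearman's rank correlation, which suits ordinal questionnaire responses because it depends only on the ordering of the answers. The sketch below shows how ρ can be computed from two lists of ratings using average ranks for ties. It is a generic illustration, not the authors' analysis script: the function names are chosen for this example, the ratings are invented placeholder values, and no p-value is computed (in practice a statistics package such as SciPy's `scipy.stats.spearmanr` returns both ρ and p).

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the rank-transformed data."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in rx))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in ry))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (spread_x * spread_y)


if __name__ == "__main__":
    # Invented 5-point Likert ratings, for illustration only.
    comfort_with_technology = [5, 4, 4, 3, 5, 2, 4, 3]
    ease_of_navigation = [5, 4, 3, 3, 4, 2, 5, 2]
    rho = spearman_rho(comfort_with_technology, ease_of_navigation)
    print(f"rho = {rho:.3f}")
```

Average ranks matter here because Likert-type items produce many ties; the shortcut formula based on squared rank differences is exact only when there are none.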

| Category | Feedback/Suggestion | Affected Component |
|---|---|---|
| Performance Improvements | “This is a very interesting idea for daily use. I would just add fewer menus to make it faster”. | Mobile application interface |
| Performance Improvements | “The application is useful but I would like it to be faster in making choices, that is, to have fewer menus”. | Mobile application interface |
| Feature Enhancements | “Include information such as the fabric of the clothing”. | AI algorithms (Fabric identification) |
| Feature Enhancements | “Read label characteristics such as: washing instructions, ironing temperature, and whether it can be bleached”. | AI algorithms (Label detection and reading) |
| Feature Enhancements | “Check the type of fabric of the clothing”. | AI algorithms (Fabric identification) |
| Feature Enhancements | “Check if there is a mix-up of shoes in similar models”. | AI algorithms (Object recognition) |
| Feature Enhancements | “It would be interesting to also know the location of the stain on the piece of clothing”. | AI algorithms (Stain localization) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rocha, D.; Leão, C.P.; Soares, F.; Carvalho, V. iSight: A Smart Clothing Management System to Empower Blind and Visually Impaired Individuals. Information 2025, 16, 383. https://doi.org/10.3390/info16050383
