Human Activity Recognition for Production and Logistics—A Systematic Literature Review

Abstract

1. Introduction

- What is the current status of research regarding HAR for P+L and related domains from a practitioner’s perspective?

- What are the specifications of current applications regarding the sensor technology, recording environment and utilised datasets?

- What methods of HAR are deployed?

- What is the research gap to enhance HAR in P+L? What does the future road map look like?

2. Demarcation from Related Surveys

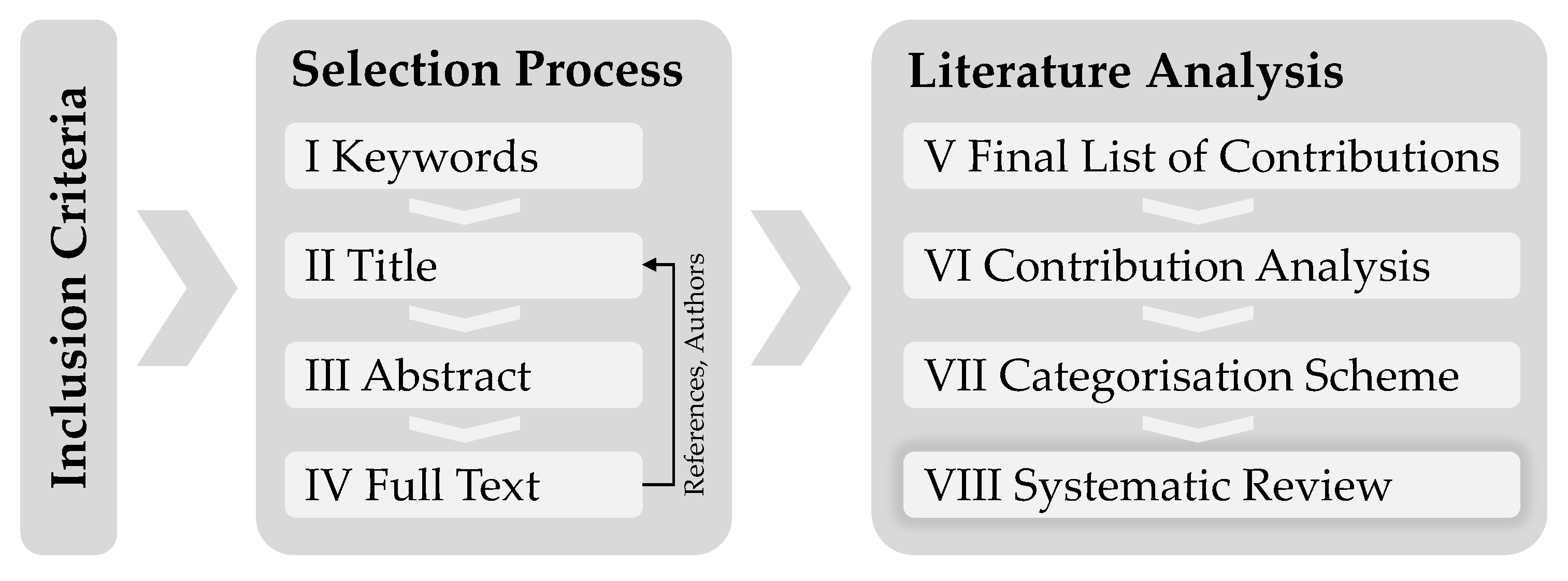

3. Method of Literature Review

3.1. Inclusion Criteria

3.2. Selection Process

3.3. Literature Analysis

- the initial situation and scope;

- the methodological and empirical results; and

- the further research demand.

4. Results

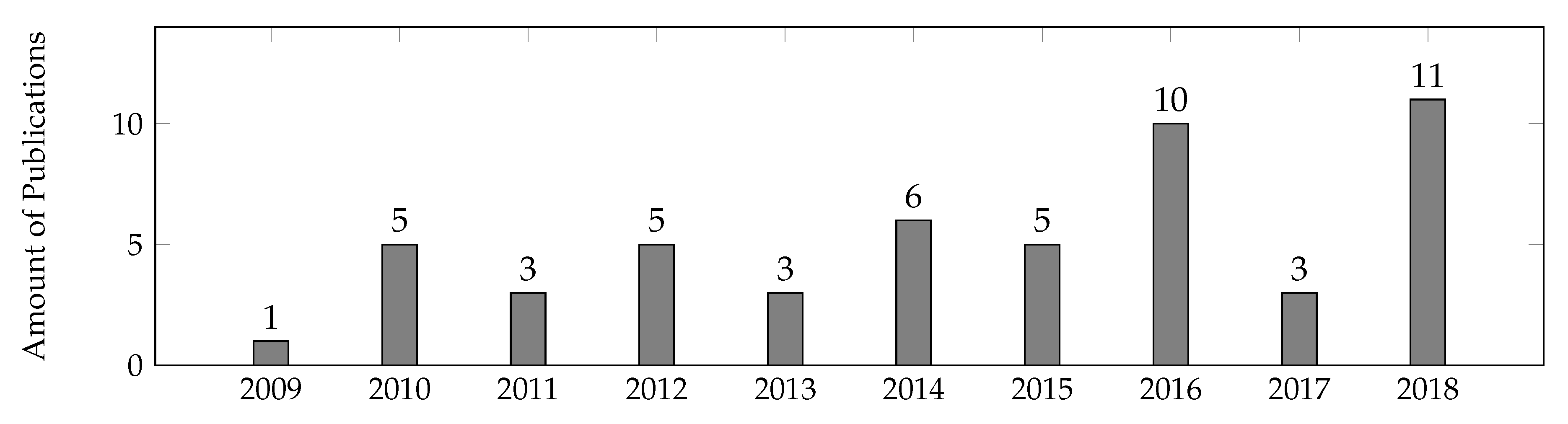

4.1. Contributions Per Stage and Reasons for Exclusion during Selection Process

4.2. Systematic Review of Relevant Contributions

4.2.1. Application

Domain

Activity

Attachment

Dataset

4.2.2. HAR Methods

Data Representation

Pre-Processing

Segmentation

Shallow Methods

Deep Learning

Metrics

5. Discussion and Conclusions

- What is the current status of research regarding HAR for P+L and related domains from a practitioner’s perspective?

For the past 10 years, eight publications dealing with HAR in P+L have been identified. They address a variety of use cases, but none covers the entire domain. Apart from two applications [83,85], the approaches assume a predefined set of activities, which is a drawback given the versatility of human work in P+L. Furthermore, the effort required to create a dataset is unknown, which makes the expenditure for deploying HAR in industry difficult to predict. Applications in related domains cover locomotion activities as well as exercises and ADLs that resemble manual work in P+L, allowing for their transfer to this domain.

- What are the specifications of current applications regarding the sensor technology, recording environment and utilised datasets?

The vast majority of research uses IMUs worn on the body or the accelerometers of smartphones. The reviewers found no apparent link between the activities to be recognised and the sensor attachment. Seven of the eight P+L contributions use data recorded in a laboratory. In total, 39 contributions use real-life data and 19 use laboratory data; only four papers use both. The reviewers found no work on training a classifier with laboratory data for deployment in a real-life P+L facility, nor on transfer learning between datasets and scenarios in an industrial context. Most publications proposed their own datasets or used individual excerpts of data available in repositories; thus, their methods and results can hardly be replicated.

- What methods of HAR are deployed?

Current publications solve HAR using either a standard pattern recognition algorithm or deep networks. For segmenting signals, publications use only the sliding window approach. The window size varies strongly with the recording scenario. However, the overlap is usually . For the standard methods, a large number of statistical features in the time and frequency domains is used, the most common being variance, mean, correlation, energy and entropy. Deep networks have been applied successfully to HAR. Compared with networks in the vision domain, they are relatively shallow. Temporal CNNs (tCNNs) or combinations of tCNNs and RNNs show the best results. Accuracy is the most frequently used metric for evaluating HAR methods. However, methods using datasets with imbalanced annotations should be evaluated with precision, recall and the F1 score; otherwise, their performance is not assessed correctly.

- What is the research gap to enhance HAR in P+L? What does the future road map look like?

From the reviewers’ perspective, further research on HAR for P+L should focus on five issues. First, a high-quality benchmark dataset for HAR methods to be deployed in P+L is missing. This dataset should contain motion patterns that are as close to reality as possible and allow for comparison among different methods, thus being relevant for application in industry. Second, it must become possible to quantify the effort of dataset creation, including both recording and annotation following a predefined protocol. This allows a holistic effort estimation when deploying HAR in P+L. Third, most of the activities observed in the literature corpus are simplistic and do not cover the entirety of manual work in P+L. Furthermore, in such a rapidly evolving industry, the definition of activities cannot be fixed at design time and expected to remain unchanged at run time. HAR methods for P+L must address this issue. Fourth, regarding methods, the segmentation approach should be revised in detail, as a window-based approach is currently the only method for generating activity hypotheses. This approach does not handle activities that differ in duration; a new method for recognising activities of strongly different durations is needed. Fifth, methods using deep networks do not provide a confidence measure. Even though these networks show state-of-the-art performance on benchmark datasets, they are still overconfident in their predictions. For this reason, integrating deep architectures with probabilistic reasoning to solve HAR using context information can be difficult.
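The standard shallow pipeline summarised above (sliding-window segmentation, statistical features per window, and evaluation with accuracy versus F1) can be sketched as follows. This is a minimal illustration, assuming NumPy, a signal array of shape (samples, channels) and hypothetical function names (`sliding_windows`, `window_features`, `accuracy`, `macro_f1`). The window size, the overlap and the spectral definitions of energy and entropy are common conventions chosen for illustration, not settings taken from the reviewed publications.

```python
import numpy as np


def sliding_windows(signal: np.ndarray, window_size: int, overlap: float) -> np.ndarray:
    """Cut a (samples, channels) signal into fixed-size, overlapping windows."""
    step = max(1, int(round(window_size * (1.0 - overlap))))
    starts = range(0, len(signal) - window_size + 1, step)
    if len(starts) == 0:  # signal shorter than one window
        return np.empty((0, window_size, signal.shape[1]))
    return np.stack([signal[s:s + window_size] for s in starts])


def window_features(window: np.ndarray) -> np.ndarray:
    """Per-channel mean, variance, spectral energy and spectral entropy,
    plus the Pearson correlation of every channel pair (assumed definitions)."""
    n, channels = window.shape
    mean = window.mean(axis=0)
    var = window.var(axis=0)
    # Magnitude spectrum without the DC bin (the DC bin is the window sum).
    spectrum = np.abs(np.fft.rfft(window, axis=0))[1:]
    energy = (spectrum ** 2).sum(axis=0) / n
    # Normalise the magnitudes to a distribution and take its Shannon entropy.
    p = spectrum / np.maximum(spectrum.sum(axis=0), 1e-12)
    entropy = -(p * np.log2(np.where(p > 0, p, 1.0))).sum(axis=0)
    corr = np.empty(0)
    if channels > 1:
        iu = np.triu_indices(channels, k=1)
        # Constant channels yield NaN correlations; suppress warnings and map them to 0.
        with np.errstate(divide="ignore", invalid="ignore"):
            corr = np.nan_to_num(np.corrcoef(window, rowvar=False)[iu])
    return np.concatenate([mean, var, energy, entropy, corr])


def accuracy(y_true, y_pred) -> float:
    """Fraction of correctly labelled windows."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def macro_f1(y_true, y_pred) -> float:
    """Unweighted mean of per-class F1 scores, so every class counts equally."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    scores = []
    for c in np.unique(np.concatenate([y_true, y_pred])):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        precision = tp / (tp + fp) if tp + fp > 0 else 0.0
        recall = tp / (tp + fn) if tp + fn > 0 else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom > 0 else 0.0)
    return float(np.mean(scores))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    imu = rng.normal(size=(500, 3))  # hypothetical 3-axis accelerometer trace
    windows = sliding_windows(imu, window_size=100, overlap=0.5)
    features = np.array([window_features(w) for w in windows])
    print(windows.shape, features.shape)  # (9, 100, 3) (9, 15)

    y_true = ["walking"] * 90 + ["picking"] * 10  # imbalanced toy labels
    y_pred = ["walking"] * 100  # majority-class predictor
    print(f"accuracy={accuracy(y_true, y_pred):.2f}, macro F1={macro_f1(y_true, y_pred):.2f}")
    # accuracy=0.90, macro F1=0.47
```

In the toy example, a classifier that always predicts the majority class reaches an accuracy of 0.90 but a macro-averaged F1 score of only about 0.47, because the minority class is never recognised. This gap is why precision, recall and F1 are the more informative choice for datasets with imbalanced annotations.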

Author Contributions

Funding

Conflicts of Interest

References

- Dregger, J.; Niehaus, J.; Ittermann, P.; Hirsch-Kreinsen, H.; ten Hompel, M. Challenges for the future of industrial labor in manufacturing and logistics using the example of order picking systems. Procedia CIRP 2018, 67, 140–143.

- Hofmann, E.; Rüsch, M. Industry 4.0 and the current status as well as future prospects on logistics. Comput. Ind. 2017, 89, 23–34.

- Michel, R. 2016 Warehouse/DC Operations Survey: Ready to Confront Complexity; Northwestern University Transportation Library: Evanston, IL, USA, 2016.

- Schlögl, D.; Zsifkovits, H. Manuelle Kommissioniersysteme und die Rolle des Menschen. BHM Berg-und Hüttenmänn. Monatshefte 2016, 161, 225–228.

- Liang, C.; Chee, K.J.; Zou, Y.; Zhu, H.; Causo, A.; Vidas, S.; Teng, T.; Chen, I.M.; Low, K.H.; Cheah, C.C. Automated Robot Picking System for E-Commerce Fulfillment Warehouse Application. In Proceedings of the 14th IFToMM World Congress, Taipei, Taiwan, 25–30 October 2015; pp. 398–403.

- Oleari, F.; Magnani, M.; Ronzoni, D.; Sabattini, L. Industrial AGVs: Toward a pervasive diffusion in modern factory warehouses. In Proceedings of the 2014 IEEE 10th International Conference on Intelligent Computer Communication and Processing (ICCP), Piscataway, NJ, USA, 4–6 September 2014; pp. 233–238.

- Grosse, E.H.; Glock, C.H.; Neumann, W.P. Human Factors in Order Picking System Design: A Content Analysis. IFAC-PapersOnLine 2015, 48, 320–325.

- Calzavara, M.; Glock, C.H.; Grosse, E.H.; Persona, A.; Sgarbossa, F. Analysis of economic and ergonomic performance measures of different rack layouts in an order picking warehouse. Comput. Ind. Eng. 2017, 111, 527–536.

- Grosse, E.H.; Calzavara, M.; Glock, C.H.; Sgarbossa, F. Incorporating human factors into decision support models for production and logistics: Current state of research. IFAC-PapersOnLine 2017, 50, 6900–6905.

- Chen, C.; Jafari, R.; Kehtarnavaz, N. A survey of depth and inertial sensor fusion for human action recognition. Multimed. Tools Appl. 2017, 76, 4405–4425.

- Ordóñez, F.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

- Haescher, M.; Matthies, D.J.; Srinivasan, K.; Bieber, G. Mobile Assisted Living: Smartwatch-based Fall Risk Assessment for Elderly People. In Proceedings of the 5th International Workshop on Sensor-Based Activity Recognition and Interaction iWOAR’18, Berlin, Germany, 20–21 September 2018; pp. 6:1–6:10.

- Hölzemann, A.; Van Laerhoven, K. Using Wrist-Worn Activity Recognition for Basketball Game Analysis. In Proceedings of the 5th International Workshop on Sensor-Based Activity Recognition and Interaction iWOAR’18, Berlin, Germany, 20–21 September 2018; pp. 13:1–13:6.

- Zeng, M.; Nguyen, L.T.; Yu, B.; Mengshoel, O.J.; Zhu, J.; Wu, P.; Zhang, J. Convolutional Neural Networks for Human Activity Recognition using Mobile Sensors. In Proceedings of the 6th International Conference on Mobile Computing, Applications and Services, ICST, Austin, TX, USA, 6–7 November 2014.

- Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional Two-Stream Network Fusion for Video Action Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1933–1941.

- Ronao, C.A.; Cho, S.B. Deep Convolutional Neural Networks for Human Activity Recognition with Smartphone Sensors. In Neural Information Processing; Lecture Notes in Computer Science; Arik, S., Huang, T., Lai, W.K., Liu, Q., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 46–53.

- Yang, J.B.; Nguyen, M.N.; San, P.P.; Li, X.L.; Krishnaswamy, S. Deep Convolutional Neural Networks on Multichannel Time Series for Human Activity Recognition. In Proceedings of the 24th International Conference on Artificial Intelligence IJCAI’15, Buenos Aires, Argentina, 25–31 July 2015; pp. 3995–4001.

- Bishop, C.M. Pattern Recognition and Machine Learning; Information Science and Statistics; Springer: Cham, Switzerland, 2006.

- Fink, G.A. Markov Models for Pattern Recognition: From Theory to Applications, 2nd ed.; Advances in Computer Vision and Pattern Recognition; Springer: Cham, Switzerland, 2014.

- Twomey, N.; Diethe, T.; Fafoutis, X.; Elsts, A.; McConville, R.; Flach, P.; Craddock, I. A Comprehensive Study of Activity Recognition Using Accelerometers. Informatics 2018, 5, 27.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2015.

- Yao, R.; Lin, G.; Shi, Q.; Ranasinghe, D.C. Efficient dense labelling of human activity sequences from wearables using fully convolutional networks. Pattern Recognit. 2018, 78, 252–266.

- Feldhorst, S.; Aniol, S.; ten Hompel, M. Human Activity Recognition in der Kommissionierung– Charakterisierung des Kommissionierprozesses als Ausgangsbasis für die Methodenentwicklung. Logist. J. Proc. 2016, 2016.

- Alam, M.A.U.; Roy, N. Unseen Activity Recognitions: A Hierarchical Active Transfer Learning Approach. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 436–446.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Luan, P.G.; Tan, N.T.; Thinh, N.T. Estimation and Recognition of Motion Segmentation and Pose IMU-Based Human Motion Capture. In Robot Intelligence Technology and Applications 5; Advances in Intelligent Systems and Computing; Kim, J.H., Myung, H., Kim, J., Xu, W., Matson, E.T., Jung, J.W., Choi, H.L., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 383–391.

- Pfister, A.; West, A.M.; Bronner, S.; Noah, J.A. Comparative abilities of Microsoft Kinect and Vicon 3D motion capture for gait analysis. J. Med. Eng. Technol. 2014, 38, 274–280.

- Schlagenhauf, F.; Sahoo, P.P.; Singhose, W. A Comparison of Dual-Kinect and Vicon Tracking of Human Motion for Use in Robotic Motion Programming. Robot Autom. Eng. J. 2017, 1, 555558.

- Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. 2014, 46, 1–33.

- Roggen, D.; Förster, K.; Calatroni, A.; Tröster, G. The adARC pattern analysis architecture for adaptive human activity recognition systems. J. Ambient. Intell. Humaniz. Comput. 2013, 4, 169–186.

- Dalmazzo, D.; Tassani, S.; Ramírez, R. A Machine Learning Approach to Violin Bow Technique Classification: A Comparison Between IMU and MOCAP systems. In Proceedings of the 5th International Workshop on Sensor-Based Activity Recognition and Interaction iWOAR’18, Berlin, Germany, 20–21 September 2018; pp. 12:1–12:8.

- Vinciarelli, A.; Esposito, A.; André, E.; Bonin, F.; Chetouani, M.; Cohn, J.F.; Cristani, M.; Fuhrmann, F.; Gilmartin, E.; Hammal, Z.; et al. Open Challenges in Modelling, Analysis and Synthesis of Human Behaviour in Human–Human and Human–Machine Interactions. Cogn. Comput. 2015, 7, 397–413.

- Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

- Xing, S.; Hanghang, T.; Ping, J. Activity recognition with smartphone sensors. Tsinghua Sci. Technol. 2014, 19, 235–249.

- Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical Human Activity Recognition Using Wearable Sensors. Sensors 2015, 15, 31314–31338.

- Edwards, M.; Deng, J.; Xie, X. From pose to activity: Surveying datasets and introducing CONVERSE. Comput. Vis. Image Underst. 2016, 144, 73–105.

- O’Reilly, M.; Caulfield, B.; Ward, T.; Johnston, W.; Doherty, C. Wearable Inertial Sensor Systems for Lower Limb Exercise Detection and Evaluation: A Systematic Review. Sport. Med. 2018, 48, 1221–1246.

- Kitchenham, B.; Brereton, P. A systematic review of systematic review process research in software engineering. Inf. Softw. Technol. 2013, 55, 2049–2075.

- Kitchenham, B.; Pearl Brereton, O.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering—A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15.

- Kitchenham, B. Procedures for Performing Systematic Reviews; Keele University: Keele, UK, 2004; p. 33.

- Chen, L.; Zhao, X.; Tang, O.; Price, L.; Zhang, S.; Zhu, W. Supply chain collaboration for sustainability: A literature review and future research agenda. Int. J. Prod. Econ. 2017, 194, 73–87.

- Caspersen, C.J.; Powell, K.E.; Christenson, G.M. Physical activity, exercise, and physical fitness: definitions and distinctions for health-related research. Public Health Rep. 1985, 100, 126–131.

- Purkayastha, A.; Palmaro, E.; Falk-Krzesinski, H.J.; Baas, J. Comparison of two article-level, field-independent citation metrics: Field-Weighted Citation Impact (FWCI) and Relative Citation Ratio (RCR). J. Inf. 2019, 13, 635–642.

- Xi, L.; Bin, Y.; Aarts, R. Single-accelerometer-based daily physical activity classification. In Proceedings of the 2009 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 6107–6110.

- Altun, K.; Barshan, B. Human Activity Recognition Using Inertial/Magnetic Sensor Units. In Human Behavior Understanding; Salah, A.A., Gevers, T., Sebe, N., Vinciarelli, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6219, pp. 38–51.

- Altun, K.; Barshan, B.; Tunçel, O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010, 43, 3605–3620.

- Khan, A.M.; Lee, Y.K.; Lee, S.Y.; Kim, T.S. Human Activity Recognition via an Accelerometer- Enabled-Smartphone Using Kernel Discriminant Analysis. In Proceedings of the 2010 5th International Conference on Future Information Technology, Busan, Korea, 21–23 May 2010; pp. 1–6.

- Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity recognition using cell phone accelerometers. ACM SigKDD Explor. Newsl. 2011, 12, 74.

- Wang, L.; Gu, T.; Tao, X.; Chen, H.; Lu, J. Recognizing multi-user activities using wearable sensors in a smart home. Pervasive Mob. Comput. 2011, 7, 287–298.

- Casale, P.; Pujol, O.; Radeva, P. Human Activity Recognition from Accelerometer Data Using a Wearable Device. In Pattern Recognition and Image Analysis; Vitrià, J., Sanches, J.M., Hernández, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6669, pp. 289–296.

- Gu, T.; Wang, L.; Wu, Z.; Tao, X.; Lu, J. A Pattern Mining Approach to Sensor-Based Human Activity Recognition. IEEE Trans. Knowl. Data Eng. 2010, 23, 1359–1372.

- Lee, Y.S.; Cho, S.B. Activity Recognition Using Hierarchical Hidden Markov Models on a Smartphone with 3D Accelerometer. In Hybrid Artificial Intelligent Systems; Corchado, E., Kurzyński, M., Woźniak, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6678, pp. 460–467.

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. Human Activity Recognition on Smartphones Using a Multiclass Hardware-Friendly Support Vector Machine. In Ambient Assisted Living and Home Care; Bravo, J., Hervás, R., Rodríguez, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7657, pp. 216–223.

- Deng, L.; Leung, H.; Gu, N.; Yang, Y. Generalized Model-Based Human Motion Recognition with Body Partition Index Maps; Blackwell Publishing Ltd.: Oxford, UK, 2012; Volume 31, pp. 202–215.

- Lara, S.D.; Labrador, M.A. A mobile platform for real-time human activity recognition. In Proceedings of the 2012 IEEE Consumer Communications and Networking Conference (CCNC), Las Vegas, NV, USA, 14–17 January 2012; pp. 667–671.

- Lara, O.D.; Pérez, A.J.; Labrador, M.A.; Posada, J.D. Centinela: A human activity recognition system based on acceleration and vital sign data. Pervasive Mob. Comput. 2012, 8, 717–729.

- Siirtola, P.; Röning, J. Recognizing Human Activities User-independently on Smartphones Based on Accelerometer Data. IJIMAI 2012, 1, 38.

- Koskimäki, H.; Huikari, V.; Siirtola, P.; Röning, J. Behavior modeling in industrial assembly lines using a wrist-worn inertial measurement unit. J. Ambient. Intell. Humaniz. Comput. 2013, 4, 187–194.

- Shoaib, M.; Scholten, H.; Havinga, P. Towards Physical Activity Recognition Using Smartphone Sensors. In Proceedings of the 2013 IEEE 10th International Conference on Ubiquitous Intelligence and Computing and 2013 IEEE 10th International Conference on Autonomic and Trusted Computing, Vietri sul Mere, Italy, 18–21 December 2013; pp. 80–87.

- Zhang, M.; Sawchuk, A.A. Human Daily Activity Recognition With Sparse Representation Using Wearable Sensors. IEEE J. Biomed. Health Inform. 2013, 17, 553–560.

- Bayat, A.; Pomplun, M.; Tran, D.A. A Study on Human Activity Recognition Using Accelerometer Data from Smartphones. Procedia Comput. Sci. 2014, 34, 450–457.

- Garcia-Ceja, E.; Brena, R.; Carrasco-Jimenez, J.; Garrido, L. Long-Term Activity Recognition from Wristwatch Accelerometer Data. Sensors 2014, 14, 22500–22524.

- Gupta, P.; Dallas, T. Feature Selection and Activity Recognition System Using a Single Triaxial Accelerometer. IEEE Trans. Biomed. Eng. 2014, 61, 1780–1786.

- Kwon, Y.; Kang, K.; Bae, C. Unsupervised learning for human activity recognition using smartphone sensors. Expert Syst. Appl. 2014, 41, 6067–6074.

- Aly, H.; Ismail, M.A. ubiMonitor: intelligent fusion of body-worn sensors for real-time human activity recognition. In Proceedings of the 30th Annual ACM Symposium on Applied Computing-SAC’15, Salamanca, Spain, 13–17 April 2015; pp. 563–568.

- Bleser, G.; Steffen, D.; Reiss, A.; Weber, M.; Hendeby, G.; Fradet, L. Personalized Physical Activity Monitoring Using Wearable Sensors. In Smart Health; Holzinger, A., Röcker, C., Ziefle, M., Eds.; Springer International Publishing: Cham, Switzerland, 2015; Volume 8700, pp. 99–124.

- Chen, Y.; Xue, Y. A Deep Learning Approach to Human Activity Recognition Based on Single Accelerometer. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Kowloon, China, 9–12 October 2015; pp. 1488–1492.

- Guo, M.; Wang, Z. A feature extraction method for human action recognition using body-worn inertial sensors. In Proceedings of the 2015 IEEE 19th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Calabria, Italy, 6–8 May 2015; pp. 576–581.

- Zainudin, M.; Sulaiman, M.N.; Mustapha, N.; Perumal, T. Activity recognition based on accelerometer sensor using combinational classifiers. In Proceedings of the 2015 IEEE Conference on Open Systems (ICOS), Bandar Melaka, Malaysia, 24–26 August 2015; pp. 68–73.

- Ayachi, F.S.; Nguyen, H.P.; Lavigne-Pelletier, C.; Goubault, E.; Boissy, P.; Duval, C. Wavelet-based algorithm for auto-detection of daily living activities of older adults captured by multiple inertial measurement units (IMUs). Physiol. Meas. 2016, 37, 442–461.

- Fallmann, S.; Kropf, J. Human Activity Pattern Recognition based on Continuous Data from a Body Worn Sensor placed on the Hand Wrist using Hidden Markov Models. Simul. Notes Eur. 2016, 26, 9–16.

- Feldhorst, S.; Masoudenijad, M.; ten Hompel, M.; Fink, G.A. Motion Classification for Analyzing the Order Picking Process using Mobile Sensors-General Concepts, Case Studies and Empirical Evaluation. In Proceedings of the 5th International Conference on Pattern Recognition Applications and Methods, Rome, Italy, 24–26 February 2016; SCITEPRESS-Science and Technology Publications: Setubal, Portugal, 2016; pp. 706–713.

- Hammerla, N.Y.; Halloran, S.; Ploetz, T. Deep, Convolutional, and Recurrent Models for Human Activity Recognition using Wearables. arXiv 2016, arXiv:1604.08880.

- Liu, Y.; Nie, L.; Liu, L.; Rosenblum, D.S. From action to activity: Sensor-based activity recognition. Neurocomputing 2016, 181, 108–115.

- Margarito, J.; Helaoui, R.; Bianchi, A.; Sartor, F.; Bonomi, A. User-Independent Recognition of Sports Activities from a Single Wrist-worn Accelerometer: A Template Matching Based Approach. IEEE Trans. Biomed. Eng. 2015, 63, 788–796.

- Reyes-Ortiz, J.L.; Oneto, L.; Samà, A.; Parra, X.; Anguita, D. Transition-Aware Human Activity Recognition Using Smartphones. Neurocomputing 2016, 171, 754–767.

- Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

- Ronao, C.A.; Cho, S.B. Recognizing human activities from smartphone sensors using hierarchical continuous hidden Markov models. Int. J. Distrib. Sens. Netw. 2017, 13, 155014771668368.

- Song-Mi, L.; Sangm, M.Y.; Heeryon, C. Human activity recognition from accelerometer data using Convolutional Neural Network. In Proceedings of the 2017 IEEE International Conference on Big Data and Smart Computing (BigComp), Jeju, Korea, 13–16 February 2017; pp. 131–134.

- Scheurer, S.; Tedesco, S.; Brown, K.N.; O’Flynn, B. Human activity recognition for emergency first responders via body-worn inertial sensors. In Proceedings of the 2017 IEEE 14th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Eindhoven, The Netherlands, 9–12 May 2017; pp. 5–8.

- Vital, J.P.M.; Faria, D.R.; Dias, G.; Couceiro, M.S.; Coutinho, F.; Ferreira, N.M.F. Combining discriminative spatiotemporal features for daily life activity recognition using wearable motion sensing suit. Pattern Anal. Appl. 2017, 20, 1179–1194.

- Chen, Z.; Le, Z.; Cao, Z.; Guo, J. Distilling the Knowledge From Handcrafted Features for Human Activity Recognition. IEEE Trans. Ind. Inform. 2018, 14, 4334–4342.

- Moya Rueda, F.; Grzeszick, R.; Fink, G.; Feldhorst, S.; ten Hompel, M. Convolutional Neural Networks for Human Activity Recognition Using Body-Worn Sensors. Informatics 2018, 5, 26.

- Nair, N.; Thomas, C.; Jayagopi, D.B. Human Activity Recognition Using Temporal Convolutional Network. In Proceedings of the 5th international Workshop on Sensor-Based Activity Recognition and Interaction-iWOAR’18, Berlin, Germany, 20–21 September 2018; pp. 1–8.

- Reining, C.; Schlangen, M.; Hissmann, L.; ten Hompel, M.; Moya, F.; Fink, G.A. Attribute Representation for Human Activity Recognition of Manual Order Picking Activities. In Proceedings of the 5th international Workshop on Sensor-based Activity Recognition and Interaction-iWOAR’18, Berlin, Germany, 20–21 September 2018; pp. 1–10.

- Tao, W.; Lai, Z.H.; Leu, M.C.; Yin, Z. Worker Activity Recognition in Smart Manufacturing Using IMU and sEMG Signals with Convolutional Neural Networks. Procedia Manuf. 2018, 26, 1159–1166.

- Wolff, J.P.; Grützmacher, F.; Wellnitz, A.; Haubelt, C. Activity Recognition using Head Worn Inertial Sensors. In Proceedings of the 5th international Workshop on Sensor-based Activity Recognition and Interaction-iWOAR’18, Berlin, Germany, 20–21 September 2018; pp. 1–7.

- Xi, R.; Li, M.; Hou, M.; Fu, M.; Qu, H.; Liu, D.; Haruna, C.R. Deep Dilation on Multimodality Time Series for Human Activity Recognition. IEEE Access 2018, 6, 53381–53396.

- Xie, L.; Tian, J.; Ding, G.; Zhao, Q. Human activity recognition method based on inertial sensor and barometer. In Proceedings of the 2018 IEEE International Symposium on Inertial Sensors and Systems (INERTIAL), Moltrasio, Italy, 26–29 March 2018; pp. 1–4.

- Zhao, J.; Obonyo, E. Towards a Data-Driven Approach to Injury Prevention in Construction. In Advanced Computing Strategies for Engineering; Smith, I.F.C., Domer, B., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 10863, pp. 385–411.

- Zhu, Q.; Chen, Z.; Yeng, C.S. A Novel Semi-supervised Deep Learning Method for Human Activity Recognition. IEEE Trans. Ind. Inform. 2018, 3821–3830.

- Rueda, F.M.; Fink, G.A. Learning Attribute Representation for Human Activity Recognition. arXiv 2018, arXiv:1802.00761.

- Lampert, C.H.; Nickisch, H.; Harmeling, S. Attribute-Based Classification for Zero-Shot Visual Object Categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 453–465.

- Lockhart, J.W.; Weiss, G.M.; Xue, J.C.; Gallagher, S.T.; Grosner, A.B.; Pulickal, T.T. WISDM Lab: Dataset; Department of Computer & Information Science, Fordham University: Bronx, NY, USA, 2013.

- Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. WISDM Lab: Dataset; Department of Computer & Information Science, Fordham University: Bronx, NY, USA, 2012.

- Roggen, D.; Plotnik, M.; Hausdorff, J. UCI Machine Learning Repository: Daphnet Freezing of Gait Data Set; School of Information and Computer Science, University of California: Irvine, CA, USA, 2013; Available online: https://archive.ics.uci.edu/ml/datasets/Daphnet+Freezing+of+Gait (accessed on 20 July 2019).

- Müller, M.; Röder, T.; Eberhardt, B.; Weber, A. Motion Database HDM05; Technical Report; Universität Bonn: Bonn, Germany, 2007.

- Banos, O.; Toth, M.A.; Amft, O. UCI Machine Learning Repository: REALDISP Activity Recognition Dataset Data Set. Available online: https://archive.ics.uci.edu/ml/datasets/REALDISP+Activity+Recognition+Dataset (accessed on 20 July 2019).

- Reyes-Ortiz, J.L.; Anguita, D.; Oneto, L.; Parra, X. UCI Machine Learning Repository: Smartphone-Based Recognition of Human Activities and Postural Transitions Data Set. Available online: https://archive.ics.uci.edu/ml/datasets/Smartphone-Based+Recognition+of+Human+Activities+and+Postural+Transitions (accessed on 20 July 2019).

- Zhang, M.; Sawchuk, A.A. Human Activities Dataset. 2012. Available online: http://sipi.usc.edu/had/ (accessed on 20 July 2019).

- Yang, A.Y.; Giani, A.; Giannatonio, R.; Gilani, K.; Iyengar, S.; Kuryloski, P.; Seto, E.; Seppa, V.P.; Wang, C.; Shia, V.; et al. d-WAR: Distributed Wearable Action Recognition. Available online: https://people.eecs.berkeley.edu/~yang/software/WAR/ (accessed on 20 July 2019).

- Roggen, D.; Calatroni, A.; Long-Van, N.D.; Chavarriaga, R.; Hesam, S.; Tejaswi Digumarti, S. UCI Machine Learning Repository: OPPORTUNITY Activity Recognition Data Set. Available online: https://archive.ics.uci.edu/ml/datasets/opportunity+activity+recognition (accessed on 20 July 2019).

- Reyes-Ortiz, J.L.; Anguita, D.; Ghio, A.; Oneto, L.; Parra, X. UCI Machine Learning Repository: Human Activity Recognition Using Smartphones Data Set. Available online: https://archive.ics.uci.edu/ml/datasets/human+activity+recognition+using+smartphones (accessed on 20 July 2019).

- Reiss, A. UCI Machine Learning Repository: PAMAP2 Physical Activity Monitoring Data Set. Available online: https://archive.ics.uci.edu/ml/datasets/pamap2+physical+activity+monitoring (accessed on 20 July 2019).

- Bulling, A.; Blanke, U.; Schiele, B. MATLAB Human Activity Recognition Toolbox. Available online: https://github.com/andreas-bulling/ActRecTut (accessed on 20 July 2019).

- Zappi, P.; Lombriser, C.; Stiefmeier, T.; Farella, E.; Roggen, D.; Benini, L.; Tröster, G. Activity Recognition from On-body Sensors: Accuracy-power Trade-off by Dynamic Sensor Selection. In Proceedings of the 5th European Conference on Wireless Sensor Networks EWSN’08, Bologna, Italy, 30 January–1 February 2008; Springer: Berlin, Heidelberg, 2008; pp. 17–33.

- Fukushima, K.; Miyake, S. Neocognitron: A new algorithm for pattern recognition tolerant of deformations and shifts in position. Pattern Recognit. 1982, 15, 455–469.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25; Curran Associates, Inc.: Red Hook, NY, USA; pp. 1097–1105.

| Ref. | Year | Author & Description |

|---|---|---|

| [33] | 2013 | Lara and Labrador reviewed the state of the art in HAR based on wearable sensors. They addressed the general structure of HAR systems and design issues. Twenty-eight systems are evaluated in terms of recognition performance, energy consumption and other criteria. |

| [34] | 2014 | Xing Su et al. surveyed recent advances in HAR with smartphone sensors and addressed experiment settings. They divided activities into five types: living, working, health, simple and complex. |

| [35] | 2015 | Attal et al. reviewed classification techniques for HAR using accelerometers. They provided an overview of sensor placement, detected activities and performance metrics of current state-of-the-art approaches. |

| [36] | 2016 | Edwards et al. presented a review on publicly available datasets for HAR. The examined sensor technology includes MoCap and IMUs. The observed application domains are ADL, surveillance, sports and generic activities, meaning that a wide variety of actions is covered. |

| [20] | 2018 | Twomey et al. surveyed the state of the art in activity recognition using accelerometers. They focused on ADL and examined, among other issues, the sensor placement and its influence on the recognition performance. |

| [37] | 2018 | O’Reilly et al. synthesised and evaluated studies which investigate the capacity for IMUs to assess movement quality in lower limb exercises. The studies are categorised into three groups: exercise detection, movement classification or measurement validation. |

| Inclusion Criteria | Description |

|---|---|

| Database | IEEE Xplore, Science Direct, Google Scholar, Scopus, European Union Digital Library (EUDL), ACM Digital Library, LearnTechLib, Springer Link, Wiley Online Library, dblp computer science bibliography, IOP Science, World Scientific, Multidisciplinary Digital Publishing Institute (MDPI), SciTePress Digital Library (Science and Technology Publications) |

| Keywords | Motion Capturing, Motion Capture, MoCap, OMC, OMMC; Inertial Measurement Unit, IMU, Accelerometer, body-worn/on-body/wearable/wireless Sensor; (Human) Activity/Action, Recognition, HAR; Production, Manufacturing, Logistics, Warehousing, Order Picking |

| Year of publication | 2009–2018 |

| Language | English |

| Source Types | Conference Proceedings & Peer-reviewed Journals |

| Identifier | Persistent Identifier mandatory (DOI, ISBN, ISSN, arXiv) |

| Content Criteria | Description |

|---|---|

| (A) IMU or OMMC | Method is based on data from IMUs or OMMC-Systems. The sensors and markers are either attached to the subject’s body or body-worn. |

| (B) Human | Contribution addresses the recognition of activities performed by humans. |

| (C) Physical World | Data are recorded in the physical world without the use of simulated or immersive environments. |

| (D) Quantification | The application aims to quantitatively determine the occurrence of activities, not to capture and analyse them for developing new methods in related fields. |

| (E) Application-oriented | Deploying the proposed method in P+L is conceivable. Defining HAR-related terms is not the contribution’s focus. |

| (F) Physical activity | According to Caspersen et al. [42], “physical activity is defined as any bodily movement produced by skeletal muscles that results in energy expenditure”. In this literature review, bodily movement is limited to torso and limb movement. |

| (G) No focus on hardware | The contribution does not focus on comparing sensor technologies or on showcasing new hardware for HAR. |

| (H) Clear Method | Publications are computer science oriented, stating clear pattern recognition methods and performance metrics. |

| Stage | Description |

|---|---|

| (I) Keywords | Keywords of the publication match the Inclusion Criteria. The reviewers have not yet examined the contributions at this stage. |

| (II) Title | The title does not conflict with any Content Criteria. This is because the title either complies with the criteria or it is ambiguous. |

| (III) Abstract | The abstract’s content does not conflict with any Content Criteria. This is because the content either complies with the criteria or necessary specifications are missing. |

| (IV) Full Text | Reading the full text confirms compliance with all Content Criteria. Properties of the publication are recorded in the literature overview. |

| Root Category / Subcategory | Description |

|---|---|

| Domain | ||

| P+L | Deployment in industrial settings, e.g., production facilities or warehouses | |

| Other | Related application domain, e.g., health or ADL | |

| Activity | ||

| Work | Working activities such as assembly or order picking | |

| Exercises | Sport Activities, e.g., riding a stationary bicycle or gymnastic exercises | |

| Locomotion | Walking, running as well as the recognition of the lack of locomotion when standing | |

| ADL | Activities of daily living including cooking, doing the laundry, driving a car and so forth | |

| Attachment | ||

| Arm | Upper and lower arm | |

| Hand | including wrists | |

| Leg | including knee and shank | |

| Foot | including ankle | |

| Torso | including chest, back, belt and waist | |

| Head | including sensors attached to a helmet or protective gear | |

| Smartphone | Worn in a pocket or a bag. If attached to a limb, the respective limb subcategory is checked as well | |

| Dataset | ||

| Repository | Utilised dataset is available in a repository | |

| Individual | Dataset is created specifically for the contribution and not available in a repository | |

| Laboratory | Recording takes place in a constrained laboratory environment | |

| Real-life | Recording takes place in a real-life environment, e.g., a real warehouse or in public places | |

| Name of dataset | Name, origin, repository and description of dataset | |

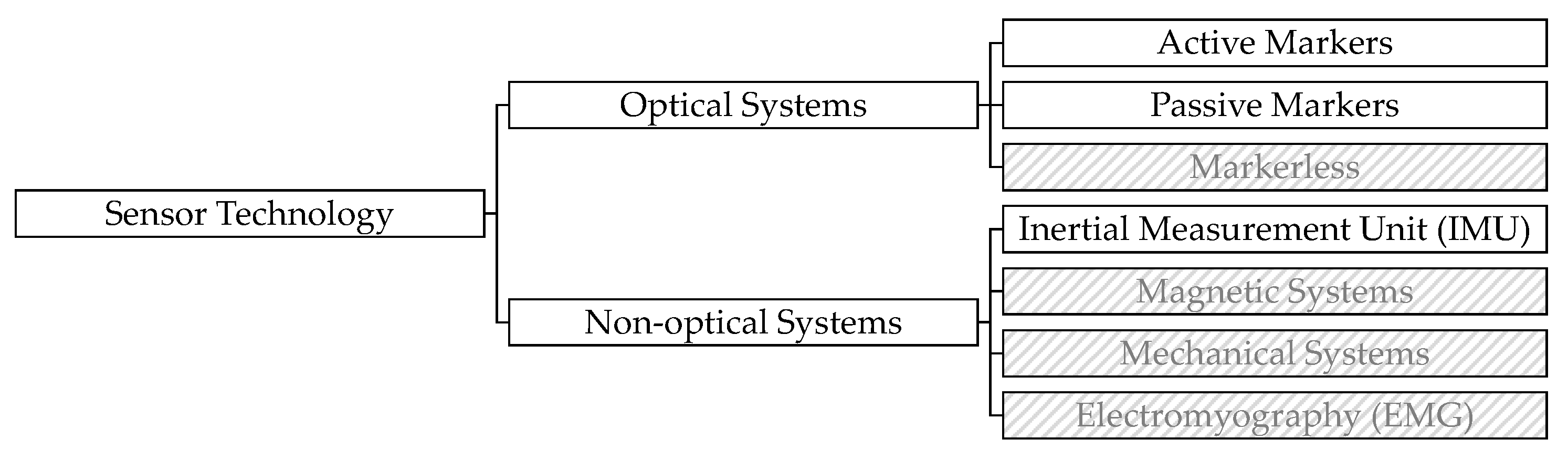

| Root Category / Subcategory | Description |

|---|---|

| Sensor | ||

| Passive Markers | Markers reflect light for the camera to capture | |

| Active Markers | Markers emit light for the camera to capture | |

| IMU | Devices that measure specific force and angular rate, e.g., with accelerometers and gyroscopes | |

| Data Preparation (DP) | ||

| Pre.-Pr. | Pre-Processing: Normalisation, noise filtering, low-pass and high-pass filtering, and re-sampling | |

| Segm. | Segmentation: Sliding window-approach | |

| Shallow Method | ||

| FE - Stat. Feat. | Statistical feature extraction: Time- and Frequency-Domain Features | |

| FE-App.-based | Application-based features, e.g., Kinematics, Body model, Event-Based | |

| FR | Feature reduction, e.g., Principal Components Analysis (PCA), Linear Discriminant Analysis (LDA), Kernel Discriminant Analysis (KDA), Random Projection (RP) | |

| CL-NB | Classification method: Naïve Bayes | |

| CL-HMMs | Classification method: Hidden Markov Models | |

| CL-SVM | Classification method: Support Vector Machines | |

| CL-MLP | Classification method: Multilayer Perceptron | |

| CL-Other | Classification method: Random Forest (RF), Decision Trees (DT), Dynamic Time Warping (DTW), K-Nearest Neighbor (KNN), Fuzzy-Logic (FL), Logistic Regression (LR), Bayesian Network (BN), Least-Squares (LS), Conditional Random Field (CRF), Factorial Conditional Random Field (FCR), Conditional Clauses (CC), Gaussian Mixture Models (GMM), Template Matching (TM), Dynamic Bayesian Mixture Model (DBMM), Emerging Patterns (EP), Gradient-Boosted Trees (GBT), Sparsity Concentration Index (SCI) | |

| Deep Learning (DL) | ||

| CNN | Convolutional Neural Networks | |

| tCNN | Temporal CNNs and Dilated tCNNs (DTCNN) | |

| rCNN | Recurrent Neural Networks, e.g., GRU, LSTM, Bidirectional LSTM | |
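The Pre.-Pr. category bundles normalisation, filtering and re-sampling. As an illustration only, the following NumPy sketch chains a low-pass filter, re-sampling and per-channel normalisation on a (samples × channels) array. The moving-average filter is a stand-in for the filters used in the reviewed contributions (e.g., Butterworth filters), linear interpolation is an assumed re-sampling method, and all function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers; each expects a (samples x channels) array.

def lowpass_moving_average(x: np.ndarray, width: int = 5) -> np.ndarray:
    """Crude low-pass filter: centred moving average over `width` samples per channel.

    np.convolve(mode="same") zero-pads, so the first and last samples are attenuated.
    """
    kernel = np.ones(width) / width
    return np.stack(
        [np.convolve(x[:, c], kernel, mode="same") for c in range(x.shape[1])], axis=1
    )

def resample_linear(x: np.ndarray, fs_in: float, fs_out: float) -> np.ndarray:
    """Re-sample every channel from fs_in to fs_out (Hz) by linear interpolation."""
    t_in = np.arange(x.shape[0]) / fs_in
    n_out = int(np.floor(t_in[-1] * fs_out)) + 1
    t_out = np.arange(n_out) / fs_out
    return np.stack([np.interp(t_out, t_in, x[:, c]) for c in range(x.shape[1])], axis=1)

def normalise_zscore(x: np.ndarray) -> np.ndarray:
    """Per-channel z-score normalisation; constant channels are only centred."""
    std = x.std(axis=0)
    std[std == 0] = 1.0
    return (x - x.mean(axis=0)) / std

# Example: 10 s of 3-axis data at 100 Hz, filtered before down-sampling to 50 Hz
rng = np.random.default_rng(0)
raw = rng.normal(size=(1000, 3))
prepared = normalise_zscore(resample_linear(lowpass_moving_average(raw), 100, 50))
print(prepared.shape)  # (500, 3)
```

Filtering before down-sampling reduces (but, with a moving average, does not eliminate) aliasing; normalising last gives every channel zero mean and unit variance on the final sampling grid.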

| Stage | No. of Publications |

|---|---|

| (I) Keywords | 1243 |

| (II) Title | 524 |

| (III) Abstract | 263 |

| (IV) Full Text | 52 |

| General Information | Domain | Activity | Attachment | Dataset | DP | Shallow Method | DL | ||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Ref. | Year | Author | FWCI | P+L | Other | Work | Exercises | Locomotion | ADL | Arm | Hand | Leg | Foot | Torso | Head | Smartphone | Repository | Individual | Laboratory | Real-Life | Pre.-Pr. | Segm. | FE-Stat.Feat. | FE-Others | FR | CL-NB | CL-HMMs | CL-SVM | CL-MLP | CL-Others | CNN | tCNN | rCNN |

| [44] | 2009 | Xi Long et al. | 12.48 | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||||

| [45] | 2010 | Altun and Barshan | 7.95 | x | x | x | x | x | x | x | x | x | x | x | LS, KNN | ||||||||||||||||||

| [46] | 2010 | Altun et al. | 4.60 | x | x | x | x | x | x | x | x | x | x | x | x | BDM,LSM,KNN,DTW | |||||||||||||||||

| [47] | 2010 | Khan et al. | 7.38 | x | x | x | x | x | x | x | x | LDA, KDA | |||||||||||||||||||||

| [48] | 2010 | Kwapisz et al. | - | x | x | x | x | x | x | x | x | DT, LR | |||||||||||||||||||||

| [49] | 2010 | Wang et al. | 4.62 | x | x | x | x | x | x | x | x | FCR | |||||||||||||||||||||

| [50] | 2011 | Casale et al. | 8.02 | x | x | x | x | x | x | x | RF | ||||||||||||||||||||||

| [51] | 2011 | Gu et al. | 5.16 | x | x | x | x | x | x | x | EP | ||||||||||||||||||||||

| [52] | 2011 | Lee and Cho | 13.37 | x | x | x | x | x | x | x | |||||||||||||||||||||||

| [53] | 2012 | Anguita et al. | 35.00 | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||||||

| [54] | 2012 | Deng et al. | 0.58 | x | x | x | x | x | x | x | x | x | x | x | x | x | GM, DTW | ||||||||||||||||

| [55] | 2012 | Lara and Labrador | 7.53 | x | x | x | x | x | x | x | x | x | DT | ||||||||||||||||||||

| [56] | 2012 | Lara et al. | 18.74 | x | x | x | x | x | x | x | x | x | BN, DT, LR | ||||||||||||||||||||

| [57] | 2012 | Siirtola and Röning | - | x | x | x | x | x | x | x | x | x | QDA,KNN,DT | ||||||||||||||||||||

| [58] | 2013 | Koskimäki et al. | 1.20 | x | x | x | x | x | x | x | KNN | ||||||||||||||||||||||

| [59] | 2013 | Shoaib et al. | 9.30 | x | x | x | x | x | x | x | x | x | x | x | x | x | LR,KNN,DT | ||||||||||||||||

| [60] | 2013 | Zhang and Sawchuk | 6.37 | x | x | x | x | x | x | x | x | SCI | |||||||||||||||||||||

| [61] | 2014 | Bayat et al. | 11.96 | x | x | x | x | x | x | x | x | x | x | x | RF, LR | ||||||||||||||||||

| [29] | 2014 | Bulling et al. | 64.63 | x | x | x | x | x | x | x | x | x | x | x | x | x | KNN boosting | ||||||||||||||||

| [62] | 2014 | Garcia-Ceja et al. | 2.52 | x | x | x | x | x | x | x | x | x | CRF | ||||||||||||||||||||

| [63] | 2014 | Gupta and Dallas | 9.06 | x | x | x | x | x | x | x | x | KNN | |||||||||||||||||||||

| [64] | 2014 | Kwon et al. | 5.89 | x | x | x | x | x | x | x | x | GMM | |||||||||||||||||||||

| [14] | 2014 | Zeng et al. | 39.20 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||||

| [65] | 2015 | Aly and Ismail | 0.00 | x | x | x | x | x | x | x | x | x | x | x | x | x | CC | ||||||||||||||||

| [66] | 2015 | Bleser et al. | 2.95 | x | x | x | x | x | x | x | x | x | |||||||||||||||||||||

| [67] | 2015 | Chen and Xue | 20.10 | x | x | x | x | x | x | x | x | x | DBM | x | |||||||||||||||||||

| [68] | 2015 | Guo and Wang | 3.56 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | KNN, DT | |||||||||||||||

| [69] | 2015 | Zainudin et al. | 6.95 | x | x | x | x | x | x | x | x | DT, LR | |||||||||||||||||||||

| [70] | 2016 | Ayachi et al. | 1.59 | x | x | x | x | x | x | x | x | x | x | x | |||||||||||||||||||

| [71] | 2016 | Fallmann and Kropf | - | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||

| [72] | 2016 | Feldhorst et al. | 1.31 | x | x | x | x | x | x | x | x | x | x | x | RF | ||||||||||||||||||

| [73] | 2016 | Hammerla et al. | 23.99 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||

| [74] | 2016 | Liu et al. | 30.36 | x | x | x | x | x | x | x | x | x | x | x | x | x | KNN | ||||||||||||||||

| [75] | 2016 | Margarito et al. | 5.24 | x | x | x | x | x | x | x | x | DTW, TM | |||||||||||||||||||||

| [11] | 2016 | Ordóñez and Roggen | 42.72 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||

| [76] | 2016 | Reyes-Ortiz et al. | 12.46 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | |||||||||||||

| [77] | 2016 | Ronao and Cho | 24.82 | x | x | x | x | x | x | x | |||||||||||||||||||||||

| [78] | 2016 | Ronao and Cho | 4.89 | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||||||

| [79] | 2017 | Song-Mi Lee et al. | 10.91 | x | x | x | x | x | x | x | |||||||||||||||||||||||

| [80] | 2017 | Scheurer et al. | 7.06 | x | x | x | x | x | x | x | x | GBT, KNN | |||||||||||||||||||||

| [81] | 2017 | Vital et al. | 0.93 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | DBMM | |||||||||||||

| [82] | 2018 | Chen et al. | 1.77 | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||||||

| [83] | 2018 | Moya Rueda et al. | 3.69 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||

| [84] | 2018 | Nair et al. | 0.00 | x | x | x | x | x | x | x | x | x | |||||||||||||||||||||

| [85] | 2018 | Reining et al. | 0.00 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||

| [86] | 2018 | Tao et al. | 0.00 | x | x | x | x | x | x | x | x | ||||||||||||||||||||||

| [87] | 2018 | Wolff et al. | 0.00 | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||||||

| [88] | 2018 | Xi et al. | 1.61 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||

| [89] | 2018 | Xie et al. | 6.43 | x | x | x | x | x | x | x | x | RF | |||||||||||||||||||||

| [22] | 2018 | Yao et al. | 3.53 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | ||||||||||||||||

| [90] | 2018 | Zhao and Obonyo | 0.00 | x | x | x | x | x | x | x | x | x | x | x | x | x | x | KNN | |||||||||||||||

| [91] | 2018 | Zhu et al. | 0.00 | x | x | x | x | x | x | x | x | x | RF,KNN,LR | x | |||||||||||||||||||

| Total: 52 | 8 | 47 | 8 | 13 | 46 | 23 | 19 | 24 | 16 | 15 | 35 | 6 | 21 | 18 | 38 | 19 | 39 | 20 | 40 | 32 | 7 | 8 | 11 | 7 | 16 | 10 | 7 | 7 | 4 | ||||

| Ref. | Name | Utilised in |

|---|---|---|

| [94] | Actitracker from Wireless Sensor Data Mining (WISDM) | [14] |

| [95] | Activity Prediction from Wireless Sensor Data Mining (WISDM) | [69] |

| [96] | Daphnet Gait dataset (DG) | [73] |

| [97] | Mocap Database HDM05 | [54] |

| [98] | Realistic Sensor Displacement Benchmark Dataset (REALDISP) | [76] |

| [99] | Smartphone-Based Recognition of Human Activities and Postural Transitions Dataset | [76] |

| [100] | USC-SIPI Human Activity Dataset (USC-HAD) | [78] |

| [101] | Wearable Action Recognition Database (WARD) | [68] |

| Ref. | Name | Description | Utilised in |

|---|---|---|---|

| [102] | Opportunity | Published in 2012, this dataset contains recordings from wearable, object, and ambient sensors in a room simulating a studio flat. Four subjects were asked to perform early morning cleanup and breakfast activities. | [11,14,22,73,74,83,88] |

| [103] | Human Activity Recognition Using Smartphones Data Set | The dataset from 2012 contains smartphone recordings. Thirty subjects aged 19 to 48 performed six different locomotion activities wearing a smartphone on the waist. | [71,78,82,84,91] |

| [104] | PAMAP2 | Published in 2012, this dataset provides recordings from three IMUs and a heart rate monitor. Nine subjects performed twelve different household, sports and daily living activities. Some subjects performed further optional activities. | [66,73,76,83,88] |

| [105] | Hand Gesture | The dataset from 2013 contains 70 minutes of arm movements per subject from eight ADLs as well as from playing tennis. Two recorded subjects were equipped with three IMUs on the right hand and arm. | [22,29,71] |

| [106] | Skoda | This dataset from 2008 contains ten manipulative gestures performed by a single worker in a car maintenance scenario. Twenty accelerometers were used for recording. | [11,14] |

| Sampling rate R [Hz] | 1 | 20 | 20 | 20 | 20 | 25 | 25 | 30 | 40 | 30 | 50 | 50 | 50 | 50 | 50 | 98 | 100 | 100 | 100 | 126 | 300 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Window size WS [s] | 10–20 | 3 | 5 | 5 | 25 | 5 | 10 | 0.72 | 1.9–7.5 | 1.67 | 1.28 | 1.2–1.3 | 2 | 2.56 | 5,12,20 | 0.5 | 2.56 | 1.28 | 4 | 6 | 0.67 |

| Overlap Ov. [%] | - | 33 | 50 | - | 50 | - | - | 50 | - | 50 | 50–75 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 5 |
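Each column above pairs a sampling rate R with a window size WS and an overlap Ov. for sliding-window segmentation. A minimal NumPy sketch of that segmentation follows, assuming a (samples × channels) array; the function name and the choice to discard a trailing partial window are assumptions, not taken from any reviewed contribution.

```python
import numpy as np

def sliding_windows(x: np.ndarray, fs: float, ws_s: float, overlap_pct: float) -> np.ndarray:
    """Cut a (samples x channels) recording into fixed-length, overlapping windows.

    fs          -- sampling rate R in Hz
    ws_s        -- window size WS in seconds
    overlap_pct -- overlap Ov. between consecutive windows in percent
    Returns an array of shape (n_windows, window_len, channels); a trailing
    remainder shorter than one window is discarded.
    """
    win = int(round(ws_s * fs))
    step = max(1, int(round(win * (1.0 - overlap_pct / 100.0))))
    if x.shape[0] < win:
        return np.empty((0, win, x.shape[1]), dtype=x.dtype)
    starts = range(0, x.shape[0] - win + 1, step)
    return np.stack([x[s:s + win] for s in starts])

# Example: 10 s of 3-axis data at R = 50 Hz, WS = 2.56 s, Ov. = 50 %
recording = np.zeros((500, 3))
windows = sliding_windows(recording, fs=50, ws_s=2.56, overlap_pct=50)
print(windows.shape)  # (6, 128, 3)
```

With R = 50 Hz, WS = 2.56 s and Ov. = 50 %, one of the configurations listed, each window holds 128 samples and consecutive windows start 64 samples apart.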

| Domain | Features | Definitions | Publications |

|---|---|---|---|

| Time | Variance | Arithmetic Variance | [29,44,45,46,48,49,50,53,55,56,57,59,60,61,62,63,65,67,68,69,71,72,75,76,78,80,81,82,89,90,91] |

| | Mean | Arithmetic Mean | [29,45,48,49,50,53,55,56,59,61,62,63,65,67,68,69,71,72,75,76,78,80,81,82,89,90,91] |

| | Pairwise Correlation | Correlation between every pair of axes | [50,53,55,56,60,61,62,65,69,78,80,82,89,91] |

| | Minimum | Smallest value in the window | [45,46,57,69,72,76,82,90,91] |

| | Maximum | Largest value in the window | [45,46,57,69,72,76,82,90,91] |

| | Energy | Average sum of squares | [49,50,71,75,76,78,82,91] |

| | Signal Magnitude Area | | [47,50,53,78,80,82,91] |

| | IQR | Interquartile Range | [55,76,78,82,89,91] |

| | Root Mean Square | Square root of the arithmetic mean of the squared values | [50,55,56,61,75,90] |

| | Kurtosis | | [45,46,60,68,80] |

| | Skewness | | [45,46,50,75,80] |

| | MinMax | Difference between the Maximum and the Minimum in the window | [50,61,63,75] |

| | Zero Crossing Rate | Rate of sign changes | [29,50,69] |

| | Average Absolute Deviation | Mean absolute deviation from a central point | [55,90] |

| | MAD | Median Absolute Deviation | [55,76] |

| | Mean Crossing Rate | | [29,50] |

| | Slope | Sen’s slope for a series of data | [90] |

| | Log-Covariance | | [81] |

| | Norm | Euclidean Norm | [72] |

| | APF | Average Number of occurrences of Peaks | [61] |

| | Variance Peak Frequency | Variance of APF | [61] |

| | Pearson Correlation Coefficient | | [76] |

| | Angle | Angle between mean signal and vector | [76] |

| | Time Between Peaks | Time [ms] between peaks | [48] |

| | Binned Distribution | Quantisation of the difference between the Maximum and the Minimum | [48] |

| | Median | Middle value in the window | [50] |

| | Five different Percentiles | Observations in five different percentiles | [57] |

| | Sum and Square Sum in Percentiles | Sum and square sum of observations above/below a certain percentile | [57] |

| | ADM | Average Derivative of the Magnitude | [62] |

| Frequency | Entropy | Normalised information entropy of the discrete FFT component magnitudes of the signal | [29,44,49,53,60,63,68,71,75,80,90] |

| | Signal Energy | Sum of squared signal amplitudes | [29,56,63,68,71,82,89,90,91] |

| | Skewness | Asymmetry of the distribution | [76,82,90,91] |

| | Kurtosis | Heaviness of the tails of the distribution | [76,82,90,91] |

| | DC Component of FFT and DCT | | [50,67,89] |

| | Peaks of the DFT | First five peaks of the FFT | [45,46] |

| | Spectral | | [90] |

| | Spectral Centroid | Centroid of a given spectrum | [90] |

| | Frequency Range Power | Sum of absolute amplitude of the signal | [90] |

| | Cepstral Coefficients | Mel-Frequency Cepstral Coefficients | [29] |

| | Correlation | | [49] |

| | maxFreqInd | Largest Frequency Component | [76] |

| | MeanFreq | Frequency Signal Weighted Average | [76] |

| | Energy Band | Spectral Energy of a Frequency Band | [76] |

| | PPF | Peak Power Frequency | [80] |
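To make a few of the tabulated definitions concrete, the sketch below computes a subset of the time- and frequency-domain features for one (window_len × axes) window with NumPy. Exact definitions vary between the cited contributions, so the following are assumptions: skewness and kurtosis are population moments (non-excess kurtosis), signal magnitude area is the mean over samples of the summed absolute axis values, signal energy is the sum of squared non-DC FFT magnitudes divided by the window length, and entropy is normalised by the logarithm of the number of non-DC frequency bins. The function names are hypothetical.

```python
import numpy as np

def time_domain_features(w: np.ndarray) -> dict:
    """Subset of the time-domain features, per axis of a (window_len x axes) window."""
    mean = w.mean(axis=0)
    std = w.std(axis=0)                  # a constant axis gives nan skewness/kurtosis
    centred = w - mean
    q75, q25 = np.percentile(w, [75, 25], axis=0)
    negative = np.signbit(w)
    return {
        "mean": mean,
        "variance": w.var(axis=0),
        "minimum": w.min(axis=0),
        "maximum": w.max(axis=0),
        "minmax": w.max(axis=0) - w.min(axis=0),
        "energy": np.mean(w ** 2, axis=0),                      # average sum of squares
        "rms": np.sqrt(np.mean(w ** 2, axis=0)),
        "iqr": q75 - q25,
        "skewness": np.mean(centred ** 3, axis=0) / std ** 3,
        "kurtosis": np.mean(centred ** 4, axis=0) / std ** 4,   # non-excess kurtosis
        "zero_crossing_rate": np.mean(negative[1:] != negative[:-1], axis=0),
        "sma": np.mean(np.sum(np.abs(w), axis=1)),              # one value for all axes
        "pairwise_correlation": np.corrcoef(w.T)[np.triu_indices(w.shape[1], k=1)],
    }

def frequency_domain_features(w: np.ndarray, fs: float) -> dict:
    """Subset of the frequency-domain features, per axis; fs is the sampling rate in Hz."""
    n = w.shape[0]
    magnitudes = np.abs(np.fft.rfft(w, axis=0))     # one-sided FFT magnitudes
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    ac, ac_freqs = magnitudes[1:], freqs[1:]        # exclude the DC component
    p = ac / ac.sum(axis=0)                         # magnitudes as a distribution
    entropy = -np.sum(p * np.log(p + 1e-12), axis=0) / np.log(len(ac_freqs))
    return {
        "dc_component": magnitudes[0],
        "signal_energy": np.sum(ac ** 2, axis=0) / n,
        "entropy": entropy,                         # roughly within [0, 1]
        "max_freq": ac_freqs[np.argmax(ac, axis=0)],
        "mean_freq": np.sum(ac_freqs[:, None] * ac, axis=0) / ac.sum(axis=0),
    }

# Example: a 2 s window at 50 Hz with a 5 Hz sine on axis 0 and noise on axis 1
fs = 50.0
t = np.arange(100) / fs
window = np.stack([np.sin(2 * np.pi * 5 * t),
                   np.random.default_rng(1).normal(size=t.size)], axis=1)
td = time_domain_features(window)
fd = frequency_domain_features(window, fs)
print(fd["max_freq"][0])  # 5.0
```

The pure sine concentrates its spectrum in a single bin, so its entropy is close to 0, whereas the noise axis spreads energy across all bins and scores much higher.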

| Domain | Features | Definitions | Publications |

|---|---|---|---|

| Spatial | Gravity variation | Gravity acceleration computed using the harmonic mean of the acceleration along the three axes (x,y,z) | [65] |

| | Eigenvalues of Dominant Directions | | [50] |

| Structural | Trend | | [55,56] |

| | Magnitude of change | | [55,56] |

| Time | Autoregressive Coefficients | | [47] |

| Kinematics | User steps frequency | Number of detected steps per unit time | [65] |

| | Walking Elevation | Correlation between the acceleration along the y-axis and either the gravity acceleration or the acceleration along the z-axis | [65] |

| | Correlation Hand and Foot | Acceleration correlation between wrist and ankle | [65] |

| | Heel Strike Force | Mean and variance of the Heel Strike Force, which is computed using dynamics | [65] |

| | Average Velocity | Integral of the acceleration | [50] |
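Two of the kinematic features above reduce to short computations: Average Velocity is defined as the integral of the acceleration, and User steps frequency as the number of detected steps per unit time. The following NumPy sketch illustrates both, assuming acceleration in m/s², zero initial velocity, and a fixed magnitude threshold for step detection. The function names and the simple local-maximum rule are illustrative assumptions, not the methods of [50] or [65].

```python
import numpy as np

def average_velocity(acc: np.ndarray, fs: float) -> float:
    """Mean velocity over a window, from cumulative trapezoidal integration of acceleration.

    Assumes zero velocity at the first sample; integration drift is not corrected.
    """
    dt = 1.0 / fs
    increments = (acc[1:] + acc[:-1]) * dt / 2.0      # trapezoid areas between samples
    velocity = np.concatenate(([0.0], np.cumsum(increments)))
    return float(velocity.mean())

def step_frequency(acc_magnitude: np.ndarray, fs: float, threshold: float) -> float:
    """Steps per second, counting local maxima of the acceleration magnitude above `threshold`."""
    x = acc_magnitude
    centre = x[1:-1]
    # Strict rise on the left, non-strict fall on the right: a flat two-sample peak counts once.
    is_peak = (centre > x[:-2]) & (centre >= x[2:]) & (centre > threshold)
    return float(np.count_nonzero(is_peak) / (len(x) / fs))

fs = 100.0
print(average_velocity(np.ones(100), fs))              # about 0.495 (1 m/s^2 for 1 s)
t = np.arange(500) / fs                                 # 5 s of a walking-like signal
magnitude = 9.81 + np.sin(2 * np.pi * 2.0 * t)          # two acceleration peaks per second
print(step_frequency(magnitude, fs, threshold=10.3))    # 2.0
```

On real IMU data, integrated velocity drifts quickly, so a practical implementation would remove gravity and correct drift first; the threshold for step detection would likewise need tuning per sensor placement.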

| Metric | No. of Publications |

|---|---|

| Accuracy | 38 |

| Precision | 12 |

| Recall | 11 |

| Weighted F1 | 5 |

| Mean F1 | 6 |
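The table counts how often each metric is reported. The plain-Python sketch below computes all of them for a toy labelling, assuming one label per window; `har_metrics` is a hypothetical helper, and the F1 variants follow their common definitions. Mean F1 averages the per-class F1 scores equally, whereas weighted F1 weights each class by its share of the ground-truth samples, so the two can diverge on imbalanced HAR data.

```python
from collections import Counter

def har_metrics(y_true: list, y_pred: list) -> dict:
    """Accuracy, per-class precision/recall, mean F1 and weighted F1 for one labelling."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    support = Counter(y_true)                     # number of true samples per class
    precision, recall, f1 = {}, {}, {}
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision[c] = tp / (tp + fp) if tp + fp else 0.0
        recall[c] = tp / (tp + fn) if tp + fn else 0.0
        pr = precision[c] + recall[c]
        f1[c] = 2 * precision[c] * recall[c] / pr if pr else 0.0
    n = len(pairs)
    return {
        "accuracy": sum(1 for t, p in pairs if t == p) / n,
        "precision": precision,
        "recall": recall,
        "mean_f1": sum(f1.values()) / len(labels),                    # classes count equally
        "weighted_f1": sum(f1[c] * support[c] for c in labels) / n,   # weighted by class share
    }

y_true = ["walk", "walk", "stand", "pick"]
y_pred = ["walk", "stand", "stand", "pick"]
m = har_metrics(y_true, y_pred)
print(m["accuracy"], round(m["mean_f1"], 3), round(m["weighted_f1"], 3))
```

For these toy labels, accuracy and weighted F1 are both 0.75, while mean F1 is 7/9 ≈ 0.778.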

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Reining, C.; Niemann, F.; Moya Rueda, F.; Fink, G.A.; ten Hompel, M. Human Activity Recognition for Production and Logistics—A Systematic Literature Review. Information 2019, 10, 245. https://doi.org/10.3390/info10080245
