Evaluating the Response of AI-Based Large Language Models to Common Patient Concerns About Endodontic Root Canal Treatment: A Comparative Performance Analysis

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Question Set

2.3. Response Generation and Data Collection

- ChatGPT 5 (OpenAI Global, San Francisco, CA, USA; model identifier: gpt 5; access date: July 2025; access platform: local inference; account type: self-hosted; default generation settings: temperature = 1.0; top-p = 1.0; no context window limits were exceeded).

- DeepSeek V3 (DeepSeek, Hangzhou, China; model identifier: deepseek v3; access date: June 2025; access platform: local inference; account type: self-hosted; default generation settings: temperature = 1.0; top-p = 1.0; no context window limits were exceeded).

- Gemini 2.5 Flash (Google, Mountain View, CA, USA; model identifier: Gemini 2.5 Flash; access date: August 2025; access platform: local inference; account type: self-hosted; default generation settings: temperature = 1.0; top-p = 0.95; no context window limits were exceeded).
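The generation settings listed above can be read through the standard textbook definitions of temperature scaling and nucleus (top-p) sampling. The sketch below illustrates only those generic definitions. It is not how OpenAI, DeepSeek, or Google implement decoding internally, and the function name `sampling_distribution` and the example logits are hypothetical. Under these definitions, temperature = 1.0 leaves the softmax unchanged and top-p = 1.0 keeps every token. Top-p = 0.95, as reported for Gemini 2.5 Flash, drops the lowest-probability tail once 95% of the probability mass is covered.

```python
import math
from typing import List, Sequence


def sampling_distribution(logits: Sequence[float], temperature: float = 1.0,
                          top_p: float = 1.0) -> List[float]:
    """Next-token probabilities after temperature scaling and top-p (nucleus) truncation.

    Hypothetical helper illustrating the textbook definitions only.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    # Temperature divides the logits; 1.0 leaves the distribution unchanged.
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Nucleus sampling: keep the smallest set of most probable tokens
    # whose cumulative probability reaches top_p, then renormalize.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return [probs[i] / mass if i in kept else 0.0 for i in range(len(probs))]


# Illustrative logits for four candidate tokens (not taken from any real model).
logits = [2.0, 1.0, 0.0, -1.0]
print(sampling_distribution(logits, temperature=1.0, top_p=1.0))   # all four tokens remain possible
print(sampling_distribution(logits, temperature=1.0, top_p=0.95))  # lowest-probability token is dropped
```

Because temperature = 1.0 was used for all three models, any difference in sampling behavior under these definitions would come only from the top-p setting.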

2.4. Evaluation Criteria and Scoring Method

2.5. Data Recording and Management

2.6. Statistical Analysis

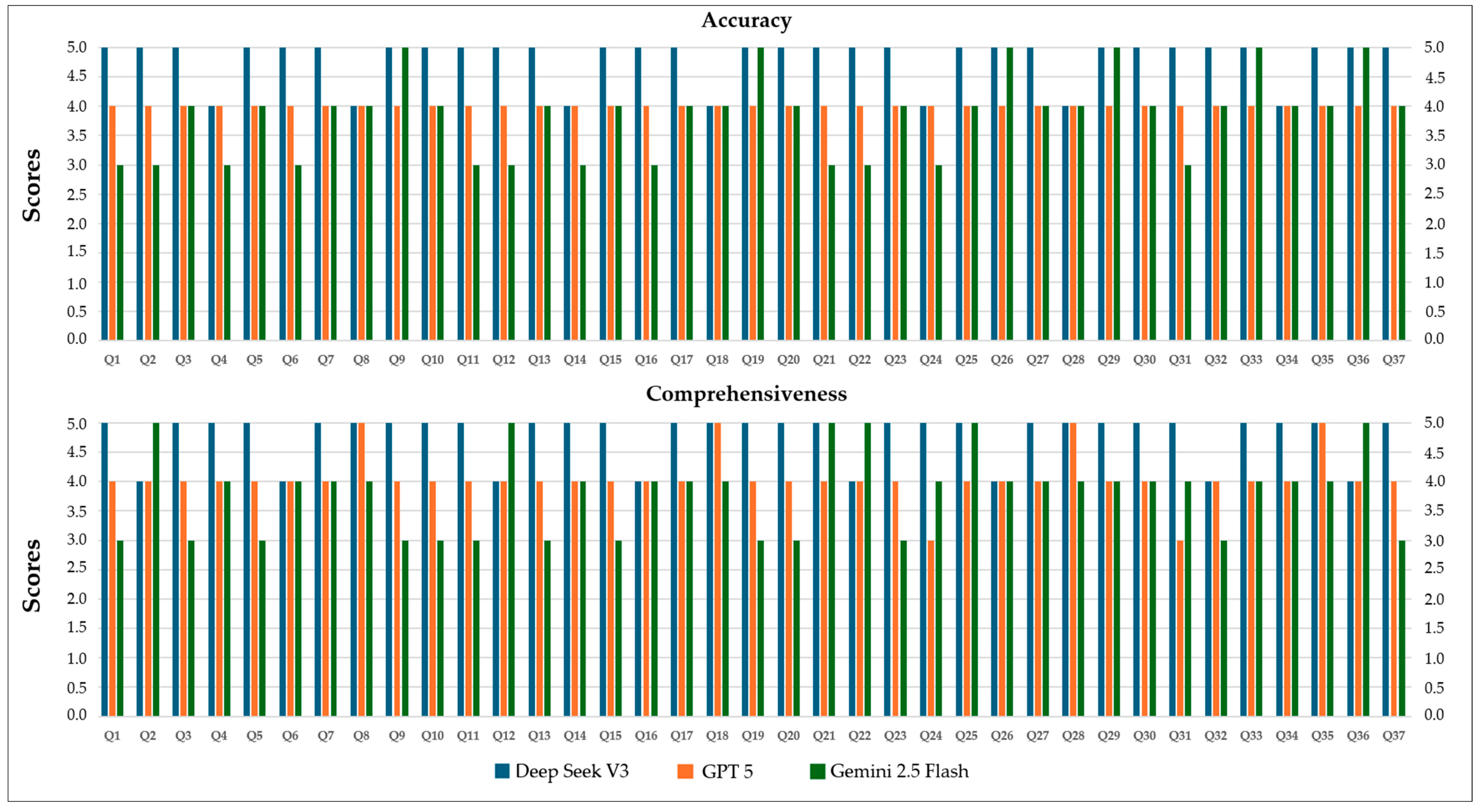

3. Results

- For accuracy, DeepSeek V3 versus GPT 5 showed p < 0.001 and a Cliff’s delta (δ) of 0.81, indicating a very large and statistically significant difference.

- For accuracy, DeepSeek V3 versus Gemini 2.5 Flash yielded p < 0.001 and δ = 0.71, reflecting a large and statistically significant difference.

- For accuracy, GPT 5 versus Gemini 2.5 Flash had p = 0.109 and δ = 0.16, a small effect that was not statistically significant.

- For comprehensiveness, DeepSeek V3 versus GPT 5 showed p < 0.001 and δ = 0.69, indicating a large and highly significant difference.

- For comprehensiveness, DeepSeek V3 versus Gemini 2.5 Flash had p < 0.001 and δ = 0.70, again reflecting a large and statistically significant difference.

- For comprehensiveness, GPT 5 versus Gemini 2.5 Flash yielded p = 0.058 and δ = 0.21; the difference was not statistically significant, although the effect size suggests a small-to-moderate difference.
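Cliff’s delta, used in the pairwise comparisons above, is the probability that a score from one model exceeds a score from the other minus the reverse probability, so it ranges from −1 to 1. The following is a minimal Python sketch. The magnitude cut-offs (0.147, 0.33, 0.474) are a widely used convention and an assumption here, because this section does not state which thresholds were applied. The sample scores and function names are hypothetical.

```python
from typing import Sequence


def cliffs_delta(x: Sequence[float], y: Sequence[float]) -> float:
    """Cliff's delta = P(X > Y) - P(X < Y), estimated over all (x_i, y_j) pairs."""
    if not x or not y:
        raise ValueError("both samples must be non-empty")
    greater = sum(1 for xi in x for yj in y if xi > yj)
    less = sum(1 for xi in x for yj in y if xi < yj)
    return (greater - less) / (len(x) * len(y))


def magnitude(delta: float) -> str:
    """Label |delta| with commonly used cut-offs (assumed, not stated in the article)."""
    d = abs(delta)
    if d < 0.147:
        return "negligible"
    if d < 0.33:
        return "small"
    if d < 0.474:
        return "medium"
    return "large"


# Hypothetical 1-5 Likert scores for two models (not the study data).
model_a = [5, 5, 4]
model_b = [4, 4, 3]
delta = cliffs_delta(model_a, model_b)
print(round(delta, 3), magnitude(delta))  # 0.778 large
```

Under these cut-offs, the reported values from 0.69 to 0.81 fall in the large band, while 0.16 and 0.21 fall in the small band. Tied scores, which are frequent on a five-point scale, count toward neither the "greater" nor the "less" term.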

4. Discussion

5. Conclusions

- LLMs showed significant differences in accuracy and comprehensiveness when responding to patient FAQs regarding root canal treatment.

- DeepSeek V3 achieved higher accuracy and comprehensiveness scores than GPT 5 and Gemini 2.5 Flash. However, these findings are based on a Likert-based rubric applied to a limited English-language question set and should not be interpreted as definitive evidence of absolute superiority.

- Advanced LLMs may serve as supportive tools in patient education and health literacy related to root canal treatment; however, expert clinician oversight remains essential in clinical decision-making and treatment planning.

- When used appropriately, LLMs can help raise patient awareness and support patient satisfaction during endodontic treatment.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| LLM | Large Language Model |
| AAE | American Association of Endodontists |
| FAQ | Frequently Asked Questions |
| ICC | Intraclass Correlation Coefficient |
| SD | Standard Deviation |


| Question category |
|---|
| General definition and purpose |
| Symptoms and indications |
| During and after the procedure |
| Alternatives, success, and risks |
| Pain, safety, and misconceptions |
| Cost and insurance |
| Specialty and referral |

| Score | Accuracy: Category | Accuracy: Definition | Comprehensiveness: Category | Comprehensiveness: Definition |
|---|---|---|---|---|
| 1 | Completely incorrect | Inconsistent with current scientific evidence or clinically misleading | Not adequate | Addresses only a minor part of the question; highly inadequate explanation |
| 2 | More incorrect than correct | Includes some correct elements but overall misleading | Somewhat adequate | Covers the question partially; omits key points |
| 3 | Partially correct/partially incomplete | Contains essential information but with noticeable gaps or superficial detail | Adequate | Provides a general answer to the question; lacks depth in some areas |
| 4 | More correct than incorrect | Minor factual or terminological inaccuracies may exist | Very adequate | Addresses most aspects of the question with sufficient and detailed explanation |
| 5 | Completely correct | Fully consistent with current evidence and clinical guidelines | Extremely adequate | Thoroughly and clearly covers all relevant aspects of the question in a balanced and informative manner |

| Criteria | DeepSeek V3 a | GPT 5 b | Gemini 2.5 Flash c | p |
|---|---|---|---|---|
| | Mean ± SD; (Median) [Q1–Q3] | Mean ± SD; (Median) [Q1–Q3] | Mean ± SD; (Median) [Q1–Q3] | |
| Accuracy | 4.81 ± 0.39; (5) b,c [5–5] | 4.00 ± 0.00; (4) [4–4] | 3.83 ± 0.68; (4) [3–4] | <0.001 *,K |
| Comprehensiveness | 4.78 ± 0.41; (5) b,c [5–5] | 4.05 ± 0.40; (4) [4–4] | 3.81 ± 0.70; (4) [3–4] | 0.001 *,K |
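Each cell above reports Mean ± SD; (Median) [Q1–Q3]. The helper below sketches how such a cell could be produced with Python’s standard `statistics` module. It assumes a sample standard deviation (n − 1 denominator) and the `inclusive` quartile method. The article does not specify either choice, and statistical packages compute quartiles differently, so results on the study data may not match the table exactly. The function name `describe` is hypothetical.

```python
import statistics
from typing import Sequence


def describe(scores: Sequence[float]) -> str:
    """Format scores as 'Mean ± SD; (Median) [Q1–Q3]' (hypothetical helper)."""
    if len(scores) < 2:
        raise ValueError("at least two scores are needed for SD and quartiles")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample SD, n - 1 denominator (assumption)
    median = statistics.median(scores)
    # 'inclusive' interpolation is one of several quartile conventions (assumption).
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return f"{mean:.2f} ± {sd:.2f}; ({median:g}) [{q1:g}–{q3:g}]"


print(describe([4, 4, 4, 4]))  # 4.00 ± 0.00; (4) [4–4]
print(describe([3, 4, 4, 5]))  # 4.00 ± 0.82; (4) [3.75–4.25]
```

A constant set of scores, such as four ratings of 4, produces the same pattern as the GPT 5 accuracy cell: zero spread and identical quartiles.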

| Criteria | Eta Squared (η²) | Pairwise Comparison | p | Cliff’s Delta (δ) |
|---|---|---|---|---|
| Accuracy | 0.49 | DeepSeek V3 vs. GPT 5 | <0.001 * | 0.81 |
| | | DeepSeek V3 vs. Gemini 2.5 Flash | <0.001 * | 0.71 |
| | | GPT 5 vs. Gemini 2.5 Flash | 0.109 | 0.16 |
| Comprehensiveness | 0.39 | DeepSeek V3 vs. GPT 5 | <0.001 * | 0.69 |
| | | DeepSeek V3 vs. Gemini 2.5 Flash | <0.001 * | 0.70 |
| | | GPT 5 vs. Gemini 2.5 Flash | 0.058 | 0.21 |
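The η² values accompany the omnibus comparison of the three models. This sketch rests on two assumptions: that the K superscript in the descriptive table denotes a Kruskal-Wallis test, and that η² follows the common rank-based formula η²_H = (H − k + 1)/(n − k), where k is the number of groups and n the total number of ratings. Under those assumptions, the computation could look like the code below. Neither the exact formula nor the tie handling is confirmed by the article. The function names are hypothetical and the toy scores are illustrative only.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..N, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def kruskal_wallis_eta_squared(*groups: Sequence[float]) -> Tuple[float, float]:
    """Return tie-corrected Kruskal-Wallis H and eta^2_H = (H - k + 1) / (n - k)."""
    k = len(groups)
    if k < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    if n <= k:
        raise ValueError("need more observations than groups")
    ranks = midranks(pooled)

    # H = 12 / (n (n + 1)) * sum(R_g^2 / n_g) - 3 (n + 1)
    rank_term, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * rank_term - 3 * (n + 1)

    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over tied values.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction > 0:  # correction is 0 only when every score is identical
        h /= correction
    return h, (h - k + 1) / (n - k)


# Toy 1-5 scores for three hypothetical models (not the study data).
h, eta2 = kruskal_wallis_eta_squared([5, 5, 4, 5], [4, 4, 4, 4], [3, 4, 4, 3])
print(f"H = {h:.3f}, eta^2_H = {eta2:.3f}")
```

Tied ratings are common on a 1–5 Likert scale. For that reason, the sketch assigns average (mid) ranks and applies the standard tie correction to H before converting it to η².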

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Demir Cicek, B.; Cicek, O. Evaluating the Response of AI-Based Large Language Models to Common Patient Concerns About Endodontic Root Canal Treatment: A Comparative Performance Analysis. J. Clin. Med. 2025, 14, 7482. https://doi.org/10.3390/jcm14217482
