Psychometric Calibration of a Tool Based on 360 Degree Videos for the Assessment of Executive Functions

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Procedure of Study

2.2.1. Pre-Task Evaluation

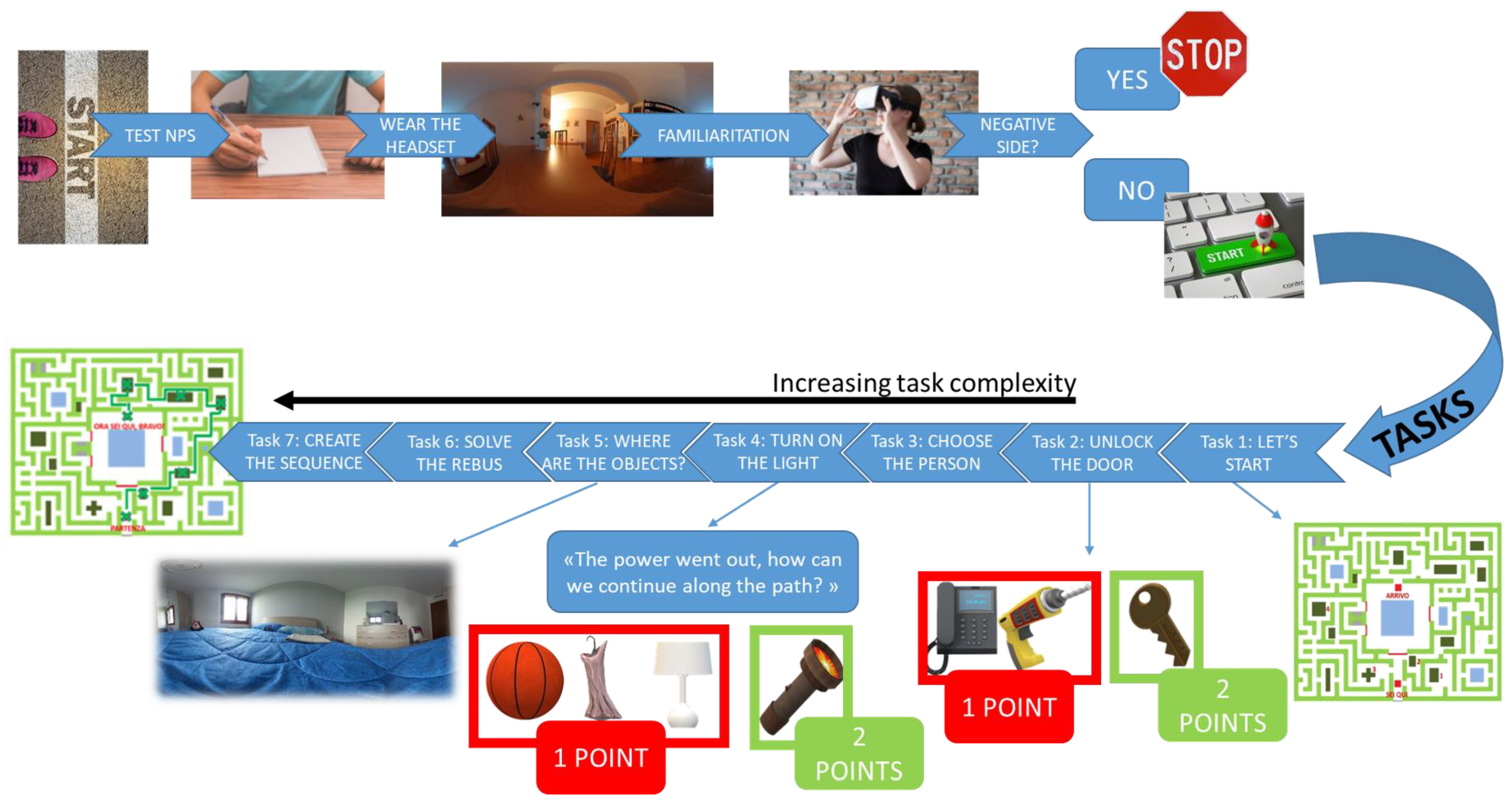

2.2.2. EXIT 360° Session

2.2.3. Post-Task Evaluation

2.3. Statistical Analysis

3. Results

3.1. Participants

3.2. Traditional Neuropsychological Assessment

3.3. EXIT 360°

3.4. Correlation between Neuropsychological Tests and EXIT 360°

3.5. Usability

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References


| Name | Executive Function |
|---|---|
| Trail Making Test | Visual search; Task switching; Cognitive flexibility |
| Verbal Fluency Task | Access to vocabulary on phonemic key |
| Stroop Test | Inhibition |
| Digit Span Backward | Working memory |
| Frontal Assessment Battery | Abstraction; Cognitive flexibility; Motor programming/planning; Interference sensitivity; Inhibition control |
| Attentive Matrices | Visual search; Selective attention |
| Progressive Matrices of Raven | Sustained and selective attention; Reasoning |

| Task | Name | Executive Function |
|---|---|---|
| Task 1 | Let’s Start | Planning–Inhibition Control–Visual Search |
| Task 2 | Unlock the Door | Decision Making |
| Task 3 | Choose the Person | Divided Attention–Inhibition Control–Visual Search |
| Task 4 | Turn On the Light | Problem Solving–Planning–Inhibition Control |
| Task 5 | Where Are the Objects? | Visual Search–Selective and Divided Attention–Reasoning |
| Task 6 | Solve the Rebus | Planning–Reasoning–Set shifting–Selective and Divided Attention |
| Task 7 | Create the Sequence | Working Memory–Selective Attention–Inhibition Control |

| Subjects [n = 77] | Statistic | Value |
|---|---|---|
| Age (years) | Mean (SD) | 53.2 (20.40) |
| Sex (M:F) | | 29:48 |
| Education (years) | Median (IQR) | 13 (13–18) |
| MoCA raw score | Mean (SD) | 26.9 (2.37) |
| MoCA corrected score | Mean (SD) | 25.9 (2.62) |

| Neuropsychological Tests | Raw Score Mean (SD) | Corrected Score Mean (SD) | Cut-Off of Normality |
|---|---|---|---|
| Trail Making Test–Part A * | 37.2 (22.9) | 35.1 (19.3) | ≤68 |
| Trail Making Test–Part B * | 94.1 (58.9) | 90.6 (48.4) | ≤177 |
| Trail Making Test–Part B-A * | 56.9 (42.3) | 57 (34.2) | ≤111 |
| Verbal Fluency Task | 41.6 (11.1) | 37.9 (9.46) | ≥23 |
| Stroop Test–Errors | 0.68 (1.09) | 0.62 (1.13) | ≤2.82 |
| Stroop Test–Time * | 22.6 (15.5) | 23.8 (11.5) | ≤31.65 |
| Frontal Assessment Battery | 17.6 (1) | 17.7 (0.85) | ≥14.40 |
| Digit Span Backward | 4.77 (0.99) | 4.56 (0.97) | ≥3.29 |
| Attentive Matrices | 54.3 (5.53) | 48.6 (6.43) | ≥37 |
| Progressive Matrices of Raven | 32.3 (3.63) | 32.3 (3.2) | ≥23.5 |
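
The direction of each cut-off in the table above (≤ for time and error measures, ≥ for accuracy measures) can be read mechanically. The following Python sketch is only an illustration: it copies the mean corrected scores and cut-offs from the table and checks each mean against its cut-off. The names `ROWS` and `within_normal` are hypothetical, and applying the cut-offs to group means rather than to individual corrected scores is a simplifying assumption made here, not a procedure described in this section.

```python
# Mean corrected scores and cut-offs copied from the table above.
# Each entry: (test name, mean corrected score, direction, cut-off).
# Assumption: cut-offs are applied to group means purely for illustration.
ROWS = [
    ("Trail Making Test-Part A", 35.1, "<=", 68),
    ("Trail Making Test-Part B", 90.6, "<=", 177),
    ("Trail Making Test-Part B-A", 57.0, "<=", 111),
    ("Verbal Fluency Task", 37.9, ">=", 23),
    ("Stroop Test-Errors", 0.62, "<=", 2.82),
    ("Stroop Test-Time", 23.8, "<=", 31.65),
    ("Frontal Assessment Battery", 17.7, ">=", 14.40),
    ("Digit Span Backward", 4.56, ">=", 3.29),
    ("Attentive Matrices", 48.6, ">=", 37),
    ("Progressive Matrices of Raven", 32.3, ">=", 23.5),
]


def within_normal(value, direction, cutoff):
    """Return True when the value satisfies the cut-off of normality."""
    if direction == "<=":
        return value <= cutoff
    if direction == ">=":
        return value >= cutoff
    raise ValueError(f"unknown direction: {direction!r}")


# Map each test name to whether its mean corrected score meets the cut-off.
results = {name: within_normal(mean, d, cut) for name, mean, d, cut in ROWS}

for name, ok in results.items():
    print(f"{name}: {'within' if ok else 'outside'} the cut-off")
```

In clinical use the same comparison would be made on each participant's corrected score rather than on the sample mean.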

| Neuropsychological Test | EXIT 360° Total Score | EXIT 360° Total Reaction Time |
|---|---|---|
| Montreal Cognitive Assessment | 0.48 ** | −0.31 * |
| Progressive Matrices of Raven | 0.44 ** | - |
| Attentive Matrices | 0.26 * | −0.23 * |
| Frontal Assessment Battery | 0.41 ** | - |
| Verbal Fluency Task | 0.54 ** | - |
| Digit Span Backward | 0.32 * | - |
| Trail Making Test–Part A | - | 0.14 |
| Trail Making Test–Part B | - | 0.27 * |
| Trail Making Test–Part B-A | - | 0.29 * |
| Stroop Test–Errors | −0.32 * | 0.25 * |
| Stroop Test–Time | −0.45 ** | 0.28 * |
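
To give a feel for how the size of a correlation coefficient relates to statistical significance at this sample size, the sketch below computes an approximate two-sided p-value with the Fisher z-transformation. It is a hedged illustration rather than a reproduction of the analysis: the type of correlation coefficient, the meaning of the asterisks, and the number of participants contributing to each coefficient are not stated in this section, so the function name `fisher_p_value` and the use of n = 77 for every coefficient are assumptions.

```python
import math


def fisher_p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0 (Fisher z-transform).

    Assumes a Pearson-type coefficient r computed on n independent pairs.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    # atanh(r) is approximately normal with standard error 1 / sqrt(n - 3).
    z = math.atanh(r) * math.sqrt(n - 3)
    # Two-sided tail area of the standard normal: 2 * (1 - Phi(|z|)).
    return math.erfc(abs(z) / math.sqrt(2))


# Coefficients taken from the table above; n = 77 is an assumption.
N = 77
for label, r in [
    ("Verbal Fluency Task vs. total score", 0.54),
    ("Attentive Matrices vs. total reaction time", -0.23),
    ("Trail Making Test-Part A vs. total reaction time", 0.14),
]:
    print(f"{label}: r = {r:+.2f}, approximate p = {fisher_p_value(r, N):.4f}")
```

Under these assumptions, the smallest unstarred coefficient in the table (0.14) does not reach the conventional 0.05 threshold, whereas the starred coefficients of 0.23 and above do.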

| Test | Task 1 Score | Task 1 Time | Task 2 Score | Task 2 Time | Task 3 Score | Task 3 Time | Task 4 Score | Task 4 Time | Task 5 Score | Task 5 Time | Task 6 Score | Task 6 Time | Task 7 Score | Task 7 Time |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PMR | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.241 | n.s. | 0.484 | n.s. | 0.296 | n.s. |
| AM | n.s. | n.s. | n.s. | n.s. | n.s. | −0.218 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.284 | −0.226 |
| FAB | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.254 | n.s. | n.s. | n.s. | 0.266 | n.s. | 0.283 | n.s. |
| V.F.T. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.489 | n.s. | 0.438 | n.s. |
| DS | n.s. | −0.269 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | 0.251 | n.s. | 0.341 | −0.253 | 0.303 | n.s. |
| TMT–A | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.301 | n.s. | −0.462 | n.s. | −0.299 | 0.244 |
| TMT–B | n.s. | 0.333 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.31 | n.s. | −0.36 | n.s. | n.s. | n.s. |
| TMT B-A | n.s. | 0.366 | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.259 | n.s. | n.s. | n.s. | n.s. | n.s. |
| ST_E | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | n.s. | −0.29 | 0.28 | n.s. | n.s. | n.s. | n.s. |
| ST_T | n.s. | 0.339 | n.s. | n.s. | n.s. | n.s. | −0.282 | n.s. | −0.297 | n.s. | −0.344 | n.s. | −0.329 | 0.286 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Borgnis, F.; Borghesi, F.; Rossetto, F.; Pedroli, E.; Lavorgna, L.; Riva, G.; Baglio, F.; Cipresso, P. Psychometric Calibration of a Tool Based on 360 Degree Videos for the Assessment of Executive Functions. J. Clin. Med. 2023, 12, 1645. https://doi.org/10.3390/jcm12041645
