A Psychometric Tool for Evaluating Executive Functions in Parkinson’s Disease

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Procedure

2.3. Statistical Analysis

3. Results

3.1. Participants

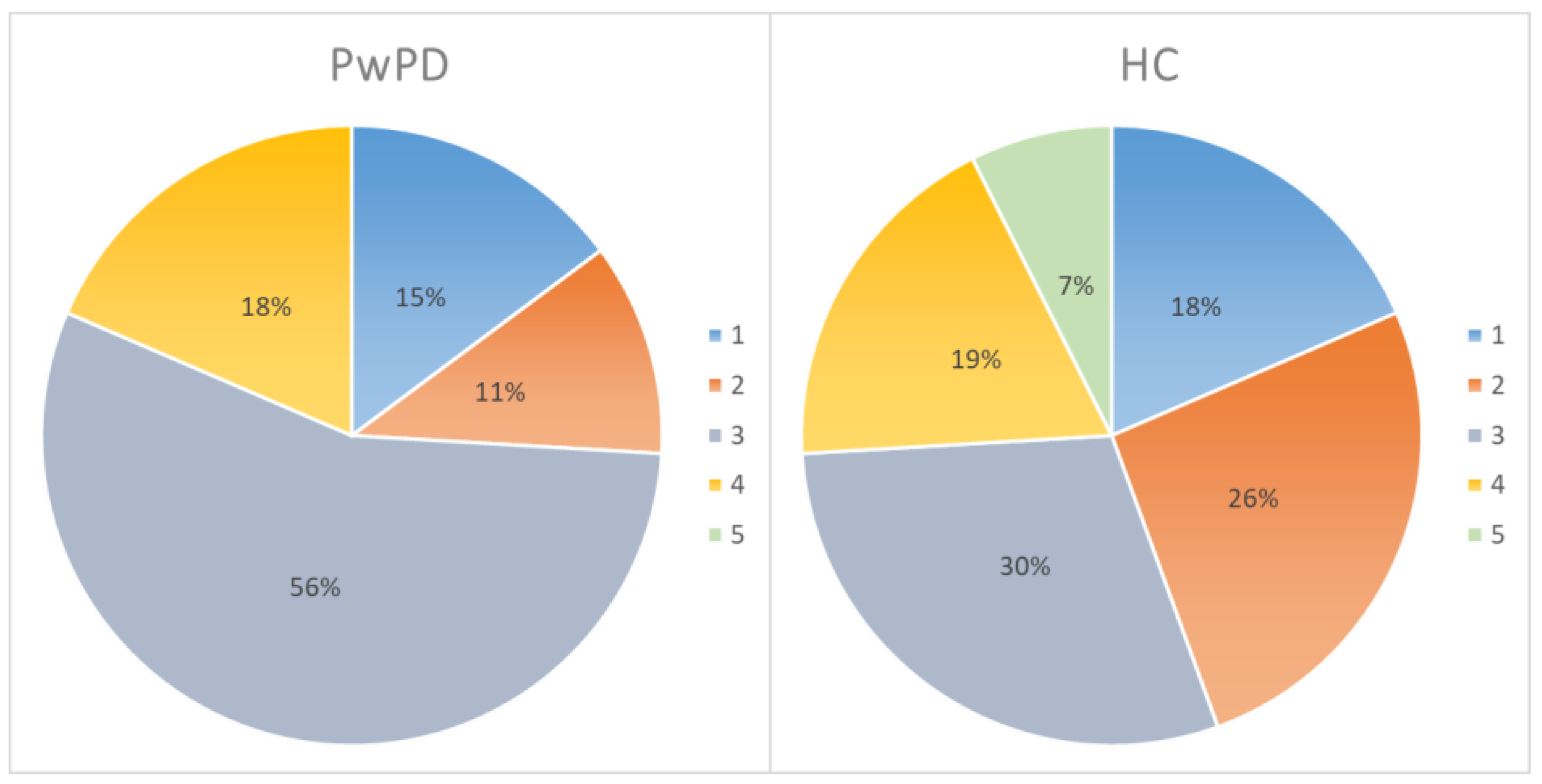

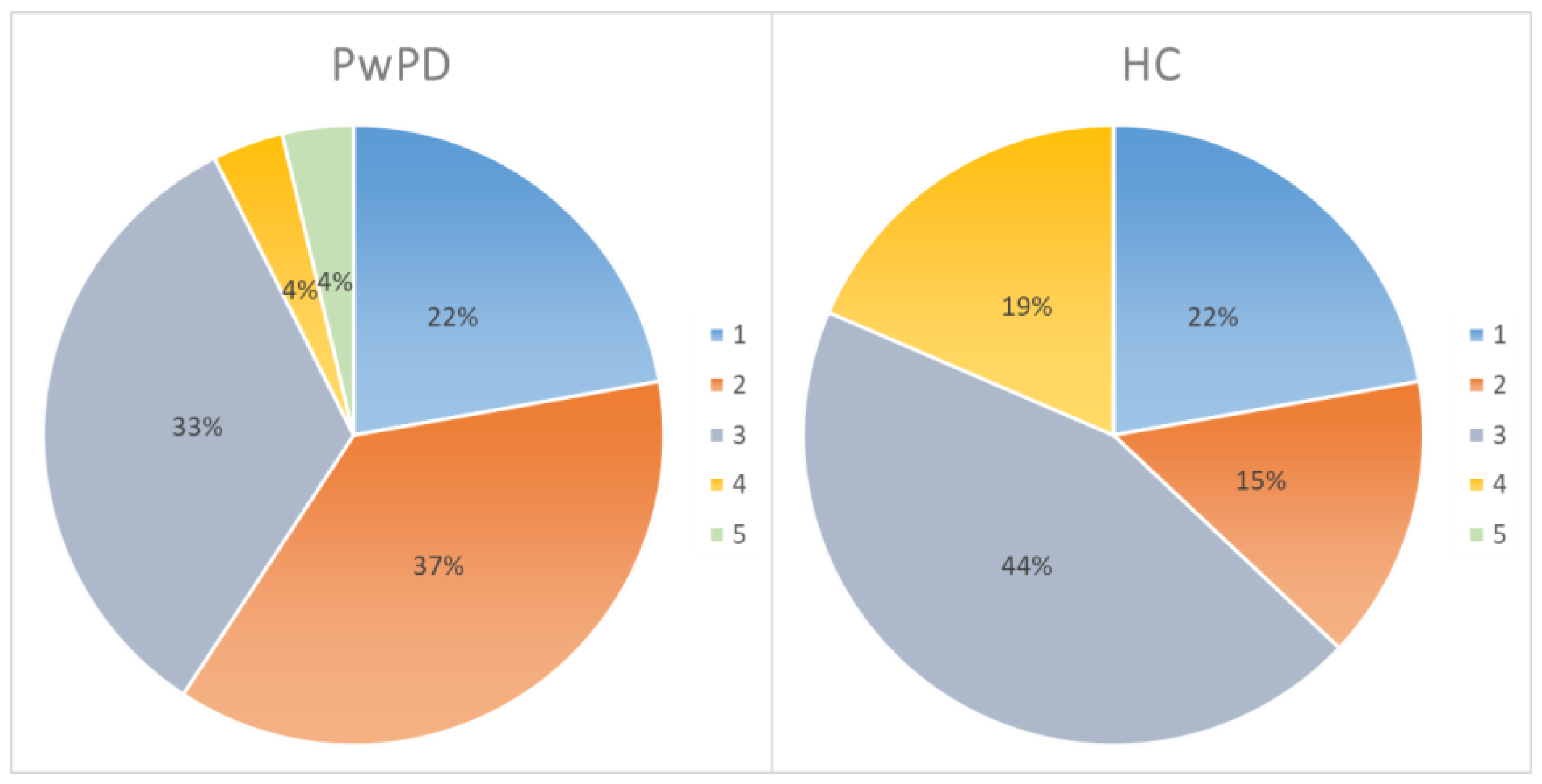

3.2. Technological Expertise

3.3. Neuropsychological Evaluation

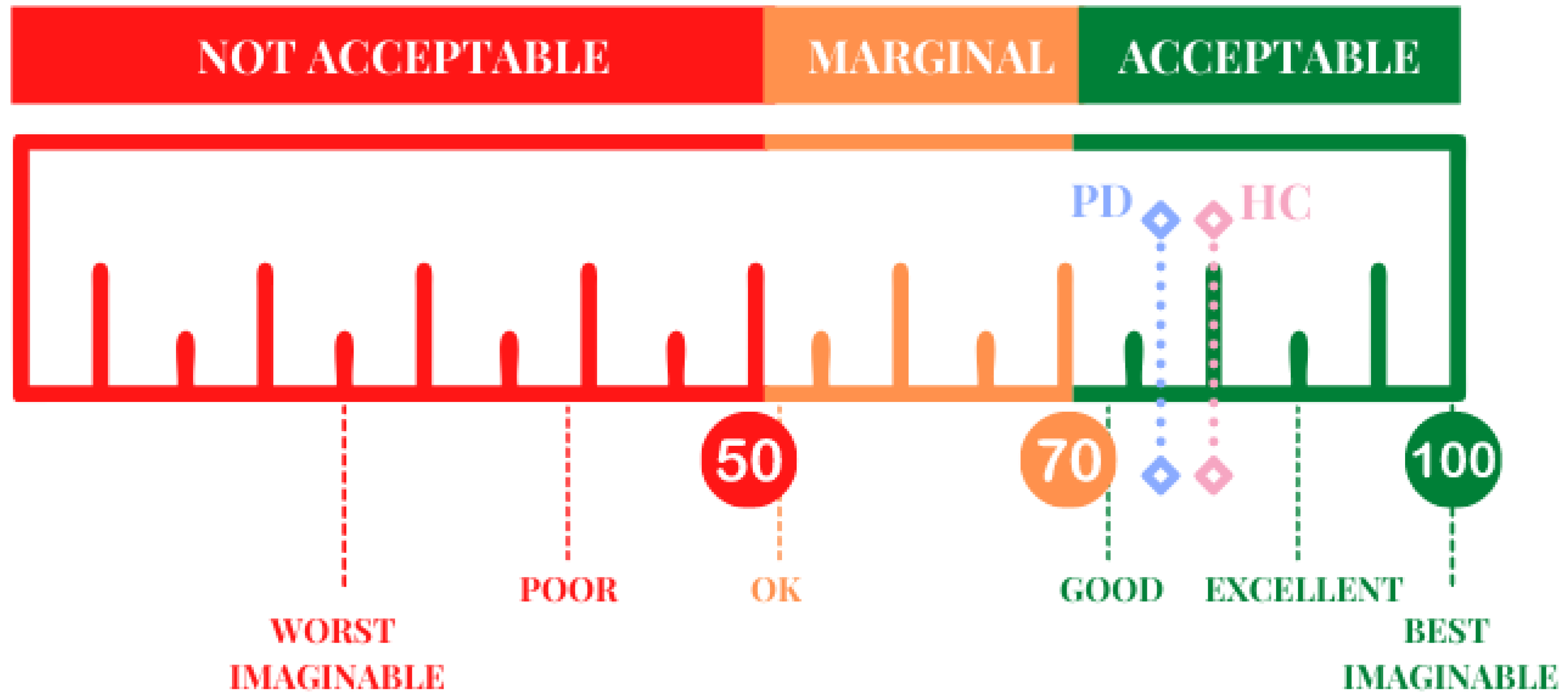

3.4. EXIT 360°: Usability

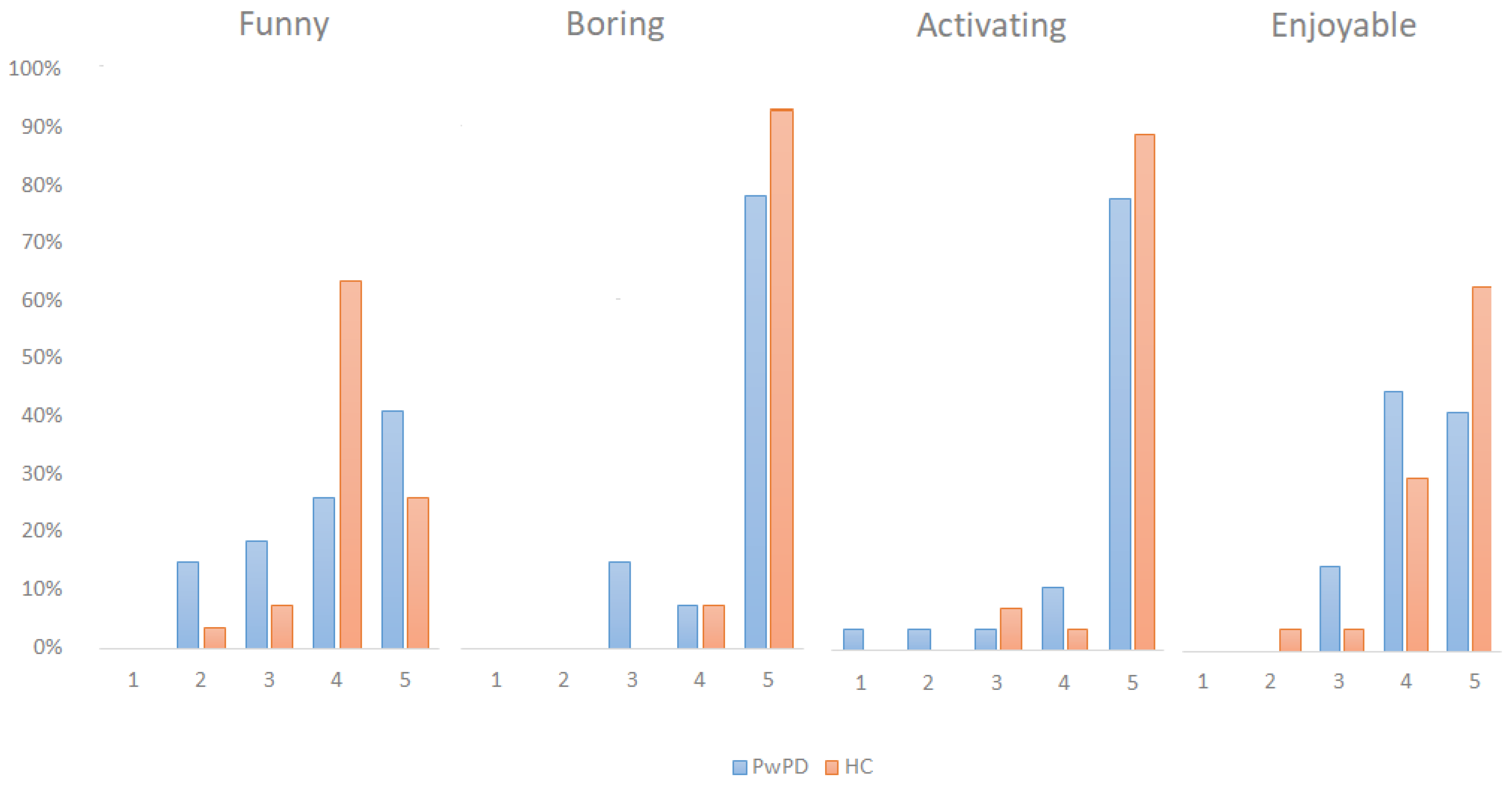

3.5. EXIT 360°: User Experience

3.6. Correlation

4. Discussion

Limitations and Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ED | Executive Dysfunctions |
| EFs | Executive Functions |
| EXIT 360° | EXecutive-functions Innovative Tool 360° |
| F | Female |
| FAB | Frontal Assessment Battery |
| HC | Healthy Control |
| ITC-SOPI | ITC-Sense of Presence Inventory |
| IMI | Intrinsic Motivation Inventory |
| IQR | Interquartile Range |
| M | Male |
| MDS | Movement Disorder Society |
| MoCA test | Montreal Cognitive Assessment Test |
| N | Number |
| PD | Parkinson’s Disease |
| PIT | Picture Interpretation Test |
| PwPD | Patients with Parkinson’s Disease |
| SD | Standard Deviation |
| SUS | System Usability Scale |
| UEQ | User Experience Questionnaire |
| VMET | Virtual Multiple Errand Test |
| VR | Virtual Reality |

References

- Neguț, A. Cognitive assessment and rehabilitation in virtual reality: Theoretical review and practical implications. Rom. J. Appl. Psychol. 2014, 16, 1–7.

- Bohil, C.J.; Alicea, B.; Biocca, F.A. Virtual reality in neuroscience research and therapy. Nat. Rev. Neurosci. 2011, 12, 752–762.

- Neguț, A.; Matu, S.-A.; Sava, F.A.; David, D. Virtual reality measures in neuropsychological assessment: A meta-analytic review. Clin. Neuropsychol. 2016, 30, 165–184.

- Camacho-Conde, J.A.; Climent, G. Attentional profile of adolescents with ADHD in virtual-reality dual execution tasks: A pilot study. Appl. Neuropsychol. Child. 2020, 11, 1–10.

- Dahdah, M.N.; Bennett, M.; Prajapati, P.; Parsons, T.D.; Sullivan, E.; Driver, S. Application of virtual environments in a multi-disciplinary day neurorehabilitation program to improve executive functioning using the Stroop task. NeuroRehabilitation 2017, 41, 721–734.

- Cipresso, P.; Albani, G.; Serino, S.; Pedroli, E.; Pallavicini, F.; Mauro, A.; Riva, G. Virtual multiple errands test (VMET): A virtual reality-based tool to detect early executive functions deficit in Parkinson’s disease. Front. Behav. Neurosci. 2014, 8, 1–11.

- Isernia, S.; Di Tella, S.; Pagliari, C.; Jonsdottir, J.; Castiglioni, C.; Gindri, P.; Salza, M.; Gramigna, C.; Palumbo, G.; Molteni, F.; et al. Effects of an innovative telerehabilitation intervention for people with Parkinson’s disease on quality of life, motor, and non-motor abilities. Front. Neurol. 2020, 11, 846.

- Kudlicka, A.; Clare, L.; Hindle, J.V. Executive functions in Parkinson’s disease: Systematic review and meta-analysis. Mov. Disord. 2011, 26, 2305–2315.

- Aarsland, D.; Creese, B.; Politis, M.; Chaudhuri, K.R.; Weintraub, D.; Ballard, C. Cognitive decline in Parkinson disease. Nat. Rev. Neurol. 2017, 13, 217–231.

- Fengler, S.; Liepelt-Scarfone, I.; Brockmann, K.; Schäffer, E.; Berg, D.; Kalbe, E. Cognitive changes in prodromal Parkinson’s disease: A review. Mov. Disord. 2017, 32, 1655–1666.

- Fang, C.; Lv, L.; Mao, S.; Dong, H.; Liu, B. Cognition Deficits in Parkinson’s Disease: Mechanisms and Treatment. Park Dis. 2020, 2020, 2076942.

- Chan, R.C.K.; Shum, D.; Toulopoulou, T.; Chen, E.Y.H. Assessment of executive functions: Review of instruments and identification of critical issues. Arch. Clin. Neuropsychol. 2008, 23, 201–216.

- Leroi, I.; McDonald, K.; Pantula, H.; Harbishettar, V. Cognitive impairment in Parkinson disease: Impact on quality of life, disability, and caregiver burden. J. Geriatr. Psychiatry Neurol. 2012, 25, 208–214.

- Lawson, R.A.; Yarnall, A.J.; Duncan, G.W.; Breen, D.P.; Khoo, T.K.; Williams-Gray, C.H.; Barker, R.A.; Collerton, D.; Taylor, J.P.; Burn, D.J.; et al. Cognitive decline and quality of life in incident Parkinson’s disease: The role of attention. Parkinsonism Relat. Disord. 2016, 27, 47–53.

- Barone, P.; Erro, R.; Picillo, M. Quality of life and nonmotor symptoms in Parkinson’s disease. In International Review of Neurobiology; Elsevier: Amsterdam, The Netherlands, 2017; pp. 499–516.

- Diamond, A. Executive functions. Annu. Rev. Psychol. 2013, 64, 135–168.

- Dirnberger, G.; Jahanshahi, M. Executive dysfunction in Parkinson’s disease: A review. J. Neuropsychol. 2013, 7, 193–224.

- Josman, N.; Schenirderman, A.E.; Klinger, E.; Shevil, E. Using virtual reality to evaluate executive functioning among persons with schizophrenia: A validity study. Schizophr. Res. 2009, 115, 270–277.

- Levine, B.; Stuss, D.T.; Winocur, G.; Binns, M.A.; Fahy, L.; Mandic, M.; Bridges, K.; Robertson, I.H. Cognitive rehabilitation in the elderly: Effects on strategic behavior in relation to goal management. J. Int. Neuropsychol. Soc. JINS 2007, 13, 143.

- Azuma, T.; Cruz, R.F.; Bayles, K.A.; Tomoeda, C.K.; Montgomery, E.B., Jr. A longitudinal study of neuropsychological change in individuals with Parkinson’s disease. Int. J. Geriatr. Psychiatry 2003, 18, 1043–1049.

- Janvin, C.C.; Aarsland, D.; Larsen, J.P. Cognitive predictors of dementia in Parkinson’s disease: A community-based, 4-year longitudinal study. J. Geriatr. Psychiatry Neurol. 2005, 18, 149–154.

- Serino, S.; Pedroli, E.; Cipresso, P.; Pallavicini, F.; Albani, G.; Mauro, A.; Riva, G. The role of virtual reality in neuropsychology: The virtual multiple errands test for the assessment of executive functions in Parkinson’s disease. Intell. Syst. Ref. Libr. 2014, 68, 257–274.

- Armstrong, C.M.; Reger, G.M.; Edwards, J.; Rizzo, A.A.; Courtney, C.G.; Parsons, T.D. Validity of the Virtual Reality Stroop Task (VRST) in active duty military. J. Clin. Exp. Neuropsychol. 2013, 35, 113–123.

- Parsons, T.D. Virtual reality for enhanced ecological validity and experimental control in the clinical, affective and social neurosciences. Front. Hum. Neurosci. 2015, 9, 1–19.

- Serino, S.; Baglio, F.; Rossetto, F.; Realdon, O.; Cipresso, P.; Parsons, T.D.; Cappellini, G.; Mantovani, F.; De Leo, G.; Nemni, R.; et al. Picture Interpretation Test (PIT) 360°: An Innovative Measure of Executive Functions. Sci. Rep. 2017, 7, 1–10.

- Realdon, O.; Serino, S.; Savazzi, F.; Rossetto, F.; Cipresso, P.; Parsons, T.D.; Cappellini, G.; Mantovani, F.; Mendozzi, L.; Nemni, R.; et al. An ecological measure to screen executive functioning in MS: The Picture Interpretation Test (PIT) 360°. Sci. Rep. 2019, 9, 1–8.

- Klinger, E.; Chemin, I.; Lebreton, S.; Marie, R.M. A virtual supermarket to assess cognitive planning. Annu. Rev. Cyber. Therapy Telemed. 2004, 2, 49–57.

- Klinger, E.; Chemin, I.; Lebreton, S.; Marié, R.-M. Virtual action planning in Parkinson’s disease: A control study. Cyberpsychol. Behav. 2006, 9, 342–347.

- Raspelli, S.; Carelli, L.; Morganti, F.; Albani, G.; Pignatti, R.; Mauro, A.; Poletti, B.; Corra, B.; Silani, V.; Riva, G. A neuro vr-based version of the multiple errands test for the assessment of executive functions: A possible approach. J. Cyber. Ther. Rehabil. 2009, 2, 299–314.

- Wiederhold, B.K.; Reality, V.; Riva, G. Annual Review of Cybertherapy and Telemedicine 2010: Advanced Technologies in Behavioral, Social and Neurosciences. Stud. Health Technol. Inform. 2010, 154, 92.

- Violante, M.G.; Vezzetti, E.; Piazzolla, P. Interactive virtual technologies in engineering education: Why not 360° videos? Int. J. Interact. Des. Manuf. 2019, 13, 729–742.

- Borgnis, F.; Baglio, F.; Pedroli, E.; Rossetto, F.; Riva, G.; Cipresso, P. A Simple and Effective Way to Study Executive Functions by Using 360° Videos. Front. Neurosci. 2021, 15, 296.

- Borgnis, F.; Baglio, F.; Pedroli, E.; Rossetto, F.; Isernia, S.; Uccellatore, L.; Riva, G.; Cipresso, P. EXecutive-Functions Innovative Tool (EXIT 360°): A Usability and User Experience Study of an Original 360°-Based Assessment Instrument. Sensors 2021, 21, 5867.

- Tuena, C.; Pedroli, E.; Trimarchi, P.D.; Gallucci, A.; Chiappini, M.; Goulene, K.; Gaggioli, A.; Riva, G.; Lattanzio, F.; Giunco, F.; et al. Usability issues of clinical and research applications of virtual reality in older people: A systematic review. Front. Hum. Neurosci. 2020, 14, 93.

- Sauer, J.; Sonderegger, A.; Schmutz, S. Usability, user experience and accessibility: Towards an integrative model. Ergonomics 2020, 63, 1207–1220.

- Pedroli, E.; Cipresso, P.; Serino, S.; Albani, G.; Riva, G. A Virtual Reality Test for the assessment of cognitive deficits: Usability and perspectives. In Proceedings of the 2013 7th International Conference on Pervasive Computing Technologies for Healthcare and Workshops, Venice, Italy, 5–8 May 2013; Volume 2013, pp. 453–458.

- Pedroli, E.; Greci, L.; Colombo, D.; Serino, S.; Cipresso, P.; Arlati, S.; Mondellini, M.; Boilini, L.; Giussani, V.; Goulene, K.; et al. Characteristics, usability, and users experience of a system combining cognitive and physical therapy in a virtual environment: Positive bike. Sensors 2018, 18, 2343.

- Iso, W. 9241-11. Ergonomic requirements for office work with visual display terminals (VDTs). Int. Organ. Stand. 1998, 45.

- Schultheis, M.T.; Rizzo, A.A. The application of virtual reality technology in rehabilitation. Rehabil. Psychol. 2001, 46, 296.

- Parsons, T.D.; Phillips, A.S. Virtual reality for psychological assessment in clinical practice. Pract. Innov. 2016, 1, 197–217.

- Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005, 53, 695–699.

- Santangelo, G.; Siciliano, M.; Pedone, R.; Vitale, C.; Falco, F.; Bisogno, R.; Siano, P.; Barone, P.; Grossi, D.; Santangelo, F.; et al. Normative data for the Montreal Cognitive Assessment in an Italian population sample. Neurol. Sci. 2015, 36, 585–591.

- Postuma, R.B.; Berg, D.; Stern, M.; Poewe, W.; Olanow, C.W.; Oertel, W.; Obeso, J.; Marek, K.; Litvan, I.; Lang, A.E.; et al. MDS clinical diagnostic criteria for Parkinson’s disease. Mov. Disord. 2015, 30, 1591–1601.

- World Medical Association. Declaration of Helsinki: Ethical principles for medical research involving human subjects. JAMA 2013, 310, 2191–2194.

- Borgnis, F.; Baglio, F.; Pedroli, E.; Rossetto, F.; Meloni, M.; Riva, G.; Cipresso, P. EXIT 360: Executive-functions innovative tool 360. A simple and effective way to study executive functions in Parkinson’s disease by using 360 videos. Appl. Sci. 2021, 11, 6791.

- Appollonio, I.; Leone, M.; Isella, V.; Piamarta, F.; Consoli, T.; Villa, M.L.; Forapani, E.; Russo, A.; Nichelli, P. The Frontal Assessment Battery (FAB): Normative values in an Italian population sample. Neurol. Sci. 2005, 26, 108–116.

- Dubois, B.; Slachevsky, A.; Litvan, I.; Pillon, B. The FAB: A frontal assessment battery at bedside. Neurology 2000, 55, 1621–1626.

- Serino, S.; Repetto, C. New trends in episodic memory assessment: Immersive 360 ecological videos. Front. Psychol. 2018, 9, 1878.

- Brooke, J. System Usability Scale (SUS): A Quick-and-Dirty Method of System Evaluation User Information; Digit Equip Co Ltd.: Reading, UK, 1986; p. 43.

- Brooke, J. SUS-A quick and dirty usability scale. Usability Eval. Ind. 1996, 189, 4–7.

- Lewis, J.R.; Sauro, J. The factor structure of the system usability scale. In International Conference on Human Centered Design; Springer: Berlin/Heidelberg, Germany, 2009; pp. 94–103.

- Laugwitz, B.; Held, T.; Schrepp, M. Construction and evaluation of a user experience questionnaire. In Symposium of the Austrian HCI and Usability Engineering Group; Springer: Berlin/Heidelberg, Germany, 2008; pp. 63–76.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Applying the user experience questionnaire (UEQ) in different evaluation scenarios. In International Conference of Design, User Experience, and Usability; Springer: Berlin/Heidelberg, Germany, 2014; pp. 383–392.

- Schrepp, M.; Thomaschewski, J. Design and Validation of a Framework for the Creation of User Experience Questionnaires. Int. J. Interact. Multimed. Artif. Intell. 2019, 5, 88–95.

- Lessiter, J.; Freeman, J.; Keogh, E.; Davidoff, J. A cross-media presence questionnaire: The ITC-Sense of Presence Inventory. Presence Teleoperators Virtual Environ. 2001, 10, 282–297.

- Engeser, S.; Rheinberg, F.; Vollmeyer, R.; Bischoff, J. Motivation, flow-Erleben und Lernleistung in universitären Lernsettings. Zeitschrift für Pädagogische Psychol. 2005, 19, 159–172.

- Deci, E.L.; Eghrari, H.; Patrick, B.C.; Leone, D.R. Facilitating internalization: The self-determination theory perspective. J. Pers. 1994, 62, 119–142.

- Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Intl. J. Hum.–Computer Interact. 2008, 24, 574–594.

- Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a Benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multim. Artif. Intell. 2017, 4, 40–44.

- Aubin, G.; Béliveau, M.F.; Klinger, E. An exploration of the ecological validity of the Virtual Action Planning–Supermarket (VAP-S) with people with schizophrenia. Neuropsychol. Rehabil. 2018, 28, 689–708.

- Alster, P.; Madetko, N.; Koziorowski, D.; Friedman, A. Progressive Supranuclear Palsy-Parkinsonism Predominant (PSP-P)-A Clinical Challenge at the Boundaries of PSP and Parkinson’s Disease (PD). Front. Neurol. 2020, 11, 180.

- Necpál, J.; Borsek, M.; Jeleňová, B. “Parkinson’s disease” on the way to progressive supranuclear palsy: A review on PSP-parkinsonism. Neurol. Sci. 2021, 42, 4927–4936.

| Scale | Aim | Characteristics |
|---|---|---|
| User Experience Questionnaire (UEQ) [52,53,54] | 1. attractiveness (overall impression of the product); 2. perspicuity (ease of learning how to use the product); 3. efficiency (user’s effort to solve tasks); 4. dependability (feeling of control of the interaction); 5. stimulation (motivation to use the product); 6. novelty (innovation and creativity of the product) | A 26-item scale (semantic differential scale: each item consists of two opposite adjectives, e.g., boring vs. exciting) that allows for calculation of the six domains. |
| ITC-Sense of Presence Inventory (ITC-SOPI) [55] | 1. spatial, physical presence: the feeling of being in a physical space in the virtual environment and having control over it; 2. engagement: the tendency to feel psychologically and pleasantly involved in the virtual environment; 3. ecological validity: the tendency to perceive the virtual environment as real; 4. negative effects: adverse psychological reactions | A 44-item scale rated on a 5-point scale from 1 (“strongly disagree”) to 5 (“strongly agree”). The ITC-SOPI is divided into thoughts and feelings after experiencing the environment (Part A) and while the user was experiencing the environment (Part B). Items are grouped into four dimensions, each scored as the mean of all items contributing to that factor. |
| Flow Short Scale (three items) [56] | perceived level of: abilities in coping with the task; challenges; challenge-skill balance | 5-point scale, from low to high. |
| Intrinsic Motivation Inventory (enjoyment subscale, four items) [57] | participants’ appreciation of the proposed activity (i.e., boring, pleasant, fun, and activating) | 5-point scale, from low to high. Scores on the item “boring” were reversed to align with the remaining items; therefore, across the whole scale, a low item value reflects a negative perception of the experience with EXIT 360°. |
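The two scoring rules mentioned in the table (reversing the “boring” item on a 5-point scale, and scoring each ITC-SOPI dimension as the mean of its contributing items) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ scoring procedure: the function names, the example responses, and the item-to-dimension mapping are all hypothetical.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 5-point scale, as stated in the table


def reverse_score(value: int) -> int:
    """Reverse an item on the 5-point scale (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"score {value} is outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - value


def dimension_means(responses, dimensions):
    """Mean of the items contributing to each dimension."""
    return {
        name: mean(responses[item] for item in items)
        for name, items in dimensions.items()
    }


# Hypothetical answers of one participant to the four enjoyment items.
enjoyment = {"boring": 2, "pleasant": 4, "fun": 4, "activating": 5}
enjoyment["boring"] = reverse_score(enjoyment["boring"])  # 2 becomes 4

# Hypothetical item-to-dimension mapping; the real ITC-SOPI key is not given here.
responses = {"a1": 4, "a2": 3, "b1": 5, "b2": 4}
dimensions = {"engagement": ["a1", "b1"], "negative_effects": ["a2", "b2"]}
scores = dimension_means(responses, dimensions)
```

After reversal, a higher value on every enjoyment item points in the same (positive) direction, which is what allows the items to be read on a common scale.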

| | PwPD (N = 27) | HC (N = 27) | Group Comparison (p-Value) |
|---|---|---|---|
| Age (years, mean (SD)) | 68.2 (9) | 66.4 (10.5) | 0.507 |
| Sex (M:F) | 11:16 | 11:16 | 1.000 |
| Education (years, median (IQR)) | 13 (5) | 13 (5) | 0.740 |
| MoCA raw score (mean (SD)) | 25.4 (3.12) | 26.3 (2.25) | 0.235 |
| MoCA adjusted score (mean (SD)) | 25.3 (2.25) | 26.0 (2.53) | 0.246 |

| | PwPD Mean ± SD | HC Mean ± SD | Group Comparison (p-Value) |
|---|---|---|---|
| Total EXIT Score | 10.5 ± 1.58 | 12.3 ± 1.07 | <0.001 |
| Total Reaction Time | 716.4 ± 174.19 | 457.3 ± 73.60 | <0.001 |
| FAB | 15.94 ± 2.33 | 17.46 ± 1.003 | 0.006 |
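A reader who wants to sanity-check a group comparison from the reported means and standard deviations alone can compute a Welch t statistic from summary data. The sketch below is only an illustration: the test actually used for the p-values in the table is not stated in this excerpt, the function name `welch_t` is hypothetical, and the group size of 27 per group is taken from the demographics table and may not apply to every measure.

```python
from math import sqrt


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2  # squared standard error of each mean
    t = (mean1 - mean2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Total EXIT Score: HC 12.3 ± 1.07 vs. PwPD 10.5 ± 1.58, assuming n = 27 per group.
t, df = welch_t(12.3, 1.07, 27, 10.5, 1.58, 27)
print(f"t = {t:.2f}, df = {df:.1f}")
```

Under these assumptions the statistic is roughly 4.9 on about 46 degrees of freedom, which is in line with the reported p < 0.001; it should not be read as a reproduction of the original analysis.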

| | PwPD Median (IQR) | HC Median (IQR) | Group Comparison (p-Value) |
|---|---|---|---|
| Boring | 5 (5) | 5 (5) | 0.107 |
| Enjoyable | 4 (4–5) | 5 (4–5) | 0.113 |
| Activating | 5 (5) | 5 (5) | 0.28 |
| Funny | 4 (3.5) | 4 (4–4.5) | 0.81 |

| PwPD Mean ± SD | HC Mean ± SD | Group Comparison (p-Value) | |

|---|---|---|---|

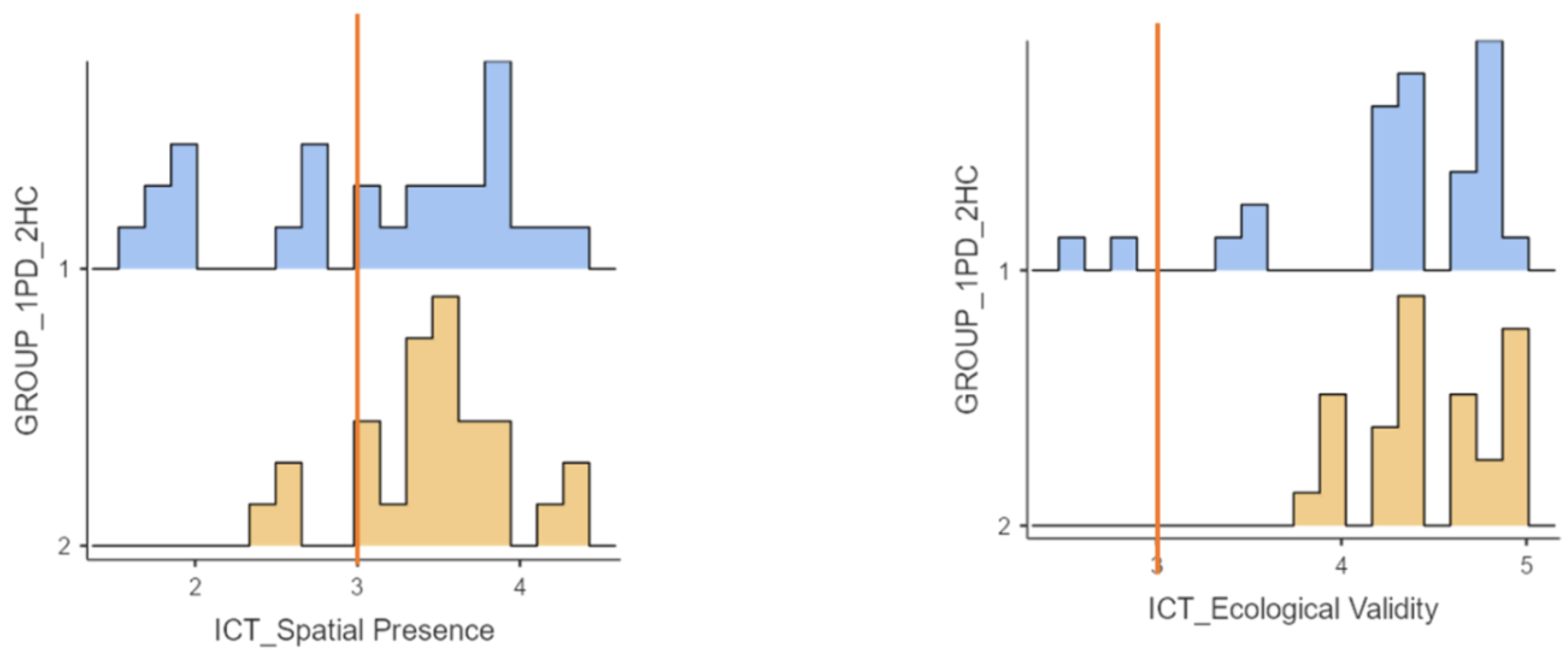

| Spatial Presence | 3.11 ± 0.83 | 3.47 ± 0.48 | 0.054 |

| Engagement | 3.43 ± 0.54 | 3.9 ± 0.47 | 0.001 |

| Ecological Validity | 4.29 ± 0.61 | 4.49 ± 0.37 | 0.149 |

| Negative Effects | 1.29 ± 0.42 | 1.2 ± 0.26 | 0.361 |

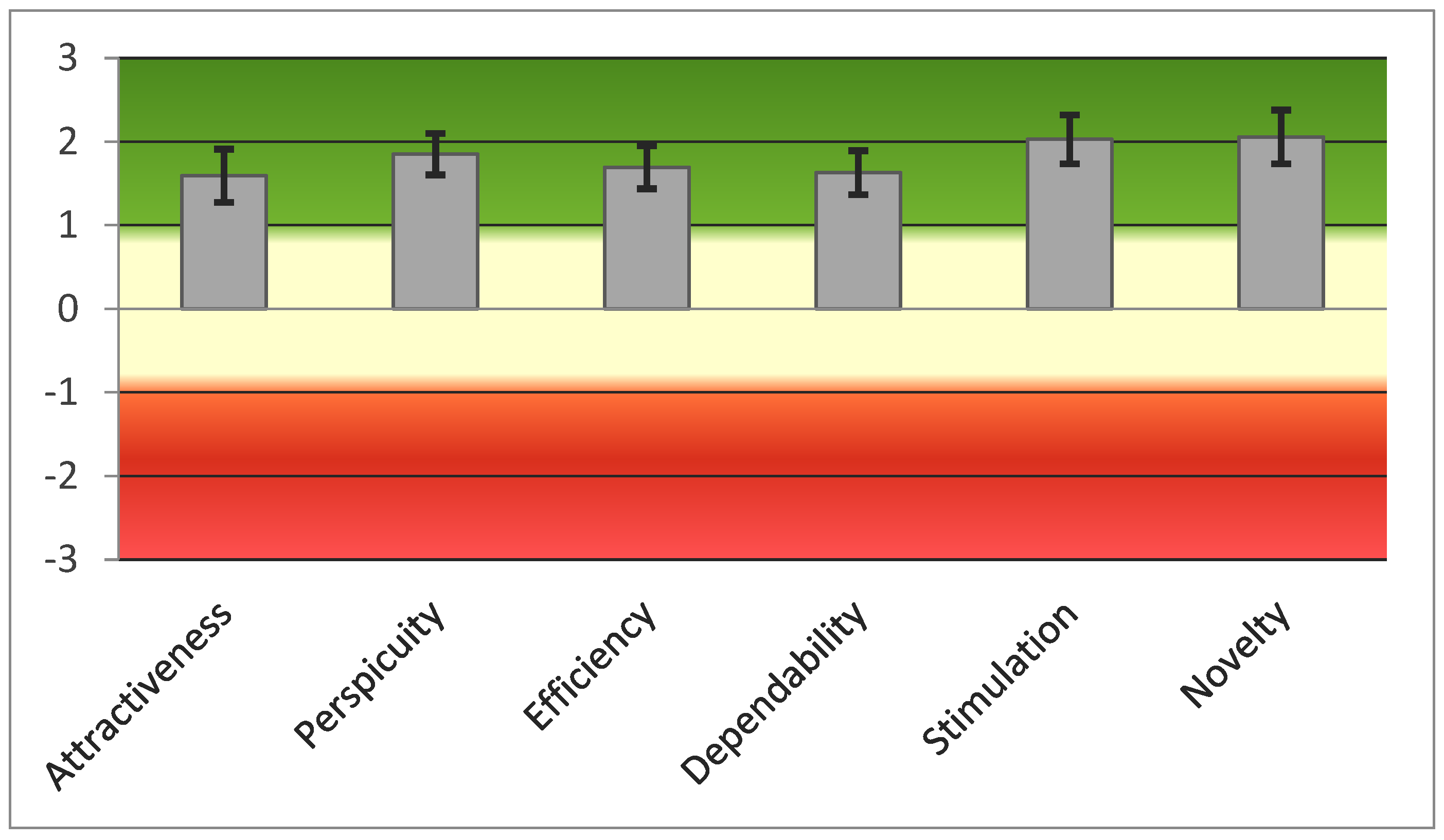

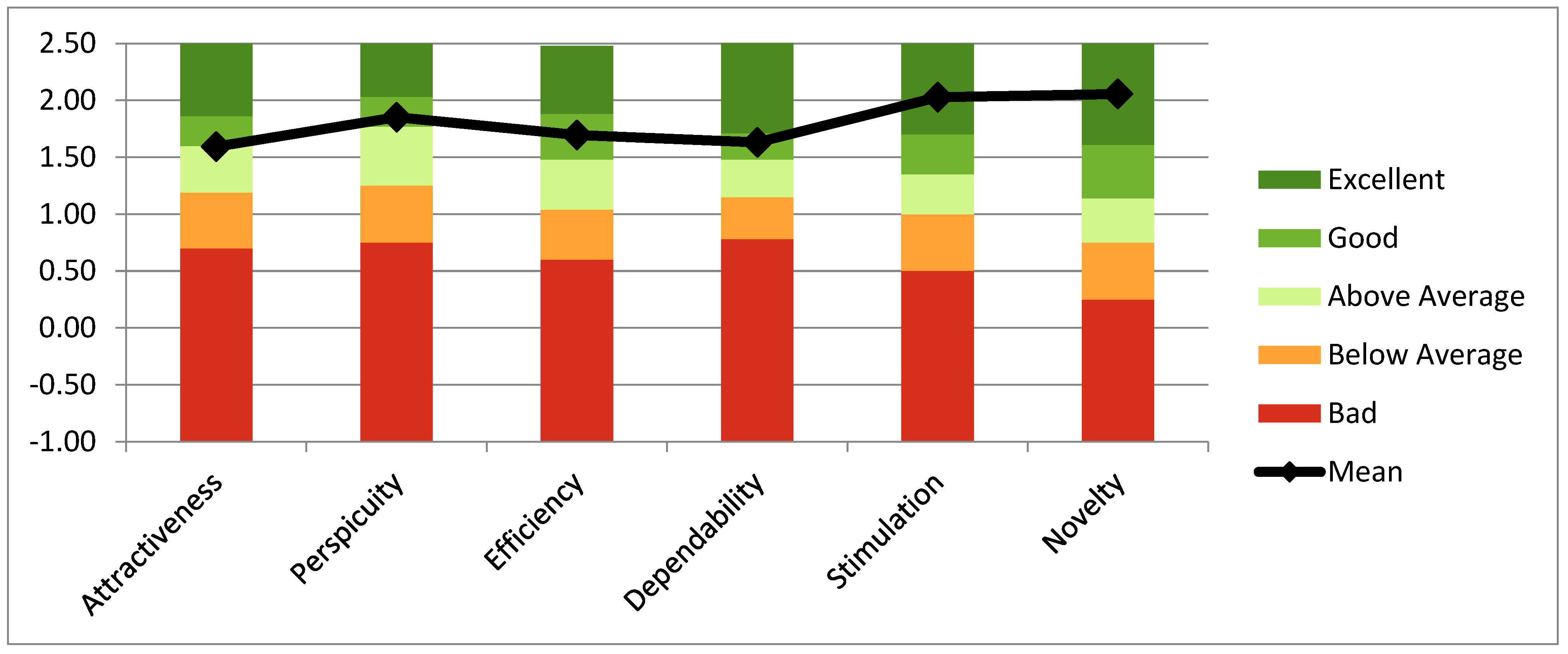

| | Mean | SD | Confidence Interval (Lower) | Confidence Interval (Upper) | Alpha Coefficient |
|---|---|---|---|---|---|
| Attractiveness | 1.593 | 0.846 | 1.273 | 1.912 | 0.89 |
| Perspicuity | 1.852 | 0.655 | 1.605 | 2.099 | 0.81 |
| Efficiency | 1.694 | 0.681 | 1.438 | 1.951 | 0.72 |
| Dependability | 1.630 | 0.695 | 1.368 | 1.892 | 0.78 |
| Stimulation | 2.028 | 0.776 | 1.735 | 2.321 | 0.79 |
| Novelty | 2.056 | 0.853 | 1.734 | 2.377 | 0.93 |
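The confidence bounds in this table can be related to the mean and SD columns with a short calculation. The sketch below assumes a two-sided 95% interval under the normal approximation (z = 1.96) and a sample of 27 participants; neither is stated next to the table, but both are consistent with the tabulated bounds to within rounding. The function name `confidence_interval` is hypothetical.

```python
from math import sqrt

Z_95 = 1.96  # assumed: two-sided 95% interval, normal approximation


def confidence_interval(mean, sd, n, z=Z_95):
    """Return (lower, upper) bounds of mean ± z * sd / sqrt(n)."""
    half_width = z * sd / sqrt(n)
    return mean - half_width, mean + half_width


# Attractiveness row of the table, assuming n = 27 (the PwPD group size).
lower, upper = confidence_interval(1.593, 0.846, 27)
print(f"{lower:.3f} to {upper:.3f}")
```

Differences in the third decimal place relative to the table are expected, since the published mean and SD are themselves rounded.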

| | PwPD Mean (SD) | HC Mean (SD) | Group Comparison (p-Value) |
|---|---|---|---|
| Attractiveness | 1.59 (0.85) | 1.81 (1.13) | 0.430 |
| Perspicuity | 1.85 (0.66) | 2.01 (0.75) | 0.416 |
| Efficiency | 1.69 (0.68) | 1.73 (0.84) | 0.859 |
| Dependability | 1.63 (0.70) | 2.14 (0.86) | 0.020 |
| Stimulation | 2.03 (0.78) | 2.08 (0.98) | 0.818 |
| Novelty | 2.06 (0.85) | 2.46 (0.68) | 0.058 |
| Pragmatic Quality | 1.73 (0.59) | 1.96 (0.74) | 0.204 |
| Hedonic Quality | 2.04 (0.72) | 2.27 (0.82) | 0.275 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Borgnis, F.; Baglio, F.; Pedroli, E.; Rossetto, F.; Meloni, M.; Riva, G.; Cipresso, P. A Psychometric Tool for Evaluating Executive Functions in Parkinson’s Disease. J. Clin. Med. 2022, 11, 1153. https://doi.org/10.3390/jcm11051153
