Featured Application

This study explores the intelligent application of Shanghai’s writing proficiency assessment, targeting efficient recognition of handwritten Chinese characters in promotion exams for primary and secondary students.

Abstract

The dense text detection and segmentation of Chinese characters has long been a research hotspot because of complex backgrounds and diverse scenarios. In education, the detection of handwritten Chinese characters is affected by background noise, texture interference, and similar factors. In low-quality handwritten text in particular, overlapping or occluded characters blur character boundaries, which makes detection and segmentation more difficult. Based on an analysis of the structure of Chinese character mini-components, this paper proposes CEE (Components-ECA-EAST Network), an improved EAST network that fuses an attention mechanism with the feature pyramid structure. The ECA (Efficient Channel Attention) mechanism is incorporated in the feature extraction stage; in the feature fusion stage, convolutional features extracted from a self-constructed mini-component dataset are fused with the feature pyramid in a cascaded manner; finally, Dice Loss is used as the regression task loss function. Together, these improvements enhance the network’s ability to detect and segment the mini-components and fine strokes of handwritten Chinese characters. On the self-constructed dataset, the CEE model achieved an accuracy of 84.6% and a mini-component mAP of 77.6%, improvements of 7.4% and 8.4%, respectively, over the original model. The constructed dataset and improved model are well suited to applications such as writing grade examinations and represent an important step in the development of intelligent education.

1. Introduction

The task of detecting Chinese characters is not only an important step in Chinese character recognition and processing but is also widely used in cultural heritage preservation, calligraphy analysis, and font generation. However, because of the multi-scale characteristics, complex backgrounds, and dense distribution of Chinese character components, traditional image segmentation methods struggle to meet the requirements of practical applications.

The main challenges of handwritten Chinese character detection can be summarized as follows. First, Chinese characters are structurally complex: each glyph consists of multiple strokes with intricate relative positions. Characters differ widely in stroke order, curvature, and structural composition, which makes recognition more difficult; compared with Latin letters or digits, Chinese characters are both more complex and more varied. Second, handwriting styles differ markedly between individuals. Differences in writing style, stroke thickness, and character slant cause the same character to look different from writer to writer, and blurred handwriting, overlapping characters, and joined strokes caused by fast writing can significantly hinder accurate recognition. Third, in practical applications, handwritten Chinese character images often contain background noise, texture interference, and other non-target elements, which place higher demands on character localization and segmentation. Finally, in some low-quality handwritten texts, characters may overlap and occlude one another, blurring the boundaries between characters and increasing the difficulty of detection and segmentation.

Wang et al. [1] proposed a text region detection method based on multi-scale sliding windows, in which each window is classified by a convolutional network; this approach identifies text regions efficiently and with good results. Tian et al. [2] proposed CTPN (Connectionist Text Proposal Network), which holds a significant position in text detection and is especially suitable for detecting narrow scene-text regions whose sizes vary greatly. CTPN builds on the Faster R-CNN framework and uses fixed-width anchors of different heights to detect small text regions efficiently. It then applies a text-line construction method that connects multiple candidate text proposals into complete text boxes. In addition, the network processes the convolutional features with a bidirectional LSTM to strengthen the representation of spatial and sequential information.

On this basis, the EAST (Efficient and Accurate Scene Text Detector) algorithm proposed by Zhou et al. [3] draws on the concept of U-Net [4]: it employs a fully convolutional network (FCN) followed by non-maximum suppression (NMS), eliminating intermediate processing steps and enabling efficient detection at the word or text-line level. SegLink [5] improves on CTPN by supporting oriented text boxes and by predicting links between candidate segments to refine text box localization. PixelLink [6] trains a convolutional neural network to classify pixels as text or non-text and to predict links between neighboring pixels, which are then connected to perform instance segmentation. Building on this, TextSnake [7] handles irregular text regions more flexibly by predicting the position of the text centerline, the radius of the disks along it, and the angle between adjacent disk centers, thereby avoiding the dependence of conventional box representations (e.g., quadrilaterals and rotated rectangles) on text shape and length. Unlike bounding-box-based instance segmentation methods, BoundarySpotter [8] uses multi-scale boundary point localization and adaptive topology reconstruction to model the geometry and semantics of irregular text, showing that boundary representations improve accuracy in complex scenes. R50_DBU [9] introduces an enhanced differentiable binarization network that integrates U-Net’s feature architecture to address challenges in automated text detection, such as poor recognition of special characters and incomplete detection of dense and long text segments. TextFuse [10] proposes a two-stage fusion framework that overcomes the reliance of scene text detection on a single feature type through multi-modal feature aggregation and multi-algorithm consensus integration.

In this paper, an improved EAST network, CEE (Components-ECA-EAST Network), that integrates an attention mechanism with the feature pyramid structure is proposed based on a structural analysis of Chinese character mini-components. The ECA (Efficient Channel Attention) module is introduced in the feature extraction stage to refine the weight distribution across feature channels and to remedy the shortcomings of the original EAST in attention allocation. For the mini-component dataset, features are first enhanced and refined by convolutional layers and then fused with the feature pyramid in a cascaded manner, so that fine-grained information is better preserved during multi-scale feature aggregation. Finally, Dice Loss is used as the loss function for the regression task. These improvements enhance the network’s performance in detecting and segmenting the ultra-small components and fine strokes of handwritten Chinese characters.

2. Components-ECA-EAST Network

2.1. CEE Network Structure

In research on the detection and segmentation of handwritten Chinese character images, the EAST model has been widely adopted for its efficient and accurate text detection [11]. EAST is a simplified end-to-end text detection method that is especially suitable for scene text detection. Unlike traditional methods, EAST does not rely on Region Proposal Networks (RPNs) or candidate box generation; instead, it avoids complex processing by directly predicting the bounding boxes and geometric properties of text regions.

Specifically, EAST uses a fully convolutional network (FCN) to extract image features, predicting for each pixel whether it belongs to a text region while simultaneously inferring the geometry of the text, such as its orientation and size [12]. This approach significantly increases text detection speed while maintaining high accuracy across diverse backgrounds.
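To make the per-pixel geometry concrete, the sketch below decodes one rotated-box (RBOX) prediction of the kind used in the original EAST paper: four distances from a text pixel to the top, right, bottom, and left edges of its box, plus a rotation angle. It is a minimal illustration, not the CEE implementation; the function name `decode_rbox` is hypothetical, and the sign convention for the angle is an assumption, since EAST implementations differ on it.

```python
import math


def decode_rbox(x, y, d_top, d_right, d_bottom, d_left, theta):
    """Decode one EAST-style RBOX prediction at pixel (x, y).

    Returns the corners (top-left, top-right, bottom-right, bottom-left) in
    image coordinates, where y grows downward. Assumption: theta (radians)
    rotates the box about the pixel with the standard 2D rotation matrix;
    real implementations may use a different sign convention.
    """
    # Corner offsets of the unrotated box, relative to the pixel.
    offsets = [
        (-d_left, -d_top),
        (d_right, -d_top),
        (d_right, d_bottom),
        (-d_left, d_bottom),
    ]
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    corners = []
    for dx, dy in offsets:
        # Rotate each offset, then translate it back to the pixel location.
        corners.append((x + dx * cos_t - dy * sin_t, y + dx * sin_t + dy * cos_t))
    return corners


# Axis-aligned case: the box spans x in [5, 13] and y in [8, 14].
print(decode_rbox(10, 10, d_top=2, d_right=3, d_bottom=4, d_left=5, theta=0.0))
```

Because every text pixel predicts its own box, many overlapping candidates are produced for each text region, which is why EAST follows this step with non-maximum suppression.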

A major advantage of the EAST model is its adaptability. Many traditional text detection algorithms must be specially adapted to different text scales and orientations, whereas EAST handles various types of text with a unified network architecture. For handwritten Chinese character images in particular, it can cope with a variety of irregular writing and complex backgrounds, improving detection robustness [13]. Adopting EAST for the detection and segmentation of handwritten Chinese character images can therefore improve accuracy, reduce false and missed detections, and provide more reliable input for subsequent text recognition and image segmentation. However, the original EAST often suffers from missed detections and blurred edges when dealing with ultra-small targets, performs especially poorly in detecting tiny components of structurally complex characters [14], and has limited performance on dense and long texts. These limitations motivate the improvements proposed in this paper.

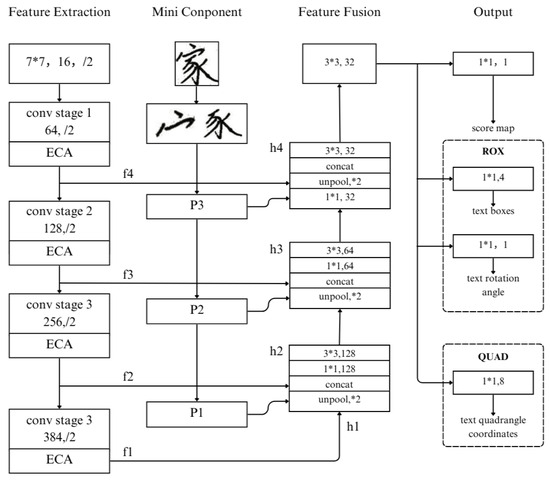

The improvements in this paper focus on the feature extraction and feature fusion stages of EAST. In the feature extraction stage, EAST relies heavily on the backbone network and processes dense text information insufficiently, which leads to inaccurate feature extraction, information loss, and weak feature expression in complex backgrounds or in scenes with blurred or overlapping Chinese characters. To address this, this paper introduces the ECA attention mechanism into the backbone network to strengthen the capture of key information and the allocation of feature weights across channels [15]. To address the missed detections and edge blurring of EAST on small targets, this paper extracts convolutional features from the self-built mini-component dataset and fuses them with the convolutional layers of the feature extraction stage through a feature pyramid network (FPN), further enhancing small-target detection and the representation of boundary features. The CEE network structure is illustrated in Figure 1, where f, P, and h denote the feature maps obtained after the convolution operations.

Figure 1.

CEE network structure.

2.2. Multi-Scale Feature Fusion

Multi-scale feature fusion is a strategy widely used in deep learning models for image processing tasks; it combines features from different scales to capture both the detailed information and the global structure of the target. The core of this approach is to integrate features extracted with different receptive fields, enabling the model to attend to both the global structure and the local details of the image. In convolutional neural networks (CNNs), the feature map size is usually reduced progressively by convolution and pooling operations, so that features at different levels correspond to information at different scales [16]. Lower-level features contain more detailed information (e.g., edges and textures), whereas higher-level features carry more abstract information (e.g., shapes and semantics).

The EAST model adopts the idea of FPN for multi-scale fusion, achieving a multi-scale representation by constructing feature maps that combine shallow and deep features [17]. As a classical implementation of this approach, the Feature Pyramid Network (FPN) fuses features from different levels by upsampling layer by layer and adding the result to the lower-level features, so that the network can extract detailed information while capturing the global structure; this design has shown excellent performance in classification and detection tasks.

Let $C_i$ denote the layer-$i$ feature map of the backbone network and $P_i$ the layer-$i$ feature map after fusion through the FPN. The feature fusion process of FPN can then be represented as:

$$P_i = C_i + \mathrm{Up}(P_{i+1})$$

where $P_i$ is the fused feature map at layer $i$ of the FPN, $\mathrm{Up}(\cdot)$ denotes upsampling of the previous (coarser) fused feature map, usually by bilinear interpolation or transposed convolution, and $i$ denotes the layer index of the feature map.
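The layer-by-layer upsample-and-add fusion described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the CEE code: all levels are assumed to share one channel count (a real FPN first applies 1×1 convolutions to match channels), neighbouring levels differ by a factor of 2 in resolution, and nearest-neighbour upsampling stands in for bilinear interpolation or transposed convolution. The function names are hypothetical.

```python
import numpy as np


def upsample2x(feature):
    """Nearest-neighbour upsampling of a (channels, H, W) array by a factor of 2."""
    return feature.repeat(2, axis=1).repeat(2, axis=2)


def fpn_top_down(backbone_maps):
    """Fuse backbone maps [C_1, ..., C_n], ordered from high to low resolution.

    Returns [P_1, ..., P_n] with P_n = C_n and P_i = C_i + Up(P_{i+1}).
    """
    fused = [None] * len(backbone_maps)
    fused[-1] = backbone_maps[-1]  # the coarsest level starts the pyramid
    for i in range(len(backbone_maps) - 2, -1, -1):
        fused[i] = backbone_maps[i] + upsample2x(fused[i + 1])
    return fused


# Three toy levels with one channel: 4x4, 2x2 and 1x1 maps filled with 1, 2 and 3.
levels = [np.full((1, 4, 4), 1.0), np.full((1, 2, 2), 2.0), np.full((1, 1, 1), 3.0)]
pyramid = fpn_top_down(levels)
print([p.shape for p in pyramid], pyramid[0][0, 0, 0])
```

In the toy example the finest fused map holds 1 + 2 + 3 = 6 everywhere, showing how information from the coarsest level propagates down to the highest-resolution map.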

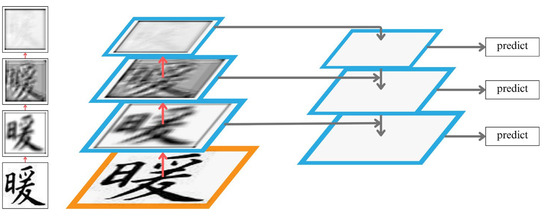

In this paper, convolutional layers are applied to Chinese character images from the self-built mini-component dataset to extract shallow and deep features; these features are fused via the FPN with the feature maps generated by the EAST feature extraction layers, enhancing the network’s sensitivity to mini-components. The specific structure is shown in Figure 2.

Figure 2.

Mini-components feature pyramid fusion.

2.3. ECA Module

In recent years, attention mechanisms such as BAM [18], CBAM [19], GCNet [20], SENet [21], and self-attention [22] have been applied ever more widely in deep convolutional neural networks. Attention allows the network to focus more on the key information in handwritten Chinese character images. As networks grow larger, more feature information is stored in the model, but deeper networks also lead to information overload and accumulate much useless and redundant feature information. Introducing an attention module can effectively alleviate this redundancy by reweighting features so that the network concentrates on the most valuable feature information.

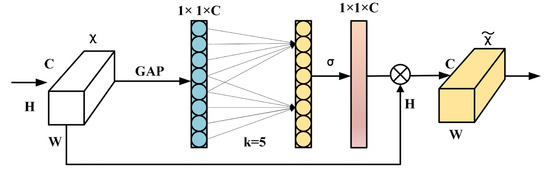

The Efficient Channel Attention (ECA) module [23] is a lightweight channel attention mechanism that improves the ability of convolutional neural networks to capture inter-channel information and thus enhances the network’s feature representation. A key innovation of ECA is that it improves computational efficiency by reducing the number of parameters and avoiding redundant computation. Traditional attention modules such as the SE module learn channel weights with fully connected layers; the dimensionality reduction in these layers causes channel attention to lose feature information, raises model complexity and computation, and captures inter-channel dependencies less efficiently. ECA achieves the same function with a simpler design, a 1D convolution over the channel descriptor without dimensionality reduction, which keeps it highly efficient while preserving good feature weighting capability. Given the annual grading demand of over 200,000 handwriting test sheets from fifth- and ninth-grade students in Shanghai, the lightweight design of the ECA module is critical [24]. Compared with other attention mechanisms, ECA significantly reduces inference latency and computational overhead, enabling deployment on standard server clusters that meet real-time requirements in high-concurrency exam scenarios.
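The channel-attention step can be sketched as follows: global average pooling produces one descriptor per channel, a 1D convolution with a small kernel mixes each channel with its neighbours, and a sigmoid turns the result into per-channel weights. The adaptive kernel-size rule (γ = 2, b = 1) follows the original ECA-Net formulation; the NumPy code, the function names, and the fixed example kernel (learned in a real network) are illustrative assumptions rather than the CEE implementation.

```python
import math

import numpy as np


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive kernel size k = |(log2(C) + b) / gamma|, forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature, weights):
    """Apply ECA-style channel attention to a (C, H, W) feature map.

    `weights` is the shared 1D convolution kernel of odd length k; in a real
    network it is learned, here it is supplied by the caller.
    """
    k = len(weights)
    channels = feature.shape[0]
    pooled = feature.mean(axis=(1, 2))        # global average pooling -> (C,)
    padded = np.pad(pooled, (k - 1) // 2)     # zero padding keeps C outputs
    # 1D convolution across the channel axis: each channel sees its k neighbours.
    logits = np.array([padded[c:c + k] @ weights for c in range(channels)])
    attention = 1.0 / (1.0 + np.exp(-logits)) # sigmoid -> weights in (0, 1)
    return feature * attention[:, None, None] # rescale every channel


rng = np.random.default_rng(0)
x = rng.standard_normal((256, 8, 8))
k = eca_kernel_size(256)                      # 5 for 256 channels
y = eca(x, np.full(k, 0.2))
print(k, y.shape)
```

For C = 256 the attention step has only k = 5 weights, whereas an SE block with reduction ratio r uses roughly 2C²/r weights in its two fully connected layers (8192 for r = 16), which illustrates why ECA is described as lightweight.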

In this paper, the ECA module is integrated into the EAST feature extraction layers to strengthen the network’s modeling of inter-channel correlations, further improving handwritten Chinese character detection and segmentation and increasing EAST’s ability to focus on dense text. As a lightweight attention mechanism, the ECA module captures inter-channel interaction information without significantly increasing the computational burden, which improves the accuracy of feature extraction. Particularly for handwritten Chinese character images with complex backgrounds and significant interference, the module enhances sensitivity to target regions and the ability to distinguish detailed features [25], effectively alleviating the loss of stroke details and the difficulty of restoring local features. This provides higher-quality feature representations for feature fusion and target segmentation and improves robustness and reliability, especially in complex scenes. The specific structure is illustrated in Figure 3.

Figure 3.

ECA module structure.

2.4. Dice Loss

EAST is an efficient scene text detection algorithm that predicts the geometry of text regions mainly by regression. However, traditional loss functions (e.g., cross-entropy loss [26] and L1/L2 regression loss [27]) have limitations with complex geometries and small targets, especially when classes are imbalanced and target shapes are diverse. This often leads to missed and false detections in dense text and small-target scenarios. To address these challenges, this paper incorporates Dice Loss [28] into the EAST model, replacing the class-balanced cross-entropy originally used to compute the score-map loss.

Dice Loss was first used in medical image segmentation to quantify the overlap between predicted and ground truth regions. Its advantage is that it directly optimizes the accuracy of the predicted target region, which is especially effective under class imbalance. Introducing Dice Loss into the EAST model can significantly enhance the model’s sensitivity to dense text regions and small targets, thus improving overall detection performance [29]. Dice Loss is based on the Dice coefficient, which is calculated as:

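$$\mathrm{Dice} = \frac{2|A \cap B|}{|A| + |B|}$$

where A represents the predicted region and B corresponds to the ground truth region. Converting the Dice coefficient into a loss function usually takes the following form:

$$L_{\mathrm{Dice}} = 1 - \frac{2\sum_{i} p_i g_i + \epsilon}{\sum_{i} p_i + \sum_{i} g_i + \epsilon}$$

where $p_i$ denotes the predicted value at pixel $i$, $g_i$ denotes the true value, and $\epsilon$ is a small constant to avoid division by zero.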
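A minimal NumPy version of the smoothed Dice loss is given below, assuming `pred` holds per-pixel score-map probabilities and `target` the binary ground-truth mask. The smoothing constant `eps` is added to both numerator and denominator, which is one common choice; the function name and the default `eps` are illustrative assumptions.

```python
import numpy as np


def dice_loss(pred, target, eps=1e-6):
    """Smoothed Dice loss: 1 - (2 * sum(p * g) + eps) / (sum(p) + sum(g) + eps)."""
    pred = np.asarray(pred, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    intersection = np.sum(pred * target)
    return 1.0 - (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)


# A 4x4 mask with a single text pixel: a prediction that marks it plus one
# background pixel overlaps the target with Dice = 2 * 1 / (2 + 1) = 2/3.
target = np.zeros((4, 4))
target[1, 1] = 1
pred = target.copy()
pred[2, 2] = 1
print(round(dice_loss(pred, target), 4))
```

Background pixels that are correctly predicted as zero contribute nothing to either sum, so the many background pixels that dominate a text image do not swamp the loss, unlike an unweighted per-pixel cross-entropy.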

3. Results

3.1. Dataset

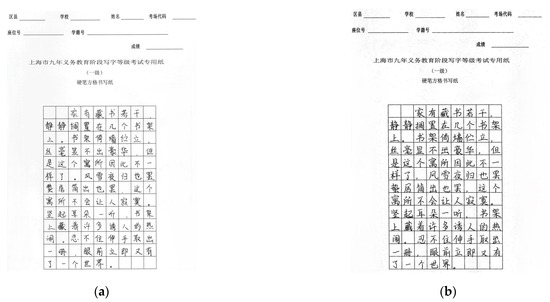

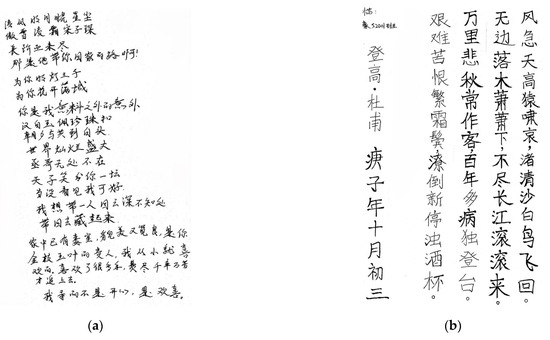

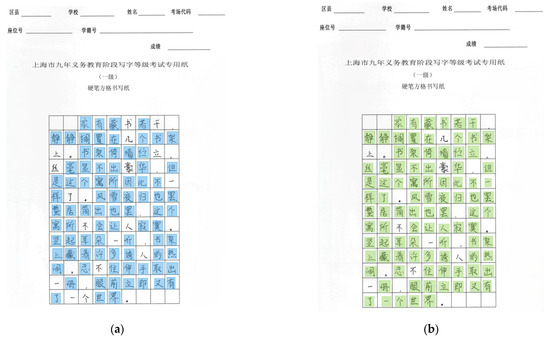

The dataset was constructed in the context of the writing grade examination for primary and secondary school students in Shanghai: 240 students who were about to take the examination were invited, and their handwritten Chinese character images were collected as research samples. The dataset covers two writing scenarios: formal writing on exam-specific paper and unconstrained daily writing practice. To represent both writing environments fully, 480 high-quality images of handwritten Chinese characters were collected, covering more than 40,000 Chinese characters and presenting rich background variations and diverse writing styles. The dataset is intended to reflect the actual differences between test scenarios and daily writing and to better meet the needs of handwritten Chinese character detection in writing grade tests. All samples are dense text images containing a large number of Chinese characters. Figure 4a,b show part of the writing grade exam dataset constructed in this paper, and Figure 5a,b show the corresponding unconstrained text dataset.

Figure 4.

Partial writing grade exam dataset diagram.

Figure 5.

Partial unconstrained text dataset diagram.

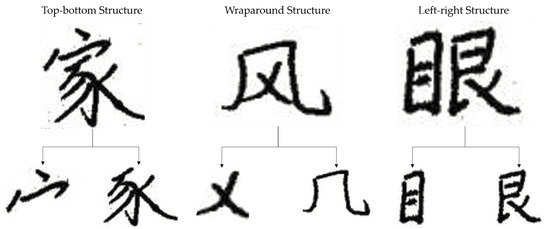

In addition, to improve the model’s ability to recognize the diverse structural components of Chinese characters, this paper further integrates an image dataset designed specifically for Chinese character component segmentation. It covers Chinese character components of various structures, including left-right, top-bottom, and enclosing structures, to cope with the diversity of component morphology and the complexity of backgrounds. Figure 6 shows examples of Chinese character components.

Figure 6.

Chinese character component diagram.

Finally, the dataset was expanded to 960 images by data augmentation methods such as random rotation by different angles, to improve the model’s adaptability to different scenes, and all images were uniformly resized to 1200 × 1200 pixels. The dataset was then partitioned into training, validation, and test sets in a ratio of 8:1:1.
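The 8:1:1 partition of the 960 augmented images works out to 768 training, 96 validation, and 96 test images. A minimal sketch of such a split is shown below, assuming a seeded random shuffle (the source does not state how the split was drawn) and hypothetical file names.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle `items` reproducibly and cut them into train/val/test by `ratios`."""
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Hypothetical file names for the 960 augmented images.
images = [f"img_{i:04d}.png" for i in range(960)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))
```

If rotated copies should never land in a different split from their source image, the 480 originals could be split first and augmented afterwards; the text does not say which order was used.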

3.2. Experimental Parameters

In this paper, the network model is implemented in PyTorch, and the code is written and run on Linux CentOS 7.7. The experimental hardware is an NVIDIA A100 Tensor Core GPU with 80 GB of memory (NVIDIA Corporation, Santa Clara, CA, USA). The Adam (Adaptive Moment Estimation) optimizer is used for training with a learning rate of 0.001 and a batch size of 24.

3.3. Evaluation Indicators

The segmentation model performance was evaluated using three key metrics: precision, recall, and F-score. These metrics quantify segmentation accuracy, detection reliability, and overall robustness, respectively.

1. Precision

Precision is one of the fundamental evaluation metrics in object segmentation tasks and quantifies the accuracy of the model’s positive predictions. Specifically, precision indicates how many of the samples predicted as positive by the model are true targets, and is calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where TP (True Positive) is the number of true target samples correctly identified and FP (False Positive) is the number of non-target samples misclassified as targets.

2. Recall

Recall is the proportion of all targets that the model detects correctly, defined as:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where TP is the number of correctly identified targets and FN (False Negative) is the number of undetected targets.

3. F-score

The F-score is the harmonic mean of precision and recall and is used to evaluate model performance comprehensively. It is calculated as:

$$F = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$
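The three metrics can be computed directly from TP, FP, and FN counts, as in the sketch below. The function names are hypothetical, and returning 0 for an empty denominator is an assumed convention. The last line applies the F-score formula to the original model’s left-right precision (78.6%) and recall (77.1%) reported in Section 3.4 as a consistency check.

```python
def precision_recall_f(tp, fp, fn):
    """Return (precision, recall, F-score) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


def f_from(precision, recall):
    """Harmonic mean of a precision/recall pair."""
    return 2 * precision * recall / (precision + recall)


print(precision_recall_f(tp=8, fp=2, fn=2))
# Left-right structure, original model (values reported in Section 3.4):
print(round(f_from(0.786, 0.771), 3))
```

The check gives about 0.778, in line with the 77.8% F-score reported for that configuration.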

3.4. Experimental Result

Table 1 shows the segmentation performance of the original model and the CEE model on different Chinese character structures. For the left-right structure, the precision of the CEE model reaches 85.7%, 7.1 percentage points higher than the 78.6% of the original model; the F-score improves from 77.8% to 84.8%, and the recall from 77.1% to 84.2%, indicating that the CEE model substantially improves performance on the regular left-right structure. For the top-bottom structure, the precision of the CEE model is 84.3%, 6.5 percentage points higher than the 77.8% of the original model; the F-score increases from 76.7% to 83.4%, and the recall from 75.4% to 82.5%, further showing that the CEE model is more accurate on regular structures. The advantage of the CEE model is more pronounced for the complex enclosing structure, where precision increases by 10.2 percentage points from 69.2% to 79.4%, the F-score from 67.7% to 78.5%, and the recall from 66.4% to 77.8%.

Table 1.

Performance results of different Chinese character structure segmentation.

Taken together, the CEE model performs well on all Chinese character structures, especially on complex ones such as enclosing structures. By introducing a feature pyramid network and a convolutional module with a channel attention mechanism, it significantly improves segmentation accuracy and robustness in complex contexts while reducing the missed-detection rate, demonstrating strong adaptability.

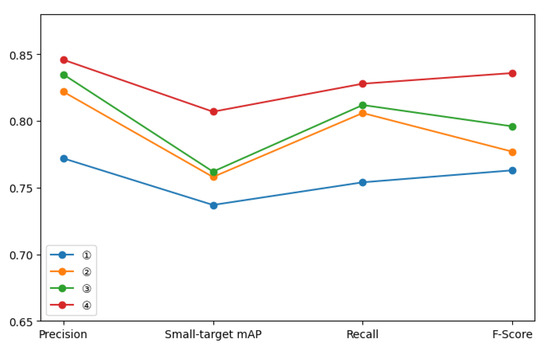

3.5. Ablation Experiment

To validate the contribution of each proposed modification to the overall architecture, ablation studies were conducted on our custom dataset. As shown in Table 2, method ① is the EAST base network (Basic), method ② adds multi-scale fusion of mini-component features through the feature pyramid (Mini-Component-Basic), method ③ adds the ECA attention module (ECA-Basic), and method ④ is the CEE model proposed in this paper. The comparison shows that the proposed model achieves a significant accuracy improvement by integrating the attention mechanism with the multi-scale fusion strategy.

Table 2.

Ablation experiments results.

As shown in Figure 7, mini-component feature fusion and the ECA module each play a distinct role in improving model performance, yielding significant gains in both overall performance and small-target detection. The precision of the base model is 77.2%, which rises to 82.2% after fusing mini-component features, an increase of 5.0 percentage points. This indicates that fusing mini-component features improves recognition of complex Chinese character component shapes by enhancing the representation of local features. With the ECA module added, precision improves to 83.5%, indicating that its dynamic weighting mechanism can effectively highlight important component features and suppress background noise. When feature fusion is combined with the ECA module, the precision of the final model reaches 84.6%, a total improvement of 7.4 percentage points, which verifies the significant contribution of the two modules’ synergy to overall detection performance.

Figure 7.

Ablation experiments comparison.

For small-target detection, the advantages of the improved model are even more evident. The small-target mAP of the baseline model is only 69.2%; introducing mini-component features raises it to 74.3%, an increase of 5.1 percentage points, reflecting that feature fusion improves the model’s fit to complex components and narrow regions by enhancing the saliency of small target components. Introducing the ECA module raises the small-target mAP to 73.6%, and the full model reaches 76.2%, a total improvement of 7.0 percentage points. This suggests that the ECA module improves the localization accuracy of segmentation boundaries by dynamically selecting key features, adapting to the complex and diverse boundaries of Chinese character components, while the convolution module plays a fundamental role in constructing the spatial representation of boundaries.

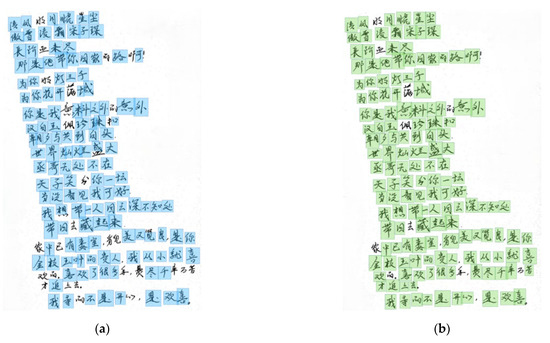

The comparison in Figure 8a,b (unconstrained detection) shows that the CEE model is better at detecting small-scale Chinese characters, as is particularly evident in the complete segmentation of the character “星” (star) in Row 1, which the basic model recognized only partially. This supports the effectiveness of the mini-component fusion strategy in resolving scale-dependent ambiguities. However, limitations remain for characters with pronounced curvature, which we attribute to insufficient robustness against nonlinear morphological variations.

Figure 8.

Comparison of unconstrained dataset experimental results.

Complementarily, Figure 9a,b (standardized examination sheet detection) demonstrate the CEE model’s superiority in structured grid environments but also expose counterintuitive failures: misclassifications persist for geometrically simple characters such as “了” (completed) and “入” (enter). We attribute this to a mismatch between the attention module’s bias toward patterns with high stroke density and the feature extraction requirements of characters with minimal topological complexity.

Figure 9.

Comparison of writing exam dataset experimental results.

Overall, the experimental results verify that mini-component feature fusion and the ECA module significantly improve performance on Chinese character component segmentation, and that their combined design overcomes limitations of the original model, providing reliable technical support and a theoretical basis for segmenting complex targets.

3.6. Method Comparisons

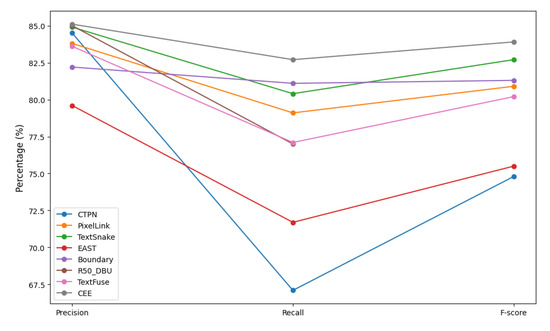

To further validate the performance of the proposed handwriting detection model, it was compared with other mainstream text detection algorithms, namely CTPN [2], PixelLink [6], TextSnake [7], Boundary [8], R50_DBU [9], and TextFuse [10], on the ICDAR2015 dataset. The ICDAR [30] datasets are used for the ICDAR Challenge; ICDAR2015 contains 1500 images, of which 1000 are used for training and the remaining 500 for testing.

CTPN is a single-stage text detection model that localizes text lines as sequences of fine-scale proposals; it was designed mainly for horizontal text. PixelLink is a pixel-level text detection model that is particularly suitable for scene text and for text regions with large morphological variations. TextSnake is particularly good at handling curved text. Because this study focuses on segmentation for Chinese character detection, which usually involves multi-scale targets, complex backgrounds, and accurate localization of small targets, these three models, which address complex text in different ways, were chosen as comparison baselines for evaluating the CEE network. Boundary is an end-to-end framework that uses multi-scale boundary point localization and adaptive topology reconstruction to improve the detection and recognition of irregularly shaped text in complex scenes; R50_DBU is a segmentation-driven network incorporating the U-Net architecture; and TextFuse combines hierarchical feature synthesis with cross-detector consensus to overcome single-modality constraints in scene text analysis. The results are shown in Table 3.

Table 3.

Algorithm comparison.

The EAST model combined with mini-component features in this paper achieves higher accuracy, recall, and F-score than the classical EAST algorithm, and its values are also one to two percentage points higher than those of the other algorithms. The algorithm comparison is shown in Figure 10.

Figure 10.

Algorithm comparison.

4. Discussion

This study proposes an enhanced EAST-based model, termed the CEE model, for text detection in handwriting proficiency assessment. Incorporating a custom mini-component dataset and integrating a Feature Pyramid Network (FPN) in the feature fusion stage significantly improve the model’s dense text feature extraction and multi-scale feature representation. Meanwhile, the added ECA attention module further strengthens the extraction of key features and significantly improves the detection accuracy of the EAST algorithm, particularly by reducing missed detections of small targets. In addition, the CEE model adopts Dice Loss as its loss function, which optimizes the training process.

The experimental results show that the CEE model performs well on both the self-constructed dataset and the ICDAR2015 dataset, and compared with the original EAST algorithm, its accuracy and recall are both improved by about 7.4%, the F-score is improved by about 7.3%, and the mAP of small targets is improved by about 7.0%. While large language models (LLMs) have demonstrated remarkable capabilities in semantic understanding, their ability to model fine-grained visual structures (e.g., stroke-level components in handwritten characters) remains underexplored [31]. In this study, we address these vision-structure alignment challenges through localized attention refinement and multi-scale feature preservation strategies, thereby offering new insights into bridging the gap between high-level semantics and low-level visual patterns. Subsequent investigations will prioritize two key methodological refinements: (1) algorithmic optimization through architectural simplification to streamline computational demands, and (2) systematic expansion of the assessment corpus by diversifying text genres and incorporating multi-level writing samples. These strategic enhancements aim to establish an optimized framework that demonstrates enhanced generalizability and operational efficiency in automated writing evaluation systems, particularly for high-stakes standardized assessments.

Author Contributions

Conceptualization, Y.S.; methodology, R.L.; software, R.L.; validation, R.L.; formal analysis, R.L.; investigation, R.L. and Y.S.; resources, Y.S.; data curation, R.L.; writing—original draft preparation, R.L.; writing—review and editing, R.L. and Y.S.; visualization, R.L.; supervision, Y.S., X.L. and X.T.; project administration, Y.S., X.L. and X.T.; funding acquisition, Y.S., X.L. and X.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the University-Industry Co-operation Project "Intelligent Sensing and Real-time Monitoring System for Urban Spatial Light Environment" (Shanghai, China), grant number (24)DZ-007.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available from the corresponding author on request, because external datasets were used as input data to create the extended dataset.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, K.; Babenko, B.; Belongie, S. End-to-end scene text recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1457–1464.

- Tian, Z.; Huang, W.; He, T.; He, P.; Qiao, Y. Detecting text in natural image with connectionist text proposal network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14; pp. 56–72.

- Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. EAST: An efficient and accurate scene text detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5551–5560.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; pp. 234–241.

- Shi, B.; Bai, X.; Belongie, S. Detecting oriented text in natural images by linking segments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2550–2558.

- Deng, D.; Liu, H.; Li, X.; Cai, D. PixelLink: Detecting scene text via instance segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Long, S.; Ruan, J.; Zhang, W.; He, X.; Wu, W.; Yao, C. TextSnake: A flexible representation for detecting text of arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 20–36.

- Wang, H.; Lu, P.; Zhang, H.; Yang, M.; Bai, X.; Xu, Y.; He, M.; Wang, Y.; Liu, W. All you need is boundary: Toward arbitrary-shaped text spotting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12160–12167.

- Cheng, Y.; Wan, Y.R.; Sima, Y.J.; Zhang, Y.M.; Hu, S.Y.; Wu, S. Text Detection of Transformer Based on Deep Learning Algorithm. Teh. Vjesn. 2022, 29, 861–866.

- Shi, X.J.; Peng, G.W.; Shen, X.J.; Zhang, C.S. TextFuse: Fusing Deep Scene Text Detection Models for Enhanced Performance. Multimed. Tools Appl. 2024, 83, 22433–22454.

- Liu, M.; Li, B.; Zhang, W. Research on Small Acceptance Domain Text Detection Algorithm Based on Attention Mechanism and Hybrid Feature Pyramid. Electronics 2022, 11, 3559.

- Yuan, L.; Zeng, C.; Pan, P. Research of Chinese Entity Recognition Model Based on Multi-Feature Semantic Enhancement. Electronics 2024, 13, 4895.

- Wawer, A.; Mykowiecka, A.; Żuk, B. Detecting Aggression in Language: From Diverse Data to Robust Classifiers. Electronics 2024, 13, 4857.

- Xue, Y.; Wang, Q.; Hu, Y.; Qian, Y.; Cheng, L.; Wang, H. FL-YOLOv8: Lightweight Object Detector Based on Feature Fusion. Electronics 2024, 13, 4653.

- Ren, Q.-D.-E.-J.; Wang, L.; Ma, Z.; Barintag, S. Offline Mongolian Handwriting Recognition Based on Data Augmentation and Improved ECA-Net. Electronics 2024, 13, 835.

- Qian, K.; Ding, X.; Jiang, X.; Ji, Y.; Dong, L. CFF-Net: Cross-Hierarchy Feature Fusion Network Based on Composite Dual-Channel Encoder for Surface Defect Segmentation. Electronics 2024, 13, 4714.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Park, J. BAM: Bottleneck attention module. arXiv 2018, arXiv:1807.06514.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-attention generative adversarial networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7354–7363.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Liu, R.; Shi, Y.; Tang, X.; Liu, X. Combining Multi-Scale Fusion and Attentional Mechanisms for Assessing Writing Accuracy. Appl. Sci. 2025, 15, 1204.

- Molnar, C.; König, G.; Bischl, B.; Casalicchio, G. Model-agnostic feature importance and effects with dependent features: A conditional subgroup approach. Data Min. Knowl. Discov. 2024, 38, 2903–2941.

- De Boer, P.-T.; Kroese, D.P.; Mannor, S.; Rubinstein, R.Y. A tutorial on the cross-entropy method. Ann. Oper. Res. 2005, 134, 19–67.

- Ranjbarzadeh, R.; Jafarzadeh Ghoushchi, S.; Anari, S.; Safavi, S.; Tataei Sarshar, N.; Babaee Tirkolaee, E.; Bendechache, M. A deep learning approach for robust, multi-oriented, and curved text detection. Cogn. Comput. 2024, 16, 1979–1991.

- Zhao, R.; Qian, B.; Zhang, X.; Li, Y.; Wei, R.; Liu, Y.; Pan, Y. Rethinking dice loss for medical image segmentation. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 851–860.

- Schönfelder, P.; Stebel, F.; Andreou, N.; König, M. Deep learning-based text detection and recognition on architectural floor plans. Autom. Constr. 2024, 157, 105156.

- Peyrard, C.; Baccouche, M.; Mamalet, F.; Garcia, C. ICDAR2015 Competition on Text Image Super-Resolution. In Proceedings of the International Conference on Document Analysis & Recognition, Tunis, Tunisia, 23–26 August 2015.

- LeCun, Y. A path towards autonomous machine intelligence version 0.9.2, 2022-06-27. Open Rev. 2022, 62, 1–62.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).