Advancing YOLOv8-Based Wafer Notch-Angle Detection Using Oriented Bounding Boxes, Hyperparameter Tuning, Architecture Refinement, and Transfer Learning

Abstract

1. Introduction

2. YOLOv8-Based Detection Models and Optimization Methods

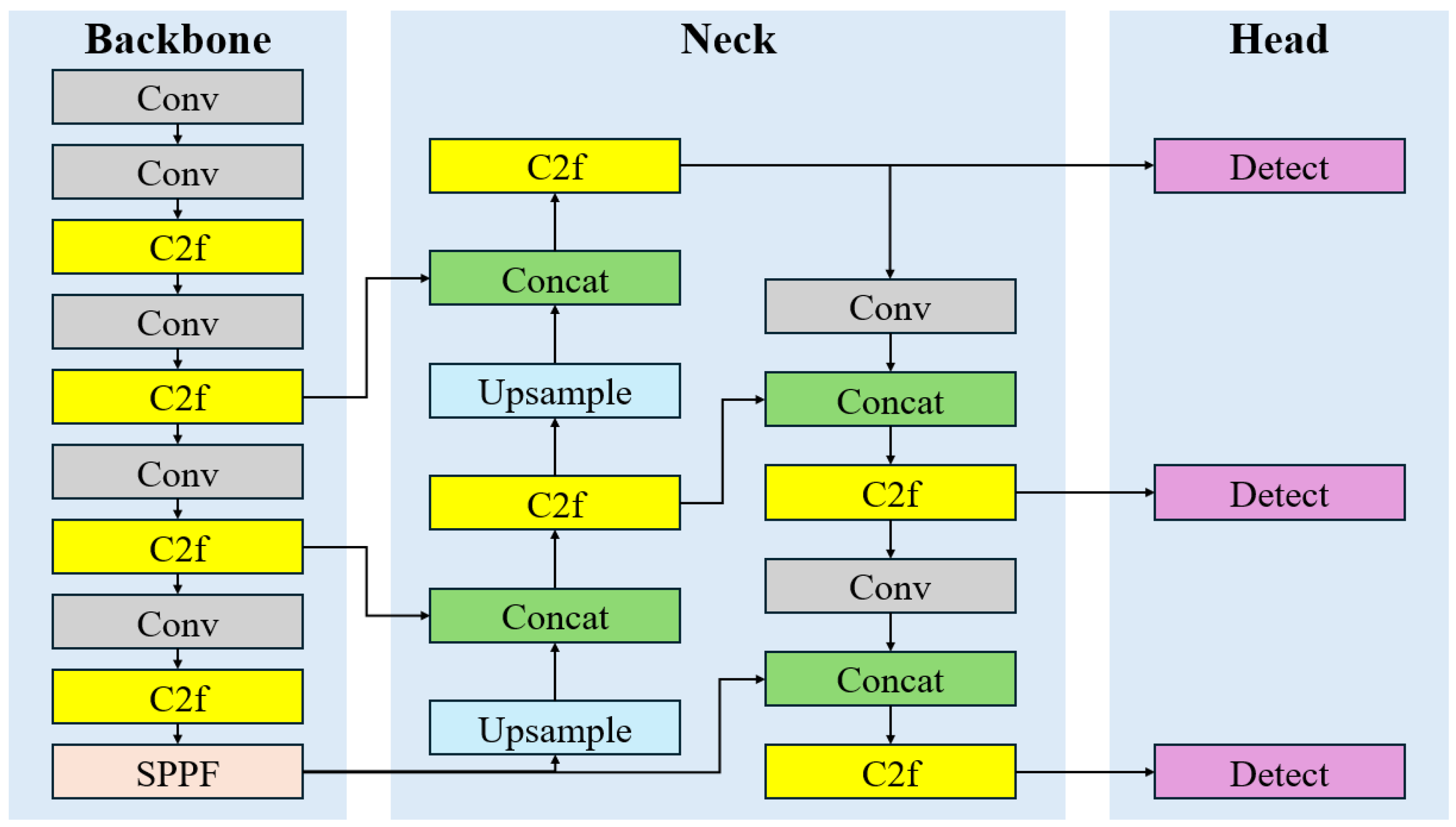

2.1. YOLOv8 and YOLOv8-OBB

2.2. Effect of Hyperparameters on Model Training and Optimization Methods

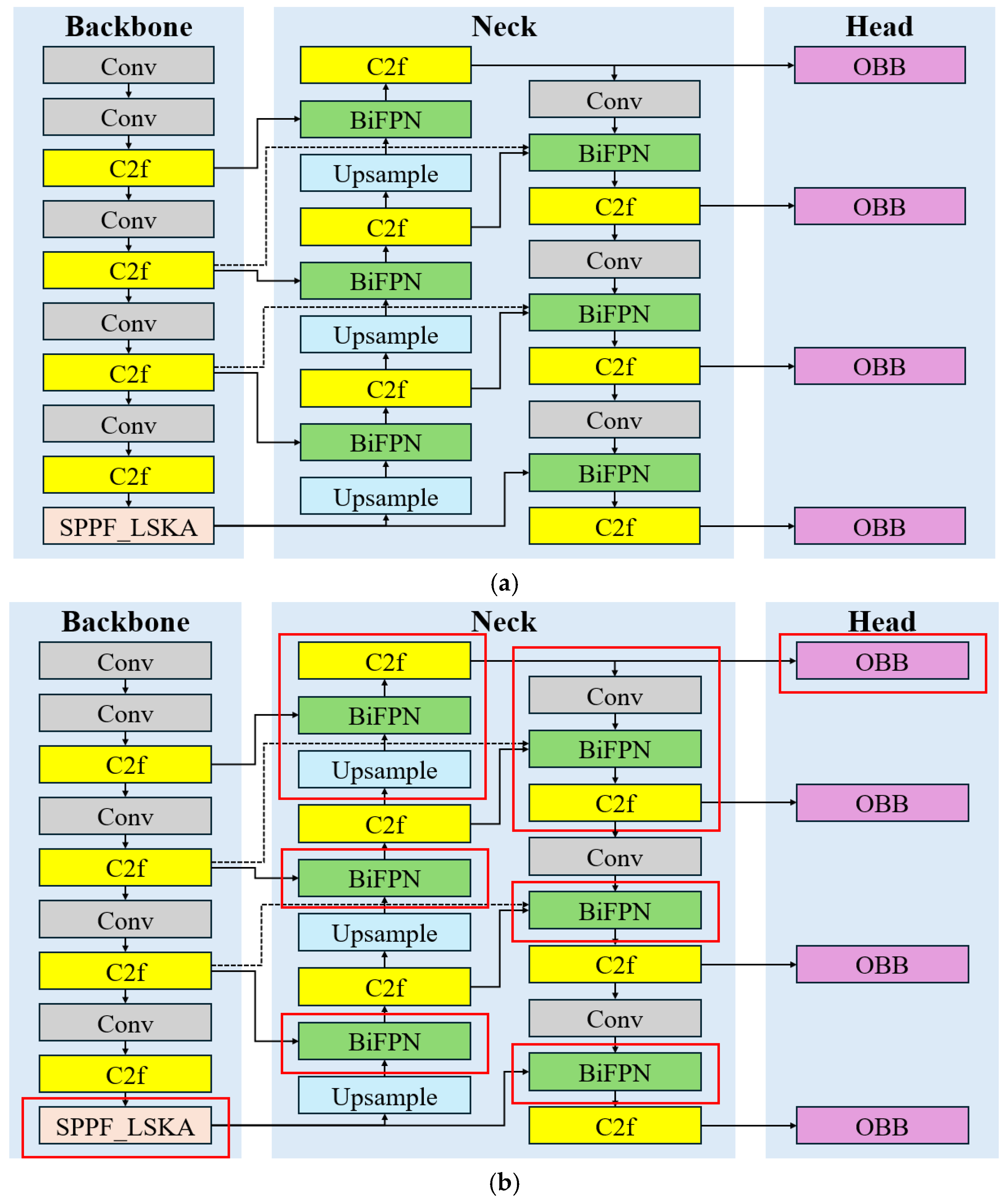

2.3. Architecture Improvement Based on YOLOv8-OBB

2.4. Gradual Unfreezing Transfer Learning

3. Performance Metric and Experimental Setup

3.1. Evaluation Indicator and Implementation Environment

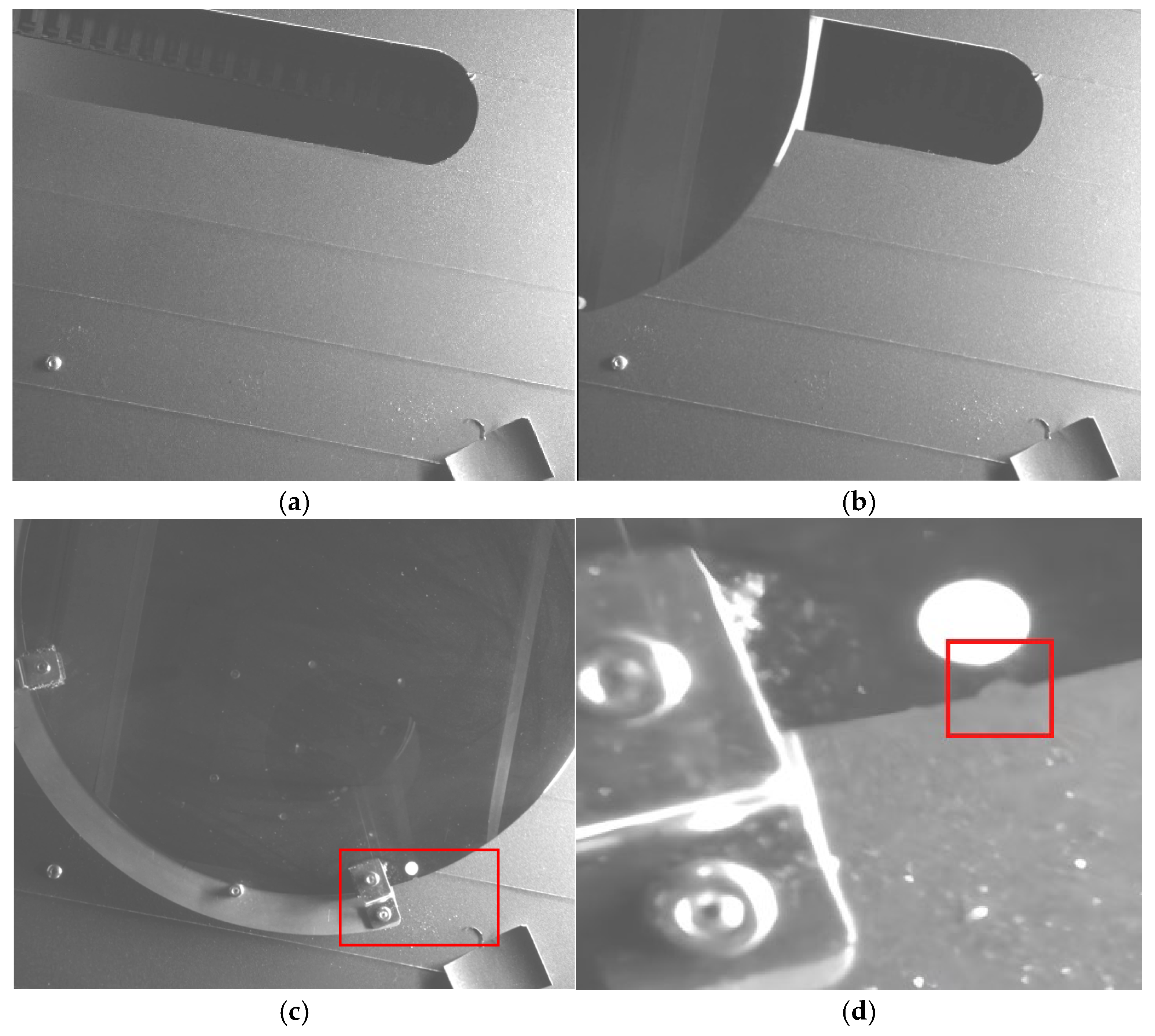

3.2. Dataset Description and Preparation

4. Results and Discussion

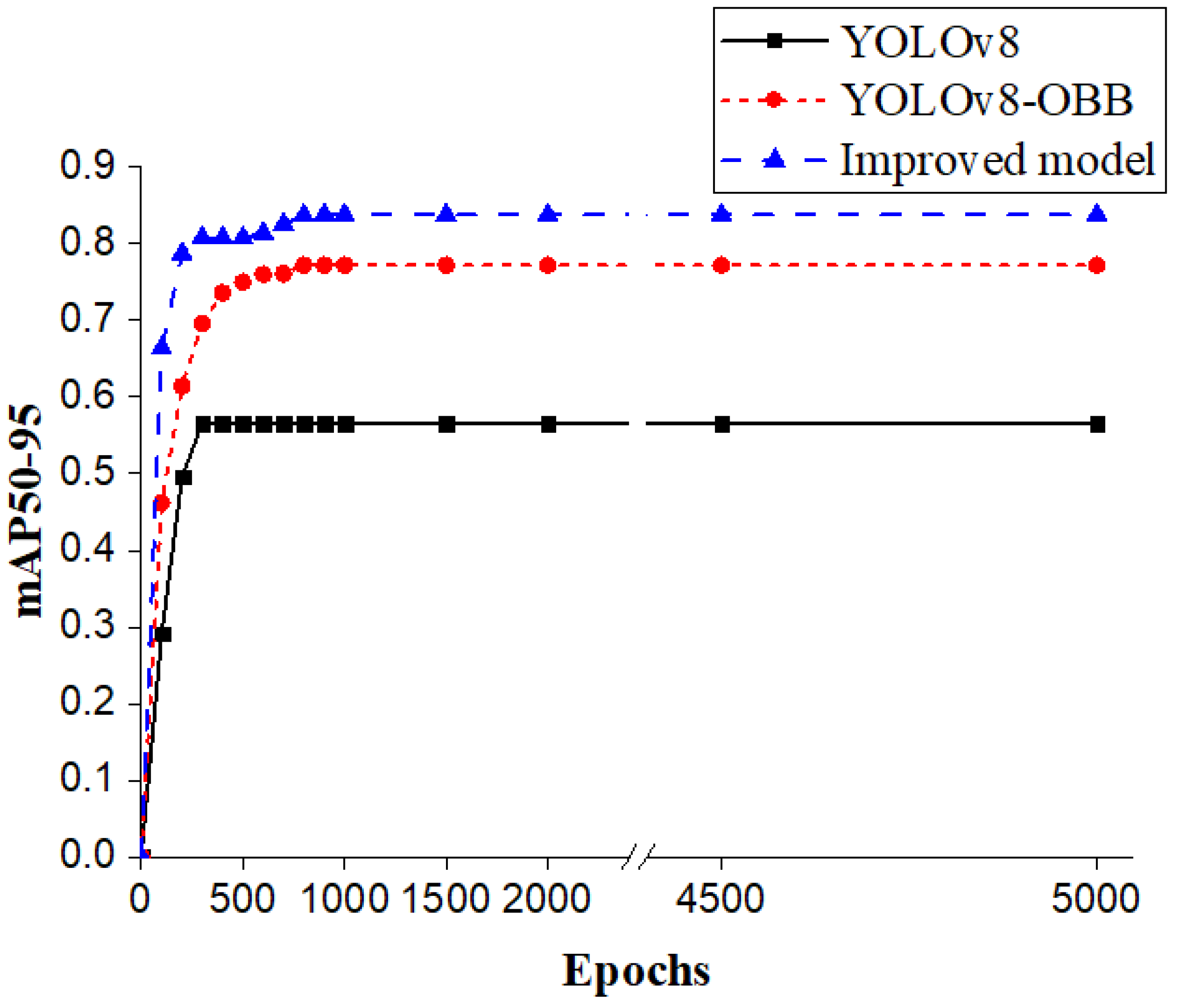

4.1. Comparison of YOLOv8 and YOLOv8-OBB

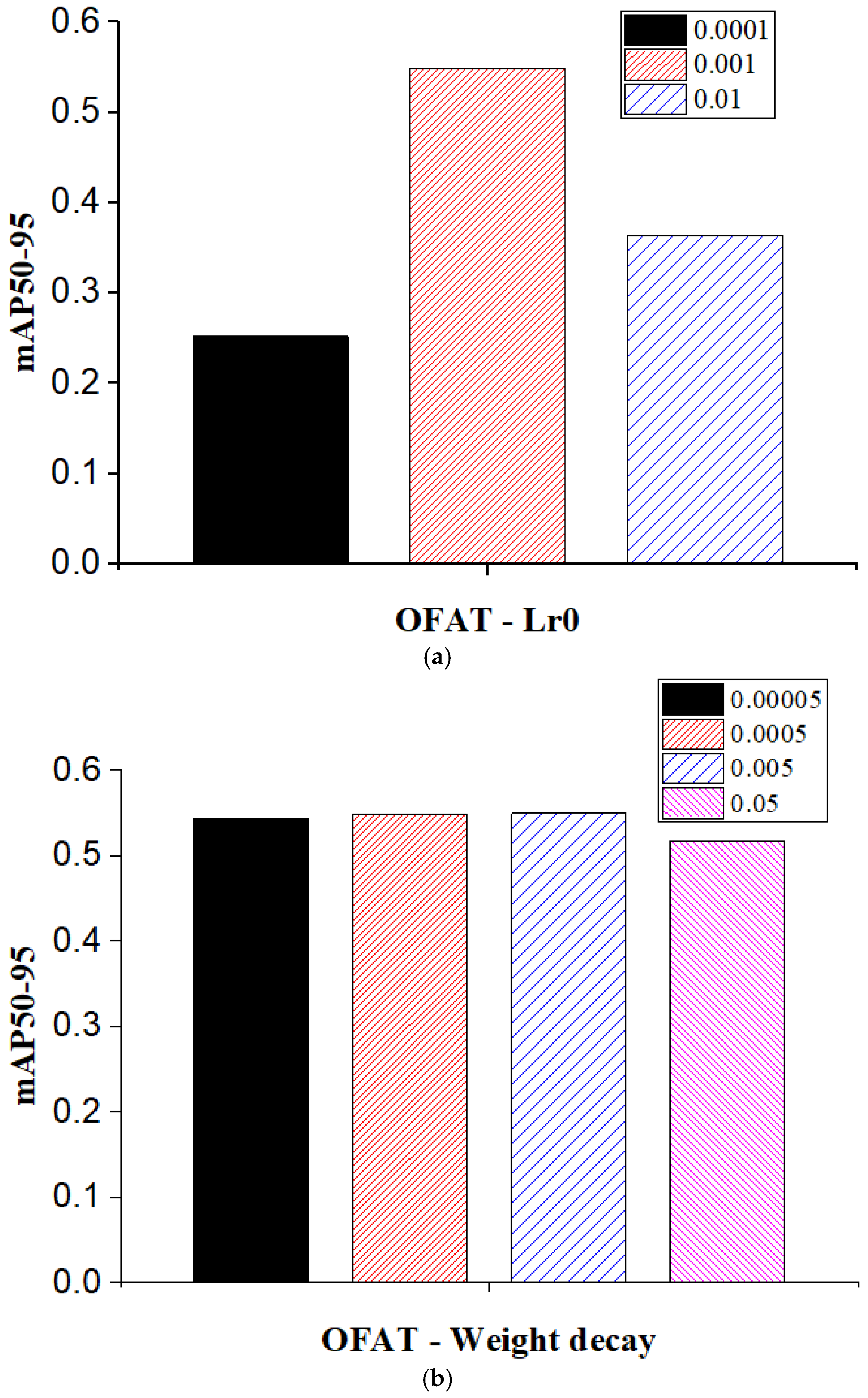

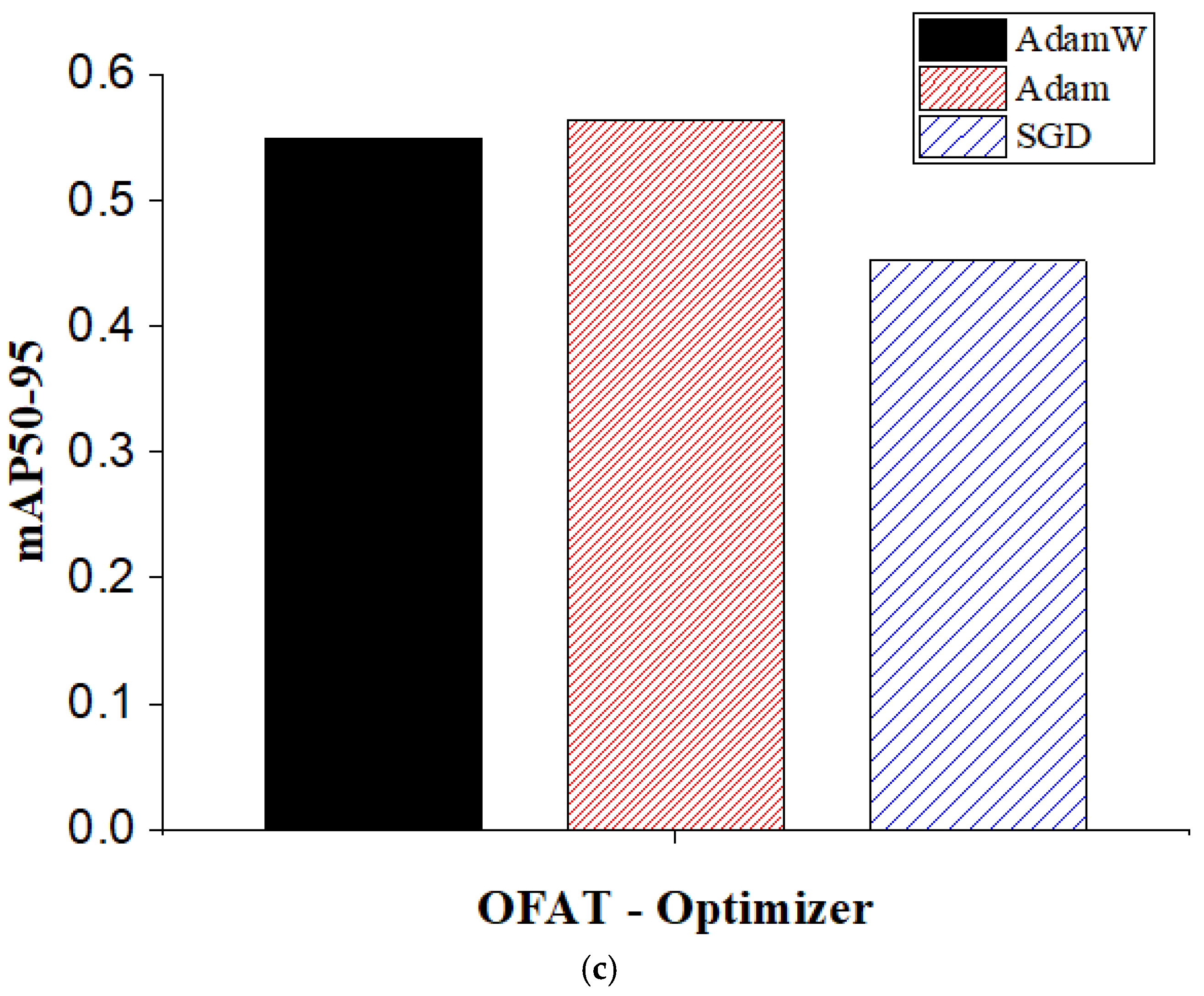

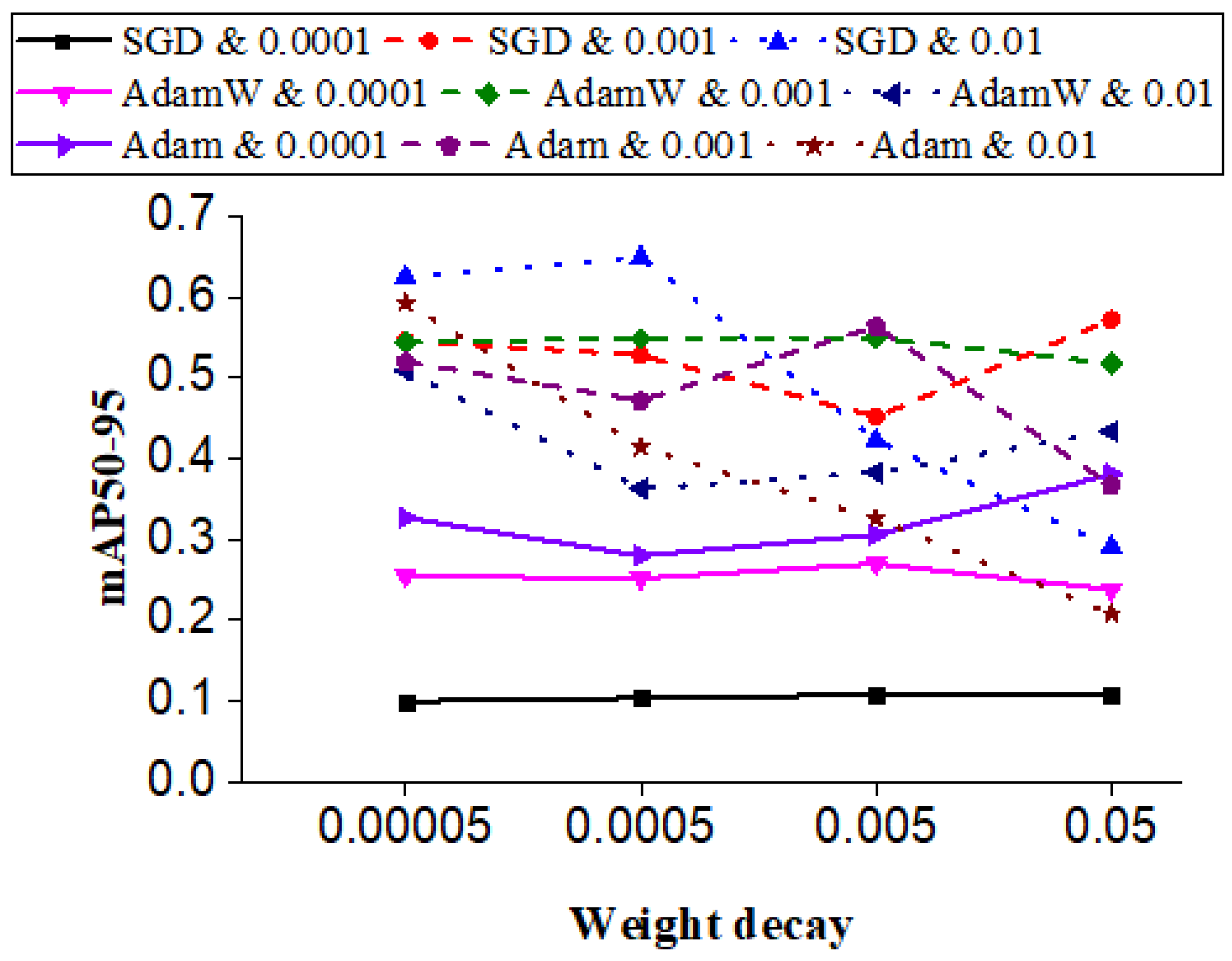

4.2. Tuning of Model Parameters

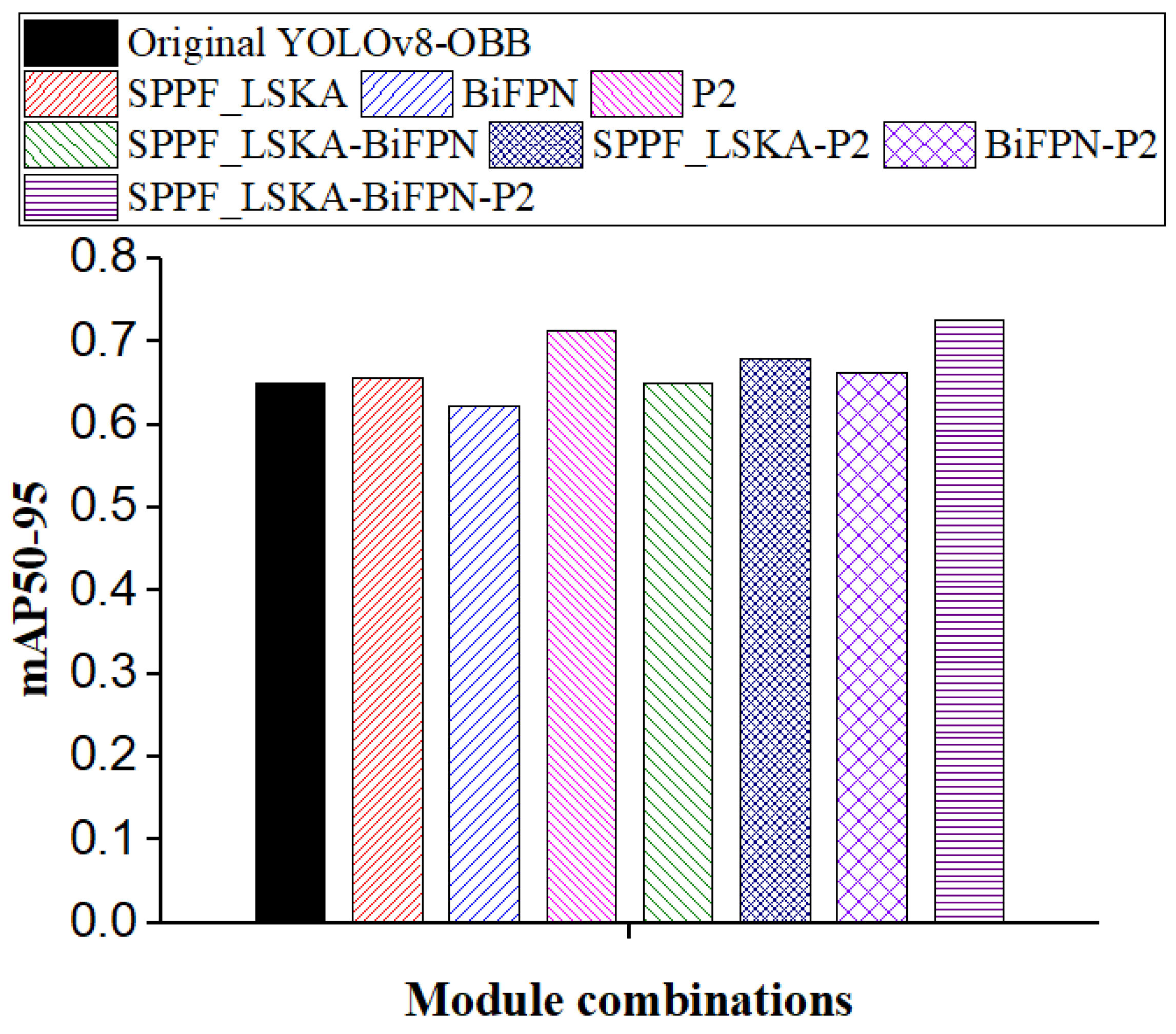

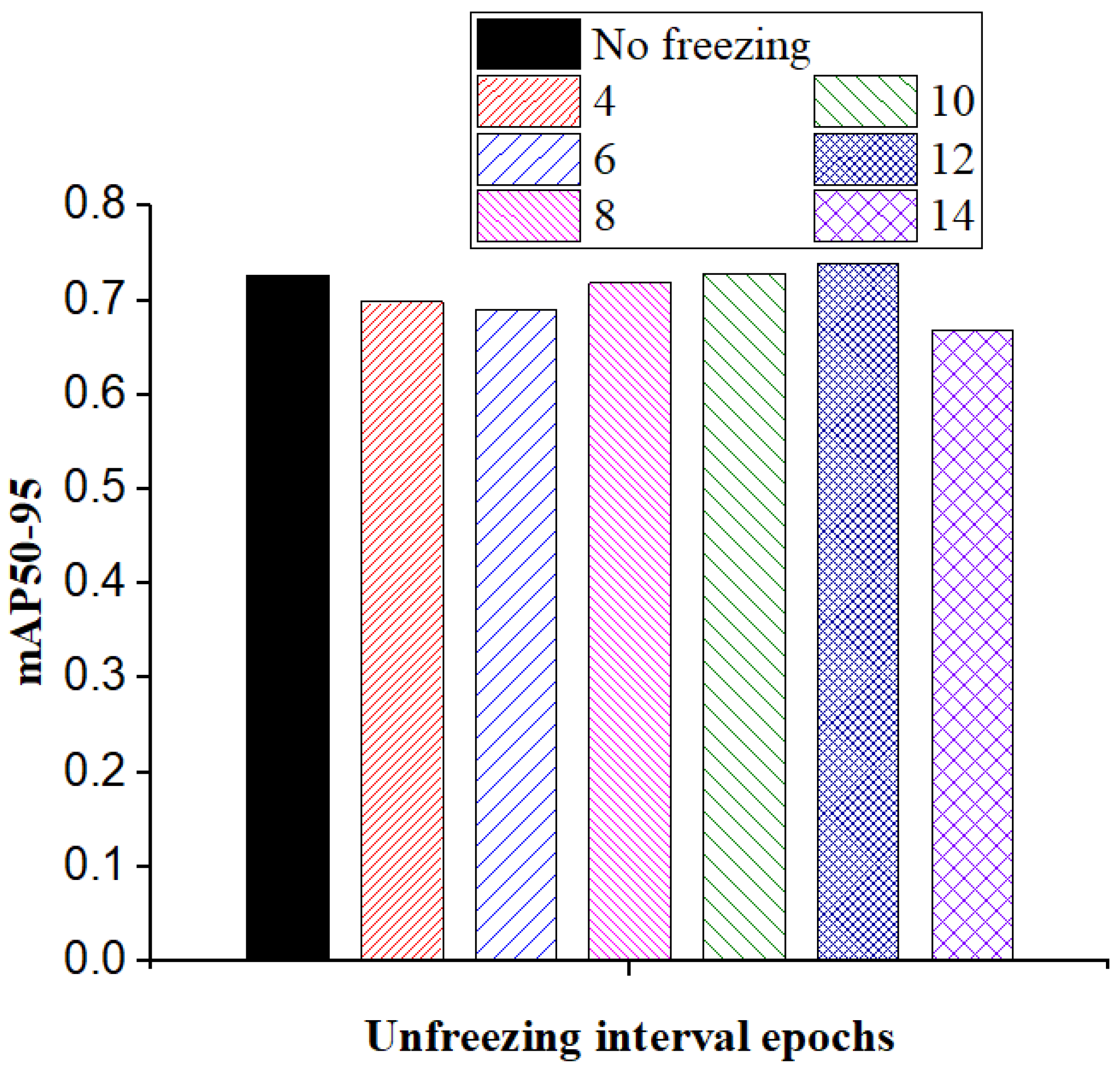

4.3. Architectural Enhancement and Transfer Learning via Gradual Unfreezing

4.4. Detection Results and Validation with Prolonged Training

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model Size | Nano |
|---|---|
| Number of parameters | 3,157,200 |
| Number of gradients | 3,157,184 |
| GFLOPs | 8.9 |
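The 16-element gap between the parameter and gradient counts is consistent with how Ultralytics builds its Distribution Focal Loss (DFL) layer. That layer is a bias-free 1x1 convolution from `reg_max = 16` bins to one channel, and its weights are frozen (`requires_grad_(False)`). The arithmetic below is a minimal check under that assumption. It is an interpretation of the table, not a figure reported by the authors.

```python
# Figures from the model-size table (Ultralytics-style model summary).
parameters = 3_157_200  # all parameter elements
gradients = 3_157_184   # parameter elements with requires_grad=True

# Assumption: the only frozen tensor is the DFL projection, a bias-free
# 1x1 conv mapping reg_max = 16 distribution bins to a single channel.
reg_max = 16
dfl_conv_weights = 1 * reg_max * 1 * 1  # out_ch * in_ch * kH * kW

frozen = parameters - gradients
print(frozen, frozen == dfl_conv_weights)  # 16 True
```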

| Stage | Baseline | Improved model | Channel width | Structural change |
|---|---|---|---|---|
| Backbone | SPPF | SPPF_LSKA | 1024 | Module replacement |
| Neck | Concat (P3–P5) | BiFPN (P3–P5) | P2 with 128 channels added | Module replacement |
| Head | Detect (P3–P5) / OBB (P3–P5) | OBB (P2–P5) | Additional P2 branch | Branch extension |
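Two ideas in the architecture table can be illustrated with a minimal sketch: BiFPN-style weighted feature fusion in place of plain concatenation, and the extra prediction points added by a P2 (stride-4) head. The fusion function follows the "fast normalized fusion" rule from EfficientDet, O = Σ_i (w_i / (ε + Σ_j w_j)) · I_i, with ReLU-clipped weights. Whether the authors' BiFPN uses exactly this rule is an assumption. Feature maps are flattened to plain lists so the example needs no tensor library. The 640 × 640 input size and the strides 4/8/16/32 for P2–P5 are also assumptions (standard YOLOv8 strides, default Ultralytics image size).

```python
from typing import Dict, List, Sequence


def fast_normalized_fusion(features: Sequence[Sequence[float]],
                           weights: Sequence[float],
                           eps: float = 1e-4) -> List[float]:
    """Weighted fusion O = sum_i (w_i / (eps + sum_j w_j)) * I_i.

    Inputs are feature maps flattened to equal-length lists, i.e. they are
    assumed to have been resized to the same resolution beforehand.
    """
    if len(features) != len(weights):
        raise ValueError("need one weight per input feature map")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("input feature maps must share the same shape")
    # ReLU keeps the learnable fusion weights non-negative.
    w = [max(0.0, wi) for wi in weights]
    norm = eps + sum(w)
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm
            for k in range(length)]


def prediction_points(imgsz: int, strides: Sequence[int]) -> Dict[int, int]:
    """Grid cells (anchor points) per detection level for a square input."""
    return {s: (imgsz // s) ** 2 for s in strides}


if __name__ == "__main__":
    fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0])
    print([round(v, 3) for v in fused])  # [2.0, 3.0]

    base = prediction_points(640, [8, 16, 32])       # P3-P5
    with_p2 = prediction_points(640, [4, 8, 16, 32])  # P2-P5
    print(sum(base.values()), sum(with_p2.values()))  # 8400 34000
```

Under these assumptions, the P2 branch alone contributes 160 × 160 = 25,600 prediction points, about three times as many as P3–P5 combined. This finer grid is the usual motivation for adding P2 when the target (here, a small notch) occupies only a few pixels.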

| Platform | Description |
|---|---|
| Operating system | Windows 11 |
| Integrated development environment | Visual Studio Code |
| Virtual environment | Anaconda Prompt |
| GPU | NVIDIA GeForce RTX 4090 |
| CPU | AMD Ryzen 7 5700X 8-Core Processor, 3401 MHz |
| Framework | PyTorch 2.5.1 |
| CUDA | 12.4 |
| Language | Python 3.11.11 |
| Ultralytics | 8.3.51 |
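When reproducing the experiments, it can help to confirm that the local software stack matches the versions in the environment table. The sketch below uses only the standard library (`importlib.metadata`, `platform`). The helper names are hypothetical. It also assumes that PyTorch CUDA wheels report a local version suffix such as `2.5.1+cu124`, which is stripped before comparison.

```python
import platform
from importlib import metadata
from typing import Dict, Optional, Tuple

# Versions listed in the implementation-environment table.
EXPECTED: Dict[str, str] = {"torch": "2.5.1", "ultralytics": "8.3.51"}
EXPECTED_PYTHON = "3.11.11"


def installed_version(package: str) -> Optional[str]:
    """Return the installed distribution version, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def compare_environment(
        expected: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str], bool]]:
    """Map each package to (expected, installed, matches)."""
    report = {}
    for pkg, want in expected.items():
        have = installed_version(pkg)
        # Drop a local suffix like "+cu124" before comparing versions.
        matches = have is not None and have.split("+")[0] == want
        report[pkg] = (want, have, matches)
    return report


if __name__ == "__main__":
    print("python", EXPECTED_PYTHON, platform.python_version())
    for pkg, row in compare_environment(EXPECTED).items():
        print(pkg, *row)
```

A mismatch is not necessarily a problem, but it gives a concrete starting point when results differ from those reported.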

| Hyperparameter | Configuration |
|---|---|
| Model scale | Nano |
| Initial learning rate (lr0) | 0.01 |
| Weight decay | 0.0005 |
| Optimizer | AdamW |
| Epochs | 100 |
| Patience | 0 (early stopping disabled) |
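The hyperparameter table maps directly onto keyword arguments of the Ultralytics `train()` method (`epochs`, `lr0`, `weight_decay`, `optimizer`, `patience`). The sketch below shows that mapping. The dataset file `wafer_notch_obb.yaml` is hypothetical. Starting from the `yolov8n-obb.pt` nano OBB checkpoint is also an assumption rather than a documented detail of the authors' setup. Ultralytics is imported inside `main()` so the configuration helper can be inspected without it installed.

```python
from typing import Any, Dict


def training_overrides(data_yaml: str) -> Dict[str, Any]:
    """Ultralytics train() keyword arguments matching the table."""
    return {
        "data": data_yaml,       # hypothetical dataset YAML (OBB labels)
        "epochs": 100,
        "lr0": 0.01,             # initial learning rate
        "weight_decay": 0.0005,
        "optimizer": "AdamW",    # explicit choice, not "auto"
        "patience": 0,           # 0 disables early stopping in Ultralytics
    }


def main() -> None:
    # Lazy import: only needed when training is actually launched.
    from ultralytics import YOLO

    model = YOLO("yolov8n-obb.pt")  # assumed nano OBB starting checkpoint
    model.train(**training_overrides("wafer_notch_obb.yaml"))
```

Calling `main()` launches training. Keeping the settings in one function makes it straightforward to vary a single value (for example `lr0`) during the tuning experiments without touching the rest of the configuration.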

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jun, E.S.; Sim, H.J.; Moon, S.J. Advancing YOLOv8-Based Wafer Notch-Angle Detection Using Oriented Bounding Boxes, Hyperparameter Tuning, Architecture Refinement, and Transfer Learning. Appl. Sci. 2025, 15, 11507. https://doi.org/10.3390/app152111507

Jun ES, Sim HJ, Moon SJ. Advancing YOLOv8-Based Wafer Notch-Angle Detection Using Oriented Bounding Boxes, Hyperparameter Tuning, Architecture Refinement, and Transfer Learning. Applied Sciences. 2025; 15(21):11507. https://doi.org/10.3390/app152111507

Chicago/Turabian Style: Jun, Eun Seok, Hyo Jun Sim, and Seung Jae Moon. 2025. "Advancing YOLOv8-Based Wafer Notch-Angle Detection Using Oriented Bounding Boxes, Hyperparameter Tuning, Architecture Refinement, and Transfer Learning" Applied Sciences 15, no. 21: 11507. https://doi.org/10.3390/app152111507

APA Style: Jun, E. S., Sim, H. J., & Moon, S. J. (2025). Advancing YOLOv8-Based Wafer Notch-Angle Detection Using Oriented Bounding Boxes, Hyperparameter Tuning, Architecture Refinement, and Transfer Learning. Applied Sciences, 15(21), 11507. https://doi.org/10.3390/app152111507