1. Introduction

In recent years, sentiment analysis has emerged as one of the core tasks in natural language processing (NLP) and information retrieval (IR), demonstrating significant value in assisting the understanding of massive text corpora and improving decision-making efficiency [1]. With the rapid growth of unstructured text such as social media, online reviews, and professional reports, the effective identification and extraction of emotional information has become a key technology for the deployment of intelligent systems [2]. In practical scenarios, emotional signals in complex long texts are often distributed across multiple sentences and paragraphs, exhibiting characteristics such as loose structure, implicit expression, and wide emotional span [3]. For instance, in legal judgments, medical case reports, and financial analysis documents, emotional signals frequently appear in implicit and scattered forms [4,5,6]. Among them, financial texts can be regarded as a typical representative of complex long texts due to their high degree of specialization and strong structural characteristics, and their emotional attitudes exert a substantial influence on market behaviors [7]. Therefore, accurately identifying potential emotions from such texts can not only provide decision support for investors but also serve high-value tasks such as the construction of financial market sentiment indices [8], credit risk assessment [9], and black swan event forecasting [10].

Although existing approaches achieve satisfactory performance on short texts or general corpora, their effectiveness often declines significantly when handling long texts and domain-specific contexts, thereby exposing a set of urgent challenges [11]. First, long texts usually contain multi-level emotional signals across paragraphs, which may appear in implicit and dispersed forms, making it difficult for traditional local modeling methods to capture global dependencies [12]. Second, most current models lack dynamic fusion mechanisms across granularity levels, as sentence-level and document-level information are typically processed in isolation, leading to insufficient cross-level semantic alignment [13]. Third, contextual consistency modeling is often limited, which hinders the effective integration of long-range semantic dependencies, and consequently results in missing or misaligned emotional signals when processing texts with strong contextual dependence and conservative expression styles, such as financial reviews and analytical reports [14]. Moreover, extremely long inputs give rise to truncation and information dilution issues, causing critical emotional fragments to be weakened or lost during the modeling process [15]. Overall, these challenges constrain the generalization ability and stability of sentiment recognition models in complex textual environments.

To address these issues, a multi-granularity attention-based sentiment analysis method is proposed, built upon the transformer architecture. This method is designed to simultaneously model sentence-level and document-level emotional features, and to efficiently integrate signals across different granularities, thereby enhancing the capacity for emotion recognition in long and structurally complex contexts [16]. By introducing hierarchical structure modeling and cross-granularity alignment mechanisms [17], the method effectively alleviates the limitations of single-granularity modeling, insufficient contextual integration, and semantic fragmentation in existing approaches. The overall framework consists of three core modules: (1) a sentence-level attention module that focuses on capturing fine-grained emotional features within sentences, preventing the dilution of critical signals during encoding; (2) a document-level attention module dedicated to modeling long-range dependencies, uncovering contextual emotional consistency and semantic coherence across paragraphs; and (3) a cross-granularity fusion module that dynamically integrates sentence-level and document-level attention representations, achieving structure-aware emotion modeling and granularity alignment, thereby improving representational power and robustness. The main contributions of this study are as follows:

A multi-granularity attention structure is proposed to overcome the static hierarchical limitation of traditional models, enabling deep emotional semantic modeling across sentences and paragraphs.

A cross-granularity alignment mechanism is designed to enhance the fusion of representations between different semantic levels, effectively capturing dispersed emotional signals in long texts.

Comprehensive empirical validation is conducted on multiple real-world datasets, including financial reviews, news summaries, and product evaluations. Comparative experiments with various state-of-the-art models demonstrate that the proposed method achieves superior performance in terms of accuracy, robustness, and structural adaptability in sentiment recognition.

We will make all datasets and the corresponding source code open source upon the publication of this paper.

2. Related Work

This section provides an overview of existing research related to sentiment analysis, attention mechanisms, and their integration with information retrieval (IR).

Table 1 summarizes representative approaches, highlighting their core methods, advantages, and limitations to motivate the design of our proposed model.

2.1. Text Sentiment Analysis Methods

Text sentiment analysis, an important NLP research direction, identifies subjective emotional tendencies and is widely used in opinion monitoring, feedback mining, and risk perception [32]. Early lexicon-based methods determined polarity by matching words with sentiment scores, offering good interpretability and simplicity. For instance, Perumal Chockalingam et al. proposed SAVSA, which refines VADER with SentiWordNet for improved tweet classification [18], while Lyu et al. combined LIWC with culture- and suicide-related dictionaries to detect depressive tendencies on Weibo [19]. However, such approaches depend on manually built lexicons and struggle with ambiguity, context, and implicit emotions. To address this, traditional machine learning was introduced: Tabany and Gueffal applied SVM for sentiment and fake review detection [20], Mishra et al. used multinomial naive Bayes with 94% accuracy on Kaggle tweets [21], and Mutmainah et al. adopted TF-IDF with random forest, reaching 91.2% accuracy [22]. Despite promising results on short texts, these models relied on static, low-dimensional features and lacked deep semantic modeling, limiting performance on long texts.

The rise of deep learning has greatly advanced sentiment analysis. Kumaragurubaran et al. leveraged CNNs to enhance SVM performance on tweets [23], while Gupta et al. combined Bi-GRU and Bi-LSTM for improved aspect-level feature extraction [24]. Yet, these models struggled with long-text issues like information loss. The Transformer [26] and pre-trained models such as BERT [33] and RoBERTa [34] addressed context modeling via self-attention, achieving major benchmark improvements. However, input length limits and flat encoding constrained cross-sentence sentiment modeling, especially in ultra-long texts with sparse signals and hierarchical dependencies, motivating multi-granularity and hierarchical approaches.

2.2. Attention Mechanism and Multi-Granularity Modeling

The attention mechanism, a core technique in modern NLP, assigns dynamic weights to input positions and has been widely applied in translation, reading comprehension, classification, and sentiment analysis [35]. Since Bahdanau et al. introduced it in neural machine translation [25], attention has evolved into soft [36], hard [37], self-, and multi-head forms [26]. Transformer architectures, built on multi-head self-attention, enabled long-range dependency modeling and became the backbone of pre-trained models such as BERT, GPT, and RoBERTa. To capture hierarchical semantics, hierarchical attention networks (HAN) introduced sentence- and document-level modules for multi-granularity modeling [27]. However, HAN and similar methods suffer from static granularity partitioning and lack cross-granularity integration, limiting adaptability and semantic consistency [38]. To overcome this, the proposed model extends the transformer with sentence-level, document-level, and cross-granularity fusion modules, enhancing structural flexibility and semantic integration for more effective sentiment modeling in complex texts.

2.3. IR and Sentiment Signal Capture

The integration of sentiment analysis with information retrieval (IR) has become a key NLP direction for locating salient emotional signals in large-scale corpora, supporting applications such as opinion monitoring, event forecasting, and recommendation systems [39]. Recent works combined sentiment knowledge with semantic modeling: Cambria et al. introduced SenticNet 8, integrating affective knowledge graphs with commonsense reasoning [28], while Li et al. incorporated SenticNet into semantic-syntactic models to enhance social media sentiment retrieval [29]. Despite progress, these methods struggle with structurally complex, cross-paragraph long texts, especially in financial contexts where implicit cues are dispersed across documents. Efforts such as Yuan et al.’s FinBERT-QA, which combined BM25 with BERT-based re-ranking for FiQA [30], and Chen et al.’s EFSA framework, which built a large-scale Chinese dataset with event-level reasoning [31], improved retrieval and fine-grained modeling but remained limited to document- or sentence-level analysis, often relying on static granularity. To address these challenges, the proposed multi-granularity attention-based model jointly captures sentence- and document-level features with a fusion mechanism, enhancing cross-paragraph semantic consistency, sentiment evolution modeling, and interpretability, with strong applicability to financial texts.

3. Materials and Method

3.1. Proposed Method

3.1.1. Overall

The overall process of the proposed method begins with the model design. Given documents that have been preprocessed through cleaning, sentence segmentation, and tokenization, the inputs are first passed into the embedding layer, where two types of representations are generated in parallel: one is static word embeddings (e.g., GloVe), providing a stable global semantic foundation, and the other is contextual dynamic embeddings derived from a pre-trained language model (e.g., BERT-base), capturing fine-grained meanings that vary with context. These two embeddings are aligned in dimensionality and fused through a gating mechanism, where the gating coefficients are produced by concatenating word embeddings with local context and processing them through a multilayer perceptron, followed by intra-sentence normalization. The fused representation forms a unified word-level embedding, to which hierarchical positional encodings are added to preserve both intra-sentence and inter-sentence order information. Subsequently, the word-level representations are fed into the sentence-level transformer, which models word dependencies within each sentence through multi-head self-attention and feed-forward networks. Sentence representations are obtained via attention pooling or by using the [CLS] token, forming a sequential set of sentence vectors ordered according to the document. This sequence, together with sentence position and paragraph boundary encodings, is then passed into the document-level transformer. Cross-sentence self-attention explicitly models thematic coherence, sentiment transitions, and causal links across the full document, producing contextualized sentence representations and a document-level aggregated vector. To bridge the hierarchical semantics, a cross-granularity contrastive fusion module aligns the features from the sentence-level and document-level encoders. 
For each sentence, its local representation and the corresponding global contextual representation are input into the alignment network, where a dynamic fusion weight between 0 and 1 is computed (determined jointly by similarity and confidence and adaptively normalized across the document), yielding a fused local–global representation. Positive and negative pairs are simultaneously constructed to maximize consistency between the same semantic unit across different granularities and minimize similarity between mismatched units, thereby enhancing cross-level discriminability. On top of the fused representations, hierarchical sentiment aggregation is performed: attention weights highlight critical sentiment segments within sentences, followed by a reweighting of sentences at the document level, resulting in a structure-aware document-level sentiment vector. Finally, this document vector is normalized by layer normalization and regularized with dropout before being passed into a linear classification head and softmax to produce sentiment polarity and confidence scores. During training, a joint objective is employed that combines cross-entropy loss for label separability with a contrastive consistency loss to enforce granularity alignment and global coherence. At inference time, the same forward path is used, and the model is capable of producing both intra-sentence and inter-sentence attention heatmaps as interpretable evidence, thereby supporting the localization and traceability of key sentiment cues in long and domain-specific texts.
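The hierarchical sentiment aggregation step described above (attention weights within sentences, then a reweighting of sentences at the document level) can be illustrated with a minimal sketch. It assumes simple dot-product attention against two query vectors; `word_query` and `sent_query` are hypothetical stand-ins for learned parameters, and the toy 2-dimensional vectors are not taken from the paper.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_pool(vectors, query):
    """Weight each vector by softmax(query . vector); return (pooled vector, weights)."""
    weights = softmax([dot(query, v) for v in vectors])
    dim = len(vectors[0])
    pooled = [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]
    return pooled, weights

def aggregate_document(doc, word_query, sent_query):
    """doc is a list of sentences; each sentence is a list of word vectors."""
    sent_vecs, word_weights = [], []
    for sentence in doc:
        vec, weights = attention_pool(sentence, word_query)  # within-sentence attention
        sent_vecs.append(vec)
        word_weights.append(weights)
    doc_vec, sent_weights = attention_pool(sent_vecs, sent_query)  # sentence reweighting
    return doc_vec, word_weights, sent_weights

# Toy document: two sentences with 2-dimensional word vectors (illustrative only).
doc = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [0.0, 0.0], [1.0, 1.0]],
]
doc_vec, word_w, sent_w = aggregate_document(
    doc, word_query=[1.0, 0.0], sent_query=[0.0, 1.0]
)
```

The two weight lists returned alongside the document vector play the role of the intra-sentence and inter-sentence attention heatmaps mentioned in the text.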

3.1.2. Multi-Granularity Transformer Structure

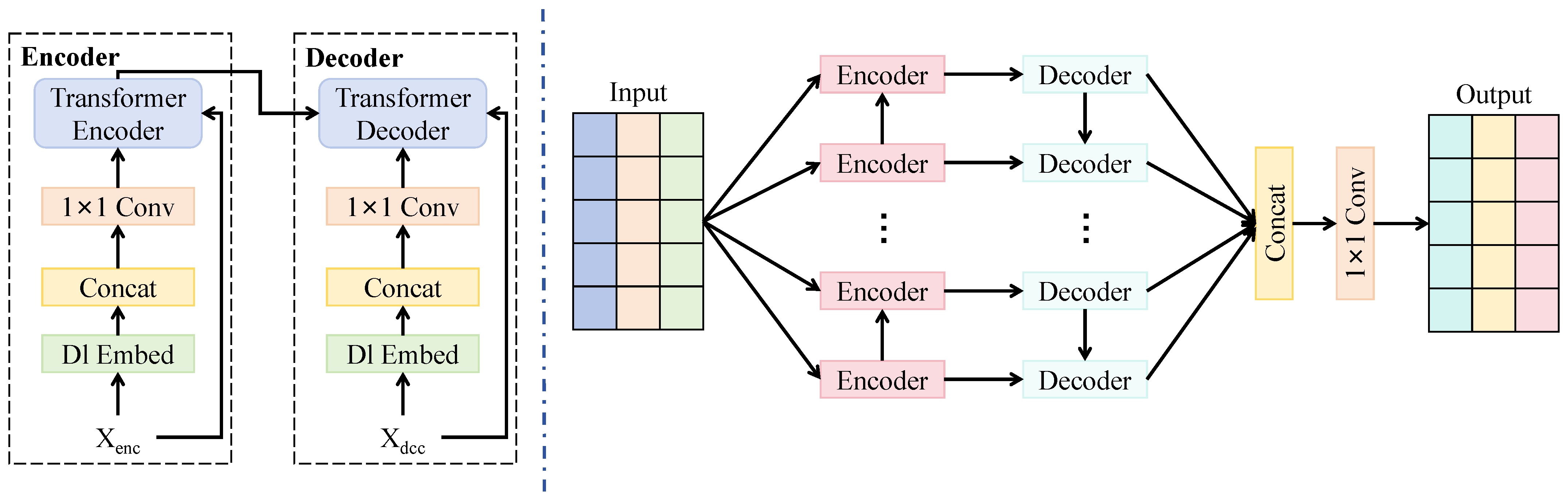

Within the overall architecture, the multi-granularity transformer structure serves as the core representation learning module. Its design objective is to simultaneously capture sentiment cues at both the sentence and document levels, while mitigating semantic attenuation and information fragmentation in long-text modeling through a hierarchical process, as shown in Figure 1. This structure first receives word embeddings that have been processed by the embedding layer, already incorporating both static and dynamic semantic representations, thus containing global stability and contextual sensitivity. During the sentence-level modeling stage, the text is divided into multiple subsequences, each corresponding to a sentence, which are fed into the sentence-level transformer. The sentence-level transformer employs a multi-head self-attention mechanism, with each layer consisting of 12 attention heads and a hidden dimension of 256, enabling weighted modeling of dependencies among words within a sentence and preventing important sentiment cues from being diluted in long sentences. Each sentence is finally represented by pooling or through the [CLS] token, forming a sequence of sentence-level sentiment vectors representing the document.

In the document-level modeling stage, the sequence of sentence representations is treated as input units and fed into the document-level transformer. This module comprises 6 encoder layers, each with a hidden dimension of 256 and a dropout rate of 0.3 to prevent overfitting. The document-level transformer captures logical coherence and sentiment transitions across sentences through cross-sentence self-attention, producing a global sentiment representation vector. To ensure coordination across different granularities, sentence position and paragraph boundary encodings are introduced during document-level modeling, enabling the model to distinguish similar expressions across contexts while preserving structural consistency in cross-sentence alignment.

Mathematically, the sentence-level and document-level transformers can be regarded as two hierarchical functional mappings. Let the input document be $X = \{X_1, X_2, \dots, X_n\}$, where $X_i$ denotes the word embedding sequence of the $i$-th sentence. The output of the sentence-level transformer is $H^{(s)} = \{h_1, h_2, \dots, h_n\}$, where $h_i$ represents the sentence-level sentiment representation. Subsequently, the document-level transformer takes $H^{(s)}$ as input and models global dependencies, yielding the document-level representation $h^{(d)}$. This hierarchical mapping ensures a progressive aggregation of local and global semantics.

This design offers multiple advantages in the present task. First, the sentence-level transformer captures fine-grained local sentiment signals, ensuring precision in sentences containing strong sentiment expressions. Second, the document-level transformer effectively handles implicit sentiment propagation and thematic shifts across paragraphs, enabling the model to maintain global consistency in long-text scenarios. Finally, the integration of both levels of representation avoids the performance degradation encountered by single-level modeling approaches in long texts. Through this multi-granularity hierarchical modeling, the model is capable of perceiving both explicit and implicit sentiment information when processing complex texts such as financial news and professional reports, thereby enhancing stability and generalization in sentiment recognition tasks.

3.1.3. Different-Dimensional Embedding Strategy

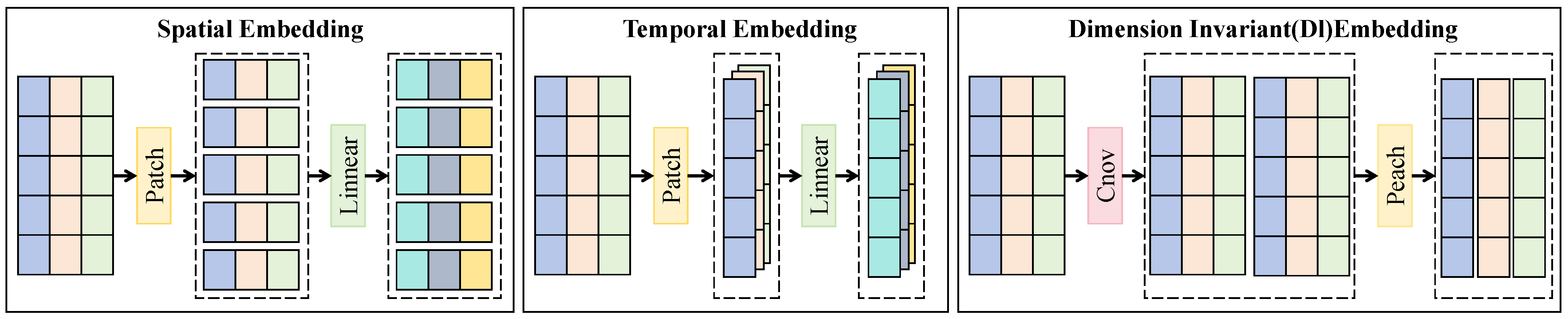

Within the overall framework, the different-dimensional embedding strategy is responsible for both low-level semantic representation and deep contextual perception. The core idea is to leverage static semantic embeddings and dynamic contextual embeddings simultaneously, and to achieve semantic alignment and feature fusion through channel expansion and dimensional mapping in the feature space, as shown in Figure 2. Specifically, the static embedding component adopts 300-dimensional word vectors based on GloVe, serving as a global semantic foundation and providing statistical co-occurrence features among words. The dynamic embedding component is implemented using the BERT-base encoder, which is composed of 12 stacked transformer layers, each with a hidden size of 768 and 12 attention heads with a head dimension of 64, thereby ensuring that dynamic embeddings capture dependency relations and semantic shifts within long texts. To map these two embeddings into a unified space, a combination of convolutional layers and fully connected layers was designed. The static and dynamic embeddings are each expanded into a tensor representation, and the two are concatenated along the channel dimension to form a joint representation, which is subsequently linearly projected into a 256-dimensional embedding space, serving as the unified input for the multi-granularity transformer.

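From a mathematical perspective, let the static word embedding matrix be $E_s \in \mathbb{R}^{n \times d_s}$ and the dynamic embedding matrix be $E_d \in \mathbb{R}^{n \times d_d}$. After channel expansion and mapping, the resulting representations are

$$\tilde{E}_s = E_s W_s, \qquad \tilde{E}_d = E_d W_d,$$

where $W_s \in \mathbb{R}^{d_s \times c_s}$ and $W_d \in \mathbb{R}^{d_d \times c_d}$ denote the linear transformation matrices for static and dynamic embeddings, respectively, and $c_s$ and $c_d$ represent the expanded channel dimensions. In the experimental configuration, $d_s = 300$ and $d_d = 768$, following the GloVe and BERT-base settings. The fused representation is obtained through concatenation as

$$F = [\tilde{E}_s ; \tilde{E}_d] \in \mathbb{R}^{n \times (c_s + c_d)},$$

and then projected back into a unified dimension

$$Z = F W_f, \qquad W_f \in \mathbb{R}^{(c_s + c_d) \times d},$$

where $d = 256$ is the final input dimension. This mapping ensures that embeddings from different sources are aligned within the same feature space.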
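The expand, concatenate, and project mapping can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the convolutional part of the mapping is omitted, the weights are random rather than learned, and the expanded widths `c_s` and `c_d` are hypothetical values chosen for the example. Only the 300, 768, and 256 dimensions come from the text.

```python
import random

def rand_matrix(rows, cols, rng, scale=0.02):
    """Random Gaussian matrix as nested lists (stand-in for learned weights)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    b_cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]

def fuse_embeddings(e_static, e_dynamic, w_s, w_d, w_f):
    """Expand each embedding, concatenate along the feature axis, project to d."""
    s_exp = matmul(e_static, w_s)    # n x c_s
    d_exp = matmul(e_dynamic, w_d)   # n x c_d
    fused = [s_row + d_row for s_row, d_row in zip(s_exp, d_exp)]  # n x (c_s + c_d)
    return matmul(fused, w_f)        # n x d

rng = random.Random(0)
n_tokens, d_s, d_d, d_out = 4, 300, 768, 256
c_s, c_d = 64, 64  # hypothetical expanded widths, not taken from the paper

e_static = rand_matrix(n_tokens, d_s, rng, scale=1.0)   # stand-in for GloVe vectors
e_dynamic = rand_matrix(n_tokens, d_d, rng, scale=1.0)  # stand-in for BERT-base outputs
w_s = rand_matrix(d_s, c_s, rng)
w_d = rand_matrix(d_d, c_d, rng)
w_f = rand_matrix(c_s + c_d, d_out, rng)

z = fuse_embeddings(e_static, e_dynamic, w_s, w_d, w_f)
```

Each of the four token rows in `z` has 256 features, matching the unified input dimension of the multi-granularity transformer.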

The rationale for this mapping can be established by considering static and dynamic embeddings as two semantic subspaces S and D, with dimensions $d_s$ and $d_d$, respectively. Through the linear transformations and concatenation operations, both are mapped into a higher-dimensional joint subspace F. According to subspace theory, if S and D exhibit partial linear independence, then the rank of their concatenation satisfies $\operatorname{rank}([S, D]) > \max(\operatorname{rank}(S), \operatorname{rank}(D))$, indicating that the joint space contains richer semantic features than either individual space. This enriched representation consequently enhances the expressive capacity of the multi-granularity transformer.
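The rank argument can be checked on a toy case. In the sketch below, the two small feature matrices are invented for illustration: their column spaces share one direction and each contributes one direction of its own, so the concatenation has a higher rank than either block alone. The `matrix_rank` helper is a plain Gaussian elimination written for this example.

```python
def matrix_rank(rows, tol=1e-9):
    """Rank via Gaussian elimination with partial pivoting on a copy of the matrix."""
    m = [[float(x) for x in r] for r in rows]
    n_rows, n_cols = len(m), len(m[0])
    rank = 0
    for col in range(n_cols):
        if rank == n_rows:
            break
        # Pick the row (at or below the current rank) with the largest entry in this column.
        pivot = max(range(rank, n_rows), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # no usable pivot in this column
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, n_rows):
            factor = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= factor * m[rank][c]
        rank += 1
    return rank

# Rows are tokens, columns are features (toy values).
static_feats = [[1, 0], [0, 1], [0, 0], [0, 0]]   # columns span e1, e2
dynamic_feats = [[0, 0], [1, 0], [0, 1], [0, 0]]  # columns span e2, e3
joint_feats = [s + d for s, d in zip(static_feats, dynamic_feats)]

rank_s = matrix_rank(static_feats)
rank_d = matrix_rank(dynamic_feats)
rank_joint = matrix_rank(joint_feats)
```

Here both blocks have rank 2 while the concatenation has rank 3. If one column space contained the other, the joint rank would not exceed the larger of the two, which is why the partial independence condition matters.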

Moreover, when combined with the cross-granularity contrastive fusion module, this strategy significantly strengthens the consistency between local and global modeling. Since cross-granularity fusion relies on the alignment of sentence-level and document-level representations, such alignment is guaranteed only when the underlying word vectors are both stable and diverse. With the joint representation of static and dynamic embeddings, the sentence-level transformer can fully exploit local lexical co-occurrence features, while the document-level transformer can capture long-range dependencies and sentiment propagation paths. In other words, static embeddings enhance global stability, while dynamic embeddings contribute contextual sensitivity. Once jointly mapped into a unified space, cross-granularity contrastive constraints further optimize local-global consistency.

For the present task, this design effectively addresses the challenges of sentiment sparsity and ambiguity in long and domain-specific texts. For instance, in financial news, the term “overweight” may express a positive sentiment when associated with market optimism but may be neutral in the context of regulatory constraints. Dynamic embeddings are able to capture such context-dependent distinctions, while static embeddings ensure overall semantic stability. By mapping and fusing the two into a joint subspace, the model not only preserves consistency in sentiment modeling but also improves adaptability to contextual differences, thereby achieving superior performance and generalization.

3.1.4. Cross-Granularity Contrastive Fusion Module

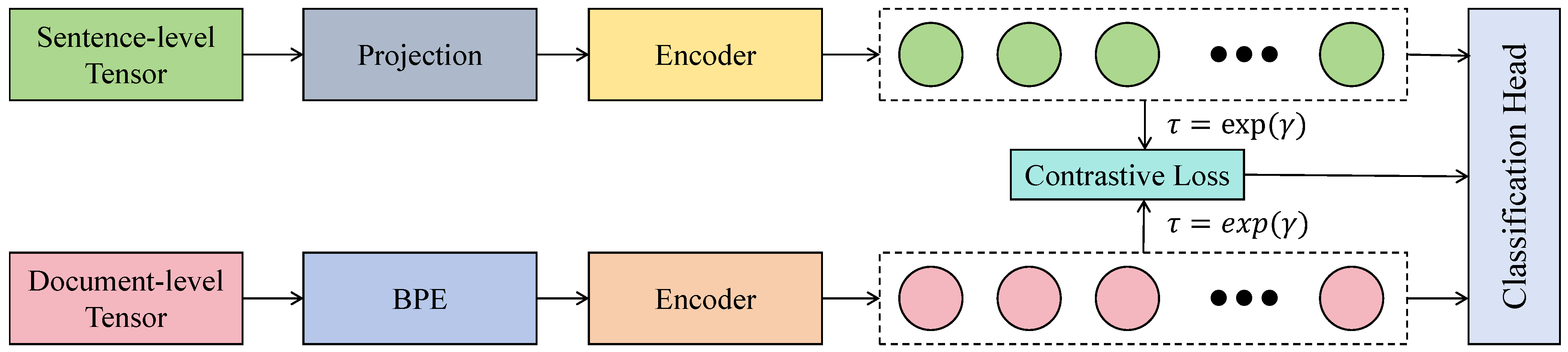

The cross-granularity contrastive fusion module takes sentence-level and document-level representations as input, aiming to achieve alignment and separation within a unified representation space, thereby encoding both local sentiment cues and global contextual coherence simultaneously, as shown in Figure 3.

Specifically, the sentence-level tensor obtained from the multi-granularity transformer and the document-level tensor are each reshaped into feature maps and first processed by convolutions for dimensionality reduction and normalization. Two projection heads, $g_s$ and $g_d$, sharing architectural design but not parameters, are then applied (two fully connected layers, with GELU activation and dropout = 0.1 in the intermediate layer), producing normalized vectors of length 128. Let the global pooled sentence-level and document-level vectors of the $i$-th document be $s_i$ and $d_i$, respectively. Their projections are defined as $u_i = g_s(s_i) / \lVert g_s(s_i) \rVert_2$ and $v_i = g_d(d_i) / \lVert g_d(d_i) \rVert_2$. The similarity is computed using the inner product scaled by a temperature parameter $\tau$, which is reparameterized to ensure positivity and trainability, offering improved stability consistent with contrastive learning paradigms. For a batch of $N$ documents, the symmetric cross-granularity InfoNCE loss is thus defined as

$$\mathcal{L}_{\mathrm{con}} = -\frac{1}{2N} \sum_{i=1}^{N} \left[ \log \frac{\exp(u_i^{\top} v_i / \tau)}{\sum_{j=1}^{N} \exp(u_i^{\top} v_j / \tau)} + \log \frac{\exp(v_i^{\top} u_i / \tau)}{\sum_{j=1}^{N} \exp(v_i^{\top} u_j / \tau)} \right].$$

When embeddings are unit-normalized, the inner product reduces to cosine similarity. The gradient with respect to the positive-pair similarity $u_i^{\top} v_i$ is dominated by $(p_{ii} - 1)/\tau$, where $p_{ii}$ is the softmax probability assigned to the positive pair, indicating that optimization increases similarity for positive pairs while suppressing similarity for negative pairs. This achieves the geometric goal of “intra-document cross-granularity alignment and inter-document separation” in the shared semantic space, ensuring that explicit intra-sentence sentiment and implicit cross-sentence sentiment can be compared and aggregated within the same coordinate system. To propagate cross-granularity consistency back to the encoder, beyond the contrastive loss, a lightweight gated fusion head $g_f$ concatenates the two vectors and outputs weights to produce the fused representation $z_i$ for the classifier:

$$\alpha_i = \sigma\big(g_f([s_i ; d_i])\big), \qquad z_i = \alpha_i \odot s_i + (1 - \alpha_i) \odot d_i.$$

This design, when combined with the different-dimensional embedding strategy, first aligns static global and dynamic contextual embeddings into a unified channel structure forming the base features, ensuring both stability and sensitivity at the bottom layer. The multi-granularity transformer then establishes hierarchical dependencies from words to sentences and from sentences to the document. Finally, this module enforces alignment on a 128-dimensional unit sphere, allowing representations from different granularities to be optimized under the same metric. Theoretically, denoting the sentence-level and document-level subspaces as $S$ and $D$, the mutual information lower bound induced by contrastive learning is expressed as

$$I(S; D) \ge \log N - \mathcal{L}_{\mathrm{con}},$$

so the decrease in $\mathcal{L}_{\mathrm{con}}$ corresponds to an increase in the shared information between $S$ and $D$, providing an information-theoretic justification for cross-granularity consistency. Furthermore, as $\tau$ is trainable, the entropy of the soft negative sample distribution can be adaptively adjusted to prevent gradient vanishing or explosion, ensuring stable convergence and improved separability in long-text scenarios.

Overall, the fixed geometric radius (embedding normalization), trainable temperature, and symmetric bidirectional contrastive objective enable this module to address key challenges in long and domain-specific texts, such as sentiment sparsity, restrained expression, and cross-sentence diffusion. Sentence-level strong signals are preserved and aligned with weaker document-level signals to improve detectability; global noise is suppressed by negative pairs to enhance robustness; and the unified spherical metric allows the subsequent classification head to achieve effective separability with only a linear boundary, thereby reducing overfitting risk and enhancing cross-dataset generalization.
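A symmetric InfoNCE objective of the kind described in this subsection can be sketched in a few lines of plain Python. The sketch makes several assumptions that are not confirmed by the text: negatives are the other documents in the same batch, the temperature is stored as `log_tau` and exponentiated to keep it positive, and the projection heads are skipped so the inputs are treated as already projected vectors.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def log_softmax_row(row):
    """Numerically stable log-softmax of one row of logits."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]

def symmetric_info_nce(sent_vecs, doc_vecs, log_tau):
    """Symmetric InfoNCE over a batch; index i in both lists refers to document i."""
    tau = math.exp(log_tau)  # assumed parameterization that keeps tau positive
    u = [l2_normalize(x) for x in sent_vecs]
    v = [l2_normalize(x) for x in doc_vecs]
    n = len(u)
    # Temperature-scaled cosine similarities between every sentence/document pair.
    sim = [[sum(a * b for a, b in zip(u[i], v[j])) / tau for j in range(n)]
           for i in range(n)]
    sim_t = [list(col) for col in zip(*sim)]
    loss_s2d = -sum(log_softmax_row(sim[i])[i] for i in range(n)) / n
    loss_d2s = -sum(log_softmax_row(sim_t[i])[i] for i in range(n)) / n
    return 0.5 * (loss_s2d + loss_d2s)

# Toy batch of three documents with 2-dimensional vectors (illustrative only).
sent = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
aligned_loss = symmetric_info_nce(sent, sent, log_tau=math.log(0.1))
mismatched = [sent[1], sent[2], sent[0]]  # each sentence paired with the wrong document
mismatched_loss = symmetric_info_nce(sent, mismatched, log_tau=math.log(0.1))
```

The aligned batch yields a loss close to zero, while the mismatched pairing is penalized heavily, which is the alignment-and-separation behavior the module relies on.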

3.2. Experimental Setup

To verify the effectiveness and robustness of the proposed method, a systematic evaluation was conducted on a unified experimental platform. All experiments were executed on computing nodes equipped with NVIDIA Tesla V100 GPUs (32 GB memory), with Ubuntu 20.04 LTS as the operating system. Model training and result analysis were performed in the Python 3.9 environment, primarily relying on PyTorch 1.12 as the deep learning framework, and the HuggingFace Transformers library was employed for the invocation and fine-tuning of pre-trained language models.

For each dataset, the original corpus was randomly divided into training, validation, and test sets with a ratio of 8:1:1. Stratified sampling was adopted to maintain the same sentiment label distribution across all subsets. To prevent data leakage, all samples from the same source post or article were kept within the same subset. This consistent partitioning strategy ensures fair comparison and reproducibility across different model configurations.

For training parameters, the AdamW optimizer was adopted, with gradient clipping applied to avoid gradient explosion. The learning rate was gradually reduced from its initial value using a linear decay strategy; the batch size was set to 32; the maximum number of training epochs was 20. During training, early stopping was applied, with training terminated if no improvement was observed on the validation set for 5 consecutive epochs. To ensure result stability, all experiments were repeated 5 times, and the mean and standard deviation were reported.

For hyperparameter settings, the dimensionality of static word embeddings d was set to 300, initialized using pre-trained GloVe vectors. Contextualized dynamic representations were obtained from BERT-base (12 transformer layers, hidden dimension of 768, and 12 attention heads). For the multi-granularity attention mechanism, the hidden units for both sentence-level and document-level representations were set to 256, with a dropout ratio of 0.3. For data augmentation, the number of words replaced in synonym replacement, k, was set to 10% of the sentence length; back translation employed the English–Chinese–English translation path; the probability of random deletion p was set to 0.1. All hyperparameters were determined through grid search on the validation set.
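The linear decay schedule and the early stopping rule (patience of 5, at most 20 epochs) can be sketched without any framework. This is a simplified illustration: `base_lr` is a placeholder argument rather than the value used in the experiments, the decay is assumed to reach zero at the final step, and `run_training` replays a list of made-up validation scores instead of training a model.

```python
def linear_decay_lr(step, total_steps, base_lr):
    """Learning rate decayed linearly from base_lr at step 0 to 0 at total_steps."""
    remaining = max(0, total_steps - step)
    return base_lr * remaining / total_steps

class EarlyStopping:
    """Signal a stop after `patience` consecutive epochs without improvement."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, val_metric):
        """Record one epoch's validation metric; return True if training should stop."""
        if val_metric > self.best:
            self.best = val_metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

def run_training(val_scores, max_epochs=20, patience=5):
    """Replay per-epoch validation scores and return the epoch at which training ends."""
    stopper = EarlyStopping(patience)
    for epoch, score in enumerate(val_scores[:max_epochs], start=1):
        if stopper.step(score):
            return epoch
    return min(len(val_scores), max_epochs)

# Hypothetical validation accuracies: the best score is reached at epoch 2.
scores = [0.70, 0.75, 0.74, 0.73, 0.75, 0.72, 0.71, 0.80]
stopped_at = run_training(scores)
```

With these scores, training stops after epoch 7, since epochs 3 through 7 fail to improve on epoch 2. Note that the later, higher score at epoch 8 is never seen, which is the usual trade-off of a fixed patience.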

3.3. Evaluation Metrics

To comprehensively assess the performance of the proposed method on sentiment analysis tasks, multiple commonly used classification metrics were employed, including accuracy, precision, recall, F1-score, and the area under the curve (AUC). These metrics reflect the performance of the model in positive and negative class recognition from both overall and detailed perspectives. Accuracy measures the overall proportion of correct classifications; precision and recall respectively evaluate the reliability and coverage of positive class recognition; the F1-score provides a balance between precision and recall; and AUC evaluates the classification capability and robustness of the model under different thresholds.
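For reference, the five metrics can be computed for a binary task as in the sketch below. It uses plain Python rather than any particular library, treats label 1 as the positive class, and computes AUC as the probability that a randomly chosen positive example is scored above a randomly chosen negative one (ties counted as one half). The labels and scores are made up for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 with label 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def roc_auc(y_true, scores):
    """AUC via pairwise comparison of positive and negative scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Illustrative labels and predicted probabilities of the positive class.
y_true = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.6, 0.4, 0.7, 0.8, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

The pairwise AUC is quadratic in the number of examples, which is fine for a sketch but would normally be replaced by a rank-based computation on large test sets.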

3.4. Baseline

To validate the effectiveness of the proposed model, five representative sentiment analysis methods were selected as baseline models, namely SVM [20], long short-term memory network (LSTM) [40], BERT [41], RoBERTa [42], and HAN [27]. Among them, SVM, as a representative of traditional approaches, demonstrates robust classification performance in low-dimensional feature spaces and is capable of capturing partially nonlinear decision boundaries through high-dimensional space mapping, making it a strong baseline in text sentiment classification tasks. LSTM effectively models long-range dependencies in sequences and is advantageous in capturing the dynamic evolution of sentiment within sentences, thus serving as a typical model widely employed in sentiment analysis during the early stage of deep learning. BERT, through its bidirectional transformer architecture and large-scale unsupervised pretraining, is able to acquire context-dependent deep semantic representations, significantly improving performance on sentiment analysis tasks. RoBERTa, building upon BERT, enhances model expressiveness and generalization by employing larger-scale corpora, longer training periods, and dynamic masking strategies, and has achieved superior results across multiple downstream tasks. HAN leverages the hierarchical structure of text (word–sentence–document), introducing attention mechanisms at both the word and sentence levels to identify the most critical segments for sentiment classification, thereby improving the modeling capability and interpretability of long texts to some extent. Comparisons with these methods provide a clearer demonstration of the advantages of the proposed multi-granularity attention mechanism in handling long and domain-specific texts.

4. Results and Discussion

4.1. Instrumentation

Data Collection

For general long-text sentiment analysis tasks, we selected the financial forum reviews dataset. This dataset was collected from multiple international financial forums (such as StockTwits and the Seeking Alpha discussion board), covering user posts and comments from 2000 to 2010. All reviews come from publicly accessible financial community data. The average text length is between 200 and 300 words, and each review is labeled with binary sentiment polarity, either positive or negative, as shown in Table 2. The sentiment labels were obtained through a semi-automatic annotation process: an initial polarity classification was generated using a financial sentiment lexicon and keyword-based rules, followed by manual verification on a randomly selected subset to ensure labeling accuracy and consistency. The dataset is characterized by relatively long reviews that often contain sentiment signals across multiple sentences and paragraphs, thus providing a solid foundation for validating the model in long-text financial scenarios.

For e-commerce review tasks, we used the financial product user reviews dataset. This dataset was collected from 2015 to 2020 from major financial e-commerce and investment platforms (such as Amazon’s finance-related books and personal finance product sections). Sentiment labels were derived automatically from star ratings: 1–2 stars were classified as negative, 4–5 stars as positive, while 3-star reviews were excluded as neutral. This rule-based labeling ensured objectivity and scalability across a large corpus. The dataset is characterized by a large sample size and diverse topics. The average text length is moderate, around 50–100 words, making it suitable for evaluating the model’s generalization ability in short-text financial sentiment modeling.
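The star-rating rule above is simple enough to state as code. The following sketch assumes reviews arrive as `(text, stars)` pairs with integer ratings from 1 to 5; the function names and the sample reviews are illustrative and not part of the released pipeline.

```python
def label_from_stars(stars):
    """Map a 1-5 star rating to a sentiment label; 3-star reviews return None."""
    if stars in (1, 2):
        return "negative"
    if stars in (4, 5):
        return "positive"
    if stars == 3:
        return None  # treated as neutral and excluded from the dataset
    raise ValueError(f"unexpected star rating: {stars!r}")

def build_labeled_set(reviews):
    """Keep only reviews with a polar label, as (text, label) pairs."""
    labeled = []
    for text, stars in reviews:
        label = label_from_stars(stars)
        if label is not None:
            labeled.append((text, label))
    return labeled

# Made-up reviews for illustration.
sample_reviews = [
    ("Clear explanations of index funds.", 5),
    ("Average overview, nothing new.", 3),
    ("Outdated advice and poor examples.", 1),
    ("Useful budgeting worksheets.", 4),
]
labeled_reviews = build_labeled_set(sample_reviews)
```

Dropping the 3-star reviews shrinks the corpus but avoids forcing ambiguous reviews into either class, which keeps the binary labels consistent with the other two datasets.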

For professional domain sentiment analysis, a financial news dataset was constructed. This dataset was collected from 2018 to 2024 through major financial news websites and publicly available financial information platforms, including Reuters, Bloomberg, the Wall Street Journal, and China Securities Net. The collection process combined keyword-based web crawling with manual verification, ensuring coverage of diverse themes such as market trends, corporate announcements, policy interpretations, and industry analyses. To maintain representativeness, non-financial content and overly short news briefs were filtered out, with complete news articles retained as analysis objects. Sentiment annotation was conducted through a dual-annotator verification process: two financial-domain experts independently labeled each article based on its market-oriented sentiment orientation (e.g., “positive news” labeled as positive, “risk warnings” as negative). Disagreements were resolved through discussion, ensuring high inter-annotator agreement. The average text length of this dataset ranges from 400 to 600 words, representing typical long, structured texts. The financial news dataset exhibits stronger implicit and indirect emotional expressions, making it a challenging benchmark for evaluating the capability of the model to capture sentiment across paragraphs and granularities.

4.2. Operation

Data Preprocessing and Augmentation

To effectively enhance the performance of sentiment recognition in multi-source complex texts, a systematic data preprocessing and augmentation strategy was designed prior to model training. The objective of this strategy is to improve the semantic regularity, structural consistency, and expressive diversity of the input corpora, thereby increasing the robustness and generalization ability of the model to semantic variations. Particularly in long texts or domain-specific contexts, word ambiguity, noisy expressions, and the sparsity of training samples significantly affect model performance. Therefore, the normalization and extended transformation of raw data serve as critical preliminary steps for improving modeling quality.

Formally, let the original text corpus be denoted as $\mathcal{D} = \{x_i\}_{i=1}^{N}$, where each text $x_i$ represents a complete document composed of a sequence of sentences. To ensure structural consistency and semantic stability of the inputs to downstream models, a standardization process was first applied:

$$\hat{x}_i = \mathrm{Clean}(x_i),$$

where $\mathrm{Clean}(\cdot)$ denotes the text cleaning function, including character normalization (such as full-width to half-width conversion and Unicode standardization), removal of special symbols, punctuation unification, and sentence segmentation. This process eliminates non-linguistic interference and redundant noise in the input, yielding the standardized text $\hat{x}_i$. To further construct structured word-sequence representations, tokenization was applied to the cleaned text, followed by the removal of stopwords (e.g., “the”, “is”, “and”), which carry little or no sentiment value. This reduced the influence of high-frequency words on the distribution of model attention, resulting in the following representation:

$$W_i = \left( w_{i,t} \;\middle|\; w_{i,t} \in \mathrm{Tokenize}(\hat{x}_i),\ w_{i,t} \notin \mathcal{S} \right),$$

where $w_{i,t}$ denotes the $t$-th token in text $\hat{x}_i$, and $\mathcal{S}$ represents a predefined stopword set. The resulting sequence $W_i$ is thus obtained after stopword removal.
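The cleaning, sentence segmentation, tokenization, and stopword-removal steps described above can be sketched with the Python standard library. This is an illustration under stated assumptions, not the authors' pipeline: the stopword set is a tiny placeholder, the punctuation map and the regular expressions are simplifications, and all function names are hypothetical.

```python
import re
import unicodedata

# Tiny illustrative stopword set; a real run would use a fuller list.
STOPWORDS = {"the", "is", "and", "a", "an", "of", "to", "in"}

# Map curly quotes and long dashes to ASCII equivalents (punctuation unification).
PUNCT_MAP = str.maketrans({
    "\u201c": '"', "\u201d": '"',
    "\u2018": "'", "\u2019": "'",
    "\u2013": "-", "\u2014": "-",
})


def clean(text: str) -> str:
    """Normalize characters, unify punctuation, and drop other special symbols."""
    text = unicodedata.normalize("NFKC", text)  # full-width to half-width, Unicode standardization
    text = text.translate(PUNCT_MAP)
    text = re.sub(r"""[^\w\s.,!?;:%$'"-]""", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def split_sentences(text: str) -> list[str]:
    """Naive segmentation on sentence-final punctuation followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def tokenize(sentence: str) -> list[str]:
    """Lowercase and extract alphanumeric tokens, keeping internal hyphens and apostrophes."""
    return re.findall(r"[a-z0-9]+(?:['-][a-z0-9]+)*", sentence.lower())


def preprocess(document: str) -> list[list[str]]:
    """Return one stopword-free token list per sentence."""
    return [
        [tok for tok in tokenize(sentence) if tok not in STOPWORDS]
        for sentence in split_sentences(clean(document))
    ]
```

Keeping the output as one token list per sentence preserves the sentence boundaries that a sentence-level and document-level model needs later on.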

In the word representation stage, both static and dynamic embeddings were adopted to balance global semantic statistics and contextual semantic dependencies. First, static embeddings such as GloVe provide fixed word representations that capture the overall semantic contour of the text. The embedding matrix is defined as $E \in \mathbb{R}^{|V| \times d}$, where $|V|$ denotes the vocabulary size and $d$ the embedding dimension:

$$e_{i,t} = E[w_{i,t}],$$

with $e_{i,t}$ representing the vector embedding of token $w_{i,t}$. However, static embeddings fail to differentiate meaning variations across contexts. To address this, BERT was employed to extract context-aware dynamic embeddings. This representation effectively captures semantic ambiguity and dependency structures in long texts, enhancing both intra-sentence and inter-sentence coherence. The representation is formulated as

$$h_{i,t} = \mathrm{BERT}(w_{i,1}, \dots, w_{i,T_i})_t,$$

where $\mathrm{BERT}(\cdot)$ denotes the encoding process of the BERT model, $T_i$ is the length of the token sequence, and $h_{i,t}$ represents the contextualized embedding of the token $w_{i,t}$ at position $t$.

In addition, to improve the adaptability of the model to semantic diversity and expression variations, three text-level data augmentation strategies were introduced: synonym replacement, back translation, and random deletion. Synonym replacement introduces semantically equivalent lexical variants to enhance input diversity. Specifically, for an input text $x_i$, $k$ non-stopwords $\{w_{i,j_1}, \dots, w_{i,j_k}\}$ were randomly selected from its token sequence, and for each selected token, a synonym was generated:

$$\tilde{w}_{i,j} = \mathrm{Syn}(w_{i,j}), \quad j \in \{j_1, \dots, j_k\},$$

where $j$ denotes the index of the selected token in the $i$-th text. $\mathrm{Syn}(\cdot)$ can be implemented via WordNet-based lexical lookup or by selecting the most semantically similar alternative through cosine similarity in a pre-trained embedding space. In this study, we used the WordNet 3.1 lexical database and a 300-dimensional GloVe pre-trained embedding model to retrieve and evaluate synonym candidates. This strategy preserves overall semantic consistency while introducing lexical-level diversity, thereby improving the model’s generalization to semantically equivalent expressions.
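A minimal sketch of the synonym replacement step follows. To stay self-contained it uses a small hand-written synonym table in place of the WordNet and GloVe lookup used in the study; the table, the stopword set, and the function name `synonym_replace` are all hypothetical.

```python
import random

STOPWORDS = {"the", "is", "and", "a", "of"}

# Hypothetical synonym table standing in for a WordNet or embedding-based lookup.
SYNONYMS = {
    "profit": ["gain", "earnings"],
    "fell": ["dropped", "declined"],
    "strong": ["robust", "solid"],
}


def synonym_replace(tokens, k, rng):
    """Replace up to k randomly chosen non-stopword tokens that have a known synonym."""
    candidates = [
        i for i, tok in enumerate(tokens)
        if tok not in STOPWORDS and tok in SYNONYMS
    ]
    chosen = rng.sample(candidates, min(k, len(candidates)))
    augmented = list(tokens)  # copy so the original sequence is left untouched
    for i in chosen:
        augmented[i] = rng.choice(SYNONYMS[tokens[i]])
    return augmented
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible across runs, which matters when augmented samples are regenerated for repeated experiments.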

Back translation was further applied to introduce structural and word-order variations, thereby enriching syntactic diversity. An intermediate language $L'$ (e.g., Chinese) was selected, and commercial translation systems were used to perform two-step translation: first from the source language $L$ to $L'$, and then back to $L$, generating an augmented sample $\tilde{x}_i$:

$$\tilde{x}_i = \mathcal{T}_{L' \to L}\big(\mathcal{T}_{L \to L'}(x_i)\big),$$

where $\mathcal{T}$ denotes a commercial translation system such as Google Translate. Specifically, the Google Translate API was employed to perform both forward and backward translation steps, ensuring high translation quality and broad language coverage. This strategy introduces syntactic and word-order variations, as well as different connective structures, while preserving semantic invariance, thereby enhancing model robustness to syntactic variations.
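The two-step round trip can be sketched independently of any particular translation service. In the sketch below the translator is an injected callable, so no real API signature is assumed; the `Translator` type, the language codes `"en"` and `"zh"`, and the function names are illustrative choices, not details from the study.

```python
from typing import Callable

# A translator takes (text, source_lang, target_lang) and returns the translated text.
Translator = Callable[[str, str, str], str]


def back_translate(text: str, translate: Translator,
                   source: str = "en", pivot: str = "zh") -> str:
    """Translate source -> pivot -> source and return the round-tripped text."""
    intermediate = translate(text, source, pivot)
    return translate(intermediate, pivot, source)


def augment(texts, translate, source="en", pivot="zh"):
    """Keep only round trips that are non-empty and differ from the original."""
    augmented = []
    for text in texts:
        candidate = back_translate(text, translate, source, pivot)
        if candidate.strip() and candidate != text:
            augmented.append(candidate)
    return augmented
```

Injecting the translator makes it possible to swap services or to substitute a stub during testing, and the filter in `augment` avoids adding exact duplicates when the round trip returns the input unchanged.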

Finally, random deletion was employed to simulate incomplete inputs or noisy text conditions. For each token $w_{i,t}$ in the input sequence, a retention or deletion decision was made according to a predefined probability, resulting in an augmented text:

$$\tilde{x}_i = \left( w_{i,t} \;\middle|\; m_{i,t} = 1 \right),$$

where $m_{i,t}$ is a binary random variable controlling token retention, with $m_{i,t} \sim \mathrm{Bernoulli}(1 - p)$ and $p$ denoting the predefined deletion probability. If $m_{i,t} = 1$, the token $w_{i,t}$ is retained; otherwise, it is removed. This strategy enhances the tolerance of the model to missing non-critical components in the input sequence.
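Random deletion amounts to drawing an independent keep-or-drop decision per token. The sketch below is one straightforward reading of that description; the safeguard that keeps at least one token is an added assumption (the text does not say how empty outputs are handled), and the function name is hypothetical.

```python
import random


def random_delete(tokens, p, rng):
    """Drop each token independently with probability p; keep at least one token."""
    if not 0.0 <= p < 1.0:
        raise ValueError("deletion probability p must be in [0, 1)")
    # rng.random() is uniform on [0, 1), so a token is kept with probability 1 - p.
    kept = [tok for tok in tokens if rng.random() >= p]
    if not kept and tokens:
        kept = [rng.choice(tokens)]  # assumed safeguard against an empty sample
    return kept
```

The relative order of the surviving tokens is preserved, so the augmented text remains a subsequence of the original.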

4.3. Overall Performance Comparison on Financial Forum Reviews Dataset

The purpose of this experiment is to validate the effectiveness and robustness of the proposed multi-granularity attention sentiment analysis method in long-text scenarios. The Financial Forum Reviews Dataset is characterized by long review content with sentiment information distributed across multiple sentences and paragraphs, making it particularly suitable for evaluating the capability of long-text financial sentiment modeling.

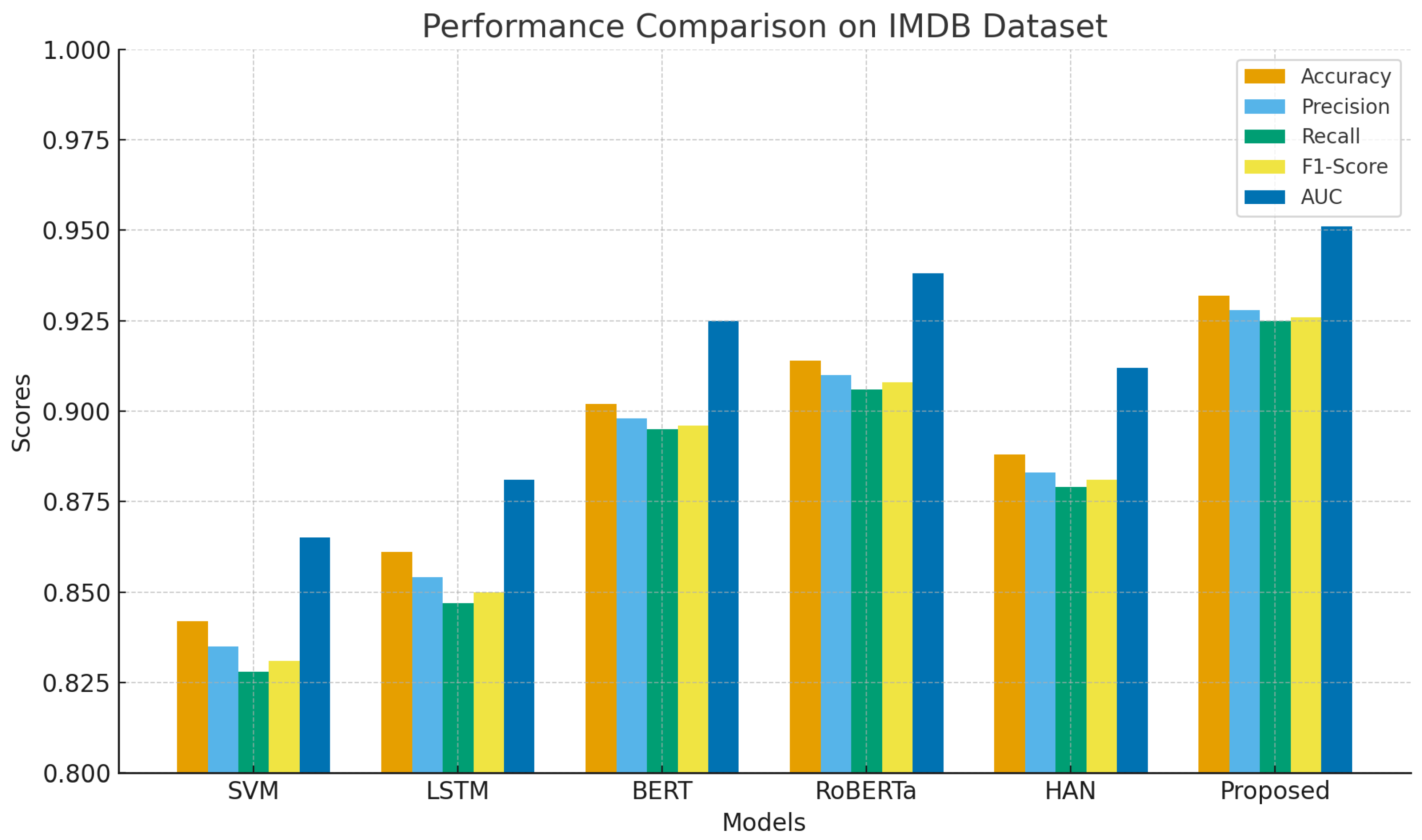

As shown in Table 3 and Figure 4, the traditional SVM maintains relatively high accuracy and AUC in a limited feature space, but its inability to capture complex semantic relationships causes it to lag behind deep learning models. LSTM leverages gated mechanisms to model sequential information and outperforms SVM on long text, yet its recurrent structure restricts its ability to capture long-distance dependencies. HAN introduces attention at both word and sentence levels, allowing the identification of key sentiment fragments, which improves performance compared with LSTM but remains constrained by static granularity partitioning. BERT and RoBERTa, based on transformer self-attention, exhibit superior semantic modeling and long-distance dependency capturing, with RoBERTa outperforming BERT due to larger-scale pretraining and longer training schedules. The proposed method achieves the best results across all metrics by balancing global consistency with fine-grained local capture through multi-granularity attention, demonstrating particular stability and generalization in F1 and AUC.

From a theoretical perspective, performance differences are closely linked to the mathematical characteristics of the models. SVM relies on hyperplane separation in high-dimensional space, effective for sparse low-dimensional features but insufficient for hierarchical semantics of long text. LSTM’s recurrent units achieve memory through state propagation, yet gradient vanishing or explosion limits long-range dependency modeling. HAN assigns hierarchical attention weights to words and sentences, mathematically equivalent to introducing distributions at multiple levels, but the lack of cross-level fusion constrains its expressiveness. Transformer-based models such as BERT and RoBERTa explicitly model global dependencies via self-attention matrices, mathematically corresponding to weighted mappings across the entire sequence, enabling superior performance in long text. The proposed method further constructs joint representations across sentence and document levels with alignment and fusion mechanisms, mathematically enhancing mutual information across subspaces, thereby ensuring robust global dependency capture and improved local detail discrimination.

4.4. Overall Performance Comparison on Financial Product User Reviews Dataset

The purpose of this experiment is to evaluate the performance of different models on diverse short and medium-length texts, verifying their generalization ability in the context of financial product reviews. The Financial Product User Reviews Dataset contains user feedback on finance-related books and personal financial products, characterized by concise language, broad topical coverage, and relatively direct sentiment signals. This makes it a suitable benchmark for assessing adaptability to semantic diversity and cross-topic variance in financial domains.

As shown in Table 4, SVM maintains certain classification performance under low-dimensional features but is constrained by limited feature expressiveness. LSTM models contextual dependencies through recurrent structures, showing improvements over traditional methods but still limited in capturing long-range dependencies. HAN, with attention mechanisms at both word and sentence levels, highlights key information and performs better than LSTM, yet struggles with large-scale cross-domain reviews. BERT and RoBERTa demonstrate strong advantages in semantic modeling through self-attention, with RoBERTa achieving further gains through larger datasets and longer training. The proposed method surpasses all baselines across metrics, confirming its stability and capability in capturing sentiment across texts of varying lengths and domains.

Theoretically, SVM’s kernel-based hyperplane construction performs well in small-scale tasks but lacks expressive power for long-tail distributions. LSTM’s state propagation functions adequately in short texts but is mathematically constrained by gradient decay in longer dependencies. HAN’s weighted attention enables explicit local aggregation but lacks dynamic cross-layer alignment. Transformer models, by mapping global dependencies through attention matrices, ensure semantic consistency across diverse domains. The proposed method combines multi-granularity representation and cross-layer alignment, mathematically creating a joint semantic subspace that maximizes complementarity, thus achieving superior generalization and robustness.

4.5. Overall Performance Comparison on Financial News Dataset

The purpose of this experiment is to evaluate the performance of sentiment analysis models on financial news, characterized by complex structures and implicit sentiment, thereby testing domain adaptability and robustness. Financial news often employs restrained language, with sentiment cues subtly embedded in policy interpretation, risk warnings, and market predictions, imposing higher demands on long-text modeling, cross-paragraph semantic understanding, and implicit sentiment detection.

As shown in Table 5, SVM performs poorly in this domain, with low accuracy and AUC. LSTM improves modeling through recurrence but remains inadequate in complex contexts. HAN identifies key sentiment fragments via hierarchical attention, outperforming LSTM but constrained by static partitioning. BERT achieves substantial improvements through global semantic modeling, while RoBERTa, benefiting from more extensive pretraining, further enhances generalization. The proposed method achieves the best results across all metrics, particularly excelling in F1 and AUC, highlighting its adaptability to financial text.

Theoretical analysis reveals that SVM’s reliance on hyperplane partitioning cannot capture hierarchical semantics in long, complex inputs. LSTM suffers from gradient decay, limiting long-range dependency capture. HAN aggregates local information with hierarchical attention but lacks dynamic alignment across layers. Transformer-based models leverage attention matrices to map global dependencies, allowing implicit semantic relationships to be captured, with RoBERTa excelling due to improved pretraining strategies. The proposed multi-granularity approach constructs joint representation spaces across sentence and document levels, aligning features via cross-granularity mechanisms, mathematically enhancing mutual information sharing across subspaces. This enables consistency across local and global semantics, delivering superior performance in financial sentiment analysis.

4.6. Ablation Study of the Proposed Method on Financial News Dataset

The purpose of this experiment is to evaluate the contribution of each core module through ablation analysis, particularly in the challenging context of financial news where text is complex and sentiment is often implicit. The roles of the multi-granularity transformer, different-dimensional embedding strategy, and cross-granularity fusion module are assessed.

As shown in Table 6, removing the multi-granularity transformer results in the most significant performance drop across all metrics, indicating its critical role in capturing cross-sentence dependencies and implicit sentiment propagation. Excluding the different-dimensional embedding strategy reduces sensitivity to contextual nuances, leading to declines in precision and recall. Omitting the cross-granularity fusion module results in intermediate performance, demonstrating its importance in aligning global and local features but with less impact than the multi-granularity structure. Overall, the full model achieves the best performance, validating the necessity and complementarity of all components.

From a mathematical perspective, the multi-granularity transformer decomposes the document into hierarchical subspaces, with self-attention enhancing cross-level dependencies, addressing gradient decay and semantic dilution in long texts. The embedding strategy projects static and dynamic embeddings into a unified space, increasing subspace rank and enriching representation. The cross-granularity fusion module applies contrastive learning to maximize alignment between sentence-level and document-level features, mathematically optimizing mutual information across subspaces and improving geometric separation of positive and negative samples. Together, these modules address different challenges in long-text sentiment modeling, enabling the full model to outperform all ablated variants in financial news sentiment analysis.
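To make the contrastive alignment idea concrete, the sketch below computes an InfoNCE-style loss between sentence-level and document-level vectors. The choice of InfoNCE, the cosine similarity, the temperature value, and the function names are all assumptions made for illustration; this passage does not specify the loss used in the fusion module.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(sentence_vecs, document_vecs, temperature=0.1):
    """Mean InfoNCE loss; item i in each list is assumed to come from the same document."""
    losses = []
    for i, s in enumerate(sentence_vecs):
        logits = [cosine(s, d) / temperature for d in document_vecs]
        m = max(logits)  # subtract the maximum for a numerically stable log-sum-exp
        log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
        losses.append(log_sum - logits[i])  # negative log-probability of the matching pair
    return sum(losses) / len(losses)
```

The loss is small when each sentence-level vector is closest to its own document-level vector and grows when it is closer to another document's vector, which is the sense in which such an objective encourages cross-granularity alignment.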

4.7. Discussion

The proposed multi-granularity attention framework demonstrates consistent superiority over existing baseline models across multiple datasets, particularly in handling long and semantically complex financial texts. Compared with strong pre-trained baselines such as RoBERTa, the performance improvement, though moderate (around 2–3%), is both consistent and statistically significant. This indicates that the proposed model achieves better semantic alignment and contextual consistency through its hierarchical design rather than relying solely on large-scale pre-training. The sentence-level and document-level attention mechanisms jointly contribute to this improvement by capturing sentiment shifts that single-granularity models often overlook.

A closer examination of the results shows that the remaining performance gap between our model and an ideal classifier mainly arises from two aspects. First, in certain ambiguous cases—such as texts containing mixed sentiment cues or domain-specific metaphors—the attention weights may still focus on sentiment-neutral expressions, leading to softened polarity predictions. Second, the current contrastive fusion strategy is limited by the representational diversity of training samples; when the dataset lacks balanced examples of subtle sentiment transitions, cross-granularity alignment becomes less effective. These observations suggest that while the proposed model enhances granularity integration, there remains room for improvement in handling implicit or context-shifting sentiment.

Future extensions can address these issues in several ways. One promising direction is to incorporate adaptive learning strategies, such as curriculum learning or self-training with pseudo-labeled data, to dynamically refine the cross-granularity representations. Another potential improvement lies in expanding the dataset with more fine-grained or multi-domain annotations, which can enhance the model’s robustness and generalization. Integrating reinforcement-based attention optimization could also further strengthen the model’s interpretability and focus on key sentiment-bearing segments.

Beyond quantitative performance, the model also shows strong applicability in multiple real-world contexts. In financial news interpretation, it captures implicit market sentiment cues such as “tightened regulation” or “policy constraints”, providing investors with more reliable indicators of market tone. In e-commerce reviews, it distinguishes colloquial and ambiguous expressions like “acceptable” or “so-so” by leveraging the balance between static semantics and dynamic context. In social media and public opinion analysis, the cross-granularity fusion module effectively detects suggestive or contrastive sentiments that span multiple sentences (e.g., “although the policy intention is positive, its implementation has raised concerns”), offering a more precise basis for early risk detection. Moreover, in clinical text applications, it identifies implicit sentiment shifts reflecting patient condition improvement or deterioration, thereby supporting more comprehensive psychological assessment.

In summary, while the proposed model achieves robust and interpretable performance across domains, its current capacity is bounded by data diversity and fixed learning strategies. Future work will focus on adaptive training mechanisms, data expansion, and multimodal extensions to further enhance sentiment understanding and generalization.

5. Threats to Validity

Following Wohlin [43] and Antony [44], the potential threats to the validity of this study are analyzed across four validity dimensions and reliability, along with corresponding mitigation strategies.

External validity. The main external threat lies in domain dependence, as the training and evaluation were conducted primarily on financial and e-commerce datasets. Consequently, generalization to other specialized domains (e.g., medical or legal texts) where annotated data are scarce may be limited. To mitigate this risk, the study incorporated multiple datasets of different genres, such as news, forum, and product reviews, to enhance diversity and coverage. Future work will explore few-shot and zero-shot transfer learning strategies using large language models (LLMs) as backbones to improve adaptability across domains.

Construct validity. The construction of sentiment labels in domain-specific texts, particularly financial news, poses an inherent challenge that may introduce annotation noise. To alleviate this concern, a dual-annotator verification process was employed for manually labeled datasets, and inter-annotator agreement (Cohen’s Kappa) was calculated to ensure reliability. The study also adopted validated evaluation metrics (accuracy, precision, recall, and F1-score) that align with prior research, ensuring consistent construct measurement.
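Cohen's Kappa corrects the raw agreement rate between two annotators for the agreement expected by chance. The sketch below shows the standard computation for two label lists; the function name and the example labels in the usage note are hypothetical and are not drawn from the annotated datasets.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's Kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: product of the two annotators' marginal label frequencies.
    expected = sum(
        freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()
    ) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)
```

For instance, with annotator A giving `["pos", "pos", "neg", "neg"]` and annotator B giving `["pos", "neg", "neg", "neg"]`, the observed agreement is 0.75, the chance agreement is 0.5, and the resulting Kappa is 0.5.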

Internal validity. The superior performance of the proposed model could partially stem from the strong pre-trained backbone (BERT-base), rather than solely from the newly introduced multi-granularity modules. All baseline models (including BERT and RoBERTa) were fine-tuned using widely adopted parameter settings and verified through multiple runs to ensure stable and fair comparisons. Furthermore, standardized preprocessing and consistent data augmentation procedures were applied across datasets to minimize potential biases.

Conclusion validity. Although all experiments were repeated five times, random variations in initialization or data splitting may still influence the reported results. To strengthen statistical stability, both mean and standard deviation were reported in the results tables, and statistical significance tests (e.g., paired t-test and ANOVA) were performed to confirm that the observed improvements are meaningful. These measures collectively ensure the robustness and credibility of the conclusions drawn from the experiments.
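The reporting procedure described above (mean, standard deviation, and a paired t-test over repeated runs) can be sketched with the standard library. The scores below are hypothetical placeholders, not results from this study, and the function names are illustrative; the sketch returns the t statistic and degrees of freedom and leaves the p-value lookup to a statistics table or package.

```python
import math
import statistics


def summarize(scores):
    """Return the mean and sample standard deviation of repeated runs."""
    return statistics.mean(scores), statistics.stdev(scores)


def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for matched runs."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need at least two matched runs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = statistics.stdev(diffs)  # zero if every difference is identical
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical F1 scores from five matched runs (placeholders only).
proposed = [0.90, 0.91, 0.89, 0.92, 0.90]
baseline = [0.88, 0.88, 0.87, 0.89, 0.89]
t_stat, dof = paired_t(proposed, baseline)
```

With five runs there are four degrees of freedom, for which the two-sided critical value at the 0.05 level is 2.776; a statistic above that threshold would indicate a significant difference for these matched runs. Pairing the runs (same seed or same split for both models) is what justifies the paired form of the test.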

Reliability. To ensure transparency and reproducibility, all implementation details, including preprocessing scripts, model configurations, and parameter settings, will be publicly released in a GitHub repository after publication acceptance.

6. Conclusions

Sentiment analysis has long been regarded as a key topic in NLP and IR, with substantial practical value in financial news, user reviews, and public opinion monitoring. However, existing approaches often struggle with semantic dilution, dispersed sentiment cues, and insufficient modeling of contextual dependencies when confronted with long documents and structurally complex professional discourse. To address these challenges, a transformer-based multi-granularity attention framework is proposed, in which sentence-level and document-level representations are jointly modeled, and different-dimensional embedding strategies together with cross-granularity contrastive fusion are employed to achieve unified modeling of local and global sentiment signals with consistency optimization. This design enhances the expressive capacity of cross-level semantic representations in theory and demonstrates superior empirical performance. Experiments show that the proposed approach significantly outperforms representative baselines, including SVM, LSTM, BERT, RoBERTa, and HAN, across multiple metrics such as accuracy, precision, recall, F1-score, and AUC. For example, on the financial forum reviews dataset, the model clearly surpasses the other baselines in accuracy, precision, and recall (Table 3); on the financial product user reviews dataset, it achieves the best accuracy, precision, recall, and AUC (Table 4), demonstrating clear advantages in short-text sentiment analysis; on the financial news dataset, it attains the best accuracy and AUC (Table 5), further confirming adaptability and robustness in professional texts. Ablation results also verify that the multi-granularity transformer architecture, different-dimensional embedding strategy, and cross-granularity fusion module each contribute critically to overall gains.
The principal contribution lies in a multi-granularity sentiment framework that balances local and global modeling, integrates static and dynamic embeddings in an innovative manner, and introduces a cross-granularity contrastive fusion mechanism, effectively alleviating the difficulties of sentiment recognition in long and domain-specific texts. Future research can proceed in several directions. One avenue is the exploration of few-shot or zero-shot learning strategies by combining transfer learning with self-supervised approaches to mitigate the shortage of labeled data in specialized domains. Another promising direction is the extension to multimodal sentiment recognition, where text can be jointly modeled with speech intonation, facial expressions, or structured data from financial markets, thereby improving comprehensiveness and accuracy. Moreover, optimization efforts could include the incorporation of lightweight attention mechanisms and knowledge distillation strategies to reduce computational costs and enable deployment in mobile or real-time applications. Through such advancements, the practical value and generalizability of the model in real-world scenarios will be further enhanced.

Author Contributions

Conceptualization, W.H., S.L., S.Z. and Y.Z.; Data curation, Y.H., Z.L. and X.D.; Formal analysis, Y.W. and X.D.; Funding acquisition, Y.Z.; Investigation, Y.W.; Methodology, W.H., S.L. and S.Z.; Project administration, Y.Z.; Resources, Y.H., Z.L. and X.D.; Software, W.H., S.L. and S.Z.; Supervision, Y.Z.; Validation, Y.H. and Y.W.; Visualization, Z.L.; Writing—original draft, W.H., S.L., S.Z., Y.H., Y.W., Z.L., X.D. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 61202479.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wankhade, M.; Rao, A.C.S.; Kulkarni, C. A survey on sentiment analysis methods, applications, and challenges. Artif. Intell. Rev. 2022, 55, 5731–5780. [Google Scholar] [CrossRef]

- Anderson, T.; Sarkar, S.; Kelley, R. Analyzing public sentiment on sustainability: A comprehensive review and application of sentiment analysis techniques. Nat. Lang. Process. J. 2024, 8, 100097. [Google Scholar] [CrossRef]

- Jim, J.R.; Talukder, M.A.R.; Malakar, P.; Kabir, M.M.; Nur, K.; Mridha, M.F. Recent advancements and challenges of NLP-based sentiment analysis: A state-of-the-art review. Nat. Lang. Process. J. 2024, 6, 100059. [Google Scholar] [CrossRef]

- Abimbola, B.; de La Cal Marin, E.; Tan, Q. Enhancing legal sentiment analysis: A convolutional neural network–long short-term memory document-level model. Mach. Learn. Knowl. Extr. 2024, 6, 877–897. [Google Scholar] [CrossRef]

- Denecke, K.; Reichenpfader, D. Sentiment analysis of clinical narratives: A scoping review. J. Biomed. Inform. 2023, 140, 104336. [Google Scholar] [CrossRef]

- Xing, F. Designing heterogeneous llm agents for financial sentiment analysis. ACM Trans. Manag. Inf. Syst. 2025, 16, 1–24. [Google Scholar] [CrossRef]

- Du, K.; Xing, F.; Mao, R.; Cambria, E. Financial sentiment analysis: Techniques and applications. ACM Comput. Surv. 2024, 56, 1–42. [Google Scholar] [CrossRef]

- Arauco Ballesteros, M.A.; Martínez Miranda, E.A. Stock market forecasting using a neural network through fundamental indicators, technical indicators and market sentiment analysis. Comput. Econ. 2025, 66, 1715–1745. [Google Scholar] [CrossRef]

- Pal, P.; Wang, Z.; Zhu, X.; Chew, J.; Pruś, K.; Wei, X. AI-Based Credit Risk Assessment and Intelligent Matching Mechanism in Supply Chain Finance. J. Theory Pract. Econ. Manag. 2025, 2, 1–9. [Google Scholar]

- Harper, C. From Black Swans to Early Warnings: Integrating Deep Learning in Systemic Risk Detection Across Global Economies. 2025. Available online: https://www.researchgate.net/publication/391902032_From_Black_Swans_to_Early_Warnings_Integrating_Deep_Learning_in_Systemic_Risk_Detection_Across_Global_Economies (accessed on 22 October 2025).

- Hassan, A.; Mahmood, A. Deep learning approach for sentiment analysis of short texts. In Proceedings of the 2017 3rd International Conference on Control, Automation and Robotics (ICCAR), Nagoya, Japan, 22–24 April 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 705–710. [Google Scholar]

- Dong, Z.; Tang, T.; Li, L.; Zhao, W.X. A survey on long text modeling with transformers. arXiv 2023, arXiv:2302.14502. [Google Scholar] [CrossRef]

- Huo, Y.; Liu, M.; Zheng, J.; He, L. MGAFN-ISA: Multi-Granularity Attention Fusion Network for Implicit Sentiment Analysis. Electronics 2024, 13, 4905. [Google Scholar] [CrossRef]

- Liu, Y.; Wang, J.; Long, L.; Li, X.; Ma, R.; Wu, Y.; Chen, X. A Multi-Level Sentiment Analysis Framework for Financial Texts. arXiv 2025, arXiv:2504.02429. [Google Scholar] [CrossRef]

- Sheng, D.; Yuan, J. An efficient long Chinese text sentiment analysis method using BERT-based models with BiGRU. In Proceedings of the 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Dalian, China, 5–7 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 192–197. [Google Scholar]

- Chen, A.; Wei, Y.; Le, H.; Zhang, Y. Learning by teaching with ChatGPT: The effect of teachable ChatGPT agent on programming education. Br. J. Educ. Technol. 2024; early view. [Google Scholar]

- Zhang, L.; Zhang, Y.; Ma, X. A new strategy for tuning ReLUs: Self-adaptive linear units (SALUs). In Proceedings of the ICMLCA 2021; 2nd International Conference on Machine Learning and Computer Application, Shenyang, China, 17–19 December 2021; VDE: Offenbach am Main, Germany, 2021; pp. 1–8. [Google Scholar]

- Perumal Chockalingam, S.; Thambusamy, V. Enhancing Sentiment Analysis of User Response for COVID-19 Vaccinations Tweets Using SentiWordNet-Adjusted VADER Sentiment Analysis (SAVSA): A Hybrid Approach. In Proceedings of the International Conference on Intelligent Systems Design and Applications, Olten, Switzerland, 11–13 December 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 437–451. [Google Scholar]

- Lyu, S.; Ren, X.; Du, Y.; Zhao, N. Detecting depression of Chinese microblog users via text analysis: Combining Linguistic Inquiry Word Count (LIWC) with culture and suicide related lexicons. Front. Psychiatry 2023, 14, 1121583. [Google Scholar] [CrossRef] [PubMed]

- Tabany, M.; Gueffal, M. Sentiment analysis and fake amazon reviews classification using SVM supervised machine learning model. J. Adv. Inf. Technol. 2024, 15, 49–58. [Google Scholar] [CrossRef]

- Mishra, P.; Patil, S.A.; Shehroj, U.; Aniyeri, P.; Khan, T.A. Twitter Sentiment Analysis using Naive Bayes Algorithm. In Proceedings of the 2022 3rd International Informatics and Software Engineering Conference (IISEC), Ankara, Turkey, 15–16 December 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Mutmainah, S.; Citra, E.E. Improving the Accuracy of Social Media Sentiment Classification with the Combination of Tf-Idf Method and Random Forest Algorithm. Journix J. Inform. Comput. 2025, 1, 30–40. [Google Scholar] [CrossRef]

- Kumaragurubaran, T.; Pandi, S.S.; Naresh, G.; Ragavender, T. Navigating Public Opinion: Enhancing Sentiment Analysis on Social Media with CNN and SVM. In Proceedings of the 2024 5th International Conference for Emerging Technology (INCET), Belgaum, India, 24–26 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5. [Google Scholar]

- Gupta, S.; Singhal, N.; Hundekari, S.; Upreti, K.; Gautam, A.; Kumar, P.; Verma, R. Aspect Based Feature Extraction in Sentiment Analysis using Bi-GRU-LSTM Model. J. Mob. Multimed. 2024, 20, 935–960. [Google Scholar] [CrossRef]

- Chorowski, J.K.; Bahdanau, D.; Serdyuk, D.; Cho, K.; Bengio, Y. Attention-based Models for Speech Recognition. Adv. Neural Inf. Process. Syst. 2015, 28, 48–62. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 960–975. [Google Scholar]

- Prasad Kumar, S.N.; Gangurde, R.; Mohite, U.L. RMHAN: Random Multi-Hierarchical Attention Network with RAG-LLM-Based Sentiment Analysis Using Text Reviews. Int. J. Comput. Intell. Appl. 2025, 24, 2550007.

- Cambria, E.; Zhang, X.; Mao, R.; Chen, M.; Kwok, K. SenticNet 8: Fusing emotion AI and commonsense AI for interpretable, trustworthy, and explainable affective computing. In Proceedings of the International Conference on Human-Computer Interaction, Washington, DC, USA, 29 June–4 July 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 197–216.

- Li, Y.; Su, Z.; Chen, K.; Jiang, K. Aspect-based Sentiment Analysis via Knowledge Enhancement. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–8.

- Yuan, B. FinBERT-QA: Financial Question Answering with pre-trained BERT Language Models. arXiv 2025, arXiv:2505.00725.

- Chen, T.; Zhang, Y.; Yu, G.; Zhang, D.; Zeng, L.; He, Q.; Ao, X. EFSA: Towards event-level financial sentiment analysis. arXiv 2024, arXiv:2404.08681.

- Alslaity, A.; Orji, R. Machine learning techniques for emotion detection and sentiment analysis: Current state, challenges, and future directions. Behav. Inf. Technol. 2024, 43, 139–164.

- Koroteev, M.V. BERT: A review of applications in natural language processing and understanding. arXiv 2021, arXiv:2103.11943.

- Cheruku, R.; Hussain, K.; Kavati, I.; Reddy, A.M.; Reddy, K.S. Sentiment classification with modified RoBERTa and recurrent neural networks. Multimed. Tools Appl. 2024, 83, 29399–29417.

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Datta, S.K.; Shaikh, M.A.; Srihari, S.N.; Gao, M. Soft attention improves skin cancer classification performance. In Proceedings of the International Workshop on Interpretability of Machine Intelligence in Medical Image Computing, Strasbourg, France, 27 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 13–23.

- Papadopoulos, A.; Korus, P.; Memon, N. Hard-attention for scalable image classification. Adv. Neural Inf. Process. Syst. 2021, 34, 14694–14707.

- Kovalyk, Y.; Robinson, K.; Langthorne, A.; Whitestone, P.; Goldsborough, R. Dynamic hierarchical attention networks for contextual embedding refinement. TechRxiv 2024.

- Manmothe, S.R.; Jadhav, J.R. Integrating Multimodal Data for Enhanced Analysis and Understanding: Techniques for Sentiment Analysis and Cross-Modal Retrieval. J. Adv. Zool. 2024, 45, 22.

- Khan, S.S.; Mondal, P.K.; Shaqib, S.; Ahmed, N.; Prova, N.N.I.; Sattar, A. Performance Analysis of LSTM and Bi-LSTM Model with Different Optimizers in Bangla Sentiment Analysis. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7.

- Wu, Y.; Jin, Z.; Shi, C.; Liang, P.; Zhan, T. Research on the application of deep learning-based BERT model in sentiment analysis. arXiv 2024, arXiv:2403.08217.

- Rahman, M.M.; Shiplu, A.I.; Watanobe, Y.; Alam, M.A. RoBERTa-BiLSTM: A context-aware hybrid model for sentiment analysis. IEEE Trans. Emerg. Top. Comput. Intell. 2025; early access.

- Wohlin, C.; Runeson, P.; Höst, M.; Ohlsson, M.C.; Regnell, B.; Wesslén, A. Experimentation in Software Engineering; Springer: Berlin/Heidelberg, Germany, 2012; Volume 236.

- Antony, J. Design of Experiments for Engineers and Scientists; Elsevier: Amsterdam, The Netherlands, 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).