2.1. Data Preprocessing

All data preprocessing steps in this study adhered to the principle of forward validation to prevent information leakage. First, the training and test sets were divided in strict chronological order, so that every test data point occurred after the last training time point and no test data could influence the training process. Second, all preprocessing parameters, such as the IQR ranges, ARIMA model coefficients, and feature statistics, were estimated from the training set only. Finally, the test set was processed in a forward-only manner, using only the parameters determined from the training set, so that the model never had access to future information. Together, these measures constitute the data leakage prevention protocol of this study and support a fair and credible model evaluation.
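The protocol above can be illustrated with a minimal sketch. The function names, the toy power values, and the choice of standardization as the preprocessing step are illustrative assumptions, not the implementation used in this study; the point is only that parameters are fitted on the training part and then reused unchanged on the test part.

```python
from statistics import mean, stdev

def chronological_split(series, n_test):
    """Split a time-ordered sequence without shuffling: the last n_test points form the test set."""
    cut = len(series) - n_test
    return series[:cut], series[cut:]

def fit_standardizer(train):
    """Estimate scaling parameters from the training set only."""
    return mean(train), stdev(train)

def apply_standardizer(values, params):
    """Apply previously fitted parameters; nothing is re-estimated here."""
    mu, sigma = params
    return [(v - mu) / sigma for v in values]

# Hypothetical wind power readings in chronological order (MW)
power = [3.1, 2.8, 3.5, 4.0, 3.7, 4.4, 5.0, 4.6, 5.2, 6.1]

train, test = chronological_split(power, n_test=2)
params = fit_standardizer(train)                  # training data only
train_scaled = apply_standardizer(train, params)
test_scaled = apply_standardizer(test, params)    # reuses training parameters
```

The same pattern would apply to the IQR limits and the ARIMA coefficients: each is estimated once on the training segment and then held fixed.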

2.1.1. Abnormal Data Processing

This article uses the interquartile range (IQR) algorithm to distinguish normal sample data from anomalous sample data. As shown in Figure 2, the wind power data are sorted by size and divided into four equal parts, and the values at the boundaries between these parts are referred to as quartiles. The spacing between the quartiles can describe the spread of a data sequence, including a skewed distribution. Therefore, the IQR is considered to be accurate and stable.

The first, second, and third quartiles are the wind power values located at these boundary points. The wind power values decrease from the upper limit to the lower limit, and the IQR is the difference between the third and the first quartile.

By arranging the wind power data in ascending order, the sample $X = [x_1, x_2, \dots, x_n]$ is obtained. The quartiles are calculated as follows [22]:

Calculation of the median value $Q_2$:

$$Q_2 = \begin{cases} x_{k+1}, & n = 2k + 1 \\ \dfrac{x_k + x_{k+1}}{2}, & n = 2k \end{cases} \quad (1)$$

In the formula, $x_n$ is the characteristic data sample of the wind power for the $n$th instance, and $k$ is a natural number.

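If the total number of feature data $n$ is even, the ascending sample $X$ is split into two datasets with the median $Q_2$ as the limit ($Q_2$ is independent and not included in the two datasets). The medians $Q_2'$ and $Q_2''$ of the two split datasets are then calculated. According to the definition of quartiles, the first quartile can be described as $Q_1 = Q_2'$ and the third quartile can be described as $Q_3 = Q_2''$.

In the following, $x_i$ denotes the $i$-th value of the ascending sample. When the total number of wind power data $n = 4k + 3$:

$$Q_1 = 0.75\,x_{k+1} + 0.25\,x_{k+2}, \quad Q_3 = 0.25\,x_{3k+2} + 0.75\,x_{3k+3} \quad (2)$$

When the total number of wind power data $n = 4k + 1$:

$$Q_1 = 0.25\,x_{k} + 0.75\,x_{k+1}, \quad Q_3 = 0.75\,x_{3k+1} + 0.25\,x_{3k+2} \quad (3)$$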

With the first quartile $Q_1$ and the third quartile $Q_3$ calculated through the aforementioned process, the IQR can be obtained as:

$$IQR = Q_3 - Q_1 \quad (4)$$

According to the IQR, the limits of the abnormal data values within the sample can be determined:

$$F_l = Q_1 - 1.5\,IQR, \quad F_u = Q_3 + 1.5\,IQR \quad (5)$$

In Equation (5), $F_l$ represents the lower limit of the sequence and $F_u$ represents the upper limit of the sequence. Any data that falls outside the range from the lower limit to the upper limit is considered abnormal data.
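The quartile and limit calculations can be sketched as follows. This is a minimal illustration under two assumptions that the text does not confirm explicitly: the odd-length cases use the common weighted rule for $n = 4k + 1$ and $n = 4k + 3$, and the limits use the conventional factor of 1.5. The function names and the toy data are hypothetical.

```python
from statistics import median

def quartiles(values):
    """Return (Q1, Q3) of a sample using the even / 4k+1 / 4k+3 case split."""
    x = sorted(values)
    n = len(x)
    if n < 2:
        raise ValueError("need at least two samples")
    if n % 2 == 0:
        # Even n: Q1 and Q3 are the medians of the lower and upper halves
        half = n // 2
        return median(x[:half]), median(x[half:])
    k, remainder = divmod(n, 4)
    if remainder == 1:
        # n = 4k + 1 (1-based indices x_k, x_{k+1}, x_{3k+1}, x_{3k+2})
        q1 = 0.25 * x[k - 1] + 0.75 * x[k]
        q3 = 0.75 * x[3 * k] + 0.25 * x[3 * k + 1]
    else:
        # n = 4k + 3 (1-based indices x_{k+1}, x_{k+2}, x_{3k+2}, x_{3k+3})
        q1 = 0.75 * x[k] + 0.25 * x[k + 1]
        q3 = 0.25 * x[3 * k + 1] + 0.75 * x[3 * k + 2]
    return q1, q3

def iqr_limits(values, factor=1.5):
    """Lower and upper limits outside which a sample is treated as abnormal."""
    q1, q3 = quartiles(values)
    iqr = q3 - q1
    return q1 - factor * iqr, q3 + factor * iqr

# Hypothetical sample with one obviously abnormal value
power = list(range(1, 10)) + [100]
lower, upper = iqr_limits(power)
outliers = [v for v in power if v < lower or v > upper]
```

In line with the leakage protocol of Section 2.1, the limits would be computed on the training segment and then applied unchanged to the test segment.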

After the outlier data points were removed, the meteorological time series and the power time series exhibited gaps. To ensure the continuity and validity of the series, this paper employs cubic spline interpolation to fill in the missing values. On each interval between adjacent known points, the interpolant is a cubic polynomial, as shown by Equation (6) [23]:

$$S_i(t) = a_i + b_i (t - t_i) + c_i (t - t_i)^2 + d_i (t - t_i)^3 \quad (6)$$

where $t \in [t_i, t_{i+1}]$.

The effect of removing and filling outliers in the air pressure data is shown in Figure 3.
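The gap-filling step can be sketched with a small natural cubic spline. The natural boundary condition (zero second derivative at both ends), the function names, and the toy pressure values are assumptions made for illustration; the text does not state which boundary condition was used.

```python
from bisect import bisect_right

def natural_cubic_spline(xs, ys):
    """Return S(x), a natural cubic spline through the points (xs[i], ys[i])."""
    n = len(xs) - 1                       # number of intervals
    if n < 1:
        raise ValueError("need at least two points")
    h = [xs[i + 1] - xs[i] for i in range(n)]
    m = [0.0] * (n + 1)                   # second derivatives; both ends stay 0
    if n >= 2:
        diag = [2.0 * (h[i - 1] + h[i]) for i in range(1, n)]
        rhs = [6.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])
               for i in range(1, n)]
        for j in range(1, n - 1):         # forward elimination (Thomas algorithm)
            w = h[j] / diag[j - 1]
            diag[j] -= w * h[j]
            rhs[j] -= w * rhs[j - 1]
        m[n - 1] = rhs[-1] / diag[-1]
        for j in range(n - 3, -1, -1):    # back substitution
            m[j + 1] = (rhs[j] - h[j + 1] * m[j + 2]) / diag[j]

    def spline(x):
        # Locate the interval containing x, clamped to the first/last interval
        i = min(max(bisect_right(xs, x) - 1, 0), n - 1)
        a, b = xs[i + 1] - x, x - xs[i]
        return ((m[i] * a ** 3 + m[i + 1] * b ** 3) / (6.0 * h[i])
                + (ys[i] / h[i] - m[i] * h[i] / 6.0) * a
                + (ys[i + 1] / h[i] - m[i + 1] * h[i] / 6.0) * b)

    return spline

def fill_gaps(times, values):
    """Replace None entries with spline values fitted on the remaining points."""
    known = [(t, v) for t, v in zip(times, values) if v is not None]
    spline = natural_cubic_spline([t for t, _ in known], [v for _, v in known])
    return [v if v is not None else spline(t) for t, v in zip(times, values)]

# Hypothetical air pressure readings (hPa); None marks removed outliers
pressure = [1013.0, 1012.6, None, 1011.9, 1011.7, None, 1011.6]
filled = fill_gaps(list(range(len(pressure))), pressure)
```

Known values are returned unchanged; only the gaps left by outlier removal are replaced.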

2.1.2. Autoregressive Integrated Moving Average

Upon refining the initial dataset through outlier removal and missing data imputation, the ARIMA model is employed to improve data stability and forecast stability by modeling and transforming the processed time series. As a classical statistical approach, ARIMA is extensively used in analyzing non-stationary time series. Its core principle involves applying differencing to remove trends and seasonal components, thus stabilizing the series, followed by modeling the linear dependency structure using autoregressive (AR) and moving average (MA) terms.

Specifically, while the data processed by cubic spline interpolation is continuous and smooth at the data points, it may still retain local fluctuations and trend drift, which could reduce the efficiency of deep neural networks in recognizing temporal structures. As a linear time series modeling tool, the ARIMA model extracts structural information from the sequence from a statistical perspective, so that, under the premise of approximate stationarity, the subsequent input features align more closely with the dynamic pattern perception requirements of the following LSTM network. Thus, ARIMA processing not only serves as a means of noise reduction but also plays a role in dimensionality reduction and structural reorganization in the feature space, providing a more expressive input for wind power forecasting.

The ARIMA model is a statistical methodology that uses historical time series data to identify underlying patterns and forecast future values [24]. It is denoted as ARIMA($p$, $d$, $q$), where $p$ signifies the order of the autoregressive component, $q$ represents the order of the moving average component, and $d$ is the number of differencing operations required to achieve stationarity in the time series. Its mathematical expression is given as Equation (7) [23]:

$$\hat{y}_t = \mu + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \dots + \phi_p y_{t-p} + \theta_1 e_{t-1} + \dots + \theta_q e_{t-q} \quad (7)$$

where $\hat{y}_t$ is the forecasted value, $\mu$ is the constant term, $y_{t-1}$, $y_{t-2}$, $\dots$, $y_{t-p}$ are the lags of $y$ up to $p$ lags, $e_{t-1}, \dots, e_{t-q}$ are the lagged prediction errors up to $q$ lags, $\phi_1, \dots, \phi_p$ are the lag coefficients, and $\theta_1, \dots, \theta_q$ are the error coefficients.

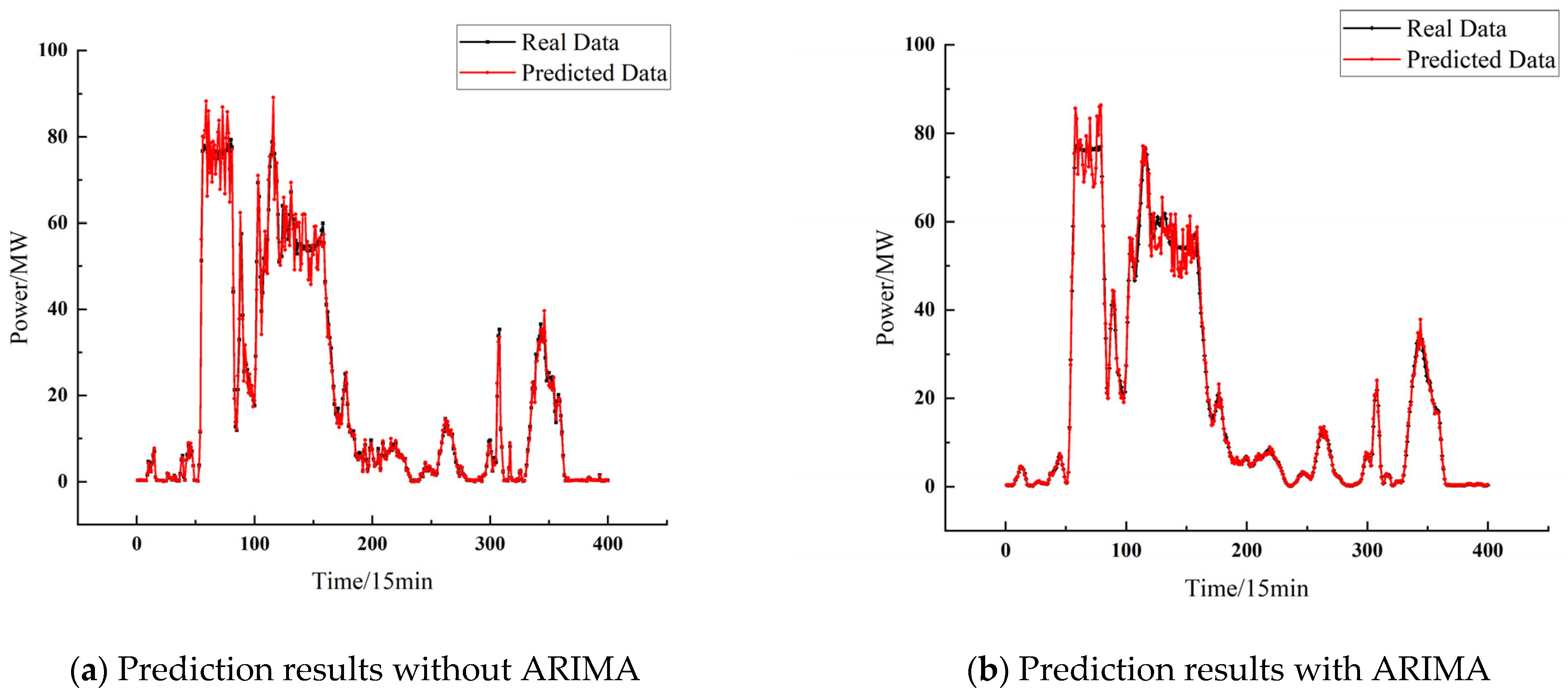

Introducing this model during the preprocessing stage reduces random fluctuations in the data that could interfere with subsequent model training, and it also enhances the stability and generalization capability of the neural network when learning temporal dependencies. A comparison of the power predictions before and after ARIMA noise reduction is shown in Figure 4.

This study employed the ARIMA model as a key data preprocessing step to improve the stationarity and predictability of the wind power series. Through systematic model identification and parameter estimation, the ARIMA(2,1,0) model structure was determined to be optimal [25]. Stationarity was tested with the Augmented Dickey–Fuller (ADF) test. The ADF statistic of the original series was −2.34 (p-value = 0.16), indicating nonstationary characteristics. After first-order differencing, the series reached stationarity, with the ADF statistic decreasing to −6.78 (p-value = 0.0001). Based on this, the differencing order d = 1 was determined.

The selection of model order was guided by the analysis of the Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF). The original series’ ACF displayed a slow decay, while its PACF showed a significant cut-off after the second lag, suggesting an appropriate autoregressive order of p = 2. The ARIMA (2,1,0) configuration was further validated through model comparison using the Akaike Information Criterion (AIC), achieving a superior AIC value of 1245.2.
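The structure of an ARIMA(2,1,0) model can be sketched as one differencing step followed by a second-order autoregression on the differenced series. The sketch below estimates the two AR coefficients by conditional least squares and omits the constant term; both choices, together with the function names and the toy series, are simplifying assumptions and not the estimation procedure used in this study.

```python
def difference(series):
    """First-order differencing (d = 1)."""
    return [b - a for a, b in zip(series, series[1:])]

def fit_ar2(z):
    """Least-squares estimate of (phi1, phi2) in z_t = phi1*z_{t-1} + phi2*z_{t-2} + e_t."""
    s11 = s12 = s22 = b1 = b2 = 0.0
    for t in range(2, len(z)):
        x1, x2, y = z[t - 1], z[t - 2], z[t]
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        b1 += x1 * y
        b2 += x2 * y
    det = s11 * s22 - s12 * s12
    if det == 0:
        raise ValueError("degenerate series: normal equations are singular")
    # Solve the 2x2 normal equations by Cramer's rule
    return (b1 * s22 - b2 * s12) / det, (b2 * s11 - b1 * s12) / det

def forecast_next(series, phi1, phi2):
    """One-step forecast on the original scale: last value plus predicted difference."""
    z = difference(series)
    return series[-1] + phi1 * z[-1] + phi2 * z[-2]

# Hypothetical training segment of a wind power series (MW)
power = [4.2, 4.6, 5.1, 5.0, 4.7, 4.9, 5.4, 5.8, 5.6, 5.3, 5.5, 6.0]
phi1, phi2 = fit_ar2(difference(power))
next_value = forecast_next(power, phi1, phi2)
```

Consistent with Section 2.1, the coefficients would be fitted on the training segment only and then reused on the test segment.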

The application of ARIMA preprocessing substantially optimized the wind power series, reducing its standard deviation from 6.61 MW to 1.24 MW, an 81.2% decrease that effectively suppressed random fluctuations. This noise reduction is also evidenced by the Ljung–Box test, where the Q statistic dropped from 245.6 (p-value < 0.001) to 18.3 (p-value = 0.108), indicating the effective elimination of autocorrelation [26]. The resulting restructured series provides a more regularized input feature space for the subsequent LSTM-BP model.
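For reference, the Ljung–Box Q statistic is $Q = n(n+2)\sum_{k=1}^{h} r_k^2 / (n-k)$, where $r_k$ is the sample autocorrelation at lag $k$. The sketch below computes only the statistic, not its p-value. The number of lags used in this study is not stated, so `max_lag` is a hypothetical parameter, and the input series is a toy example.

```python
def autocorrelation(x, lag):
    """Sample autocorrelation r_k of a series at the given lag (series must not be constant)."""
    n = len(x)
    mu = sum(x) / n
    denom = sum((v - mu) ** 2 for v in x)
    num = sum((x[t] - mu) * (x[t - lag] - mu) for t in range(lag, n))
    return num / denom

def ljung_box_q(x, max_lag):
    """Ljung-Box Q statistic over lags 1..max_lag."""
    n = len(x)
    return n * (n + 2) * sum(
        autocorrelation(x, k) ** 2 / (n - k) for k in range(1, max_lag + 1)
    )

# Hypothetical series values, for illustration only
series = [0.3, -0.1, 0.2, -0.4, 0.1, 0.0, -0.2, 0.3, -0.1, 0.1]
q_stat = ljung_box_q(series, max_lag=3)
```

A large Q relative to the chi-square reference distribution indicates remaining autocorrelation, while a small Q is consistent with its removal.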

To quantitatively evaluate the actual contribution of ARIMA preprocessing to forecasting performance, a rigorous ablation experiment was designed, and the results are shown in Table 2. The full model (including ARIMA) and the ablation model (excluding ARIMA) were compared and validated under the same experimental conditions. The experimental results show that ARIMA preprocessing reduces the model’s mean absolute error (MAE) from 0.72 MW to 0.54 MW in one-step forecasts, a relative improvement of 25.0%, and the root mean square error (RMSE) from 1.25 MW to 0.90 MW, a relative improvement of 28.0%. In multi-step forecasting scenarios, the complete model demonstrates superior error control capabilities.
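The error metrics and the relative improvements quoted above can be reproduced with a few lines. The function names are illustrative; the numerical inputs are the MAE and RMSE values reported in the text.

```python
from math import sqrt

def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean square error."""
    return sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def relative_improvement(baseline, improved):
    """Percentage reduction of an error metric relative to the baseline."""
    return (baseline - improved) / baseline * 100.0

# Values reported for the ablation (without ARIMA) and full (with ARIMA) models
mae_gain = relative_improvement(0.72, 0.54)    # about 25.0 %
rmse_gain = relative_improvement(1.25, 0.90)   # about 28.0 %
```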

2.2. Features Construction and Extraction

The predictive accuracy of a model is largely determined by the quality of its input features. A thorough investigation into the meteorological factors strongly correlated with wind power output is therefore crucial for constructing more effective predictive features. The relevant meteorological features are represented by a matrix $Y = [Y_1, Y_2, \dots, Y_n]^T$, where each $Y_i$ ($i = 1, 2, \dots, n$) is the feature vector at the $i$-th time step. Here, $y_{i,k}$ ($k = 1, 2, \dots, M$) denotes the $k$-th meteorological variable within $Y_i$, $n$ is the total sample size, and $M$ is the number of meteorological features. The feature construction process is described as follows:

1. Original features, $Y = [Y_1, Y_2, \dots, Y_n]^T$.

2. Temporal features, that is, the time corresponding to each sample, decomposed into year, month, day, day of the week, hour, minute, and similar components.

3. Combined features. The constructed combined features comprise both linear and nonlinear types, designed to capture the linear and nonlinear interdependencies among the input variables. The corresponding formulas are given in Equation (8):

$$y^{+}_{i,jk} = y_{i,j} + y_{i,k}, \quad y^{-}_{i,jk} = y_{i,j} - y_{i,k}, \quad y^{\times}_{i,jk} = y_{i,j}\, y_{i,k}, \quad y^{\div}_{i,jk} = y_{i,j} / y_{i,k} \quad (8)$$

In the equation, $j, k = 1, 2, \dots, M$; $y^{+}_{i,jk}$, $y^{-}_{i,jk}$, $y^{\times}_{i,jk}$, and $y^{\div}_{i,jk}$ represent the additive, subtractive, multiplicative, and divisive features of the sample at the $i$-th moment.

4. Statistical features. The data fluctuation within the time window can be represented as follows:

$$\mu_{i,k} = \frac{1}{w} \sum_{t \in W_i} y_{t,k}, \quad \sigma_{i,k} = \sqrt{\frac{1}{w} \sum_{t \in W_i} \left(y_{t,k} - \mu_{i,k}\right)^2}, \quad m_{i,k} = \max_{t \in W_i} y_{t,k} \quad (9)$$

In Equation (9), the terms $\mu_{i,k}$, $\sigma_{i,k}$, and $m_{i,k}$ on the left-hand side represent the average, standard deviation, and maximum value of the $k$-th feature within the time window $W_i$, respectively. The parameter $w$ defines the size of this window.
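The combined and statistical features described above can be sketched as follows. The sketch assumes a trailing window (each window ends at the current time step, so no future values are used) and a population standard deviation; neither detail is stated in the text. The function and variable names are hypothetical.

```python
from statistics import mean, pstdev

def combined_features(row):
    """Pairwise sum, product, differences, and ratios of the variables in one sample."""
    names = list(row)
    out = {}
    for idx, a in enumerate(names):
        for b in names[idx + 1:]:
            # Commutative operations: one feature per unordered pair
            out[f"{a}+{b}"] = row[a] + row[b]
            out[f"{a}*{b}"] = row[a] * row[b]
            # Non-commutative operations: one feature per ordered pair
            for u, v in ((a, b), (b, a)):
                out[f"{u}-{v}"] = row[u] - row[v]
                out[f"{u}/{v}"] = row[u] / row[v] if row[v] != 0 else float("nan")
    return out

def window_statistics(values, w):
    """(mean, std, max) over a trailing window of size w, for each index with a full window."""
    stats = []
    for i in range(w - 1, len(values)):
        window = values[i - w + 1:i + 1]
        stats.append((mean(window), pstdev(window), max(window)))
    return stats

# Hypothetical sample with three meteorological variables
row = {"wind_speed_10m": 6.0, "wind_speed_70m": 8.0, "pressure": 2.0}
features = combined_features(row)             # 3 pairs x 6 operations = 18 features
rolling = window_statistics([1, 2, 3, 4], w=2)
```

With $M$ input variables this produces $2\binom{M}{2}$ commutative and $2M(M-1)$ non-commutative combinations, which is why the feature count grows quadratically.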

According to the correlation analysis shown in Figure 5, wind speed at various heights is, among the original features, a critical factor in predicting actual power generation.

Bayesian optimization is commonly used in machine learning to select optimal parameters. To reduce the computational complexity of the model and find the optimal feature combination, this paper applies Bayesian optimization to the number of features used to extract statistical features (num1) and the number of features used for feature combination (num2). These two parameters constrain the feature dimensionality. A set of candidate parameter combinations $P = [p_1, p_2, \dots, p_q]$ is constructed, where $p_s$ is the $s$-th feature combination and $q$ is the number of feature combinations. The steps for Bayesian optimization parameter tuning are as follows.

1. Gaussian process estimation. A set of $r$ feature combinations $[p_1, p_2, \dots, p_r]$ is selected from the constructed pool, and their corresponding loss values $[L(p_1), L(p_2), \dots, L(p_r)]$ are evaluated, where $L(p_s)$ (for $s = 1, 2, \dots, r$) denotes the loss associated with the $s$-th combination. The sample set $D_r$ is then formed by pairing these parameters with their losses, as defined in Equation (10):

$$D_r = \{(p_1, L(p_1)), (p_2, L(p_2)), \dots, (p_r, L(p_r))\} \quad (10)$$

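The estimated loss function follows a Gaussian distribution. The joint distribution of the loss values $L(p_{1:r})$ is given by Equation (11):

$$L(p_{1:r}) \sim \mathcal{N}(0, K), \quad K = \begin{bmatrix} k(p_1, p_1) & \cdots & k(p_1, p_r) \\ \vdots & \ddots & \vdots \\ k(p_r, p_1) & \cdots & k(p_r, p_r) \end{bmatrix} \quad (11)$$

where $k(\cdot,\cdot)$ is the kernel function and represents the covariance.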

2. Selecting sampling points. The Expected Improvement (EI) function is introduced as an acquisition function to identify new sampling points by comparing the expected loss of a candidate point against the current best. This study employs the Tree-structured Parzen Estimator (TPE) to construct the EI function. Let $p^+$ represent the current optimal parameter set, and let $y^*$ be a threshold value greater than the corresponding loss $L(p^+)$. The mathematical expectation for this process is given by Equation (12):

$$EI_{y^*}(p) = \int_{-\infty}^{y^*} (y^* - y)\, P(y \mid p)\, dy = \int_{-\infty}^{y^*} (y^* - y)\, \frac{P(p \mid y)\, P(y)}{P(p)}\, dy \propto \left(\gamma + \frac{g(p)}{l(p)} (1 - \gamma)\right)^{-1} \quad (12)$$

where $P(\cdot)$ is the probability density function, $P(y \mid p)$ is the probability of $y$ occurring when $p$ occurs, $P(p \mid y)$ is the probability of $p$ occurring when $y$ occurs, and $P(p)$ is the probability of $p$ occurring; $\gamma = P(y < y^*)$ is the distribution weight; $l(p)$ is the probability density function when $y < y^*$; and $g(p)$ is the probability density function when $y \ge y^*$.

The point where the function $EI_{y^*}(p)$ takes its maximum value is used as the sampling point to obtain the new optimal parameter combination $p_{r+1}$, as shown in Equation (13):

$$p_{r+1} = \arg\max_{p} EI_{y^*}(p) \quad (13)$$

where $\arg\max$ returns the parameter that maximizes the mathematical expectation function.

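3. Update the Gaussian distribution of $L(p_s)$. Since the new sample is added, the Gaussian distribution is updated by Equation (14):

$$\begin{bmatrix} L(p_{1:r}) \\ L(p_{r+1}) \end{bmatrix} \sim \mathcal{N}\left(0, \begin{bmatrix} K & \mathbf{k} \\ \mathbf{k}^T & k(p_{r+1}, p_{r+1}) \end{bmatrix}\right), \quad \mathbf{k} = [k(p_{r+1}, p_1), \dots, k(p_{r+1}, p_r)]^T \quad (14)$$

Here $K$ is the covariance matrix of the first $r$ samples, with entries $k(p_i, p_j)$. For the sampling point $p_{r+1}$ obtained from Equation (13), the new distribution of $L(p_{r+1})$ is calculated by Equation (15):

$$L(p_{r+1}) \mid D_r \sim \mathcal{N}(\mu, \sigma^2), \quad \mu = \mathbf{k}^T K^{-1} L(p_{1:r}), \quad \sigma^2 = k(p_{r+1}, p_{r+1}) - \mathbf{k}^T K^{-1} \mathbf{k} \quad (15)$$

where $\mu$ and $\sigma$ are the mean and standard deviation, respectively.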

4. Repeat steps 2 and 3 until the maximum number of iterations is reached, and output the optimal point.
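The iterative procedure above can be illustrated with a heavily simplified, TPE-style search over a discrete parameter grid. This is a sketch under stated assumptions, not the optimizer used in this study: the densities $l$ and $g$ are Laplace-smoothed frequency tables rather than Parzen estimators, candidates are drawn uniformly rather than from $l$, and the search space and the loss function are hypothetical stand-ins for Table 3 and the model fitting error.

```python
import random

def tpe_like_search(loss, space, n_init=5, n_iter=20, gamma=0.25,
                    n_candidates=24, seed=0):
    """Sequential search that scores candidates by (gamma + g/l * (1 - gamma))**-1."""
    rng = random.Random(seed)
    names = list(space)

    def sample():
        return {k: rng.choice(space[k]) for k in names}

    def density(group, p):
        # Laplace-smoothed categorical density, factorized over parameters
        d = 1.0
        for k in names:
            count = sum(1 for q in group if q[k] == p[k])
            d *= (count + 1) / (len(group) + len(space[k]))
        return d

    history = []
    for _ in range(n_init):                      # initial random evaluations
        p = sample()
        history.append((p, loss(p)))

    for _ in range(n_iter):
        ranked = sorted(history, key=lambda item: item[1])
        n_good = max(1, int(gamma * len(ranked)))
        good = [p for p, _ in ranked[:n_good]]   # losses below the threshold y*
        bad = [p for p, _ in ranked[n_good:]]    # losses at or above y*

        def ei_score(p):
            l, g = density(good, p), density(bad, p)
            return 1.0 / (gamma + (g / l) * (1.0 - gamma))

        candidates = [sample() for _ in range(n_candidates)]
        chosen = max(candidates, key=ei_score)   # argmax of the EI surrogate
        history.append((chosen, loss(chosen)))   # update the sample set

    return min(history, key=lambda item: item[1])

# Hypothetical search space and toy loss standing in for the model fitting error
space = {"num1": list(range(1, 11)), "num2": list(range(1, 11))}

def toy_loss(p):
    return (p["num1"] - 3) ** 2 + (p["num2"] - 5) ** 2

best_params, best_loss = tpe_like_search(toy_loss, space, seed=1)
```

In practice each loss evaluation would train and validate the forecasting model with the selected numbers of features, which is why limiting the number of evaluations matters.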

Following the aforementioned feature construction methodology, the initial dataset incorporates air temperature, humidity, and air pressure, together with wind speed and wind direction each measured at four different heights, giving 11 base variables. From each of these base variables, three statistical characteristics were derived, yielding a total of 33 statistical features. For the combined features formed from these 44 original and statistical features, the commutativity of addition and multiplication resulted in $2C_{44}^{2} = 1892$ new features. In contrast, as subtraction and division are non-commutative, they contributed $2A_{44}^{2} = 3784$ additional features. Furthermore, five time-related features were included, bringing the total feature set to 5725. The substantial dimensionality of this set considerably prolongs model training time. To address this, a Bayesian hyperparameter tuning approach was employed to identify the most predictive features and reduce their number, with the parameter search space detailed in Table 3.
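The total of 5725 features can be checked with a short calculation. The breakdown below assumes that the pairwise combinations are taken over the 11 base variables plus the 33 statistical features (44 inputs in total); this assumption is not stated explicitly in the text, but it reproduces the reported total.

```python
from math import comb, perm

n_base = 3 + 4 + 4                 # temperature, humidity, pressure + 4 wind speeds + 4 wind directions
n_stat = 3 * n_base                # mean, standard deviation, maximum per base variable
n_inputs = n_base + n_stat         # inputs assumed to enter the pairwise combinations

n_commutative = 2 * comb(n_inputs, 2)      # addition and multiplication: unordered pairs
n_noncommutative = 2 * perm(n_inputs, 2)   # subtraction and division: ordered pairs
n_time = 5

total = n_inputs + n_commutative + n_noncommutative + n_time
```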

A Bayesian optimization method was used to select features. This method determines the degree of influence of a feature on wind power based on the magnitude of its effect on the model fitting error. The meteorological features that minimize the model fitting error were selected, as shown in Table 4.