Innovations in Robot-Assisted Surgery for Genitourinary Cancers: Emerging Technologies and Clinical Applications

Abstract

1. Introduction

2. Integration of Artificial Intelligence (AI) in Robotic Surgery

2.1. Machine Learning in Robotic Surgery

2.2. Enhancing Surgical Skill Development

2.3. AI for Postoperative Predictions

2.4. Addressing Limitations in Haptic Feedback

3. Advancements in Imaging and Augmented Reality (AR)

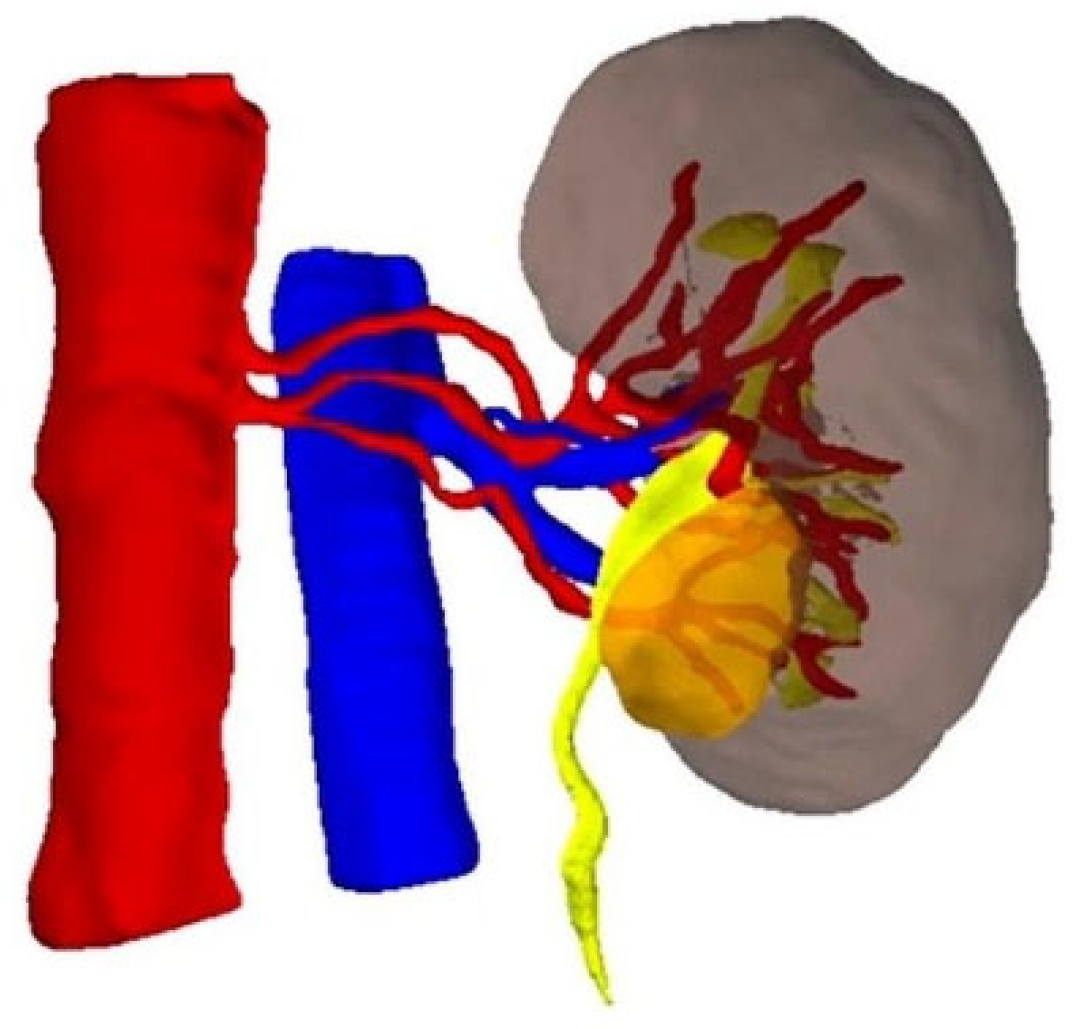

3.1. Three-Dimensional (3D) Virtual Models for Surgical Planning

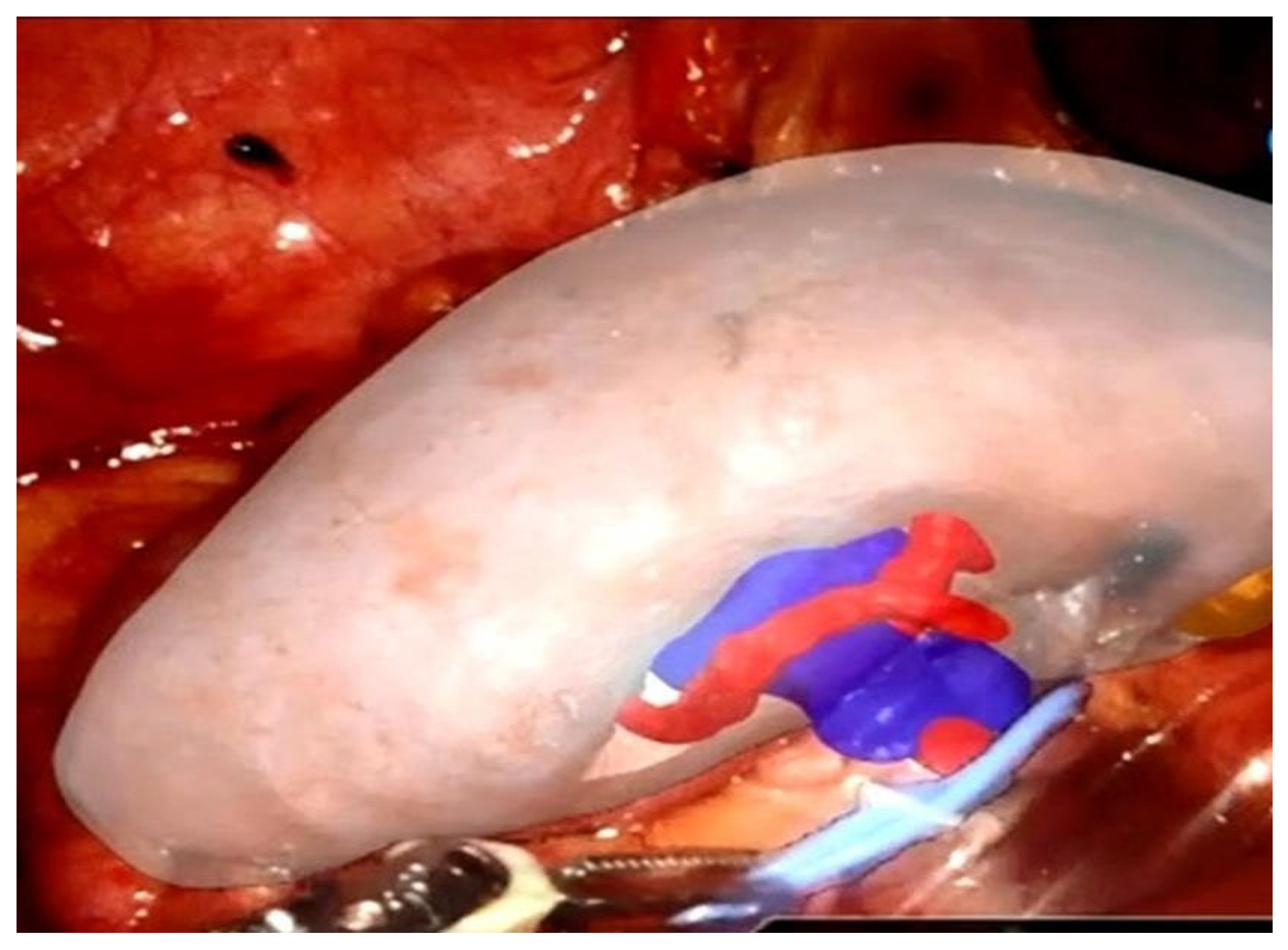

3.2. Augmented Reality in Intraoperative Navigation

3.3. Immersive Surgical Planning in the Metaverse

3.4. Limitations and Future Directions

4. Real-Time Tissue Recognition and Margin Assessment

4.1. Frozen Section and the NeuroSAFE Technique

- Better postoperative erectile function (mean IIEF-5 difference: +3.2);

- Improved early continence at 3 months;

- Increased nerve-sparing rates, particularly in cases initially deemed unsuitable for bilateral preservation.

4.2. Ex Vivo Imaging Technologies

4.3. In Vivo Optical and Spectroscopic Techniques

4.4. Indocyanine Green (ICG) and Fluorescence-Guided Partial Nephrectomy

4.5. Augmented Reality and Artificial Intelligence for Margin Guidance

5. Single-Port vs. Multi-Port Approaches in Robot-Assisted Urologic Oncology

The Emergence of Single-Port Robotic Surgery

6. Telesurgery and the Future of Remote Robotic Urology

7. Future Directions

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mikhail, D.; Sarcona, J.; Mekhail, M.; Richstone, L. Urologic Robotic Surgery. Surg. Clin. N. Am. 2020, 100, 361–378. [Google Scholar] [CrossRef] [PubMed]

- Ilic, D.; Evans, S.M.; Allan, C.A.; Jung, J.H.; Murphy, D.; Frydenberg, M. Laparoscopic and robotic-assisted versus open radical prostatectomy for the treatment of localised prostate cancer. Cochrane Database Syst. Rev. 2017, 9, CD009625. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Bellos, T.; Manolitsis, I.; Katsimperis, S.; Juliebø-Jones, P.; Feretzakis, G.; Mitsogiannis, I.; Varkarakis, I.; Somani, B.K.; Tzelves, L. Artificial Intelligence in Urologic Robotic Oncologic Surgery: A Narrative Review. Cancers 2024, 16, 1775. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Nosrati, M.S.; Amir-Khalili, A.; Peyrat, J.M.; Abinahed, J.; Al-Alao, O.; Al-Ansari, A.; Abugharbieh, R.; Hamarneh, G. Endoscopic scene labelling and augmentation using intraoperative pulsatile motion and colour appearance cues with preoperative anatomical priors. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1409–1418. [Google Scholar] [CrossRef] [PubMed]

- Cheikh Youssef, S.; Hachach-Haram, N.; Aydin, A.; Shah, T.T.; Sapre, N.; Nair, R.; Rai, S.; Dasgupta, P. Video labelling robot-assisted radical prostatectomy and the role of artificial intelligence (AI): Training a novice. J. Robot. Surg. 2023, 17, 695–701. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Klén, R.; Salminen, A.P.; Mahmoudian, M.; Syvänen, K.T.; Elo, L.L.; Boström, P.J. Prediction of complication related death after radical cystectomy for bladder cancer with machine learning methodology. Scand. J. Urol. 2019, 53, 325–331. [Google Scholar] [CrossRef] [PubMed]

- Hung, A.J.; Chen, J.; Che, Z.; Nilanon, T.; Jarc, A.; Titus, M.; Oh, P.J.; Gill, I.S.; Liu, Y. Utilizing Machine Learning and Automated Performance Metrics to Evaluate Robot-Assisted Radical Prostatectomy Performance and Predict Outcomes. J. Endourol. 2018, 32, 438–444. [Google Scholar] [CrossRef] [PubMed]

- Hung, A.J.; Chen, J.; Ghodoussipour, S.; Oh, P.J.; Liu, Z.; Nguyen, J.; Purushotham, S.; Gill, I.S.; Liu, Y. A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int. 2019, 124, 487–495. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Dai, Y.; Abiri, A.; Pensa, J.; Liu, S.; Paydar, O.; Sohn, H.; Sun, S.; Pellionisz, P.A.; Pensa, C.; Dutson, E.P.; et al. Biaxial sensing suture breakage warning system for robotic surgery. Biomed. Microdevices 2019, 21, 10. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Piana, A.; Gallioli, A.; Amparore, D.; Diana, P.; Territo, A.; Campi, R.; Gaya, J.M.; Guirado, L.; Checcucci, E.; Bellin, A.; et al. Three-dimensional Augmented Reality-guided Robotic-assisted Kidney Transplantation: Breaking the Limit of Atheromatic Plaques. Eur. Urol. 2022, 82, 419–426. [Google Scholar] [CrossRef] [PubMed]

- Roberts, S.; Desai, A.; Checcucci, E.; Puliatti, S.; Taratkin, M.; Kowalewski, K.F.; Rivas, J.G.; Rivero, I.; Veneziano, D.; Autorino, R.; et al. “Augmented reality” applications in urology: A systematic review. Minerva Urol. Nephrol. 2022, 74, 528–537. [Google Scholar] [CrossRef] [PubMed]

- Amparore, D.; Pecoraro, A.; Checcucci, E.; Piramide, F.; Verri, P.; De Cillis, S.; Granato, S.; Angusti, T.; Solitro, F.; Veltri, A.; et al. Three-dimensional Virtual Models’ Assistance During Minimally Invasive Partial Nephrectomy Minimizes the Impairment of Kidney Function. Eur. Urol. Oncol. 2022, 5, 104–108. [Google Scholar] [CrossRef] [PubMed]

- Porpiglia, F.; Checcucci, E.; Amparore, D.; Piramide, F.; Volpi, G.; Granato, S.; Verri, P.; Manfredi, M.; Bellin, A.; Piazzolla, P.; et al. Three-dimensional Augmented Reality Robot-assisted Partial Nephrectomy in Case of Complex Tumours (PADUA ≥10): A New Intraoperative Tool Overcoming the Ultrasound Guidance. Eur. Urol. 2020, 78, 229–238. [Google Scholar] [CrossRef] [PubMed]

- Kobayashi, S.; Cho, B.; Huaulmé, A.; Tatsugami, K.; Honda, H.; Jannin, P.; Hashizume, M.; Eto, M. Assessment of surgical skills by using surgical navigation in robot-assisted partial nephrectomy. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1449–1459. [Google Scholar] [CrossRef] [PubMed]

- Kobayashi, S.; Cho, B.; Mutaguchi, J.; Inokuchi, J.; Tatsugami, K.; Hashizume, M.; Eto, M. Surgical Navigation Improves Renal Parenchyma Volume Preservation in Robot-Assisted Partial Nephrectomy: A Propensity Score Matched Comparative Analysis. J. Urol. 2020, 204, 149–156. [Google Scholar] [CrossRef] [PubMed]

- Sica, M.; Piazzolla, P.; Amparore, D.; Verri, P.; De Cillis, S.; Piramide, F.; Volpi, G.; Piana, A.; Di Dio, M.; Alba, S.; et al. 3D Model Artificial Intelligence-Guided Automatic Augmented Reality Images during Robotic Partial Nephrectomy. Diagnostics 2023, 13, 3454. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Porpiglia, F.; Fiori, C.; Checcucci, E.; Amparore, D.; Bertolo, R. Augmented Reality Robot-assisted Radical Prostatectomy: Preliminary Experience. Urology 2018, 115, 184. [Google Scholar] [CrossRef] [PubMed]

- Porpiglia, F.; Checcucci, E.; Amparore, D.; Autorino, R.; Piana, A.; Bellin, A.; Piazzolla, P.; Massa, F.; Bollito, E.; Gned, D.; et al. Augmented-reality robot-assisted radical prostatectomy using hyper-accuracy three-dimensional reconstruction (HA3D™) technology: A radiological and pathological study. BJU Int. 2019, 123, 834–845. [Google Scholar] [CrossRef] [PubMed]

- Porpiglia, F.; Checcucci, E.; Amparore, D.; Manfredi, M.; Massa, F.; Piazzolla, P.; Manfrin, D.; Piana, A.; Tota, D.; Bollito, E.; et al. Three-dimensional Elastic Augmented-reality Robot-assisted Radical Prostatectomy Using Hyperaccuracy Three-dimensional Reconstruction Technology: A Step Further in the Identification of Capsular Involvement. Eur. Urol. 2019, 76, 505–514. [Google Scholar] [CrossRef] [PubMed]

- Checcucci, E.; Amparore, D.; Volpi, G.; De Cillis, S.; Piramide, F.; Verri, P.; Piana, A.; Sica, M.; Gatti, C.; Alessio, P.; et al. Metaverse Surgical Planning with Three-dimensional Virtual Models for Minimally Invasive Partial Nephrectomy. Eur. Urol. 2024, 85, 320–325. [Google Scholar] [CrossRef] [PubMed]

- Beyer, B.; Schlomm, T.; Tennstedt, P.; Boehm, K.; Adam, M.; Schiffmann, J.; Sauter, G.; Wittmer, C.; Steuber, T.; Graefen, M.; et al. A feasible and time-efficient adaptation of NeuroSAFE for da Vinci robot-assisted radical prostatectomy. Eur. Urol. 2014, 66, 138–144. [Google Scholar] [CrossRef] [PubMed]

- Dinneen, E.; Almeida-Magana, R.; Al-Hammouri, T.; Pan, S.; Leurent, B.; Haider, A.; Freeman, A.; Roberts, N.; Brew-Graves, C.; Grierson, J.; et al. Effect of NeuroSAFE-guided RARP versus standard RARP on erectile function and urinary continence in patients with localised prostate cancer (NeuroSAFE PROOF): A multicentre, patient-blinded, randomised, controlled phase 3 trial. Lancet Oncol. 2025, 26, 447–458. [Google Scholar] [CrossRef] [PubMed]

- Puliatti, S.; Bertoni, L.; Pirola, G.M.; Azzoni, P.; Bevilacqua, L.; Eissa, A.; Elsherbiny, A.; Sighinolfi, M.C.; Chester, J.; Kaleci, S.; et al. Ex vivo fluorescence confocal microscopy: The first application for real-time pathological examination of prostatic tissue. BJU Int. 2019, 124, 469–476. [Google Scholar] [CrossRef] [PubMed]

- Rocco, B.; Sarchi, L.; Assumma, S.; Cimadamore, A.; Montironi, R.; Reggiani Bonetti, L.; Turri, F.; De Carne, C.; Puliatti, S.; Maiorana, A.; et al. Digital Frozen Sections with Fluorescence Confocal Microscopy During Robot-assisted Radical Prostatectomy: Surgical Technique. Eur. Urol. 2021, 80, 724–729. [Google Scholar] [CrossRef] [PubMed]

- Darr, C.; Costa, P.F.; Kahl, T.; Moraitis, A.; Engel, J.; Al-Nader, M.; Reis, H.; Köllermann, J.; Kesch, C.; Krafft, U.; et al. Intraoperative Molecular Positron Emission Tomography Imaging for Intraoperative Assessment of Radical Prostatectomy Specimens. Eur. Urol. Open Sci. 2023, 54, 28–32. [Google Scholar] [CrossRef] [PubMed Central]

- Lopez, A.; Zlatev, D.V.; Mach, K.E.; Bui, D.; Liu, J.J.; Rouse, R.V.; Harris, T.; Leppert, J.T.; Liao, J.C. Intraoperative Optical Biopsy during Robotic Assisted Radical Prostatectomy Using Confocal Endomicroscopy. J. Urol. 2016, 195, 1110–1117. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Panarello, D.; Compérat, E.; Seyde, O.; Colau, A.; Terrone, C.; Guillonneau, B. Atlas of Ex Vivo Prostate Tissue and Cancer Images Using Confocal Laser Endomicroscopy: A Project for Intraoperative Positive Surgical Margin Detection During Radical Prostatectomy. Eur. Urol. Focus 2020, 6, 941–958. [Google Scholar] [CrossRef] [PubMed]

- Crow, P.; Molckovsky, A.; Stone, N.; Uff, J.; Wilson, B.; WongKeeSong, L.M. Assessment of fiberoptic near-infrared Raman spectroscopy for diagnosis of bladder and prostate cancer. Urology 2005, 65, 1126–1130. [Google Scholar] [CrossRef] [PubMed]

- Pinto, M.; Zorn, K.C.; Tremblay, J.P.; Desroches, J.; Dallaire, F.; Aubertin, K.; Marple, E.T.; Kent, C.; Leblond, F.; Trudel, D.; et al. Integration of a Raman spectroscopy system to a robotic-assisted surgical system for real-time tissue characterization during radical prostatectomy procedures. J. Biomed. Opt. 2019, 24, 1–10. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Katsimperis, S.; Tzelves, L.; Bellos, T.; Manolitsis, I.; Mourmouris, P.; Kostakopoulos, N.; Pyrgidis, N.; Somani, B.; Papatsoris, A.; Skolarikos, A. The use of indocyanine green in partial nephrectomy: A systematic review. Cent. Eur. J. Urol. 2024, 77, 15–21. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Bjurlin, M.A.; McClintock, T.R.; Stifelman, M.D. Near-infrared fluorescence imaging with intraoperative administration of indocyanine green for robotic partial nephrectomy. Curr. Urol. Rep. 2015, 16, 20. [Google Scholar] [CrossRef] [PubMed]

- Gadus, L.; Kocarek, J.; Chmelik, F.; Matejkova, M.; Heracek, J. Robotic Partial Nephrectomy with Indocyanine Green Fluorescence Navigation. Contrast Media Mol. Imaging 2020, 2020, 1287530. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Borofsky, M.S.; Gill, I.S.; Hemal, A.K.; Marien, T.P.; Jayaratna, I.; Krane, L.S.; Stifelman, M.D. Near-infrared fluorescence imaging to facilitate super-selective arterial clamping during zero-ischaemia robotic partial nephrectomy. BJU Int. 2013, 111, 604–610. [Google Scholar] [CrossRef] [PubMed]

- Martini, A.; Falagario, U.G.; Cumarasamy, S.; Jambor, I.; Wagaskar, V.G.; Ratnani, P.; Haines, K.G., III; Tewari, A.K. The Role of 3D Models Obtained from Multiparametric Prostate MRI in Performing Robotic Prostatectomy. J. Endourol. 2022, 36, 387–393. [Google Scholar] [CrossRef] [PubMed]

- Rovera, G.; Grimaldi, S.; Oderda, M.; Finessi, M.; Giannini, V.; Passera, R.; Gontero, P.; Deandreis, D. Machine Learning CT-Based Automatic Nodal Segmentation and PET Semi-Quantification of Intraoperative ⁶⁸Ga-PSMA-11 PET/CT Images in High-Risk Prostate Cancer: A Pilot Study. Diagnostics 2023, 13, 3013. [Google Scholar] [CrossRef] [PubMed Central]

- Kaouk, J.; Garisto, J.; Eltemamy, M.; Bertolo, R. Pure Single-Site Robot-Assisted Partial Nephrectomy Using the SP Surgical System: Initial Clinical Experience. Urology 2019, 124, 282–285. [Google Scholar] [CrossRef] [PubMed]

- Kaouk, J.; Bertolo, R.; Eltemamy, M.; Garisto, J. Single-Port Robot-Assisted Radical Prostatectomy: First Clinical Experience Using The SP Surgical System. Urology 2019, 124, 309. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.; Thomas, D.; Salama, G.; Ahmed, M. Single port robotic radical cystectomy with intracorporeal urinary diversion: A case series and review. Transl. Androl. Urol. 2020, 9, 925–930. [Google Scholar] [CrossRef] [PubMed Central]

- Hinojosa-Gonzalez, D.E.; Roblesgil-Medrano, A.; Torres-Martinez, M.; Alanis-Garza, C.; Estrada-Mendizabal, R.J.; Gonzalez-Bonilla, E.A.; Flores-Villalba, E.; Olvera-Posada, D. Single-port versus multiport robotic-assisted radical prostatectomy: A systematic review and meta-analysis on the da Vinci SP platform. Prostate 2022, 82, 405–414. [Google Scholar] [CrossRef] [PubMed]

- Fahmy, O.; Fahmy, U.A.; Alhakamy, N.A.; Khairul-Asri, M.G. Single-Port versus Multiple-Port Robot-Assisted Radical Prostatectomy: A Systematic Review and Meta-Analysis. J. Clin. Med. 2021, 10, 5723. [Google Scholar] [CrossRef] [PubMed Central]

- Li, K.; Yu, X.; Yang, X.; Huang, J.; Deng, X.; Su, Z.; Wang, C.; Wu, T. Perioperative and Oncologic Outcomes of Single-Port vs Multiport Robot-Assisted Radical Prostatectomy: A Meta-Analysis. J. Endourol. 2022, 36, 83–98. [Google Scholar] [CrossRef] [PubMed]

- Noël, J.; Moschovas, M.C.; Sandri, M.; Bhat, S.; Rogers, T.; Reddy, S.; Corder, C.; Patel, V. Patient surgical satisfaction after da Vinci® single-port and multi-port robotic-assisted radical prostatectomy: Propensity score-matched analysis. J. Robot. Surg. 2022, 16, 473–481. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Lenfant, L.; Sawczyn, G.; Aminsharifi, A.; Kim, S.; Wilson, C.A.; Beksac, A.T.; Schwen, Z.; Kaouk, J. Pure Single-site Robot-assisted Radical Prostatectomy Using Single-port Versus Multiport Robotic Radical Prostatectomy: A Single-institution Comparative Study. Eur. Urol. Focus 2021, 7, 964–972. [Google Scholar] [CrossRef] [PubMed]

- Noh, T.I.; Kang, Y.J.; Shim, J.S.; Kang, S.H.; Cheon, J.; Lee, J.G.; Kang, S.G. Single-Port vs Multiport Robot-Assisted Radical Prostatectomy: A Propensity Score Matching Comparative Study. J. Endourol. 2022, 36, 661–667. [Google Scholar] [CrossRef] [PubMed]

- Vigneswaran, H.T.; Schwarzman, L.S.; Francavilla, S.; Abern, M.R.; Crivellaro, S. A Comparison of Perioperative Outcomes Between Single-port and Multiport Robot-assisted Laparoscopic Prostatectomy. Eur. Urol. 2020, 77, 671–674. [Google Scholar] [CrossRef] [PubMed]

- Ge, S.; Zeng, Z.; Li, Y.; Gan, L.; Meng, C.; Li, K.; Wang, Z.; Zheng, L. Comparing the safety and efficacy of single-port versus multi-port robotic-assisted techniques in urological surgeries: A systematic review and meta-analysis. World J. Urol. 2024, 42, 18. [Google Scholar] [CrossRef] [PubMed]

- Xu, M.C.; Hemal, A.K. Single-Port vs Multiport Robotic Surgery in Urologic Oncology: A Narrative Review. J. Endourol. 2025, 39, 271–284. [Google Scholar] [CrossRef] [PubMed]

- Harrison, R.; Ahmed, M.; Billah, M.; Sheckley, F.; Lulla, T.; Caviasco, C.; Sanders, A.; Lovallo, G.; Stifelman, M. Single-port versus multiport partial nephrectomy: A propensity-score-matched comparison of perioperative and short-term outcomes. J. Robot. Surg. 2023, 17, 223–231. [Google Scholar] [CrossRef] [PubMed]

- Glaser, Z.A.; Burns, Z.R.; Fang, A.M.; Saidian, A.; Magi-Galluzzi, C.; Nix, J.W.; Rais-Bahrami, S. Single- versus multi-port robotic partial nephrectomy: A comparative analysis of perioperative outcomes and analgesic requirements. J. Robot. Surg. 2022, 16, 695–703. [Google Scholar] [CrossRef] [PubMed]

- Nguyen, T.T.; Ngo, X.T.; Duong, N.X.; Dobbs, R.W.; Vuong, H.G.; Nguyen, D.D.; Basilius, J.; Onder, N.K.; Mendiola, D.F.A.; Hoang, T.-D.; et al. Single-Port vs Multiport Robot-Assisted Partial Nephrectomy: A Meta-Analysis. J. Endourol. 2024, 38, 253–261. [Google Scholar] [CrossRef] [PubMed]

- Gross, J.T.; Vetter, J.M.; Sands, K.G.; Palka, J.K.; Bhayani, S.B.; Figenshau, R.S.; Kim, E.H. Initial Experience with Single-Port Robot-Assisted Radical Cystectomy: Comparison of Perioperative Outcomes Between Single-Port and Conventional Multiport Approaches. J. Endourol. 2021, 35, 1177–1183. [Google Scholar] [CrossRef] [PubMed]

- Ditonno, F.; Franco, A.; Manfredi, C.; Veccia, A.; De Nunzio, C.; De Sio, M.; Vourganti, S.; Chow, A.K.; Cherullo, E.E.; Antonelli, A.; et al. Single-port robot-assisted simple prostatectomy: Techniques and outcomes. World J. Urol. 2024, 42, 98. [Google Scholar] [CrossRef] [PubMed]

- Heo, J.E.; Kang, S.K.; Lee, J.; Koh, D.; Kim, M.S.; Lee, Y.S.; Ham, W.S.; Jang, W.S. Outcomes of single-port robotic ureteral reconstruction using the da Vinci SP® system. Investig. Clin. Urol. 2023, 64, 373–379. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Nguyen, T.T.; Basilius, J.; Ali, S.N.; Dobbs, R.W.; Lee, D.I. Single-Port Robotic Applications in Urology. J. Endourol. 2023, 37, 688–699. [Google Scholar] [CrossRef] [PubMed]

- Marescaux, J.; Leroy, J.; Gagner, M.; Rubino, F.; Mutter, D.; Vix, M.; Butner, S.E.; Smith, M.K. Transatlantic robot-assisted telesurgery. Nature 2001, 413, 379–380. [Google Scholar] [CrossRef] [PubMed]

- Zheng, J.; Wang, Y.; Zhang, J.; Guo, W.; Yang, X.; Luo, L.; Jiao, W.; Hu, X.; Yu, Z.; Wang, C.; et al. 5G ultra-remote robot-assisted laparoscopic surgery in China. Surg. Endosc. 2020, 34, 5172–5180. [Google Scholar] [CrossRef] [PubMed]

- Yang, X.; Wang, Y.; Jiao, W.; Li, J.; Wang, B.; He, L.; Chen, Y.; Gao, X.; Li, Z.; Zhang, Y.; et al. Application of 5G technology to conduct tele-surgical robot-assisted laparoscopic radical cystectomy. Int. J. Med. Robot. 2022, 18, e2412. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Yang, X.; Chu, G.; Feng, W.; Ding, X.; Yin, X.; Zhang, L.; Lv, W.; Ma, L.; Sun, L.; et al. Application of Improved Robot-assisted Laparoscopic Telesurgery with 5G Technology in Urology. Eur. Urol. 2023, 83, 41–44. [Google Scholar] [CrossRef]

- Li, J.; Jiao, W.; Yuan, H.; Feng, W.; Ding, X.; Yin, X.; Zhang, L.; Lv, W.; Ma, L.; Sun, L.; et al. Telerobot-assisted laparoscopic adrenalectomy: Feasibility study. Br. J. Surg. 2022, 110, 6–9. [Google Scholar] [CrossRef] [PubMed]

- Zhou, X.; Wang, J.Y.; Zhu, X.; Sun, H.J.; Aikebaer, A.; Tian, J.Y.; Shao, Y.; Maimaitijiang, D.; Muhetaer, W.; Li, J.; et al. Ultra-remote robot-assisted laparoscopic surgery for varicocele through 5G network: Report of two cases and review of the literature. Zhonghua Nan Ke Xue 2022, 28, 696–701. [Google Scholar] [PubMed]

- Ebihara, Y.; Hirano, S.; Kurashima, Y.; Takano, H.; Okamura, K.; Murakami, S.; Shichinohe, T.; Morohashi, H.; Oki, E.; Hakamada, K.; et al. Tele-robotic distal gastrectomy with lymph node dissection on a cadaver. Asian J. Endosc. Surg. 2024, 17, e13246. [Google Scholar] [CrossRef] [PubMed]

- Takahashi, Y.; Hakamada, K.; Morohashi, H.; Wakasa, Y.; Fujita, H.; Ebihara, Y.; Oki, E.; Hirano, S.; Mori, M. Effects of communication delay in the dual cockpit remote robotic surgery system. Surg. Today 2024, 54, 496–501. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

| Technology | Application | Clinical Validation | Reference |
|---|---|---|---|
| Hyperaccuracy 3D AR for RAPN | Navigation and tumor enucleation | Improved functional outcomes, reduced ischemia | Porpiglia et al. (2020) [13] |
| Elastic AR for RARP | Capsular involvement identification | 100% capsular accuracy vs. 47% in controls | Porpiglia et al. (2019) [19] |
| iKidney AI-AR System | Automated AR alignment | First clinical use reported, 97.8% overlay precision | Sica et al. (2023) [16] |
| Confocal Laser Endomicroscopy (CLE) | In vivo nerve-sparing visualization | Feasibility study; high-quality imaging of landmarks | Lopez et al. (2016) [26] |
| Fluorescence Confocal Microscopy (FCM) | Ex vivo prostate margin evaluation | >90% accuracy; rapid margin assessment | Puliatti et al. (2019); Rocco et al. (2021) [23,24] |
| Raman Spectroscopy (RS) | In vivo tissue differentiation | 91% accuracy in vivo; pilot use in RARP | Pinto et al. (2019) [29] |
| Indocyanine Green (ICG) with NIRF Imaging | Perfusion and tumor contrast in RAPN | Widely used; validated in numerous RAPN studies | Gadus et al. (2020); Borofsky et al. (2013) [32,33] |

| Technology | Sensitivity | Specificity | Cost-Effectiveness | Ease of Use | Reference |
|---|---|---|---|---|---|
| Confocal Laser Endomicroscopy (CLE) | High | High | Moderate | Moderate | Lopez et al. (2016) [26] |
| Fluorescence Confocal Microscopy (FCM) | >90% | >90% | Moderate to High | High (ex vivo) | Puliatti et al. (2019); Rocco et al. (2021) [23,24] |
| Raman Spectroscopy (RS) | ≈91% | ≈96% | High | Moderate (requires training) | Pinto et al. (2019) [29] |

| Feature | Single-Port (SP) | Multi-Port (MP) |
|---|---|---|
| Number of Ports | 1 multichannel port | 3–5 separate ports |
| Incision Size | ≈25 mm | 8–12 mm each |
| Instrument Triangulation | Limited | Excellent |
| Learning Curve | Steeper | Shorter |
| Access to Confined Spaces | Superior | Challenging |
| Pain and Recovery | Improved | Moderate |
| Lymph Node Yield | Often Lower | Higher |
| Instrument Strength/Traction | Reduced | Stronger |
| Same-Day Discharge Rate | Higher | Lower |
| Availability | Limited Globally | Widely Available |

| Technology | Maturity Level | Key Strengths | Key Study/Reference |
|---|---|---|---|
| AI-based Performance Prediction | Validated in multi-institutional studies | Outcome prediction, tailored recovery | Hung et al. (2018, 2019) [7,8] |
| Hyperaccuracy 3D AR | Applied in complex RAPN and RARP | Anatomical fidelity, margin accuracy | Porpiglia et al. (2020) [13] |
| Confocal Microscopy (FCM) | Validated ex vivo; clinical feasibility shown | Digital workflow, rapid turnaround | Puliatti et al. (2019) [23]; Rocco et al. (2021) [24] |
| Raman Spectroscopy (RS) | Pilot intraoperative use; promising results | High diagnostic accuracy, integration with da Vinci | Pinto et al. (2019) [29] |
| Indocyanine Green (ICG) Imaging | Routine in RAPN; well established | Perfusion mapping, tumor contrast | Gadus et al. (2020) [32]; Borofsky et al. (2013) [33] |
| iKidney AR System | First-in-human case; early stage | Automation, eliminates manual overlay | Sica et al. (2023) [16] |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Katsimperis, S.; Tzelves, L.; Feretzakis, G.; Bellos, T.; Tsikopoulos, I.; Kostakopoulos, N.; Skolarikos, A. Innovations in Robot-Assisted Surgery for Genitourinary Cancers: Emerging Technologies and Clinical Applications. Appl. Sci. 2025, 15, 6118. https://doi.org/10.3390/app15116118
