Abstract

People’s emotions play an important part in daily life: they not only reflect psychological and physical states, but also play a vital role in communication, cognition and decision-making. Variations in people’s emotions induced by external conditions are accompanied by variations in physiological signals that can be measured and identified. People’s physiological signals are mainly measured with electroencephalograms (EEGs), electrodermal activity (EDA), electrocardiograms (ECGs), electromyography (EMG), pulse waves, etc. EEG signals are a comprehensive embodiment of the operation of numerous neurons in the cerebral cortex and can immediately express brain activity. EDA measures the electrical features of skin through skin conductance response, skin potential, skin conductance level or skin potential response. ECG technology uses an electrocardiograph to record changes in electrical activity in each cardiac cycle of the heart from the body surface. EMG is a technique that uses electronic instruments to evaluate and record the electrical activity of muscles, which is usually referred to as myoelectric activity. EEG, EDA, ECG and EMG have been widely used to recognize and judge people’s emotions in various situations. Different physiological signals have their own characteristics and are suitable for different occasions. Therefore, a review of the research work and application of emotion recognition and judgment based on the four physiological signals mentioned above is offered. The content covers the technologies adopted, the objects of application and the effects achieved. Finally, the application scenarios for different physiological signals are compared, and issues for attention are explored to provide reference and a basis for further investigation.

1. Introduction

Psychology defines emotion as a special form of human reflection of objective reality, which is the experience of human attitudes towards whether objective things meet human needs. Emotional states affect people’s daily life and work. For example, neuroscientists found that emotional control can help people make wise choices when dealing with complex problems. Checking and responding to emotions can modify learning outcomes [1]. Anxiety appears in potentially dangerous circumstances and can bias a person to judge a stimulus as more threatening [2]. Humans prefer to pursue products and make buying decisions based on their emotional and aesthetic preferences. The analysis of emotional states involves several disciplines, e.g., neuroscience, biomedical engineering and the cognitive sciences. Since emotion reflects a person’s psychological and physical state, certain physiological signals correspond to certain specific emotions.

Changes in a person’s emotions induced by environmental factors are accompanied by changes in physiological signals (EEG, EDA, ECG, EMG, pulse wave, etc.) and behavioral signals (facial expression, language tone, text, etc.). The behavioral signals are external manifestations triggered by human emotions and are the indirect embodiment of emotional information, while physiological signals belong to the internal form of expression and can more truly reflect human emotions. Human emotion recognition and judgment systems are likewise based on physiological or behavioral signals. Compared with the system based on behavioral signals, the system based on physiological signals has the advantages of high time resolution and convenient measurement, so the identification of emotions from physiological signals has extensive applications in many fields such as education, industrial design, medicine, rehabilitation, entertainment, pedagogy, psychology, etc. [3], thus attracting widespread attention. In the last two years, some achievements including new models, new approaches or methods, new algorithms, and new systems have emerged.

In terms of new models, Zhang et al. [4] presented a multimodal emotion recognition model based on manifold learning and a convolutional neural network, and the test results showed that the presented model achieved high identification accuracy. Quispe et al. [5] employed a self-supervised learning paradigm to make it possible to learn representations directly from unlabeled signals and subsequently use them to classify affective states, and the test showed that the self-supervised representations could learn widely useful features that improve data efficiency and do not require the data to be labeled for learning. Dasdemir et al. [6] presented a new model to study how augmented reality systems were effective in distinguishing the emotional states of students engaged in book reading activities with EEG. They indicated that augmented reality-based reading had a significant discriminatory effect and achieved higher classification performance than real reading, and the presented model was good at classifying emotional states from EEG signals, with accuracy scores close to 100%. Hernandez-Melgarejo [7] presented a new feedback control schema to manipulate the user state based on user–virtual reality system interaction and conducted real experiments with a virtual reality system prototype to show the effectiveness of the presented schema.

In terms of new approaches or methods, Dissanayake et al. [8] presented a new self-supervised method that developed an approach to learn representations of individual physiological signals; the presented approach was more robust to losses in the input signal. Lee et al. [9] adopted a bimodal structure to extract shared photoplethysmography and EMG to enhance recognition performance and obtained high performance of 80.18% and 75.86% for arousal and valence, respectively. Pusarla et al. [10] presented a new deep learning-based method to extract and classify emotion-related information from the two-dimensional spectrograms obtained from EEG signals and offered a deep convolution neural network for emotion recognition with dense connections among layers to uncover the hidden deep features of EEG signals. The test results showed that the presented model boosted the emotion recognition accuracy by 8% compared to the state-of-the-art methods using the DEAP database. Moin et al. [11] proposed a multimodal method for cross-subject emotion recognition based on the fusion of EEG and facial gestures and obtained the highest accuracy of 97.25% for valence and 96.1% for arousal, respectively. Kim et al. [12] acquired a new EEG dataset (wireless-based EEG data for emotion analysis, WeDea) based on the discrete emotion theory and presented a new combination for WeDea analysis. The practical results indicated that WeDea was a promising resource for emotion analysis. Romeo et al. [13] presented a method with a framework from the machine learning literature, which was able to model time intervals by capturing the presence or absence of relevant states without the need to label the affective responses continuously. They indicated that the presented method showed reliability in a gold-standard scenario and towards real-world usage. Mert et al. [14] presented a method of converting the DEAP dataset into a multimodal latent dataset for emotion recognition and applied the method to each participant’s recordings to obtain a new latent encoded dataset. A naive Bayes classifier was used to assess the encoding performance of the 100-dimensional modalities, and compared to the original results, yielded leave-one-person-out cross-validation error rates of 0.3322 and 0.3327 for high/low arousal and valence states, while the original values were 0.349 and 0.382.
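The leave-one-person-out protocol mentioned for [14] can be illustrated with a minimal sketch. It is not the implementation used in [14]: the function names are hypothetical, the data are toy values, and a one-dimensional nearest-mean classifier stands in for the naive Bayes classifier purely to keep the example short. The point is only that every recording of one participant is held out at a time, so the error rate reflects performance on unseen people.

```python
from collections import defaultdict


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one split per subject."""
    by_subject = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        by_subject[sid].append(idx)
    for held_out in sorted(by_subject):  # assumes subject ids are mutually comparable
        test_idx = by_subject[held_out]
        train_idx = sorted(
            i for sid, idxs in by_subject.items() if sid != held_out for i in idxs
        )
        yield held_out, train_idx, test_idx


def fit_nearest_mean(xs, ys):
    """Toy stand-in classifier (assumption): store the mean feature value per class."""
    sums, counts = defaultdict(float), defaultdict(int)
    for x, y in zip(xs, ys):
        sums[y] += x
        counts[y] += 1
    return {y: sums[y] / counts[y] for y in sums}


def predict_nearest_mean(means, x):
    """Return the class whose stored mean is closest to x."""
    return min(means, key=lambda y: abs(x - means[y]))


def loso_error_rate(features, labels, subject_ids, fit, predict):
    """Fraction of misclassified samples, pooled over all held-out subjects."""
    errors = 0
    for _, train_idx, test_idx in leave_one_subject_out(subject_ids):
        model = fit([features[i] for i in train_idx], [labels[i] for i in train_idx])
        errors += sum(predict(model, features[i]) != labels[i] for i in test_idx)
    return errors / len(labels)


# Toy data: one scalar feature per recording, two recordings per subject.
toy_features = [0.10, 0.90, 0.20, 0.80, 0.15, 0.85]
toy_labels = [0, 1, 0, 1, 0, 1]  # e.g. low/high arousal
toy_subjects = ["s1", "s1", "s2", "s2", "s3", "s3"]
print(loso_error_rate(toy_features, toy_labels, toy_subjects,
                      fit_nearest_mean, predict_nearest_mean))
```

Splitting by participant rather than by sample avoids leaking person-specific signal characteristics from the training set into the test set.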

In terms of new algorithms, Fu et al. [15] built a substructure-based joint probability domain adaptation algorithm to overcome physiological signals’ noise effects, and the new algorithm could avoid the weaknesses of domain level matching that was too rough and sample level matching that was susceptible to noise. Pusarla et al. [16] used a new local mean decomposition algorithm to decompose EEG signals into product functions and even capture the underlying nonlinear characteristics of EEG; an emotion recognition system based on the presented algorithm outperformed state-of-the-art methods and achieved high accuracy. Katada and Okada [17] improved the performance of biosignal-based emotion and personality estimations by considering individual physiological differences as a covariate shift. They pointed out that importance weighting in machine learning models could reduce the effects of individual physiological differences in peripheral physiological responses. Hasnul et al. [18] presented a new multi-filtering augmentation algorithm to increase the sample size of the ECG data. The algorithm augmented ECG signals by cleaning the data in different ways, and the benefit of the algorithm was measured using the classification accuracy of five machine learning algorithms. It was found that there was a significant improvement in performance for all of the datasets and classifiers. Shi [19] provided a universal solution for cross-domain information fusion scenarios in body sensor networks and a feasible solution for quantitatively evaluating the domain weights; they conducted experiments to verify the adaptability and promising performance of fusion frameworks and dynamic domain evaluation for cross-domain information fusion in BSNs.

In terms of new systems, Anuragi et al. [20] presented an EEG signal-based automated cross-subject emotion recognition framework that decomposed the EEG signals into four sub-band signals; the test results showed that the presented framework had advantages for classifying human emotions compared to other state-of-the-art emotion classification models. Asiain et al. [21] proposed a novel platform of physiological signal acquisition for multi-sensory emotion detection to record and analyze different physiological signals, and the most important features of the proposed platform were compared with those of a proven wearable device. Zontone et al. [22] built a system to assess the emotional response in drivers while they were driving on a track with different car handling setups. The experimental results based on the system indicated that the base car setup appeared to be the least stressful, and that the presented system enabled one to effectively recognize stress while the subjects were driving in the different car configurations. Xie et al. [23] constructed a hybrid deep neural network to evaluate automobile interior acceleration sounds fused with physiological signals, and the results showed that the constructed network contributed to achieving accurate evaluations of automobile sound quality with EEG signals.

A review of research on emotion recognition and judgment based on physiological signals can be carried out from different aspects. In the study of this field, the most common approach is to establish a system or model based on physiological signals as the comparison standard to recognize and judge emotions, so physiological signals are a basic element. People’s physiological signals are mainly composed of EEG, EDA, ECG, EMG, pulse waves, etc. Pulse waves have obvious mechanical characteristics of blood flow and are employed less often in studies based on emotion recognition and judgment. Therefore, this paper mainly offers a review of the research work and application of emotion recognition and judgment based on EEG, EDA, ECG and EMG in recent years. It aims to provide readers with a further understanding of the role and characteristics of different physiological signals in emotion recognition and judgment. The rest of the paper is structured as follows. In Section 2, we introduce the research and application of EEG. Section 3 introduces research and application of EDA, ECG and EMG. The research and application of multimodal physiological signals are presented in Section 4. Section 5 presents ethical and privacy concerns related to the use of physiological signals for emotion recognition. Finally, a brief conclusion with future prospects is presented in Section 6.

2. Research and Application of EEG

Changes in people’s emotions comprise a sophisticated process and often lead to spatio-temporal brain activity that can be captured with EEG. The EEG signal is rich in useful information because it is a comprehensive embodiment of the operation of numerous neurons in the cerebral cortex and can immediately reflect brain activity. In addition, the EEG signal is simple to record and cost-effective. So, the EEG-based method has attracted widespread attention and is broadly used for emotion recognition and judgment.

Yang et al. [24] put forward an approach to emotion image classification according to the user’s experience measured with EEG signals and eye movements, and built a relationship between the physiological signals and the expected emotional experience. They showed that the proposed approach was accurate in distinguishing images that could cause a pleasurable experience by classifying 16 abstract art paintings as positive, negative or neutral according to volunteers’ physiological responses. Yoon and Chung [25] studied emotion recognition and judgment based on EEG signals. They defined emotions as two-level and three-level classes with valence and arousal dimensions, extracted features using fast Fourier transform, and performed feature selection with the Pearson correlation coefficient. The results showed that the mean precision for the valence and arousal estimation was 70.9% and 70.1%, respectively, for the two-level class, and 55.4% and 55.2%, respectively, for the three-level class. Andreu-Perez et al. [26] let game players play “League of Legends” and imaged players’ brain activity through functional near infrared spectroscopy as well as recording videos of the players’ faces. Based on the above, they decoded the expertise level of the players in a multi-modal framework, which was the first work decoding expertise level of players with non-restrictive technology for brain imaging. They declared that the best tri-class classification precision was 91.44%. Zhang et al. [27] first measured the EEG signals and extracted features from the signals, then processed the EEG data using a modified algorithm of the radial basis function neural network, and finally compared and discussed the experimental results given by different classification models. The results showed that the modified algorithm was better than other algorithms. Chew et al. [28] proposed a new approach to preference-based measurement based on users’ aesthetic preferences using EEG for a moving virtual three-dimensional object. The EEG signals were classified into two classes of like and dislike, and the classification accuracy could reach 80%. Chanel et al. [29] put forward a complete acquisition protocol to establish a physiological emotional database for subjects, and then formulated arousal assessment as 2 or 3 degrees. The results confirmed the possibility of applying EEG signals to evaluate the arousal component of emotion. Wagh and Vasanth [30] employed various classifiers to categorize the EEG signal into three emotional states and used the discrete wavelet transform to decompose EEG signals into different frequency bands. They also derived time domain features from the EEG signal to recognize different emotions. The results indicated that the maximum classification rates were 71.52% and 60.19%, respectively, when the classification methods of decision tree and k-nearest neighbor were used, and the higher frequency spectrum performed well in emotion recognition.
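Feature selection with the Pearson correlation coefficient, as mentioned for [25], can be sketched as follows. This is an illustrative reading rather than the procedure of [25]: ranking features by the absolute correlation with the target and keeping the top `k` is an assumption, and the function names and toy values are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear information
    return cov / math.sqrt(var_x * var_y)


def select_top_k(feature_rows, targets, k):
    """Return the indices of the k features with the largest |r| against the targets."""
    n_features = len(feature_rows[0])
    scored = []
    for j in range(n_features):
        column = [row[j] for row in feature_rows]
        scored.append((abs(pearson_r(column, targets)), j))
    scored.sort(key=lambda item: (-item[0], item[1]))  # strongest first, ties by index
    return [j for _, j in scored[:k]]


# Toy example: rows are trials, columns are (hypothetical) spectral features.
rows = [
    [1.0, 5.0, 1.0],
    [2.0, 5.0, 3.0],
    [3.0, 5.0, 2.0],
    [4.0, 5.0, 4.0],
]
valence = [1.0, 2.0, 3.0, 4.0]
print(select_top_k(rows, valence, 2))
```

In the toy data the first column tracks the target exactly, the second is constant, and the third is only partly correlated, so the first and third columns are retained.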

Ozdemir et al. [31] presented a new approach to recognize emotions with a series of multi-channel EEG signals by changing the EEG signals into a sequence of multi-spectral topology images. Based on the new approach, they obtained testing accuracy of 86.13% for arousal, 90.62% for valence, 88.48% for dominance, and 86.23% for like–unlike. Abadi et al. [32] proposed a magnetoencephalogram-based database for decoding affective user responses that, different from data sets, had little contact with the scalp and thus promoted a naturalistic affective response and enabled fine-grained analysis of cognitive reactions over brain lobes, in turn favoring emotion recognition. Tang et al. [33] presented an EEG-based art therapy evaluation approach that could assess therapeutic effectiveness according to the variation in emotion before and after therapy. The results indicated that the model of emotion recognition with long short-term memory deep temporal features had better classification effects than the state-of-the-art approach with non-temporal features, and the classification accuracy in the high-frequency band was higher than that in the low-frequency band. Soroush et al. [34] rebuilt EEG phase space and converted it into a new state space, and extracted the Poincare intersections as features and then fed them into the classification model. They declared that not only was the presented approach effective in emotion recognition, but it also introduced a new method for nonlinear signal processing. Halim and Rehan [35] proposed a machine learning-based approach to discern stress patterns induced by driving with EEG signals, and built relations between brain dynamics and emotion. They also presented a framework to identify emotions based on EEG patterns by recognizing specific features of the emotion from the raw EEG signal. Fifty subjects were tested, and high classification accuracy, precision, sensitivity and specificity were obtained. Lu et al. [36] analyzed the results of people’s physiological estimation under different illumination levels and color temperatures based on EEG signals. The results showed that illumination greatly affected the response of the visual center, which was conducive to the design of light environments. In order to recognize and classify fear levels based on EEG and databases for emotion analysis, Balan et al. [37] compared different machine and deep learning methods with and without selecting features, and found that, although all methods could give high classification accuracy, the highest F scores were obtained using the random forest classifier: 89.96% as shown in Table 1 and 85.33% as shown in Table 2 for the two-level fear (0: no fear and 1: fear) and four-level fear (0: no fear, 1: low fear, 2: medium fear, 3: high fear) evaluation modalities, respectively. In Table 1 and Table 2, F1 is fear, DNN is deep neural network, SCN is stochastic configuration network, SVM is support vector machine, RF is random forest, LDA is linear discriminant analysis, and KNN is k-nearest neighbors.

Table 1. Classification accuracy when input is a vector of 32 Higuchi fractal dimensions [37].

Table 2. Classification accuracy when input is a vector of 30 alpha, beta and theta PSDs [37].
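The Higuchi fractal dimension used as the classifier input for Table 1 can be computed per channel from a sampled signal. The sketch below follows the standard Higuchi curve-length definition; it is not taken from [37], and the function name, the default `k_max = 8` and the test signals are assumptions chosen for illustration.

```python
import math
import random


def higuchi_fd(signal, k_max=8):
    """Estimate the Higuchi fractal dimension of a 1-D sequence."""
    n = len(signal)
    if k_max < 2 or n < 2 * k_max:
        raise ValueError("need k_max >= 2 and at least 2 * k_max samples")
    log_inv_k, log_length = [], []
    for k in range(1, k_max + 1):
        curve_lengths = []
        for m in range(k):  # m is the starting offset of each sub-sampled series
            n_steps = (n - 1 - m) // k
            total = sum(
                abs(signal[m + i * k] - signal[m + (i - 1) * k])
                for i in range(1, n_steps + 1)
            )
            # Normalise for the number of steps actually used, then divide by k.
            curve_lengths.append(total * (n - 1) / (n_steps * k) / k)
        mean_length = sum(curve_lengths) / k
        if mean_length <= 0:
            raise ValueError("constant signal: fractal dimension is undefined")
        log_inv_k.append(math.log(1.0 / k))
        log_length.append(math.log(mean_length))
    # The fractal dimension is the least-squares slope of log L(k) against log(1/k).
    mean_x = sum(log_inv_k) / k_max
    mean_y = sum(log_length) / k_max
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(log_inv_k, log_length))
    den = sum((x - mean_x) ** 2 for x in log_inv_k)
    return num / den


ramp = [0.5 * t for t in range(200)]                 # smooth straight line
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(2000)]   # white noise
print(higuchi_fd(ramp), higuchi_fd(noise))
```

A straight line yields a value of about 1 and white noise a value of about 2, which is the range over which the measure separates smooth from irregular signals. Applying such a function to each of 32 EEG channels would give a 32-element feature vector of the kind named in the caption of Table 1.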

Al Hammadi et al. [38] built an insider risk evaluation system as a fitness for duty security assessment to associate abnormal EEG signals with a potential insider threat. They collected data from 13 subjects in different emotional states and mapped and divided different levels of emotions into four risk levels. High classification accuracy was obtained. Guo et al. [39] integrated EEG and eye-tracking metrics to identify and quantify the visual aesthetics of a product. The results showed that there were obvious differences in the fixation time ratio and dwell time ratio among three groups of visual aesthetic lamps; the integrated EEG and eye-tracking metrics could improve the quantification accuracy. Priyasad et al. [40] proposed a new method to recognize emotion through unprocessed EEG signals, and it was found that, with the new method, the classification accuracy for arousal, valence and dominance could be higher than 88%. Xie et al. [41] presented an assessment approach based on EEG signals. They first determined the brain cognition laws by evaluating the EEG power topographic map under the effect of three types of automobile sound, i.e., the qualities of comfort, powerfulness, and acceleration, then classified the EEG features thus recognized as different automobile sounds, and finally employed the Kalman smoothing and minimal redundancy maximal relevance algorithm to improve the accuracy and reduce the amount of calculation. The results indicated that there were differences in the neural features of different automobile sound qualities. Yan et al. [42] studied the issue of inducing and checking driving anger. They first collected data on brain signals of driving anger when drivers encountered three typical scenarios that could lead to driving anger, and then distinguished normal driving from angry driving according to the self-reported data; finally, a classifier was used to detect angry driving based on the features, and 85.0% detection accuracy was obtained. Li and Wang [43] described the research status of cognitive training and analyzed the reasons for individual cognitive differences. They investigated cognitive neural plasticity for reducing the cognitive differences, explored the merits and drawbacks of the transfer approach in reducing cognitive differences, and put forward future research issues. Mohsen et al. [44] proposed a long short-term memory model for classification of positive, neutral, and negative emotions, and applied the model to a dataset that included three classes of emotions with a total of 2100 EEG samples from two subjects. Experimental results showed that the presented model had a testing accuracy of 98.13% and a macro average precision of 98.14%.

3. Research and Application of EDA, ECG and EMG

EDA, used to describe variations in the conductivity of skin, measures the electrical features of skin through skin conductance response, skin potential, skin conductance level or skin potential response. EDA is mature and non-invasive and has many advantages such as reliability, user-friendly acquisition, swift response, and low cost; hence, it is used to study many issues.

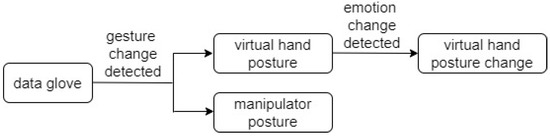

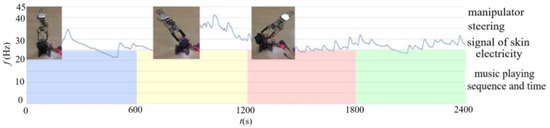

Liapis et al. [45] identified specific stress regions in the valence-arousal space by combining pairs of valence-arousal and skin conductance for 31 volunteers. The findings showed which regions in the valence-arousal rating space could express self-reported stress in accordance with the subject’s skin conductance. They also presented a new method for the empirical discrimination of emotion regions in the valence-arousal space. Feng et al. [46] proposed an automated method of classifying emotions for children using EDA. The results quantitatively indicated that the proposed method could significantly improve emotion classification using wavelet-based features. van der Zwaag [47] selected songs with the most increased or decreased skin conductance levels in 10 subjects to induce an energetic or calm mood based on the resulting user models. The results indicated that the effect of a song on skin conductance could be reliably predicted, and skin conductance, which could be kept in a certain state for at least 30 min, was directly related to an energetic or calm mood. It was feasible to reflect the emotional response of listeners through physiological signals. Lin et al. [48] studied the application of data gloves with two systems: one was a system of emotion recognition and judgment that was built based on the optimal features of EDA, and the other was a system of virtual gesture control and a manipulator propelled by emotion, as shown in Figure 1. The test results for five subjects showed that the system could regulate the gestures of a virtual hand through reading the signals of skin electricity when the subjects did not change any gesture, and the manipulator could complete the steering change driven by emotion as shown in Figure 2, where the bottom row is the duration of the music, the middle row is the EDA signals of subjects after listening to music, and the top row is the manipulator steering change driven by emotion based on the EDA signals.

Figure 1. System of virtual gesture control and a manipulator propelled by emotion [48].

Figure 2. Steering change driven by emotions [48].

Hu et al. [49] studied the differences in people’s assessments of tactile sensations for beech surfaces of different shapes and roughness using skin conductance and subjective emotion measurement of pleasure arousal dominance. They found that, under a relatively high level of emotional arousal, the subject’s emotional stability could be partly retained because of a beech with arc shapes. As for perception of beech, males had a larger range of emotional arousal and lower speed of emotional arousal than females. Yin et al. [50] combined people’s individual EDA features and music features, and presented a network of residual temporal and channel attention. They first applied a mechanism of channel-temporal attention for EDA-based emotion recognition to explore dynamic and steady temporal and channel-wise features and proved the effectiveness of the presented network for mining EDA features. Romaniszyn-Kania et al. [51] developed a tool and presented a set to complement psychological data based on EDA, and a comparison of accuracy of the k-means classification with the independent division revealed the best results for negative emotions (78.05%). The comparison of the division of the participants according to the classifier is shown in Table 3, where JAWS is Job-related Affective Well-being Scale, “pos” is positive, “neg” is negative, PCA is principal component analysis, and ACC is accuracy.

Table 3. Comparison of the division of the participants according to the classifier [51].

ECG measures a physiological signal generated from the contraction and recovery of the heart, and ECG technology uses an electrocardiograph to record the electrical activity changes of the heart from the body surface in each cardiac cycle. The ECG data based on physiological characteristics are directly related to an individual, and hence often used to identify a person’s psychological state.

To improve the ability of emotional recognition from ECG signals, Sepulveda et al. [52] presented a wavelet scattering algorithm to extract the features of signals based on the AMIGOS database as inputs for different classifiers evaluating their performance, and reported that an accuracy of 88.8%, 90.2% and 95.3% had been obtained in the valence, arousal and two-dimensional classification, respectively, using the presented algorithm. Wang et al. [53] applied ECG features including time-frequency domain, waveform and non-linear characteristics, combined with their presented model of emotion recognition, to recognize a driver’s calm and anxiety. Accuracy scores of 91.34% and 92.89% for calm and anxiety, respectively, were obtained. Wu and Chang [54] used ECG to study experimentally the impact of music on emotions. The results showed that fast, intermediate and slow music strengthened, inhibited and did not change the activity of the autonomic sympathetic nervous system, respectively. They also suggested that music could be used to relieve psychological pressure. Fu et al. [55] presented an approach using the human heart rate as a new form of modality to determine human emotions with a physiological mouse measuring photoplethysmographic data and experimentally evaluated the accuracy of the approach. Hu and Li [56] collected 140 signal samples of ECG triggered by Self-Assessment Manikin emotion self-assessment experiments using the International Affective Picture System, and used Wasserstein generative adversarial network with gradient penalty to add different numbers of samples for different classes. The results showed that the accuracy and weighted F1 values of all three classifiers were improved after increasing the quantity of data.

EMG is a technique using electronic instruments to evaluate and record the electrical activity of muscles, which is usually referred to as myoelectric activity. There are two kinds of methods for EMG: one is the surface derivation method, i.e., the method of attaching an electrode to the skin to derive the potential; the other is the needle electrode method, which is the method of inserting a needle electrode into a muscle to derive the local potential. Automatic analysis of EMG can be performed by using computer technology, such as analytical EMG, single-fiber EMG and giant EMG.

Bornemann et al. [57] studied whether identification could be modified by focusing subjects on their internal reactions, and tested how changes in expression type and presentation parameters affect facial expression and recognition rate based on EMG. They found that the participants had different EMG responses, i.e., maximal corrugator responses to angry, then neutral, and minimum to happy faces. They declared that corrugator EMG was sufficiently credible to predict stimulus valence statistically; the concise expression would generate a strong bodily signal as feedback to modify the recognition ability; either the physical and psychological reactions were mainly unconscious, or other approaches to instruction or training were needed to utilize their feedback potential.

4. Research on and Application of Multimodal Physiological Signals

Due to the variety of expressions of human emotion, an emotion analysis using only single-mode information is not perfect, e.g., facial expressions can be disguised through the individual’s subjective will. However, multimodal signals can provide more discriminative information than single modal signals. Therefore, scholars began to try to carry out further research on emotion recognition with multimodal methods.

4.1. Two Physiological Signals

Du et al. [58] presented an approach to detect human emotions according to heart beat and facial expressions. Through game experiments using their presented approach, they found that it needed less computing time and had high accuracy in detecting the emotions of excitement, sadness, anger and calmness. The intensity of emotion could be evaluated with the values of heart beat. Lin et al. [59] conducted experiments of emotion classification for different types of physiological signals and combinations of EEG, and the results showed that skin electrical signals and facial EMG signals yielded better classification results. Lin et al. [60] built a system of emotion judgment and a system of emotion visualization based on changes in pictures and scenes, and compared the change in emotional trends detected with systems based on sets of optimal signal features and machine learning-based methods through skin electricity and pulse waves of 20 subjects who were listening to music. The results indicated that the change in emotional trends detected using the system of emotion visualization based on changes in picture and scene were consistent with those detected using the system of emotion judgment. Meanwhile, the interactive quality and real-time ability of the emotion visualization system based on scene changes were better than those based on picture changes; the interactive quality of the emotion judgment based on a set of optimal signal features was better than that based on a machine learning-based method.

Wu et al. [61] first presented a technique according to a fusion model of the Gaussian mixed model with the Butterworth and Chebyshev signal filter, and calculated the features extracted from EEG signals and eye-tracking signals, then used a max-min approach for vector normalization, and finally proposed a deep gradient neural network for classifying EEG and eye-tracking signals. The experimental results showed that the presented neural network could predict emotions with 88.10% accuracy, and the presented approach also performed with high efficiency in several indices compared with other typical neural networks. Tang et al. [62] used ECG and EEG signals to assess the impact of music on the generation of ideas by subjects with design experience under individual and group conditions. The results indicated that the subjects produced more but worse design ideas in response to music that aroused positive emotions, and pleasant emotions were more likely to be evoked under group conditions. At the initial stage of design, the positive music was beneficial for producing more design ideas, but non-music was better when designers needed to think deeply. Singh et al. [63] put forward a neural network driven by a solution to learning driving-induced stress modes, related it with time–frequency changes observed from galvanic skin response and photoplethysmography, and obtained precision of 89.23%, specificity of 94.92% and sensitivity of 88.83%. They summarized that the layer recurrent neural network was most suitable for the detection of stress degree. Katsigiannis and Ramzan [64] built a multimodal database of EEG and ECG signals obtained during emotional arousal through audio-visual stimuli from 23 subjects along with the subjects’ self-evaluations of their respective emotional states based on valence, arousal and dominance, and established a baseline for subject-wise emotion identification using the features extracted from EEG and ECG signals as well as their fusion. 
They also assessed the self-evaluations of the subjects by comparing them with other self-evaluations. The results showed that the classification effect for valence, arousal and dominance based on the presented database could be matched with databases obtained using expensive and medical-grade devices, pointing out the prospects of using low-cost equipment for emotion recognition.
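The max-min normalization step mentioned above for Wu et al. [61] can be illustrated with a minimal sketch. It assumes the usual linear rescaling of a feature vector to the range [0, 1]; the function name `min_max_normalize` and the sample values are hypothetical and are not taken from the cited work.

```python
from typing import List


def min_max_normalize(features: List[float]) -> List[float]:
    """Rescale a feature vector linearly to the range [0, 1]."""
    lo, hi = min(features), max(features)
    if hi == lo:
        # A constant vector has no spread; map every entry to 0.0.
        return [0.0 for _ in features]
    return [(x - lo) / (hi - lo) for x in features]


# Hypothetical band-power values for one EEG channel (illustrative only).
band_power = [2.0, 4.0, 6.0, 10.0]
normalized = min_max_normalize(band_power)
print(normalized)
```

Rescaling of this kind keeps features from different signals, such as EEG and eye tracking, on a comparable scale before they are passed to a classifier.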

4.2. Multiple Physiological Signals

Laparra-Hernandez et al. [65] assessed human perceptions by recording subjects’ EMG and galvanic skin response signals while neutral, smiling and frowning faces as well as eight images of ceramic flooring were projected. The results indicated that the forms of ceramic tile flooring could be distinguished by values of galvanic skin response, and there was a sharp distinction in the EMG signals induced by the calibration images. Zhang et al. [66] presented a regularized deep fusion framework for use in emotion recognition based on the physiological signals of EEG, EMG, galvanic skin response and respiratory rate. They extracted effective features from the above signals and built ensemble-dense embeddings of multimodal features from which they applied a deep network framework to learn task-specific representations for each signal, and then designed a global fusion layer with a regularization term to blend the produced representations. Their results showed that the presented framework could improve the performance of emotion recognition, and the blended representation could exhibit higher class separability for emotion recognition. Jang et al. [67] evaluated the reliability of physiological variations produced by six different basic emotions with measurements of physiological signals of skin conductance level, blood volume pulse and fingertip temperature when 12 subjects watched emotion-provoking film clips and self-assessed their emotions under the stimuli in the films. It was found that the physiological signals obtained in the emotion-arousing period displayed good internal consistency. Yoo et al. [68] presented an approach to assess, analyze, and fix human emotions using signals of EEG, ECG, galvanic skin response, photoplethysmograms and respiration. Using the presented approach, six different emotional states could be recognized, and high accuracy performance was obtained. Khezri et al. [69] presented a new adaptive approach to improve the system of emotion recognition with measurements of skin conductance, blood volume pressure and inter-beat intervals along with three-channel forehead biosignals as emotional modalities. Six different emotions were stimulated by showing video clips to each of the 25 subjects. The results indicated that the presented approach was better than conventional methods, and overall emotion classification accuracies of 84.7% and 80% were obtained using support vector machine and K-nearest neighbors classifications, respectively. Zhou et al. [70] studied experimentally whether auditory stimulation could be used as a stimulus source for emotion judgment by recording signals of human EEG, facial EMG, EDA and respiration. The results showed that auditory stimulation was as effective as visual stimulation. They also presented culture-specific, i.e., Chinese vs. Indian, prediction models, and no major differences in prediction accuracy were observed between the presented culture-specific models.

Yan et al. [71] first proposed an approach using hybrid feature extraction to extract statistical- and event-related features from ECG, EDA, EMG and blood volume pulse, then presented a method of adaptive decision fusion to integrate signal modalities for emotion classification, and finally assessed the presented framework through a comparative analysis based on a dataset. The results showed that it was necessary to use event-related features and emphasized the significance of improving the adaptive decision fusion method for emotion classification. Anolli et al. [72] developed an E-learning system endowed with emotion computing ability for the cultivation of relational skills based on 10 different kinds of emotions detected from the facial expressions, vocal nonverbal features and postures of 34 subjects. Picard et al. [73] developed a machine with the ability to recognize eight emotional states from EMG, EDA, blood volume pressure and respiration signals recorded daily over multiple weeks. It was found that the characteristics of different emotions on the same day were inclined to aggregate more closely than the characteristics of the same emotion on different days. They also presented new features and algorithms to deal with the daily variations. Fleureau et al. [74] presented an approach to establish a real-time emotion detector that included EMG, heart rate and galvanic skin response for application in entertainment and video viewing. They intended to detect the emotional effects of a video in a new manner by recognizing emotional states in the motion stream and by providing the associated binary valence of each detected state. Kim et al. [75] built a user-independent system of emotion recognition based on ECG, EDA and skin temperature variations that could reflect the effect of emotions on the nervous system.
They designed an approach with preprocessing and feature extraction to draw the emotion-specific features from signals with short segments, and used a support vector machine as a pattern classifier to solve the classification problems, i.e., large overlap among clusters and large variance within clusters. Pour et al. [76] studied how positive, neutral and negative feedback responses from an intelligent tutoring system based on EDA, EMG and ECG signals affect learners’ physiology and emotions. The results showed that learners mostly presented expressions of delight when receiving positive feedback, but expressions of surprise when receiving negative feedback.
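Decision-level fusion of several signal modalities, as discussed above for ECG, EDA and EMG, can be sketched generically as a weighted average of per-modality class probabilities. This is only an illustration under stated assumptions, not the adaptive method of Yan et al. [71]: the weights here are fixed (for example, taken from validation accuracy), whereas an adaptive scheme would update them. The name `fuse_decisions` and all numbers are hypothetical.

```python
from typing import Dict, List


def fuse_decisions(
    probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Weighted average of per-modality class probabilities."""
    total = sum(weights[m] for m in probs)
    n_classes = len(next(iter(probs.values())))
    fused = [0.0] * n_classes
    for modality, p in probs.items():
        for k in range(n_classes):
            # Each modality contributes in proportion to its normalized weight.
            fused[k] += weights[modality] / total * p[k]
    return fused


# Hypothetical class probabilities (class 0, class 1) from three classifiers.
modality_probs = {
    "ECG": [0.6, 0.4],
    "EDA": [0.3, 0.7],
    "EMG": [0.5, 0.5],
}
# Hypothetical reliability weights, e.g. validation accuracies.
modality_weights = {"ECG": 0.8, "EDA": 0.6, "EMG": 0.6}

fused = fuse_decisions(modality_probs, modality_weights)
predicted = max(range(len(fused)), key=fused.__getitem__)
print(fused, predicted)
```

Because the weights are normalized, the fused vector remains a probability distribution, and the predicted class is simply the index with the largest fused probability.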

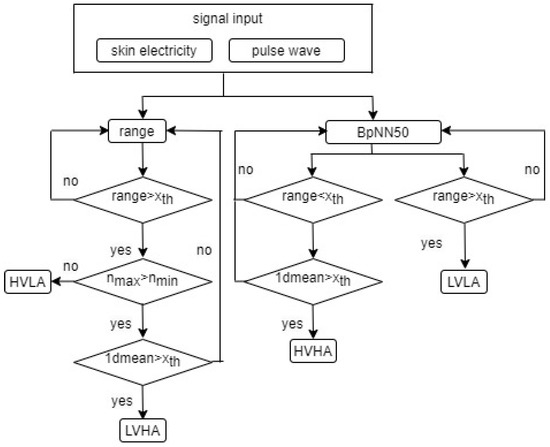

Liu et al. [77] presented an approach to determine operators’ emotional states attributed to a set of CAD design tasks based on EEG, galvanic skin resistance, and ECG signals. The stimulation and interpretation of each operator’s emotions were performed successfully, through various task action chains, with obvious correlations confirmed between the operator’s CAD experience and their emotional states. Pinto et al. [78] processed ECG, EMG and EDA signals with unimodal and multimodal methods to develop a physiological model of emotions in 55 subjects. The results showed that the ECG signal was the best in emotion classification, and fusion of all signals could provide the most effective emotion identification. Zhuang et al. [79] developed a novel approach for valence-arousal model emotion assessment with multiple physiological signals to determine human emotional states using various algorithms. It was found that the deep neural network approach could precisely identify human emotional states. Garg et al. [80] presented a model using a machine learning method for the calibration of the mood of the song according to audio signals and human emotions based on EEG, ECG, EDA and pulse wave signals. They performed extensive experiments using songs that induced different moods and emotions and tried to detect emotions with a certain degree of efficiency and accuracy. Zhuang et al. [81] built a system of emotion recognition based on EEG, ECG and EMG signals and used the T method of the Mahalanobis–Taguchi system to handle the signals and further identify emotional states. The results indicated that, although the T method only improved the accuracy on the valence state, it still identified the emotion intensity in different states. Lin et al. [82] studied a model of emotion recognition and judgment based on ECG, EDA, facial EMG and pulse wave signals monitored in 40 subjects playing a computer game.
The subjects were required to complete a questionnaire including typical scenarios appearing in the game and subjects’ evaluations of the scenarios. Relationships between the signal features and emotion states were established with a statistical approach and the questionnaire responses. They obtained a set of optimal features from the physiological EDA and pulse wave signals, reduced the feature dimensionality, chose features with a significance of 0.01 according to the relationship between the features and emotional states, and finally built a model of emotion recognition and judgment as shown in Figure 3, where BpNN50 means percentage of main wave interval > 50 ms, 1dmean is the mean value of first-order difference, HVLA is high valence-low arousal, LVHA is low valence-high arousal, HVHA is high valence-high arousal and LVLA is low valence-low arousal.

Figure 3. Process of emotion judgment model [82].
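The two features and the four valence-arousal labels named above can be made concrete with a short sketch. It rests on assumptions that the text does not confirm: BpNN50 is computed here by analogy with the pNN50 heart rate variability index, as the percentage of successive main-wave interval differences exceeding 50 ms; 1dmean is taken as the plain mean of the first-order difference; and the quadrant midpoint of 0.0 is arbitrary. All function names and sample values are hypothetical.

```python
from typing import List


def bpnn50(intervals_ms: List[float]) -> float:
    """Percentage of successive interval differences larger than 50 ms
    (assumed pNN50-style definition)."""
    diffs = [abs(b - a) for a, b in zip(intervals_ms, intervals_ms[1:])]
    if not diffs:
        return 0.0
    return 100.0 * sum(d > 50.0 for d in diffs) / len(diffs)


def first_diff_mean(signal: List[float]) -> float:
    """Mean value of the first-order difference of a signal (1dmean)."""
    diffs = [b - a for a, b in zip(signal, signal[1:])]
    return sum(diffs) / len(diffs) if diffs else 0.0


def quadrant(valence: float, arousal: float, midpoint: float = 0.0) -> str:
    """Map a (valence, arousal) pair to HVHA, HVLA, LVHA or LVLA."""
    v = "HV" if valence >= midpoint else "LV"
    a = "HA" if arousal >= midpoint else "LA"
    return v + a


# Hypothetical pulse main-wave intervals in milliseconds.
intervals = [800.0, 860.0, 850.0, 790.0, 800.0]
print(bpnn50(intervals))                        # share of large beat-to-beat changes
print(first_diff_mean([1.0, 2.0, 4.0, 7.0]))    # average sample-to-sample change
print(quadrant(0.5, -0.2))                      # high valence, low arousal
```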

Albraikan et al. [83] built a real-time emotional biofeedback system based on EDA, ECG, blood volume pulse and skin temperature signals and presented the experimental results for the system based on the above physiological signals. It was found that the system could help improve emotional self-awareness by decreasing error rate by 16.673% and 3.333% for men and women, respectively. Chen et al. [84] presented a multi-stage multimodal dynamical fusion network based on EEG, EMG and electrooculography signals to solve the problem of cross-modal interaction appearing in one-stage fusion. They obtained the joint representation according to cross-modal correlation using the presented network, which was verified on a multimodal benchmark. The experimental results showed that the method of multi-stage, multi-modal dynamical fusion was better than the related one-stage, multi-modal methods. Niu et al. [85] performed an experiment to explore subjects’ emotional experience of virtual reality by comparing feelings and ECG, EDA and skin temperature signals. The results showed that a higher arousal level was obtained for virtual reality than that for a two-dimensional (2D) case. Eye fatigue occurred easily in both the virtual reality and 2D environments, while the former was more likely to lead to symptoms of dizziness and vertigo. Subjects had higher excitement levels in the virtual reality environment as well as more frequent and stronger emotional fluctuations. Chung and Yang [86] presented an integrated system of emotion evaluation. The system, which detected and analyzed variations in human objective and emotional states in an integrative way, consisted of subsystems of real-time subjective and objective emotionality evaluation based on EEG, ECG, EDA and skin temperature. They declared that the presented system would be conducive to the construction of a human emotion evaluation index by assembling the measured data into a database. Uluer et al. 
[87] proposed an assisted robotic system for children with hearing disabilities to improve emotion identification ability based on EDA, blood volume pulse and skin temperature signals. Situations concerned with a conventional setup, a tablet, and robot + tablet were assessed with 16 subjects with cochlear implants or hearing aids. The results indicated that the collected physiological signals could be divided, and the positive and negative emotions could be more effectively distinguished when subjects interacted with the robot than in the other two setups. Kiruba and Sharmila [88] identified a preferable framework of artificial intelligence-based ensemble feature selection and a model of heterogeneous ensemble classification. Three base classifiers were applied to make decisions aimed at improving the classification performance of health-related messages. The results showed that the integration of the above framework and the model presented the highest accuracy in emotion classification based on the signal features of ECG, EDA, respiration and skin temperature. Habibifar and Salmanzadeh [89] studied the effectiveness of physiological signals, i.e., EEG, ECG, EMG and EDA, in detecting negative emotions in 43 subjects driving in different scenarios. They extracted 58 features from the physiological signals and calculated the assessment criteria for sensitivity, accuracy, specificity and precision. The results showed that the ECG and EDA signals were best in detecting negative emotions, with 88% and 90% accuracy, respectively. Hssayeni and Ghoraani [90] studied the application of ECG, EDA, EMG and respiration signals for evaluating positive and negative emotions using data-driven feature extraction. They put forward two methods of multimodal data fusion with deep convolutional neural networks and evaluated positive and negative emotions using the proposed architecture.
The results indicated that the presented model had better performance than traditional machine learning: using only two modalities, the presented model evaluated positive emotions with a correlation of 0.69 (p < 0.05) vs. 0.59 (p < 0.05) with traditional machine learning, and negative emotions with a correlation of 0.79 (p < 0.05) vs. 0.73 (p < 0.05). Chen et al. [91] proposed a new system to detect driving stress based on the features extracted from the time, spectral and wavelet domains of physiological signals including ECG, EDA and respiration. They employed sparse Bayesian learning and principal component analysis to seek optimal feature sets and used kernel-based classifiers to increase accuracy. The results showed that driver stress could be characterized by peculiar signal sets that could be utilized by in-vehicle intelligent systems in various ways to assist drivers in controlling their negative emotions while driving.
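Several of the studies above report sensitivity, accuracy, specificity and precision. As a reminder of how these criteria relate to one another, the following minimal sketch computes them from the four counts of a binary confusion matrix using their standard definitions. The function name `binary_metrics` and the counts are hypothetical and do not reproduce any result from the cited papers.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard binary classification metrics.

    Assumes every denominator is non-zero, i.e. the test set contains both
    classes and the classifier predicts the positive class at least once.
    """
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),   # true positive rate (recall)
        "specificity": tn / (tn + fp),   # true negative rate
        "precision": tp / (tp + fp),     # positive predictive value
    }


# Hypothetical counts for a "negative emotion detected" classifier.
metrics = binary_metrics(tp=40, fp=10, tn=45, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Reporting all four values matters because accuracy alone can look high on imbalanced data even when one class is rarely detected.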

Saffaryazdi et al. [92] presented an experimental setup for stimulating spontaneous emotions with a face-to-face conversation and a dataset of physiological signals from EEG, EDA and photoplethysmography, and pointed out new directions for future research in conversational emotion recognition. Ma et al. [93] studied how sex differences affected emotion recognition under three different sleep conditions using EEG and eye movements. The results showed that sleep deprivation impaired the stimulation of happy emotions and also notably weakened the ability to discriminate sad emotions in males, while females maintained the same emotions as under normal sleep. Singson et al. [94] identified and recognized the emotions of happiness, sadness, neutrality, fear and anger using facial expressions, ECG and heart rate variability, and the accuracy obtained was 68.42% based on the results from ECG. Hinduja et al. [95] studied recognizing context over time using physiological signals, and indicated that the fusion of EMG signals was more accurate compared to the fusion of non-EMG signals. Although the fusion of non-EMG signals yielded comparatively higher accuracy, ECG data resulted in the highest unimodal accuracy.

5. Ethical and Privacy Concerns Related to the Use of Physiological Signals for Emotion Recognition

Ethical and privacy issues should be given attention in emotion recognition and judgment because they relate to relevant data extracted from people along with their personal and behavioral information [96]. The former may reflect physical and psychological health, whereas the latter may include health, location, and other highly confidential information [97]. In addition, emotion research based on physiological signals could reveal the internal characteristics of individuals [98]. Therefore, ethical issues including the protection of user privacy deserve more attention. Sarma and Barma [99] indicated that such private information could be exploited, causing violations of personal rights, so ethical approval is imperative before data acquisition. Namely, ethical consent, which includes general, personal and researcher concerns, must be obtained from the participant. They also detailed the specific contents of the three levels of concern.

In daily life and work, there is a potential privacy problem with the leakage of physiological information, including physiological signals and facial expressions. Sun and Li [100] presented a new approach based on a pre-trained 3D convolutional neural network, i.e., Privacy-Phys, to modify remote photoplethysmography in facial videos for privacy. The results showed that the approach was more effective and efficient than the previous baseline. Pal et al. [101] pointed out that digital identity and identity management must be taken into consideration because of the dynamic nature and scale of the number of devices, applications, and associated services in a large-scale emotion recognition system.

Liao et al. [102] presented a new affective virtual reality system by adopting the immersion, privacy and design flexibility of virtual reality based on EEG, EDA and heart rate signals. The results proved that virtual reality scenes could achieve the same emotion elicitation as video. Preethi et al. [103] developed a complete product to use the detected emotions in a real-time application based on ECG, EMG, respiration and skin temperature data. The signals were obtained with personal wearable technology while not compromising privacy. Accuracy of 91.81% was obtained in an experiment with 150 participants.

Monitoring of the elderly using visual and physiological devices is likely to breach privacy. Reading distress emotions with speech would provide effective monitoring while preserving the privacy of the elderly. Machanje et al. [104] presented a new method where association rules drawn from speech features were used to derive the correlation between features and feed these correlations to machine learning techniques for distress detection. Chen et al. [105] presented a privacy-preserving representation-learning variational generative adversarial network to learn an image representation that was explicitly disentangled from the identity information, and the test results showed quantitatively and qualitatively that the presented approach could reach a balance between the preservation of privacy and data utility. Nakashima et al. [106] presented an image melding-based method that altered facial regions in a visually unintrusive way while preserving facial expression, and the test results indicated that the presented approach could retain facial expression while protecting privacy. Ullah et al. [107] presented an effective and robust solution for facial expression recognition under an unconstrained environment that could classify facial images in the client/server model while preserving privacy, and by validating the results on four popular and versatile databases, they elaborated that the effectiveness of the presented approach remained unchanged by preserving privacy. Can and Ersoy [108] applied federated learning, a candidate for developing high-performance models while preserving privacy, to heart activity data collected with smart bands for stress-level monitoring and achieved satisfying results for an IoT-based wearable biomedical monitoring system that preserves the privacy of the data.
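The privacy benefit of federated learning mentioned above comes from sharing model parameters rather than raw recordings. A minimal sketch of the widely used federated averaging step is given below; it is a generic illustration and not the implementation of Can and Ersoy [108]. The function name `federated_average`, the two-parameter model and the client sample counts are all hypothetical.

```python
from typing import List, Tuple


def federated_average(
    client_updates: List[Tuple[List[float], int]],
) -> List[float]:
    """Average client model weights, weighting each client by its
    number of local samples. Raw data never leaves the client."""
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    global_w = [0.0] * dim
    for weights, n in client_updates:
        for i in range(dim):
            global_w[i] += weights[i] * n / total
    return global_w


# Hypothetical locally trained weights from two wearable devices,
# paired with the number of heart-activity samples each device holds.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_weights = federated_average(updates)
print(global_weights)
```

In a full system, the server would send `global_weights` back to the devices for another round of local training, repeating until the model converges.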

6. Conclusions and Prospects

Emotion recognition and judgment based on physiological signals has been widely studied and applied. This review covers research work on and applications of emotion recognition and judgment based on individual EEG, EDA, ECG, and EMG signals and their combination in recent years. Different physiological signals have their own characteristics, so they are suitable for different occasions. As a summary, Table 4 lists the practical occasions for the use of different physiological signals in the research described in this paper, so as to understand the scenarios for the use of physiological signals for emotion recognition from a local perspective. From the previous description and Table 1, we can see that EEG is used alone in many cases, and EDA and ECG are often used in multimodal cases. Pulse wave is used less often.

Table 4. Applications of different physiological signals discussed in this paper.

Given their current status, we believe that the following, as well as other, aspects of emotion recognition and judgment based on physiological signals need further study.

A single physiological signal comprises limited information and possesses weak representation ability. Establishing data fusion models of physiological signals, seeking universal training algorithms for cross-individual conversion, and developing a criterion index for assessments of individual differences are all needed.

The influence of the external environment on people’s emotions is multifaceted. More accurate, rigorous and detailed methods of emotion stimulation and acquisition approaches based on physiological signals should be pursued in order to comprehensively reflect the impact of environmental factors on people’s emotions.

The methods of machine learning and deep learning are generally applied to emotion recognition and classification in various fields. However, physiological signals of emotion have their own properties, e.g., the time dimension and space dimension, so it is necessary to search for a more suitable approach to emotion recognition.

When theoretical achievements are used to establish systems or related products, no targeted distinction is made between functional scopes of application and object-oriented products, so more targeted models of emotion recognition and classification need to be built.

Natural emotion communication between users and systems is still lacking. In some systems, the way users communicate emotionally with the system and manipulate it according to their emotional status is imposed on them rather than arising from natural interaction.

Different people have different cognition and evaluation criteria for the same external things. Factors influencing these criteria include beliefs, ideas, experiences, personal preferences, etc. Investigations of individual cognitive distinctions and cross-individual cognitive domains based on physiological signals are still needed. A large number of different physiological signals are needed to establish models and systems that can meet the needs of users as well as possible.

Author Contributions

Conceptualization, W.L.; methodology, W.L. and C.L.; software, W.L. and C.L.; validation, C.L. and W.L.; writing, W.L. and C.L.; resources, W.L. and C.L.; review, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant no. 12132015).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

There are no conflicts of interest regarding the publication of this paper.

References

- Hussain, M.S.; Alzoubi, O.; Calvo, R.A.; D’Mello, S.K. Affect detection from multichannel physiology during learning sessions with auto tutor. Artif. Intell. Educ. 2011, 6738, 131–138.

- Paul, E.S.; Cuthill, I.; Norton, V.; Woodgate, J.; Mendl, M. Mood and the speed of decisions about anticipated resources and hazards. Evol. Hum. Behav. 2011, 32, 21–28.

- Karray, F.; Alemzadeh, M.; Saleh, J.A.; Arab, M.N. Human-computer interaction: Overview on state of the art. Int. J. Smart Sens. Intell. Syst. 2008, 1, 137–159.

- Zhang, Y.; Cheng, C.; Zhang, Y.D. Multimodal emotion recognition based on manifold learning and convolution neural network. Multimed. Tools Appl. 2022, 81, 33253–33268.

- Quispe, K.G.M.; Utyiama, D.M.S.; dos Santos, E.M.; Oliveira, H.A.B.F.; Souto, E.J. Applying self-supervised representation learning for emotion recognition using physiological signals. Sensors 2022, 22, 9102.

- Dasdemir, Y. Cognitive investigation on the effect of augmented reality-based reading on emotion classification performance: A new dataset. Biomed. Signal Process. Control 2022, 78, 103942.

- Hernandez-Melgarejo, G.; Luviano-Juarez, A.; Fuentes-Aguilar, R.Q. A framework to model and control the state of presence in virtual reality systems. IEEE Trans. Affect. Comput. 2022, 13, 1854–1867.

- Dissanayake, V.; Seneviratne, S.; Rana, R.; Wen, E.; Kaluarachchi, T.; Nanayakkara, S. SigRep: Toward robust wearable emotion recognition with contrastive representation learning. IEEE Access 2022, 10, 18105–18120.

- Lee, Y.K.; Pae, D.S.; Hong, D.K.; Lim, M.T.; Kang, T.K. Emotion recognition with short-period physiological signals using bimodal sparse autoencoders. Intell. Autom. Soft Comput. 2022, 32, 657–673.

- Pusarla, N.; Singh, A.; Tripathi, S. Learning DenseNet features from EEG based spectrograms for subject independent emotion recognition. Biomed. Signal Process. Control 2022, 74, 103485.

- Moin, A.; Aadil, F.; Ali, Z.; Kang, D.W. Emotion recognition framework using multiple modalities for an effective human-computer interaction. J. Supercomput. 2023.

- Kim, S.H.; Yang, H.J.; Nguyen, N.A.T.; Prabhakar, S.K.; Lee, S.W. WeDea: A new EEG-based framework for emotion recognition. IEEE J. Biomed. Health Inform. 2022, 26, 264–275.

- Romeo, L.; Cavallo, A.; Pepa, L.; Bianchi-Berthouze, N.; Pontil, M. Multiple instance learning for emotion recognition using physiological signals. IEEE Trans. Affect. Comput. 2022, 13, 389–407.

- Mert, A. Modality encoded latent dataset for emotion recognition. Biomed. Signal Process. Control 2023, 79, 104140.

- Fu, Z.Z.; Zhang, B.N.; He, X.R.; Li, Y.X.; Wang, H.Y.; Huang, J. Emotion recognition based on multi-modal physiological signals and transfer learning. Front. Neurosci. 2022, 16, 1000716.

- Pusarla, N.; Singh, A.; Tripathi, S. Normal inverse Gaussian features for EEG-based automatic emotion recognition. IEEE Trans. Instrum. Meas. 2022, 71, 6503111.

- Katada, S.; Okada, S. Biosignal-based user-independent recognition of emotion and personality with importance weighting. Multimed. Tools Appl. 2022, 81, 30219–30241.

- Hasnul, M.A.; Ab Aziz, N.A.; Abd Aziz, A. Augmenting ECG data with multiple filters for a better emotion recognition system. Arab. J. Sci. Eng. 2023, 1–22.

- Shi, H.; Zhao, H.; Yao, W. A transfer fusion framework for body sensor networks (BSNs): Dynamic domain adaptation from distribution evaluation to domain evaluation. Inf. Fusion 2023, 91, 338–351.

- Anuragi, A.; Sisodia, D.S.; Pachori, R.B. EEG-based cross-subject emotion recognition using Fourier-Bessel series expansion based empirical wavelet transform and NCA feature selection method. Inf. Sci. 2022, 610, 508–524.

- Asiain, D.; de Leon, J.P.; Beltran, J.R. MsWH: A multi-sensory hardware platform for capturing and analyzing physiological emotional signals. Sensors 2022, 22, 5775.

- Zontone, P.; Affanni, A.; Bernardini, R.; Del Linz, L.; Piras, A.; Rinaldo, R. Analysis of physiological signals for stress recognition with different car handling setups. Electronics 2022, 11, 888.

- Xie, L.P.; Lu, C.H.; Liu, Z.; Chen, W.; Zhu, Y.W.; Xu, T. The evaluation of automobile interior acceleration sound fused with physiological signal using a hybrid deep neural network. Mech. Syst. Signal Process. 2023, 184, 109675.

- Yang, M.Q.; Lin, L.; Milekic, S. Affective image classification based on user eye movement and EEG experience information. Interact. Comput. 2018, 30, 417–432.

- Yoon, H.J.; Chung, S.Y. EEG-based emotion estimation using Bayesian weighted-log-posterior function and perception convergence algorithm. Comput. Biol. Med. 2013, 43, 2230–2237.

- Andreu-Perez, A.R.; Kiani, M.; Andreu-Perez, J.; Reddy, P.; Andreu-Abela, J.; Pinto, M.; Izzetoglu, K. Single-trial recognition of video gamer’s expertise from brain haemodynamic and facial emotion responses. Brain Sci. 2021, 11, 106.

- Zhang, J.; Zhou, Y.T.; Liu, Y. EEG-based emotion recognition using an improved radial basis function neural network. J. Ambient Intell. Humaniz. Comput. 2020, 5.

- Chew, L.H.; Teo, J.; Mountstephens, J. Aesthetic preference recognition of 3D shapes using EEG. Cogn. Neurodynamics 2016, 10, 165–173.

- Chanel, G.; Kronegg, J.; Grandjean, D.; Pun, T. Emotion assessment: Arousal evaluation using EEG’s and peripheral physiological signals. Multimed. Content Represent. Classif. Secur. 2006, 4105, 530–537.

- Wagh, K.P.; Vasanth, K. Performance evaluation of multi-channel electroencephalogram signal (EEG) based time frequency analysis for human emotion recognition. Biomed. Signal Process. Control 2022, 78, 103966.

- Ozdemir, M.A.; Degirmenci, M.; Izci, E.; Akan, A. EEG-based emotion recognition with deep convolutional neural networks. Biomed. Eng.-Biomed. Tech. 2021, 66, 43–57.

- Abadi, M.K.; Subramanian, R.; Kia, S.M.; Avesani, P.; Patras, I.; Sebe, N. DECAF: MEG-based multimodal database for decoding affective physiological responses. IEEE Trans. Affect. Comput. 2015, 6, 209–222.

- Tang, Z.C.; Li, X.T.; Xia, D.; Hu, Y.D.; Zhang, L.T.; Ding, J. An art therapy evaluation method based on emotion recognition using EEG deep temporal features. Multimed. Tools Appl. 2022, 81, 7085–7101.

- Soroush, M.Z.; Maghooli, K.; Setarehdan, S.K.; Nasrabadi, A.M. Emotion recognition using EEG phase space dynamics and Poincare intersections. Biomed. Signal Process. Control 2020, 59, 101918.

- Halim, Z.; Rehan, M. On identification of driving-induced stress using electroencephalogram signals: A framework based on wearable safety-critical scheme and machine learning. Inf. Fusion 2020, 53, 66–79.

- Lu, M.L.; Hu, S.T.; Mao, Z.; Liang, P.; Xin, S.; Guan, H.Y. Research on work efficiency and light comfort based on EEG evaluation method. Build. Environ. 2020, 183, 107122.

- Balan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Fear level classification based on emotional dimensions and machine learning techniques. Sensors 2019, 19, 1738. [Google Scholar] [CrossRef]

- Al Hammadi, A.Y.; Yeun, C.Y.; Damiani, E.; Yoo, P.D.; Hu, J.K.; Yeun, H.K.; Yim, M.S. Explainable artificial intelligence to evaluate industrial internal security using EEG signals in IoT framework. AD Hoc Netw. 2021, 123, 102641. [Google Scholar] [CrossRef]

- Guo, F.; Li, M.M.; Hu, M.C.; Li, F.X.; Lin, B.Z. Distinguishing and quantifying the visual aesthetics of a product: An integrated approach of eye-tracking and EEG. Int. J. Ind. Ergon. 2019, 71, 47–56. [Google Scholar] [CrossRef]

- Priyasad, D.; Fernando, T.; Denman, S.; Sridharan, S.; Fookes, C. Affect recognition from scalp-EEG using channel-wise encoder networks coupled with geometric deep learning and multi-channel feature fusion. Knowl. -Based Syst. 2022, 250, 109038. [Google Scholar] [CrossRef]

- Xie, L.P.; Lu, C.H.; Liu, Z.E.; Yan, L.R.; Xu, T. Study of auditory brain cognition laws-based recognition method of automobile sound quality. Front. Hum. Neurosci. 2021, 15, 663049. [Google Scholar] [CrossRef]

- Yan, L.X.; Wan, P.; Qin, L.Q.; Zhu, D.Y. The induction and detection method of angry driving evidences from EEG and physiological signals. Discret. Dyn. Nat. Soc. 2018, 2018, 3702795. [Google Scholar] [CrossRef]

- Li, J.J.; Wang, Q.A. Review of individual differences from transfer learning. Her. Russ. Acad. Sci. 2022, 92, 549–557. [Google Scholar] [CrossRef]

- Mohsen, S.; Alharbi, A.G. EEG-based human emotion prediction using an LSTM model. In Proceedings of the 2021 IEEE International Midwest Symposium on Circuits and Systems, Lansing, MI, USA, 9–11 August 2021; pp. 458–461. [Google Scholar]

- Liapis, A.; Katsanos, C.; Sotiropoulos, D.G.; Karousos, N.; Xenos, M. Stress in interactive applications: Analysis of the valence-arousal space based on physiological signals and self-reported data. Multimed. Tools Appl. 2017, 76, 5051–5071. [Google Scholar] [CrossRef]

- Feng, H.H.; Golshan, H.M.; Mahoor, M.H. A wavelet-based approach to emotion classification using EDA signals. Expert Syst. Appl. 2018, 112, 77–86. [Google Scholar] [CrossRef]

- van der Zwaag, M.D.; Janssen, J.H.; Westerink, J.H.D.M. Directing physiology and mood through music: Validation of an affective music player. IEEE Trans. Affect. Comput. 2013, 4, 57–68. [Google Scholar] [CrossRef]

- Lin, W.Q.; Li, C.; Zhang, Y.J. Interactive application of dataglove based on emotion recognition and judgment system. Sensors 2022, 22, 6327. [Google Scholar] [CrossRef] [PubMed]

- Hu, Q.W.; Li, X.H.; Fang, H.; Wan, Q. The tactile perception evaluation of wood surface with different roughness and shapes: A study using galvanic skin response. Wood Res. 2022, 67, 311–325. [Google Scholar] [CrossRef]

- Yin, G.H.; Sun, S.Q.; Yu, D.A.; Li, D.J.; Zhang, K.J. A multimodal framework for large-scale emotion recognition by fusing music and electrodermal activity signals. ACM Trans. Multimed. Comput. Commun. Appl. 2022, 18, 78. [Google Scholar] [CrossRef]

- Romaniszyn-Kania, P.; Pollak, A.; Danch-Wierzchowska, M.; Kania, D.; Mysliwiec, A.P.; Pietka, E.; Mitas, A.W. Hybrid system of emotion evaluation in physiotherapeutic procedures. Sensors 2020, 20, 6343. [Google Scholar] [CrossRef]

- Sepulveda, A.; Castillo, F.; Palma, C.; Rodriguez-Fernandez, M. Emotion recognition from ECG signals using wavelet scattering and machine learning. Appl. Sci. 2021, 11, 4945. [Google Scholar] [CrossRef]

- Wang, X.Y.; Guo, Y.Q.; Ban, J.; Xu, Q.; Bai, C.L.; Liu, S.L. Driver emotion recognition of multiple-ECG-feature fusion based on BP network and D-S evidence. IET Intell. Transp. Syst. 2020, 14, 815–824. [Google Scholar] [CrossRef]

- Wu, M.H.; Chang, T.C. Evaluation of effect of music on human nervous system by heart rate variability analysis using ECG sensor. Sens. Mater. 2021, 33, 739–753. [Google Scholar] [CrossRef]

- Fu, Y.J.; Leong, H.V.; Ngai, G.; Huang, M.X.; Chan, S.C.F. Physiological mouse: Toward an emotion-aware mouse. Univers. Access Inf. Soc. 2017, 16, 365–379. [Google Scholar] [CrossRef]

- Hu, J.Y.; Li, Y. Electrocardiograph based emotion recognition via WGAN-GP data enhancement and improved CNN. In Proceedings of the 15th International Conference on Intelligent Robotics and Applications-Smart Robotics for Society, Intelligent Robotics and Applications, Harbin, China, 1–3 August 2022; pp. 155–164. [Google Scholar]

- Bornemann, B.; Winkielman, P.; van der Meer, E. Can you feel what you do not see? Using internal feedback to detect briefly presented emotional stimuli. Int. J. Psychophysiol. 2012, 85, 116–124. [Google Scholar] [CrossRef]

- Du, G.L.; Long, S.Y.; Yuan, H. Non-contact emotion recognition combining heart rate and facial expression for interactive gaming environments. IEEE Access 2020, 8, 11896–11906. [Google Scholar] [CrossRef]

- Lin, W.Q.; Li, C.; Sun, S.Q. Deep convolutional neural network for emotion recognition using EEG and peripheral physiological signal. Lect. Notes Comput. Sci. 2017, 10667, 385–394. [Google Scholar]

- Lin, W.Q.; Li, C.; Zhang, Y.J. Emotion visualization system based on physiological signals combined with the picture and scene. Inf. Vis. 2022, 21, 393–404. [Google Scholar] [CrossRef]

- Wu, Q.; Dey, N.; Shi, F.Q.; Crespo, R.G.; Sherratt, R.S. Emotion classification on eye-tracking and electroencephalograph fused signals employing deep gradient neural networks. Appl. Soft Comput. 2021, 110, 107752. [Google Scholar] [CrossRef]

- Tang, Z.C.; Xia, D.; Li, X.T.; Wang, X.Y.; Ying, J.C.; Yang, H.C. Evaluation of the effect of music on idea generation using electrocardiography and electroencephalography signals. Int. J. Technol. Des. Educ. 2022. [Google Scholar] [CrossRef]

- Singh, R.R.; Conjeti, S.; Banerjee, R. A comparative evaluation of neural network classifiers for stress level analysis of automotive drivers using physiological signals. Biomed. Signal Process. Control 2013, 8, 740–754. [Google Scholar] [CrossRef]

- Katsigiannis, S.; Ramzan, N. DREAMER: A database for emotion recognition through EEG and ECG signals from wireless low-cost off-the-shelf devices. IEEE J. Biomed. Health Inform. 2018, 22, 98–107. [Google Scholar] [CrossRef] [PubMed]

- Laparra-Hernandez, J.; Belda-Lois, J.M.; Medina, E.; Campos, N.; Poveda, R. EMG and GSR signals for evaluating user’s perception of different types of ceramic flooring. Int. J. Ind. Ergon. 2009, 39, 326–332. [Google Scholar] [CrossRef]

- Zhang, X.W.; Liu, J.Y.; Shen, J.; Li, S.J.; Hou, K.C.; Hu, B.; Gao, J.; Zhang, T. Emotion recognition from multimodal physiological signals using a regularized deep fusion of kernel machine. IEEE Trans. Cybern. 2021, 51, 4386–4399. [Google Scholar] [CrossRef]

- Jang, E.H.; Byun, S.; Park, M.S.; Sohn, J.H. Reliability of physiological responses induced by basic emotions: A pilot study. J. Physiol. Anthropol. 2019, 38, 15. [Google Scholar] [CrossRef]

- Yoo, G.; Seo, S.; Hong, S.; Kim, H. Emotion extraction based on multi bio-signal using back-propagation neural network. Multimed. Tools Appl. 2018, 77, 4925–4937. [Google Scholar] [CrossRef]

- Khezri, M.; Firoozabadi, M.; Sharafat, A.R. Reliable emotion recognition system based on dynamic adaptive fusion of forehead bio-potentials and physiological signals. Comput. Methods Programs Biomed. 2015, 122, 149–164. [Google Scholar] [CrossRef]

- Zhou, F.; Qu, X.D.; Jiao, J.X.; Helander, M.G. Emotion prediction from physiological signals: A comparison study between visual and auditory elicitors. Interact. Comput. 2014, 26, 285–302. [Google Scholar] [CrossRef]

- Yan, M.S.; Deng, Z.; He, B.W.; Zou, C.S.; Wu, J.; Zhu, Z.J. Emotion classification with multichannel physiological signals using hybrid feature and adaptive decision fusion. Biomed. Signal Process. Control 2022, 71, 103235. [Google Scholar] [CrossRef]

- Anolli, L.; Mantovani, F.; Mortillaro, M.; Vescovo, A.; Agliati, A.; Confalonieri, L.; Realdon, O.; Zurloni, V.; Sacchi, A. A multimodal database as a background for emotional synthesis, recognition and training in e-learning systems. Lect. Notes Comput. Sci. 2005, 3784, 566–573. [Google Scholar]

- Picard, R.W.; Vyzas, E.; Healey, J. Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1175–1191. [Google Scholar] [CrossRef]

- Fleureau, J.; Guillotel, P.; Quan, H.T. Physiological-based affect event detector for entertainment video applications. IEEE Trans. Affect. Comput. 2012, 3, 379–385. [Google Scholar] [CrossRef]

- Kim, K.H.; Bang, S.W.; Kim, S.R. Emotion recognition system using short-term monitoring of physiological signals. Med. Biol. Eng. Comput. 2004, 42, 419–427. [Google Scholar] [CrossRef] [PubMed]

- Pour, P.A.; Hussain, M.S.; AlZoubi, O.; D’Mello, S.; Calvo, R.A.; Aleven, V.; Kay, J.; Mostow, J. The impact of system feedback on learners’ affective and physiological states. Intell. Tutoring Syst. Proc. 2010, 6094 Pt 1, 264–272. [Google Scholar]

- Liu, Y.; Ritchie, J.M.; Lim, T.; Kosmadoudi, Z.; Sivanathan, A.; Sung, R.C.W. A fuzzy psycho-physiological approach to enable the understanding of an engineer’s affect status during CAD activities. Comput.-Aided Des. 2014, 54, 19–38. [Google Scholar] [CrossRef]

- Pinto, G.; Carvalho, J.M.; Barros, F.; Soares, S.C.; Pinho, A.J.; Bras, S. Multimodal emotion evaluation: A physiological model for cost-effective emotion classification. Sensors 2020, 20, 3510. [Google Scholar] [CrossRef]

- Zhuang, J.R.; Guan, Y.J.; Nagayoshi, H.; Yuge, L.; Lee, H.H.; Tanaka, E. Two-dimensional emotion evaluation with multiple physiological signals. Adv. Affect. Pleasurable Des. 2019, 774, 158–168. [Google Scholar]

- Garg, A.; Chaturvedi, V.; Kaur, A.B.; Varshney, V.; Parashar, A. Machine learning model for mapping of music mood and human emotion based on physiological signals. Multimed. Tools Appl. 2022, 81, 5137–5177. [Google Scholar] [CrossRef]

- Zhuang, J.R.; Guan, Y.J.; Nagayoshi, H.; Muramatsu, K.; Watanuki, K.; Tanaka, E. Real-time emotion recognition system with multiple physiological signals. J. Adv. Mech. Des. Syst. Manuf. 2019, 13, JAMDSM0075. [Google Scholar] [CrossRef]

- Lin, W.Q.; Li, C.; Zhang, Y.M. Model of emotion judgment based on features of multiple physiological signals. Appl. Sci. 2022, 12, 4998. [Google Scholar] [CrossRef]

- Albraikan, A.; Hafidh, B.; El Saddik, A. iAware: A real-time emotional biofeedback system based on physiological signals. IEEE Access 2018, 6, 78780–78789. [Google Scholar] [CrossRef]

- Chen, S.H.; Tang, J.J.; Zhu, L.; Kong, W.Z. A multi-stage dynamical fusion network for multimodal emotion recognition. Cogn. Neurodynamics 2022. [Google Scholar] [CrossRef]

- Niu, Y.F.; Wang, D.L.; Wang, Z.W.; Sun, F.; Yue, K.; Zheng, N. User experience evaluation in virtual reality based on subjective feelings and physiological signals. J. Imaging Sci. Technol. 2019, 63, 060413. [Google Scholar] [CrossRef]

- Chung, S.C.; Yang, H.K. A real-time emotionality assessment (RTEA) system based on psycho-physiological evaluation. Int. J. Neurosci. 2008, 118, 967–980. [Google Scholar] [CrossRef]

- Uluer, P.; Kose, H.; Gumuslu, E.; Barkana, D.E. Experience with an affective robot assistant for children with hearing disabilities. Int. J. Soc. Robot. 2021. [Google Scholar] [CrossRef]

- Kiruba, K.; Sharmila, D. AIEFS and HEC based emotion estimation using physiological measurements for the children with autism spectrum disorder. Biomed. Res. 2016, 27, S237–S250. [Google Scholar]

- Habibifar, N.; Salmanzadeh, H. Improving driving safety by detecting negative emotions with biological signals: Which is the best? Transp. Res. Rec. 2022, 2676, 334–349. [Google Scholar] [CrossRef]

- Hssayeni, M.D.; Ghoraani, B. Multi-modal physiological data fusion for affect estimation using deep learning. IEEE Access 2021, 9, 21642–21652. [Google Scholar] [CrossRef]

- Chen, L.L.; Zhao, Y.; Ye, P.F.; Zhang, J.; Zou, J.Z. Detecting driving stress in physiological signals based on multimodal feature analysis and kernel classifiers. Expert Syst. Appl. 2017, 85, 279–291. [Google Scholar] [CrossRef]

- Saffaryazdi, N.; Goonesekera, Y.; Saffaryazdi, N.; Hailemariam, N.D.; Temesgen, E.G.; Nanayakkara, S.; Broadbent, E.; Billinghurst, M. Emotion recognition in conversations using brain and physiological signals. In Proceedings of the 27th International Conference on Intelligent User Interfaces, University Helsinki, Electricity Network, Helsinki, Finland, 22–25 March 2022; pp. 229–242. [Google Scholar]

- Ma, R.X.; Yan, X.; Liu, Y.Z.; Li, H.L.; Lu, B.L. Sex difference in emotion recognition under sleep deprivation: Evidence from EEG and eye-tracking. In Proceedings of the IEEE Engineering in Medicine and Biology Society Conference Proceedings, Virtual Conference, 1–5 November 2021; pp. 6449–6452. [Google Scholar]

- Singson, L.N.B.; Sanchez, M.T.U.R.; Villaverde, J.F. Emotion recognition using short-term analysis of heart rate variability and ResNet architecture. In Proceedings of the 13th International Conference on Computer and Automation Engineering, Melbourne, VIC, Australia, 20–22 March 2021; pp. 15–18. [Google Scholar]

- Hinduja, S.; Kaur, G.; Canavan, S. Investigation into recognizing context over time using physiological signals. In Proceedings of the 9th International Conference on Affective Computing and Intelligent Interaction (ACII), Nara, Japan, 28 September–1 October 2021. [Google Scholar]

- Keller, H.E.; Lee, S. Ethical issues surrounding human participants research using the Internet. Ethics Behav. 2003, 13, 211–221. [Google Scholar] [CrossRef]

- Cowie, R. Ethical issues in affective computing. In The Oxford Handbook of Affective Computing (Oxford Library of Psychology); Oxford University Press: New York, NY, USA, 2015; p. 334. [Google Scholar]

- Giardino, W.J.; Eban-Rothschild, A.; Christoffel, D.J.; Li, S.B.; Malenka, R.C.; de Lecea, L. Parallel circuits from the bed nuclei of stria terminalis to the lateral hypothalamus drive opposing emotional states. Nat. Neurosci. 2018, 21, 1084–1095. [Google Scholar] [CrossRef] [PubMed]

- Sarma, P.; Barma, S. Review on stimuli presentation for affect analysis based on EEG. IEEE Access 2020, 8, 51991–52009. [Google Scholar] [CrossRef]

- Sun, Z.D.; Li, X.B. Privacy-phys: Facial video-based physiological modification for privacy protection. IEEE Signal Process. Lett. 2022, 29, 1507–1511. [Google Scholar] [CrossRef]

- Pal, S.; Mukhopadhyay, S.; Suryadevara, N. Development and progress in sensors and technologies for human emotion recognition. Sensors 2021, 21, 5554. [Google Scholar] [CrossRef] [PubMed]

- Liao, D.; Shu, L.; Liang, G.D.; Li, Y.X.; Zhang, Y.; Zhang, W.Z.; Xu, X.M. Design and evaluation of affective virtual reality system based on multimodal physiological signals and self-assessment manikin. IEEE J. Electromagn. RF Microw. Med. Biol. 2020, 4, 216–224. [Google Scholar] [CrossRef]

- Preethi, M.; Nagaraj, S.; Mohan, P.M. Emotion based media playback system using PPG signal. In Proceedings of the 6th International Conference on Wireless Communications, Signal Processing and Networking, Chennai, India, 25–27 March 2021; pp. 426–430. [Google Scholar]