From Cyber–Physical Convergence to Digital Twins: A Review on Edge Computing Use Case Designs

Abstract

1. Introduction

1.1. Research Motivation

- Network Maturity and Diversity: The ongoing deployment of 5G networks shows that wireless communication has matured greatly relative to the previous two generations in terms of coverage and offered services. Alongside this maturity, networks have reached a high level of diversity, which can be viewed from several perspectives.

- From the technology perspective, millimeter wave (mmWave) communications and network backhaul technology are the main aspects that determine network maturity in the evolution towards 6G. This perspective comprises three significant classes of use case scenarios: (i) extreme/enhanced mobile broadband (eMBB), (ii) ultra-reliable low-latency communication (URLLC), and (iii) massive machine-type communication (mMTC). These network augmentations, and combinations of their usage scenarios, are expected to accelerate the realization of 6G networks [7].

- From the element management system (EMS) and business perspectives, the relevant aspects concern business models and include (i) ecosystem maturity, (ii) coordination of industry verticals, and (iii) regulation, including spectrum management and fragmentation.

- From the network intelligence perspective, 6G networks can be viewed as a distributed neural system that connects (i) the physical, (ii) the cyber, and (iii) the biological worlds, ushering in an era in which all network operations are connected and intelligent [3].

These perspectives of network maturity and diversity have laid a solid foundation for pervasive intelligence.

- Network Flexibility Towards Digital Transformation: With the introduction of AI-based enabling technologies, digital transformation is becoming a reality. The idea of digital transformation is to orchestrate network automation technologies so that design and maintenance processes become easier to understand and apply [8]. As big data becomes further entrenched through the growth of digitally available data, new methodologies are needed to model and understand the transient behavior of network systems. Consequently, as network data operations continue to grow, network data analytics must be incorporated into the operation of wireless networks, especially the network components that push large amounts of multimedia traffic to the internet [4]. Effective ways to improve the computational capabilities of the relevant network infrastructure are therefore needed to meet the unprecedented computational demands of future network users. Current network design and control methods based on deep neural network (DNN) architectures are already insufficient for some 5G problems, so they are unlikely to be adequate for the “tsunami” of use cases and multi-platform environments that 6G networks will bring. Network infrastructure for handling big data should therefore become a major priority for enterprise executives. Although AI strategies with diverse capabilities have improved connectivity and network flexibility in dynamic virtual environments, rapid scalability is still needed to handle the intermittent nature of big data loads [9].

1.2. Novelty and Summary of Contributions

- Blurring of Lines and the Cyber–Physical Convergence: With the convergence of several disciplines, research in wireless and mobile communications has become an interdisciplinary field shaped by several interacting dimensions. This is called the blurring of lines [11], i.e., the blurring of the traditional boundaries between the digital, physical, and biological worlds, which has led to cyber–physical convergence. The fourth industrial revolution (4IR) is a set of technological advancements that exploit the convergence of these technologies. As the lines between different research fields continue to blur, MNOs have already begun moving beyond traditional services, such as voice, to monetize new valuable assets such as data and multimedia content. This contribution therefore shows how digital transformation is blurring the lines between these interacting dynamics, which build up and finally converge into reinforcement learning (RL), a concept rooted in behavioral psychology. The proxies of cyber–physical convergence are then discussed in terms of (i) quantum physics and quantum computing and (ii) data science and big data.

- Adoption of Cyber–Physical Convergence Towards Realizing DTs: Since AI strategies have already begun shaping an increasing range of industry sectors, their potential contribution to sustainable development is expected to affect the global operation of the telecommunication industry in both the short and the long term. In this way, network operators will be able to profitably manage and operate dizzyingly complex next-generation IoT networks [12]. However, there is currently no published research that systematically assesses the extent to which AI strategies will affect all aspects of sustainable development. Given the sustainable development goals agreed upon in the 2030 international agenda, telecommunication companies are under immense pressure to properly leverage AI for 6G networks. IoT and digital transformation require high levels of intelligence to improve efficiency and increase profitability. Efforts to address this pressure resulted in the cognitive Internet of people, processes, data, and things (CIoPPD&T) paradigm, which Cisco has described as a monster paradigm. In terms of digital transformation, the CIoPPD&T is an industry-ripe paradigm for AI-driven solutions, in which MNOs have already begun to experiment with solution development and deployment [13]. The main aim of this contribution is to leverage AI capabilities for fast, scalable interpretation, analytics, and prediction in order to drive the adoption of cyber–physical convergence towards the realization of DTs.

- Edge-Based Big Data-Inspired Digital Twin: As the IoT, intelligent networks, and social media become increasingly prevalent, data volumes are growing explosively, and the velocity at which data are generated has a profound impact on society and social interactions. Big data has thus already been woven into the fabric of everyday operations [14]. Application-level data have quickly become the primary source of mobile big data, and these data can reach terabytes in volume [15]. Researchers and industry experts envisage that, through the use of network big data, 6G networking will bring communication closer to the 2030 vision, the Internet of Everything (IoE) [9]. Therefore, as big data floods the wireless communication enterprise, data-aided models may help extract key insights from network data that improve predictive analytics. Meanwhile, cyber–physical systems are a key concept of the 4IR architecture. The physical and software components of cyber–physical systems are deeply intertwined and can operate on different spatial and temporal scales; that is, they can (i) exhibit multiple and distinct behavioral modalities and (ii) interact with one another in ways that change with context. As the integration of big data technology and DTs continues to cover a wide range of applications in wireless communication, the main aim of this contribution is to discuss the application of big data computing and big data analytics in DTs. However, big data is a double-edged sword: while it presents opportunities for enterprise development, it simultaneously brings challenges. This discussion therefore focuses only on the application of big data in predictive analytics (the predictive DT) and in day 3 edge network operations.

- Digital Twin-inspired Vehicle-to-Edge (V2E) 6G Use Case: AI has already surpassed expectations in opening up different possibilities for machines to collaborate in digital transformation. Edge computing, as a new interdisciplinary paradigm of edge intelligence, performs computations close to where data are generated in order to reduce latency, improve service availability, and save system bandwidth [16]. Because edge computing and AI promise to bring intelligence to the edge of the network, they have received tremendous research interest from the vehicular communication community. Therefore, to advance DT technology at the edge for URLLC processes, a vehicular communication use case is considered, namely a cellular vehicle-to-edge (C-V2E) DT. Assuming that advisory information for vehicle control is provided by advanced driver assistance systems (ADAS), this contribution elaborates on the DT concept at the edge by systematically splitting the DT design into (i) requirements, (ii) AI agent, (iii) mapping, (iv) central controller, and (v) inter-twin communication. With regard to current and next-generation computational intelligence, this DT concept is discussed together with a prospective deployment strategy based on an open radio access network (Open RAN), tailored to meet the monitoring and control requirements of 6G edge computing networks.

1.3. Organization of the Article

2. Challenges Facing Digital Transformation

2.1. Lack of Interactive Processing

2.2. Existence of Long-Range Algorithmic Dependencies

2.3. Tentative Solutions to the Digital Transformation Challenges

2.3.1. Introducing Cutting-Edge AI Algorithms

2.3.2. Introducing Big Data Computing Tools

3. The Pillars of the CIoPPD&T Paradigm

3.1. Cognitive Science and Cognition

3.2. The Internet and the People

3.3. The Processes and the Data

3.4. The Things

4. The Interacting Dimensions of this Complex Socio-Technical Ecosystem

4.1. Information and Communications Technology

4.2. Group Dynamics and User Behavior

4.2.1. Describing User Behavior

4.2.2. Group Dynamics in Cognitive Radio Networks

4.3. Behavioral and Cognitive Psychology, Micro- and Behavioral Economics

4.4. Nature-Inspired Computational Approaches, Neurosciences, and Neural Computing

4.5. Statistical and Discrete Mathematics

4.5.1. Learning and Queuing Theory in Stochastic Optimization

4.5.2. Learning and Graph Theory for Deep Learning

4.6. Quantum Physics, Quantum Computing, and Quantum Machine Learning

4.7. Data, Data Science, and Big Data Analytics

4.8. The Proxies of Cyber–Physical Convergence

4.8.1. The Human Proxy and User Experience Proxy

4.8.2. Integrating the Human Proxy with Deep Reinforcement Learning

5. Overview of the AI Market and Current State of the Telecommunications Industry

5.1. Current State of the Telecommunications Industry

5.1.1. Consumer Expansion into the Content Arena

5.1.2. The Rise of Over-the-Top (OTT) Services

5.1.3. Content-Based Applications and Social Networking

5.1.4. Looking beyond Network Connectivity—The Confluence

6. The Digital Twin Technology

6.1. Digital Twins as a Concept of the CIoPPD&T

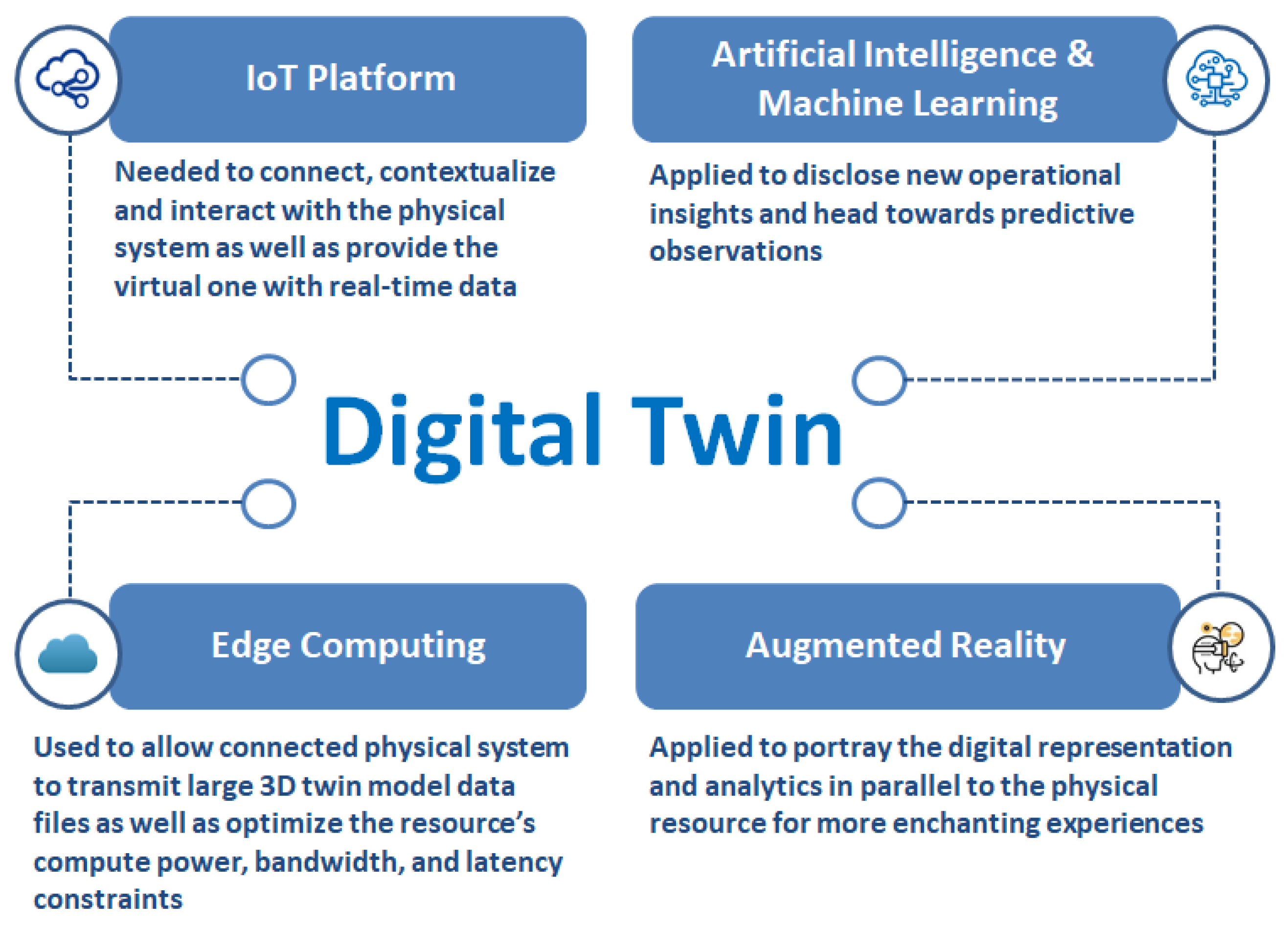

- IoT Platform: The incorporation of the IoT platform in the DT architecture is to enable connection, contextualization, and interaction with the physical system as well as to provide the virtual one with real-time data.

- Artificial Intelligence and Machine Learning: AI and ML are critical DT components and are expected to play crucial roles in the self-organization, self-healing, and self-configuration of 6G networks. These capabilities will be further enhanced by other cutting-edge technologies such as quantum machine learning (QML) and blockchain. Through these technologies, the digital counterpart of the real network will be able to provide seamless monitoring, analysis, evaluation, and prediction.

- Edge Computing: Edge computing, preferably combined with distributed computing, allows connected physical systems to transmit large 3D twin model data files and to optimize (i) computing power and (ii) bandwidth under (iii) latency constraints.

- Augmented Reality: Augmented/virtual reality (A/VR) is applied to portray the digital representation and analytics alongside the physical resources, providing more immersive experiences.

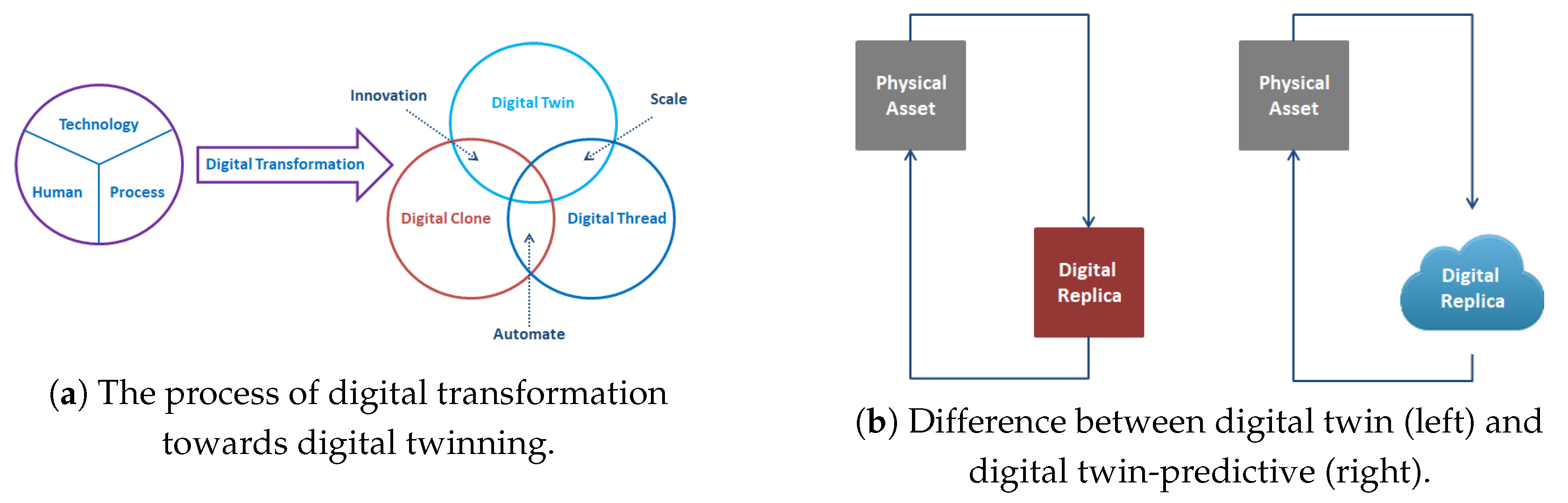

6.2. The Digital Transformation

- Digital Clone: This is an emerging technology in which deep learning (DL) algorithms are used to create or manipulate hyper-realistic media, i.e., audio, photos, and videos. With various organizations making digital cloning technology available to the public, functionalities such as audio-visual, memory, personality, and consumer behavior cloning can be realized [97]. A consumer behavior clone, a DT version of user behavior, can be a user profile or a cluster of customers based on their demographics. As can be seen in Figure 3a above, innovation is realized when DTs and digital cloning are integrated.

- Digital Thread: Since the digital thread aims to provide digitization and traceability of an asset throughout its lifespan, it can be regarded as the foundation of digital transformation [98]. This is made possible by linking all the DT capabilities, such as (i) the design, (ii) the performance data, (iii) the product data, (iv) the supply chain data, and (v) the software used to create the product. A digital thread, together with other DTs, can therefore be used to trace design requirements, records, and all the data used.

- Digital Replica: As shown in Figure 3b, a DT is a digital replica of a physical asset with a two-way dynamic mapping between the physical asset and its DT. The replica consists of a structure of connected elements and meta-information.

- Digital Shadow: The definition of the digital shadow can be based on its purpose and existing relationship with the corresponding DT [99]. When the design objective is to serve a specific purpose, the digital shadow operates in isolation from the DT. In this way, the digital shadow provides a blueprint of the required data, its sources, the relationships between the various pieces of the data needed, as well as any data manipulations that need to be performed. The data are either forwarded directly from the digital shadow to the DT or the digital shadow performs some pre-processing and/or simulations itself. Therefore, based on the data that are delivered by the shadow, the DT integrates them into a complete digital reality for detailed processing, simulation, and analysis.

- Digital Twin-Predictive: With reference to Figure 3b, the ultimate goal of a predictive twin goes beyond that of the DT: it aims at further analysis for prediction and individualization through the use of big data and ML in cyberspace. A DT-predictive is therefore a digital replica that uses bidirectional (two-way) real-time data communication over cyberspace.
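The difference between a digital shadow (one-way, physical to digital) and a digital twin (two-way) can be illustrated with a minimal sketch. The class names, the moving-average pre-processing, the setpoint rule, and the linear extrapolation used as the "predictive" step are all hypothetical placeholders, not the design of any specific DT platform; a real predictive twin would use big data and ML models in place of the extrapolation.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class PhysicalAsset:
    """Hypothetical physical device: it produces readings and obeys a setpoint."""
    setpoint: float = 1.0
    readings: list = field(default_factory=list)

    def sense(self, value: float) -> None:
        self.readings.append(value)


class DigitalShadow:
    """One-way flow (physical -> digital): pre-processes raw data, never writes back."""

    def __init__(self, window: int = 3):
        self.window = window

    def preprocess(self, asset: PhysicalAsset) -> float:
        # Moving average over the most recent readings (an assumed pre-processing step).
        return mean(asset.readings[-self.window:])


class DigitalTwin:
    """Two-way flow: integrates shadow output and can push a new setpoint back."""

    def __init__(self, asset: PhysicalAsset, shadow: DigitalShadow, target: float):
        self.asset, self.shadow, self.target = asset, shadow, target
        self.history = []

    def sync(self) -> None:
        state = self.shadow.preprocess(self.asset)  # physical -> digital
        self.history.append(state)
        if state > self.target:                     # digital -> physical
            self.asset.setpoint *= self.target / state

    def predict_next(self) -> float:
        # Naive linear extrapolation standing in for the ML-based predictive twin.
        if len(self.history) < 2:
            return self.history[-1]
        return 2 * self.history[-1] - self.history[-2]


asset = PhysicalAsset()
twin = DigitalTwin(asset, DigitalShadow(window=3), target=0.8)
for reading in [0.6, 0.9, 1.2]:
    asset.sense(reading)
    twin.sync()
print(twin.history, asset.setpoint, twin.predict_next())
```

Only `DigitalTwin.sync` writes back to the asset; the shadow merely forwards pre-processed data, which mirrors the one-way versus two-way distinction described above.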

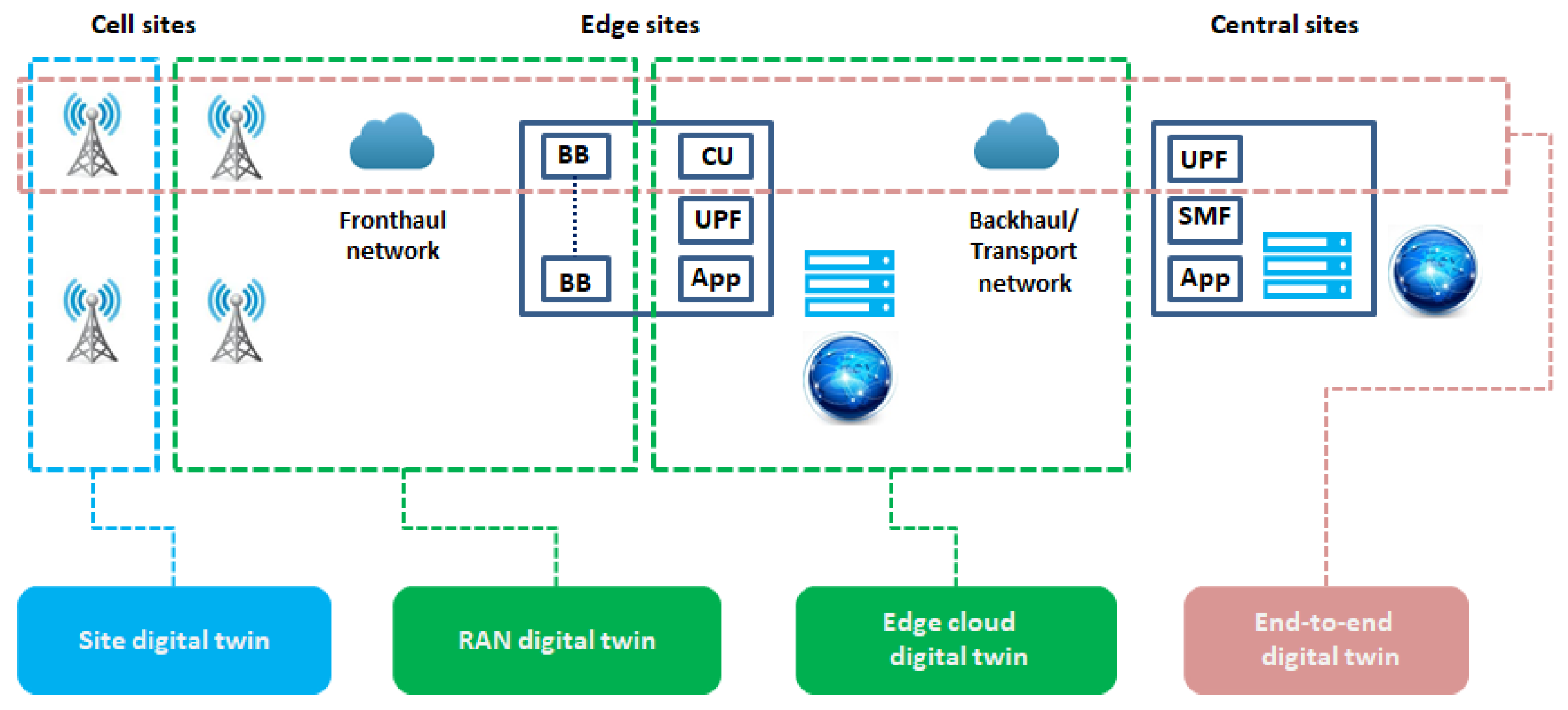

6.2.1. The Network Digital Twin

- hardware layer, which comprises the physical components of the DT, such as routers, IoT sensors and actuators, and edge servers;

- middleware layer, which is all about data governance, processing, integration, visualization, modeling, connectivity, and control; and

- software layer, which consists of analytics engines, ML models, data dashboards, as well as modeling and simulation software.

- Data Platform: A data platform is one of the main DT components, which ensures secure data ingestion and processing, as well as steady performance, normalization, management, ML, AI analytics, micro-services, and integration.

- Autonomous Network Platform: This is the digital domain of the DT framework for wireless communication, consisting of four main components: network state prediction, expert knowledge, AI algorithms, and the DT network. A DT thus contains an autonomous platform that enables a transition to a world in which computers use digital maps as a life-like representation of the physical network. The autonomous platform forms a foundation for simulations and virtual reality environments designed to convince the human mind that it is located where it is not. This module sits between the physical network and the DT and enables the DT to analyze a set of inputs and predict their outcome without affecting the physical network. If the outcomes are as intended, the new configurations can be transferred to the physical network as updates [103].
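The "evaluate in the twin first, then push to the physical network" loop can be sketched as follows, assuming a toy network model in which each link has a capacity and a load. The link names, the traffic-shifting configuration format, and the 80% utilization threshold are hypothetical; a deep copy plays the role of the DT so that the what-if run cannot affect the real network.

```python
import copy

# Hypothetical physical network: link -> capacity and current load (Mbps).
physical_network = {
    "gnb1-agg": {"capacity": 100.0, "load": 95.0},
    "gnb2-agg": {"capacity": 100.0, "load": 40.0},
}


def max_utilization(net: dict) -> float:
    return max(link["load"] / link["capacity"] for link in net.values())


def apply_config(net: dict, config: dict) -> None:
    # A (hypothetical) config moves `amount` Mbps of traffic from one link to another.
    net[config["src"]]["load"] -= config["amount"]
    net[config["dst"]]["load"] += config["amount"]


def what_if(net: dict, config: dict, threshold: float = 0.8) -> bool:
    """Apply the config to a copy (the twin) and check the predicted outcome."""
    twin = copy.deepcopy(net)
    apply_config(twin, config)
    return max_utilization(twin) <= threshold


candidate = {"src": "gnb1-agg", "dst": "gnb2-agg", "amount": 30.0}
if what_if(physical_network, candidate):
    apply_config(physical_network, candidate)  # push the validated update
print(physical_network)
```

Rejected candidates leave `physical_network` untouched, which is the property the platform relies on.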

6.2.2. End-to-End Wireless Network Digital Twin

- Cell Site Digital Twin: Since installing, inspecting, and maintaining cellular towers is difficult and costly, AR functionality can be used to visualize the cell tower. There is a plethora of use cases in which the cell site DT can support MNOs or tower companies, i.e., (i) rollouts of new-generation technology such as 6G, (ii) site surveys on existing sites, (iii) upgrades, and (iv) maintenance activities [106]. Based on the assumption of perfect spectrum sensing in dynamic spectrum access (DSA), information is exchanged between the secondary users (SUs) and the gNBs in a peer-to-peer manner.

- Infrastructure Digital Twin: Depending on the kind of information exchange specified between the users and the network, network programmability can enable enhanced data management tasks (load management, compression, and data reduction). A programmable framework for advanced IoT and data-driven automation also allows for virtualized resource provisioning, the most prominent form of which is containerization. Container images package application code, dependencies, and configuration into a single object that can be deployed in any environment; an image becomes a container only when it is running.

- Device Digital Twin: Information exchange between users, on the other hand, is governed by transfer learning and cooperation management, which consider source agent selection and target agent training. Information fidelity technologies such as federated learning and blockchain are incorporated into digital twinning to secure targeted services, support advanced testing, and facilitate deployment.

- Edge Site Digital Twin: Because the higher frequencies used in 6G are vulnerable to absorption, 6G networks will have a limited transmission range. Edge platforms or sites are therefore well suited to counteract this limitation: computational resources can be docked at an aggregation site and launched on demand to each edge site. Note, however, that the DT of the edge site includes both the access network and the backhaul (transport) network. To meet the high computing demand of edge computing networks, new enabling technologies are already being developed, such as (i) the air interface and transmission technologies as well as novel network architectures, (ii) advanced multi-antenna technologies, (iii) network slicing, (iv) cell-free architectures, and (v) cloud/fog/edge computing [107].

- End-to-End Digital Twin: This kind of DT is mostly encountered in network slicing architectures where the relationships between the different slices are monitored using graph neural networks.
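As a rough illustration of how a graph neural network can relate slices to one another, the sketch below implements one mean-aggregation message-passing layer in plain Python. The slice graph, the two features per slice, and the weight matrices are all hypothetical and untrained; in an end-to-end DT, such weights would be learned and the resulting embeddings fed to a monitoring model.

```python
# Hypothetical slice graph: an edge means two slices share resources (e.g., a DU).
slices = {
    "eMBB": [0.9, 0.2],   # [normalized load, normalized latency]
    "URLLC": [0.3, 0.8],
    "mMTC": [0.5, 0.1],
}
neighbors = {"eMBB": ["URLLC", "mMTC"], "URLLC": ["eMBB"], "mMTC": ["eMBB"]}

# Untrained, hypothetical weights; a real monitor would learn these.
W_SELF = [[1.0, 0.0], [0.0, 1.0]]
W_NEIGH = [[0.5, 0.0], [0.0, 0.5]]


def matvec(w, x):
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def message_passing_layer(features, adjacency):
    """One mean-aggregation layer: h_v' = ReLU(W_self h_v + W_neigh mean_u h_u)."""
    dim = len(next(iter(features.values())))
    updated = {}
    for node, h in features.items():
        neigh = [features[u] for u in adjacency.get(node, [])]
        agg = [sum(col) / len(neigh) for col in zip(*neigh)] if neigh else [0.0] * dim
        combined = [a + b for a, b in zip(matvec(W_SELF, h), matvec(W_NEIGH, agg))]
        updated[node] = [max(0.0, c) for c in combined]  # ReLU
    return updated


embeddings = message_passing_layer(slices, neighbors)
print(embeddings)
```

Each slice embedding now mixes its own state with that of the slices it shares resources with, which is what lets a downstream monitor detect, for example, that congestion on eMBB is spilling over to a neighboring slice.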

7. Edge Computing Digital Twins—Special Use Cases

- The Cloud Layer: The cloud layer, also known as the core (regional data center) of the MNO, is traditionally a non-edge tier and is most often owned and operated by a public cloud provider, a CSP, or a large enterprise [112]. It is responsible for big data processing, business logic, and data warehousing. Cloud computing is the most prevalent tool for user data management in this layer.

- The Fog Layer: The fog computing layer is a computing layer lying between the edge and the cloud. Since it is commonly owned and operated by a CSP, it is known as the service provider edge. In other words, it is a tier located between the core (regional data centers) and the last mile, i.e., the access network where the network operator serves multiple customers [113]. The fog layer is also responsible for local network access, data analysis and data reduction, control response, as well as function virtualization.

- The Edge Layer: Also known as the end user premises edge, the edge layer is responsible for the real-time processing of large volumes of data. Edge computing, which refers to data computation that takes place at the edge of the network, is the most prevalent tool for user data management at this layer. Along with fog computing, edge computing has been used widely in increasing the speed and the efficiency of data processing, as well as bringing intelligence closer to the user devices.

7.1. A Choice Modeling-Based DT for Edge Computing Platforms

7.1.1. Modeling Behavioral Model for Day 3 Edge Computing Operations

- the DNN takes as input the states of the computational task queue, i.e., estimates of congestion, as well as the incident traffic types;

- the output of the DNN is the behavioral model for provisioning computational resources, i.e., a mapping from the observed queue state to the control action that determines the provisioning of computational resources;

- the behavioral model instructs the regional data center on the computational requirements of the different edge sites, which then launches containerized computational resources based on the respective demands.
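The three steps above can be sketched end to end under simplifying assumptions. Here a softmax (multinomial-logit) score over two hypothetical per-site features stands in for the trained DNN, and the regional data center converts the resulting demand shares into integer container counts using largest-remainder rounding. Site names, features, weights, and the container budget are illustrative only.

```python
import math

# Hypothetical per-edge-site state: [queue congestion estimate, share of URLLC traffic].
site_states = {
    "edge-A": [0.9, 0.6],
    "edge-B": [0.4, 0.1],
    "edge-C": [0.2, 0.3],
}
WEIGHTS = [3.0, 1.5]  # hypothetical; stands in for a trained DNN


def demand_shares(states):
    """Softmax over linear scores: relative computational demand of each site."""
    scores = {s: sum(w * x for w, x in zip(WEIGHTS, f)) for s, f in states.items()}
    z = sum(math.exp(v) for v in scores.values())
    return {s: math.exp(v) / z for s, v in scores.items()}


def allocate_containers(shares, total):
    """Largest-remainder rounding so the integer allocation sums exactly to `total`."""
    raw = {s: p * total for s, p in shares.items()}
    alloc = {s: math.floor(v) for s, v in raw.items()}
    leftover = total - sum(alloc.values())
    for s in sorted(raw, key=lambda k: raw[k] - alloc[k], reverse=True)[:leftover]:
        alloc[s] += 1
    return alloc


# The regional data center launches this many containers per edge site.
plan = allocate_containers(demand_shares(site_states), total=10)
print(plan)
```

The softmax form is the same logit model used in discrete choice analysis, so it also hints at the choice-modeling view of provisioning discussed next.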

7.1.2. Choice Modeling and Mathematical Psychology

7.2. Federated Deep Reinforcement Learning-Based Digital Twin

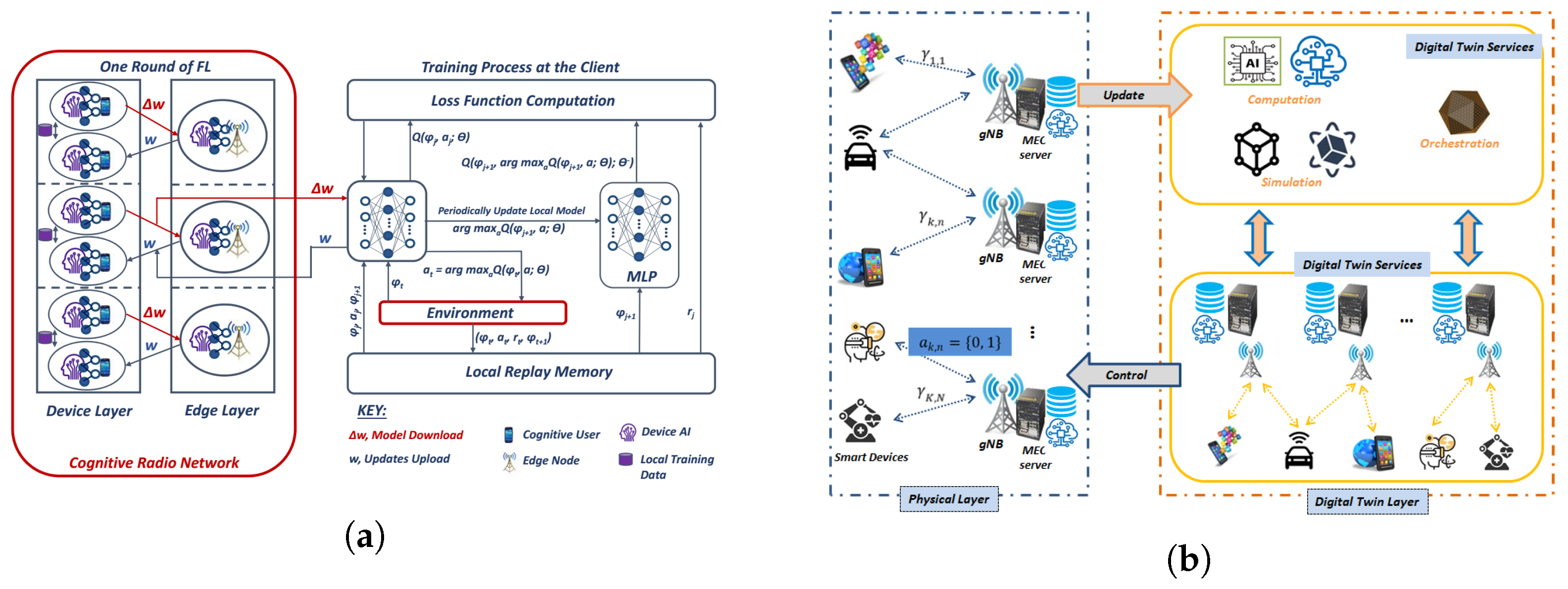

7.2.1. The Federated DRL Computational Offloading

- The Device Layer: The device layer operates according to vertical federated machine learning (FML), in which each edge device begins by partitioning a DNN model according to current environmental factors, such as channel conditions and available bandwidth [123]. A set of edge devices then uses their local datasets to cooperatively train the model at their associated gNB by uploading gradients. Information exchange between devices takes place over device-to-device (D2D) links. In return, the edge server computes global updates and broadcasts an aggregated gradient w through the gNB to train its associated devices globally [124].

- The Edge Layer: The edge layer consists of a gNB managed with a DNN model that is partitioned according to the workload of the edge server, with execution split between the device, according to its resources, and the server, to which the intermediate data are sent. Features describing the state of the edge servers and the requirements of the edge devices are fed to the DNN for training [125]. Owing to its generalization capabilities, the DNN trains the agent to yield general scheduling policies that are not merely tuned to the states encountered during training but also adapt to unseen states when predicting or prescribing.
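The gradient upload and aggregated broadcast described above can be sketched as FedSGD-style aggregation: the edge server averages the uploaded gradients, weighting each device by its local dataset size, and applies one gradient step to the global model. For simplicity, this sketch covers only the horizontal, gradient-averaging case and does not model the vertical feature partitioning or the DNN split. All gradients, dataset sizes, and the learning rate are hypothetical.

```python
def fedsgd_aggregate(gradients, sizes):
    """Average device gradients, weighting each device by its local dataset size."""
    total = sum(sizes)
    dim = len(gradients[0])
    return [sum(g[i] * n for g, n in zip(gradients, sizes)) / total for i in range(dim)]


def sgd_step(weights, grad, lr=0.1):
    return [w - lr * g for w, g in zip(weights, grad)]


# Hypothetical gradients uploaded by three devices and their local sample counts.
device_grads = [[0.2, -0.4], [0.6, 0.0], [-0.2, 0.4]]
device_sizes = [100, 300, 100]

global_w = [1.0, 1.0]
aggregated = fedsgd_aggregate(device_grads, device_sizes)  # at the edge server
global_w = sgd_step(global_w, aggregated)                   # broadcast via the gNB
print(aggregated, global_w)
```

Only gradients leave the devices; the raw local datasets stay where they were collected.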

7.2.2. Realizing the FDRL-Based Digital Twin

- Physical Layer: Since the value of the information obtained in IoE systems depends on its age of information (AoI), the time elapsed from when the raw data are generated by the applications to when the processed data are delivered must be minimized [29]. User behavior and gNB association are the most critical processes of the physical layer (mostly the network edge), where data producers frequently share their data with other parties, such as edge servers, to train their models [111].

- Digital Twin Layer and DT Services: The DT, located in the DT layer, is concerned with monitoring, capturing, and processing data in order to deliver insights for decision makers to act on. The collection and storage of status data and their subsequent processing are therefore very important. In this case, the DT layer requires robust data storage capacity and cloud-based ML platforms for analytics. The analytics are the most vital component of the DT platform, since they translate the status data into insights, which are then shaped into formats suitable for human perception.
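The AoI requirement can be made concrete with a short sketch. It uses the standard definition of instantaneous AoI: the current time minus the generation time of the freshest update delivered so far. It also computes the peak AoI reached just before each new delivery. The timeline values are hypothetical, and the peak AoI function assumes updates are delivered in the order they were generated.

```python
def age_of_information(t, updates):
    """Instantaneous AoI at time t: t minus the generation time of the freshest
    update delivered by t. `updates` holds (generated_at, delivered_at) pairs."""
    delivered = [gen for gen, dlv in updates if dlv <= t]
    if not delivered:
        raise ValueError("no update delivered yet")
    return t - max(delivered)


def peak_aoi(updates):
    """Age just before each new delivery (assumes in-order delivery)."""
    ordered = sorted(updates, key=lambda u: u[1])
    return [ordered[i][1] - ordered[i - 1][0] for i in range(1, len(ordered))]


# Hypothetical timeline in ms: (generated_at, delivered_at) for updates processed at the edge.
updates = [(0, 4), (10, 13), (20, 29)]
print(age_of_information(15, updates), peak_aoi(updates))
```

The third update's long processing delay (9 ms) raises the peak AoI to 19 ms, illustrating why edge-side processing latency, and not only the update rate, drives information freshness.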

8. Big Data and Big Data Analytics—The Final Frontier

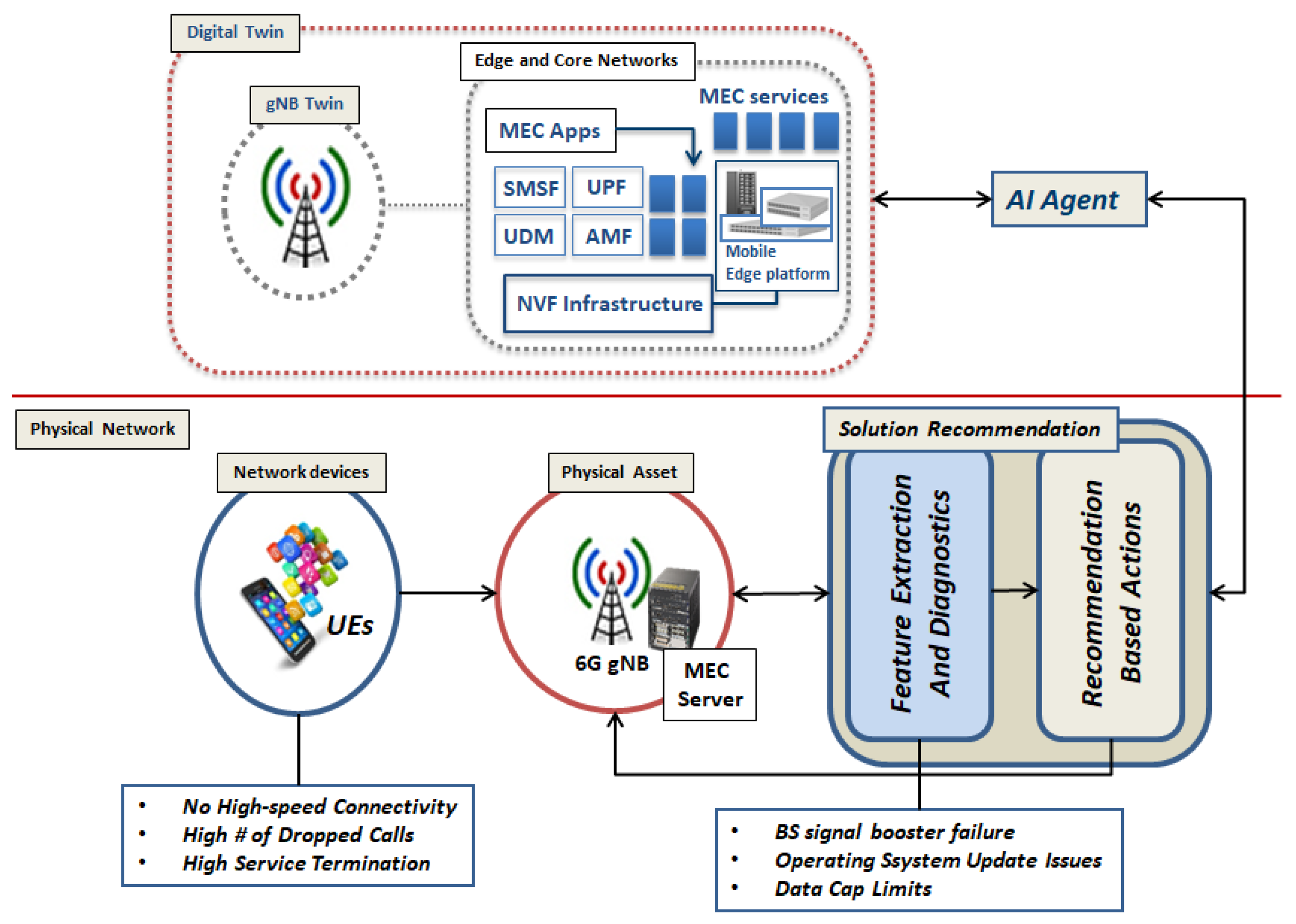

8.1. Big Data-Inspired, Edge-Based DT for Real-Time Network Diagnostics

- Data Mining and Information Extraction: Using data mining techniques such as clustering algorithms, similar complaints can be grouped together for collective diagnosis. Note, however, that the grievances received from subscribers may be in text format, so some preliminary pre-processing may be required to obtain a dataset that is ready for processing and analysis. For this pre-processing step, natural language processing and lexicon processing algorithms can be used to “mine” similar text structures. Similar textual information is then grouped using classification algorithms to reveal correlations in the text data for further processing.

- Data Analytics and Diagnostics: Using data mining techniques, a lot of information can be extracted and classified according to its similarities and differences, and diagnostic techniques can be applied to each cluster to diagnose problems. In the diagnostic step, the classified data are processed to obtain good diagnostic visibility of the network problems. On the customer service side, this visibility can provide service providers with information such as the affected network regions, gNB sites, user behavior, and applications. Using this information, the underlying problems can be diagnosed.

- Solution Recommendation: Within a short time, potential solutions are evaluated, and the best one is recommended and commissioned through virtualized functions. For example, problems such as software maintenance and upgrades can be handled virtually instead of dispatching a technician to the site. In this case, the software agent runs a solution recommendation module (SRM) and sends recommendations to the decision module, which proposes a network function virtualization (NFV) solution to the relevant gNB site [131]. However, the recommendation process differs with the nature of the diagnosed problems, because user behavior differs across network regions. As a result, the agent that handles the recommendation process must reside at the edge server to dynamically launch the relevant VM instances that resolve dynamic problems. VM launching can therefore be managed using a DRL strategy, as contemplated in [132].
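The first step of this pipeline, grouping similar text complaints, can be sketched with standard-library Python only. In this sketch, leader clustering over Jaccard similarity of token sets stands in for the NLP and clustering algorithms mentioned above. The stopword list, the similarity threshold, and the sample complaints are hypothetical.

```python
import re

STOPWORDS = {"the", "a", "my", "is", "in", "on", "at", "and", "since", "very"}


def tokens(text):
    """Lower-case word tokens with (hypothetical) stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    return len(a & b) / len(a | b) if a | b else 0.0


def group_complaints(complaints, threshold=0.3):
    """Leader clustering: join the first group whose leader is similar enough, else start one."""
    groups = []  # each entry: (leader_tokens, [complaint, ...])
    for text in complaints:
        t = tokens(text)
        for leader, members in groups:
            if jaccard(t, leader) >= threshold:
                members.append(text)
                break
        else:
            groups.append((t, [text]))
    return [members for _, members in groups]


complaints = [
    "No signal in the city centre",
    "Very slow video streaming at night",
    "Signal drops in city centre",
    "Video streaming is slow",
]
clusters = group_complaints(complaints)
print(clusters)
```

Each resulting cluster (coverage problems versus streaming throughput problems) can then be passed to the diagnostic step as a unit.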

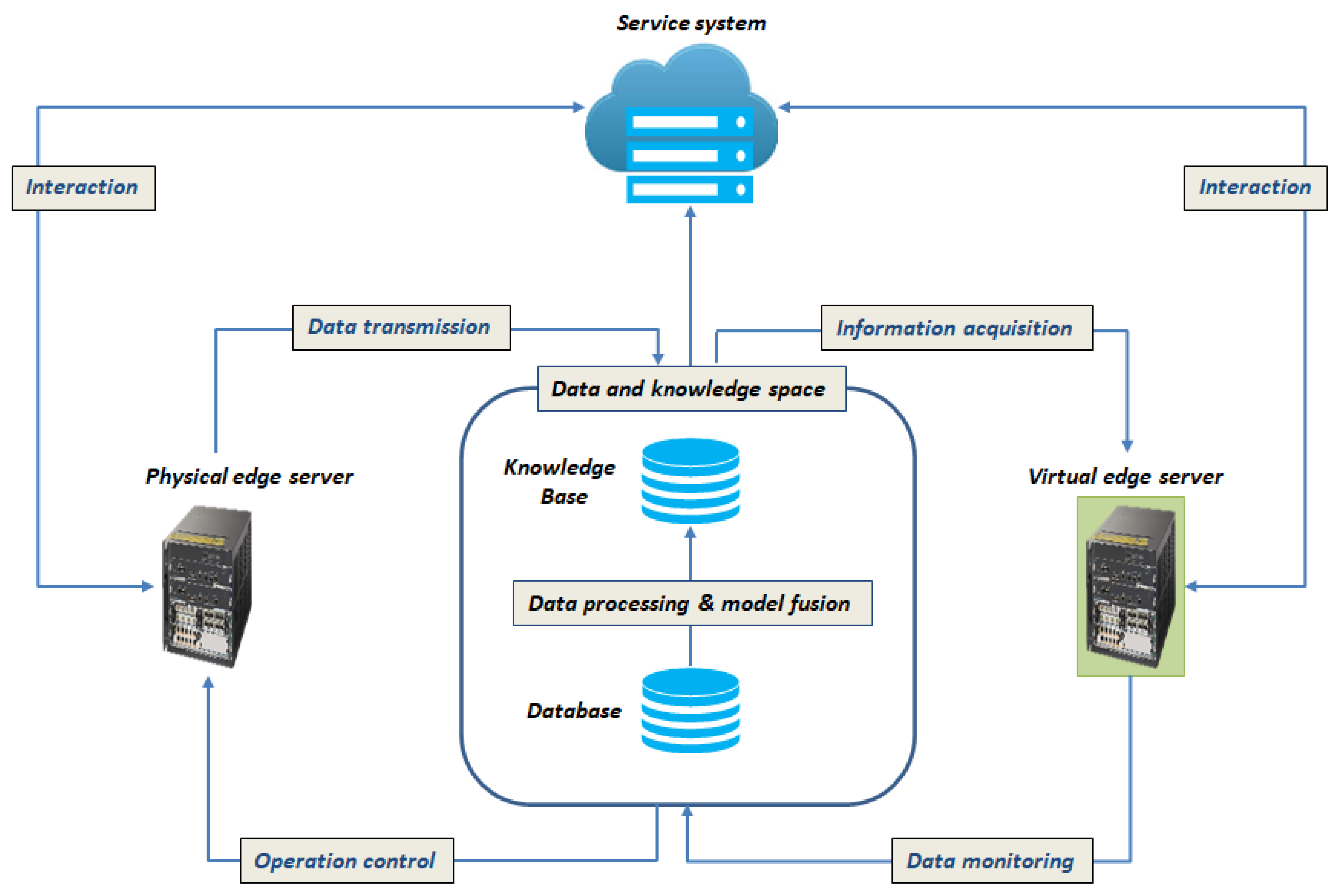

8.2. Big Data-Inspired DT for Real-Time Predictive Maintenance

- The virtual edge server: The virtual edge server then realizes the data monitoring and operation control for the physical edge server. In edge computing, edge-based predictive analytics solutions use the DT technology to prevent server downtime as a means of conserving and protecting QoS parameters and, subsequently, the QoE of users.

- The data and knowledge space: Here, the data are transferred to the database where they are stored and pre-processed. With the data and the model that are integrated and fused, the maintenance knowledge for decision making that is generated by ML algorithms is stored in the knowledge base [135].

- Inter-twin interaction: After the knowledge base is established, the virtual edge server can obtain the required information from it.
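A minimal version of this loop is sketched below. The virtual edge server receives a mirrored metric from the physical server, flags samples that deviate from a rolling baseline, and stores a maintenance hint in the knowledge base. A rolling z-score detector stands in for the ML algorithms in the text. The metric, the window length, the 3-sigma rule, and the knowledge-base format are hypothetical.

```python
from statistics import mean, stdev


def detect_anomalies(series, window=5, k=3.0):
    """Flag indices whose value deviates by more than k standard deviations
    from the mean of the preceding `window` samples."""
    flagged = []
    for i in range(window, len(series)):
        ref = series[i - window:i]
        mu, sigma = mean(ref), stdev(ref)
        if sigma > 0 and abs(series[i] - mu) > k * sigma:
            flagged.append(i)
    return flagged


knowledge_base = {}  # hypothetical: server id -> maintenance hint


def update_knowledge(server_id, series):
    alerts = detect_anomalies(series)
    if alerts:
        knowledge_base[server_id] = {"first_alert_index": alerts[0],
                                     "action": "schedule maintenance"}
    return alerts


# Hypothetical CPU temperature samples (deg C) mirrored from a physical edge server.
temps = [61, 62, 61, 63, 62, 62, 61, 75, 62]
alerts = update_knowledge("edge-srv-1", temps)
print(alerts, knowledge_base)
```

The virtual edge server then queries `knowledge_base` during inter-twin interaction instead of recomputing the analysis on each request.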

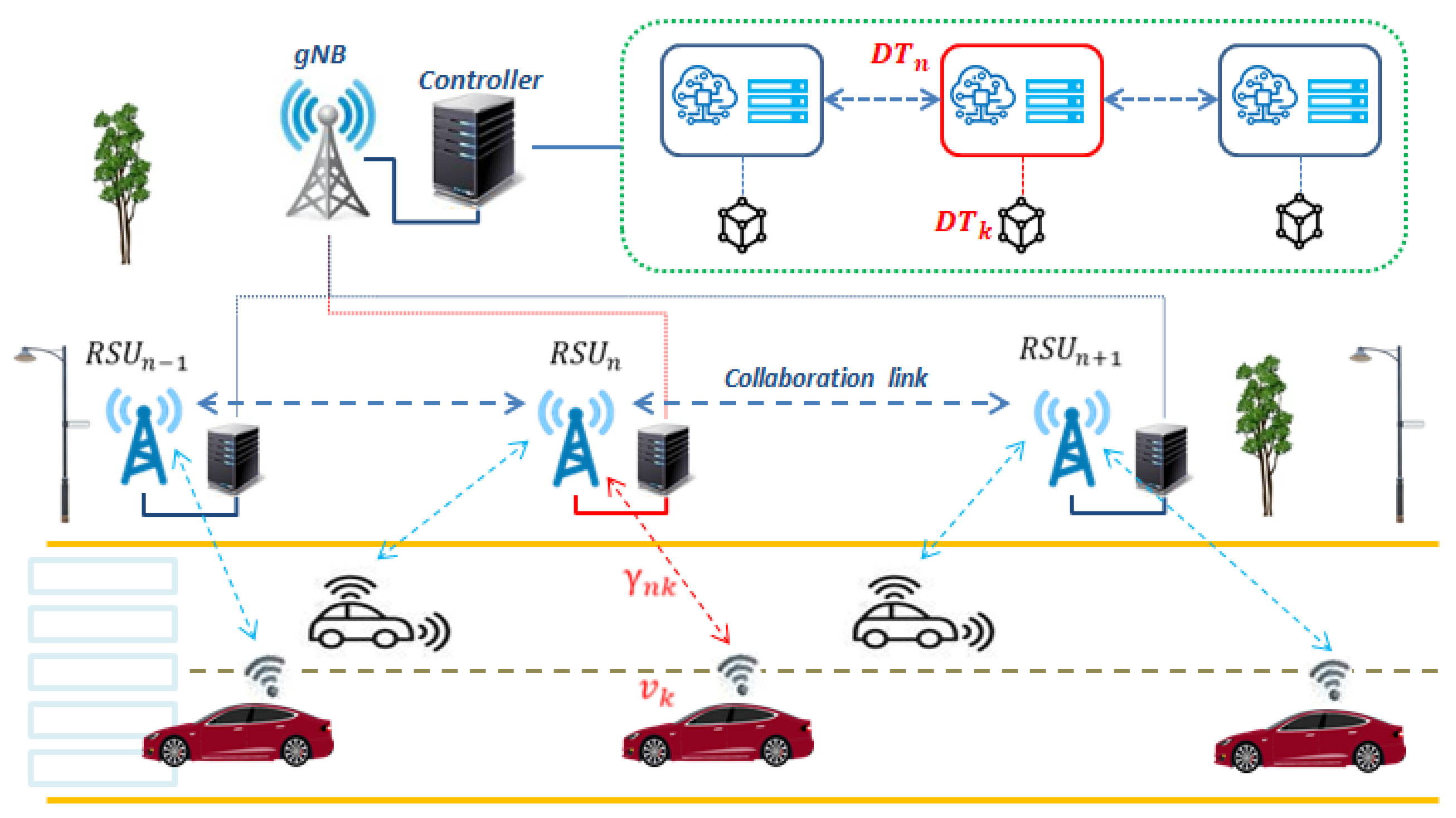

9. Network Digital Twin for Vehicle-to-Edge Communication

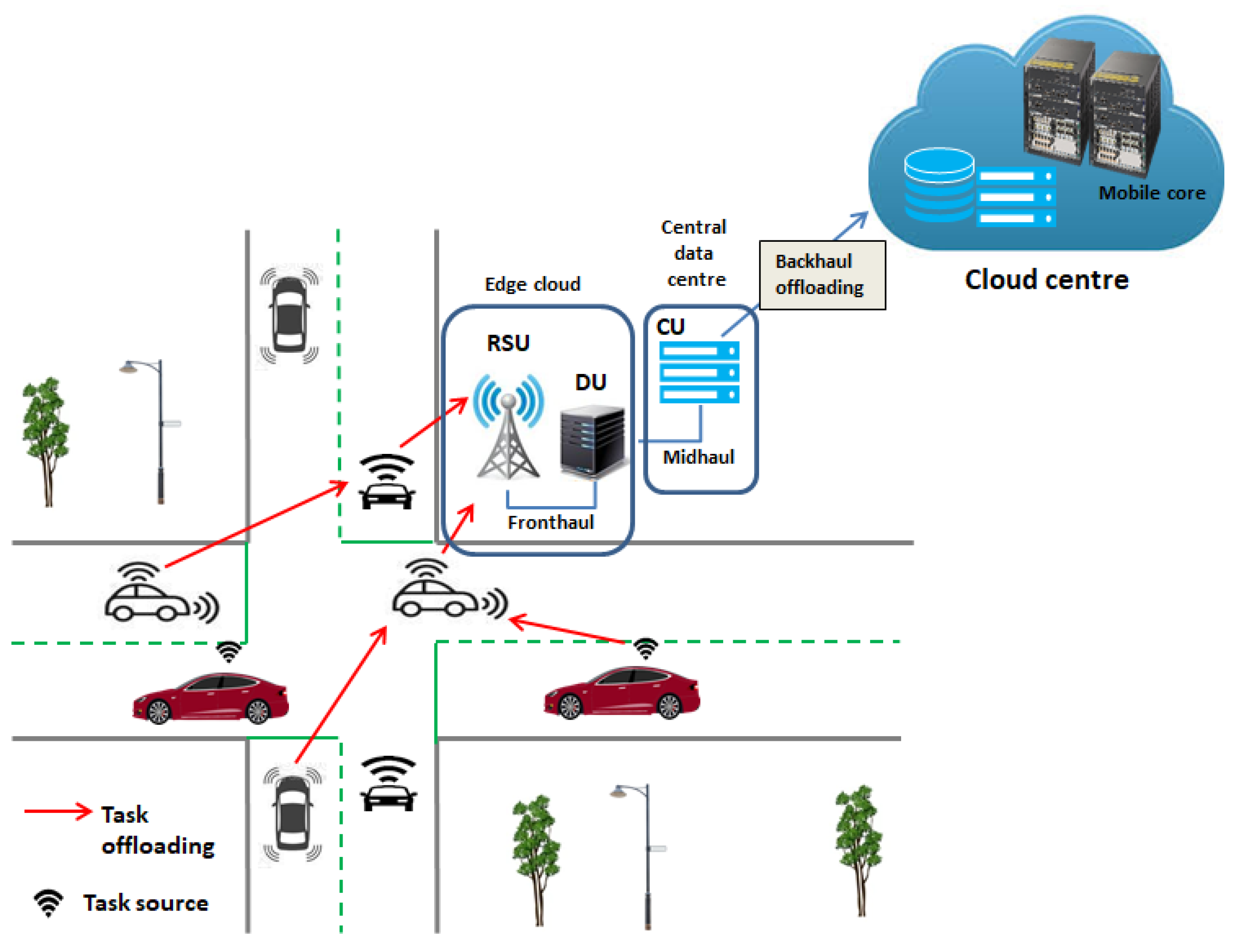

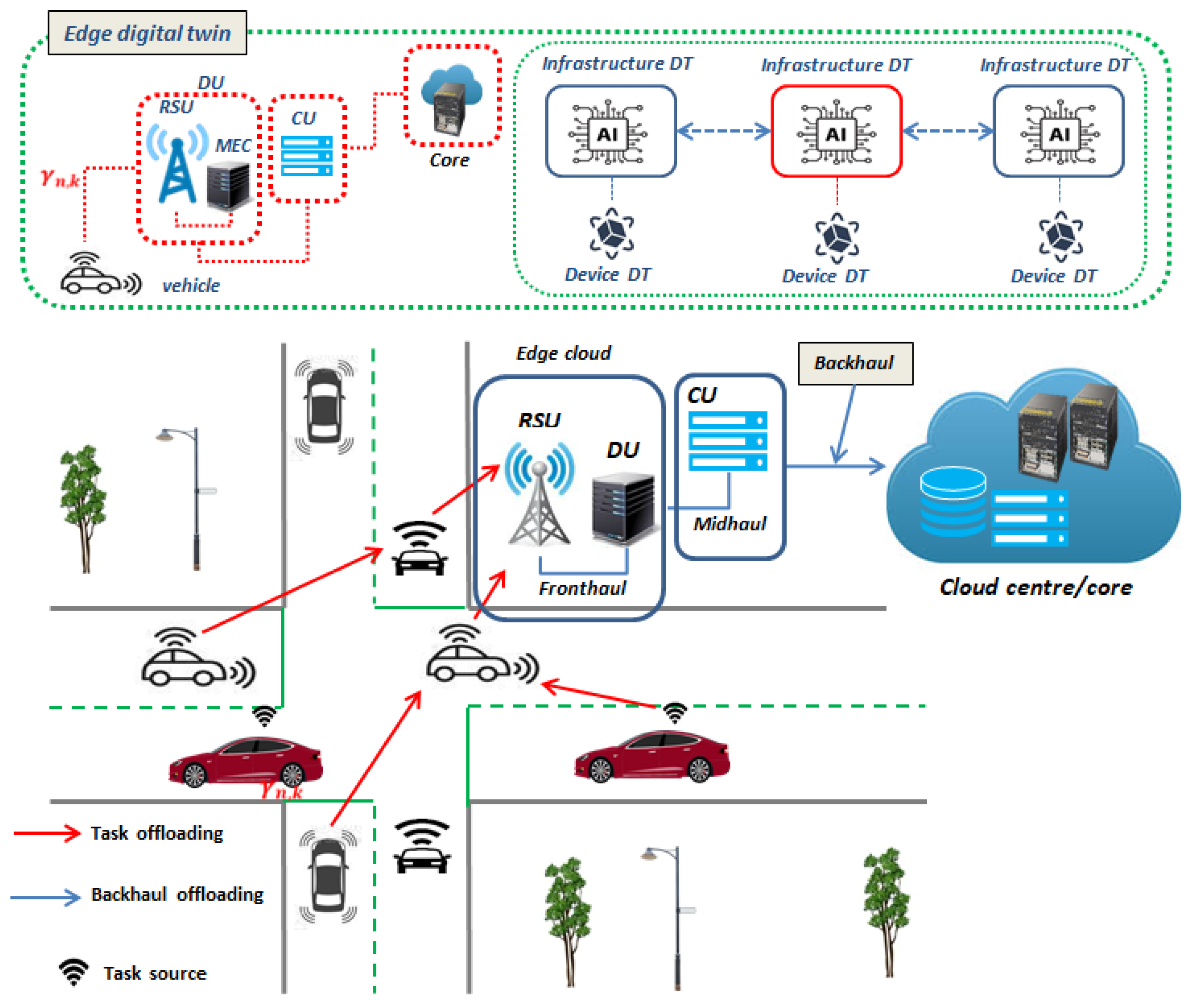

9.1. Edge Computing-Based Digital Twin for Traditional RAN

- Device/vehicle DT: The vehicle DT (vDT) can be thought of as the profile of the device, which may include (i) the travelling speed, (ii) the trajectory and real-time vehicle location, as well as (iii) the resource requirements.

- The requirements: The requirements may include (i) the size of the task (payload size), (ii) the required processing cycles, as well as (iii) the dependency and the priority among tasks.

- The AI agent: The AI agent iteratively associates the device tasks with the servers and records the resulting reduction in the objective as the reward. The features that describe the status of the servers and the device requirements are then fed to the DNN for training, and its generalization capabilities yield general scheduling policies.
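The association step can be sketched under explicit assumptions. Each task is offloaded to the server with the smallest completion time, defined as queue delay plus upload time plus computing time. The reward is the latency saved relative to executing the task on the vehicle. A greedy rule stands in for the learned DNN policy, and all server parameters and task requirements are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    payload_bits: float   # task size
    cycles: float         # required processing cycles
    priority: int         # lower value is served first


@dataclass
class Server:
    name: str
    rate_bps: float         # uplink rate from the vehicle to this server
    cpu_hz: float           # computing speed
    free_at_s: float = 0.0  # time at which the server finishes its current queue


def completion_time(task: Task, server: Server) -> float:
    # Conservative queue model: upload and computing start after the queue drains.
    return server.free_at_s + task.payload_bits / server.rate_bps + task.cycles / server.cpu_hz


def associate(tasks, servers, device_cpu_hz):
    """Greedy association; reward = latency saved relative to local execution."""
    log = []
    for task in sorted(tasks, key=lambda t: t.priority):
        best = min(servers, key=lambda s: completion_time(task, s))
        t_offload = completion_time(task, best)
        reward = task.cycles / device_cpu_hz - t_offload
        if reward > 0:
            best.free_at_s = t_offload
            log.append((task.name, best.name, reward))
        else:
            log.append((task.name, "local", 0.0))
    return log


servers = [Server("rsu-edge", rate_bps=50e6, cpu_hz=10e9), Server("mec", rate_bps=20e6, cpu_hz=20e9)]
tasks = [Task("T1", 1e6, 2e9, priority=0), Task("T2", 2e6, 4e9, priority=1)]
log = associate(tasks, servers, device_cpu_hz=1e9)
print(log)
```

T2 goes to the slower RSU server because the faster server is still busy with T1, which is the kind of state-dependent decision the learned scheduling policy is meant to generalize.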

In relation to the other DTs, the vehicle DT monitors, among other things, the vehicle trajectory and vehicle speed optimization. Additional parameters that can be monitored are blind-spot and accident detection.

- Infrastructure DT: In edge computing, the infrastructure consists of (i) the RSUs, (ii) the edge server, and (iii) the vehicles themselves. The infrastructure DT (iDT) is responsible for monitoring and optimizing service provisioning to the devices, which means that it manages device behavior in terms of real-time location and network behavior. The state of each edge server can then be described by its resources, i.e., (i) its computing speed and (ii) the quality of the communication channel. For an efficient infrastructure DT, effective mapping between RSUs and vehicles must be established.

- Mapping: The mapping between the physical twin and the virtual twin has three components: (i) data storage, which collects data from the physical network, such as vehicle state, RSU state, and wireless channel state, (ii) virtual model mapping, and (iii) inter-twin management.

- The Central Controller: The central controller at the edge server models the offloaded tasks and determines their resource demand status using the aggregated data in the device DT. This controller assigns tasks through the computing task model.

- Inter-Twin Communication: The knowledge transfer process between the two twins can be secured using blockchain to preserve knowledge integrity, keeping the contributions of each device immutable and traceable. Blockchain, a comparatively new technology, can simplify network management and considerably improve the security of authentication.

- Link DT: Because of wireless channel imperfections, especially in urban environments, the DT concept can be applied as a city-aware DT model. Simulations of the city-aware DT model should allow accurate modeling of ray reflections and signal attenuation [137].
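The integrity property that blockchain contributes to inter-twin communication can be illustrated with a hash-chained, append-only log. This sketch captures only tamper evidence. It does not model consensus, digital signatures, or distribution across nodes, so it is not a full blockchain. The twin identifiers and knowledge payloads are hypothetical.

```python
import hashlib
import json


def block_hash(block):
    payload = json.dumps({k: block[k] for k in ("index", "sender", "knowledge", "prev_hash")},
                         sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


class KnowledgeLedger:
    """Append-only, hash-chained log of knowledge transferred between twins."""

    def __init__(self):
        genesis = {"index": 0, "sender": "genesis", "knowledge": {}, "prev_hash": "0" * 64}
        genesis["hash"] = block_hash(genesis)
        self.chain = [genesis]

    def append(self, sender, knowledge):
        prev = self.chain[-1]
        block = {"index": prev["index"] + 1, "sender": sender,
                 "knowledge": knowledge, "prev_hash": prev["hash"]}
        block["hash"] = block_hash(block)
        self.chain.append(block)

    def verify(self):
        # Any edit to an earlier block breaks its own hash and the next block's link.
        for prev, cur in zip(self.chain, self.chain[1:]):
            if cur["prev_hash"] != prev["hash"] or cur["hash"] != block_hash(cur):
                return False
        return self.chain[0]["hash"] == block_hash(self.chain[0])


ledger = KnowledgeLedger()
ledger.append("vDT-17", {"avg_speed_kmh": 54, "next_rsu": "rsu-3"})
ledger.append("iDT-rsu-3", {"free_cpu_ghz": 6.5})
print(ledger.verify())
```

Because each block's hash covers the previous block's hash, altering any recorded contribution is detectable by every twin that re-runs `verify`.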

9.2. Edge Computing-Based Digital Twin for Open RAN

9.2.1. Edge-Based Vehicular Communications—A Primer for Open RAN DT

- Distributed Unit: The DU hosts the lower layers of the split Open RAN protocol stack, and the fixed RSU together with the DU is considered the edge cloud. Under the logical split 7.2x, the DU is the logical node that includes a portion of the gNB functions. It is tasked with controlling the operation of a number of RSUs.

- Centralized Unit: The CU hosts the upper layers of the split Open RAN protocol stack and is considered the central data center. It is the logical node that includes the remaining gNB functions, i.e., the radio resource control (RRC), service data adaptation protocol (SDAP), and packet data convergence protocol (PDCP) layers, as defined by split option 2, while the radio link control (RLC) layer resides in the DU. A CU can support multiple DUs. Therefore, by applying network function virtualization (NFV) techniques and running the virtualized functions at CUs, part of the network functions can be moved to data centers instead of running at the RSU.
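The placement of protocol layers under the two splits can be summarized as a small lookup. In this sketch, split option 2 places the CU/DU boundary between PDCP and RLC, and split 7.2x places the DU/RU boundary inside the physical layer. It is a simplification that omits SDAP and the control-plane/user-plane separation, and the layer labels are shorthand rather than formal specification names.

```python
# Simplified layer stack, top to bottom (SDAP and control/user-plane separation omitted).
LAYERS = ["RRC", "PDCP", "RLC", "MAC", "High-PHY", "Low-PHY", "RF"]


def host_of(layer: str) -> str:
    """Node hosting `layer` when split option 2 (CU/DU) is combined with split 7.2x (DU/RU)."""
    idx = LAYERS.index(layer)
    if idx <= LAYERS.index("PDCP"):      # option 2: cut between PDCP and RLC
        return "CU"
    if idx <= LAYERS.index("High-PHY"):  # 7.2x: cut inside the physical layer
        return "DU"
    return "RU"


placement = {layer: host_of(layer) for layer in LAYERS}
print(placement)
```

Everything mapped to "CU" is a candidate for virtualization in a data center, while the layers mapped to "DU" and "RU" remain close to the radio site.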

9.2.2. Open RAN-Inspired Edge Digital Twin—A Vehicular Communication Use Case

10. Conclusions

10.1. Elucidation of Contributions

- Edge-Based DT for Day 3 Operations: Principles of choice modeling and mathematical psychology, drawn from prospect theory and discrete choice experiments, were used to model day 3 edge operations. To address the complexity of 6G networks, traditional edge computing (as currently known in the 5G context) could be improved through low-cost data centers (i.e., CUs) that operate according to cognitive choice modeling.

- Big Data-Inspired Edge DT: Since 6G networks are expected to enable a plethora of new AI-assisted smart applications, big data analytics could substantially transform communication. In particular, the status of edge systems, captured in an edge-resource device model, can be realized by deploying big data-based DT models at the edge server. With this modeling approach, parameters can be calibrated between physical assets and their DTs at regular intervals. The physical components then exchange real-time information with the DT, which opens the door to predictive maintenance.

- Vehicle-to-Edge Use Case Design: The C-V2E offloading scheme was realized by splitting the NDT design into a vDT and an iDT, each monitoring a different network profile. This design suits vehicular services because it allows a DT to be tailored to monitor one specific troublesome feature of the network, such as the connection link in urban environments. Such monitoring is a performance-related, real-time constraint-violation challenge that is critical to the dependability of the DT. The DT cannot simulate the physical entity entirely accurately, so modeling errors arise and their cumulative effect grows over time.

- Open RAN-Based Vehicle-to-Edge Use Case Design: Because open RAN can improve the computational capabilities of the edge, vehicular applications could benefit from substantially reduced latency. To this end, an EDT was designed on the disaggregated RAN architecture specifically for C-V2E applications. A customized functional split of the edge was realized according to open RAN principles, so that the edge server was disaggregated into (i) an edge cloud and (ii) a central data center. This architecture reduces latency by orders of magnitude compared with the non-split architecture.
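The day 3 operations item relies on prospect theory and discrete choice modeling. The sketch below shows one common way to combine them, under stated assumptions. Each alternative's outcome passes through the Tversky-Kahneman value function, which is concave for gains and convex and steeper for losses. It uses the frequently cited parameters α = β = 0.88 and λ = 2.25. The resulting utilities are then turned into multinomial-logit choice probabilities. The edge-placement alternatives, the latency-saving outcomes, and the reference point are hypothetical, and probability weighting is omitted.

```python
import math


def pt_value(x, alpha=0.88, beta=0.88, lam=2.25):
    """Prospect-theory value of outcome x relative to the reference point."""
    return x ** alpha if x >= 0 else -lam * (-x) ** beta


def choice_probabilities(outcomes, reference, scale=1.0):
    """Multinomial-logit probabilities over prospect-theoretic utilities.

    outcomes: mapping alternative -> outcome (e.g. latency saving in ms)
    reference: outcome against which gains and losses are framed
    scale: logit scale (higher means more deterministic choices)
    """
    utils = {k: scale * pt_value(v - reference) for k, v in outcomes.items()}
    top = max(utils.values())  # subtract max for numerical stability
    weights = {k: math.exp(u - top) for k, u in utils.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}
```

For example, `choice_probabilities({"edge_cu": 12.0, "local": 8.0, "remote_cloud": 3.0}, reference=8.0)` takes the local option as the reference point. The edge option is then framed as a gain and the remote cloud as a loss. Because of loss aversion, the remote cloud is penalized more heavily than an equally sized gain would be rewarded.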
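For the big data-inspired edge DT, periodic calibration and predictive maintenance can be illustrated with one tracked parameter. The sketch rests on hypothetical assumptions. The twin of an edge resource is assumed to track its effective service rate and to calibrate that rate each round by exponentially smoothing fresh measurements. It raises a maintenance flag when a linear extrapolation of the trend predicts the rate falling below a fraction of its nominal value. Exponential smoothing and linear extrapolation are simple stand-ins, not the modeling approach of the review, and `EdgeResourceTwin` is an illustrative name.

```python
import math
from statistics import mean


class EdgeResourceTwin:
    """Hypothetical DT of one edge resource, tracking its service rate."""

    def __init__(self, nominal_rate, smoothing=0.3, alarm_fraction=0.8):
        self.nominal_rate = nominal_rate
        self.rate = nominal_rate      # twin's current calibrated estimate
        self.smoothing = smoothing    # weight given to fresh measurements
        self.alarm_fraction = alarm_fraction
        self.history = []

    def calibrate(self, measured_rates):
        """One calibration round: blend the mean measurement into the twin."""
        observed = mean(measured_rates)
        self.rate = (1 - self.smoothing) * self.rate + self.smoothing * observed
        self.history.append(self.rate)
        return self.rate

    def rounds_until_alarm(self):
        """Rounds until the rate is predicted to cross the alarm threshold,
        0 if it already has, or None if no degrading trend is visible."""
        threshold = self.alarm_fraction * self.nominal_rate
        if self.rate <= threshold:
            return 0
        if len(self.history) < 2:
            return None
        slope = self.history[-1] - self.history[-2]
        if slope >= 0:
            return None
        return math.ceil((self.rate - threshold) / -slope)
```

Calling `calibrate` at each scheduled interval and checking `rounds_until_alarm` afterwards gives a simple trigger for scheduling maintenance before the resource degrades past its threshold.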
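The cumulative-error remark in the vehicle-to-edge item can be reproduced with a toy vDT. In the sketch below, the physical vehicle has a small acceleration that the twin's constant-velocity model does not capture, so the position error grows roughly quadratically with time. Resynchronizing the twin from fresh telemetry whenever the error exceeds a threshold keeps it bounded. The 1-D motion model, the parameter values, the threshold rule, and `simulate_vdt_error` are illustrative assumptions.

```python
def simulate_vdt_error(steps, dt=0.1, v0=15.0, accel=0.5, resync_threshold=None):
    """Per-step absolute position error (m) between a vehicle and its vDT.

    The vehicle accelerates at a constant rate the twin does not model; the
    twin dead-reckons with its last synchronized velocity.  If
    resync_threshold is set, the twin is resynchronized whenever the error
    exceeds it.
    """
    pos = twin_pos = 0.0
    vel = twin_vel = v0
    errors = []
    for _ in range(steps):
        vel += accel * dt             # physical vehicle: unmodeled acceleration
        pos += vel * dt
        twin_pos += twin_vel * dt     # twin: constant-velocity model
        err = abs(pos - twin_pos)
        errors.append(err)            # record the error before any correction
        if resync_threshold is not None and err > resync_threshold:
            twin_pos, twin_vel = pos, vel  # resynchronize from fresh telemetry
    return errors
```

With the defaults, the unsynchronized error after 600 steps (60 s) is about 901.5 m. A 1 m resynchronization threshold, `simulate_vdt_error(600, resync_threshold=1.0)`, caps it at about 1.05 m.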
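The functional split in the open RAN-based item can be illustrated with a simple latency model. The edge cloud is close to the vehicles but has limited compute, while the central data center adds transport delay but offers more compute. The sketch below places each task on whichever tier meets its deadline with the lowest estimated latency. The tier parameters, the latency model (round-trip transport plus processing time), and the names `Tier`, `latency_ms`, and `place` are hypothetical. The sketch is not meant to reproduce the reported latency reduction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    transport_ms: float  # one-way transport delay from the RSU to this tier
    cpu_ghz: float       # compute capacity available to one task


def latency_ms(task_gcycles, tier):
    """Round-trip transport delay plus processing time (1 Gcycle at 1 GHz = 1000 ms)."""
    return 2 * tier.transport_ms + 1000.0 * task_gcycles / tier.cpu_ghz


def place(task_gcycles, deadline_ms, tiers):
    """Name of the lowest-latency tier that meets the deadline, or None."""
    feasible = [t for t in tiers if latency_ms(task_gcycles, t) <= deadline_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda t: latency_ms(task_gcycles, t)).name
```

As an example, assume an edge cloud at 0.5 ms and 4 GHz and a central data center at 10 ms and 40 GHz. A 10-Mcycle task then completes in 3.5 ms at the edge versus 20.25 ms centrally. A non-split design that sends everything to the central data center therefore pays the transport delay on every short task.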

10.2. Recommendations for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ahammed, T.B.; Patgiri, R.; Nayak, S. A Vision on the Artificial Intelligence for 6G Communication. ICT Express 2023, 9, 197–210. [Google Scholar] [CrossRef]

- Lehr, W.; Queder, F.; Haucap, J. 5G: A New Future for Mobile Network Operators, or Not? Telecommun. Policy 2021, 45, 102086. [Google Scholar] [CrossRef]

- Ashwin, M.; Alqahtani, A.S.; Mubarakali, A.; Sivakumar, B. Efficient Resource Management in 6G Communication Networks Using Hybrid Quantum Deep Learning Model. Comput. Electr. Eng. 2023, 106, 108565. [Google Scholar] [CrossRef]

- Hlophe, M.C.; Maharaj, B.T. QoS Provisioning and Energy Saving Scheme for Distributed Cognitive Radio Networks Using Deep Learning. J. Commun. Netw. 2020, 22, 185–204. [Google Scholar] [CrossRef]

- Attaran, M. The Impact of 5G on the Evolution of Intelligent Automation and Industry Digitization. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 5977–5993. [Google Scholar] [CrossRef] [PubMed]

- Balaram, A.; Sakthivel, T.; Chandan, R.R. A Context-aware Improved POR Protocol for Delay Tolerant Networks. Automatika 2023, 64, 22–33. [Google Scholar] [CrossRef]

- Han, B.; Habibi, M.A.; Richerzhagen, B.; Schindhelm, K.; Zeiger, F.; Lamberti, F.; Pratticò, F.G.; Upadhya, K.; Korovesis, C.; Belikaidis, I.P.; et al. Digital Twins for Industry 4.0 in the 6G Era. arXiv 2023, arXiv:2210.08970. [Google Scholar] [CrossRef]

- Kim, S.; Choi, B.; Lwe, Y.K. Where is the Age of Digitalization Heading? The Meaning, Characteristics, and Implications of Contemporary Digital Transformation. Sustainability 2021, 13, 8909. [Google Scholar] [CrossRef]

- Shah, S.D.; Gregory, M.A.; Li, S. Cloud-native Network Slicing Using Software Defined Networking Based Multi-access Edge Computing: A Survey. IEEE Access 2021, 9, 10903–10924. [Google Scholar] [CrossRef]

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All One Needs to Know about Fog Computing and Related Edge Computing Paradigms: A Complete Survey. J. Syst. Archit. 2019, 98, 289–330. [Google Scholar] [CrossRef]

- Schneller, L.; Porter, C.N.; Wakefield, A. Implementing Converged Security Risk Management: Drivers, Barriers, and Facilitators. Secur. J. 2023, 36, 333–349. [Google Scholar] [CrossRef]

- Mendez, J.; Bierzynski, K.; Cuéllar, M.P.; Morales, D.P. Edge Intelligence: Concepts, Architectures, Applications and Future Directions. ACM Trans. Embed. Comput. Syst. 2022, 21, 1–41. [Google Scholar] [CrossRef]

- Broschert, S.; Coughlin, T.; Ferraris, M.; Flammini, F.; Florido, J.G.; Gonzalez, A.C.; Henz, P.; de Kerckhove, D.; Rosen, R.; Saracco, R.; et al. Symbiotic Autonomous Systems; White Paper III; IEEE: New York, NY, USA, 2019. [Google Scholar]

- Maier, M.; Ebrahimzadeh, A.; Beniiche, A.; Rostami, S. The Art of 6G (TAO 6G): How to Wire Society 5.0. J. Opt. Commun. Netw. 2022, 14, A101–A112. [Google Scholar] [CrossRef]

- Rohini, P.; Tripathi, S.; Preeti, C.M.; Renuka, A.; Gonzales, J.L.; Gangodkar, D. A Study on the Adoption of Wireless Communication in Big Data Analytics Using Neural Networks and Deep Learning. In Proceedings of the 2nd IEEE International Conference on Advanced Computing and Innovative Technologies in Engineering, Greater Noida, India, 28–29 April 2022; pp. 1071–1076. [Google Scholar]

- Coombs, C.; Hislop, D.; Taneva, S.K.; Barnard, S. The Strategic Impacts of Intelligent Automation for Knowledge and Service Work: An Interdisciplinary Review. J. Strateg. Inf. Syst. 2020, 29, 101600. [Google Scholar] [CrossRef]

- Cheng, X.; Fang, L.; Hong, X.; Yang, L. Exploiting Mobile Big Data: Sources, Features, and Applications. IEEE Netw. 2017, 31, 72–79. [Google Scholar] [CrossRef]

- Mosqueira-Rey, E.; Hernández-Pereira, E.; Alonso-Ríos, D.; Bobes-Bascarán, J.; Fernández-Leal, Á. Human-in-the-loop Machine Learning: A State of the Art. Artif. Intell. Rev. 2023, 56, 3005–3054. [Google Scholar] [CrossRef]

- Rahman, A.; Hossain, M.S.; Muhammad, G.; Kundu, D.; Debnath, T.; Rahman, M.; Khan, M.S.; Tiwari, P.; Band, S.S. Federated Learning-based AI Approaches in Smart Healthcare: Concepts, Taxonomies, Challenges and Open Issues. Clust. Comput. 2023, 26, 2271–2311. [Google Scholar] [CrossRef]

- Sergiou, C.; Lestas, M.; Antoniou, P.; Liaskos, C.; Pitsillides, A. Complex Systems: A Communication Networks Perspective Towards 6G. IEEE Access 2020, 8, 89007–89030. [Google Scholar] [CrossRef]

- Li, H.; Song, J.B. Behavior Dynamics in Cognitive Radio Networks: An Interacting Particle System Approach. In Proceedings of the IEEE International Conference on Communications (ICC), Ottawa, ON, Canada, 10–15 June 2012; pp. 1581–1585. [Google Scholar]

- Esenogho, E.; Walingo, T. Primary Users ON/OFF Behaviour Models in Cognitive Radio Networks. In Proceedings of the International Conference on Wireless and Mobile Communication Systems (WMCS), Lisbon, Portugal, 30 October–1 November 2014; pp. 209–214. [Google Scholar]

- Nleya, B.; Mutsvangwa, A. Enhanced Congestion Management for Minimizing Network Performance Degradation in OBS Networks. SAIEE Afr. Res. J. 2018, 109, 48–57. [Google Scholar] [CrossRef]

- Sharma, P. Evolution of Mobile Wireless Communication Networks-1G to 5G as well as Future Prospective of Next Generation Communication Network. Int. J. Comput. Sci. Mob. Comput. 2023, 2, 47–53. [Google Scholar]

- Hlophe, M.C.; Maharaj, B.T. AI Meets CRNs: A Prospective Review on the Application of Deep Architectures in Spectrum Management. IEEE Access 2021, 9, 113954–113996. [Google Scholar] [CrossRef]

- Xin, Y.; Yang, K.; Chih-Lin, I.; Shamsunder, S.; Lin, X.; Lai, L. Guest Editorial: AI-Powered Telco Network Automation: 5G Evolution and 6G. IEEE Wirel. Commun. 2023, 30, 68–69. [Google Scholar] [CrossRef]

- Miraz, M.H.; Ali, M.; Excell, P.S. A Review on Internet of Things (IoT), Internet of Everything (IoE) and Internet of Nano Things (IoNT). In Proceedings of the 2015 Internet Technologies and Applications (ITA), Wrexham, UK, 8–11 September 2015; pp. 219–224. [Google Scholar]

- Barrett, H.C. Towards a Cognitive Science of the Human: Cross-cultural Approaches and their Urgency. Trends Cogn. Sci. 2020, 24, 620–638. [Google Scholar] [CrossRef] [PubMed]

- Asheralieva, A.; Niyato, D. Optimizing Age of Information and Security of the Next-Generation Internet of Everything Systems. IEEE Internet Things J. 2022, 9, 20331–20351. [Google Scholar] [CrossRef]

- Enke, B.; Graeber, T. Cognitive Uncertainty. Q. J. Econ. 2023, 138, 2021–2067. [Google Scholar] [CrossRef]

- Hlophe, M.C.; Maharaj, B.T. Prospect-theoretic DRL Approach for Container Provisioning in Energy-constrained Edge Platforms. In Proceedings of the IEEE 97th VTC2023-Spring, Florence, Italy, 20–23 June 2023; pp. 1–5. [Google Scholar]

- Majid, M.; Habib, S.; Javed, A.R.; Rizwan, M.; Srivastava, G.; Gadekallu, T.R.; Lin, J.C. Applications of Wireless Sensor Networks and Internet of Things Frameworks in the Industry Revolution 4.0: A Systematic Literature Review. Sensors 2022, 22, 2087. [Google Scholar] [CrossRef] [PubMed]

- Okegbile, S.D.; Cai, J.; Yi, C.; Niyato, D. Human Digital Twin for Personalized Healthcare: Vision, Architecture and Future Directions. IEEE Netw. 2022, 37, 262–269. [Google Scholar] [CrossRef]

- Dwivedi, R.; Mehrotra, D.; Chandra, S. Potential of Internet of Medical Things (IoMT) Applications in Building a Smart Healthcare System: A Systematic Review. J. Oral Biol. Craniofacial Res. 2021, 12, 302–318. [Google Scholar] [CrossRef]

- Berglund, J. Technology you can Swallow: Moving Beyond Wearable Sensors, Researchers are Creating Ingestible Ones. IEEE Pulse 2018, 9, 15–18. [Google Scholar] [CrossRef]

- Chude-Okonkwo, U.A.; Malekian, R.; Maharaj, B.T. Molecular Communication Model for Targeted Drug Delivery in Multiple Disease Sites with Diversely Expressed Enzymes. IEEE Trans. Nanobiosci. 2016, 15, 230–245. [Google Scholar] [CrossRef]

- Wang, Y.; Zhu, H.; Wang, Z.; Li, H.; Li, G. A Uniform Parcel Delivery System Based on IoT. Adv. Internet Things 2018, 8, 39–63. [Google Scholar] [CrossRef]

- Yi, X.; Liu, F.; Liu, J.; Jin, H. Building a Network Highway for Big Data: Architecture and Challenges. IEEE Netw. 2014, 28, 5–13. [Google Scholar] [CrossRef]

- Ghosh, A.; Chakraborty, D.; Law, A. Artificial Intelligence in Internet of Things. CAAI Trans. Intell. Technol. 2018, 3, 208–218. [Google Scholar] [CrossRef]

- Mahmood, N.H.; Böcker, S.; Munari, A.; Clazzer, F.; Moerman, I.; Mikhaylov, K.; Lopez, O.; Park, O.S.; Mercier, E.; Bartz, H.; et al. White Paper on Critical and Massive Machine Type Communication towards 6G. arXiv 2020, arXiv:2004.14146. [Google Scholar]

- Cisco. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update, 2017–2022; Cisco, 2019. [Google Scholar]

- Conti, M.; Das, S.K.; Bisdikian, C.; Kumar, M.; Ni, L.M.; Passarella, A.; Roussos, G.; Tröster, G.; Tsudik, G.; Zambonelli, F. Looking Ahead in Pervasive Computing: Challenges and Opportunities in the era of cyber-physical Convergence. Pervasive Mob. Comput. 2012, 8, 2–21. [Google Scholar] [CrossRef]

- Webb, M.; Cox, M. A Review of Pedagogy Related to Information and Communications Technology. Technol. Pedagog. Educ. 2004, 13, 235–286. [Google Scholar] [CrossRef]

- Mohsan, S.A.; Mazinani, A.; Malik, W.; Younas, I.; Othman, N.Q.; Amjad, H.; Mahmood, A. 6G: Envisioning the Key Technologies, Applications and Challenges. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 14–23. [Google Scholar] [CrossRef]

- Hoey, J.; Schröder, T.; Morgan, J.; Rogers, K.B.; Rishi, D.; Nagappan, M. Artificial Intelligence and Social Simulation: Studying Group Dynamics on a Massive Scale. Small Group Res. 2018, 49, 647–683. [Google Scholar] [CrossRef]

- Blesch, K.; Hauser, O.P.; Jachimowicz, J.M. Measuring Inequality Beyond the Gini Coefficient May Clarify Conflicting Findings. Nat. Hum. Behav. 2022, 6, 1525–1536. [Google Scholar] [CrossRef]

- van Mierlo, T.; Hyatt, D.; Ching, A.T. Employing the Gini Coefficient to Measure Participation Inequality in Treatment-focused Digital Health Social Networks. Netw. Model. Anal. Health Inform. Bioinform. 2016, 5, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Daly, E.M.; Haahr, M. Social Network Analysis for Information Flow in Disconnected Delay-tolerant MANETs. IEEE Trans. Mob. Comput. 2009, 8, 606–621. [Google Scholar] [CrossRef]

- Hui, P.; Crowcroft, J.; Yoneki, E. Bubble Rap: Social-based Forwarding in Delay-tolerant Networks. In Proceedings of the 9th ACM International Symposium on Mobile Ad Hoc Networking and Computing, Los Angeles, CA, USA, 26–30 May 2008; pp. 241–250. [Google Scholar]

- Aslam, S.; Shahid, A.; Lee, K.G. Primary User Behavior Aware Spectrum Allocation Scheme for Cognitive Radio Networks. Comput. Electr. Eng. 2015, 42, 135–147. [Google Scholar] [CrossRef]

- Sen, A.K. Neo-Classical and Neo-Keynesian Theories of Distribution. Econ. Rec. 2002, 4, 478. [Google Scholar] [CrossRef]

- Xing, Y.; Chandramouli, R. Human Behavior Inspired Cognitive Radio Network Design. IEEE Commun. Mag. 2008, 46, 122–127. [Google Scholar] [CrossRef]

- Niemiec, R.; McCaffrey, S.; Jones, M. Clarifying the Degree and Type of Public Good Collective Action Problem Posed by Natural Resource Management Challenges. Ecol. Soc. 2020, 25, 30. [Google Scholar] [CrossRef]

- Sande, M.M.; Hlophe, M.C.; Maharaj, B.T. A Backhaul Adaptation Scheme for IAB Networks Using Deep Reinforcement Learning With Recursive Discrete Choice Model. IEEE Access 2023, 9, 14181–14201. [Google Scholar] [CrossRef]

- Davis, J.W.; McDermott, R. The Past, Present, and Future of Behavioral IR. Int. Organ. 2021, 75, 147–177. [Google Scholar] [CrossRef]

- Gaina, R.D. Playing with Evolution. IEEE Potentials 2022, 41, 44–47. [Google Scholar] [CrossRef]

- Aimone, J.B.; Parekh, O.D.; Severa, W.M. Neural Computing for Scientific Computing Applications; Sandia National Lab: Albuquerque, NM, USA, 2017.

- Redhead, D.; Power, E.A. Social Hierarchies and Social Networks in Humans. Philos. Trans. R. Soc. B 2022, 377, 20200440. [Google Scholar] [CrossRef]

- Sivakumaran, A.; Alfa, A.S.; Maharaj, B.T. An Empirical Analysis of the Effect of Malicious Users in Decentralised Cognitive Radio Networks. In Proceedings of the 89th IEEE VTC2019-Spring, Kuala Lumpur, Malaysia, 28 April–1 May 2019; pp. 1–5. [Google Scholar]

- Haenggi, M.; Andrews, J.G.; Baccelli, F.; Dousse, O.; Franceschetti, M. Stochastic Geometry and Random Graphs for the Analysis and Design of Wireless Networks. IEEE J. Sel. Areas Commun. 2009, 27, 1029–1046. [Google Scholar] [CrossRef]

- Alfa, A.S. Queueing Theory for Telecommunications: Discrete Time Modelling of a Single Node System; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Prados-Garzon, J.; Ameigeiras, P.; Ramos-Munoz, J.J.; Navarro-Ortiz, J.; Andres-Maldonado, P.; Lopez-Soler, J.M. Performance Modeling of Softwarized Network Services Based on Queuing Theory with Experimental Validation. IEEE Trans. Mob. Comput. 2019, 20, 1558–1573. [Google Scholar] [CrossRef]

- Walton, N.; Xu, K. Learning and Information in Stochastic Networks and Queues. In Tutorials in Operations Research: Emerging Optimization Methods and Modeling Techniques with Applications; INFORMS TutORials, Published online: Catonsville, MD, USA, 2021; pp. 161–198. [Google Scholar]

- Ojeda, C.; Cvejoski, K.; Georgiev, B.; Bauckhage, C.; Schuecker, J.; Sánchez, R.J. Learning Deep Generative Models for Queuing Systems. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 9214–9222. [Google Scholar]

- Abernethy, J.; Bartlett, P.L.; Hazan, E. Blackwell Approachability and No-regret Learning are Equivalent. In Proceedings of the 24th Annual Conference on Learning Theory—JMLR Workshop and Conference Proceedings, Budapest, Hungary, 9–11 June 2011; pp. 27–46. [Google Scholar]

- Zhang, C.; Ren, M.; Urtasun, R. Graph Hypernetworks for Neural Architecture Search. arXiv 2018, arXiv:1810.05749. [Google Scholar]

- Saad, W.; Han, Z.; Zheng, R.; Debbah, M.; Poor, H.V. A College Admissions Game for Uplink User Association in Wireless Small Cell Networks. In Proceedings of the IEEE INFOCOM, Toronto, ON, Canada, 24 April–2 May 2014; pp. 1096–1104. [Google Scholar]

- Ye, Q.; Rong, B.; Chen, Y.; Al-Shalash, M.; Caramanis, C.; Andrews, J.G. User Association for Load Balancing in Heterogeneous Cellular Networks. IEEE Trans. Wirel. Commun. 2013, 12, 2706–2716. [Google Scholar] [CrossRef]

- Tang, X.; Ren, P.; Wang, Y.; Du, Q.; Sun, L. User Association as a Stochastic Game for Enhanced Performance in Heterogeneous Networks. In Proceedings of the IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 3417–3422. [Google Scholar]

- Suriya, M. Machine Learning and Quantum Computing for 5G/6G Communication Networks—A Survey. Int. J. Intell. Netw. 2022, 3, 197–203. [Google Scholar]

- Córcoles, A.D.; Kandala, A.; Javadi-Abhari, A.; McClure, D.T.; Cross, A.W.; Temme, K.; Nation, P.D.; Steffen, M.; Gambetta, J.M. Challenges and Opportunities of Near-term Quantum Computing Systems. Proc. IEEE 2019, 108, 1338–1352. [Google Scholar] [CrossRef]

- Hlophe, M.C.; Maharaj, B.T. Spectrum Occupancy Reconstruction in Distributed Cognitive Radio Networks Using Deep Learning. IEEE Access 2019, 7, 14294–14307. [Google Scholar] [CrossRef]

- Dai, H.N.; Wong, R.C.; Wang, H.; Zheng, Z.; Vasilakos, A.V. Big Data Analytics for Large-scale Wireless Networks: Challenges and Opportunities. ACM Comput. Surv. (CSUR) 2019, 52, 1–36. [Google Scholar] [CrossRef]

- ur Rehman, M.H.; Yaqoob, I.; Salah, K.; Imran, M.; Jayaraman, P.P.; Perera, C. The Role of Big Data Analytics in Industrial Internet of Things. Future Gener. Comput. Syst. 2019, 99, 247–259. [Google Scholar] [CrossRef]

- Singh, S.K.; El-Kassar, A.N. Role of Big Data Analytics in Developing Sustainable Capabilities. J. Clean. Prod. 2019, 213, 1264–1273. [Google Scholar] [CrossRef]

- Awotunde, J.B.; Adeniyi, E.A.; Ogundokun, R.O.; Ayo, F.E. Application of Big Data with Fintech in Financial Services. In Fintech with Artificial Intelligence, Big Data, and Blockchain; Springer: Singapore, 2021; pp. 107–132. [Google Scholar]

- Qin, W.; Chen, S.; Peng, M. Recent advances in Industrial Internet: Insights and Challenges. Digit. Commun. Netw. 2020, 6, 1–3. [Google Scholar] [CrossRef]

- Sittig, D.F.; Singh, H. A New Socio-technical Model for Studying Health Information Technology in Complex Adaptive Healthcare Systems. In Cognitive Informatics for Biomedicine; Springer: Cham, Switzerland, 2015; pp. 59–80. [Google Scholar]

- Reichl, P. Quality of Experience in Convergent Communication Ecosystems. In Media Convergence Handbook—Vol. 2: Firms and User Perspectives; Springer: Berlin/Heidelberg, Germany, 2016; pp. 225–244. [Google Scholar]

- Gweon, H.; Fan, J.; Kim, B. Socially Intelligent Machines that Learn from Humans and Help Humans Learn. Philos. Trans. R. Soc. A 2023, 381, 20220048. [Google Scholar] [CrossRef] [PubMed]

- Koster, R.; Balaguer, J.; Tacchetti, A.; Weinstein, A.; Zhu, T.; Hauser, O.; Williams, D.; Campbell-Gillingham, L.; Thacker, P.; Botvinick, M.; et al. Human-centred Mechanism Design with Democratic AI. Nat. Hum. Behav. 2022, 6, 1398–1407. [Google Scholar] [CrossRef] [PubMed]

- Conti, M.; Mordacchini, M.; Passarella, A. Design and Performance Evaluation of Data Dissemination Systems for Opportunistic Networks Based on Cognitive Heuristics. ACM Trans. Auton. Adapt. Syst. (TAAS) 2013, 8, 1–32. [Google Scholar] [CrossRef]

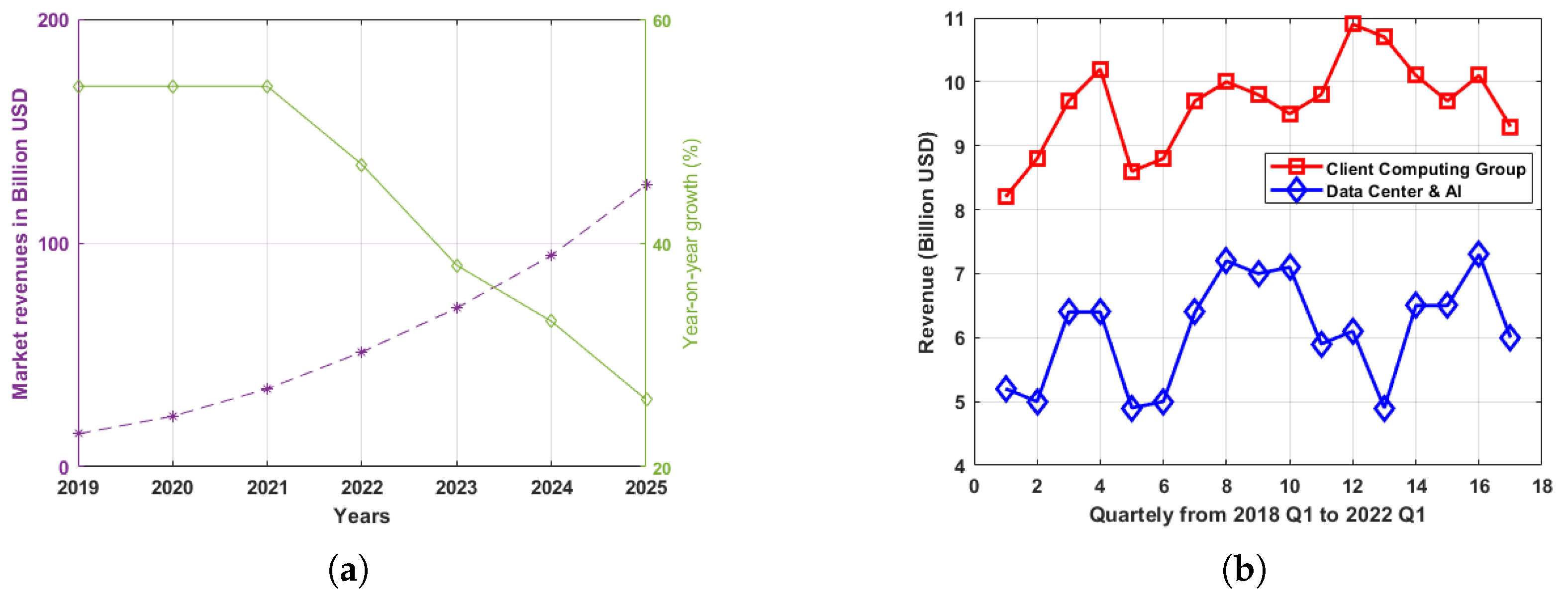

- Forecast Growth of the Artificial Intelligence (AI) Software Market Worldwide from 2019 to 2025. 27 June 2022. Available online: https://www.statista.com/statistics/607960/worldwide-artificial-intelligence-market-growth/ (accessed on 13 September 2022).

- Mookerjee, J.; Rao, O.R. A Review of the Impact of Disruptive Innovations on Markets and Business Performance of Players. Int. J. Grid Distrib. Comput. 2021, 14, 605–630. [Google Scholar]

- Aker, J.; Cariolle, J. The Use of Digital for Public Service Provision in sub-Saharan Africa. FERDI Notes Brèves/Policy Briefs 2022. Available online: https://ferdi.fr/dl/df-xVbQVhXVJgr2JBZSad2PuLPM/ferdi-b209-the-use-of-digital-for-public-service-provision-in-sub-saharan.pdf (accessed on 17 February 2023).

- Periaiya, S.; Nandukrishna, A.T. What Drives User Stickiness and Satisfaction in OTT Video Streaming Platforms? A Mixed-method Exploration. Int. J. Hum.-Comput. Interact. 2023, 1–7. [Google Scholar] [CrossRef]

- Nokia, O.; Yrjölä, F.S.; Matinmikko-Blue, M. The Importance of Business Models in Mobile Communications. In The Changing World of Mobile Communications: 5G, 6G and the Future of Digital Services; Palgrave Macmillan: Cham, Switzerland, 2023; Volume 138. [Google Scholar]

- Shrivastava, S. Digital Disruption is Redefining the Customer Experience: The Digital Transformation Approach of the Communications Service Providers. Telecom Bus. Rev. 2017, 10, 41–52. [Google Scholar]

- Ozdogan, M.O.; Carkacioglu, L.; Canberk, B. Digital Twin Driven Blockchain Based Reliable and Efficient 6G Edge Network. In Proceedings of the 18th IEEE International Conference on Distributed Computing in Sensor Systems, Los Angeles, CA, USA, 30 May–1 June 2022; pp. 342–348. [Google Scholar]

- Zeb, S.; Mahmood, A.; Hassan, S.A.; Piran, M.J.; Gidlund, M.; Guizani, M. Industrial Digital Twins at the Nexus of NextG Wireless Networks and Computational Intelligence: A Survey. J. Netw. Comput. Appl. 2022, 200, 103309. [Google Scholar] [CrossRef]

- Rakuten Mobile and Technical University of Munich Launch Digital Network Twins Research. 12 April 2022. Available online: https://corp.mobile.rakuten.co.jp/english/news/press/2022/0420-01/ (accessed on 1 December 2022).

- Ning, D.; MathWorks Australia. Developing and Deploying Analytics for IoT Systems; Matlab: Melbourne, Australia, 2017. [Google Scholar]

- Saghiri, A.M. Cognitive Internet of Things: Challenges and Solutions. In Artificial Intelligence-Based Internet of Things Systems; Springer: Cham, Switzerland, 2022; pp. 335–362. [Google Scholar]

- Rejeb, A.; Rejeb, K.; Treiblmaier, H. Mapping Metaverse Research: Identifying Future Research Areas Based on Bibliometric and Topic Modeling Techniques. Information 2023, 14, 356. [Google Scholar] [CrossRef]

- Broo, D.G. Transdisciplinarity and Three Mindsets for Sustainability in the Age of Cyber-physical Systems. J. Ind. Inf. Integr. 2022, 27, 100290. [Google Scholar] [CrossRef]

- Zaki, M. Digital Transformation: Harnessing Digital Technologies for the Next Generation of Services. J. Serv. Mark. 2019, 33, 429–435. [Google Scholar] [CrossRef]

- Truby, J.; Brown, R. Human Digital Thought Clones: The Holy Grail of Artificial Intelligence for Big Data. Inf. Commun. Technol. Law 2021, 30, 140–168. [Google Scholar] [CrossRef]

- Pang, T.Y.; Pelaez Restrepo, J.D.; Cheng, C.T.; Yasin, A.; Lim, H.; Miletic, M. Developing a Digital Twin and Digital Thread Framework for an ‘Industry 4.0’ Shipyard. Appl. Sci. 2021, 11, 1097. [Google Scholar] [CrossRef]

- Yu, W.; Patros, P.; Young, B.; Klinac, E.; Walmsley, T.G. Energy Digital Twin Technology for Industrial Energy Management: Classification, Challenges and Future. Renew. Sustain. Energy Rev. 2022, 161, 112407. [Google Scholar] [CrossRef]

- Dai, Y.; Zhang, K.; Maharjan, S.; Zhang, Y. Deep Reinforcement Learning for Stochastic Computation Offloading in Digital Twin Networks. IEEE Trans. Ind. Inform. 2020, 17, 4968–4977. [Google Scholar] [CrossRef]

- Wright, L.; Davidson, S. How to tell the difference between a model and a digital twin. Adv. Model. Simul. Eng. Sci. 2020, 7, 1–3. [Google Scholar] [CrossRef]

- Zelenbaba, S.; Rainer, B.; Hofer, M.; Zemen, T. Wireless Digital Twin for Assessing the Reliability of Vehicular Communication Links. In Proceedings of the IEEE Globecom Workshops, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 1034–1039. [Google Scholar]

- Vaezi, M.; Noroozi, K.; Todd, T.D.; Zhao, D.; Karakostas, G.; Wu, H.; Shen, X. Digital Twins From a Networking Perspective. IEEE IoT J. 2022, 9, 23525–23544. [Google Scholar] [CrossRef]

- Akhtar, M.W.; Hassan, S.A.; Ghaffar, R.; Jung, H.; Garg, S.; Hossain, M.S. The Shift to 6G Communications: Vision and Requirements. Hum.-Centric Comput. Inf. Sci. 2020, 10, 1–27. [Google Scholar] [CrossRef]

- Sultana, S.; Akter, S.; Kyriazis, E. How Data-driven Innovation Capability is Shaping the Future of Market Agility and Competitive Performance? Technol. Forecast. Soc. Change 2022, 174, 121260. [Google Scholar] [CrossRef]

- Vilà, I.; Sallent, O.; Pérez-Romero, J. On the Design of a Network Digital Twin for the Radio Access Network in 5G and Beyond. Sensors 2023, 23, 1197. [Google Scholar] [CrossRef]

- Fettweis, G.P.; Boche, H. 6G: The Personal Tactile Internet—And Open Questions for Information Theory. IEEE BITS Inf. Theory Mag. 2021, 1, 71–82. [Google Scholar] [CrossRef]

- Deng, J.; Zheng, Q.; Liu, G.; Bai, J.; Tian, K.; Sun, C.; Yan, Y.; Liu, Y. A Digital Twin Approach for Self-optimization of Mobile Networks. In Proceedings of the IEEE Wireless Communications and Networking Conference Workshops (WCNCW), Nanjing, China, 29 March 2021; pp. 1–6. [Google Scholar]

- You, X.; Wang, C.X.; Huang, J.; Gao, X.; Zhang, Z.; Wang, M.; Huang, Y.; Zhang, C.; Jiang, Y.; Wang, J.; et al. Towards 6G Wireless Communication Networks: Vision, Enabling Technologies, and New Paradigm Shifts. Sci. China Inf. Sci. 2021, 64, 1–74. [Google Scholar] [CrossRef]

- Pham, X.Q.; Nguyen, T.D.; Huynh-The, T.; Huh, E.N.; Kim, D.S. Distributed Cloud Computing: Architecture, Enabling Technologies, and Open Challenges. IEEE Consum. Electron. Mag. 2022, 12, 98–106. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762. [Google Scholar] [CrossRef]

- Shah, D.R.; Dhawan, D.A.; Thoday, V. An Overview on Security Challenges in Cloud, Fog, and Edge Computing. In Data Science and Security, Proceedings of IDSCS, Bangalore, India, 11–12 February 2022; Springer: Singapore, 2022; pp. 337–345. [Google Scholar]

- Singh, S.P.; Nayyar, A.; Kumar, R.; Sharma, A. Fog Computing: From Architecture to Edge Computing and Big Data Processing. J. Supercomput. 2019, 75, 2070–2105. [Google Scholar] [CrossRef]

- Čilić, I.; Krivić, P.; Podnar Žarko, I.; Kušek, M. Performance Evaluation of Container Orchestration Tools in Edge Computing Environments. Sensors 2023, 23, 4008. [Google Scholar] [CrossRef] [PubMed]

- Xu, X.; Huang, Q.; Yin, X.; Abbasi, M.; Khosravi, M.R.; Qi, L. Intelligent Offloading for Collaborative Smart City Services in Edge Computing. IEEE Internet Things J. 2020, 7, 7919–7927. [Google Scholar] [CrossRef]

- Regragui, Y.; Moussa, N. A Real-time Path Planning for Reducing Vehicles Traveling Time in Cooperative-intelligent Transportation Systems. Simul. Model. Pract. Theory 2023, 123, 102710. [Google Scholar] [CrossRef]

- Wen, C.H.; Wu, W.N.; Fu, C. Preferences for Alternative Travel Arrangements in Case of Major Flight Delays: Evidence from Choice Experiments with Prospect Theory. Transp. Policy 2019, 83, 111–119. [Google Scholar] [CrossRef]

- Banafaa, M.; Shayea, I.; Din, J.; Azmi, M.H.; Alashbi, A.; Daradkeh, Y.I.; Alhammadi, A. 6G Mobile Communication Technology: Requirements, Targets, Applications, Challenges, Advantages, and Opportunities. Alex. Eng. J. 2022, 64, 245–274. [Google Scholar] [CrossRef]

- Shahjalal, M.; Kim, W.; Khalid, W.; Moon, S.; Khan, M.; Liu, S.; Lim, S.; Kim, E.; Yun, D.W.; Lee, J.; et al. Enabling Technologies for AI Empowered 6G Massive Radio Access Networks. ICT Express 2022, 9, 341–355. [Google Scholar] [CrossRef]

- Abreha, H.G.; Hayajneh, M.; Serhani, M.A. Federated Learning in Edge Computing: A Systematic Survey. Sensors 2022, 22, 450. [Google Scholar] [CrossRef] [PubMed]

- Hlophe, M.C.; Maharaj, B.T. Secondary User Experience-oriented Resource Allocation in AI-empowered Cognitive Radio Networks Using Deep Neuroevolution. In Proceedings of the 91st IEEE VTC-Spring, Antwerp, Belgium, 25–28 May 2020; pp. 1–5. [Google Scholar]

- Yang, Z.; Chen, M.; Wong, K.K.; Poor, H.V.; Cui, S. Federated Learning for 6G: Applications, Challenges, and Opportunities. Engineering 2021, 8, 33–41. [Google Scholar] [CrossRef]

- Hlophe, M.C.; Maharaj, B.T.; Sande, M.M. Energy-Efficient Transmissions in Federated Learning-Assisted Cognitive Radio Networks. In Proceedings of the IEEE 21st International Conference on Communication Technology (ICCT), Tianjin, China, 13–16 October 2021; pp. 216–222. [Google Scholar]

- Dean, J.; Corrado, G.; Monga, R.; Chen, K.; Devin, M.; Mao, M.; Ranzato, M.A.; Senior, A.; Tucker, P.; Yang, K.; et al. Large Scale Distributed Deep Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1223–1231. [Google Scholar]

- Muhammad, A.; Ahmed, M.; Takayuki, I. Federated Learning Versus Classical Machine Learning: A Convergence Comparison. arXiv 2021, arXiv:2107.10976. [Google Scholar]

- Hadi, M.S.; Lawey, A.Q.; El-Gorashi, T.E.; Elmirghani, J.M. Big Data Analytics for Wireless and Wired Network Design: A Survey. Comput. Netw. 2018, 132, 180–199.

- Nair, A.K.; Sahoo, J.; Raj, E.D. Privacy Preserving Federated Learning Framework for IoMT Based Big Data Analysis Using Edge Computing. Comput. Stand. Interfaces 2023, 86, 103720.

- Werner, A.; Zimmermann, N.; Lentes, J. Approach for a Holistic Predictive Maintenance Strategy by Incorporating a Digital Twin. Procedia Manuf. 2019, 39, 1743–1751.

- Grieves, M. Intelligent Digital Twins and the Development and Management of Complex Systems. Digit. Twin 2022, 2, 8.

- Maharaj, B.T.; Awoyemi, B.S. Deep Learning Opportunities for Resource Management in Cognitive Radio Networks. In Developments in Cognitive Radio Networks: Future Directions for Beyond 5G; Springer: Cham, Switzerland, 2022; pp. 189–207.

- Touloupos, M.; Kapassa, E.; Kyriazis, D.; Christodoulou, K. Test Recommendation for Service Validation in 5G Networks. In Information Systems, Proceedings of the 16th European, Mediterranean, and Middle Eastern Conference, Dubai, United Arab Emirates, 9–10 December 2019; Springer: Cham, Switzerland, 2019; pp. 139–150.

- Alonso, R.S.; Sittón-Candanedo, I.; Casado-Vara, R.; Prieto, J.; Corchado, J.M. Deep Reinforcement Learning for the Management of Software-Defined Networks and Network Function Virtualization in an Edge-IoT Architecture. Sustainability 2020, 12, 5706.

- Zhong, D.; Xia, Z.; Zhu, Y.; Duan, J. Overview of Predictive Maintenance Based on Digital Twin Technology. Heliyon 2023, 9, e14534.

- Zhang, Q.; Mozaffari, M.; Saad, W.; Bennis, M.; Debbah, M. Machine Learning for Predictive On-demand Deployment of UAVs for Wireless Communications. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6.

- Xu, Q.; Zhou, G.; Zhang, C.; Chang, F.; Huang, Q.; Zhang, M.; Zhi, Y. Digital twin-driven Intelligent Maintenance Decision-making System and Key-enabling Technologies for Nuclear Power Equipment. Digit. Twin 2022, 2, 14.

- Waqar, M.; Kim, A. Performance Improvement of Ethernet-based Fronthaul Bridged Networks in 5G Cloud Radio Access Networks. Appl. Sci. 2019, 9, 2823.

- Ding, C.; Ho, I.W. Digital-twin-enabled City-model-aware Deep Learning for Dynamic Channel Estimation in Urban Vehicular Environments. IEEE Trans. Green Commun. Netw. 2022, 6, 1604–1612.

- Mirzaei, J.; Abualhaol, I.; Poitau, G. Network Digital Twin for Open RAN: The Key Enablers, Standardization, and Use Cases. arXiv 2023, arXiv:2308.02644.

- Gaibi, Z.; Jones, G.; Pont, P.; Mihir, V. A Blueprint for Telecom’s Critical Reinvention; McKinsey & Co.: Chicago, IL, USA, 2021.

- Morais, F.Z.; de Almeida, G.M.; Pinto, L.; Cardoso, K.V.; Contreras, L.M.; Righi, R.D.; Both, C.B. PlaceRAN: Optimal Placement of Virtualized Network Functions in the Next-generation Radio Access Networks. arXiv 2021, arXiv:2102.13192.

- Klement, F.; Katzenbeisser, S.; Ulitzsch, V.; Krämer, J.; Stanczak, S.; Utkovski, Z.; Bjelakovic, I.; Wunder, G. Open or Not Open: Are Conventional Radio Access Networks More Secure and Trustworthy than Open-RAN? arXiv 2022, arXiv:2204.12227.

- Yao, J.F.; Yang, Y.; Wang, X.C.; Zhang, X.P. Systematic Review of Digital Twin Technology and Applications. Vis. Comput. Ind. Biomed. Art 2023, 6, 10.

- Lu, Y.; Maharjan, S.; Zhang, Y. Adaptive Edge Association for Wireless Digital Twin Networks in 6G. IEEE Internet Things J. 2021, 8, 16219–16230.

- Lu, Y.; Huang, X.; Zhang, K.; Maharjan, S.; Zhang, Y. Low-latency Federated Learning and Blockchain for Edge Association in Digital Twin Empowered 6G Networks. IEEE Trans. Ind. Inform. 2020, 17, 5098–5107.

- Yu, J.; Alhilal, A.; Hui, P.; Tsang, D.H. Bi-directional Digital Twin and Edge Computing in the Metaverse. arXiv 2022, arXiv:2211.08700.

- Qiu, S.; Zhao, J.; Lv, Y.; Dai, J.; Chen, F.; Wang, Y.; Li, A. Digital-Twin-Assisted Edge-Computing Resource Allocation Based on the Whale Optimization Algorithm. Sensors 2022, 22, 9546.

- Zhou, Z.; Jia, Z.; Liao, H.; Lu, W.; Mumtaz, S.; Guizani, M.; Tariq, M. Secure and Latency-aware Digital Twin Assisted Resource Scheduling for 5G Edge Computing-empowered Distribution Grids. IEEE Trans. Ind. Inform. 2021, 18, 4933–4943.

- Sasikumar, A.; Vairavasundaram, S.; Kotecha, K.; Indragandhi, V.; Ravi, L.; Selvachandran, G.; Abraham, A. Blockchain-based Trust Mechanism for Digital Twin Empowered Industrial Internet of Things. Future Gener. Comput. Syst. 2023, 141, 16–27.

- George, A.S.; George, A.H. A Review of ChatGPT AI’s Impact on Several Business Sectors. Partners Univers. Int. Innov. J. 2023, 1, 9–23.

| Objective | Technique | Algorithm | Considerations | Reference(s) |
|---|---|---|---|---|
| Describe a network DT architecture focused on the RAN and aligned with open RAN | Identify different application use cases and train algorithms under different conditions | Reinforcement learning | Aligned with open RAN for implementation; used to train RL-based capacity-sharing solutions for network slicing | [106] |
| Design a DT technique for self-optimizing mobile networks | Combine expert knowledge with RL and DT | Reinforcement learning | Future network states must be predicted, and optimization decisions are then generated from them using expert knowledge | [108] |
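Rows [106] and [108] of the table above use the network DT as a training environment, in which an RL agent can try capacity-sharing or optimization decisions without disturbing the live network. The following minimal Python sketch illustrates that loop for a toy two-slice cell: tabular Q-learning learns how many capacity units to grant slice A at each load level predicted by the twin. The twin (`ToyCellTwin`), its demand levels, the reward (negative unserved demand), and the training hyperparameters are hypothetical choices made for illustration. They are not the designs of [106] or [108].

```python
import random

# Hypothetical toy setting (not the RAN model of [106] or [108]): one cell with
# 10 capacity units shared between two slices, A and B.
CAPACITY = 10
DEMAND_A = [2, 5, 8]   # slice A demand at each load level (the twin's state index)
ACTIONS = [2, 5, 8]    # capacity units granted to slice A; slice B gets the rest


class ToyCellTwin:
    """Stand-in for a network DT: scores an allocation and predicts the next load level."""

    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)

    def reward(self, state: int, action: int) -> float:
        demand_a = DEMAND_A[state]
        demand_b = CAPACITY - demand_a
        grant_a = ACTIONS[action]
        grant_b = CAPACITY - grant_a
        # Negative total demand left unserved on either slice.
        return -float(max(0, demand_a - grant_a) + max(0, demand_b - grant_b))

    def next_state(self, state: int) -> int:
        # Load drifts by at most one level per step (clipped random walk).
        step = self.rng.choice([-1, 0, 1])
        return min(max(state + step, 0), len(DEMAND_A) - 1)


def train_q_learning(steps: int = 6000, alpha: float = 0.1, gamma: float = 0.9,
                     epsilon: float = 0.2, seed: int = 0) -> list:
    """Tabular Q-learning in which every interaction happens inside the twin."""
    twin = ToyCellTwin(seed)
    rng = random.Random(seed + 1)
    q = [[0.0] * len(ACTIONS) for _ in DEMAND_A]
    state = 0
    for _ in range(steps):
        # Epsilon-greedy action selection.
        if rng.random() < epsilon:
            action = rng.randrange(len(ACTIONS))
        else:
            action = max(range(len(ACTIONS)), key=lambda a: q[state][a])
        r = twin.reward(state, action)
        nxt = twin.next_state(state)
        # One-step Q-learning update towards r + gamma * max_a' Q(s', a').
        q[state][action] += alpha * (r + gamma * max(q[nxt]) - q[state][action])
        state = nxt
    return q


def greedy_policy(q: list) -> list:
    """Best action index per load level according to the learned Q-table."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]


if __name__ == "__main__":
    policy = greedy_policy(train_q_learning())
    for level, action in enumerate(policy):
        print(f"load level {level}: grant {ACTIONS[action]} units to slice A")
```

In this toy, the twin's transitions do not depend on the chosen action, so the learned policy simply matches each load level's demand. A realistic network DT would let allocations influence future states, and that is where the discounted future-value term in the update starts to matter.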

| Objective | Technique | Algorithm | Considerations | Reference(s) |
|---|---|---|---|---|
| Enable new functionalities, e.g., hyper-connected experience and low latency | Formulate an edge association problem w.r.t. network states and varying topology | Deep reinforcement learning | Transfer learning is used to solve the DT migration problem | [143] |
| Incorporate DT into wireless networks to migrate real-time data processing and learning | Blockchain-empowered FML framework running in the DT for collaborative computing | Multi-agent reinforcement learning | Improves system reliability, security, balance, learning accuracy, and time cost | [144] |
| Ensure seamless handover among MEC servers and avoid intermittent metaverse services | Real-time data collection and model training | C-Deep deterministic policy gradient | Interplay between local DT edge computing on the local MEC and global DT computing on the cloud, owing to the nature of network states | [145] |
| Develop an RA model and establish a joint optimization function of power consumption, delay, and unbalanced RA rate | Design a greedy initialization strategy that improves the convergence speed of the DT | Whale optimization algorithm | Demonstrates reductions in the RA objective function value, power consumption, and RA imbalance rate | [146] |
| Address DT construction and DT-assisted resource scheduling challenges, e.g., low accuracy and large iteration delay | Federated machine learning | Deep Q-learning network | Low-latency, accurate, and secure DT that jointly optimizes total iteration delay and loss function, and leverages model recognition | [147] |
| Blockchain proof-of-authority trust mechanism to provide quality services, e.g., data security and privacy | Combine a blockchain-based distributed network with DT for IIoT | Blockchain and deterministic pseudo-random generation (DPRG) | Enhances the authority of decentralized DT-combined blockchain networks | [148] |
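The row for [146] pairs the whale optimization algorithm (WOA) with a greedy initialization strategy intended to speed up convergence. The following Python sketch illustrates that pairing with a standard WOA (shrinking encirclement, random search, and spiral updates) on a deliberately small stand-in problem: splitting one edge server's CPU among tasks to minimize total processing delay. The objective, the workloads and capacity, the quantum-based greedy seed, and all function names (`greedy_seed`, `whale_optimize`) are assumptions made for illustration. They are not the joint power, delay, and imbalance model used in [146].

```python
import math
import random

# Toy problem (an assumption, not the model of [146]): split one edge server's CPU
# capacity F among tasks with workloads w_k so that total processing delay
#   f(x) = sum_k w_k / (x_k * F),   x_k > 0,  sum_k x_k = 1
# is minimized. Its closed-form optimum is x_k proportional to sqrt(w_k).
WORKLOADS = [4.0, 1.0, 9.0, 16.0]   # hypothetical task workloads (Gcycles)
F = 10.0                            # hypothetical server capacity (GHz)
LOW, HIGH = 1e-3, 1.0               # search-box bounds for each coordinate


def total_delay(x):
    return sum(w / (xk * F) for w, xk in zip(WORKLOADS, x))


def to_allocation(position):
    # Normalize a position so that the CPU shares sum to 1.
    s = sum(position)
    return [v / s for v in position]


def clip(position):
    return [min(max(v, LOW), HIGH) for v in position]


def fitness(position):
    return total_delay(to_allocation(position))


def greedy_seed(quanta=12):
    """Greedy start: give each task one quantum, then hand out the rest one at a
    time to whichever task's delay drops the most."""
    units = [1] * len(WORKLOADS)
    for _ in range(quanta - len(WORKLOADS)):
        gains = [w / u - w / (u + 1) for w, u in zip(WORKLOADS, units)]
        units[gains.index(max(gains))] += 1
    return [u / quanta for u in units]


def whale_optimize(n_whales=20, iters=200, b=1.0, seed=0):
    rng = random.Random(seed)
    dim = len(WORKLOADS)
    whales = [clip([rng.random() for _ in range(dim)]) for _ in range(n_whales)]
    whales[0] = greedy_seed()   # greedy initialization of one search agent
    best = min(whales, key=fitness)[:]
    best_f = fitness(best)
    for t in range(iters):
        a = 2.0 - 2.0 * t / iters   # shrinks linearly from 2 towards 0
        for i, x in enumerate(whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            p, ell = rng.random(), rng.uniform(-1.0, 1.0)
            if p < 0.5:
                # |A| < 1: encircle the best whale; otherwise search around a random whale.
                ref = best if abs(A) < 1.0 else whales[rng.randrange(n_whales)]
                new = [r - A * abs(C * r - xj) for r, xj in zip(ref, x)]
            else:
                # Bubble-net attack: logarithmic spiral around the best whale.
                new = [abs(r - xj) * math.exp(b * ell) * math.cos(2.0 * math.pi * ell) + r
                       for r, xj in zip(best, x)]
            whales[i] = clip(new)
            f = fitness(whales[i])
            if f < best_f:
                best, best_f = whales[i][:], f
    return to_allocation(best), best_f


if __name__ == "__main__":
    alloc, delay = whale_optimize()
    print("greedy seed delay:", round(fitness(greedy_seed()), 4))
    print("WOA shares:", [round(v, 3) for v in alloc], "delay:", round(delay, 4))
    print("closed-form optimum:", sum(math.sqrt(w) for w in WORKLOADS) ** 2 / F)
```

This toy objective has a closed-form optimum: shares proportional to √w_k, which give a total delay of (Σ√w_k)²/F = 10 for the workloads above. That makes it easy to check how much the WOA stage improves on the greedy starting point, which has a total delay of 10.14 here.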

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hlophe, M.C.; Maharaj, B.T. From Cyber–Physical Convergence to Digital Twins: A Review on Edge Computing Use Case Designs. Appl. Sci. 2023, 13, 13262. https://doi.org/10.3390/app132413262
