BTDNet: A Multi-Modal Approach for Brain Tumor Radiogenomic Classification

Abstract

:1. Introduction

2. Related Works

3. Materials and Method

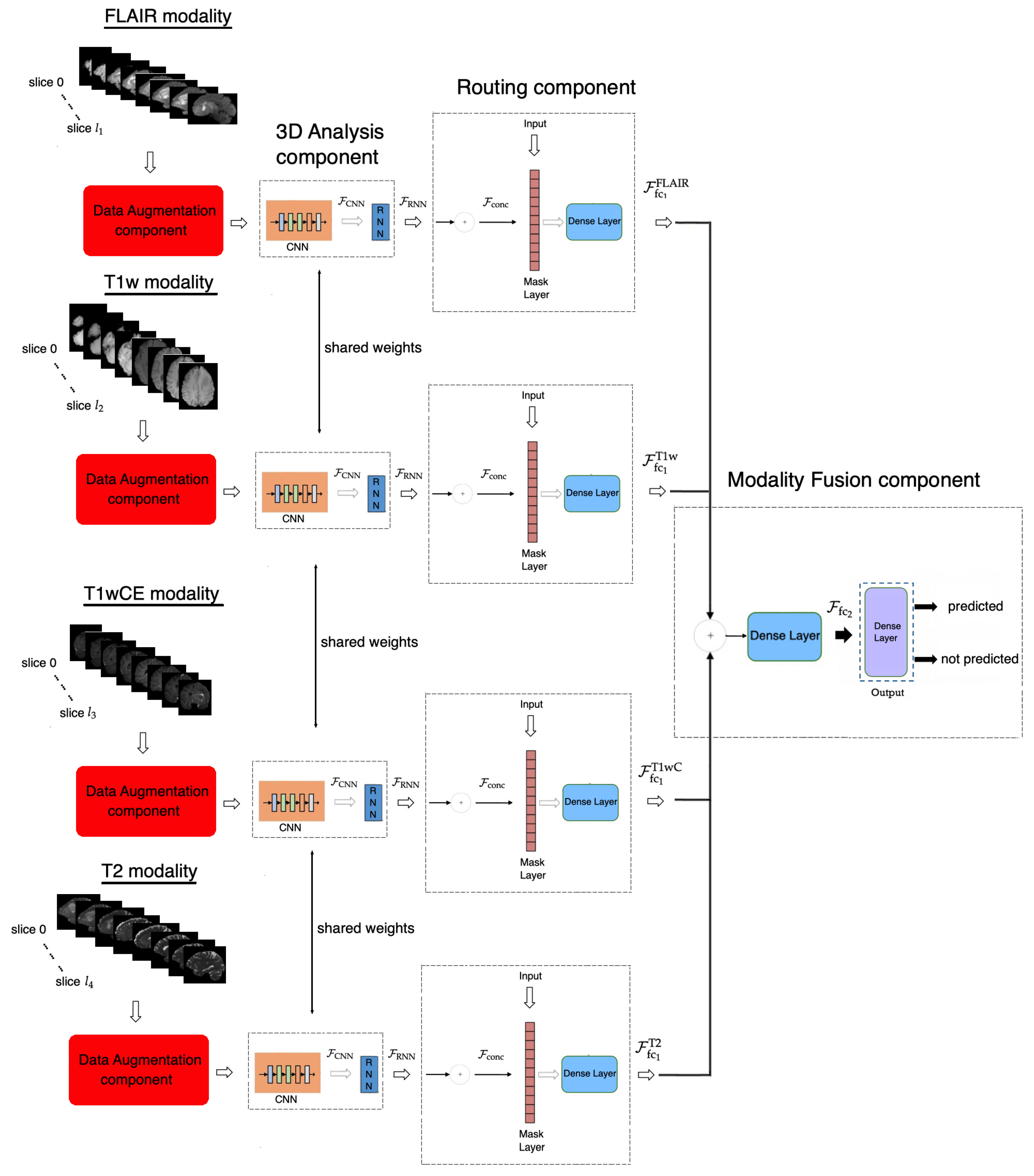

3.1. Proposed Method

3.1.1. Data Augmentation Component

3.1.2. Three-Dimensional Analysis Component

3.1.3. Routing Component

3.1.4. Modality Fusion Component

3.1.5. Objective Function

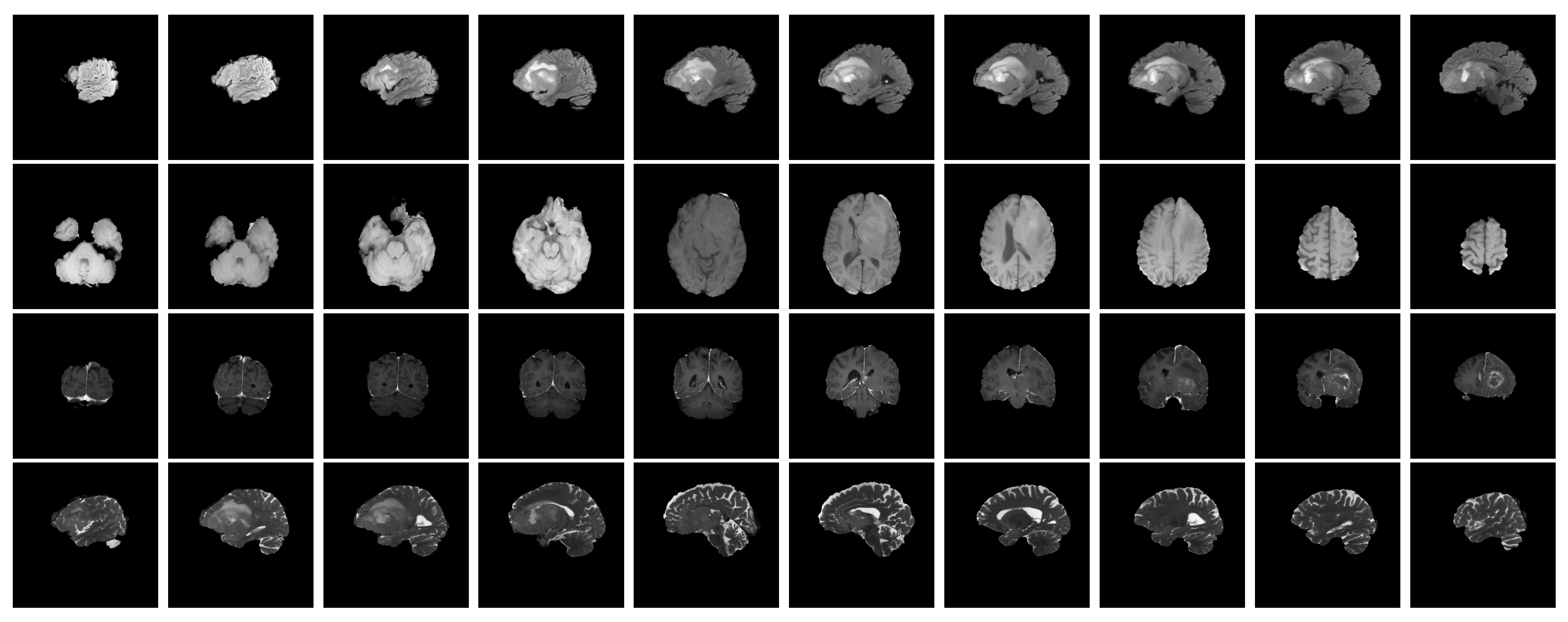

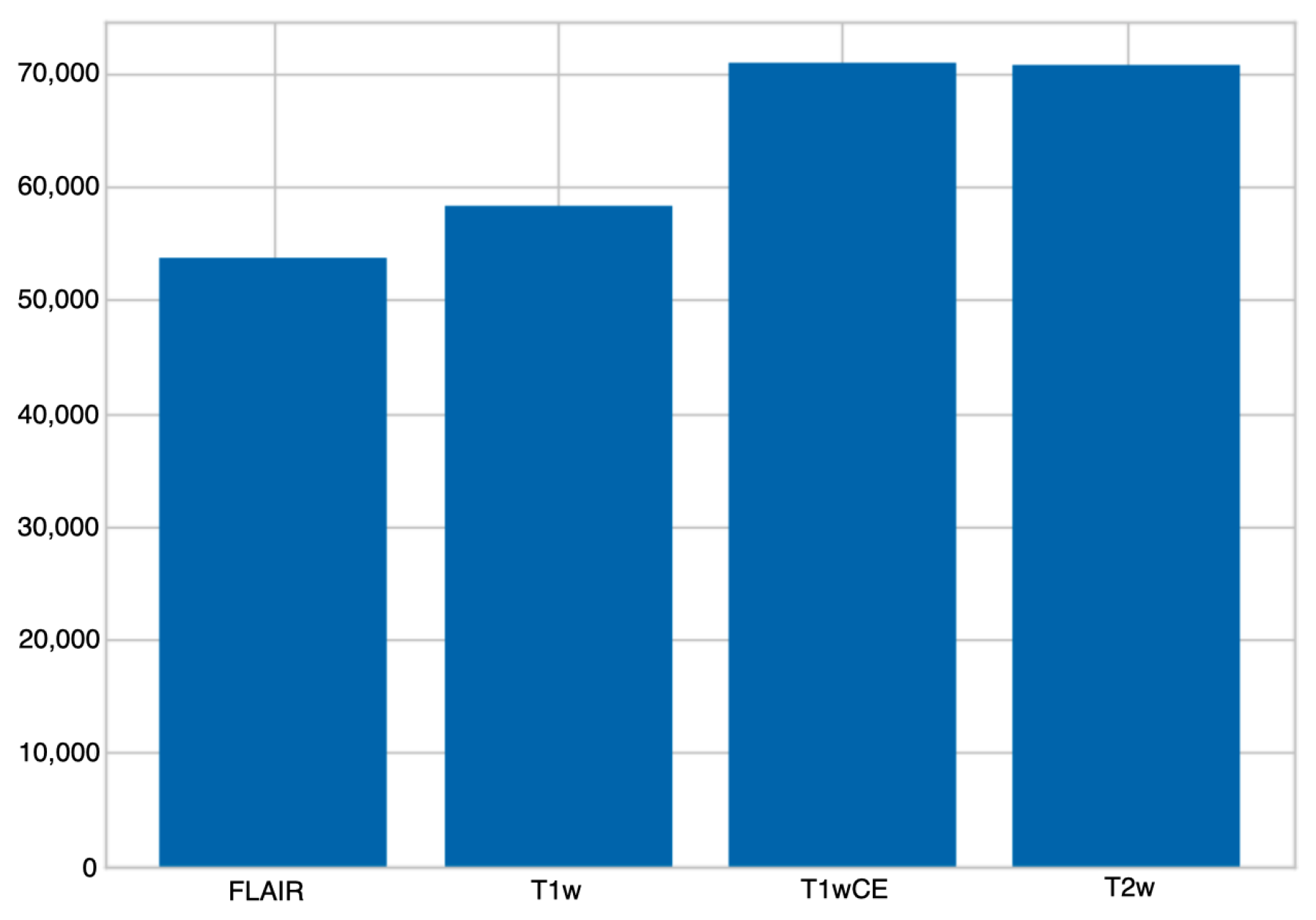

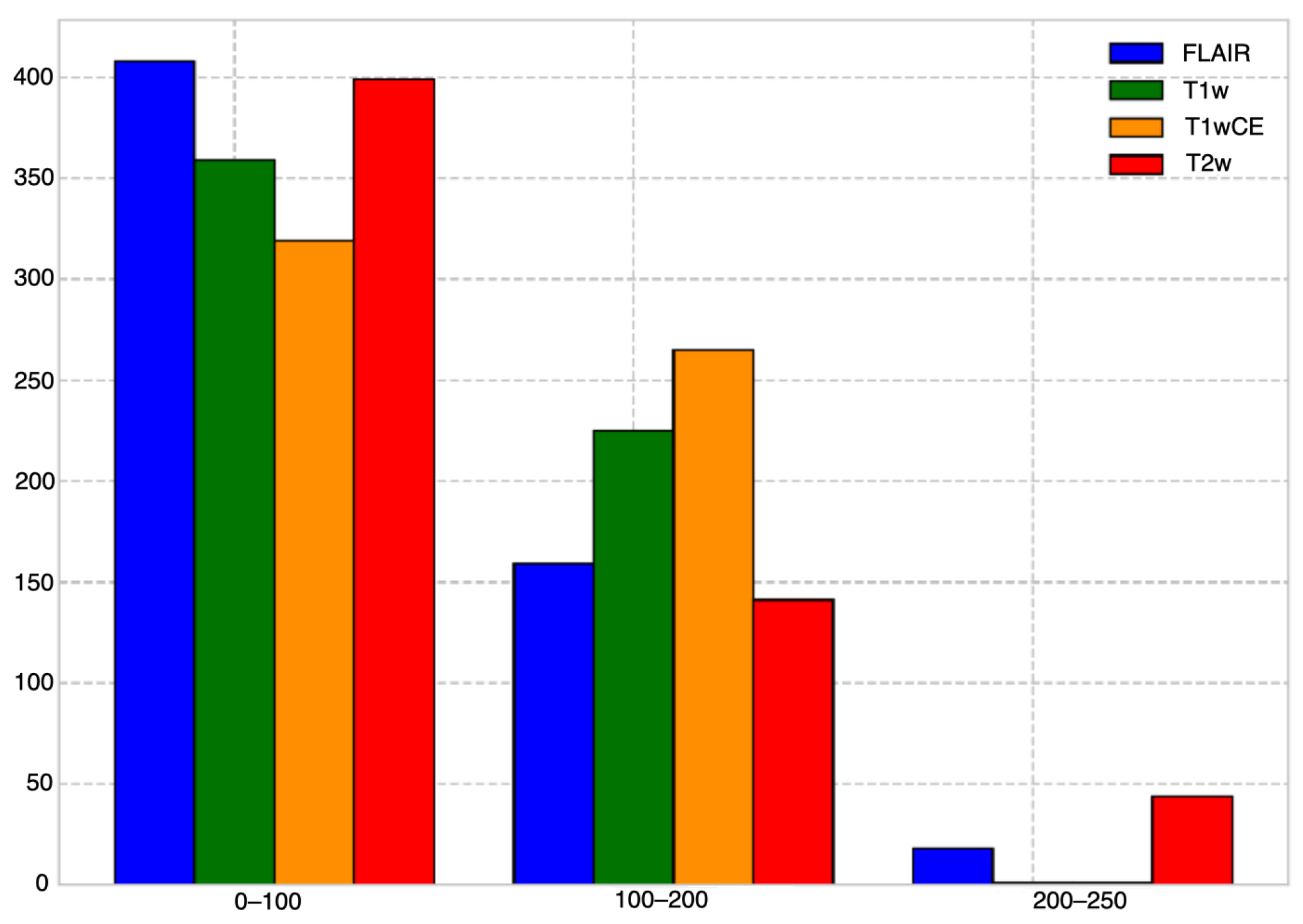

3.2. Dataset

3.3. Pre-Processing

3.4. Performance Metrics

3.5. Implementation Details

4. Experimental Results

4.1. Comparison with the State-of-the-Art

4.2. Ablation Study

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Shergalis, A.; Bankhead, A.; Luesakul, U.; Muangsin, N.; Neamati, N. Current challenges and opportunities in treating glioblastoma. Pharmacol. Rev. 2018, 70, 412–445. [Google Scholar] [CrossRef] [PubMed]

- McKinnon, C.; Nandhabalan, M.; Murray, S.A.; Plaha, P. Glioblastoma: Clinical presentation, diagnosis, and management. BMJ 2021, 374, n1560. [Google Scholar] [CrossRef] [PubMed]

- Thawani, R.; McLane, M.; Beig, N.; Ghose, S.; Prasanna, P.; Velcheti, V.; Madabhushi, A. Radiomics and radiogenomics in lung cancer: A review for the clinician. Lung Cancer 2018, 115, 34–41. [Google Scholar] [CrossRef] [PubMed]

- Jansen, R.W.; van Amstel, P.; Martens, R.M.; Kooi, I.E.; Wesseling, P.; de Langen, A.J.; Menke-Van der Houven, C.W.; Jansen, B.H.; Moll, A.C.; Dorsman, J.C.; et al. Non-invasive tumor genotyping using radiogenomic biomarkers, a systematic review and oncology-wide pathway analysis. Oncotarget 2018, 9, 20134. [Google Scholar] [CrossRef] [PubMed]

- Villanueva-Meyer, J.E.; Mabray, M.C.; Cha, S. Current clinical brain tumor imaging. Neurosurgery 2017, 81, 397. [Google Scholar] [CrossRef] [PubMed]

- Rivera, A.L.; Pelloski, C.E.; Gilbert, M.R.; Colman, H.; De La Cruz, C.; Sulman, E.P.; Bekele, B.N.; Aldape, K.D. MGMT promoter methylation is predictive of response to radiotherapy and prognostic in the absence of adjuvant alkylating chemotherapy for glioblastoma. Neuro-Oncology 2010, 12, 116–121. [Google Scholar] [CrossRef] [PubMed]

- Louis, D.N.; Perry, A.; Reifenberger, G.; Von Deimling, A.; Figarella-Branger, D.; Cavenee, W.K.; Ohgaki, H.; Wiestler, O.D.; Kleihues, P.; Ellison, D.W. The 2016 World Health Organization classification of tumors of the central nervous system: A summary. Acta Neuropathol. 2016, 131, 803–820. [Google Scholar] [CrossRef] [PubMed]

- Baba, F. RSNA-MICCAI Brain Tumor Radiogenomic Classification. 2021. Available online: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification/discussion/281347 (accessed on 29 October 2023).

- Roberts, D. RSNA-MICCAI Brain Tumor Radiogenomic Classification. 2021. Available online: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification/discussion/280033 (accessed on 29 October 2023).

- Phan, M. RSNA-MICCAI Brain Tumor Radiogenomic Classification. 2021. Available online: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification/discussion/280029 (accessed on 29 October 2023).

- Soares, C. RSNA-MICCAI Brain Tumor Radiogenomic Classification. 2021. Available online: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification/discussion/287713 (accessed on 29 October 2023).

- Tangirala, B. RSNA-MICCAI Brain Tumor Radiogenomic Classification. 2021. Available online: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification/discussion/281911 (accessed on 29 October 2023).

- Radosavovic, I.; Dollár, P.; Girshick, R.; Gkioxari, G.; He, K. Data distillation: Towards omni-supervised learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4119–4128. [Google Scholar]

- Santurkar, S.; Tsipras, D.; Ilyas, A.; Madry, A. How does batch normalization help optimization? Adv. Neural Inf. Process. Syst. 2018, 31. [Google Scholar]

- Hendrycks, D.; Gimpel, K. Gaussian error linear units (gelus). arXiv 2016, arXiv:1606.08415. [Google Scholar]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Baid, U.; Ghodasara, S.; Mohan, S.; Bilello, M.; Calabrese, E.; Colak, E.; Farahani, K.; Kalpathy-Cramer, J.; Kitamura, F.C.; Pati, S.; et al. The rsna-asnr-miccai brats 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv 2021, arXiv:2107.02314. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Jocher, G. YOLOv5 by Ultralytics. Zenodo 2020. [Google Scholar] [CrossRef]

- Psaroudakis, A.; Kollias, D. Mixaugment & mixup: Augmentation methods for facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2367–2375. [Google Scholar]

- LeCun, Y.; Bengio, Y. Convolutional networks for images, speech, and time series. Handb. Brain Theory Neural Netw. 1995, 3361, 1995. [Google Scholar]

- Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471. [Google Scholar] [CrossRef] [PubMed]

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061. [Google Scholar]

- Schütze, H.; Manning, C.D.; Raghavan, P. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008; Volume 39. [Google Scholar]

- Amari, S. Backpropagation and stochastic gradient descent method. Neurocomputing 1993, 5, 185–196. [Google Scholar] [CrossRef]

- Foret, P.; Kleiner, A.; Mobahi, H.; Neyshabur, B. Sharpness-aware minimization for efficiently improving generalization. arXiv 2020, arXiv:2010.01412. [Google Scholar]

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986. [Google Scholar]

| Methods | Score |

|---|---|

| stats-EfficientNet [12] | 42.9 ± 6.84 |

| YOLO-EfficientNet [9] | 44.4 ± 7.41 |

| EfficientNet-Aggr [11] | 46.1 ± 4.68 |

| EfficientNet-LSTM-mpMRI [10] | 47.4 ± 4.41 |

| 3D-Resnet10-Trick [8] | 62.9 ± 4.8 |

| BTDNet | 66.2 ± 3.1 |

| BTDNet | Score |

|---|---|

| ResNet50 as CNN | 64.1 ± 3.6 |

| ResNet101 as CNN | 62.8 ± 3.88 |

| EfficientNetB0 as CNN | 63.9 ± 3.92 |

| EfficientNetB3 as CNN | 62.9 ± 4.21 |

| ConvNeXt-T as CNN | 64.9 ± 3.7 |

| LSTM, 64 units as RNN | 65.1 ± 3.5 |

| LSTM, 256 units as RNN | 64.3 ± 4.3 |

| GRU, 64 units as RNN | 65 ± 3.7 |

| GRU, 256 units as RNN | 64.4 ± 4.4 |

| no routing component | 60.9 ± 5.7 |

| no mask layer | 62.9 ± 5.2 |

| dense layer (routing component), 32 units | 65.1 ± 3.4 |

| dense layer (routing component), 128 units | 64.9 ± 3.6 |

| only FLAIR modality | 64.5 ± 3.3 |

| only T1w modality | 63.2 ± 4.3 |

| only T1wCE modality | 63.8 ± 4.1 |

| only T2 modality | 64.1 ± 3.8 |

| no test-time data augmentation | 65.2 ± 3.4 |

| no geometric transformations | 65.3 ± 3.3 |

| no MixAugment | 64.7 ± 3.8 |

| categorical cross-entropy as objective function | 64.9 ± 3.4 |

| binary cross-entropy as objective function | 64.5 ± 3.5 |

| BTDNet | 66.2 ± 3.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kollias, D.; Vendal, K.; Gadhavi, P.; Russom, S. BTDNet: A Multi-Modal Approach for Brain Tumor Radiogenomic Classification. Appl. Sci. 2023, 13, 11984. https://doi.org/10.3390/app132111984

Kollias D, Vendal K, Gadhavi P, Russom S. BTDNet: A Multi-Modal Approach for Brain Tumor Radiogenomic Classification. Applied Sciences. 2023; 13(21):11984. https://doi.org/10.3390/app132111984

Chicago/Turabian StyleKollias, Dimitrios, Karanjot Vendal, Priyankaben Gadhavi, and Solomon Russom. 2023. "BTDNet: A Multi-Modal Approach for Brain Tumor Radiogenomic Classification" Applied Sciences 13, no. 21: 11984. https://doi.org/10.3390/app132111984

APA StyleKollias, D., Vendal, K., Gadhavi, P., & Russom, S. (2023). BTDNet: A Multi-Modal Approach for Brain Tumor Radiogenomic Classification. Applied Sciences, 13(21), 11984. https://doi.org/10.3390/app132111984