Simulation and Implementation of a Mobile Robot Trajectory Planning Solution by Using a Genetic Micro-Algorithm

Abstract

1. Introduction

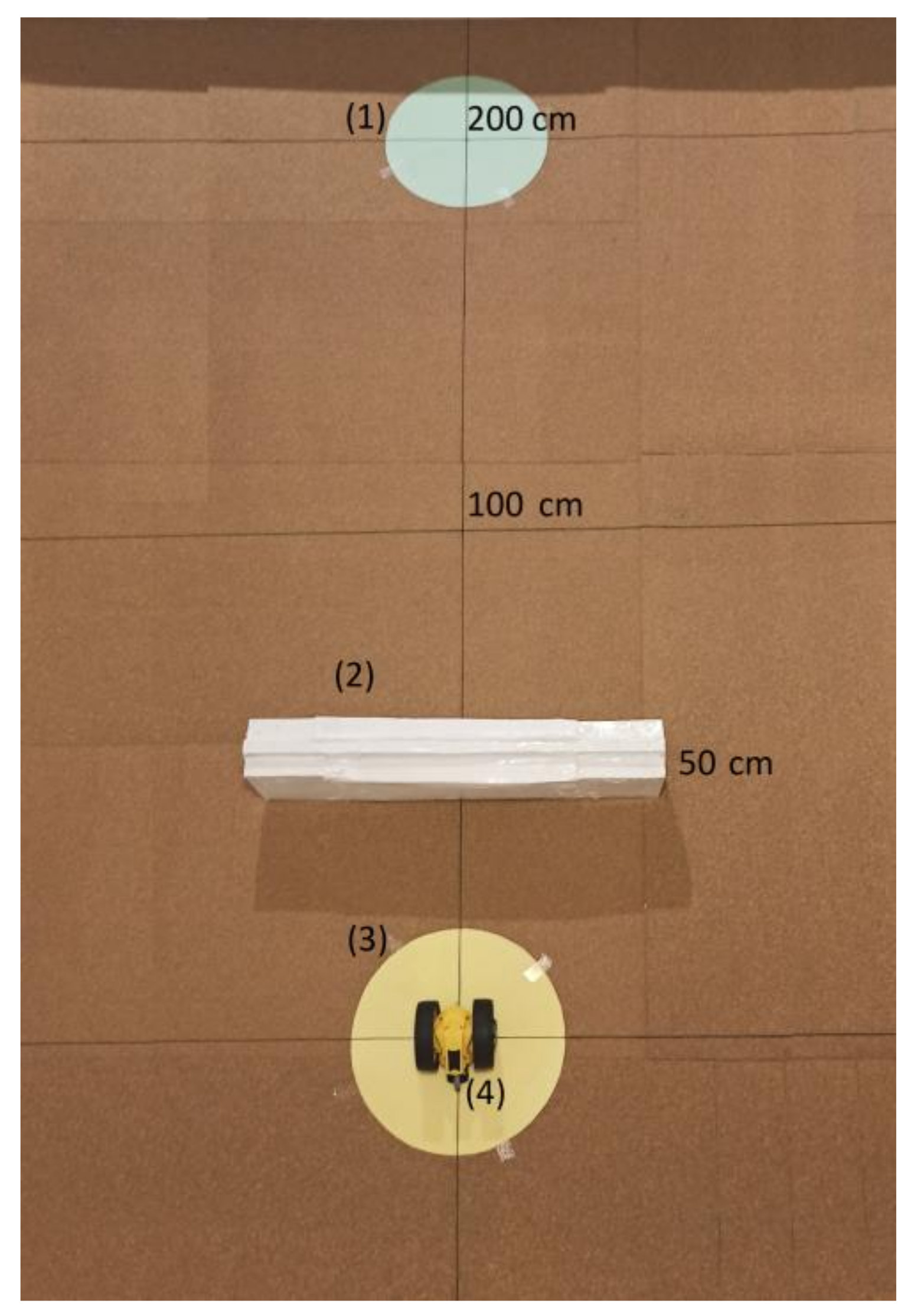

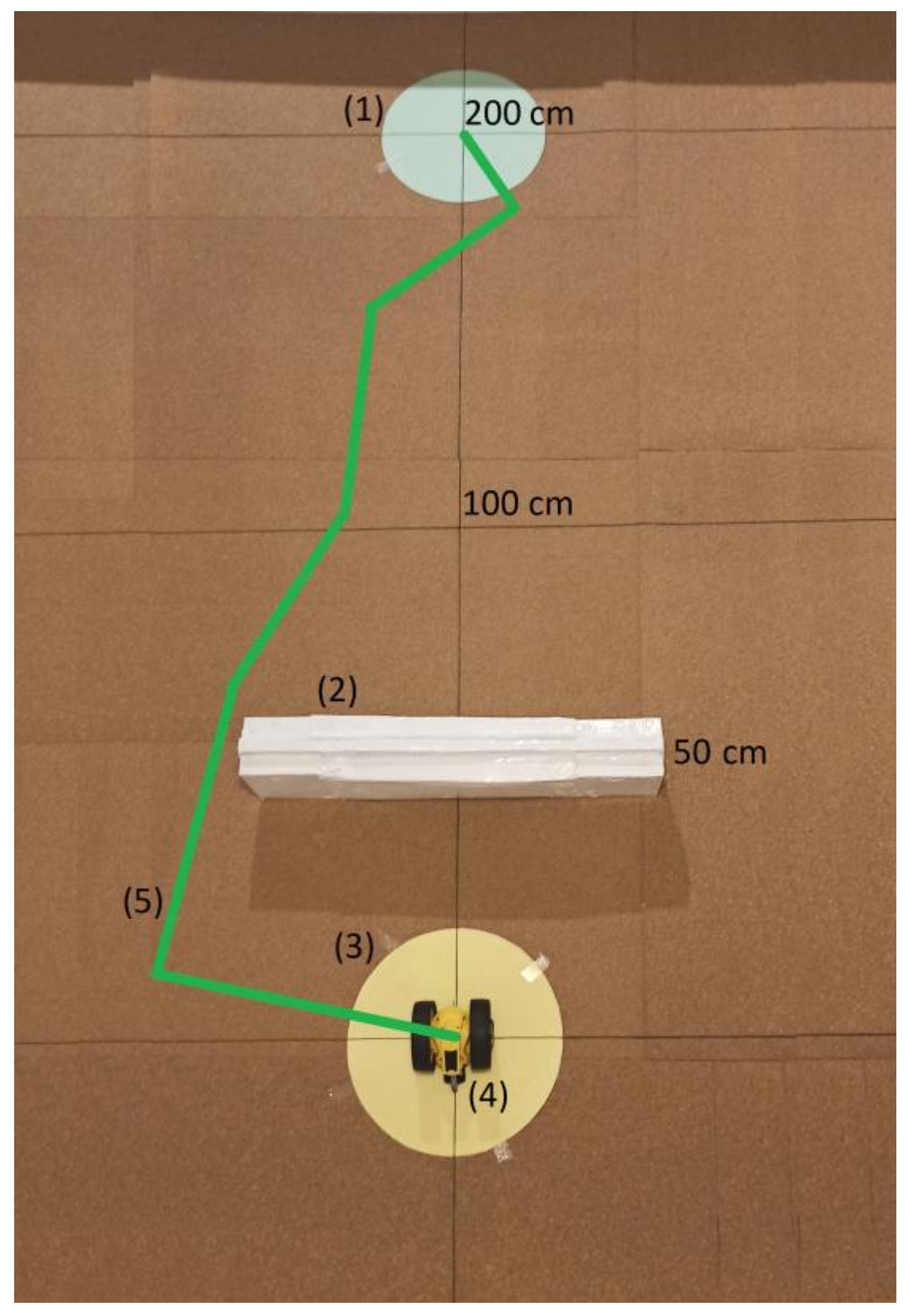

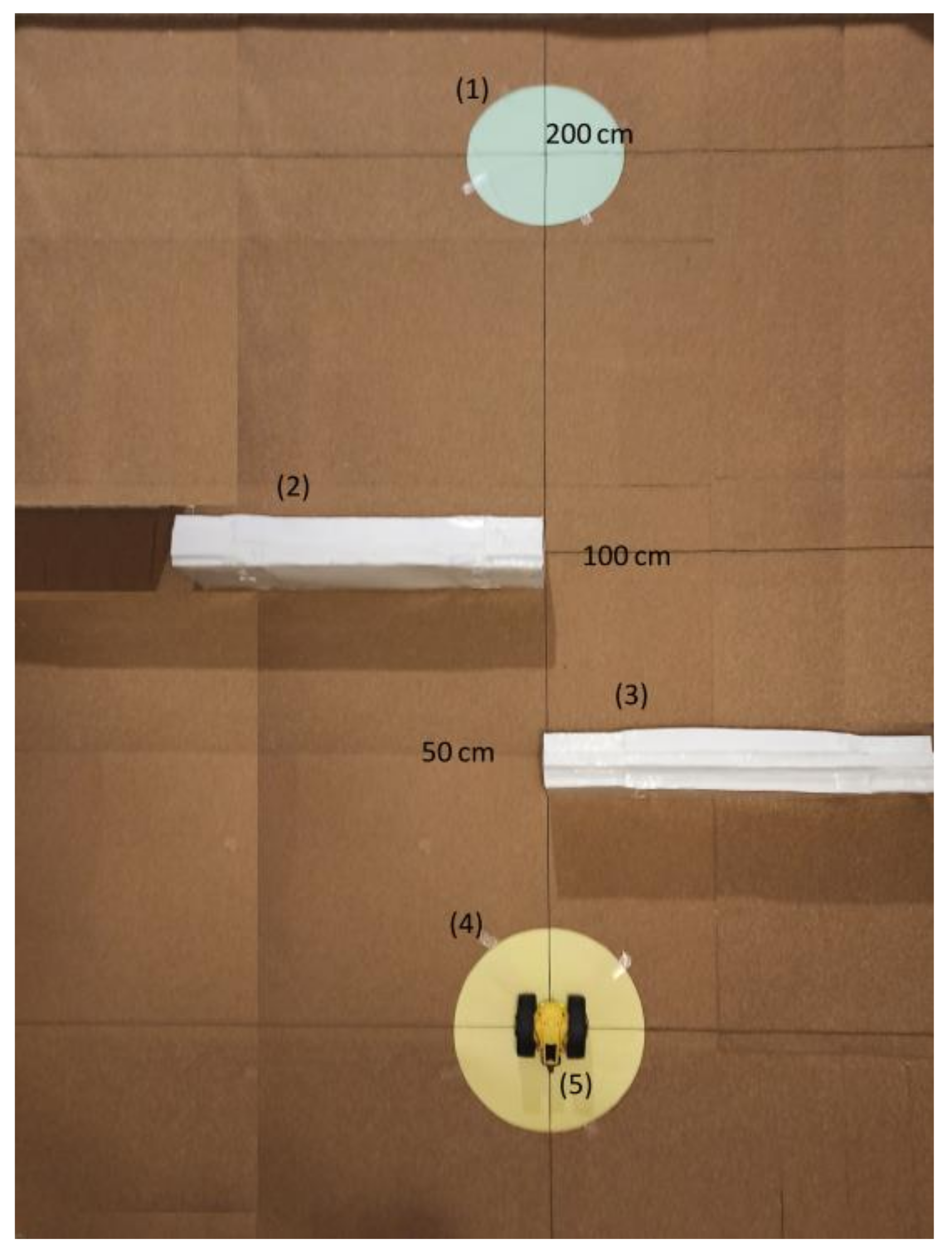

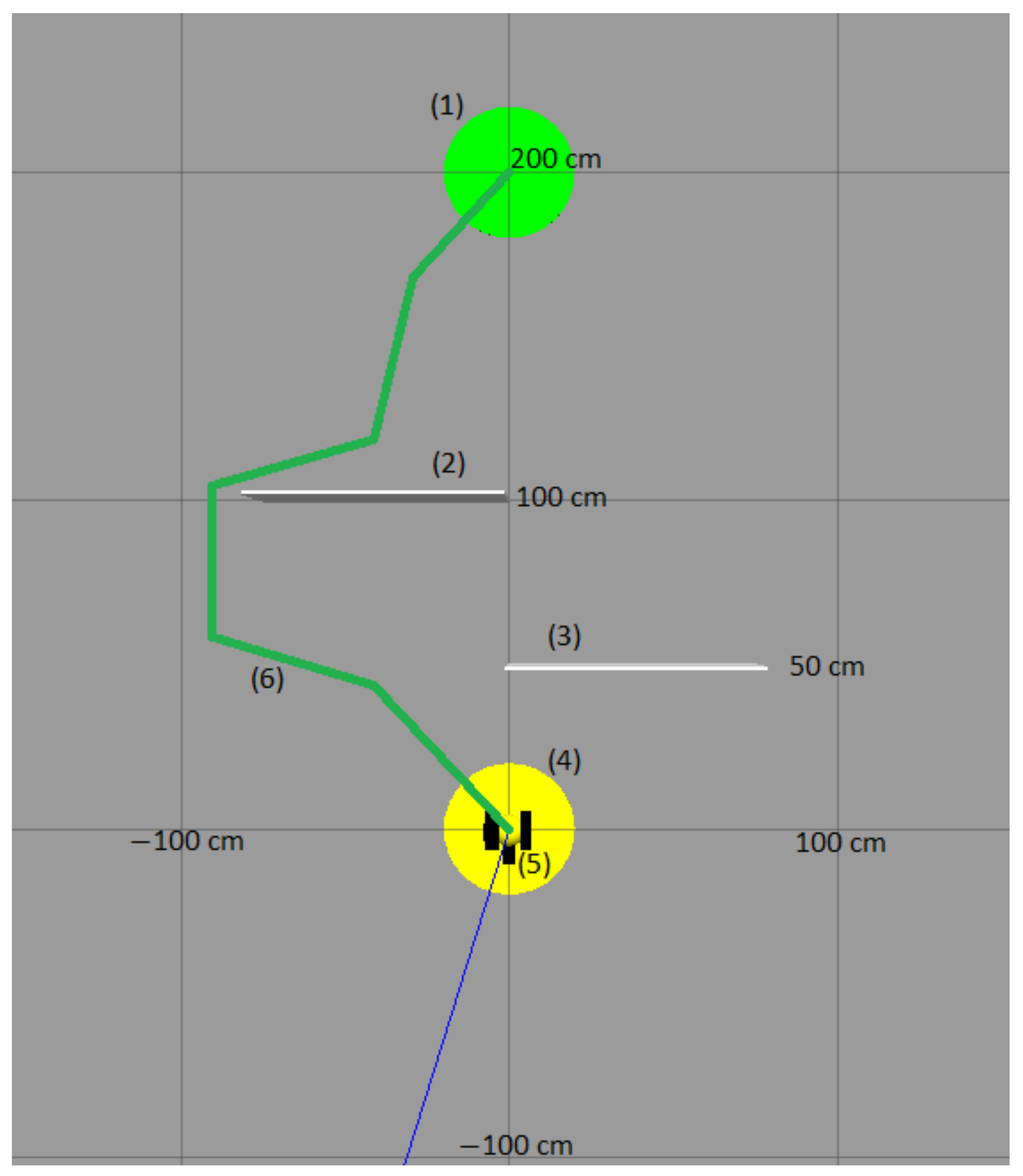

2. Materials and Methods

2.1. Robot

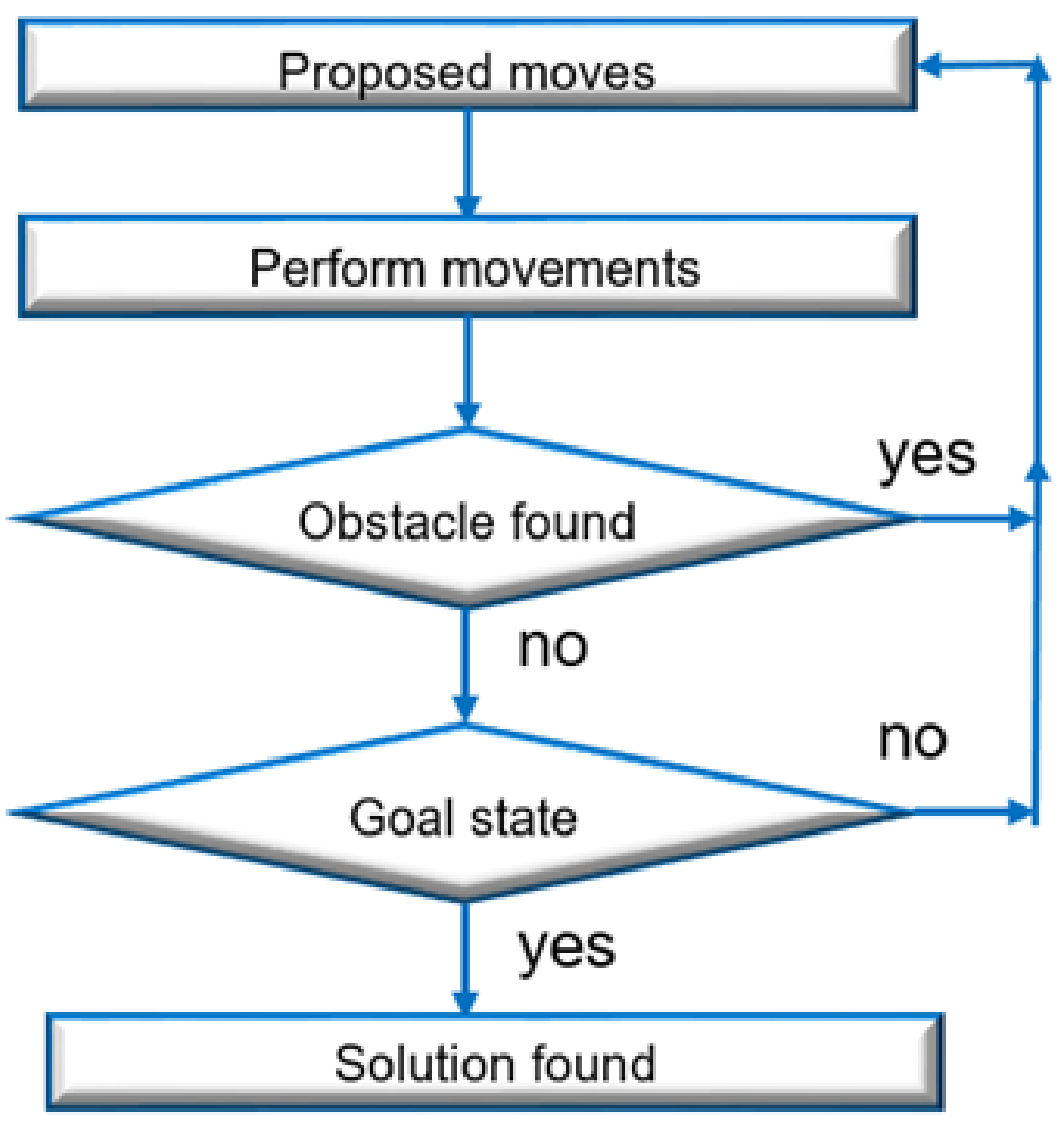

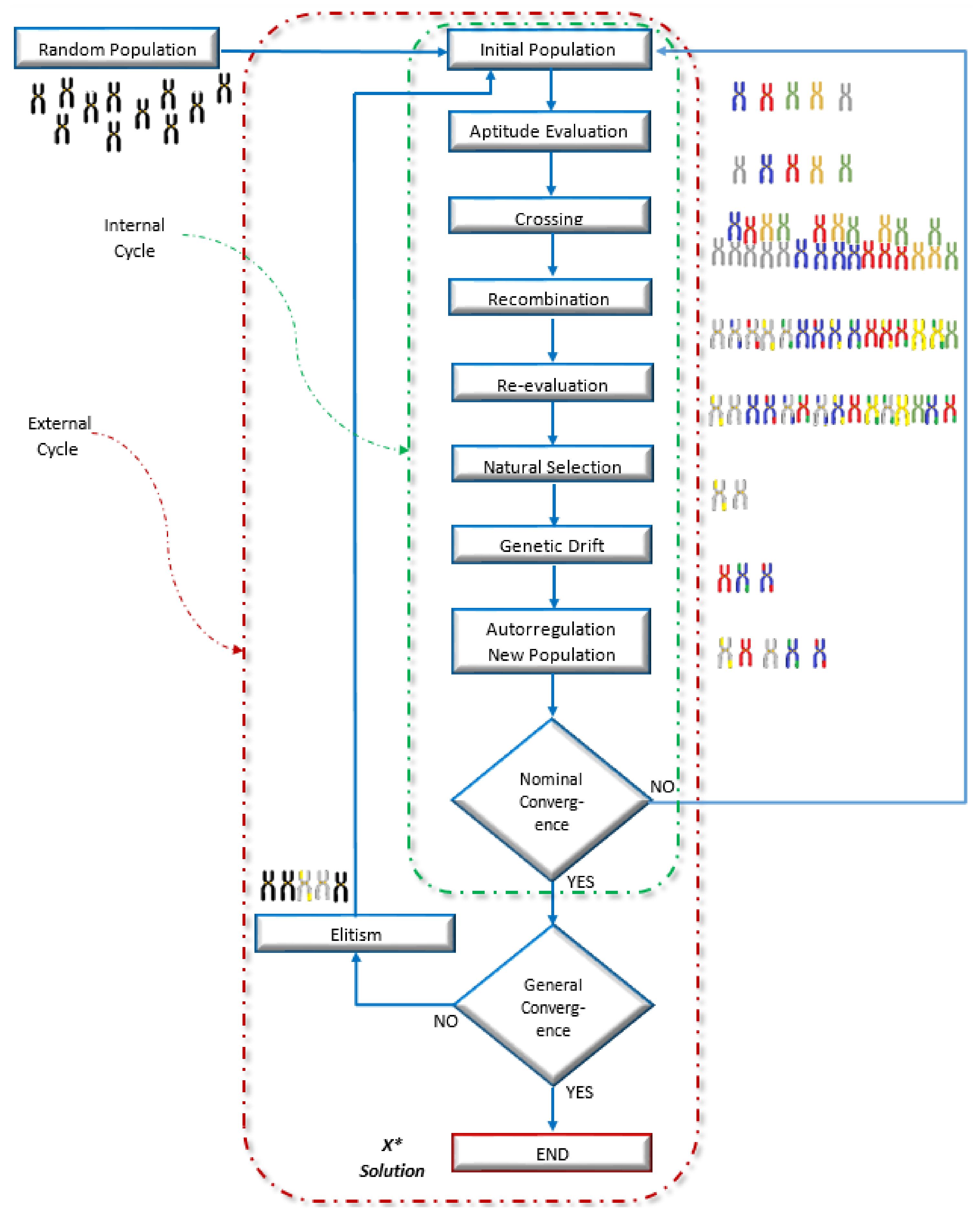

2.2. Algorithm

- Initialization: an initial population of n individuals is randomly generated;

- Generation: in this stage, the genetic operators of the paradigm being used are applied to the current population; these operators are also known as evolutionary adaptation procedures;

- Cycle: finally, the second stage is repeated until the convergence criterion or stop condition is reached. Some algorithms restart from the first stage, keeping the fittest individuals and regenerating the rest of the population within a second convergence cycle.
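The three stages can be sketched as a small Python loop. This is a minimal illustration that rests on several assumptions not taken from the source:

- fitness is maximized, using a stand-in function (here, the number of 1-bits);
- the generation stage uses truncation selection with one-point crossover;
- each restart keeps the `keep` fittest individuals.

`evolve` and its parameters are hypothetical names.

```python
import random
from typing import Callable, List

Chromosome = List[int]


def random_chromosome(size: int, rng: random.Random) -> Chromosome:
    # Initialization: each bit is 0 or 1 with equal probability
    return [rng.randint(0, 1) for _ in range(size)]


def evolve(fitness: Callable[[Chromosome], float], size: int, pop_size: int,
           inner_gens: int, restarts: int, keep: int = 1, seed: int = 0) -> Chromosome:
    """Hypothetical generic cycle (maximization); needs size >= 2 and pop_size >= 2."""
    rng = random.Random(seed)
    pop = [random_chromosome(size, rng) for _ in range(pop_size)]
    for _ in range(restarts):                      # outer cycle: back to stage 1
        for _ in range(inner_gens):                # stage 2, repeated
            pop.sort(key=fitness, reverse=True)    # fittest first
            parents = pop[:max(2, pop_size // 2)]  # truncation selection
            children = [pop[0]]                    # elitism: keep the current best
            while len(children) < pop_size:
                a, b = rng.sample(parents, 2)
                cut = rng.randrange(1, size)       # one-point crossover
                children.append(a[:cut] + b[cut:])
            pop = children
        pop.sort(key=fitness, reverse=True)
        # Restart: keep the fittest individuals, regenerate the rest at random
        pop = pop[:keep] + [random_chromosome(size, rng) for _ in range(pop_size - keep)]
    return max(pop, key=fitness)


if __name__ == "__main__":
    best = evolve(sum, size=16, pop_size=5, inner_gens=20, restarts=10)
    print(best, sum(best))
```

Because the best individual is carried through every generation and every restart, the returned chromosome is never worse than any elite kept along the way.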

- Selection: consists of a probabilistic or deterministic process that makes it possible to choose the parent individuals of the next generation;

- Crossover: refers to the exchange of information between two parents selected based on their fitness according to the objective function;

- Mutation: makes small random changes to the newly created individuals so that the search can reach regions of the search space that crossover alone cannot, thus maintaining the diversity of the population.
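The three operators can be illustrated on bit-string chromosomes. The variants below are common textbook operators chosen for illustration: tournament selection, one-point crossover, and bit-flip mutation. The source does not state which variants it uses, and the function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Chromosome = List[int]


def tournament_select(pop: Sequence[Chromosome], fits: Sequence[float],
                      k: int, rng: random.Random) -> Chromosome:
    """Selection: sample k distinct individuals and return a copy of the fittest."""
    contenders = rng.sample(range(len(pop)), k)
    winner = max(contenders, key=lambda i: fits[i])
    return list(pop[winner])


def one_point_crossover(a: Chromosome, b: Chromosome,
                        rng: random.Random) -> Tuple[Chromosome, Chromosome]:
    """Crossover: swap the tails of two parents after a random cut point."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:], b[:cut] + a[cut:]


def bit_flip_mutation(c: Chromosome, rate: float, rng: random.Random) -> Chromosome:
    """Mutation: flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in c]
```

Larger tournaments (`k`) increase selection pressure. A low mutation `rate` keeps most of each child intact while still injecting diversity.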

- Evolutionary programming;

- Evolutionary strategies;

- Genetic algorithms.

- Differential evolution;

- Genetic programming;

- Memetic algorithm;

- Probabilistic models.

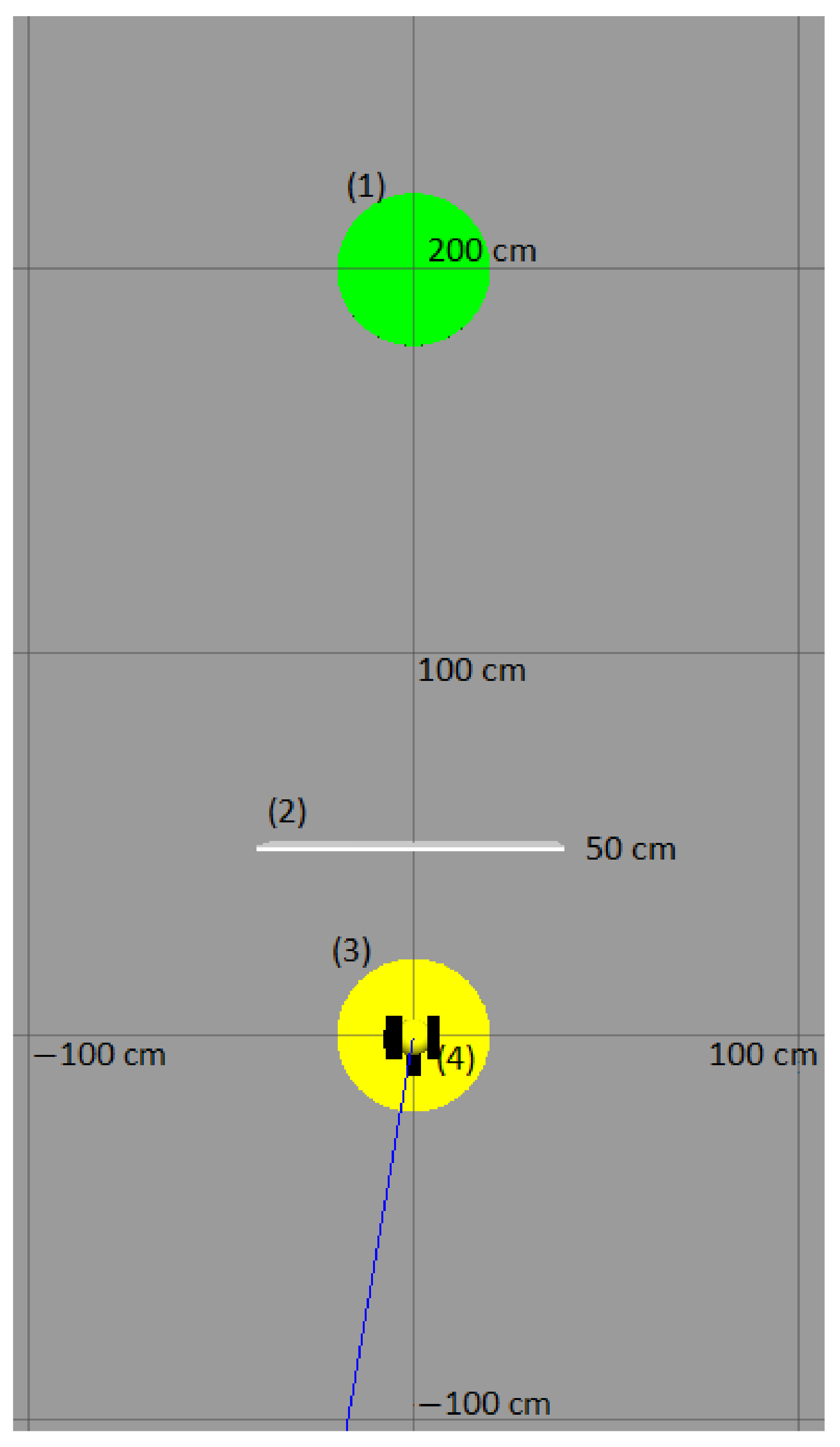

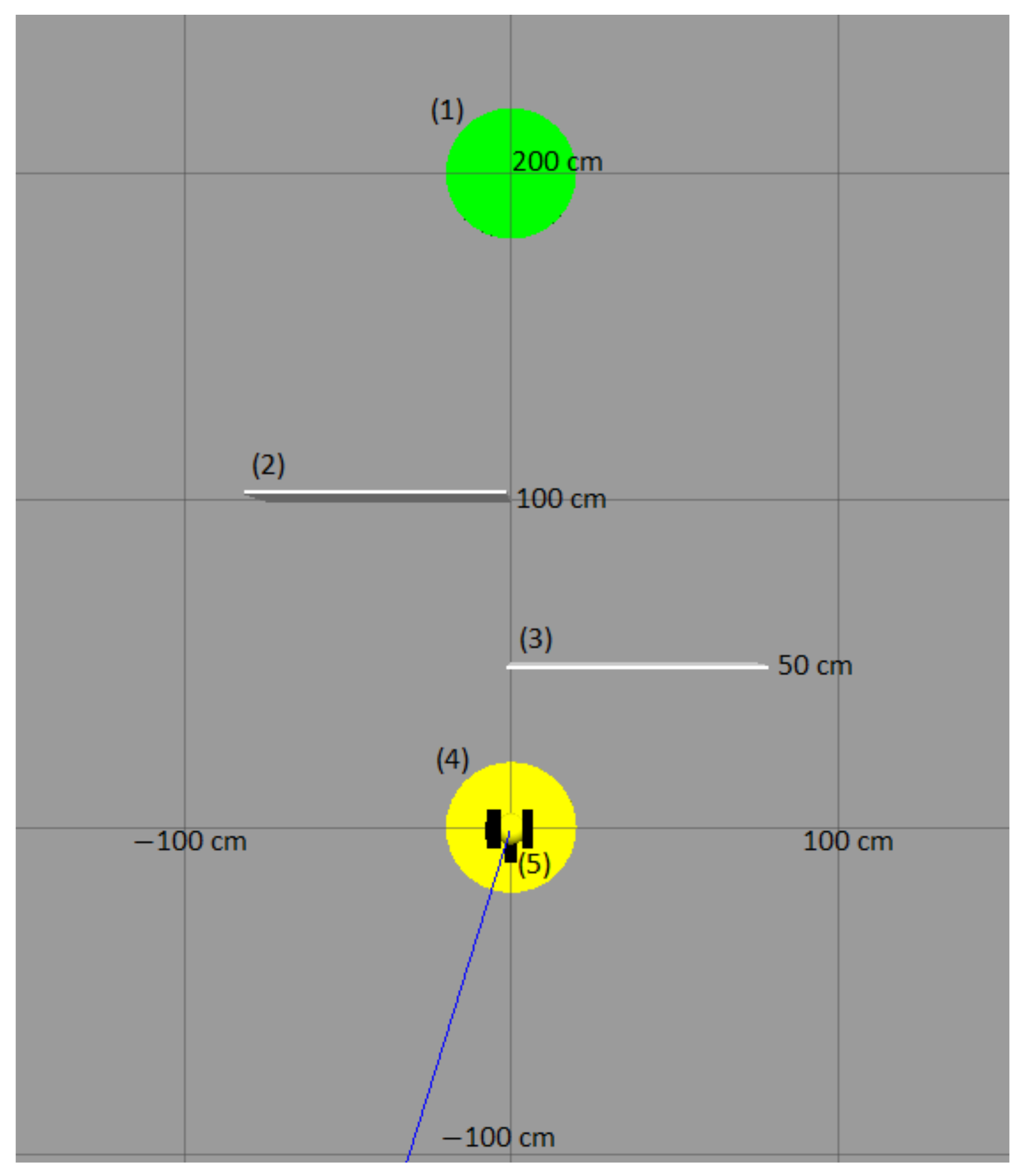

2.3. Simulator

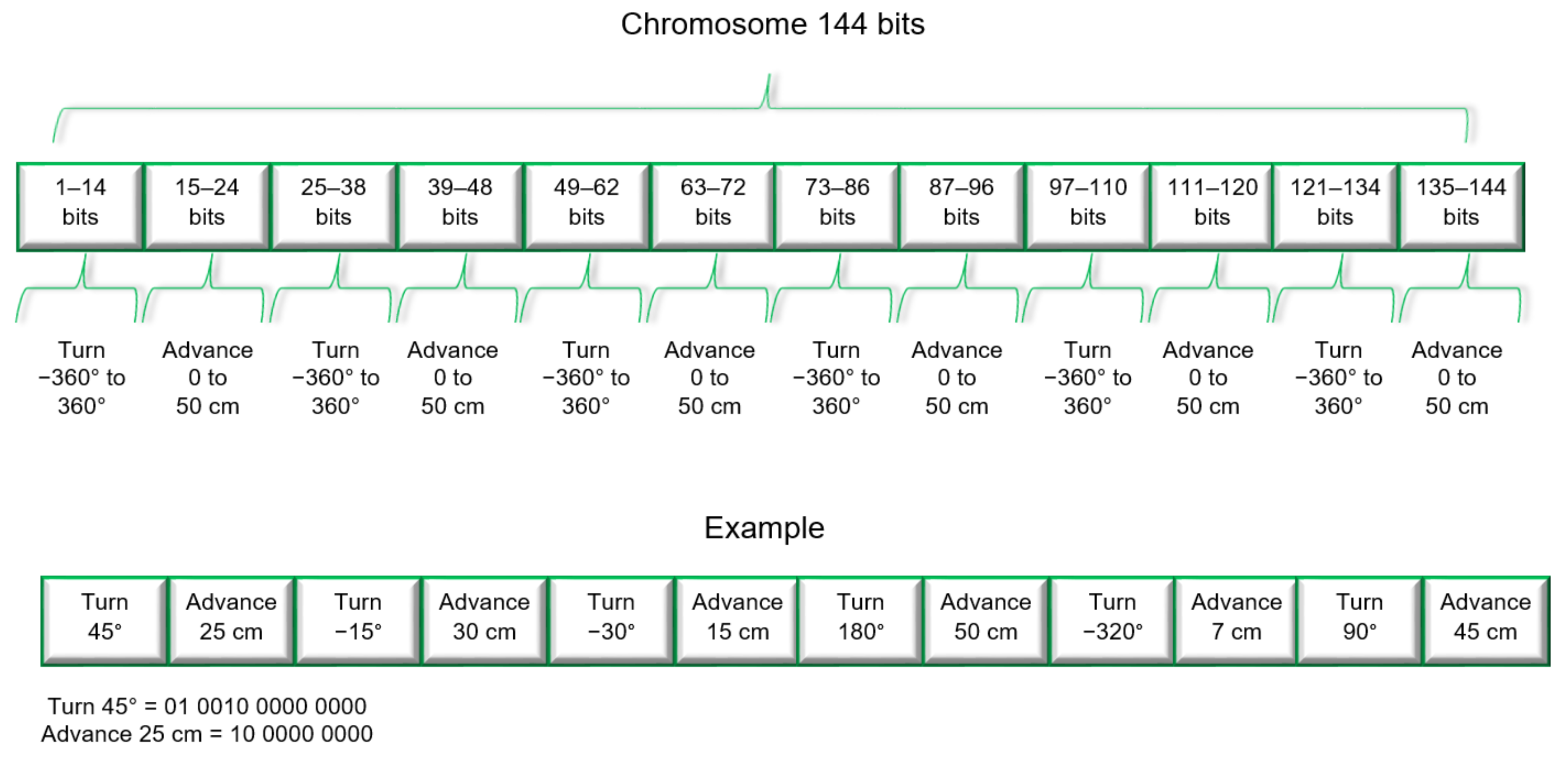

2.4. Chromosome bit-to-motion conversion

2.5. Methods

| Algorithm 1 RandomPopulation |

| Input: populationSize, sizeChromosome |

| Output: Random Population P |

| 1. function RandomPopulation() |

| 2. set RandomSeed() |

| 3. for i ← 1 to populationSize do |

| 4. RandomBinary(sizeChromosome, P[ i ]) |

| 5. end for |

| Algorithm 2 RandomBinary |

| Input: sizeChromosome, chromosome |

| Output: Random binary, chromosome |

| 1. function RandomBinary() |

| 2. for i ← 1 to sizeChromosome do |

| 3. RandomNum ← random [1, 100] |

| 4. if RandomNum ≤ 50 do |

| 5. chromosome[ i ] ← 0 |

| 6. else chromosome[ i ] ← 1 |

| 7. end if |

| 8. end for |
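Algorithms 1 and 2 map onto a few lines of Python. In this sketch, a single seeded `random.Random` generator stands in for `RandomSeed()`. The snake_case names are translations of the pseudocode identifiers, not the authors' code, and the chromosome size in the usage line is arbitrary.

```python
import random
from typing import List, Optional


def random_binary(size_chromosome: int, rng: random.Random) -> List[int]:
    """Algorithm 2: draw an integer in [1, 100]; values <= 50 give 0, others give 1."""
    chromosome = []
    for _ in range(size_chromosome):
        random_num = rng.randint(1, 100)  # inclusive bounds, like random[1, 100]
        chromosome.append(0 if random_num <= 50 else 1)
    return chromosome


def random_population(population_size: int, size_chromosome: int,
                      seed: Optional[int] = None) -> List[List[int]]:
    """Algorithm 1: seed the generator once, then build population_size chromosomes."""
    rng = random.Random(seed)
    return [random_binary(size_chromosome, rng) for _ in range(population_size)]


if __name__ == "__main__":
    P = random_population(5, 24, seed=42)
    for chromosome in P:
        print("".join(map(str, chromosome)))
```

Drawing from [1, 100] and comparing with 50 gives each bit a 50% chance of being 1. `rng.randint(0, 1)` would be equivalent.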

| Algorithm 3 Main algorithm |

| Input: stopCondition, nominalConv, populationSize, chromosomeSize |

| Output: bestChromosome, resultsTest1 |

| 1. P ← RandomPopulation() |

| 2. for h ← 1 to stopCondition do |

| 3. serial ← 0 |

| 4. for i ← 1 to nominalConv do |

| 5. rfrag ← random[0, 5] |

| 6. fragment ← (24 × rfrag) |

| 7. if module(h+1) = 0 do |

| 8. serial ← 6 + i |

| 9. else serial ← 23 – i |

| 10. end if |

| 11. RandomSeed() |

| 12. FitnessEval(P) |

| 13. CrossPop ← Cross(P) |

| 14. SortedPop ← FitnessEval2(CrossPop) |

| 15. NewPop ← SelfRegulation(SortedPop) |

| 16. for j ← 1 to populationSize do |

| 17. for k ← 1 to chromosomeSize do |

| 18. P[j][k] ← NewPop[j][k] |

| 19. end for |

| 20. end for |

| 21. end for |

| 22. RandomBinary(sizeChromosome, P[1]) |

| 23. RandomBinary(sizeChromosome, P[2]) |

| 24. RandomBinary(sizeChromosome, P[3]) |

| 25. inputFile ← openFile(“resultsTest1.txt”, “r”) |

| 26. set g ← 0 |

| 27. while inputFile ≠ EOF do |

| 28. inputData ← readChar(inputFile) |

| 29. Aux[g] ← inputData |

| 30. g ← g + 1 |

| 31. end while |

| 32. closeFile(inputFile) |

| 33. outputFile ← openFile(“resultsTest1.txt”, “w”) |

| 34. set e ← 0 |

| 35. while e < g do |

| 36. outputFile ← Aux[e] |

| 37. e ← e + 1 |

| 38. end while |

| 39. outputFile ← bestFitness |

| 40. closeFile(outputFile) |

| 41. bestFile ← openFile(“bestChromosome.txt”,“w”) |

| 42. for i ← 1 to chromosomeSize do |

| 43. bestFile ← P[populationSize-1][ i ] |

| 44. end for |

| 45. closeFile(bestFile) |

| 46. end for |
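Steps 25–40 of Algorithm 3 read the whole results file into a buffer, then write it back with bestFitness appended.

The Python sketch below covers steps 25–45 and opens the results file in append mode instead. Append mode has the same effect and also creates the file if it does not yet exist; opening a missing file with "r", as in step 25, would fail.

Writing one value per line, and the chromosome as a string of digits, are assumptions; the source does not specify the file layout. `save_results` is a hypothetical name.

```python
from typing import Sequence


def save_results(best_fitness: float, best_chromosome: Sequence[int],
                 results_path: str = "resultsTest1.txt",
                 best_path: str = "bestChromosome.txt") -> None:
    """Hypothetical helper covering steps 25-45 of Algorithm 3."""
    # Steps 25-40: keep the previous results and add bestFitness at the end.
    # Append mode ("a") does this without buffering the old contents.
    with open(results_path, "a", encoding="utf-8") as results:
        results.write(f"{best_fitness}\n")
    # Steps 41-45: overwrite the file with the current best chromosome.
    with open(best_path, "w", encoding="utf-8") as best:
        best.write("".join(str(bit) for bit in best_chromosome))
```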

| Algorithm 4. FitnessEval |

| Input: population, populationSize, chromosomeSize |

| Output: orderedPopulation, bestFitness, P |

| 1. function FitnessEval() |

| 2. for i ← 0 to populationSize-1 do |

| 3. fitness ← FitnessFunction(population[i]) (call to the Gazebo simulator) |

| 4. orderedPopulation[i][0] ← fitness |

| 5. orderedPopulation[i][1] ← i |

| 6. end for |

| 7. for i ← 1 to populationSize-1 do |

| 8. for j ← 0 to populationSize-i-1 do |

| 9. if orderedPopulation[j][0] > orderedPopulation[j+1][0] do |

| 10. aux ← orderedPopulation[j][0] |

| 11. orderedPopulation[j][0] ← orderedPopulation[j+1][0] |

| 12. orderedPopulation[j+1][0] ← aux |

| 13. aux ← orderedPopulation[j][1] |

| 14. orderedPopulation[j][1] ← orderedPopulation[j+1][1] |

| 15. orderedPopulation[j+1][1] ← aux |

| 16. end if |

| 17. end for |

| 18. end for |

| 19. for i ← 0 to populationSize-1 do |

| 20. k ← orderedPopulation[i][1] |

| 21. for j ← 0 to chromosomeSize-1 do |

| 22. aux[i][j] ← population[k][j] |

| 23. end for |

| 24. end for |

| 25. for i ← 0 to populationSize-1 do |

| 26. for j ← 0 to chromosomeSize-1 do |

| 27. P[populationSize-i-1][j] ← aux[i][j] |

| 28. end for |

| 29. bestFitness ← orderedPopulation[i][0] |

| 30. end for |
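A compact Python sketch of Algorithm 4 follows; `fitness_function` is a hypothetical stand-in for the call to the Gazebo simulator.

The sketch keeps the pseudocode's ascending sort and reversed copy. As a result, the chromosome with the lowest fitness value lands in the last position, which is the one Algorithm 3 writes to bestChromosome.txt.

Returning that lowest value as the best fitness is an assumption (lower value = closer to the goal). Step 29 of the pseudocode, as printed, leaves bestFitness holding the last, largest value of the ascending list instead.

```python
from typing import Callable, List, Sequence, Tuple

Chromosome = List[int]


def fitness_eval(population: Sequence[Chromosome],
                 fitness_function: Callable[[Sequence[int]], float]
                 ) -> Tuple[List[Chromosome], float]:
    """Sketch of Algorithm 4: evaluate, sort ascending, copy back in reverse order."""
    # Steps 2-6: (fitness, original index) pairs
    ordered = [(fitness_function(ind), i) for i, ind in enumerate(population)]
    # Steps 7-18: ascending sort; like the bubble sort with a strict ">", it is stable
    ordered.sort(key=lambda pair: pair[0])
    # Steps 19-28: P[n-i-1] <- ordered[i], so ordered[0] (lowest value) ends up last
    new_population = [list(population[k]) for _, k in reversed(ordered)]
    best_fitness = ordered[0][0]  # ASSUMPTION: lower fitness value = better
    return new_population, best_fitness
```

Python's built-in sort replaces the hand-written bubble sort. Both are stable, so individuals with equal fitness keep the same relative order, but the built-in sort runs in O(n log n) instead of O(n²).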

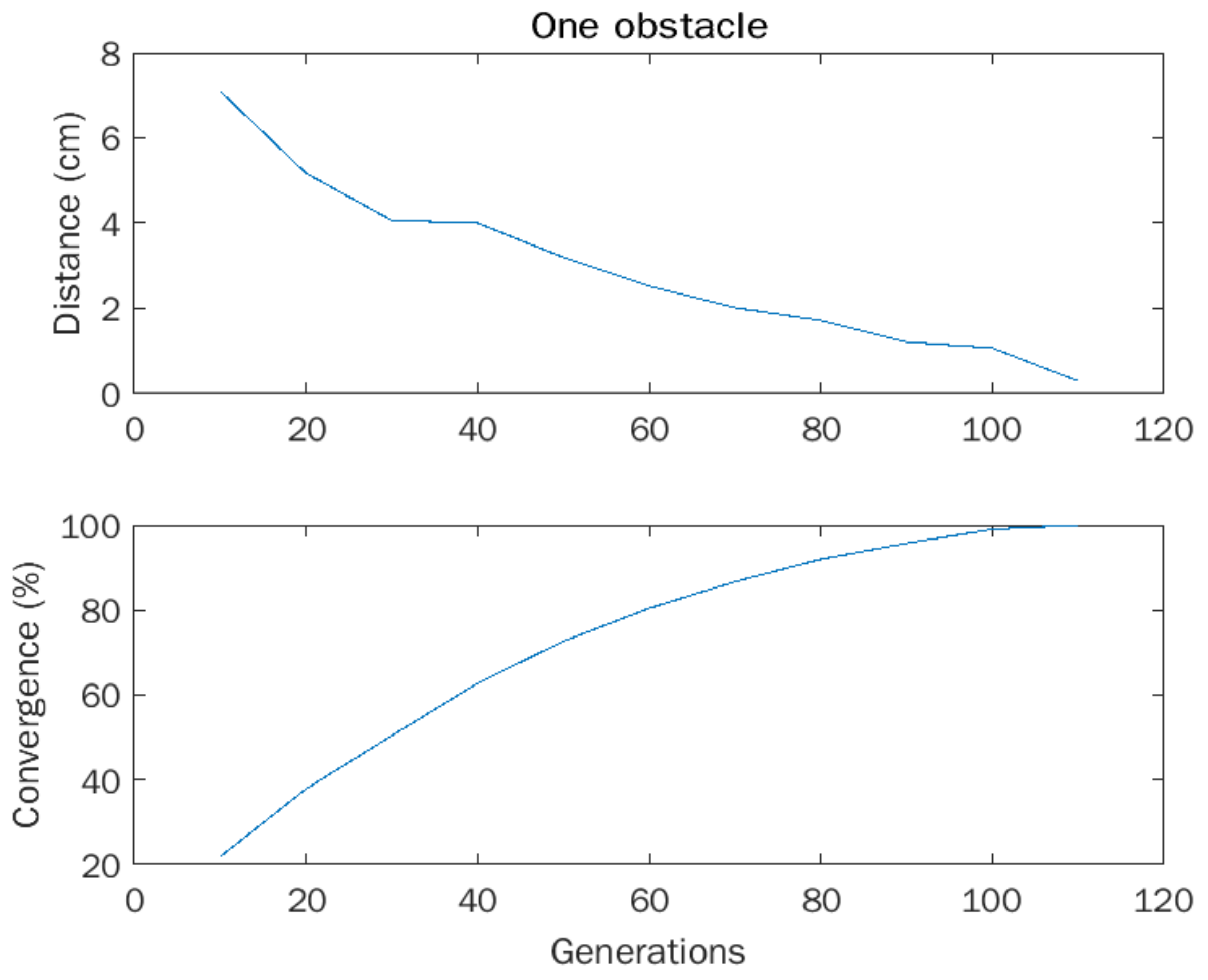

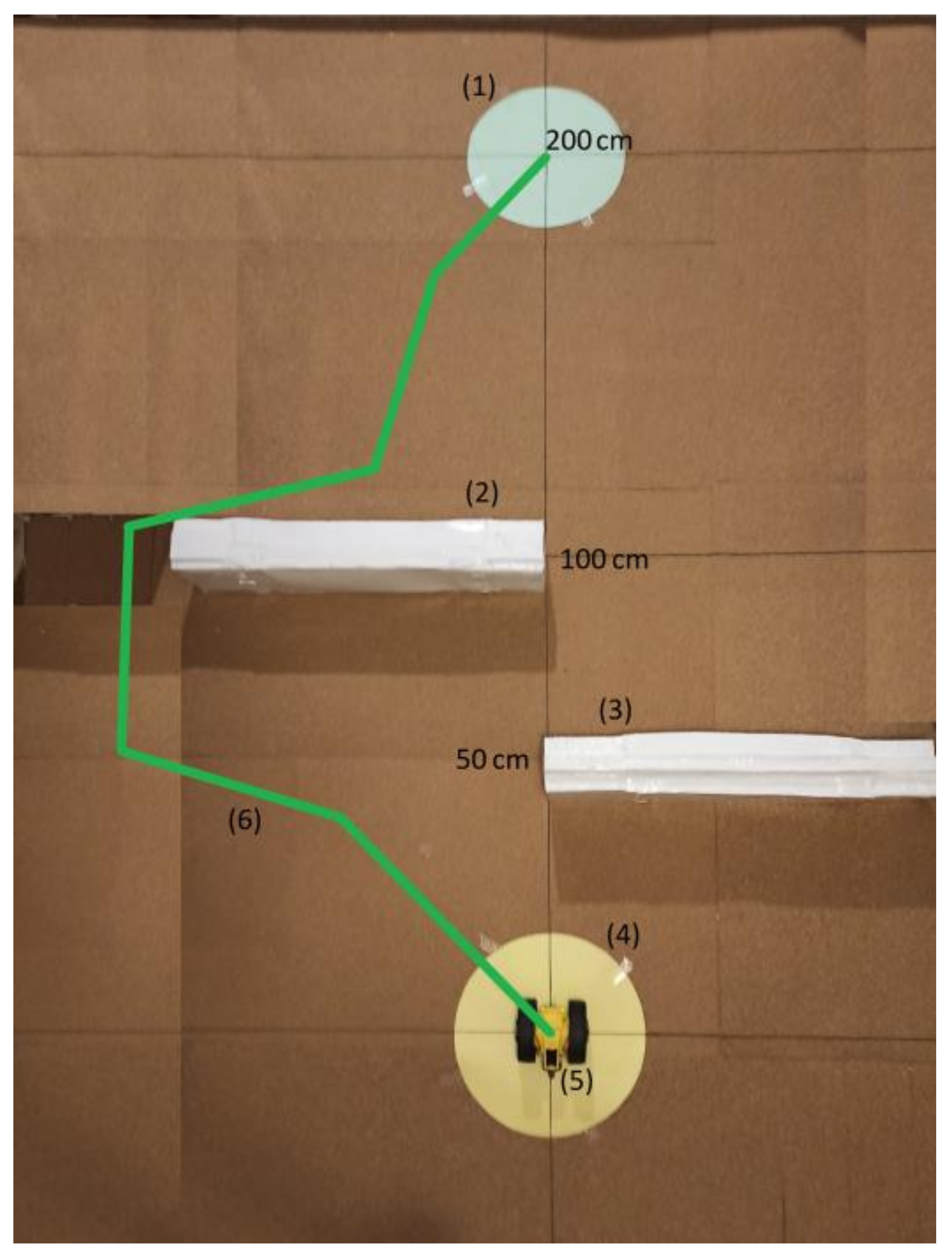

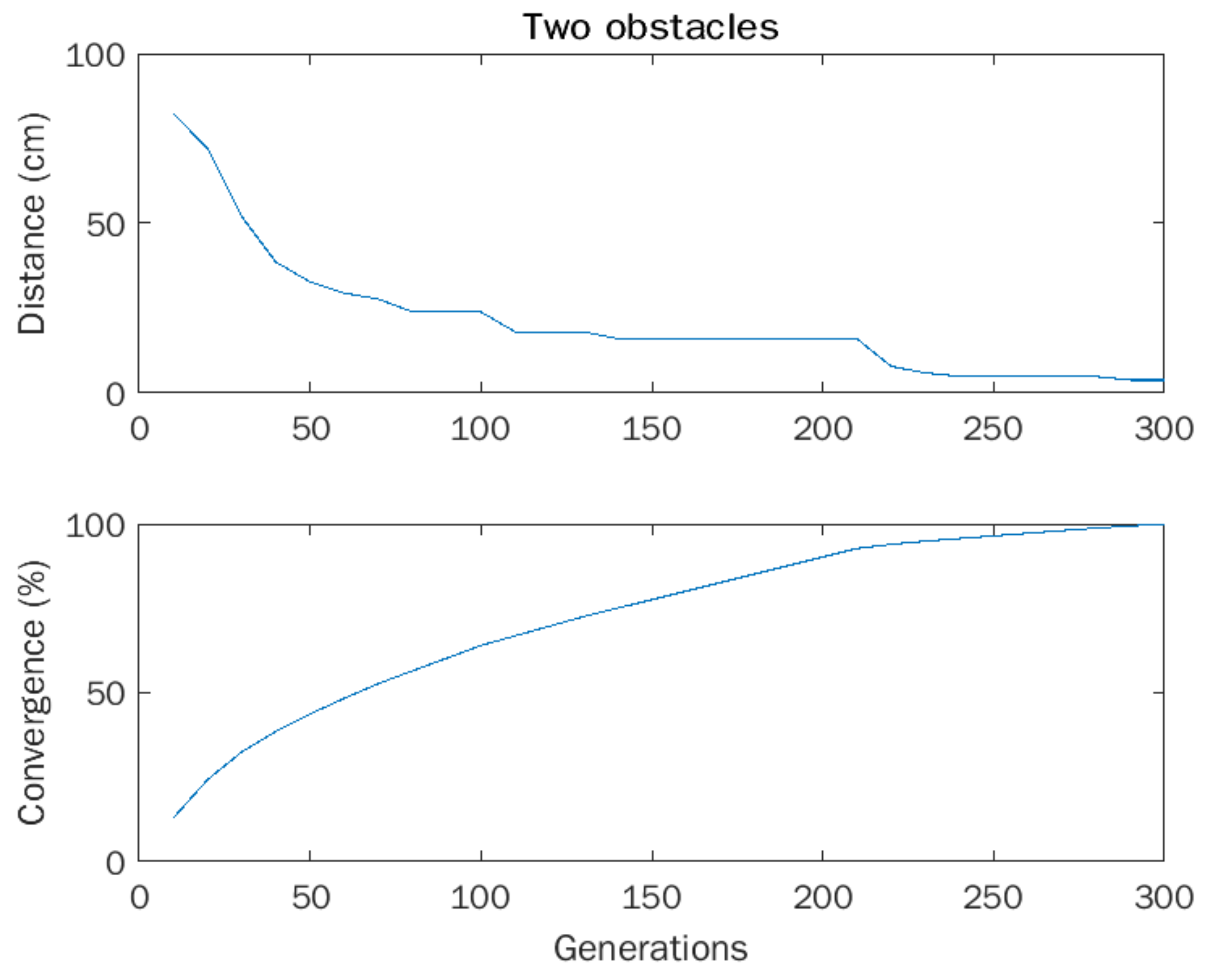

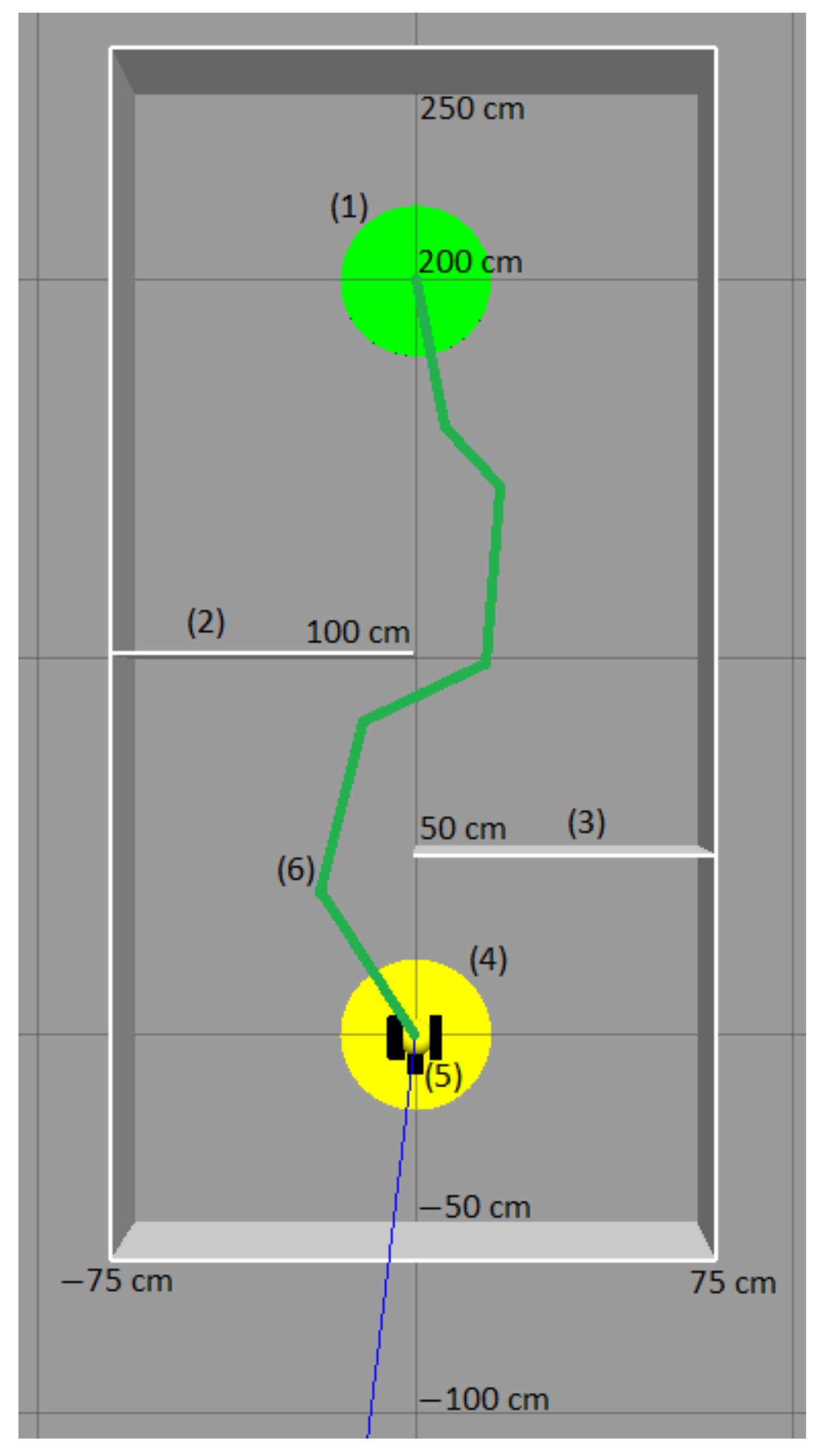

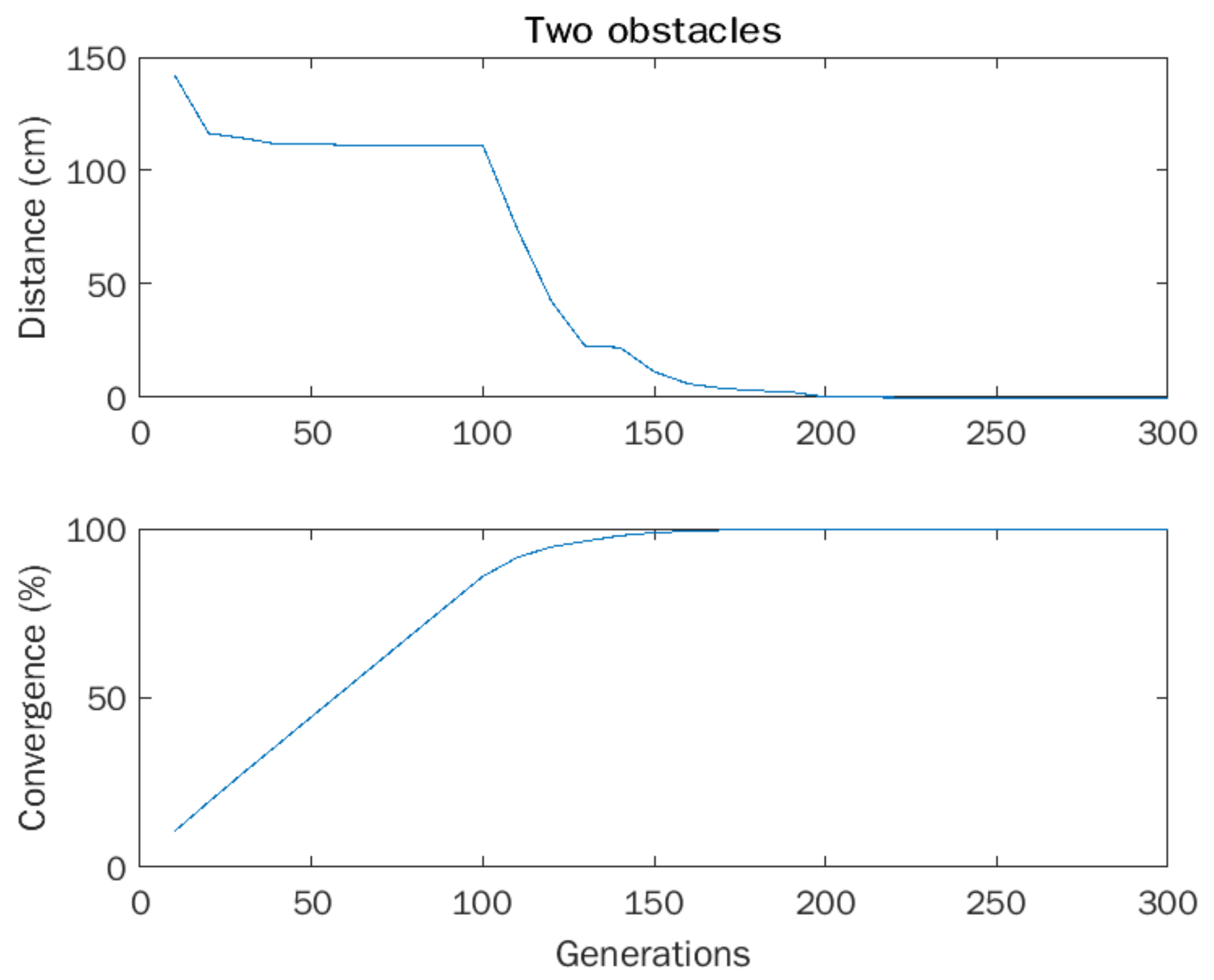

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Tan, K.C.; Jung, M.; Shyu, I.; Wan, C.; Dai, R. Motion Planning and Task Allocation for a Jumping Rover Team. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 5278–5283.

- Huizinga, J.; Clune, J. Evolving Multimodal Robot Behavior via Many Stepping Stones with the Combinatorial Multi-Objective Evolutionary Algorithm. Evol. Comput. 2021, 30, 131–164.

- Ramos, R.; Duarte, M.; Oliveira, S.; Christensen, A.L. Evolving Controllers for Robots with Multimodal Locomotion. In From Animals to Animats 14; Tuci, E., Giagkos, A., Wilson, M., Hallam, J., Eds.; Springer: Cham, Switzerland, 2016; Volume 9825.

- Lan, J.; Xie, Y.; Liu, G.; Cao, M. A Multi-Objective Trajectory Planning Method for Collaborative Robot. Electronics 2020, 9, 859.

- Zhang, X.; Huang, Y.; Rong, Y.; Li, G.; Wang, H.; Liu, C. Optimal Trajectory Planning for Wheeled Mobile Robots under Localization Uncertainty and Energy Efficiency Constraints. Sensors 2021, 21, 335.

- Dinev, T.; Xin, S.; Merkt, W.; Ivan, V.; Vijayakumar, S. Modeling and Control of a Hybrid Wheeled Jumping Robot. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 2563–2570.

- Wu, M.; Dai, S.-L.; Yang, C. Mixed Reality Enhanced User Interactive Path Planning for Omnidirectional Mobile Robot. Appl. Sci. 2020, 10, 1135.

- Molina-Leal, A.; Gómez-Espinosa, A.; Cabello, J.A.E.; Cuan-Urquizo, E.; Cruz-Ramírez, S.R. Trajectory Planning for a Mobile Robot in a Dynamic Environment Using an LSTM Neural Network. Appl. Sci. 2021, 11, 10689.

- Klemm, V.; Morra, A.; Salzmann, C.; Tschopp, F.; Bodie, K.; Gulich, L.; Kung, N.; Mannhart, D.; Pfister, C.; Vierneisel, M.; et al. Ascento: A two-wheeled jumping robot. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7515–7521.

- Gul, F.; Mir, I.; Abualigah, L.; Sumari, P.; Forestiero, A. A Consolidated Review of Path Planning and Optimization Techniques: Technical Perspectives and Future Directions. Electronics 2021, 10, 2250.

- Hao, K.; Zhao, J.; Yu, K.; Li, C.; Wang, C. Path Planning of Mobile Robots Based on a Multi-Population Migration Genetic Algorithm. Sensors 2020, 20, 5873.

- Estrada, F.A.C.; Lozada, J.C.H.; Gutierrez, J.S.; Valencia, M.I.C. Performance between Algorithm and micro Genetic Algorithm to solve the robot locomotion. IEEE Lat. Am. Trans. 2019, 17, 1244–1251.

- Lee, H.-Y.; Shin, H.; Chae, J. Path Planning for Mobile Agents Using a Genetic Algorithm with a Direction Guided Factor. Electronics 2018, 7, 212.

- De Oliveira, G.C.R.; de Carvalho, K.B.; Brandão, A.S. A Hybrid Path-Planning Strategy for Mobile Robots with Limited Sensor Capabilities. Sensors 2019, 19, 1049.

- Liu, M. Genetic Algorithms in Stochastic Optimization and Applications in Power Electronics. Ph.D. Thesis, University of Kentucky, Lexington, KY, USA, 2016.

- Holland, J. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1992.

- Zhang, H.-Y.; Lin, W.-M.; Chen, A.-X. Path Planning for the Mobile Robot: A Review. Symmetry 2018, 10, 450.

| Reference | Simulator Used/Operating System | Embedded Algorithm | Implementation or Simulation | Algorithm Used | Travel Time/Computing Time/Other |

|---|---|---|---|---|---|

| Tan et al. [1] | MATLAB/Windows | No, the solutions are computed in MATLAB | Both | Rapidly exploring random tree (RRT) | 36 s |

| Huizinga and Clune [2] | Bullet, Fastsim | No | Simulation | Combinatorial multi-objective evolutionary algorithm (CMOEA) | Depends on the optimization result |

| Ramos et al. [3] | JBotEvolver/Java-based open source | No, the solutions are computed in software | Simulation | Continuous-time recurrent neural network | Simple obstacle: 1.71 to 1.84 s |

| Dinev et al. [6] | PyBullet simulator | No, the solutions are computed using CasADi and KNITRO | Simulation | KNITRO with the Interior/Conjugate-Gradient algorithm, suited to large-scale problems | 5 s |

| Klemm et al. [9] | Gazebo/ROS | Yes, the solution was implemented directly on the chip | Both | Linear-quadratic regulator (LQR), an optimal control strategy that regulates a linear system at minimal cost | Jump time: 2 s |

| Chávez et al. [12] | MATLAB/Windows | Yes, on a P8X32A 8-core architecture | Both | Genetic algorithm | Another type of robot |

| Lee et al. [13] | Java JDK 1.8/Windows 10 | No, the solutions are computed using CasADi and KNITRO | Simulation | Genetic algorithm (GA) with a direction factor toward a target point | Not presented; only computing time shown (1.87 s) |

| de Oliveira et al. [14] | MobileSim, Pioneer SDK for the commercial Pioneer 3-DX robot/ROS | No, a personal computer acquires the sensor data and controls the robot | Both | Hybrid path-planning strategy with the A* algorithm | Depends on the optimization result |

| Hao et al. [11] | MATLAB R2018a/Windows 10 (64-bit) | No, the solutions are computed in MATLAB | Simulation | Multi-population migration genetic algorithm | Not presented; only computing time shown (120.59 s) |

| Liu [15] | MATLAB and Simulink | No, the solutions are computed in MATLAB; no mobile robot is used | Simulation | Genetic algorithm | 2.5 s per simulation; 1600 simulations in total |

| Lan et al. [4] | MATLAB, ROS | No, the solutions are computed in MATLAB | Simulation | Multi-objective particle swarm optimization algorithm (TCMOPSO) | Depends on the optimization result |

| Zhang et al. [5] | None | Yes, the solution was implemented directly on the chip | Implementation | Dolphin swarm algorithm | 35 s trajectory time |

| Wu et al. [7] | ROS, RViz/Windows | No, the solutions are computed in ROS | Both | Vector field histogram* (VFH*) | Not presented; only computing time shown (62.2 s) |

| Molina-Leal et al. [8] | Gazebo/Windows | Both, TurtleBot 3 Waffle Pi | Both | Adam optimization algorithm | Another type of robot |

| Our proposed solution | ROS, Gazebo/Linux | Yes, the solution was implemented directly on the chip | Both | Micro-genetic algorithm | 33 s |

| Measured | Simulation | Robot |

|---|---|---|

| 360° | 358°–361° | 354°–366° |

| 180° | 179°–181° | 173°–184° |

| 90° | 89°–91° | 86°–94° |

| 45° | 43°–46° | 43°–48° |

| 10° | 9°–11° | 8°–12° |

| 5° | 4°–7° | 3°–8° |

| Measured | Simulation | Robot |

|---|---|---|

| 1 m | 98–102 cm | 94–107 cm |

| 50 cm | 48–52 cm | 45–55 cm |

| 25 cm | 24–27 cm | 21–28 cm |

| 15 cm | 14–16 cm | 12–18 cm |

| 10 cm | 9–11 cm | 8–12 cm |

| 5 cm | 3–7 cm | 2–7 cm |
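The two tables above give, for each reference value in the first column, the range observed in simulation and on the robot. The worst-case absolute error per row follows directly from those ranges.

The sketch below treats the first column as the reference value. The constant and function names are hypothetical.

```python
from typing import List, Tuple

Range = Tuple[float, float]
Row = Tuple[float, Range, Range]  # (reference value, simulation range, robot range)

ANGLES_DEG: List[Row] = [
    (360, (358, 361), (354, 366)),
    (180, (179, 181), (173, 184)),
    (90, (89, 91), (86, 94)),
    (45, (43, 46), (43, 48)),
    (10, (9, 11), (8, 12)),
    (5, (4, 7), (3, 8)),
]

DISTANCES_CM: List[Row] = [
    (100, (98, 102), (94, 107)),
    (50, (48, 52), (45, 55)),
    (25, (24, 27), (21, 28)),
    (15, (14, 16), (12, 18)),
    (10, (9, 11), (8, 12)),
    (5, (3, 7), (2, 7)),
]


def worst_error(target: float, bounds: Range) -> float:
    """Largest absolute distance between the reference value and either end of a range."""
    low, high = bounds
    return max(abs(low - target), abs(high - target))


def summarize(rows: List[Row]) -> List[Tuple[float, float, float]]:
    """(reference, worst simulation error, worst robot error) for each table row."""
    return [(t, worst_error(t, sim), worst_error(t, robot)) for t, sim, robot in rows]


if __name__ == "__main__":
    for t, sim_err, robot_err in summarize(DISTANCES_CM):
        print(f"{t} cm: sim ±{sim_err}, robot ±{robot_err} ({100 * robot_err / t:.0f}% of target)")
```

The absolute error grows with the commanded value, but the relative error is largest for short moves. The robot's worst case of 3 cm on a 5 cm move is 60% of the target, against 7 cm (7%) on the 1 m move.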

| Experiment | Generations | Time (min) | Goal (µm) |

|---|---|---|---|

| 1 | 300 | 1920.0 | 40 |

| 2 | 300 | 1920.0 | 100 |

| 3 | 300 | 1920.6 | 0 |

| 4 | 300 | 1920.0 | 200 |

| 5 | 300 | 1928.4 | 100 |

| 6 | 300 | 1926.0 | 100 |

| 7 | 300 | 1923.0 | 20 |

| 8 | 300 | 1920.0 | 10 |

| 9 | 300 | 1920.0 | 0 |

| 10 | 300 | 1924.2 | 0 |

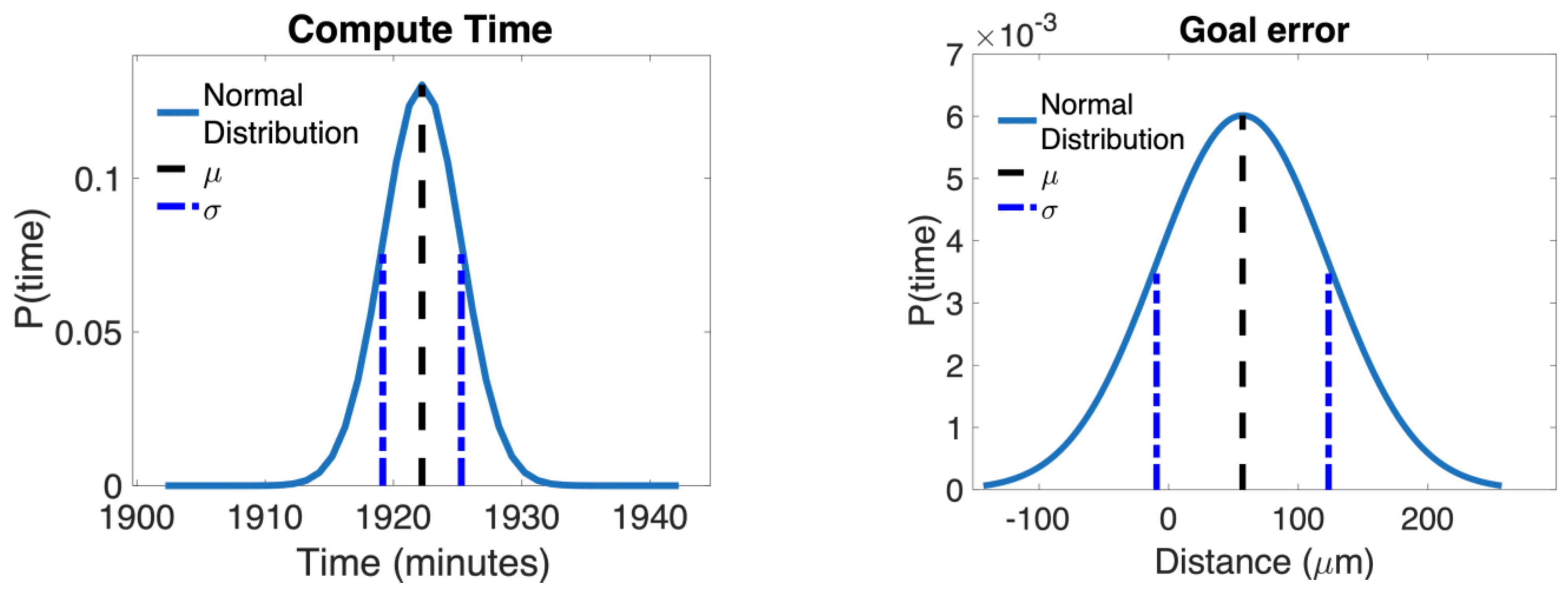

| Statistic | Time (min) | Goal (µm) |

|---|---|---|

| Maximum | 1928.40 | 200 |

| Minimum | 1920.00 | 0 |

| Mode | 1920.00 | 0 |

| Mean | 1922.22 | 57 |

| Standard deviation | 2.88 | 62 |
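The summary statistics can be recomputed from the ten experiments with Python's standard `statistics` module. Variable and function names in this sketch are hypothetical.

Worked through, the results are:

- The arithmetic mean is 1922.22 min and 57 µm, matching the central values reported in the summary table.
- The median is 1920.3 min and 30 µm.
- The goal data are bimodal: 0 µm and 100 µm each occur three times.
- The population standard deviation is about 2.90 min and 62.9 µm.

```python
import statistics

# Values copied from the experiments table (ten runs of 300 generations)
TIME_MIN = [1920.0, 1920.0, 1920.6, 1920.0, 1928.4, 1926.0, 1923.0, 1920.0, 1920.0, 1924.2]
GOAL_UM = [40, 100, 0, 200, 100, 100, 20, 10, 0, 0]


def summary(data):
    """Descriptive statistics; multimode lists every value tied for most frequent."""
    return {
        "max": max(data),
        "min": min(data),
        "modes": statistics.multimode(data),
        "mean": statistics.fmean(data),
        "median": statistics.median(data),
        "pstdev": statistics.pstdev(data),  # population standard deviation
        "stdev": statistics.stdev(data),    # sample standard deviation
    }


if __name__ == "__main__":
    for name, data in (("Time (min)", TIME_MIN), ("Goal (µm)", GOAL_UM)):
        print(name, {k: (round(v, 2) if isinstance(v, float) else v)
                     for k, v in summary(data).items()})
```

`pstdev` and `stdev` differ only in dividing by n or n - 1. The sample version gives about 3.06 min and 66.3 µm for these ten runs.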


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cardoza Plata, J.E.; Olguín Carbajal, M.; Herrera Lozada, J.C.; Sandoval Gutierrez, J.; Rivera Zarate, I.; Serrano Talamantes, J.F. Simulation and Implementation of a Mobile Robot Trajectory Planning Solution by Using a Genetic Micro-Algorithm. Appl. Sci. 2022, 12, 11284. https://doi.org/10.3390/app122111284
