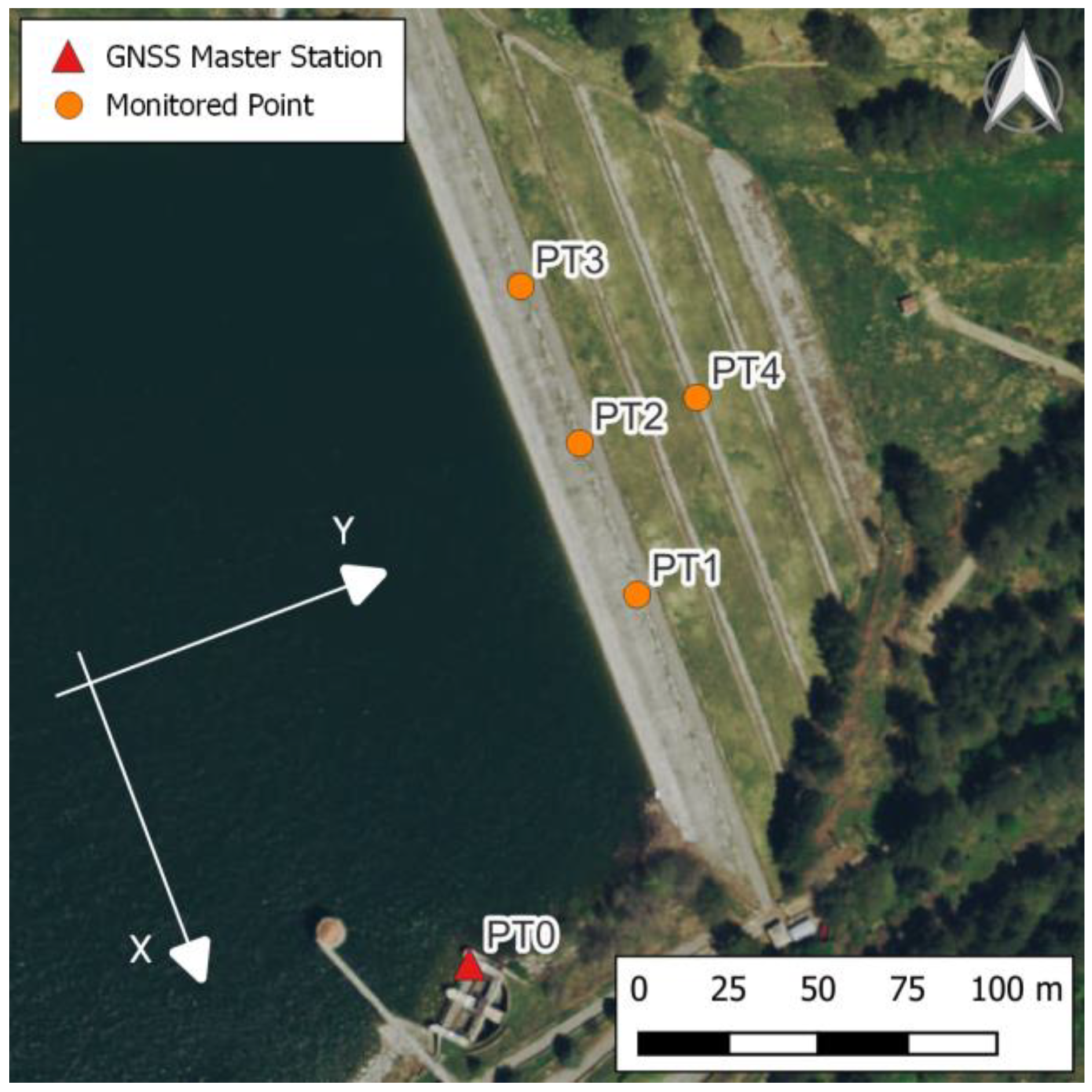

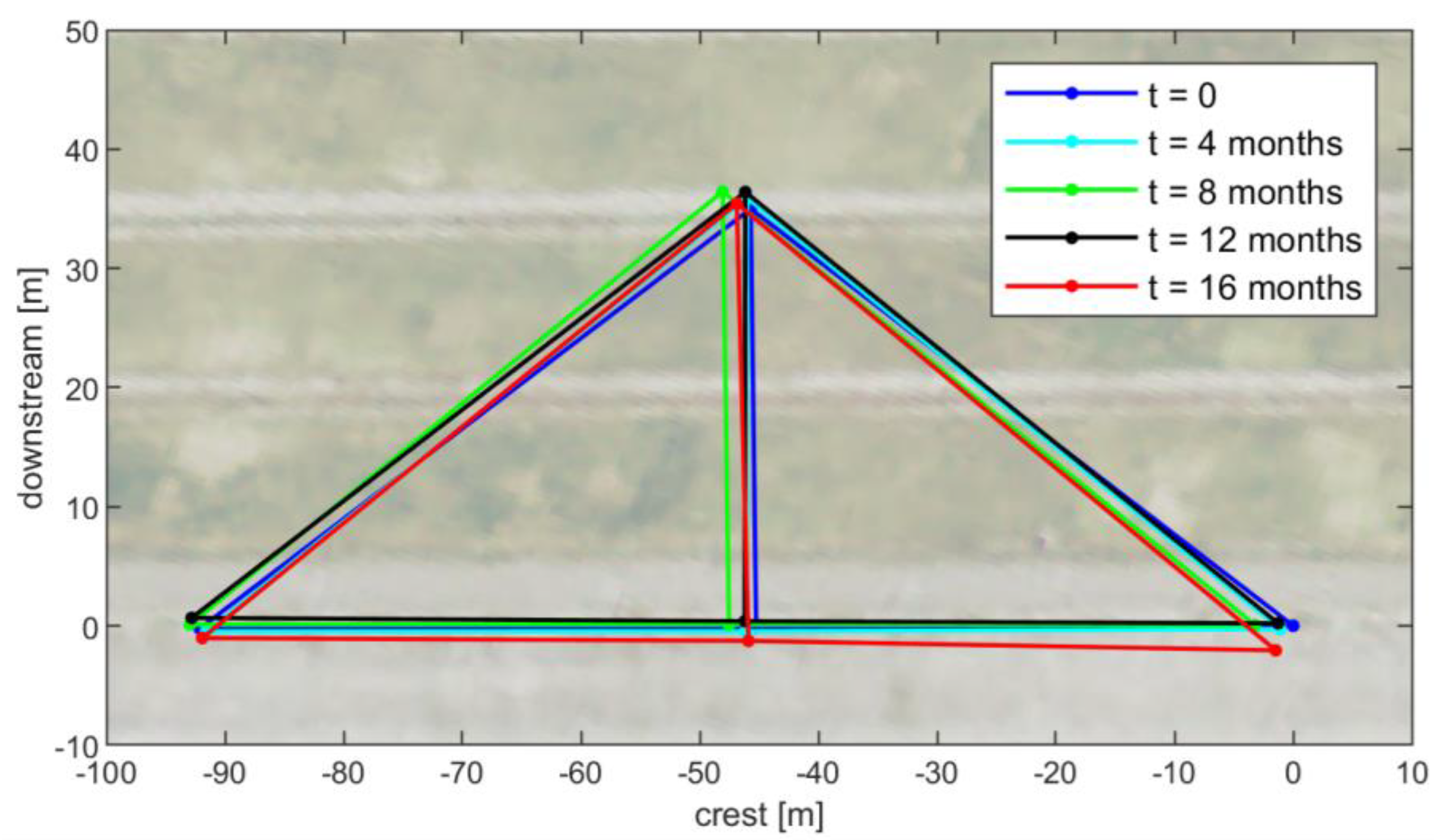

This section presents a real case of dam monitoring based on GNSS techniques, which is taken as a reference to exemplify the methodological approach for analyzing and interpreting the displacement time series logged by the GNSS monitoring system. It is organized in two subsections. The first describes the monitored structure, its characteristics, and the installed GNSS monitoring equipment. For security and privacy reasons, the site and the structure are described only in general terms, and any information that could lead to identifying the location of the dam or the personnel, organization, or company responsible for it is omitted. The second subsection deals with the processing required to filter the raw time series for the subsequent displacement interpretation, with particular reference to a possible practical implementation within an early warning system.

2.2. Displacement Time Series Analysis

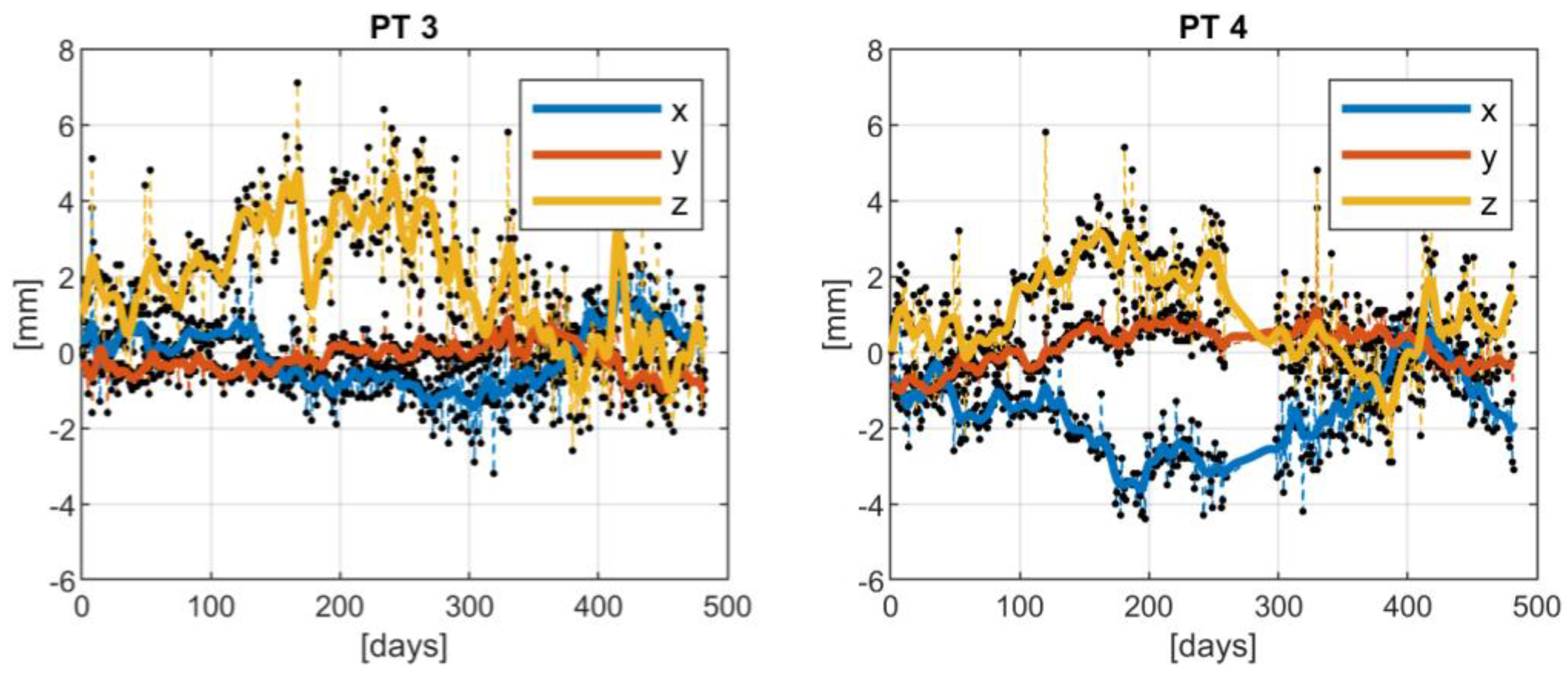

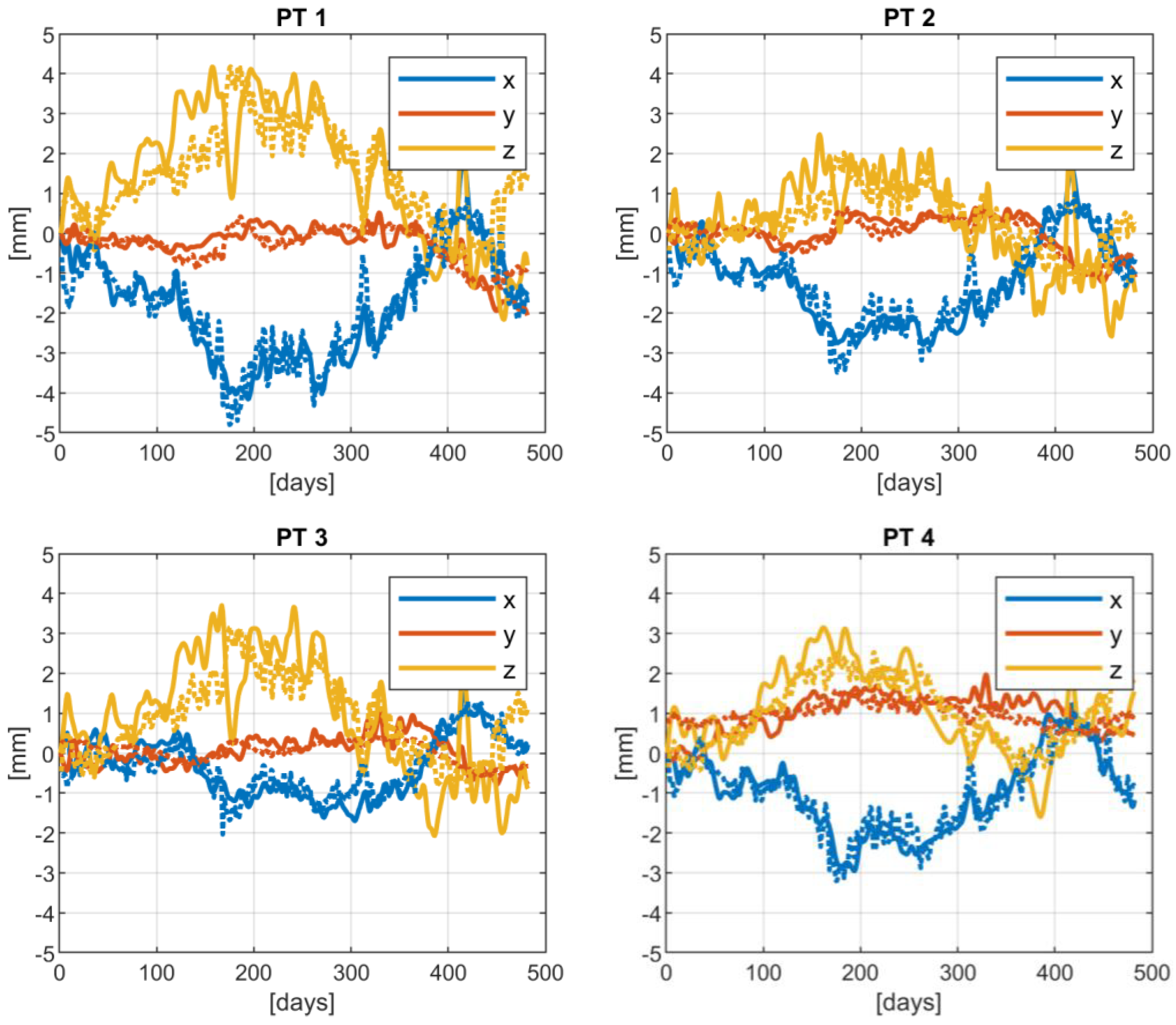

The time series of the daily estimated displacements of each point along the three local XYZ axes needed to be filtered to properly manage the observation noise [40,41], fill possible gaps, and allow for a straightforward modeling and interpretation of the displacements. The filtering was performed by applying the collocation approach [42,43], thus modeling the signal in time as a stationary random process. A requirement for such an approach is that the signal to be treated is zero-mean, which is not guaranteed in the case of time series regarding point displacements. To fulfill this requirement, a remove–compute–restore scheme was adopted, where the first step consists of removing a deterministic trend from the observed data by modeling it as the sum of a polynomial and a periodical component. After this stage, the observed residual displacements were filtered by collocation, and finally, the deterministic trend was added back to the filtered time series to estimate the displacement.

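Given the proposed scheme, we wrote the observation equation as:

$$y_{p,c}(t) = P_{p,c}(t) + H_{p,c}(t) + s_{p,c}(t) + \nu_{p,c}(t) \tag{1}$$

where, considering a point $p$ and a coordinate $c$, $y_{p,c}(t)$ is the observed displacement at epoch $t$, $P_{p,c}(t)$ and $H_{p,c}(t)$ are the polynomial and periodic deterministic trends, respectively, $s_{p,c}(t)$ is the residual displacement to be stochastically modeled, and $\nu_{p,c}(t)$ is the observation noise. Note that each $(p, c)$ combination was independently processed. Therefore, for the sake of simplicity, the $p$ and $c$ indexes are omitted in the notation of the following equations.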

The estimation of the polynomial trend was performed by least squares adjustment [44], expressing the polynomial as:

$$P(t) = \sum_{k=0}^{n} a_k\, t^k \tag{2}$$

where $a_k$ are the coefficients to be estimated, and $n$ is the degree of the polynomial. The least squares adjustment was solved disregarding possible periodical components and residual displacements. To avoid an overparametrization of the interpolating function, the optimal polynomial degree $n$ needed to be calibrated. To this aim, a first estimate of the set of $a_k$ coefficients was performed with $n = n_{\max}$, where $n_{\max}$ is the maximum allowable degree. Then, a statistical test (t-test) was applied to check whether the estimated value of the $n$-degree coefficient $\hat{a}_n$ was significantly different from zero. If it was, i.e., if the null hypothesis $a_n = 0$ was rejected, the current degree $n$ of the polynomial was chosen as optimal; otherwise, the least squares adjustment was performed again after reducing the current degree $n$ by 1. This procedure was iterated until the null hypothesis on the $n$-degree coefficient was rejected.
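The backward selection of the polynomial degree described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `select_polynomial_degree`, the parameters `n_max` and `alpha`, the two-sided test, and the centring and scaling of the time axis (introduced only for numerical conditioning) are all assumptions.

```python
import numpy as np
from scipy import stats


def select_polynomial_degree(t, y, n_max=5, alpha=0.05):
    """Return (degree, fitted trend) using a t-test on the highest-degree coefficient.

    Hypothetical helper: n_max and alpha are illustrative defaults.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    # Centre and scale time to [-1, 1]; the t-statistic of the leading
    # coefficient is unaffected by this change of variable.
    tau = (t - t.mean()) / (np.ptp(t) / 2.0)
    for n in range(n_max, 0, -1):
        A = np.vander(tau, n + 1, increasing=True)   # columns 1, tau, ..., tau^n
        coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
        residuals = y - A @ coeffs
        dof = y.size - (n + 1)
        sigma2 = residuals @ residuals / dof         # a posteriori variance
        cov = sigma2 * np.linalg.inv(A.T @ A)        # covariance of the estimates
        t_stat = abs(coeffs[n]) / np.sqrt(cov[n, n])
        t_crit = stats.t.ppf(1.0 - alpha / 2.0, dof)
        if t_stat > t_crit:                          # null hypothesis a_n = 0 rejected
            return n, A @ coeffs
    # No coefficient of degree >= 1 is significant: fall back to a constant.
    return 0, np.full_like(y, y.mean())
```

Starting from the maximum degree and stepping down means the loop stops at the first degree whose leading coefficient is significant, which mirrors the iteration in the text. Gaps in the series need no special handling here, since only the available epochs enter the design matrix.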

As for the periodical component of the signal $H(t)$, it was determined by means of a Fourier analysis of the polynomial-reduced displacements $\delta y(t)$, namely:

$$\delta Y(f) = \mathcal{F}\{\delta y(t)\} = \mathcal{F}\{y(t) - \hat{P}(t)\} \tag{3}$$

where $\mathcal{F}$ is the Fourier transform operator, $f$ is the frequency, and $\hat{P}(t)$ is the previously estimated polynomial deterministic trend. Note that possible data gaps were filled by using the previously estimated polynomial trend to make the discrete Fourier transform applicable. These interpolated values were only used for the purpose of Fourier analysis. Given the transformed residuals $\delta Y(f)$, the empirical Amplitude Spectral Density (ASD) of $\delta y(t)$ was computed as:

$$A(f) = \left| \delta Y(f) \right| \tag{4}$$

From Equation (4), a smoothed model $\tilde{A}(f)$ of the ASD was determined by applying a moving median filter. The ratio between the empirical and filtered ASDs

$$R(f) = \frac{A(f)}{\tilde{A}(f)} \tag{5}$$

was then used to identify the main frequencies carrying a periodical contribution in the signal. This periodical effect was decomposed in two parts, one related to low frequencies, i.e., in the range $|f| \le f_L$, and one related to high frequencies, i.e., in the range $|f| \ge f_H$. Note that, according to the proposed model, the condition $f_L \le f_H$ must always be satisfied. The choice of introducing two thresholds allows for flexibility in the data analysis, making it possible to adapt the analysis to the physical behavior of the considered phenomenon or structure, e.g., by excluding middle frequencies, i.e., $f_L < |f| < f_H$, from the analysis. Given the ratio $R(f)$ of Equation (5), a threshold $\rho$ can be defined to identify the frequencies of the dominating periodical contributions, i.e., the frequencies satisfying the condition $R(f) > \rho$. The periodical trends were then computed by an inverse Fourier transform of the coefficients $\delta Y(f)$ corresponding to the identified frequencies that are also included in the chosen frequency ranges (low or high frequencies). This translates into the following estimation equations:

$$\hat{H}_L(t) = \mathcal{F}^{-1}\left\{ \frac{A(f) - \tilde{A}(f)}{A(f)}\, \chi_L(f)\, \delta Y(f) \right\} \tag{6}$$

$$\hat{H}_H(t) = \mathcal{F}^{-1}\left\{ \frac{A(f) - \tilde{A}(f)}{A(f)}\, \chi_H(f)\, \delta Y(f) \right\} \tag{7}$$

where the characteristic functions $\chi_L(f)$ and $\chi_H(f)$ are defined as:

$$\chi_L(f) = \begin{cases} 1 & \text{if } R(f) > \rho \text{ and } |f| \le f_L \\ 0 & \text{otherwise} \end{cases} \tag{8}$$

$$\chi_H(f) = \begin{cases} 1 & \text{if } R(f) > \rho \text{ and } |f| \ge f_H \\ 0 & \text{otherwise} \end{cases} \tag{9}$$

Note that the introduction of the scale factor before the inverse Fourier transform in Equations (6) and (7) was required to empirically distinguish the periodical from the stochastic component under the assumption that the ASD of the stochastic component was equal to the moving-median ASD.
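The detection of the periodical components can be illustrated with the sketch below, which operates on gap-free, polynomial-reduced residuals. Several elements are assumptions made for illustration: the function names, the window length of the moving median, the default threshold `rho`, the unnormalized amplitude spectrum (normalization cancels in the ratio), and in particular the scale factor `1 - 1/ratio`, which is one way of subtracting the moving-median amplitude from the empirical one.

```python
import numpy as np


def moving_median(x, window):
    """Centred moving median with edge padding (window must be odd)."""
    half = window // 2
    padded = np.pad(x, half, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, window)
    return np.median(windows, axis=-1)


def periodic_components(residuals, dt, f_low, f_high, rho=3.0, window=11):
    """Estimate low- and high-frequency periodical trends of evenly sampled residuals.

    Hypothetical helper: rho and window are illustrative defaults.
    """
    if f_low > f_high:
        raise ValueError("f_low must not exceed f_high")
    y = np.asarray(residuals, dtype=float)
    Y = np.fft.rfft(y)                         # one-sided spectrum of real data
    f = np.fft.rfftfreq(y.size, d=dt)
    asd = np.abs(Y)                            # empirical amplitude spectrum
    asd_smooth = moving_median(asd, window)    # smoothed model of the spectrum
    ratio = asd / np.maximum(asd_smooth, np.finfo(float).tiny)
    # Assumed scale factor: keep only the amplitude exceeding the smoothed model,
    # and only at frequencies where the ratio passes the threshold.
    scale = np.where(ratio > rho, 1.0 - 1.0 / np.maximum(ratio, 1.0), 0.0)
    in_low = f <= f_low
    in_high = (f >= f_high) & ~in_low          # a boundary frequency is counted once
    h_low = np.fft.irfft(scale * in_low * Y, n=y.size)
    h_high = np.fft.irfft(scale * in_high * Y, n=y.size)
    return h_low, h_high
```

With daily data, `dt=1.0` expresses frequencies in cycles per day, so an annual cycle falls in the low range for, e.g., `f_low=1/180`. Frequencies between `f_low` and `f_high` are left untouched, which corresponds to excluding the middle band from the analysis.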

In summary, the overall periodical effect was determined as the sum of the low- and high-frequency periodical components:

$$\hat{H}(t) = \hat{H}_L(t) + \hat{H}_H(t) \tag{10}$$

In the following, the detected low- and high-frequency components were treated differently. In particular, the former was assumed to be related to the physical phenomenon, while the latter was attributed to the noise. Therefore, they were both removed from the observations, but only the low-frequency periodical component was subsequently restored after the collocation step.

Once polynomial and periodical deterministic trends were computed, they were used to reduce the signal and isolate the observations of the stochastic component $y_s(t)$ from the overall displacement observations $y(t)$. Therefore, the observation equation becomes:

$$y_s(t) = y(t) - \hat{P}(t) - \hat{H}(t) = s(t) + \varepsilon(t) \tag{11}$$

where $\varepsilon(t)$ includes both the measurement noise $\nu(t)$ and the estimation error of the polynomial and periodical deterministic components. For the sake of simplicity, the possible correlations between $s(t)$ and $\varepsilon(t)$ were neglected and $\varepsilon(t)$ was assumed to be white.

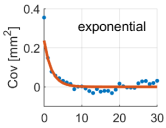

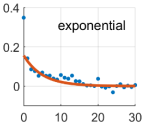

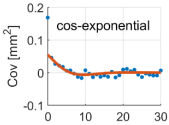

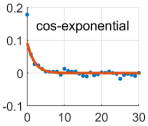

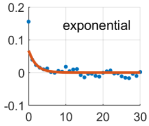

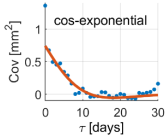

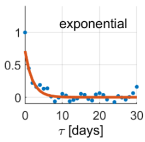

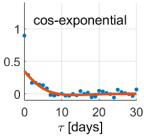

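Given the observations reduced as in Equation (11), the empirical covariance function of the signal was determined as:

$$\bar{C}(\tau) = \frac{1}{N_\tau} \sum_{i,j} y_s(t_i)\, y_s(t_j) \tag{12}$$

where the summation extends over all couples of epochs $t_i, t_j \in T$ such that $|t_i - t_j| = \tau$, with $T$ being the overall set of observation epochs, and $N_\tau$ is the number of couples for a given $\tau$. The empirical covariance function was used to determine an analytical covariance model $C_s(\tau)$, along with the determination of the noise variance $\sigma_\varepsilon^2$. In particular, $C_s(\tau)$ was determined by a best fit procedure on $\bar{C}(\tau)$ for $\tau > 0$ since at $\tau = 0$, the empirical covariance combines signal and noise, according to the assumption of a white $\varepsilon(t)$. Once the model $C_s(\tau)$ was defined, the variance of the noise was computed as:

$$\sigma_\varepsilon^2 = \bar{C}(0) - C_s(0) \tag{13}$$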

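The best covariance model was chosen among several candidate models as the one with the lowest residuals between the empirical and analytical covariances. Then, the collocation estimate of the filtered signal was computed as:

$$\hat{s}(\mathbf{t}_e) = C_s(\mathbf{t}_e, \mathbf{t}_o) \left[ C_s(\mathbf{t}_o, \mathbf{t}_o) + \sigma_\varepsilon^2 I \right]^{-1} y_s(\mathbf{t}_o) \tag{14}$$

where $\mathbf{t}_e$ and $\mathbf{t}_o$ are the vectors containing the estimation and observation epochs, respectively, $I$ is the identity matrix, and the notation $C_s(\mathbf{t}_a, \mathbf{t}_b)$ represents the covariance matrix computed by evaluating the model covariance $C_s(\tau)$ for all the possible time lags $\tau$ coming from the possible combinations of the elements of the vectors $\mathbf{t}_a$ and $\mathbf{t}_b$.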
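The covariance estimation and the collocation filter can be sketched together as below. This is an illustrative sketch under stated assumptions, not the authors' code: epochs are taken as integer day numbers, the candidate models are limited to an exponential and a Gaussian function, the correlation length is found by a simple grid search, and all function and parameter names are hypothetical.

```python
import numpy as np

# Assumed candidate covariance shapes, normalized to 1 at zero lag.
MODELS = {
    "exponential": lambda tau, length: np.exp(-np.abs(tau) / length),
    "gaussian": lambda tau, length: np.exp(-(tau / length) ** 2),
}


def empirical_covariance(t, r, max_lag):
    """Empirical covariance of zero-mean residuals r at integer lags 0..max_lag."""
    t = np.asarray(t)
    r = np.asarray(r, dtype=float)
    lag = np.abs(t[:, None] - t[None, :])      # epochs may contain gaps
    prod = r[:, None] * r[None, :]
    cov = np.full(max_lag + 1, np.nan)
    for k in range(max_lag + 1):
        mask = lag == k
        if mask.any():
            cov[k] = prod[mask].mean()         # average over all couples at lag k
    return cov


def fit_covariance_model(cov_emp, lengths):
    """Pick the candidate model and correlation length with the lowest misfit."""
    lags = np.arange(1, cov_emp.size)          # lag 0 excluded: signal plus noise
    target = cov_emp[1:]
    ok = np.isfinite(target)
    best = None
    for name, shape in MODELS.items():
        for length in lengths:
            g = shape(lags[ok], length)
            c0 = max(g @ target[ok] / (g @ g), 0.0)   # least squares amplitude
            misfit = np.sum((target[ok] - c0 * g) ** 2)
            if best is None or misfit < best[0]:
                best = (misfit, name, c0, length)
    _, name, c0, length = best
    noise_var = max(cov_emp[0] - c0, 0.0)      # empirical minus model value at lag 0
    return (lambda tau: c0 * MODELS[name](tau, length)), noise_var


def collocation_filter(t_obs, r_obs, t_est, cov_model, noise_var):
    """Collocation estimate of the signal at t_est from noisy residuals at t_obs."""
    t_obs = np.asarray(t_obs, dtype=float)
    t_est = np.asarray(t_est, dtype=float)
    C_oo = cov_model(t_obs[:, None] - t_obs[None, :])
    C_eo = cov_model(t_est[:, None] - t_obs[None, :])
    # A vanishing noise variance can make this system ill-conditioned.
    return C_eo @ np.linalg.solve(C_oo + noise_var * np.eye(t_obs.size), r_obs)
```

Because `t_est` is independent of `t_obs`, the same call fills gaps: passing the full set of daily epochs as `t_est` returns a filtered value also where no observation exists. The pairwise lag matrix scales quadratically with the number of epochs, which is acceptable for daily series spanning a few years.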

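Finally, from the output of Equation (14), the modeled displacement at the estimation epochs $\mathbf{t}_e$ was computed by restoring the polynomial and the low-frequency periodical components as:

$$\hat{y}(\mathbf{t}_e) = \hat{P}(\mathbf{t}_e) + \hat{H}_L(\mathbf{t}_e) + \hat{s}(\mathbf{t}_e) \tag{15}$$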