Motion Planning of Robot Manipulators for a Smoother Path Using a Twin Delayed Deep Deterministic Policy Gradient with Hindsight Experience Replay

Abstract

1. Introduction

2. Preliminaries and Problem Setup

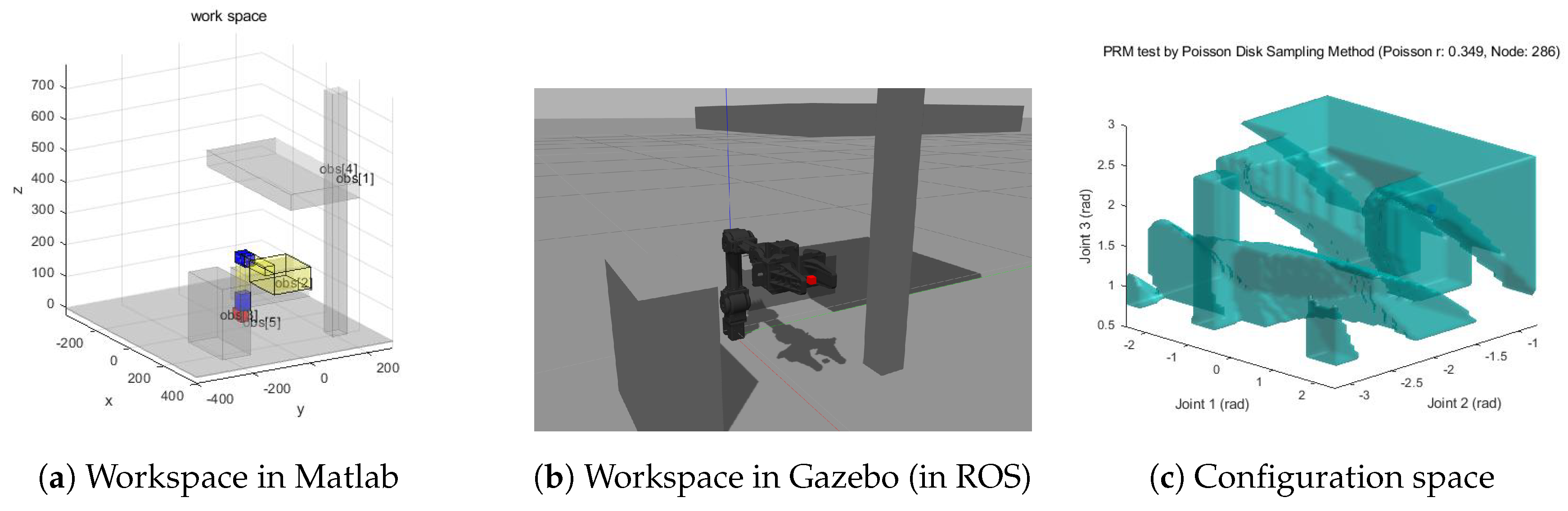

2.1. Configuration Space and Sampling-Based Path Planning

2.2. Reinforcement Learning

3. TD3-Based Motion Planning for Smoother Paths

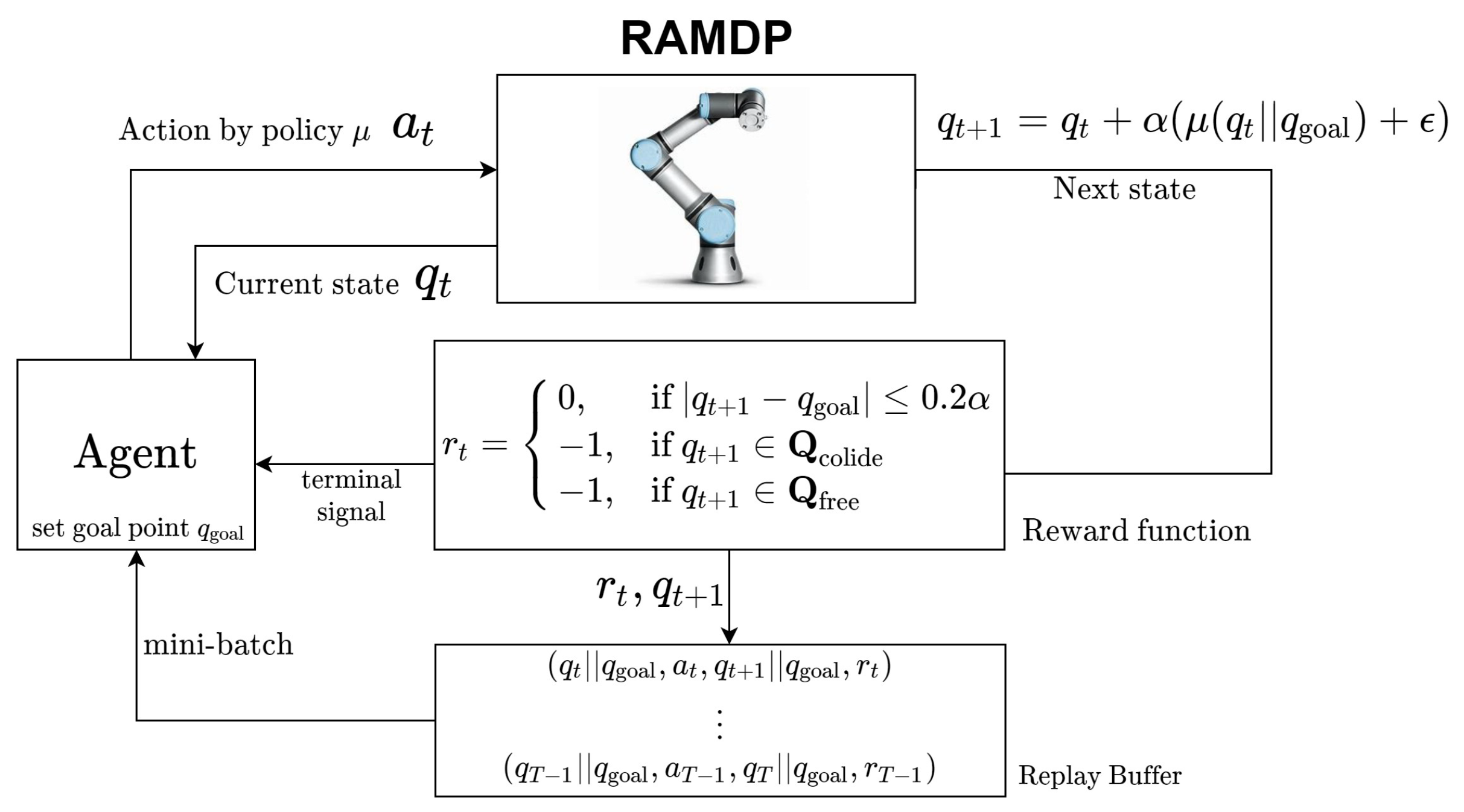

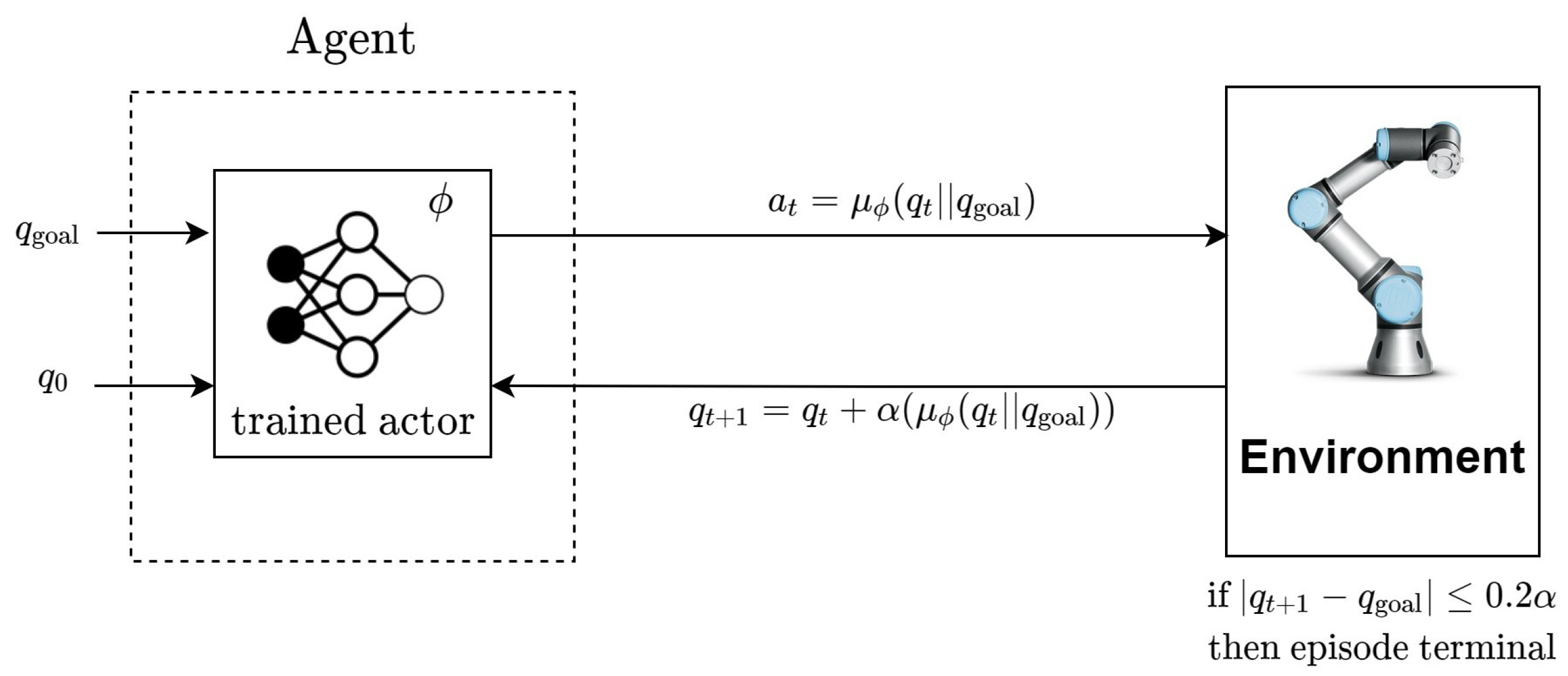

3.1. RAMDP (Robot Arm Markov Decision Process) for Path Planning

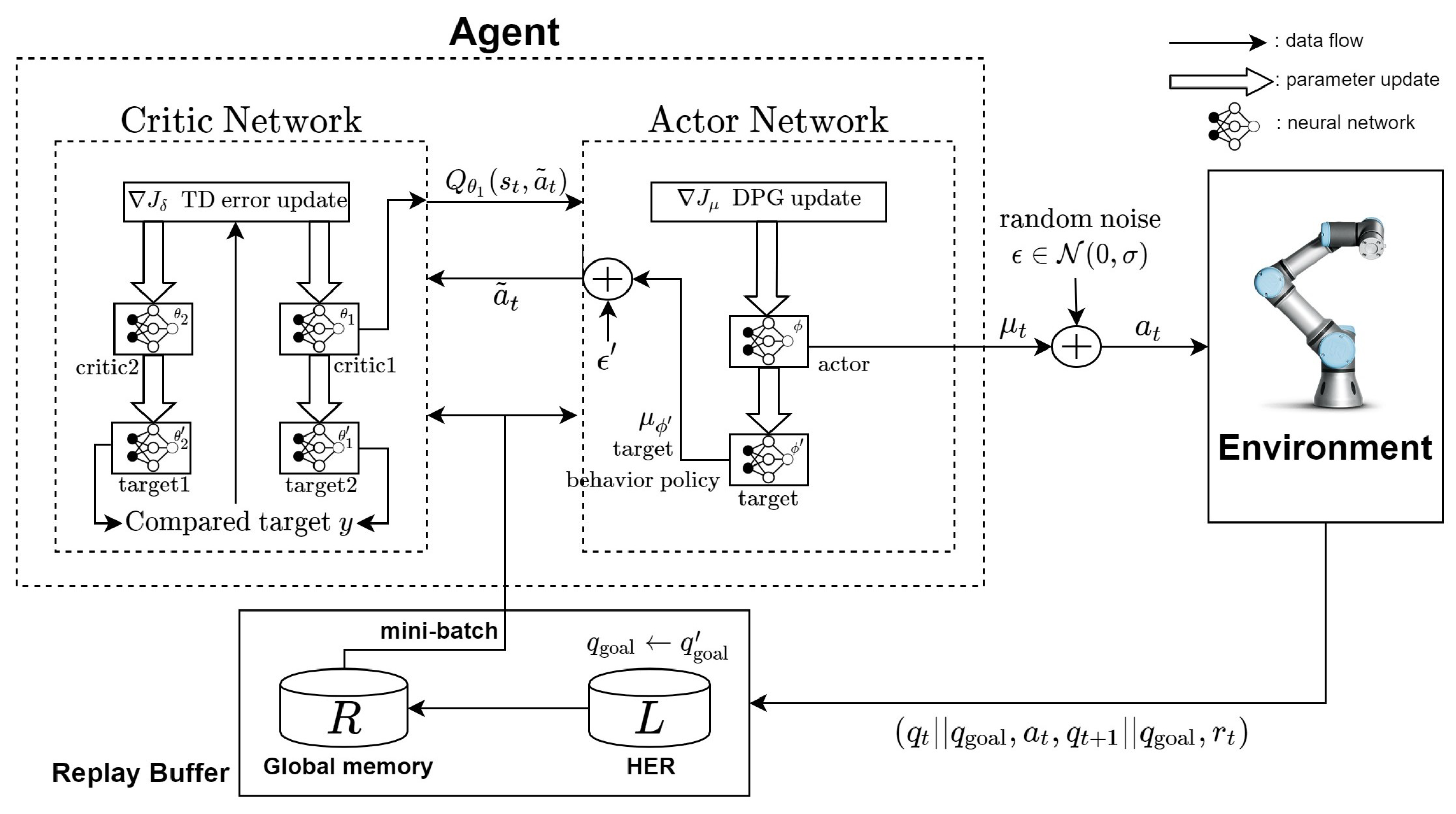

3.2. TD3 (Twin Delayed Deep Deterministic Policy Gradient)

3.3. Hindsight Experience Replay

| Algorithm 1. Training procedure for motion planning by TD3 with HER. The red parts are specific to the robot manipulator, and the blue parts are specific to HER. | |
|---|---|
| 1: Initialize critic networks and actor network with | |
| 2: Initialize target networks | |
| 3: Initialize replay buffer | |
| 4: for to M do | |
| 5: Initialize local buffer | ▹ memory for HER |
| 6: for to do | |
| 7: Randomly choose goal point | |
| 8: Select action with noise: | |
| 9: | ▹ denotes concatenation |
| 10: | |
| 11: | |
| 12: if then | |
| 13: | |
| 14: else if then | |
| 15: | |
| 16: else if then | |
| 17: Terminate on goal arrival | |
| 18: end if | |
| 19: | |
| 20: | |
| 21: Store the transition in | |
| 22: | |
| 23: Sample a mini-batch of n transitions from | |
| 24: | |
| 25: | |
| 26: Update critics with temporal difference error: | |
| 27: | |
| 28: | |
| 29: if then | ▹ delayed update with d |
| 30: Update actor by the deterministic policy gradient: | |
| 31: | |
| 32: Update target networks: | |
| 33: | |
| 34: | |
| 35: end if | |
| 36: end for | |
| 37: if then | |
| 38: Set additional goal | |
| 39: for to do | |
| 40: Sample a transition from | |
| 41: | |
| 42: Store the transition in | |
| 43: end for | |
| 44: end if | |
| 45: end for | |
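The HER branch of Algorithm 1 (lines 37-43) stores transitions from an episode a second time under a goal that was actually reached, so that failed episodes still yield reward signal. The sketch below is a minimal, hypothetical Python illustration, not the authors' implementation. It assumes that the additional goal is the configuration reached at the end of the episode (the "final" strategy of the original HER work), that goal arrival means the Euclidean joint-space distance is within the goal boundary (1.0, taken from the two-joint row of the parameter table), and that the reward is sparse: -1 per step and 0 on arrival. The names `Transition`, `reward_fn`, and `her_relabel` are illustrative.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple

Config = Tuple[float, ...]

# Assumption: goal boundary of the two-joint row, read as a joint-space distance.
GOAL_BOUNDARY = 1.0


class Transition(NamedTuple):
    state: Config       # joint configuration before the action
    action: Config      # joint-space action
    next_state: Config  # joint configuration after the action
    goal: Config        # goal configuration the transition is labeled with
    reward: float
    done: bool


def reward_fn(next_q: Config, goal: Config,
              boundary: float = GOAL_BOUNDARY) -> Tuple[float, bool]:
    """Hypothetical sparse reward: 0 and terminal on arrival, -1 otherwise."""
    if math.dist(next_q, goal) <= boundary:
        return 0.0, True
    return -1.0, False


def her_relabel(episode: Sequence[Transition]) -> List[Transition]:
    """Relabel an episode with its final reached configuration as the new goal
    (assumed 'final' HER strategy) and recompute reward and termination."""
    if not episode:
        return []
    new_goal = episode[-1].next_state
    relabeled = []
    for tr in episode:
        reward, done = reward_fn(tr.next_state, new_goal)
        relabeled.append(tr._replace(goal=new_goal, reward=reward, done=done))
    return relabeled
```

In a training loop, the relabeled list would be appended to the replay buffer next to the original transitions. Algorithm 1 samples individual transitions from the local buffer rather than relabeling all of them; the sketch relabels the whole episode only to keep the idea compact.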

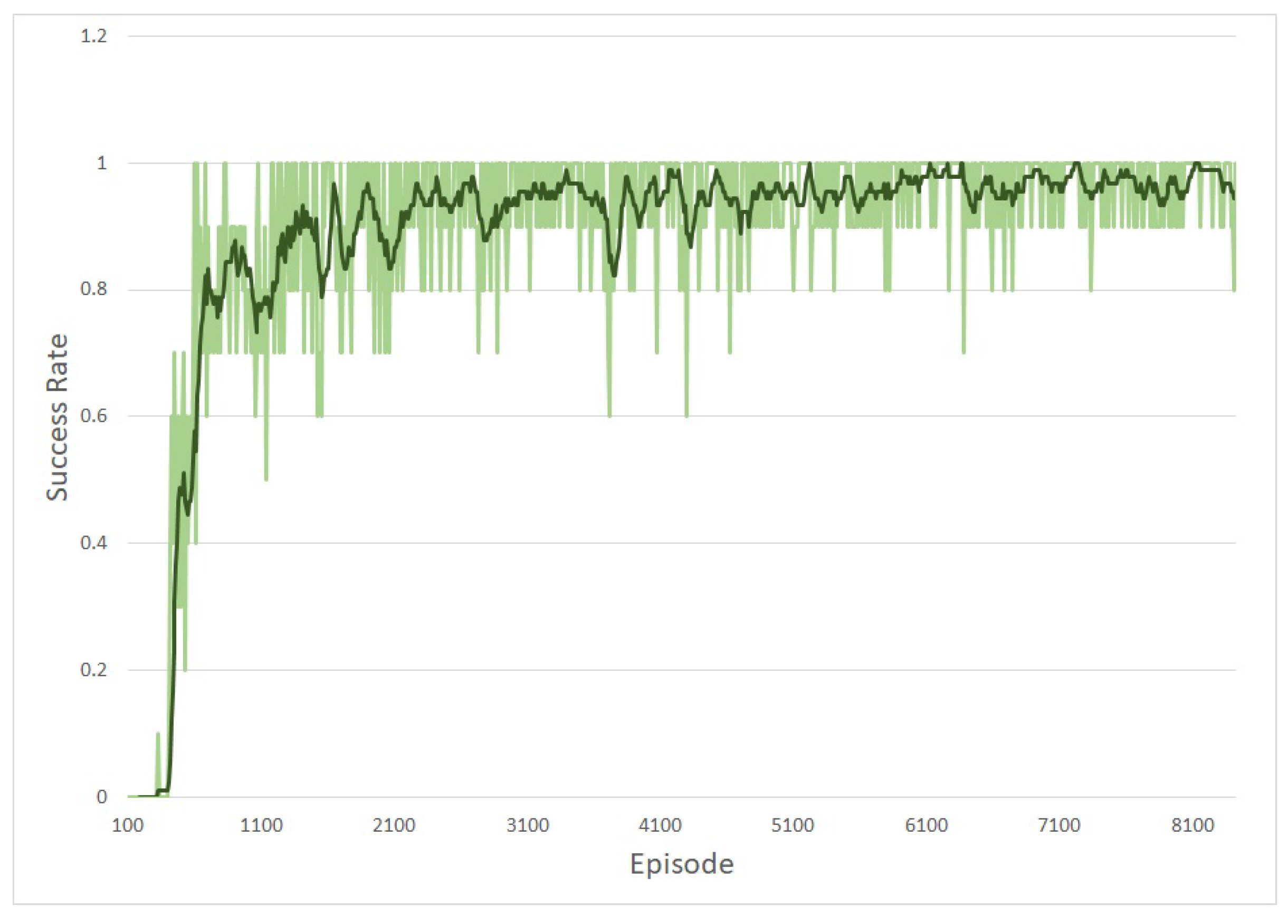

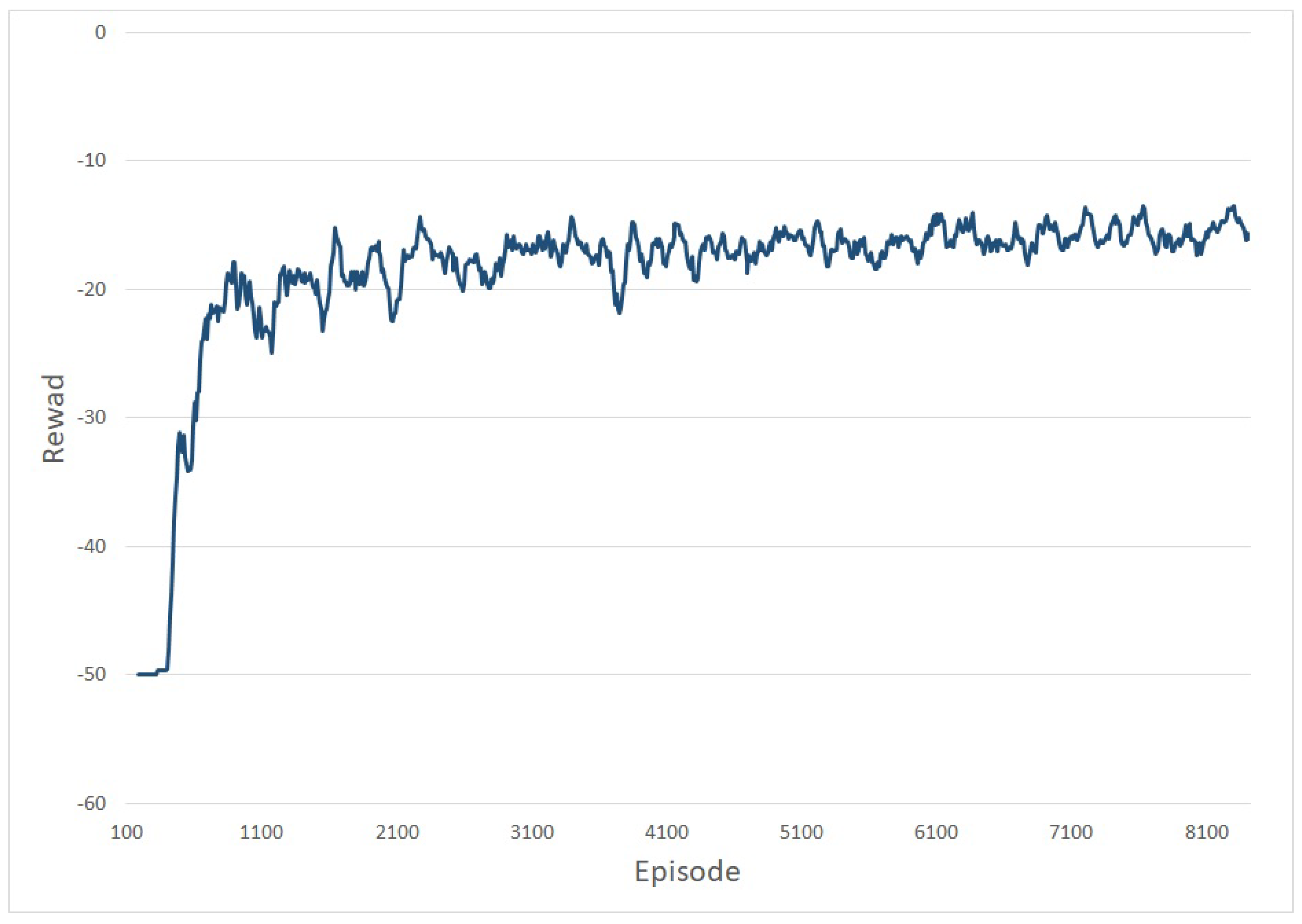

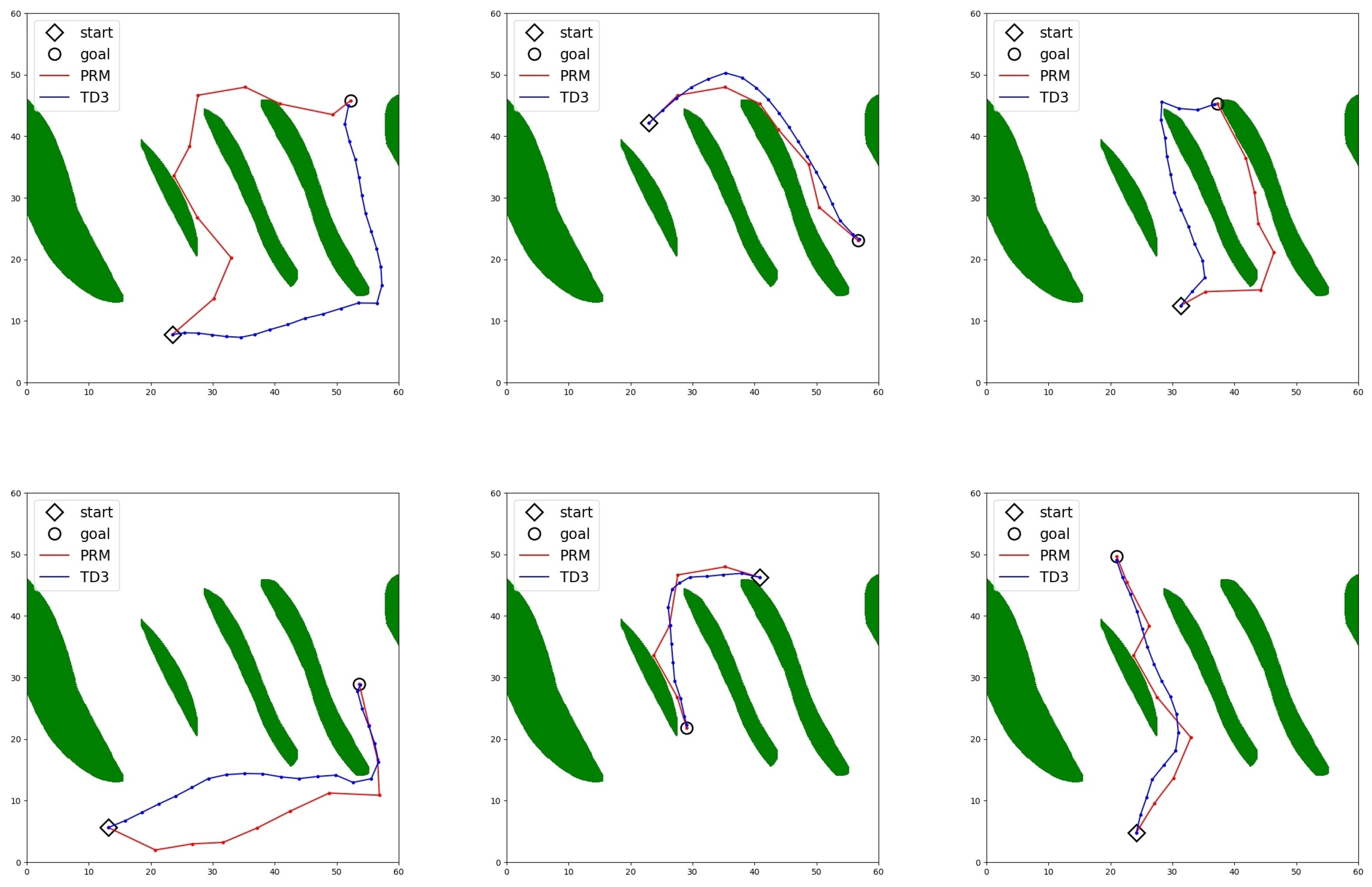

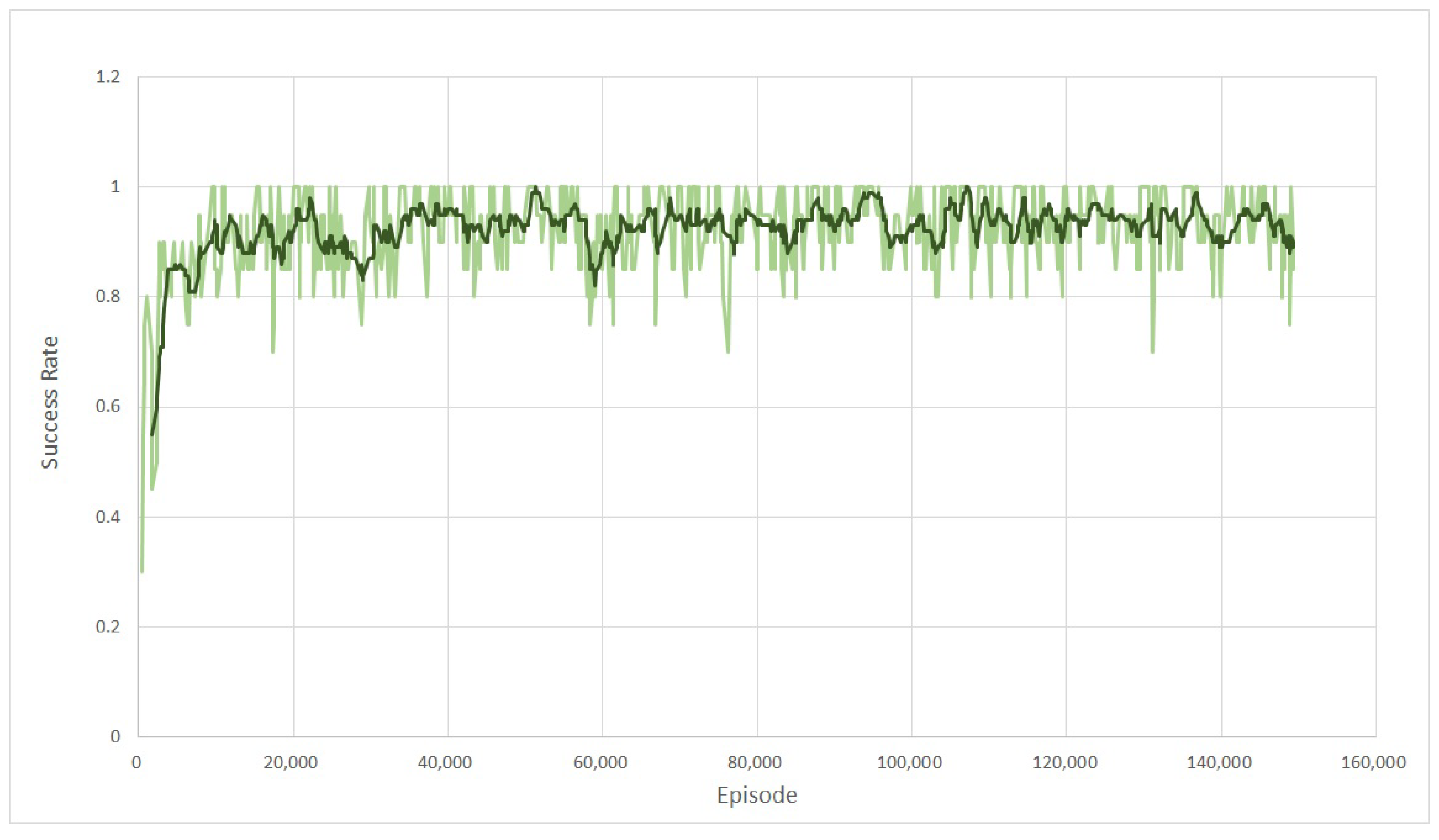

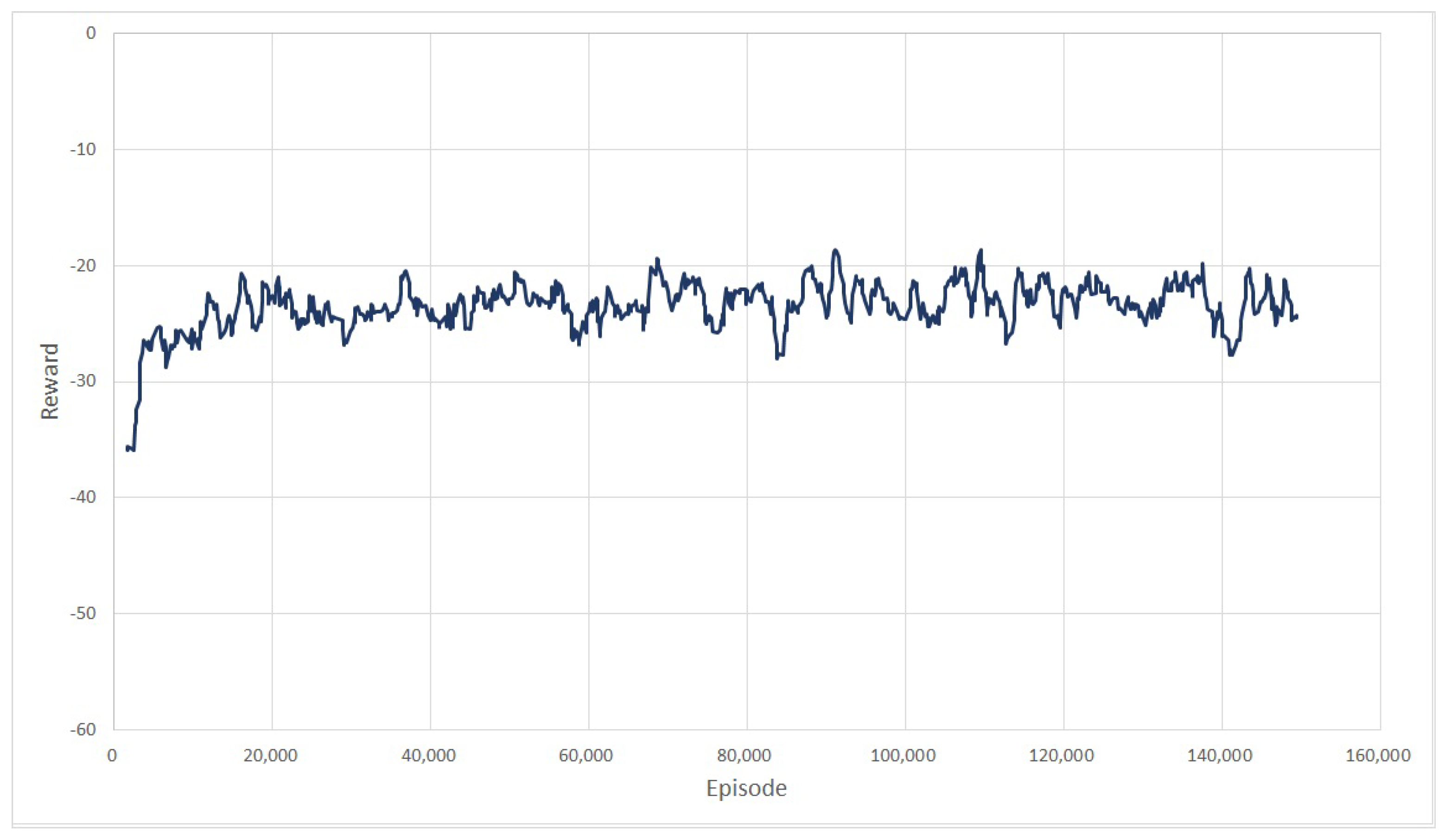

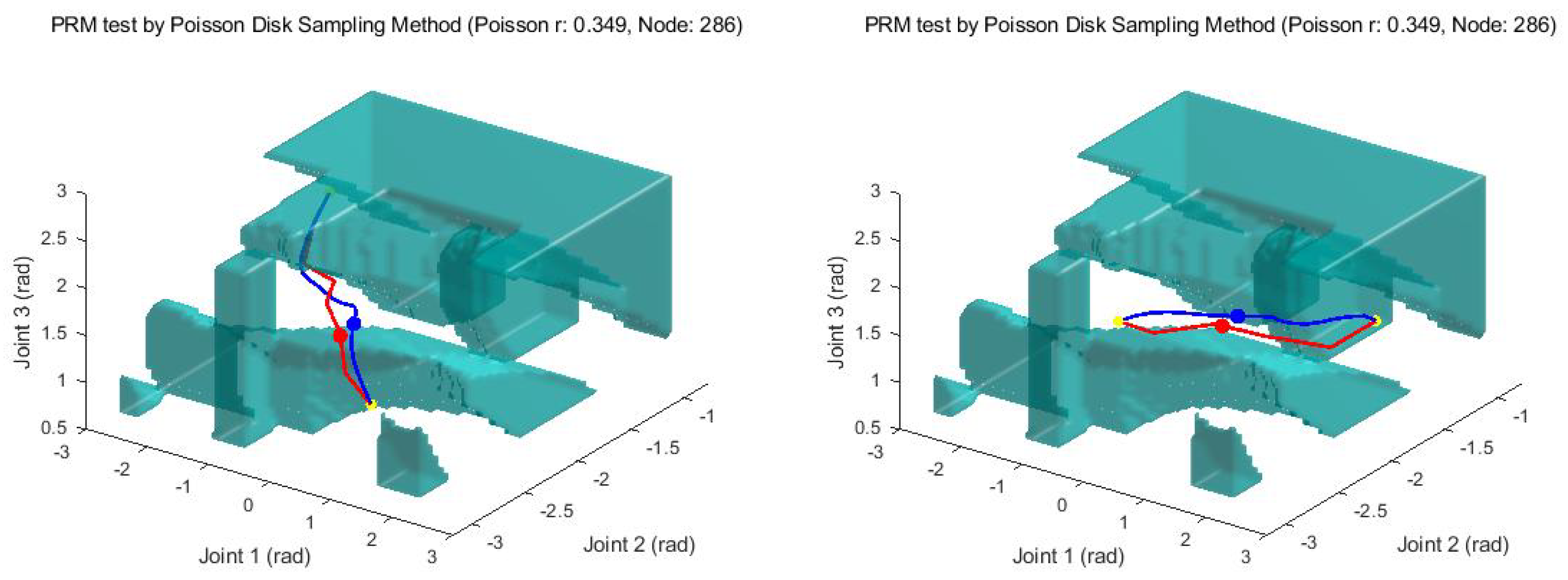

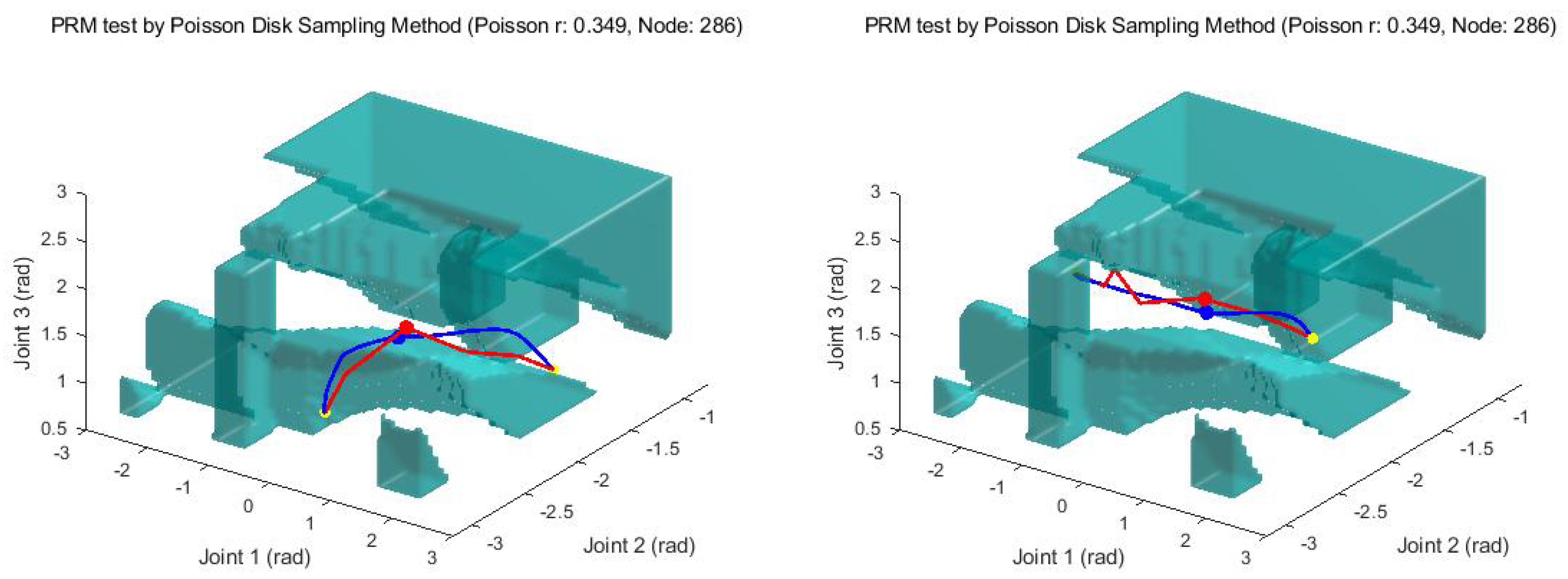

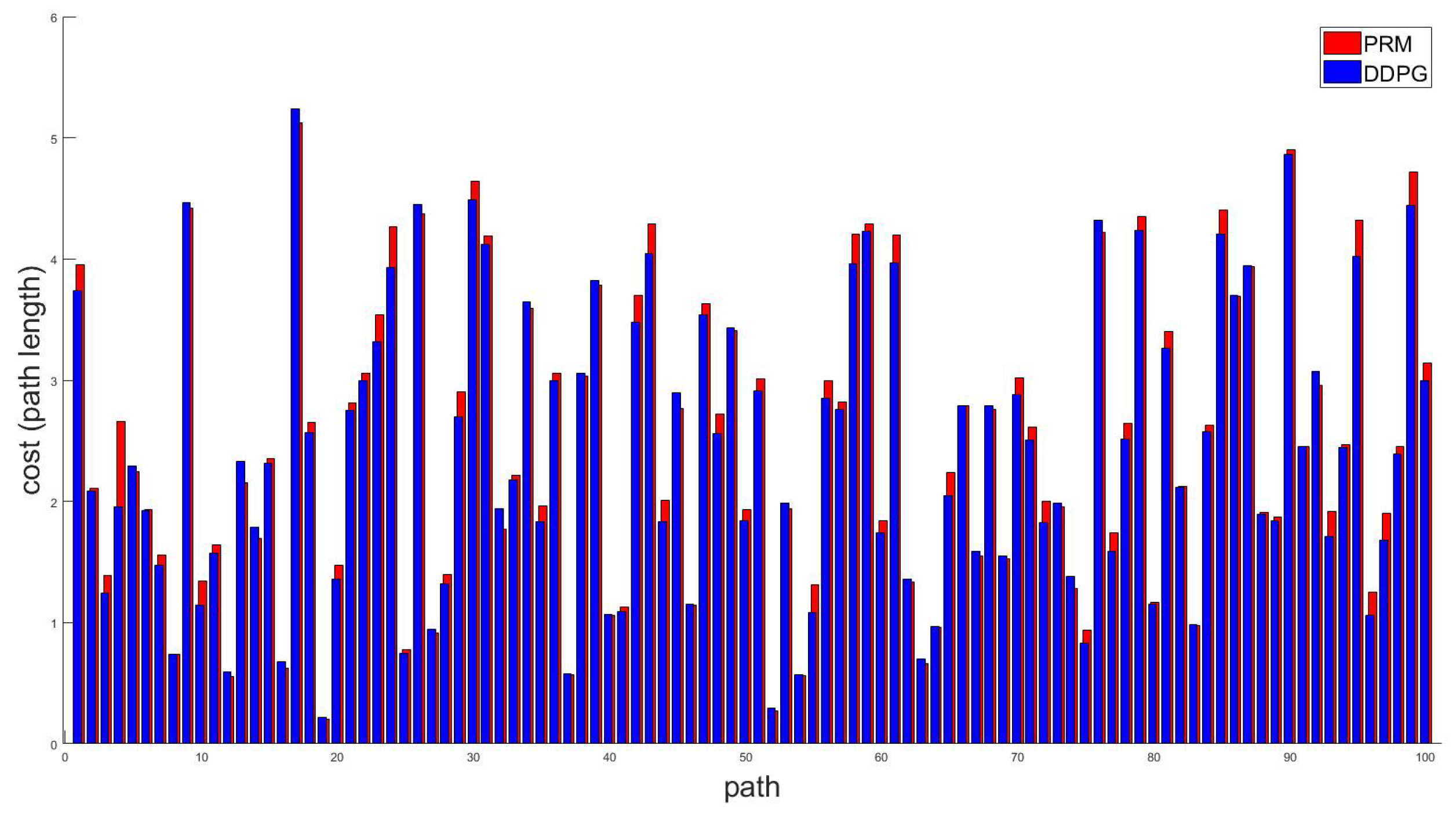

4. Case Study for 2-DOF and 3-DOF Manipulators

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDP | Markov Decision Process |
| RAMDP | Robot Arm Markov Decision Process |
| DOF | Degrees of Freedom |
| PRM | Probabilistic Roadmaps |
| RRT | Rapidly-exploring Random Trees |
| FMMs | Fast Marching Methods |
| DNN | Deep Neural Networks |
| DQN | Deep Q-Network |
| A3C | Asynchronous Advantage Actor-Critic |
| TRPO | Trust Region Policy Optimization |
| DPG | Deterministic Policy Gradient |
| DDPG | Deep Deterministic Policy Gradient |
| TD3 | Twin Delayed Deep Deterministic Policy Gradient |
| HER | Hindsight Experience Replay |
| ROS | Robot Operating System |


| DOF | Joint Max (degrees) | Joint Min (degrees) | Action Step Size () | Goal Boundary |
|---|---|---|---|---|
| 2 | (60, 60) | (0, 0) | 3.0 | 1.0 |
| 3 | (140, −45, 150) | (−140, −180, 45) | 0.1381 | 0.2 |
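To make the parameter table concrete, the following sketch shows one joint-space transition for the arm whose limits run from (0, 0) to (60, 60) degrees, with step size 3.0 and goal boundary 1.0. Several details are assumptions rather than values from the paper: the actor outputs one action component in [-1, 1] per joint that is scaled by the step size, the next configuration is clipped to the joint limits, a hypothetical `in_collision` callback stands in for the collision check, and the reward values (-10 for collision, -1 per step, 0 on arrival) are placeholders. The helper `observation` mirrors the concatenation of configuration and goal noted in Algorithm 1; all function names are illustrative.

```python
import math
from typing import Callable, Sequence, Tuple

Config = Tuple[float, ...]

# Values from the two-joint row of the parameter table (joint limits in degrees).
Q_MIN: Config = (0.0, 0.0)
Q_MAX: Config = (60.0, 60.0)
STEP_SIZE = 3.0
GOAL_BOUNDARY = 1.0

# Hypothetical reward values; the paper's actual rewards are not given here.
R_COLLISION, R_STEP, R_GOAL = -10.0, -1.0, 0.0


def observation(q: Sequence[float], goal: Sequence[float]) -> Config:
    """Policy input: configuration concatenated with the goal."""
    return tuple(q) + tuple(goal)


def step(q: Sequence[float], action: Sequence[float], goal: Sequence[float],
         in_collision: Callable[[Config], bool] = lambda c: False
         ) -> Tuple[Config, float, bool]:
    """One assumed joint-space transition; returns (next_q, reward, done)."""
    # Assumption: each action component lies in [-1, 1] and is scaled by STEP_SIZE.
    delta = [STEP_SIZE * max(-1.0, min(1.0, a)) for a in action]
    # Keep the next configuration inside the joint limits.
    next_q = tuple(min(hi, max(lo, qi + di))
                   for qi, di, lo, hi in zip(q, delta, Q_MIN, Q_MAX))
    if in_collision(next_q):
        return tuple(q), R_COLLISION, True  # stay in place and end the episode
    if math.dist(next_q, goal) <= GOAL_BOUNDARY:
        return next_q, R_GOAL, True  # goal arrival ends the episode
    return next_q, R_STEP, False
```

Scaling a bounded action by a fixed step size keeps every transition short in joint space, which is one plausible way a small step size could encourage smoother paths; the three-joint row would only change the constants.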

| Network Name | Learning Rate | Optimizer | Update Delay | DNN Size |
|---|---|---|---|---|
| Actor | 0.001 | Adam | 2 | |
| Critic | 0.001 | Adam | 0 | |
| Actor target | 0.005 | - | 2 | |
| Critic target | 0.005 | - | 2 | |
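The network settings appear consistent with common TD3 defaults: learning rate 0.001 for the actor and critics, and an update delay d = 2 for the actor and target networks. The sketch below assumes that the 0.005 listed for the target networks is the soft-update coefficient τ (target networks have no optimizer of their own), and it uses the target-policy-smoothing constants from the original TD3 paper (noise standard deviation 0.2, clip 0.5); the discount factor 0.98 is a placeholder. Networks are plain Python callables so that the update rules stay visible: the clipped double-Q target, Polyak averaging of target parameters, and the delayed-update test. The function names are illustrative.

```python
import random
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Actor = Callable[[Sequence[float]], Sequence[float]]
Critic = Callable[[Sequence[float], Sequence[float]], float]

TAU = 0.005       # assumed: the 0.005 listed for the target networks is tau
POLICY_DELAY = 2  # update delay d for the actor and target networks
GAMMA = 0.98      # placeholder discount factor (not given in the tables)


def clip(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def td3_target(reward: float, done: bool, next_obs: Sequence[float],
               actor_t: Actor, critic1_t: Critic, critic2_t: Critic,
               gamma: float = GAMMA, noise_std: float = 0.2,
               noise_clip: float = 0.5,
               rng: Optional[random.Random] = None) -> float:
    """TD target y = r + gamma * (1 - done) * min(Q1'(s', a~), Q2'(s', a~))."""
    if rng is None:
        rng = random.Random()
    # Target policy smoothing: clipped Gaussian noise on the target action,
    # then clipping to the assumed action range [-1, 1].
    next_action = [clip(a + clip(rng.gauss(0.0, noise_std), -noise_clip, noise_clip),
                        -1.0, 1.0)
                   for a in actor_t(next_obs)]
    # Clipped double-Q learning: use the smaller of the two target critics.
    q_next = min(critic1_t(next_obs, next_action), critic2_t(next_obs, next_action))
    # No bootstrapping past a terminal transition (e.g. goal arrival).
    return reward + gamma * (0.0 if done else 1.0) * q_next


def soft_update(target: Vector, online: Sequence[float], tau: float = TAU) -> None:
    """Polyak averaging, in place: theta' <- tau * theta + (1 - tau) * theta'."""
    for i, w in enumerate(online):
        target[i] = tau * w + (1.0 - tau) * target[i]


def is_delayed_step(step: int, d: int = POLICY_DELAY) -> bool:
    """True on steps where the actor and target networks are updated."""
    return step % d == 0
```

Taking the minimum of two critics counters the overestimation bias of a single learned Q-function, and updating the actor only every d critic updates lets the value estimate settle before the policy follows it.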

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, M.; Han, D.-K.; Park, J.-H.; Kim, J.-S. Motion Planning of Robot Manipulators for a Smoother Path Using a Twin Delayed Deep Deterministic Policy Gradient with Hindsight Experience Replay. Appl. Sci. 2020, 10, 575. https://doi.org/10.3390/app10020575