1. Introduction

Artificial Intelligence (AI) has rapidly shifted from pilot applications to mainstream adoption in marketing practices. It now supports customer service, personalization, pricing, and decision-making processes, reshaping the entire marketing cycle (Huang & Rust, 2021; Kumar et al., 2024). This technological transformation generates new opportunities for firms to increase efficiency and deliver tailored customer experiences, yet it also raises pressing questions about trust, transparency, and ethical responsibility (Awad & Krishnan, 2006; Martin & Murphy, 2017; Ameen et al., 2021).

However, while prior research has advanced our understanding of AI’s technical and operational benefits, far less attention has been given to the human side of adoption—particularly how consumers evaluate AI-based personalization through the lenses of trust, ethics, and identity. This gap motivates the present study, which focuses on the psychological and social foundations of AI acceptance.

The debate around the “personalization–privacy paradox” highlights this tension: consumers appreciate customized experiences but hesitate when personalization requires extensive data collection (Awad & Krishnan, 2006; Martin & Murphy, 2017). Transparency in data practices can reduce skepticism, though it rarely resolves it entirely. Moreover, research on algorithmic decision-making reveals diverging perspectives. On one hand, studies have shown “algorithm aversion,” where individuals avoid algorithmic advice after observing even a single error (Dietvorst et al., 2015). On the other hand, evidence suggests “algorithm appreciation,” with people trusting algorithmic advice more than human advice under certain conditions (Logg et al., 2019). These contrasting findings underscore an unresolved debate about consumer trust in AI-driven marketing. Despite these valuable insights, limited evidence exists on how these attitudes translate into acceptance of AI-based personalized advertising, a domain where algorithmic targeting directly interacts with consumer identity and ethical perception (Ameen et al., 2021; Gursoy et al., 2019).

Beyond functional benefits or privacy concerns, consumer acceptance is also influenced by identity. Consumption often serves as a means of self-expression and differentiation from others (Berger & Heath, 2007). Practices such as AI-driven personalization may therefore face resistance when perceived as threatening consumers’ autonomy or their desired self-image. Against this backdrop, the present study investigates the factors shaping acceptance of AI-based personalized advertising in Greece. Drawing on a survey of 650 participants, we examine the role of (a) trust and ethical perceptions toward AI (AI TrustEthics), (b) individual exposure and familiarity with AI (AI ExposureUse), (c) digital consumer behavior (Digital Behavior), and (d) consumption identity (Consumption Identity). By analyzing these dimensions with logistic regression models, we aim to disentangle their relative contributions to acceptance.

Specifically, this study seeks to answer the following research questions:

How do trust and perceived ethics influence acceptance of AI-driven personalization?

How does familiarity with AI shape openness to personalized advertising?

What roles do digital behavior and consumption identity play in this process?

How do demographic factors such as age, gender, education, and socioeconomic status moderate these effects?

This study makes three contributions. First, it enriches current discussions on AI adoption in marketing by integrating ethical perceptions with identity-related mechanisms. Second, it provides empirical insights from Greece, thereby extending the geographical and cultural scope of existing literature. Third, it highlights practical implications for managers and policymakers, emphasizing the importance of building trust, ensuring responsible AI practices, and respecting consumer values in digital marketing strategies. Collectively, these contributions advance understanding of how ethical trust and personal identity jointly shape consumer reactions to AI personalization, offering a human-centered perspective on technology acceptance in marketing.

2. Theoretical Framework

The discussion around artificial intelligence (AI) in marketing has been growing rapidly in recent years, as this technology expands the possibilities of personalization and fundamentally reshapes the relationship between firms and consumers. However, the acceptance of such applications is not self-evident; it depends on several factors related to trust, familiarity, everyday digital behavior, and consumer identity. The following sections present the key theoretical concepts that form the foundation of this study.

2.1. Trust and Ethics in AI

In marketing contexts, trust is the first hurdle any AI system must clear. Consumers do not judge AI only on whether it “works”; they also weigh whether its operation is understandable, fair, and respectful of their rights. Work on AI in services shows that adoption hinges on both technical reliability and perceived integrity, that is, whether the system behaves in ways that align with social and ethical expectations (Huang & Rust, 2021). In line with acceptance models such as TAM/UTAUT, trust provides the psychological bridge from perceived usefulness/ease to actual willingness to use (Venkatesh et al., 2012). When applied to AI, trust combines confidence in performance with the belief that the system will act responsibly, transparently, and without exploiting user data (Gefen, 2000).

Recent classifications of trust in AI-based decision support further unpack this idea into facets such as competence, transparency/understandability, fairness, and user control, each of which can lift or erode willingness to rely on AI outputs (Glikson & Woolley, 2020). These principles are also reflected in the concept of Corporate Digital Responsibility (CDR), which extends corporate social responsibility to digital practices and calls for transparency, accountability, and user autonomy in the design and governance of AI systems (Lobschat et al., 2021).

Ethical frameworks offer concrete anchors for this orientation: principles like beneficence, non-maleficence, autonomy, justice, and explicability suggest that AI used for personalization should be not only effective but also explainable and fair in its data practices (Floridi et al., 2018; Dignum, 2019). When firms signal this stance consistently (through consent practices, clear explanations, and meaningful recourse), trust becomes easier to grant and maintain.

Trust is also tested in the everyday trade-offs of data-driven marketing. The well-documented personalization–privacy paradox captures a persistent tension: people value tailored experiences yet hesitate when personalization requires extensive data collection or opaque tracking (Awad & Krishnan, 2006; Martin & Murphy, 2017). Perceptions of algorithmic fairness and bias have become increasingly relevant; studies show that even minor biases in AI decision-making can undermine consumer confidence (Li et al., 2023). Design and communication choices can mitigate these concerns. Greater algorithmic transparency (clarifying what data are used, why recommendations appear, and how users can adjust settings) tends to improve acceptance. Likewise, subtle anthropomorphic cues that express competence or friendliness can enhance perceived trustworthiness when they remain realistic (Li et al., 2023). Ultimately, trust is earned through responsible design and governance, not assumed.

In this study, we treat trust/ethics as a multidimensional driver of acceptance for AI-based personalized advertising. The construct speaks to whether consumers view AI-enabled targeting as reliable, transparent, privacy-respecting, and fair.

Based on the above, we expect that higher perceived trust and ethicality will be associated with greater acceptance of AI-driven personalization.

H1. Higher levels of trust and perceived ethical standards in AI will positively influence consumer acceptance of AI-based personalized advertising.

2.2. Familiarity and Digital Behavior

The acceptance of new technologies often depends on the extent to which users are familiar with them. In the case of artificial intelligence, exposure and personal experience play a crucial role: the more consumers encounter AI applications in their daily lives, the easier it becomes for them to trust and adopt these systems. According to the Unified Theory of Acceptance and Use of Technology, familiarity and repeated interaction reduce uncertainty and increase willingness to adopt innovations (Venkatesh et al., 2012).

Studies have shown that consumers who have already interacted with smart assistants, chatbots, or algorithm-based recommendations are more likely to accept additional AI applications in marketing. For example, Gursoy et al. (2019) found that familiarity and prior experience with AI devices in hospitality services improved customers’ attitudes toward these devices and increased their willingness to accept them. Similarly, Nagy and Hajdu (2021) found that consumers’ trust and perceived ease of use significantly influence their positive attitudes toward AI-based online services, increasing their willingness to adopt such technologies. Recent work also shows that familiarity shapes expectations of fairness, transparency, and usefulness, all of which determine behavioral intention toward AI (Dwivedi et al., 2019).

Familiarity, however, is not limited to usage but also involves understanding. Wang et al. (2024) highlight that “AI literacy” helps consumers interpret algorithmic recommendations more effectively and makes them more open to accepting them. This suggests that acceptance is shaped not only by passive exposure but also by consumers’ perceived ability to make sense of how AI systems operate. Consumers with higher literacy are less likely to feel manipulated and more likely to perceive personalization as beneficial rather than invasive.

At the same time, consumers’ everyday digital routines shape how they perceive and respond to personalization. The shift from traditional retail to digital environments has produced not only new ways of shopping but also new behavioral patterns that influence acceptance of technological innovations. Research on multi-channel and omni-channel retailing emphasizes that consumers who actively move across digital platforms (social media, e-commerce, and mobile apps) tend to be more open to digital personalization strategies (Verhoef et al., 2015). Similarly, Lazaris and Vrechopoulos (2014) argue that the omni-channel consumer is accustomed to seamless experiences across devices and platforms, making them more receptive to AI-driven recommendations and advertising. Recent findings show that frequent digital engagement builds “digital maturity,” which makes consumers more receptive to algorithmic decision aids and recommendation systems (Riandhi et al., 2025).

Taken together, prior research suggests that consumers with more frequent and intensive digital behaviors are more likely to embrace AI-based personalization. Their continuous exposure to digital platforms builds familiarity with recommendation systems and normalizes algorithmic guidance, thus reducing resistance to AI-powered advertising.

H2. Greater familiarity and exposure to AI technologies will increase consumer acceptance of AI-based personalized advertising.

H3. More frequent and intensive digital behaviors will increase consumer acceptance of AI-based personalized advertising.

2.3. Consumption and Identity

Consumption is not merely about functional utility; it also serves as a means of self-expression and identity construction. Belk’s (1988) concept of the “extended self” highlights how possessions and brands become integral parts of how individuals define themselves (Belk, 2013). Social identity theory also helps explain this behavior. People define themselves not only as individuals but also as members of social groups. Through their choices, they signal connection with some groups and distance from others. In this sense, consumption is a symbolic act: it communicates meaning about who someone is, what they value, and where they belong. Brands, digital services, and even algorithms can serve as “identity markers” that help consumers maintain coherence between their self-image and their social image.

Recent studies have also shown that when personalization technologies support this sense of identity, consumers respond positively, but when they threaten it, resistance grows. Arsenyan and Mirowska (2021) found that users reject AI-driven recommendations when they feel a loss of control over self-expression. Similarly, Mikalef et al. (2020) observed that identity threat and privacy concerns can weaken trust in algorithmic personalization, even when the outcomes are accurate. In contrast, alignment between algorithmic messages and the consumer’s self-concept increases acceptance and perceived value (Chernev et al., 2011).

In short, identity considerations lie at the heart of how people respond to AI-driven marketing. Personalization does not occur in a neutral space—it interacts with deeply rooted psychological and social needs for self-expression, differentiation, and belonging. This makes identity considerations central to any framework of consumer acceptance of AI technologies. When AI-based personalization resonates with how people see themselves—and supports their desire for authenticity—it is more likely to be welcomed rather than resisted.

H4. When personalization strategies align with consumers’ self-concept and identity motives, acceptance of AI-based personalized advertising will increase.

2.4. Conceptual Model and Hypotheses

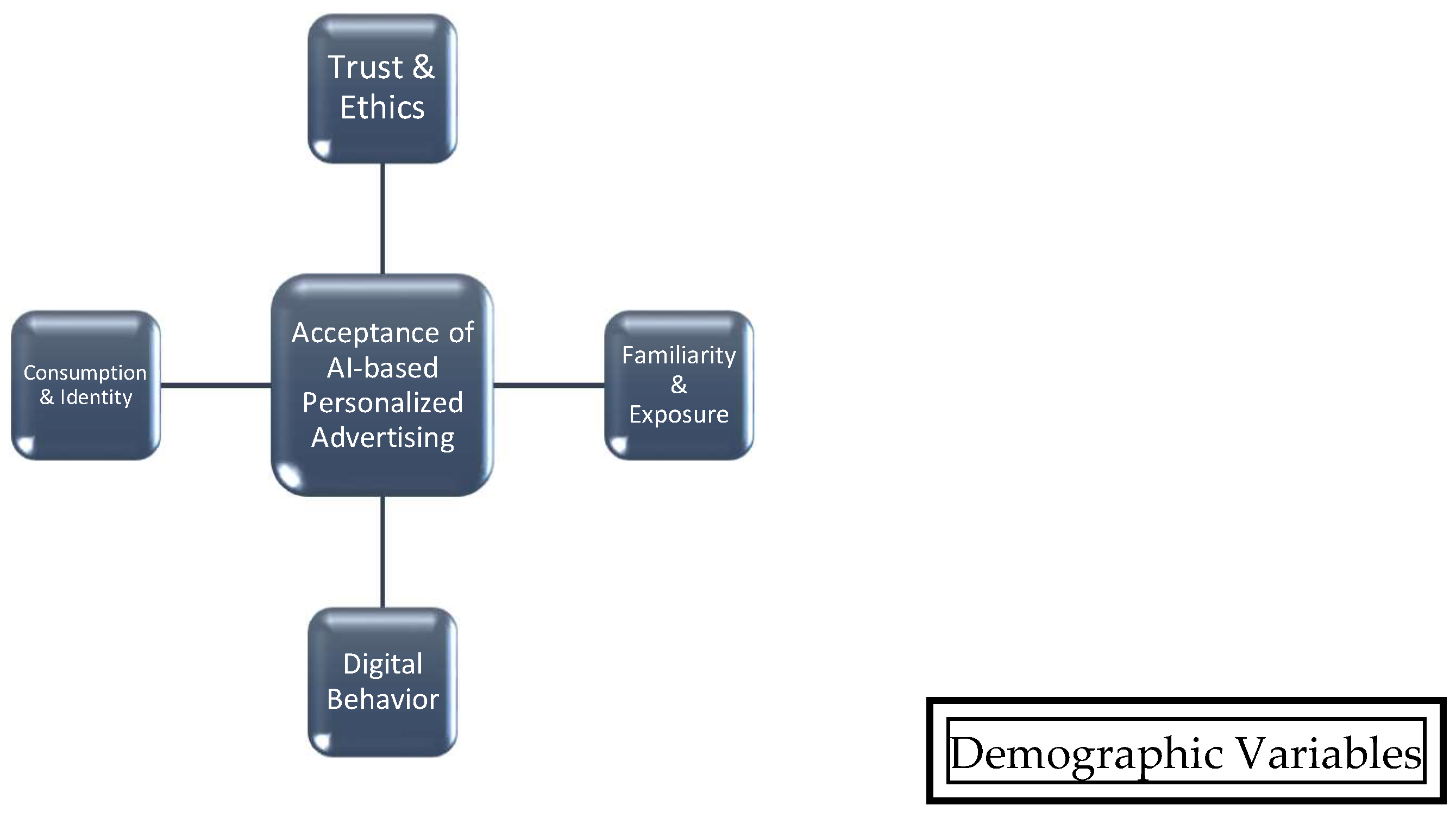

Based on the theories discussed in the previous sections, this study develops a framework that explains how consumers accept AI-based personalized advertising. The model is built around four main factors: trust and ethics in AI, familiarity and exposure to AI, digital consumer behavior, and consumption and identity. These factors capture different sides of how people experience and react to AI when it is used in marketing.

Trust and ethics describe whether consumers believe that AI works in a fair, transparent, and responsible way. Familiarity and exposure refer to how much contact people have already had with AI, and how comfortable they feel using it. Digital consumer behavior reflects everyday habits, such as shopping online, using mobile apps, and interacting with social media, which often make people more open to algorithmic personalization. Finally, consumption and identity focus on how people use products and services to express who they are, and how AI-driven personalization can either support or challenge this process.

In addition, the framework also considers demographic factors such as age, gender, education, and socioeconomic status. These variables are not the main focus of the theory but are important controls, since previous studies show that they can influence attitudes toward technology and marketing practices.

Each of these constructs represents a theoretical pathway derived from prior research and corresponds to one of the hypotheses developed in the previous sections (H1–H4). The proposed model suggests that acceptance of AI-based personalized advertising increases when consumers perceive AI as ethical and trustworthy, feel familiar and confident with its use, engage actively in digital environments, and see AI personalization as consistent with their identity. Demographic factors are treated as control variables that may shape these relationships.

Figure 1 illustrates the conceptual model and the hypothesized relationships between constructs and acceptance of AI-based personalized advertising.

3. Materials and Methods

3.1. Study Design

This study followed a cross-sectional, survey-based design conducted as part of a doctoral dissertation. Data were collected using a structured questionnaire designed to capture consumers’ attitudes toward AI in marketing, their experience and exposure to AI tools, everyday digital behaviors, and key demographic characteristics. The questionnaire was organized into four thematic sections and one brief demographics block, covering the following areas:

- AI attitudes/ethics: awareness, trust, perceived ethics, and acceptance of AI-driven advertising.
- AI experience/use: frequency of interaction with AI applications (e.g., chatbots, ChatGPT-3.5, or use of AI at work).
- Digital consumer behavior: online purchasing by product category, pre-purchase research habits, and cross-channel behaviors (showrooming/webrooming).
- Social influence and consumption-as-identity: perceived influence of others on purchases and the degree to which purchases express personal identity.
- Demographics: age, gender, education, marital status, job responsibility, and income satisfaction.

To enhance representativeness, the survey was administered using both online and paper-based questionnaires. The online version was distributed via Google Forms, while 145 printed copies were administered in public spaces to include respondents with limited digital connectivity. This mixed-mode approach ensured broader demographic coverage and reduced digital-savviness bias.

Only adults aged 23 and above were included, ensuring that respondents had independent purchasing experience and sufficient digital literacy to provide informed opinions about AI-based marketing practices.

3.2. Sample and Data-Collection Procedure

Data were collected from December 2024 to April 2025 and targeted adult consumers across Greece. To limit bias toward digitally savvy respondents, we used a mixed-mode administration: online via Google Forms, and paper questionnaires, to include participants with limited digital familiarity.

We obtained 650 complete questionnaires (505 online and 145 paper-based). Paper forms were coded using the same template as the online responses and merged into a single dataset. Skip logic was applied in the online version to ensure that only participants with basic familiarity with AI proceeded to the main questionnaire. Variables followed a common coding scheme (e.g., binary 0/1 for Yes/No, Likert 1–5 where applicable), and composite indices were computed as mean scores, standardized where necessary to ensure consistency across variables. Reliability and internal consistency were assessed using Cronbach’s alpha, with all constructs above 0.70. Disclosure regarding AI tools: Generative AI (ChatGPT-3.5) was not used for data analysis or interpretation but only for grammar and language editing during manuscript revision, following journal policy. All participation was voluntary and anonymous, and respondents provided informed consent before completing the survey.

3.3. Measures and Variable Coding

All measures followed a consistent coding and scaling procedure (binary: 1 = Yes, 0 = No; Likert: 1–5). For constructs composed of multiple items, we assessed internal consistency using Cronbach’s alpha (target α ≥ 0.70). Composite indices were computed as means of their items, ignoring system-missing values. When components used different metrics (e.g., binary counts and Likert scores), variables were standardized (Z-scores) prior to averaging to prevent artificial weighting due to scale differences (Field, 2018; Tabachnick & Fidell, 2019).

Dependent variable

Accept_AI_Ads (binary). Acceptance of AI-driven advertising, coded 1 = Yes, 0 = No.

Main predictors/indices

AI_TrustEthics (0–1). Attitude toward AI from two binary items: “Do you trust AI?” and “Do you consider AI ethical?”

Computation: MEAN (trust, ethics). Cronbach’s α = 0.800 after excluding the “service by smart robot” item, which lowered reliability. Higher scores reflect stronger trust and perceived ethics.

Skip logic: For respondents who reported not knowing what AI is, these two items were set to system-missing and were not imputed.

AI_ExposureUse (0–1). Experience/exposure to AI from three binary items: use of chatbots, use of ChatGPT-3.5, use of AI at work.

Computation: MEAN (chatbots, CHATGPT_usage, ai_work). Cronbach’s α = 0.819. Higher scores indicate greater exposure/use.
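
As an illustration of the reliability check described above, the following minimal sketch computes Cronbach’s alpha from item-level responses using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score). The function name `cronbach_alpha` and the toy responses are assumptions made for illustration; they are not the study data and will not reproduce the reported coefficients.

```python
from statistics import pvariance

def cronbach_alpha(items):
    """Cronbach's alpha for a list of item columns (one list of scores per item)."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items need the same number of respondents")
    # Total score per respondent (sum across items).
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance(totals)
    # The same variance definition is used in numerator and denominator,
    # so the population/sample choice cancels out in the ratio.
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical toy data: three binary items for six respondents.
chatbots = [1, 1, 0, 0, 1, 0]
chatgpt = [1, 1, 0, 0, 1, 1]
ai_work = [1, 0, 0, 0, 1, 0]

alpha = cronbach_alpha([chatbots, chatgpt, ai_work])
print(f"alpha = {alpha:.3f}")
```

With binary items, as here, this formula coincides with the Kuder–Richardson 20 coefficient.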

Digital_Behavior (composite). Built from three components, each standardized and then averaged:

Online_Breadth: count of product/service categories the respondent purchases online (sum of Yes across categories).

Prepurchase_Research: (a) indicator of research before purchase (Yes/No) plus (b) the count of criteria checked (e.g., price, reviews, availability).

OmniChannel: showrooming + webrooming (range 0–2).

Final index: Digital_Behavior = MEAN (Z_Online_Breadth, Z_Prepurchase_Research, Z_OmniChannel). Standardization ensures fair weighting across heterogeneous metrics (Field, 2018).
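
The construction of the Digital_Behavior index can be sketched as follows: each component is converted to Z-scores and the three standardized components are averaged per respondent. This is a minimal sketch under stated assumptions: the helper names `zscores` and `composite_mean` are hypothetical, the toy values are invented, the sample standard deviation (n − 1) is assumed, and missing values are not handled.

```python
from statistics import mean, stdev

def zscores(values):
    """Standardize a column to mean 0 and SD 1 (sample SD, n - 1; an assumption)."""
    m, s = mean(values), stdev(values)
    if s == 0:
        raise ValueError("a constant column cannot be standardized")
    return [(v - m) / s for v in values]

def composite_mean(*columns):
    """Row-wise mean of several equally long columns."""
    return [mean(row) for row in zip(*columns)]

# Hypothetical toy data for four respondents (not the study data).
online_breadth = [0, 2, 4, 6]        # number of categories purchased online
prepurchase_research = [1, 2, 3, 4]  # research indicator plus criteria count
omnichannel = [0, 1, 1, 2]           # showrooming + webrooming, range 0-2

digital_behavior = composite_mean(
    zscores(online_breadth),
    zscores(prepurchase_research),
    zscores(omnichannel),
)
print([round(v, 3) for v in digital_behavior])
```

Because each component has a mean of zero after standardization, the composite also has a mean of zero, which matches the M = 0.00 reported for a standardized index; its standard deviation is generally below 1 unless the components are perfectly correlated.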

Social influence. Initially tested as a multi-item construct but internal consistency was insufficient; therefore, we used a single-item measure (influence_by_others_2, Likert 1–5). The single-item choice is acceptable for clear, unidimensional attributes (Wanous et al., 1997; Bergkvist & Rossiter, 2007). Higher values indicate stronger perceived influence of others.

Demographics/controls

Age (years, continuous), Gender (0/1), Master (1 = Yes), Married (1 = Yes), Job_responsibility (1 = Yes), Income_satisfaction (1–5). These variables served as controls in the hierarchical logistic models.

Reliability and quality checks

3.4. Statistical Protocol

All statistical analyses were conducted in IBM SPSS Statistics 29.0 (Advanced Statistics) with a two-tailed α = 0.05. Data quality and completeness were checked before modeling. Items contingent on AI awareness were kept as system-missing (skip logic) and not imputed. Composite indices were calculated as mean scores and standardized (Z-scores) when different metrics were involved, to ensure equal weighting (Field, 2018; Tabachnick & Fidell, 2019).

Descriptive statistics (mean, SD, range) and Pearson correlations were reported for all variables. Regression models used listwise deletion; valid N was reported in each analysis. For linear models, assumptions of normality and homoscedasticity, multicollinearity (Tolerance/VIF), and influential observations (Cook’s D, leverage, studentized deleted residuals) were assessed (Field, 2018; Tabachnick & Fidell, 2019).

The main prediction (acceptance of AI-driven advertising) was tested with binary logistic regression using the Enter method in three steps:

- (1) AI_TrustEthics and AI_ExposureUse (baseline),
- (2) adding Digital_Behavior and Social influence (extended), and
- (3) a hierarchical model with Block 1 demographics, Block 2 AI indices, and Block 3 behavioral factors.

We report the Omnibus χ², Nagelkerke R², the Hosmer–Lemeshow test, the classification table, and Wald statistics, p-values, and odds ratios [Exp(B)] with 95% CIs (Hosmer et al., 2013). Model discrimination is summarized with ROC/AUC from predicted probabilities (Fawcett, 2006).
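
To make two of the reported fit statistics concrete, the sketch below derives the Omnibus χ² and Nagelkerke R² from the log-likelihoods of the intercept-only and fitted models, following the standard definitions (Cox–Snell R² rescaled by its maximum attainable value). The function names and the numeric inputs are hypothetical and chosen only for illustration; they are not taken from the study output.

```python
import math

def omnibus_chi2(ll_null, ll_model):
    """Likelihood-ratio chi-square: 2 * (LL_model - LL_null)."""
    return 2.0 * (ll_model - ll_null)

def nagelkerke_r2(ll_null, ll_model, n):
    """Nagelkerke R^2 = Cox-Snell R^2 divided by its maximum attainable value."""
    cox_snell = 1.0 - math.exp((2.0 / n) * (ll_null - ll_model))
    max_cox_snell = 1.0 - math.exp((2.0 / n) * ll_null)
    return cox_snell / max_cox_snell

# Hypothetical log-likelihoods and sample size (not the study values).
ll_null, ll_model, n = -250.0, -175.0, 500

chi2 = omnibus_chi2(ll_null, ll_model)
r2 = nagelkerke_r2(ll_null, ll_model, n)
print(f"Omnibus chi2 = {chi2:.2f}, Nagelkerke R2 = {r2:.3f}")
```

The rescaling is what allows the statistic to reach 1 for a perfectly fitting model, which the unadjusted Cox–Snell value cannot do for a binary outcome.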

Robustness checks included an OLS model with AI_TrustEthics as the DV. When testing interactions, continuous predictors were centered/standardized before creating the interaction term (Aiken & West, 1991; Hayes, 2018). As further robustness checks, alternative classification cut-offs (0.40/0.60) and inspection of influential cases produced consistent results.
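
The centering step mentioned above can be illustrated with a short sketch: each continuous predictor is mean-centered and the interaction term is formed as the product of the centered variables. The text does not state which interactions were tested, so the variable pairing, the helper names `center` and `interaction_term`, and the toy values below are purely hypothetical.

```python
from statistics import mean

def center(values):
    """Subtract the column mean so that the centered variable has mean 0."""
    m = mean(values)
    return [v - m for v in values]

def interaction_term(x, w):
    """Product of two mean-centered predictors, one value per respondent."""
    return [a * b for a, b in zip(center(x), center(w))]

# Hypothetical toy data (not the study data).
trust = [0.0, 0.5, 1.0, 1.0]
age = [25, 40, 55, 60]

trust_x_age = interaction_term(trust, age)
print([round(v, 3) for v in trust_x_age])
```

Centering before multiplication reduces the correlation between the product term and its components and makes the lower-order coefficients interpretable as effects at the mean of the other predictor.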

4. Results

4.1. Descriptive Statistics

The final sample consists of 650 participants aged between 23 and 85 years (M = 39.7, SD = 15.07). The gender distribution is fairly balanced (46% men, 54% women). Regarding education, a notable share (31%) hold a master’s degree, and 49% report having a position of responsibility at work. About 26% are employed in the public sector, while 46% are married. In terms of income, most respondents fall in the mid-range categories, with a mean score of 3.54 on a six-point scale, corresponding mainly to €901–€1200 per month.

For the main constructs developed in this study: AI_TrustEthics—capturing trust in AI and its perceived ethical dimension—has a mean of 0.47 (SD = 0.46) on a 0–1 scale. This indicates that consumer opinions are divided—roughly half remain cautious, while the other half express moderate trust in AI.

AI_ExposureUse, reflecting familiarity with and use of AI tools (e.g., chatbots, ChatGPT-3.5, Leonardo AI), shows a mean of 0.34 (SD = 0.42), suggesting relatively limited direct experience with AI technologies among respondents. The composite Digital_Behavior index—combining breadth of online purchasing, pre-purchase research, and omnichannel practices—is standardized (M = 0.00, SD = 0.82). This variation shows clear differences within the sample: some consumers are highly active online, whereas others still rely mainly on traditional shopping patterns.

Influence_by_others, which measures how much purchase decisions are affected by friends, family, or influencers, has a mean of 0.53, indicating that social influence plays a noticeable role for about half of the participants. Finally, Consumption_Identity (“To what extent do your purchases express your personality/identity?”) has a mean of 3.70 on a 1–5 scale, showing that a substantial part of the sample views consumption as an expression of self-image and personal identity. Descriptive statistics of the main constructs are presented in Table 1.

4.2. Correlations

Before running the regression models, we examined how the main variables relate to each other. Table 2 reports Pearson correlations for AI_TrustEthics (trust in and perceived ethics of AI), AI_ExposureUse (familiarity with and use of AI tools), Digital_Behavior (standardized composite), Influence_by_others, and Consumption_Identity. Pearson’s r was considered appropriate for these continuous or composite measures, even when constructed from Likert items, since such indices can be treated as approximately continuous without introducing meaningful bias (Norman, 2010; Carifio & Perla, 2008).

Two results are clear. First, AI_TrustEthics is strongly and positively related to AI_ExposureUse (r = 0.508, p < 0.001). In practical terms, respondents with greater hands-on experience with AI (e.g., chatbots, generative tools) tend to express higher trust and perceive AI as more ethically acceptable. Prior studies have shown a similar pattern: direct experience reduces uncertainty and supports acceptance (Gursoy et al., 2019; Lu et al., 2019). Second, AI_TrustEthics has a small but significant positive correlation with Digital_Behavior (r = 0.147, p < 0.001). More digitally active consumers tend to display slightly more favorable attitudes toward AI, in line with work on digitally mature customer journeys (Lemon & Verhoef, 2016).

By contrast, Influence_by_others is not meaningfully associated with the other indices. This suggests that acceptance of AI in marketing is shaped more by personal attitudes and personal experience than by perceived social pressure, consistent with broader evidence on technology acceptance (Venkatesh et al., 2012). Finally, Consumption_Identity shows a negative association with Digital_Behavior (r = −0.172, p < 0.001): as online engagement becomes broader and more systematic, purchases appear less tied to expressions of personal identity. The correlations among the main variables are presented in Table 2.

4.3. Logistic Regression—Model 1 (Baseline)

Model 1 estimates the likelihood of accepting AI-driven advertising (DV: Accept_AI_Ads, 1 = Yes, 0 = No) from two predictors: AI_TrustEthics and AI_ExposureUse.

Model fit. The logistic regression model was statistically significant (Omnibus χ²(2) = 148.75, p < 0.001) and shows satisfactory explanatory power (Nagelkerke R² = 0.416). The Hosmer–Lemeshow goodness-of-fit test indicated some miscalibration (p < 0.001), although inspection of the calibration plot showed no systematic bias between observed and predicted probabilities. With the default 0.50 cut-off, overall accuracy is about 79.5%, with a mild tendency to over-classify non-acceptance (0) cases, a common outcome in unbalanced samples. Model discrimination was high, confirming that the model reliably distinguishes between acceptance and non-acceptance.
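
Model discrimination of this kind is usually summarized by the area under the ROC curve (AUC), which equals the probability that a randomly chosen acceptance case receives a higher predicted probability than a randomly chosen non-acceptance case. The sketch below computes it by pairwise comparison; the function name `auc` and the toy outcomes and probabilities are assumptions for illustration, and the result is not the study’s AUC.

```python
def auc(y_true, p_hat):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [p for y, p in zip(y_true, p_hat) if y == 1]
    neg = [p for y, p in zip(y_true, p_hat) if y == 0]
    if not pos or not neg:
        raise ValueError("both outcome classes must be present")
    wins = 0.0
    for pp in pos:
        for pn in neg:
            if pp > pn:
                wins += 1.0
            elif pp == pn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Hypothetical toy data: observed outcome and predicted probability.
y_toy = [0, 0, 0, 0, 1, 1, 1, 0]
p_toy = [0.10, 0.20, 0.45, 0.55, 0.42, 0.70, 0.90, 0.30]

auc_toy = auc(y_toy, p_toy)
print(f"AUC = {auc_toy:.3f}")
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two classes.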

Coefficients. AI_TrustEthics is a strong, positive, and statistically significant predictor (B = 4.11, Exp(B) = 61.00, p < 0.001). This finding supports H1, showing that higher trust and stronger perceptions of AI as ethical substantially increase the odds of accepting AI-driven personalization.

In contrast, AI_ExposureUse is not significant (B = 0.06, Exp(B) = 1.06, p = 0.86), suggesting that simple familiarity or sporadic use of AI tools does not meaningfully influence acceptance in the absence of perceived trust or ethical assurance. Marginal effects analysis further showed that a one-standard-deviation increase in AI_TrustEthics raised the probability of acceptance by approximately 18%, while variation in AI_ExposureUse had no measurable effect. Overall, Model 1 provides partial support for the theoretical expectations: H1 is confirmed, whereas H2 is not yet supported in this baseline specification. The results of the binary logistic regression analysis are presented in Table 3.
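
The link between the coefficients and odds ratios reported above is Exp(B) = e^B. The sketch below checks this for the two Model 1 coefficients and shows how an odds ratio translates into a change in predicted probability from a given baseline. The helper names, the standard error passed to `wald_ci`, and the 5% baseline probability are hypothetical; the text does not report them.

```python
import math

def odds_ratio(b):
    """Exp(B): multiplicative change in the odds per one-unit increase in the predictor."""
    return math.exp(b)

def wald_ci(b, se, z=1.96):
    """Approximate 95% Wald confidence interval for the odds ratio."""
    return math.exp(b - z * se), math.exp(b + z * se)

def shifted_probability(p0, b, delta=1.0):
    """Predicted probability after moving the predictor by `delta` from baseline p0."""
    odds = p0 / (1.0 - p0) * math.exp(b * delta)
    return odds / (1.0 + odds)

# Coefficients as reported in the text for Model 1 (rounded values).
or_trust = odds_ratio(4.11)     # close to the reported Exp(B) of 61.00
or_exposure = odds_ratio(0.06)  # close to the reported Exp(B) of 1.06

# Hypothetical standard error and baseline probability, for illustration only.
ci_low, ci_high = wald_ci(4.11, se=0.5)
p_after = shifted_probability(p0=0.05, b=4.11)
print(round(or_trust, 2), round(or_exposure, 2), round(p_after, 3))
```

The last line illustrates why a very large odds ratio does not mean the probability itself is multiplied by that factor: on a 0–1 index, a one-unit change spans the whole scale, and the resulting probability depends on the starting point.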

4.4. Logistic Regression—Model 2 (Enhanced)

Model 2 examines whether adding behavioral and social factors improves prediction of acceptance of AI-driven advertising (DV: Accept_AI_Ads, 1 = Yes, 0 = No) beyond basic attitudes toward AI and familiarity with it. The predictors included AI_TrustEthics, AI_ExposureUse, Digital_Behavior (standardized composite), and Influence_by_others.

Model fit. The model is statistically significant (Omnibus χ²(4) = 151.37, p < 0.001) and shows a slightly higher explanatory power than the baseline (Nagelkerke R² = 0.423 vs. 0.416). Model calibration improves meaningfully: the Hosmer–Lemeshow test is non-significant (p = 0.106), indicating acceptable agreement between predicted and observed values. Overall accuracy remains around 79.5%, but class balance is better than in Model 1: the model correctly classifies 87% of the “0” cases (non-acceptance) and about 49.5% of the “1” cases (acceptance).
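
The classification percentages above depend on the chosen probability cut-off. The following sketch builds a classification table from observed outcomes and predicted probabilities and shows how the class-specific rates shift when the cut-off moves from 0.50 to 0.40. The function name, the rule that a case is classified as 1 when its probability is at or above the cut-off, and the toy data are assumptions for illustration only.

```python
def classification_table(y_true, p_hat, cutoff=0.50):
    """Correct-classification rates for each class and overall at a given cut-off."""
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, p_hat):
        pred = 1 if p >= cutoff else 0  # tie rule is an assumption
        if y == 1 and pred == 1:
            tp += 1
        elif y == 1 and pred == 0:
            fn += 1
        elif y == 0 and pred == 1:
            fp += 1
        else:
            tn += 1
    return {
        "pct_correct_0": tn / (tn + fp),  # non-acceptance cases classified correctly
        "pct_correct_1": tp / (tp + fn),  # acceptance cases classified correctly
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

# Hypothetical toy data (not the study data).
y_obs = [0, 0, 0, 0, 1, 1, 1, 0]
p_pred = [0.10, 0.20, 0.45, 0.55, 0.42, 0.70, 0.90, 0.30]

table_050 = classification_table(y_obs, p_pred, cutoff=0.50)
table_040 = classification_table(y_obs, p_pred, cutoff=0.40)
print(table_050)
print(table_040)
```

In this toy example, lowering the cut-off raises the share of acceptance cases classified correctly at the expense of non-acceptance cases while overall accuracy stays the same, which is why both class-specific rates are worth reporting alongside accuracy.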

Coefficients and interpretation. AI_TrustEthics remains the only strong and statistically significant predictor (B ≈ 4.07, Exp(B) = 58.40, p < 0.001). A one-unit increase in trust/perceived ethics is associated with a large multiplicative increase in the odds of accepting AI-personalization. By contrast, AI_ExposureUse (p ≈ 0.64), Digital_Behavior (p ≈ 0.67), and Influence_by_others (p ≈ 0.115) do not show independent statistical contributions in this specification. When trust/ethics is accounted for, neither bare familiarity with AI, nor the intensity of digital consumer behaviour, nor declared social influence adds materially to prediction. The results of the enhanced logistic regression model are presented in Table 4.

Adding Digital_Behavior and Influence_by_others improves calibration (HL test) and marginally raises explained variance, but the core result remains: trust and perceived ethics of AI is the decisive driver of acceptance of AI-personalized advertising, while the additional variables do not exhibit independent effects in this model. Subsequent analysis proceeds with a hierarchical specification including demographic controls to assess their incremental contribution. Thus, when trust and ethical perceptions are controlled for, neither general familiarity with AI (H2) nor broader digital engagement (H3) nor perceived social influence meaningfully alter the likelihood of acceptance. Marginal-effects analysis showed that a one-standard-deviation increase in AI_TrustEthics raises the predicted probability of acceptance, while changes in the other variables had negligible effects.

Overall, Model 2 reinforces the importance of trust and ethical perceptions (supporting H1), while the behavioral and social variables do not reach significance in this specification, providing no additional support for H2 or H3.
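To make the odds-based interpretation more concrete, the following minimal sketch converts the reported Model 2 coefficient for AI_TrustEthics into a change in predicted probability. The baseline probability of 0.10 is a hypothetical value chosen for illustration only and is not taken from the study; the function names are likewise illustrative. Because AI_TrustEthics is the mean of two 0/1 items (Table A1), a half-unit step corresponds to endorsing one additional item.

```python
import math


def prob_to_odds(p: float) -> float:
    """Convert a probability into odds."""
    return p / (1.0 - p)


def odds_to_prob(odds: float) -> float:
    """Convert odds back into a probability."""
    return odds / (1.0 + odds)


def shifted_probability(baseline_p: float, b: float, delta_x: float = 1.0) -> float:
    """Predicted probability after moving one predictor by delta_x,
    holding all other covariates fixed (odds are multiplied by exp(b * delta_x))."""
    new_odds = prob_to_odds(baseline_p) * math.exp(b * delta_x)
    return odds_to_prob(new_odds)


B_TRUST = 4.07    # Model 2 coefficient for AI_TrustEthics, as reported in the text
BASELINE = 0.10   # hypothetical baseline probability (assumption, not from the study)

full_step = shifted_probability(BASELINE, B_TRUST, 1.0)  # score moves from 0 to 1
half_step = shifted_probability(BASELINE, B_TRUST, 0.5)  # score moves by 0.5

print(f"half-unit step: {half_step:.3f}, full-unit step: {full_step:.3f}")
```

The same odds multiplier produces different probability changes at different baselines, which is why marginal effects are usually reported at stated reference values.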

4.5. Logistic Regression—Model 3 (Hierarchical)

In Model 3, we estimate acceptance of AI-driven advertising (DV: Accept_AI_Ads, 1 = Yes, 0 = No) with a hierarchical design. We first enter the demographic controls (age, gender, income, marital status, job responsibility). We then add the attitudinal block (AI_TrustEthics, AI_ExposureUse). Finally, we include the behavioural/identity block (Digital_Behavior, Consumption_Identity).

Model fit and calibration. The full three-block model performs very well: Omnibus χ2(10) = 303.425, p < 0.001, Nagelkerke R2 = 0.732. Calibration is acceptable (Hosmer–Lemeshow χ2(8) = 12.635, p = 0.125). With the default cut-off (0.50), overall accuracy reaches 92.5% (correctly classifying 95.6% of the non-acceptance cases and 80.8% of the acceptance cases). Compared with the earlier specifications, both explained variance and calibration improve once attitudes are in the model and remain strong after adding behaviour/identity.
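The classification figures above follow from a confusion matrix built at the chosen cut-off. The sketch below shows that computation on a small set of made-up labels and predicted probabilities (hypothetical values, not the study data). It assumes a case is classified as acceptance when its predicted probability is at least the cut-off, and the class and function names are illustrative.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int  # acceptance cases predicted as acceptance
    fn: int  # acceptance cases predicted as non-acceptance
    fp: int  # non-acceptance cases predicted as acceptance
    tn: int  # non-acceptance cases predicted as non-acceptance

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def prevalence(self) -> float:
        return (self.tp + self.fn) / (self.tp + self.fn + self.fp + self.tn)

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)


def classify(labels: Sequence[int], probs: Sequence[float], cutoff: float = 0.50) -> ConfusionMatrix:
    """Tabulate predictions against observed labels at the given cut-off."""
    tp = fn = fp = tn = 0
    for y, p in zip(labels, probs):
        predicted = 1 if p >= cutoff else 0  # assumption: ties go to acceptance
        if y == 1 and predicted == 1:
            tp += 1
        elif y == 1:
            fn += 1
        elif predicted == 1:
            fp += 1
        else:
            tn += 1
    return ConfusionMatrix(tp, fn, fp, tn)


# Hypothetical data for illustration only.
labels = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
probs = [0.9, 0.7, 0.3, 0.6, 0.2, 0.1, 0.4, 0.05, 0.3, 0.2]

cm = classify(labels, probs)
# Overall accuracy is the prevalence-weighted average of sensitivity and specificity.
weighted = cm.prevalence * cm.sensitivity + (1 - cm.prevalence) * cm.specificity
print(cm, round(cm.accuracy, 3), round(weighted, 3))
```

Since accuracy is a prevalence-weighted average, a high overall figure can coexist with noticeably lower sensitivity when the acceptance class is the minority.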

Substantive results. The picture is consistent and clear. AI_TrustEthics is, by far, the dominant driver of acceptance (B = 7.891, OR = 2673.747, p < 0.001). When this attitudinal dimension is controlled, AI_ExposureUse becomes a significant positive contributor (B = 1.534, OR = 4.638, p = 0.017), supporting H2 and indicating that hands-on familiarity with AI enhances acceptance beyond trust alone.

Consumption_Identity is negative and significant (B = −1.516, OR = 0.220, p < 0.001): the more people frame purchases as expressions of identity, the less likely they are to accept AI-personalized ads. The composite Digital_Behavior index is not significant at the 5% level (B = −0.922, OR = 0.398, p = 0.075) once all covariates are included, providing no additional support for H3.

Among control variables, age relates negatively to acceptance (B = −0.063, OR = 0.939, p = 0.030), while income relates positively (B = 0.606, OR = 1.833, p < 0.001). Holding a position of responsibility is associated with lower odds (B = −2.958, OR = 0.052, p < 0.001), whereas being married is associated with higher odds (B = 2.656, OR = 14.240, p < 0.001). Gender and Influence_by_others are not significant (p = 0.702 and p = 0.187, respectively).
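As a quick consistency check on the reported Model 3 estimates, each odds ratio should equal exp(B) up to rounding. The short sketch below recomputes the odds ratios from the coefficients quoted in the text; only the variable labels used as dictionary keys are shorthand introduced here.

```python
import math

# (B, reported OR) pairs copied from the Model 3 results in the text.
reported = {
    "AI_TrustEthics": (7.891, 2673.747),
    "AI_ExposureUse": (1.534, 4.638),
    "Consumption_Identity": (-1.516, 0.220),
    "Digital_Behavior": (-0.922, 0.398),
    "Age": (-0.063, 0.939),
    "Income": (0.606, 1.833),
    "Job_responsibility": (-2.958, 0.052),
    "Married": (2.656, 14.240),
}


def relative_gap(b: float, reported_or: float) -> float:
    """Relative difference between exp(B) and the reported odds ratio."""
    return abs(math.exp(b) - reported_or) / reported_or


gaps = {name: relative_gap(b, r) for name, (b, r) in reported.items()}

for name, (b, r) in reported.items():
    print(f"{name:22s} B = {b:+.3f}  exp(B) = {math.exp(b):10.3f}  reported OR = {r:10.3f}")
```

Small discrepancies are expected because both B and the odds ratio are rounded to three decimals. An odds ratio below 1 (for example, Consumption_Identity) indicates lower odds of acceptance per unit increase in the predictor.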

In summary, Model 3 offers strong support for H1, H2 and H4, partial support for H5 (age, income, job role effects), and no additional evidence for H3. Overall, acceptance of AI-personalized advertising appears primarily attitudinal: it is driven by trust and ethical perceptions, reinforced by direct experience with AI, dampened by identity-based consumption motives, and modestly shaped by demographic context. The results of the hierarchical logistic regression analysis are presented in Table 5.

4.6. Ordinary Least Squares (OLS)—Predictors of AI_TrustEthics

The model examines which factors shape consumers’ trust and perceived ethics of AI (DV: AI_TrustEthics). The predictors entered were: age, gender, Master’s degree, consumption_identity, breadth of online shopping (Online_Breadth), AI use at work (ai_work), income satisfaction, marital status, following social trends, and reliance on reviews.

Model performance. The specification performs strongly: R = 0.722, R2 = 0.521, Adjusted R2 = 0.511, SEE = 0.32145. The omnibus test is significant (F(10,486) = 52.904, p < 0.001), indicating that the model explains about 52% of the variance in AI_TrustEthics.
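The reported fit statistics are linked by standard formulas, which the sketch below applies to the values quoted above. The sample size is not stated in this passage; here it is inferred from the residual degrees of freedom in F(10,486), under the assumption that the model includes an intercept (n = 486 + 10 + 1).

```python
import math


def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R-squared for a model with k predictors and an intercept."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def f_statistic(r2: float, n: int, k: int) -> float:
    """Omnibus F statistic implied by R-squared."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


R2 = 0.521          # reported R-squared
K = 10              # number of predictors listed in the text
DF_RESIDUAL = 486   # from F(10,486)
N = DF_RESIDUAL + K + 1  # inferred sample size (assumes an intercept)

adj = adjusted_r2(R2, N, K)
f_val = f_statistic(R2, N, K)
multiple_r = math.sqrt(R2)

print(f"n = {N}, R = {multiple_r:.3f}, adjusted R2 = {adj:.3f}, F = {f_val:.2f}")
```

The recomputed F differs slightly from the reported 52.904 because R2 is used here at three-decimal precision.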

Key predictors. The strongest positive predictor is AI use at work (ai_work): those who actively use AI in their job report higher trust and a more favourable ethical view. Women reported slightly higher trust in AI technologies, and higher education (Master’s degree) also showed a positive association, suggesting that greater exposure and formal learning experiences contribute to stronger trust in AI.

Breadth of online shopping was another significant positive predictor, implying that more digitally active consumers are more comfortable with and trusting toward AI-based systems. By contrast, age and income satisfaction are negatively associated with AI_TrustEthics, suggesting that older consumers and those more satisfied with their income are somewhat less trusting or more skeptical toward AI in marketing contexts.

Two additional negative relationships stood out: consumption_identity (seeing purchases as self-expression) and reliance on reviews. Both factors were linked to a more cautious stance toward AI. Marital status shows a small negative but significant effect, while following social trends is not statistically significant at α = 0.05. Overall, the model highlights that everyday digital engagement and direct professional use of AI foster trust and ethical acceptance, whereas identity-based consumption and reliance on peer cues reduce it. These findings align with prior evidence that familiarity and instrumental experience promote positive attitudes toward AI (Gursoy et al., 2019; Lu et al., 2019), while self-expressive consumption tends to increase ethical sensitivity and caution (Belk, 2013; Berger & Heath, 2007).

Table 6 presents a compact summary of the main coefficients included in the final model.

4.7. Diagnostic Checks & Robustness

Before interpreting the findings, we examined whether the models met the necessary statistical assumptions and whether the results were stable across specifications. For the logistic regression predicting acceptance of AI-based advertising, the model showed a good overall fit and calibration. The Hosmer–Lemeshow test was non-significant (χ2(8) = 12.635, p = 0.125), indicating no systematic mismatch between predicted and observed probabilities. The model also discriminated effectively between acceptance and non-acceptance (AUC = 0.864), which is considered strong performance for this type of analysis (Fawcett, 2006). At the usual 0.50 threshold, overall accuracy reached 92.5%, with high specificity (95.6%) and acceptable sensitivity (80.8%). For the linear model explaining AI_TrustEthics, standard diagnostic checks indicated that assumptions were adequately satisfied. The distribution of residuals approximated normality, the variance in errors was roughly constant, and no cases exerted undue influence (Cook’s D < 0.02; leverage < 0.12). These results suggest that the estimates and conclusions were not distorted by a few extreme observations. Taken together, the diagnostics confirm that both models are statistically sound and that the reported findings are robust. The key relationships observed, particularly the role of trust and ethical perceptions, remain stable across tests and specifications.
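For readers less familiar with the AUC, it can be read as the probability that a randomly chosen acceptance case receives a higher predicted probability than a randomly chosen non-acceptance case, with ties counted as one half. The sketch below computes it by direct pairwise comparison on a handful of hypothetical scores (not the study data); the function name is illustrative.

```python
from typing import Sequence


def auc_pairwise(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one case in each class")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive case ranked above negative case
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical predicted probabilities for illustration only.
example_labels = [0, 0, 1, 1]
example_scores = [0.10, 0.40, 0.35, 0.80]
example_auc = auc_pairwise(example_labels, example_scores)
print(example_auc)
```

Unlike accuracy, sensitivity, and specificity, this measure does not depend on the 0.50 cut-off, which is why it is reported alongside them.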

5. Discussion

The findings of this study provide important insights into the acceptance of AI-based personalized advertising. Across the regression models, trust and ethics emerged as the strongest and most consistent predictor. Consumers who perceive AI as trustworthy and ethically acceptable were significantly more likely to embrace AI-driven personalization. This result reinforces the idea that ethical evaluations are central to technology adoption—without trust, even the most advanced personalization systems risk rejection.

Trust and Ethics (H1): The dominant role of trust and perceived ethics confirms that consumers evaluate not only what AI does but also how it operates. This aligns with prior research showing that transparency, fairness, and explainability increase willingness to use AI-based systems (Lu et al., 2019; Venkatesh et al., 2012). From a theoretical standpoint, this finding supports the argument that ethical assurance acts as a prerequisite for acceptance. From a managerial point of view, marketers should therefore prioritize clear communication, user control, and transparency in data practices to build durable trust. Beyond ethical evaluations, consumers’ familiarity with AI also plays a key role in shaping acceptance.

Familiarity and Digital Behavior (H2–H3): The analysis also highlights that exposure and familiarity with AI tools strengthen acceptance once trust is established. Hands-on experience, such as using chatbots or generative tools, helps consumers form realistic expectations and reduces perceived risk. This is consistent with technology acceptance models such as UTAUT, which propose that direct interaction increases perceived usefulness and lowers uncertainty (Gursoy et al., 2019).

Digital consumer behavior, however, showed weaker direct effects. While more active digital users initially appeared more positive toward AI, these effects diminished after controlling for attitudes. This suggests an indirect pathway: digital engagement may shape trust and familiarity, which in turn influence acceptance. Future studies could formally test this mediating mechanism to clarify how online behavior translates into openness to AI-driven marketing.

Identity and Autonomy (H4): This study found a negative and significant association between consumption identity and acceptance of AI personalization. Consumers who view their purchases as expressions of personal identity were less likely to welcome algorithmic targeting. This finding underlines a tension between personalization and autonomy: when consumers perceive AI as infringing on their self-expression, they resist engagement. This nuance adds depth to existing debates on personalization, illustrating that relevance alone is insufficient when it threatens individuality (Lemon & Verhoef, 2016). For marketers, this means that personalization strategies should respect consumer autonomy and avoid overly invasive profiling.

Demographic Factors (H5): Several demographic characteristics also shaped acceptance. Age showed a negative association, indicating that younger consumers are generally more open to AI-based marketing. Income was positively related to acceptance, while marital status and job responsibility produced mixed effects, suggesting that life stage and socio-economic context influence how AI is perceived. These results partially support H5 and are consistent with prior work linking demographic diversity to differences in technology readiness (Venkatesh et al., 2012).

6. Theoretical and Managerial Implications

From a theoretical perspective, the results expand existing frameworks of AI acceptance by integrating ethical evaluations, identity motives, and experiential familiarity into a single model. Prior research has largely treated these dimensions separately, yet the present findings show that ethical assurance, self-concept, and experience interact in shaping consumer responses to AI. This integrated approach advances our understanding of how moral, cognitive, and social mechanisms jointly determine acceptance. It also contributes to theories of digital trust and technology adoption by emphasizing that ethical credibility and identity alignment are core antecedents of AI trust.

From a managerial perspective, the results emphasize that acceptance of AI-driven marketing depends not only on technical efficiency but also on transparency, fairness, and respect for autonomy. Marketers should design trust-building strategies—for example, offering clear explanations of how personalization works, allowing users to adjust data-sharing settings, and visibly committing to ethical AI principles. These practices strengthen confidence and minimize perceptions of manipulation.

Furthermore, personalization strategies should balance relevance with respect for individuality. For consumers who view consumption as self-expression, excessive personalization can feel intrusive. Responsible AI marketing requires sensitivity to these identity-based concerns, ensuring that technology enhances, rather than constrains, consumer agency.

Overall, this study suggests that firms seeking to implement AI successfully must combine innovation with ethical and human-centered design, while scholars should continue to refine conceptual models that connect ethics, identity, and digital trust within AI acceptance research.

7. Limitations and Future Research

This study, like all empirical research, has some limitations that should be recognized. First, the data were collected in Greece, which may limit how far the findings can be generalized to other countries or cultural settings. Because cultural values influence how people view technology and ethics, future studies could use cross-country comparisons to see whether similar results appear in other contexts. Second, this study followed a cross-sectional design, meaning that it captured attitudes at one specific point in time. Longitudinal research would help identify how trust, familiarity, and ethical perceptions develop as people gain more experience with AI in their daily lives and whether these changes affect acceptance over time.

Third, despite efforts to include both online and paper-based responses, the sample may still lean toward younger or more educated participants who are relatively comfortable with technology. Future research could aim for more diverse samples, including participants with different education levels, occupations, and digital skills, to achieve a broader picture of consumer attitudes toward AI. Finally, this study relied on self-reported data, which can be affected by social desirability or limited self-awareness. Combining surveys with behavioral observations or experimental approaches could provide deeper and more objective insights.

Despite these limitations, this study provides a useful foundation for understanding how people accept AI-driven marketing. Future research could extend this framework through longitudinal, cross-cultural, and mixed-method studies to explore more deeply how ethical trust, personal identity, and everyday digital experience interact in shaping acceptance of AI technologies.

8. Conclusions

This study underscores the central role of trust and perceived ethics in shaping consumer acceptance of AI in marketing. When consumers perceive AI as reliable, transparent, and fair, they are much more likely to embrace personalization. Familiarity with AI—especially through practical experience at work—also reinforces positive attitudes, showing that real-world interaction reduces uncertainty and strengthens confidence. At the same time, identity-related concerns act as a brake on acceptance, as consumers who associate consumption with self-expression may perceive personalization as intrusive. This confirms that AI acceptance is not purely technological but also deeply human, reflecting a balance between usefulness, ethics, and individuality. For companies, the message is clear: to integrate AI successfully, they must not only innovate technologically but also cultivate trust, transparency, and respect for consumer autonomy. By doing so, AI in marketing can evolve toward a more ethical, human-centered model that benefits both businesses and consumers.

Author Contributions

Conceptualization, V.M.; methodology, V.M. and P.S.; software, I.A. and K.S.; validation, P.S.; formal analysis, V.M., I.A. and K.S.; investigation, V.M.; data curation, V.M., I.A. and K.S.; writing—original draft preparation, V.M.; writing—review and editing, V.M. and P.S.; supervision, P.S.; project administration, P.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the anonymous and voluntary nature of the survey. According to the regulations of the University of Western Macedonia and national legislation, anonymous, voluntary surveys that do not collect sensitive or personally identifiable data are exempt from formal IRB approval. The study was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data presented in this study are available from the corresponding author on request. The data are not publicly available due to privacy restrictions.

Acknowledgments

The authors thank all the survey participants for their valuable contributions.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1. Operational definitions and codings of variables (Appendix A).

| Variable | Description/Items | Scale/Coding | Computation | Reliability/Notes |
|---|---|---|---|---|
| Accept_AI_Ads (DV) | Acceptance of AI-driven advertising | 0 = No, 1 = Yes | n/a | Binary dependent variable |
| AI_TrustEthics | “Do you trust AI?”, “Do you consider AI ethical?” | 0 = No, 1 = Yes | MEAN(trust, ethics) | α = 0.800. Skip logic: if respondent does not know what AI is, these items are set to system-missing (no imputation). Higher scores = stronger trust/perceived ethics. |
| AI_ExposureUse | Use of chatbots; use of ChatGPT-3.5; use of AI at work (ai_work) | 0 = No, 1 = Yes | MEAN(chatbots, chatgpt_usage, ai_work) | α = 0.819. Higher scores = greater exposure/use. |
| Digital_Behavior | (1) Online_Breadth: number of product/service categories purchased online (sum of Yes); (2) Prepurchase_Research: indicator of research before purchase (Yes/No) plus count of criteria checked (price/reviews/availability, etc.); (3) OmniChannel: showrooming + webrooming | Mixed metrics (binary/counts) | Compute Z for the three components → MEAN(Z_Online_Breadth, Z_Prepurchase_Research, Z_OmniChannel) | Standardize subcomponents before averaging to avoid scale-weighting. Higher scores = more active digital behavior. |
| Social influence | influence_by_others_2 (perceived influence of others on purchases) | Likert 1–5 (higher = greater influence) | Single-item | Multi-item version showed low consistency → single-item retained. |
| Consumption_Identity | “To what extent do your purchases express your personal identity/personality?” | Likert 1–5 | Single-item | Unidimensional content; treated as standalone indicator. |
| Controls | Age, Gender, Master, Married, Job_responsibility, Income_satisfaction | Age: years (continuous) · Gender/Master/Married/Job_responsibility: 0/1 · Income_satisfaction: 1–5 | n/a | Used in Block 1 of the hierarchical logistic model. |
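The Digital_Behavior composite in Table A1 is described as the mean of three standardized components. A minimal sketch of that computation is given below, using made-up component scores for five respondents (hypothetical values, not the study data). It assumes standardization with the sample standard deviation; the source does not state which variant was used.

```python
from statistics import mean, stdev
from typing import List, Sequence


def zscores(values: Sequence[float]) -> List[float]:
    """Standardize a component to mean 0 and (sample) standard deviation 1."""
    m, s = mean(values), stdev(values)  # assumption: sample SD (n - 1 denominator)
    return [(v - m) / s for v in values]


# Hypothetical component scores for five respondents (illustration only).
online_breadth = [2, 5, 3, 8, 1]        # count of categories purchased online
prepurchase_research = [1, 4, 2, 5, 0]  # research indicator plus criteria count
omnichannel = [0, 2, 1, 2, 0]           # showrooming + webrooming

components = [zscores(c) for c in (online_breadth, prepurchase_research, omnichannel)]

# Average the three z-scores per respondent so no component dominates by scale.
digital_behavior = [mean(row) for row in zip(*components)]

print([round(score, 3) for score in digital_behavior])
```

Standardizing first matters because the raw components are on different metrics (binary indicators and counts); averaging raw values would let the component with the widest range drive the index.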

References

- Aiken, L. S., & West, S. G. (1991). Multiple regression: Testing and interpreting interactions. Sage.

- Ameen, N., Tarhini, A., Reppel, A., & Anand, A. (2021). Customer experiences in the age of artificial intelligence. Computers in Human Behavior, 114, 106548.

- Arsenyan, J., & Mirowska, A. (2021). Almost human? A comparative case study on the social media presence of virtual influencers. International Journal of Human-Computer Studies, 155, 102694.

- Awad, N. F., & Krishnan, M. S. (2006). The personalization privacy paradox: An empirical evaluation of information transparency and the willingness to be profiled online for personalization. MIS Quarterly, 30(1), 13–28.

- Belk, R. W. (1988). Possessions and the extended self. Journal of Consumer Research, 15(2), 139–168.

- Belk, R. W. (2013). Extended self in a digital world. Journal of Consumer Research, 40(3), 477–500.

- Berger, J., & Heath, C. (2007). Where consumers diverge from others: Identity signaling and product domains. Journal of Consumer Research, 34(2), 121–134.

- Bergkvist, L., & Rossiter, J. R. (2007). The predictive validity of multiple-item vs. single-item measures of the same constructs. Journal of Marketing Research, 44(2), 175–184.

- Carifio, J., & Perla, R. (2008). Resolving the 50-year debate around using and misusing Likert scales. Medical Education, 42(12), 1150–1152.

- Chernev, A., Hamilton, R., & Gal, D. (2011). Competing for consumer identity: Limits to self-expression and the perils of lifestyle branding. Journal of Marketing, 75(3), 66–82.

- Dietvorst, B. J., Simmons, J. P., & Massey, C. (2015). Algorithm aversion: People erroneously avoid algorithms after seeing them err. Journal of Experimental Psychology: General, 144(1), 114–126.

- Dignum, V. (2019). Responsible Artificial Intelligence: How to develop and use AI in a responsible way. Springer.

- Dwivedi, Y. K., Rana, N. P., Jeyaraj, A., Clement, M., & Williams, M. D. (2019). Re-examining the Unified Theory of Acceptance and Use of Technology (UTAUT): Towards a revised theoretical model. Information Systems Frontiers, 25(3), 729–747.

- Fawcett, T. (2006). An introduction to ROC analysis. Pattern Recognition Letters, 27(8), 861–874.

- Field, A. (2018). Discovering statistics using IBM SPSS statistics (5th ed.). SAGE Publications.

- Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., Valcke, P., & Vayena, E. (2018). AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines, 28(4), 689–707.

- Gefen, D. (2000). E-commerce: The role of familiarity and trust. Omega, 28(6), 725–737.

- Glikson, E., & Woolley, A. W. (2020). Human trust in artificial intelligence: Review of empirical research. Academy of Management Annals, 14(2), 627–660.

- Gursoy, D., Chi, O. H., Lu, L., & Nunkoo, R. (2019). Consumers acceptance of artificially intelligent (AI) device use in service delivery. International Journal of Information Management, 49, 157–169.

- Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2019). Multivariate data analysis (8th ed.). Cengage.

- Hayes, A. F. (2018). Introduction to mediation, moderation, and conditional process analysis (2nd ed.). Guilford.

- Hosmer, D. W., Lemeshow, S., & Sturdivant, R. X. (2013). Applied logistic regression (3rd ed.). Wiley.

- Huang, M.-H., & Rust, R. T. (2021). A strategic framework for artificial intelligence in marketing. Journal of the Academy of Marketing Science, 49(1), 30–50.

- Kumar, V., Ashraf, R., & Nadeem, W. (2024). AI-powered marketing: What, where, and how? International Journal of Information Management, 77, 102783.

- Lazaris, C., & Vrechopoulos, A. (2014, June 18–20). From multi-channel to omni-channel retailing: Review of the literature and calls for research. 2nd International Conference on Contemporary Marketing Issues (ICCMI) (pp. 655–663), Athens, Greece.

- Lemon, K. N., & Verhoef, P. C. (2016). Understanding customer experience throughout the customer journey. Journal of Marketing, 80(6), 69–96.

- Li, Q., Wang, C., Liu, X., & Jiang, S. (2023). The influence of anthropomorphic cues on trust and acceptance of conversational agents. Journal of Medical Internet Research, 25, e44479.

- Lobschat, L., Mueller, B., Eggers, F., Brandimarte, L., Diefenbach, S., Kroschke, M., & Wirtz, J. (2021). Corporate digital responsibility. Journal of Business Research, 122, 875–888.

- Logg, J. M., Minson, J. A., & Moore, D. A. (2019). Algorithm appreciation: People prefer algorithmic to human judgment. Organizational Behavior and Human Decision Processes, 151, 90–103.

- Lu, L., Cai, R., & Gursoy, D. (2019). Developing and validating a service robot integration willingness scale. International Journal of Hospitality Management, 80, 36–51.

- Martin, K. D., & Murphy, P. E. (2017). The role of data privacy in marketing. Journal of the Academy of Marketing Science, 45(2), 135–155.

- Mikalef, P., Krogstie, J., Pappas, I. O., & Pavlou, P. (2020). Exploring the relationship between big data analytics capability and competitive performance: The mediating roles of dynamic and operational capabilities. Information & Management, 57, 103169.

- Nagy, S., & Hajdú, N. (2021). Consumer acceptance of the use of artificial intelligence in online shopping: Evidence from Hungary. Amfiteatru Economic, 23(56), 155–173.

- Norman, G. (2010). Likert scales, levels of measurement and the “laws” of statistics. Advances in Health Sciences Education, 15(5), 625–632.

- Riandhi, A. N., Arviansyah, M. R., & Sondari, M. C. (2025). AI and consumer behavior: Trends, technologies, and future directions from a scopus-based systematic review. Cogent Business & Management, 12(1), 2544984.

- Tabachnick, B. G., & Fidell, L. S. (2019). Using multivariate statistics (7th ed.). Pearson.

- Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach’s alpha. International Journal of Medical Education, 2, 53–55.

- Venkatesh, V., Thong, J. Y. L., & Xu, X. (2012). Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157–178.

- Verhoef, P. C., Kannan, P. K., & Inman, J. J. (2015). From multi-channel retailing to omni-channel retailing: Introduction to the special issue on multi-channel retailing. Journal of Retailing, 91(2), 174–181.

- Wang, C., Li, Y., Dai, J., Gu, X., & Yu, T. (2024). Factors influencing university students’ behavioral intention to use generative artificial intelligence: Integrating the Theory of Planned Behavior and AI literacy. International Journal of Human–Computer Interaction, 41(11), 6649–6671.

- Wanous, J. P., Reichers, A. E., & Hudy, M. J. (1997). Overall job satisfaction: How good are single-item measures? Journal of Applied Psychology, 82(2), 247–252.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).