Scan-to-EDTs: Automated Generation of Energy Digital Twins from 3D Point Clouds

Abstract

1. Introduction

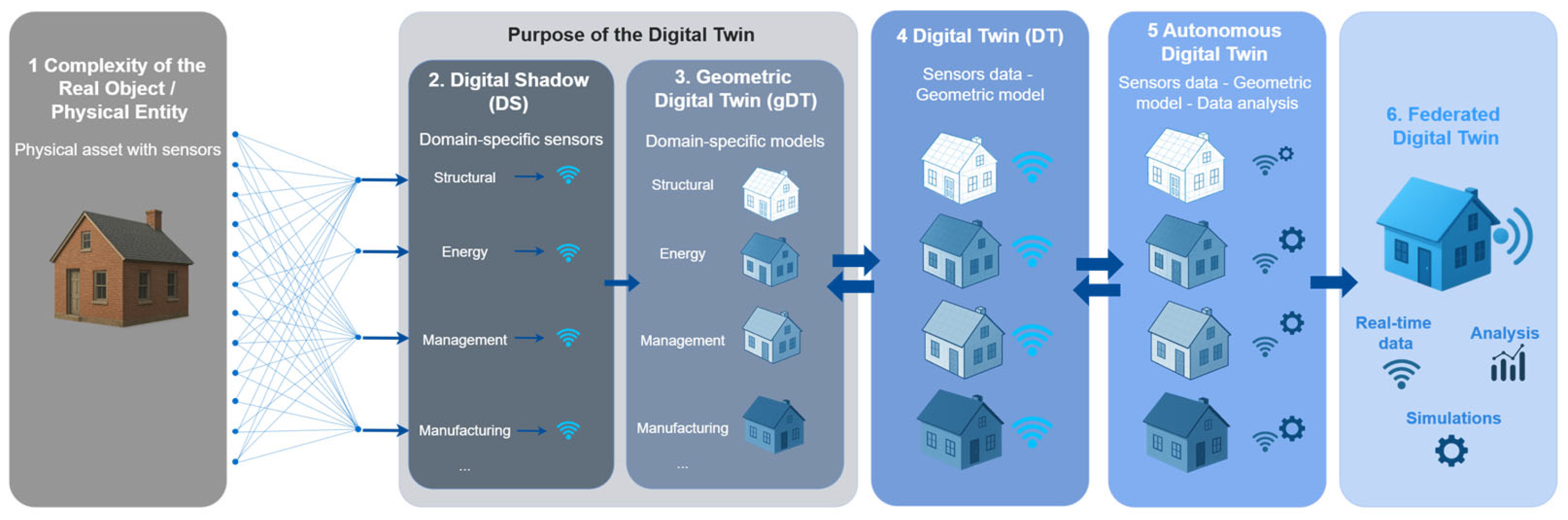

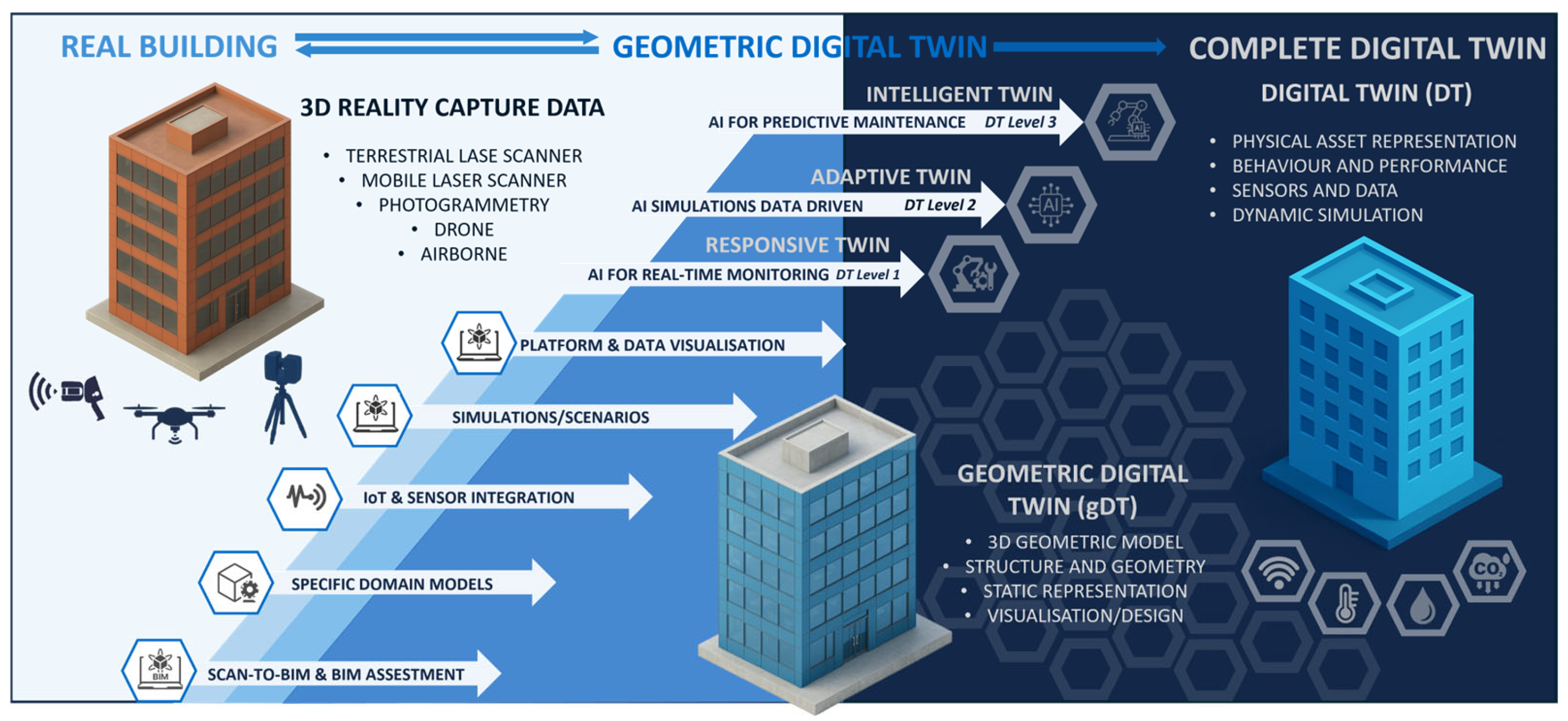

1.1. Context of the DT for Building Applications

1.2. Aim of the Research

- (i) Deriving simulation-ready Energy Digital Twins (EDTs) directly from point clouds without manual steps, BIM stages, or reliance on proprietary authoring tools;

- (ii) Delivering watertight, simulation-ready models with full topology, semantics, and energy attributes for reasoning, querying and interoperability;

- (iii) Unifying solid and B-Rep representations in a single multi-level gDT and integrated graph for simulations, monitoring, IoT linking, and cross-scale navigation;

- (iv) Enabling standards-based exchange via gbXML and optional IFC to ensure interoperability while avoiding redundant steps and minimizing data loss.

2. State of the Art and Related Research

2.1. Advancements in Semantic Segmentation: Transformers and 3D Open Vocabularies

2.2. Image-Based Classification and Semantic Segmentation

2.3. Object Detection in Challenging Environments: Latest Strategies and Techniques

2.4. Late Fusion Approaches

2.5. Advancements in Scan-to-BIM

2.6. BIM, BEM, and Building Management Systems (BMSs)

2.7. Topologic BIM (TBIMs)

2.8. Digital Twins for Buildings

- DT Level 1: Real-time sensor integration for monitoring, visualization, and diagnostics.

- DT Level 2: Dynamic simulation, forecasting, and optimization (also using historical data).

- DT Level 3: Analytics for failure prediction and strategic building management, contingent on historical data availability.

3. Methodology

- Object Classification and Instance Segmentation (Section 3.1 and Section 3.2): Detect architectural components in the 3D point cloud, merging point-based and image-based outputs via late fusion [94].

- Scan-to-BIM Process (Section 3.3): Build an intermediate solid model (Figure 4, step A) with a graph representation of geometry and spatial configuration, aligning with object-level reconstruction in [94] and solid mesh representation [66].

- Scan-to-BEM Process (Section 3.4): Augment the model with energy devices, their localization, and their data for integration with building energy modeling workflows.

- Topologic Model Generation (Section 3.5): Convert the 2D Topologic Map [95] into a 3D graph-based topology with adjacencies, containment hierarchies, and watertight room enclosures (fully closed 3D spaces with seamlessly joined surfaces), ensuring validity for energy and spatial simulations.

- Geometric Digital Twin output (Section 3.6): Derive the final multi-level gDT that integrates geometry, topology, and semantic energy attributes in a graph-based model, ready for simulation-driven DT development.
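The late-fusion step in the first item can be illustrated with a minimal sketch. The fusion rule is not specified in this outline, so the weighted average of per-point class probabilities used here is an assumption, and the names `late_fusion`, `p_points`, `p_images`, and `w_points` are hypothetical.

```python
import numpy as np


def late_fusion(p_points: np.ndarray, p_images: np.ndarray, w_points: float = 0.5) -> np.ndarray:
    """Fuse two (N, C) class-probability arrays and return one label per point.

    Assumption: a weighted average is used as the fusion rule; the rule used
    in the paper may differ.
    """
    if p_points.shape != p_images.shape:
        raise ValueError("both inputs must have shape (N, C)")
    fused = w_points * p_points + (1.0 - w_points) * p_images
    return fused.argmax(axis=1)


# Hypothetical probabilities for two points and two classes.
p3d = np.array([[0.6, 0.4], [0.2, 0.8]])   # point-based classifier
p2d = np.array([[0.1, 0.9], [0.3, 0.7]])   # image-based classifier, projected to the points
labels = late_fusion(p3d, p2d)
```

Because each branch is trained and run independently, either classifier can be replaced without retraining the other, which is the usual motivation for fusing at the decision level.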

3.1. Object Classification and Instance Segmentation: Point Clouds

3.2. Object Classification and Instance Segmentation: Images

3.3. From Geometric Graph to Reconstructed Objects

- represents the floor class, serving as the base to which the lower bounds of all walls are connected (Section 3.3.1);

- represents the ceiling class, serving as the upper connection point for the tops of all wall elements (Section 3.3.1);

- denotes the wall class, containing all identified vertical partition elements (Section 3.3.2);

- refers to the column class, capturing all structural columns within the environment;

- denote the sets of doors and windows, which together define the generalized openings class (Section 3.3.3);

- represents the unclassified class, encompassing all elements that do not fall into any of the defined categories above.

3.3.1. Floor and Ceiling Classes

3.3.2. Wall Class

3.3.3. Openings Class: Windows and Doors

3.4. Scan-to-BEM

3.5. Topologic Map and Topologic Model

- Watertightness: All surfaces enclosing interior volumes must form a completely closed shell, without gaps or discontinuities.

- Consistency and Manifoldness: Shared surfaces between adjacent spaces must be consistently defined, ensuring topological correctness.
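For the shell of a single room, both requirements can be partially checked with an edge-count test. This is a minimal sketch, assuming the shell is given as a triangle list of vertex indices; the function name `is_watertight` is hypothetical, and the test is a necessary condition only (it does not check face orientation or self-intersections).

```python
from collections import Counter


def is_watertight(faces):
    """Return True if every undirected edge is shared by exactly two triangles."""
    edge_count = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_count[(min(u, v), max(u, v))] += 1
    # An edge used once marks a gap; an edge used more than twice is non-manifold.
    return bool(edge_count) and all(n == 2 for n in edge_count.values())


# A tetrahedron is the smallest closed shell; dropping one face opens it.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
closed = is_watertight(tetra)
opened = is_watertight(tetra[:3])
```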

- is the 3D rotation matrix of the local frame;

- is the center of the bounding box;

- represents the extents of the bounding box along each local axis.
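The three quantities above are enough to recover the eight corners of an oriented bounding box. The sketch below assumes the symbols `R` (rotation matrix whose columns are the local axes), `c` (center), and `e` (full side lengths along each local axis); these names and the full-length convention are assumptions, since the original notation is not preserved here.

```python
import itertools

import numpy as np


def obb_corners(R, c, e):
    """Return the (8, 3) world-space corners of an oriented bounding box."""
    R = np.asarray(R, dtype=float)
    c = np.asarray(c, dtype=float)
    e = np.asarray(e, dtype=float)
    # All sign combinations of half an extent along each local axis.
    signs = np.array(list(itertools.product((-0.5, 0.5), repeat=3)))
    local = signs * e            # corner offsets in the local frame
    return c + local @ R.T       # rotate into the world frame, then translate


# Axis-aligned example: a 2 x 4 x 6 box centered at (1, 2, 3).
corners = obb_corners(np.eye(3), [1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
```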

- (i) Emit rays uniformly from the building centroid over 360° at a fixed angular step; record each ray’s farthest valid wall hit and wall ID.

- (ii) Filter the hits to remove outliers and duplicates, retaining only points consistent with the exterior boundaries.

- (iii) Sort the remaining points counterclockwise by polar angle about the centroid.

- (iv) Detect gaps (distance/disconnect thresholds) and insert bridging segments via a Convex-Hull–based completion to obtain a continuous boundary polygon.
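The four steps can be sketched in 2D as follows. This is an illustrative simplification, assuming walls are given as line segments keyed by a wall ID; the function names, the `step_deg` and `gap_threshold` parameters, and the duplicate filter based on rounded coordinates are hypothetical. Step (iv) only reports where gaps occur; the Convex-Hull-based completion itself is not implemented here.

```python
import math


def ray_segment_hit(origin, angle, seg, eps=1e-9):
    """Distance along the ray to the segment, or None if there is no hit."""
    (x1, y1), (x2, y2) = seg
    ox, oy = origin
    dx, dy = math.cos(angle), math.sin(angle)
    ex, ey = x2 - x1, y2 - y1
    denom = dx * ey - dy * ex
    if abs(denom) < 1e-12:                          # ray parallel to the wall
        return None
    t = ((x1 - ox) * ey - (y1 - oy) * ex) / denom   # distance along the ray
    s = ((x1 - ox) * dy - (y1 - oy) * dx) / denom   # position along the wall, 0..1
    return t if t > 0 and -eps <= s <= 1 + eps else None


def exterior_boundary(walls, centroid, step_deg=1.0, gap_threshold=1.0):
    """Return (angle-sorted hits, index pairs of consecutive hits separated by a gap)."""
    hits = []
    for k in range(int(round(360.0 / step_deg))):   # (i) uniform rays over 360 degrees
        angle = math.radians(k * step_deg)
        best = None
        for wall_id, seg in walls.items():
            t = ray_segment_hit(centroid, angle, seg)
            if t is not None and (best is None or t > best[0]):
                best = (t, wall_id)                 # keep the farthest hit
        if best is not None:
            t, wall_id = best
            point = (centroid[0] + t * math.cos(angle), centroid[1] + t * math.sin(angle))
            hits.append((angle, point, wall_id))
    seen, unique = set(), []
    for hit in hits:                                # (ii) drop duplicate points
        key = (round(hit[1][0], 6), round(hit[1][1], 6))
        if key not in seen:
            seen.add(key)
            unique.append(hit)
    unique.sort(key=lambda h: h[0])                 # (iii) counterclockwise order
    gaps = []
    for i in range(len(unique)):                    # (iv) flag gaps to be bridged
        j = (i + 1) % len(unique)
        if math.dist(unique[i][1], unique[j][1]) > gap_threshold:
            gaps.append((i, j))
    return unique, gaps


# Hypothetical 2 m x 2 m footprint centered on the origin.
square = {
    "east": ((1.0, -1.0), (1.0, 1.0)),
    "north": ((1.0, 1.0), (-1.0, 1.0)),
    "west": ((-1.0, 1.0), (-1.0, -1.0)),
    "south": ((-1.0, -1.0), (1.0, -1.0)),
}
points, gaps = exterior_boundary(square, (0.0, 0.0), step_deg=30.0)
```

Removing one wall from `square` leaves the rays in that direction without a hit, so the corresponding pair of neighbouring points is reported in `gaps`.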

3.6. The Multilevel Geometric Digital Twin (gDT)

- (i) Volumetric Model (TSM): Watertight, room-level spatial cells (CellComplex model) for thermal zoning and energy simulation.

- (ii) Thematic Surface Model (B-Rep): Walls, floors, ceilings, and openings, each semantically annotated with material, function, and energy performance data.

- (iii) Energy System Elements and Devices: Geometric-topological representations and spatial registration of sensors and devices, enabling semantic graph-based reasoning and zone-level device mapping.

- (iv) Ancillary/Building physics data: A graph structure embedding construction layers, material properties, and performance attributes.
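One way to picture how the four levels share a single graph is the sketch below. It is a hypothetical data structure, not the paper's implementation: the class names `Node` and `GDTGraph`, the level labels, the relation names, and all attribute values are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    level: str                      # "cell", "surface", "device", or "material"
    attributes: dict = field(default_factory=dict)


@dataclass
class GDTGraph:
    nodes: dict = field(default_factory=dict)
    edges: list = field(default_factory=list)   # (source, relation, target) triples

    def add_node(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def add_edge(self, source: str, relation: str, target: str) -> None:
        if source not in self.nodes or target not in self.nodes:
            raise KeyError("both endpoints must be added as nodes first")
        self.edges.append((source, relation, target))

    def targets(self, source: str, relation: str) -> list:
        """Return the IDs reachable from `source` through `relation`."""
        return [t for s, r, t in self.edges if s == source and r == relation]


# Hypothetical content: one room cell, one wall, one sensor, one construction layer.
gdt = GDTGraph()
gdt.add_node(Node("room_1", "cell", {"volume_m3": 45.0}))
gdt.add_node(Node("wall_1", "surface", {"type": "wall"}))
gdt.add_node(Node("sensor_1", "device", {"measures": "temperature"}))
gdt.add_node(Node("layer_1", "material", {"thickness_m": 0.3}))
gdt.add_edge("room_1", "bounded_by", "wall_1")
gdt.add_edge("room_1", "contains", "sensor_1")
gdt.add_edge("wall_1", "has_layer", "layer_1")
devices_in_room = gdt.targets("room_1", "contains")
```

A zone-level device query then reduces to following `contains` edges from a room cell, and the construction layers of its enclosing surfaces are reached through `bounded_by` followed by `has_layer`.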

4. Results

- are the predicted and ground truth (GT) oriented bounding boxes (OBBs);

- is the intersection volume;

- is the union volume.

- TP are true positives, predicted OBBs correctly matched to a GT OBB;

- FP are false positives, predicted OBBs not matched to any GT OBB;

- FN are false negatives, GT OBBs not matched by any prediction.
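The quantities defined above combine into the standard detection metrics. The sketch below uses the textbook definitions (IoU as intersection volume over union volume; precision, recall, and F1 from TP, FP, and FN); the function names are hypothetical, and computing the intersection volume of two OBBs is left out.

```python
def iou(intersection_volume: float, union_volume: float) -> float:
    """Intersection over union of two boxes, given their overlap and union volumes."""
    return intersection_volume / union_volume if union_volume > 0 else 0.0


def detection_scores(tp: int, fp: int, fn: int):
    """Return (precision, recall, F1) from matched and unmatched box counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts: 8 matched predictions, 2 spurious, 2 missed.
precision, recall, f1 = detection_scores(tp=8, fp=2, fn=2)
overlap = iou(1.0, 4.0)
```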

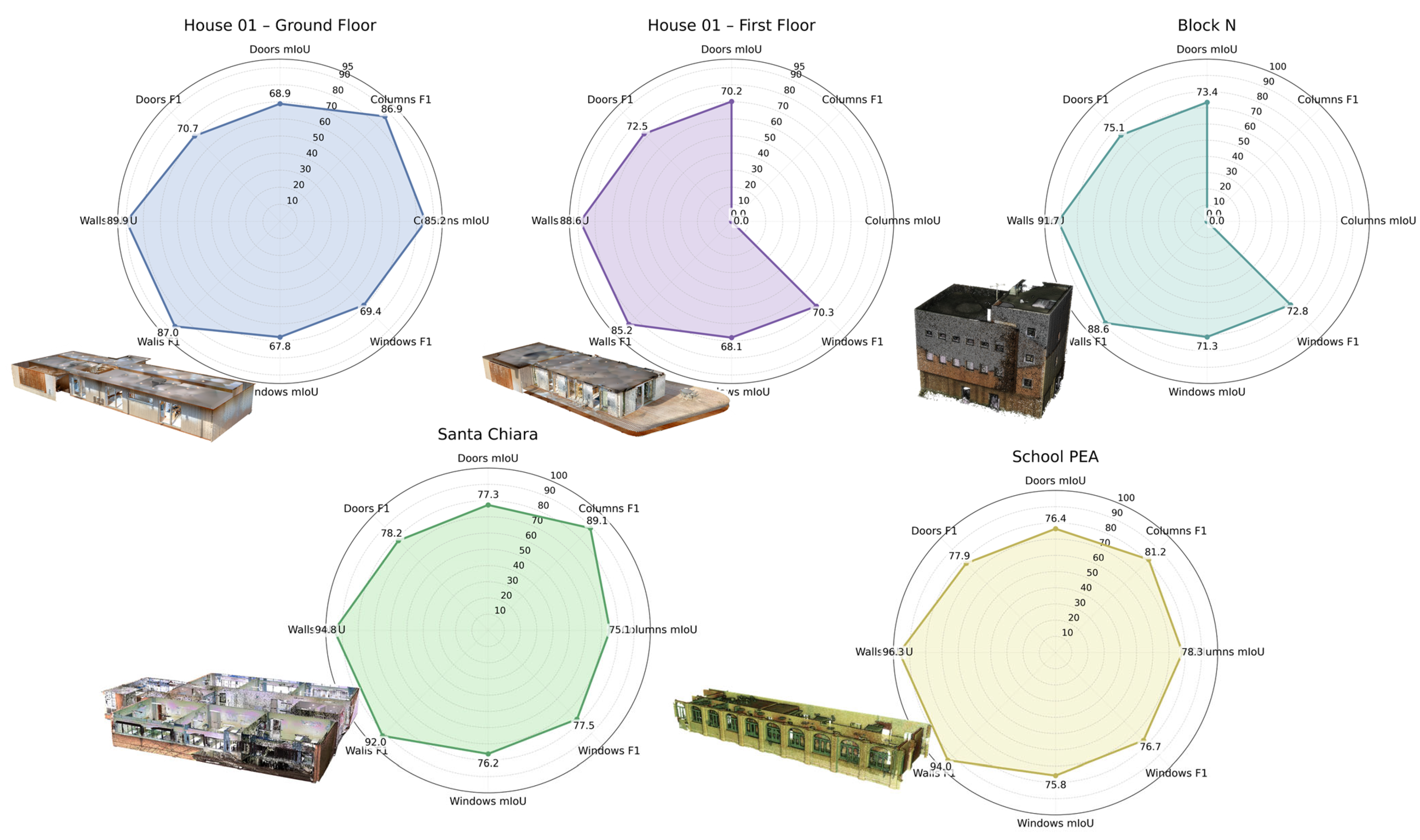

4.1. Data Classification

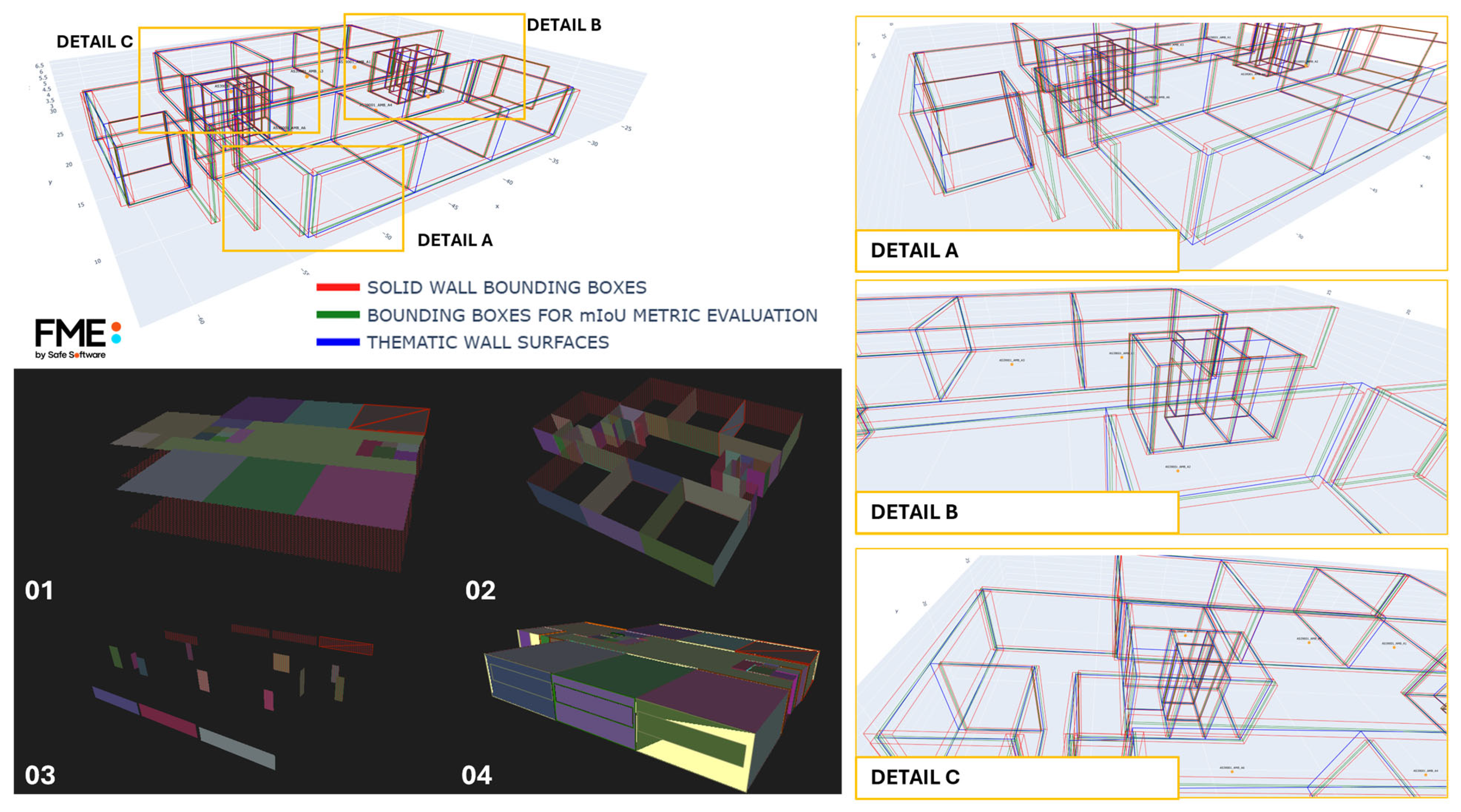

4.2. Scan-to-BIM Process: Reconstruction Results

4.3. Topologic Solid (TSM) and B-Rep Model Results

- is the set of predicted points;

- is the set of ground truth points;

- is the Euclidean distance between and ;

- is the distance threshold (th).
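A distance-thresholded comparison of two point sets can be sketched as below. The exact formula is not preserved in this outline, so the matching rule is an assumption: a predicted point counts as correct if its nearest ground truth point lies within the threshold, and symmetrically for recall. The default `th=0.065` follows the threshold reported for the B-Rep model; the function name is hypothetical.

```python
import numpy as np


def point_precision_recall(pred, gt, th=0.065):
    """Return (precision, recall, F1) for two point sets under a distance threshold."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    # Pairwise Euclidean distances, shape (len(pred), len(gt)).
    d = np.linalg.norm(pred[:, None, :] - gt[None, :, :], axis=2)
    precision = float((d.min(axis=1) <= th).mean())   # predicted points near a GT point
    recall = float((d.min(axis=0) <= th).mean())      # GT points near a predicted point
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical points in metres.
pred_pts = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
gt_pts = [[0.0, 0.0, 0.05], [5.0, 0.0, 0.0]]
p, r, f = point_precision_recall(pred_pts, gt_pts)
```

The dense distance matrix is only practical for small samples; for full scans a spatial index such as a k-d tree would be the usual substitute.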

4.4. Energy Simulations

5. Conclusions and Future Research

- Deriving simulation-ready Energy Digital Twins (EDTs) directly from raw point clouds, using a graph-based native architecture that converts unstructured surveys into concrete, topologically consistent models without manual stages or proprietary tools.

- Delivering watertight, simulation-ready models with consistent topology and semantics, including adjacency, containment, connectivity, and energy attributes, enabling reliable reasoning, efficient querying, and seamless interoperability.

- Unifying solid and B-Rep representations in a single multi-level gDT and integrated graph, enabling simulations, monitoring, IoT linking, optimization, and cross-scale navigation with consistent behavior across levels.

- Enabling standards-based exchange via gbXML, CityGML and, optionally, IFC, ensuring standards-compliant interoperability while avoiding redundant steps and minimizing data loss across energy and BIM workflows.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Available online: https://www.iea.org/energy-system/buildings (accessed on 12 August 2025).

- Gouveia, J.P.; Aelenei, L.; Aelenei, D.; Ourives, R.; Bessa, S. Improving the Energy Performance of Public Buildings in the Mediterranean Climate via a Decision Support Tool. Energies 2024, 17, 1105.

- Tsemekidi Tzeiranaki, S.; Bertoldi, P.; Dagostino, D.; Castellazzi, L.; Maduta, C. Energy Consumption and Energy Efficiency Trends in the EU, 2000–2022; Publications Office of the European Union: Luxembourg, 2025.

- European Parliament. Fact Sheets on the European Union: Renewable Energy. European Parliament. 2024. Available online: https://www.europarl.europa.eu/factsheets/en/sheet/70/renewable-energy (accessed on 12 August 2025).

- Mendes, V.F.; Cruz, A.S.; Gomes, A.P.; Mendes, J.C. A Systematic Review of Methods for Evaluating the Thermal Performance of Buildings through Energy Simulations. Renew. Sustain. Energy Rev. 2024, 189, 113875.

- Liebenberg, M.; Jarke, M. Information Systems Engineering with Digital Shadows: Concept and Use Cases in the Internet of Production. Inf. Syst. 2023, 114, 102182.

- Hu, X.; Olgun, G.; Assaad, R.H. An Intelligent BIM-Enabled Digital Twin Framework for Real-Time Structural Health Monitoring Using Wireless IoT Sensing, Digital Signal Processing, and Structural Analysis. Expert Syst. Appl. 2024, 252, 124204.

- Kreuzer, T.; Papapetrou, P.; Zdravkovic, J. Artificial Intelligence in Digital Twins—A Systematic Literature Review. Data Knowl. Eng. 2024, 151, 102304.

- Armijo, A.; Zamora-Sánchez, D. Integration of Railway Bridge Structural Health Monitoring into the Internet of Things with a Digital Twin: A Case Study. Sensors 2024, 24, 2115.

- Cummins, L.; Sommers, A.; Ramezani, S.B.; Mittal, S.; Jabour, J.; Seale, M.; Rahimi, S. Explainable Predictive Maintenance: A Survey of Current Methods, Challenges and Opportunities. IEEE Access 2024, 12, 57574–57602.

- Attaran, S.; Attaran, M.; Celik, B.G. Digital Twins and Industrial Internet of Things: Uncovering Operational Intelligence in Industry 4.0. Decis. Anal. J. 2024, 10, 100398.

- Meddaoui, A.; Hain, M.; Hachmoud, A. The benefits of predictive maintenance in manufacturing excellence: A case study to establish reliable methods for predicting failures. Int. J. Adv. Manuf. Technol. 2023, 128, 3685–3690.

- Asare, K.A.B.; Liu, R.; Anumba, C.J.; Issa, R.R.A. Real-World Prototyping and Evaluation of Digital Twins for Predictive Facility Maintenance. J. Build. Eng. 2024, 97, 110890.

- Liu, Z.; Li, M.; Ji, W. Development and Application of a Digital Twin Model for Net Zero Energy Building Operation and Maintenance Utilizing BIM-IoT Integration. Energy Build. 2025, 328, 115170.

- Naeem, A.; Ho, C.O.; Kolderup, E.; Jain, R.K.; Benson, S.; de Chalendar, J. EnergyPlus as a computational engine for commercial building operational digital twins. Energy Build. 2025, 329, 115257.

- Pavirani, F.; Gokhale, G.; Claessens, B.; Develder, C. Demand Response for Residential Building Heating: Effective Monte Carlo Tree Search Control Based on Physics-Informed Neural Networks. Energy Build. 2024, 311, 114161.

- Mohammadi, S.; Aibinu, A.A.; Oraee, M. Legal and Contractual Risks and Challenges for BIM. J. Leg. Aff. Dispute Resolut. Eng. Constr. 2024, 16, 1.

- Palha, R.P.; Hüttl, R.M.C.; da Costa e Silva, A.J. BIM Interoperability for Small Residential Construction Integrating Warranty and Maintenance Management. Autom. Constr. 2024, 166, 105639.

- Yang, Y.; Pan, Y.; Zeng, F.; Lin, Z.; Li, C. A gbXML Reconstruction Workflow and Tool Development to Improve the Geometric Interoperability between BIM and BEM. Buildings 2022, 12, 221.

- Yang, Z.; Tang, C.; Zhang, T.; Zhang, Z.; Doan, D.T. Digital Twins in Construction: Architecture, Applications, Trends and Challenges. Buildings 2024, 14, 2616.

- Szpilko, D.; Fernando, X.; Nica, E.; Budna, K.; Rzepka, A.; Lăzăroiu, G. Energy in Smart Cities: Technological Trends and Prospects. Energies 2024, 17, 6439.

- Mousavi, Y.; Gharineiat, Z.; Karimi, A.A.; McDougall, K.; Rossi, A.; Gonizzi Barsanti, S. Digital Twin Technology in Built Environment: A Review of Applications, Capabilities and Challenges. Smart Cities 2024, 7, 2595–2621.

- Wang, W.; Xu, K.; Song, S.; Bao, Y.; Xiang, C. From BIM to Digital Twin in BIPV: A Review of Current Knowledge. Sustain. Energy Technol. Assess. 2024, 67, 103855.

- Yavan, F.; Maalek, R.; Toğan, V. Structural Optimization of Trusses in Building Information Modeling (BIM) Projects Using Visual Programming, Evolutionary Algorithms, and Life Cycle Assessment (LCA) Tools. Buildings 2024, 14, 1532.

- Wang, Q.; Chen, X.; Zhang, Z.; Meng, Y.; Shen, T.; Gu, Y. Masking Graph Cross-Convolution Network for Multispectral Point Cloud Classification. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–15.

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.; Koltun, V. Point Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16259–16268.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Han, T.; Chen, Y.; Ma, J.; Liu, X.; Zhang, W.; Zhang, X.; Wang, H. Point Cloud Semantic Segmentation with Adaptive Spatial Structure Graph Transformer. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104105.

- Zhou, W.; Wang, Q.; Jin, W.; Shi, X.; He, Y. Graph Transformer for 3D Point Clouds Classification and Semantic Segmentation. Comput. Graph. 2024, 124, 104050.

- Zhou, W.; Zhao, Y.; Xiao, Y.; Min, X.; Yi, J. TNPC: Transformer-Based Network for Point Cloud Classification. Expert Syst. Appl. 2024, 239, 122438.

- Wang, L.; Huang, M.; Yang, Z.; Wu, R.; Qiu, D.; Xiao, X.; Li, D.; Chen, C. LBNP: Learning Features Between Neighboring Points for Point Cloud Classification. PLoS ONE 2025, 20, e0314086.

- Xie, Y.; Tu, Z.; Yang, T.; Zhang, Y.; Zhou, X. EdgeFormer: Local Patch-Based Edge Detection Transformer on Point Clouds. Pattern Anal. Appl. 2025, 28, 11.

- Thomas, H.; Tsai, Y.-H.; Barfoot, T.D.; Zhang, J. KPConvX: Modernizing Kernel Point Convolution with Kernel Attention. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 5525–5535.

- Wang, L.; Deng, H.; Li, S.; Liu, C. PPT: A Point Patch Transformer for Point Cloud Classification. Optica Open 2025, preprint.

- Park, Y.; Tran, D.D.T.; Kim, M.; Kim, H.; Lee, Y. SP2Mask4D: Efficient 4D Panoptic Segmentation Using Superpoint Transformers. In Proceedings of the 2025 International Conference on Electronics, Information, and Communication (ICEIC), Osaka, Japan, 19–22 January 2025; pp. 1–4.

- Fan, Y.; Wang, Y.; Zhu, P.; Hui, L.; Xie, J.; Hu, Q. Uncertainty-Aware Superpoint Graph Transformer for Weakly Supervised 3D Semantic Segmentation. IEEE Trans. Fuzzy Syst. 2025, 33, 1899–1912.

- Mei, G.; Riz, L.; Wang, Y.; Poiesi, F. Vocabulary-Free 3D Instance Segmentation with Vision and Language Assistant. arXiv 2024, arXiv:2408.10652.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.; et al. Segment Anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3992–4003.

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Li, C.; Yang, J.; Su, H.; Zhu, J.; et al. Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection. arXiv 2023, arXiv:2303.05499.

- Zhang, W.; Pang, J.; Chen, K.; Loy, C.C. K-Net: Towards Unified Image Segmentation. arXiv 2021, arXiv:2106.14855.

- Qin, Z.; Liu, J.; Zhang, X.; Tian, M.; Zhou, A.; Yi, S.; Li, H. Pyramid Fusion Transformer for Semantic Segmentation. arXiv 2022, arXiv:2201.04019.

- Cheng, B.; Schwing, A.G.; Kirillov, A. Per-Pixel Classification is Not All You Need for Semantic Segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 17864–17875.

- Wu, Z.; Gan, Y.; Xu, T.; Wang, F. Graph-Segmenter: Graph Transformer with Boundary-aware Attention for Semantic Segmentation. Front. Comput. Sci. 2023, 18, 185327.

- Dai, K.; Jiang, Z.; Xie, T.; Wang, K.; Liu, D.; Fan, Z.; Li, R.; Zhao, L.; Omar, M. SOFW: A Synergistic Optimization Framework for Indoor 3D Object Detection. IEEE Trans. Multimed. 2025, 27, 637–651.

- Lin, T.; Yu, Z.; McGinity, M.; Gumhold, S. An Immersive Labeling Method for Large Point Clouds. Comput. Graph. 2024, 124, 104101.

- Fol, C.R.; Shi, N.; Overney, N.; Murtiyoso, A.; Remondino, F.; Griess, V.C. 3D Dataset Generation Using Virtual Reality for Forest Biodiversity. Int. J. Digit. Earth 2024, 17, 2422984.

- Shi, X.; Wang, S.; Zhang, B.; Ding, X.; Qi, P.; Qu, H.; Li, N.; Wu, J.; Yang, H. Advances in Object Detection and Localization Techniques for Fruit Harvesting Robots. Agronomy 2025, 15, 145.

- ManyCore Research Team. SpatialLM: Large Language Model for Spatial Understanding. GitHub Repository. 2025. Available online: https://github.com/manycore-research/SpatialLM (accessed on 28 March 2025).

- Yang, Q.; Zhao, Y.; Cheng, H. MMLF: Multi-Modal Multi-Class Late Fusion for Object Detection with Uncertainty Estimation. arXiv 2024, arXiv:2410.08739.

- Ren, D.; Li, J.; Wu, Z.; Guo, J.; Wei, M.; Guo, Y. MFFNet: Multimodal Feature Fusion Network for Point Cloud Semantic Segmentation. Vis. Comput. 2024, 40, 5155–5167.

- Agostinho, L.; Pereira, D.; Hiolle, A.; Pinto, A. TEFu-Net: A Time-Aware Late Fusion Architecture for Robust Multi-Modal Ego-Motion Estimation. Robot. Auton. Syst. 2024, 177, 104700.

- Ji, A.; Chew, A.W.Z.; Xue, X.; Zhang, L. An Encoder-Decoder Deep Learning Method for Multi-Class Object Segmentation from 3D Tunnel Point Clouds. Autom. Constr. 2022, 137, 104187.

- Samadzadegan, F.; Toosi, A.; Dadrass Javan, F. A Critical Review on Multi-Sensor and Multi-Platform Remote Sensing Data Fusion Approaches: Current Status and Prospects. Int. J. Remote Sens. 2024, 46, 1327–1402.

- Schuegraf, P.; Schnell, J.; Henry, C.; Bittner, K. Building Section Instance Segmentation with Combined Classical and Deep Learning Methods. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, II, 407–414.

- Liu, Y.; Huang, H.; Gao, G.; Ke, Z.; Li, S.; Gu, M. Dataset and Benchmark for As-Built BIM Reconstruction from Real-World Point Cloud. Autom. Constr. 2025, 173, 106096.

- Bassier, M.; Vergauwen, M. Clustering of Wall Geometry from Unstructured Point Clouds Using Conditional Random Fields. Remote Sens. 2019, 11, 1586.

- Skoury, L.; Leder, S.; Menges, A.; Wortmann, T. Digital Twin Architecture for the AEC Industry: A Case Study in Collective Robotic Construction. In Proceedings of the MODELS Companion ‘24: Proceedings of the ACM/IEEE 27th International Conference on Model Driven Engineering Languages and Systems; Linz, Austria, 22–27 September 2024, Volume HS 18.

- Vega Torres, M.A.; Braun, A.; Borrmann, A. BIM-SLAM: Integrating BIM Models in Multi-session SLAM for Lifelong Mapping using 3D LiDAR. In Proceedings of the International Symposium on Automation and Robotics in Construction ISARC, Chennai, India, 4–7 July 2023.

- Huang, H.; Qiao, Z.; Yu, Z.; Liu, C.; Shen, S.; Zhang, F.; Yin, H. SLABIM: A SLAM-BIM Coupled Dataset in HKUST Main Building. arXiv 2025, arXiv:2502.16856.

- Lin, S.; Duan, L.; Jiang, B.; Liu, J.; Guo, H.; Zhao, J. Scan vs. BIM: Automated Geometry Detection and BIM Updating of Steel Framing through Laser Scanning. Autom. Constr. 2025, 170, 105931.

- Bahreini, F.; Nasrollahi, M.; Taher, A.; Hammad, A. Ontology for BIM-Based Robotic Navigation and Inspection Tasks. Buildings 2024, 14, 2274.

- Hu, X.; Assaad, R.H. A BIM-Enabled Digital Twin Framework for Real-Time Indoor Environment Monitoring and Visualization by Integrating Autonomous Robotics, LiDAR-Based 3D Mobile Mapping, IoT Sensing, and Indoor Positioning Technologies. J. Build. Eng. 2024, 86, 108901.

- Yarovoi, A.; Cho, Y.K. Review of Simultaneous Localization and Mapping (SLAM) for Construction Robotics Applications. Autom. Constr. 2024, 162, 105344.

- De Geyter, S.; Bassier, M.; De Winter, H.; Vergauwen, M. Review of Window and Door Type Detection Approaches. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLVIII-2/W1, 65–72.

- van der Vaart, J.; Stoter, J.; Agugiaro, G.; Arroyo Ohori, K.; Hakim, A.; El Yamani, S. Enriching Lower LoD 3D City Models with Semantic Data Computed by the Voxelisation of BIM Sources. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, X-4/W5, 297–308.

- Bassier, M.; Vermandere, J.; De Geyter, S.; De Winter, H. GEOMAPI: Processing Close-Range Sensing Data of Construction Scenes with Semantic Web Technologies. Autom. Constr. 2024, 164, 105454.

- Tran, H.; Khoshelham, K. Procedural Reconstruction of 3D Indoor Models from Lidar Data Using Reversible Jump Markov Chain Monte Carlo. Remote Sens. 2020, 12, 838.

- Walch, A.; Szabo, A.; Steinlechner, H.; Ortner, T.; Gröller, E.; Schmidt, J. BEMTrace: Visualization-Driven Approach for Deriving Building Energy Models from BIM. IEEE Trans. Vis. Comput. Graph. 2025, 31, 240–250.

- Reddy, V.J.; Hariram, N.P.; Ghazali, M.F.; Kumarasamy, S. Pathway to Sustainability: An Overview of Renewable Energy Integration in Building Systems. Sustainability 2024, 16, 638.

- Arowoiya, V.A.; Moehler, R.C.; Fang, Y. Digital Twin Technology for Thermal Comfort and Energy Efficiency in Buildings: A State-of-the-Art and Future Directions. Energy Built Environ. 2024, 5, 641–656.

- Krispel, U.; Evers, H.; Tamke, M.; Ullrich, T. Data Completion in Building Information Management: Electrical Lines from Range Scans and Photographs. Vis. Eng. 2017, 5, 4.

- Yeom, S.; Kim, J.; Kang, H.; Jung, S.; Hong, T. Digital Twin (DT) and Extended Reality (XR) for Building Energy Management. Energy Build. 2024, 323, 114746.

- Han, F.; Du, F.; Jiao, S.; Zou, K. Predictive Analysis of a Building’s Power Consumption Based on Digital Twin Platforms. Energies 2024, 17, 3692.

- Michalakopoulos, V.; Pelekis, S.; Kormpakis, G.; Karakolis, V.; Mouzakitis, S.; Askounis, D. Data-Driven Building Energy Efficiency Prediction Using Physics-Informed Neural Networks. In Proceedings of the 2024 IEEE Conference on Technologies for Sustainability (SusTech), Portland, OR, USA, 14–17 April 2024; pp. 84–91.

- Walczyk, G.; Ożadowicz, A. Building Information Modeling and Digital Twins for Functional and Technical Design of Smart Buildings with Distributed IoT Networks—Review and New Challenges Discussion. Future Internet 2024, 16, 225.

- Kiavarz, H.; Jadidi, M.; Esmaili, P. A Graph-Based Explanatory Model for Room-Based Energy Efficiency Analysis Based on BIM Data. Front. Built Environ. 2023, 9, 1256921.

- Wang, M.; Lilis, G.N.; Mavrokapnidis, D.; Katsigarakis, K.; Korolija, I.; Rovas, D. A Knowledge Graph-Based Framework to Automate the Generation of Building Energy Models Using Geometric Relation Checking and HVAC Topology Establishment. Energy Build. 2024, 325, 115035.

- Available online: https://github.com/ladybug-tools (accessed on 16 April 2025).

- Zhou, Y.; Liu, J. Advances in Emerging Digital Technologies for Energy Efficiency and Energy Integration in Smart Cities. Energy Build. 2024, 315, 114289.

- Liu, Z.; He, Y.; Demian, P.; Osmani, M. Immersive Technology and Building Information Modeling (BIM) for Sustainable Smart Cities. Buildings 2024, 14, 1765.

- Jabi, W.; Soe, S.; Theobald, P.; Aish, R.; Lannon, S. Enhancing Parametric Design through Non-Manifold Topology. Des. Stud. 2017, 52, 96–114.

- Yue, Y.; Kontogianni, T.; Schindler, K.; Engelmann, F. Connecting the Dots: Floorplan Reconstruction Using Two-Level Queries. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

- Aish, R.; Jabi, W.; Lannon, S.; Wardhana, N.; Chatzivasileiadi, A. Topologic: Tools to Explore Architectural Topology. In Proceedings of the Advances in Architectural Geometry 2018, Gothenburg, Sweden, 22–25 September 2018.

- Moradi, M.A.; Mohammadrashidi, O.; Niazkar, N.; Rahbar, M. Revealing Connectivity in Residential Architecture: An Algorithmic Approach to Extracting Adjacency Matrices from Floor Plans. Front. Archit. Res. 2024, 13, 370–386.

- Han, J.; Lu, X.-Z.; Lin, J.-R. Unified Network-Based Representation of BIM Models for Embedding Semantic, Spatial, and Topological Data. arXiv 2025, arXiv:2505.22670.

- Allen, B.D. Digital twins and living models at NASA. In Proceedings of the Digital Twin Summit, Virtual, 3–4 November 2021.

- Nguyen, T.D.; Adhikari, S. The Role of BIM in Integrating Digital Twin in Building Construction: A Literature Review. Sustainability 2023, 15, 10462.

- Tuhaise, V.V.; Tah, J.H.M.; Abanda, F.H. Technologies for Digital Twin Applications in Construction. Autom. Constr. 2023, 152, 104931.

- Kang, T.W.; Mo, Y. A Comprehensive Digital Twin Framework for Building Environment Monitoring with Emphasis on Real-Time Data Connectivity and Predictability. Dev. Built Environ. 2024, 17, 100309.

- Chen, X.; Pan, Y.; Gan, V.J.L.; Yan, K. 3D reconstruction of semantic-rich digital twins for ACMV monitoring and anomaly detection via scan-to-BIM and time-series data integration. Dev. Built Environ. 2024, 19, 100503.

- Arsecularatne, B.P.; Rodrigo, N.; Chang, R. Review of reducing energy consumption and carbon emissions through digital twin in built environment. J. Build. Eng. 2024, 98, 111150.

- Wysocki, O.; Schwab, B.; Biswanath, M.K.; Zhang, Q.; Zhu, J.; Froech, T.; Heeramaglore, M.; Hijazi, I.; Kanna, K.; Pechinger, M.; et al. TUM2TWIN: Introducing the Large-Scale Multimodal Urban Digital Twin Benchmark Dataset. arXiv 2025, arXiv:2505.07396.

- Faraji, A.; Arya, S.H.; Ghasemi, E.; Soleimani, H. Considering the Digital Twin as the Evolved Level of BIM in the Context of Construction 4.0. In Proceedings of the 1st National & International Conference on Architecture, Advanced Technologies and Construction Management (IAAC), Tehran, Iran, 15 November 2023.

- Roman, O.; Bassier, M.; De Geyter, S.; De Winter, H.; Farella, E.M.; Remondino, F. BIM Module for Deep Learning-Driven Parametric IFC Reconstruction. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, XLVIII-2/W8, 403–410.

- Roman, O.; Mazzacca, G.; Farella, E.M.; Remondino, F.; Bassier, M.; Agugiaro, G. Towards Automated BIM and BEM Model Generation Using a B-Rep-Based Method with Topological Map. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, X-4, 287–294.

- Wu, X.; Jiang, L.; Wang, P.-S.; Liu, Z.; Liu, X.; Qiao, Y.; Ouyang, W.; He, T.; Zhao, H. Point Transformer v3: Simpler Faster Stronger. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 4840–4851.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the International Conference on Advanced Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024.

- Hahsler, M.; Piekenbrock, M. dbscan: Density-Based Spatial Clustering of Applications with Noise (DBSCAN) and Related Algorithms. J. Stat. Softw. 2023, 91, 1–30.

- Agugiaro, G.; Peters, R.; Stoter, J.; Dukai, B. Computing Volumes and Surface Areas Including Party Walls for the 3DBAG Data Set; TU Delft/3DGI: Delft, The Netherlands, 2023.

- Roman, O.; Farella, E.M.; Rigon, S.; Remondino, F.; Ricciuti, S.; Viesi, D. From 3D Surveying Data to BIM to BEM: The INCUBE Dataset. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, XLVIII-1/W3, 175–182.

- FME Software. Copyright (c) Safe Software Inc. Available online: www.safe.com (accessed on 28 March 2025).

- Agugiaro, G.; Padsala, R. A proposal to update and enhance the CityGML Energy Application Domain Extension. ISPRS Ann. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2025, X-4/W6-2025, 1–8.

| | House 01 | Block N | PEA School | Santa Chiara |
|---|---|---|---|---|
| Type of device | MLS | TLS | TLS | TLS |
| Density | 6 mm | 5 mm | 5 mm | 5 mm |
| Number of Floorplans | 2 | 4 | 1 | 1 |
| Contains indoor sensor data | ✗ | ✗ | ✓ | ✓ |

| Elements | Std Deviation (%) | mIoU (%) | mAcc (%) |
|---|---|---|---|
| Unclassified | 5.1 | 78.3 | 85.4 |
| Floors | 2.6 | 94.2 | 96.8 |
| Ceilings | 2.7 | 92.7 | 95.6 |
| Walls | 2.4 | 87.5 | 89.4 |
| Columns | 0.7 | 44.1 | 71.5 |
| Doors | 1.1 | 51.6 | 65.3 |
| Windows | 1.6 | 58.8 | 70.2 |

| Dataset | Metric | Columns [%] | Doors [%] | Walls [%] | Windows [%] |
|---|---|---|---|---|---|
| House 01—First floor NavVis dataset | mIoU | — | 70.2 | 88.6 | 68.1 |
| | F1 | — | 72.5 | 85.2 | 70.3 |
| House 01—Ground Floor NavVis dataset | mIoU | 85.2 | 68.9 | 89.9 | 67.8 |
| | F1 | 86.9 | 70.7 | 87.0 | 69.4 |
| Block N KULeuven Campus | mIoU | — | 73.4 | 91.7 | 71.3 |
| | F1 | — | 75.1 | 88.6 | 72.8 |
| Santa Chiara Trento, Italy | mIoU | 75.1 | 77.3 | 94.8 | 76.2 |
| | F1 | 89.1 | 78.2 | 92.0 | 77.5 |
| School PEA Italy | mIoU | 78.3 | 76.4 | 96.3 | 75.8 |
| | F1 | 81.2 | 77.9 | 94.0 | 76.7 |

| Dataset | Topological Solid Model (TSM) | | | | B-Rep Model (th = 0.065 m) | | | |
|---|---|---|---|---|---|---|---|---|
| | Precision | Recall | F1 | mIoU | Precision | Recall | F1 | mIoU |
| House 01—Ground Floor | 0.950 | 0.903 | 0.926 | 0.948 | 0.899 | 0.879 | 0.889 | 0.849 |
| House 01—First Floor | 0.961 | 0.922 | 0.941 | 0.958 | 0.921 | 0.901 | 0.911 | 0.871 |
| Block N—Ground Floor | 0.947 | 0.930 | 0.938 | 0.981 | 0.931 | 0.911 | 0.921 | 0.881 |
| Block N—First Floor | 0.973 | 0.943 | 0.958 | 0.968 | 0.938 | 0.918 | 0.928 | 0.888 |
| Block N—Second Floor | 0.984 | 0.957 | 0.970 | 0.972 | 0.946 | 0.926 | 0.936 | 0.896 |
| Block N—Third Floor | 0.983 | 0.953 | 0.968 | 0.969 | 0.981 | 0.961 | 0.971 | 0.931 |
| Santa Chiara—Italy | 0.937 | 0.879 | 0.907 | 0.934 | 0.898 | 0.878 | 0.888 | 0.848 |
| School PEA—Italy | 0.930 | 0.867 | 0.897 | 0.927 | 0.887 | 0.867 | 0.877 | 0.837 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Roman, O.; Bassier, M.; Agugiaro, G.; Arroyo Ohori, K.; Farella, E.M.; Remondino, F. Scan-to-EDTs: Automated Generation of Energy Digital Twins from 3D Point Clouds. Buildings 2025, 15, 4060. https://doi.org/10.3390/buildings15224060
