Integration of Drone-Based 3D Scanning and BIM for Automated Construction Progress Control

Abstract

1. Background

Overview of Site Progress Control: Barriers and Challenges

2. Literature Review

Technologies Enabling the New Site Control Methodology

- BIM Methodology including 4D planning

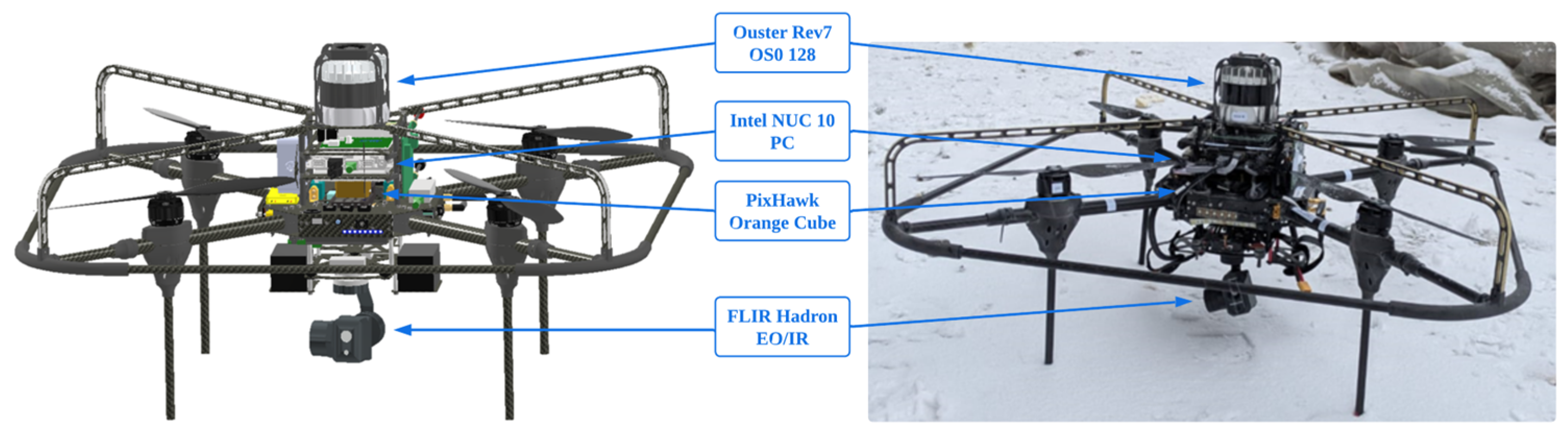

- Drone-based onsite data capture

- 3D point cloud generation and processing from scan sensors [17]

- FME for data integration

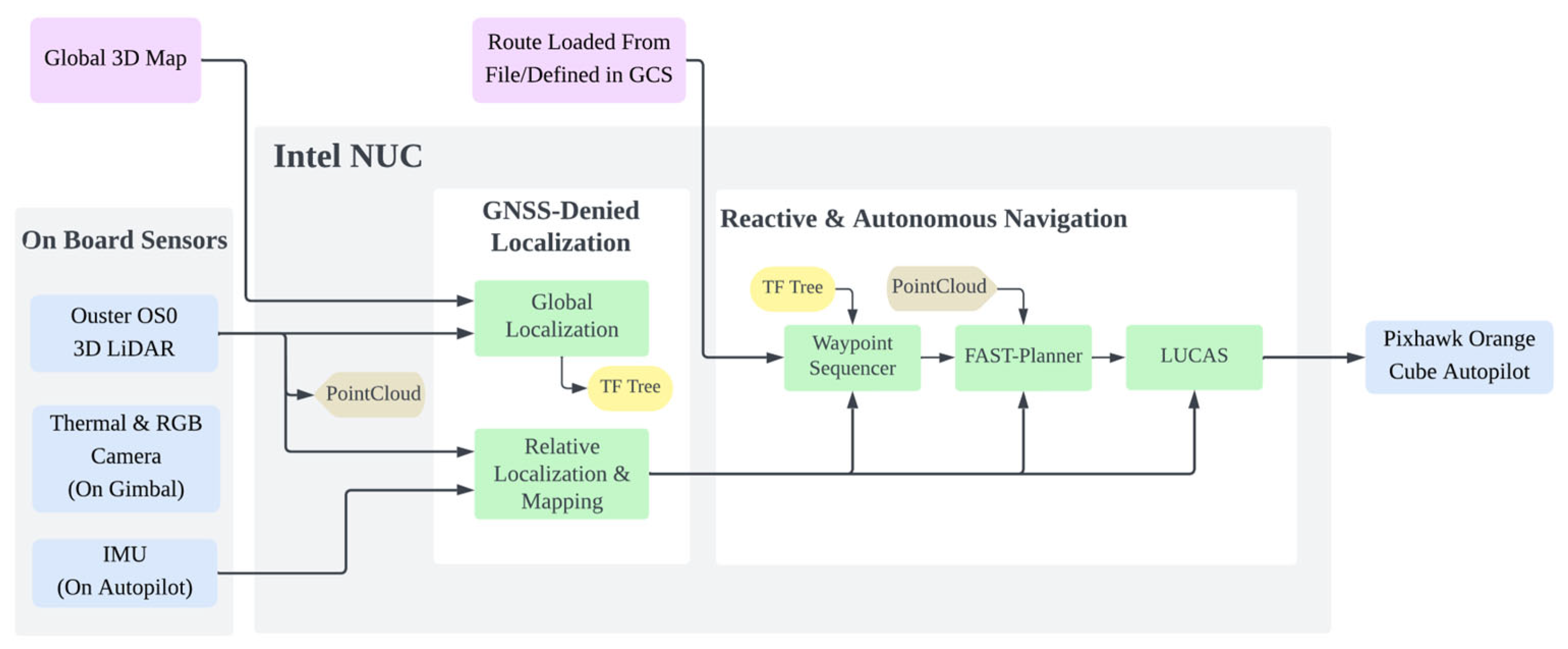

- Developing a fully autonomous aerial vehicle (drone) capable of navigating safely and mapping online in confined environments without a GNSS signal.

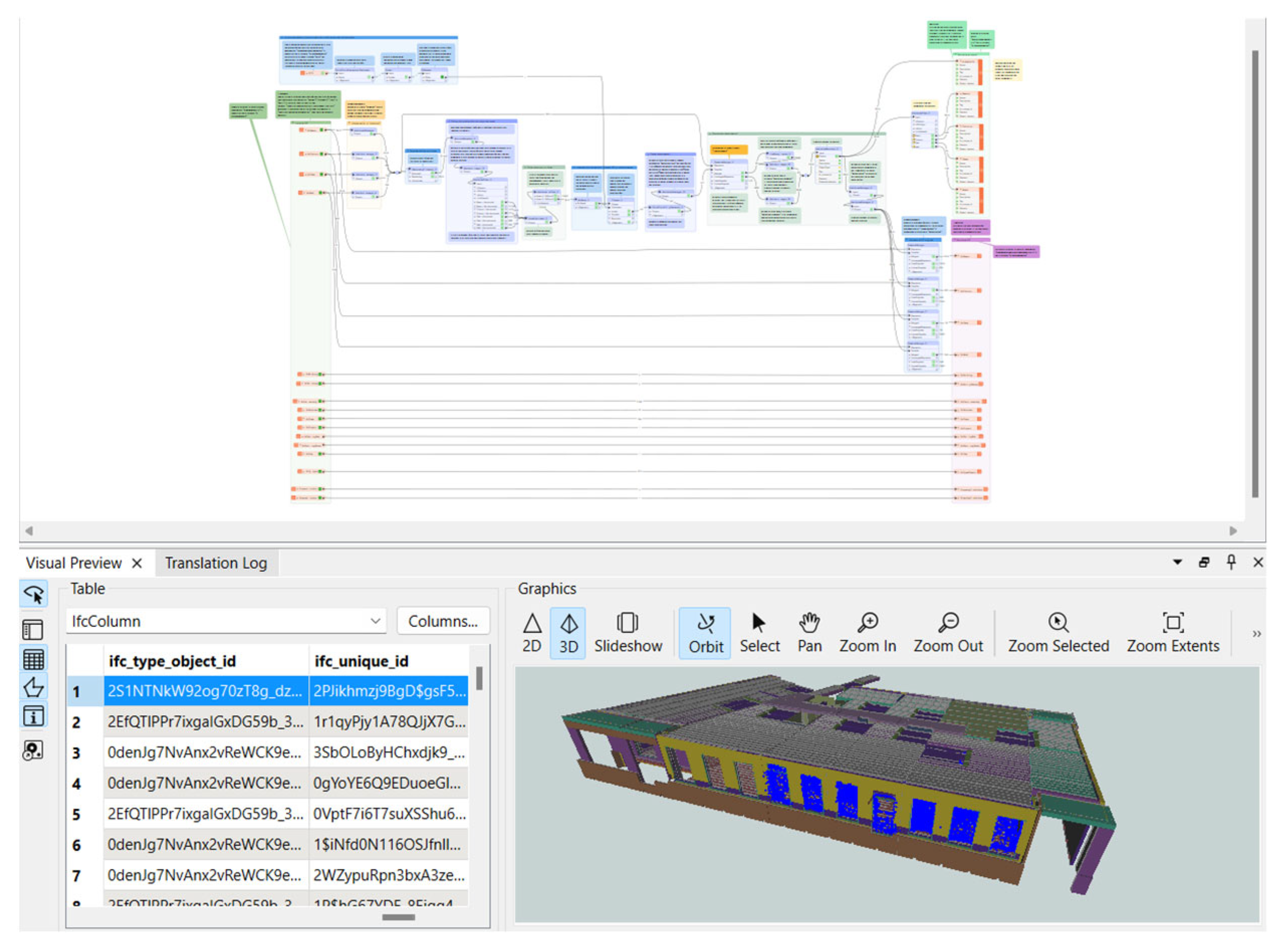

- Automating the integration of 3D point clouds and BIM models through visual programming with the FME tool.

3. Materials and Methods

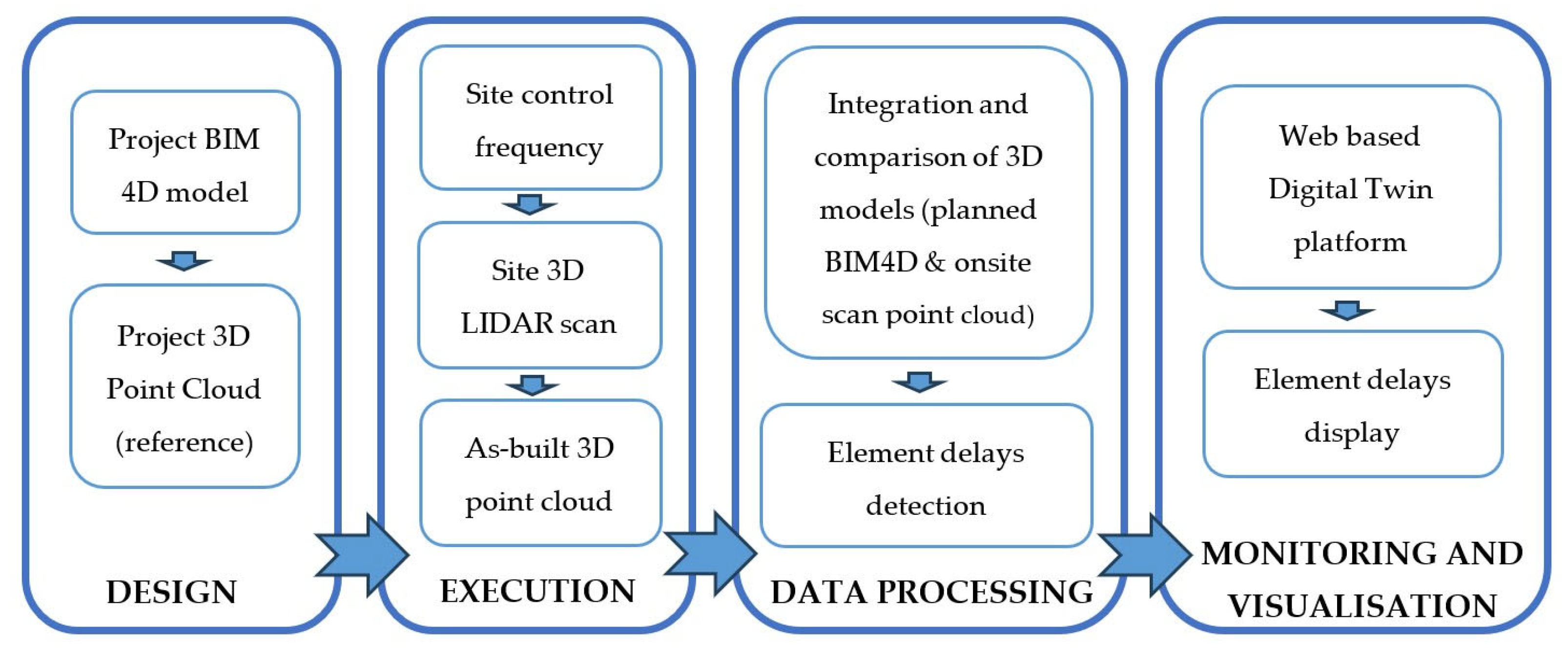

3.1. Design and Development of a Workflow for Automatic Control Monitoring

- (a) DESIGN PHASE

- Project Information Model drafting according to Specific Requirements:

- Transformation of the project BIM model into a 3D reference point cloud for later scan positioning (optional)

- (b) EXECUTION PHASE

- Definition of the site control frequency

- Scan of the construction site with the drone

- Point cloud generation of the site

- (c) DATA PROCESSING PHASE

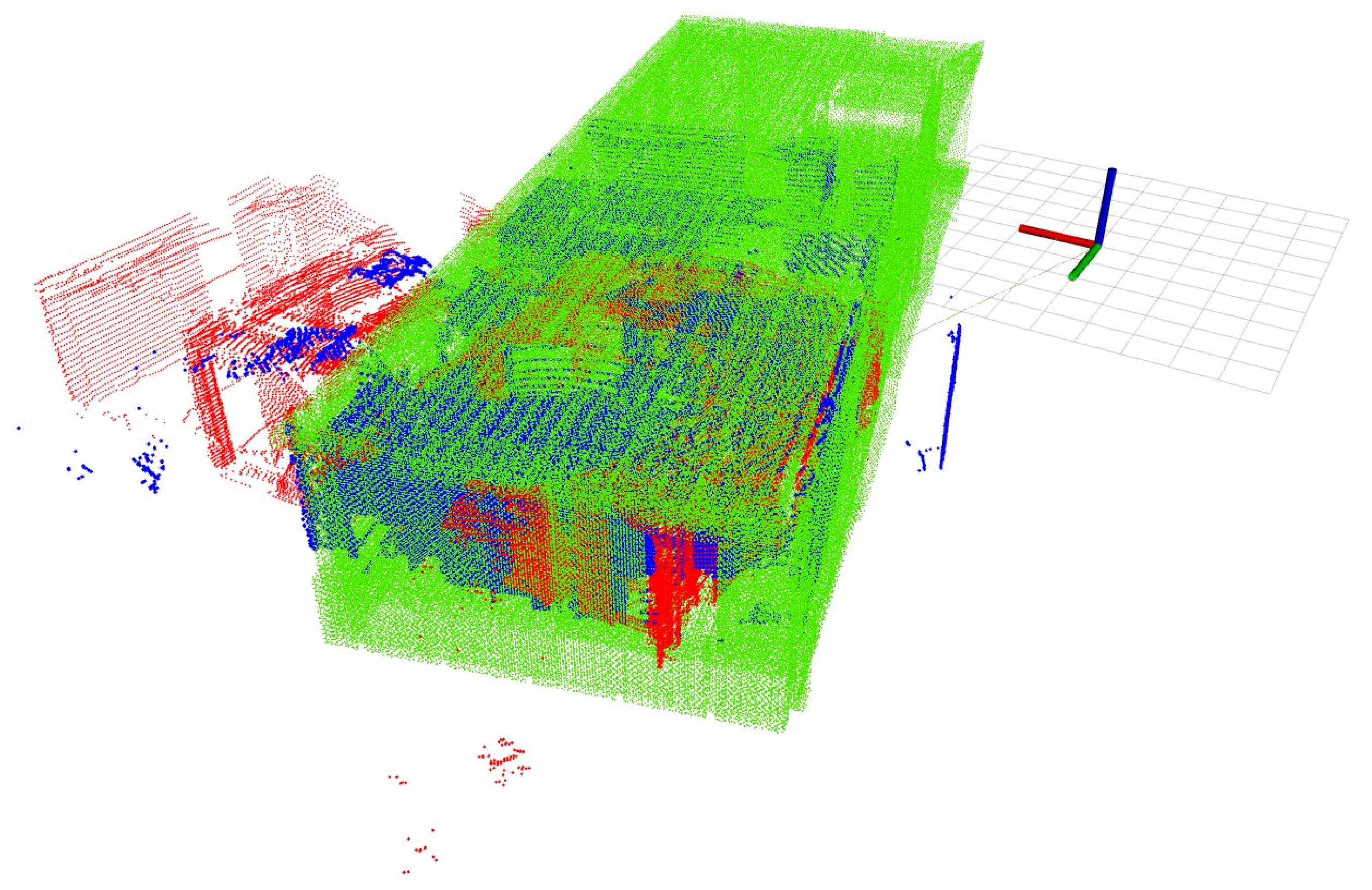

- Comparison of BIM4D model (filtered) with the onsite scan point cloud

- (d)

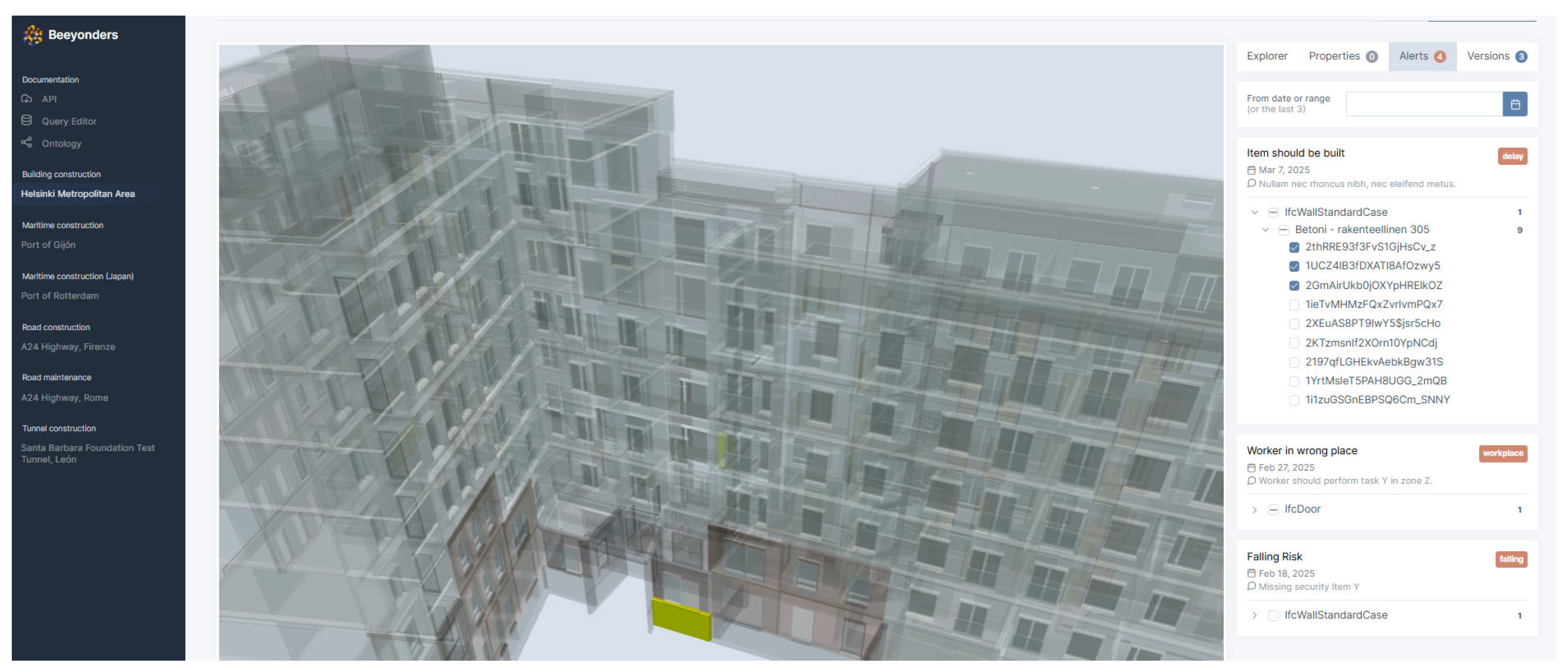

- MONITORING AND VISUALIZATION PHASE
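The data processing phase compares a filtered BIM 4D model with the scan. The outline does not say how the filter is defined; one plausible reading is that only elements scheduled to be finished by the scan date are kept. The sketch below illustrates that reading in plain Python. The `ScheduledElement` type, its fields, and the element identifiers are hypothetical and are not taken from the authors' FME workspace.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ScheduledElement:
    element_id: str        # hypothetical identifier, e.g. an IFC GlobalId
    planned_finish: date   # planned completion date from the 4D schedule


def elements_due_by(elements, scan_date):
    """Keep the elements the schedule expects to be in place on scan_date."""
    return [e for e in elements if e.planned_finish <= scan_date]


# Illustrative schedule; the dates are invented for the example.
schedule = [
    ScheduledElement("wall-01", date(2024, 3, 1)),
    ScheduledElement("slab-02", date(2024, 3, 15)),
    ScheduledElement("wall-03", date(2024, 4, 10)),
]

due = elements_due_by(schedule, date(2024, 3, 20))
print([e.element_id for e in due])  # ['wall-01', 'slab-02']
```

Filtering before the geometric comparison keeps elements that are not yet due from being reported as missing.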

3.2. Development of Key Activities

- Development and adaptation of the drone for the scan of the construction site (EXECUTION PHASE)

- GNSS-Denied Localization

- Reactive and Autonomous Navigation

- Data processing with FME for 3D model integration (DESIGN and DATA PROCESSING PHASES)

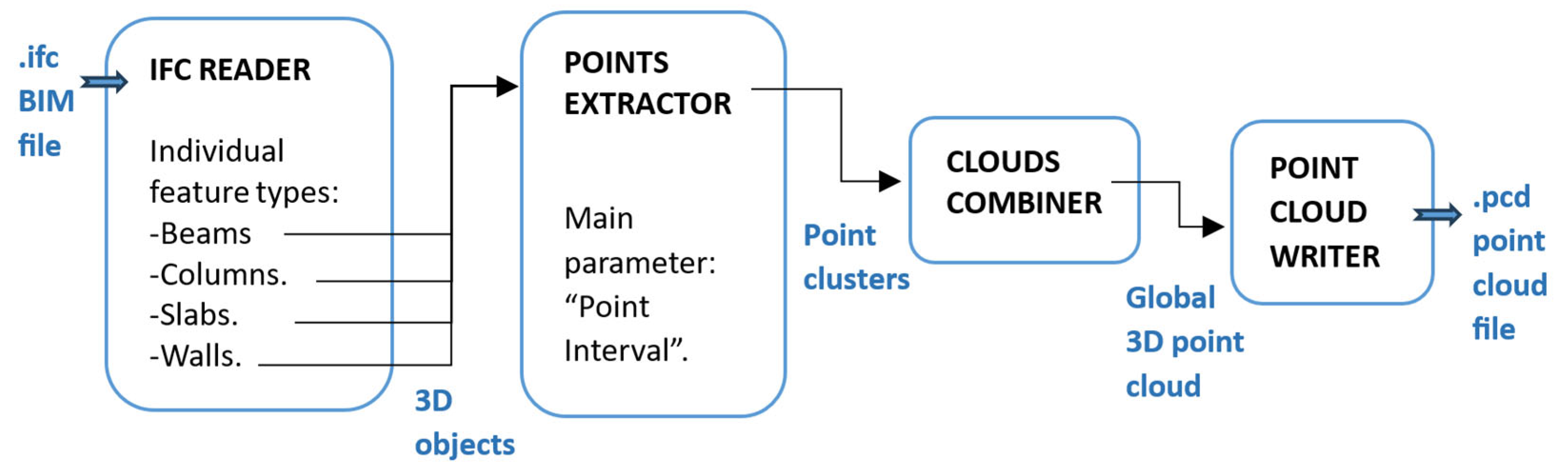

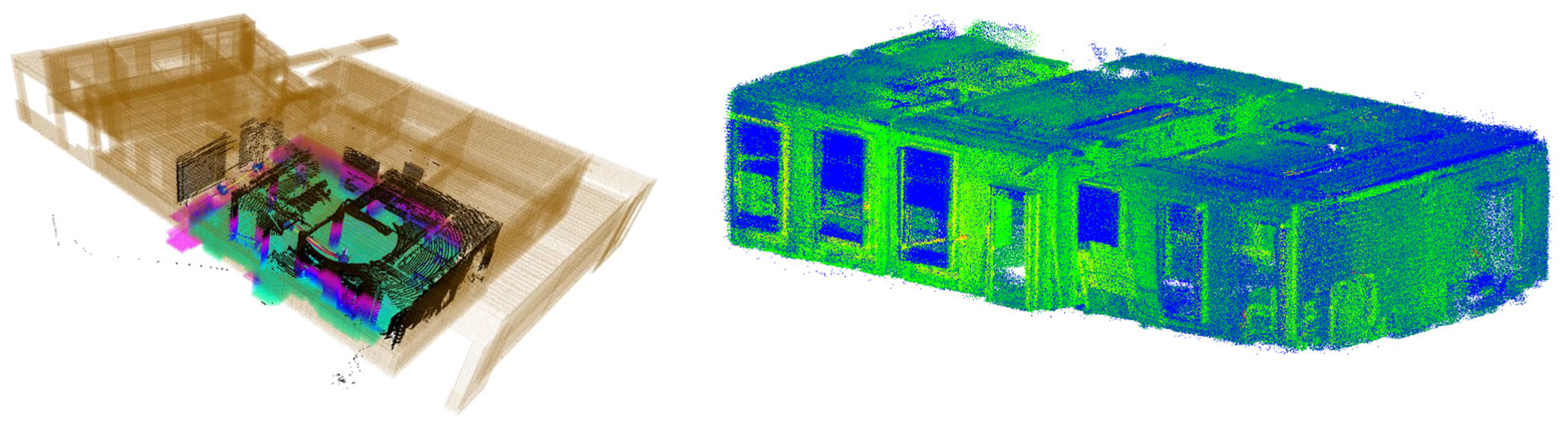

- Reference point cloud generation:

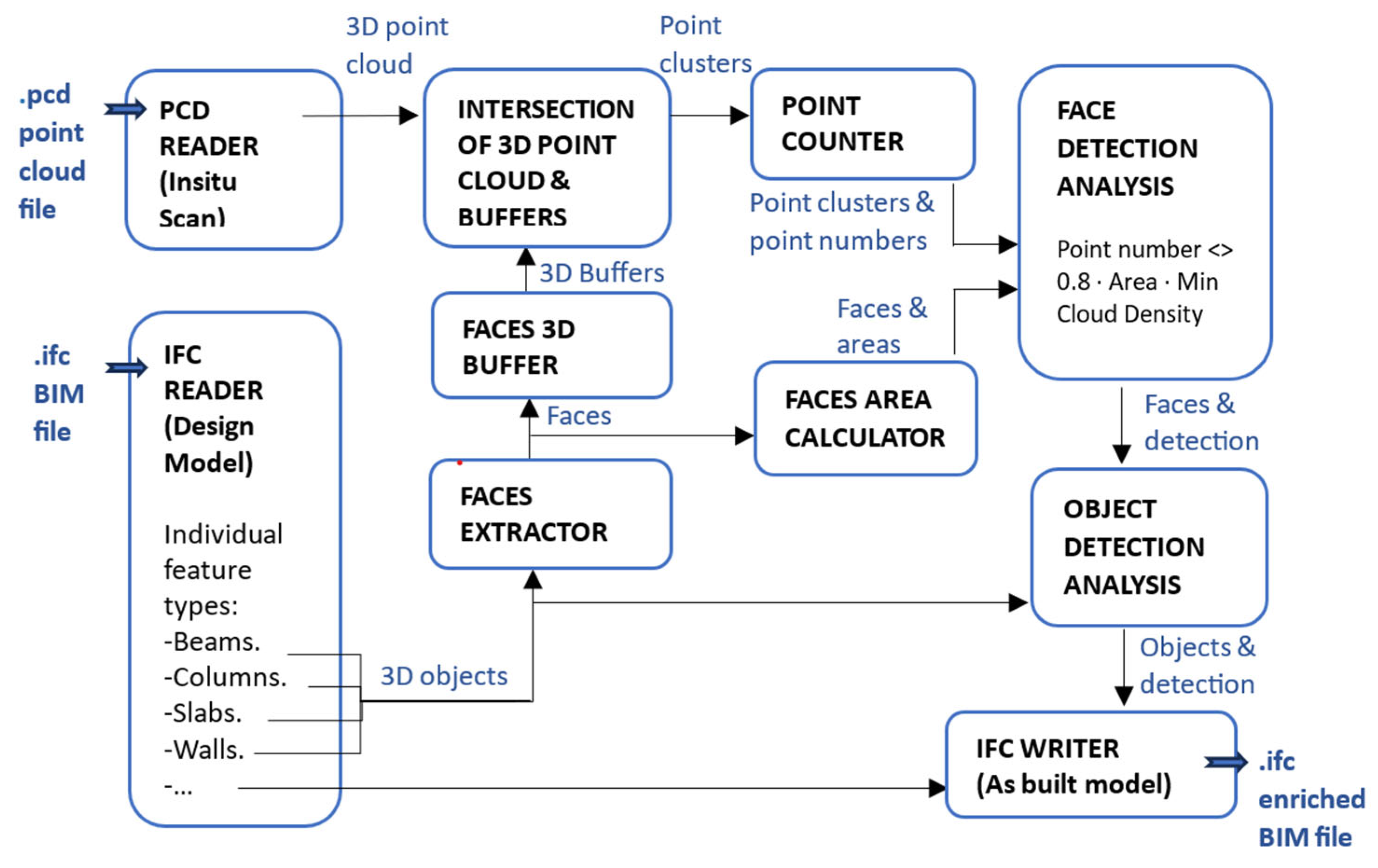

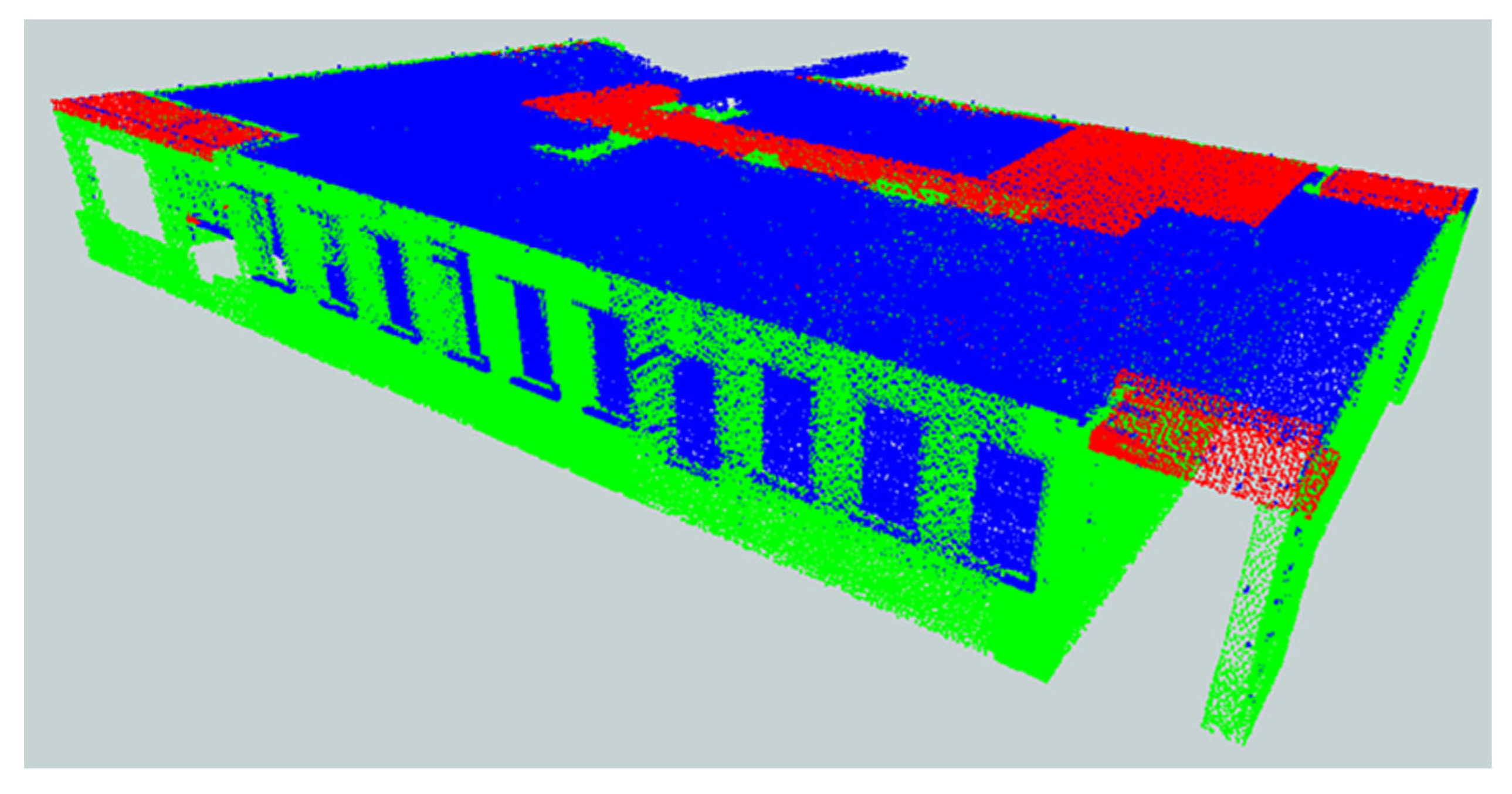
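Reference point cloud generation turns the BIM geometry into points that a scan can later be positioned against. The authors do this in FME. As a rough illustration of the underlying idea only, the sketch below samples points uniformly on the triangles of a meshed element using standard barycentric sampling. The function names, the `points_per_m2` density parameter, and the wall geometry are assumptions made for the example.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle from the cross product of two edges."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def sample_point(a, b, c, rng):
    """Uniform random point inside triangle abc (square-root barycentric trick)."""
    s = math.sqrt(rng.random())
    r2 = rng.random()
    w0, w1, w2 = 1.0 - s, s * (1.0 - r2), s * r2
    return tuple(w0 * a[i] + w1 * b[i] + w2 * c[i] for i in range(3))


def mesh_to_point_cloud(triangles, points_per_m2, seed=0):
    """Sample each triangle with a point count proportional to its area."""
    rng = random.Random(seed)
    cloud = []
    for a, b, c in triangles:
        n = max(1, round(triangle_area(a, b, c) * points_per_m2))
        cloud.extend(sample_point(a, b, c, rng) for _ in range(n))
    return cloud


# Hypothetical 2 m x 1 m wall face in the plane y = 0, split into two triangles.
wall_face = [
    ((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 0.0, 1.0)),
    ((0.0, 0.0, 0.0), (2.0, 0.0, 1.0), (0.0, 0.0, 1.0)),
]
cloud = mesh_to_point_cloud(wall_face, points_per_m2=100)
print(len(cloud))  # 200
```

Area-proportional sampling gives a roughly constant point density across large and small faces, which matters if the reference cloud is later matched against a scan of similar density.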

- Planned BIM model and scan point cloud comparison:
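The comparison step decides, for each planned element, whether the scan contains it at its planned location. The outline does not give the exact criterion used in the FME workflow, so the following is a minimal sketch of one common scan-vs-BIM approach: an element counts as detected when a sufficient fraction of its model points has a scan point within a distance tolerance. The 5 cm tolerance, the 50% coverage threshold, and all function names are assumptions, not values reported by the authors.

```python
import math
from collections import defaultdict


def build_grid(points, cell):
    """Hash scan points into cubic cells for fast neighbour lookup."""
    grid = defaultdict(list)
    for p in points:
        grid[tuple(math.floor(c / cell) for c in p)].append(p)
    return grid


def has_neighbor(grid, p, cell, tol):
    """True if any scan point lies within tol of p (requires tol <= cell)."""
    kx, ky, kz = (math.floor(c / cell) for c in p)
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for dz in (-1, 0, 1):
                for q in grid.get((kx + dx, ky + dy, kz + dz), ()):
                    if math.dist(p, q) <= tol:
                        return True
    return False


def classify_elements(elements, scan_points, tol=0.05, min_coverage=0.5):
    """Map element id -> True when enough model points are matched by the scan."""
    grid = build_grid(scan_points, cell=tol)
    result = {}
    for element_id, model_points in elements.items():
        hits = sum(has_neighbor(grid, p, tol, tol) for p in model_points)
        result[element_id] = hits / len(model_points) >= min_coverage
    return result


# Hypothetical data: wall-A was scanned with a 1 cm offset, wall-B is absent.
elements = {
    "wall-A": [(i / 10, 0.0, 0.0) for i in range(11)],
    "wall-B": [(i / 10, 5.0, 0.0) for i in range(11)],
}
scan = [(i / 10, 0.01, 0.0) for i in range(11)]
print(classify_elements(elements, scan))  # {'wall-A': True, 'wall-B': False}
```

A coverage threshold below 100% tolerates occlusion by stored material or scaffolding, at the cost of a higher risk of false detections; both parameters would need tuning against ground truth on a real site.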

3.3. Validation Through an Application of the Innovative Workflow to a Real Case Study

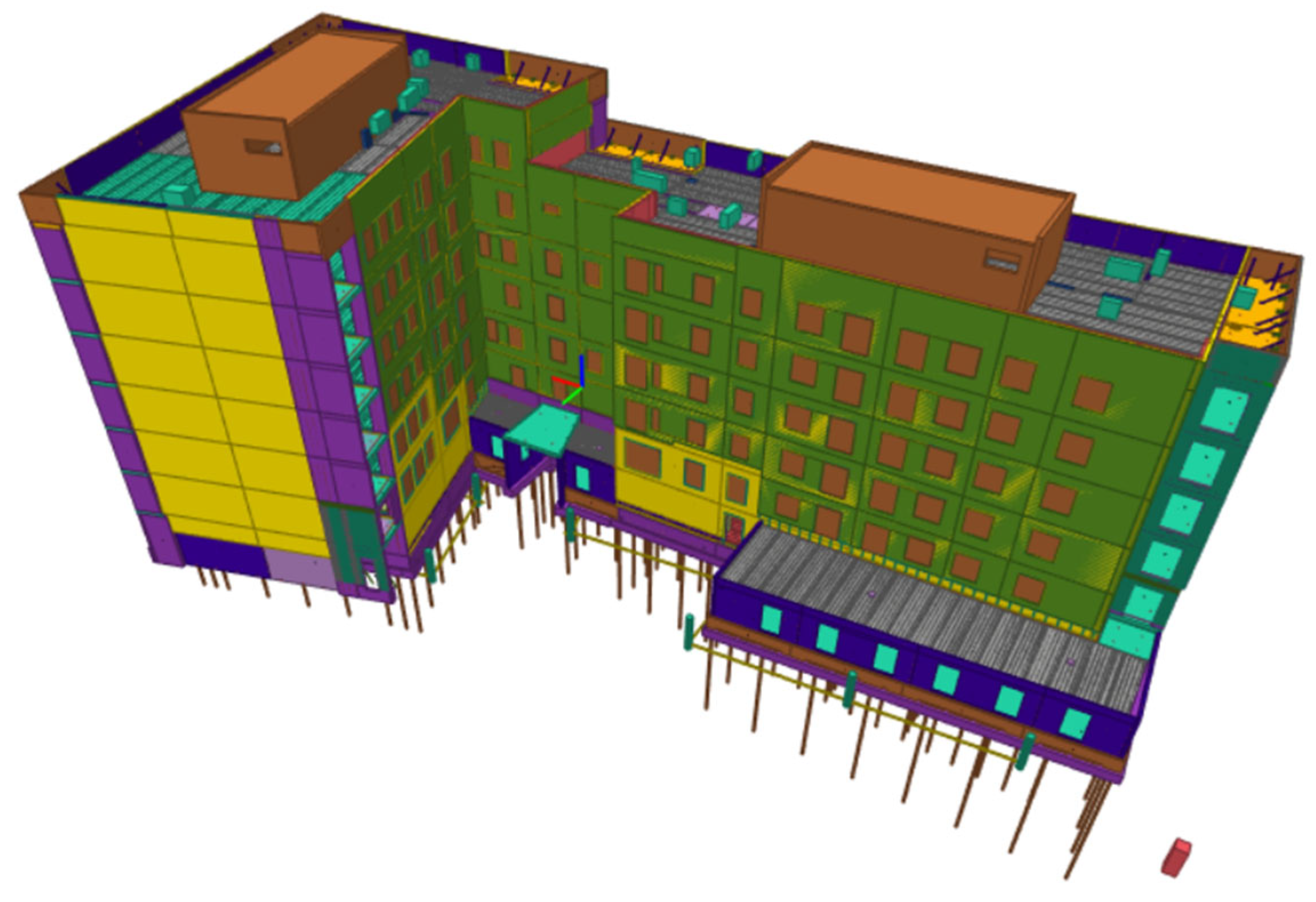

- Eight-story residential building with 99 apartments, built by Fira Rakennus Oy in Finland. The building is located in a newly built metropolitan area in Pasila, Helsinki.

- Frame erection construction phase including inner gypsum-based separation walls.

- Construction technology based on precast concrete elements (slabs and walls). This has specific implications: for example, control is limited to detecting whether planned elements are present at their planned location, and the execution errors common in in situ concrete work are ruled out.

- Work quality improvement by covering more space in the quality inspections;

- Reduction in the project throughput time by reducing manual monitoring times;

- Reduction in reaction times to onsite safety hazards compared with manual surveying;

- Human worker acceptance of the automated monitoring technology.

4. Findings

Application of the Methodology to the Case Study

- (a) DESIGN PHASE

- Project BIM4D model

- 3D reference point cloud for later scan positioning

- (b) EXECUTION PHASE

- Definition of the site control frequency

- Scan of the construction site with the drone

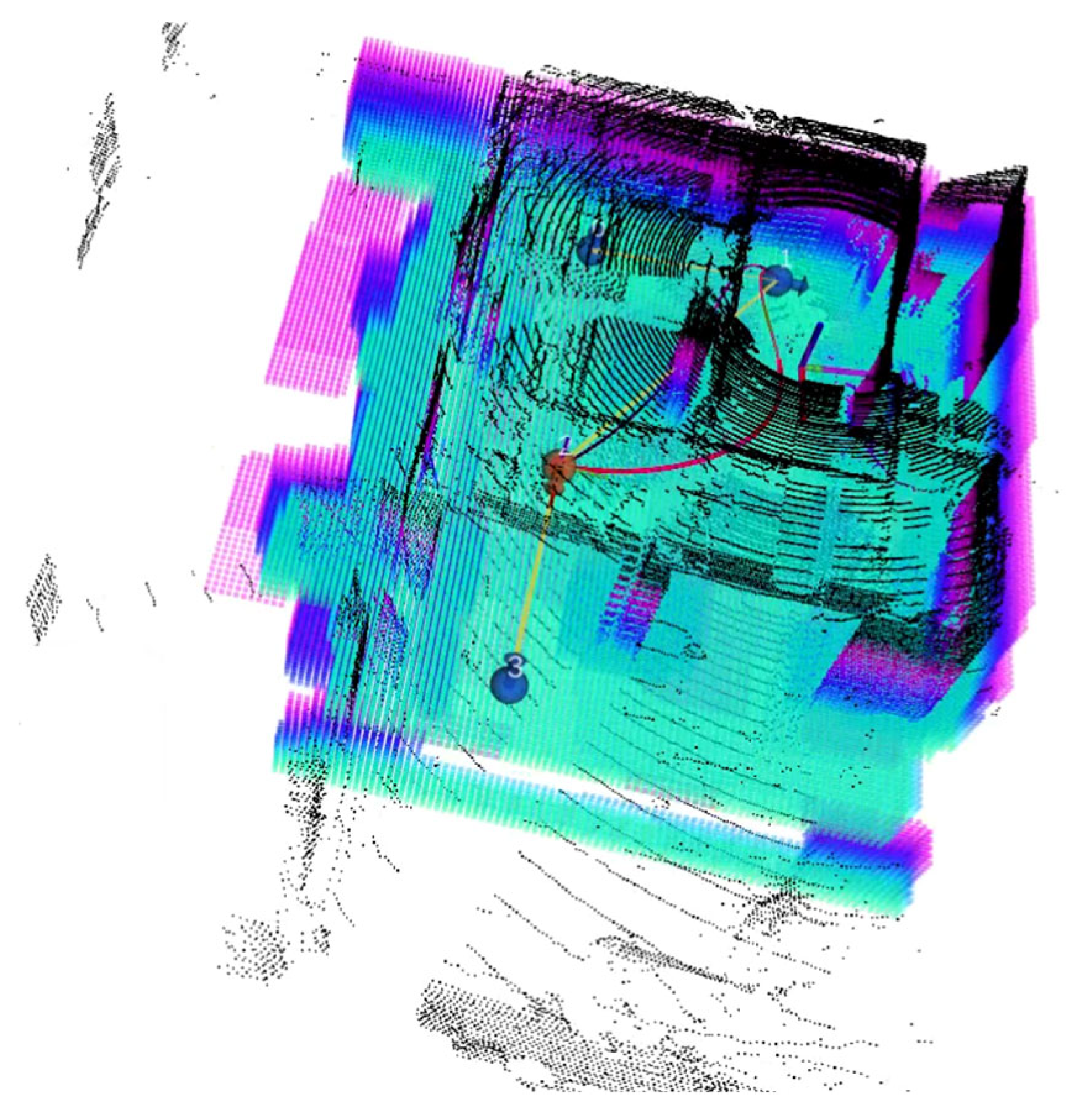

- Point cloud generation of the site

- (c) DATA PROCESSING PHASE

- Comparison of BIM4D model (filtered) with the onsite scan point cloud

- (d) MONITORING AND VISUALIZATION PHASE

- Detection accuracy: 92% match between executed elements identified by the workflow and ground-truth verification.

- False positive/negative rates: <5% misclassification of elements.

- Time savings: monitoring time reduced by approximately 70% compared to manual inspections.

- Reliability: 95% of drone missions completed without operator intervention, with only occasional retries due to battery exchange or adverse lighting conditions.
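The outline reports these indicators without giving the formulas behind them. The sketch below shows one conventional way to derive figures of this kind from per-element detections and a ground-truth survey. The function names are hypothetical and the sample data is invented purely to exercise the code; it is not the case-study data and does not reproduce the values above.

```python
def detection_metrics(detected, ground_truth):
    """Accuracy and error rates from per-element booleans (True = element built)."""
    tp = fp = tn = fn = 0
    for element_id, built in ground_truth.items():
        flagged = detected.get(element_id, False)
        if flagged and built:
            tp += 1
        elif flagged and not built:
            fp += 1
        elif built:
            fn += 1
        else:
            tn += 1
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


def time_saving(manual_hours, automated_hours):
    """Relative reduction in monitoring time compared with manual inspection."""
    return 1.0 - automated_hours / manual_hours


# Invented example: 10 elements, 6 actually built, one miss and one false alarm.
ground_truth = {f"e{i}": i < 6 for i in range(10)}
detected = dict(ground_truth)
detected["e5"] = False   # built element the workflow missed
detected["e9"] = True    # unbuilt element the workflow flagged

metrics = detection_metrics(detected, ground_truth)
print(metrics["accuracy"])             # 0.8
print(metrics["false_positive_rate"])  # 0.25
print(round(time_saving(10.0, 3.0), 2))  # 0.7
```

Reporting false positives and false negatives separately is useful in progress control because the two errors have different consequences: a false positive hides a delay, while a false negative triggers an unnecessary site check.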

5. Discussions

5.1. Limitations

5.2. Computational Requirements and Scalability

6. Conclusions

6.1. Conclusions of the Research and Impact on Construction Industry

6.2. Future Prospects and Pending Challenges

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| FME | Feature Manipulation Engine |
| ETL | Extract, Transform, Load |
| BIM | Building Information Modeling |
| 4D | Fourth Dimension |
| SLAM | Simultaneous Localization and Mapping |
| IFC | Industry Foundation Classes |
| MVD | Model View Definition |
| MEP | Mechanical, Electrical, and Plumbing |
| LIDAR | Light Detection and Ranging |
| PCD | Point Cloud Data |
| GNSS | Global Navigation Satellite System |
| FLU | Forward-Left-Up |
| ISO | International Organization for Standardization |
| ASCII | American Standard Code for Information Interchange |
| KPI | Key Performance Indicator |
| LCA | Life Cycle Assessment |
| REST | Representational State Transfer |
| API | Application Programming Interface |
| IRR | Internal Rate of Return |

References

1. Mathew, A.; Li, S.; Pluta, K.; Djahel, R.; Brilakis, I. Digital Twin Enabled Construction Progress Monitoring. In Proceedings of the 2024 European Conference on Computing in Construction, Crete, Greece, 15–17 July 2024; Available online: https://ec-3.org/publications/conferences/EC32024/papers/EC32024_210.pdf (accessed on 16 December 2024).

2. Teslim, B.; Suprise, W. Comparing Manual and Automated Auditing Techniques in Building Assessments. 2024. Available online: https://www.researchgate.net/publication/386372774 (accessed on 10 March 2025).

3. Chauhan, I.; Seppänen, O. Automatic indoor construction progress monitoring: Challenges and solution. In Proceedings of the 2023 European Conference on Computing in Construction, Crete, Greece, 10–12 July 2023.

4. Tsige, G.Z.; Alsadik, B.S.A.; Oude Elberink, S.; Bassier, M. Automated Scan-vs-BIM Registration Using Columns Segmented by Deep Learning for Construction Progress Monitoring. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2025, XLVIII-G-2025, 1455–1462. Available online: https://isprs-archives.copernicus.org/articles/XLVIII-G-2025/1455/2025/ (accessed on 18 August 2025).

5. Gruner, F.; Romanschek, E.; Wujanz, D.; Clemen, C. Scan vs. BIM: Patch-Based Construction Progress Monitoring Using BIM and 3D Laser Scanning (ProgressPatch). 2023. Available online: https://fig.net/resources/proceedings/fig_proceedings/fig2023/papers/ts05d/TS05D_gruner_romanschek_et_al_12203.pdf (accessed on 16 December 2024).

6. Ibrahimkhil, M.; Shen, X.; Barati, K. Enhanced Construction Progress Monitoring through Mobile Mapping and As-built Modeling. In Proceedings of the 38th International Symposium on Automation and Robotics in Construction (ISARC 2021), Dubai, United Arab Emirates, 2–4 November 2021.

7. Kavaliauskas, P.; Fernandez, J.B.; McGuinness, K.; Jurelionis, A. Automation of Construction Progress Monitoring by Integrating 3D Point Cloud Data with an IFC-Based BIM Model. Buildings 2022, 12, 1754.

8. Reja, V.; Bhadaniya, P.; Varghese, K.; Ha, Q. Vision-Based Progress Monitoring of Building Structures Using Point-Intensity Approach. In Proceedings of the 38th International Symposium on Automation and Robotics in Construction (ISARC 2021), Dubai, United Arab Emirates, 2–4 November 2021.

9. Yu, S. Research on Construction Progress Monitoring Based on 3D Point Clouds and BIM Models. In Proceedings of the 2024 4th International Conference on Electronic Information Engineering and Computer Communication (EIECC), Wuhan, China, 27–29 December 2024; pp. 667–673.

10. Kim, B.; Jo, I.; Ham, N.; Kim, J.-J. Simplified Scan-vs-BIM Frameworks for Automated Structural Inspection of Steel Structures. Appl. Sci. 2024, 14, 11383.

11. Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 5135–5142.

12. Kaess, M.; Johannsson, H.; Roberts, R.; Ila, V.; Leonard, J.; Dellaert, F. iSAM2: Incremental smoothing and mapping with fluid relinearization and incremental variable reordering. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 3281–3288.

13. Zheng, C.; Xu, W.; Zou, Z.; Hua, T.; Yuan, C.; He, D.; Zhou, B.; Liu, Z.; Lin, J.; Zhu, F.; et al. FAST-LIVO2: Fast, Direct LiDAR–Inertial–Visual Odometry. IEEE Trans. Robot. 2025, 41, 326–346.

14. Koide, K.; Yokozuka, M.; Oishi, S.; Banno, A. Globally Consistent 3D LiDAR Mapping With GPU-Accelerated GICP Matching Cost Factors. IEEE Robot. Autom. Lett. 2021, 6, 8591–8598.

15. Maskeliūnas, R.; Maqsood, S.; Vaškevičius, M.; Gelšvartas, J. Fusing LiDAR and Photogrammetry for Accurate 3D Data: A Hybrid Approach. Remote Sens. 2025, 17, 443.

16. Rui, Y.; Lim, Y.-W.; Siang, T. Construction Project Management Based on Building Information Modeling (BIM). Civ. Eng. Archit. 2021, 9, 2055–2061.

17. Rebolj, D.; Pučko, Z.; Čuš Babič, N.; Bizjak, M.; Mongus, D. Point cloud quality requirements for Scan-vs-BIM based automated construction progress monitoring. Autom. Constr. 2017, 84, 323–334.

18. Wang, Q.; Tan, Y.; Mei, Z. Computational Methods of Acquisition and Processing of 3D Point Cloud Data for Construction Applications. Arch. Comput. Methods Eng. 2020, 27, 479–499.

19. Safe Software. FME: Data Integration Platform. 2024. Available online: https://fme.safe.com (accessed on 15 January 2024).

20. Caballero, F.; Merino, L. DLL: Direct LIDAR Localization. A map-based localization approach for aerial robots. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 28–30 September 2021; pp. 5491–5498.

21. Zhou, B.; Gao, F.; Wang, L.; Liu, C.; Shen, S. Robust and Efficient Quadrotor Trajectory Generation for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2019, 4, 3529–3536.

22. Murillo, J.I.; Montes, M.A.; Zahinos, R.; Trujillo, M.A.; Viguria, A.; Heredia, G. Simplifying Autonomous Aerial Operations: LUCAS, a Lightweight Framework for UAV Control and Supervision. In Proceedings of the 2025 International Conference on Unmanned Aircraft Systems (ICUAS), Charlotte, NC, USA, 14–17 May 2025; pp. 854–861.

23. Wei, H.; Li, R.; Cai, Y.; Yuan, C.; Ren, Y.; Zou, Z.; Wu, H.; Zheng, C.; Zhou, S.; Xue, K.; et al. LAMM: Large-scale multi-session point-cloud map merging. IEEE Robot. Autom. Lett. 2024, 10, 88–95.

24. Zhu, F.; Zhao, Y.; Chen, Z.; Jiang, C.; Zhu, H.; Hu, X. DyGS-SLAM: Realistic Map Reconstruction in Dynamic Scenes Based on Double-Constrained Visual SLAM. Remote Sens. 2025, 17, 625.

25. Liu, Y.; Dong, S.; Wang, S.; Yin, Y.; Yang, Y.; Fan, Q.; Chen, B. SLAM3R: Real-time dense scene reconstruction from monocular RGB videos. arXiv 2024.

26. Jing, S.; Li, X.; Maru, M.B.; Yu, B.; Cha, G.; Park, S. Occlusion-aware hybrid learning framework for point cloud understanding in building mechanical, electrical, and plumbing systems. Energy Build. 2025, 344, 115955.

27. Yue, H.; Wang, Q.; Zhao, H.; Zeng, N.; Tan, Y. Deep learning applications for point clouds in the construction industry: A review. Autom. Constr. 2024, 168, 105769.

28. Abreu, N.; Pinto, A.; Matos, A.; Pires, M. Procedural point cloud modelling in scan-to-BIM and scan-vs-BIM applications: A review. ISPRS Int. J. Geo-Inf. 2023, 12, 260.

29. Savarese, S.; Fischer, M.; Flager, F.; Hamledari, H. Using UAVs for Automated BIM-Based Construction Progress Monitoring and Quality Control. Center for Integrated Facility Engineering, Stanford University. 2020. Available online: https://cife.stanford.edu (accessed on 15 January 2024).

30. Kielhauser, C.; Renteria Manzano, R.; Hoffman, J.J.; Adey, B.T. Automated construction progress and quality monitoring for commercial buildings with unmanned aerial systems: An application study from Switzerland. Infrastructures 2020, 5, 98.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tárrago Garay, N.; Jimenez Fernandez, J.C.; San Mateos Carreton, R.; Montes Grova, M.A.; Kruth, O.; Elguezabal, P. Integration of Drone-Based 3D Scanning and BIM for Automated Construction Progress Control. Buildings 2025, 15, 3487. https://doi.org/10.3390/buildings15193487
