Two-Scale Physics-Informed Neural Networks for Structural Dynamics Parameter Inversion: Numerical and Experimental Validation on T-Shaped Tower Health Monitoring

Abstract

1. Introduction

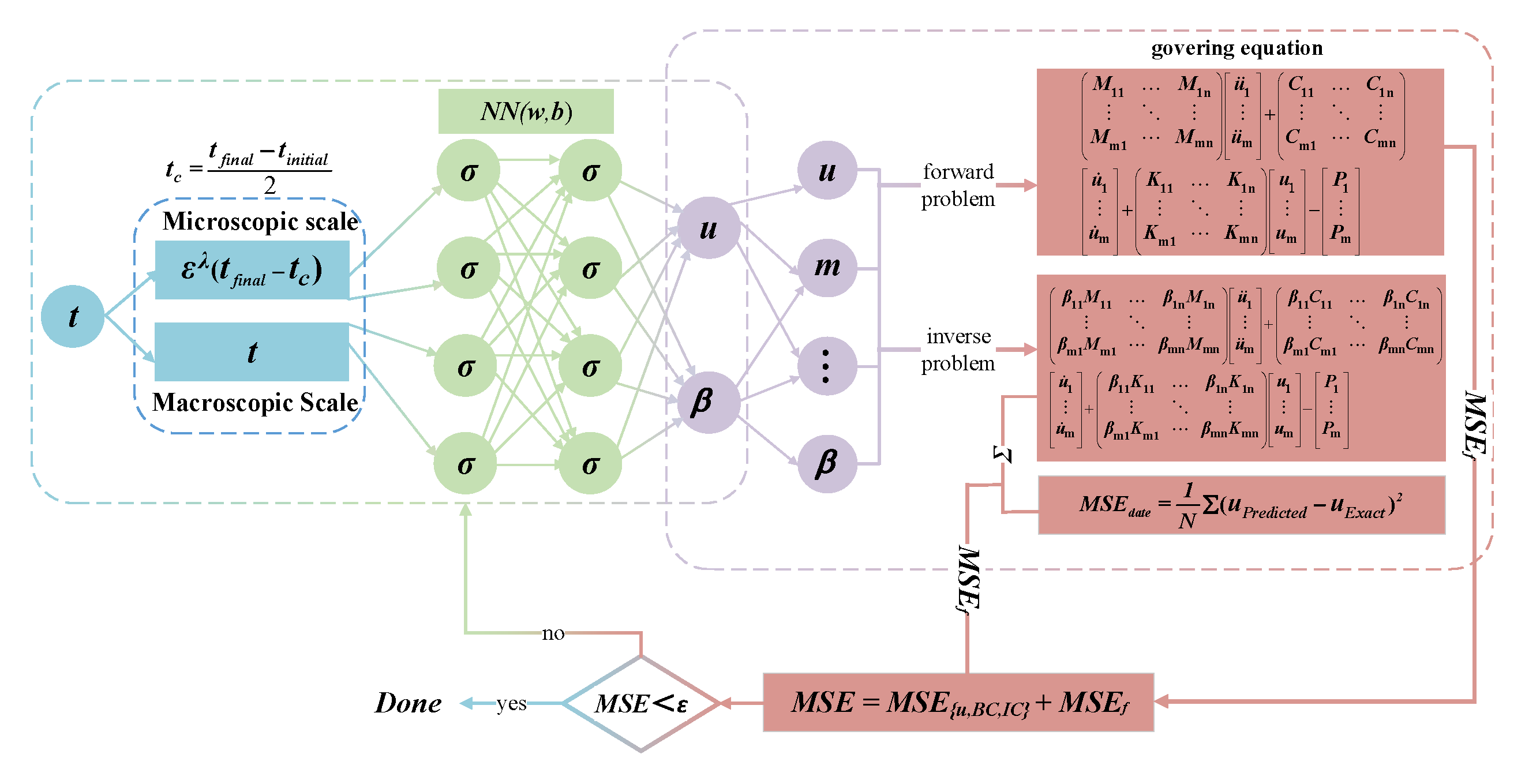

- (1)

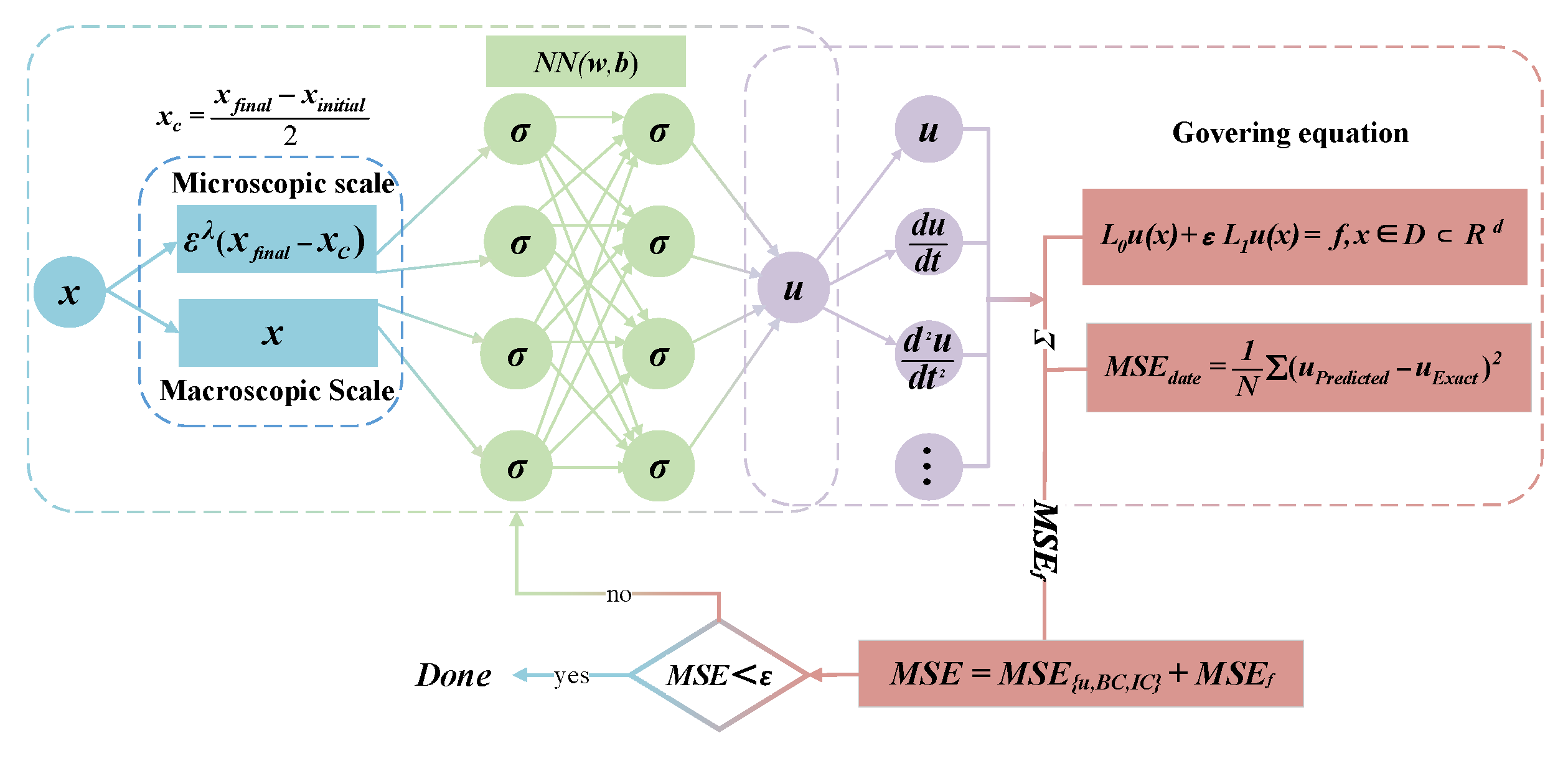

- In the network architecture, introducing scale parameters at the input layer breaks the limitation of single scale, achieving the decoupling of two-scale features and the fusion of cross-scale information.

- (2) At the level of physical modeling, we define the stiffness-to-mass ratio as a characteristic scale parameter, construct scale auxiliary variables, and establish a universal scale correlation mechanism that overcomes the limitations of traditional methods.

- (3) The proposed TSPINN framework integrates finite element principles with two-scale physics-informed learning to localize damage and quantify stiffness and mass in T-shaped tower structures under dynamic excitation. Incorporating finite element principles addresses two limitations of conventional physics-informed neural networks: difficulty with small parameters and restriction to a single partial differential equation. The resulting framework delivers superior performance on complex structures.
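Contribution (2) can be illustrated for a single-degree-of-freedom (SDOF) oscillator. The following is an assumption, not the paper's own derivation: divide the equation of motion by the stiffness $k$. The mass-to-stiffness ratio, which is the inverse of the stiffness-to-mass ratio, then appears as a small parameter multiplying the highest derivative:

```latex
m\ddot{u}(t) + c\dot{u}(t) + k\,u(t) = F(t)
\;\Longrightarrow\;
\varepsilon\,\ddot{u}(t) + \frac{c}{k}\,\dot{u}(t) + u(t) = \frac{F(t)}{k},
\qquad
\varepsilon = \frac{m}{k} = \frac{1}{\omega_n^{2}},
\qquad
\omega_n = \sqrt{\frac{k}{m}}.
```

A large stiffness-to-mass ratio $k/m$ therefore gives a small $\varepsilon$. The quantity $t/\sqrt{\varepsilon} = \omega_n t$ is then a natural fast-scale auxiliary variable for the time coordinate.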

2. Methodology

2.1. Two-Scale Physics-Informed Neural Network Principles

- $\mathcal{L}_0$ is the dominant differential operator, representing the main physical behavior or macroscale dynamics of the system.

- $\varepsilon$ is a dimensionless small parameter ($0 < \varepsilon \ll 1$) reflecting the relative strength of secondary effects or microscale disturbances.

- $\mathcal{L}_1$ is a secondary differential operator (perturbation operator), typically describing microscale corrections induced by viscous, diffusive, or nonlinear effects. Although its overall contribution is suppressed by $\varepsilon$, it can dominate the form of the solution in localized regions such as boundary layers, shock waves, or resonance bands.

- $f$ is the source or external forcing term that drives the dynamic response of the system.
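Taken together, the four terms described above correspond to a singularly perturbed problem of the following general form. The operator symbols $\mathcal{L}_0$ and $\mathcal{L}_1$, the unknown $u$, and the domain symbol $D$ are notation assumed for this sketch:

```latex
\mathcal{L}_0[u](x) + \varepsilon\,\mathcal{L}_1[u](x) = f(x), \qquad x \in D, \qquad 0 < \varepsilon \ll 1 .
```

As an illustrative instance only, take $\mathcal{L}_0[u] = u'$, $\mathcal{L}_1[u] = -u''$, and $f = 0$ on $D = (0, 1)$ with $u(0) = 0$ and $u(1) = 1$. This gives $-\varepsilon u'' + u' = 0$, whose solution stays near zero over most of the domain and rises steeply in a boundary layer of width $O(\varepsilon)$ next to $x = 1$.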

- (a) Original input variable $x$: the primary variable of the partial differential equation, i.e., the original spatial or temporal coordinate.

- (b) Scaled variables: the computational domain D is characterized by its centroid $x_c$. Together with the small parameter $\varepsilon$ governing the equation, this centroid is used to resolve multiscale features in the solution profile. By coupling the coordinate $x$ with the scaled variables, the architecture explicitly targets microscale variations while preserving macroscale solution fidelity.

- (c) Scale parameter: an additional input that helps the network capture features across different scales, allowing it to handle multiscale behavior effectively.
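The input layer described in (a) and (b) can be sketched for a 1D domain as follows. The sketch assumes the scaled variable takes the form $(x - x_c)/\varepsilon$, which is a hypothetical choice because the exact definition is not reproduced here. The scale parameter (c) is omitted. The point is that the scaled coordinate stretches a thin layer of width about $\varepsilon$ into an O(1) range the network can resolve:

```python
from typing import List, Sequence


def two_scale_inputs(xs: Sequence[float], x_c: float, eps: float) -> List[List[float]]:
    """Map each coordinate x to the pair [x, (x - x_c) / eps].

    x is the original (macroscale) input; (x - x_c) / eps is a hypothetical
    scaled (microscale) input built from the domain centroid x_c and the
    small parameter eps.
    """
    if eps <= 0.0:
        raise ValueError("eps must be positive")
    return [[x, (x - x_c) / eps] for x in xs]


# Unit interval D = [0, 1], centroid x_c = 0.5, small parameter eps = 0.01:
# x spans [0, 1] while the scaled input spans roughly [-50, 50].
features = two_scale_inputs([0.0, 0.5, 1.0], x_c=0.5, eps=0.01)
```

Each row would be fed to the first layer of the network in place of the single coordinate $x$.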

Algorithm 1: Successive training of two-scale neural networks for PDEs with small parameters.

Algorithm 2: Algorithm embedding TSPINNs.
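The individual steps of Algorithm 1 are not listed here. Its title suggests that the problem is solved for a decreasing sequence of $\varepsilon$ values, with each stage warm-started from the parameters of the previous one. The driver below sketches only that outer loop. The function `toy_train` is a hypothetical stand-in for a full PINN training run: a scalar gradient-descent problem whose optimum moves with $\varepsilon$, included only so the example runs.

```python
from typing import Callable, List, Sequence, Tuple

Params = List[float]
Trainer = Callable[[Params, float], Params]


def successive_training(train: Trainer, init: Params,
                        eps_schedule: Sequence[float]) -> List[Tuple[float, Params]]:
    """Train at each eps in turn, warm-starting from the previous stage.

    The schedule should move from a larger eps (smoother, easier solution)
    towards the small target eps.
    """
    params = list(init)
    history: List[Tuple[float, Params]] = []
    for eps in eps_schedule:
        params = train(params, eps)          # starts from last stage's params
        history.append((eps, list(params)))  # snapshot for inspection
    return history


def toy_train(params: Params, eps: float, lr: float = 0.1, steps: int = 200) -> Params:
    """Hypothetical stand-in for PINN training: gradient descent on
    (w - sqrt(eps))**2, whose minimiser w* = sqrt(eps) shifts with eps."""
    w = params[0]
    target = eps ** 0.5
    for _ in range(steps):
        w -= lr * 2.0 * (w - target)         # exact gradient of the toy loss
    return [w]


history = successive_training(toy_train, init=[0.0], eps_schedule=[1e-1, 1e-2, 1e-3])
```

In a real TSPINN, `train` would minimize the PDE-residual and boundary losses of the two-scale network at the given $\varepsilon$. The warm start is what makes the small-$\varepsilon$ stages tractable.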

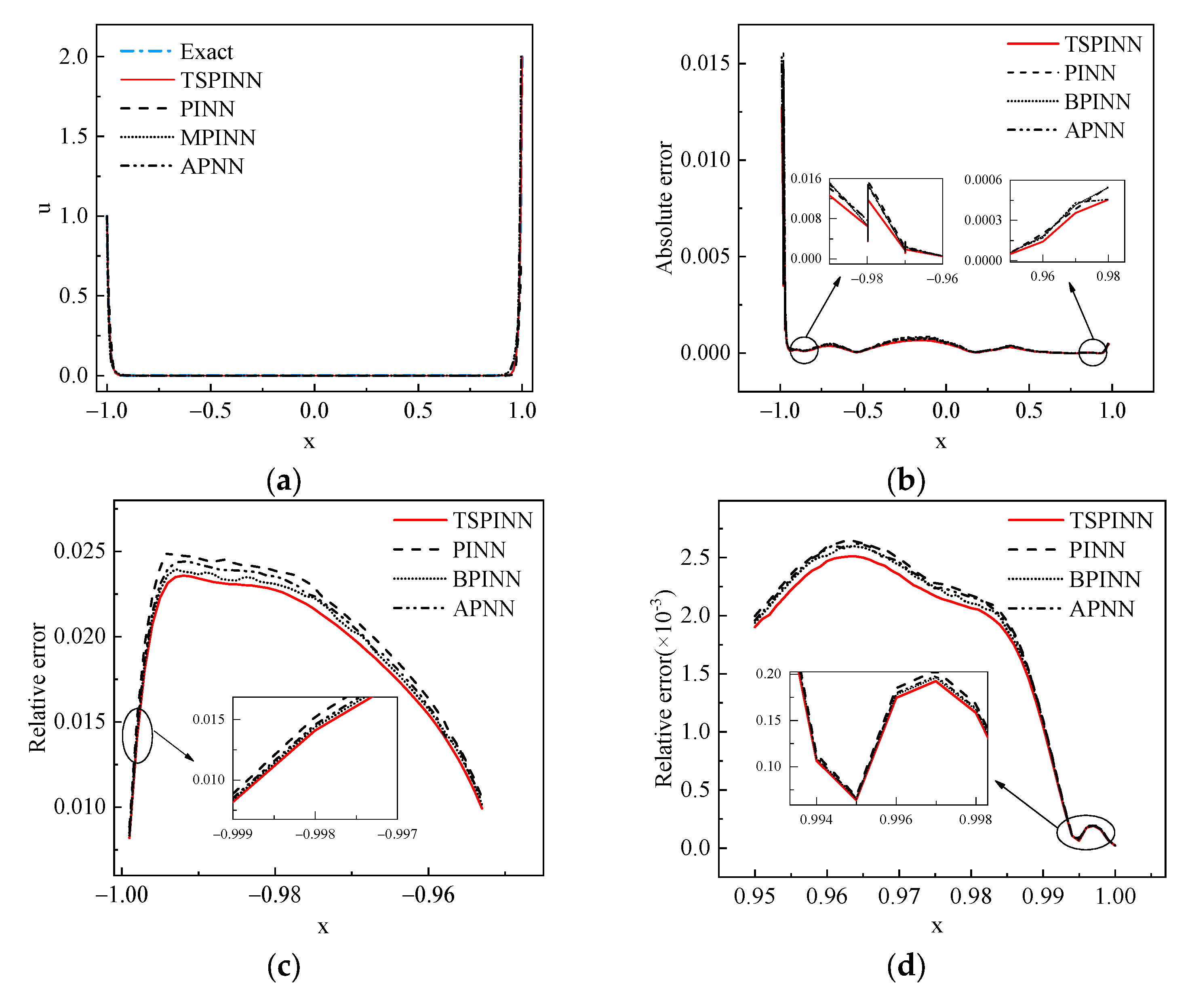

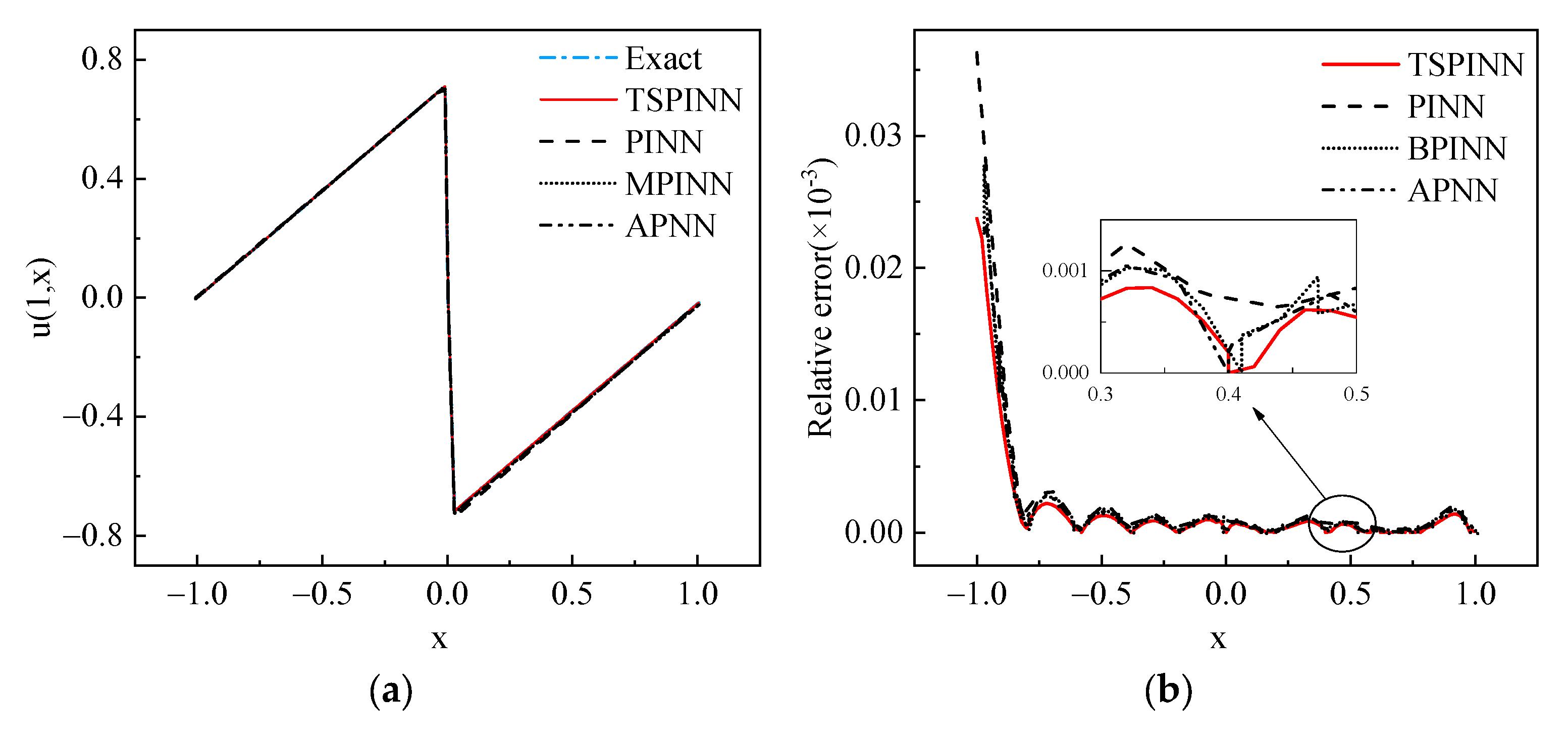

2.2. Numerical Experiments

- Example 1 (1D ODE with one boundary layer):

- Example 2 (1D ODE with two boundary layers):

- Example 3 (1D viscous Burgers’ equation):

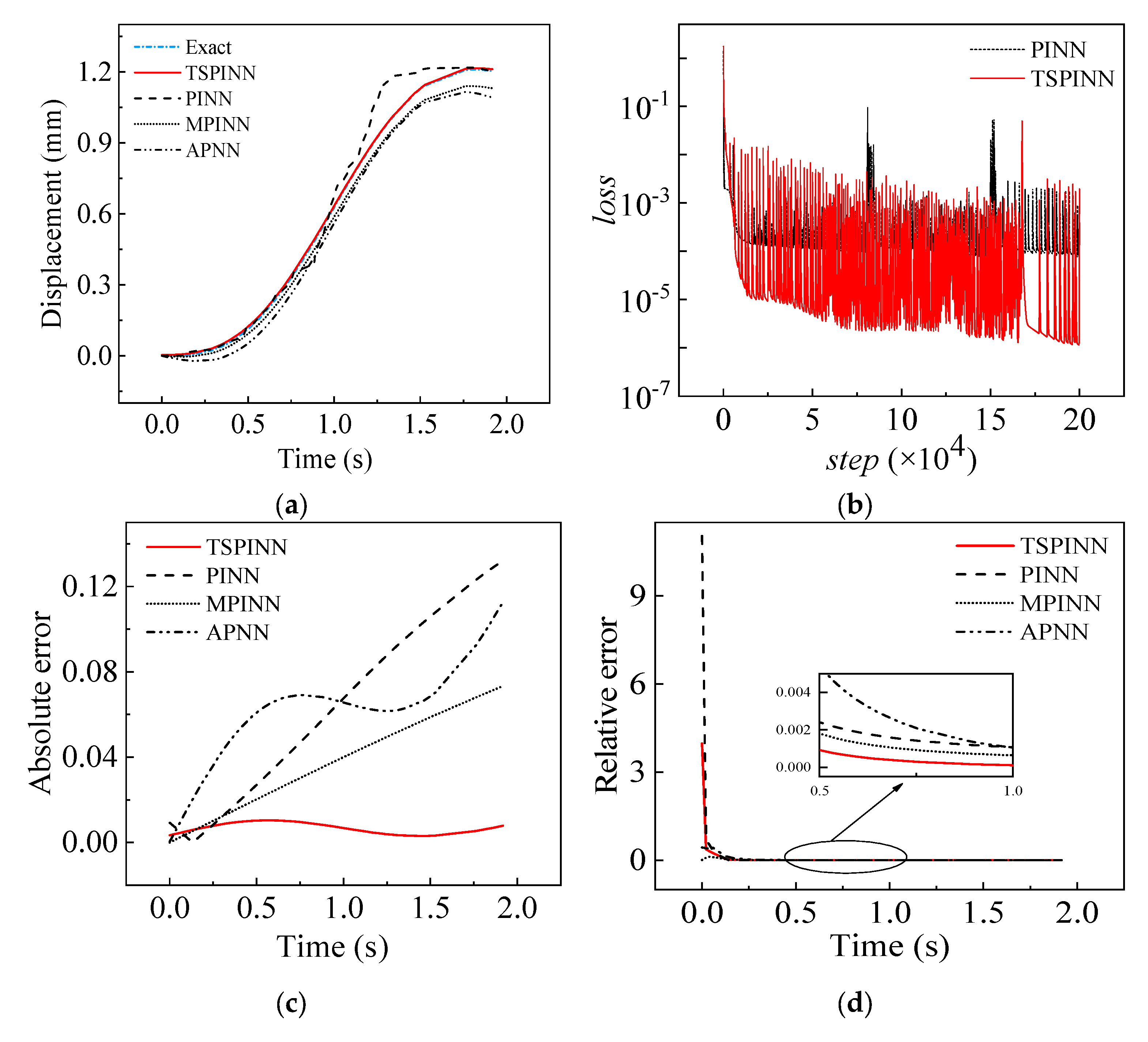

- Example 4 (SDOF):
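The equations of Examples 1 and 2 are not reproduced above. The sketch below therefore uses two standard singularly perturbed problems with closed-form solutions, chosen for illustration and not necessarily those used in the paper. They can serve as references when measuring the relative $L^2$ error of a PINN or TSPINN prediction:

```python
import math
from typing import Sequence


def one_layer_exact(x: float, eps: float) -> float:
    """Exact solution of -eps*u'' + u' = 0 on (0, 1), u(0) = 0, u(1) = 1.
    A single boundary layer of width O(eps) sits at x = 1."""
    tail = math.exp(-1.0 / eps)              # underflows harmlessly to 0.0
    return (math.exp((x - 1.0) / eps) - tail) / (1.0 - tail)


def two_layer_exact(x: float, eps: float) -> float:
    """Exact solution of -eps*u'' + u = 1 on (0, 1), u(0) = u(1) = 0.
    Two boundary layers of width O(sqrt(eps)) sit at x = 0 and x = 1."""
    s = math.sqrt(eps)
    a = 1.0 / (1.0 + math.exp(-1.0 / s))
    return 1.0 - a * (math.exp(-x / s) + math.exp((x - 1.0) / s))


def relative_l2_error(pred: Sequence[float], exact: Sequence[float]) -> float:
    """Discrete relative L2 error ||pred - exact|| / ||exact||."""
    num = sum((p - e) ** 2 for p, e in zip(pred, exact))
    den = sum(e ** 2 for e in exact)
    return math.sqrt(num / den)
```

For small $\varepsilon$, both solutions are nearly flat away from the layers. A uniform collocation grid therefore puts few points where the gradients are large, which is the difficulty the two-scale inputs target.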

3. Parameter Inversion

3.1. Finite Element Error

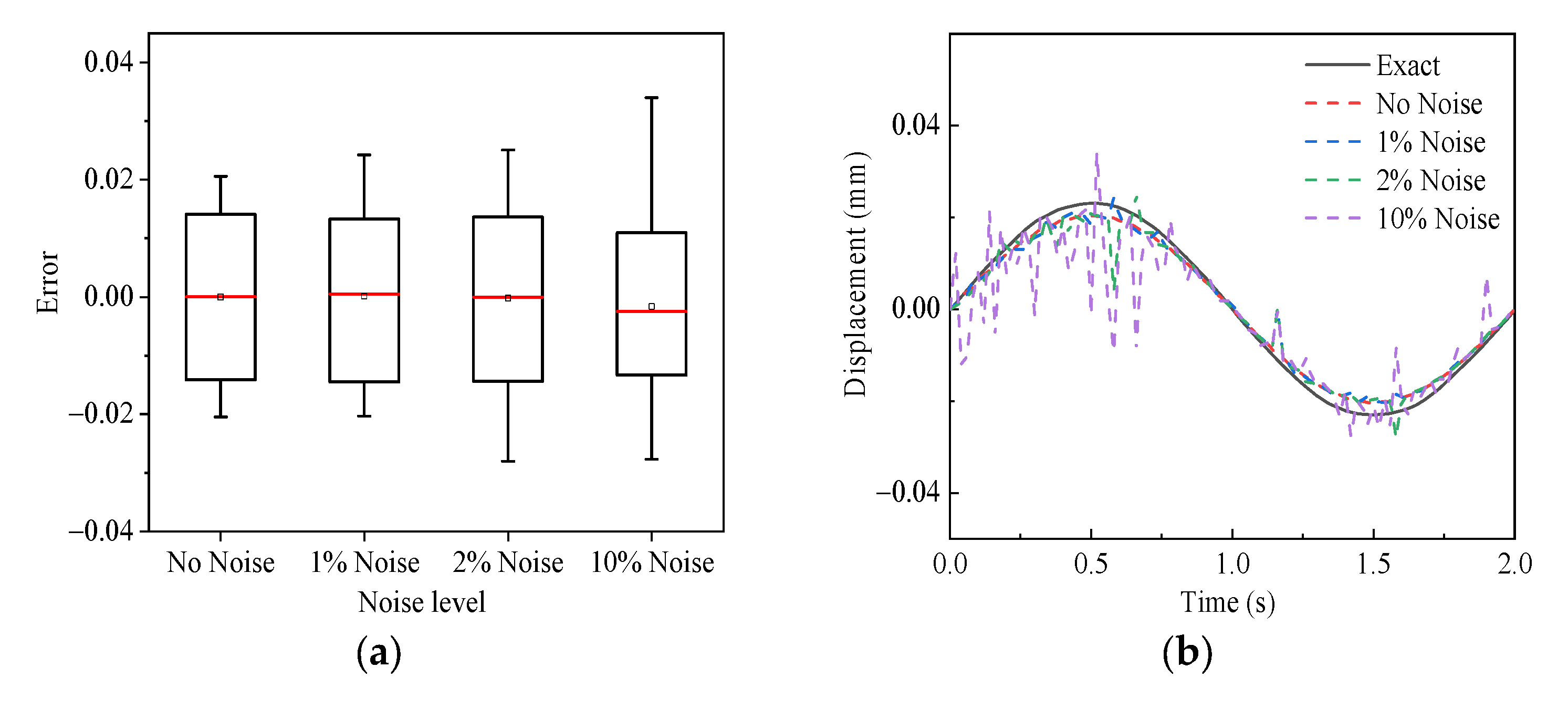

3.2. Observation Noise
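The noise model used in this study is not reproduced here. A common way to emulate observation noise in PINN inversion studies, assumed here rather than taken from the paper, is to add zero-mean Gaussian noise whose standard deviation is a chosen fraction (the noise level) of the clean signal's standard deviation:

```python
import math
import random
from typing import List, Sequence


def add_observation_noise(signal: Sequence[float], noise_level: float,
                          seed: int = 0) -> List[float]:
    """Return signal[i] + noise_level * std(signal) * z_i with z_i ~ N(0, 1).

    noise_level = 0.05 means the noise standard deviation equals 5% of the
    clean signal's (population) standard deviation.
    """
    if not signal:
        return []
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((s - mean) ** 2 for s in signal) / n)
    rng = random.Random(seed)  # private, seeded generator for reproducibility
    return [s + noise_level * std * rng.gauss(0.0, 1.0) for s in signal]


# Clean response sampled at 100 Hz for 10 s (illustrative sine, not test data).
clean = [math.sin(2.0 * math.pi * 0.5 * k * 0.01) for k in range(1000)]
noisy = add_observation_noise(clean, noise_level=0.05)
```

Scaling by the signal's own standard deviation keeps the noise level comparable across displacement and acceleration channels with very different magnitudes.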

3.3. Problem Setup

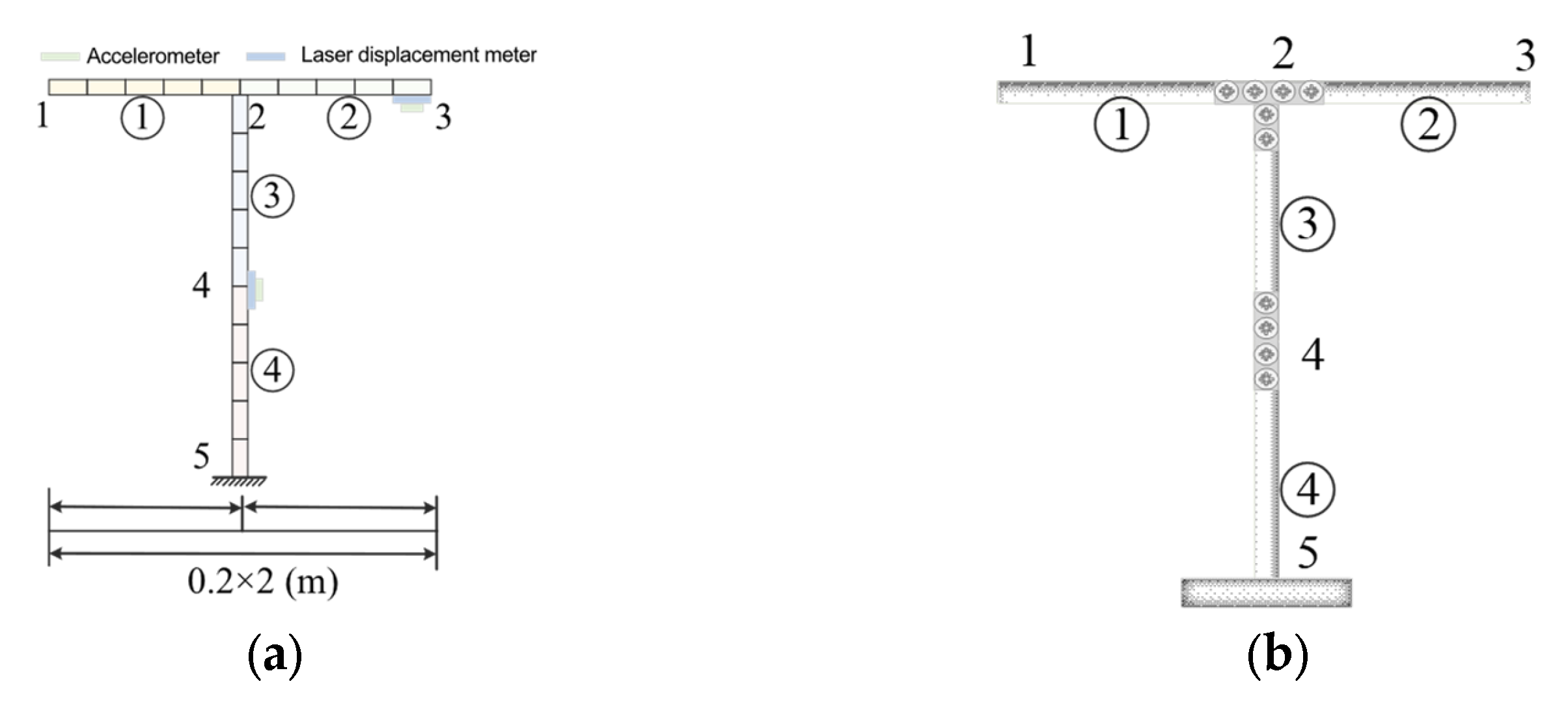

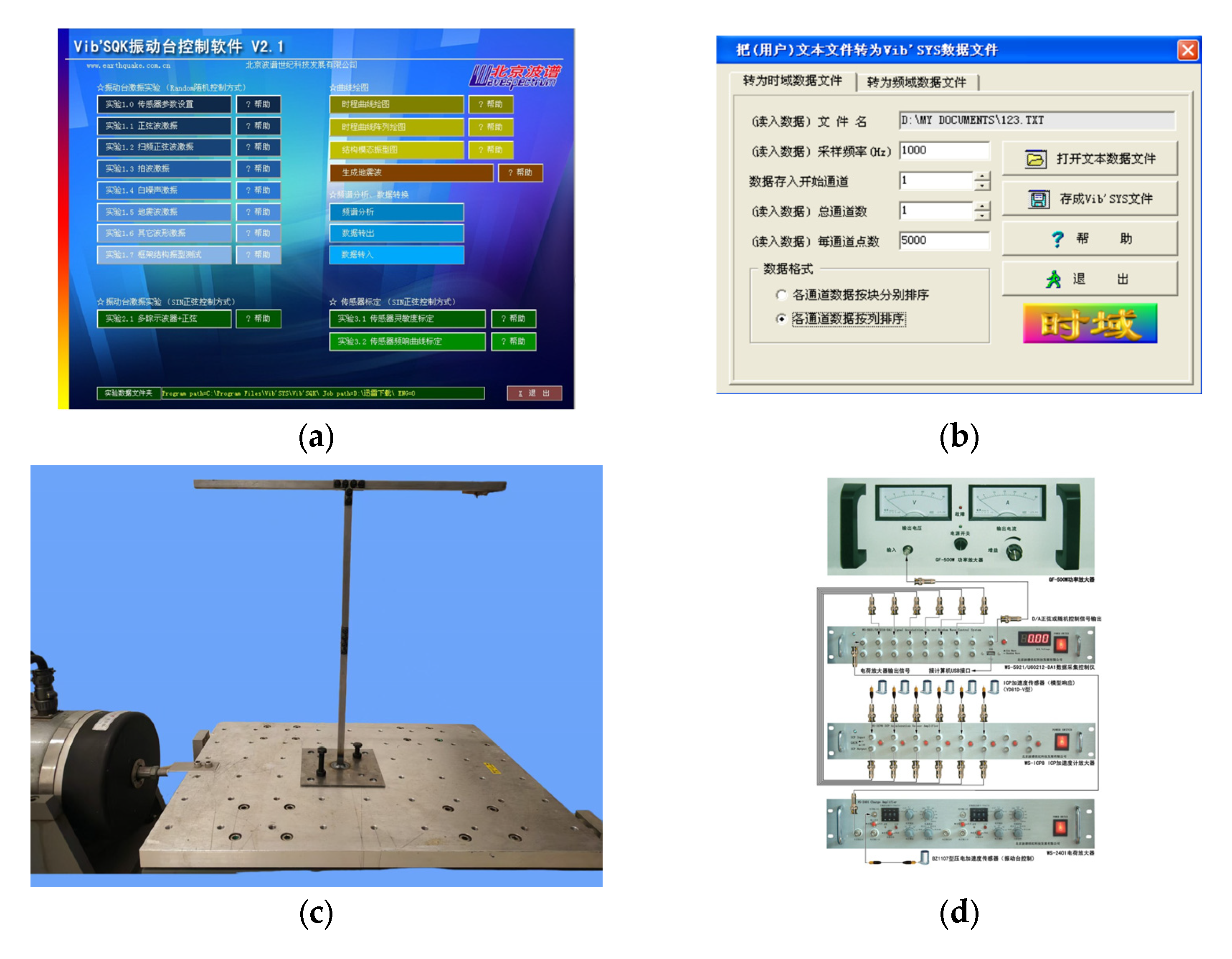

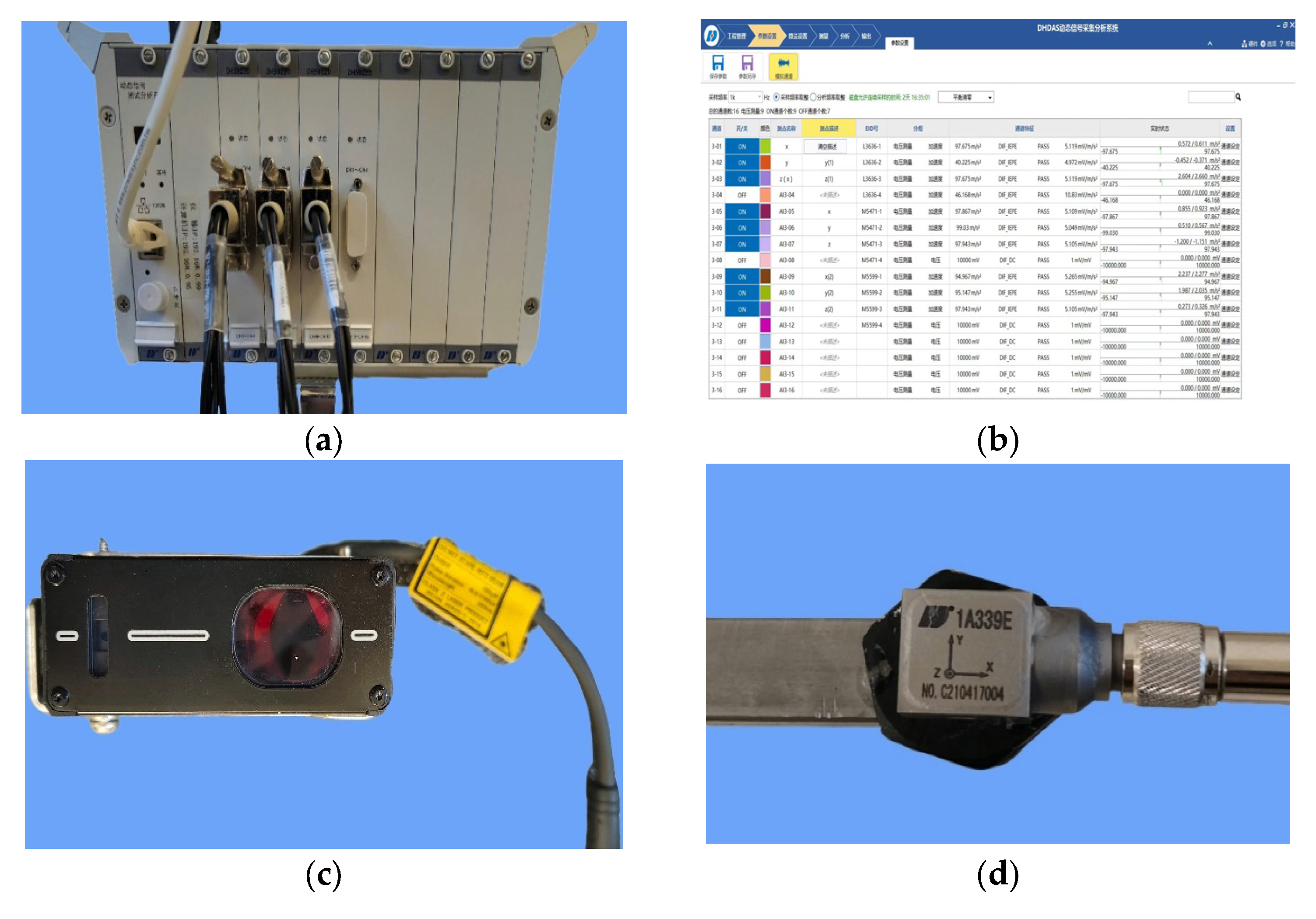

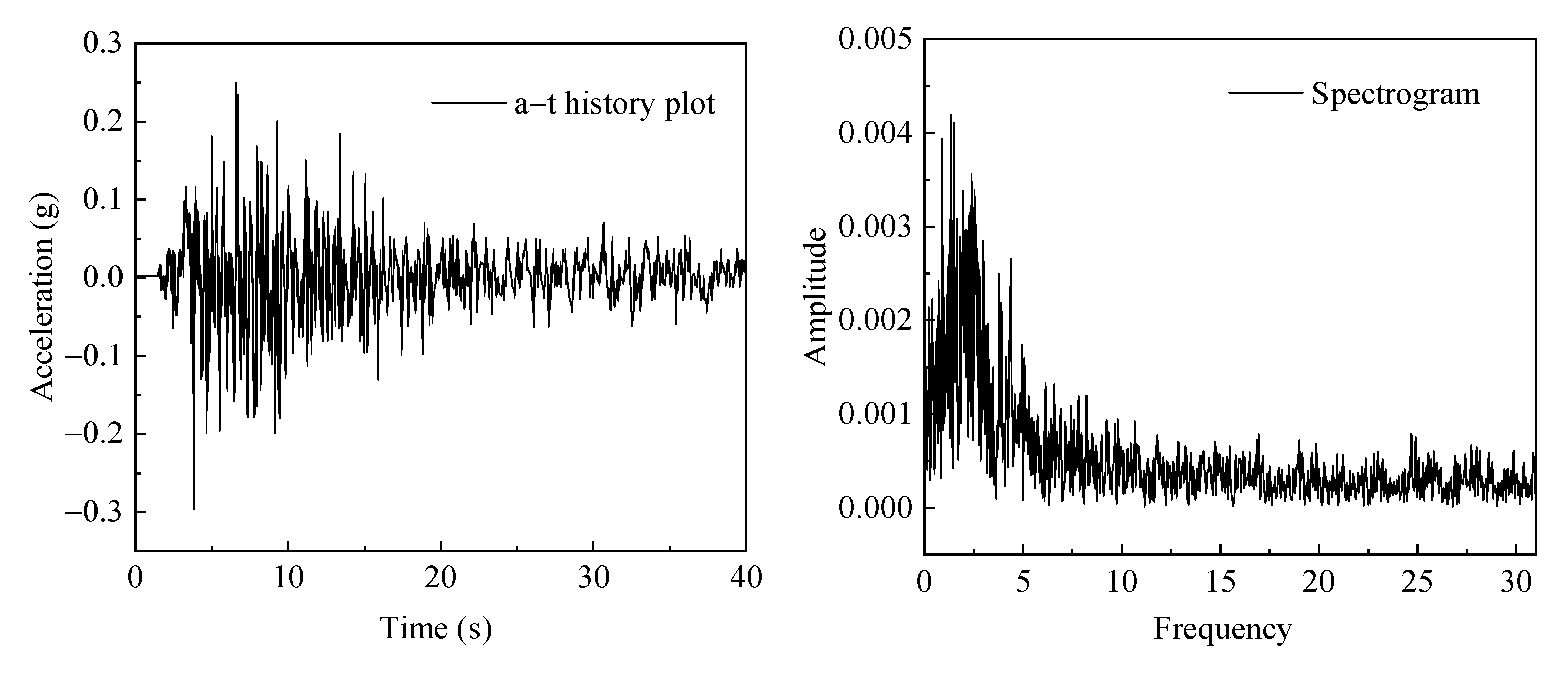

3.4. Experimental Setup

- (1) A programmable signal generator produces the excitation signals.

- (2) A power amplifier drives the electrodynamic shaker.

- (3) Closed-loop feedback enables real-time parameter adjustment while response data are acquired synchronously.

- (1) DH5922D dynamic signal analysis system: provides synchronous sampling at 256 kHz per channel, with built-in signal conditioning and anti-aliasing filters for parallel acquisition of multiple physical signals.

- (2) Keyence laser displacement sensor (LK-H151): measures spot displacement by laser triangulation with micron-level resolution.

- (3) Donghua Testing accelerometer (1A339E): uses a mass-spring-piezoelectric sensing element with a linearity error of ≤ ±1% FS.

3.5. Test Conditions

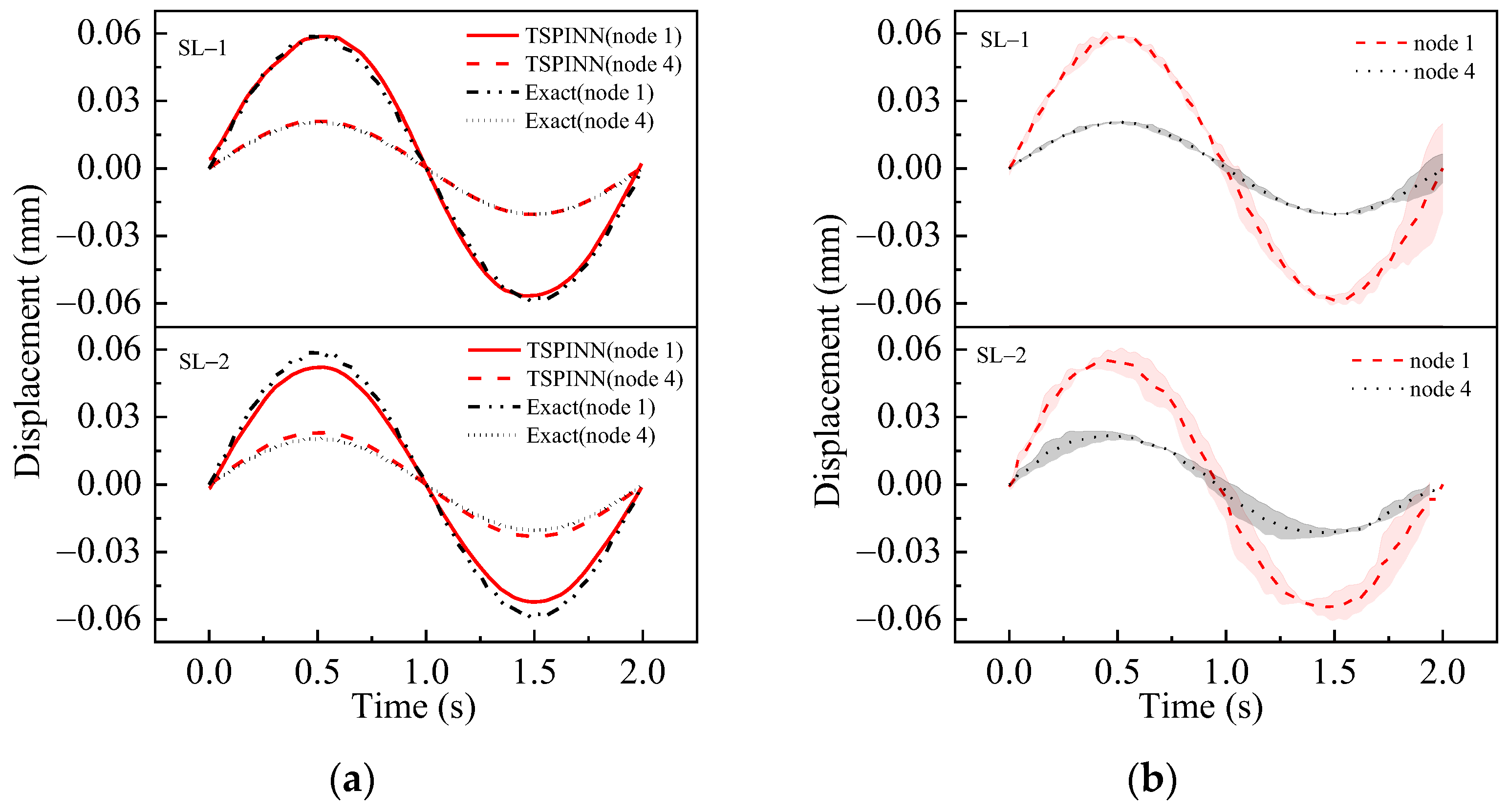

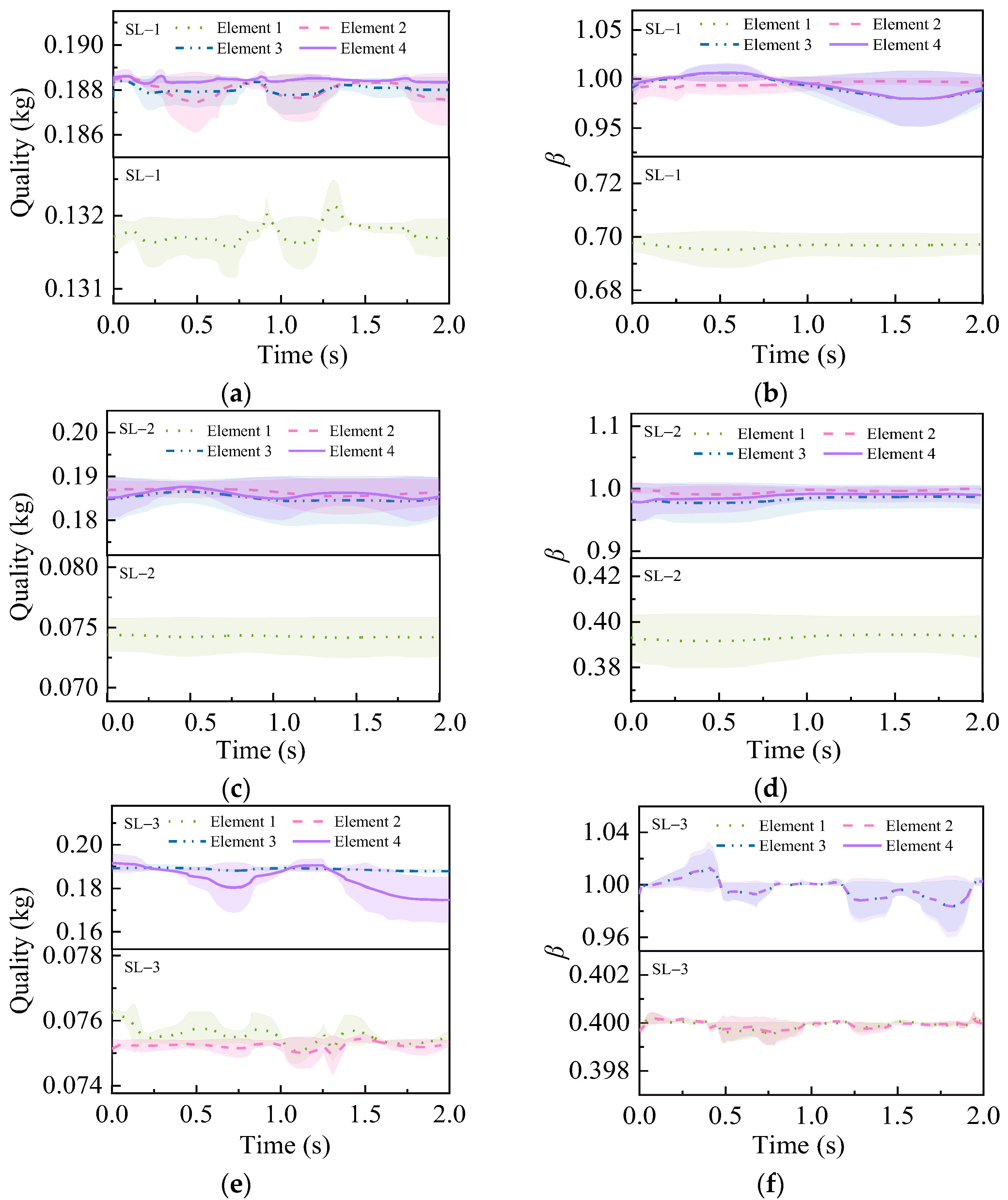

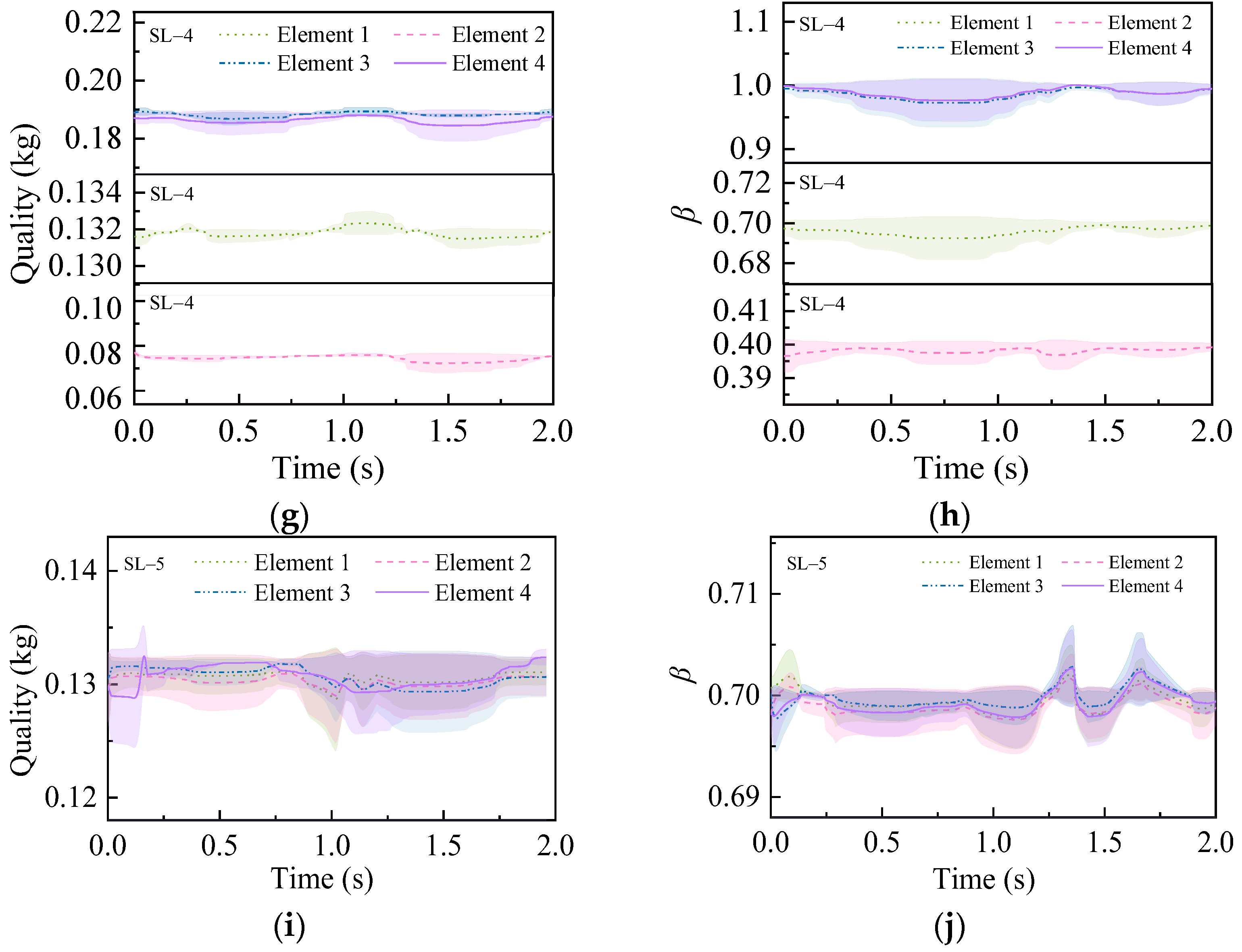

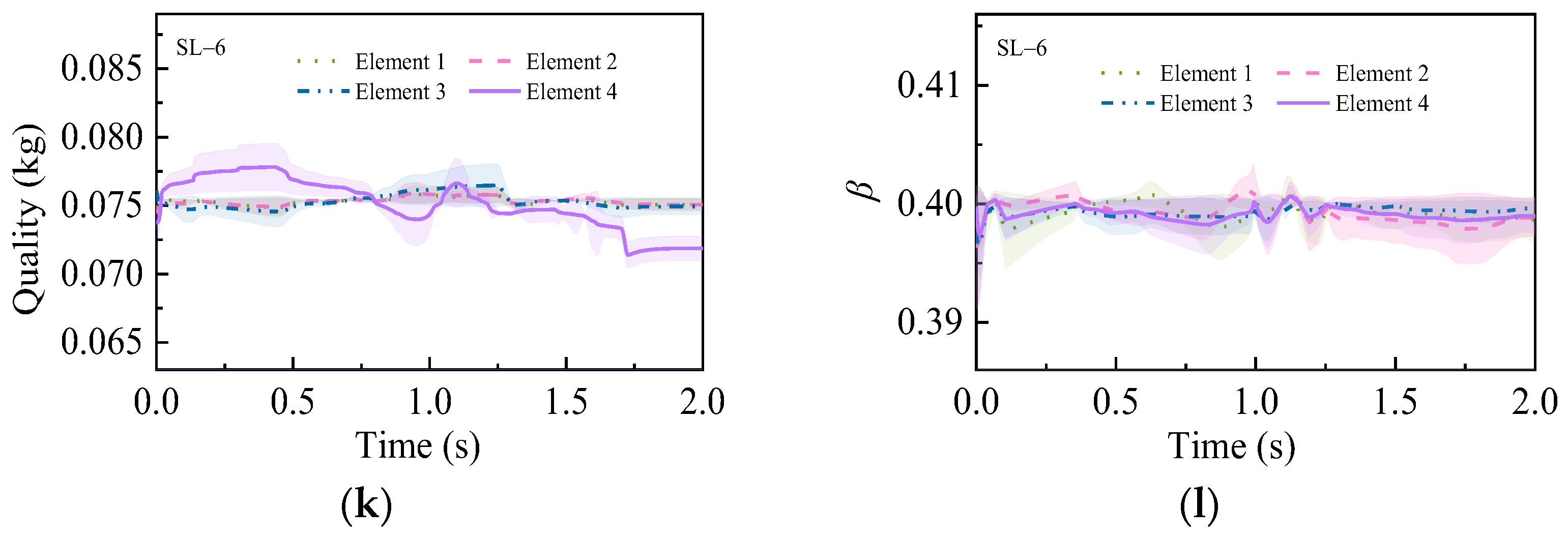

3.6. Structural Parameter Inversion of the T-Shaped Tower

- (1) Compared with elements 1 and 2, elements 3 and 4 show slightly larger identification deviations, which nevertheless remain within acceptable engineering tolerances.

- (2) As damage severity increases (SL-1 to SL-2), TSPINNs identify the parameters more stably, with narrower error bands.

- (3) When members 1 and 2 are damaged in both cases but with different severity patterns (SL-3: 60% in both members; SL-4: 30% and 60%), TSPINNs identify the mass parameters with greater confidence when the damage levels differ than when they are equal, as shown by markedly tighter error distributions.

- (4) When all elements are damaged simultaneously (SL-5 and SL-6), TSPINNs remain robust (peak standard deviation ≤ 1.09 × 10⁻²).

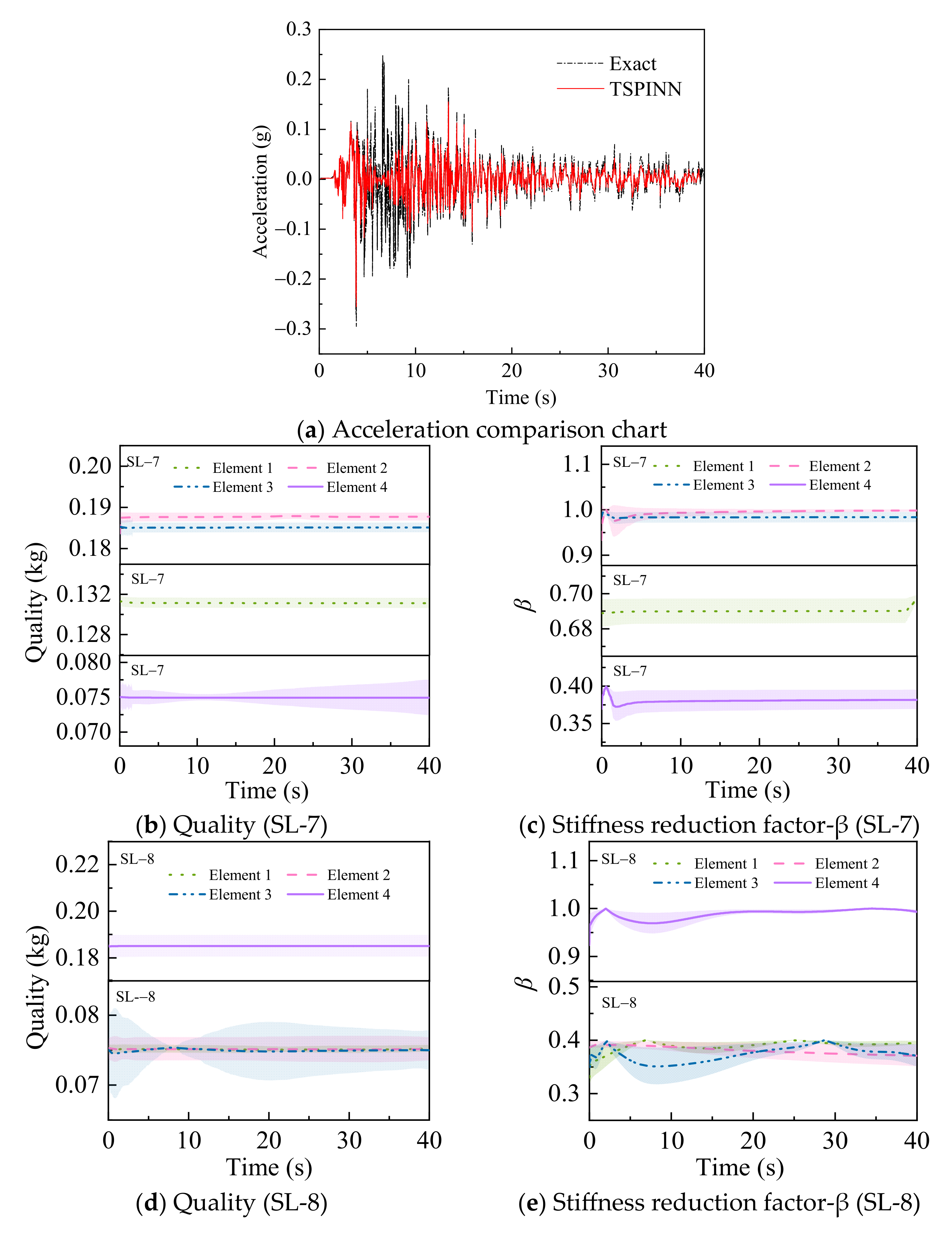

- (1) Load identification errors rise sharply in the 5–10 s interval (peaking at 17%), possibly because of abrupt nonlinear changes in the response;

- (2) In the remaining periods, inversion accuracy is higher (mean error < 3%).

- (1) Stiffness reduction coefficients are identified with a mean error of 2.7%;

- (2) Mass parameters are identified with a mean error of 0.46%;

- (3) The error bandwidth fluctuates by less than 2%.
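The mean errors quoted above are presumably averages of relative errors between identified and reference parameters. The sketch below shows that computation under this assumption. It also uses one possible definition of the error band, the spread between the largest and smallest relative errors. Both definitions are assumptions, and the numbers in the usage line are illustrative, not results from this study:

```python
from typing import List, Sequence, Tuple


def relative_errors(identified: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Elementwise |identified - reference| / |reference|."""
    if len(identified) != len(reference):
        raise ValueError("identified and reference must have the same length")
    return [abs(i - r) / abs(r) for i, r in zip(identified, reference)]


def error_summary(identified: Sequence[float],
                  reference: Sequence[float]) -> Tuple[float, float]:
    """Return (mean relative error, error band = max - min relative error)."""
    errs = relative_errors(identified, reference)
    return sum(errs) / len(errs), max(errs) - min(errs)


# Illustrative stiffness factors for four elements (hypothetical values).
mean_err, band = error_summary([0.72, 0.99, 1.0, 1.0], [0.7, 1.0, 1.0, 1.0])
```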

4. Conclusions

- (1)

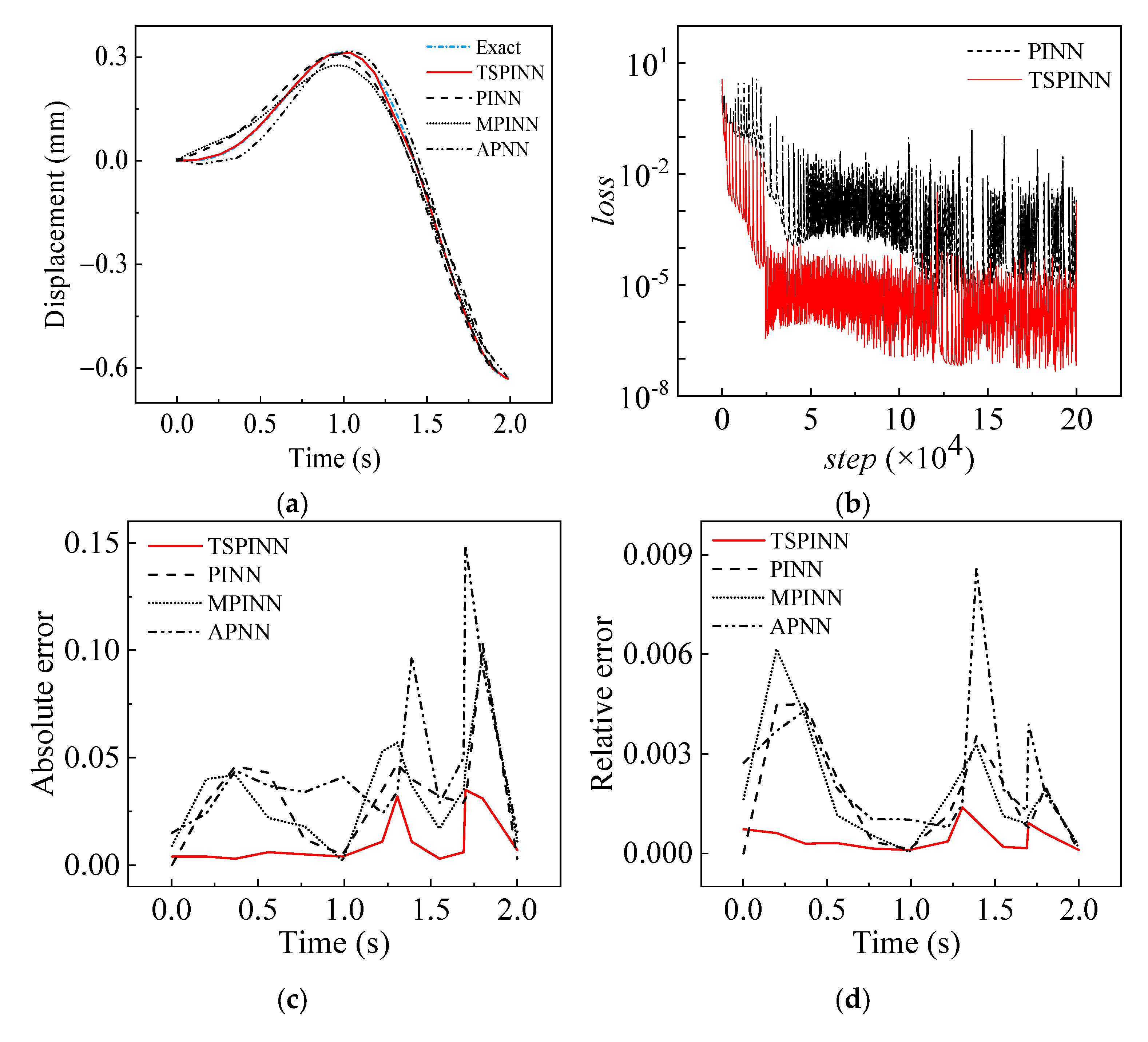

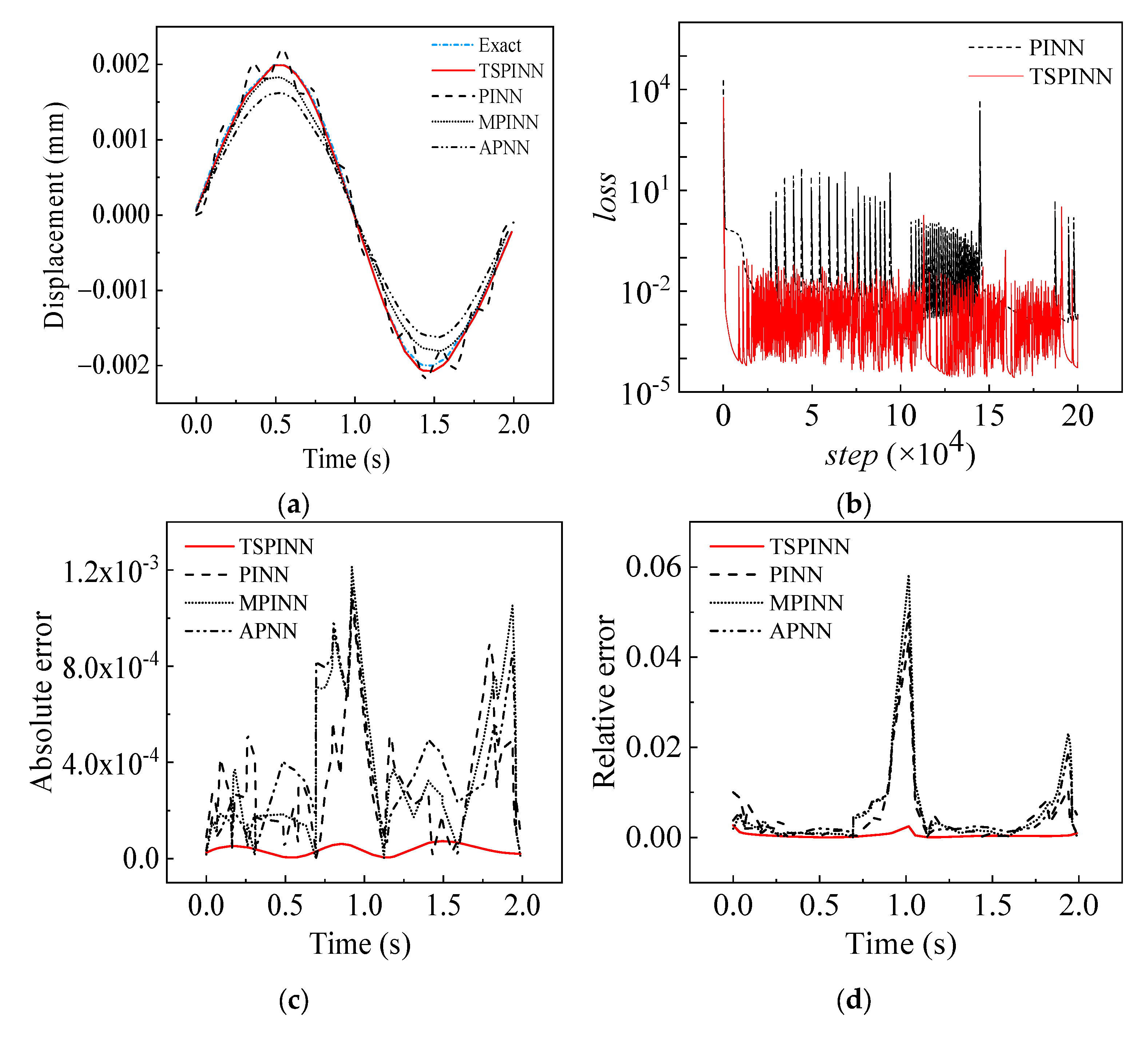

- Dynamic response analysis of SDOF demonstrates TSPINNs’ technical superiority in stiff modal coupling scenarios:

- One to two orders of magnitude improvement in prediction accuracy over conventional PINNs;

- Accelerated loss function convergence with 2–3 orders of magnitude lower final residuals;

- Effective mitigation of numerical oscillations in high-gradient regions via ε-parameter scale-aware mechanisms.

- (2) Shaking table tests on a T-shaped tower prototype established a database of damage scenarios and showed the following:

- The average maximum deviation between identified and experimental mass and stiffness parameters is below 10%;

- Performance remains robust under complex damage scenarios.

- (1) Computational cost: The TSPINN method may incur high computational costs on more complex problems and require advanced hardware. Future work should focus on algorithmic optimization to reduce resource demands and improve efficiency, making the method practical across a wider range of computational environments.

- (2) Sensitivity to network hyperparameters: Like many machine learning methods, TSPINNs are sensitive to hyperparameter choices (e.g., learning rate, network depth, and number of neurons per layer). Inappropriate hyperparameters can slow training, hinder convergence, or cause overfitting, compromising accuracy and generalizability. Future work should prioritize systematic hyperparameter tuning strategies that minimize manual intervention and improve model stability and reliability.

- (3) Scalability: Validation is currently limited to simplified structures such as T-shaped towers. More complex geometries (e.g., irregular trusses) and multiphysics coupling will require modular sub-networks or domain decomposition techniques.

- (4) Noise robustness: Robustness and accuracy are good at low noise levels, but performance degrades markedly at high noise levels, reducing reliability. Denoising (e.g., wavelet transforms) and uncertainty quantification (e.g., Bayesian frameworks) should therefore be integrated.

- (5) Minor damage: The ability of the proposed method to characterize complex structural parameters under minor damage remains unverified. Minor degradation must be quantified to establish the viability of TSPINNs for damage identification, so subsequent work will prioritize systematic quantification frameworks and improved algorithm robustness.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Learning Rate (α) | Collocation Points | Activation Function | Network Size |
|---|---|---|---|
| Adaptive | Uniform distribution | Tanh | (1, 60, 60, 60, 60, 60, 60, 60, 60, 1) |
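The network size in the table lists the layer widths: one input, eight hidden layers of 60 tanh neurons, and one output. A minimal forward pass for this architecture is sketched below, without training. It uses Glorot (Xavier) normal initialization and zero biases, which are assumptions not stated in the table:

```python
import math
import random
from typing import List, Tuple

LAYERS = [1, 60, 60, 60, 60, 60, 60, 60, 60, 1]  # widths from the table
Layer = Tuple[List[List[float]], List[float]]    # (weights W[out][in], biases b[out])


def init_params(layers: List[int], seed: int = 0) -> List[Layer]:
    """Glorot-normal weights, std = sqrt(2 / (n_in + n_out)), and zero biases."""
    rng = random.Random(seed)
    params: List[Layer] = []
    for n_in, n_out in zip(layers[:-1], layers[1:]):
        std = math.sqrt(2.0 / (n_in + n_out))
        W = [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
        params.append((W, [0.0] * n_out))
    return params


def forward(params: List[Layer], x: List[float]) -> List[float]:
    """tanh activation on every hidden layer; linear output layer."""
    h = x
    for k, (W, b) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, h)) + b_i for row, b_i in zip(W, b)]
        h = z if k == len(params) - 1 else [math.tanh(v) for v in z]
    return h


params = init_params(LAYERS)
y = forward(params, [0.3])  # one scalar prediction u(0.3) from the untrained net
```

This configuration has 25,801 trainable parameters. In practice, an automatic-differentiation framework would replace these hand-written loops so that the PDE residual's derivatives can be computed.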

| Section (m × m) | Length (m) | Elastic Modulus (Pa) | Density (kg/m³) | Force (N) | Finite Elements |
|---|---|---|---|---|---|
| 0.012 × 0.01 | 0.15 | 2.1 × 10¹¹ | 7850 | 4 | |
| 0.012 × 0.01 | 0.15 | 2.1 × 10¹¹ | 7850 | 12 | |
| 0.012 × 0.01 | 0.15 | 2.1 × 10¹¹ | 7850 | 20 | |
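The member properties in the table above can be turned into Euler-Bernoulli beam element stiffness and consistent mass matrices, as a worked check. The sketch makes two assumptions: the 0.012 m × 0.01 m section bends with the 0.01 m side as its depth (I = bh³/12 = 1.0 × 10⁻⁹ m⁴, so EI = 210 N·m²), and 0.15 m is the element length. The paper's own element formulation is not shown here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def beam_element(E: float, b: float, h: float, rho: float, L: float) -> Tuple[Matrix, Matrix]:
    """Euler-Bernoulli element with DOFs (v1, theta1, v2, theta2).

    Returns the stiffness matrix K and the consistent mass matrix M.
    """
    I = b * h ** 3 / 12.0          # second moment of area of the rectangle
    A = b * h                      # cross-sectional area
    k = E * I / L ** 3
    K = [[12 * k, 6 * L * k, -12 * k, 6 * L * k],
         [6 * L * k, 4 * L ** 2 * k, -6 * L * k, 2 * L ** 2 * k],
         [-12 * k, -6 * L * k, 12 * k, -6 * L * k],
         [6 * L * k, 2 * L ** 2 * k, -6 * L * k, 4 * L ** 2 * k]]
    m = rho * A * L / 420.0
    M = [[156 * m, 22 * L * m, 54 * m, -13 * L * m],
         [22 * L * m, 4 * L ** 2 * m, 13 * L * m, -3 * L ** 2 * m],
         [54 * m, 13 * L * m, 156 * m, -22 * L * m],
         [-13 * L * m, -3 * L ** 2 * m, -22 * L * m, 4 * L ** 2 * m]]
    return K, M


K, M = beam_element(E=2.1e11, b=0.012, h=0.01, rho=7850.0, L=0.15)
```

Two sanity checks follow from the construction. A rigid translation (1, 0, 1, 0) produces no elastic forces. The translational entries of M sum to the element mass ρAL ≈ 0.1413 kg.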

| Section (m × m) | Length (m) | Elastic Modulus (Pa) | Density (kg/m³) | Shaking Table Excitation | Laser Displacement Sensor | Accelerometer |
|---|---|---|---|---|---|---|
| Rectangle, 0.012 × 0.01 | 0.2 | 2.1 × 10¹¹ | 7850 | 2000 sin(3.14t) / random load | LK-H151 | 1A339E |

| Working Condition | Damage Type | Damaged Members | Damage Level |
|---|---|---|---|
| SL-1 | Single | 1 | 30% |
| SL-2 | Single | 1 | 60% |
| SL-3 | Multiple | 1, 2 | 60% |
| SL-4 | Multiple | 1, 2 | 30%, 60% |
| SL-5 | Multiple | 1, 2, 3, 4 | 30% |
| SL-6 | Multiple | 1, 2, 3, 4 | 60% |
| SL-7 | Multiple | 1, 4 | 30%, 60% |
| SL-8 | Multiple | 1, 2, 3 | 60% |
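The working conditions above can be encoded as element stiffness factors. The sketch assumes three things: the damage level is a stiffness reduction $d_e$ of the listed members, only the four members appearing in the table exist, and paired levels such as "30%, 60%" apply to the listed members in order. Under these assumptions, each condition maps to a vector of factors $1 - d_e$, the quantity a stiffness reduction coefficient identification would target:

```python
from typing import Dict, List, Tuple

N_MEMBERS = 4  # assumption: members 1-4, as listed in the table

# (damaged members, damage levels) transcribed from the table; a single level
# listed for several members is applied to all of them.
SCENARIOS: Dict[str, Tuple[List[int], List[float]]] = {
    "SL-1": ([1], [0.30]),
    "SL-2": ([1], [0.60]),
    "SL-3": ([1, 2], [0.60]),
    "SL-4": ([1, 2], [0.30, 0.60]),
    "SL-5": ([1, 2, 3, 4], [0.30]),
    "SL-6": ([1, 2, 3, 4], [0.60]),
    "SL-7": ([1, 4], [0.30, 0.60]),
    "SL-8": ([1, 2, 3], [0.60]),
}


def stiffness_factors(members: List[int], levels: List[float],
                      n_members: int = N_MEMBERS) -> List[float]:
    """Return 1 - d_e for every member (1.0 means undamaged)."""
    if len(levels) == 1:
        levels = levels * len(members)       # shared level, new list
    if len(levels) != len(members):
        raise ValueError("need one level per member or a single shared level")
    factors = [1.0] * n_members
    for member, d in zip(members, levels):
        factors[member - 1] = 1.0 - d        # members are numbered from 1
    return factors


factors = {name: stiffness_factors(*spec) for name, spec in SCENARIOS.items()}
```

Multiplying each element stiffness matrix by its factor before assembly would then give the damaged global stiffness matrix for a scenario.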

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Zhang, X.; Zhong, Y.; Yan, Z.; Hong, Y. Two-Scale Physics-Informed Neural Networks for Structural Dynamics Parameter Inversion: Numerical and Experimental Validation on T-Shaped Tower Health Monitoring. Buildings 2025, 15, 1876. https://doi.org/10.3390/buildings15111876
