AI-Based Variable Importance Analysis of Mechanical and ASR Properties in Activated Waste Glass Mortar

Abstract

1. Introduction

2. Experimental Program and Methodologies

2.1. Dataset Collection

2.2. Materials

2.3. Mix Design and WGP Activation

- Mechanical activation: Waste glass with an average particle size of 300 µm was ground in a ball mill to 75 µm. The finer glass powder was expected to show improved pozzolanic performance because of its larger specific surface area.

- Chemical activation: Three chemical activators were used: sodium sulfate (Na2SO4), calcium hydroxide (Ca(OH)2), and sodium hydroxide (NaOH). Sodium sulfate served as the salt activator. It was fully dissolved in the mixing water to produce the sodium-sulfate-activated mortar. Sodium hydroxide and calcium hydroxide, combined in equal proportions by weight, served as the alkaline activator for the sodium–calcium hydroxide co-activated mortar. In that mortar, both components were partially dissolved in water before incorporation. Because sodium hydroxide and sodium sulfate are water-soluble, they could be dosed directly through the mixing water. For the calcium-hydroxide-only-activated mortar, by contrast, calcium hydroxide has limited solubility and was therefore dry-blended with WGP before mixing with cement and sand. All mortars were mixed in accordance with ASTM C305 [24].
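The replacement and activator dosages can be related to the mass quantities in Appendix A. The tabulated values fit the following reading:

- WGP replaces ground silica sand by mass, starting from 1012.5 kg/m3 of sand in the control.
- Each activator is dosed as a percentage of the 450 kg/m3 of OPC.
- Water is constant at 211.5 kg/m3 (w/c = 0.47).

The sketch below encodes that reading. The mass bases are inferred from the numbers rather than stated by the authors, and `mix_proportions` is a hypothetical helper name.

```python
# Hypothetical helper: the mass bases are inferred from Appendix A, not stated by the authors.
OPC = 450.0            # kg/m3, constant in every mix
SAND_CONTROL = 1012.5  # kg/m3 of ground silica sand in the control mix
WATER = 211.5          # kg/m3, constant (w/c = 0.47)


def mix_proportions(wgp_ratio, na2so4_ratio, alkali_ratio):
    """Return kg/m3 quantities for one mortar mix.

    wgp_ratio    -- fraction of sand replaced by WGP (0, 0.10, 0.20 or 0.30)
    na2so4_ratio -- Na2SO4 dosage as a fraction of OPC mass (0 to 0.06)
    alkali_ratio -- NaOH + Ca(OH)2 (1:1 by mass) as a fraction of OPC mass
    """
    wgp = SAND_CONTROL * wgp_ratio
    alkali = OPC * alkali_ratio
    return {
        "opc": OPC,
        "wgp": wgp,
        "sand": SAND_CONTROL - wgp,
        "water": WATER,
        "na2so4": OPC * na2so4_ratio,
        # The alkaline activator is split equally between NaOH and Ca(OH)2.
        "naoh": alkali / 2,
        "ca_oh_2": alkali / 2,
    }


# Mix 23 in Appendix A: 20% WGP, 2% Na2SO4 and 2% alkali activator.
print(mix_proportions(0.20, 0.02, 0.02))
```

Under this reading, the 20% case gives the 202.5 kg/m3 of WGP, 810 kg/m3 of sand and 9 kg/m3 of each activator listed for mix 23.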

2.4. Unconfined Compressive Test

2.5. Alkali–Silica Reaction

2.6. Machine Learning Model Establishment

2.6.1. Gradient Boosting Regressor

2.6.2. Other Machine Learning Techniques

2.6.3. Regression Evaluation Metrics

2.7. Model Interpretability and Visualization

2.7.1. Partial Dependence Plots (PDPs)

2.7.2. Shapley Additive Explanation (SHAP)

3. Results and Discussion

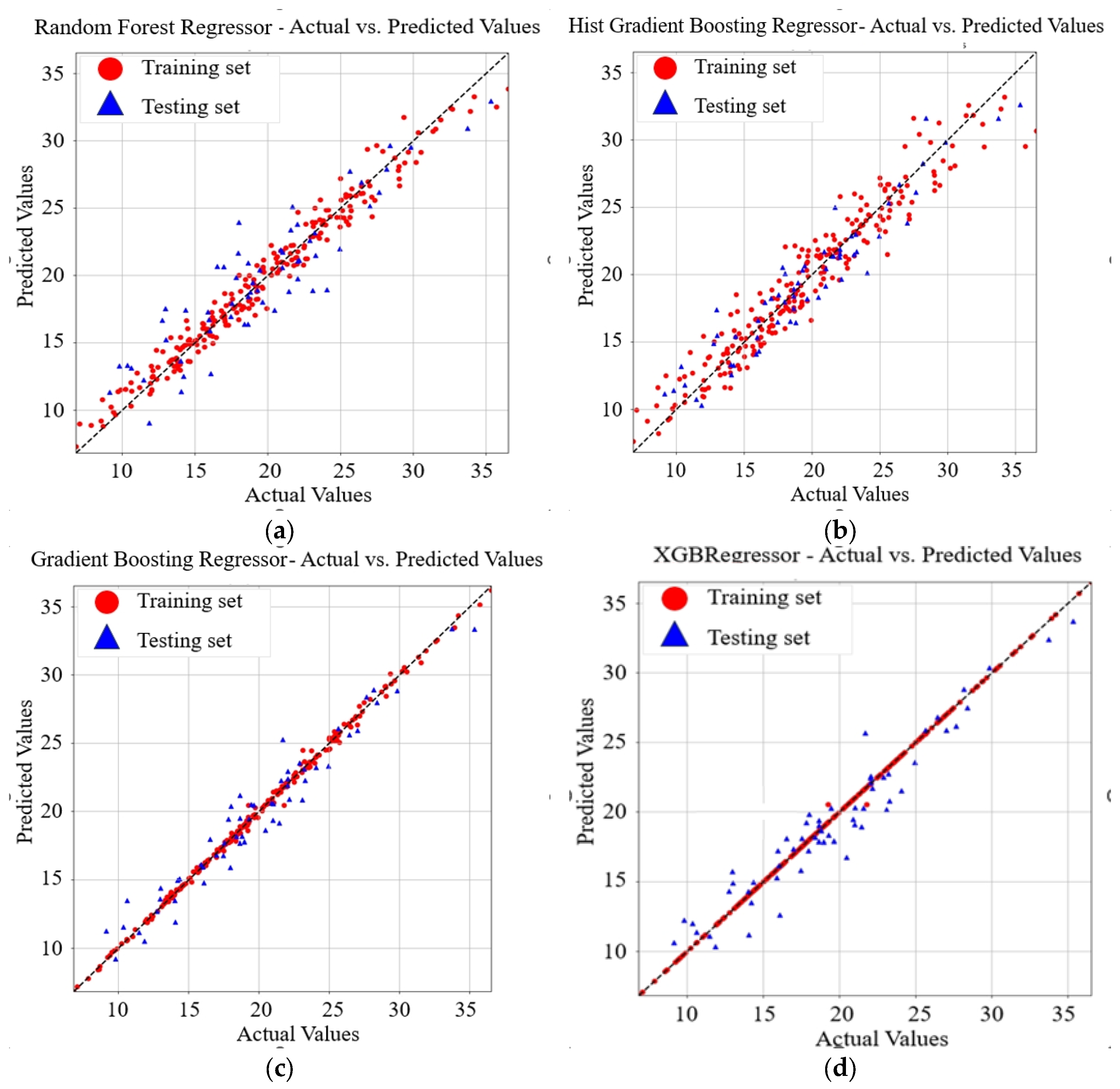

3.1. ML Prediction of UCS Results

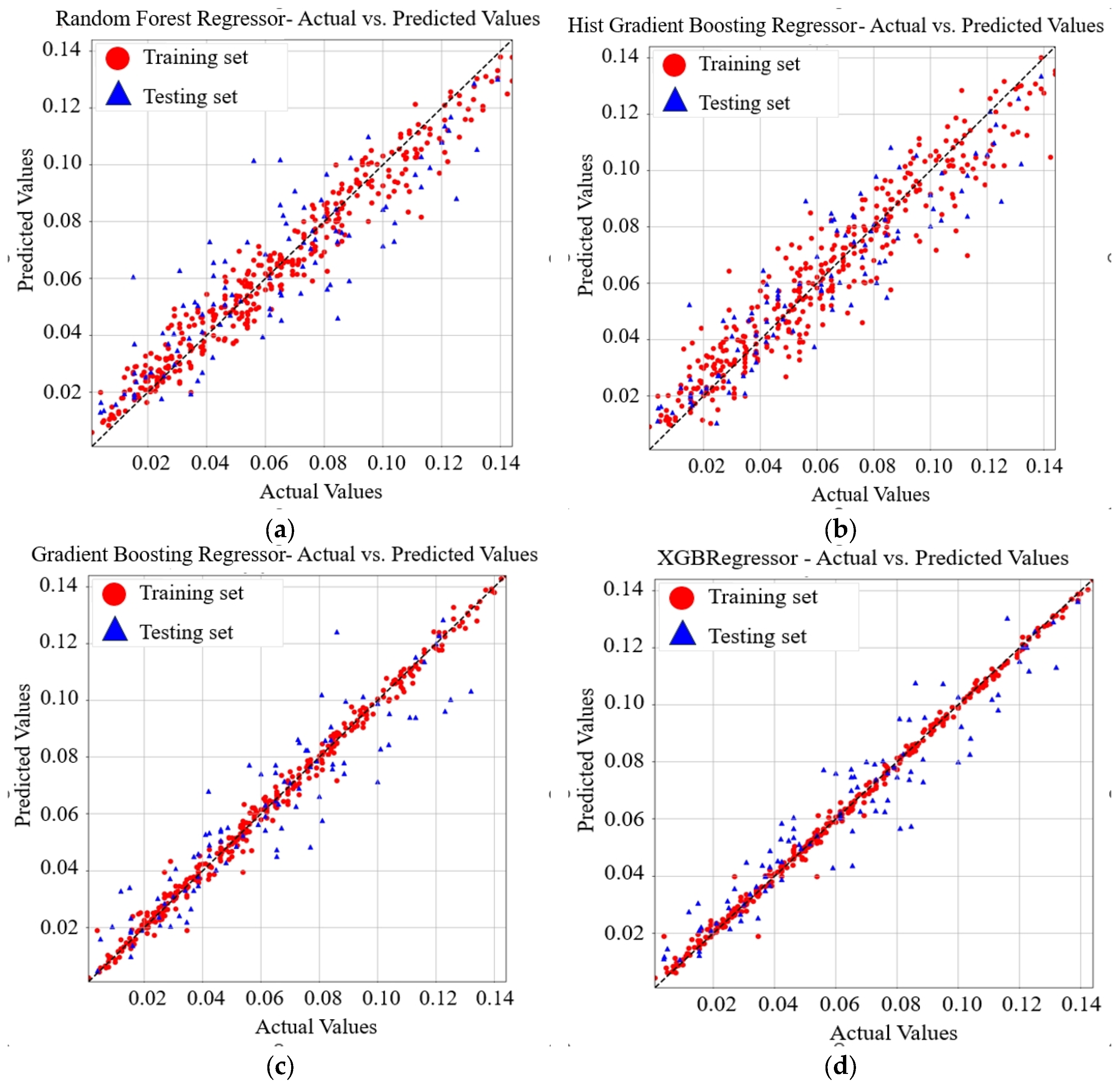

3.2. ML Prediction of ASR Expansion Results

3.3. Model Interpretability by PDP and SHAP

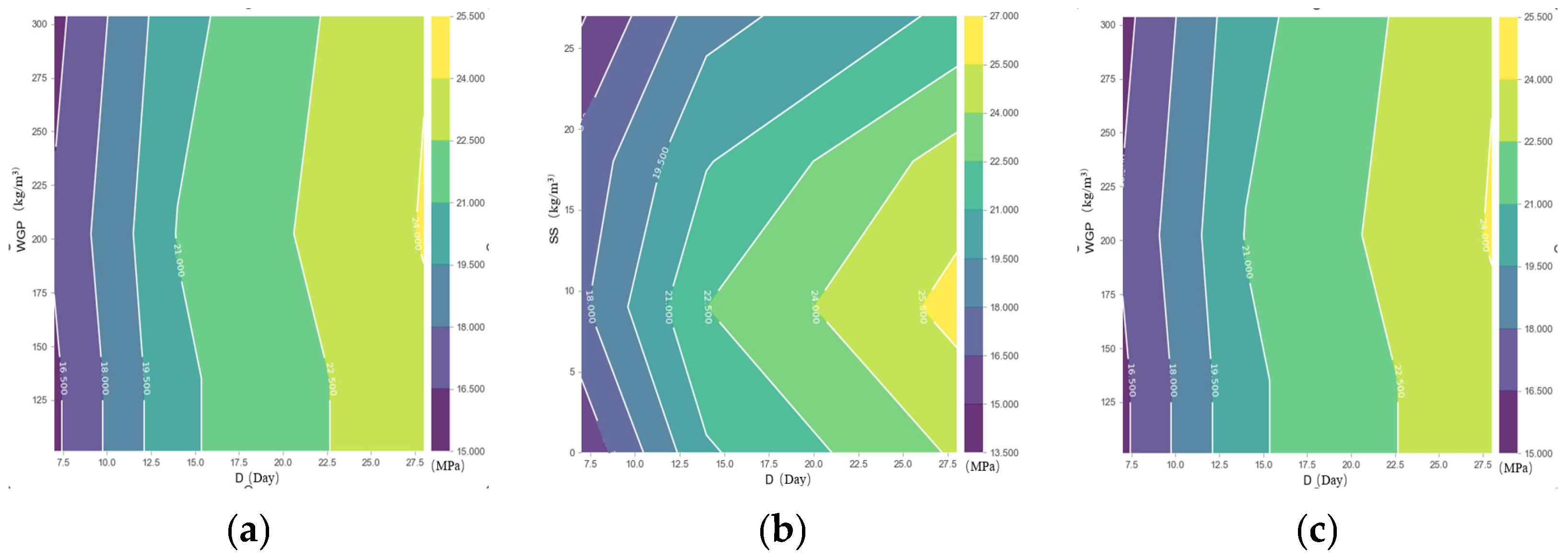

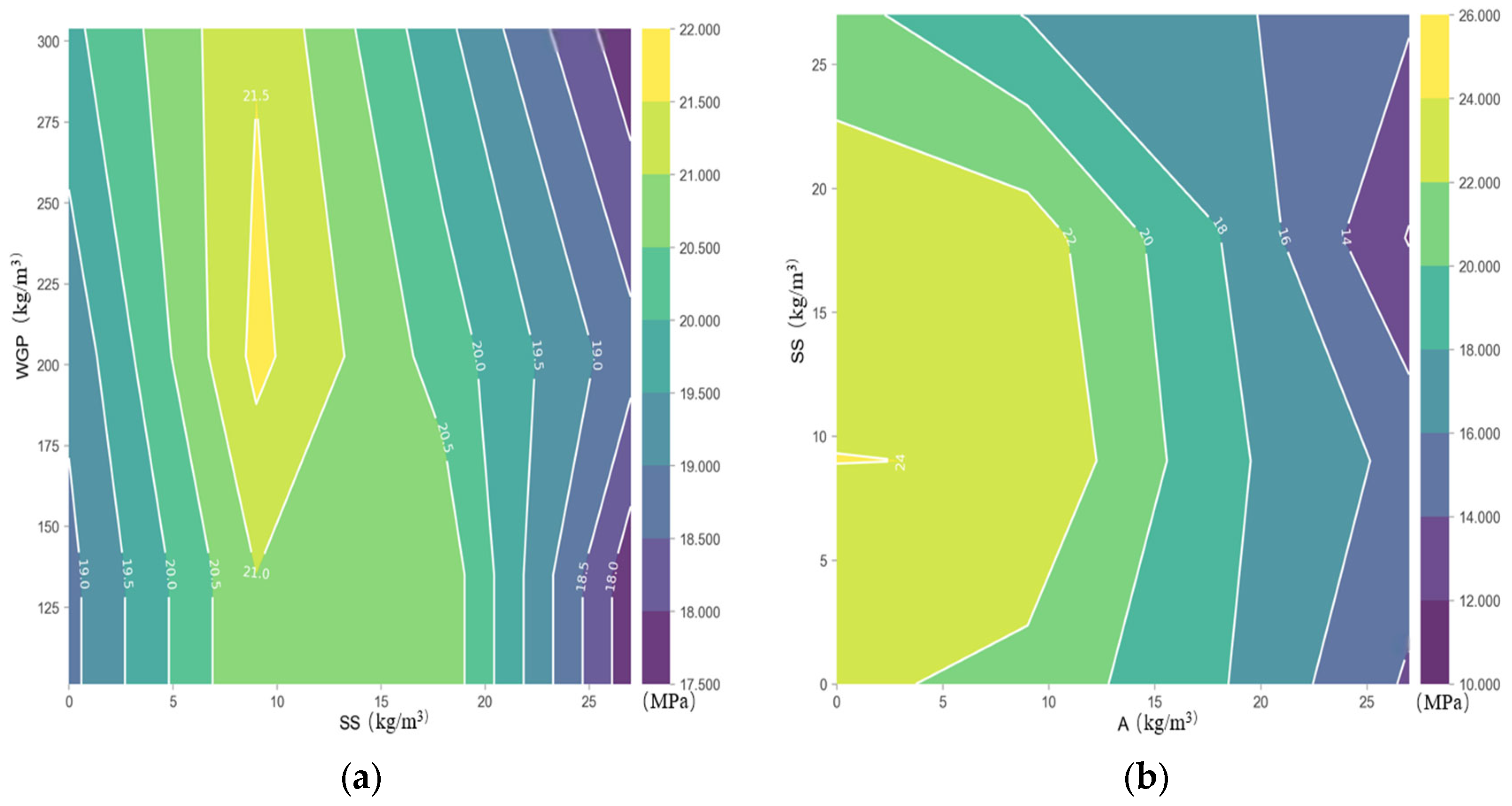

3.3.1. The PDP Explanation Related to the UCS

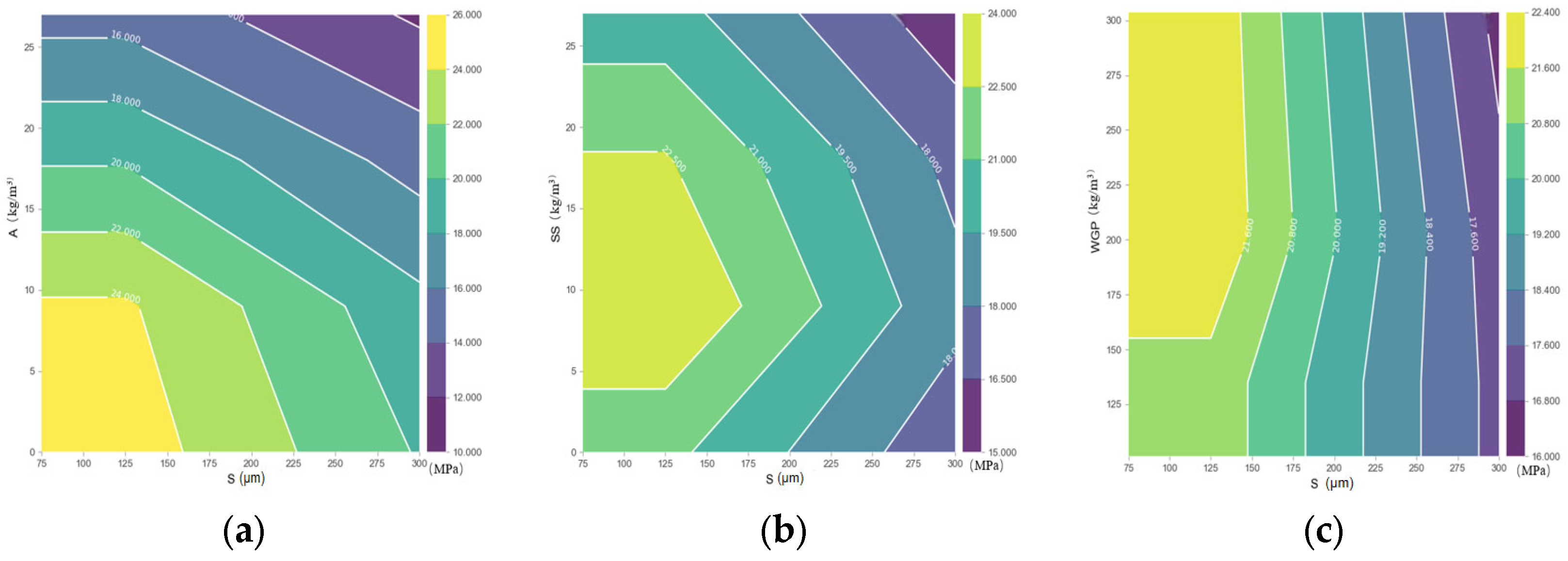

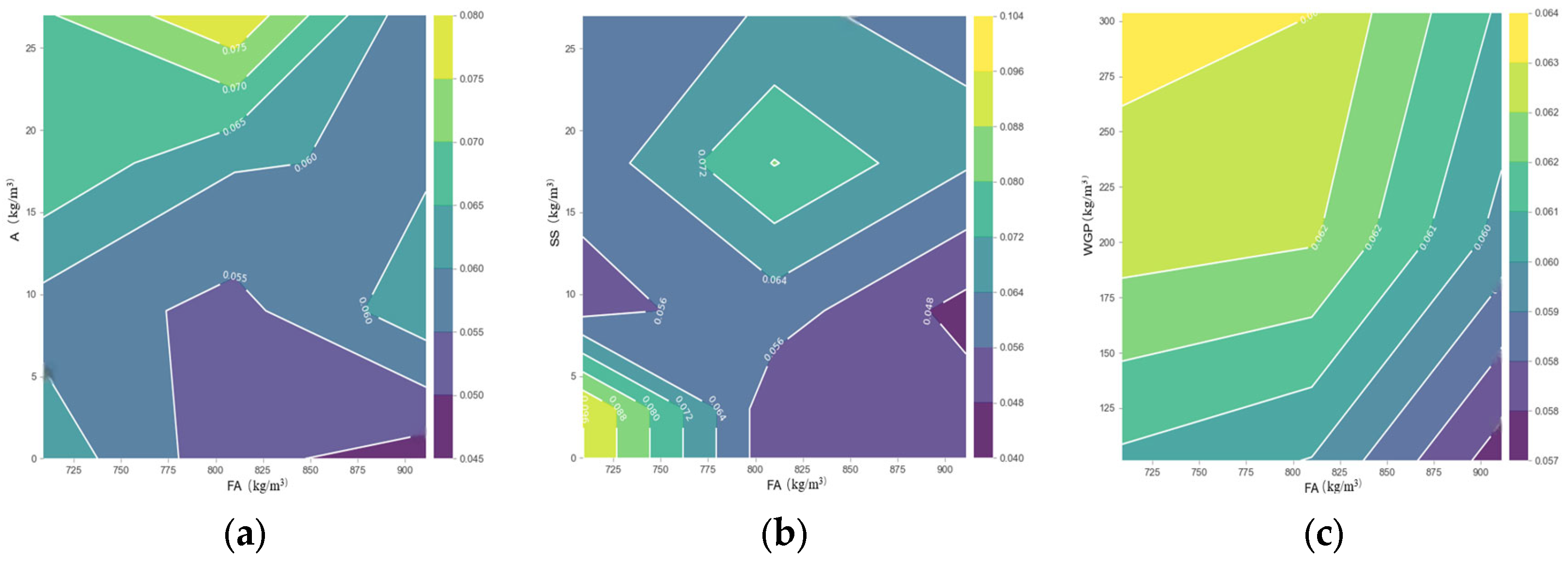

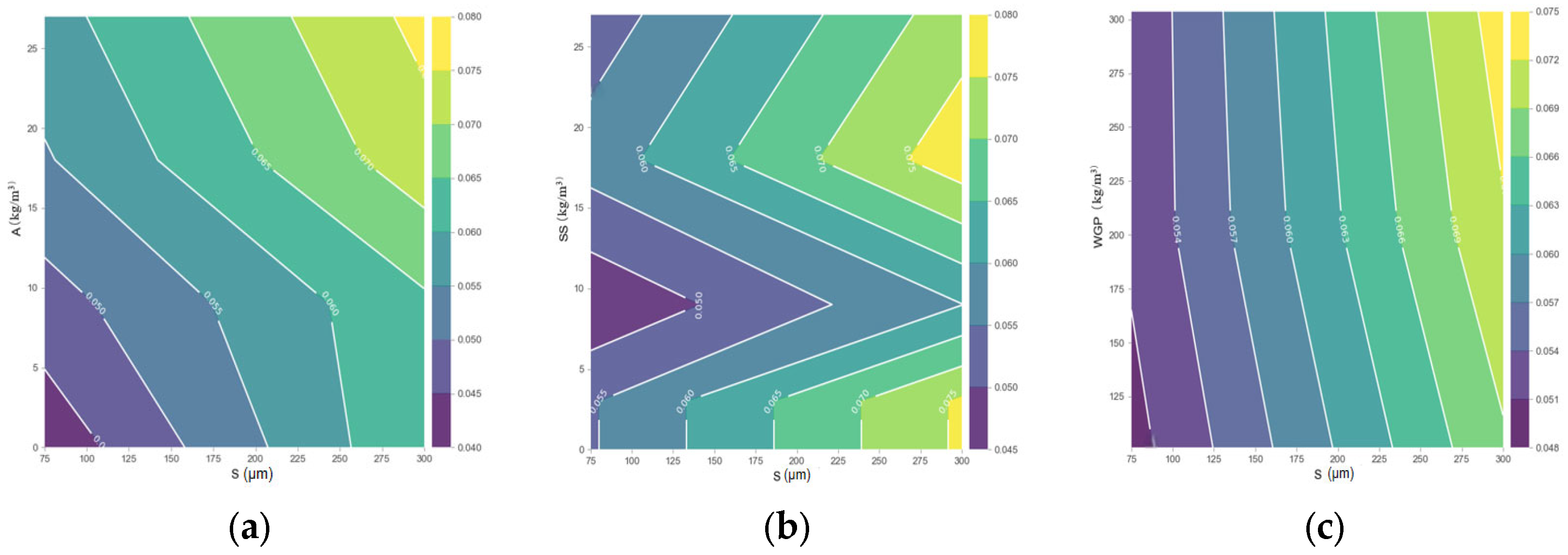

3.3.2. The PDP Explanation Related to the ASR

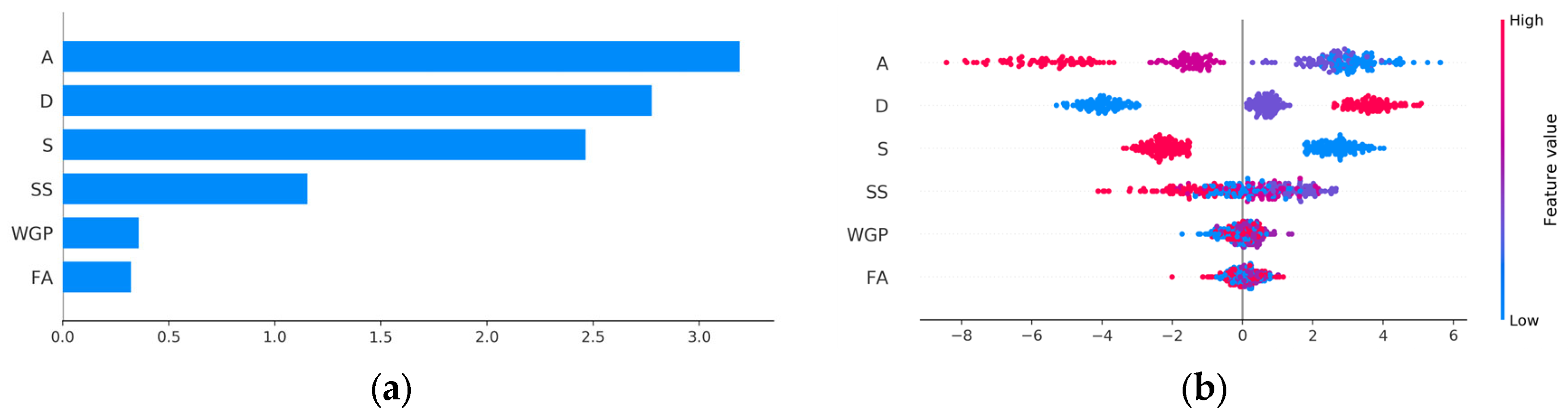

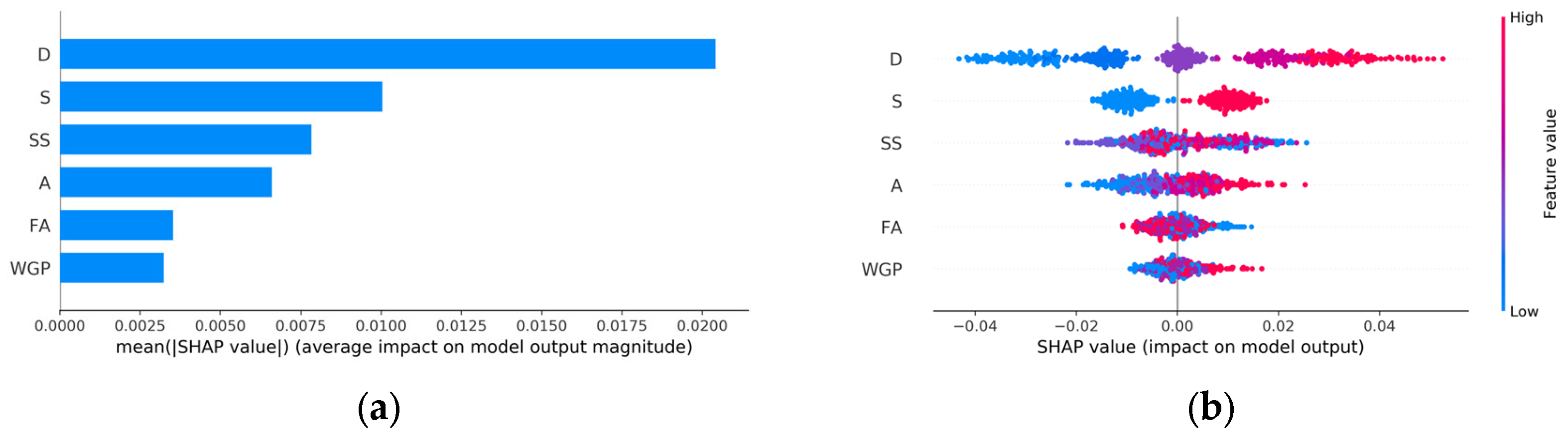

3.3.3. Shapley Additive Explanation (SHAP)

4. Conclusions

- (1) The gradient boosting regressor (GBR) achieved the best predictive performance for UCS (RMSE = 1.3085, R² = 0.9465), while XGBoost performed best for ASR expansion (RMSE = 0.0101, R² = 0.9110). The superior performance of these two models was attributed to their ability to capture nonlinear feature interactions and to their robustness on a limited dataset.

- (2) WGP content had a nonlinear effect on strength, with the optimum at a replacement of 202.5 kg/m3. At this content, the highest measured 28-day UCS was 33.92 MPa (mix 23 in Appendix A, activated with 9 kg/m3 of Na2SO4 and 9 kg/m3 of alkali). This exceeds the 26.84 MPa of the control mix without WGP (mix 1). ASR expansion was maximized at a WGP content of 303.75 kg/m3 (fine aggregate 708.75 kg/m3) combined with a sodium sulfate dosage below 5 kg/m3.

- (3) SHAP analysis shows that the alkali activator dosage has the strongest influence on UCS prediction, with an importance weight of up to 0.432. Curing duration ranks second, whereas fine aggregate content has the weakest effect on UCS prediction. At a fine aggregate content of 1012.5 kg/m3, that is, with no glass powder substitution, the SHAP value is negative. This further indicates that WGP substitution raises the predicted UCS.

- (4) Curing duration has the greatest impact on ASR expansion prediction, whereas waste glass powder content has the smallest. Nevertheless, the positive contribution of WGP to predicted ASR expansion is most evident at a WGP content of 303.75 kg/m3.
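Conclusions (2) to (4) rest on partial dependence and Shapley attributions. The sketch below is a minimal, dependency-free illustration of both quantities. It uses a hypothetical surrogate function `toy_ucs` in place of a trained model; its coefficients are invented and are not fitted to the study's data.

- **Partial dependence:** for a given feature, the model output is averaged over a background dataset while that feature is held at each grid value.
- **Shapley values:** these are computed exactly by enumerating every coalition of features. Features outside a coalition take their background values (an interventional formulation). The sketch also includes mean absolute SHAP, one common way to turn per-sample values into a global importance ranking.

Whether the study used this convention, or how its importance weights were normalized, is not stated here. Tree-specific algorithms such as TreeSHAP compute Shapley values for tree ensembles far more efficiently. Brute-force enumeration, as below, is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial

# Hypothetical feature set; the study's models use more inputs (see Appendix A).
FEATURES = ("wgp", "na2so4", "alkali", "age")


def toy_ucs(x):
    """Hypothetical surrogate for a trained UCS model (invented coefficients).

    x maps each name in FEATURES to a value: WGP and activators in kg/m3, age in days.
    """
    wgp_term = 0.06 * x["wgp"] - 0.00015 * x["wgp"] ** 2  # concave, peaks at 200 kg/m3
    return 15.0 + wgp_term + 0.1 * x["na2so4"] - 0.3 * x["alkali"] + 0.4 * x["age"]


def partial_dependence(model, background, feature, grid):
    """Mean model output over the background with `feature` fixed to each grid value."""
    curve = []
    for value in grid:
        preds = [model({**row, feature: value}) for row in background]
        curve.append(sum(preds) / len(preds))
    return curve


def coalition_value(model, x, background, coalition):
    """Mean output when coalition features take x's values and the rest come from the background."""
    preds = [
        model({f: (x[f] if f in coalition else row[f]) for f in FEATURES})
        for row in background
    ]
    return sum(preds) / len(preds)


def shapley_values(model, x, background):
    """Exact Shapley values for one sample by enumerating every coalition of the other features."""
    m = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for size in range(m):
            # Shapley weight |S|! (M - |S| - 1)! / M! for every coalition S of this size.
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                gain = coalition_value(model, x, background, s | {f}) - coalition_value(
                    model, x, background, s
                )
                total += weight * gain
        phi[f] = total
    return phi


def mean_abs_shap(model, samples, background):
    """Global importance: mean absolute Shapley value of each feature over `samples`."""
    per_sample = [shapley_values(model, s, background) for s in samples]
    return {f: sum(abs(p[f]) for p in per_sample) / len(per_sample) for f in FEATURES}


# Hypothetical background set built from design levels used in the study.
BACKGROUND = [
    {"wgp": w, "na2so4": n, "alkali": a, "age": t}
    for w in (0, 101.25, 202.5, 303.75)
    for n in (0, 18)
    for a in (0, 18)
    for t in (7, 28)
]
sample = {"wgp": 202.5, "na2so4": 9, "alkali": 9, "age": 28}
print(partial_dependence(toy_ucs, BACKGROUND, "wgp", [0, 101.25, 202.5, 303.75]))
print(shapley_values(toy_ucs, sample, BACKGROUND))
```

Two properties give useful sanity checks:

- The Shapley values of a sample sum to its prediction minus the mean background prediction.
- For an additive surrogate like `toy_ucs`, each Shapley value reduces to that feature's term minus the term's background mean.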

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

Appendix A

| ID | OPC (kg/m3) | 75 μm WGP (kg/m3) | 300 μm WGP (kg/m3) | Ground silica sand (kg/m3) | Water (kg/m3) | Na2SO4 (kg/m3) | Alkali (kg/m3) | Compressive strength, 7 d (MPa) | Compressive strength, 14 d (MPa) | Compressive strength, 28 d (MPa) | ASR expansion, 2 d (%) | ASR expansion, 4 d (%) | ASR expansion, 7 d (%) | ASR expansion, 10 d (%) | ASR expansion, 14 d (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 450 | 0 | 0 | 1012.5 | 211.5 | 0 | 0 | 17.44 | 24.15 | 26.84 | 0.0196 | 0.0225 | 0.0308 | 0.0346 | 0.0462 |

| 2 | 450 | 101.25 | 0 | 911.25 | 211.5 | 0 | 0 | 19.64 | 27.14 | 35.72 | 0.0066 | 0.0077 | 0.0156 | 0.0192 | 0.0192 |

| 3 | 450 | 101.25 | 0 | 911.25 | 211.5 | 0 | 9 | 17.50 | 23.06 | 25.00 | 0.0500 | 0.0562 | 0.0654 | 0.0692 | 0.0712 |

| 4 | 450 | 101.25 | 0 | 911.25 | 211.5 | 0 | 18 | 15.02 | 21.00 | 23.11 | 0.0281 | 0.0385 | 0.0550 | 0.0677 | 0.0815 |

| 5 | 450 | 101.25 | 0 | 911.25 | 211.5 | 0 | 27 | 9.71 | 12.75 | 14.49 | 0.0246 | 0.0262 | 0.0308 | 0.0423 | 0.0769 |

| 6 | 450 | 101.25 | 0 | 911.25 | 211.5 | 9 | 0 | 19.67 | 27.01 | 29.35 | 0.0009 | 0.0100 | 0.0120 | 0.0350 | 0.0650 |

| 7 | 450 | 101.25 | 0 | 911.25 | 211.5 | 9 | 9 | 20.89 | 25.37 | 29.84 | 0.0346 | 0.0385 | 0.0519 | 0.0692 | 0.1038 |

| 8 | 450 | 101.25 | 0 | 911.25 | 211.5 | 9 | 18 | 15.83 | 20.32 | 23.63 | 0.0154 | 0.0214 | 0.0315 | 0.0577 | 0.0731 |

| 9 | 450 | 101.25 | 0 | 911.25 | 211.5 | 9 | 27 | 13.97 | 17.63 | 21.50 | 0.0246 | 0.0308 | 0.0446 | 0.0538 | 0.0808 |

| 10 | 450 | 101.25 | 0 | 911.25 | 211.5 | 18 | 0 | 21.92 | 27.65 | 36.53 | 0.0308 | 0.0369 | 0.0531 | 0.0885 | 0.1000 |

| 11 | 450 | 101.25 | 0 | 911.25 | 211.5 | 18 | 9 | 21.24 | 26.54 | 30.34 | 0.0231 | 0.0292 | 0.0538 | 0.0984 | 0.1320 |

| 12 | 450 | 101.25 | 0 | 911.25 | 211.5 | 18 | 18 | 16.02 | 19.45 | 22.89 | 0.0154 | 0.0254 | 0.0538 | 0.0808 | 0.1308 |

| 13 | 450 | 101.25 | 0 | 911.25 | 211.5 | 18 | 27 | 13.98 | 0.00 | 18.64 | 0.0100 | 0.0231 | 0.0615 | 0.0885 | 0.1160 |

| 14 | 450 | 101.25 | 0 | 911.25 | 211.5 | 27 | 0 | 16.52 | 21.60 | 23.61 | 0.0123 | 0.0231 | 0.0654 | 0.0731 | 0.0962 |

| 15 | 450 | 101.25 | 0 | 911.25 | 211.5 | 27 | 9 | 16.97 | 20.42 | 23.57 | 0.0038 | 0.0115 | 0.0462 | 0.0846 | 0.1192 |

| 16 | 450 | 101.25 | 0 | 911.25 | 211.5 | 27 | 18 | 12.78 | 17.04 | 19.66 | 0.0085 | 0.0231 | 0.0615 | 0.1077 | 0.1224 |

| 17 | 450 | 101.25 | 0 | 911.25 | 211.5 | 27 | 27 | 10.62 | 14.35 | 16.33 | 0.0046 | 0.0247 | 0.0462 | 0.0810 | 0.1000 |

| 18 | 450 | 202.5 | 0 | 810 | 211.5 | 0 | 0 | 18.13 | 26.44 | 27.90 | 0.0075 | 0.0138 | 0.0308 | 0.0423 | 0.0462 |

| 19 | 450 | 202.5 | 0 | 810 | 211.5 | 0 | 9 | 18.96 | 25.77 | 28.73 | 0.0192 | 0.0238 | 0.0423 | 0.0462 | 0.0531 |

| 20 | 450 | 202.5 | 0 | 810 | 211.5 | 0 | 18 | 15.58 | 20.57 | 23.97 | 0.0235 | 0.0269 | 0.0423 | 0.0538 | 0.0731 |

| 21 | 450 | 202.5 | 0 | 810 | 211.5 | 0 | 27 | 12.37 | 16.35 | 18.46 | 0.0235 | 0.0269 | 0.0308 | 0.0465 | 0.0760 |

| 22 | 450 | 202.5 | 0 | 810 | 211.5 | 9 | 0 | 22.49 | 28.96 | 31.53 | 0.0308 | 0.0308 | 0.0538 | 0.0515 | 0.0538 |

| 23 | 450 | 202.5 | 0 | 810 | 211.5 | 9 | 9 | 23.74 | 30.53 | 33.92 | 0.0157 | 0.0346 | 0.0385 | 0.0538 | 0.0692 |

| 24 | 450 | 202.5 | 0 | 810 | 211.5 | 9 | 18 | 19.21 | 21.55 | 23.12 | 0.0200 | 0.0386 | 0.0465 | 0.0612 | 0.0808 |

| 25 | 450 | 202.5 | 0 | 810 | 211.5 | 9 | 27 | 16.08 | 20.46 | 25.55 | 0.0538 | 0.0577 | 0.0713 | 0.0831 | 0.0865 |

| 26 | 450 | 202.5 | 0 | 810 | 211.5 | 18 | 0 | 21.79 | 25.66 | 27.48 | 0.0346 | 0.0538 | 0.0654 | 0.0692 | 0.0692 |

| 27 | 450 | 202.5 | 0 | 810 | 211.5 | 18 | 9 | 24.96 | 29.01 | 33.73 | 0.0346 | 0.0538 | 0.0615 | 0.0756 | 0.1000 |

| 28 | 450 | 202.5 | 0 | 810 | 211.5 | 18 | 18 | 19.25 | 23.29 | 25.72 | 0.0385 | 0.0615 | 0.0885 | 0.1038 | 0.1065 |

| 29 | 450 | 202.5 | 0 | 810 | 211.5 | 18 | 27 | 9.23 | 11.99 | 16.15 | 0.0421 | 0.0731 | 0.0885 | 0.1077 | 0.1055 |

| 30 | 450 | 202.5 | 0 | 810 | 211.5 | 18 | 0 | 19.26 | 25.56 | 28.40 | 0.0038 | 0.0269 | 0.0423 | 0.0542 | 0.0615 |

| 31 | 450 | 202.5 | 0 | 810 | 211.5 | 27 | 9 | 19.70 | 27.17 | 30.19 | 0.0077 | 0.0192 | 0.0500 | 0.0650 | 0.0846 |

| 32 | 450 | 202.5 | 0 | 810 | 211.5 | 27 | 18 | 17.04 | 22.70 | 25.22 | 0.0154 | 0.0260 | 0.0423 | 0.0462 | 0.0577 |

| 33 | 450 | 202.5 | 0 | 810 | 211.5 | 27 | 27 | 13.09 | 18.69 | 22.60 | 0.0385 | 0.0846 | 0.0962 | 0.1000 | 0.1115 |

| 34 | 450 | 303.75 | 0 | 708.75 | 211.5 | 0 | 0 | 17.82 | 26.44 | 31.88 | 0.0462 | 0.0692 | 0.1086 | 0.1192 | 0.1423 |

| 35 | 450 | 303.75 | 0 | 708.75 | 211.5 | 0 | 9 | 19.59 | 26.89 | 31.35 | 0.0708 | 0.0731 | 0.0538 | 0.0615 | 0.0962 |

| 36 | 450 | 303.75 | 0 | 708.75 | 211.5 | 0 | 18 | 18.20 | 23.54 | 26.05 | 0.0654 | 0.0662 | 0.0727 | 0.0754 | 0.1019 |

| 37 | 450 | 303.75 | 0 | 708.75 | 211.5 | 0 | 27 | 14.53 | 18.93 | 21.32 | 0.0769 | 0.0769 | 0.0846 | 0.0769 | 0.1115 |

| 38 | 450 | 303.75 | 0 | 708.75 | 211.5 | 9 | 0 | 23.45 | 28.16 | 34.19 | 0.0200 | 0.0250 | 0.0390 | 0.0640 | 0.0670 |

| 39 | 450 | 303.75 | 0 | 708.75 | 211.5 | 9 | 9 | 24.73 | 29.65 | 35.33 | 0.0154 | 0.0200 | 0.0269 | 0.0500 | 0.0654 |

| 40 | 450 | 303.75 | 0 | 708.75 | 211.5 | 9 | 18 | 18.65 | 22.95 | 29.05 | 0.0165 | 0.0187 | 0.0568 | 0.0765 | 0.0846 |

| 41 | 450 | 303.75 | 0 | 708.75 | 211.5 | 9 | 27 | 13.78 | 17.54 | 21.75 | 0.0175 | 0.0254 | 0.0358 | 0.0586 | 0.0765 |

| 42 | 450 | 303.75 | 0 | 708.75 | 211.5 | 18 | 0 | 20.65 | 25.37 | 32.57 | 0.0154 | 0.0154 | 0.0238 | 0.0385 | 0.0508 |

| 43 | 450 | 303.75 | 0 | 708.75 | 211.5 | 18 | 9 | 22.03 | 25.65 | 32.69 | 0.0192 | 0.0288 | 0.0346 | 0.0427 | 0.0462 |

| 44 | 450 | 303.75 | 0 | 708.75 | 211.5 | 18 | 18 | 18.80 | 20.89 | 23.24 | 0.0346 | 0.0538 | 0.0577 | 0.0615 | 0.0731 |

| 45 | 450 | 303.75 | 0 | 708.75 | 211.5 | 18 | 27 | 10.65 | 12.45 | 14.01 | 0.0216 | 0.0386 | 0.0462 | 0.0650 | 0.0840 |

| 46 | 450 | 303.75 | 0 | 708.75 | 211.5 | 27 | 0 | 18.65 | 24.06 | 29.41 | 0.0154 | 0.0308 | 0.0423 | 0.0654 | 0.0769 |

| 47 | 450 | 303.75 | 0 | 708.75 | 211.5 | 27 | 9 | 17.96 | 18.02 | 22.06 | 0.0208 | 0.0269 | 0.0346 | 0.0513 | 0.0615 |

| 48 | 450 | 303.75 | 0 | 708.75 | 211.5 | 27 | 18 | 15.96 | 22.16 | 23.82 | 0.0154 | 0.0346 | 0.0486 | 0.0692 | 0.0808 |

| 49 | 450 | 303.75 | 0 | 708.75 | 211.5 | 27 | 27 | 13.56 | 17.06 | 20.24 | 0.0268 | 0.0346 | 0.0540 | 0.0654 | 0.0912 |

| 50 | 450 | 0 | 101.25 | 911.25 | 211.5 | 0 | 0 | 16.20 | 22.51 | 25.01 | 0.0070 | 0.0150 | 0.0330 | 0.0410 | 0.0510 |

| 51 | 450 | 0 | 101.25 | 911.25 | 211.5 | 0 | 9 | 13.75 | 18.01 | 20.23 | 0.0160 | 0.0810 | 0.1090 | 0.1110 | 0.1080 |

| 52 | 450 | 0 | 101.25 | 911.25 | 211.5 | 0 | 18 | 12.05 | 16.80 | 18.65 | 0.0490 | 0.0610 | 0.0840 | 0.0950 | 0.1150 |

| 53 | 450 | 0 | 101.25 | 911.25 | 211.5 | 0 | 27 | 7.86 | 10.23 | 11.02 | 0.0380 | 0.0450 | 0.0620 | 0.0840 | 0.1130 |

| 54 | 450 | 0 | 101.25 | 911.25 | 211.5 | 9 | 0 | 15.54 | 21.02 | 25.04 | 0.0050 | 0.0120 | 0.0230 | 0.0560 | 0.0845 |

| 55 | 450 | 0 | 101.25 | 911.25 | 211.5 | 9 | 9 | 18.29 | 25.40 | 26.66 | 0.0270 | 0.0460 | 0.0570 | 0.0950 | 0.1020 |

| 56 | 450 | 0 | 101.25 | 911.25 | 211.5 | 9 | 18 | 13.77 | 19.31 | 20.79 | 0.0160 | 0.0250 | 0.0370 | 0.0660 | 0.0830 |

| 57 | 450 | 0 | 101.25 | 911.25 | 211.5 | 9 | 27 | 12.02 | 16.22 | 19.13 | 0.0250 | 0.0340 | 0.0620 | 0.0580 | 0.0910 |

| 58 | 450 | 0 | 101.25 | 911.25 | 211.5 | 18 | 0 | 17.86 | 21.65 | 29.03 | 0.0490 | 0.0510 | 0.0770 | 0.1200 | 0.1310 |

| 59 | 450 | 0 | 101.25 | 911.25 | 211.5 | 18 | 9 | 18.69 | 24.95 | 26.40 | 0.0270 | 0.0340 | 0.0660 | 0.1110 | 0.1320 |

| 60 | 450 | 0 | 101.25 | 911.25 | 211.5 | 18 | 18 | 13.46 | 19.26 | 20.83 | 0.0180 | 0.0280 | 0.0720 | 0.1090 | 0.1440 |

| 61 | 450 | 0 | 101.25 | 911.25 | 211.5 | 18 | 27 | 12.02 | 14.65 | 16.03 | 0.0110 | 0.0260 | 0.0760 | 0.0960 | 0.1400 |

| 62 | 450 | 0 | 101.25 | 911.25 | 211.5 | 27 | 0 | 13.00 | 19.02 | 18.73 | 0.0120 | 0.0540 | 0.0860 | 0.1200 | 0.1390 |

| 63 | 450 | 0 | 101.25 | 911.25 | 211.5 | 27 | 9 | 14.26 | 19.60 | 21.45 | 0.0040 | 0.0150 | 0.0520 | 0.0940 | 0.1310 |

| 64 | 450 | 0 | 101.25 | 911.25 | 211.5 | 27 | 18 | 10.35 | 16.19 | 17.14 | 0.0090 | 0.0300 | 0.0710 | 0.1340 | 0.1390 |

| 65 | 450 | 0 | 101.25 | 911.25 | 211.5 | 27 | 27 | 9.13 | 13.35 | 14.62 | 0.0050 | 0.0350 | 0.0630 | 0.0930 | 0.1110 |

| 66 | 450 | 0 | 202.5 | 810 | 211.5 | 0 | 0 | 15.08 | 20.94 | 23.27 | 0.0210 | 0.0340 | 0.0620 | 0.0700 | 0.0850 |

| 67 | 450 | 0 | 202.5 | 810 | 211.5 | 0 | 9 | 14.65 | 21.02 | 23.22 | 0.0210 | 0.0360 | 0.0540 | 0.0700 | 0.0860 |

| 68 | 450 | 0 | 202.5 | 810 | 211.5 | 0 | 18 | 12.11 | 16.85 | 19.25 | 0.0180 | 0.0640 | 0.0760 | 0.0840 | 0.1110 |

| 69 | 450 | 0 | 202.5 | 810 | 211.5 | 0 | 27 | 9.53 | 13.56 | 14.13 | 0.0300 | 0.0510 | 0.0560 | 0.0730 | 0.0940 |

| 70 | 450 | 0 | 202.5 | 810 | 211.5 | 9 | 0 | 18.40 | 22.05 | 25.65 | 0.0340 | 0.0490 | 0.0720 | 0.0790 | 0.1010 |

| 71 | 450 | 0 | 202.5 | 810 | 211.5 | 9 | 9 | 18.36 | 22.05 | 25.12 | 0.0290 | 0.0380 | 0.0550 | 0.0790 | 0.0890 |

| 72 | 450 | 0 | 202.5 | 810 | 211.5 | 9 | 18 | 14.02 | 15.52 | 17.57 | 0.0210 | 0.0500 | 0.0560 | 0.0800 | 0.0920 |

| 73 | 450 | 0 | 202.5 | 810 | 211.5 | 9 | 27 | 11.90 | 13.71 | 19.93 | 0.0590 | 0.0650 | 0.0820 | 0.1080 | 0.1130 |

| 74 | 450 | 0 | 202.5 | 810 | 211.5 | 18 | 0 | 17.02 | 21.05 | 24.02 | 0.0210 | 0.0650 | 0.0850 | 0.1080 | 0.1210 |

| 75 | 450 | 0 | 202.5 | 810 | 211.5 | 18 | 9 | 17.72 | 19.73 | 24.28 | 0.0440 | 0.0600 | 0.0670 | 0.0920 | 0.1140 |

| 76 | 450 | 0 | 202.5 | 810 | 211.5 | 18 | 18 | 15.21 | 17.47 | 18.78 | 0.0490 | 0.0770 | 0.1040 | 0.1340 | 0.1260 |

| 77 | 450 | 0 | 202.5 | 810 | 211.5 | 18 | 27 | 7.07 | 8.64 | 12.34 | 0.0520 | 0.0850 | 0.1220 | 0.1370 | 0.1390 |

| 78 | 450 | 0 | 202.5 | 810 | 211.5 | 27 | 0 | 15.62 | 20.65 | 22.45 | 0.0069 | 0.0550 | 0.0680 | 0.0760 | 0.0950 |

| 79 | 450 | 0 | 202.5 | 810 | 211.5 | 27 | 9 | 14.78 | 19.29 | 23.09 | 0.0080 | 0.0210 | 0.0550 | 0.0810 | 0.1060 |

| 80 | 450 | 0 | 202.5 | 810 | 211.5 | 27 | 18 | 13.22 | 15.89 | 18.30 | 0.0170 | 0.0280 | 0.0490 | 0.0840 | 0.1120 |

| 81 | 450 | 0 | 202.5 | 810 | 211.5 | 27 | 27 | 10.60 | 13.83 | 17.29 | 0.0420 | 0.1130 | 0.1250 | 0.1290 | 0.1210 |

| 82 | 450 | 0 | 303.75 | 708.75 | 211.5 | 0 | 0 | 14.36 | 19.03 | 21.69 | 0.0410 | 0.0760 | 0.0860 | 0.1300 | 0.1440 |

| 83 | 450 | 0 | 303.75 | 708.75 | 211.5 | 0 | 9 | 15.12 | 21.98 | 25.65 | 0.0420 | 0.0650 | 0.0810 | 0.0960 | 0.1230 |

| 84 | 450 | 0 | 303.75 | 708.75 | 211.5 | 0 | 18 | 14.05 | 18.05 | 22.01 | 0.0800 | 0.1050 | 0.1210 | 0.1280 | 0.1230 |

| 85 | 450 | 0 | 303.75 | 708.75 | 211.5 | 0 | 27 | 11.85 | 15.65 | 17.12 | 0.1020 | 0.1260 | 0.1160 | 0.1210 | 0.1350 |

| 86 | 450 | 0 | 303.75 | 708.75 | 211.5 | 9 | 0 | 18.00 | 22.05 | 27.35 | 0.0760 | 0.0790 | 0.0870 | 0.0910 | 0.1090 |

| 87 | 450 | 0 | 303.75 | 708.75 | 211.5 | 9 | 9 | 15.33 | 20.65 | 22.61 | 0.0390 | 0.0530 | 0.0860 | 0.1130 | 0.1160 |

| 88 | 450 | 0 | 303.75 | 708.75 | 211.5 | 9 | 18 | 11.19 | 16.98 | 19.17 | 0.0130 | 0.0150 | 0.0290 | 0.0560 | 0.0930 |

| 89 | 450 | 0 | 303.75 | 708.75 | 211.5 | 9 | 27 | 8.54 | 12.10 | 13.33 | 0.0280 | 0.0370 | 0.0430 | 0.0650 | 0.0950 |

| 90 | 450 | 0 | 303.75 | 708.75 | 211.5 | 18 | 0 | 16.22 | 20.65 | 27.02 | 0.0320 | 0.0290 | 0.0400 | 0.0650 | 0.0910 |

| 91 | 450 | 0 | 303.75 | 708.75 | 211.5 | 18 | 9 | 14.54 | 18.47 | 21.58 | 0.0320 | 0.0530 | 0.0640 | 0.0710 | 0.0860 |

| 92 | 450 | 0 | 303.75 | 708.75 | 211.5 | 18 | 18 | 11.47 | 15.88 | 16.15 | 0.0620 | 0.0950 | 0.1040 | 0.1030 | 0.1120 |

| 93 | 450 | 0 | 303.75 | 708.75 | 211.5 | 18 | 27 | 6.82 | 9.39 | 9.80 | 0.0370 | 0.0730 | 0.0850 | 0.0890 | 0.1060 |

| 94 | 450 | 0 | 303.75 | 708.75 | 211.5 | 27 | 0 | 14.21 | 19.65 | 24.06 | 0.0310 | 0.0480 | 0.0810 | 0.0860 | 0.0980 |

| 95 | 450 | 0 | 303.75 | 708.75 | 211.5 | 27 | 9 | 10.60 | 12.97 | 14.41 | 0.0390 | 0.0480 | 0.0600 | 0.0860 | 0.1070 |

| 96 | 450 | 0 | 303.75 | 708.75 | 211.5 | 27 | 18 | 9.89 | 15.73 | 16.29 | 0.0260 | 0.0620 | 0.0840 | 0.1220 | 0.1260 |

| 97 | 450 | 0 | 303.75 | 708.75 | 211.5 | 27 | 27 | 8.68 | 11.94 | 13.97 | 0.0500 | 0.0600 | 0.0800 | 0.0930 | 0.1231 |
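Curing duration is one of the model inputs. Each mix above therefore presumably contributes one UCS sample per test age (7, 14 and 28 d) and one ASR sample per measurement age (2, 4, 7, 10 and 14 d).

The sketch below shows that wide-to-long reshaping for two mixes copied from the table. The record layout and the function name `to_long` are assumptions. The sketch also skips zero strength readings, such as the 14 d value of mix 13. Treating that reading as missing data is likewise an assumption.

```python
UCS_AGES = (7, 14, 28)         # days
ASR_AGES = (2, 4, 7, 10, 14)   # days

# Two rows copied from Appendix A: inputs in kg/m3, UCS in MPa, ASR expansion in %.
WIDE_ROWS = [
    {"id": 1, "opc": 450, "wgp75": 0, "wgp300": 0, "sand": 1012.5, "water": 211.5,
     "na2so4": 0, "alkali": 0,
     "ucs": (17.44, 24.15, 26.84),
     "asr": (0.0196, 0.0225, 0.0308, 0.0346, 0.0462)},
    {"id": 13, "opc": 450, "wgp75": 101.25, "wgp300": 0, "sand": 911.25, "water": 211.5,
     "na2so4": 18, "alkali": 27,
     "ucs": (13.98, 0.00, 18.64),
     "asr": (0.0100, 0.0231, 0.0615, 0.0885, 0.1160)},
]

INPUT_KEYS = ("opc", "wgp75", "wgp300", "sand", "water", "na2so4", "alkali")


def to_long(rows):
    """Split each wide row into (features, target) samples, one per test age."""
    ucs_samples, asr_samples = [], []
    for row in rows:
        base = [row[k] for k in INPUT_KEYS]
        for age, value in zip(UCS_AGES, row["ucs"]):
            if value > 0:  # assumption: 0.00 MPa marks a missing reading
                ucs_samples.append((base + [age], value))
        for age, value in zip(ASR_AGES, row["asr"]):
            asr_samples.append((base + [age], value))
    return ucs_samples, asr_samples


ucs, asr = to_long(WIDE_ROWS)
print(len(ucs), len(asr))  # 5 10
```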

References

1. Sun, J.; Wang, Y.; Liu, S.; Dehghani, A.; Xiang, X.; Wei, J.; Wang, X. Mechanical, chemical and hydrothermal activation for waste glass reinforced cement. Constr. Build. Mater. 2021, 301, 124361.
2. Singh, A.; Wang, Y.; Zhou, Y.; Sun, J.; Xu, X.; Li, Y.; Liu, Z.; Chen, J.; Wang, X. Utilization of antimony tailings in fiber-reinforced 3D printed concrete: A sustainable approach for construction materials. Constr. Build. Mater. 2023, 408, 133689.
3. Sun, J.; Ma, Y.; Li, J.; Zhang, J.; Ren, Z.; Wang, X. Machine learning-aided design and prediction of cementitious composites containing graphite and slag powder. J. Build. Eng. 2021, 43, 102544.
4. Sun, J.; Wang, X.; Zhang, J.; Xiao, F.; Sun, Y.; Ren, Z.; Zhang, G.; Liu, S.; Wang, Y. Multi-objective optimisation of a graphite-slag conductive composite applying a BAS-SVR based model. J. Build. Eng. 2021, 44, 103223.
5. Feng, W.; Wang, Y.; Sun, J.; Tang, Y.; Wu, D.; Jiang, Z.; Wang, J.; Wang, X. Prediction of thermo-mechanical properties of rubber-modified recycled aggregate concrete. Constr. Build. Mater. 2022, 318, 125970.
6. Sun, J.; Wang, J.; Zhu, Z.; He, R.; Peng, C.; Zhang, C.; Huang, J.; Wang, Y.; Wang, X. Mechanical performance prediction for sustainable high-strength concrete using bio-inspired neural network. Buildings 2022, 12, 65.
7. Zhang, J.; Sun, Y.; Li, G.; Wang, Y.; Sun, J.; Li, J. Machine-learning-assisted shear strength prediction of reinforced concrete beams with and without stirrups. Eng. Comput. 2022, 38, 1293–1307.
8. Sun, J.; Yue, L.; Xu, K.; He, R.; Yao, X.; Chen, M.; Cai, T.; Wang, X.; Wang, Y. Multi-objective optimisation for mortar containing activated waste glass powder. J. Mater. Res. Technol. 2022, 18, 1391–1411.
9. Sun, J.; Wang, Y.; Yao, X.; Ren, Z.; Zhang, G.; Zhang, C.; Chen, X.; Ma, W.; Wang, X. Machine-Learning-Aided Prediction of Flexural Strength and ASR Expansion for Waste Glass Cementitious Composite. Appl. Sci. 2021, 11, 6686.
10. Ren, Z.; Sun, J.; Zeng, X.; Chen, X.; Wang, Y.; Tang, W.; Wang, X. Research on the electrical conductivity and mechanical properties of copper slag multiphase nano-modified electrically conductive cementitious composite. Constr. Build. Mater. 2022, 339, 127650.
11. Zava, A.E.; Apebo, S.N.; Adeke, P.T. The optimum amount of waste glass aggregate that can substitute fine aggregate in concrete. Aksaray J. Sci. Eng. 2020, 4, 159–171.
12. Sun, R.; Wang, D.; Cao, H.; Wang, Y.; Lu, Z.; Xia, J. Ecological pervious concrete in revetment and restoration of coastal Wetlands: A review. Constr. Build. Mater. 2021, 303, 124590.
13. Figueira, R.; Sousa, R.; Coelho, L.; Azenha, M.; De Almeida, J.; Jorge, P.; Silva, C. Alkali-silica reaction in concrete: Mechanisms, mitigation and test methods. Constr. Build. Mater. 2019, 222, 903–931.
14. Adaway, M.; Wang, Y. Recycled glass as a partial replacement for fine aggregate in structural concrete–Effects on compressive strength. Electron. J. Struct. Eng. 2015, 14, 116–122.
15. He, L.; Yin, J.; Gao, X. Additive Manufacturing of Bioactive Glass and Its Polymer Composites as Bone Tissue Engineering Scaffolds: A Review. Bioengineering 2023, 10, 672.
16. Wang, Q.; Yi, Y.; Ma, G.; Luo, H. Hybrid effects of steel fibers, basalt fibers and calcium sulfate on mechanical performance of PVA-ECC containing high-volume fly ash. Cem. Concr. Compos. 2019, 97, 357–368.
17. Açikgenç, M.; Ulaş, M.; Alyamaç, K.E. Using an artificial neural network to predict mix compositions of steel fiber-reinforced concrete. Arab. J. Sci. Eng. 2015, 40, 407–419.
18. Sun, J.; Zhang, J.; Gu, Y.; Huang, Y.; Sun, Y.; Ma, G. Prediction of permeability and unconfined compressive strength of pervious concrete using evolved support vector regression. Constr. Build. Mater. 2019, 207, 440–449.
19. Shariati, M.; Armaghani, D.J.; Khandelwal, M.; Zhou, J.; Khorami, M. Assessment of longstanding effects of fly ash and silica fume on the compressive strength of concrete using extreme learning machine and artificial neural network. J. Adv. Eng. Comput. 2021, 5, 50–74.
20. Feng, D.-C.; Liu, Z.-T.; Wang, X.-D.; Chen, Y.; Chang, J.-Q.; Wei, D.-F.; Jiang, Z.-M. Machine learning-based compressive strength prediction for concrete: An adaptive boosting approach. Constr. Build. Mater. 2020, 230, 117000.
21. Ling, T.-C.; Poon, C.-S. Properties of architectural mortar prepared with recycled glass with different particle sizes. Mater. Des. 2011, 32, 2675–2684.
22. Niu, Y.; Cheng, C.; Luo, C.; Yang, P.; Guo, W.; Liu, Q. Study on rheological property, volumetric deformation, mechanical strength and microstructure of mortar incorporated with waste glass powder (WGP). Constr. Build. Mater. 2023, 408, 133420.
23. Li, Q.; Qiao, H.; Li, A.; Li, G. Performance of waste glass powder as a pozzolanic material in blended cement mortar. Constr. Build. Mater. 2022, 324, 126531.
24. ASTM C305; Standard Practice for Mechanical Mixing of Hydraulic Cement Pastes and Mortars of Plastic Consistency. American Society for Testing and Materials (ASTM): West Conshohocken, PA, USA, 1995.
25. Fall, M.; Célestin, J.; Pokharel, M.; Touré, M. A contribution to understanding the effects of curing temperature on the mechanical properties of mine cemented tailings backfill. Eng. Geol. 2010, 114, 397–413.
26. Lam, C.S.; Poon, C.S.; Chan, D. Enhancing the performance of pre-cast concrete blocks by incorporating waste glass–ASR consideration. Cem. Concr. Compos. 2007, 29, 616–625.
27. ASTM C1260; Standard Test Method for Potential Alkali Reactivity of Aggregates. American Society for Testing and Materials (ASTM): West Conshohocken, PA, USA, 2007.
28. Multon, S.; Sellier, A.; Cyr, M. Chemo–mechanical modeling for prediction of alkali silica reaction (ASR) expansion. Cem. Concr. Res. 2009, 39, 490–500.
29. Chen, Y.; Jia, Z.; Mercola, D.; Xie, X. A gradient boosting algorithm for survival analysis via direct optimization of concordance index. Comput. Math. Methods Med. 2013, 2013, 873595.
30. Zharmagambetov, A.; Gabidolla, M.; Carreira-Perpiñán, M.Á. Improved boosted regression forests through non-greedy tree optimization. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.
31. Guelman, L. Gradient boosting trees for auto insurance loss cost modeling and prediction. Expert Syst. Appl. 2012, 39, 3659–3667.
32. Rokach, L. Decision forest: Twenty years of research. Inf. Fusion 2016, 27, 111–125.
33. Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.
34. Huang, Y.; Liu, Y.; Li, C.; Wang, C. GBRTVis: Online analysis of gradient boosting regression tree. J. Vis. 2019, 22, 125–140.
35. Liu, P.; Fu, B.; Yang, S.X.; Deng, L.; Zhong, X.; Zheng, H. Optimizing survival analysis of XGBoost for ties to predict disease progression of breast cancer. IEEE Trans. Biomed. Eng. 2020, 68, 148–160.
36. Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.
37. Gayathri, R.; Rani, S.U.; Čepová, L.; Rajesh, M.; Kalita, K. A comparative analysis of machine learning models in prediction of mortar compressive strength. Processes 2022, 10, 1387.
38. Rasheed, Z.; Aravamudan, A.; Sefidmazgi, A.G.; Anagnostopoulos, G.C.; Nikolopoulos, E.I. Advancing flood warning procedures in ungauged basins with machine learning. J. Hydrol. 2022, 609, 127736.
39. Ul Hassan, C.A.; Iqbal, J.; Hussain, S.; AlSalman, H.; Mosleh, M.A.; Sajid Ullah, S. A computational intelligence approach for predicting medical insurance cost. Math. Probl. Eng. 2021, 2021, 1162553.
40. Barnston, A.G. Correspondence among the correlation, RMSE, and Heidke forecast verification measures; refinement of the Heidke score. Weather Forecast. 1992, 7, 699–709.
41. Sutherland, J.; Peet, A.; Soulsby, R. Evaluating the performance of morphological models. Coast. Eng. 2004, 51, 917–939.
42. Zhao, Q.; Hastie, T. Causal interpretations of black-box models. J. Bus. Econ. Stat. 2021, 39, 272–281.
43. Gigerenzer, G.; Brighton, H. Homo heuristicus: Why biased minds make better inferences. Top. Cogn. Sci. 2009, 1, 107–143.
44. Van den Broeck, G.; Lykov, A.; Schleich, M.; Suciu, D. On the tractability of SHAP explanations. J. Artif. Intell. Res. 2022, 74, 851–886.
45. Feyzi, F. CGT-FL: Using cooperative game theory to effective fault localization in presence of coincidental correctness. Empir. Softw. Eng. 2020, 25, 3873–3927.
46. Algaba, E.; Fragnelli, V.; Sánchez-Soriano, J. Handbook of the Shapley Value; CRC Press: Boca Raton, FL, USA, 2019.
47. Mitrentsis, G.; Lens, H. An interpretable probabilistic model for short-term solar power forecasting using natural gradient boosting. Appl. Energy 2022, 309, 118473.
48. Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.-I. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2020, 2, 56–67.
49. Bulla, C.; Birje, M.N. Improved data-driven root cause analysis in fog computing environment. J. Reliab. Intell. Environ. 2022, 8, 359–377.
50. Yosinski, J.; Clune, J.; Nguyen, A.; Fuchs, T.; Lipson, H. Understanding neural networks through deep visualization. arXiv 2015, arXiv:1506.06579.
51. Jas, K.; Dodagoudar, G. Explainable machine learning model for liquefaction potential assessment of soils using XGBoost-SHAP. Soil Dyn. Earthq. Eng. 2023, 165, 107662.
52. Greenwell, B.M.; Boehmke, B.C.; Gray, B. Variable Importance Plots-An Introduction to the vip Package. R J. 2020, 12, 343.
53. Dickinson, Q.; Meyer, J.G. Positional SHAP (PoSHAP) for Interpretation of machine learning models trained from biological sequences. PLoS Comput. Biol. 2022, 18, e1009736.
54. Fetsch, C.R.; DeAngelis, G.C.; Angelaki, D.E. Visual–vestibular cue integration for heading perception: Applications of optimal cue integration theory. Eur. J. Neurosci. 2010, 31, 1721–1729.
55. Abdullah, A.; Jaafar, M.; Taufiq-Yap, Y.; Alhozaimy, A.; Al-Negheimish, A.; Noorzaei, J. The effect of various chemical activators on pozzolanic reactivity: A review. Sci. Res. Essays 2012, 7, 719–729.
56. Liu, C.; Huang, X.; Wu, Y.-Y.; Deng, X.; Zheng, Z. The effect of graphene oxide on the mechanical properties, impermeability and corrosion resistance of cement mortar containing mineral admixtures. Constr. Build. Mater. 2021, 288, 123059.
57. Huseien, G.F.; Shah, K.W.; Sam, A.R.M. Sustainability of nanomaterials based self-healing concrete: An all-inclusive insight. J. Build. Eng. 2019, 23, 155–171.
58. Atiş, C.D.; Bilim, C.; Çelik, Ö.; Karahan, O. Influence of activator on the strength and drying shrinkage of alkali-activated slag mortar. Constr. Build. Mater. 2009, 23, 548–555.
59. Aly, M.; Hashmi, M.; Olabi, A.; Messeiry, M.; Abadir, E.; Hussain, A. Effect of colloidal nano-silica on the mechanical and physical behaviour of waste-glass cement mortar. Mater. Des. 2012, 33, 127–135.
60. Ullah, I.; Liu, K.; Yamamoto, T.; Zahid, M.; Jamal, A. Prediction of electric vehicle charging duration time using ensemble machine learning algorithm and Shapley additive explanations. Int. J. Energy Res. 2022, 46, 15211–15230.
61. Lundberg, S.M.; Erion, G.G.; Lee, S.-I. Consistent individualized feature attribution for tree ensembles. arXiv 2018, arXiv:1802.03888.
62. Litovsky, R.Y.; Parkinson, A.; Arcaroli, J. Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear Hear. 2009, 30, 419.
63. Nie, B.; Xue, J.; Gupta, S.; Patel, T.; Engelmann, C.; Smirni, E.; Tiwari, D. Machine learning models for GPU error prediction in a large scale HPC system. In Proceedings of the 2018 48th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Luxembourg, 25–28 June 2018; pp. 95–106.
64. Bove, C.; Aigrain, J.; Lesot, M.-J.; Tijus, C.; Detyniecki, M. Contextualization and exploration of local feature importance explanations to improve understanding and satisfaction of non-expert users. In Proceedings of the 27th International Conference on Intelligent User Interfaces, Helsinki, Finland, 22–25 March 2022; pp. 807–819.
65. Liu, Y.; Liu, Z.; Luo, X.; Zhao, H. Diagnosis of Parkinson’s disease based on SHAP value feature selection. Biocybern. Biomed. Eng. 2022, 42, 856–869.
66. Caruana, R.; Lou, Y.; Gehrke, J.; Koch, P.; Sturm, M.; Elhadad, N. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day readmission. In Proceedings of the 21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 10–13 August 2015; pp. 1721–1730.
67. Gomi, H.; Osu, R. Task-dependent viscoelasticity of human multijoint arm and its spatial characteristics for interaction with environments. J. Neurosci. 1998, 18, 8965–8978.
68. Leibold, M.A.; Holyoak, M.; Mouquet, N.; Amarasekare, P.; Chase, J.M.; Hoopes, M.F.; Holt, R.D.; Shurin, J.B.; Law, R.; Tilman, D. The metacommunity concept: A framework for multi-scale community ecology. Ecol. Lett. 2004, 7, 601–613.

| Chemical Composition | WGP | Cement |
|---|---|---|
| | 74.02% | 20.10% |
| | 1.40% | 4.60% |
| | 0.19% | 2.80% |
| | 11.25% | 63.40% |
| | 3.34% | 1.30% |
| | 0.33% | 2.70% |
| | 9.03% | 0.60% |
| | 0.29% | - |
| True density | 2.3–2.5 t/m3 | 3.0–3.2 t/m3 |
| Total chloride | - | 0.02% |

| Variables | Number of Levels | Magnitude |
|---|---|---|
| WGP size (μm) | 2 | 75, 300 |
| WGP replacement ratio (%) | 4 | 0, 10, 20, 30 |
| Alkali ratio (%) | 4 | 0, 2, 4, 6 |
| Sodium sulfate ratio (%) | 4 | 0, 2, 4, 6 |
| Curing duration (days) | 3 | 7, 14, 28 |
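The levels in the table above imply a full factorial design for the activated mixes. That gives 2 WGP sizes × 3 nonzero replacement ratios × 4 Na2SO4 levels × 4 alkali levels = 96 mixes. Adding one control without WGP gives the 97 mixes of Appendix A. Curing duration is a test age, not a mix variable.

The sketch below enumerates the design in the order that the Appendix A IDs appear to follow: size, then WGP ratio, then Na2SO4, then alkali. That ordering and the helper name `design_matrix` are inferences, not a procedure stated by the authors.

```python
from itertools import product

WGP_SIZES_UM = (75, 300)
WGP_RATIOS = (0.10, 0.20, 0.30)  # the 0% level appears only in the control mix
NA2SO4_RATIOS = (0.0, 0.02, 0.04, 0.06)
ALKALI_RATIOS = (0.0, 0.02, 0.04, 0.06)


def design_matrix():
    """Return (mix_id, size_um, wgp_ratio, na2so4_ratio, alkali_ratio) tuples."""
    rows = [(1, None, 0.0, 0.0, 0.0)]  # control: no WGP and no activator
    combos = product(WGP_SIZES_UM, WGP_RATIOS, NA2SO4_RATIOS, ALKALI_RATIOS)
    for mix_id, combo in enumerate(combos, start=2):
        rows.append((mix_id, *combo))
    return rows


design = design_matrix()
print(len(design))  # 97
```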

| Model\Hyperparameter | n_estimators (before/after) | max_depth (before/after) |
|---|---|---|
| Random Forest | 100/345 | None (no limit)/10 |
| Gradient Boosting Regressor | 100/470 | 3/4 |
| Hist Gradient Boosting Regressor | 100 (max_iter)/498 | None (no limit)/4 |
| XGBoost | 100/119 | None (no limit)/4 |

| Metric | Random Forest | Gradient Boosting Regressor | Hist Gradient Boosting Regressor | XGBoost |
|---|---|---|---|---|
| RMSE | 2.4168 | 1.3085 | 1.7490 | 1.5794 |
| MSE | 5.8411 | 1.7121 | 3.0590 | 2.4946 |
| MAE | 1.9743 | 1.0320 | 1.4090 | 1.2695 |
| R² | 0.8176 | 0.9465 | 0.9045 | 0.9221 |
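The four metrics in the table follow their standard definitions, with RMSE equal to the square root of MSE. The reported values are consistent with this; for example, 1.3085² ≈ 1.7121 for the gradient boosting regressor.

A plain-Python sketch of the definitions is given below, followed by a consistency check on the tabulated UCS values. The function name `regression_metrics` is illustrative, and the sketch is not the authors' evaluation code.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R^2 for two equal-length sequences of numbers."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)          # residual sum of squares
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # must be > 0 for R^2
    mse = ss_res / n
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": sum(abs(r) for r in residuals) / n,
        "R2": 1.0 - ss_res / ss_tot,
    }


# Reported UCS values (RMSE, MSE) from the table above; RMSE^2 should match MSE
# up to the four-decimal rounding.
REPORTED_UCS = {
    "Random Forest": (2.4168, 5.8411),
    "Gradient Boosting Regressor": (1.3085, 1.7121),
    "Hist Gradient Boosting Regressor": (1.7490, 3.0590),
    "XGBoost": (1.5794, 2.4946),
}
for model, (rmse, mse) in REPORTED_UCS.items():
    print(f"{model}: RMSE^2 = {rmse ** 2:.4f}, reported MSE = {mse:.4f}")
```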

| Model\Hyperparameter | n_estimators (before/after) | max_depth (before/after) |
|---|---|---|
| Random Forest | 100/116 | None (no limit)/13 |
| Gradient Boosting Regressor | 100/496 | 3/4 |
| Hist Gradient Boosting Regressor | 100 (max_iter)/646 | None (no limit)/8 |
| XGBoost | 100/89 | None (no limit)/8 |

| Metric | Random Forest | Gradient Boosting Regressor | Hist Gradient Boosting Regressor | XGBoost |
|---|---|---|---|---|
| RMSE | 0.0166 | 0.0127 | 0.0136 | 0.0101 |
| MSE | 0.0003 | 0.0002 | 0.0002 | 0.0001 |
| MAE | 0.0134 | 0.0096 | 0.0109 | 0.0080 |
| R² | 0.7610 | 0.8602 | 0.8396 | 0.9110 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, F.; Zhang, X.; Zhang, Y.; Wang, D.; Tian, H.; Xu, J.; Luo, W.; Zhang, Y. AI-Based Variable Importance Analysis of Mechanical and ASR Properties in Activated Waste Glass Mortar. Buildings 2025, 15, 1866. https://doi.org/10.3390/buildings15111866
