Abstract

In intelligent construction, efficient surface defect detection is crucial for structural safety and maintenance quality. Traditional methods face three challenges in complex scenarios: difficulty in accurately locating defect features because of multi-scale textures and background interference, missed detections of fine cracks because of their tiny size and low contrast, and insufficient generalization to irregular defects because of complex geometric deformation. To address these issues, an improved version of the You Only Look Once (YOLOv8) algorithm is proposed for building surface defect detection. The dataset used in this study contains six common building surface defects, and the images were captured in diverse scenarios with different lighting conditions, building structures, and material ages. Methodologically, a normalization-based attention module (NAM) is introduced first. This module suppresses irrelevant features and redundant information and enhances the salient feature expression of cracks, delamination, and other defects, improving feature utilization. Second, to address the bottleneck in fine crack detection, an explicit vision center (EVC) feature fusion module is introduced. It integrates local details with global context, improving the detection of small, low-contrast targets. Finally, the backbone network integrates deformable convolution net v2 (DCNV2) to capture the contour deformation features of targets such as mesh cracks and spalling. Experimental results indicate that the improved model outperforms YOLOv8, with mAP50 higher by 3.9 percentage points and mAP50-95 higher by 4.2 percentage points. It runs at 156 FPS, which is suitable for real-time inspection in smart construction scenarios. The model improves defect detection accuracy and robustness in complex scenarios and offers a reliable solution for accurate multi-type defect detection on building surfaces.

1. Introduction

Building surfaces are crucial in daily life, providing load bearing, sound insulation, fire protection, and other important functions. Owing to factors such as service time and construction quality, building surface structures inevitably develop cracks caused by creep, shrinkage, and loading [1]. Although small, microcracks are often early signs of structural failure; if not detected in time, they may grow into larger cracks or even trigger structural collapse. Therefore, the accurate localization and detection of surface microcracks is essential to ensuring the safety and durability of building structures. Microcrack detection also has a wide range of applications in industrial fields such as aerospace, machinery manufacturing, and electronic equipment, which require high structural integrity and reliability and where microcracks may lead to equipment failure or performance degradation. In addition, the increasing influence of natural disasters and man-made damage has led to varying degrees of crack damage on building surfaces [2]. Detecting and repairing cracks on building surfaces promptly is crucial to avoiding potential risks. However, existing detection methods have many limitations and cannot fully meet the needs of practical applications.

Traditional methods: The traditional method of detecting cracks on building surfaces mainly relies on manual inspection. This method is not only time-consuming and labor-intensive, but also prone to inaccurate detection results due to human factors [3]. The automation of crack detection is urgently needed to reduce the workload of inspectors and increase the efficiency of the inspection infrastructure. In the construction sector, computer vision technology is revolutionizing the detection of wall defects with its efficiency and automation. Building safety is strengthened through this technology, and maintenance efficiency is also greatly increased [4]. Conventional photoelectric detection approaches involve techniques such as eddy current testing, magnetic flux leakage, infrared thermography, and ultrasonic pulse methods [5,6,7,8]. Despite their technical merits, these approaches have not achieved widespread industrial deployment, primarily due to prohibitive implementation costs.

Modern methods: In recent years, the emergence of deep learning techniques has brought new hope for wall crack detection. The crack detection technology for wall surfaces has evolved from traditional digital image analysis methods to the wide application of deep learning methods today [9]. Object detection algorithms using deep learning fall into two main categories [10]: traditional methods relying on CNNs and newer approaches utilizing transformer architectures. Within the classical CNN framework, the R-CNN series demonstrates progressive evolution—R-CNN pioneered CNN-based feature extraction [11], Fast R-CNN enhanced feature sharing through ROI pooling [12], and Mask R-CNN extended functionality to instance segmentation [13,14]. The SSD and YOLO series achieve efficient inference via single-stage detection architectures [15,16,17]. Significant progress has been made in improving their detection accuracy. However, these methods still face some challenges in practical applications. Transformer-based approaches are represented by DETR (Detection Transformer), with its enhanced variant RT-DETR significantly improving real-time detection performance through a hybrid encoder design [18]. However, its model complexity and computational resource requirements are also increased significantly, limiting its widespread deployment in practical applications.

The YOLO algorithm, recognized as a leading single-stage detection technique [19,20], has received considerable attention since its development. Its effectiveness in detecting objects has enhanced its importance in wall defect detection. Deep learning-based object detection techniques have seen major breakthroughs in structural crack detection in recent times. The proposed R-YOLO v5 technique offers a fresh approach to detecting bridge cracks. Its accuracy is greatly enhanced by using improved attention strategies and better loss function design [21]. Further advancing the field, Ye et al. [22] introduced the YOLOv7 network, which includes three innovative self-developed modules tailored for crack detection. Their method showed excellent performance in detecting cracks of different sizes and maintained strong robustness when handling images affected by various noise levels and types [23,24,25]. The proposed system showed particular strength in challenging scenarios where traditional methods often fail, such as in the presence of surface stains, shadows, or complex background patterns. Recent research has made notable strides in detecting structural cracks and tackling key issues linked to precision, computational efficiency, and robustness in real-world applications [26,27,28].

State-of-the-art models such as YOLOv11n and YOLOv12n still show significant limitations in adapting to complex building surface defect detection tasks. With a similar number of model parameters, the latest models such as YOLOv12n are less accurate and slower than YOLOv8. In contrast, YOLOv8, with its modular design and lightweight Bottleneck structure, achieves better real-time performance and hardware adaptability while maintaining high accuracy, and thus better fits the demanding resource efficiency needs of industrial inspection scenarios. The integration of attention mechanisms, network pruning, and specialized modules has opened up new possibilities for automated structural health monitoring, potentially revolutionizing infrastructure maintenance practices [28,29,30]. In machine learning applications for detecting defects on building surfaces, challenges such as low detection precision and slow processing persist, and there is significant potential for enhancing the effectiveness of various detection models [31,32,33]. Considering these challenges, YOLOv8 offers high-precision detection and strong real-time performance with comparatively low model complexity, making it well suited to identifying surface defects on buildings. Thus, YOLOv8 is adopted as the foundational architecture in this research.

This study presents a novel algorithm, YOLO-NED, designed to identify flaws on building surfaces. Although past algorithms have solved part of the problem to a certain extent, they often have limitations and are unable to fully cope with the diversity of wall defects. In contrast, our solution can comprehensively cover the detection of building surface defects including, but not limited to, problems such as exposed reinforcement, delamination, efflorescence, cracks, spalling, and rust stains. Compared with traditional methods, YOLO-NED improves its attention mechanism, adds feature fusion, and optimizes its network structure to significantly improve the detection of complex backgrounds and small targets. Overall, the main contributions of this paper are as follows:

- The NAM is incorporated into the base model to prioritize prominent defects on the building surface while diminishing less important details. This optimization significantly enhances the algorithm’s precision and ensures that the model fully leverages crucial feature data.

- The use of an EVC feature fusion strategy in the backbone part of the network, which aims to deeply explore local and global features, provides comprehensive and accurate feature recognition and greatly improves our ability to detect subtle targets and small objects, making our model more sensitive in capturing building defects.

- DCNV2 replaces the standard convolution in the Bottleneck of the C2f module; this modification enhances feature extraction capabilities and strengthens the model’s robustness against anomalies, making the model more adept at identifying irregularly shaped defects.

The structure of this paper is organized as follows: Section 2, Materials and Methods, describes the experimental dataset and the model structure of YOLOv8 in detail; Section 3, Improvements to YOLOv8, describes the three core innovations of YOLO-NED, introducing the information of the NAM, EVC, and DCNV2 modules, respectively; Section 4, Experiments, describes the experimental environment and evaluation indexes; Section 5, Analysis and Results of YOLO-NED, demonstrates the superiority of YOLO-NED through ablation experiments and comparative experiments; Section 6, Discussion, and Section 7, Conclusions, summarize the practical value of the model and point out directions for future optimization. All abbreviations in this article are detailed in Table A1.

2. Materials and Methods

2.1. Data

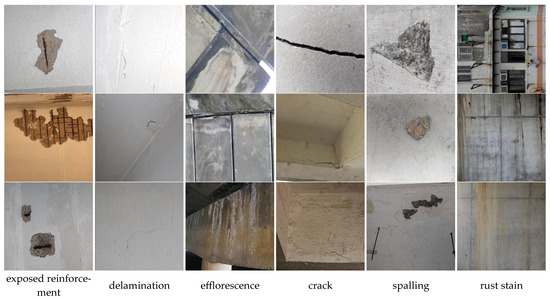

The dataset for this study was mainly obtained from the Internet and the related literature and is publicly available on Hugging Face: https://huggingface.co/datasets/xueaidezhouzhou/buildingsurfacedefectdetection/tree/main, accessed on 21 May 2025. The dataset covered six prevalent types of building surface defects: exposed reinforcement, delamination, efflorescence, cracks, spalling, and rust stain. It contained 7354 images in total. Each image was accompanied by annotations that provided precise location and category information for the defects. An image could contain multiple defect types at the same time and could therefore carry multiple labels, so the per-class image counts below sum to more than the total number of images. Specifically, the dataset comprised 3309 images of exposed reinforcement with a total of 8019 labels, 746 images of delamination with 1005 labels, 1848 images of efflorescence with 4239 labels, 3933 images of cracks with 10,983 labels, 2568 images of spalling with 7627 labels, and 2362 images of rust stain with 7211 labels.

The images in this dataset were captured under diverse lighting conditions, covering both indoor and outdoor scenes, including natural light, artificial light sources, and other lighting environments. This variety of acquisition environments helped the model better adapt to various environments in practical applications, thus significantly improving its robustness. Images were captured from multiple construction projects covering different types of building structures, including residential buildings, commercial buildings, and industrial facilities. The age of these buildings ranged from new constructions to more than 30 years old, and the photos covered a wide range of building materials such as concrete, metal, and composites. This diverse dataset was designed to ensure that the model could accommodate different types of building surface and defect characteristics. In addition, images of building surfaces with different coating types were included in the dataset. The coating types included common paints, waterproof coatings, and anti-corrosion coatings. Coatings not only affect the brightness and contrast of an image, but they can also mask or obscure potential defective features. For example, waterproof coatings may reflect light, resulting in highlighted areas in an image, which can interfere with the detection of defects such as cracks. These coatings also varied in age and maintenance status, further adding to the complexity and diversity of the dataset.

All the images in the dataset were color images with a uniform resolution of 640 × 640 pixels, stored in JPG format. The annotations were provided as text files. However, the dataset posed several challenges. First, the appearance and characteristics of different defect types differed significantly, which made them difficult for the model to recognize. Second, the complex background patterns in some images interfered with defect detection, which further increased the detection difficulty. Third, the varying lighting conditions could affect the detection performance of the model and lead to less accurate results. Finally, certain defects occupied small areas in the images, so the model needed to be precise in detection to avoid missing these tiny defects.

To ensure robust experimentation and evaluation, the dataset was randomly divided into training, validation, and test sets in an 8:1:1 ratio. Specifically, 5882 images were assigned to the training set, and 736 images each were assigned to the validation set and the test set. Random division helped to avoid bias caused by the order of the data and ensured that the performance of the model on the different subsets was more representative and reliable. An example of each defect category in the dataset is shown in Figure 1.
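The random 8:1:1 division described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' script: the function name `split_dataset`, the seed, and the placeholder image identifiers are hypothetical, and the rounding rule (validation and test sizes rounded up, training set taking the remainder) is an assumption chosen because it reproduces the reported 5882/736/736 counts.

```python
import math
import random

def split_dataset(items, val_frac=0.1, test_frac=0.1, seed=0):
    """Shuffle items and split them into train/val/test lists.

    Assumption: validation and test sizes are rounded up and the
    training set takes the remainder (the paper does not state its rule).
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed for a repeatable split
    n_val = math.ceil(len(items) * val_frac)
    n_test = math.ceil(len(items) * test_frac)
    val = items[:n_val]
    test = items[n_val:n_val + n_test]
    train = items[n_val + n_test:]
    return train, val, test

# Hypothetical identifiers standing in for the 7354 dataset images.
image_ids = [f"img_{i:05d}" for i in range(7354)]
train, val, test = split_dataset(image_ids)
print(len(train), len(val), len(test))  # 5882 736 736
```

Fixing the seed keeps the three subsets identical across runs, which matters when several model variants are compared on the same test set.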

Figure 1.

Examples of each defect type in the dataset in this paper.

2.2. Related Works

In the field of construction defect detection, deep learning techniques have significantly enhanced the performance of computer vision. However, some current studies are limited to focusing only on the detection of a single defect type. Other studies, aimed at detecting multiple defect types, exhibit problems of omissions and misjudgments in practical applications, which largely restrict their application scope and reliability in complex real-world engineering scenarios.

He et al. [34] and Wu et al. [35] enhanced the YOLOv4 model to improve the accuracy of crack detection. Zhang et al. [36], Xing et al. [37], Wang et al. [38], and Qi et al. [39] have all contributed to the enhancement of crack detection effectiveness through improvements to the YOLOv5 and YOLOv7 models. The YOLOv7 model has also been widely applied in concrete crack detection. Ni et al. [40] further enhanced model performance by introducing an improved network architecture. Detecting architectural surface defects in complex real-world environments is challenging due to the varying crack sizes and inconspicuous features, which collectively limit model effectiveness. Dong et al. [41] noted that YOLOv8 tends to produce false alarms and miss detections when applied to concrete surface crack detection. Despite notable advancements, the majority of existing studies have primarily focused on detecting single defect types and more conspicuous defects, such as cracks. Given the necessity of detecting multiple defect types across varied environmental conditions, this limitation may hinder the broader application of these models in practice.

In practice, the inconspicuous characteristics of building surface defects and the large number of small target defects pose significant challenges. Section 3 addresses these issues.

2.3. YOLOv8 Algorithm

Compared to conventional detection algorithms, YOLO reframes the detection task as a regression problem solved by a single unified neural network. The algorithm achieves rapid target detection by splitting the image into multiple grid cells and predicting the target bounding boxes. Figure 2 illustrates the network architecture of YOLOv8. Its backbone network is made up of C2f and SPPF modules and integrates the CSP idea to enhance feature extraction efficiency. In the neck, YOLOv8 draws on the ELAN structure from YOLOv7 and replaces the C3 module of YOLOv5 with the C2f structure to achieve smoother gradient propagation. The C2f structure not only enhances the feature fusion performance of the convolutional neural network but also increases the inference speed and further reduces the model’s complexity [42]. YOLOv8 also retains the SPPF module from the YOLOv5 architecture. This is a spatial pyramid pooling layer that extends the receptive field and facilitates the fusion of local features with global features, thus enriching the feature information [43]. Compared with YOLOv5, YOLOv8 makes significant improvements in the head section by adopting the mainstream decoupled head structure, which separates the classification and bounding-box regression branches to enhance the model’s adaptability and performance [44].

Figure 2.

Diagram of YOLOv8 algorithm’s network architecture.

The Bottleneck module is one of the core building blocks of YOLOv8 and is responsible for extracting and enhancing features. It consists of two convolutional blocks. The first reduces the input channel count c1 to an intermediate channel count c, typically a fraction of the output channel count c2. The second expands the intermediate channel count c to the output channel count c2, optionally using group convolution. When the input channel count c1 equals the output channel count c2 and the configuration parameter shortcut is set to true, the module enables a residual connection: the input features are added element-wise to the output features, creating a skip connection that improves the information flow and learning capability of the network. The main reason for using the Bottleneck module is to reduce the network’s parameter count, which speeds up both training and inference while keeping feature extraction efficient. In this way, the Bottleneck module keeps the model lightweight while maintaining network performance.
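To make the parameter saving concrete, the following sketch counts convolution weights for a two-convolution Bottleneck. It is an illustration under stated assumptions rather than the YOLOv8 source: the function names, the 3 × 3 kernel size, and the expansion ratio `e` (with c = int(c2 * e)) are hypothetical choices, and bias and batch normalization parameters are ignored.

```python
def conv_params(c_in, c_out, k=3, groups=1):
    """Weight count of a k x k convolution (bias and BN parameters ignored)."""
    return (c_in // groups) * c_out * k * k

def bottleneck_params(c1, c2, e=0.5, k=3, groups=1):
    """Weights of a two-convolution Bottleneck: c1 -> c -> c2, c = int(c2 * e)."""
    c = int(c2 * e)  # intermediate channel count (assumed ratio e)
    return conv_params(c1, c, k) + conv_params(c, c2, k, groups)

def uses_residual(c1, c2, shortcut=True):
    """The skip connection needs matching input and output channel counts."""
    return shortcut and c1 == c2

narrow = bottleneck_params(64, 64, e=0.5)  # 64 -> 32 -> 64
wide = bottleneck_params(64, 64, e=1.0)    # 64 -> 64 -> 64
print(narrow, wide, uses_residual(64, 64), uses_residual(64, 128))
# 36864 73728 True False
```

Halving the intermediate width halves the weight count in this example, and the residual addition is only enabled when c1 equals c2, since the two tensors must have the same shape to be added.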

3. Improvements to YOLOv8

Figure 3 illustrates the structure of the YOLO-NED model. To improve both the accuracy and the speed of target detection, three strategies are integrated into this framework. First, the NAM attention mechanism enables accurate localization by capturing significant defects on the building surface and suppressing irrelevant details. Second, EVC feature fusion improves the recognition of fine targets and small objects, as well as the capacity to detect intricate features on the building surface. Finally, the DCNV2 module improves the model’s ability to extract features and handle spatial deformations, as well as its generalization performance.

Figure 3.

Network structure diagram of YOLO-NED.

3.1. The NAM

We introduce the NAM, a lightweight and efficient attention module, which is an innovative extension of a convolutional block attention module (CBAM) based on normalization techniques. CBAM is an efficient hybrid attention mechanism that significantly improves defect recognition accuracy through dual-path feature correlation detection. The dual-path design takes into account the global context and local details to solve the problem of distinguishing small targets from complex backgrounds. The channel attention path is conducive to the suppression of irrelevant background data, and the spatial attention path highlights the key spatial location of defects to effectively locate the geometric center of irregular defects.

The NAM focuses on the key features in an image and measures feature saliency by redesigning the channel and spatial attention submodules [45]. It uses the normalization scale factors as a measure of the importance of each channel and pixel, which suppresses non-essential channel and pixel details in the image. The NAM not only improves the recognition of salient features in images but also makes use of the contribution of the weights themselves, a factor that earlier attention mechanisms largely overlooked. By distributing weights precisely, it enables the model to prioritize essential details within an image, enhancing the speed and precision of feature extraction.

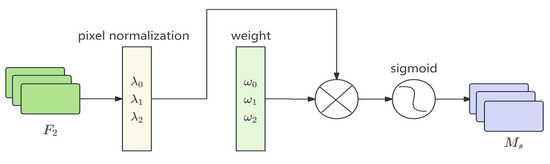

Figure 4 illustrates the design of the NAM channel attention submodule. This module enables the model to determine “what to focus on”, aligning with the classification aspect of detecting building surface defects. With the input feature map $F_1$, the module first obtains the scale factors $\gamma_1, \gamma_2, \gamma_3, \ldots, \gamma_C$ of the channels of $F_1$ from batch normalization (BN), where $C$ is the number of channels; then, the weight of each channel's scale factor is calculated as follows:

$$W_{\gamma_i} = \frac{\gamma_i}{\sum_{j=1}^{C} \gamma_j}$$

Figure 4.

NAM for channel attention.

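Each channel’s weight value is used as a penalty term for the original feature map $F_1$, and the resulting channel weight coefficients $M_c$ are generated through the sigmoid activation function:

$$M_c = \mathrm{sigmoid}\left(W_\gamma\left(\mathrm{BN}(F_1)\right)\right)$$

where $W_\gamma$ denotes the channel weights.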
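The channel attention computation can be illustrated with a small pure-Python sketch. It assumes that the scale factors are the learnable γ parameters of batch normalization, that each weight is a scale factor divided by the sum of all scale factors, and that the sigmoid gate rescales the original feature map. The function names are hypothetical, absolute values are taken so that the weights stay non-negative, and normalization statistics are computed per channel over a single feature map for simplicity.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def channel_weights(gammas):
    """Normalize BN scale factors: W_i = |gamma_i| / sum_j |gamma_j|."""
    total = sum(abs(g) for g in gammas)
    return [abs(g) / total for g in gammas]

def nam_channel_attention(feature, gammas, betas, eps=1e-5):
    """feature: list of channels, each a flat list of pixel values."""
    weights = channel_weights(gammas)
    out = []
    for chan, g, b, w in zip(feature, gammas, betas, weights):
        mean = sum(chan) / len(chan)
        var = sum((v - mean) ** 2 for v in chan) / len(chan)
        # Batch-normalized response of this channel.
        bn = [g * (v - mean) / math.sqrt(var + eps) + b for v in chan]
        # Weighted BN response -> sigmoid gate -> rescale the original feature.
        out.append([v * sigmoid(w * z) for v, z in zip(chan, bn)])
    return out

# Two toy channels; the first has the larger scale factor.
feature = [[1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 1.0, 1.0]]
gammas, betas = [3.0, 1.0], [0.0, 0.0]
print(channel_weights(gammas))  # [0.75, 0.25]
out = nam_channel_attention(feature, gammas, betas)
```

A channel with a larger scale factor receives a larger weight, so its gate responds more strongly to its normalized activations, while channels with small scale factors are gated close to a constant 0.5.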

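Figure 5 illustrates the NAM spatial attention submodule. This module helps the model learn “where to look”, addressing the localization challenge in building surface defect detection. For the input feature map $F_2$, the module first computes the scale factors $\lambda_1, \lambda_2, \lambda_3, \ldots, \lambda_{HW}$ for each pixel using pixel normalization ($\mathrm{BN}_s$), where $HW$ is the number of pixels. Next, it determines the weight of the scale factor for each pixel and the resulting spatial attention $M_s$ as follows:

$$W_{\lambda_i} = \frac{\lambda_i}{\sum_{j=1}^{HW} \lambda_j}, \qquad M_s = \mathrm{sigmoid}\left(W_\lambda\left(\mathrm{BN}_s(F_2)\right)\right)$$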

Figure 5.

Spatial attention submodule of NAM.

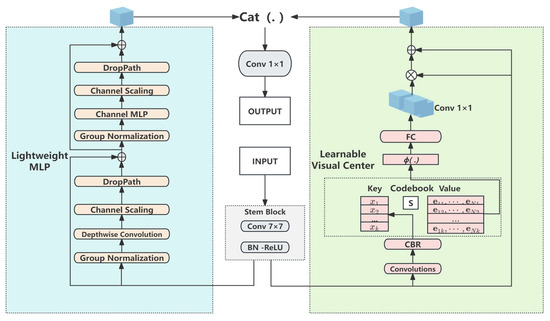

3.2. The EVC Module

The centralized feature pyramid network (CFPN) improves feature detection performance by providing an explicit visual center (EVC) that concentrates on a small number of key points and captures long-range dependencies. The CFPN can efficiently handle multi-scale features, which is crucial for detecting defects of different sizes. By focusing on key points and capturing long-range dependencies, the CFPN can better identify and localize defects against complex backgrounds and improve detection accuracy when processing images that contain complex background patterns or shadows.

The EVC includes a multilayer perceptron (MLP) and a learnable vision center (LVC). Their architectures are illustrated in Figure 6. The MLP is responsible for capturing deep connections in images, while the LVC focuses on integrating information from local corners of the image [46]. To further refine the features, we embed a Stem block between these two parallel processing units, which includes a 7 × 7 convolutional layer, batch normalization (BN), and an activation function layer, improving the representation of features through the smoothing process of the Stem blocks.

Figure 6.

Structure of EVC.

The MLP consists of two residual modules, one based on depthwise convolution and one based on a channel MLP. First, depthwise convolution is applied to the input features to enhance their representation. This is followed by channel scaling and regularization, after which a residual connection with the input is applied. The channel MLP module takes the output of the depthwise convolution module as its input. The input to the depthwise convolution module is the group-normalized feature map, and the module can be expressed as follows:

$$\tilde{X}_{in} = \mathrm{DConv}\left(\mathrm{GN}(X_{in})\right) + X_{in}$$

where $X_{in}$ is the output of the Stem block, $\mathrm{GN}(\cdot)$ is group normalization, and $\mathrm{DConv}(\cdot)$ is the depthwise convolution.

The channel MLP module receives its input from the output of the depthwise convolution module, to which group normalization is applied before the channel MLP operation. This process can be represented as follows:

$$X_{MLP} = \mathrm{CMLP}\left(\mathrm{GN}(\tilde{X}_{in})\right) + \tilde{X}_{in}$$

The LVC functions as an encoder equipped with an intrinsic dictionary, which includes an intrinsic codebook $B = \{b_1, b_2, \ldots, b_K\}$ and a set of learnable scale factors $S = \{s_1, s_2, \ldots, s_K\}$ for the visual centers. The LVC operates in two stages. The input features first pass through a convolutional layer with CBR (convolution, BN, and ReLU) processing. Subsequently, the processed features $\tilde{x}_i$ are integrated with the codebook via the learnable scale factors. These factors map $\tilde{x}_i$ and $b_k$ to the corresponding positional information, as given by the following formula:

$$e_k = \sum_{i=1}^{N} \frac{\exp\left(-s_k \lVert \tilde{x}_i - b_k \rVert^2\right)}{\sum_{j=1}^{K} \exp\left(-s_j \lVert \tilde{x}_i - b_j \rVert^2\right)} \left(\tilde{x}_i - b_k\right)$$

where $s_k$ represents the scale factor, $\tilde{x}_i$ denotes the i-th pixel, $b_k$ is the k-th visual code, $N$ is the number of pixels, and $K$ is the number of visual codes. The overall encoding $e$ is obtained by applying BN and ReLU, denoted $\phi$, to each $e_k$ and summing over all $k$, that is, $e = \sum_{k=1}^{K} \phi(e_k)$. This is followed by channel-wise multiplication and the addition of the inputs and the local features as follows:

$$Z = X_{in} \otimes \delta(e), \qquad X_{LVC} = X_{in} \oplus Z$$

where $\delta(\cdot)$ is the sigmoid function, $\otimes$ is channel-wise multiplication, and $\oplus$ is channel-wise addition.

The feature maps of the two modules are concatenated along the channel dimension to form the EVC’s output, which can be represented as follows:

$$X = \mathrm{cat}\left(\mathrm{MLP}(X_{in});\ \mathrm{LVC}(X_{in})\right)$$

where $\mathrm{MLP}(X_{in})$ denotes long-range dependencies and $\mathrm{LVC}(X_{in})$ denotes localized corner regions. The combined feature incorporates the strengths of both modules, enabling the detection model to capture a comprehensive range of discriminative features. The EVC technique allocates features to individual targets within a multi-scale feature hierarchy and customizes an exclusive region of interest (ROI) for every object by determining critical details such as its size, shape, and position. These ROIs are then weighted and combined with the multi-scale feature hierarchy, significantly enhancing the identification of objects across various scales.
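The codebook step of the LVC can be illustrated with a short pure-Python sketch. It assumes the usual soft-assignment form: each pixel feature is assigned to every visual code with a softmax over negative scaled squared distances, and the assignment-weighted residuals are summed per code. The function name `lvc_encode` and the toy inputs are hypothetical, and the later BN, ReLU, and gating steps are omitted.

```python
import math

def lvc_encode(pixels, codebook, scales):
    """Soft-assign each pixel feature to K codewords and aggregate residuals.

    pixels: N feature vectors; codebook: K codewords; scales: K scale factors.
    Returns K aggregated residual vectors, one per codeword.
    """
    dim = len(codebook[0])
    encoded = [[0.0] * dim for _ in codebook]
    for x in pixels:
        # Negative scaled squared distance from this pixel to each codeword.
        logits = [-s * sum((xi - bi) ** 2 for xi, bi in zip(x, b))
                  for b, s in zip(codebook, scales)]
        m = max(logits)  # subtract the maximum for numerical stability
        exps = [math.exp(l - m) for l in logits]
        z = sum(exps)
        for k, b in enumerate(codebook):
            a = exps[k] / z  # soft assignment of pixel x to codeword k
            for d in range(dim):
                encoded[k][d] += a * (x[d] - b[d])
    return encoded

# One pixel halfway between two codewords with equal scale factors.
e = lvc_encode([[1.0, 0.0]], [[0.0, 0.0], [2.0, 0.0]], [1.0, 1.0])
print(e)  # [[0.5, 0.0], [-0.5, 0.0]]
```

A larger scale factor sharpens the assignment around its codeword, which is how the learnable factors control how locally each visual code responds.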

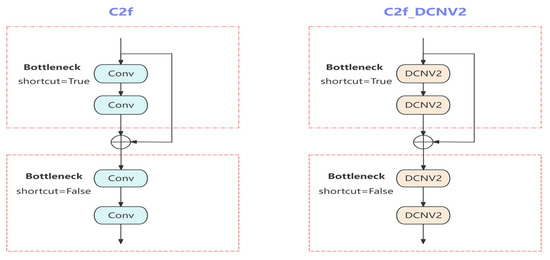

3.3. The DCNV2 Module

With its adaptive geometric deformation mechanism, DCNV2 strengthens image feature extraction. DCNV2 assigns a learnable offset to each of the nine sampling points of a 3 × 3 convolutional kernel. The replacement of Conv in the Bottleneck of C2f with DCNV2 is shown in Figure 7. The offsets are not fixed but are learned by the network, allowing the convolutional kernel to adjust its sampling positions dynamically according to the shape and size of the target object [47]. This dynamic adjustment significantly improves the model’s ability to detect irregularly shaped and sized objects, allowing the network to capture the target’s features more accurately. By also learning a weight for each sampling point, irrelevant background information is suppressed, and the disturbance caused by unrelated factors is diminished [47]. Figure 8 shows the structure of the DCNV2 framework. In the figure, the blue arrows mainly represent the data flow and convolution operations, while the green arrows represent the generation and application of offset values. The blue squares represent elements of the input feature map, which are the inputs to the convolution operation. The green squares represent the offset field generated by the convolution operation; these offset values adjust the sampling positions of the convolution kernel to achieve deformable convolution.

Figure 7.

Comparison of C2f and C2f_DCNV2 structures.

Figure 8.

The structural illustration of the DCNV2 framework.

The DCNV2 formula for a given sampling point is as follows:

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n + \Delta p_n) \cdot \Delta m_n$$

Each position $p_0$ in the output feature map $y$ has a value denoted by $y(p_0)$. The weight of the convolution kernel at $p_n$ is represented by $w(p_n)$, where $p_n$ belongs to $R$, with $R$ indicating the receptive field. For instance, $R = \{(-1,-1), (-1,0), \ldots, (1,1)\}$ represents a 3 × 3 convolution kernel. The input feature map is denoted by $x$, and $p_0 + p_n$ is the standard sampling position in it. The variable $\Delta p_n$ represents the learned offset added to the standard convolutional sampling point. The variable $\Delta m_n$ represents the penalty (modulation) parameter, which is a decimal value ranging from 0 to 1.

Because the offset sampling position is generally fractional, the sampled value is obtained by bilinear interpolation, defined as follows:

$$x(p) = \sum_{q} G(q, p) \cdot x(q)$$

The notation $x(p)$ represents the pixel value computed by interpolation. The term $p$ refers to an arbitrary, generally fractional, point within the offset range, specified as ($p = p_0 + p_n + \Delta p_n$). The notation $q$ enumerates the integer spatial coordinates of the input feature map, and the function $G(\cdot, \cdot)$ represents the two-dimensional bilinear interpolation kernel.
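The sampling rule can be made concrete with a single-channel, single-location sketch in pure Python. It assumes the common modulated deformable convolution form: each of the nine kernel points samples the input at its regular position plus a learned offset, the sample is obtained by bilinear interpolation, and it is scaled by a modulation value in [0, 1]. The function names are hypothetical, the offsets and masks are passed in directly rather than predicted by a convolution, and out-of-range pixels are treated as zero.

```python
import math

def bilinear(x, py, px):
    """Sample 2D list x at fractional (py, px); out-of-range pixels count as 0."""
    h, w = len(x), len(x[0])
    y0, x0 = math.floor(py), math.floor(px)
    value = 0.0
    for qy in (y0, y0 + 1):
        for qx in (x0, x0 + 1):
            if 0 <= qy < h and 0 <= qx < w:
                # Bilinear kernel: product of two 1D triangle functions.
                g = max(0.0, 1 - abs(py - qy)) * max(0.0, 1 - abs(px - qx))
                value += g * x[qy][qx]
    return value

# Regular sampling grid of a 3 x 3 kernel, row by row.
GRID = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

def deform_conv_at(x, p0, weights, offsets, masks):
    """Modulated deformable convolution output at one location p0."""
    total = 0.0
    for (dy, dx), w, (oy, ox), m in zip(GRID, weights, offsets, masks):
        total += w * bilinear(x, p0[0] + dy + oy, p0[1] + dx + ox) * m
    return total

image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
center_only = [0.0] * 4 + [1.0] + [0.0] * 4      # weight 1 on the kernel center
shift_right = [(0.0, 0.5)] * 9                   # every point shifted by half a pixel
print(deform_conv_at(image, (1, 1), center_only, shift_right, [1.0] * 9))  # 5.5
```

With zero offsets and masks of one, the function reduces to an ordinary 3 × 3 convolution at that location, which is a useful sanity check for any deformable convolution implementation.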

4. Experiments

4.1. Environment and Configuration of Experiments

All the tests in this research were carried out on a system featuring an Intel(R) Xeon(R) Platinum 8255C processor, an NVIDIA GeForce RTX 2080 Ti graphics card, and Ubuntu 20.04 as the operating system. The equipment was sourced from Moore Threads, a manufacturer located in Beijing, China. The software environment consisted of Python 3.8, PyTorch 1.8.1, and CUDA 11.1. First, all the training images were resized to 640 × 640 pixels, a size chosen to balance resolution against computational cost so that the model could extract intricate details without excessive computation. Second, the total number of iterations was set to 500, and the batch size was set to 8. The number of iterations was sufficient for the model to learn adequately from the training data, while the batch size was chosen to improve training efficiency as much as possible while keeping learning stable.

The model was optimized during training using the stochastic gradient descent (SGD) algorithm, with the initial learning rate and momentum set to 0.01 and 0.937, respectively. These settings were based on best practices from experience and previous studies and aimed to balance training speed against the stability of model convergence. The hyperparameters were selected after experimental validation, and the configuration used showed the best performance on the validation set. The training parameter settings are shown in Table 1.
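For readers unfamiliar with the role of the two optimizer settings, the following sketch shows one common formulation of SGD with momentum using the reported values (learning rate 0.01, momentum 0.937). The function name and the toy quadratic objective are hypothetical, and details such as weight decay, warm-up, and learning rate scheduling, which the text does not describe, are left out.

```python
def sgd_momentum_step(params, grads, velocity, lr=0.01, momentum=0.937):
    """One SGD update with momentum: v <- momentum * v + g, p <- p - lr * v."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [p - lr * v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity

# Toy problem: minimize f(p) = p^2 (gradient 2p), starting from p = 1.0.
params, velocity = [1.0], [0.0]
for _ in range(300):
    grads = [2.0 * p for p in params]
    params, velocity = sgd_momentum_step(params, grads, velocity)
print(params[0])
```

The velocity term accumulates past gradients, so a high momentum such as 0.937 smooths noisy mini-batch gradients at the cost of some oscillation around the minimum before the iterate settles.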

Table 1.

Training parameter settings.

4.2. Evaluation Indicators

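The effectiveness of the building surface defect model was measured in this study using standard evaluation criteria for object detection tasks, including precision ($P$), recall ($R$), the F1 score ($F1$), and mean average precision ($mAP$), which were calculated using the following formulas:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times P \times R}{P + R}$$

$$AP = \int_0^1 P(R)\,dR$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $TP$, $FP$, and $FN$ are the numbers of true positives, false positives, and false negatives, respectively, $AP$ is the area under the precision-recall curve of one class, and $N$ is the number of defect classes.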
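The evaluation metrics can be computed from detection counts and a precision-recall curve as in the sketch below. It is a simplified illustration: the function names are hypothetical, matching of predictions to ground truth at an IoU threshold is assumed to have been done already, and the average precision uses all-point interpolation with a monotone precision envelope, which may differ from the interpolation used by a particular evaluation toolkit.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true/false positive and false negative counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

def average_precision(recalls, precisions):
    """Area under the P-R curve using the monotone precision envelope."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_average_precision(ap_per_class):
    """Mean of the per-class average precision values."""
    return sum(ap_per_class) / len(ap_per_class)

p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(p, r, ap)  # 0.8 0.8 0.75
```

mAP50 applies this procedure with an IoU threshold of 0.5 for counting a detection as correct, while mAP50-95 averages the result over IoU thresholds from 0.5 to 0.95.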

5. Analysis and Results of YOLO-NED

5.1. Experimental Results of Ablation

To evaluate the effectiveness of the proposed enhancements, the experimental and control groups were trained and tested under the same environment and training parameters, with YOLOv8n as the baseline model. In the ablation experiments, the modifications were tested in different combinations to explore how the modules interact and how they affect overall performance. The results are shown in Table 2, where ✓ means that a module is included and × means that it is excluded.

Table 2.

Results of module enhancements on YOLOv8n.

The first group applies YOLOv8n directly; its mAP50 is 0.690 and its mAP50-95 is 0.487. In the second group, the NAM is added to the baseline model to emphasize information related to building surface defects and suppress less relevant information. This yields a mAP50 of 0.707 and a mAP50-95 of 0.497, improvements of 1.7% and 1% over the baseline, respectively. In the third group, both the NAM and the EVC are added to the baseline model. The EVC comes from the CFPN feature fusion strategy and builds on the attention mechanism added in the second group, improving the detection of small and inconspicuous targets; mAP50 improves by 3.4% and mAP50-95 by 3% over the baseline. The fourth group retains the NAM and EVC and replaces C2f with DCNV2, which gives the model greater adaptability and keeps it robust in the face of anomalies. The fifth and sixth groups add an Inner-IoU module and an ODConv module, respectively, to the third group’s configuration; in both cases, the experimental data show weaker surface modeling capabilities than the fourth group.
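The improvements quoted for the ablation groups are absolute differences between scores, that is, percentage points, not relative changes. The short sketch below recomputes the second group’s gains from the scores given in the text to make the distinction concrete. The function names are hypothetical, and the relative figures are shown only for contrast; they are not reported in the paper.

```python
def gain_in_points(baseline: float, improved: float) -> float:
    """Absolute gain in percentage points between two scores in [0, 1]."""
    return round((improved - baseline) * 100, 1)


def relative_gain(baseline: float, improved: float) -> float:
    """Relative gain as a percentage of the baseline score."""
    return round((improved - baseline) / baseline * 100, 1)


# Scores quoted in the text for group 1 (YOLOv8n) and group 2 (YOLOv8n + NAM).
baseline = {"mAP50": 0.690, "mAP50-95": 0.487}
with_nam = {"mAP50": 0.707, "mAP50-95": 0.497}

for metric in baseline:
    points = gain_in_points(baseline[metric], with_nam[metric])
    relative = relative_gain(baseline[metric], with_nam[metric])
    print(f"{metric}: +{points} points ({relative}% relative to the baseline)")
```

The percentage-point values match the 1.7% and 1% stated for the second group, which confirms that the text reports absolute differences.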

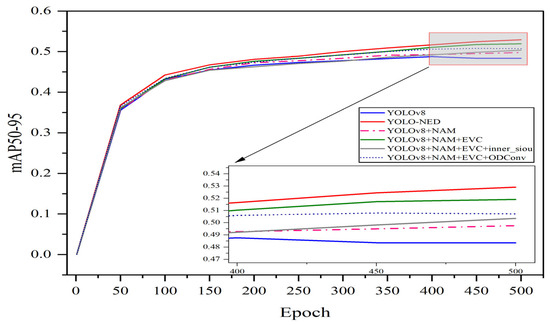

The NAM added in the second group adaptively calibrates the channel and spatial weights, which suppresses interference from complex textured backgrounds, improves the localization accuracy of multi-scale defects, and raises mAP50 by 1.7%. The EVC added in the third group fuses local details with global contextual features to construct multi-scale visual saliency maps, which addresses missed detections of low-contrast small targets and improves the F1 value by 3.4%. The DCNV2 added in the fourth group dynamically adjusts the positions of the convolution kernel sampling points, which enhances geometric adaptation to irregular defect contours and improves mAP50-95 by 4.2%. Together, the three modules give the model its best overall detection performance in complex scenarios: mAP50 improves by 3.9% and mAP50-95 by 4.2% over the baseline model. The model thus picks out critical information more reliably and handles complex building surface defects more flexibly. To visualize the performance comparison of the models during the experiments, we plotted the mAP50-95 values for each model, as depicted in Figure 9.
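The idea of adjusting the sampling points of a convolution kernel can be illustrated for a single output value. In the modulated deformable convolution of Deformable ConvNets v2, each of the kernel taps is shifted by a learned offset and scaled by a learned modulation weight before the weighted sum is taken. The sketch below is a plain-Python illustration for one channel and a 3 × 3 kernel, not the implementation used in YOLO-NED: the function names are hypothetical, and the offsets and modulation weights are passed in by hand, whereas in a real layer they are predicted from the input by an extra convolution.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]


def bilinear(img: Image, y: float, x: float) -> float:
    """Bilinearly interpolate img at fractional (y, x); pixels outside count as 0."""
    height, width = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yi in (y0, y0 + 1):
        for xi in (x0, x0 + 1):
            if 0 <= yi < height and 0 <= xi < width:
                weight = (1 - abs(y - yi)) * (1 - abs(x - xi))
                value += weight * img[yi][xi]
    return value


def modulated_deform_output(
    img: Image,
    kernel: Sequence[Sequence[float]],
    center: Tuple[int, int],
    offsets: Sequence[Tuple[float, float]],
    masks: Sequence[float],
) -> float:
    """One output value: sum over taps of weight * sample(shifted tap) * modulation."""
    cy, cx = center
    # Regular 3 x 3 grid of tap positions relative to the center.
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    total = 0.0
    for k, (dy, dx) in enumerate(grid):
        off_y, off_x = offsets[k]
        sample = bilinear(img, cy + dy + off_y, cx + dx + off_x)
        total += kernel[dy + 1][dx + 1] * sample * masks[k]
    return total


# Toy 4 x 4 image whose pixel value is 4 * row + column, and an all-ones kernel.
img = [[float(4 * r + c) for c in range(4)] for r in range(4)]
kernel = [[1.0] * 3 for _ in range(3)]

# Zero offsets and unit modulation reduce to an ordinary 3 x 3 convolution tap sum.
plain = modulated_deform_output(img, kernel, (1, 1), [(0.0, 0.0)] * 9, [1.0] * 9)

# Shifting every tap by half a pixel changes which image content is aggregated.
shifted = modulated_deform_output(img, kernel, (1, 1), [(0.5, 0.5)] * 9, [1.0] * 9)

print(plain, shifted)
```

With zero offsets and unit modulation, the function reduces to an ordinary convolution at that location, which is why a deformable layer can fall back to standard behaviour where the defect contour is regular.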

Figure 9.

Comparison of YOLO-NED with different object detection models.

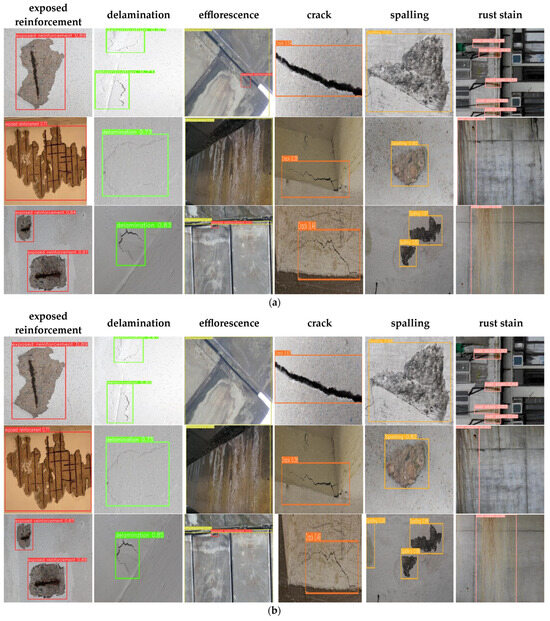

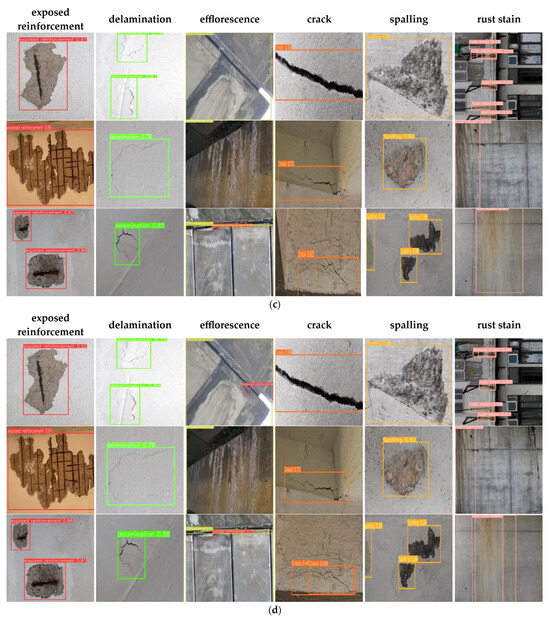

Figure 10 shows how the gradual integration of the NAM, EVC, and DCNV2 structures improves building surface defect detection in the ablation experiments. The baseline model shows obvious deficiencies in detecting and recognizing defects on the building surface. As the new modules are added one by one, detection performance improves: defects are located more accurately and recognized more reliably. Table 3 presents the detection performance for each type of defect. When all the modules were integrated into YOLOv8, the detection accuracy improved substantially, accompanied by a significant rise in confidence. These results indicate that integrating the NAM, EVC, and DCNV2 into YOLOv8 enhances the model’s capability for building surface defect detection.

Figure 10.

Comparison of detection effects. (a) YOLOv8; (b) YOLOv8 model fused with NAM; (c) detection effects of YOLOv8 model fusing NAM and EVC modules; (d) YOLO-NED.

Table 3.

Detection results.

5.2. Comparative Experiments

The ablation experiments validate the practicality of our algorithm. To examine its performance further, we compared the proposed model with Faster R-CNN, RT-DETR, and other detectors under the same dataset partitioning conditions. The performance of the different algorithms on the building surface defect dataset is shown in Table 4.

Table 4.

Performance evaluation of different algorithms on dataset of building surface defects.

Table 4 shows the experimental findings. YOLO-NED achieves strong results in detecting building surface defects, indicating that the method is effective for structural flaw identification. Compared to Fast R-CNN, YOLO-NED improves mAP50 by 28.9% and the more stringent mAP50-95 by 24.4%, a substantial gain in accuracy. RT-DETR uses a strong transformer architecture, but it faces several challenges in practical applications, such as excessive memory consumption, a complex training process, and insufficient capture of detail, especially when detecting tiny targets among building surface defects. Even so, YOLO-NED achieves only a slight improvement over RT-DETR, 0.1% in mAP50 and 0.3% in mAP50-95, which suggests an advantage in detail processing and accuracy control. YOLOv8n is widely used for its fast detection speed and relatively low computational cost. However, on the complex and variable task of detecting building surface defects, its detection accuracy still leaves room for improvement. Similarly, YOLOv5n, YOLOv6, YOLOv8, YOLOv10, YOLOv11, and YOLOv12n do not fully meet the needs of practical applications given the diversity and complexity of building surface defects. YOLO-NED improves on these models by 7.4%, 11.7%, 3.9%, 4%, 4.5%, and 0.3% in mAP50, and by 12.5%, 11.2%, 4.2%, 3.4%, 5%, and 1% in mAP50-95, respectively.
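The gains quoted above can be turned back into the scores they imply for each compared model, which gives a quick consistency check against the ablation baseline. The sketch below assumes that every gain is an absolute difference in percentage points and takes the YOLO-NED scores of 72.9% (mAP50) and 52.9% (mAP50-95) from the reported results. The implied values are derived arithmetic, not reported measurements, and the names `YOLO_NED`, `GAINS`, and `implied_scores` are hypothetical.

```python
from typing import Dict, Tuple

# YOLO-NED scores in percent, as reported for the building surface defect dataset.
YOLO_NED = {"mAP50": 72.9, "mAP50-95": 52.9}

# Gains of YOLO-NED over each model as quoted in the text: (mAP50, mAP50-95).
GAINS: Dict[str, Tuple[float, float]] = {
    "Fast R-CNN": (28.9, 24.4),
    "RT-DETR": (0.1, 0.3),
    "YOLOv5n": (7.4, 12.5),
    "YOLOv6": (11.7, 11.2),
    "YOLOv8": (3.9, 4.2),
    "YOLOv10": (4.0, 3.4),
    "YOLOv11": (4.5, 5.0),
    "YOLOv12n": (0.3, 1.0),
}


def implied_scores(gains: Dict[str, Tuple[float, float]]) -> Dict[str, Tuple[float, float]]:
    """Subtract each quoted gain from the YOLO-NED score (percentage points assumed)."""
    return {
        name: (
            round(YOLO_NED["mAP50"] - gain_50, 1),
            round(YOLO_NED["mAP50-95"] - gain_50_95, 1),
        )
        for name, (gain_50, gain_50_95) in gains.items()
    }


implied = implied_scores(GAINS)
for name, (map_50, map_50_95) in implied.items():
    print(f"{name:<10} implied mAP50 = {map_50:5.1f}  implied mAP50-95 = {map_50_95:5.1f}")
```

The YOLOv8 row reproduces the baseline scores of 0.690 and 0.487 from the ablation experiments, so the quoted gains are consistent with the percentage-point reading for that model; the other rows cannot be cross-checked from the text alone.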

Table 5 reports the accuracy of four models, YOLOv8n, YOLOv10n, YOLOv11n, and YOLOv12n, in detecting six types of building surface defects, as well as the total time used for model training. The four models have similar parameter counts (3.0M, 2.7M, 2.6M, and 2.6M, respectively), and YOLOv8n performs well on several metrics. Specifically, YOLOv8n has the highest mAP50 value, indicating that it outperforms the other three models in defect detection accuracy. YOLOv8n also has the shortest training time, which highlights its advantage in efficiency. Across the six types of building surface defects, YOLOv8n achieves the highest accuracy in most of the detection tasks. This indicates that YOLOv8n has an advantage in overall performance, especially in most application-specific scenarios.

Table 5.

Comparison of YOLOv8, YOLOv10, YOLOv11, and YOLOv12 in defect detection and training time.

6. Discussion

Identifying building surface defects is a key task and a main field of study in construction safety. It improves safety and reduces losses, which supports the healthy development of the construction industry. In practical defect detection on building surfaces, a model may receive a large amount of irrelevant or redundant information, which can make it difficult to identify and localize critical defect features.

Although the YOLO-NED model has demonstrated high accuracy and excellent real-time detection capability in the field of building surface defect detection, there are still some limitations that need to be further optimized and improved for its practical application. For example, there is still room for improvement in the detection accuracy of fine cracks or small-sized defects in scenes with complex textured backgrounds. In addition, the robustness of the model under different lighting conditions needs to be enhanced in order to ensure that defects can be detected stably and accurately in various real-world scenarios.

Although the detection speed of YOLO-NED largely meets the requirements of industrial applications, the model may still be limited by scarce computational resources when deployed on resource-constrained devices. For small targets, more advanced feature fusion strategies can be considered to further enhance the model’s feature extraction capability. A lightweight attention mechanism can also be explored so that the model focuses on small target regions more accurately, thus improving its detection accuracy.

On the one hand, data augmentation techniques can be used to expand the training dataset and improve the generalization ability of the model; on the other hand, researchers should investigate how to improve the feature extraction module so that it better learns the textural features related to defects. In addition, to achieve more efficient deployment on resource-constrained devices, model compression techniques such as knowledge distillation and network pruning need to be further investigated. These methods can reduce the number of parameters and the computational complexity of the model without significantly degrading its performance.
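As one illustration of the compression techniques mentioned above, the soft-target loss used in knowledge distillation can be sketched as follows. This is a common formulation (the Kullback-Leibler divergence between temperature-softened teacher and student distributions, scaled by the squared temperature), not a method evaluated in this paper. The function names and the temperature value are hypothetical, and the sketch covers class logits only; distilling a detector would also have to handle box regression, which is not shown.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Softmax over logits divided by a temperature (higher means softer)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    temperature: float = 4.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by temperature^2."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)
    return kl * temperature ** 2


# Hypothetical logits for six defect classes from a large teacher and a small student.
teacher_logits = [4.0, 1.0, 0.5, 0.2, -1.0, -2.0]
student_logits = [2.5, 1.5, 0.0, 0.3, -0.5, -1.0]

print(distillation_loss(student_logits, teacher_logits))
```

The loss is zero when the student reproduces the teacher’s softened distribution and grows as the two diverge, so minimizing it pushes a smaller model toward the behaviour of a larger one.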

YOLO-NED can also be applied beyond building surfaces to defect detection for other structures, such as bridges and roads, which would broaden its range of applications and increase its value in real-world engineering environments.

7. Conclusions

To cope with the complexity and difficulty of recognizing building surface defects, this paper proposes the YOLO-NED model, which is designed to address the poor accuracy of recognizing multiple surface defects on buildings. The results show that the model can accurately detect various defects even when multiple defect types are present. The conclusions of this paper are as follows:

- The addition of the attention mechanism to the model captures information related to building surface defects, making the model more effective in recognizing them against complex backgrounds. The model then incorporates a feature fusion module, which enhances its ability to recognize small targets. Finally, Deformable Convolutional Network v2 is introduced at the backbone network level to enhance the model’s feature extraction capability.

- YOLO-NED attains mAP50 and mAP50-95 scores of 72.9% and 52.9% on our building surface defects dataset, which are 3.9% and 4.2% higher than the baseline algorithm, respectively. Its performance reaches 156 FPS, suitable for real-time inspection in smart construction scenarios.

- This approach fulfills the demand for precision in identifying structural surface flaws, while considering ease of use and adaptability in real-world settings, offering robust assistance for construction quality assessments.

The YOLO-NED model introduces a novel approach for detecting building surface defects, achieving higher accuracy without sacrificing real-time processing capabilities. Despite the notable advancements of the YOLO-NED model in identifying building surface defects, several limitations persist. For example, the detection accuracy of the model in dealing with complex textures and small defects needs to be further improved, and in some cases, false or missed detections may occur. Future research directions may include further optimizing the model’s structure to enhance the detection of minor defects and exploring more advanced feature extraction techniques to reduce background interference in order to further improve the accuracy of defect detection on building surfaces.

Author Contributions

The authors confirm their contributions to the paper as follows: conceptualization: Y.M., X.L. and X.Y.; methodology: L.S.; software: W.G.; formal analysis: Y.M. and X.X.; investigation: C.L. and Y.L.; funding acquisition: Z.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shandong Province Science and Technology Small and Medium-Sized Enterprises Innovation Ability Improvement Project (grant no. 2024TSGC0285) and the Taian Science and Technology Innovation Development Project (grant no. 2023GX027). Lin Sun was supported by the Shandong Province Science and Technology Small and Medium-Sized Enterprises Innovation Ability Improvement Project (grant no. 2024TSGC0285), and Xiaoxia Lin was supported by the Taian Science and Technology Innovation Development Project (grant no. 2023GX027).

Data Availability Statement

The data that support the findings of this study are available from the author Yingzhou Meng upon reasonable request.

Acknowledgments

The authors would like to express their gratitude to the members of the research group for their support.

Conflicts of Interest

Authors Chunwei Leng, Yan Li and Zhenyu Niu were employed by the company Hanqing Data Consulting Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

Table A1.

Glossary of terms corresponding to acronyms and full names in this paper.

| No. | Acronym | Full Name |
|---|---|---|
| 1 | BN | Batch normalization |
| 2 | DETR | Detection Transformer |
| 3 | EVC | Explicit vision center |
| 4 | LVC | Learnable vision center |
| 5 | mAP | Mean average precision |
| 6 | MLP | Multilayer perceptron |
| 7 | NAM | Normalization-based attention module |
| 8 | P | Precision |
| 9 | R | Recall |
| 10 | ROI | Region of interest |
| 11 | R-CNN | Region-based CNN |
| 12 | SSD | Single-Shot Multibox Detector |
| 13 | SGD | Stochastic gradient descent |
| 14 | YOLO | You Only Look Once |
| 15 | CFPN | Centralized feature pyramid network |
| 16 | CBAM | Convolutional block attention module |

References

- Basu, S.; Orr, S.A.; Aktas, Y.D. A geological perspective on climate change and building stone deterioration in London: Implications for urban stone-built heritage research and management. Atmosphere 2020, 11, 788.

- Pan, Y.; Zhang, X.; Jin, X.; Yu, H.; Rao, J.; Tian, S.; Luo, L.; Li, C. Road pavement condition mapping and assessment using remote sensing data based on MESMA. In Proceedings of the 9th Symposium of the International Society for Digital Earth, Halifax, NS, Canada, 5–9 October 2015.

- Amin, D.; Akhter, S. Deep learning-based defect detection system in steel sheet surfaces. In Proceedings of the 2020 IEEE Region 10 Symposium (TENSYMP), Dhaka, Bangladesh, 5–7 June 2020; pp. 444–448.

- Vivekananthan, V.; Vignesh, R.; Vasanthaseelan, S.; Joel, E.; Kumar, K.S. Concrete bridge crack detection by image processing technique by using the improved OTSU method. Mater. Today 2023, 74, 1002–1007.

- Meng, X.B.; Lu, M.Y.; Yin, W.L.; Bennecer, A.; Kirk, K.J. Evaluation of Coating Thickness Using Lift-Off Insensitivity of Eddy Current Sensor. Sensors 2021, 21, 419.

- Wang, G.; Xiao, Q.; Gao, Z.H.; Li, W.; Jia, L.; Liang, C.; Yu, X. Multifrequency AC Magnetic Flux Leakage Testing for the Detection of Surface and Backside Defects in Thick Steel Plates. IEEE Magn. Lett. 2022, 13, 8102105.

- Jing, X.; Yang, X.-Y.; Xu, C.-H.; Chen, G.; Ge, S. Infrared thermal images detecting surface defect of steel specimen based on morphological algorithm. J. China Univ. Pet. 2012, 36, 146–150.

- Andi, M.; Yohanes, G.R. Experimental study of crack depth measurement of concrete with ultrasonic pulse velocity (UPV). In Proceedings of the IOP Conference Series: Materials Science and Engineering, Bali, Indonesia, 7–8 August 2019; Volume 673, p. 012047.

- Zhu, Z.; Al-Qadi, I.L. Crack Detection of Asphalt Concrete Using Combined Fracture Mechanics and Digital Image Correlation. J. Transp. Eng. Part B Pavements 2023, 149, 04023012.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Boston, MA, USA, 7–12 June 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 21–26 July 2017; pp. 2961–2969.

- Zaidi, S.S.A.; Ansari, M.S.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A survey of modern deep learning based object detection models. Digit. Signal Process. 2022, 126, 103514.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Choutri, K.; Lagha, M.; Meshoul, S.; Batouche, M.; Bouzidi, F.; Charef, W. Fire Detection and Geo-Localization Using UAV’s Aerial Images and Yolo-Based Models. Appl. Sci. 2023, 13, 11548.

- Singla, R.; Sharma, S.; Sharma, S.K. Infrared imaging for detection of defects in concrete structures. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Stavanger, Norway, 30 November–1 December 2023; Volume 1289, p. 012064.

- Liu, Y.; Zhou, T.; Xu, J.; Hong, Y.; Pu, Q.; Wen, X. Rotating Target Detection Method of Concrete Bridge Crack Based on YOLOv5. Appl. Sci. 2023, 13, 11118.

- Ye, G.; Qu, J.; Tao, J.; Dai, W.; Mao, Y.; Jin, Q. Autonomous surface crack identification of concrete structures based on the YOLOv7 algorithm. J. Build. Eng. 2023, 73, 106688.

- Lau, K.W.; Po, L.M.; Rehman, Y.A.U. Large Separable Kernel Attention: Rethinking the Large Kernel Attention design in CNN. Expert Syst. Appl. 2024, 236, 121352.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 2024, 21, 62.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. Available online: https://arxiv.org/abs/1804.02767 (accessed on 22 May 2024).

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. Available online: https://arxiv.org/abs/2004.10934 (accessed on 20 May 2024).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976. Available online: https://arxiv.org/abs/2209.02976 (accessed on 25 May 2024).

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning Both Weights and Connections for Efficient Neural Networks. arXiv 2015, arXiv:1506.02626. Available online: https://arxiv.org/abs/1506.02626 (accessed on 15 May 2024).

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531. Available online: https://arxiv.org/abs/1503.02531 (accessed on 15 May 2024).

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. Available online: https://arxiv.org/abs/1704.04861 (accessed on 15 May 2024).

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. arXiv 2017, arXiv:1707.01083. Available online: https://arxiv.org/abs/1707.01083 (accessed on 16 May 2024).

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- He, Z.; Su, C.; Deng, Y. A Novel Hybrid Approach for Concrete Crack Segmentation Based on Deformable Oriented-YOLOv4 and Image Processing Techniques. Appl. Sci. 2024, 14, 1892.

- Wu, P.; Liu, A.; Fu, J.; Ye, X.; Zhao, Y. Autonomous Surface Crack Identification of Concrete Structures Based on an Improved One-Stage Object Detection Algorithm. Eng. Struct. 2022, 272, 114962.

- Zhang, X.; Luo, Z.; Ji, J.; Sun, Y.; Tang, H.; Li, Y. Intelligent Surface Cracks Detection in Bridges Using Deep Neural Network. Int. J. Struct. Stab. Dyn. 2024, 24, 2450046.

- Xing, Y.; Han, X.; Pan, X.; An, D.; Liu, W.; Bai, Y. EMG-YOLO: Road Crack Detection Algorithm for Edge Computing Devices. Front. Neurorobot. 2024, 18, 1423738.

- Wang, J.; Wang, P.; Qu, L.; Pei, Z.; Ueda, T. Automatic Detection of Building Surface Cracks Using UAV and Deep Learning combined Approach. Struct. Concr. 2024, 25, 2302–2322.

- Qi, Y.; Ding, Z.; Luo, Y.; Ma, Z. A Three-Step Computer Vision-Based Framework for Concrete Crack Detection and Dimensions Identification. Buildings 2024, 14, 2360.

- Ni, Y.-H.; Wang, H.; Mao, J.-X.; Xi, Z.; Chen, Z.-Y. Quantitative Detection of Typical Bridge Surface Damages Based on Global Attention Mechanism and YOLOv7 Network. Struct. Health Monit. 2024, 24, 941–962.

- Dong, X.; Liu, Y.; Dai, J. Concrete Surface Crack Detection Algorithm Based on Improved YOLOv8. Sensors 2024, 24, 5252.

- Liu, S.; Qi, L.; Qin, H.F.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430.

- Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-based Attention Module. arXiv 2021, arXiv:2111.12419.

- Quan, Y.; Zhang, D.; Zhang, L.; Tang, J. Centralized feature pyramid for object detection. IEEE Trans. Image Process. 2023, 32, 4341–4354.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. arXiv 2017, arXiv:1703.06211.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable ConvNets v2: More Deformable, Better Results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).