Abstract

Architectural design decisions are primarily made through an interaction between an architect and a client during the conceptual design phase. However, in larger-scale public architecture projects, the client is frequently represented by a community that embraces numerous stakeholders. The scale, social diversity, and political layers of such collective clients make their interaction with architects challenging. A solution to address this challenge is using new information technologies that automate design interactions on an urban scale through crowdsourcing and artificial intelligence technologies. However, since such technologies have not yet been applied and tested in field conditions, it remains unknown how communities interact with such systems and whether useful concept designs can be produced in this way. To fill this gap in the literature, this paper reports the results of a case study architecture project where a novel crowdsourcing system was used to automate interactions with a community. The results of both quantitative and qualitative analyses revealed the effectiveness of our approach, which resulted in high-level stakeholder satisfaction and yielded conceptual designs that better reflect stakeholders’ preferences. Along with identifying opportunities for using advanced technologies to automate design interactions in the concept design phase, we also highlight the challenges of such technologies, thus warranting future research.

1. Introduction

In this paper, we present a case study where a novel architectural crowdsourcing system was used to produce a concept design of the Innovation, Culture, and Education (ICE) building at the ZHR industrial park in multicultural northern Israel. The proposed crowdsourcing system automates large-scale communication between many architects and a community of project stakeholders (e.g., the developer, neighbors, and future employees) who collectively produce an architectural design.

Architecture can be seen as a design process that involves reflection and negotiation between the architects of a project, on the one hand, and stakeholders, on the other hand [1]. Accordingly, in small-scale architecture projects (e.g., private residences), clients are primary users and owners who possess shared power over the design [2]. This participation of project stakeholders in the design ensures that the results fit stakeholders’ needs. However, in large-scale or public projects, the situation is different, as the client is mostly an institution and thus might not be able to represent the needs of all users of the designed building [3]. For instance, a to-be-designed theater can be expected to serve a large and undefined group of individuals, including patrons, employees, and an immediate community, all of whom are largely not involved in the design process. Under such circumstances, involving all stakeholders in the design process becomes a challenging, if at all feasible, task.

Yet, advocates of Participatory Design (PD) argue that the involvement of end-users in the design process would produce a better design fit [4]. In the PD framework, participants from a variety of backgrounds, positions, and interests share their knowledge and talents to jointly explore a problem and produce possible solutions. Indeed, the idea that residents should be able to participate in urban and architectural design is not new and has been practiced over the last 50 years [5]. Overall, a common assumption in the field of urban planning research is that community participation supports democratic culture and fairer communities, which makes such participation essential for sustainable development [3,6].

However, despite the extensive prior research in the PD field and the availability of several commercial technologies, organizing a significant participatory process in the field of architecture remains a challenging undertaking. The many and varied factors impeding PD implementation include intra-community politics [7], bureaucracy [8], knowledge gaps [9], loss of public trust in politicians and local authorities [10], as well as requirements related to time and effort from the participants and organizers.

To bridge this research gap, in this paper, we present the results of implementing a novel architecture crowdsourcing process to produce a concept design. The proposed crowdsourcing technology enables effective and organized design interaction between a community and architects on a large scale and is capable of dealing with these challenges. It addresses the knowledge gaps by adapting the tasks and communication to the participants’ knowledge, and it remains inexpensive, as it does not require much of the participants’ time. Furthermore, along with allowing for continuous interaction over the design process, it also preserves the participants’ privacy and enables them to express themselves in an individual and authentic way without political pressure. Finally, the proposed technology is accessible through a multilingual interface and is available 24/7. The specific research questions (RQ) addressed in this study are as follows:

- RQ 1: How effective is the crowdsourcing process in terms of design quality, cost, and user satisfaction in real-life conditions?

- RQ 2: Can non-professional participants choose the best designs in experts’ assessment?

- RQ 3: Can the iterative voting process achieve an agreement or consensus? What is the minimum voting threshold needed to remove unsatisfactory designs from the process?

- RQ 4: When should the crowdsourcing process stop?

Our hypotheses related to the aforementioned research questions were as follows:

H1. We expected that the crowdsourcing process would result in an average quality design, higher cost, and higher stakeholders’ satisfaction.

H2. Considering previous evidence that novice architecture students selected the best designs, we expected that non-professionals would perform similarly well using the wisdom of the crowds.

H3. We expected that the design tree would converge in a consensus after several iterations. We also assumed that designs with only one vote and branches of the design development tree with only one vote would be removed.

H4. We planned six design iterations that, as we expected, would provide sufficient time for the design to converge.

Along with confirmatory evidence for the hypotheses listed above, the results of our case study revealed the political and practical complexities involved in real-life architecture projects. Previous research on collaborative and social computing mostly involved design tasks tested within the framework of an educational institution [11,12]. However, in realistic situations, architecture and urban planning require prior knowledge of engineering, society, and art, as well as awareness of a specific, complex political context [13]. Similarly, although the crowdsourcing method proposed in the present study was previously tested in laboratory conditions with architecture students, with promising results [12], it remains unknown how it copes with the complexities of real-life design projects. In reality, the stakeholders are diverse and can include not only lay members of a community but also entrepreneurs with conflicting needs, interests, and different beliefs.

Previous research on urban crowdsourcing has indicated the need for more adequate evaluation methods for such participatory processes [14]. While some researchers have evaluated the process according to factors such as participation, representation, transparency, user-friendliness, levels of interaction, quality of the visuals, and feeling of community [15], no study has regarded the improvement of the design due to the participation as an evaluation criterion. To address this gap in the literature, and to ensure the robustness and reliability of our findings, we performed a design quality evaluation based on a control group that competed with the experiment group to determine how community involvement could contribute to a better design. The design quality was evaluated by six expert architects.

Finally, we discuss the findings in light of relevant crowdsourcing processes theories and contribute the following:

- We present a design negotiation model that includes both crowdsourcing of professionals’ work and crowdsourcing of community feedback and ideas.

- We demonstrate the crowdsourcing model’s effectiveness, efficiency, and participant satisfaction in a real-world project.

- We identify the challenges of the crowdsourcing model for future research.

Overall, the results of our case study demonstrate the potential of crowdsourcing for large-scale architecture projects. Our findings suggest that crowdsourcing is an effective and efficient way to involve stakeholders in the design process and can result in good design quality and high user satisfaction.

2. Related Works

Due to their inherent subjectivity and rootedness in a specific context, design problems are difficult to solve [16]. In general, design problems are said to belong to a family of problems called ‘wicked problems’, which are ill-defined [18] and for which the evaluation of a solution differs from person to person [17]. In the remainder of this section, we review previous work on design processes, participatory design, and crowdsourcing.

2.1. Design Process

Since the 1960s, research has dealt with design as an organizational and psychological process, and a significant body of research has sought to establish design as a unique discipline [19,20].

There is a broad understanding of design as a complex process, akin to an algorithm, that consists of different components [19]. Early research concluded that the design process consists of the following three parts: (1) problem definition, data collection, and analysis and agreement on the design requirements; (2) synthesis of design; and (3) evaluating the suitability of designs to problem definition [21]. To date, various models of design processes have been proposed in various fields, ranging from engineering to business processes [22]. However, most of them consist of similar components defined by Jones in 1965—namely, analysis, synthesis, and evaluation [21].

Yet, one other important characteristic of the design process is its iterative nature. While Herbert Simon described the design process as a rational thinking method to solve problems through searching for a solution in the solution space [23], this process of finding a solution is also based on iterations [24]. Said differently, due to the existence of complex information dependencies, the iterative design process requires that the design work is repeated over and over again [25]. Through this iterative course, new revisions emerge and are improved or discarded. Such a repetitive nature of the design process provides a fundamental structure for the development of design process models [26].

Likewise, in a different conceptualization where researchers view the design process as an exploration [27] rather than a search, it has also been acknowledged that an evolutionary design process is based on the exploration of not only the solution space but also the problem space [28]. In this co-evolution model, it is expected that both problem requirements and solution candidates are developed until a satisfactory fit can be achieved. In applied studies, the co-evolution model was found to be helpful in describing the design process [29,30,31].

2.2. Crowdsourcing

The term “crowdsourcing”, coined by Jeff Howe in 2005, refers to a method of outsourcing business processes via the Internet to an undefined group of people [32]. Crowdsourcing consists of publishing an open call online and collecting human input. In this way, it is possible to use information technologies to organize online labor and produce exciting collective knowledge products such as Wikipedia or open-source software. Although the very term “crowdsourcing” and the technology that enables it today are relatively new, the underlying idea of using the “wisdom of crowds” goes back to the writings of Aristotle. Later on, the mathematician Galton demonstrated that in bull weight estimation contests, the average of the participants’ estimates was closest to the actual weight of the bull [33]. Likewise, in architectural design practice, architectural competitions, a popular kind of crowdsourcing [34], have traditionally been used to find innovative ideas.

In recent years, crowdsourcing has become a popular research modality in the field of human–computer interaction (HCI), especially in tasks related to design, text-writing, idea generation, and creativity. In general, crowdsourcing methods can be categorized into those that rely on laypeople and those that rely on experts [35]. However, regardless of what actors constitute “the crowd,” one of the main goals of crowdsourcing is that, through collective wisdom, non-professionals can be helped to achieve a result equivalent to that obtainable from professionals.

2.2.1. Types of Tasks

Creative crowdsourcing tasks can broadly be classified into three types: contests, networks, and games [36]. In contests, participants generate solutions simultaneously by responding to an open online call. Network crowdsourcing involves breaking down the problem into pieces that are solved individually and then combined into a solution. Finally, through crowdsourcing games, humans can be engaged in solving challenges using game mechanics, such as quizzes, and the most effective solutions are ranked and can then be aggregated. By exploring solutions simultaneously, all three types of crowdsourcing systems generate various solutions. It is, however, more challenging to use the game and network approaches, because they require the ability to divide a large task into smaller pieces and then consolidate the results into a single product.

Several approaches for decomposing tasks and reassembling the solutions have been used to address this challenge of game and network crowdsourcing systems [37]: sequential, parallel, recursive, iterative, hybrid, and macro-task workflows. Sequential workflows divide a task into sub-tasks that are then solved sequentially [38]. The output of one task influences the output of the next in this workflow. Parallel workflows divide a task into sub-tasks that multiple participants can independently perform. Recursive workflows subdivide a complex task recursively until simple sub-tasks are formulated. Then, the results are aggregated recursively as well [39]. Iterative workflows consist of repeated micro-tasks to improve previous results until the budget has run out or the job is complete [40]. Hybrid workflows combine several workflow approaches that benefit from several advantages while requiring optimization [41]. Finally, macro-task workflows are used when the tasks require expert knowledge and are non-decomposable [42].
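As an illustration only, the sequential, parallel, recursive, and iterative workflows described above can be sketched as small Python functions. The function names and signatures below are our own assumptions for this sketch and do not come from any of the cited systems; in a real crowdsourcing platform, each callable would stand for a task handed to a human worker.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def sequential(subtasks: Sequence[Callable[[str], str]], data: str) -> str:
    """Run sub-tasks one after another; each output feeds the next sub-task."""
    for subtask in subtasks:
        data = subtask(data)
    return data


def parallel(worker: Callable[[str], str], pieces: Sequence[str]) -> List[str]:
    """Run the same sub-task independently on every piece (order is preserved)."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(worker, pieces))


def recursive(task, is_simple, split, solve, merge):
    """Subdivide a task until sub-tasks are simple, then aggregate recursively."""
    if is_simple(task):
        return solve(task)
    parts = [recursive(t, is_simple, split, solve, merge) for t in split(task)]
    return merge(parts)


def iterative(improve: Callable[[str], str],
              is_done: Callable[[str], bool],
              draft: str,
              budget: int) -> str:
    """Repeat an improvement micro-task until the job is done or the budget runs out."""
    for _ in range(budget):
        if is_done(draft):
            break
        draft = improve(draft)
    return draft
```

A hybrid workflow, in this sketch, would simply compose these functions, for example by running `parallel` inside each step of `iterative`.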

2.2.2. Tasks Scope

Workflows are made out of various tasks. Researchers have suggested four primary crowdsourcing task categories: micro, complex, macro, and creative tasks [37]. A micro-task is typically independent, straightforward, and quick to complete; it results from the disassembly of a large task and requires straightforward reassembly and aggregation. An example of a micro-task would be the classification of images or the translation of sentences. Next, complex tasks require specific domain knowledge and include small tasks such as writing a paragraph, programming a software function, or proofreading a text. Furthermore, macro-tasks are more extensive in scope and require a high level of expertise. For example, macro-tasks include programming, design, or article writing. Lastly, creative tasks are monovalent assignments that often involve providing nearly finalized creative solutions, such as in design competitions. Therefore, creative tasks require expert-level knowledge and a significant amount of time.

2.3. Design Crowdsourcing

Design crowdsourcing systems comprise several components: open call, reward mechanism, crowd selection, crowd structure, solution evaluation, workflow, and quality control [43].

Several crowdsourcing methods have been proposed in the past few years using sketching. For example, sketching has been used to design and organize a room [44]. Participants were provided written instructions and asked to create a room plan sketch. The designs were evaluated by other participants using a multi-stage evaluation method. A more sophisticated approach used idea trees to develop product designs for the elderly [45]. The participants could use the idea trees to consolidate similar concepts into distinct branches and develop new ideas. In this way, the system facilitates a competitive environment that enables participants to view and assess the ideas of others, thereby promoting the collective advancement of ideas. An alternative method, Crowd vs. Crowd, combines professional competition with crowdsourced support for designers by providing them with information rather than designing [46]. Finally, using a different approach, researchers explored the potential of genetic algorithms as a creative method, utilizing the fusion and mutation of various sketches [47].

An essential part of the design process is evaluating and criticizing the design object [43]. In order to assess designs more effectively, a design review system has been proposed that can provide constructive feedback and aid designers in refining their designs [48]. To obtain a more precise assessment of the crowd, it is essential to present users with multiple designs [49]. Furthermore, previous research has found that crowdsourced anonymous feedback is more specific and valuable than communal public feedback [50].

2.4. Architecture and Urban Design Crowdsourcing

The design of architectural and urban design projects differs from other product design processes, such as logos, websites, or tangible products, due to its reliance on public urban spaces and the need for adaptation to the local culture and regulations. This renders crowdsourcing of architecture a challenging task, as there can be knowledge gaps that impede the successful completion of the project.

Urban design has been suggested as one of the first potential uses for crowdsourcing [8]. The literature outlines four main types of crowdsourcing for urban design: ideation [51], co-creation [14,52], mapping [53], and opinion-gathering [54,55]. These are typically achieved through competitions, open collaboration, and virtual labor markets [56]. Citizen Design Science was proposed as a crowdsourcing framework for urban design, which involves both bottom-up citizen input and top-down designer implementation [52]. In this model, the designer first sets up the design tool with specific rules, creating a design task for citizens to tackle. Citizens then submit their design proposals, and the designer evaluates the feedback received from the citizens. Based on this feedback, the designer identifies relevant design criteria that they can use to inform their master planning. Furthermore, parametric design tools allowed crowds to explore urban design possibilities [57].

A different approach to crowdsourcing design involves using professionals to develop designs [58]. Angelico and As introduced a system for crowdsourcing architecture via online competitions [34], which are the primary crowdsourcing workflow for producing professional architectural designs [59,60,61] but also exploit the architects [62]. Another method enables sequential and parallel design explorations based on an evolutionary development tree [11]. In this approach, participants create parallel designs and select the most effective for further refinement. Experimental results revealed that the crowdsourcing process outperformed each individual participant, which is attributed to collective intelligence [12]. Another approach engages with stakeholders and architects using crowdsourcing, in which stakeholders participate in co-briefing and co-design [14]. The outcomes of both processes are used to inform a professional design competition and are decided by a public voting session.

3. Crowdsourcing System Description

The design method implemented in the present study is based on an architectural crowdsourcing technique previously developed [11,12]. This online information system produces and delivers customized tasks to diverse participants of the design process according to the specific stage of the design process. In previous research, the system was used with architects only to produce a design without the involvement of the community.

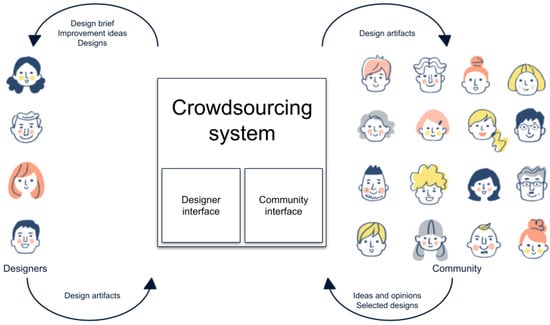

In the current study, the system involves the following three kinds of users: (1) stakeholders; (2) architects; and (3) the system manager. Stakeholders are individuals related to the future structure, such as future tenants, landlords, developers, neighbors, and future potential users. Architects are design professionals in charge of creating various design documents. Lastly, the system manager is an expert responsible for providing the strategic brief and managing the progression of the design process.

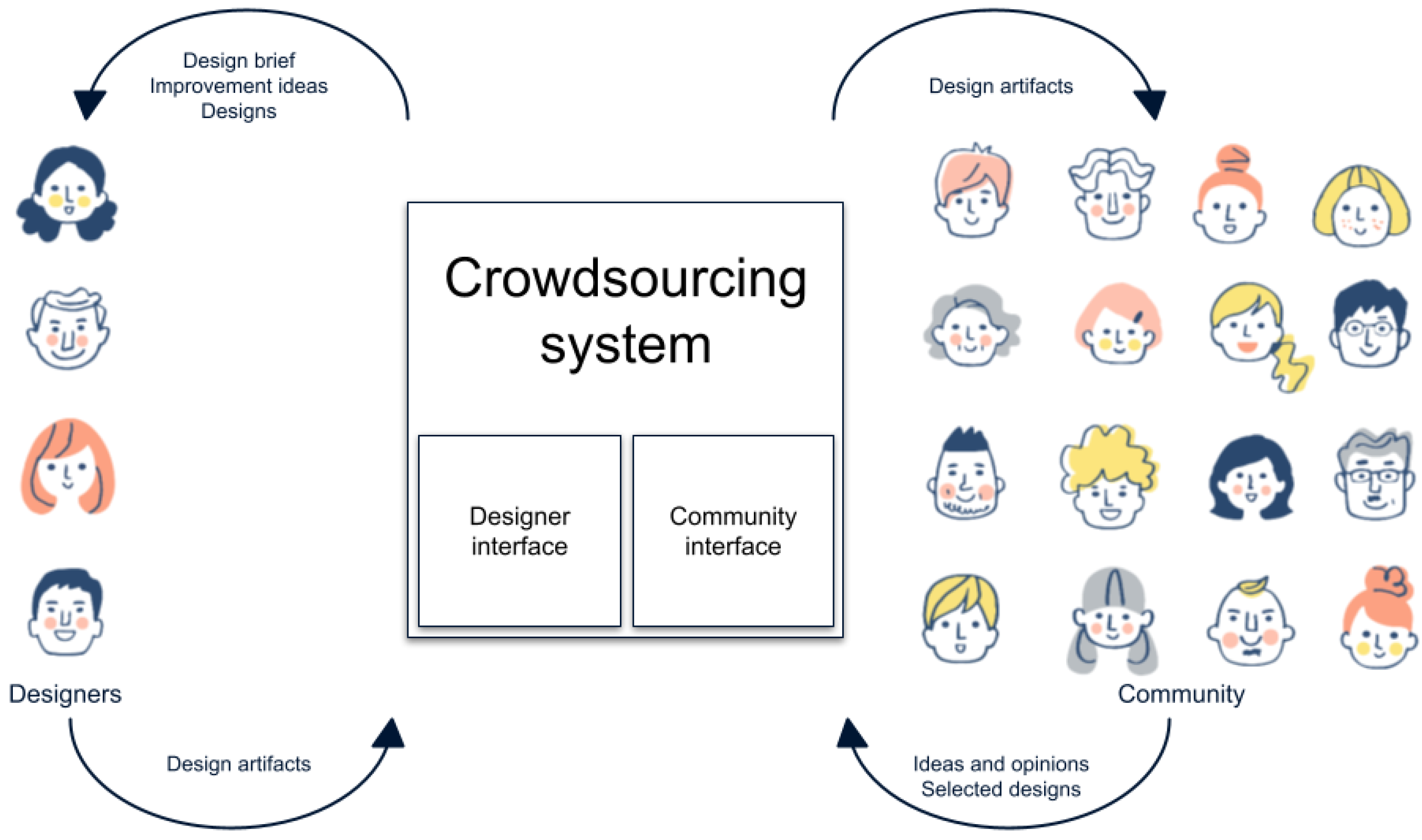

By and large, this system presupposes a negotiation between stakeholders and architect groups (see Figure 1). Specifically, the system communicates information to the participants and requests information from them accordingly, to then pass information from stakeholders to architects and back to the stakeholders. Through these iterations, the design evolves.

Figure 1. The negotiation crowdsourcing model involves the system providing tasks to the community, with the goal of collecting their insights and ideas through natural language. This input is then processed and assigned as a design task to multiple designers, who create a range of design solutions. These solutions are then presented to the community, allowing them to vote on their preferred option and provide feedback.

3.1. DSR Blocks

Generally, the process used in the present study is based on DSR (Design, Select, and Review) blocks. DSR blocks are iterative sub-processes where designs are produced, evaluated, and improved. In what follows, we briefly describe the corresponding process stages.

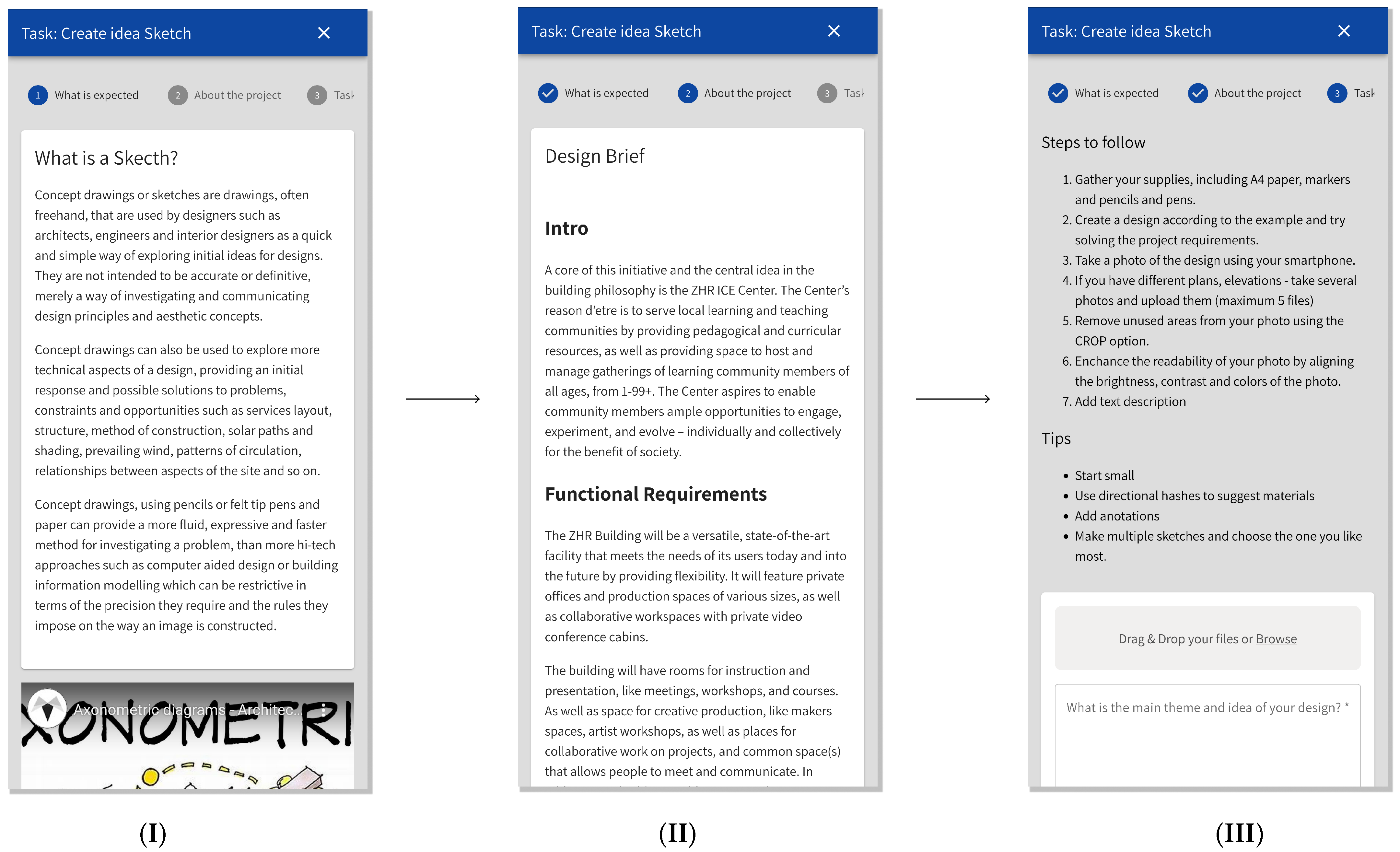

In the Design stage, architects produce design artifacts offline according to various requirements and then upload their digital artifacts to the system (see Figure 2). Architects work separately and in parallel, as if in a design competition. However, unlike design competitions, design tasks in the design process are limited by time and scope; accordingly, the process requires less effort than in competitions. In addition, architects are compensated for their working hours, and the payment is assured. At the same time, architects are motivated to perform well, as they are aware that they will receive more tasks if they succeed in producing designs selected in the process.

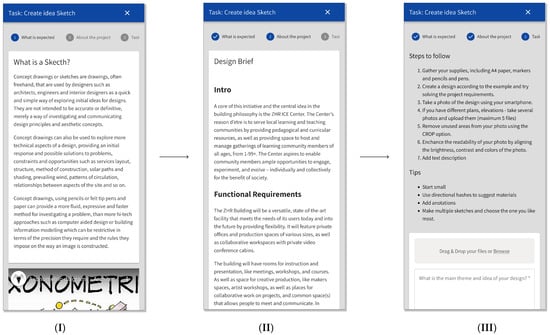

Figure 2. Design task user flow screens (from left to right): (I) The design task goals and expected design artifacts are presented and explained. (II) The design brief is presented to provide the context of the project. (III) The participant is given some technical instructions and can upload the produced artifact files.

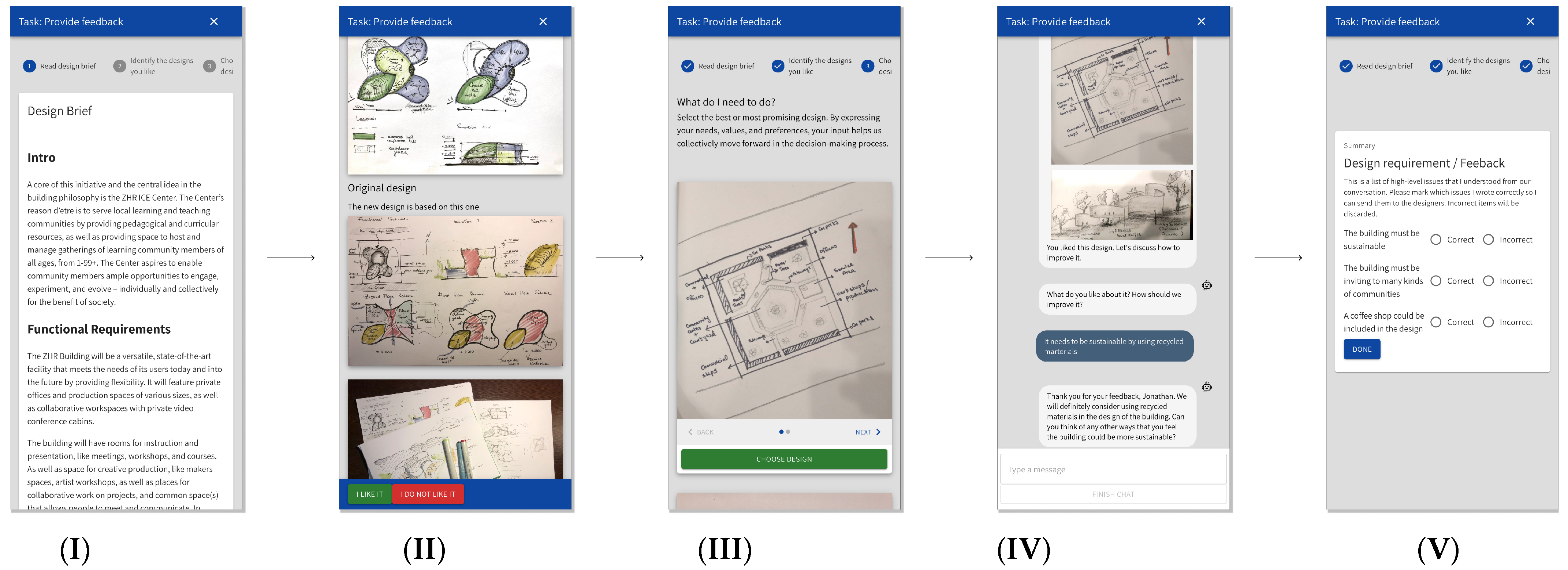

Once the design stage concludes, the process progresses to the Selection and Review stages (see Figure 3). In the Selection stage, the stakeholders evaluate the design artifacts and select the most fitting according to their opinion. In the Review stage, the stakeholders are asked to comment on how the design could improve further. These tasks are executed in parallel and without interaction between the participants.

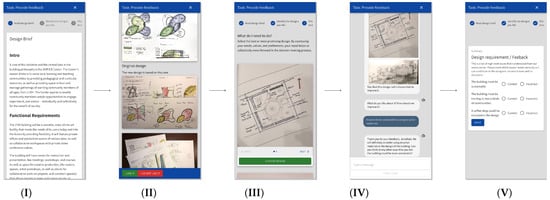

Figure 3. Selection and review tasks user flow screens (from left to right): (I) The design brief is presented to provide the context of the project. (II) New and baseline designs are presented, and the most appealing designs are marked. (III) The marked designs are presented, and only one is selected. (IV) The design is discussed using a chatbot interface that allows for communication in natural language. (V) The chatbot summarizes the conversation into a list of feedback items selected for further development.

Due to the multiplicity of opinions among the participants, these stages conclude with multiple selected designs. At the same time, many other designs are not selected and are thus removed from the process. At the end of the Select and Review stages, a list of the designs that the participants prefer, along with their improvement ideas, is produced. The selected artifacts with improvement ideas become the input of a subsequent DSR block that improves them.
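The way the Select and Review stages turn individual votes into the input of the next DSR block can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual implementation: the function names are hypothetical, and the default threshold of two votes only mirrors the assumption in H3 that designs with a single vote are removed.

```python
from collections import Counter
from typing import Dict, Iterable, List


def select_designs(votes: Iterable[str], min_votes: int = 2) -> List[str]:
    """Keep designs that received at least `min_votes` votes.

    `min_votes=2` is an assumed threshold (designs with only one vote are dropped).
    Results are ordered by vote count, ties broken alphabetically.
    """
    counts = Counter(votes)
    kept = [design for design, n in counts.items() if n >= min_votes]
    return sorted(kept, key=lambda design: (-counts[design], design))


def next_block_input(selected: Iterable[str],
                     feedback: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Pair each surviving design with its review comments for the next DSR block."""
    return {design: feedback.get(design, []) for design in selected}
```

For example, with the votes `["A", "B", "A", "C", "B", "A"]`, design C has a single vote and is removed, while A and B proceed together with whatever feedback was collected for them.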

3.2. Design Process

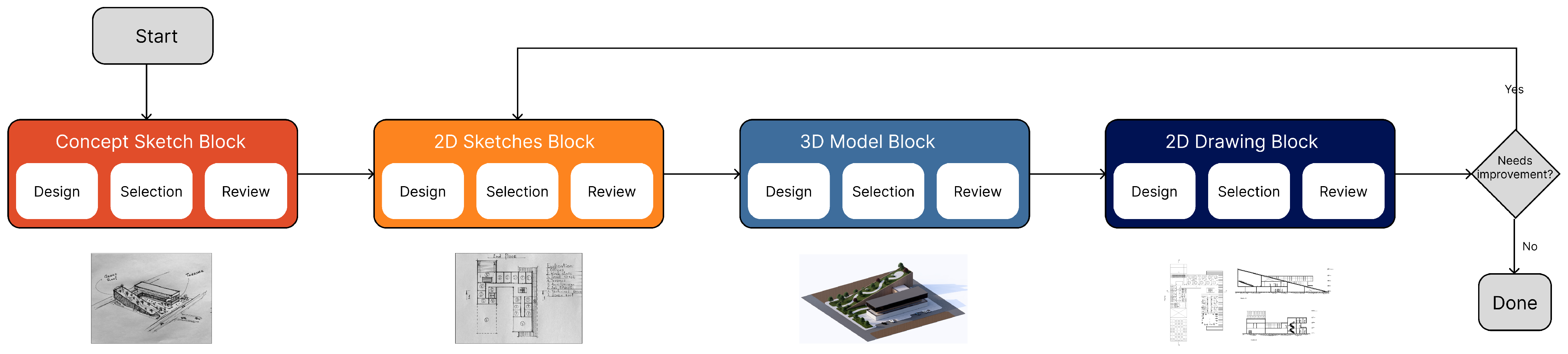

The design process consists of four types of serially executed DSR blocks. The process begins with a written design brief that includes plot measurement and photo files (see Figure 4).

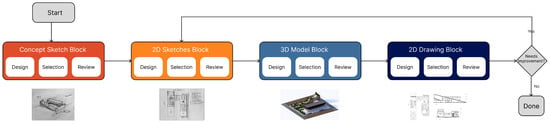

Figure 4. Design process consisting of four DSR blocks with an output example.

1. The goal of the first DSR block is to generate a variety of design ideas in the form of architectural concept sketches.

2. The concept sketches in the second DSR block are further developed into two-dimensional plans, sections, and elevation sketches.

3. The concept and 2D sketches are then processed into 3D digital models in the third block.

4. Finally, the sketches and 3D models are digitized into precise blueprints in the fourth block.

Once all four DSR blocks conclude, they can be reiterated to improve the design.
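The serial chaining of the four DSR blocks can be sketched in code. The sketch below is an assumption-laden outline rather than the actual system: the `Design` class, the function names, and the `rounds` parameter are hypothetical, and the `design`, `select`, and `review` callables stand in for the architects' and stakeholders' tasks.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Output type of each of the four DSR blocks, in execution order.
BLOCK_OUTPUTS = [
    "concept sketches",
    "2D plans, sections, and elevations",
    "3D digital models",
    "precise blueprints",
]


@dataclass
class Design:
    name: str
    stage: str = "brief"
    feedback: List[str] = field(default_factory=list)


def run_dsr_block(stage: str,
                  inputs: List[Design],
                  design: Callable[[Design, str], List[Design]],
                  select: Callable[[List[Design]], List[Design]],
                  review: Callable[[Design], List[str]]) -> List[Design]:
    """One Design-Select-Review block: produce candidates, keep some, attach feedback."""
    candidates = [d for parent in inputs for d in design(parent, stage)]
    chosen = select(candidates)
    for d in chosen:
        d.feedback = review(d)
    return chosen


def run_process(brief: Design, design, select, review, rounds: int = 1) -> List[Design]:
    """Run the four blocks serially; `rounds` > 1 reiterates the whole sequence."""
    current = [brief]
    for _ in range(rounds):
        for stage in BLOCK_OUTPUTS:
            current = run_dsr_block(stage, current, design, select, review)
    return current
```

In this outline, the selected designs of one block, with their feedback attached, are the only inputs to the next block, which is what lets the design evolve across blocks.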

4. Materials and Methods

In this section, we describe the research method used to test the crowdsourcing design method in field conditions, with an actual project, developer, and authentic community participation.

As discussed in Section 2, most previous studies on the crowdsourcing method were conducted in academic settings and included students and hypothetical design tasks. Accordingly, although the results of those studies were promising, it remained to be seen how the crowdsourcing method would perform in real-life settings. In such settings, different aspects that may influence human behavior should be considered since the stakes are high, and the design outcomes are associated with high financial costs.

4.1. The ZHR ICE Center

In order to test the crowdsourcing design method in field conditions, it was necessary to find a developer partner who planned to construct a community-oriented building, was interested in involving the community in the design process, and was ready to take risks. Therefore, we turned to local architect offices to find such a partner. After several months of searching, we found our partner for the project.

The developer partner had a 5000 sqm plot in the “Tzahar Industrial Park,” which serves five localities in the Hula Valley, northeastern Israel. On this plot, the developer wanted to construct an office and workshop building for the local high-tech industry. Of relevance for our research, the developer planned to provide 20% of the spaces to local NGOs, artists, and educators for free to contribute to the local community. This part of the building was named the “Tzahar Innovation, Culture, and Education Center” and would be supported by the rent income of the remaining parts of the building. The ICE center and office building was planned to be about 3000 sqm in size, spanning up to three stories, of which 2400 sqm would be rented and 600 sqm would be assigned to the ZHR ICE center.

The Tzahar industrial zone is jointly owned by the localities of Safed, Hazor HaGalilit, Rosh Pina, Tuba Zangariyya, and the Upper Galilee Regional Council. In terms of community participation, we were interested in a location near the village of Tuba Zangariyya, a traditional Bedouin Arab community and home to about 7000 residents. This area is considered underserved by the predominantly Jewish Israeli establishment. The Arab Al-Hayeb tribe founded this settlement in 1908, and the tribe fought alongside the Jewish people in the 1948 Independence War. In recent years, the village has suffered from high crime rates, and its socioeconomic index was 3 out of 10 as of 2019 [63].

On the opposite side of Tzahar lies the Jewish locality of Rosh Pina, established in 1875. Rosh Pina has about 3200 residents, and its socioeconomic rating was 7 as of 2019 [63]. The other localities of the ZHR industrial area are located at a greater distance and are less relevant to our investigation.

4.2. Participants

After receiving Institutional Review Board clearance, we recruited community participants for the project via an online landing page with a registration system. The page was promoted in local online groups and through advertising that reached, according to the advertising system, slightly over 15,000 people. In addition, we also reached out to local communities and asked them to share information about our project and landing page among their members. These communities included an entrepreneurs’ group, an artists’ group, a local community center, and an educators’ group. Finally, we approached neighboring employees and business owners both personally and by posting flyers in neighboring businesses.

Architects for the project were recruited through Upwork, a large-scale freelance website. We posted a job on Upwork for every crowdsourcing task and chose among several interested architects. Architects were selected based on budget, portfolios, and personal communication with them via the platform.

4.3. The Architectural Design Process

Overall, the most widely used design project delivery workflow, which consists of several consecutive stages, is the RIBA “plan of work” [64]. Of the entire workflow needed to construct a building under the RIBA plan, our participatory design project focused on the brief and concept design stages, which are most significant from the cultural, functional, and aesthetic perspectives. Therefore, our first milestone was creating a design brief and subsequent production of artifacts that would match the design concept for further design.

4.4. Creating a Design Brief

First, the developer formulated strategic objectives of the project (Step 0 in RIBA’s plan). Next, we held a “town hall” meeting with project participants who agreed to take part and discuss the project’s design requirements. After the meeting, we transcribed the discussion recording. In addition, the participants interacted with an online system through which additional design requirements were obtained. During these activities, the design requirements were collected into a single document (see Appendix A). This design brief document served as a starting point for our study.

4.5. Control Group

In order to evaluate the quality of the designs produced using the crowdsourcing process, we needed a control group, namely, alternative professional design products created based on the same design brief. To this end, we used the same design brief to launch an online architectural competition on Arcbazar, a commercial and relatively successful architectural competition “crowdsourcing” platform. The control group was not aware of the experimental part of our study. For the online architectural competition, we set a prize budget of 1400 USD (780 USD for first place, 390 USD for second place, and 140 USD for third place) and emphasized that we were looking for a concept design of a building, rather than a final solution. Other than the winners, the participants were not rewarded for their work. Therefore, we assumed that the competition would produce high-quality architectural proposals, which would be a significant challenge to the community design process.

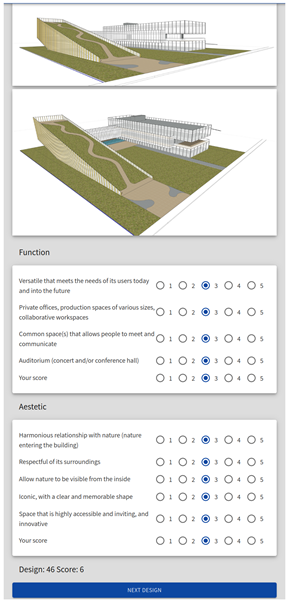

4.6. Architectural Design Quality Assessment

In order to compare the quality of the designs produced in the crowdsourcing process and in the competition, we needed to select an appropriate evaluation method. While many such methods are based on expert opinions of innovation and functionality criteria, architecture is more complex and very situated, so the corresponding designs are traditionally evaluated by expert panels with a holistic approach toward the design fit. For example, a building with traditional architecture can be the most appropriate design in a particular case over an innovative and original design.

To produce a numeric quality score for each architectural design, we used the evaluation of six architectural experts. These experts were all architects with advanced degrees, some with industry experience and others with teaching experience. Three of them had a Ph.D. degree, and three had an M.Sc. Two of the six experts were women. In order to standardize the design display, we selected two perspectives and the floor plans for each design. This was critical since some competition entries included over 20 high-quality renders and well-presented videos that other designs did not have, as we had requested only a concept design.

During the quality assessment, the experts rated the design artifacts produced by the crowdsourcing and competition groups. The rating process took place online using video conferencing software, with each expert separately and under the supervision of the research team. To ensure that the experts evaluated the works seriously, the researchers asked them to explain their final evaluation. The designs were presented to the experts in random order, and the entire rating process was recorded.

The evaluation was performed using a web-based system that included an evaluation form (see Appendix B). The experts were asked to consider each design and rate its compliance with the most crucial requirements mentioned in the design brief. The requirements were divided into aesthetic and functional categories, and the experts could add a personal rating for each category, which was included in the calculated score. Each requirement and each personal rating were rated on a scale of 1 to 5.

Once the experts filled out the forms, an average score was computed, and the researchers asked the experts if the score was appropriate. Occasionally, the experts adjusted their evaluation so that the final rating better represented their opinion. After the quality assessment session, all the expert ratings were standardized to reduce the effect of experts with a higher rating amplitude on the average. Finally, the average score was computed for each design.
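The standardization step can be illustrated with a minimal sketch. The text does not state which standardization was used, so the per-expert z-score below is an assumption, and the expert names, design labels, and raw ratings are hypothetical.

```python
from statistics import mean, pstdev


def standardize_by_expert(ratings):
    """Convert each expert's raw scores to z-scores (assumed method).

    ratings: {expert: {design: raw_score}}
    """
    standardized = {}
    for expert, scores in ratings.items():
        mu = mean(scores.values())
        sigma = pstdev(scores.values())
        # An expert who gave identical scores everywhere carries no signal.
        standardized[expert] = {
            design: (score - mu) / sigma if sigma else 0.0
            for design, score in scores.items()
        }
    return standardized


def average_per_design(standardized):
    """Average the standardized scores of all experts for each design."""
    designs = next(iter(standardized.values())).keys()
    return {d: mean(z[d] for z in standardized.values()) for d in designs}


# Hypothetical data: expert_b uses a wider rating amplitude than expert_a.
raw = {
    "expert_a": {"d1": 2.0, "d2": 3.0, "d3": 4.0},
    "expert_b": {"d1": 1.0, "d2": 3.0, "d3": 5.0},
}
z = standardize_by_expert(raw)
scores = average_per_design(z)
```

In this toy example both experts contribute identical standardized scores, so the expert with the wider raw rating range no longer dominates the per-design average.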

Since design opinions are inherently subjective, we checked that the average ratings made sense and inspected for large deviations among the experts’ ratings, which would indicate a lack of agreement. Furthermore, the Pearson correlation coefficients between the ratings provided by the experts were computed to assess whether a particular expert was exhibiting an anomalous pattern. We expected a linear correlation between every two experts’ ratings. Therefore, a negative correlation would indicate an anomaly.
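The pairwise agreement check described above can be sketched as follows. The ratings are hypothetical, and the helper names `pearson` and `anomalous_pairs` are introduced here for illustration only; they are not part of the study's software.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def anomalous_pairs(ratings):
    """Return the expert pairs whose ratings correlate negatively.

    ratings: {expert: [scores listed in the same design order]}
    """
    return [
        (a, b)
        for a, b in combinations(ratings, 2)
        if pearson(ratings[a], ratings[b]) < 0
    ]


# Hypothetical ratings of four designs by three experts.
ratings = {
    "expert_a": [1, 2, 3, 4],
    "expert_b": [2, 2, 4, 5],
    "expert_c": [4, 3, 2, 1],
}
flagged = anomalous_pairs(ratings)
```

Here every flagged pair involves `expert_c`, which is the kind of pattern that would prompt a closer look at that expert's ratings.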

4.7. Surveys

User experience data were collected via online surveys that the study participants filled in immediately after interacting with the system. We collected data from the experiment participants, architects, and the community. Since we did not have access to anonymous participants of the design competition, which served as our control group, we did not collect survey data from these individuals.

5. Results

The community design process took over two months to complete. A total of 18 community stakeholders, 30 architects, and 68 designers (the control group) participated in the study. The control group submitted 25 competition entries.

5.1. Effectiveness of the Crowdsourcing Design Process

The community design process evolution tree is shown in Figure 5. As mentioned previously in Section 4.2, in the first DSR block, we published a job on Upwork to produce a concept design as a 2 h concept sketch according to the requirements specified in the design brief. After a week, we received 17 sketches, each produced by a different architect. Once the sketches were acquired, the community participants were assigned selection and review tasks. A total of 32 participants selected seven sketches (selected artifacts had at least two votes). The block concluded with 13 participants providing 37 improvement ideas in the review task.

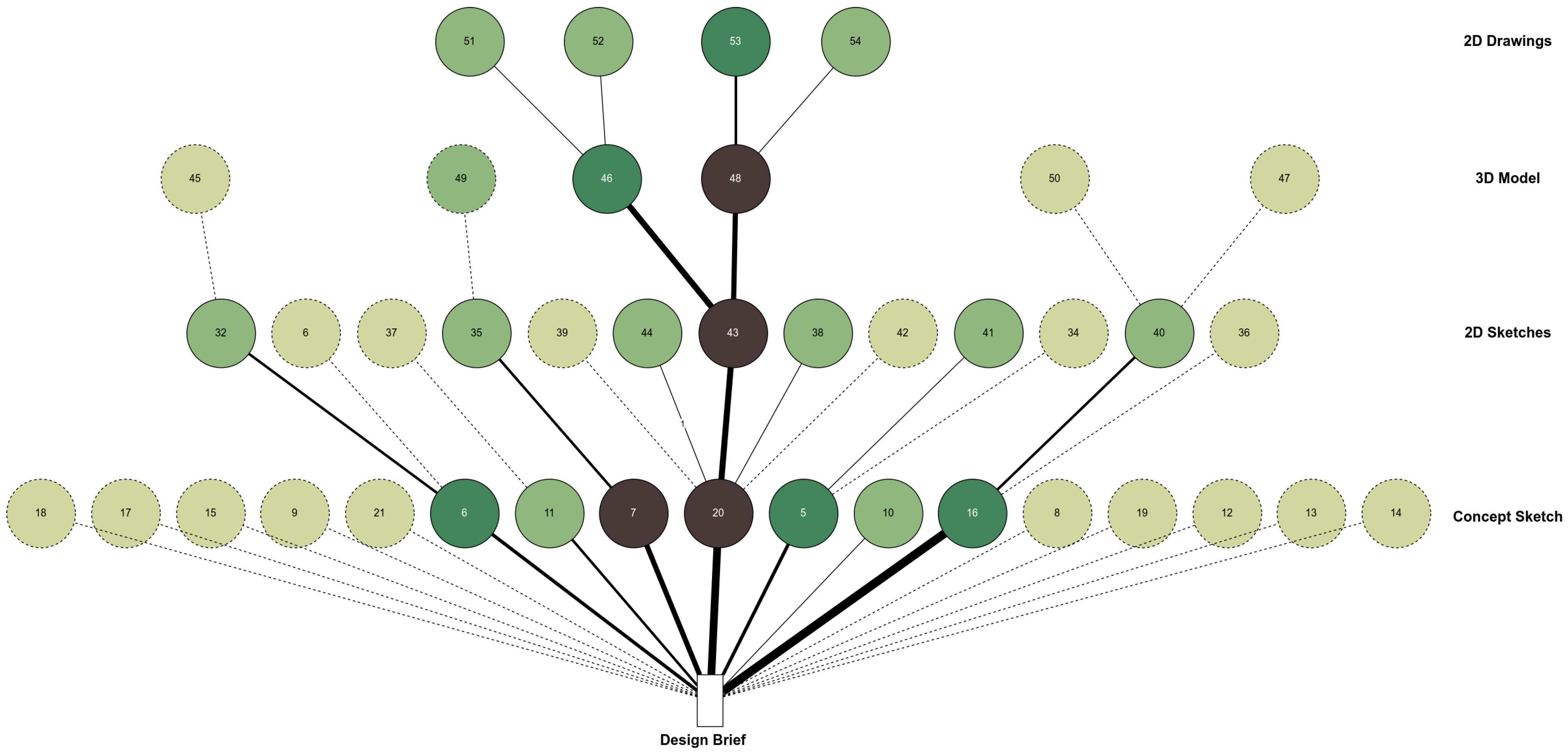

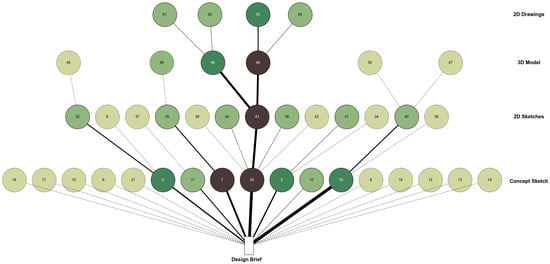

Figure 5.

Design development tree diagram. Each level represents a DSR block. The selected designs appear in a deeper tone, while the designs that were not selected by the community are presented in a lighter tone.

In the second block, the sketches were developed into plans, elevations, and section sketches. We published a new job to produce new sketches, considering the participants’ feedback, and limited the task performance time to two hours. The designers were allowed to select the design to develop from the available designs. After 13 new sketches were produced and saved on our system, the community members were assigned new selection and review tasks. Then, 18 participants examined the new plan sketches together with the concept sketches and selected five of them. In the review task, 14 participants produced 45 improvement ideas.

In the third and last DSR block, the selected designs were developed into 3D SketchUp models. We published a job again and recruited five further architects to produce six 3D models. This time we decided to recruit a smaller number of architects since we had a small number of designs that stemmed from only four concepts. The 3D tasks took longer and were more expensive. The received models were then presented to the community using a 3D viewer, which allowed for inspecting the design from different directions. At this point, 16 participants chose two of the models. Both models stemmed from the same concept sketch, meaning the design process converged.

After the selection of the models by the community, the chosen design was apparent. We posted a job to produce AutoCAD drawings from the models and sketch plans. To help the architects produce the drawings, we provided them with outlines of the floor plans, elevations, and sections produced from the models using the cross-section function. We obtained four drawings that were very similar. Since the design had converged and the differences between the drawings were insignificant, we saw no value in having the community select the design, and the process concluded.

5.2. Design Quality

Design quality was evaluated based on experts’ opinions and compared to the control—i.e., the designs obtained from an architect competition. For that competition, a total of 68 architects from around the world registered, and, in the end, a total of 25 architectural designs were submitted. During the two-month competition, we responded to various questions submitted by the contestants. In line with our expectations, the resulting designs were expressed and communicated using high-quality exterior and interior 3D renders, videos, and drawings.

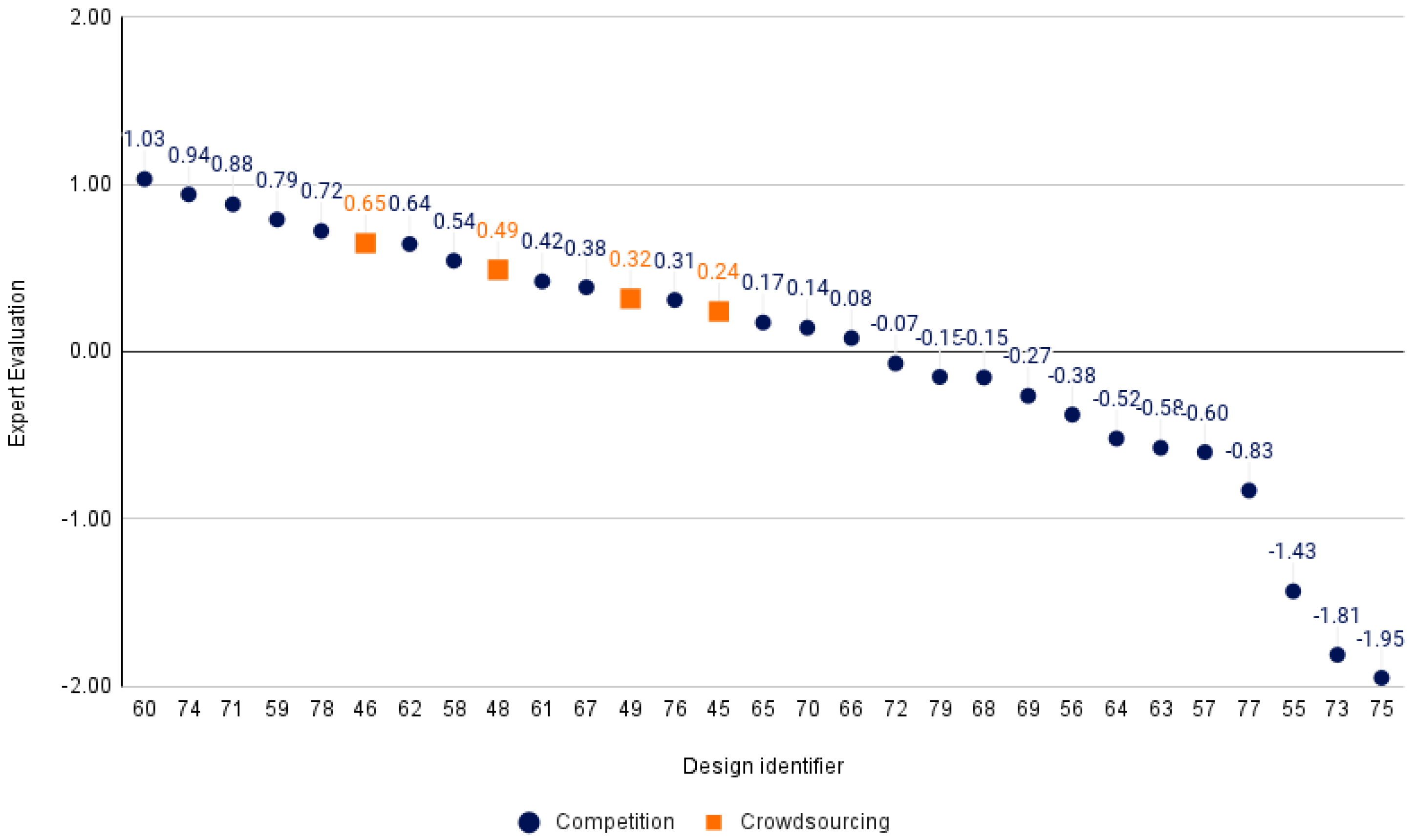

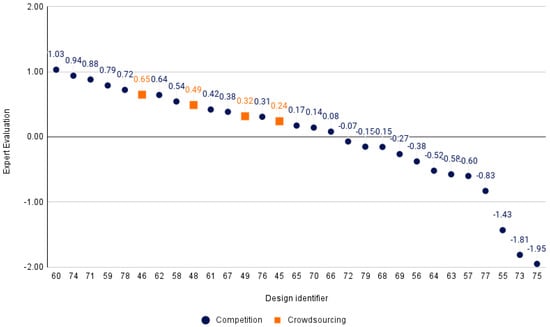

We then added the four 3D models from the crowdsourcing process (identified as designs 45–49) to the 25 designs from the competition (identified as designs 55–79) and asked six architecture experts to evaluate them. Although the participants did not select two of the four 3D models for further development, we were also interested in evaluating them. The overall average expert rating was 2.9 (min = −1.95, max = 1.03; SD = 0.77). The squared Pearson correlation was computed among the expert ratings to ensure agreement. Overall, there was a mostly positive weak to moderate correlation among the ratings, indicating a reasonable level of agreement (avg = 0.28, min = 0.15, max = 0.51).

The expert rankings are presented in Figure 6. The crowdsourcing designs received ratings of 0.65, 0.49, 0.32, and 0.24, which placed those designs at the 6th, 9th, 12th, and 14th places among all the designs produced during the competition and crowdsourcing process. The project developer chose the first places from the competition entries according to his preferences and taste. He chose designs 72, 61, and 74 (which were given 14th, 11th, and 2nd places among the competition entries). The latter rating thus did not correlate with the experts’ evaluation.

Figure 6.

The results of the experts’ rating of competition designs and the designs obtained in the community design process.

Taken together, the results of the quality evaluation demonstrated that (1) the crowdsourcing process produced equally high-quality designs as compared to architectural competition entries; (2) the community successfully selected an excellent design, which was among the top ones (6th place out of 29) and better than 80% of the proposals produced by competition participants; (3) still, the quality of five competition designs was higher than that of the designs produced in the community process.

5.3. Design Costs

In the first DSR block, we received 17 concept sketches that cost a total of $261 (avg. = $15.3, min = $5, max = $21). The second DSR block yielded 13 2D sketches and cost $235 (avg. = $22.81, min = $5.25, max = $42). In the third DSR block, the participants produced six 3D models out of the selected designs at the cost of $105 (avg. = $17.5, min = $5.25, max = $21). Finally, we paid $185 (avg. = $42.25, min = $35, max = $50) for four CAD drawing sets. Overall, the total net cost of crowdsourcing freelancers’ compensation amounted to $786 (excluding the Upwork website fees and tip, ca. $21.23 for each job). As the design process progressed, from the 3D model DSR block onward, there was less need to produce multiple artifacts since the workers produced models very similar or identical to the baseline models.

As mentioned in Section 4.5, the total design competition cost was $1400. The competition participants produced a total of 25 designs. Therefore, on average, we spent $56 for each design in the competition (yet, as discussed earlier, only the winning architects were compensated for their work). The number of hours invested in each design remains unknown. However, considering that the competition designs were presented using high-quality renders and a complete set of plans, we can extrapolate that creating the designs, drawings, renders, and videos took several days to complete.
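As a quick arithmetic check of the figures reported in this section, the sketch below sums the net crowdsourcing payments per block and divides the competition budget by the number of entries. The dictionary keys and variable names are illustrative; only the dollar amounts and artifact counts come from the text.

```python
# (net cost in USD, number of artifacts) as reported for each block
crowdsourcing_blocks = {
    "concept sketches": (261, 17),
    "2D sketches": (235, 13),
    "3D models": (105, 6),
    "CAD drawing sets": (185, 4),
}

# Net freelancer compensation, excluding Upwork fees and tips
crowdsourcing_total = sum(cost for cost, _ in crowdsourcing_blocks.values())
crowdsourcing_artifacts = sum(count for _, count in crowdsourcing_blocks.values())

competition_budget = 1400
competition_entries = 25
cost_per_competition_entry = competition_budget / competition_entries

print(f"Crowdsourcing: ${crowdsourcing_total} for {crowdsourcing_artifacts} artifacts")
print(f"Competition: ${cost_per_competition_entry:.2f} per submitted design")
```

The two per-item figures are not directly comparable, since the crowdsourcing artifacts are short, time-limited tasks, whereas each competition entry is a complete presentation.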

5.4. Participants’ Satisfaction

Next, the study participants’ insights and opinions about the crowdsourcing design process were obtained during a concluding meeting with six participants. During the discussion, the participants expressed their high satisfaction with the process and compared the community design process to common public participation processes. However, unlike standard participatory design processes in Israel, in this project, the participants were involved in design decisions over a significant period of time and saw how their involvement affected the final design. Moreover, they agreed that the selected design was the most appropriate, even though there was still some room for further improvement.

To explore the ways in which the design process can be improved, we encouraged the participants to share their experiences with aspects they found difficult and confusing or where they thought that the technology would benefit from improvement. One participant said, and others agreed on the point, that sometimes the emails came as a surprise in the evening. As a result, the participants felt they needed to spend more time examining the designs in-depth, frequently acted based on intuition, and felt pressed for time. This made them wonder if it was fair to quickly decide on the designers’ work that took a significant time to produce.

Furthermore, one of our participants, an architect with substantial experience in public participation processes in Israel, compared the process of this project to those carried out in his office. He mentioned that there was an aesthetic bias caused by the technical graphic quality of the architectural expression of an idea. This bias can, in his opinion, result in favoring aesthetic ideas over ideas with a good design concept.

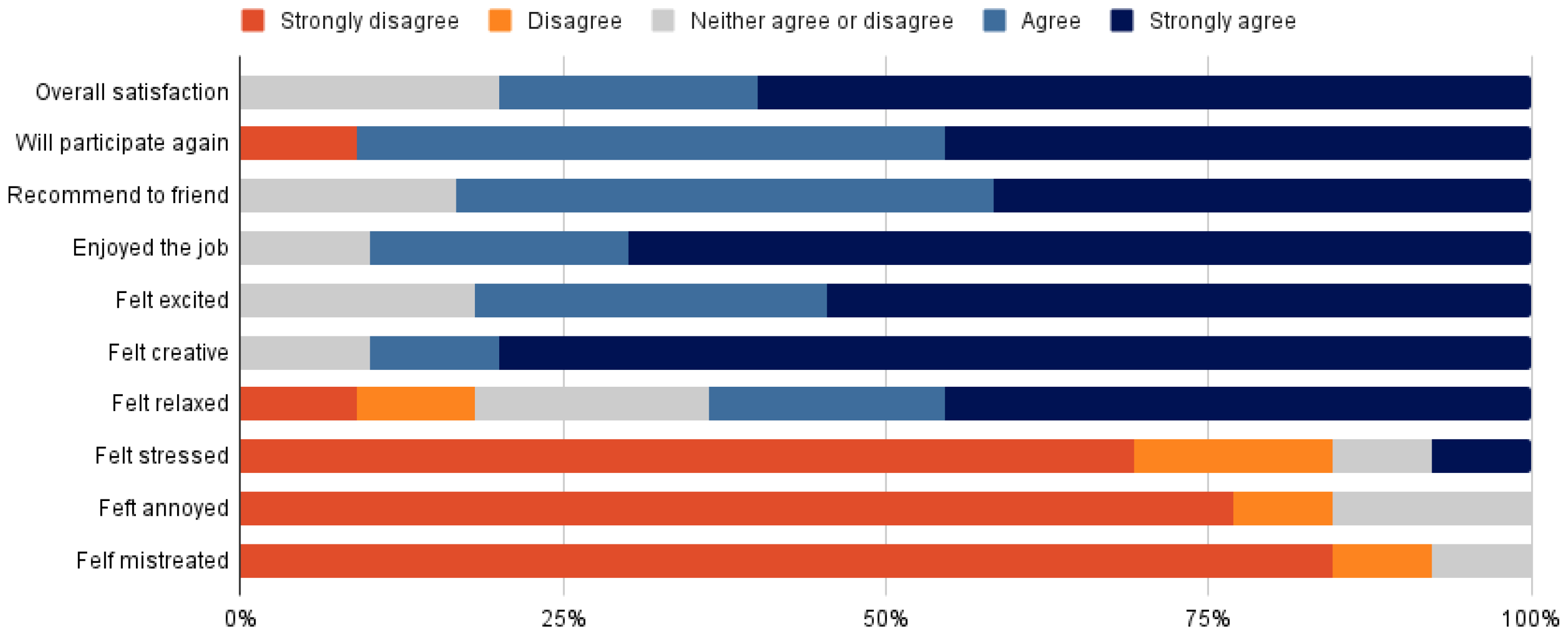

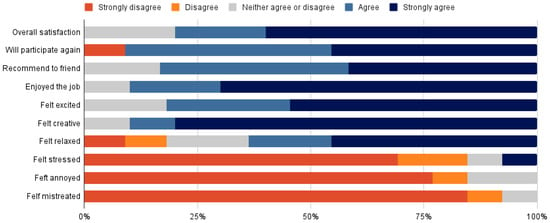

5.5. Architects’ Satisfaction

To learn about the crowd-working architects’ satisfaction, we asked them to complete a survey and comment on their experience. The participants were asked to rate the survey items using a 5-point Likert scale. A total of 14 architects (9 males, 5 females) aged, on average, 29.6 years old (min = 25, max = 40, SD = 4.54) participated in this survey. Among them, six had a Bachelor’s degree, while eight had a Master’s degree. In terms of employment status, eleven were self-employed, two were looking for work, and one preferred not to answer.

Overall, the survey results showed high satisfaction among the architects with their participation in the design process (see Figure 7). The architects participated mainly for financial reasons, and most thought the remuneration was fair. However, one architect stated that he preferred to work as a freelancer instead of working in an office because of the COVID-19 epidemic. Another architect said that he had to work as a freelancer because there was a war in his country. This architect also thought that the reward was insufficient.

Figure 7.

Architects’ survey results revealing the satisfaction of freelance architects with performing the various jobs.

6. Discussion

We presented a crowdsourcing negotiation model informed by design method processes (see Figure 1). This model is based on two crowdsourcing processes: one performing design generation and exploration through a professional design competition and the other collecting and aggregating feedback and opinions from the project stakeholders.

In this section, we summarize the results of experimenting with a novel crowdsourcing architecture design system in real-life settings and discuss the challenges and opportunities afforded by the proposed system.

6.1. Effectiveness of the Crowdsourcing Process

The renowned designer Sir Alec Issigonis famously remarked that “a camel is a horse designed by a committee” in criticism of collective decision-making, signifying that numerous conflicting opinions can lead to a poorly constructed design. There is an agreement that citizen participation is fundamental for democracy. Some researchers have argued that large-scale collaborations may demonstrate collective intelligence [12]. However, participatory processes are costly and time-consuming, and proof of their performance and effectiveness needs to be provided.

Recent research has developed methods of large-scale urban participation; yet, no evaluation of the quality of the design has been made [52], only an assessment of the process [14] or the properties of the designs [51]. In this study, we evaluated the outcome quality of the process in comparison to a control group to establish a measure of achievement. Although this measure is subjective, it supplies a reference point, and we think such a measure is necessary to advance the research on design techniques.

Concerning our first research question (RQ1—“How effective is the crowdsourcing process in terms of design quality, cost, and user satisfaction in real-life conditions?”), our results showed that the crowdsourcing process was cheaper than the competition and resulted in fewer designs with above-average design quality. However, the competition process resulted in several designs that were better than the designs obtained in the crowdsourcing process. Furthermore, since only one design was allowed to be established on a given plot, the competition process produced a better design.

One of the reasons for this outcome is that the competing architects were not limited by time and were rewarded only if they produced the best design (i.e., they became winners of the competition). Accordingly, of 25 participating architects, 22 competitors were not rewarded for their work, including the designer who produced the best design (in the experts’ evaluation), which is exploitative [62]. However, the results of our survey of crowdsourcing architects revealed their high satisfaction with the reward and the experience of working on the project. Taken together, our findings clearly demonstrate that the community design process is fairer and positively impacts the workers and community. However, further research is needed to enhance the quality of design outcomes and to surpass the performance of the competition model.

6.2. Non-Professional Participants’ Quality Evaluation

As concerns our second research question (RQ2—“Can non-professional participants choose the best designs in experts’ assessment?”), the participants agreed that it was challenging to evaluate the designs. In the concluding discussion, they shared their insecurity as to whether they could select the right design; they also felt inadequate in deciding which design should be selected since much thought was invested in all participating designs. Another difficulty was that the developer selected the winning design that was evaluated as low-quality by the experts. Finally, one of the community participants, an expert architect in public participation, mentioned that the graphic presentation of a design could distort the election results.

However, all participants agreed that in the end, only the best designs were chosen at the different stages, resulting in an excellent design. Consistently, the experts’ rating also found that the selected crowdsourcing design was of high quality.

Acknowledging that design quality evaluation is a challenging task for non-professionals and experts alike, our results showed that the variance of opinions was not significant, and with a sufficient number of participants, the “wisdom of the crowd” can mitigate various biases, errors, and differences of opinion so as to identify the best designs. In previous research, this phenomenon was observed with architecture students with varying levels of education [11]. In the present study, we also found confirmatory evidence of this pattern with non-professionals.

6.3. Achieving Consensus

One of our motivations for developing and testing the effectiveness of the participatory design method was to improve democratic traditions through the effective communal participation of various actors in the design process. Architectural design dramatically impacts the urban quality of life, and involving the community in the design process could result in better designs. Moreover, as discussed in the introduction, design is a “wicked problem,” and the solutions can be subjective and situated in the context. Accordingly, design problems have numerous valid solutions that depend on the audience.

In general, the design process algorithm supports exploring the solution space with multiple design ideas and developing the best ones in parallel, thus allowing for the development of various creative solutions. However, as more design candidates are produced in each iteration, it may become difficult to reduce their number to reach a consensus. This challenge can be solved by pruning ideas based on the tree-like development structure. In the present study, once several designs were selected in the current DSR block, the remaining idea branches from previous levels were removed. For instance, in the third iteration, three designs (49, 46, and 48) were selected with 4, 6, and 6 votes, respectively. Considering that designs 46 and 48 stem from the same baseline design, we favored their shared branch over design 49, since together they received 12 votes against only four, that is, 75% of the participants.

Answering RQ-3, “How can the iterative voting process achieve an agreement or consensus?” we used an evolutionary approach to select a branch of the design tree that represents the agreement of most of the participants. In the earlier iterations, designs selected only once were removed, which is below 6.25% of the votes. However, when the process progressed to the third iteration, the design branch with 75% of the participants’ votes was selected.
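The branch-level aggregation can be sketched as follows. The vote counts for designs 46, 48, and 49 come from the text; the branch labels, the function names, and the `min_votes` parameter are hypothetical, and the code is an illustration of the idea rather than the system's actual implementation.

```python
from collections import defaultdict


def prune_single_votes(votes, min_votes=2):
    """Keep only designs that received at least `min_votes` votes."""
    return {design: n for design, n in votes.items() if n >= min_votes}


def branch_shares(votes, baseline_of):
    """Sum the votes of designs sharing a baseline and return each
    branch's share of all votes cast."""
    totals = defaultdict(int)
    for design, n in votes.items():
        totals[baseline_of[design]] += n
    all_votes = sum(votes.values())
    return {branch: total / all_votes for branch, total in totals.items()}


# Third-iteration votes as reported; branch labels are illustrative.
votes = {49: 4, 46: 6, 48: 6}
baseline_of = {46: "branch_A", 48: "branch_A", 49: "branch_B"}

shares = branch_shares(votes, baseline_of)
winner = max(shares, key=shares.get)
print(winner, shares[winner])
```

Counting votes per branch rather than per design prevents closely related derivatives from splitting the vote between them.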

These thresholds were essential to expedite the design development since, in this real-life project, there were time and budget considerations imposed by the developer. Therefore, developing many designs, especially those selected only once, would have been wasteful. Instead, it made sense to produce more design derivatives from the designs that were selected repeatedly. Moreover, there was visible participation fatigue over time, which is a further reason to shorten the design process by removing designs with only minor changes from the selection.

6.4. Ending the Design Process

In this case study, we aimed to address the problem of when the design process should stop and formulated RQ-4 as “When should the crowdsourcing process stop?”. In previous studies, the stopping condition was that a human manager decided that the design was ready once a 3D model was produced [11,12]. The reason is that the 3D model integrates all design sketches spatially. Other participatory approaches utilized a human manager to oversee the procedure [14,51,52]. Our hypothesis was that the process should stop when a consensus is achieved. As mentioned, the process did not achieve a consensus, and the design was selected based on a significant majority vote.

We noted several aspects of the crowdsourcing process that should be considered in order to determine when to stop the process. We learned from the concluding discussion meeting that the community members found it challenging to identify differences between similar designs. This challenge is especially acute when using a mobile phone to review architectural drawings, as most participants did. This brings another challenge: slight differences between the designs are hard to evaluate without professional knowledge and experience. This can be frustrating to non-professional participants since they feel that they might not be experienced enough to judge professional designs.

This is problematic, as many iterations are necessary for successful design and collective intelligence has been found to emerge after a substantial number of iterations [12]. Several strategies can be employed, including stronger engagement from stakeholders, clarification of differences between design candidates, and emphasizing the importance of participation. However, together with the participation fatigue over time, our observations suggest that in advanced design stages, where more minor adjustments are made, the participatory process should adjourn and move into a more detailed design phase involving professionals. Future research is needed to determine the optimal measure for addressing this issue.

6.5. Who Decides What Goes in?

The question of what happens with user input is complex. It is considered the ’black box’ of participatory planning [65], with algorithms or people sorting and filtering the data behind the scenes [66]. This allows planners or politicians to reject ideas that are not in line with their vision without considering citizens’ interests.

On the contrary, the current system permits citizens to provide input, regardless of whether it is in agreement or not. This input is then given to multiple designers who make professional decisions and create new solutions that address only some of the issues. Finally, through the design evaluation process, the participants can decide which design is most suitable and the best compromise.

In this study, the process and the transparency it provided engendered a high degree of trust in the community. Despite the success of the process, it is important to note that the concept design still needs to be further developed. Without public oversight, the design may be altered, leading to a decrease in trust from the citizens who had previously been involved in the process.

6.6. Limitations

The study had several limitations that arose from the field conditions of the case study. As a result, the research was limited to a specific geographic region and population, so the results may not be applicable or generalizable to other populations or areas.

A further limitation of the research was the private ownership of the design project, which dampened some of the participants’ motivation in the design process. Furthermore, the design project was situated in an industrial area with limited accessibility to the community. It is recommended that future research involves a publicly owned design project in close proximity to a community.

The research team employed an email-based approach to communicate with the participants, which may have limited the sample size due to the inability to contact those who did not use email regularly. To improve the response rate in future research, it is recommended that phone numbers be collected and communication be conducted using more immediate methods, such as instant messaging.

Despite these limitations, the results of this study provide insight into the effects of design projects on the community.

7. Conclusions

In this study, we experimentally tested a crowdsourcing system developed to produce conceptual designs in an actual architectural design project. Our results revealed that the crowdsourcing process based on the proposed system yielded designs of similar quality to those produced by expert architects in a design competition. Moreover, we also found that the crowdsourcing process allowed the community members to influence the final design by voting and providing feedback. The latter finding clearly demonstrates the value and utility of the “wisdom of the crowd” in early conceptual design stages. Furthermore, our results also demonstrated that the designs produced using the participatory approach received very high satisfaction ratings from the community members. These high ratings were afforded by the transparent involvement of community members in the design process.

Furthermore, while our results showed that the participants of the design competition succeeded in producing superior designs, the competition approach failed to compensate the designers for their effort fairly. Overall, this lack of compensation to freelancers is unsustainable over time since, with an increase in the number of contestants, their chance to be compensated decreases. This highlights the advantages of using the crowdsourcing process, which, as an alternative solution, produces high-quality designs, generates high community engagement, and is, at the same time, fair toward the participating designers.

Finally, our results also revealed that in real-life projects, the participatory design process should not progress beyond the conceptual stages since more minor deviations in the design are harder to identify and evaluate by laypeople. Our findings also showed that taking into account the participants’ fatigue and budget constraints, the design process has to converge after fewer iterations than we initially expected.

Author Contributions

Conceptualization, methodology, J.D., S.Y., Q.Y. and A.S.; software, formal analysis, J.D.; investigation, data curation, J.D. and S.Y.; resources, A.S., J.D. and S.Y.; writing—original draft preparation, J.D.; writing—review and editing, J.D., S.Y., Q.Y. and A.S.; visualization, J.D.; supervision, A.S. and Q.Y.; project administration, J.D.; funding acquisition, Q.Y. and A.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by Valiant Limited.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the Technion Institute of Technology (No. 118571, 15 May 2022) and by Cornell University Institutional Review Board Concurrence of Exemption Notice IRB0143299.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Acknowledgments

The research team would like to thank Dan Nissimyan for his gracious financial support and for providing the opportunity to experiment with the ICE project. We would like to acknowledge the help of our research assistants Roni Hillel and Tamar Levinger from Technion MTRL and Dasha Sobotine from the Technion IIT. We would also like to thank the Valiant Investment team, including Eylon Yadin, Omer Aviram, and Mike Schnall.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. The Design Brief

Appendix A.1. The ZHR Building and Innovation, Culture, and Education Center

We are designing the ZHR Building, a 3000 square meter building for industrial, technological, and educational companies and their respective communities. The building will contain the ZHR Innovation, Culture and Education (“ICE”) Center alongside offices and micro-manufacture sites. This is a collaborative design project, in which the community produced this design brief together with the developer.

Appendix A.1.1. Information Provided by the Developer

The Site

The plot is located in the Zefat, Hatzor, Rosh Pinna Industrial Park (ZHR) in northern Israel, just off Route 90. The Industrial Park serves the municipalities and residents of Zefat, Hatzor, Rosh Pinna, Tuba Zangariyya, and the Upper Galilee Regional Council (comprised of 29 localities, a horse ranch, and the Domus Galilee). The park serves ±60,000 citizens and their economic initiatives.

The Industrial Park contains high-tech companies, government utility services, heavy industry, service providers, commercial businesses, and a wedding hall.

The Developer—Coffeeshop Alchemy LLC

We are a learning organization that believes in cultivating and developing learning dispositions throughout life. However, only ‘part’ of the building is explicitly dedicated to learning communities. The OECD defines a “learning organization” as a place where the beliefs, values, and norms of employees are brought to bear in support of sustained learning; where a “learning atmosphere” or “culture” is nurtured, and “learning to learn” is essential for everyone involved.

Churchill declared, “We shape our buildings; thereafter they shape us.” Almost 100 years later, we still agree that the building should support the development of learning dispositions and growth mindsets among its inhabitants. A rich variety of spaces can provide tenants with increased choice, access, and agency in how they prefer to learn, work, and teach. Thoughtfully designed spaces can encourage people to become engaged members of a community, and they can help support people in taking intellectual risks. We have learned that this is where the magic happens. Proper design, configuration, and flexibility can catalyze learning, action, and breakthroughs, and that’s Coffeeshop Alchemy. The company is excited to also build its offices in this building.

At the core of this initiative, and the central idea in the building philosophy, is the ZHR ICE Center. The Center’s raison d’être is to serve local learning and teaching communities by providing pedagogical and curricular resources, as well as providing space to host and manage gatherings of learning community members of all ages, from 1–99+. The Center aspires to give community members ample opportunities to engage, experiment, and evolve, individually and collectively, for the benefit of society.

Tenants/Inhabitants

The tenants will likely be information industry businesses such as software, services, small manufacturing, and commerce. A central part of the building will be allocated to the ZHR ICE Center.

Collaborative and common spaces should serve to catalyze social interaction and keep meetings efficient and enjoyable. We are interested in versatile “Unofficial Spaces” whose function is not explicitly defined and that are open to interpretation and ephemeral ownership by the people who use them.

People will arrive at the building mainly by car since it is remote. It should also be accessible by bicycle or on foot. A train station is planned to be constructed about 1000 m from the plot in the future.

Appendix A.1.2. Information Provided by the Community

Functional Requirements

The ZHR Building will be a versatile, state-of-the-art facility that meets the needs of its users today and into the future by providing flexibility. It will feature private offices and production spaces of various sizes, as well as collaborative workspaces with private video conference cabins.

The building will have rooms for instruction and presentation, such as meetings, workshops, and courses. It will also have space for creative production, such as makerspaces and artist workshops, places for collaborative work on projects, and common space(s) that allow people to meet and communicate. In addition, the building could have an auditorium (concert and/or conference hall), conference breakout rooms, a changing art display space, and spaces for sports or physical fitness. The building will also have an outdoor plaza/yard, a cafeteria/kitchen/coffee corner and dining room with seating areas, and formal and informal meeting places.

Aesthetic Aspirations

The aesthetic vision for the project is to create a building that would have a harmonious relationship with nature (nature entering the building), be respectful of its surroundings, and allow nature to be visible from the inside. The building should be designed to be iconic, with a clear and memorable shape. The goal is to create a space that is highly accessible and inviting, with plenty of light and open space. The elevations of the building should be designed to be innovative and inviting and to show the uses of the building by being transparent.

The building should be designed in a way that takes advantage of the stunning views of the mountains and the surrounding nature. The building has two fronts, one facing the street (south) and the other facing the inter-city road (north).

Sustainability and Accessibility Aspirations

The building should be designed to be as sustainable and eco-friendly as possible. It should be made of materials that can be reused or recycled, and that require minimal energy to produce. It should be designed and built in a way that minimizes operational energy requirements, for example, positioning the structure in a way that leverages the natural sun and wind directions to reduce energy needs.

All spaces in the building should be accessible, comfortable, welcoming for all ages and abilities, and adaptable enough to be used for a variety of purposes.

Social Impact

The building, in its entirety, acts as a physical platform for cultural and educational innovation, benefiting both ICE and other tenants. The building serves as a hub for the community, offering a variety of activities and places to gather, both during the day and in the evening. It should showcase the community’s art and culture, both inside and out, and provide a space for youth to meet.

The building will serve as a fulcrum for local communities to come together, leverage talents and creativity, learn new skills and experiment with abilities, develop one’s potential, build long-term relationships, and improve the learning experience and outcomes.

Appendix A.1.3. Technical Requirements

Technical Constraints

- Plot size: 5000 square meters (m²). Indicated by a blue line on the surveyor’s map.

- Building size: ±3000 m²

- The building must be 5 m away from the neighboring plots and adjacent streets (visualized as a red line on the surveyor’s map).

- Maximum height: 3 stories—12 m

Suggested Space Allocation

- Industrial spaces
  - 1500 m² of “offices, workshops, and production” (various spaces)
  - 450 m² for commercial use (maximum)
  - 450 m² of service areas (stairs, storage, technical, corridors)

- Community Center (ICE)
  - 450 m² of “offices, workshops, and production” (various spaces)
  - 150 m² of service areas (stairs, storage, technical, corridors)

- Parking
  - 45 cars
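The suggested allocation can be checked against the stated building size with a few lines of arithmetic. The following is a minimal sketch in Python, not part of the original brief: the areas are copied from the list above and read as square meters, while the names (`ALLOCATION_M2`, `zone_totals`, `min_footprint`) and the assumption that floor area is spread evenly over the maximum number of stories are illustrative only.

```python
# Areas in square meters, copied from the "Suggested Space Allocation" list.
# Parking (45 cars) is not given as an area in the brief, so it is left out.
ALLOCATION_M2 = {
    "Industrial spaces": {
        "offices, workshops, and production": 1500,
        "commercial use (maximum)": 450,
        "service areas": 450,
    },
    "Community Center (ICE)": {
        "offices, workshops, and production": 450,
        "service areas": 150,
    },
}

BUILDING_SIZE_M2 = 3000  # stated building size (approximate)
PLOT_SIZE_M2 = 5000      # stated plot size
MAX_STORIES = 3          # stated maximum height in stories


def zone_totals(allocation):
    """Sum the listed areas for each zone."""
    return {zone: sum(spaces.values()) for zone, spaces in allocation.items()}


def min_footprint(total_area_m2, stories):
    """Smallest footprint if floor area is spread evenly over all stories.

    The even split is an illustrative assumption, not a requirement
    stated in the brief.
    """
    return total_area_m2 / stories


totals = zone_totals(ALLOCATION_M2)
total_area = sum(totals.values())
footprint = min_footprint(total_area, MAX_STORIES)

print(totals)                        # area per zone
print(total_area, BUILDING_SIZE_M2)  # allocated vs. stated building size
print(footprint / PLOT_SIZE_M2)      # share of the plot covered by the footprint
```

Under these assumptions, the listed areas add up to the stated building size, and a three-story building with evenly sized floors would cover one fifth of the plot, before accounting for the 5 m setback and parking.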

Appendix B. Expert Evaluation Web-Form

References

- Schön, D.A. The Reflective Practitioner; Routledge: London, UK, 2017.

- McDonnell, J. Collaborative negotiation in design: A study of design conversations between architect and building users. CoDesign 2009, 5, 35–50.

- Calderon, C. Unearthing the political: Differences, conflicts and power in participatory urban design. J. Urban Des. 2020, 25, 50–64.

- Reich, Y.; Konda, S.L.; Monarch, I.A.; Levy, S.N.; Subrahmanian, E. Varieties and issues of participation and design. Des. Stud. 1996, 17, 165–180.

- Luck, R. Participatory design in architectural practice: Changing practices in future making in uncertain times. Des. Stud. 2018, 59, 139–157.

- Münster, S.; Georgi, C.; Heijne, K.; Klamert, K.; Rainer Noennig, J.; Pump, M.; Stelzle, B.; van der Meer, H. How to involve inhabitants in urban design planning by using digital tools? An overview on a state of the art, key challenges and promising approaches. Procedia Comput. Sci. 2017, 112, 2391–2405.

- Krüger, M.; Duarte, A.B.; Weibert, A.; Aal, K.; Talhouk, R.; Metatla, O. What is participation? Emerging challenges for participatory design in globalized conditions. Interactions 2019, 26, 50–54.

- Brabham, D.C. Crowdsourcing as a Model for Problem Solving: An Introduction and Cases. Convergence 2008, 14, 75–90.

- Dortheimer, J.; Margalit, T. Open-source architecture and questions of intellectual property, tacit knowledge, and liability. J. Archit. 2020, 25, 276–294.

- Transit Cooperative Research Program; Transportation Research Board; National Academies of Sciences, Engineering, and Medicine. Public Participation Strategies for Transit; Transportation Research Board: Washington, DC, USA, 2011; p. 22865.

- Dortheimer, J.; Neuman, E.; Milo, T. A Novel Crowdsourcing-based Approach for Collaborative Architectural Design. In Proceedings of the 38th eCAADe Conference Anthropologic: Architecture and Fabrication in the Cognitive Age, Berlin, Germany, 16–17 September 2020; pp. 155–164.

- Dortheimer, J. Collective Intelligence in Design Crowdsourcing. Mathematics 2022, 10, 539.

- Frich, J.; Mose Biskjaer, M.; Dalsgaard, P. Twenty Years of Creativity Research in Human-Computer Interaction: Current State and Future Directions. In Proceedings of the 2018 Designing Interactive Systems Conference, Hong Kong, China, 9–13 June 2018; pp. 1235–1257.

- Hofmann, M.; Münster, S.; Noennig, J.R. A Theoretical Framework for the Evaluation of Massive Digital Participation Systems in Urban Planning. J. Geovis. Spat. Anal. 2020, 4, 3.

- Ataman, C.; Herthogs, P.; Tuncer, B.; Perrault, S. Multi-Criteria Decision Making in Digital Participation—A framework to evaluate participation in urban design processes. In Proceedings of the 40th eCAADe Conference Co-creating the Future: Inclusion in and through Design, Ghent, Belgium, 13–16 September 2022; pp. 401–410.

- Gero, J.S. Constructive Memory in Design Thinking. In Design Thinking Research Symposium: Design Representation; MIT: Cambridge, MA, USA, 1999; pp. 29–35.

- Rittel, H.W.J.; Webber, M.M. Dilemmas in a General Theory of Planning. Policy Sci. 1973, 4, 155–169.

- Simon, H.A. The structure of ill structured problems. Artif. Intell. 1973, 4, 181–201.

- Gregory, S.A. Design and the Design Method. In The Design Method; Gregory, S.A., Ed.; Springer: Boston, MA, USA, 1966; pp. 3–10.

- Cross, N. Designerly Ways of Knowing: Design Discipline. Des. Issues 1982, 4, 221–227.

- Jones, C.J. A Method of Systematic Design. In Proceedings of the Conference on Design Methods; Jones, C.J., Thornley, D.G., Eds.; Pergamon Press: Oxford, UK, 1963; pp. 53–73.

- Howard, T.; Culley, S.; Dekoninck, E. Describing the creative design process by the integration of engineering design and cognitive psychology literature. Des. Stud. 2008, 29, 160–180.

- Simon, H.A. The Sciences of the Artificial; MIT Press: Cambridge, MA, USA, 1969; p. 123.

- Kline, S.J. Innovation is not a linear process. Res. Manag. 1985, 28, 36–45.

- Smith, R.P.; Tjandra, P. Experimental Observation of Iteration in Engineering Design. Res. Eng. Des.-Appl. Concurr. Eng. 1998, 10, 107–117.

- Takeda, H.; Veerkamp, P.; Tomiyama, T.; Yoshikawa, H. Modeling design processes. AI Mag. 1990, 11, 37–48.

- Corne, D.; Smithers, T.; Ross, P. Solving design problems by computational exploration. In Proceedings of the IFIP WG5.2 on Formal Design Methods for CAD, Tallinn, Estonia, 16–19 June 1993; pp. 249–270.

- Maher, M.L.; Poon, J.; Boulanger, S. Formalising Design Exploration as Co-Evolution. In IFIP Advances in Formal Design Methods for CAD; Gero, J.S., Sudweeks, F., Eds.; Springer: Boston, MA, USA, 1996; pp. 3–30.

- Maher, M.L.; Tang, H.H. Co-evolution as a computational and cognitive model of design. Res. Eng. Des. 2003, 14, 47–64.

- Wiltschnig, S.; Christensen, B.T.; Ball, L.J. Collaborative problem–solution co-evolution in creative design. Des. Stud. 2013, 34, 515–542.

- Dorst, K.; Cross, N. Creativity in the design process: Co-evolution of problem–solution. Des. Stud. 2001, 22, 425–437.

- Howe, J. The Rise of Crowdsourcing. Available online: https://www.wired.com/2006/06/crowds/ (accessed on 9 January 2023).

- Galton, F. Vox populi (the wisdom of crowds). Nature 1907, 75, 450–451.

- Angelico, M.; As, I. Crowdsourcing Architecture: A Disruptive Model in Architectural Practice. In Proceedings of the ACADIA, Halifax, NS, Canada, 1–7 October 2007; pp. 439–443.

- Kittur, A.; Nickerson, J.V.; Bernstein, M.; Gerber, E.; Shaw, A.; Zimmerman, J.; Lease, M.; Horton, J. The Future of Crowd Work. In Proceedings of the 2013 Conference on Computer Supported Cooperative Work, San Antonio, TX, USA, 23–27 February 2013; p. 1301.

- Yu, L.; Nickerson, J.V.; Sakamoto, Y. Collective Creativity: Where we are and where we might go. In Proceedings of the Collective Intelligence 2012, Ho Chi Minh City, Vietnam, 28–30 November 2012.

- Bhatti, S.S.; Gao, X.; Chen, G. General framework, opportunities and challenges for crowdsourcing techniques: A Comprehensive survey. J. Syst. Softw. 2020, 167, 110611.

- Jiang, H.; Matsubara, S. Efficient Task Decomposition in Crowdsourcing. In Proceedings of the Principles and Practice of Multi-Agent Systems, Gold Coast, QLD, Australia, 1–5 December 2014; Dam, H.K., Pitt, J., Xu, Y., Governatori, G., Ito, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; Volume 8861, pp. 65–73.

- Kulkarni, A.; Can, M.; Hartmann, B. Turkomatic: Automatic Recursive Task and Workflow Design for Mechanical Turk. In Proceedings of the Human Computation: Papers from the 2011 AAAI Workshop, San Francisco, CA, USA, 8 August 2011; pp. 2053–2058.

- LaToza, T.D.; Ben Towne, W.; Adriano, C.M.; Van Der Hoek, A. Microtask programming: Building software with a crowd. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, Honolulu, HI, USA, 5–8 October 2014; pp. 43–54.

- Kittur, A.; Smus, B.; Khamkar, S.; Kraut, R. CrowdForge: Crowdsourcing Complex Work. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology (UIST ’11), Santa Barbara, CA, USA, 16–19 October 2011; pp. 43–52.

- Retelny, D.; Robaszkiewicz, S.; To, A.; Lasecki, W.S.; Patel, J.; Rahmati, N.; Doshi, T.; Valentine, M.; Bernstein, M.S. Expert Crowdsourcing with Flash Teams. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology (UIST ’14), Honolulu, HI, USA, 5–8 October 2014; pp. 75–85.

- Niu, X.J.; Qin, S.F.; Vines, J.; Wong, R.; Lu, H. Key Crowdsourcing Technologies for Product Design and Development. Int. J. Autom. Comput. 2019, 16, 1–15.

- Wu, H.; Corney, J.; Grant, M. Relationship between quality and payment in crowdsourced design. In Proceedings of the 2014 IEEE 18th International Conference on Computer Supported Cooperative Work in Design, CSCWD 2014, Hsinchu, Taiwan, 21–23 May 2014; pp. 499–504.

- Sun, L.; Xiang, W.; Chen, S.; Yang, Z. Collaborative sketching in crowdsourcing design: A new method for idea generation. Int. J. Technol. Des. Educ. 2015, 25, 409–427.

- Park, C.H.; Son, K.; Lee, J.H.; Bae, S.H. Crowd vs. crowd: Large-scale cooperative design through open team competition. In Proceedings of the 2013 Conference on Computer Supported Cooperative Work, CSCW ’13, San Antonio, TX, USA, 23–27 February 2013; p. 1275.

- Yu, L.; Nickerson, J.V. Cooks or Cobblers? Crowd Creativity through Combination. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’11), Vancouver, BC, Canada, 7–12 May 2011; pp. 1393–1402.