Artificial Intelligence-Aided Tooth Detection and Segmentation on Pediatric Panoramic Radiographs in Mixed Dentition Using a Transfer Learning Approach

Abstract

1. Introduction

- task integration: a YOLOv11-based framework that jointly performs detection, enumeration, and polygonal instance segmentation in a single pass, tailored to mixed dentition;

- efficient pediatric labeling: a hybrid pre-annotation strategy that reduces manual burden while preserving accuracy;

- dentition-aware reporting: comprehensive stratification of performance by dentition type and error profiling, highlighting clinically relevant failures that prior studies did not examine.

2. Materials and Methods

2.1. Study Design

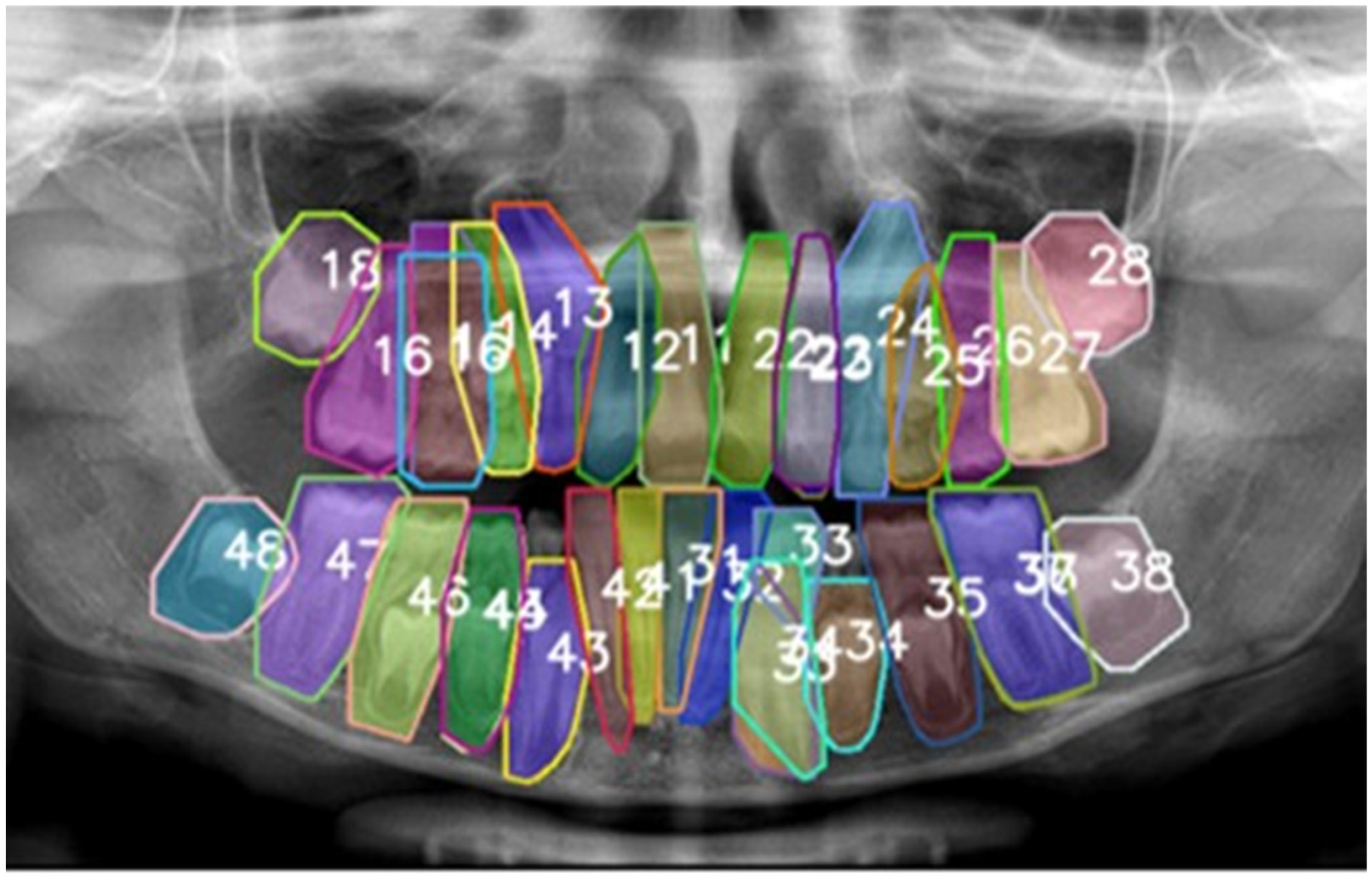

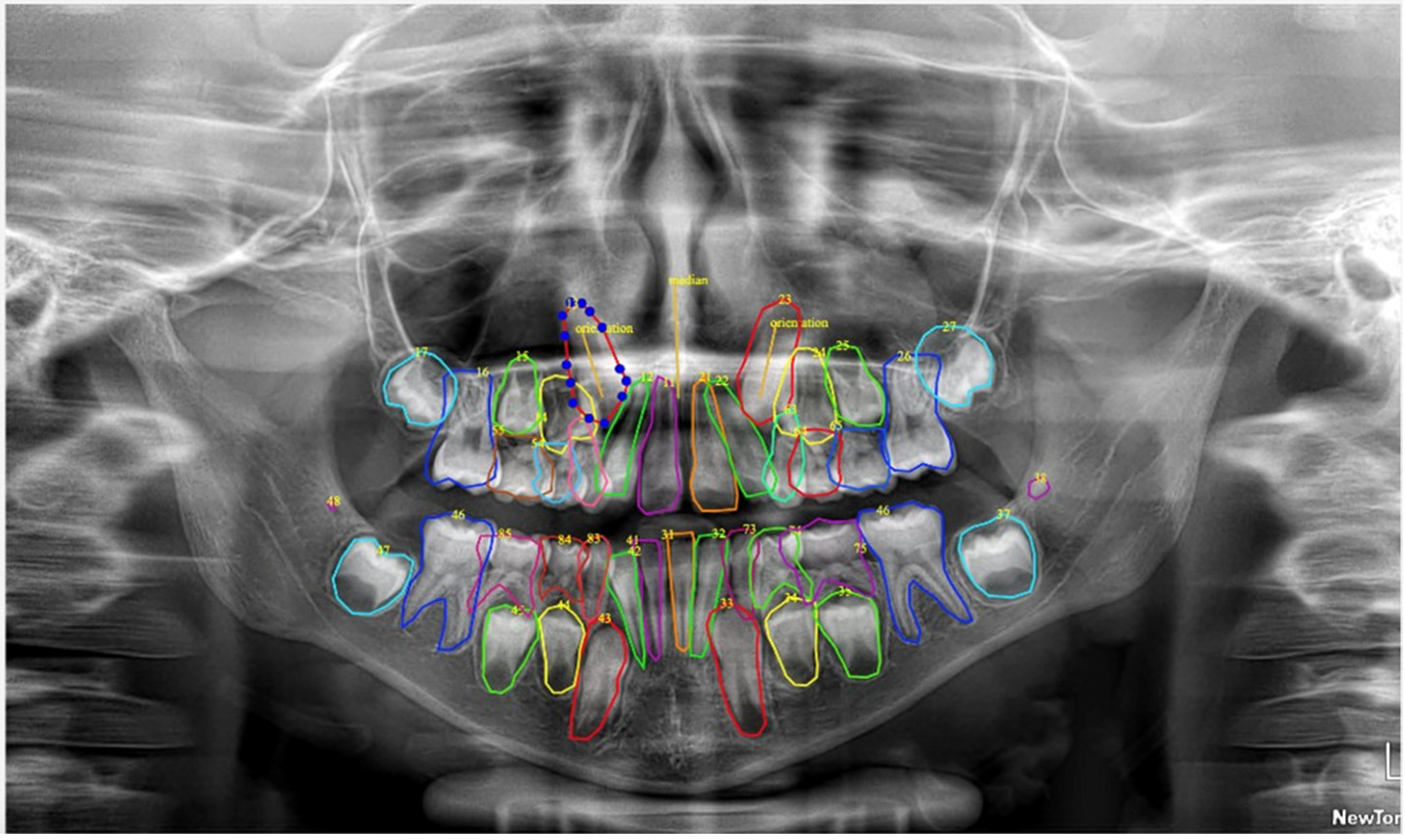

2.2. Dataset Description

2.3. Labeling Protocol

2.4. AI Model Architecture and Training

- detect the location of each object (in our case, each tooth),

- classify the object (e.g., tooth 11, tooth 12, tooth 35, etc.), and

- segment its precise contour (tooth boundary).

- backbone: extracts visual features from the input image (e.g., shapes, contours), leveraging convolutional blocks with batch normalization and SiLU activations;

- neck: employs upsampling and concatenation operations to generate multi-resolution feature maps, enabling the combination and enhancement of features across different scales;

- heads:

  - detection head: outputs the position, class label, and confidence score for each object. For each grid cell, the detection head predicts bounding-box parameters (tx, ty, tw, th), an objectness score, and class logits. The detection loss combines three components: a bounding-box regression loss (IoU-based), an objectness loss (BCE), and a classification loss (Cross-Entropy or BCE, depending on the parametrization);

  - segmentation head: produces pixel-level masks for each detected object using an instance-aware single-shot approach. The head generates a small set of prototype masks and, for each detection, a coefficient vector. The final mask for detection i is obtained by linearly combining the prototypes with the coefficients, followed by a sigmoid activation and cropping according to the bounding box.
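The mask assembly described for the segmentation head can be sketched in NumPy as follows. This is a minimal illustration, not the authors' or the Ultralytics implementation: the function name `assemble_mask`, the array shapes, and the 0.5 binarization threshold are assumptions chosen for clarity.

```python
import numpy as np


def assemble_mask(prototypes, coeffs, box_xyxy, threshold=0.5):
    """Combine prototype masks into one instance mask (illustrative sketch).

    prototypes: (K, H, W) array of K prototype masks.
    coeffs:     (K,) coefficient vector predicted for one detection.
    box_xyxy:   (x1, y1, x2, y2) box in mask-pixel coordinates.
    threshold:  assumed cut-off for binarizing the sigmoid output.
    """
    # Linear combination of prototypes: sum_k coeffs[k] * prototypes[k]
    logits = np.tensordot(coeffs, prototypes, axes=1)  # shape (H, W)
    # Sigmoid activation maps logits to per-pixel probabilities
    probs = 1.0 / (1.0 + np.exp(-logits))
    # Crop: keep probabilities only inside the detection's bounding box
    x1, y1, x2, y2 = (int(round(v)) for v in box_xyxy)
    cropped = np.zeros_like(probs)
    cropped[y1:y2, x1:x2] = probs[y1:y2, x1:x2]
    return cropped > threshold
```

Because every detection reuses the same prototype set, the per-detection cost is limited to one small coefficient vector and one weighted sum, which is what keeps this style of head single-shot.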

2.5. Output Layer Configuration

- bounding box coordinates (x, y, width, height),

- class label (e.g., 11, 12, …), and

- an instance-specific segmentation mask.
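One way to picture the three outputs listed above is as a small record per detected tooth. The sketch below is illustrative only: the class name `ToothPrediction`, its fields, and the assumption that (x, y) denotes the box center are not taken from the paper. The dentition rule follows the FDI two-digit notation, in which quadrants 1 to 4 denote permanent teeth and quadrants 5 to 8 denote primary teeth.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ToothPrediction:
    """Hypothetical container for one model output (names are illustrative)."""
    x: float            # box center x (assumed center-based convention)
    y: float            # box center y
    width: float
    height: float
    fdi_label: int      # FDI two-digit code, e.g. 11 or 55
    confidence: float
    polygon: List[Tuple[float, float]]  # mask contour as (x, y) vertices

    def to_xyxy(self) -> Tuple[float, float, float, float]:
        """Convert center/size format to corner format (x1, y1, x2, y2)."""
        half_w, half_h = self.width / 2, self.height / 2
        return (self.x - half_w, self.y - half_h,
                self.x + half_w, self.y + half_h)

    def dentition(self) -> str:
        """Classify by FDI quadrant: 1-4 permanent, 5-8 primary."""
        quadrant = self.fdi_label // 10
        if 1 <= quadrant <= 4:
            return "permanent"
        if 5 <= quadrant <= 8:
            return "primary"
        raise ValueError(f"not a valid FDI code: {self.fdi_label}")
```

Keeping the FDI code as the class label means the primary/permanent distinction can be derived from the label itself, without a separate output.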

2.6. Evaluation Metrics & Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |

|---|---|

| AI | Artificial Intelligence |

| PR | Panoramic Radiographs |

| DL | Deep Learning |

| LLM | Large Language Model |

| FDI | Fédération Dentaire Internationale |

| DIBINEM | Department of Biomedical and Neuromotor Sciences |

| DISI | Department of Computer Science and Engineering |

| MICCAI | Medical Image Computing and Computer-Assisted Intervention |

| DENTEX | Dental Enumeration and Diagnosis on Panoramic X-rays |

| mAP | mean Average Precision |

| IoU | Intersection over Union |

References

1. Lee, S.J.; Poon, J.; Jindarojanakul, A.; Huang, C.-C.; Viera, O.; Cheong, C.W.; Lee, J.D. Artificial intelligence in dentistry: Exploring emerging applications and future prospects. J. Dent. 2025, 155, 105648.

2. Buldur, M.; Sezer, B. Evaluating the accuracy of Chat Generative Pre-trained Transformer version 4 (ChatGPT-4) responses to United States Food and Drug Administration (FDA) frequently asked questions about dental amalgam. BMC Oral Health 2024, 24, 605.

3. Sezer, B.; Aydoğdu, T. Performance of advanced artificial intelligence models in pulp therapy for immature permanent teeth: A comparison of ChatGPT-4 Omni, DeepSeek, and Gemini Advanced in accuracy, completeness, response time, and readability. J. Endod. 2025; in press.

4. Incerti Parenti, S.; Bartolucci, M.L.; Biondi, E.; Maglioni, A.; Corazza, G.; Gracco, A.; Alessandri-Bonetti, G. Online patient education in obstructive sleep apnea: ChatGPT versus Google Search. Healthcare 2024, 12, 1781.

5. Kapoor, D.; Garg, D.; Tadakamadla, S.K. Brush, byte, and bot: Quality comparison of artificial intelligence-generated pediatric dental advice across ChatGPT, Gemini, and Copilot. Front. Oral Health 2025, 6, 1652422.

6. Mukhopadhyay, A.; Mukhopadhyay, S.; Biswas, R. Evaluation of large language models in pediatric dentistry: A Bloom’s taxonomy-based analysis. Folia Med. 2025, 67, e154338.

7. Sallam, M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare 2023, 11, 887.

8. American Academy of Pediatric Dentistry. Management of the developing dentition and occlusion in pediatric dentistry. Pediatr. Dent. 2018, 40, 352–365.

9. Ericson, S.; Kurol, J. Early treatment of palatally erupting maxillary canines by extraction of the primary canines. Eur. J. Orthod. 1988, 10, 283–295.

10. Maganur, P.C.; Vishwanathaiah, S.; Mashyakhy, M.; Abumelha, A.S.; Robaian, A.; Almohareb, T.; Almutairi, B.; Alzahrani, K.M.; Binalrimal, S.; Marwah, N.; et al. Development of artificial intelligence models for tooth numbering and detection: A systematic review. Int. Dent. J. 2024, 74, 917–929.

11. Rokhshad, R.; Mohammad, F.D.; Nomani, M.; Mohammad-Rahimi, H.; Schwendicke, F. Chatbots for conducting systematic reviews in pediatric dentistry. J. Dent. 2025, 158, 105733.

12. Alenezi, O.; Bhattacharjee, T.; Alseed, H.A.; Tosun, Y.I.; Chaudhry, J.; Prasad, S. Evaluating the efficacy of various deep learning architectures for automated preprocessing and identification of impacted maxillary canines in panoramic radiographs. Int. Dent. J. 2025, 75, 100940.

13. Minhas, S.; Wu, T.H.; Kim, D.G.; Chen, S.; Wu, Y.C.; Ko, C.C. Artificial intelligence for 3D reconstruction from 2D panoramic X-rays to assess maxillary impacted canines. Diagnostics 2024, 14, 196.

14. Özçelik, S.T.A.; Üzen, H.; Şengür, A.; Fırat, H.; Türkoğlu, M.; Çelebi, A.; Gül, S.; Sobahi, N.M. Enhanced panoramic radiograph-based tooth segmentation and identification using an attention gate-based encoder-decoder network. Diagnostics 2024, 14, 2719.

15. Kaya, E.; Gunec, H.G.; Gokyay, S.S.; Kutal, S.; Gulum, S.; Ates, H.F. Proposing a CNN method for primary and permanent tooth detection and enumeration on pediatric dental radiographs. J. Clin. Pediatr. Dent. 2022, 46, 293–298.

16. Peker, R.B.; Kurtoglu, C.O. Evaluation of the performance of a YOLOv10-based deep learning model for tooth detection and numbering on panoramic radiographs of patients in the mixed dentition period. Diagnostics 2025, 15, 405.

17. Arslan, C.; Yucel, N.O.; Kahya, K.; Sunal Akturk, E.; Germec Cakan, D. Artificial intelligence for tooth detection in cleft lip and palate patients. Diagnostics 2024, 14, 2849.

18. Bakhsh, H.H.; Alomair, D.; AlShehri, N.A.; Alturki, A.U.; Allam, E.; ElKhateeb, S.M. Validation of an artificial intelligence-based software for the detection and numbering of primary teeth on panoramic radiographs. Diagnostics 2025, 15, 1489.

19. Beser, B.; Reis, T.; Berber, M.N.; Topaloglu, E.; Gungor, E.; Kılıc, M.C.; Duman, S.; Çelik, Ö.; Kuran, A.; Bayrakdar, I.S. YOLOv5-based deep learning approach for tooth detection and segmentation on pediatric panoramic radiographs in mixed dentition. BMC Med. Imaging 2024, 24, 172.

20. Jocher, G.; Qiu, J. Ultralytics YOLO11 [Software], version 11.0.0; Ultralytics: Frederick, MD, USA, 2024; License: AGPL-3.0. Available online: https://github.com/ultralytics/ultralytics (accessed on 1 November 2024).

21. Mongan, J.; Moy, L.; Kahn, C.E., Jr. Checklist for artificial intelligence in medical imaging (CLAIM): A guide for authors and reviewers. Radiol. Artif. Intell. 2020, 2, e200029.

22. von Elm, E.; Altman, D.G.; Egger, M.; Pocock, S.J.; Gøtzsche, P.C.; Vandenbroucke, J.P.; STROBE Initiative. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: Guidelines for reporting observational studies. J. Clin. Epidemiol. 2008, 61, 344–349.

23. Hamamci, I.E.; Er, S.; Simsar, E.; Yuksel, A.E.; Gultekin, S.; Ozdemir, S.D.; Yang, K.; Li, H.B.; Pati, S.; Stadlinger, B.; et al. DENTEX: An abnormal tooth detection with dental enumeration and diagnosis benchmark for panoramic X-rays. arXiv 2023, arXiv:2305.19112.

24. Hamamci, I.E.; Er, S.; Simsar, E.; Sekuboyina, A.; Gundogar, M.; Stadlinger, B.; Mehl, A.; Menze, B. Diffusion-based hierarchical multi-label object detection to analyze panoramic dental X-rays. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2023, Proceedings of 26th International Conference, Vancouver, BC, Canada, 8–12 October 2023; Greenspan, H., Madabhushi, A., Mousavi, P., Salcudean, S., Duncan, J., Syeda-Mahmood, T., Taylor, R., Eds.; Springer: Cham, Switzerland, 2023; Volume 14225, pp. 389–399.

25. Torsello, F.; D’Amico, G.; Staderini, E.; Marigo, L.; Cordaro, M.; Castagnola, R. Factors influencing appliance wearing time during orthodontic treatments: A literature review. Appl. Sci. 2022, 12, 7807.

26. European Commission. Artificial Intelligence in Healthcare; European Commission: Brussels, Belgium, 2024; Available online: https://health.ec.europa.eu/ehealth-digital-health-and-care/artificial-intelligence-healthcare_en (accessed on 1 September 2025).

27. World Health Organization. Ethics and Governance of Artificial Intelligence for Health: WHO Guidance; World Health Organization: Geneva, Switzerland, 2023; Available online: https://www.who.int/publications/i/item/9789240084759 (accessed on 1 September 2025).

28. Smith, A.; Arena, R.; Bacon, S.L.; Faghy, M.A.; Grazzi, G.; Raisi, A.; Vermeesch, A.L.; Ong’wen, M.; Popovic, D.; Pronk, N.P. Recommendations on the use of artificial intelligence in health promotion. Prog. Cardiovasc. Dis. 2024, 87, 37–43.

29. Patini, R.; Staderini, E.; Camodeca, A.; Guglielmi, F.; Gallenzi, P. Case reports in pediatric dentistry journals: A systematic review about their effect on impact factor and future investigations. Dent. J. 2019, 7, 103.

30. Kılıc, M.C.; Bayrakdar, I.S.; Çelik, Ö.; Bilgir, E.; Orhan, K.; Aydın, O.B.; Kaplan, F.A.; Sağlam, H.; Odabaş, A.; Aslan, A.F.; et al. Artificial intelligence system for automatic deciduous tooth detection and numbering in panoramic radiographs. Dentomaxillofac. Radiol. 2021, 50, 20200172.

31. Surdu, S.; Dall, T.M.; Langelier, M.; Forte, G.J.; Chakrabarti, R.; Reynolds, R.L. The paediatric dental workforce in 2016 and beyond. J. Am. Dent. Assoc. 2019, 150, 609–617.e5.

32. Alessandri Bonetti, G.; Zanarini, M.; Incerti Parenti, S.; Marini, I.; Gatto, M.R. Preventive treatment of ectopically erupting maxillary permanent canines by extraction of deciduous canines and first molars: A randomized clinical trial. Am. J. Orthod. Dentofac. Orthop. 2011, 139, 316–323.

33. Barlow, S.T.; Moore, M.B.; Sherriff, M.; Ireland, A.J.; Sandy, J.R. Palatally impacted canines and the modified index of orthodontic treatment need. Eur. J. Orthod. 2009, 31, 362–366.

| Class | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 | F1 Score |

|---|---|---|---|---|---|

| 11 | 0.989 | 0.993 | 0.995 | 0.708 | 0.991 |

| 12 | 0.962 | 0.980 | 0.990 | 0.627 | 0.971 |

| 13 | 0.983 | 0.987 | 0.992 | 0.645 | 0.985 |

| 14 | 0.961 | 0.974 | 0.985 | 0.520 | 0.967 |

| 15 | 0.988 | 1.000 | 0.995 | 0.573 | 0.994 |

| 16 | 0.991 | 0.926 | 0.960 | 0.611 | 0.957 |

| 17 | 0.973 | 0.919 | 0.941 | 0.669 | 0.945 |

| 18 | 0.946 | 0.990 | 0.980 | 0.620 | 0.968 |

| 21 | 0.982 | 0.987 | 0.994 | 0.669 | 0.984 |

| 22 | 0.986 | 0.986 | 0.987 | 0.598 | 0.986 |

| 23 | 0.991 | 0.987 | 0.987 | 0.626 | 0.989 |

| 24 | 0.963 | 1.000 | 0.995 | 0.489 | 0.981 |

| 25 | 0.958 | 0.987 | 0.993 | 0.622 | 0.972 |

| 26 | 0.976 | 0.993 | 0.995 | 0.718 | 0.984 |

| 27 | 0.987 | 0.908 | 0.920 | 0.632 | 0.946 |

| 28 | 0.991 | 0.975 | 0.993 | 0.624 | 0.983 |

| 31 | 0.976 | 0.993 | 0.995 | 0.491 | 0.984 |

| 32 | 0.979 | 0.993 | 0.995 | 0.509 | 0.986 |

| 33 | 0.990 | 0.993 | 0.995 | 0.554 | 0.992 |

| 34 | 0.976 | 0.980 | 0.993 | 0.550 | 0.978 |

| 35 | 1.000 | 0.982 | 0.995 | 0.550 | 0.991 |

| 36 | 0.993 | 0.974 | 0.988 | 0.694 | 0.984 |

| 37 | 0.976 | 0.981 | 0.994 | 0.664 | 0.978 |

| 38 | 0.957 | 0.986 | 0.988 | 0.555 | 0.972 |

| 41 | 0.987 | 0.991 | 0.995 | 0.474 | 0.989 |

| 42 | 0.982 | 0.974 | 0.985 | 0.502 | 0.978 |

| 43 | 0.988 | 0.993 | 0.995 | 0.575 | 0.991 |

| 44 | 0.964 | 0.974 | 0.984 | 0.557 | 0.969 |

| 45 | 0.977 | 0.993 | 0.993 | 0.493 | 0.985 |

| 46 | 0.958 | 0.986 | 0.987 | 0.617 | 0.972 |

| 47 | 0.919 | 0.942 | 0.980 | 0.683 | 0.930 |

| 48 | 0.967 | 0.985 | 0.992 | 0.623 | 0.976 |

| 51 | 0.553 | 0.800 | 0.635 | 0.179 | 0.654 |

| 52 | 0.643 | 0.778 | 0.780 | 0.400 | 0.704 |

| 53 | 0.948 | 0.921 | 0.981 | 0.490 | 0.934 |

| 54 | 0.914 | 0.950 | 0.965 | 0.464 | 0.932 |

| 55 | 0.963 | 0.941 | 0.972 | 0.457 | 0.952 |

| 61 | 0.846 | 1.000 | 0.995 | 0.455 | 0.917 |

| 62 | 0.874 | 0.693 | 0.849 | 0.319 | 0.773 |

| 63 | 0.963 | 0.877 | 0.962 | 0.470 | 0.918 |

| 64 | 0.955 | 0.917 | 0.940 | 0.484 | 0.936 |

| 65 | 0.931 | 1.000 | 0.994 | 0.563 | 0.964 |

| 72 | 1.000 | 0.634 | 0.863 | 0.349 | 0.776 |

| 73 | 1.000 | 0.947 | 0.965 | 0.410 | 0.973 |

| 74 | 0.959 | 0.950 | 0.980 | 0.387 | 0.955 |

| 75 | 0.930 | 0.866 | 0.963 | 0.401 | 0.897 |

| 82 | 0.809 | 0.714 | 0.793 | 0.310 | 0.759 |

| 83 | 0.874 | 1.000 | 0.994 | 0.448 | 0.933 |

| 84 | 0.954 | 0.975 | 0.992 | 0.339 | 0.965 |

| 85 | 0.956 | 0.969 | 0.990 | 0.372 | 0.962 |
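The F1 column is consistent with the harmonic mean of precision and recall, which can be checked with a few lines of Python. The helpers below are an illustrative sketch (the function names are not from the paper). The sample rows are copied from the table above, and the per-dentition macro-average is shown only as one possible way to stratify results, not as the authors' procedure.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1_by_dentition(rows):
    """Average per-class F1 separately for permanent and primary teeth.

    rows: iterable of (fdi_class, precision, recall) tuples.
    FDI quadrants 1-4 are permanent teeth, 5-8 are primary teeth.
    """
    groups = {"permanent": [], "primary": []}
    for fdi_class, p, r in rows:
        key = "permanent" if fdi_class // 10 <= 4 else "primary"
        groups[key].append(f1_score(p, r))
    return {k: sum(v) / len(v) for k, v in groups.items() if v}


# Rows copied from the table above: (class, precision, recall)
sample = [(11, 0.989, 0.993), (16, 0.991, 0.926),
          (51, 0.553, 0.800), (72, 1.000, 0.634)]
print(round(f1_score(0.989, 0.993), 3))
print(macro_f1_by_dentition(sample))
```

Class 72 illustrates why F1 is reported alongside precision: a precision of 1.000 with a recall of 0.634 means every predicted tooth 72 was correct, yet more than a third of the true instances were missed.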

| Class | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 | F1 Score |

|---|---|---|---|---|---|

| 11 | 0.975 | 0.980 | 0.974 | 0.586 | 0.978 |

| 12 | 0.955 | 0.973 | 0.977 | 0.415 | 0.964 |

| 13 | 0.976 | 0.980 | 0.983 | 0.420 | 0.978 |

| 14 | 0.922 | 0.935 | 0.933 | 0.351 | 0.928 |

| 15 | 0.907 | 0.918 | 0.895 | 0.259 | 0.913 |

| 16 | 0.978 | 0.914 | 0.932 | 0.492 | 0.945 |

| 17 | 0.940 | 0.888 | 0.896 | 0.495 | 0.913 |

| 18 | 0.865 | 0.905 | 0.891 | 0.399 | 0.884 |

| 21 | 0.963 | 0.966 | 0.974 | 0.368 | 0.965 |

| 22 | 0.946 | 0.945 | 0.943 | 0.386 | 0.945 |

| 23 | 0.937 | 0.933 | 0.930 | 0.352 | 0.935 |

| 24 | 0.865 | 0.899 | 0.853 | 0.276 | 0.882 |

| 25 | 0.952 | 0.980 | 0.984 | 0.468 | 0.966 |

| 26 | 0.963 | 0.980 | 0.987 | 0.563 | 0.971 |

| 27 | 0.974 | 0.896 | 0.910 | 0.481 | 0.933 |

| 28 | 0.917 | 0.902 | 0.921 | 0.407 | 0.909 |

| 31 | 0.884 | 0.900 | 0.836 | 0.225 | 0.892 |

| 32 | 0.925 | 0.939 | 0.915 | 0.294 | 0.932 |

| 33 | 0.983 | 0.987 | 0.986 | 0.414 | 0.985 |

| 34 | 0.916 | 0.920 | 0.889 | 0.297 | 0.918 |

| 35 | 0.986 | 0.968 | 0.975 | 0.415 | 0.977 |

| 36 | 0.987 | 0.968 | 0.986 | 0.537 | 0.977 |

| 37 | 0.950 | 0.955 | 0.956 | 0.534 | 0.953 |

| 38 | 0.948 | 0.976 | 0.977 | 0.370 | 0.962 |

| 41 | 0.947 | 0.951 | 0.944 | 0.257 | 0.949 |

| 42 | 0.889 | 0.881 | 0.844 | 0.234 | 0.885 |

| 43 | 0.975 | 0.980 | 0.979 | 0.407 | 0.977 |

| 44 | 0.938 | 0.947 | 0.939 | 0.370 | 0.943 |

| 45 | 0.957 | 0.972 | 0.968 | 0.308 | 0.965 |

| 46 | 0.953 | 0.980 | 0.981 | 0.383 | 0.966 |

| 47 | 0.900 | 0.923 | 0.952 | 0.490 | 0.911 |

| 48 | 0.932 | 0.949 | 0.951 | 0.414 | 0.940 |

| 51 | 0.555 | 0.800 | 0.635 | 0.162 | 0.655 |

| 52 | 0.644 | 0.778 | 0.780 | 0.230 | 0.704 |

| 53 | 0.933 | 0.905 | 0.968 | 0.316 | 0.919 |

| 54 | 0.842 | 0.875 | 0.837 | 0.203 | 0.858 |

| 55 | 0.945 | 0.923 | 0.945 | 0.338 | 0.934 |

| 61 | 0.678 | 0.800 | 0.642 | 0.193 | 0.734 |

| 62 | 0.632 | 0.500 | 0.554 | 0.140 | 0.558 |

| 63 | 0.838 | 0.763 | 0.738 | 0.149 | 0.799 |

| 64 | 0.887 | 0.852 | 0.853 | 0.339 | 0.869 |

| 65 | 0.916 | 0.983 | 0.981 | 0.465 | 0.948 |

| 72 | 0.771 | 0.490 | 0.616 | 0.204 | 0.599 |

| 73 | 0.824 | 0.781 | 0.687 | 0.187 | 0.802 |

| 74 | 0.934 | 0.925 | 0.960 | 0.307 | 0.930 |

| 75 | 0.930 | 0.866 | 0.965 | 0.310 | 0.897 |

| 82 | 0.655 | 0.571 | 0.612 | 0.095 | 0.610 |

| 83 | 0.851 | 0.973 | 0.959 | 0.232 | 0.908 |

| 84 | 0.783 | 0.800 | 0.748 | 0.127 | 0.792 |

| 85 | 0.913 | 0.924 | 0.949 | 0.258 | 0.918 |
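The two mAP columns differ only in the IoU threshold used to decide whether a prediction matches a ground-truth tooth: a single threshold of 0.5 versus an average over thresholds from 0.5 to 0.95 in steps of 0.05 (the COCO-style convention). The sketch below uses hypothetical function names and toy masks. It shows how a mask IoU is computed and why a prediction can count as correct at 0.5 yet fail at stricter thresholds, which is one plausible reading of the gap between the two columns.

```python
import numpy as np


def mask_iou(pred: np.ndarray, truth: np.ndarray) -> float:
    """Intersection over Union of two boolean masks of equal shape."""
    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    return float(intersection) / float(union) if union > 0 else 0.0


def thresholds_passed(iou: float) -> list:
    """Return the IoU thresholds (0.50, 0.55, ..., 0.95) met by `iou`."""
    thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    return [t for t in thresholds if iou >= t]


# Toy example: a 4x4 ground-truth square and a prediction shifted by one column
truth = np.zeros((8, 8), dtype=bool)
truth[2:6, 2:6] = True
pred = np.zeros((8, 8), dtype=bool)
pred[2:6, 3:7] = True

iou = mask_iou(pred, truth)
print(iou, thresholds_passed(iou))
```

A one-pixel shift on a small object already removes most of the stricter thresholds, so small teeth with thin contours would be expected to score lower on the averaged metric than on mAP at 0.5.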

| Study | n (PRs) | Age/Dentition | Task(s) | Model | Detection mAP@0.5/F1 | Segmentation mAP@0.5/F1 |

|---|---|---|---|---|---|---|

| Kaya et al., 2022 [15] | 4545 | Pediatric, mixed | Detection + numbering | YOLOv4 | 0.92/0.91 | - |

| Beser et al., 2024 [19] | 3854 | Pediatric, mixed | Detection + segmentation | YOLOv5 | 0.98/0.99 | 0.98 |

| Peker and Kurtoglu, 2025 [16] | 200 | Pediatric, mixed | Detection | YOLOv10 | 0.968/0.919 | - |

| Kılıc et al., 2021 [30] | 1125 | Pediatric, deciduous | Detection + numbering | Faster R-CNN | 0.93/0.91 | - |

| Our work | 250 | Pediatric, mixed | Detection + segmentation + enumeration | YOLOv11-seg | 0.963/0.953 | 0.89 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Incerti Parenti, S.; Tsiotas, G.; Maglioni, A.; Lamberti, G.; Fiordelli, A.; Rossi, D.; Bononi, L.; Alessandri-Bonetti, G. Artificial Intelligence-Aided Tooth Detection and Segmentation on Pediatric Panoramic Radiographs in Mixed Dentition Using a Transfer Learning Approach. Diagnostics 2025, 15, 2615. https://doi.org/10.3390/diagnostics15202615
