Abstract

Performance degradation of wind turbine blades often stems from geometric asymmetry induced by damage. Existing damage-assessment methods struggle to balance accuracy and efficiency because they have a limited ability to capture the fine-grained geometric asymmetries associated with multi-scale damage under complex background interference. To address this, this paper proposes MMC-YOLO, a novel detection model built on the high-speed detector YOLOv10-N. First, a Multi-Scale Perception Gated Convolution (MSGConv) module is designed, which constructs a full-scale receptive field through multi-branch fusion and channel rearrangement to enhance the extraction of geometric asymmetry features. Second, a Multi-Scale Enhanced Feature Pyramid Network (MSEFPN) is developed, integrating dynamic path aggregation and an SENetv2 attention mechanism to suppress background interference and amplify damage responses. Finally, a Channel-Compensated Filtering (CCF) module is constructed to preserve critical channel information through a dynamic buffering mechanism. Evaluated on a dataset of 4818 wind turbine blade damage images, MMC-YOLO achieves an mAP@[0.5:0.95] of 82.4%, a 4.4% improvement over the baseline YOLOv10-N model, and a recall of 91.1%, an 8.7% increase, while keeping a lightweight parameter count of 4.2 million. The framework substantially improves the detection accuracy of geometric asymmetry defects while maintaining real-time performance, meeting engineering requirements for both efficiency and precision.

1. Introduction

Amid the global transition towards renewable energy [1,2], wind power has emerged as a critical pillar of sustainable development [3,4,5]. The efficient operation of wind turbine blades depends fundamentally on their carefully designed geometric symmetry, which not only optimizes aerodynamic efficiency, reduces noise, and minimizes drag, but also ensures uniform structural load distribution and long-term integrity [6]. However, prolonged exposure to harsh operating conditions (high wind speeds, rain erosion, ice accretion, and lightning strikes) inevitably causes surface damage such as cracks, delamination, and erosion [7]. Such damage disrupts the blade's inherent local, and potentially global, symmetric structure, producing significant symmetry breaking. This not only degrades aerodynamic performance but also induces stress concentrations that accelerate structural failure, resulting in substantial economic losses and safety risks [8]. Consequently, an intelligent and efficient detection system for blade surface damage must precisely identify and localize this performance-degrading symmetry breaking, which is paramount for the safe and stable operation of wind farms [9].

Traditional non-destructive testing (NDT) techniques, such as acoustic emission [10], thermography [11], ultrasonic testing [12], and vibration analysis [13], suffer from inherent limitations in large-scale wind farm inspection, including complex data processing, vulnerability to environmental interference, and prohibitive equipment costs, which make them inadequate for routine monitoring. In contrast, computer vision-based inspection using unmanned aerial vehicle (UAV) platforms offers broad coverage, improved operational safety, and significant cost-effectiveness. Memari et al. [14] systematically explored UAV applications for blade inspection across multiple scenarios and identified deep learning as the core driver of improved automation and detection accuracy. Zhang et al. [15] analyzed critical technical challenges, such as image processing and sensor fusion, in UAV-based detection of static and dynamic blade defects. Together, these works establish that intelligent inspection systems coupling UAV image acquisition with deep learning algorithms represent the state-of-the-art approach for wind turbine blade surface damage detection.

Current deep learning-based models for blade damage detection primarily adopt two-stage (e.g., the R-CNN series) or single-stage (e.g., the SSD and YOLO series) object detection strategies. Within the two-stage paradigm, Diaz et al. [16] achieved 82.42% detection accuracy on the DTU dataset by refining the Cascade Mask R-CNN architecture with a cascaded structure, and Pratt et al. [17] improved robustness in specific scenarios by combining backbone networks (VGG19, Xception, and ResNet-50) with a multivariate fuzzy voting system. However, the inherent complexity of two-stage models, which generate region proposals before performing classification and regression, incurs substantial computational overhead and slow inference, making them poorly suited to real-time wind farm inspection. Single-stage models, by contrast, benefit from an end-to-end architecture and are more efficient. Much research has sought to make these models both lightweight and accurate, for instance by enhancing YOLOv5 through fusing the lightweight ShuffleNetv2 backbone with a spatial-channel attention mechanism (SNCA-SSD) [18], introducing efficient GhostBottleneckv2 modules [19], or applying genetic algorithm optimization [20] to reduce parameters and improve accuracy. Similarly, other studies have improved YOLOv8 by implementing dynamic separable convolution with the Wise-IoU loss [21], incorporating multi-head self-attention [22], and deploying multi-scale feature refinement modules [23] to strengthen geometric feature perception and defect information extraction. Although these advances have markedly improved performance and efficiency, practical deployment still faces substantial hurdles. First, multi-scale damage features (especially micro-cracks) on blade surfaces are easily obscured by complex and variable backgrounds (e.g., sky, terrain, stains, and varying illumination), resulting in high miss rates. Second, there is an inherent tension between the low-cost, real-time requirements of inspection tasks deployed on edge devices and the demand for high detection accuracy. Crucially, existing methods struggle to perceive damage-induced local symmetry changes [24,25] against challenging backgrounds while simultaneously balancing high precision and low latency [26,27].

The NMS-free [28] dual label assignment strategy recently introduced in YOLOv10 [29] significantly improves inference efficiency, and its lightweight variant YOLOv10-N shows considerable potential for edge deployment. However, because of interference from the complex backgrounds around turbine blades and the multi-scale nature of the damage itself, YOLOv10-N is insufficiently sensitive to subtle symmetry breaking (such as fine cracks), which leads to suboptimal detection accuracy and recall in real-world wind farm environments.

To overcome these challenges, namely identifying damage-induced symmetry breaking and reconciling the accuracy–efficiency trade-off, we introduce the MMC-YOLO detection framework. Built on the efficient backbone of YOLOv10-N, the framework incorporates targeted architectural optimizations. Its core innovations significantly enhance the model's ability to perceive, fuse, and represent multi-scale damage features, which manifest as local symmetry changes on the blade surface, while maintaining highly efficient inference:

- We propose a Multi-Scale Perception Gated Convolution (MSGConv) Module. Leveraging multi-branch structures and channel recombination, this module establishes symmetry in feature utilization to alleviate channel isolation, thereby constructing a balanced multi-scale perceptual space.

- We design a Multi-Scale Enhanced Feature Pyramid Network (MSEFPN). This incorporates dynamic path selection and adaptive feature replacement mechanisms to bolster the robustness of feature fusion. Furthermore, it employs the SENetv2 attention mechanism to dynamically amplify the response strength of damage features and suppress irrelevant background interference.

- We propose a Channel-Compensated Filtering (CCF) Module. This module utilizes an adaptive compression strategy based on channel energy distribution. This strategy dynamically buffers the dimensionality reduction process, thereby maximizing the retention of core defect information.

2. Related Works

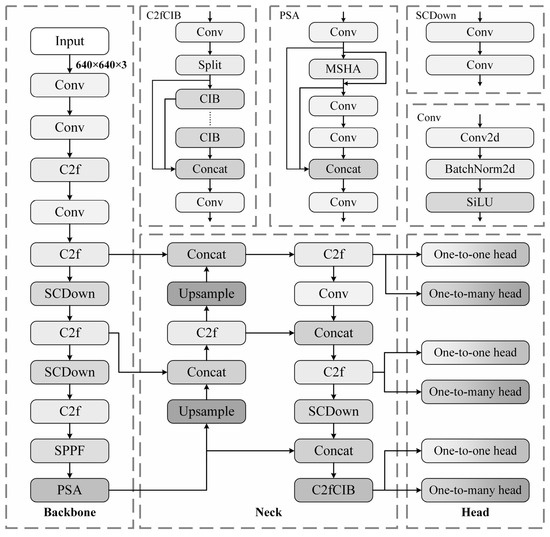

In the field of industrial real-time detection, single-stage object detection algorithms have demonstrated significant advantages due to their linear computational complexity and inherent end-to-end deployment capability. Among these, the YOLO series has become the mainstream solution in industrial applications, thanks to its remarkable balance between detection speed and accuracy. The recently released YOLOv10 represents a breakthrough advancement: as an iterative progression of YOLOv8, this model retains the efficient core architecture while fundamentally restructuring the post-processing mechanism and optimizing key architectural components. This has led to improved detection accuracy and inference speed with fewer parameters. YOLOv10 offers a multi-scale architecture system with versions N/S/M/B/L/X. Its ultra-lightweight version, YOLOv10-N (as detailed in Figure 1), is particularly suitable for deployment in industrial edge computing scenarios, where stringent resource constraints are common.

Figure 1.

Architecture of the YOLOv10-N network model.

2.1. Backbone Network: Efficient Extraction of Image Features

YOLOv10-N focuses on eliminating computational redundancy. It introduces a spatial–channel decoupled down-sampling (SCDown) module that separates the spatial down-sampling and channel transformation performed jointly by a conventional stride-2 3 × 3 convolution. It first adjusts the channel dimension with a 1 × 1 pointwise convolution and then reduces the spatial resolution with a stride-2 depthwise convolution, significantly reducing both computational cost and parameter count. This is particularly effective for shallow layers and high-resolution inputs. To limit the computational overhead of self-attention, YOLOv10-N employs an efficient partial self-attention (PSA) module, which splits the feature channels evenly into two halves and applies NPSA blocks (multi-head self-attention followed by a feed-forward network) to only one half. PSA is deployed only at the end of the backbone, balancing global modeling with computational efficiency.
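
The decoupling performed by SCDown can be illustrated with a short PyTorch sketch (PyTorch being the framework used in our experiments). The layer order follows the description above, a 1 × 1 pointwise convolution followed by a stride-2 depthwise convolution; the class name, the BatchNorm/SiLU placement, and the `conv_weight_count` helper are assumptions for illustration, not the official YOLOv10 code.

```python
import torch
import torch.nn as nn


class SCDown(nn.Module):
    """Spatial-channel decoupled down-sampling (illustrative sketch, not the official code)."""

    def __init__(self, c_in: int, c_out: int, k: int = 3, s: int = 2):
        super().__init__()
        # 1x1 pointwise conv: changes the channel count only, keeps the resolution
        self.pw = nn.Sequential(
            nn.Conv2d(c_in, c_out, 1, bias=False), nn.BatchNorm2d(c_out), nn.SiLU()
        )
        # kxk depthwise conv with stride s: reduces the resolution, one filter per channel
        self.dw = nn.Sequential(
            nn.Conv2d(c_out, c_out, k, s, k // 2, groups=c_out, bias=False),
            nn.BatchNorm2d(c_out),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.dw(self.pw(x))


def conv_weight_count(module: nn.Module) -> int:
    """Number of convolution weights (BatchNorm parameters excluded)."""
    return sum(m.weight.numel() for m in module.modules() if isinstance(m, nn.Conv2d))


x = torch.randn(1, 64, 64, 64)
print(SCDown(64, 128)(x).shape)  # torch.Size([1, 128, 32, 32])
print(conv_weight_count(SCDown(64, 128)))  # 64*128 + 128*9 = 9344
print(conv_weight_count(nn.Conv2d(64, 128, 3, 2, 1, bias=False)))  # 64*128*9 = 73728
```

For this 64 → 128 channel example, the decoupled layer needs roughly one eighth of the convolution weights of a standard stride-2 3 × 3 convolution while producing the same output shape.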

2.2. Neck Network: A Key Hub for Multi-Scale Feature Fusion

YOLOv10 uses compact inverted residual blocks (CIB) as its basic unit and relies on rank analysis to suppress channel redundancy. The CIB combines depthwise and pointwise convolutions to mix spatial and channel information efficiently. In deeper stages, large-kernel depthwise convolutions expand the receptive field of deep features, and structural reparameterization preserves inference efficiency. Building on this, the CIB design is integrated into YOLOv8's C2f module, which retains the residual connections of the ELAN architecture, to form the C2fCIB module and improve feature fusion efficiency. Finally, the neck combines the PAFPN path with C2fCIB modules to form a bidirectional cross-scale fusion mechanism that integrates bottom-up feature enhancement with top-down semantic refinement.
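
A simplified CIB-style block can be sketched as follows. This assumes the depthwise/pointwise ordering commonly used for CIB (3 × 3 depthwise, 1 × 1 pointwise, depthwise, 1 × 1 pointwise, 3 × 3 depthwise) and a 7 × 7 kernel as the "larger kernel" for deep stages; structural reparameterization is omitted because it only fuses branches at inference time. The helper `conv_bn_act` is hypothetical.

```python
import torch
import torch.nn as nn


def conv_bn_act(c_in, c_out, k=1, groups=1, act=True):
    """Conv -> BatchNorm -> SiLU (activation optional), 'same' padding for odd k."""
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, k, 1, k // 2, groups=groups, bias=False),
        nn.BatchNorm2d(c_out),
        nn.SiLU() if act else nn.Identity(),
    )


class CIB(nn.Module):
    """Compact inverted block sketch: depthwise/pointwise mixing plus a residual connection."""

    def __init__(self, c: int, e: float = 0.5, large_kernel: bool = False):
        super().__init__()
        c_mid = 2 * int(c * e)
        k = 7 if large_kernel else 3  # larger depthwise kernel for deep stages (assumed size)
        self.block = nn.Sequential(
            conv_bn_act(c, c, 3, groups=c),              # depthwise: spatial mixing
            conv_bn_act(c, c_mid, 1),                    # pointwise: channel mixing
            conv_bn_act(c_mid, c_mid, k, groups=c_mid),  # depthwise, optionally large kernel
            conv_bn_act(c_mid, c, 1),                    # pointwise: back to c channels
            conv_bn_act(c, c, 3, groups=c),              # final depthwise
        )

    def forward(self, x):
        return x + self.block(x)  # residual connection keeps input and output shapes equal


x = torch.randn(2, 32, 40, 40)
print(CIB(32, large_kernel=True)(x).shape)  # torch.Size([2, 32, 40, 40])
```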

2.3. Head Network: Direct Determinants of End-to-End Detection Performance

YOLOv10 employs a dual-branch detection head: for each feature map scale output by the neck, a one-to-many head (which inherits the traditional YOLO multi-positive supervision strategy to provide rich training signals) and a one-to-one head (which applies strict one-to-one label assignment to enable NMS-free inference) are configured in parallel. Both branches share the features extracted by the backbone and neck and are optimized jointly during training. The matching metric used for label assignment is defined by the unified expression:

$$m(\alpha, \beta) = s \cdot p^{\alpha} \cdot \mathrm{IoU}(\hat{b}, b)^{\beta}$$

where $s$ is the spatial prior factor (indicating whether the anchor point of a prediction lies within the target instance), $p$ is the classification confidence, and $\hat{b}$ and $b$ are the predicted and ground-truth bounding boxes, respectively. $\alpha$ and $\beta$ are hyperparameters that balance the classification and localization tasks. Because only the one-to-one branch is needed at inference, this design removes the traditional detector's reliance on NMS, significantly reducing inference latency and simplifying deployment.
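
To make the matching metric concrete, the sketch below evaluates $m = s \cdot p^{\alpha} \cdot \mathrm{IoU}^{\beta}$ for every prediction/ground-truth pair and, as in one-to-one assignment, keeps the single highest-scoring prediction per object. The values α = 0.5 and β = 6.0 are illustrative assumptions, and the toy boxes and scores are made up.

```python
import torch


def pairwise_iou(pred: torch.Tensor, gt: torch.Tensor) -> torch.Tensor:
    """IoU of every prediction (N, 4) against every ground truth (M, 4); boxes are (x1, y1, x2, y2)."""
    lt = torch.max(pred[:, None, :2], gt[None, :, :2])  # top-left corner of intersection, (N, M, 2)
    rb = torch.min(pred[:, None, 2:], gt[None, :, 2:])  # bottom-right corner of intersection
    inter = (rb - lt).clamp(min=0).prod(dim=-1)
    area_p = (pred[:, 2:] - pred[:, :2]).prod(dim=-1)
    area_g = (gt[:, 2:] - gt[:, :2]).prod(dim=-1)
    return inter / (area_p[:, None] + area_g[None, :] - inter)


def matching_metric(s, p, pred_boxes, gt_boxes, alpha=0.5, beta=6.0):
    """m = s * p**alpha * IoU**beta for every (prediction, ground truth) pair, shape (N, M)."""
    return s * p.pow(alpha) * pairwise_iou(pred_boxes, gt_boxes).pow(beta)


pred = torch.tensor([[0., 0., 10., 10.], [0., 0., 5., 5.], [20., 20., 30., 30.]])
gt = torch.tensor([[0., 0., 10., 10.]])
s = torch.tensor([[1.], [1.], [0.]])         # third anchor lies outside the instance
p = torch.tensor([[0.90], [0.95], [0.99]])  # scores for the ground-truth class
m = matching_metric(s, p, pred, gt)
print(m.argmax(dim=0))  # one-to-one: best prediction per ground truth -> tensor([0])
```

The large β makes localization quality dominate: the second prediction has a higher class score but only 0.25 IoU, so its metric collapses to roughly 2.4e-4.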

To meet the demanding requirements of wind turbine blade damage detection, namely high precision, real-time responsiveness, and deployment efficiency, this study adopts YOLOv10 as the foundational detection framework. Unlike other YOLO variants such as YOLOv5, YOLOv8, YOLOv9 [30], YOLOv11 [31], and YOLOv12 [32], which rely on non-maximum suppression (NMS), YOLOv10 avoids the inference delay introduced by NMS through its NMS-free inference architecture. Moreover, while some of these variants improve detection performance via reversible modules or multi-head self-attention mechanisms, such strategies often increase computational complexity or impose substantial demands on hardware resources. In contrast, YOLOv10 introduces a training–inference decoupling strategy that effectively balances accuracy and computational efficiency. In particular, its lightweight variant YOLOv10-N is well suited to high-frequency, real-time inspection tasks in resource-constrained environments, making it a strong candidate for deployment in wind energy infrastructure.

Nevertheless, several challenges persist in applying YOLOv10-N to wind turbine blade damage detection. First, blade damage typically exhibits pronounced multi-scale characteristics, ranging from microscopic cracks to macroscopic defects. Traditional convolutional networks, constrained by their fixed receptive fields, struggle to simultaneously capture features across such diverse scales. Second, current feature fusion mechanisms often lack robustness to variations in scale and perspective, thereby increasing susceptibility to background noise and reducing sensitivity to subtle damage patterns. Third, although channel compression techniques enhance model efficiency, they may inadvertently suppress critical information. In particular, cross-channel energy collapse can result in the loss of weak but important features, such as those associated with early-stage micro-cracks. These limitations underscore the necessity for structural innovations and clarify the technical direction pursued in this study.

3. Methods

Transformer architectures, underpinned by their self-attention mechanism—particularly multi-head self-attention—exhibit a strong capacity for modeling complex, long-range dependencies across spatial positions. This enables a more comprehensive understanding of global contextual information, which is especially advantageous for detecting multi-scale damage patterns and subtle geometric asymmetries in wind turbine blades. Nevertheless, existing Transformer-based object detectors, such as YOLO-World [33] and RT-DETR [34], face significant obstacles in practical deployment on edge devices. These challenges primarily stem from the high computational complexity, substantial memory footprint, and reduced inference speed associated with self-attention operations. Furthermore, despite their proficiency in global context modeling, Transformer models often exhibit diminished sensitivity to fine-grained local features, particularly under cluttered or complex background conditions—thereby limiting their effectiveness in precision-critical defect detection scenarios.

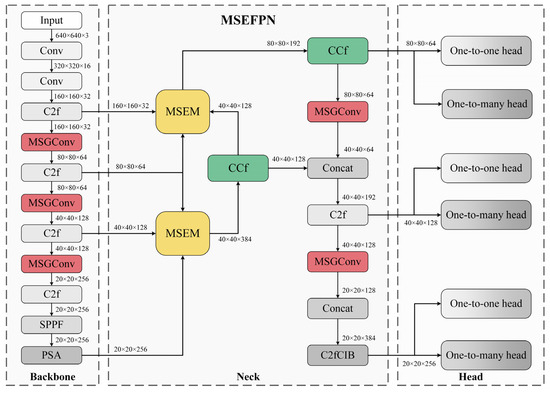

To address these limitations, we propose MMC-YOLO, a detection framework that combines the global feature modeling capability of Transformers with the efficient inference of YOLOv10-N. Its architecture, illustrated in Figure 2, is organized into three sequential stages: multi-scale perception, contextual feature fusion, and information compression. By embedding three core modules into the backbone and neck networks, MMC-YOLO establishes a closed-loop collaborative mechanism that substantially enhances the model's ability to capture, integrate, and represent multi-scale damage features. This is achieved without compromising inference speed, making the model suitable for real-time deployment in resource-constrained wind energy environments. The core modules and their functions are summarized in Table 1.

Figure 2.

Architecture of the MMC-YOLO network model.

Table 1.

Core modules of MMC-YOLO and their functions.

3.1. MSGConv Module

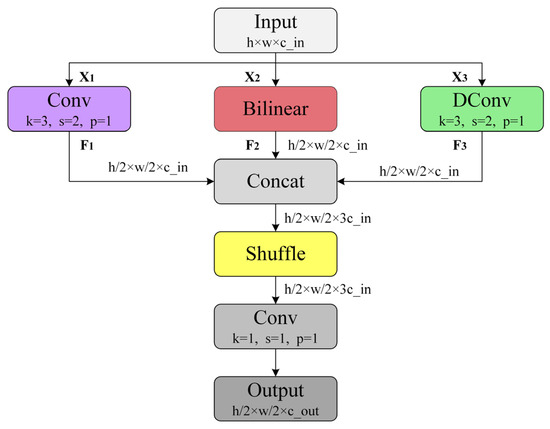

Traditional convolutions with a single receptive field show significant limitations in detecting surface damage on wind turbine blades. They either focus on micro-detail analysis, failing to capture the spatial topology of meter-scale damage, or concentrate on macro-structural recognition, losing information about microscopic damage such as millimeter-scale micro-cracks. They therefore cannot meet the extreme multi-scale detection requirement, which spans three orders of magnitude from millimeter-scale micro-cracks to meter-scale structural damage. To address this challenge, we designed the Multi-Scale Perception Gated Convolution (MSGConv) module shown in Figure 3, which bridges this capability gap by constructing a multi-scale representation in feature space. MSGConv employs a three-branch collaborative architecture that processes the input features hierarchically to achieve comprehensive coverage of damage features:

Figure 3.

Structure of the MSGConv network model.

- Macro-structural Perception Branch: This branch extracts low-frequency structural features. It applies a standard 3 × 3 convolution to the input feature map $X_1$, followed by a ReLU activation, $F_1 = \mathrm{ReLU}(W_{3\times3} * X_1)$, where $W_{3\times3}$ denotes the standard convolution kernel and $*$ denotes convolution. This operation extends the receptive field to decimeter-scale damage, enabling accurate capture of the spatial topology and edge fractures of large corroded areas on the blades, and provides a crucial spatial semantic foundation for segmenting damage regions.

- Micro-detail Fidelity Branch: To preserve high-fidelity details such as micro-cracks, this branch adopts a sub-pixel processing strategy that avoids the information loss caused by down-sampling. Specifically, it processes the feature $X_2$ with a bilinear interpolation branch, $F_2 = \mathrm{Bilinear}(X_2) \odot M$, where $M$ is the spatial binary mask of the micro-crack region and $\odot$ is the Hadamard (element-wise) product. This design prevents attenuation of high-frequency signals, enabling the model to precisely capture the edge discontinuities characteristic of micro-cracks.

- Depth Decoupling Enhancement Branch: This branch represents local material heterogeneity. It applies depthwise convolution for channel-level feature enhancement, $F_3^{(c)} = \mathcal{D}(X_3^{(c)})$, where the superscript $(c)$ indexes the channel and $\mathcal{D}$ denotes the depthwise convolution operator applied to each channel independently. This strengthens the material heterogeneity response in localized regions of the blade surface, significantly increasing the activation for microscopic damage such as coating delamination and stress cracks.
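
The three branches can be sketched as a PyTorch module. This is one possible reading under stated assumptions: the input is split evenly into $X_1$, $X_2$, $X_3$ along the channel axis; the binary mask $M$ is replaced by a learned soft mask (the sigmoid of a 1 × 1 convolution), because the text does not say how $M$ is obtained; and the sub-pixel branch upsamples bilinearly by 2, applies the mask, and then folds the result back to the original resolution losslessly with `nn.PixelUnshuffle`. The class name `MSGConvBranches` is hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MSGConvBranches(nn.Module):
    """Hypothetical three-branch front end of MSGConv; channels are split evenly into X1, X2, X3."""

    def __init__(self, c: int):
        super().__init__()
        assert c % 3 == 0, "this sketch assumes the channel count is divisible by 3"
        cb = c // 3
        # Branch 1 (macro-structural): standard 3x3 convolution + ReLU
        self.macro = nn.Sequential(nn.Conv2d(cb, cb, 3, padding=1), nn.ReLU(inplace=True))
        # Branch 2 (micro-detail): learned soft mask standing in for the binary mask M,
        # then a lossless space-to-depth fold back to the input resolution
        self.mask = nn.Conv2d(cb, 1, 1)
        self.fold = nn.Sequential(nn.PixelUnshuffle(2), nn.Conv2d(4 * cb, cb, 1))
        # Branch 3 (depth decoupling): per-channel (depthwise) 3x3 convolution
        self.depthwise = nn.Conv2d(cb, cb, 3, padding=1, groups=cb)

    def forward(self, x: torch.Tensor):
        x1, x2, x3 = torch.chunk(x, 3, dim=1)
        f1 = self.macro(x1)
        up = F.interpolate(x2, scale_factor=2, mode="bilinear", align_corners=False)
        f2 = self.fold(up * torch.sigmoid(self.mask(up)))  # Hadamard product with the mask
        f3 = self.depthwise(x3)
        return f1, f2, f3


branches = MSGConvBranches(48)
f1, f2, f3 = branches(torch.randn(2, 48, 32, 32))
print(f1.shape, f2.shape, f3.shape)  # each torch.Size([2, 16, 32, 32])
```

All three outputs share the same shape, so they can be concatenated along the channel axis before the Channel Shuffle step.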

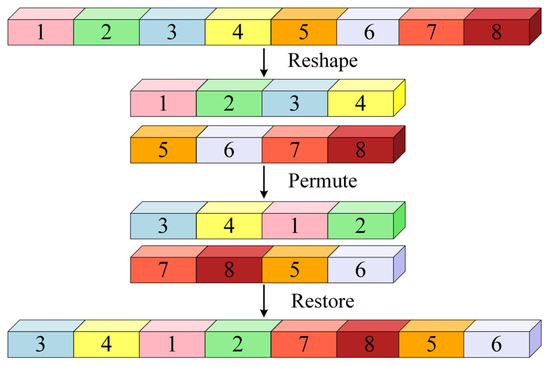

Wind turbine blade defects exhibit strong inter-channel feature coupling. For instance, crack damage requires the simultaneous activation of texture channels (representing surface material changes) and edge channels (capturing discontinuities in geometric contours). However, the outputs of MSGConv's branches, namely the macro-structural features $F_1$, micro-detail features $F_2$, and material heterogeneity features $F_3$, lie in feature spaces of different scales and semantic types and are isolated from one another at the channel level. Fusing them directly would yield insufficient inter-branch feature correlation. To address this issue, MSGConv introduces a Channel Shuffle mechanism [35], as shown in Figure 4:

$$F_{\mathrm{out}} = \Pi_g\left(\mathrm{Concat}(F_1, F_2, F_3)\right)$$

where $\Pi_g(\cdot)$ is the channel permutation function and $g$ is the grouping hyperparameter. This mechanism breaks the channel group boundaries so that features from different branches are interleaved along the channel dimension. In wind turbine blade detection, this enhances inter-channel feature interaction and enables the model to jointly represent compound, multi-scale damage patterns such as "micro-cracks extending along the edge of large corrosion areas" or "stress concentration within delaminated coating regions."
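
The permutation $\Pi_g$ can be sketched with the standard ShuffleNet-style reshape-transpose-flatten trick. The toy input labels each channel with the index of the branch it came from, so the interleaving is visible; the function name is illustrative.

```python
import torch


def channel_shuffle(x: torch.Tensor, groups: int) -> torch.Tensor:
    """Interleave channels across `groups` equal groups (ShuffleNet-style)."""
    b, c, h, w = x.shape
    assert c % groups == 0, "channel count must be divisible by groups"
    # (B, g, C/g, H, W) -> swap the group and within-group axes -> flatten back to (B, C, H, W)
    return x.view(b, groups, c // groups, h, w).transpose(1, 2).reshape(b, c, h, w)


# Label every channel with its branch id: F1 -> 1, F2 -> 2, F3 -> 3 (two channels each)
f1, f2, f3 = (torch.full((1, 2, 4, 4), float(k)) for k in (1, 2, 3))
mixed = channel_shuffle(torch.cat([f1, f2, f3], dim=1), groups=3)
print(mixed[0, :, 0, 0].tolist())  # [1.0, 2.0, 3.0, 1.0, 2.0, 3.0]
```

After the shuffle, every consecutive triple of channels contains one channel from each branch, so a subsequent grouped or pointwise convolution sees macro, micro, and material features together.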

Figure 4.

The diagram of the Channel Shuffle operation.

3.2. MSEFPN Architecture

The original path aggregation feature pyramid network (PAFPN) has significant shortcomings in wind turbine blade damage detection. Its fixed feature fusion paths cannot adapt to the extreme scale difference between a macro view of the entire blade and the millimeter-scale details of micro-cracks in blade images, and it struggles with geometric distortions caused by viewpoint changes, limiting its ability to detect sub-pixel micro-damage. In addition, its linear fusion mechanism aggravates the confusion between background interference (such as rust, dirt, and vegetation occlusion) and subtle defect features. The root cause is that PAFPN does not differentiate features at the channel level, so damage-related channels and noise-dominated channels are fused with equal weight.

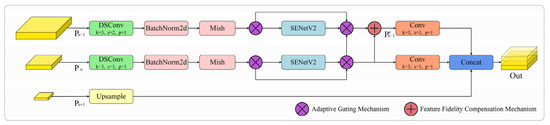

To overcome these bottlenecks, we propose the MSEFPN, which addresses these technical limitations through the Multi-Scale Enhancement Module (MSEM). As shown in Figure 5, MSEM employs a hierarchical adaptive decision mechanism that dynamically evaluates the informational value of features. It enables the adaptive fusion of global structural information, such as the overall blade shape, with local detailed features, such as crack edge topology. Moreover, it integrates the SENetv2 channel attention mechanism [36] for feature recalibration, enhancing the spectral response of defect-related channels, such as micro-crack textures, while suppressing ineffective channels dominated by background interference. This significantly improves the distinguishability of damage features and effectively solves the technical challenges present in PAFPN.

Figure 5.

Structure of the MSEM network model.

As shown in Figure 5, MSEM processes the multi-scale features $F_{l-1}$, $F_l$, and $F_{l+1}$ from adjacent pyramid levels. First, the spatial dimensions of $F_{l-1}$ and $F_{l+1}$ are adjusted to match the resolution of the target level $l$ through depthwise separable convolution (DSConv) or upsampling, which achieves spatial alignment efficiently and lays the groundwork for subsequent fusion. Batch normalization (BN) is then applied to stabilize the feature distribution. Next, the Mish activation function enhances the model's ability to represent complex damage textures (e.g., micro-cracks and localized corrosion), improving the robustness and sensitivity of the features to subtle damage signals.
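
The alignment step can be sketched as follows, assuming that the finer neighbouring level has twice the target resolution (brought down with a stride-2 DSConv) and the coarser level has half of it (brought up with nearest-neighbour upsampling plus a 1 × 1 convolution to match channels). The channel counts, the upsampling mode, and the names `DSConvDown` and `ScaleAlign` are illustrative assumptions; the subsequent fusion rule is not modeled here.

```python
import torch
import torch.nn as nn


class DSConvDown(nn.Sequential):
    """Depthwise-separable stride-2 conv: halves the resolution and maps c_in -> c_out channels."""

    def __init__(self, c_in: int, c_out: int):
        super().__init__(
            nn.Conv2d(c_in, c_in, 3, 2, 1, groups=c_in, bias=False),  # depthwise, stride 2
            nn.Conv2d(c_in, c_out, 1, bias=False),                      # pointwise
        )


class ScaleAlign(nn.Module):
    """Align the finer (2x) and coarser (1/2x) levels to the target level, then BN + Mish."""

    def __init__(self, c_prev: int, c_cur: int, c_next: int):
        super().__init__()
        self.down = DSConvDown(c_prev, c_cur)
        self.up = nn.Sequential(
            nn.Upsample(scale_factor=2, mode="nearest"), nn.Conv2d(c_next, c_cur, 1, bias=False)
        )
        # Separate BN + Mish for each aligned input
        self.post = nn.ModuleList([nn.Sequential(nn.BatchNorm2d(c_cur), nn.Mish()) for _ in range(3)])

    def forward(self, f_prev, f_cur, f_next):
        aligned = (self.down(f_prev), f_cur, self.up(f_next))
        return [post(f) for post, f in zip(self.post, aligned)]


align = ScaleAlign(32, 64, 128)
outs = align(torch.randn(1, 32, 80, 80), torch.randn(1, 64, 40, 40), torch.randn(1, 128, 20, 20))
print([tuple(o.shape) for o in outs])  # three tensors of shape (1, 64, 40, 40)
```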

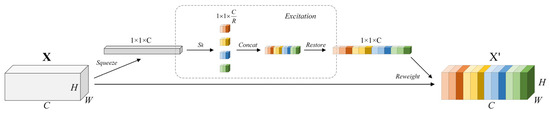

Following this, the SENetv2 channel attention mechanism shown in Figure 6 amplifies defect-related feature responses through channel-level recalibration. Specifically, the input feature tensor $X \in \mathbb{R}^{H \times W \times C}$, where $H$ is the feature height, $W$ is the feature width, and $C$ is the number of channels, undergoes three stages:

Figure 6.

Schematic diagram of the SENetV2 attention mechanism.

- Spatial Compression: Global average pooling (GAP) compresses the spatial dimensions, $z_c = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} X_c(i, j)$, where the subscript $c$ is the channel index. The resulting vector $z \in \mathbb{R}^{C}$ summarizes the global statistics of each channel and forms the basis for modeling channel dependencies.

- Multi-Path Interactive Learning: The vector $z$ is fed to four parallel fully connected (FC) layer groups, $u_k = \delta(W_k z)$, where $W_k \in \mathbb{R}^{(C/r) \times C}$ is the weight of the $k$-th FC group ($k = 1, \dots, 4$), $r$ is the reduction coefficient, and $\delta$ is the ReLU activation. Each parallel, isomorphic FC branch independently learns a set of channel weights, capturing different and complementary dependency patterns among the input feature channels.

- Weight Generation and Feature Re-weighting: The outputs of the four paths are concatenated and passed through a final FC layer that restores the channel dimension, $s = \sigma\left(W_e [u_1; u_2; u_3; u_4]\right)$, where $W_e \in \mathbb{R}^{C \times (4C/r)}$, $\sigma$ is the Sigmoid function, and $s \in \mathbb{R}^{C}$ holds the relevance weight of each channel to damage features. The features are then recalibrated by channel-wise multiplication, $\tilde{X} = s \odot X$, where $\odot$ denotes element-wise multiplication with $s$ broadcast over the spatial dimensions. This selectively amplifies the activations of defect-related channels while suppressing background noise.
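
The three stages map directly onto a small PyTorch module. This is a sketch, not the reference SENetV2 implementation: the reduction coefficient r = 16 is an assumed value, and the tensor layout is PyTorch's (B, C, H, W) rather than H × W × C.

```python
import torch
import torch.nn as nn


class SENetV2Attention(nn.Module):
    """Squeeze (GAP) -> four parallel FC+ReLU paths -> concat -> FC + Sigmoid -> channel re-weighting."""

    def __init__(self, c: int, r: int = 16, paths: int = 4):
        super().__init__()
        hidden = max(c // r, 1)
        self.paths = nn.ModuleList(
            [nn.Sequential(nn.Linear(c, hidden), nn.ReLU(inplace=True)) for _ in range(paths)]
        )
        self.expand = nn.Linear(paths * hidden, c)  # restores the channel dimension

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        z = x.mean(dim=(2, 3))                                  # (B, C): global average pooling
        u = torch.cat([path(z) for path in self.paths], dim=1)  # (B, paths * C/r)
        s = torch.sigmoid(self.expand(u))                       # (B, C): weights in (0, 1)
        return x * s[:, :, None, None]                          # broadcast over H and W


att = SENetV2Attention(64)
x = torch.randn(2, 64, 20, 20)
y = att(x)
print(y.shape)  # torch.Size([2, 64, 20, 20])
```

Because the weights lie in (0, 1), recalibration can only attenuate a channel relative to its input; "amplifying" defect channels therefore means attenuating them less than background channels.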

However, in this design channel recalibration is applied regardless of whether a feature layer contains valid damage information. This wastes computation and may even aggravate background interference by over-processing noisy features. To address this, MSEFPN introduces an adaptive gating mechanism based on a damage-energy metric. By assessing the richness of defect-related information in each feature layer, it triggers SENetv2 channel recalibration only when defect information is insufficient, avoiding redundant computation on high-value features:

$$\hat{F} = \begin{cases} \mathrm{SENetv2}(F), & E(F) < \tau \\ F, & E(F) \geq \tau \end{cases}$$

where $E(F)$ is the "defect energy" of feature $F$, indicating the richness of defect-related information in that feature layer, and $\tau$ is a predefined threshold that determines whether attention refinement is required.
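
The gate can be sketched per sample in a batch. The text does not define how $E(F)$ is computed, so the mean squared activation used here is a hypothetical proxy, and any callable can stand in for the SENetv2 block. Unlike a `torch.where` formulation, indexing only the selected samples means the attention is actually skipped for high-energy features.

```python
import torch


def defect_energy(f: torch.Tensor) -> torch.Tensor:
    """Hypothetical proxy for E(F): mean squared activation of each sample, shape (B,)."""
    return f.pow(2).mean(dim=(1, 2, 3))


def gated_recalibration(f: torch.Tensor, attention, tau: float) -> torch.Tensor:
    """Apply `attention` only to samples whose defect energy is below tau; others pass through."""
    out = f.clone()
    idx = (defect_energy(f) < tau).nonzero(as_tuple=True)[0]
    if idx.numel() > 0:
        out[idx] = attention(f[idx])  # attention is computed only for the selected samples
    return out


weak = torch.full((1, 8, 4, 4), 0.1)     # energy ~0.01, below tau
strong = torch.full((1, 8, 4, 4), 10.0)  # energy 100, above tau
f = torch.cat([weak, strong])
y = gated_recalibration(f, attention=lambda t: 0.5 * t, tau=1.0)
print(y[:, 0, 0, 0])  # weak sample halved by the stand-in attention, strong sample unchanged
```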

In addition, during blade imaging, shallow features are susceptible to distortion from uneven lighting, surface dirt, and other interference. Fusing them directly with deeper semantic features would introduce pseudo-edge information and blur the true defect boundaries. MSEFPN therefore incorporates a feature fidelity compensation mechanism that uses the Structural Similarity Index (SSIM) to quantify the structural consistency between a shallow feature $F_s$ and its aligned adjacent-layer feature $F_a$:

$$F_s' = \begin{cases} F_s, & \mathrm{SSIM}(F_s, F_a) \geq \gamma \\ F_a, & \mathrm{SSIM}(F_s, F_a) < \gamma \end{cases}$$

where $\mathrm{SSIM}(F_s, F_a)$ measures the structural fidelity between $F_s$ and $F_a$, and $\gamma$ is a predefined similarity threshold. This prevents distorted features from contaminating the fusion result, so that the fused features retain valid defect information and feature fusion becomes more reliable.
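
A minimal sketch of the fidelity check, assuming a single global SSIM per sample (no sliding window, which is a simplification of the usual windowed SSIM), the conventional constants $C_1 = 10^{-4}$ and $C_2 = 9 \times 10^{-4}$ for a unit dynamic range, an illustrative threshold γ = 0.5, and replacement of a low-fidelity shallow feature by its aligned neighbour. The function names are hypothetical.

```python
import torch


def global_ssim(a: torch.Tensor, b: torch.Tensor, c1: float = 1e-4, c2: float = 9e-4) -> torch.Tensor:
    """SSIM computed once over each whole sample (no sliding window), shape (B,)."""
    dims = (1, 2, 3)
    mu_a, mu_b = a.mean(dim=dims, keepdim=True), b.mean(dim=dims, keepdim=True)
    var_a = (a - mu_a).pow(2).mean(dim=dims)
    var_b = (b - mu_b).pow(2).mean(dim=dims)
    cov = ((a - mu_a) * (b - mu_b)).mean(dim=dims)
    mu_a, mu_b = mu_a.flatten(), mu_b.flatten()
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / ((mu_a**2 + mu_b**2 + c1) * (var_a + var_b + c2))


def fidelity_gate(f_shallow: torch.Tensor, f_adjacent: torch.Tensor, gamma: float = 0.5) -> torch.Tensor:
    """Keep f_shallow where SSIM >= gamma; otherwise substitute the aligned adjacent feature."""
    keep = (global_ssim(f_shallow, f_adjacent) >= gamma).view(-1, 1, 1, 1)
    return torch.where(keep, f_shallow, f_adjacent)


torch.manual_seed(0)
a = torch.randn(1, 8, 32, 32)
b = torch.randn(1, 8, 32, 32)  # structurally unrelated to a
print(global_ssim(a, a).item())             # 1.0 up to rounding (identical inputs)
print(torch.equal(fidelity_gate(a, b), b))  # True: low SSIM, so the adjacent feature is used
```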

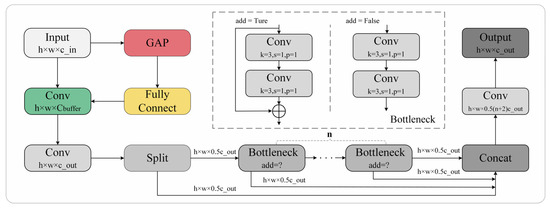

3.3. CCF Module

During feature extraction, deep neural networks commonly reduce channel dimensions to lower computational load and model complexity. However, each channel can carry information that is critical for accurate detection. Surface defects on wind turbine blades often span multiple channels; for instance, micro-cracks may simultaneously activate channels responsible for edge detection, texture enhancement, and noise suppression. In the conventional C2f module, channel reduction from $C_{in}$ to $C_{out}$ can be expressed as:

$$Y = K X, \qquad K \in \mathbb{R}^{C_{out} \times C_{in}}$$

where $X \in \mathbb{R}^{C_{in} \times H \times W}$ is the input feature tensor and $K$ is the weight matrix of a 1 × 1 convolution, applied at every spatial position. This projection inevitably causes a collapse of cross-channel feature energy: if the channels carrying defect-related features are pruned, the corresponding information is permanently lost.

To quantify this loss, we define the Channel Preservation Rate (CPR) as follows:

$$\mathrm{CPR} = \frac{\sum_{c=1}^{C_{out}} \lVert y_c \rVert_2^2}{\sum_{c=1}^{C_{in}} \lVert x_c \rVert_2^2}$$

where $x_c$ and $y_c$ are the activations of the defect feature on channel $c$ before and after reduction, respectively, and $\lVert \cdot \rVert_2^2$ is the squared L2 norm, which reflects feature energy. For micro-cracks spanning 3 to 5 channels, conventional methods yield a preservation rate of only 0.4 to 0.6, meaning that 40–60% of the feature energy is lost. This degradation significantly impairs detection performance: on the one hand, already weak defect signals (typically <1% of total image energy) are further attenuated, increasing the miss rate for small targets; on the other hand, the loss of cross-channel context (e.g., blade contours) aggravates localization errors at damage boundaries.
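
A worked toy example of the preservation rate: a defect that activates five channels with equal energy is passed through a hard reduction that keeps only the first four of eight channels, so two of the five defect channels survive. The channel layout and the selection matrix are invented for illustration; a learned 1 × 1 projection would mix channels rather than simply drop them.

```python
import torch


def channel_preservation_rate(x_in: torch.Tensor, y_out: torch.Tensor) -> float:
    """Ratio of defect-feature energy (squared L2 norm) after vs. before channel reduction."""
    return (y_out.pow(2).sum() / x_in.pow(2).sum()).item()


c_in, c_out = 8, 4
x = torch.zeros(c_in)
x[2:7] = 1.0                # a defect activating 5 channels (2..6) with equal energy
K = torch.eye(c_out, c_in)  # hard reduction: keeps channels 0..3, drops 4..7
y = K @ x                   # only channels 2 and 3 of the defect survive
print(channel_preservation_rate(x, y))  # about 0.4 (2 of 5 unit-energy channels kept)
```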

To address this challenge, we propose the CCF module shown in Figure 7, which introduces a dynamic buffered reduction strategy into the C2f structure. It adapts channel compression to the cross-channel nature of blade defect features by decomposing dimensionality reduction into two learnable stages. First, a buffer width $C_b$ is predicted to mitigate abrupt feature loss:

$$z = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} X(:, i, j), \qquad C_b = \mathbf{w}_b^{\top} z + b_b$$

where $H$ and $W$ are the height and width of the feature map, $z$ is the global-average-pooled channel statistic, $\mathbf{w}_b$ is the weight of the fully connected layer used to predict the optimal buffer width, and $b_b$ is the bias term. Second, the feature tensor is projected in two steps:

$$X_b = K_1 X, \qquad Y = K_2 X_b, \qquad K_1 \in \mathbb{R}^{C_b \times C_{in}},\ K_2 \in \mathbb{R}^{C_{out} \times C_b}$$

where $K_1$ and $K_2$ are the projection weights of the buffer and output stages, respectively. This two-stage projection is functionally equivalent to a single transformation $Y = (K_2 K_1) X$, but it offers distinct advantages: $K_1$ preserves the integrity of cross-channel features, while $K_2$ focuses on pruning redundant background channels.
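
The two-stage projection and its equivalence to a single linear map can be checked numerically. The buffer-width predictor below is a hypothetical mapping (GAP, then an FC layer, then a sigmoid ratio interpolated between $C_{out}$ and $C_{in}$), because the text does not specify how the raw FC output is turned into a channel count; the module is built after the width is predicted, since a standard convolution cannot change its width per input. All names are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def predict_buffer_width(x: torch.Tensor, fc: nn.Linear, c_in: int, c_out: int) -> int:
    """Hypothetical mapping: GAP -> FC -> sigmoid ratio, interpolated between c_out and c_in."""
    z = x.mean(dim=(2, 3))                      # global average pooling over H and W
    ratio = torch.sigmoid(fc(z)).mean().item()  # batch-averaged scalar in (0, 1)
    return int(round(c_out + ratio * (c_in - c_out)))


class BufferedReduction(nn.Module):
    """Two-stage 1x1 projection C_in -> C_b -> C_out (buffer stage K1, output stage K2)."""

    def __init__(self, c_in: int, c_buf: int, c_out: int):
        super().__init__()
        self.k1 = nn.Conv2d(c_in, c_buf, 1, bias=False)
        self.k2 = nn.Conv2d(c_buf, c_out, 1, bias=False)

    def forward(self, x):
        return self.k2(self.k1(x))


x = torch.randn(2, 128, 16, 16)
c_buf = predict_buffer_width(x, nn.Linear(128, 1), c_in=128, c_out=64)
red = BufferedReduction(128, c_buf, 64)
# Both stages are linear, so they collapse into one 1x1 conv with weight K2 @ K1
k_eff = (red.k2.weight.flatten(1) @ red.k1.weight.flatten(1))[:, :, None, None]
print(c_buf, torch.allclose(red(x), F.conv2d(x, k_eff), atol=1e-4))
```

The equivalence shows that any benefit of the buffer comes from how the two factors are learned and regularized, not from added expressive power of the composed linear map.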

Figure 7.

Structure of the CCF network model.

This strategy significantly improves the preservation rate of defect features, enabling the detection head to receive more complete and informative feature representations. Notably, the CCF module is selectively deployed after the MSEM, where the channel reduction is most aggressive. This deliberate placement ensures maximal benefit from dynamic reduction while avoiding excessive computational overhead.

4. Experimental Configuration

4.1. Experimental Environment and Parameter Settings

All experiments were implemented using the PyTorch 2.0.1 deep learning framework, with the development environment configured on Python 3.9.19. The model was trained and evaluated on a workstation running Windows 10, accelerated by CUDA 12.5. Dataset annotation was conducted using the open-source tool LabelImg. The hardware configuration comprised an Intel® Core™ i9-10900X CPU (Santa Clara, CA, USA), an NVIDIA RTX 4070 GPU (Santa Clara, CA, USA), and 128 GB of system memory. The detailed experimental settings are presented in Table 2. All hyperparameters strictly followed the official YOLOv10-N implementation to ensure fair benchmarking and reproducibility.

Table 2.

Network parameter settings.

4.2. Datasets

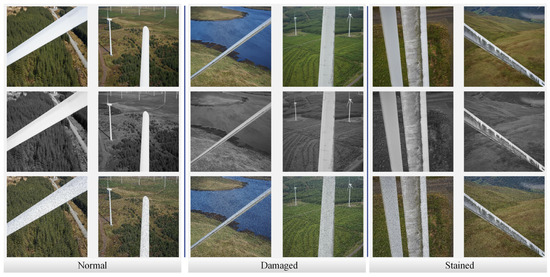

This study presents the construction of a wind turbine blade surface defect dataset, based on images acquired by unmanned aerial vehicles (UAVs) during routine inspection operations at wind farms located in Yandun, Hami, Xinjiang. From the collected raw imagery, a total of 1651 high-quality images were selected as valid samples. To enhance dataset diversity and improve the generalization ability of detection models, two data augmentation techniques—weighted grayscale transformation and salt-and-pepper noise injection—were applied, resulting in an expanded dataset comprising 4818 images.

All images were carefully annotated according to the visual characteristics of typical blade defects and assigned to three classes: Normal (defect-free blades), Damaged (structural defects including cracks, breakage, and coating delamination), and Stained (surface contamination such as oil or grime). The numbers of annotated instances in these categories were 1586, 2112, and 1903, respectively, for a total of 5601 labeled instances. Each instance was annotated with a bounding box and an associated class label.

The dataset was randomly partitioned into training, validation, and test subsets at a ratio of 7:2:1. Annotation was performed with the LabelImg tool, which initially produced XML files in the VOC2007 format. These files were then converted into YOLO-format TXT files with a custom script for use with the standard YOLO training framework. Representative annotated images are shown in Figure 8, illustrating the structural distinctions and visual variability among the defect categories.
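The custom conversion script is not described further. As an illustration only, the sketch below shows one common way to carry out the two steps just described: converting a VOC2007 XML annotation into YOLO TXT lines (class index followed by box center, width, and height, each normalized by the image size) and randomly splitting a list of images at 7:2:1. It assumes the standard VOC fields (`size/width`, `size/height`, `object/name`, `object/bndbox`) and a hypothetical class order `Normal, Damaged, Stained`; the function names and the fixed seed are likewise illustrative, not the authors' code.

```python
import random
import xml.etree.ElementTree as ET

# Hypothetical class order; the index written to each TXT line follows this list.
CLASSES = ["Normal", "Damaged", "Stained"]


def voc_xml_to_yolo_lines(xml_text: str, classes=CLASSES) -> list[str]:
    """Convert one VOC2007-style annotation (given as a string) into YOLO TXT lines.

    Each output line is "<class_id> <x_center> <y_center> <width> <height>",
    with the four box values normalized by the image width and height.
    """
    root = ET.fromstring(xml_text)
    img_w = float(root.find("size/width").text)
    img_h = float(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        cls_id = classes.index(obj.find("name").text.strip())
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(tag).text)
                                  for tag in ("xmin", "ymin", "xmax", "ymax"))
        xc = (xmin + xmax) / 2.0 / img_w   # normalized box center
        yc = (ymin + ymax) / 2.0 / img_h
        bw = (xmax - xmin) / img_w         # normalized box size
        bh = (ymax - ymin) / img_h
        lines.append(f"{cls_id} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}")
    return lines


def split_7_2_1(items, seed=0):
    """Randomly partition items into train/val/test subsets at roughly 7:2:1."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(0.7 * len(items))
    n_val = round(0.2 * len(items))
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])
```

Whether augmented copies were assigned to the same subset as their source images is not stated; grouping each original image with its augmented variants before splitting is a common precaution against near-duplicate images appearing in both the training and test sets.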

Figure 8.

Representative images of the three blade categories, together with examples of the augmentation effects.

4.3. Image Processing

In wind turbine blade damage detection tasks, actual inspection images often suffer from degraded quality due to sudden lighting changes and environmental disturbances. If the training data lacks scene diversity, the model is prone to overfitting, leading to a significant drop in detection performance in complex natural environments. To address this issue, this study employs two augmentation strategies: Weighted Grayscale Conversion and Salt-and-Pepper Noise Injection, aimed at enhancing the model’s generalization ability.

Weighted Grayscale Conversion computes each output pixel as a weighted sum of the R, G, and B channels, with the channel weights adjusted to simulate human visual perception under varying lighting conditions. This forces the model to learn illumination-invariant damage representations, aiming to improve robustness under extreme conditions such as strong backlighting and low light. Salt-and-Pepper Noise Injection randomly sets pixels to black or white at a noise density of 0.5–5% to simulate real-world noise such as sensor malfunctions or signal transmission interference. This drives the model to extract robust features from degraded images, aiming to improve the recognition of subtle damage in harsh environments such as rain, fog, and dust.
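Because the channel weights and the noise implementation are not stated in this section, the following NumPy sketch makes two assumptions: the default weights are the ITU-R BT.601 luma coefficients (0.299, 0.587, 0.114), exposed as a parameter since the text describes them as adjustable, and the noise density is drawn uniformly from the stated 0.5–5% range when not given. Function names are hypothetical.

```python
import numpy as np


def weighted_grayscale(img_rgb, weights=(0.299, 0.587, 0.114)):
    """Weighted sum of the R, G, B channels, replicated back to 3 channels.

    img_rgb: uint8 array of shape (H, W, 3). The default weights are the
    ITU-R BT.601 luma coefficients (an assumption, not the paper's values).
    """
    w = np.asarray(weights, dtype=np.float64)
    gray = img_rgb.astype(np.float64) @ w            # (H, W)
    gray = np.clip(np.rint(gray), 0, 255).astype(np.uint8)
    # Keep a 3-channel layout so the detector's input shape is unchanged.
    return np.repeat(gray[..., None], 3, axis=-1)


def salt_and_pepper(img, density=None, rng=None, low=0.005, high=0.05):
    """Set a random fraction `density` of pixels to black (0) or white (255)."""
    rng = np.random.default_rng() if rng is None else rng
    if density is None:
        density = rng.uniform(low, high)             # 0.5%-5%, as stated in the text
    out = img.copy()
    h, w = img.shape[:2]
    n_noisy = int(round(density * h * w))
    flat_idx = rng.choice(h * w, size=n_noisy, replace=False)  # distinct pixels
    rows, cols = np.unravel_index(flat_idx, (h, w))
    is_salt = rng.random(n_noisy) < 0.5              # about half salt, half pepper
    out[rows[is_salt], cols[is_salt]] = 255          # all channels -> white
    out[rows[~is_salt], cols[~is_salt]] = 0          # all channels -> black
    return out
```

Returning a three-channel grayscale image is a design choice that lets augmented samples pass through the same network input layer as the color originals.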

4.4. Performance Evaluation

In the performance evaluation of wind turbine blade damage detection models, no single metric can fully represent a model’s performance in complex real-world scenarios. A comprehensive evaluation should therefore cover multiple dimensions, including detection accuracy, localization precision, model complexity, and computational efficiency. Accordingly, this study adopts precision, recall, mean Average Precision (mAP50 and mAP [0.5:0.95]), single-frame post-processing time, number of parameters (Params), and computational cost (GFLOPs) as the core evaluation metrics.

Precision and recall are the fundamental measures of detection accuracy. Precision is the proportion of true positives among all predictions labeled positive, while recall is the proportion of ground-truth objects that the model detects; considered together, they guard against both excessive false positives and excessive false negatives. The average precision (AP) of a class is the area under its precision–recall curve, which is traced out by sweeping the confidence threshold, and mAP is the mean of AP over all classes. mAP50 is computed at an IoU threshold of 0.5 and reflects basic detection capability, whereas mAP [0.5:0.95] averages mAP over IoU thresholds from 0.5 to 0.95 in steps of 0.05 and therefore rewards precise localization of damage targets against complex backgrounds. Post-processing time, a key real-time metric, measures the time from raw model predictions to final detection results and directly affects field inspection efficiency. Params reflects the model’s structural complexity and memory footprint, which constrain deployment on edge devices. GFLOPs counts the floating-point operations required for single-frame inference and serves as an indicator of computational cost, and hence of energy efficiency, on embedded devices. Together, these metrics provide a combined benchmark of both the algorithmic performance and the engineering feasibility of the wind turbine blade damage detection model. The formulas for precision, recall, mAP, and the number of parameters are given in Equations (18)–(22).
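The standard definitions behind these accuracy metrics can be sketched in plain Python as follows. This is a minimal illustration, not the evaluation code used in this study: it assumes that detections have already been matched to ground-truth boxes at a given IoU threshold, and it uses all-point interpolation for AP. Evaluation toolkits differ in interpolation (COCO, for example, samples 101 recall points), so values computed this way may differ slightly from those reported by a given training framework.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP for one class at one IoU threshold.

    scores: confidence of each detection; is_tp: whether that detection was
    matched to a not-yet-claimed ground-truth box; n_gt: number of ground-truth boxes.
    """
    if n_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp_cum = fp_cum = 0
    recalls, precisions = [0.0], [1.0]
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / n_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Replace each precision by the maximum precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the stepwise curve: add a rectangle wherever recall increases.
    return sum((recalls[k] - recalls[k - 1]) * precisions[k]
               for k in range(1, len(recalls)))


def map_50_95(ap_table):
    """ap_table[t][c]: AP of class c at IoU threshold 0.50 + 0.05 * t (t = 0..9).

    Returns (mAP50, mAP[0.5:0.95]).
    """
    per_threshold = [sum(row) / len(row) for row in ap_table]  # mAP at each IoU
    return per_threshold[0], sum(per_threshold) / len(per_threshold)
```

For example, three detections with confidences 0.9, 0.8, and 0.7, of which the first and third are correct, against two ground-truth boxes give an AP of 5/6.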

5. Experiments and Results

5.1. Comparative Experiments on Models

To evaluate the performance advantages of the proposed wind turbine blade damage detection model, this study compares representative models from different detection frameworks and development stages. These models include the classic two-stage detector Faster R-CNN [37], the single-stage detector SSD, the widely used lightweight YOLO series models (YOLOv3-tiny [38], YOLOv5-N, YOLOv7-tiny [39], YOLOv8-N), and the baseline model YOLOv10-N. Through rigorous training and comparison, a comprehensive verification of the overall performance of the proposed model is achieved.

It is noteworthy that, although YOLOv10-N improves on YOLOv8-N in several respects, including the adoption of a dual-label assignment strategy that removes the need for NMS, it does not outperform YOLOv8-N on this dataset. As shown in Table 3, YOLOv8-N exceeds YOLOv10-N in precision, recall, mAP50, and mAP [0.5:0.95] for wind turbine blade damage detection; only in parameter count is YOLOv10-N ahead. The per-damage-type analysis in Table 4 shows that, while YOLOv10-N has the advantage in post-processing speed, it is less accurate than YOLOv8-N, which uses NMS, on blade images containing complex backgrounds and small-scale damage.
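For readers unfamiliar with the post-processing step that separates the two baselines, the sketch below shows standard class-agnostic greedy non-maximum suppression (NMS) in plain Python: keep the highest-scoring box, discard remaining boxes that overlap it beyond an IoU threshold, and repeat. It is an illustration of the general technique, not the YOLOv8 implementation, which is vectorized and typically class-aware; the threshold of 0.5 is an illustrative default.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thr=0.5):
    """Return indices of the kept boxes, highest score first.

    Repeatedly keep the highest-scoring remaining box and drop every
    remaining box whose IoU with it exceeds iou_thr.
    """
    remaining = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while remaining:
        best = remaining.pop(0)
        keep.append(best)
        remaining = [i for i in remaining if iou(boxes[best], boxes[i]) <= iou_thr]
    return keep
```

Because this loop runs sequentially over candidate boxes after the network's forward pass, removing it is consistent with the post-processing speed advantage of YOLOv10-N reported in Table 4.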

Table 3.

Comparison of key performance indicators among different models for wind turbine blade damage detection.

Table 4.

Analysis of detection results and post-processing speeds for various wind turbine blade damage types.

By retaining the dual-label assignment strategy, and with it the potential for fast NMS-free post-processing, the proposed MMC-YOLO model achieves a substantial improvement in detection accuracy. Compared with YOLOv8-N, MMC-YOLO’s precision, recall, mAP50, and mAP [0.5:0.95] increase by 0.3, 3.8, 0.9, and 2.0 percentage points, respectively. Compared with YOLOv10-N, the corresponding improvements are 1.5, 7.3, 2.9, and 4.4 percentage points, giving the proposed model the highest damage detection accuracy among the compared models. These results indicate that the proposed model enhances detection accuracy across target types while maintaining efficient post-processing, and that it effectively mitigates interference from complex backgrounds.
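The 7.3-point recall gain over YOLOv10-N and the 8.7% recall increase stated in the abstract are consistent if the latter is read as a relative gain. The short calculation below illustrates this reading, using only values from the text (MMC-YOLO recall of 91.1% and mAP [0.5:0.95] of 82.4%, with absolute gains of 7.3 and 4.4 points); the helper name is hypothetical.

```python
def gains(new: float, abs_gain: float) -> tuple[float, float]:
    """Given a new score (%) and its absolute gain (percentage points),
    return (implied baseline score in %, relative gain in %)."""
    baseline = new - abs_gain
    return baseline, 100.0 * abs_gain / baseline


# Values from the text: recall 91.1% (+7.3 pp), mAP[0.5:0.95] 82.4% (+4.4 pp).
for name, new, delta in [("recall", 91.1, 7.3), ("mAP[0.5:0.95]", 82.4, 4.4)]:
    base, rel = gains(new, delta)
    print(f"{name}: baseline {base:.1f}%, +{delta} pp = +{rel:.1f}% relative")
```

This gives an implied baseline recall of 83.8% and a relative gain of 8.7%, matching the abstract; the same calculation gives an implied baseline mAP [0.5:0.95] of 78.0% and a relative gain of about 5.6%.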

In the broader comparison with other models, MMC-YOLO achieves the highest detection accuracy. For instance, Faster R-CNN and SSD reach mAP [0.5:0.95] values of only 36.7% and 36.4%, respectively, far below the 82.4% achieved by the proposed model. Although MMC-YOLO has a slightly higher parameter count and GFLOPs than some lightweight models, its parameter scale remains moderate and meets the requirements for lightweight deployment, and the accuracy gain justifies this additional computational cost. In conclusion, MMC-YOLO demonstrates not only technical feasibility but also superior performance in wind turbine blade damage detection, showing strong potential for practical applications.

5.2. Comparative Experiments on Attention Mechanisms

To validate the choice of the SENetV2 attention module, comparative experiments were conducted under identical conditions on the dataset used in this study. Six attention mechanisms commonly used in computer vision were selected for comparison: AssemFormer [40] (an efficient Transformer-based method for integrating sequence information), Axial [41] (multi-dimensional axial attention), CBAM [42] (channel–spatial dual attention), PPA [43] (hierarchical attention for small object detection), SENet [44] (channel dependency modeling), and SimAM [45] (a parameter-free attention mechanism).

These modules were chosen because they cover attention techniques across different dimensions and application scenarios. Each was substituted for the SENetV2 module in the MMC-YOLO architecture, and the results in Table 5 show that SENetV2 outperforms all of them on the wind turbine blade defect dataset. By using aggregated dense layers to jointly process channel-wise and global representations, SENetV2 accurately captures subtle blade defects and excels in both feature integrity and extraction precision. These results confirm the effectiveness of SENetV2 and indicate that it works particularly well in combination with the rest of the proposed architecture.
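To make the mechanism concrete, the NumPy sketch below gives a simplified forward pass in the spirit of the squeeze-aggregated-excitation idea described for SENetV2 [36]: global average pooling squeezes each channel to a scalar, several small fully connected branches process this vector in parallel, their outputs are concatenated and projected back to C channels, and a sigmoid gate rescales each input channel. The branch count, reduction ratio, ReLU activation, and random weights are assumptions for illustration; this is not the MMC-YOLO implementation.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def senetv2_like_gate(x, branch_weights, w_out):
    """Channel gating in the spirit of SENetV2 (forward pass only).

    x:              feature map of shape (C, H, W)
    branch_weights: list of B matrices, each of shape (C // r, C)
    w_out:          matrix of shape (C, B * (C // r)) mapping the concatenated
                    branch outputs back to C channels
    """
    squeezed = x.mean(axis=(1, 2))                                      # squeeze: (C,)
    branches = [np.maximum(w @ squeezed, 0.0) for w in branch_weights]  # FC + ReLU per branch
    aggregated = np.concatenate(branches)                               # (B * C // r,)
    gate = sigmoid(w_out @ aggregated)                                  # excitation: (C,)
    return x * gate[:, None, None]                                      # rescale each channel


# Illustrative setup (assumed sizes): C = 16 channels, B = 4 branches, reduction r = 4.
rng = np.random.default_rng(0)
C, B, r = 16, 4, 4
branch_weights = [rng.standard_normal((C // r, C)) * 0.1 for _ in range(B)]
w_out = rng.standard_normal((C, B * (C // r))) * 0.1
x = rng.standard_normal((C, 8, 8))
y = senetv2_like_gate(x, branch_weights, w_out)
```

Compared with the original SE block, which uses a single reduction layer, the parallel branches let the gate combine several low-dimensional views of the global channel descriptor before excitation.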

Table 5.

Performance comparison of state-of-the-art attention mechanisms for wind turbine blade defect detection.

5.3. Ablation Experiments

To accurately evaluate the contribution of each proposed module to the model’s performance, ablation experiments were conducted under controlled conditions. All training parameters were kept constant across all experimental groups, except for the specific module being evaluated. Evaluation metrics included Precision, Recall, mAP50, mAP [0.5:0.95], number of parameters, and computational complexity. The experimental results are shown in Table 6 (where the symbol “√” indicates that the corresponding module is activated).

Table 6.

Ablation study results of model modules for performance contribution evaluation.

The results indicate that introducing the MSGConv module alone, which strengthens inter-channel information exchange through multi-branch fusion and Channel Shuffle, increases mAP [0.5:0.95] by 3.2%. Introducing the multi-scale feature fusion neck MSEFPN alone also improves performance. Its integrated Multi-Scale Expansion Module (MSEM) extends the feature fusion paths between the backbone and the neck and, combined with the SENetV2 attention mechanism, explicitly enhances shallow features and suppresses irrelevant noise. This design improves both the efficiency of multi-scale feature aggregation and the consistency of semantic representations: at the cost of only about 3% more parameters, mAP [0.5:0.95] rises by 1.2%, a favorable performance-to-complexity ratio. When the CCF module is introduced alone, its stepwise dimension-reduction strategy with dynamic buffering replaces conventional channel compression, allowing finer control of the feature information flow and yielding a 2.4% gain in mAP [0.5:0.95].
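Channel Shuffle, introduced in ShuffleNet [35], lets information flow between channel groups that were produced by separate branches or grouped convolutions. The operation is a fixed permutation: view the C channels as a (g, C/g) grid, transpose it, and flatten it back. The NumPy sketch below applies it to a single (C, H, W) feature map; the group count used inside MSGConv is not restated here, so `groups` is left as a free parameter.

```python
import numpy as np


def channel_shuffle(x, groups):
    """ShuffleNet-style channel shuffle for a feature map of shape (C, H, W)."""
    c, h, w = x.shape
    if c % groups:
        raise ValueError("channel count must be divisible by groups")
    # (C, H, W) -> (g, C/g, H, W) -> swap the two channel axes -> (C, H, W)
    return (x.reshape(groups, c // groups, h, w)
             .transpose(1, 0, 2, 3)
             .reshape(c, h, w))
```

For example, with six channels in two groups, the channel order [0, 1, 2, 3, 4, 5] becomes [0, 3, 1, 4, 2, 5], so any subsequent split into two groups receives channels from both original groups.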

In the module combination experiments, combining MSGConv and MSEFPN strengthens channel relationships and optimizes feature fusion, increasing mAP [0.5:0.95] by 3.7%. Combining MSGConv and CCF reduces information loss during channel compression, yielding a 3.8% improvement, and combining MSEFPN and CCF strengthens both feature fusion and information retention, yielding a 2.8% increase. When all three modules are used together, MSGConv optimizes channel relationships, MSEFPN refines feature fusion, and CCF preserves information during compression; their complementary functions yield the largest improvement, 4.4% in mAP [0.5:0.95], and the best overall model performance.

In conclusion, each module improves model performance both individually and in combination by optimizing feature extraction, fusion, and compression. All three modules together achieve the best results, providing a more efficient solution for surface defect detection in wind turbine blades.

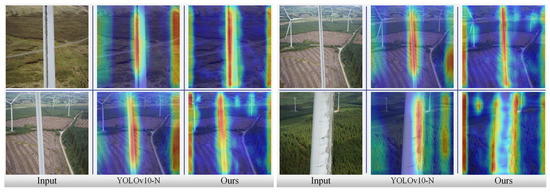

5.4. Visualization Analysis

To intuitively verify the effectiveness of deep feature learning in the proposed MMC-YOLO model, this study employs the Grad-CAM [46] technique to generate class activation heatmaps, as illustrated in Figure 9. A comparison between the original YOLOv10-N and MMC-YOLO reveals distinct differences in feature response patterns. The heatmaps produced by YOLOv10-N are dispersed, showing basic activation on the blade surface but blurred localization. Notably, its responses are markedly weaker in damage-prone regions and along edge structures, indicating susceptibility to background interference.

Figure 9.

Comparison of heatmaps between YOLOv10-N and the model proposed in this paper. All results were obtained using the Grad-CAM technique applied to the last convolutional layer of the models.
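Grad-CAM weights each channel of a chosen layer's activations by the spatially averaged gradient of a target score with respect to that channel, sums the weighted maps, and applies a ReLU so that only positive evidence remains. Once the activations and gradients of the last convolutional layer have been captured (for example, with framework hooks), the map reduces to a few array operations, as in the NumPy sketch below. The sketch assumes (K, H, W) arrays, omits upsampling to image resolution, and normalizes the result to [0, 1] for display; which score is used as the target for a detector (for example, one box's class confidence) is a further choice not specified in the text.

```python
import numpy as np


def grad_cam(activations, gradients, eps=1e-8):
    """Grad-CAM map from one layer's activations A and gradients d(score)/dA.

    activations, gradients: arrays of shape (K, H, W) for K channels.
    Returns an (H, W) map scaled to [0, 1].
    """
    alpha = gradients.mean(axis=(1, 2))             # per-channel weights: (K,)
    cam = np.tensordot(alpha, activations, axes=1)  # weighted channel sum: (H, W)
    cam = np.maximum(cam, 0.0)                      # ReLU: keep positive influence only
    cam -= cam.min()
    return cam / (cam.max() + eps)                  # min-max normalize for display
```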

In contrast, MMC-YOLO generates highly focused, class-specific activation maps. The highlighted regions align closely with actual damage locations, with clear boundaries and substantially suppressed background noise. This supports the effectiveness of the proposed enhancements: the multi-scale feature interaction of MSGConv, the cross-layer detail enhancement and semantic fusion of MSEFPN, and the damage-aware feature preservation of the CCF module work together across feature extraction, fusion, and transmission, improving the representational quality of key regions.

Finally, the detection results on the dataset, as shown in Figure 10, further corroborate the model’s effectiveness by demonstrating accurate localization of damage areas, correct category classification, and reliable confidence scores—underscoring the practical benefits of the proposed feature optimization strategy.

Figure 10.

These images show the damage detection results of MMC-YOLO for wind turbine blades.

6. Discussion

Although MMC-YOLO, which integrates the MSGConv, MSEFPN, and CCF modules, achieves promising precision, recall, and mAP, several limitations merit further consideration. First, the current evaluation lacks statistical significance testing, which weakens the reliability of the performance comparisons, particularly where improvements between models are marginal. Second, the analysis focuses primarily on successful detections, with limited investigation of failure cases such as misclassifications caused by glare or overlapping contamination. This limits understanding of the model’s robustness boundaries, especially under challenging real-world conditions.
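One common way to address the missing significance testing is a paired bootstrap over test images: resample the test set with replacement, recompute the metric for both models on each resample, and report a confidence interval for the difference. The plain-Python sketch below assumes a hypothetical per-image score for each model (for example, per-image recall), so that the metric of a resample is a simple mean; for mAP, the metric would instead be recomputed from the resampled detections, which this sketch does not do.

```python
import random


def paired_bootstrap_ci(scores_a, scores_b, n_boot=2000, alpha=0.05, seed=0):
    """Mean of (score_a - score_b) and its percentile bootstrap CI.

    scores_a[i] and scores_b[i] must refer to the same test image, so that
    each resample keeps the pairing between the two models.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("scores must be paired per image")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    rng = random.Random(seed)
    # Each bootstrap replicate is the mean difference over n images drawn with replacement.
    boot_means = sorted(
        sum(diffs[rng.randrange(n)] for _ in range(n)) / n for _ in range(n_boot)
    )
    lo = boot_means[int((alpha / 2) * n_boot)]
    hi = boot_means[int((1 - alpha / 2) * n_boot) - 1]
    return sum(diffs) / n, (lo, hi)
```

If the resulting interval excludes zero, the difference between the two models is unlikely to be an artifact of the particular test sample; repeating training with several random seeds would additionally capture run-to-run variance.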

Key underlying factors contributing to these limitations are the relatively small scale of the dataset and the use of a single data source in the current experiments, which restrict coverage of complex scenarios involving extreme lighting, severe pollution, or surface degradation. This, in turn, affects the model’s ability to generalize across varied environmental conditions. Moreover, although MMC-YOLO exhibits potential for cross-domain adaptation, its effectiveness has not been validated in heterogeneous industrial contexts such as petrochemical infrastructure or railway inspection, where structural and visual characteristics differ significantly. Lastly, the integration of additional modules, while beneficial to detection performance, results in increased model complexity, posing challenges for deployment on resource-constrained edge devices such as micro UAVs. Future research should aim to optimize the architecture to improve real-time efficiency and ensure broader applicability without sacrificing accuracy.

7. Conclusions

Accurate detection of wind turbine blade damage plays a critical role in maintaining the safety and operational integrity of wind power systems. To address the limitations of the YOLOv10-N model—particularly its constrained receptive field adaptability and the information loss caused by aggressive feature compression—this study proposed an enhanced detection framework, MMC-YOLO, incorporating Transformer-inspired global modeling capabilities. The framework is organized into three tightly coupled stages: multi-scale perception, contextual fusion, and information compression. Specifically, the MSGConv module employs a channel-shuffled multi-branch design to enhance geometric adaptability; the MSEFPN module improves feature fusion and localization accuracy by integrating dynamic path aggregation with the SENetv2 attention mechanism; and the CCF module adopts a progressive buffered dimensionality-reduction strategy to preserve salient features and suppress background interference. These components collectively enable precise modeling of damage features under varying scales and complex environmental conditions.

Experimental results on a wind turbine blade defect dataset demonstrated that MMC-YOLO achieved consistent performance gains over the baseline YOLOv10-N model: precision increased by 1.5 percentage points, recall by 7.3 percentage points (an 8.7% relative increase), and mAP50 and mAP [0.5:0.95] by 2.9 and 4.4 percentage points, respectively. Furthermore, the model maintained real-time inference efficiency, making it well suited for deployment in time-sensitive scenarios. This work helps bridge the gap between lightweight architectures and high-accuracy multi-scale damage detection, offering a scalable and practical solution for intelligent identification of localized structural anomalies in wind turbine blades. The proposed framework lays a foundation for future advancements in vision-based wind power maintenance systems, particularly in scenarios that demand robust perception under limited computational resources.

Author Contributions

Conceptualization, C.L.; methodology, C.L.; software, C.L., X.A. and N.X.; validation, C.L. and C.Z.; formal analysis, C.L., C.Z. and X.G.; investigation, C.L., X.A. and N.X.; resources, C.Z.; data curation, C.L., C.Z. and X.G.; writing—original draft preparation, C.L.; writing—review and editing, C.L., X.G. and X.A.; visualization, C.L. and X.A.; supervision, C.Z. and X.G.; project administration, C.Z. and X.G.; funding acquisition, C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Autonomous Region Key R&D and Achievement Transformation Program Project, grant number 2023YFSW0003, and in part by the Basic Research Fund Project for Autonomous Region Universities, grant number 2024QNJS116.

Data Availability Statement

All data supporting the findings of this study are included within the article. For any additional data or code requests, please contact the corresponding author, who will provide such materials upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ou, K.; Gao, S.; Wang, Y.; Zhai, B.; Zhang, W. Assessment of the Renewable Energy Consumption Capacity of Power Systems Considering the Uncertainty of Renewables and Symmetry of Active Power. Symmetry 2024, 16, 1184.
2. Zhao, Y.; Yang, S.; Liu, S.; Zhang, S.; Zhong, Z. Optimal Economic Research of Microgrids Based on Multi-Strategy Integrated Sparrow Search Algorithm under Carbon Emission Constraints. Symmetry 2024, 16, 388.
3. Zhao, S.; Zhu, Y.; Lou, L.; Zhou, A.; Ma, Y.; Sun, J. Comparison and Analysis of Major Research Methods for Non-Destructive Testing of Wind Turbine Blades. Rev. Sci. Instrum. 2025, 96, 051501.
4. Ge, X.; Zhang, C.; Sun, M.; Liu, C.; An, X. Enhanced Equivalent Strain Damage Model Predicting Multiaxial Non-Proportional Metal Fatigue Life. J. Constr. Steel Res. 2025, 235, 109787.
5. Ge, X.; Zhang, C.; Liu, G.; Xu, S.; Zhang, J.; Wu, Y. A Fatigue Damage Accumulation Model Considering Load Interaction and Material Parameters under Variable Load Conditions. Fatigue Fract. Eng. Mater. Struct. 2025, 48, 2518–2539.
6. Ruzhanskyi, A.; Kostyk, S.; Korobiichuk, I.; Shybetskyi, V. Improving Hydrodynamics and Energy Efficiency of Bioreactor by Developed Dimpled Turbine Blade Geometry. Symmetry 2025, 17, 693.
7. Song, X.; Xing, Z.; Jia, Y.; Song, X.; Cai, C.; Zhang, Y.; Wang, Z.; Guo, J.; Li, Q. Review on the Damage and Fault Diagnosis of Wind Turbine Blades in the Germination Stage. Energies 2022, 15, 7492.
8. Wang, W.; Xue, Y.; He, C.; Zhao, Y. Review of the Typical Damage and Damage-Detection Methods of Large Wind Turbine Blades. Energies 2022, 15, 5672.
9. Gohar, I.; Yew, W.K.; Halimi, A.; See, J. Review of State-of-the-Art Surface Defect Detection on Wind Turbine Blades through Aerial Imagery: Challenges and Recommendations. Eng. Appl. Artif. Intell. 2025, 144, 109970.
10. Yang, C.; Ding, S.; Zhou, G. Wind Turbine Blade Damage Detection Based on Acoustic Signals. Sci. Rep. 2025, 15, 3930.
11. Jensen, F.; Sorg, M.; von Freyberg, A.; Balaresque, N.; Fischer, A. Detection of Erosion Damage on Airfoils by Means of Thermographic Flow Visualization. Eur. J. Mech. B-Fluids 2024, 104, 123–135.
12. Zhu, X.; Guo, Z.; Zhou, Q.; Zhu, C.; Liu, T.; Wang, B. Damage Identification of Wind Turbine Blades Based on Deep Learning and Ultrasonic Testing. Nondestruct. Test. Eval. 2025, 40, 508–533.
13. Moreno-Oliva, V.I.; Flores-Diaz, O.; Román-Hernández, E.; Campos-García, M.; Campos-Mercado, E.; Dorrego-Portela, J.R.; Hernandez-Escobedo, Q.; Franco, J.A.; Perea-Moreno, A.-J.; García, A.A. Vibration Measurement Using Laser Triangulation for Applications in Wind Turbine Blades. Symmetry 2021, 13, 1017.
14. Memari, M.; Shakya, P.; Shekaramiz, M.; Seibi, A.C.; Masoum, M.A.S. Review on the Advancements in Wind Turbine Blade Inspection: Integrating Drone and Deep Learning Technologies for Enhanced Defect Detection. IEEE Access 2024, 12, 33236–33282.
15. Zhang, S.; He, Y.; Gu, Y.; He, Y.; Wang, H.; Wang, H.; Yang, R.; Chady, T.; Zhou, B. UAV Based Defect Detection and Fault Diagnosis for Static and Rotating Wind Turbine Blade: A Review. Nondestruct. Test. Eval. 2025, 40, 1691–1729.
16. Diaz, P.M.; Tittus, P. Fast Detection of Wind Turbine Blade Damage Using Cascade Mask R-DSCNN-Aided Drone Inspection Analysis. Signal Image Video Process. 2023, 17, 2333–2341.
17. Pratt, R.; Allen, C.; Masoum, M.A.S.; Seibi, A. Defect Detection and Classification on Wind Turbine Blades Using Deep Learning with Fuzzy Voting. Machines 2025, 13, 283.
18. Zhao, H.; Gao, Y.; Deng, W. Defect Detection Using Shuffle Net-CA-SSD Lightweight Network for Turbine Blades in IoT. IEEE Internet Things J. 2024, 11, 32804–32812.
19. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10778–10787.
20. Zhang, Y.; Wang, L.; Huang, C.; Luo, X. Wind Turbine Blade Defect Detection Based on the Genetic Algorithm-Enhanced YOLOv5 Algorithm Using Synthetic Data. IEEE Trans. Ind. Appl. 2025, 61, 653–665.
21. Zou, L.; Chen, A.; Li, C.; Yang, X.; Sun, Y. DCW-YOLO: An Improved Method for Surface Damage Detection of Wind Turbine Blades. Appl. Sci. 2024, 14, 8763.
22. Tong, L.; Fan, C.; Peng, Z.; Wei, C.; Sun, S.; Han, J. WTBD-YOLOv8: An Improved Method for Wind Turbine Generator Defect Detection. Sustainability 2024, 16, 4467.
23. Ma, L.; Jiang, X.; Tang, Z.; Zhi, S.; Wang, T. Wind Turbine Blade Defect Detection Algorithm Based on Lightweight MES-YOLOv8n. IEEE Sens. J. 2024, 24, 28409–28418.
24. Dong, X.; Yang, J.; Teoh, A.B.J.; Yu, D.; Li, X.; Jin, Z. Video-Based Face Outline Recognition. Pattern Recognit. 2024, 152, 110482.
25. Dong, X.; Wang, L.; Lv, X.; Zhang, X.; Zhang, H.; Pu, B.; Gao, Z.; Liao, I.Y.; Jin, Z. CertainTTA: Estimating Uncertainty for Test-Time Adaptation on Medical Image Segmentation. Inf. Fusion 2025, 123, 103300.
26. Li, Y.; Yang, S.; Zheng, Y.; Lu, H. Improved Point-Voxel Region Convolutional Neural Network: 3D Object Detectors for Autonomous Driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 9311–9317.
27. Yang, S.; Lu, H.; Li, J. Multifeature Fusion-Based Object Detection for Intelligent Transportation Systems. IEEE Trans. Intell. Transp. Syst. 2023, 24, 1126–1133.
28. Neubeck, A.; Van Gool, L. Efficient Non-Maximum Suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.
29. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.
30. Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024; pp. 1–21.
31. Vu, T.C.; Nguyen, T.V.; Nguyen, T.V.; Nguyen, D.T.; Dinh, L.Q.; Nguyen, M.D.; Nguyen, H.T.; Nguyen, H.T.; Nguyen, M.T. Object Detection in Remote Sensing Images Using Deep Learning: From Theory to Applications in Intelligent Transportation Systems. J. Future Artif. Intell. Technol. 2025, 2, 227–241.
32. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.
33. Cheng, T.; Song, L.; Ge, Y.; Liu, W.; Wang, X.; Shan, Y. Yolo-World: Real-Time Open-Vocabulary Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 16901–16911.
34. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.
35. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.
36. Mahendran, N. SENetV2: Aggregated Dense Layer for Channelwise and Global Representations. arXiv 2023, arXiv:2311.10807.
37. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
38. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
39. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, 17–24 June 2023; IEEE Computer Society: Washington, DC, USA, 2023; Volume 2023-June, pp. 7464–7475.
40. Dai, W.; Liu, R.; Wu, Z.; Wu, T.; Wang, M.; Zhou, J.; Yuan, Y.; Liu, J. Exploiting Scale-Variant Attention for Segmenting Small Medical Objects. arXiv 2024, arXiv:2407.07720.
41. Ho, J.; Kalchbrenner, N.; Weissenborn, D.; Salimans, T. Axial Attention in Multidimensional Transformers. arXiv 2019, arXiv:1912.12180.
42. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the 15th European Conference on Computer Vision, ECCV 2018, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11211 LNCS, pp. 3–19.
43. Xu, S.; Zheng, S.; Xu, W.; Xu, R.; Wang, C.; Zhang, J.; Teng, X.; Li, A.; Guo, L. HCF-Net: Hierarchical Context Fusion Network for Infrared Small Object Detection. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024; pp. 1–6.
44. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.
45. Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A Simple, Parameter-Free Attention Module for Convolutional Neural Networks. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual, 18–24 July 2021; Volume 139, pp. 11863–11874.
46. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations From Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).