YOLO-AWK: A Model for Injurious Bird Detection in Complex Farmland Environments

Abstract

1. Introduction

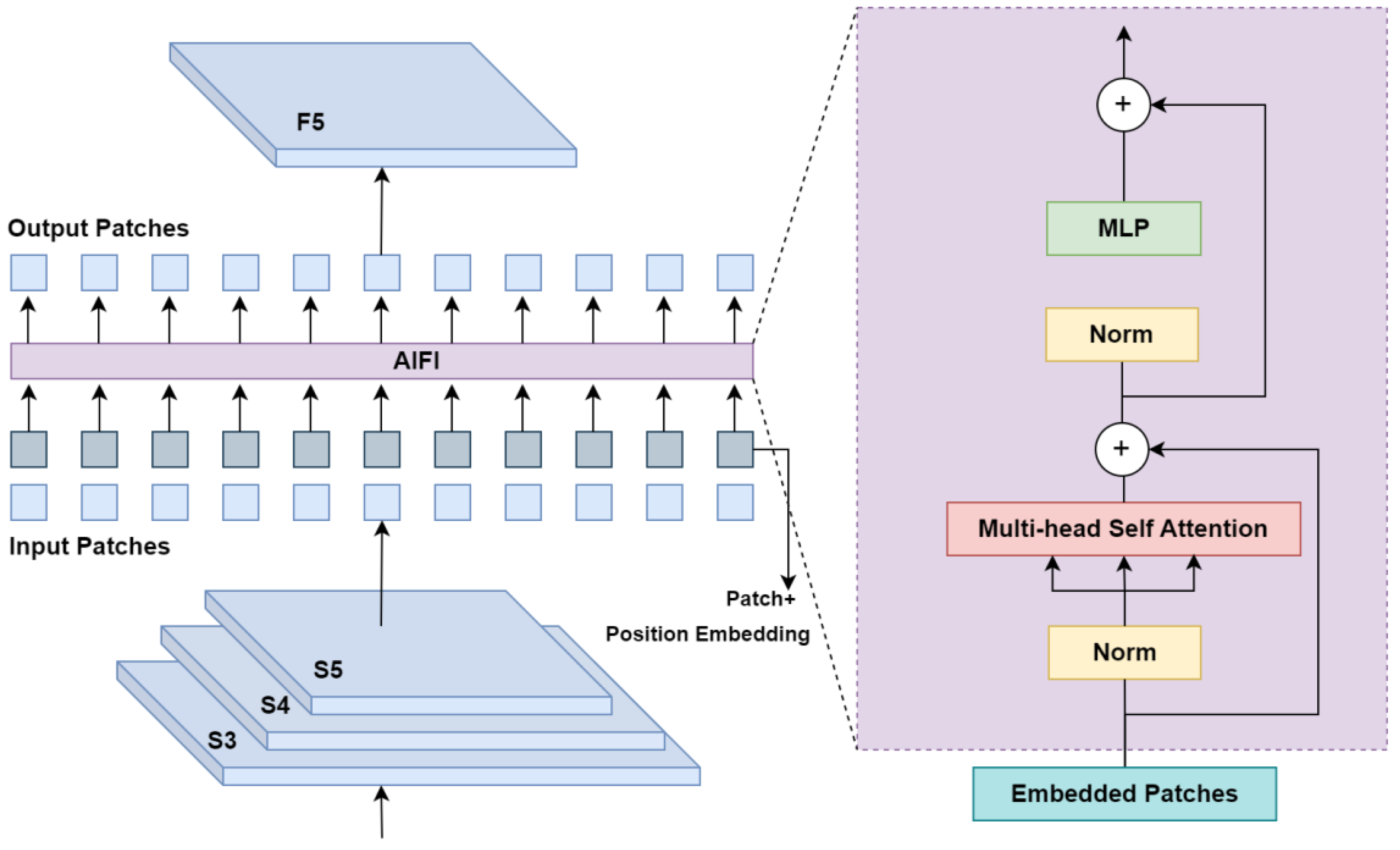

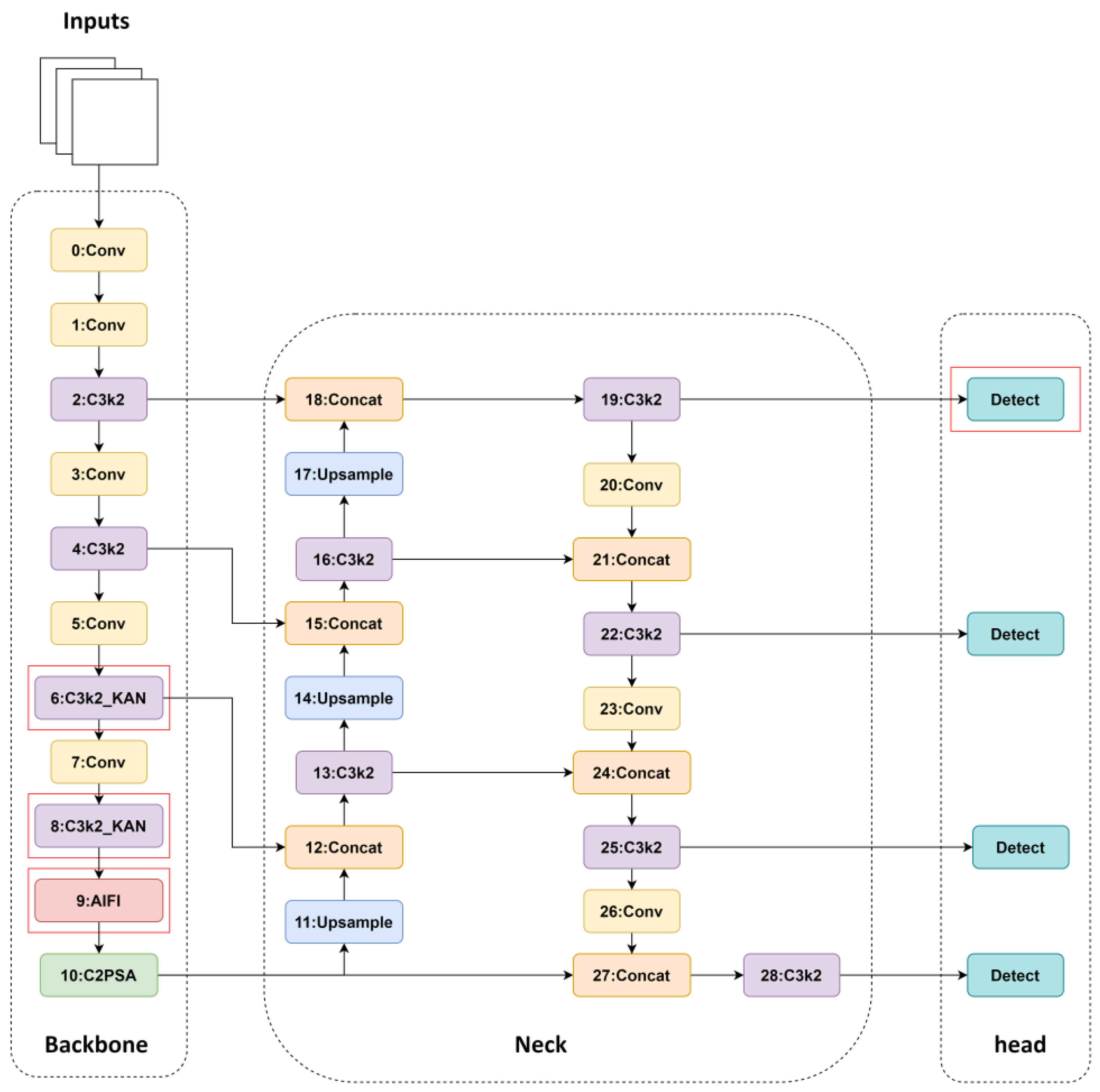

- Farmland scenes have complex backgrounds in which vegetation and other distracting elements are easily confused with birds. To address this, we replace the SPPF module of the YOLOv11n model with an attention-based intra-scale feature interaction (AIFI) module, which improves the model’s ability to recognize bird targets against complex backgrounds.

- Bird targets have irregular shapes and vary widely in scale, and the CIoU loss used in the YOLOv11n model has limited ability to locate and identify such varied targets. We therefore use WIoUv3 as the new loss function so that the model can more accurately locate and identify bird targets of different shapes and sizes.

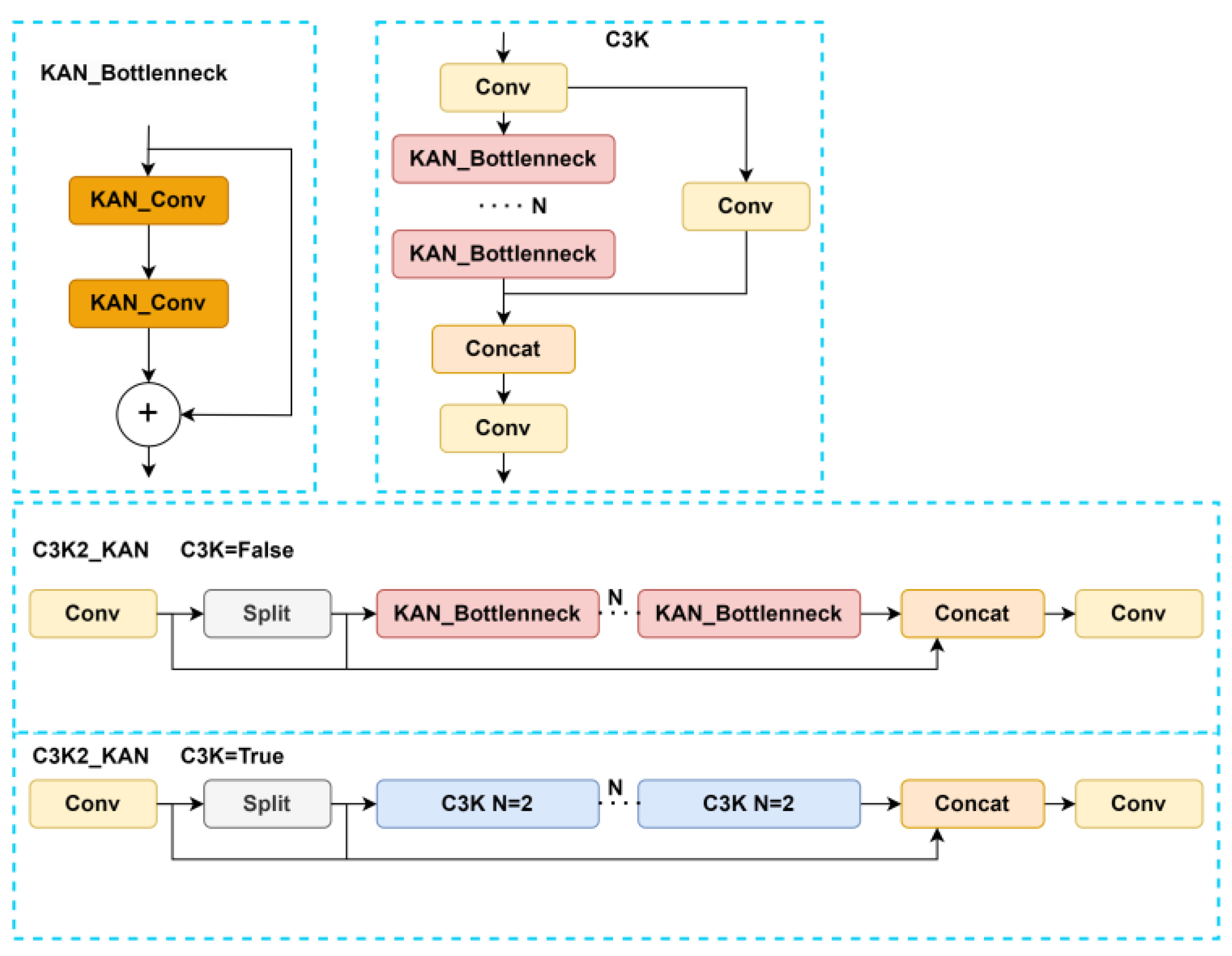

- Farmland images contain considerable noise and interference, and the C3K2 module in YOLOv11n has limited feature extraction ability. We fuse the Kolmogorov-Arnold Network (KAN) module with C3K2 to construct a new C3K2_KAN module that suppresses noise interference and improves the extraction of residual bird features.

- Bird targets in farmland are generally small, and the three detection heads that come with YOLOv11n make insufficient use of shallow features, which degrades the detection of small targets. We add a small target detection head to the model to improve its detection accuracy for small bird targets.

2. Materials and Methods

2.1. Data Collection

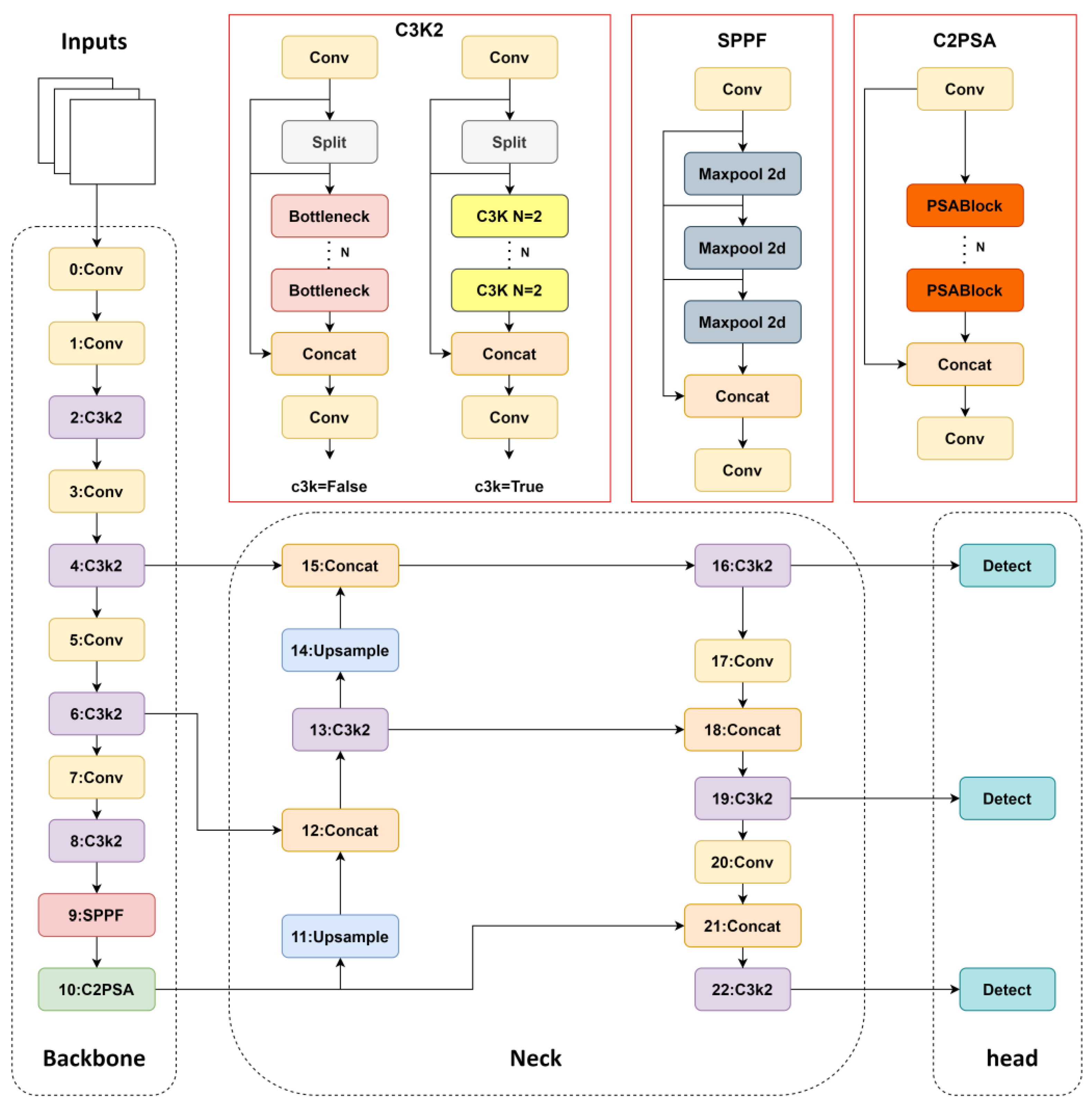

2.2. YOLOv11 Model

2.3. Improvements to YOLOv11n

2.3.1. Improvements to the SPPF Module

1. The input high-level feature is first split and flattened into a sequence. Positional encoding preserves the spatial information, and a linear projection of the feature then yields Equation (1).

2. A multi-head attention mechanism is used to establish global dependencies between features. The calculation process of the multi-head self-attention mechanism is given in Equation (2).

3. The serialized attention output is restored to a two-dimensional spatial structure by a tensor reshaping operation.
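
The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`sincos_position_embedding`, `multi_head_self_attention`, `aifi_sketch`), the sine-cosine form of the positional encoding, the random projection weights, and the choice to add the position term to queries and keys only are all hypothetical. The residual connections, normalization, and feed-forward layers of a full transformer encoder layer are omitted.

```python
import numpy as np

def sincos_position_embedding(h, w, dim):
    """2D sine-cosine positional encoding of shape (h*w, dim). Assumed form."""
    assert dim % 4 == 0, "dim must be divisible by 4"
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    omega = 1.0 / (10000.0 ** (np.arange(dim // 4) / (dim // 4)))
    out_x = xs.reshape(-1, 1) * omega[None, :]
    out_y = ys.reshape(-1, 1) * omega[None, :]
    return np.concatenate(
        [np.sin(out_x), np.cos(out_x), np.sin(out_y), np.cos(out_y)], axis=1
    )

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, pos, wq, wk, wv, wo, num_heads):
    """Scaled dot-product self-attention over a token sequence x of shape (n, c)."""
    n, c = x.shape
    d = c // num_heads
    q = (x + pos) @ wq  # position added to queries and keys only (assumption)
    k = (x + pos) @ wk
    v = x @ wv

    def split(t):
        # (n, c) -> (num_heads, n, d)
        return t.reshape(n, num_heads, d).transpose(1, 0, 2)

    q, k, v = split(q), split(k), split(v)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d))  # (heads, n, n)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, c)      # concatenate heads
    return out @ wo

def aifi_sketch(feature, num_heads=4, seed=0):
    """feature: (c, h, w) high-level feature map. Returns an array of the same shape."""
    c, h, w = feature.shape
    rng = np.random.default_rng(seed)
    # Random stand-ins for learned projection weights (illustration only).
    wq, wk, wv, wo = (rng.standard_normal((c, c)) / np.sqrt(c) for _ in range(4))
    tokens = feature.reshape(c, h * w).T                  # step 1: flatten to (h*w, c)
    pos = sincos_position_embedding(h, w, c)              # step 1: positional encoding
    attended = multi_head_self_attention(                 # step 2: global dependencies
        tokens, pos, wq, wk, wv, wo, num_heads
    )
    return attended.T.reshape(c, h, w)                    # step 3: reshape back to 2D

out = aifi_sketch(np.ones((16, 5, 5)))
print(out.shape)
```

Because every token attends to every other token, each output location can draw on context from the whole feature map, which is the property the AIFI replacement relies on in cluttered farmland scenes.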

2.3.2. Loss Function Optimization

2.3.3. Improvements to the C3K2 Module

2.3.4. Add Small Target Detection Head

3. Results

3.1. Experimental Environment and Training Parameters

3.2. Evaluation Metrics

3.3. Loss Function Comparison Experiment

3.4. Model Comparison Experiment

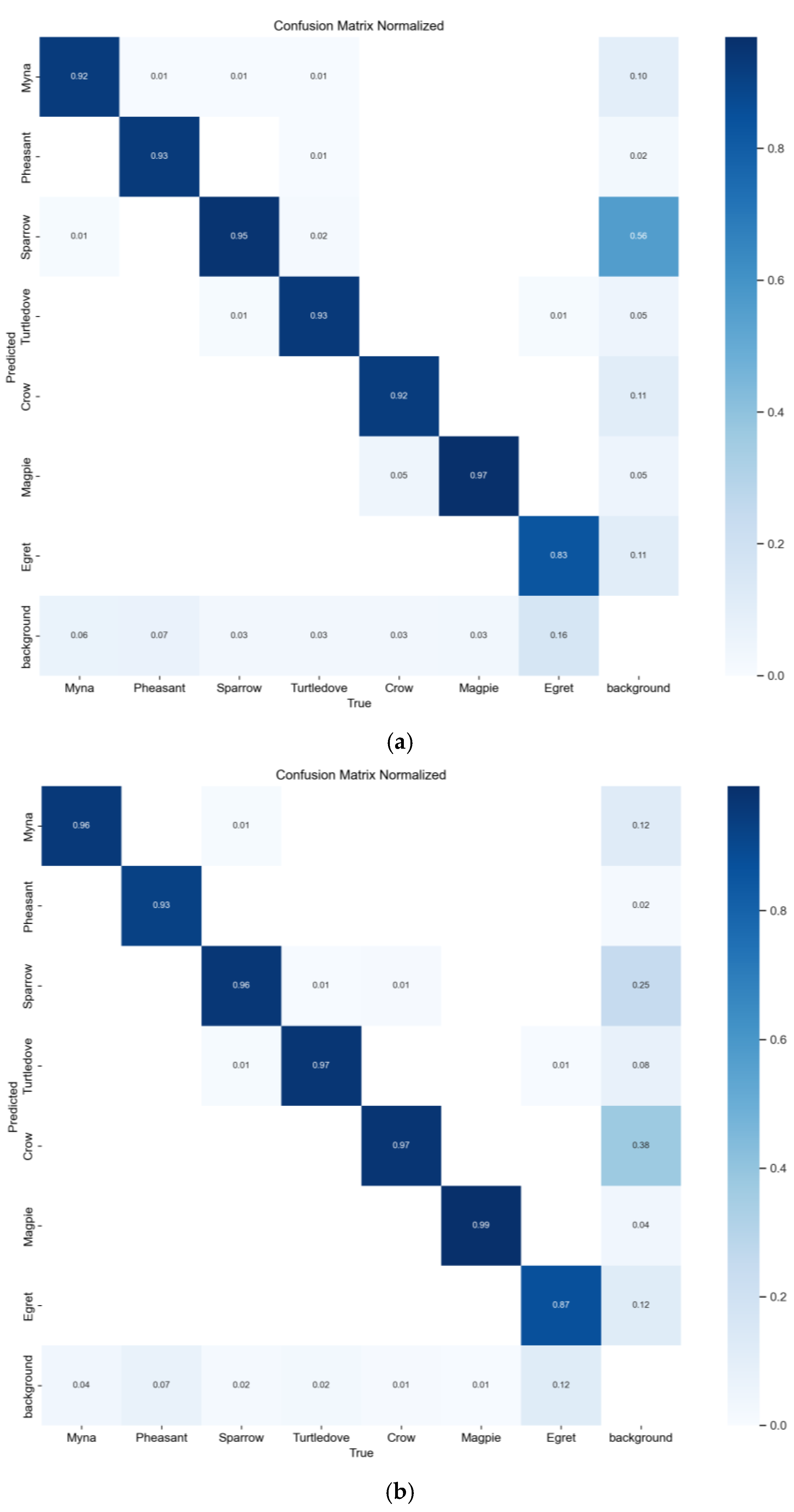

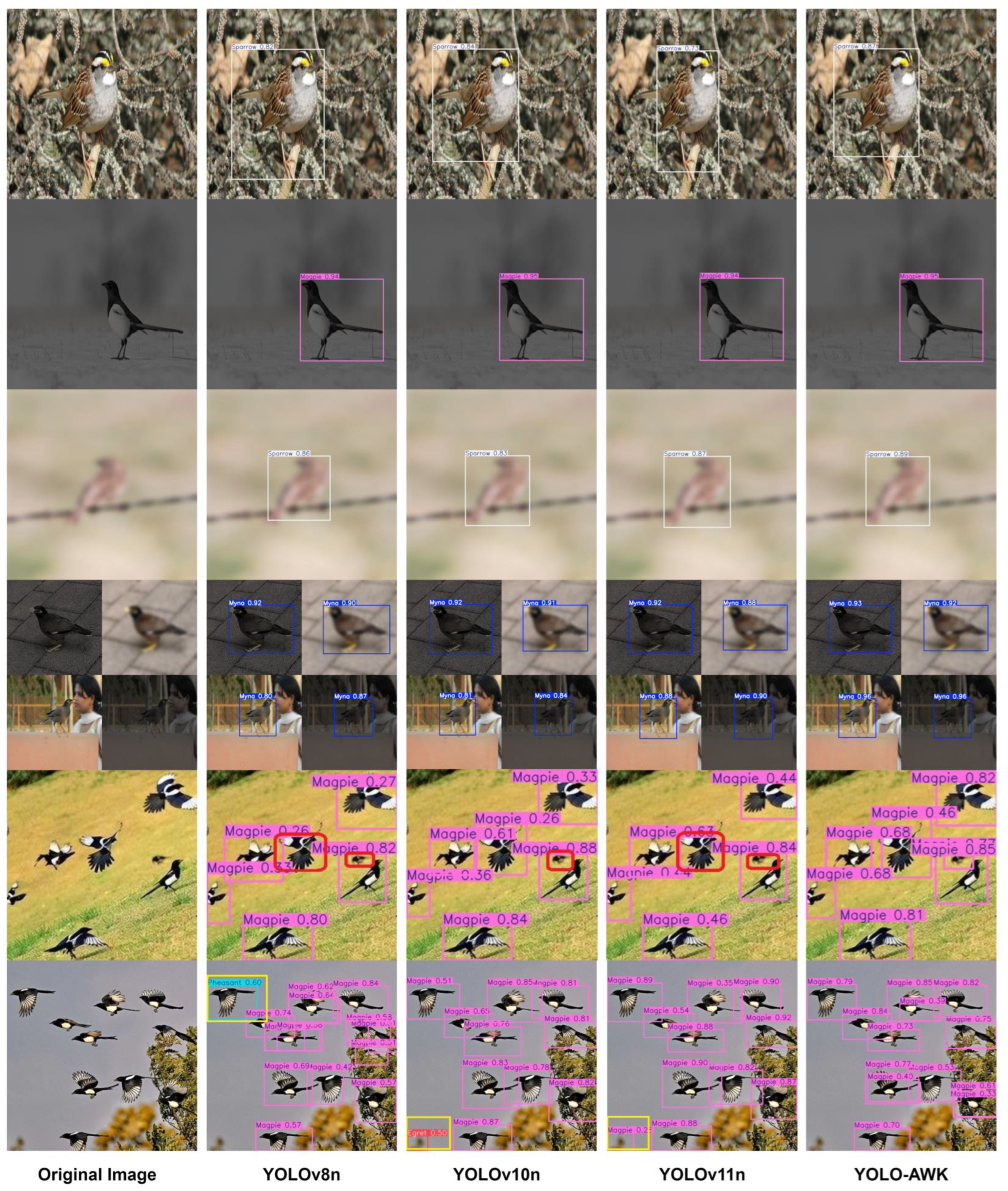

3.5. Ablation Experiment

3.6. Visual Contrast Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Species | Number |
|---|---|
| Myna | 594 |
| Pheasant | 535 |
| Sparrow | 593 |
| Turtledove | 515 |
| Crow | 597 |
| Magpie | 564 |
| Egret | 453 |

| Loss Function | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5:0.95/% |
|---|---|---|---|---|
| CIoU | 91.2 | 88.9 | 94.2 | 72.3 |
| DIoU | 91.9 | 86.9 | 94.1 | 72.2 |
| EIoU | 92.3 | 87.2 | 94.5 | 72.0 |
| GIoU | 92.6 | 87.5 | 94.3 | 71.5 |
| PIoU | 92.3 | 87.5 | 94.1 | 72.4 |
| WIoUv3 | 93.0 | 88.3 | 94.6 | 72.4 |
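
To make the losses in this comparison more concrete, the following sketch computes IoU, the DIoU loss, and a scalar form of the WIoUv3 loss for a single pair of boxes. It follows the WIoU formulation as commonly published (a distance-based factor multiplied by the IoU loss, then scaled by a non-monotonic focusing coefficient). The function names, the `mean_iou_loss` argument (a stand-in for the running mean of the IoU loss kept during training), and the defaults `alpha=1.9` and `delta=3.0` are assumptions for illustration and are not taken from this article.

```python
import math

def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def center_penalty(pred, gt):
    """Squared center distance over the squared diagonal of the smallest enclosing box."""
    cx_p, cy_p = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    cx_g, cy_g = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    return ((cx_p - cx_g) ** 2 + (cy_p - cy_g) ** 2) / (cw ** 2 + ch ** 2)

def diou_loss(pred, gt):
    """DIoU loss: IoU loss plus the normalized center-distance penalty."""
    return 1.0 - iou(pred, gt) + center_penalty(pred, gt)

def wiou_v3_loss(pred, gt, mean_iou_loss, alpha=1.9, delta=3.0):
    """Scalar WIoUv3 sketch; mean_iou_loss stands in for the running mean of the IoU loss."""
    if mean_iou_loss <= 0:
        raise ValueError("mean_iou_loss must be positive")
    l_iou = 1.0 - iou(pred, gt)
    l_v1 = math.exp(center_penalty(pred, gt)) * l_iou   # WIoUv1: distance attention
    beta = l_iou / mean_iou_loss                        # outlier degree of this box
    r = beta / (delta * alpha ** (beta - delta))        # non-monotonic focusing coefficient
    return r * l_v1

pred, gt = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
print(iou(pred, gt), diou_loss(pred, gt), wiou_v3_loss(pred, gt, mean_iou_loss=0.5))
```

In an actual training loop the enclosing-box term and the outlier degree are normally treated as constants with respect to the gradient. That detail does not arise in this scalar sketch.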

| Models | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5:0.95/% | Parameters/M | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 81.8 | 83.5 | 83.2 | 59.9 | 28.3 | 164.3 | 40.5 |
| YOLOv5n | 93.0 | 83.9 | 93.1 | 70.2 | 1.8 | 4.1 | 238.1 |
| YOLOv8n | 91.1 | 88.3 | 94.1 | 72.4 | 3.0 | 8.1 | 222.2 |
| YOLOv9t | 90.1 | 87.9 | 93.7 | 72.1 | 2.8 | 11.7 | 149.3 |
| YOLOv10n | 91.6 | 89.3 | 94.5 | 73.6 | 2.69 | 8.2 | 163.9 |
| YOLOv11n | 91.2 | 88.9 | 94.2 | 72.3 | 2.58 | 6.3 | 217.4 |
| YOLO-AWK | 93.9 | 91.2 | 95.8 | 75.3 | 4.02 | 10.5 | 169.5 |
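
The trade-off between the YOLOv11n baseline and YOLO-AWK can be read directly from the two corresponding rows. The short sketch below recomputes the absolute and relative differences from the values in the table. The dictionary keys and helper names are illustrative only.

```python
# Values copied from the YOLOv11n and YOLO-AWK rows of the table above.
yolov11n = {"precision": 91.2, "recall": 88.9, "map50": 94.2, "map50_95": 72.3,
            "params_m": 2.58, "gflops": 6.3, "fps": 217.4}
yolo_awk = {"precision": 93.9, "recall": 91.2, "map50": 95.8, "map50_95": 75.3,
            "params_m": 4.02, "gflops": 10.5, "fps": 169.5}

def absolute_change(new, old):
    """Difference new - old for every metric (percentage points for the accuracy metrics)."""
    return {k: round(new[k] - old[k], 2) for k in old}

def relative_change(new, old):
    """Change relative to the baseline, in percent."""
    return {k: round(100.0 * (new[k] - old[k]) / old[k], 1) for k in old}

print(absolute_change(yolo_awk, yolov11n))
print(relative_change(yolo_awk, yolov11n))
```

Under these numbers, YOLO-AWK gains 2.7, 2.3, 1.6, and 3.0 percentage points in precision, recall, mAP@0.5, and mAP@0.5:0.95, respectively, at the cost of 1.44 M more parameters, 4.2 more GFLOPs, and 47.9 fewer frames per second.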

| YOLOv11n | AIFI | WIoUv3 | KAN | Small Target Detection Head | Precision/% | Recall/% | mAP@0.5/% | mAP@0.5:0.95/% | Parameters/M | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|
| √ | - | - | - | - | 91.2 | 88.9 | 94.2 | 72.3 | 2.58 | 6.3 |
| √ | √ | - | - | - | 90.9 | 89.5 | 94.4 | 72.7 | 3.21 | 6.6 |
| √ | - | √ | - | - | 93.0 | 88.3 | 94.6 | 72.4 | 2.58 | 6.3 |
| √ | - | - | √ | - | 92.8 | 88.6 | 94.6 | 72.9 | 3.32 | 6.3 |
| √ | - | - | - | √ | 91.8 | 90.2 | 94.8 | 72.8 | 2.66 | 10.2 |
| √ | √ | √ | - | - | 93.2 | 87.8 | 94.8 | 73.5 | 3.21 | 6.6 |
| √ | √ | √ | √ | - | 93.8 | 89.4 | 95.3 | 74.3 | 3.95 | 6.6 |
| √ | √ | √ | √ | √ | 93.9 | 91.2 | 95.8 | 75.3 | 4.02 | 10.5 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, X.; Cheng, Y.; Dong, M.; Xie, X. YOLO-AWK: A Model for Injurious Bird Detection in Complex Farmland Environments. Symmetry 2025, 17, 1210. https://doi.org/10.3390/sym17081210
