1. Introduction

In the complex systems of nature and industrial production, the principle of symmetry plays a key role in revealing laws and optimizing processes. As an important branch of the textile industry, cotton spinning occupies a fundamental position in the national economy [1]. In 2024, China’s cotton output reached 6.164 million tons, up 9.7% year on year. Xinjiang, the main producing region, contributed 5.686 million tons, or 92.2% of the national total. Cotton is one of the world’s most important natural fibers: it is both the core raw material of the textile industry and an important cash crop. During harvesting, transportation, and processing, non-fibrous foreign matter, namely cotton impurities, often becomes mixed into the cotton [2]. Fiber quality and impurity content form a pair of “symmetrical contradictions”: fiber quality directly determines the quality of textile products, while impurity content directly affects processing costs and production efficiency [3]. However, current cotton sorting faces considerable challenges. Existing sorting methods rely mainly on manual labor, which is inefficient and can hardly meet growing market demand or the processing requirements of high-quality cotton. Although improved techniques exist abroad, their core technologies have long been monopolized and the equipment is expensive, which makes it difficult for them to spread among domestic cotton spinning enterprises. This asymmetry between technology and demand calls for a fast and accurate method for detecting impurities in fallen cotton, which would restore the balance between efficiency and quality in the cotton processing flow and support subsequent cotton processing.

Researchers have conducted extensive studies on the detection of impurities in cotton. Currently, the commonly used methods mainly include traditional image processing methods, traditional machine learning methods and deep learning methods.

Traditional methods rely on manually designed image features and algorithmic rules, and detect impurities through preprocessing, feature extraction, and threshold segmentation. Ding et al. [4] accurately detected common impurities using Gabor filtering, Otsu thresholding, and morphological filtering. Xia et al. [5] used the Canny algorithm to distinguish impurities from raw cotton through second-order gray-level differentiation, gradient threshold selection, non-maximum suppression, and morphological operations. He et al. [6] detected cotton knot (nep) impurities using wavelet transformation, a regional average gradient threshold, and multi-scale discrimination. Li et al. [7] constructed a three-dimensional RGB color model and combined nonlinear dual thresholds with differential algorithms to detect fine impurities. Other studies are based on near-infrared spectroscopy. For example, Li et al. [8] built a spectrum acquisition system and improved prediction accuracy through the SELU activation function and standardized preprocessing. Zhou et al. [9] preprocessed spectral data with multiplicative scatter correction (MSC) and combined it with partial least squares regression (PLSR) to build an impurity content prediction model.
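Several of the pipelines above (for example, Ding et al. [4]) choose a global segmentation threshold with Otsu’s method. The sketch below shows only that step in plain Python. It assumes a grayscale image in which impurities are darker than the surrounding cotton, omits the Gabor and morphological filtering, and uses a hypothetical toy image and helper names rather than any cited author’s code.

```python
def otsu_threshold(hist):
    """Return the gray level t that maximizes the between-class variance.

    hist[i] is the number of pixels with gray level i; levels <= t form class 0.
    """
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0  # pixel count and intensity sum of class 0 so far
    for t, h in enumerate(hist):
        w0 += h
        sum0 += t * h
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so this threshold is meaningless
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Unnormalized between-class variance; same argmax as the textbook form.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def segment_impurities(gray, t):
    """Hypothetical helper: mark pixels at or below t as impurity (1), else cotton (0)."""
    return [[1 if p <= t else 0 for p in row] for row in gray]


# Toy 4x4 grayscale patch: bright cotton (220) with a few dark impurity pixels.
gray = [[220, 220, 30, 220],
        [220, 30, 30, 220],
        [220, 220, 220, 220],
        [220, 220, 220, 35]]
hist = [0] * 256
for row in gray:
    for p in row:
        hist[p] += 1

t = otsu_threshold(hist)
mask = segment_impurities(gray, t)
print(t, sum(map(sum, mask)))  # 35 4
```

A single global threshold like this works when cotton and impurities form two well-separated intensity modes, which is exactly the assumption that breaks down on complex industrial backgrounds.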

Although traditional methods are effective in simple scenes, their limited feature generalization becomes a serious weakness against complex industrial backgrounds (such as interference from cotton-fiber texture and large differences in impurity scale), which has prompted researchers to turn to deep learning. Deep learning object detection algorithms are now widely used in cotton processing. YOLO (You Only Look Once) is a representative single-stage object detection algorithm known for its real-time performance and high accuracy. Its core idea is to cast object detection as a regression problem and to predict object locations and categories directly in a single forward pass, which significantly improves detection speed [10]. YOLOv1 established the single-stage real-time detection framework, but its accuracy was limited. YOLOv2 improved stability with anchor boxes and multi-scale training. YOLOv3 introduced the Darknet-53 backbone and a feature pyramid to enhance multi-scale detection. YOLOv4 integrated CSPDarknet53, spatial pyramid pooling, and systematic training strategies. YOLOv5 uses a CSPDarknet53 backbone, optimizes the SPPF module, and relies on an anchor-based mechanism for flexibility, but its dependence on anchor boxes introduces model redundancy. YOLOv6 turned to an anchor-free design, using a RepVGG-style reparameterized backbone and a decoupled detection head to improve efficiency, but its lightweight capability is insufficient. YOLOv8 adopts a unified anchor-free framework that supports multiple tasks. Through C2f cross-stage feature fusion and optimized bounding box regression, its mAP is 5–10% higher than that of the previous generation, and it supports multi-task extension and efficient deployment [11].

Zhang et al. [12] proposed a cotton weed detection method based on an improved Canny operator and a YOLOv5 network, which effectively improved detection speed and accuracy. Xu et al. [13] proposed an anchor-free lightweight detection network based on an improved YOLOv4-tiny to raise the recognition rate of white and near-cotton-colored impurities in raw cotton. Li et al. [14] improved the accuracy and speed of impurity detection by enhancing the backbone and head networks of YOLOv7. Zhou et al. [15] integrated a context enhancement module and selective kernel attention into YOLOv5s to improve overall efficiency, but did not consider the number of model parameters. Zhang et al. [16] used a multi-channel fusion segmentation algorithm and an improved YOLOv4 model to detect cotton impurities and calculate the impurity rate; although the detection goal was achieved, model size and detection speed were not specifically optimized. Jiang et al. [17] proposed a cotton impurity instance segmentation algorithm based on YOLOv8s-Seg that segments cotton and impurities at the pixel level; however, the model has many parameters and its mAP50 is 80.8%, leaving room for improvement in both model size and detection accuracy. The YOLOv5-U-Net++ model proposed by Feng et al. [18] performs well in cotton foreign fiber detection, reaching an accuracy of 98.80%, but its model size of 94.2 MB calls for further lightweighting. To address the complex shapes and large scale differences of cotton impurities, Wang et al. [19] improved YOLOv5 by introducing an adaptive anchor box algorithm, embedding the MCA attention mechanism in the feature fusion layer, and using the GIoU loss to improve matching accuracy; performance improved effectively, but the model still had 7.06 M parameters and needed further simplification. Liu et al. [20] introduced the GhostBottleneck module and SoftPool into YOLOv5, improving detection accuracy by 2.59% while reducing model size. This offers a feasible way to balance model size and accuracy, but the model was not specifically optimized for impurities in fallen cotton from carding machines. Zhang et al. [21] applied a BP neural network in the YCbCr color space and optimized its weights and thresholds with the selection, crossover, and mutation operations of a genetic algorithm, reaching an impurity detection rate of 92.3%. Han et al. [22] proposed a raw cotton impurity detection algorithm based on residuals and an attention mechanism: a visual attention mechanism was introduced into Faster R-CNN, ResNet50 served as the feature extraction network, and RoIAlign was used to reduce quantization error. Xu et al. [23] pruned the redundant structure of a MobileNetV3 network and added an improved receptive field module to the pooling layer; detecting a single image took 0.02 s, with an average accuracy of 89.05%.
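To make the “regression in a single forward pass” idea concrete for anchor-free heads such as those of YOLOv6 and YOLOv8, the following sketch decodes one prediction into a pixel box. It is a simplified illustration, not Ultralytics code: it assumes the head has already produced four distances (left, top, right, bottom) from a grid-cell centre in stride units, which for YOLOv8 would be the expectations of its per-side distance distributions. The function name and numbers are hypothetical.

```python
def decode_ltrb(grid_x, grid_y, stride, ltrb):
    """Turn anchor-free (l, t, r, b) distances into an (x1, y1, x2, y2) box in pixels.

    The distances are in feature-map units and measured from the centre of grid
    cell (grid_x, grid_y); they are assumed to be already decoded from the head.
    """
    cx, cy = grid_x + 0.5, grid_y + 0.5  # anchor point at the cell centre
    l, t, r, b = ltrb
    return ((cx - l) * stride, (cy - t) * stride,
            (cx + r) * stride, (cy + b) * stride)


# One prediction from a stride-8 feature map (hypothetical numbers).
box = decode_ltrb(10, 4, 8, (1.5, 0.5, 1.5, 2.5))
print(box)  # (72.0, 32.0, 96.0, 56.0)
```

Because every cell predicts distances and class scores in the same pass, no anchor-box matching step is needed at inference, which is part of why anchor-free heads are attractive for lightweight models.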

Although object detection technology has made important progress in impurity detection, its industrial application still faces many technical challenges. Traditional image processing methods are not robust to illumination changes or to the diversity of impurity morphology, and they rely on extensive manual parameter tuning, which makes them hard to adapt to complex industrial production lines. Methods combining near-infrared spectroscopy with traditional machine learning require specialized spectrum acquisition equipment and cannot locate impurities spatially, so they cannot meet the needs of integrated “detection–sorting”. Although deep learning models such as YOLO and Faster R-CNN perform well in general detection, their large number of parameters and high computational complexity make it difficult to meet the strict real-time and low-power requirements of industrial scenarios. In particular, existing models show clear deficiencies in feature representation and spatial context modeling when dealing with key problems common on cotton spinning production lines, such as missed detection of small impurities, misidentification of adhering targets, and poor adaptability to morphological variation. It is therefore necessary to further study lightweight model designs that balance detection accuracy and inference efficiency.

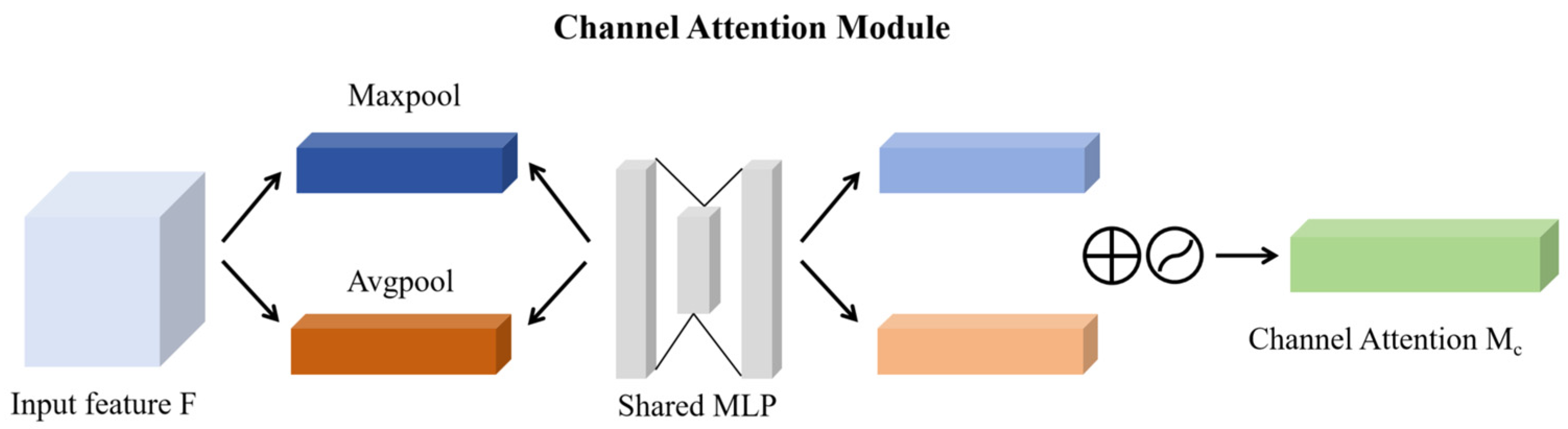

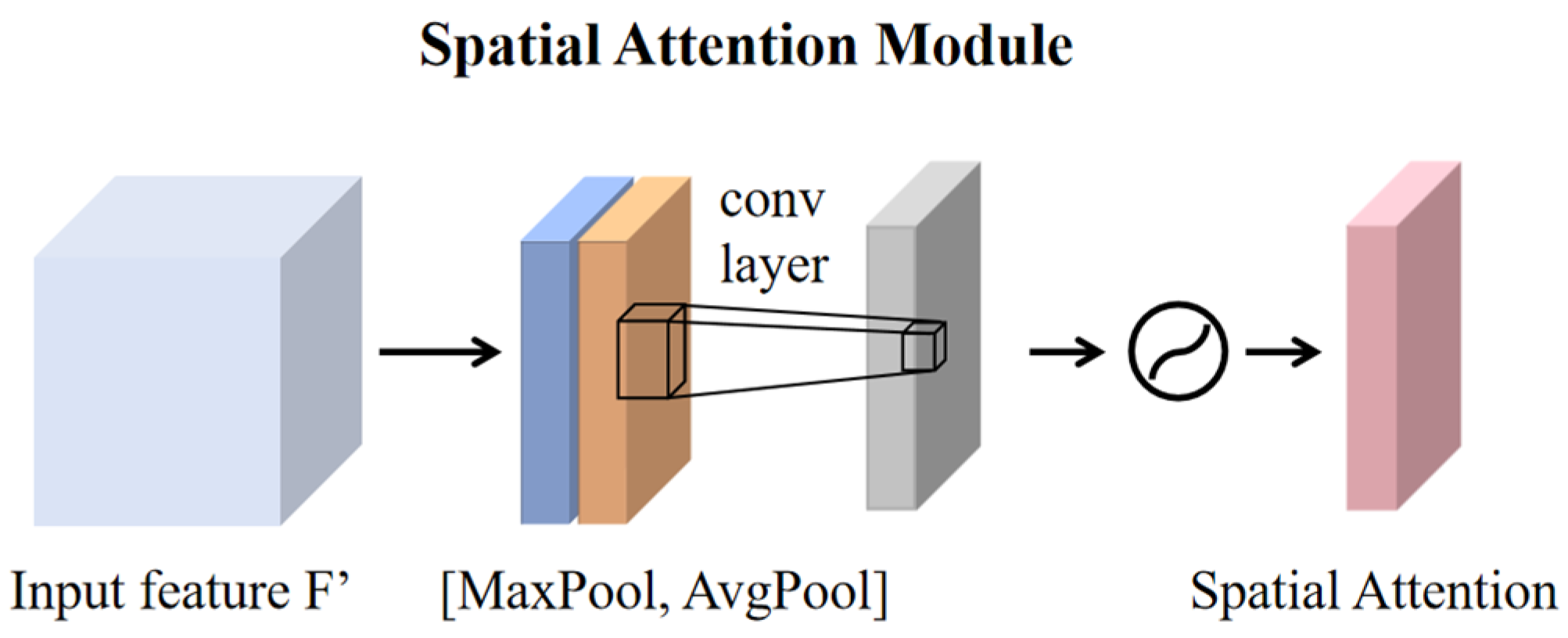

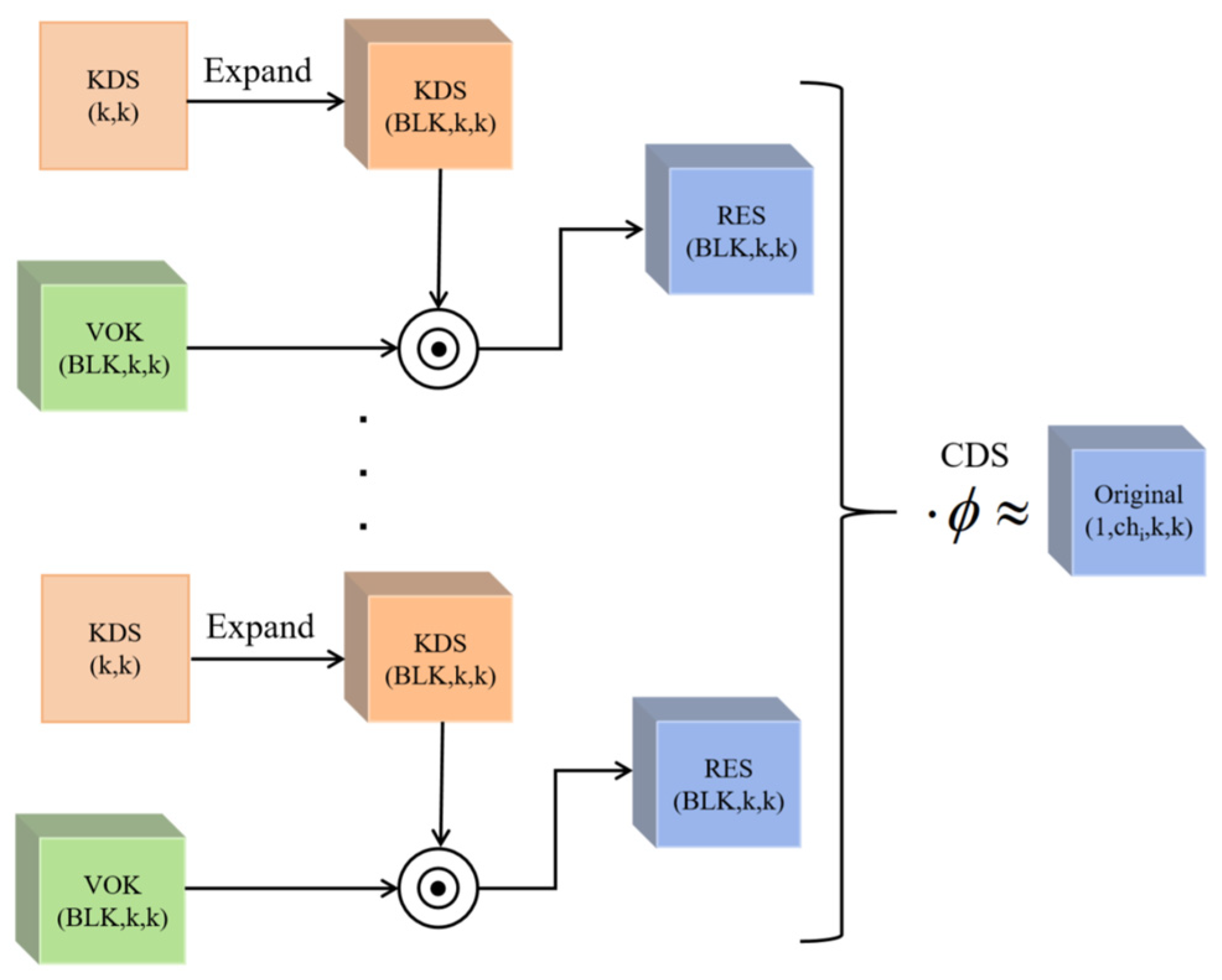

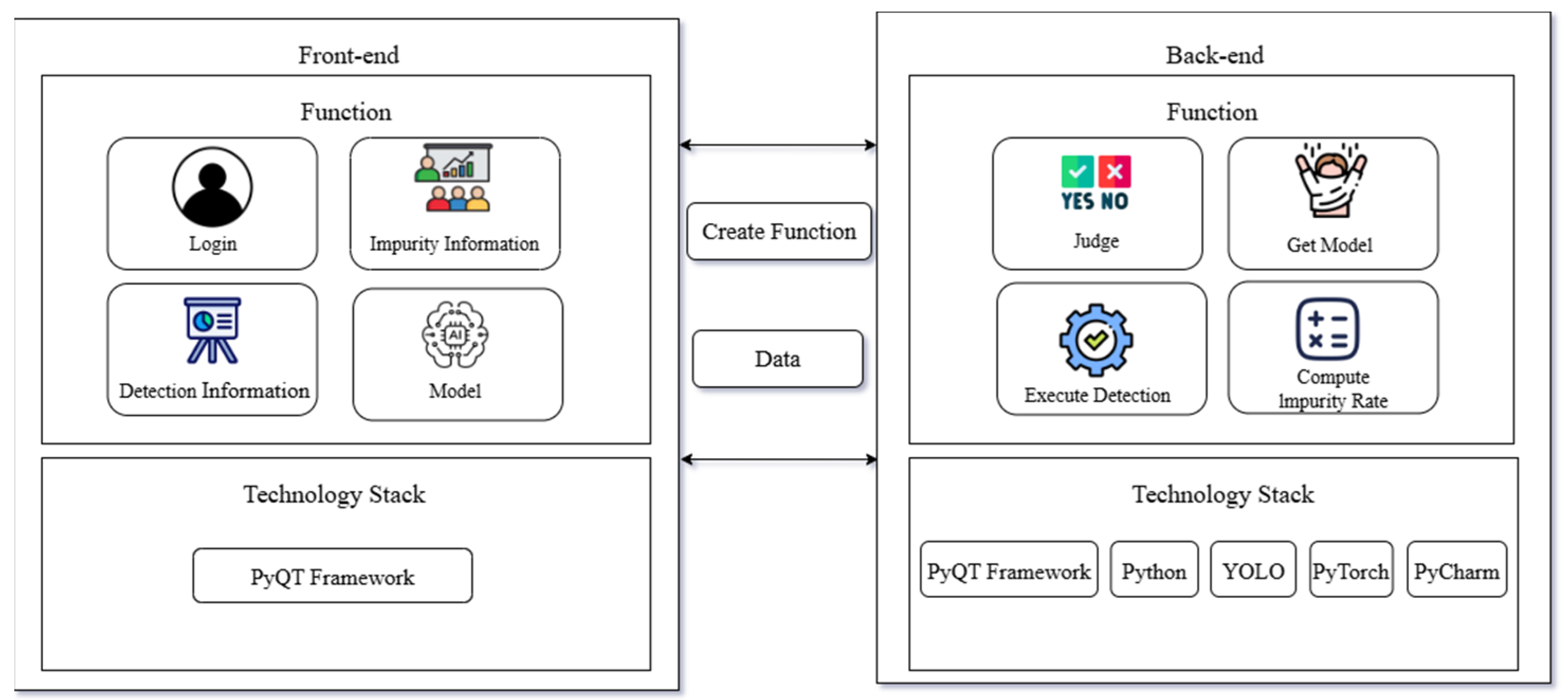

Building on previous research on fallen cotton impurity detection and the successful application of the YOLO series in agriculture, this study addresses three problems of existing models: insufficient feature representation (missed detection of tiny impurities), inefficient computation (limited computing power of industrial equipment), and poor localization robustness (overlapping and falsely detected impurities). We propose Cotton-YOLO, an improved fallen cotton impurity detection model based on YOLOv8 that applies the concept of symmetry to its algorithmic structure and performance balancing. First, the CBAM attention module is introduced to dynamically suppress interference from cotton fibers and enhance the features of small impurities through coordinated channel and spatial attention. Second, the C2f_DSConv lightweight structure replaces standard convolution with Distribution Shifting Convolution (DSConv), whose variable quantized kernel (VQK) and distribution shifting operations (KDS/CDS) reduce the number of parameters by 12% and cut computational redundancy while maintaining multi-scale feature fusion, so that the model can run on low-power devices. The resulting inference speed is 50.51 fps. Third, the Wise-IoU loss is adopted to optimize bounding box regression through its dynamic outlier mechanism. Compared with other IoU-based losses such as GIoU and DIoU, it measures the geometric and distribution differences between predicted and ground-truth boxes more accurately when fallen cotton impurities overlap, differ in scale, or have irregular shapes.
We also develop a visual detection platform that automates the entire process from image acquisition to impurity rate calculation, meeting real-time detection requirements and providing a symmetrical solution that balances efficiency and light weight for cotton impurity detection.
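The channel-then-spatial attention that CBAM applies can be sketched in plain Python as below. It follows the general CBAM design (a shared MLP over average- and max-pooled channel descriptors, then a convolution over channel-wise average and max maps), but only as an illustration under stated assumptions: the weights are hand-picked rather than trained, the spatial kernel is 3 × 3 instead of the commonly used 7 × 7, and nothing here reflects where CBAM is inserted in Cotton-YOLO.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def shared_mlp(v, w0, w1):
    """Two-layer MLP shared by the avg- and max-pooled descriptors: W1 * ReLU(W0 * v)."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w0]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w1]


def channel_attention(fmap, w0, w1):
    """fmap: [C][H][W]. Reweights each channel by sigmoid(MLP(avg) + MLP(max))."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    mx = [max(map(max, ch)) for ch in fmap]
    weights = [sigmoid(a + m) for a, m in zip(shared_mlp(avg, w0, w1), shared_mlp(mx, w0, w1))]
    return [[[v * wc for v in row] for row in ch] for ch, wc in zip(fmap, weights)]


def spatial_attention(fmap, kernel):
    """kernel: [2][k][k] applied to the channel-wise avg and max maps (zero padding)."""
    C, H, W = len(fmap), len(fmap[0]), len(fmap[0][0])
    avg = [[sum(fmap[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(fmap[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    k, pad = len(kernel[0]), len(kernel[0]) // 2
    att = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            s = 0.0
            for m, pooled in enumerate((avg, mx)):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:
                            s += kernel[m][di][dj] * pooled[y][x]
            att[i][j] = sigmoid(s)
    return [[[fmap[c][i][j] * att[i][j] for j in range(W)] for i in range(H)] for c in range(C)]


def cbam(fmap, w0, w1, kernel):
    """Channel attention first, then spatial attention, as in the CBAM design."""
    return spatial_attention(channel_attention(fmap, w0, w1), kernel)


# Toy example: C=2 channels, 3x3 map, reduction ratio 2 (hidden size 1), 3x3 spatial kernel.
fmap = [[[0.1, 0.1, 0.1], [0.1, 2.0, 0.1], [0.1, 0.1, 0.1]],  # channel with an isolated peak
        [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]]]  # flat, texture-like channel
w0 = [[0.5, 0.5]]
w1 = [[1.0], [-1.0]]
kernel = [[[0.0] * 3 for _ in range(3)] for _ in range(2)]
kernel[0][1][1] = kernel[1][1][1] = 1.0
out = cbam(fmap, w0, w1, kernel)
```

With these weights, the channel containing the isolated peak is amplified relative to the flat channel, and the spatial map gives the central pixel a larger gain than the background, which is the qualitative behaviour attributed to CBAM above.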

3. Case Validation

3.1. Experimental Configuration

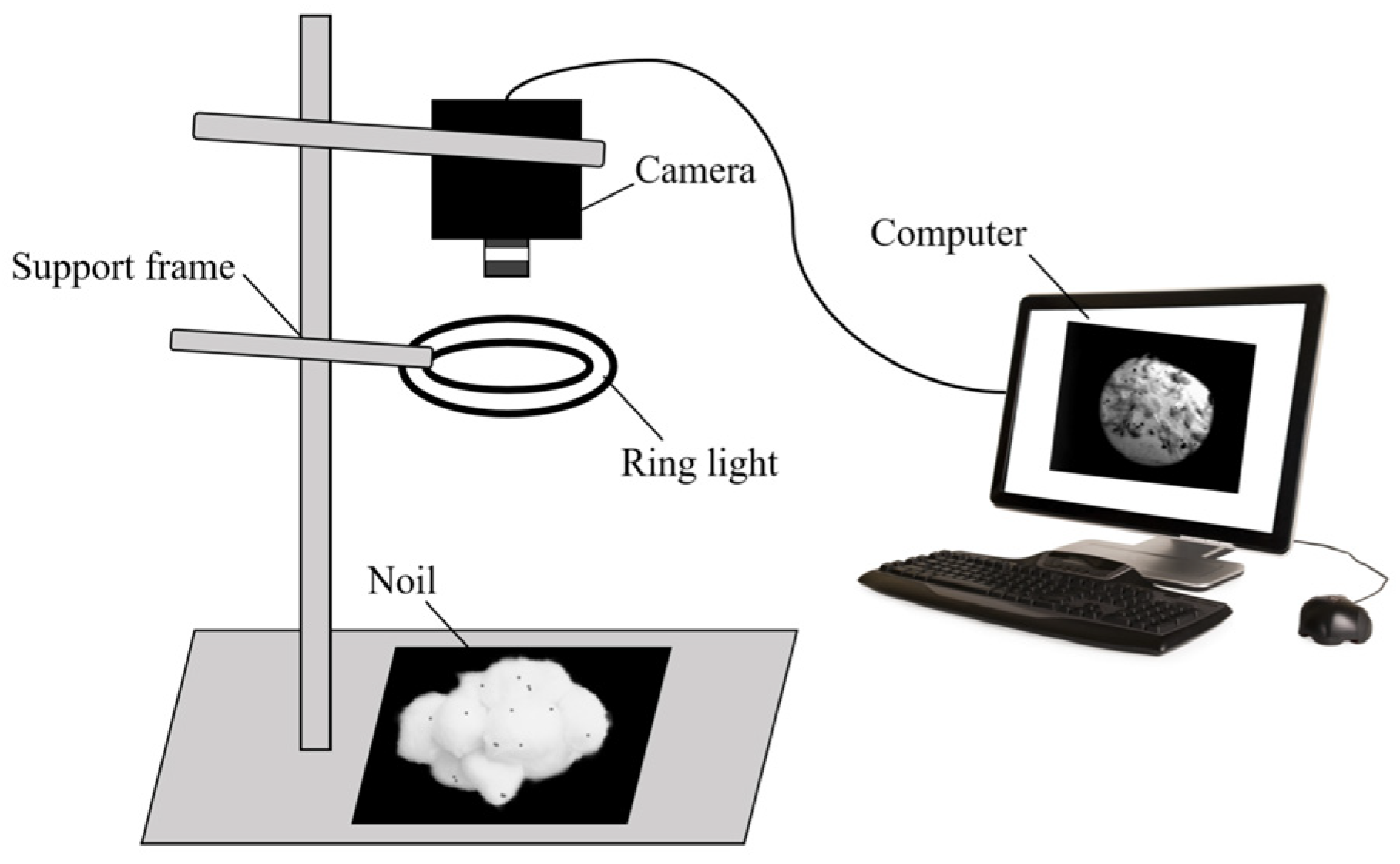

To validate the effectiveness of the proposed method for detecting fallen cotton impurities, 5 kg of fallen cotton was collected at the carding site and randomly divided into 200 samples of equal weight (25 g each). Each sample was fully opened to maximize the exposure of foreign fibers, and an imaging device was then used to capture images of all 200 samples. The hardware platform and software environment used for model training and testing in this study are listed in Table 1.

The model parameters are fine-tuned with the SGD optimizer, with an initial learning rate of 0.01. Training runs for 200 epochs with a batch size (the number of images per training batch) of 2. As shown in Figure 12, the training and validation losses converge progressively over the 200 epochs.

3.2. Performance Comparison Experiments for Alternative Models

Cotton-YOLO shows strong overall performance in impurity detection. This experiment comprehensively evaluates Cotton-YOLO, the improved lightweight YOLOv8-based detection model for fallen cotton impurities. Several representative object detection models are selected for comparison: the classic Faster R-CNN, the lightweight MobileNet-SSD, the efficient EfficientDet-D0, and the YOLO-series models YOLOv5, YOLOv6, YOLOv8, and YOLOv8-Nano. Precision (P), recall (R), mAP50, mAP50–95, number of parameters, and FPS are used to measure each model’s performance on the fallen cotton impurity detection task. The experimental results are shown in Table 2.
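The following single-class sketch shows how IoU, AP at a fixed IoU threshold, and mAP50–95 (the mean AP over IoU thresholds 0.50 to 0.95 in steps of 0.05) relate. It assumes greedy score-ordered matching and all-point interpolation; evaluation toolkits such as the COCO API or Ultralytics differ in details (for example, 101-point interpolation and averaging over classes), so their numbers can differ slightly. The boxes and scores are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, thr):
    """Single-class AP at IoU threshold thr.

    preds: list of (score, box); gts: list of ground-truth boxes.
    Each prediction (highest score first) is matched to the unmatched ground
    truth with the largest IoU; AP is the area under the precision envelope.
    """
    matched = [False] * len(gts)
    tp = []
    for _, box in sorted(preds, key=lambda p: -p[0]):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            o = iou(box, g)
            if not matched[j] and o > best:
                best, best_j = o, j
        hit = best_j >= 0 and best >= thr
        if hit:
            matched[best_j] = True
        tp.append(1 if hit else 0)

    precisions, recalls, cum_tp = [], [], 0
    for k, t in enumerate(tp, start=1):
        cum_tp += t
        precisions.append(cum_tp / k)
        recalls.append(cum_tp / len(gts))
    # Make precision non-increasing when read from right to left (the envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def map50_95(preds, gts):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
print(average_precision(preds, gts, 0.5), map50_95(preds, gts))  # 1.0 0.7
```

The second prediction overlaps its target with an IoU of about 0.68, so it counts as a hit at thresholds 0.50 to 0.65 but not from 0.70 upward. This is why mAP50–95 (0.7) is lower than AP50 (1.0), the same effect that separates the mAP50 and mAP50–95 columns of Table 2.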

The experimental results show that Cotton-YOLO achieved a precision of 0.865 in fallen cotton impurity detection. Although this is lower than the 0.929 of Faster R-CNN, it is 5.1, 7.5, 10.4, and 5.4 percentage points higher than MobileNet-SSD (0.814), EfficientDet-D0 (0.79), YOLOv6 (0.761), and YOLOv8-Nano (0.811), respectively. Cotton-YOLO’s recall of 0.807 is the highest among the compared models, indicating that it detects fallen cotton impurities more comprehensively. Its mAP50 of 0.896 matches the original YOLOv8, exceeds all other comparison models, and is more than 10% higher than Faster R-CNN and YOLOv6, confirming the model’s strong ability to identify impurity features. Its mAP50–95 of 0.501 is close to the best among the comparison models, showing that it remains robust under stricter localization criteria. Cotton-YOLO has only 2,714,105 parameters, fewer than every other model except YOLOv8-Nano and far fewer than Faster R-CNN, MobileNet-SSD, EfficientDet-D0, and YOLOv6. Its detection speed reaches 50.51 fps, slightly below the 50.76 fps of YOLOv5 but above all the remaining models. In short, Cotton-YOLO trails Faster R-CNN in precision, trails YOLOv5 by about 1% in mAP50–95, and has slightly more parameters than YOLOv8-Nano, but it far exceeds each of these three models in the other metrics. This balance of precision, speed, and model size gives Cotton-YOLO broad potential for practical deployment in production.

Compared with the original YOLOv8, Cotton-YOLO performs better on several key metrics. Its precision of 0.865 is higher than YOLOv8’s 0.862, giving more accurate results in fallen cotton impurity detection, where high precision is required. Its recall of 0.807 is higher than YOLOv8’s 0.804, so more cotton impurities are detected. The advantages in parameter count and detection speed are more pronounced. Cotton-YOLO has 2,714,105 parameters versus 3,005,843 for YOLOv8, a reduction of about 9.7%, so it occupies fewer hardware resources and suits resource-constrained devices. Its detection speed of 50.51 fps is 3.5% higher than YOLOv8’s 48.78 fps, allowing faster image processing that meets real-time requirements. Both models reach 0.896 mAP50, but Cotton-YOLO achieves it with fewer parameters and faster detection. Cotton-YOLO therefore better meets the practical production requirements of fallen cotton impurity detection.
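For reference, the relative changes implied by the raw parameter counts and frame rates in this comparison can be recomputed directly:

```python
yolov8_params, cotton_params = 3_005_843, 2_714_105
yolov8_fps, cotton_fps = 48.78, 50.51

# Fraction of the baseline parameters removed, and relative throughput gain.
param_reduction = (yolov8_params - cotton_params) / yolov8_params
fps_gain = (cotton_fps - yolov8_fps) / yolov8_fps

print(f"parameters: -{param_reduction:.1%}")  # parameters: -9.7%
print(f"FPS: +{fps_gain:.1%}")                # FPS: +3.5%
```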

Figure 13 shows the visual representation of the detection results achieved by Cotton-YOLO.

3.3. Ablation Experiments

Using the same training and validation datasets of fallen cotton impurity images, this study evaluates each improvement separately. The detection results of the different models are shown in Figure 14, and their metrics are recorded in Table 3. First, the YOLOv8 + CBAM model, which adds the CBAM module, increases precision (P) by about 1%, indicating that the module effectively improves the model’s accuracy in identifying positive samples. Its mean average precision mAP50–95 improves by 0.2%, meaning that overall detection performance improves across different intersection over union (IoU) thresholds. Its frame rate (FPS) also increases by about 4%, a clear gain in inference speed, at the cost of a small increase in the number of parameters. Overall, the CBAM module has a positive impact on model performance, especially on accuracy and inference speed. Next, the YOLOv8 + DSConv model, which adds the DSConv module, improves precision by about 0.7%, somewhat strengthening its ability to identify positive samples. It also has a significant advantage in parameter count, about 12% fewer than the original model, which more than offsets the parameter increase of the YOLOv8 + CBAM model. These results show that the DSConv module has a positive impact on model performance. Finally, the YOLOv8 + WIoU model, which replaces the loss function with Wise-IoU, improves recall by about 0.3%, mAP50 by about 0.2%, and mAP50–95 by about 0.1% compared with the original YOLOv8. With computational cost (the number of parameters is unchanged) and inference speed (48.78 fps) both maintained, the model improves in recall and mean average precision, demonstrating that the Wise-IoU loss plays a positive role in model optimization.
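The dynamic outlier mechanism of Wise-IoU can be illustrated with the following sketch, assuming the v3 formulation of Wise-IoU: a distance-based attention term R_WIoU multiplied by 1 − IoU, then scaled by a non-monotonic focusing coefficient of the outlier degree β. The hyperparameters α = 1.9 and δ = 3 and the running-mean momentum are assumptions, not values reported for Cotton-YOLO, and a real training loop would compute this on tensors with R_WIoU and β detached from the gradient.

```python
import math


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def focusing_coefficient(beta, alpha=1.9, delta=3.0):
    """Non-monotonic focusing factor r = beta / (delta * alpha ** (beta - delta))."""
    return beta / (delta * alpha ** (beta - delta))


def wise_iou_v3(pred, gt, mean_l_iou, alpha=1.9, delta=3.0):
    """Wise-IoU v3 loss for one predicted/ground-truth box pair (plain floats, no autograd).

    mean_l_iou is the running mean of (1 - IoU); in real training R_WIoU and beta
    are detached so they rescale the gradient without being optimized themselves.
    """
    l_iou = 1.0 - iou(pred, gt)
    pcx, pcy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp(((pcx - gcx) ** 2 + (pcy - gcy) ** 2) / (wg ** 2 + hg ** 2))
    beta = l_iou / mean_l_iou  # outlier degree: large for low-quality boxes
    return focusing_coefficient(beta, alpha, delta) * r_wiou * l_iou


def update_running_mean(mean_l_iou, l_iou, momentum=0.01):
    """Exponential moving average of (1 - IoU); the momentum value is an assumption."""
    return (1 - momentum) * mean_l_iou + momentum * l_iou


gt = (10, 10, 50, 50)
for name, pred in [("close", (12, 12, 52, 52)), ("poor", (40, 40, 90, 90))]:
    print(name, round(wise_iou_v3(pred, gt, mean_l_iou=0.3), 3))
```

The focusing coefficient peaks at β = 1/ln α (about 1.56 here) and decays on both sides, so very good boxes and extreme outliers both receive smaller gradient weights. Under this assumption, low-quality samples, such as boxes around heavily overlapping impurities, are kept from dominating the regression.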

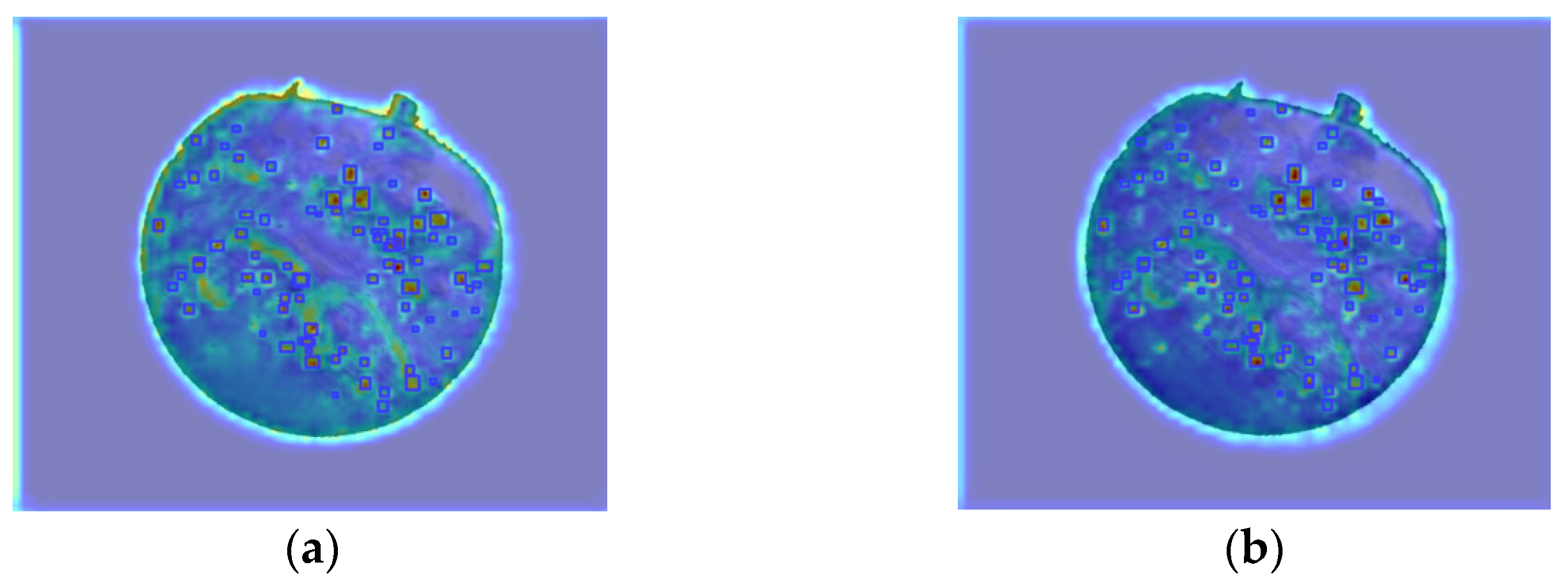

To verify the effect of CBAM on fallen cotton impurity detection, Grad-CAM++ was applied to generate class activation heat maps; the heat maps before and after adding CBAM attention are shown in Figure 15. Before CBAM is introduced, the heat map responds diffusely to tiny impurities, and many false activations (darker regions) appear in the fallen cotton background (non-impurity regions), indicating that the model captures impurity features poorly and is easily disturbed by complex textures. After CBAM is introduced, the heat map concentrates on the locations of real impurities, and false activations in the background drop sharply. This visually confirms that CBAM suppresses background noise and enhances the feature representation of tiny impurities through the synergy of channel and spatial attention.
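Heat maps of this kind are built by weighting a convolutional layer’s activation maps with gradient-derived coefficients. The sketch below computes a Grad-CAM++ map for one layer in plain Python, using the common simplification in which the class score is passed through an exponential so that higher-order derivatives reduce to powers of the first-order gradient. The activations and gradients are toy inputs that would, in practice, come from a framework’s autograd; the function name is hypothetical.

```python
def grad_cam_pp(acts, grads, eps=1e-7):
    """Grad-CAM++ heat map for one layer.

    acts:  [K][H][W] activations A^k of the chosen convolutional layer.
    grads: [K][H][W] gradients dS/dA^k of the class score S.
    Returns an H x W map scaled to [0, 1].
    """
    K, H, W = len(acts), len(acts[0]), len(acts[0][0])
    cam = [[0.0] * W for _ in range(H)]
    for k in range(K):
        a_sum = sum(map(sum, acts[k]))
        weight = 0.0
        for i in range(H):
            for j in range(W):
                g = grads[k][i][j]
                if g == 0:
                    continue  # alpha is taken as 0 where the gradient vanishes
                alpha = g ** 2 / (2 * g ** 2 + a_sum * g ** 3 + eps)
                weight += alpha * max(g, 0.0)  # only positive gradients contribute
        for i in range(H):
            for j in range(W):
                cam[i][j] += weight * acts[k][i][j]
    cam = [[max(v, 0.0) for v in row] for row in cam]  # ReLU on the weighted sum
    peak = max(map(max, cam))
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Toy layer: one channel that fires on a single pixel, with a uniform positive gradient.
acts = [[[0.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 0.0]]]
grads = [[[0.5] * 3 for _ in range(3)]]
heat = grad_cam_pp(acts, grads)
```

A model that has learned impurity-specific channels concentrates the resulting map on impurity pixels; activations spread over the cotton background show up as the false activations described above.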

3.4. Discussion of Experimental Results

The experimental analysis shows that the proposed improvements markedly enhance fallen cotton impurity detection. The CBAM module strengthens the capture of tiny impurity features through its dual channel and spatial attention. Introducing this module improves precision by about 1% and inference speed by 4%, confirming its ability to focus on relevant features against complex lint backgrounds. However, the small increase in the number of parameters may hinder deployment in extremely lightweight scenarios, where precision must be traded against efficiency. By replacing standard convolution with lightweight DSConv, the C2f_DSConv module reduces the number of model parameters by 12% while maintaining multi-scale feature fusion. The YOLOv8 + DSConv model improves precision by about 0.7%, but its mAP50–95 falls to 0.498, 0.6% lower than that of the original model. This is because the quantization in DSConv, while reducing computational cost, slightly underpreserves some fine-grained features such as impurity edge texture, which slightly lowers localization accuracy at medium and high IoU thresholds. Nevertheless, its light weight makes real-time detection feasible on mobile or embedded devices. The Wise-IoU loss balances the training weights of samples of different quality through a dynamic outlier mechanism. Without increasing the number of parameters or the inference time, it raises recall and mAP50–95 by 0.3% and 0.1%, respectively, which shows that it effectively improves the localization of irregularly distributed impurities, especially in scenes with overlapping and complex-shaped impurities.
Compared with existing models, Cotton-YOLO also shows clear advantages in overall performance.
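The quantization trade-off described above can be illustrated with a simplified DSConv-style weight decomposition for a single filter: low-bit integers (playing the role of the VQK) multiplied by one floating-point scale per block of input channels (KDS) and one scale per filter (CDS). This is a sketch under assumptions (symmetric max-abs scaling, CDS fixed to 1, no shift terms, a hypothetical 4-channel 3 × 3 filter), not the original DSConv training procedure or the C2f_DSConv code used in Cotton-YOLO.

```python
import math


def dsconv_decompose(kernel, block_channels=2, bits=3):
    """Split one filter into low-bit integers (VQK) and per-block float scales (KDS).

    kernel: [C_in][k*k] float weights of a single output filter.
    """
    qmax = 2 ** (bits - 1) - 1  # e.g. 3 for 3-bit signed integers in [-4, 3]
    vqk, kds = [], []
    for c0 in range(0, len(kernel), block_channels):
        block = kernel[c0:c0 + block_channels]
        peak = max(abs(w) for ch in block for w in ch)
        scale = peak / qmax if peak > 0 else 1.0  # one KDS value per channel block
        kds.append(scale)
        for ch in block:
            vqk.append([max(-qmax - 1, min(qmax, round(w / scale))) for w in ch])
    return vqk, kds


def dsconv_reconstruct(vqk, kds, block_channels=2, cds=1.0):
    """Rebuild float weights as VQK * KDS * CDS (CDS is one scalar per filter)."""
    return [[q * kds[c // block_channels] * cds for q in ch] for c, ch in enumerate(vqk)]


def storage_bits(c_in, k, bits, block_channels):
    """Bits for a float32 filter versus its VQK + KDS + CDS representation."""
    n_blocks = math.ceil(c_in / block_channels)
    float_bits = c_in * k * k * 32
    dsconv_bits = c_in * k * k * bits + n_blocks * 32 + 32
    return float_bits, dsconv_bits


# Hypothetical 3x3 filter with 4 input channels.
kernel = [[((c * 9 + i) % 7 - 3) / 10 for i in range(9)] for c in range(4)]
vqk, kds = dsconv_decompose(kernel)
rec = dsconv_reconstruct(vqk, kds)
float_bits, dsconv_bits = storage_bits(c_in=4, k=3, bits=3, block_channels=2)
print(float_bits, dsconv_bits)  # 1152 204
```

Each reconstructed weight lies within half a quantization step of the original. That is harmless for coarse structure but can blur small weight differences, which is one plausible route to the loss of fine detail such as faint impurity edges. How the storage saving translates into the parameter counts reported in Table 3 depends on the implementation.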

Overall, Cotton-YOLO combines the advantages of CBAM, C2f_DSConv, and Wise-IoU, but its mAP50–95 of 0.501 is slightly lower than that of YOLOv8 + CBAM (0.506) and YOLOv8 + WIoU (0.505). This difference is a reasonable trade-off in the joint optimization of the modules: the lightweight operations of DSConv partially offset the feature enhancement of CBAM, while the localization gains of Wise-IoU mitigate this loss. With industrial requirements in mind, Cotton-YOLO keeps the same mAP50 as the original model (89.6%) while reducing the number of parameters by about 9.7% and increasing the frame rate by 3.5% to 50.51 fps, far above the 20 fps threshold of the carding machine detection scenario. This margin also leaves headroom for fluctuations in the conveying speed of fallen cotton, achieving a balanced “precision–speed–lightweight” triangle.
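The real-time margin can be expressed as a per-frame time budget. The sketch below assumes the 20 fps threshold applies to the detector alone; if image acquisition and post-processing share the same budget, the spare time is what those stages may use.

```python
required_fps = 20.0  # real-time threshold quoted for the carding-machine scene
model_fps = 50.51    # Cotton-YOLO throughput reported above

frame_budget_ms = 1000.0 / required_fps  # time available per frame
model_latency_ms = 1000.0 / model_fps    # time the detector needs per frame
spare_ms = frame_budget_ms - model_latency_ms
headroom = model_fps / required_fps      # how many times faster than required

print(f"budget {frame_budget_ms:.1f} ms, latency {model_latency_ms:.1f} ms, "
      f"spare {spare_ms:.1f} ms, headroom x{headroom:.2f}")
# budget 50.0 ms, latency 19.8 ms, spare 30.2 ms, headroom x2.53
```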

In conclusion, the proposed improvements raise precision, recall, and inference speed while reducing the number of model parameters, yielding a lightweight model.

5. Conclusions and Prospects

In the automation and intelligent transformation of the cotton spinning industry, the accuracy and efficiency of fallen cotton impurity detection directly affect product quality and processing cost, making it a key issue in industrial upgrading. To address the insufficient accuracy of traditional methods and the large parameter counts of deep learning models, this study carried out a series of work. First, an impurity detection platform was built and a dataset was established as a basis for model training. Second, based on a symmetry-oriented design of YOLOv8, the lightweight model Cotton-YOLO was proposed. Fusing the dual-path symmetric CBAM attention module with the C2f_DSConv lightweight structure enhances the capture of small impurity features while reducing complexity, and the Wise-IoU loss optimizes bounding box regression, improving the robustness of localization for irregular and overlapping impurities. The key results are as follows. In terms of model size, the number of parameters is reduced to 2.71 M, about 9.7% fewer than the original YOLOv8 (3.01 M). In terms of efficiency, the inference speed reaches 50.51 fps, 3.5% higher than the original, meeting industrial real-time requirements. In terms of accuracy, the precision is 86.5%, the recall is 80.7%, and mAP50 is 89.6%. Finally, a visual detection interface was designed to automate the whole process and provide an operational vehicle for industrial deployment.

This research has promising applications. The Cotton-YOLO model and its lightweight strategy can detect fallen cotton impurities, aiding cotton quality improvement and textile machinery upgrades. The approach may also extend to agriculture, food processing, resource recycling, and pharmaceuticals, offering a reference for foreign object sorting tasks that require speed, accuracy, and lightweight design.

Although Cotton-YOLO performs well in detecting fallen cotton impurities, it has limitations: its robustness is poor in complex scenarios (e.g., extreme lighting, shadows, moving impurities), and it cannot distinguish impurity types (e.g., soil, branches), which restricts fine sorting. Future work will pursue fine-grained impurity classification, improve adaptability to complex environments and the detection of tiny impurities through adaptive preprocessing and multi-modal fusion, and use neural architecture search (NAS) to optimize adaptation to edge devices, advancing field validation and cross-domain applications.