Decentralized Federated Learning with Node Incentive and Role Switching Mechanism for Network Traffic Prediction in NFV Environment

Abstract

1. Introduction

- (1) Modeling the VNF Migration Problem: This manuscript develops a mathematical model for the VNF migration problem under dynamic traffic conditions in NFV networks involving multiple service function providers.

- (2) Decentralized Federated Learning Mechanism: This manuscript proposes a decentralized FL mechanism based on blockchain that detects and excludes malicious nodes from key roles using a consensus mechanism integrated with a dynamic role management strategy.

- (3) Asynchronous Communication and Dynamic Role Switching: This manuscript designs an asynchronous aggregation strategy and introduces a role-switching mechanism driven by nodes’ historical contributions, helping to balance computational load and improve system stability.

- (4) Node Join-and-Exit Mechanism: This manuscript introduces a dynamic mechanism to manage node join-and-exit events. The system evaluates node performance based on VNF migration outcomes and applies corresponding reward and punishment mechanisms, thereby enhancing system robustness against malicious behavior.

2. Related Work

2.1. Network Traffic Forecasting

2.2. Blockchain and Federated Learning

3. System Architecture

3.1. Mathematical Model of VNF Migration Problem

3.1.1. Decision Variable

3.1.2. Constraints

3.1.3. Migration Cost

- (1) Delay Cost

- (2) Operating Cost

- (3) Rejection Cost

3.1.4. Optimization Objectives

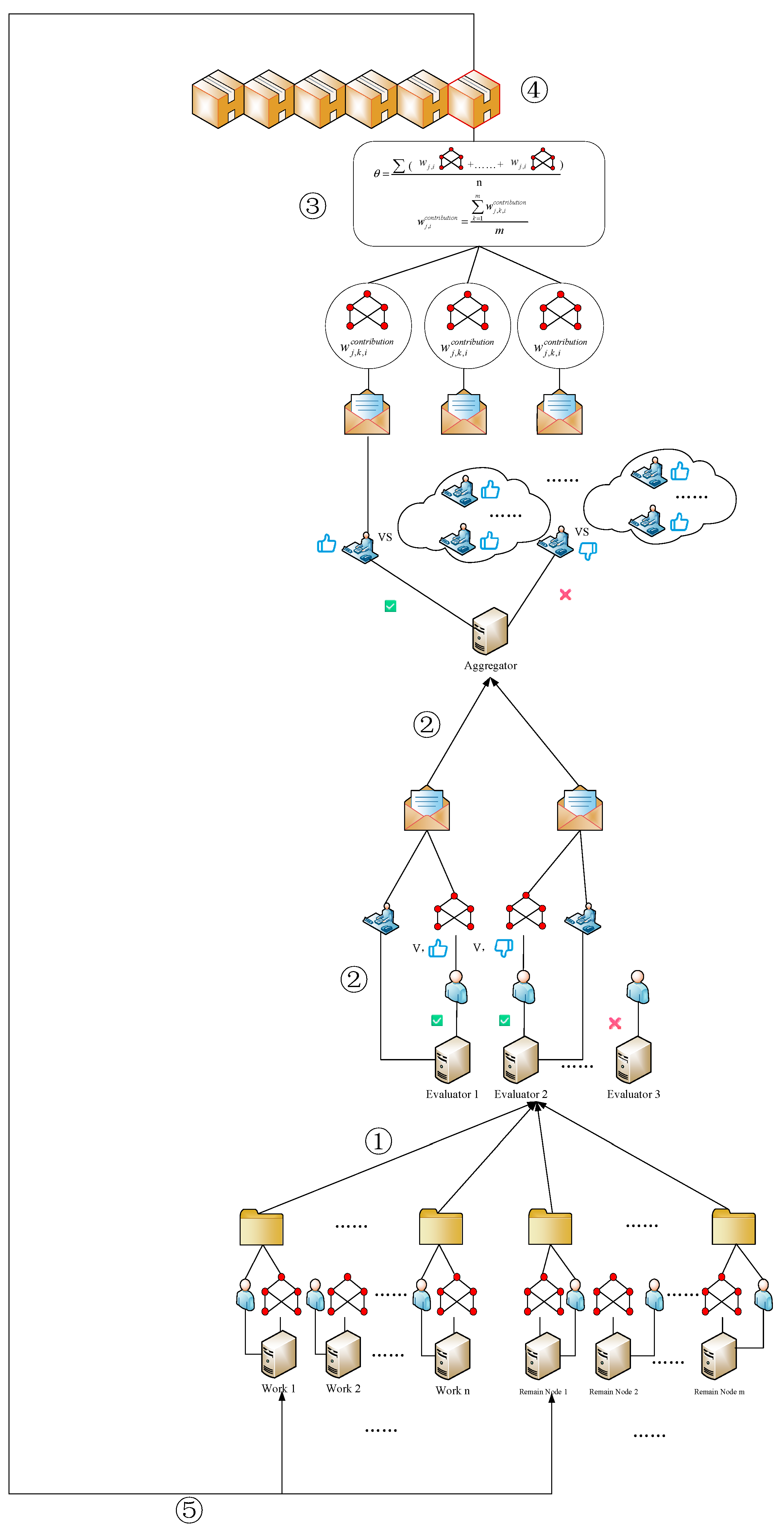

3.2. System Model

3.2.1. General Architecture

3.2.2. Implementation Steps

- (1) Rejection Cost

- (2) Aggregation Method

- (3) Role Switching Mechanism

3.3. Consensus Mechanism

3.4. Smart Contract

4. Algorithms

| Algorithm 1: Blockchain and Decentralized Federated Learning Framework with Node Incentive |

| Inputs: Node set , Block and Global model . |

| Output: New block , New global model . |

| 1 For each node i: |

| 2 Randomize the type to which the nodes belong. |

| 3 End for |

| 4 For each node i: |

| 5 If node i is a Worker: |

| 6 ←0; |

| 7 End if |

| 8 End for |

| 9 For each round out_r from 1 to EPOCH_OUT: |

| 10 For each round in_r from 1 to EPOCH_IN: |

| 11 Train each local model using local private historical traffic data; //At Worker |

| 12 Generate transactions with the hash value of model parameter of each local model and authentication information, then send them to all Evaluator nodes; //At Worker |

| 13 For each model i: //At Evaluator |

| 14 If model i belongs to a Worker or a Remaining node: |

| 15 For each Evaluator : |

| 16 Verify the identity of the node; |

| 17 If identity passes: |

| 18 Evaluate the migration cost of the model i according to Equation (25); |

| 19 Compute the gap ratio according to Equation (28); |

| 20 If the gap ratio is below the threshold: |

| 21 Select the node and update the contribution according to Equation (30); |

| 22 Else: |

| 23 Ignore the node and update the contribution according to Equation (29); |

| 24 End if |

| 25 End if |

| 26 End for |

| 27 End if |

| 28 End for |

| 29 For each node k: //At Aggregator |

| 30 If node k is an Evaluator: |

| 31 Compute evaluation for evaluator according to Equation (31); |

| 32 If evaluation > threshold : |

| 33 Generate a transaction containing the validation results and send it to the Aggregator node. |

| 34 Else: |

| 35 Demote evaluator k to a Remaining node; |

| 36 Set the contribution value of the Remaining node k to 0; |

| 37 If the number of Evaluator nodes is less than half the maximum number of Evaluator nodes: |

| 38 TRANS = 1; |

| 39 Else: |

| 40 TRANS = 0; |

| 41 End if |

| 42 End if |

| 43 End if |

| 44 End for |

| 45 For each Worker: //At Aggregator |

| 46 If more than half of the Evaluators select this Worker: |

| 47 Select this worker as the aggregation node; |

| 48 End if |

| 49 End for |

| 50 For each Worker: //At Aggregator |

| 51 Calculate the actual contribution according to Equation (32); |

| 52 End for |

| 53 Generate global model and new block according to Equation (26) using all of the hash values of local models of the selected aggregator; |

| 54 Broadcast the new block to all Workers and the Remaining nodes. |

| 55 If TRANS==1: |

| 56 break; |

| 57 End if |

| 58 End for |

| 59 Reassign node roles according to Algorithm 2; |

| 60 End for |
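The voting logic in Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: Equations (25) and (28) are not reproduced here, so `gap_ratio` uses a relative difference to a reference cost as a hypothetical stand-in, and all function and variable names are assumptions introduced for the example.

```python
def gap_ratio(cost, reference_cost):
    # Hypothetical stand-in for Equation (28): relative gap to a reference cost.
    return abs(cost - reference_cost) / reference_cost


def evaluator_selection(costs, reference_cost, threshold):
    # One Evaluator's decision: keep workers whose gap ratio is below the threshold.
    return {w for w, c in costs.items() if gap_ratio(c, reference_cost) < threshold}


def select_for_aggregation(selections, workers):
    # Aggregator-side majority vote: a worker is kept only if more than
    # half of the Evaluators selected it.
    n_evaluators = len(selections)
    chosen = []
    for w in workers:
        votes = sum(1 for picked in selections.values() if w in picked)
        if votes > n_evaluators / 2:
            chosen.append(w)
    return chosen


def trans_flag(active_evaluators, max_evaluators):
    # TRANS = 1 when fewer than half of the maximum number of Evaluators remain,
    # which ends the inner loop early and triggers role reassignment.
    return 1 if active_evaluators < max_evaluators / 2 else 0


# Three Evaluators score three Workers (migration costs are made-up numbers).
selections = {
    "e1": evaluator_selection({"w1": 10.0, "w2": 30.0, "w3": 11.0}, 10.0, 0.2),
    "e2": evaluator_selection({"w1": 10.5, "w2": 10.2, "w3": 50.0}, 10.0, 0.2),
    "e3": evaluator_selection({"w1": 40.0, "w2": 29.0, "w3": 10.0}, 10.0, 0.2),
}
selected = select_for_aggregation(selections, ["w1", "w2", "w3"])
```

In this toy run, `w1` and `w3` each receive two of three votes and are kept, while `w2` receives one vote and is ignored. The contribution updates of Equations (29) to (32) are deliberately left out because their form is not given in this section.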

| Algorithm 2: Node Role Assignment and Switching |

| Input: Current roles of all nodes. |

| Output: Updated node roles (Aggregator, Worker, Residual, or Evaluator nodes). |

| 1 Select a node as aggregator from the Workers and Residual nodes with the highest contribution; |

| 2 Select k nodes as evaluators from the Workers and Residual nodes with higher contribution; |

| 3 For each node i: |

| 4 If node i is a former Evaluator or Aggregator: |

| 5 Set node i as a Worker; |

| 6 End if |

| 7 End for |

| 8 Select n-m-1 nodes as Workers from the Workers and Residual nodes with higher contribution; |

| 9 Set the remaining nodes as Residual nodes; |

| 10 Return the result of the updated role assignment. |
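A possible reading of Algorithm 2 is sketched below in Python. Several points are assumptions made for the example rather than statements from the paper: the number of Evaluators and Workers are passed explicitly as the hypothetical parameters `num_evaluators` and `num_workers`, ties are broken by node name, and former Aggregator and Evaluator nodes compete for Worker slots by contribution once they have stepped down.

```python
AGGREGATOR, EVALUATOR, WORKER, RESIDUAL = "aggregator", "evaluator", "worker", "residual"


def reassign_roles(roles, contribution, num_evaluators, num_workers):
    # Candidates for key roles are the current Workers and Residual nodes,
    # ranked by contribution (highest first, ties broken by name).
    candidates = sorted(
        (n for n, r in roles.items() if r in (WORKER, RESIDUAL)),
        key=lambda n: (-contribution[n], n),
    )
    if len(candidates) < 1 + num_evaluators:
        raise ValueError("not enough Worker/Residual nodes to fill key roles")

    new_roles = {candidates[0]: AGGREGATOR}           # step 1
    for n in candidates[1:1 + num_evaluators]:        # step 2
        new_roles[n] = EVALUATOR

    # Steps 3-7: former Evaluators and the former Aggregator step down and
    # join the pool of nodes that may become Workers.
    pool = sorted(
        (n for n in roles if n not in new_roles),
        key=lambda n: (-contribution[n], n),
    )
    for n in pool[:num_workers]:                      # step 8
        new_roles[n] = WORKER
    for n in pool[num_workers:]:                      # step 9
        new_roles[n] = RESIDUAL
    return new_roles                                  # step 10


roles = {"a": AGGREGATOR, "b": EVALUATOR, "c": WORKER,
         "d": WORKER, "e": RESIDUAL, "f": WORKER}
contribution = {"a": 5, "b": 4, "c": 9, "d": 7, "e": 8, "f": 1}
updated = reassign_roles(roles, contribution, num_evaluators=1, num_workers=2)
```

Here node `c` becomes the Aggregator, `e` becomes the Evaluator, `d` and `a` become Workers, and `b` and `f` become Residual nodes. The sketch sorts the nodes for readability and makes no claim about matching the time complexity stated for the paper's algorithm.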

5. Simulation

5.1. Settings

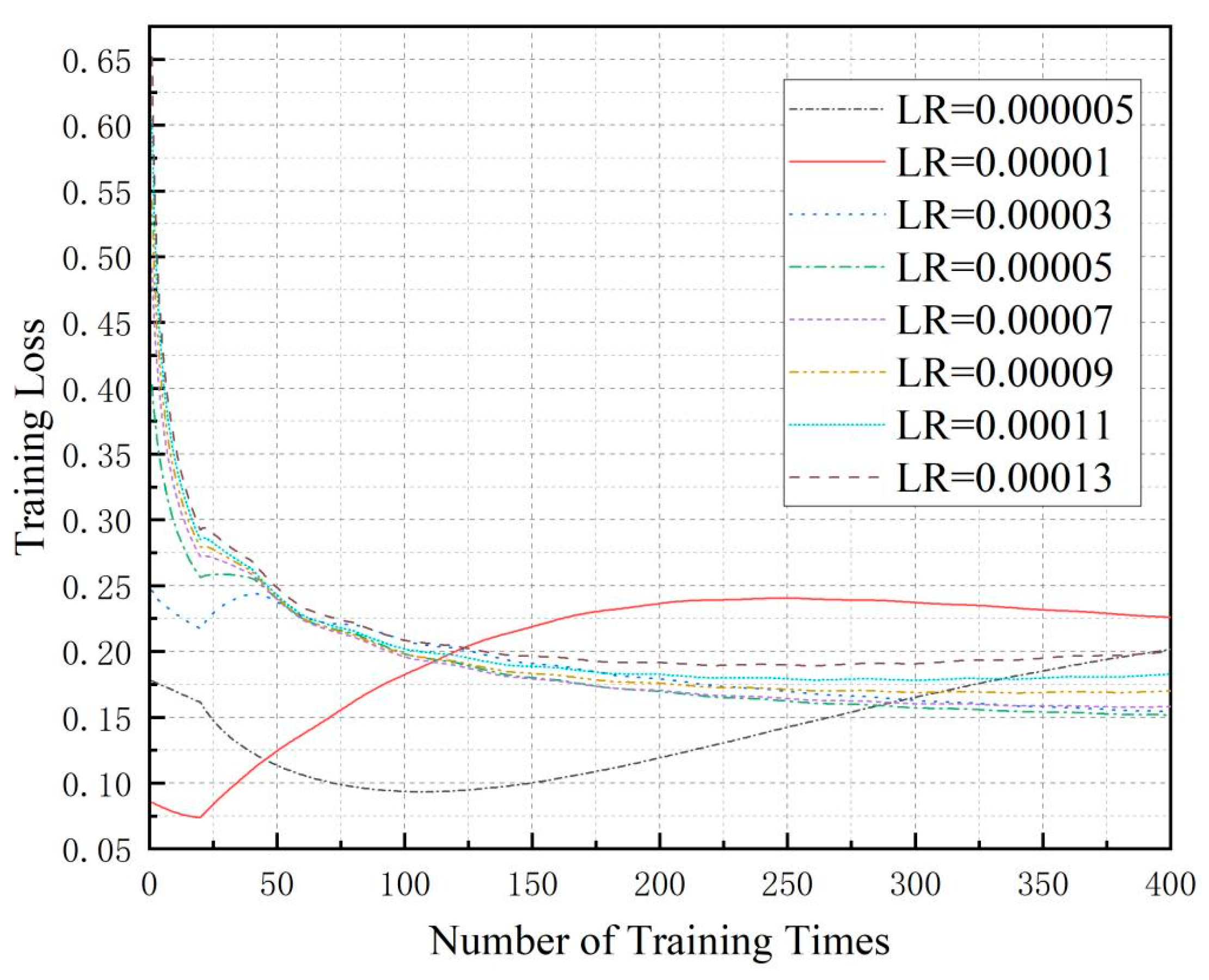

5.1.1. Parameter Settings

5.1.2. Data Sources

5.1.3. Benchmark Schemes

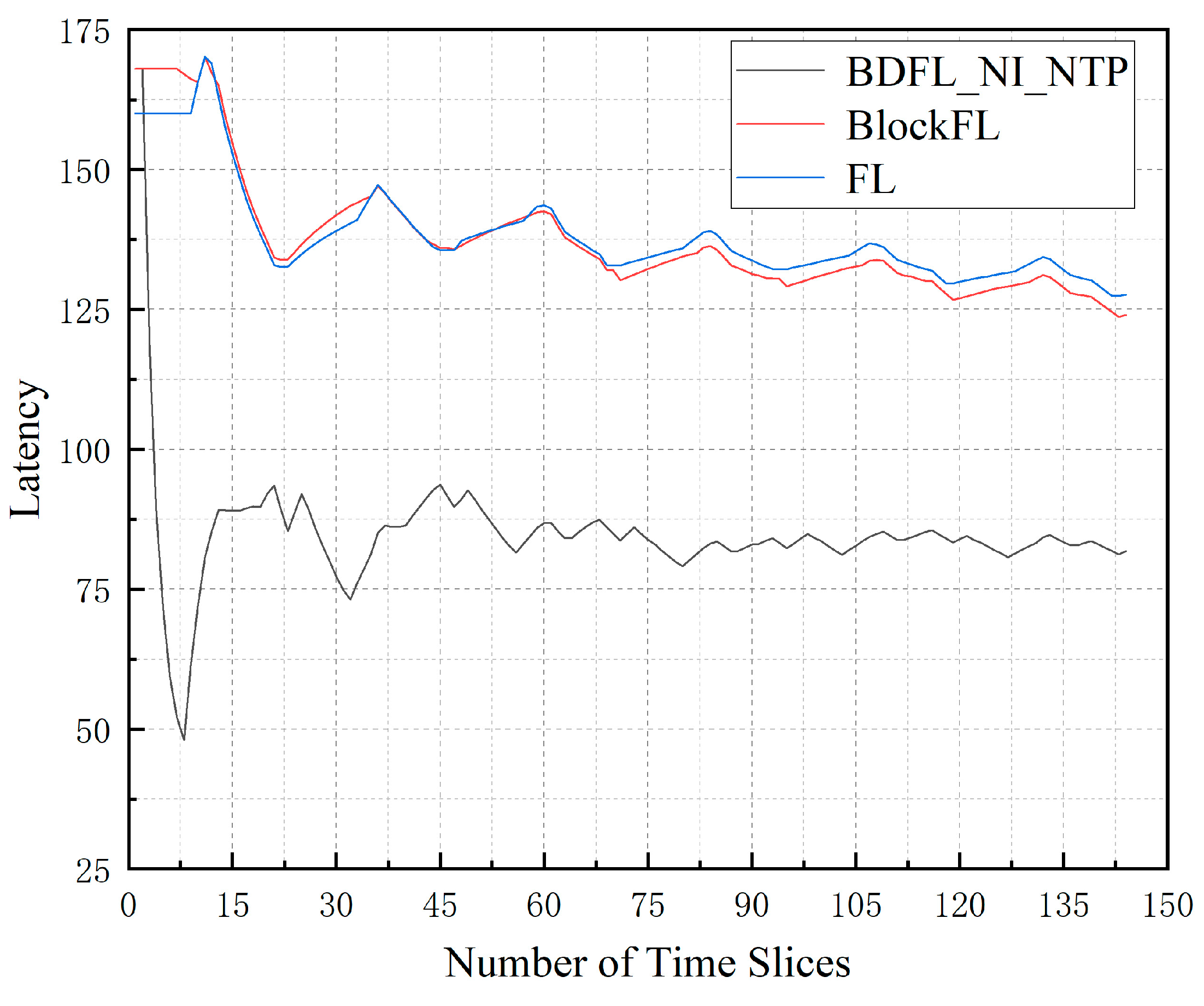

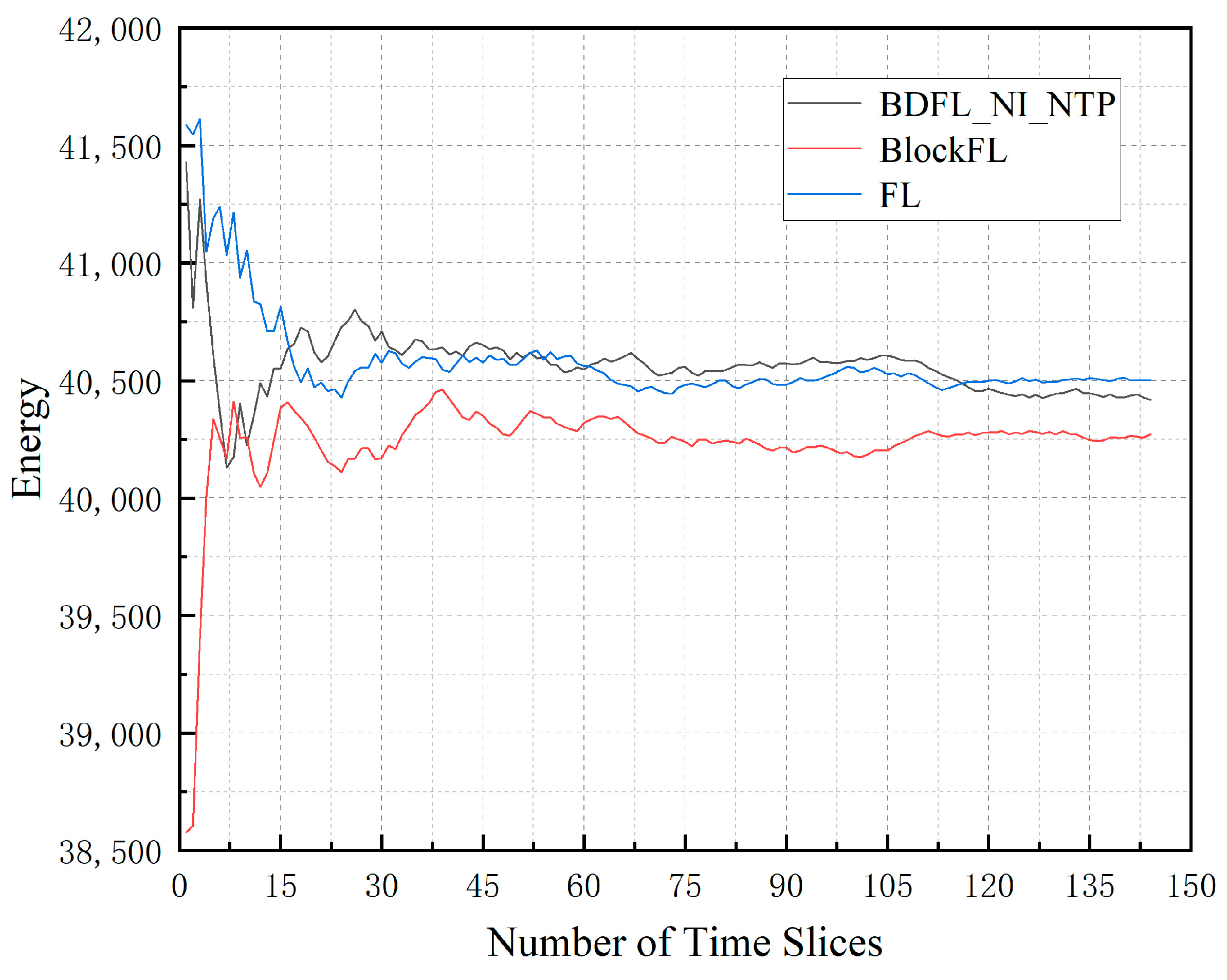

- (1) Baseline 1, FL: This is a network traffic prediction scheme based on a centralized, server-based federated learning (FL) framework without a validation mechanism, where all local model parameters are directly aggregated [23].

- (2) Baseline 2, BlockFL: BlockFL is a decentralized architecture combining blockchain and federated learning, which adopts a committee-based validation mechanism for intermediate parameters. After training, nodes upload updates and trigger aggregation operations [20]. This model does not incorporate a role-switching mechanism.
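To make concrete what "directly aggregated" means for Baseline 1, the following Python sketch averages local parameter vectors with no validation step. It is an illustration under assumptions: the function name `aggregate_directly` is hypothetical, and an unweighted mean is used because the weighting applied in the baseline is not stated here.

```python
def aggregate_directly(local_params):
    # Element-wise unweighted mean of local parameter vectors.
    # No update is checked or filtered before it enters the average.
    if not local_params:
        raise ValueError("no local models to aggregate")
    length = len(local_params[0])
    if any(len(p) != length for p in local_params):
        raise ValueError("parameter vectors must have equal length")
    return [sum(values) / len(local_params) for values in zip(*local_params)]


honest = aggregate_directly([[1.0, 2.0], [3.0, 4.0]])
# A single poisoned update shifts the global model, since nothing filters it out.
poisoned = aggregate_directly([[1.0, 1.0], [1.0, 1.0], [100.0, 100.0]])
```

The second call shows why an aggregation rule without validation is sensitive to malicious nodes: one outlying update moves every averaged parameter from 1.0 to 34.0.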

- (1)

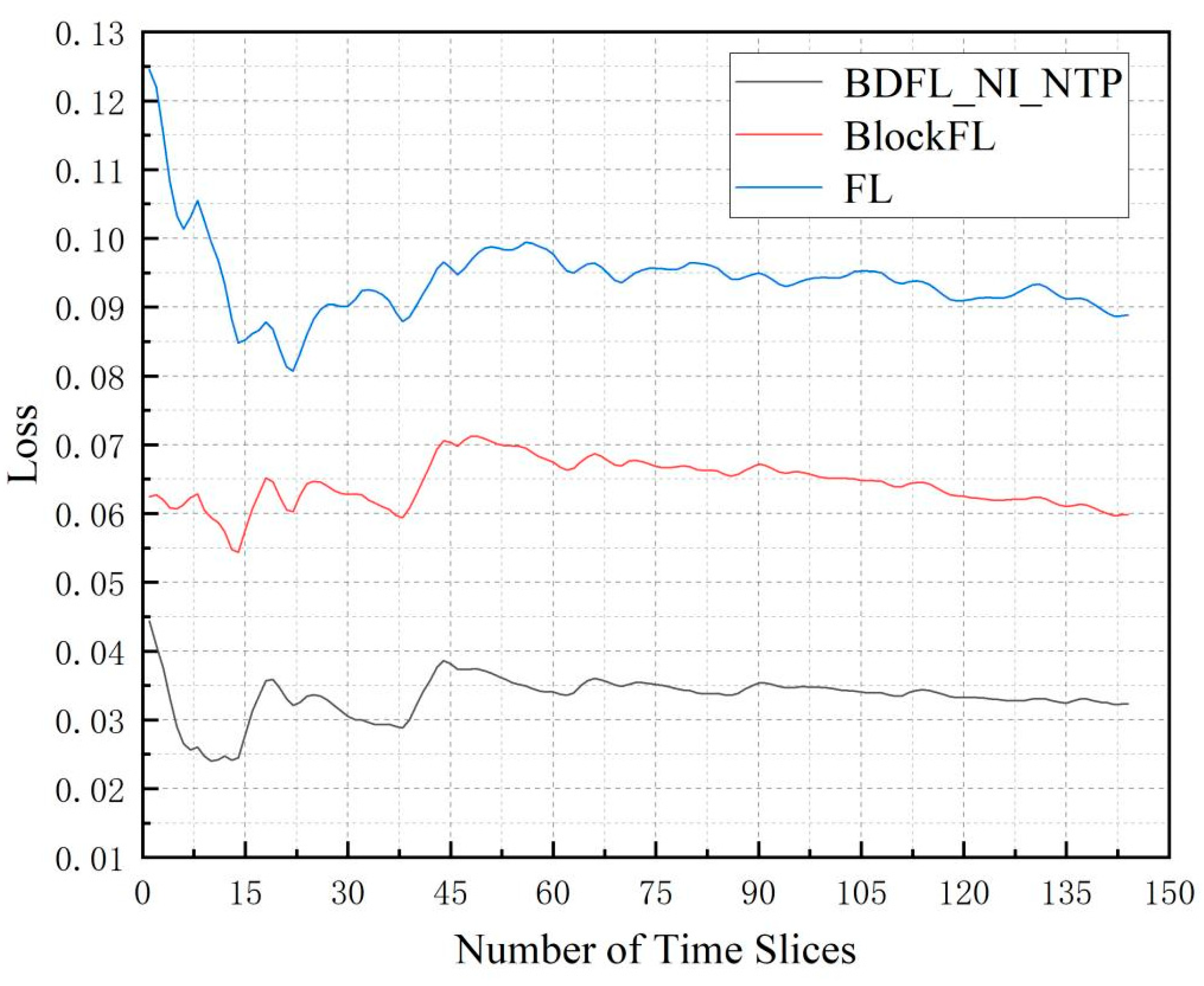

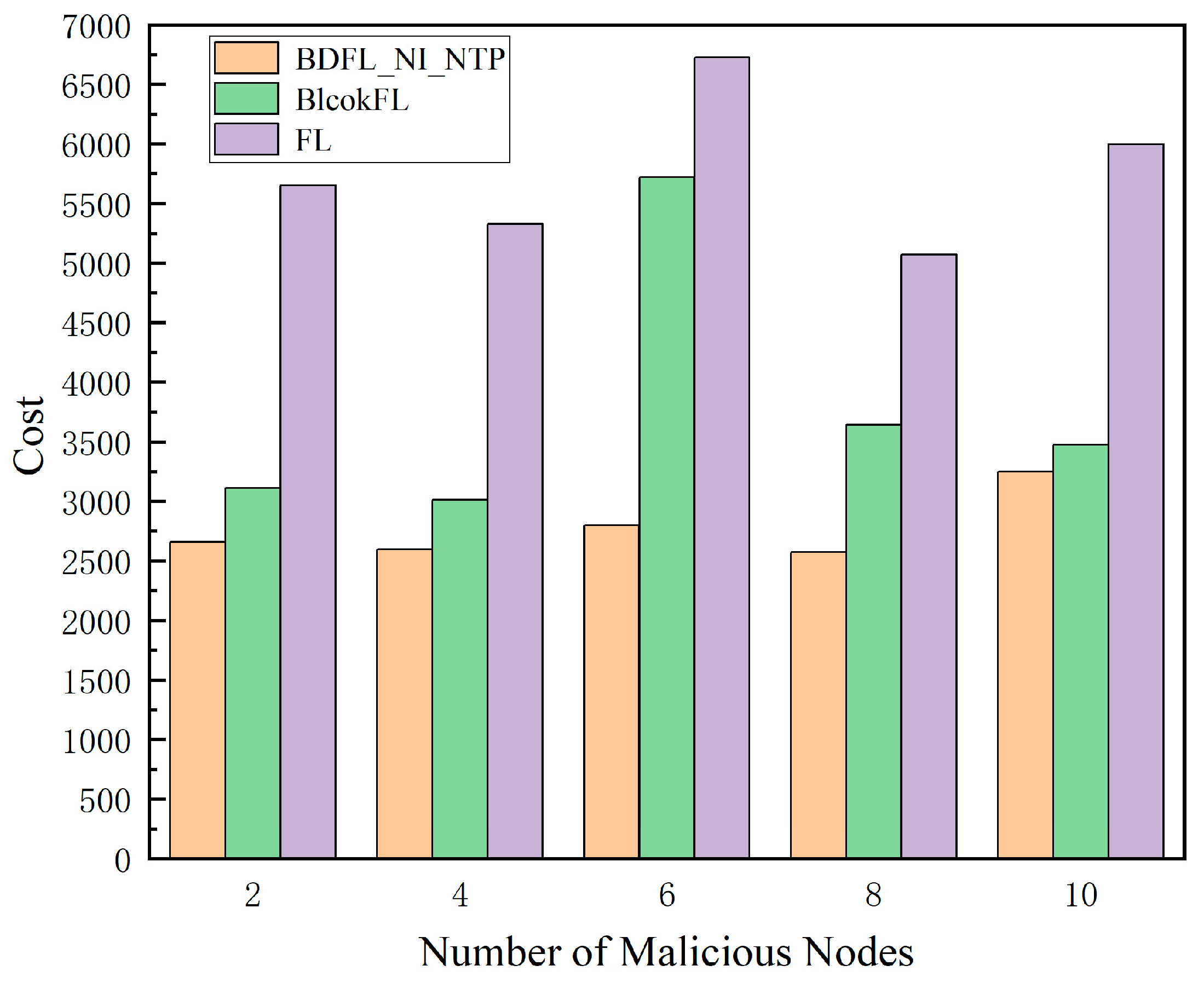

- Decoupling Objectives for Comparative Analysis: FL focuses on privacy preservation but lacks decentralization, while BlockFL introduces blockchain-based trust mechanisms yet remains vulnerable to malicious node attacks. Our method’s core innovation lies in simultaneously achieving decentralization and malicious attack resistance. By comparing with these single-objective solutions, we effectively demonstrate the necessity of multi-objective co-design (as evidenced by experimental results showing 59% and 155% reductions in loss values for FL and BlockFL, respectively, under malicious node attacks).

- (2)

- Scenario and Assumption Differences: Although the approach proposed in reference [14,15,16,17,18,19] (Section 2.2) shares some similarities with ours, the node roles in references [12,14,15,17] remain fixed. Only reference [19] supports adaptive node role changes—albeit based on computationally expensive () role-switching algorithms that scale poorly with edge device networks (training time increases exponentially). In contrast, our role-switching algorithm maintains time complexity, theoretically supporting significantly larger-scale deployments.

5.2. Evaluation Indicators

- (1) Loss: The average MSE loss of the predicted network traffic. It is obtained by averaging the MSE loss between the predicted value and the actual value of the i-th data group over the total number of data points.

- (2) Latency: The average migration latency of the physical network, based on the latency term analyzed in Equation (21).

- (3) Energy: The average energy consumption of the network, which consists of the energy consumption of all servers hosting the accepted SFC requests. The energy term is defined in Equation (23).

- (4) Punish: The resources required by the failed SFC requests. An SFC request fails when it cannot be embedded successfully. The punishment term is defined in Equation (24).

- (5) Cost: A linear combination of the latency, energy, and punish metrics above, calculated by Equation (25).
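The Loss and Cost indicators can be illustrated with a short Python sketch. It is written under assumptions: the loss is averaged over data groups, and the weights `w_latency`, `w_energy`, and `w_punish` are hypothetical placeholders for the coefficients of Equation (25), which is not reproduced in this section.

```python
def mse(predicted, actual):
    # Mean squared error between predicted and actual traffic for one data group.
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("groups must be non-empty and of equal length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(predicted)


def average_loss(groups):
    # Average of the per-group MSE values (assumed reading of the Loss indicator).
    return sum(mse(p, a) for p, a in groups) / len(groups)


def total_cost(latency, energy, punish, w_latency=1.0, w_energy=1.0, w_punish=1.0):
    # Linear combination of the three migration metrics; the weights are
    # illustrative placeholders, not the values used in the paper.
    return w_latency * latency + w_energy * energy + w_punish * punish


groups = [([1.0, 2.0], [1.0, 4.0]), ([0.0], [3.0])]
loss = average_loss(groups)
cost = total_cost(2.0, 3.0, 4.0, w_latency=0.5, w_energy=2.0, w_punish=1.0)
```

With these toy inputs the per-group MSE values are 2.0 and 9.0, so the average loss is 5.5, and the weighted cost is 0.5·2 + 2·3 + 1·4 = 11.0.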

5.3. Performance Evaluation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Liu, Q.; Tang, L.; Wu, T.; Chen, Q. Deep reinforcement learning for resource demand prediction and virtual function network migration in digital twin network. IEEE Internet Things J. 2023, 10, 19102–19116.

- [2] Cai, J.; Zhou, Z.; Huang, Z.; Dai, W.; Yu, F.R. Privacy-preserving deployment mechanism for service function chains across multiple domains. IEEE Trans. Netw. Service Manag. 2024, 21, 1241–1256.

- [3] Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and open problems in federated learning. Found. Trends Mach. Learn. 2021, 14, 1–210.

- [4] Li, Z.; Yu, H.; Zhou, T.; Luo, L.; Fan, M.; Xu, Z.; Sun, G. Byzantine resistant secure blockchained federated learning at the edge. IEEE Netw. 2021, 35, 295–301.

- [5] Li, Q.; Li, X.; Zhou, L.; Yan, X. AdaFL: Adaptive client selection and dynamic contribution evaluation for efficient federated learning. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 6645–6649.

- [6] Hou, Y.; Zhao, L.; Lu, H. Fuzzy neural network optimization and network traffic forecasting based on improved differential evolution. Future Gener. Comput. Syst. 2018, 81, 425–432.

- [7] Ramakrishnan, N.; Soni, T. Network traffic prediction using recurrent neural networks. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 187–193.

- [8] Huang, C.-W.; Chiang, C.-T.; Li, Q. A study of deep learning networks on mobile traffic forecasting. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–6.

- [9] Pan, C.; Zhu, J.; Kong, Z.; Shi, H.; Yang, W. DC-STGCN: Dual-channel based graph convolutional networks for network traffic forecasting. Electronics 2021, 10, 1014.

- [10] Huo, L.; Jiang, D.; Qi, S.; Miao, L. A blockchain-based security traffic measurement approach to software defined networking. Mobile Netw. Appl. 2021, 26, 586–596.

- [11] Qi, Y.; Hossain, M.S.; Nie, J.; Li, X. Privacy-preserving blockchain-based federated learning for traffic flow prediction. Future Gener. Comput. Syst. 2021, 117, 328–337.

- [12] Guo, H.; Meese, C.; Li, W.; Shen, C.C.; Nejad, M. B2SFL: A bi-level blockchained architecture for secure federated learning-based traffic prediction. IEEE Trans. Serv. Comput. 2023, 16, 4360–4374.

- [13] Kurri, V.; Raja, V.; Prakasam, P. Cellular traffic prediction on blockchain-based mobile networks using LSTM model in 4G LTE network. Peer-to-Peer Netw. Appl. 2021, 14, 1088–1105.

- [14] Feng, L.; Zhao, Y.; Guo, S.; Qiu, X.; Li, W.; Yu, P. BAFL: A blockchain-based asynchronous federated learning framework. IEEE Trans. Comput. 2022, 71, 1092–1103.

- [15] Li, J.; Shao, Y.; Wei, K.; Ding, M.; Ma, C.; Shi, L.; Han, Z.; Poor, H.V. Blockchain assisted decentralized federated learning (BLADE-FL): Performance analysis and resource allocation. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 2401–2415.

- [16] Połap, D.; Srivastava, G.; Yu, K. Agent architecture of an intelligent medical system based on federated learning and blockchain technology. J. Inf. Secur. Appl. 2021, 58, 102748.

- [17] Cui, L.; Su, X.; Zhou, Y. A fast blockchain-based federated learning framework with compressed communications. IEEE J. Sel. Areas Commun. 2022, 40, 3358–3372.

- [18] Ren, Y.; Hu, M.; Yang, Z.; Feng, G.; Zhang, X. BPFL: Blockchain-based privacy-preserving federated learning against poisoning attack. Inf. Sci. 2024, 665, 120377.

- [19] Kasyap, H.; Manna, A.; Tripathy, S. An efficient blockchain assisted reputation aware decentralized federated learning framework. IEEE Trans. Netw. Service Manag. 2023, 20, 2771–2782.

- [20] Kim, H.; Park, J.; Bennis, M.; Kim, S.-L. Blockchained on-device federated learning. IEEE Commun. Lett. 2020, 24, 1279–1283.

- [21] Qiao, S.; Jiang, Y.; Han, N.; Hua, W.; Lin, Y.; Min, S.; Wu, X. LBFL: A lightweight blockchain-based federated learning framework with proof-of-contribution committee consensus. IEEE Trans. Big Data 2024.

- [22] Che, C.; Li, X.; Chen, C.; He, X.; Zheng, Z. A decentralized federated learning framework via committee mechanism with convergence guarantee. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 4783–4800.

- [23] Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 12.

| Framework | Malicious Node Detection Mechanism | Reward Form | Incentive Mechanism | Consensus Mechanism | Role Switching Algorithm Complexity | Malicious Node Tolerance | Model Convergence Speed | Dynamic Scalability |
|---|---|---|---|---|---|---|---|---|
| BCFL [17] | None | None | None | PoW | None | Depends on blockchain consensus (PoW/PBFT) | Relatively fast | Fixed roles |
| BLADE-FL [15] | None | None | None | PoW | None | None | Fast convergence even under limited resources | Fixed roles |
| PoIS [19] | Based on deviation between predictions and ground truth | None | None | PoIS (lightweight consensus) | | High | Fast convergence speed | Fewer role types, but supports dynamic switching |
| BAFL [14] | Based on deviation in prediction metric values | Token incentives | Reward adjusted based on magnitude of device metric changes | PoW + Genetic algorithm optimization | None | High | Fast convergence speed | Fixed roles |
| BPFL [18] | Based on model gradient differences | ASSET token incentives | Reward adjusted based on model gradient similarity | Credit-based validator election | None | High | Medium-slow convergence speed (security prioritized) | Fixed roles |
| BlockFL [20] | None | None | None | PoW | None | None | Fast convergence speed | Fixed roles |
| B2SFL [12] | None | None | None | Raft consensus algorithm | None | None | Affected by synchronization mechanisms and latency | Fixed roles |
| BDFL_NI_NTP (this paper) | Dynamic role switching (cross-node comparison of judgment results) | None | Reward adjusted based on similarity between judgment results | Consensus redesigned based on adaptive node role-switching mechanism | | High | Fast convergence speed | Supports dynamic role switching |

| Hyperparameters | Values |
|---|---|
| Learning rate | 0.0001 |
| Dropout factor | 0.2 |
| The number of outer layer cycles | 10 |
| The number of inner layer cycles | 5 |
| in Equation (18) | 20 |
| in Equation (20) | 1 |
| in Equation (22) | 100 |
| in Equation (22) | 500 |
| in Equation (23) | 0.01 |
| in Equation (24) | 50 |
| in Equation (24) | 5 |
| in Equation (24) | 20 |
| in Equation (25) | 0.01 |
| in Equation (25) | 20 |
| in Equation (25) | 1 |
| in Equation (29) | 100 |
| in Equation (30) | 100 |

| 1 | |

| 50% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, Y.; Liu, B.; Li, J.; Jia, L. Decentralized Federated Learning with Node Incentive and Role Switching Mechanism for Network Traffic Prediction in NFV Environment. Symmetry 2025, 17, 970. https://doi.org/10.3390/sym17060970