Symmetry-Aware Superpixel-Enhanced Few-Shot Semantic Segmentation

Abstract

1. Introduction

2. Related Work

2.1. Few-Shot Learning

2.2. Semantic Segmentation

2.3. Few-Shot Semantic Segmentation

3. Task Definition
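
Few-shot segmentation has commonly used an episodic setting since Shaban et al. [37]. The class set is split into disjoint training and test classes. Each episode pairs a support set of K annotated images of one class with a query image of the same class. The sketch below illustrates only that sampling rule. It assumes images are indexed by class in a plain dictionary. `sample_episode` and its arguments are hypothetical names, not part of the authors' code.

```python
import random
from typing import Dict, List, Tuple


def sample_episode(
    class_to_images: Dict[int, List[str]],
    classes: List[int],
    k_shot: int,
    rng: random.Random,
) -> Tuple[int, List[str], str]:
    """Sample one 1-way K-shot episode: (class id, K support ids, query id)."""
    # A class can host an episode only if it has at least K + 1 images.
    eligible = [c for c in classes if len(class_to_images.get(c, [])) > k_shot]
    if not eligible:
        raise ValueError("no class has enough images for this k_shot")
    cls = rng.choice(eligible)
    # Draw K + 1 distinct images: the first K form the support set, the last is the query.
    picked = rng.sample(class_to_images[cls], k_shot + 1)
    return cls, picked[:k_shot], picked[k_shot]


if __name__ == "__main__":
    images = {1: ["a", "b", "c"], 2: ["d", "e"], 3: ["f", "g"]}
    train_classes, test_classes = [1, 2], [3]
    assert not set(train_classes) & set(test_classes)  # training and test classes are disjoint
    print(sample_episode(images, train_classes, k_shot=1, rng=random.Random(0)))
```

Support and query images are drawn together with `random.Random.sample`, so the query can never coincide with a support image.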

4. Methodology

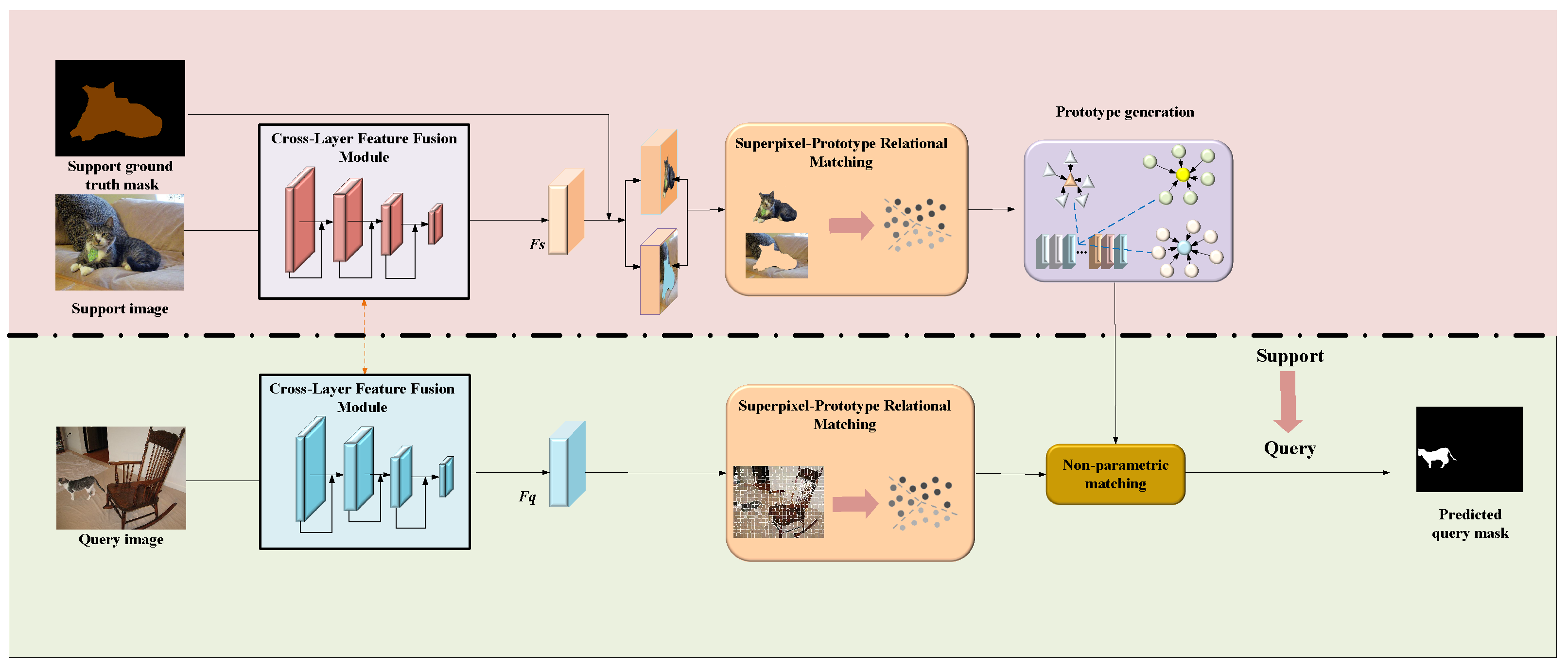

4.1. Overview

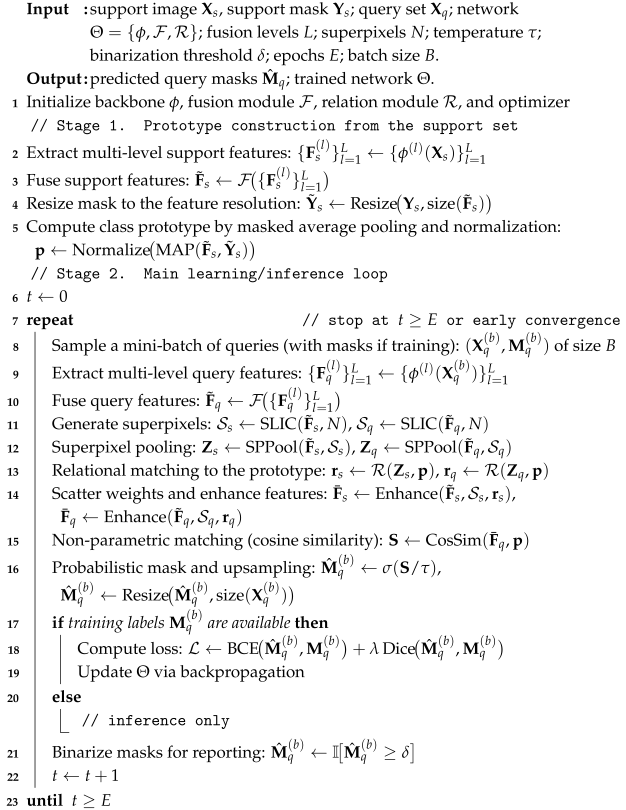

Algorithm 1: Prototype-guided few-shot image segmentation via cross-layer fusion and superpixel-relational matching

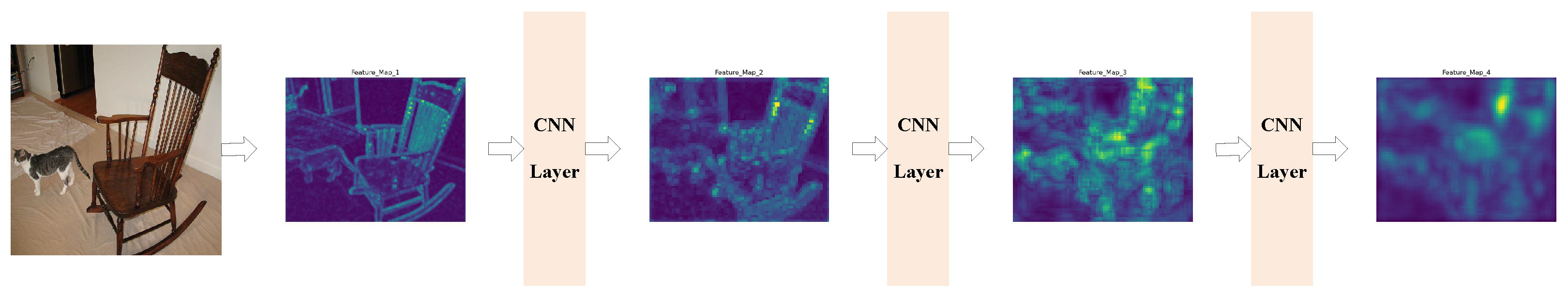

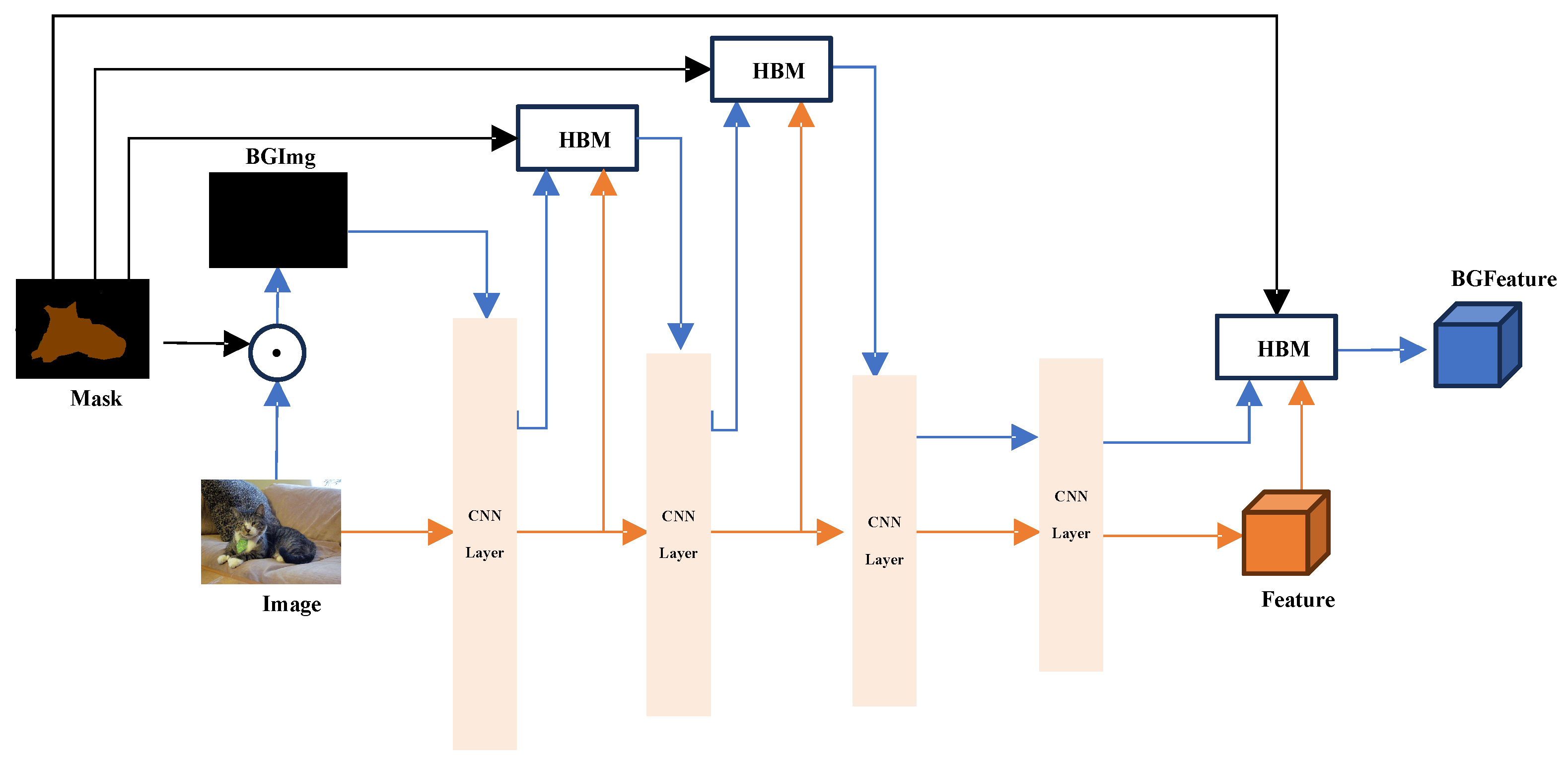

4.2. Cross-Layer Feature Fusion
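
Cross-layer fusion generally combines a mid-level backbone feature map, which keeps spatial detail, with a deeper map that carries more semantic information. The sketch below shows one generic form of this step. It resizes the deeper map to the shallower resolution by nearest-neighbour upsampling and concatenates the two along the channel axis. The helper names, the nearest-neighbour choice, and the channel-first nested-list layout are assumptions for illustration. The SSENet fusion module itself may weight or project the layers differently.

```python
from typing import List

# A feature map is stored channel-first as nested lists: fmap[channel][y][x].
FeatureMap = List[List[List[float]]]


def upsample_nearest(fmap: FeatureMap, factor: int) -> FeatureMap:
    """Nearest-neighbour upsampling of every channel by an integer factor."""
    out = []
    for channel in fmap:
        height, width = len(channel), len(channel[0])
        out.append([
            [channel[y // factor][x // factor] for x in range(width * factor)]
            for y in range(height * factor)
        ])
    return out


def fuse_cross_layer(shallow: FeatureMap, deep: FeatureMap) -> FeatureMap:
    """Upsample `deep` to the spatial size of `shallow`, then concatenate channels."""
    sh, sw = len(shallow[0]), len(shallow[0][0])
    dh, dw = len(deep[0]), len(deep[0][0])
    if sh % dh or sw % dw or sh // dh != sw // dw:
        raise ValueError("shallow size must be the same integer multiple of deep size")
    resized = upsample_nearest(deep, sh // dh)
    # Channel concatenation: shallow channels first, then the resized deep channels.
    return shallow + resized
```

In a trained network the concatenated map would typically pass through a learned convolution to mix and compress channels. That step requires learned weights and is left out here.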

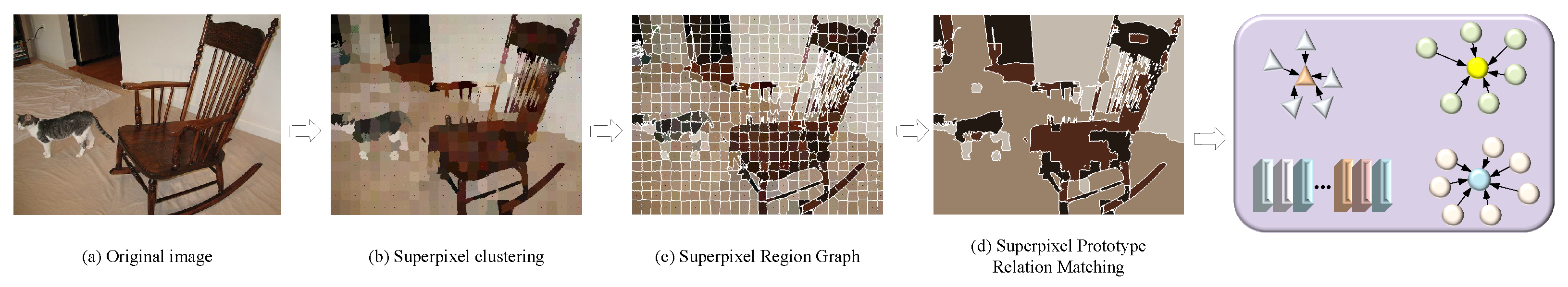

4.3. Superpixel–Prototype Relational Matching
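
Superpixel-based matching generally starts by pooling pixel features inside each superpixel, so that comparisons with a class prototype are made per region instead of per pixel. The sketch below makes these assumptions:

- A superpixel label map is precomputed, for example by an over-segmentation algorithm such as SLIC.
- Features are averaged per superpixel.
- Each superpixel is scored by cosine similarity to a foreground prototype, and the score is copied back to its pixels.

The function names and this scoring rule are illustrative. They are not the paper's exact relational-matching formulation.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector, eps: float = 1e-8) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + eps)


def superpixel_means(features: List[List[Vector]], labels: List[List[int]]) -> Dict[int, Vector]:
    """Average the feature vectors of all pixels that share a superpixel label."""
    sums: Dict[int, Vector] = {}
    counts: Dict[int, int] = {}
    for feat_row, label_row in zip(features, labels):
        for vec, sp in zip(feat_row, label_row):
            if sp not in sums:
                sums[sp] = [0.0] * len(vec)
                counts[sp] = 0
            sums[sp] = [s + v for s, v in zip(sums[sp], vec)]
            counts[sp] += 1
    return {sp: [s / counts[sp] for s in total] for sp, total in sums.items()}


def superpixel_similarity_map(
    features: List[List[Vector]], labels: List[List[int]], prototype: Vector
) -> List[List[float]]:
    """Score each superpixel against the prototype and copy the score to its pixels."""
    means = superpixel_means(features, labels)
    scores = {sp: cosine(vec, prototype) for sp, vec in means.items()}
    return [[scores[sp] for sp in label_row] for label_row in labels]
```

Pooling per superpixel gives every pixel in a region the same score. This is the usual motivation for superpixels: predictions follow region boundaries instead of flickering pixel by pixel.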

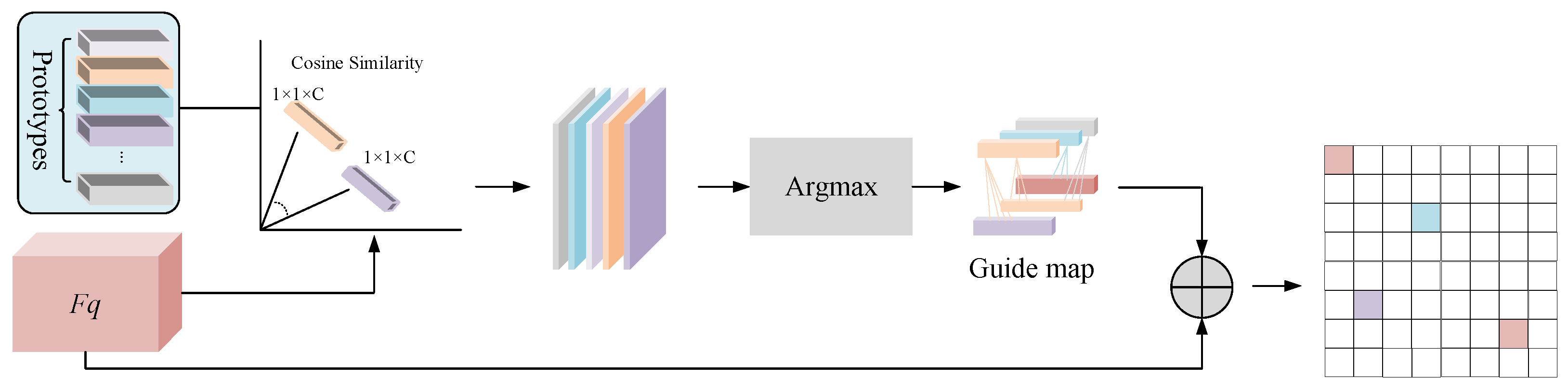

4.4. Non-Parametric Metric Learning
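
The baseline used in the comparison tables, PANet [39], segments the query without a learned decoder. It proceeds in three steps:

- Foreground and background prototypes are built from the support features by masked average pooling.
- Each query pixel is scored by its scaled cosine similarity to each prototype (PANet fixes the scale α at 20).
- A softmax is taken over the two classes.

The sketch below reproduces that non-parametric step on flattened pixel lists. It is an assumption that SSENet keeps exactly this scaling and prototype construction.

```python
import math
from typing import List

Vector = List[float]


def masked_average(features: List[Vector], mask: List[float]) -> Vector:
    """Masked average pooling over flattened pixels: sum(m * f) / sum(m)."""
    total = sum(mask)
    if total == 0:
        raise ValueError("mask selects no pixels")
    dim = len(features[0])
    return [sum(m * f[d] for f, m in zip(features, mask)) / total for d in range(dim)]


def cosine(a: Vector, b: Vector, eps: float = 1e-8) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / (norm + eps)


def segment_query(
    support_feats: List[Vector],
    support_mask: List[float],
    query_feats: List[Vector],
    alpha: float = 20.0,
) -> List[float]:
    """Foreground probability for every query pixel (PANet-style sketch)."""
    fg_proto = masked_average(support_feats, support_mask)
    bg_proto = masked_average(support_feats, [1.0 - m for m in support_mask])
    probs = []
    for q in query_feats:
        s_bg = alpha * cosine(q, bg_proto)
        s_fg = alpha * cosine(q, fg_proto)
        # Two-class softmax over the scaled similarities, shifted by the max for stability.
        top = max(s_bg, s_fg)
        e_bg, e_fg = math.exp(s_bg - top), math.exp(s_fg - top)
        probs.append(e_fg / (e_bg + e_fg))
    return probs
```

With K > 1 shots, PANet averages the per-shot prototypes before matching. That averaging is omitted here to keep the example short.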

5. Experimental Design

5.1. Datasets and Evaluation Protocol
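
This sketch assumes the experiments follow the standard PASCAL-5<sup>i</sup> protocol of Shaban et al. [37], which uses PASCAL VOC [60] images with the additional SBD annotations [61]. Under that protocol, the 20 object classes are divided into four folds of five. A model is trained on the 15 classes outside a fold and evaluated on the 5 held-out classes, and results are usually averaged over the four folds. The code assumes classes are numbered 1 to 20 in the usual VOC order. `pascal5i_split` is a hypothetical helper name.

```python
from typing import List, Tuple

NUM_CLASSES = 20  # PASCAL VOC object classes, numbered 1..20 (background excluded)
NUM_FOLDS = 4


def pascal5i_split(fold: int) -> Tuple[List[int], List[int]]:
    """Return (train_classes, test_classes) for PASCAL-5^i fold `fold` in {0, 1, 2, 3}."""
    if not 0 <= fold < NUM_FOLDS:
        raise ValueError("fold must be 0, 1, 2 or 3")
    per_fold = NUM_CLASSES // NUM_FOLDS  # 5 classes per fold
    test = list(range(fold * per_fold + 1, (fold + 1) * per_fold + 1))
    train = [c for c in range(1, NUM_CLASSES + 1) if c not in test]
    return train, test


if __name__ == "__main__":
    for fold in range(NUM_FOLDS):
        print(fold, pascal5i_split(fold)[1])
```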

5.2. Implementation Details

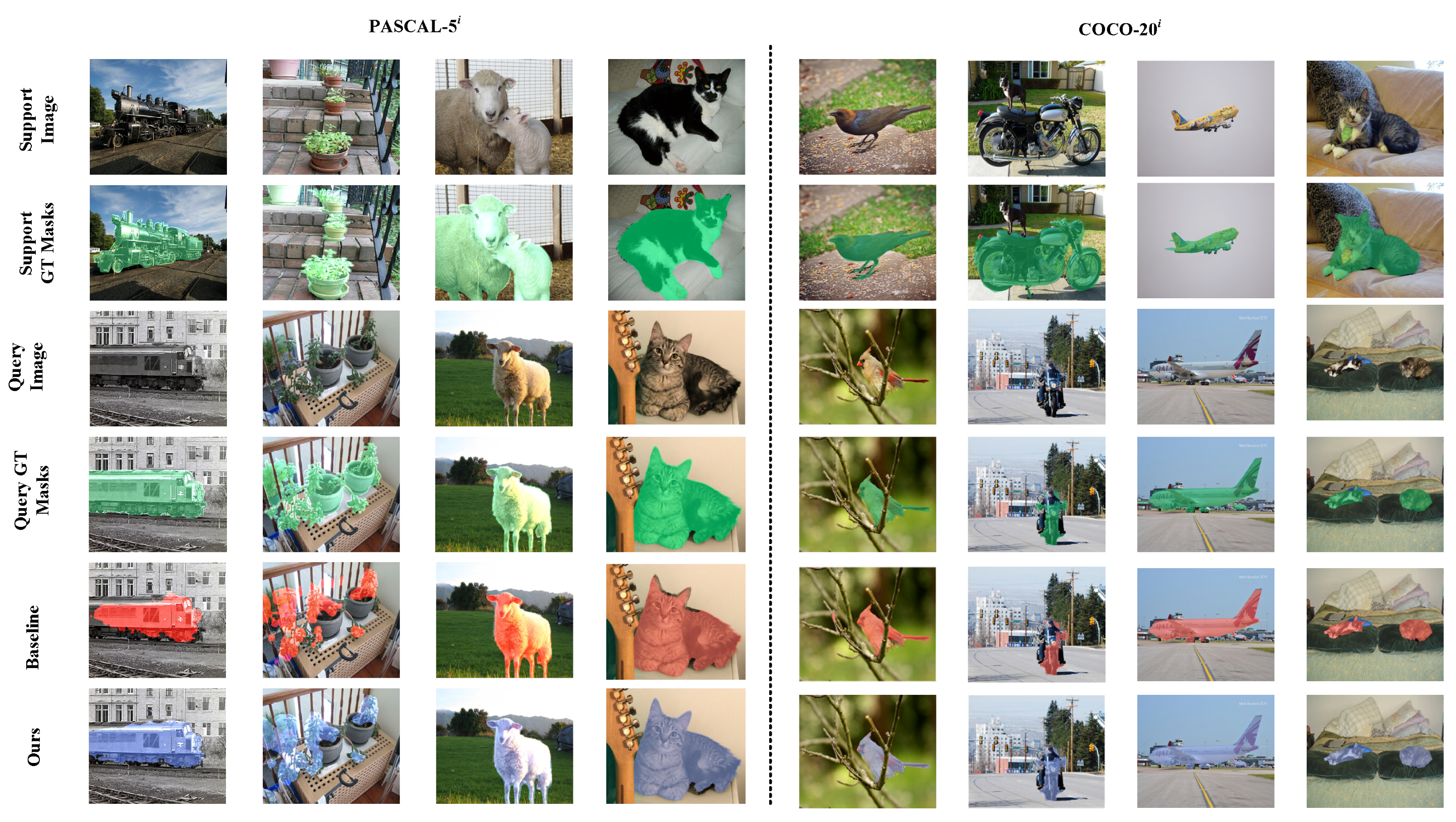

5.3. Experimental Results

5.4. Ablation Studies

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Han, D.; Shi, J.; Zhao, J.; Wu, H.; Zhou, Y.; Li, L.H.; Khan, M.K.; Li, K.C. LRCN: Layer-residual Co-Attention Networks for visual question answering. Expert Syst. Appl. 2025, 263, 125658.

2. Xia, C.; Li, X.; Gao, X.; Ge, B.; Li, K.C.; Fang, X.; Zhang, Y.; Yang, K. PCDR-DFF: Multi-modal 3D object detection based on point cloud diversity representation and dual feature fusion. Neural Comput. Appl. 2024, 36, 9329–9346.

3. He, W.; Zhang, Y.; Zhuo, W.; Shen, L.; Yang, J.; Deng, S.; Sun, L. APSeg: Auto-Prompt Network for Cross-Domain Few-Shot Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 23762–23772.

4. Jin, K.; Du, W.; Tang, M.; Liang, W.; Li, K.; Pathan, A.S.K. LSODNet: A Lightweight and Efficient Detector for Small Object Detection in Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2025, 18, 24816–24828.

5. Shen, W.; Ma, A.; Wang, J.; Zheng, Z.; Zhong, Y. Adaptive Self-Supporting Prototype Learning for Remote Sensing Few-Shot Semantic Segmentation. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 5634116.

6. Johnander, J.; Edstedt, J.; Felsberg, M.; Khan, F.S.; Danelljan, M. Dense Gaussian Processes for Few-Shot Segmentation. In Computer Vision – ECCV 2022, Proceedings of the 17th European Conference on Computer Vision (ECCV), Part XXIX, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cisse, M., Farinella, G., Hassner, T., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2022; Volume 13689, pp. 217–234.

7. Ma, J.; Bai, S.; Pan, W. Boosting Few-Shot Semantic Segmentation with Prior-Driven Edge Feature Enhancement Network. IEEE Trans. Artif. Intell. 2025, 6, 211–220.

8. Zhang, H.; Xu, J.; Jiang, S.; He, Z. Simple Semantic-Aided Few-Shot Learning. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 28588–28597.

9. McCall, A. Few-Shot Learning in Computer Vision: Overcoming Data Scarcity. ResearchGate 2022. Available online: https://www.researchgate.net/publication/390542684_Few-Shot_Learning_in_Computer_Vision_Overcoming_Data_Scarcity (accessed on 8 October 2025).

10. Zhao, J.; Kong, L.; Lv, J. An Overview of Deep Neural Networks for Few-Shot Learning. Big Data Min. Anal. 2025, 8, 145–188.

11. Zhang, J.; Zhao, C.; Ni, B.; Xu, M.; Yang, X. Variational Few-Shot Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

12. Dvornik, N.; Schmid, C.; Mairal, J. Diversity with Cooperation: Ensemble Methods for Few-Shot Classification. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV 2019), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3722–3730.

13. Schwartz, E.; Karlinsky, L.; Shtok, J.; Harary, S.; Marder, M.; Kumar, A.; Feris, R.; Giryes, R.; Bronstein, A. Delta-encoder: An effective sample synthesis method for few-shot object recognition. In Advances in Neural Information Processing Systems; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31.

14. Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-shot Learning. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

15. Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.S.; Hospedales, T.M. Learning to Compare: Relation Network for Few-Shot Learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

16. Guo, Y.; Codella, N.C.; Karlinsky, L.; Codella, J.V.; Smith, J.R.; Saenko, K.; Rosing, T.; Feris, R. A Broader Study of Cross-Domain Few-Shot Learning. In Computer Vision – ECCV 2020, Proceedings of the 16th European Conference on Computer Vision (ECCV), Part XXVII, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12372, pp. 124–141.

17. Wang, Y.; Lee, D.; Heo, J.; Park, J. One-Shot Summary Prototypical Network Toward Accurate Unpaved Road Semantic Segmentation. IEEE Signal Process. Lett. 2021, 28, 1200–1204.

18. Zhang, X.; Wei, Y.; Yang, Y.; Huang, T.S. SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation. IEEE Trans. Cybern. 2020, 50, 3855–3865.

19. Tian, P.; Wu, Z.; Qi, L.; Wang, L.; Shi, Y.; Gao, Y. Differentiable meta-learning model for few-shot semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12087–12094.

20. Askari, F.; Fateh, A.; Mohammadi, M.R. Enhancing few-shot image classification through learnable multi-scale embedding and attention mechanisms. Neural Netw. 2025, 187, 107339.

21. Ren, G.; Liu, J.; Wang, M.; Guan, P.; Cao, Z.; Yu, J. Few-Shot Object Detection via Dual-Domain Feature Fusion and Patch-Level Attention. Tsinghua Sci. Technol. 2025, 30, 1237–1250.

22. Liu, T.; Sun, F. Self-Aligning Multi-Modal Transformer for Oropharyngeal Swab Point Localization. Tsinghua Sci. Technol. 2024, 29, 1082–1091.

23. Paeedeh, N.; Pratama, M.; Ma’sum, M.A.; Mayer, W.; Cao, Z.; Kowalczyk, R. Cross-domain few-shot learning via adaptive transformer networks. Knowl.-Based Syst. 2024, 288, 111458.

24. Liu, Y.; Sun, Y.; Chen, Z.; Feng, C.; Zhu, K. Global Spatial-Temporal Information Encoder-Decoder Based Action Segmentation in Untrimmed Video. Tsinghua Sci. Technol. 2025, 30, 290–302.

25. Zhang, J.; Chen, X.; Yang, B.; Guan, Q.; Chen, Q.; Chen, J.; Wu, Q.; Xie, Y.; Xia, Y. Advances in attention mechanisms for medical image segmentation. Comput. Sci. Rev. 2025, 56, 100721.

26. Zhi, P.; Jiang, L.; Yang, X.; Wang, X.; Li, H.W.; Zhou, Q.; Li, K.C.; Ivanović, M. Cross-Domain Generalization for LiDAR-Based 3D Object Detection in Infrastructure and Vehicle Environments. Sensors 2025, 25, 767.

27. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Part III, Munich, Germany, 5–9 October 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

28. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

29. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

30. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

31. He, J.; Deng, Z.; Zhou, L.; Wang, Y.; Qiao, Y. Adaptive Pyramid Context Network for Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

32. Choi, S.; Kim, J.T.; Choo, J. Cars Can’t Fly Up in the Sky: Improving Urban-Scene Segmentation via Height-Driven Attention Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

33. Elhassan, M.A.; Huang, C.; Yang, C.; Munea, T.L. DSANet: Dilated spatial attention for real-time semantic segmentation in urban street scenes. Expert Syst. Appl. 2021, 183, 115090.

34. Guo, L.; Li, X.; Wang, J.; Xiao, J.; Hou, Y.; Zhi, P.; Yong, B.; Li, L.; Zhou, Q.; Li, K. EdgeVidCap: A Channel-Spatial Dual-Branch Lightweight Video Captioning Model for IoT Edge Cameras. Sensors 2025, 25, 4897.

35. Catalano, N.; Matteucci, M. Few Shot Semantic Segmentation: A review of methodologies, benchmarks, and open challenges. arXiv 2024, arXiv:2304.05832.

36. Tang, S.; Yan, S.; Qi, X.; Gao, J.; Ye, M.; Zhang, J.; Zhu, X. Few-shot medical image segmentation with high-fidelity prototypes. Med. Image Anal. 2025, 100, 103412.

37. Shaban, A.; Bansal, S.; Liu, Z.; Essa, I.; Boots, B. One-Shot Learning for Semantic Segmentation. arXiv 2017, arXiv:1709.03410.

38. Li, A.; Luo, T.; Xiang, T.; Huang, W.; Wang, L. Few-Shot Learning with Global Class Representations. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

39. Wang, K.; Liew, J.H.; Zou, Y.; Zhou, D.; Feng, J. PANet: Few-Shot Image Semantic Segmentation with Prototype Alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

40. Luo, X.; Wu, H.; Zhang, J.; Gao, L.; Xu, J.; Song, J. A Closer Look at Few-shot Classification Again. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; Krause, A., Brunskill, E., Cho, K., Engelhardt, B., Sabato, S., Scarlett, J., Eds.; Proceedings of Machine Learning Research; PMLR: Cambridge, MA, USA, 2023; Volume 202, pp. 23103–23123.

41. Liu, Y.; Zhu, Y.; Chong, H.; Yu, M. Few-shot image semantic segmentation based on meta-learning: A review. J. Intell. Fuzzy Syst. 2024, 47, 351–367.

42. Dong, N.; Xing, E.P. Few-shot semantic segmentation with prototype learning. In Proceedings of the BMVC, Newcastle, UK, 3–6 September 2018; Volume 3, p. 4.

43. Zhang, C.; Lin, G.; Liu, F.; Yao, R.; Shen, C. CANet: Class-Agnostic Segmentation Networks with Iterative Refinement and Attentive Few-Shot Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

44. Rakelly, K.; Shelhamer, E.; Darrell, T. Conditional Networks for Few-Shot Semantic Segmentation. ICLR Workshop 2018. Available online: https://openreview.net/forum?id=SkMjFKJwG (accessed on 8 October 2025).

45. Siam, M.; Oreshkin, B.N.; Jagersand, M. AMP: Adaptive Masked Proxies for Few-Shot Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

46. He, Z.; Li, L.; Wang, H. Symmetry-Guided Dual-Branch Network with Adaptive Feature Fusion and Edge-Aware Attention for Image Tampering Localization. Symmetry 2025, 17, 1150.

47. Pambala, A.K.; Dutta, T.; Biswas, S. SML: Semantic meta-learning for few-shot semantic segmentation. Pattern Recognit. Lett. 2021, 147, 93–99.

48. Zhang, B.; Xiao, J.; Qin, T. Self-Guided and Cross-Guided Learning for Few-Shot Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 8312–8321.

49. Yang, B.; Liu, C.; Li, B.; Jiao, J.; Ye, Q. Prototype Mixture Models for Few-Shot Semantic Segmentation. In Computer Vision – ECCV 2020, Proceedings of the 16th European Conference on Computer Vision (ECCV), Part VIII, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12353, pp. 763–778.

50. Lu, Z.; He, S.; Zhu, X.; Zhang, L.; Song, Y.Z.; Xiang, T. Simpler Is Better: Few-Shot Semantic Segmentation with Classifier Weight Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 8741–8750.

51. Li, G.; Jampani, V.; Sevilla-Lara, L.; Sun, D.; Kim, J.; Kim, J. Adaptive Prototype Learning and Allocation for Few-Shot Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 8334–8343.

52. Liu, Y.; Zhang, X.; Zhang, S.; He, X. Part-Aware Prototype Network for Few-Shot Semantic Segmentation. In Computer Vision – ECCV 2020, Proceedings of the 16th European Conference on Computer Vision (ECCV), Part IX, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12354, pp. 142–158.

53. Li, W.; Xu, J.; Huo, J.; Wang, L.; Gao, Y.; Luo, J. Distribution consistency based covariance metric networks for few-shot learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8642–8649.

54. Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 7262–7272.

55. Chen, H.; Li, H.; Li, Y.; Chen, C. Sparse spatial transformers for few-shot learning. Sci. China Inf. Sci. 2023, 66, 210102.

56. Dos Santos, M.E.; Guimarães, S.J.F.; Patrocínio, Z.K.G. Cross-Attention Vision Transformer for Few-Shot Semantic Segmentation. In Proceedings of the 2023 IEEE Ninth Multimedia Big Data (BigMM), Laguna Hills, CA, USA, 11–13 December 2023; pp. 64–71.

57. Fateh, A.; Mohammadi, M.R.; Jahed-Motlagh, M.R. MSDNet: Multi-scale decoder for few-shot semantic segmentation via transformer-guided prototyping. Image Vis. Comput. 2025, 162, 105672.

58. Shao, J.; Gong, B.; Dai, K.; Li, D.; Jing, L.; Chen, Y. Query-support semantic correlation mining for few-shot segmentation. Eng. Appl. Artif. Intell. 2023, 126, 106797.

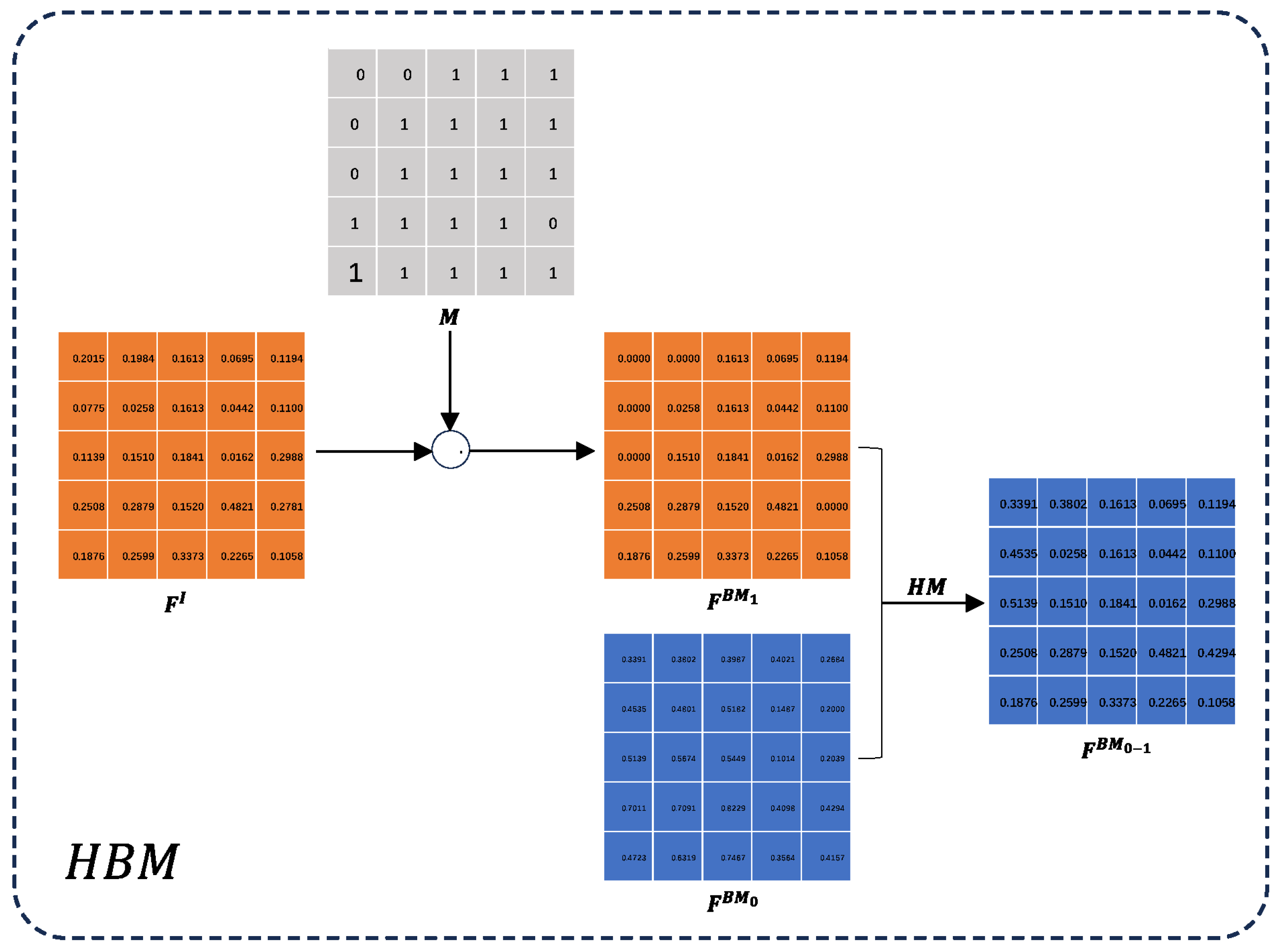

59. Moon, S.; Sohn, S.S.; Zhou, H.; Yoon, S.; Pavlovic, V.; Khan, M.H.; Kapadia, M. HM: Hybrid Masking for Few-Shot Segmentation. In Proceedings of the Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 506–523.

60. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

61. Hariharan, B.; Arbeláez, P.; Bourdev, L.; Maji, S.; Malik, J. Semantic contours from inverse detectors. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 991–998.

62. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision – ECCV 2014, Proceedings of the 13th European Conference on Computer Vision (ECCV), Part V, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

63. Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. Mining Latent Classes for Few-Shot Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021; pp. 8721–8730.

64. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

65. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

66. Chang, Z.; Lu, Y.; Ran, X.; Gao, X.; Zhao, H. Simple yet effective joint guidance learning for few-shot semantic segmentation. Appl. Intell. 2023, 53, 26603–26621.

67. Gao, G.; Fang, Z.; Han, C.; Wei, Y.; Liu, C.H.; Yan, S. DRNet: Double Recalibration Network for Few-Shot Semantic Segmentation. IEEE Trans. Image Process. 2022, 31, 6733–6746.

68. Tian, Z.; Zhao, H.; Shu, M.; Yang, Z.; Li, R.; Jia, J. Prior Guided Feature Enrichment Network for Few-Shot Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1050–1065.

69. Chang, Z.; Lu, Y.; Wang, X.; Ran, X. MGNet: Mutual-guidance network for few-shot semantic segmentation. Eng. Appl. Artif. Intell. 2022, 116, 105431.

70. Chen, Y.; Chen, S.; Yang, Z.X.; Wu, E. Learning self-target knowledge for few-shot segmentation. Pattern Recognit. 2024, 149, 110266.

71. Lang, C.; Cheng, G.; Tu, B.; Han, J. Few-Shot Segmentation via Divide-and-Conquer Proxies. Int. J. Comput. Vis. 2024, 132, 261–283.

72. Wang, H.; Yang, Y.; Jiang, X.; Cao, X.; Zhen, X. You only need the image: Unsupervised few-shot semantic segmentation with co-guidance network. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Virtual, 25–28 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1496–1500.

73. Hu, T.; Yang, P.; Zhang, C.; Yu, G.; Mu, Y.; Snoek, C.G. Attention-based multi-context guiding for few-shot semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8441–8448.

74. Nguyen, K.; Todorovic, S. Feature Weighting and Boosting for Few-Shot Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 622–631.

75. Wang, H.; Zhang, X.; Hu, Y.; Yang, Y.; Cao, X.; Zhen, X. Few-Shot Semantic Segmentation with Democratic Attention Networks. In Computer Vision – ECCV 2020, Proceedings of the 16th European Conference on Computer Vision (ECCV), Part XIII, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12358, pp. 730–746.

76. Wang, H.; Yang, Y.; Cao, X.; Zhen, X.; Snoek, C.; Shao, L. Variational Prototype Inference for Few-Shot Semantic Segmentation. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021; pp. 525–534.

77. Zhang, L.; Zhang, X.; Wang, Q.; Wu, W.; Chang, X.; Liu, J. RPMG-FSS: Robust Prior Mask Guided Few-Shot Semantic Segmentation. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6609–6621.

78. Hu, Y.; Huang, X.; Luo, X.; Han, J.; Cao, X.; Zhang, J. Learning Foreground Information Bottleneck for few-shot semantic segmentation. Pattern Recognit. 2024, 146, 109993.

79. Xie, G.S.; Liu, J.; Xiong, H.; Shao, L. Scale-Aware Graph Neural Network for Few-Shot Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 5475–5484.

80. Liu, B.; Ding, Y.; Jiao, J.; Ji, X.; Ye, Q. Anti-Aliasing Semantic Reconstruction for Few-Shot Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 9747–9756.

81. Fan, Q.; Pei, W.; Tai, Y.W.; Tang, C.K. Self-support Few-Shot Semantic Segmentation. In Computer Vision – ECCV 2022, Proceedings of the 17th European Conference on Computer Vision (ECCV), Part XIX, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cisse, M., Farinella, G., Hassner, T., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2022; Volume 13679, pp. 701–719.

82. Guan, H.; Spratling, M. Query semantic reconstruction for background in few-shot segmentation. Vis. Comput. 2024, 40, 799–810.

83. Liu, Y.; Liu, N.; Cao, Q.; Yao, X.; Han, J.; Shao, L. Learning Non-Target Knowledge for Few-Shot Semantic Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11573–11582.

| Methods | Backbone | 1-Shot mIoU | 1-Shot FB-IoU | 5-Shot mIoU | 5-Shot FB-IoU |
|---|---|---|---|---|---|
| OSLSM [37] | VGG-16 | 40.8 | 61.3 | 44.0 | 61.5 |
| co-FCN [44] | VGG-16 | 41.1 | 60.1 | 41.4 | 60.2 |
| PL [42] | VGG-16 | 42.7 | 61.2 | 43.7 | 62.3 |
| AMP [45] | VGG-16 | 43.4 | 62.2 | 46.9 | 63.8 |
| PANet [39] | VGG-16 | 48.1 | 66.5 | 55.7 | 68.4 |
| SG-One [18] | VGG-16 | 46.3 | 63.1 | 47.1 | 65.9 |
| JGLNet [66] | VGG-16 | 49.3 | 68.3 | 55.6 | 70.6 |
| DRNet [67] | VGG-16 | 52.4 | 67.5 | 55.2 | 70.0 |
| PFENet [68] | VGG-16 | 58.0 | – | 59.0 | – |
| MGNet [69] | VGG-16 | 43.9 | 67.8 | 50.3 | 50.3 |
| LSTNet [70] | VGG-16 | 58.5 | – | 60.4 | – |
| DCP [71] | VGG-16 | 62.6 | 75.6 | 67.8 | 80.6 |
| SSENet (Ours) | VGG-16 | 65.4 | 77.2 | 68.3 | 81.3 |
| PANet* [39] | ResNet-50 | 48.7 | 66.9 | 55.6 | 71.4 |
| CGNet [72] | ResNet-50 | 47.6 | 64.1 | 49.5 | 66.2 |
| PPNet [52] | ResNet-50 | 52.9 | – | 63.0 | – |
| SML [47] | ResNet-50 | 51.3 | 67.1 | 60.0 | 72.2 |
| PFENet [68] | ResNet-50 | 60.8 | 73.3 | 61.9 | 73.9 |
| ASGNet [51] | ResNet-50 | 59.3 | 69.2 | 63.9 | 74.2 |
| DRNet [67] | ResNet-50 | 58.6 | 71.4 | 61.7 | 73.7 |
| DGPNet [6] | ResNet-50 | 63.2 | – | 73.1 | – |
| MSDNet [57] | ResNet-50 | 64.3 | 77.1 | 68.7 | 82.1 |
| DCP [71] | ResNet-50 | 66.1 | 77.6 | 70.3 | 78.5 |
| SSENet (Ours) | ResNet-50 | 67.4 | 78.9 | 71.0 | 81.3 |
| PANet* [39] | ResNet-101 | 51.2 | 70.3 | 57.5 | 72.0 |
| A-MCG [73] | ResNet-101 | – | 61.2 | – | 62.2 |
| PPNet [52] | ResNet-101 | 55.2 | 70.9 | 65.1 | 77.5 |
| FWB [74] | ResNet-101 | 56.2 | – | 59.9 | – |
| DAN [75] | ResNet-101 | 58.2 | 71.9 | 60.5 | 72.3 |
| VPI [76] | ResNet-101 | 57.3 | – | 60.4 | – |
| ASGNet [51] | ResNet-101 | 59.3 | 71.7 | 64.4 | 75.2 |
| LSTNet [70] | ResNet-101 | 61.8 | – | 64.2 | – |
| RPMG [77] | ResNet-101 | 62.6 | – | 65.7 | – |
| PFENet+ [78] | ResNet-101 | 62.6 | 75.1 | 64.0 | 76.6 |
| MSDNet [57] | ResNet-101 | 64.7 | 77.3 | 70.8 | 85.0 |
| DCP [71] | ResNet-101 | 67.3 | 78.5 | 71.5 | 82.7 |
| SSENet (Ours) | ResNet-101 | 68.2 | 78.3 | 72.5 | 81.6 |
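
The mIoU and FB-IoU columns are assumed to follow the convention common in few-shot segmentation work:

- mIoU accumulates foreground intersections and unions separately for each test class over all of that class's episodes, then averages the per-class IoUs.
- FB-IoU ignores class identity and averages the IoU of the foreground and of the background over all episodes.

The sketch below takes binary predictions and ground truth per episode as flat 0/1 lists. `fss_metrics` is a hypothetical name, and the exact averaging used by the authors may differ.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One episode: (test class id, predicted mask, ground-truth mask); masks are flat 0/1 lists.
Episode = Tuple[int, List[int], List[int]]


def fss_metrics(episodes: Iterable[Episode]) -> Tuple[float, float]:
    """Return (mIoU, FB-IoU) as fractions in [0, 1]."""
    inter: Dict[int, int] = defaultdict(int)  # foreground intersection per class
    union: Dict[int, int] = defaultdict(int)  # foreground union per class
    fb_inter = [0, 0]  # class-agnostic intersection for background (0) and foreground (1)
    fb_union = [0, 0]
    for cls, pred, gt in episodes:
        for p, g in zip(pred, gt):
            inter[cls] += int(p == 1 and g == 1)
            union[cls] += int(p == 1 or g == 1)
            for label in (0, 1):
                fb_inter[label] += int(p == label and g == label)
                fb_union[label] += int(p == label or g == label)
    class_ious = [inter[c] / union[c] for c in union if union[c] > 0]
    if not class_ious or 0 in fb_union:
        raise ValueError("episodes must contain both foreground and background pixels")
    miou = sum(class_ious) / len(class_ious)
    fb_iou = (fb_inter[0] / fb_union[0] + fb_inter[1] / fb_union[1]) / 2
    return miou, fb_iou
```

The tables report these fractions multiplied by 100.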

| Methods | Task | mIoU (VGG-16) | mIoU (ResNet-50) | mIoU (ResNet-101) | FB-IoU (VGG-16) | FB-IoU (ResNet-50) | FB-IoU (ResNet-101) |
|---|---|---|---|---|---|---|---|
| PANet (Baseline) | 1-shot | 45.1 | 45.3 | 49.8 | 64.2 | 64.4 | 68.6 |
| SSENet (Ours) | 1-shot | 62.7 | 63.2 | 67.9 | 73.8 | 74.1 | 77.5 |
| PANet (Baseline) | 5-shot | 48.2 | 48.8 | 54.4 | 67.4 | 68.6 | 73.1 |
| SSENet (Ours) | 5-shot | 59.4 | 60.2 | 65.3 | 78.2 | 79.5 | 80.9 |

| Methods | Backbone | 1-Shot mIoU | 1-Shot FB-IoU | 5-Shot mIoU | 5-Shot FB-IoU |
|---|---|---|---|---|---|
| PANet [39] | VGG-16 | 20.9 | 59.2 | 29.7 | 63.5 |
| DRNet [67] | VGG-16 | 18.5 | 58.3 | 25.2 | 62.6 |
| MGNet [69] | VGG-16 | 27.8 | 61.1 | 35.6 | 63.8 |
| JGLNet [66] | VGG-16 | 25.3 | 61.8 | 34.7 | 63.6 |
| LSTNet [70] | VGG-16 | 35.8 | – | 37.5 | – |
| PFENet [68] | VGG-16 | 34.1 | 60.0 | 37.7 | 61.6 |
| SML [47] | VGG-16 | 22.6 | 59.3 | – | – |
| SAGNN [79] | VGG-16 | 37.3 | 61.2 | 40.7 | 63.1 |
| SSENet (Ours) | VGG-16 | 43.1 | 65.8 | 45.6 | 66.8 |
| RPMM [49] | ResNet-50 | 30.6 | 60.4 | 42.5 | 67.0 |
| PANet* [39] | ResNet-50 | 23.6 | 63.0 | 34.2 | 64.1 |
| PPNet [52] | ResNet-50 | 29.0 | – | 38.5 | – |
| SML [47] | ResNet-50 | 23.3 | 59.5 | – | – |
| ASR [80] | ResNet-50 | 33.8 | – | 36.7 | – |
| MLC [63] | ResNet-50 | 33.9 | – | 40.6 | – |
| ASGNet [51] | ResNet-50 | 34.6 | 60.4 | 42.5 | 67.1 |
| CWT [50] | ResNet-50 | 32.9 | – | 41.3 | – |
| DRNet [67] | ResNet-50 | 23.3 | 61.4 | 32.2 | 64.8 |
| SSP [81] | ResNet-50 | 33.6 | – | 41.3 | – |
| QSCMNet [58] | ResNet-50 | 36.4 | 60.7 | 42.8 | 64.8 |
| LSTNet [70] | ResNet-50 | 36.6 | – | 38.0 | – |
| PFENet + QSR [82] | ResNet-50 | 35.1 | – | 38.2 | – |
| DCP [71] | ResNet-50 | 45.5 | – | 50.9 | – |
| SSENet (Ours) | ResNet-50 | 47.0 | 70.1 | 53.8 | 73.9 |
| FWB [74] | ResNet-101 | 21.2 | – | 23.7 | – |
| A-MCG [73] | ResNet-101 | – | 52.0 | – | 64.7 |
| PANet* [39] | ResNet-101 | 35.1 | 63.7 | 41.4 | 66.5 |
| PMMs [49] | ResNet-101 | 29.6 | – | 34.3 | – |
| DAN [75] | ResNet-101 | 24.4 | 62.3 | 29.6 | 63.9 |
| PFENet [68] | ResNet-101 | 38.5 | 63.0 | 42.7 | 65.8 |
| VPI [76] | ResNet-101 | 23.4 | – | 27.8 | – |
| SAGNN [79] | ResNet-101 | 37.2 | 60.9 | 42.7 | 63.4 |
| CWT [50] | ResNet-101 | 32.4 | – | 42.0 | – |
| NTRENet [83] | ResNet-101 | 39.1 | 67.5 | 43.2 | 69.6 |
| PFENet+ [78] | ResNet-101 | 38.2 | 61.8 | 39.9 | 63.4 |
| LSTNet [70] | ResNet-101 | 38.2 | – | 38.2 | – |
| PFENet + QSR [82] | ResNet-101 | 36.9 | – | 41.2 | – |
| DCP [71] | ResNet-101 | 44.6 | – | 49.4 | – |
| SSENet (Ours) | ResNet-101 | 47.2 | 68.9 | 53.6 | 72.4 |

| Methods | Task | mIoU (VGG-16) | mIoU (ResNet-50) | mIoU (ResNet-101) | FB-IoU (VGG-16) | FB-IoU (ResNet-50) | FB-IoU (ResNet-101) |
|---|---|---|---|---|---|---|---|
| PANet (Baseline) | 1-shot | 20.5 | 22.3 | 34.2 | 58.7 | 59.6 | 63.4 |
| SSENet (Ours) | 1-shot | 39.7 | 41.0 | 45.8 | 64.3 | 69.2 | 64.9 |
| PANet (Baseline) | 5-shot | 32.7 | 32.5 | 40.1 | 61.2 | 62.2 | 65.8 |
| SSENet (Ours) | 5-shot | 48.2 | 46.9 | 51.2 | 66.0 | 71.4 | 70.6 |

| Variants | PASCAL-5<sup>i</sup> mIoU | PASCAL-5<sup>i</sup> FB-mIoU | COCO-20<sup>i</sup> mIoU | COCO-20<sup>i</sup> FB-mIoU | Speed (FPS) |
|---|---|---|---|---|---|
| F + B (Baseline) | 48.7 | 66.9 | 23.6 | 63.0 | 17.4 |
| + C | 50.3 | 67.8 | 25.8 | 63.7 | 17.2 |
| C + C | 52.1 | 68.6 | 28.2 | 64.3 | 17.2 |
| C + C + F + B | 54.2 | 69.5 | 33.8 | 64.9 | 17.2 |
| + C + FS + B | 56.0 | 70.3 | 35.1 | 65.6 | 16.8 |
| + C + F + BS | 57.8 | 71.2 | 36.4 | 66.2 | 16.8 |
| C + + FS + B | 59.4 | 72.0 | 38.6 | 66.8 | 16.8 |
| C + + F + BS | 61.1 | 72.9 | 40.7 | 67.4 | 16.7 |
| C + C + F + BS | 62.6 | 73.7 | 42.8 | 68.1 | 16.7 |
| C + C + FS + B | 64.2 | 74.6 | 44.7 | 68.7 | 16.5 |
| C + C + F + BS | 65.8 | 75.9 | 46.2 | 69.3 | 16.5 |
| C + C + FS + BS (Ours) | 67.4 | 78.9 | 47.0 | 70.1 | 16.1 |
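
The ablation rows can be read as an accuracy-versus-speed trade-off. The short script below uses only numbers copied from the first row (F + B baseline) and the last row (full model) of the ablation table. It computes the absolute accuracy gains in points and the relative change in frame rate. The constant and function names are illustrative.

```python
from typing import Dict, Tuple

# (PASCAL mIoU, PASCAL FB-mIoU, COCO mIoU, COCO FB-mIoU, FPS), copied from the ablation table.
BASELINE = (48.7, 66.9, 23.6, 63.0, 17.4)  # F + B (Baseline)
FULL = (67.4, 78.9, 47.0, 70.1, 16.1)      # C + C + FS + BS (Ours)
NAMES = ("PASCAL mIoU", "PASCAL FB-mIoU", "COCO mIoU", "COCO FB-mIoU")


def ablation_gains(base: Tuple[float, ...], full: Tuple[float, ...]) -> Tuple[Dict[str, float], float]:
    """Absolute accuracy gains (points) and relative FPS change (percent)."""
    gains = {name: round(f - b, 1) for name, b, f in zip(NAMES, base[:4], full[:4])}
    fps_change = round(100.0 * (full[4] - base[4]) / base[4], 1)
    return gains, fps_change


if __name__ == "__main__":
    gains, fps_change = ablation_gains(BASELINE, FULL)
    for name, gain in gains.items():
        print(f"{name}: +{gain}")
    print(f"FPS change: {fps_change}%")
```

On these numbers the full model gains 18.7 mIoU points on PASCAL and 23.4 on COCO. The frame rate drops by about 7.5%.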

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, L.; Li, X.; Wang, J.; Tong, Y.; Xiao, J.; Zhou, R.; Li, L.-H.; Zhou, Q.; Li, K.-C. Symmetry-Aware Superpixel-Enhanced Few-Shot Semantic Segmentation. Symmetry 2025, 17, 1726. https://doi.org/10.3390/sym17101726
