A Novel Learning Rate Schedule in Optimization for Neural Networks and It’s Convergence

Abstract

1. Introduction

2. Machine Learning Method

2.1. Direction Method

2.1.1. Gradient Descent Method

2.1.2. Momentum Method

2.2. Learning Rate Schedule

2.2.1. Time-Based Learning Rate Schedule

2.2.2. Step-Based Learning Rate Schedule

2.2.3. Exponential-Based Learning Rate Schedule

| Algorithm 1: Pseudocode of exponential-based learning rate schedule. |
| --- |
| : Initialize learning rate |
| d: Decay rate |
| (Initialize time step) |
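The update rule of Algorithm 1 is not preserved in this extract, so the following is only a minimal sketch of one common form of exponential decay, assuming the learning rate at time step t is eta0 * exp(-d * t). The names `exponential_decay`, `gradient_descent`, `eta0`, and the quadratic test cost are illustrative assumptions and are not taken from the paper; only the decay rate `d` and the idea of an initial learning rate appear in the algorithm listing.

```python
import math


def exponential_decay(eta0: float, d: float, t: int) -> float:
    """Learning rate at time step t, assuming eta_t = eta0 * exp(-d * t)."""
    return eta0 * math.exp(-d * t)


def gradient_descent(grad, w0: float, eta0: float = 0.1, d: float = 0.01,
                     steps: int = 100) -> float:
    """Plain gradient descent whose step size follows the assumed schedule."""
    w = w0
    for t in range(steps):  # t starts at 0 (initial time step)
        w = w - exponential_decay(eta0, d, t) * grad(w)
    return w


# Hypothetical test problem: C(w) = (w - 3)^2, so dC/dw = 2 * (w - 3).
w_final = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
```

With `d = 0` the schedule reduces to a constant learning rate, which makes it easy to compare against plain gradient descent.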

2.3. Adaptive Optimization Methods

2.3.1. Adaptive Gradient (Adagrad)

2.3.2. Root Mean Square Propagation (RMSProp)

2.3.3. Adaptive Moment Estimation (Adam)

| Algorithm 2: Pseudocode of Adam method. |
| --- |
| : Learning rate |
| : Exponential decay rates for the moment estimates |
| : Cost function with parameters w |
| : Initial parameter vector |
| (Initialize timestep) |
| not converged |
| (Resulting parameters) |
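The body of Algorithm 2 is missing from this extract. As a hedged illustration, the sketch below follows the standard Adam update from Kingma and Ba (biased first and second moment estimates with bias correction), using their suggested defaults for `beta1`, `beta2`, and `eps`. The stopping rule (gradient norm below `tol`, or `max_steps` reached) and the quadratic test cost are assumptions made here, since the convergence criterion of the listing is not recoverable.

```python
import math


def adam(grad, w0, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8,
         tol=1e-6, max_steps=10000):
    """Standard Adam on a list of floats; `grad` returns the gradient list."""
    w = list(w0)
    m = [0.0] * len(w)  # first moment estimate
    v = [0.0] * len(w)  # second moment estimate
    t = 0               # time step
    while t < max_steps:
        g = grad(w)
        # Assumed convergence test: stop once the gradient norm is tiny.
        if math.sqrt(sum(gi * gi for gi in g)) < tol:
            break
        t += 1
        for i in range(len(w)):
            m[i] = beta1 * m[i] + (1.0 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1.0 - beta2) * g[i] * g[i]
            m_hat = m[i] / (1.0 - beta1 ** t)  # bias-corrected first moment
            v_hat = v[i] / (1.0 - beta2 ** t)  # bias-corrected second moment
            w[i] -= alpha * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Hypothetical cost: C(w) = (w[0] - 1)^2 + (w[1] + 2)^2, minimized at (1, -2).
def cost_grad(w):
    return [2.0 * (w[0] - 1.0), 2.0 * (w[1] + 2.0)]


w_star = adam(cost_grad, [0.0, 0.0], alpha=0.01, max_steps=5000)
```

The larger `alpha` in the usage line is chosen only so that the toy problem converges in a few thousand steps; it is not a recommendation from the paper.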

3. The Proposed Method

| Algorithm 3: Pseudocode of cost-based learning rate schedule. |
| --- |
| : Initialize learning rate |
| : Cost function with parameters w |
| (Initialize time step) |

4. Numerical Tests

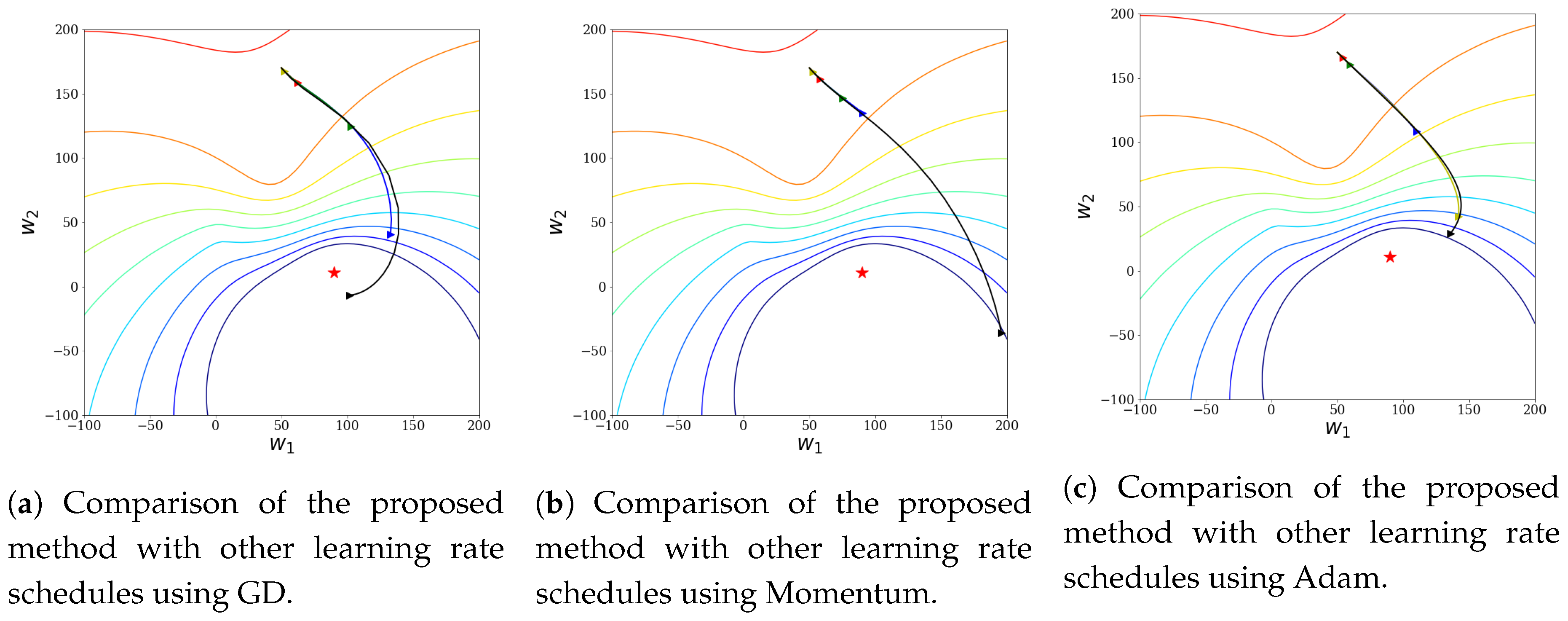

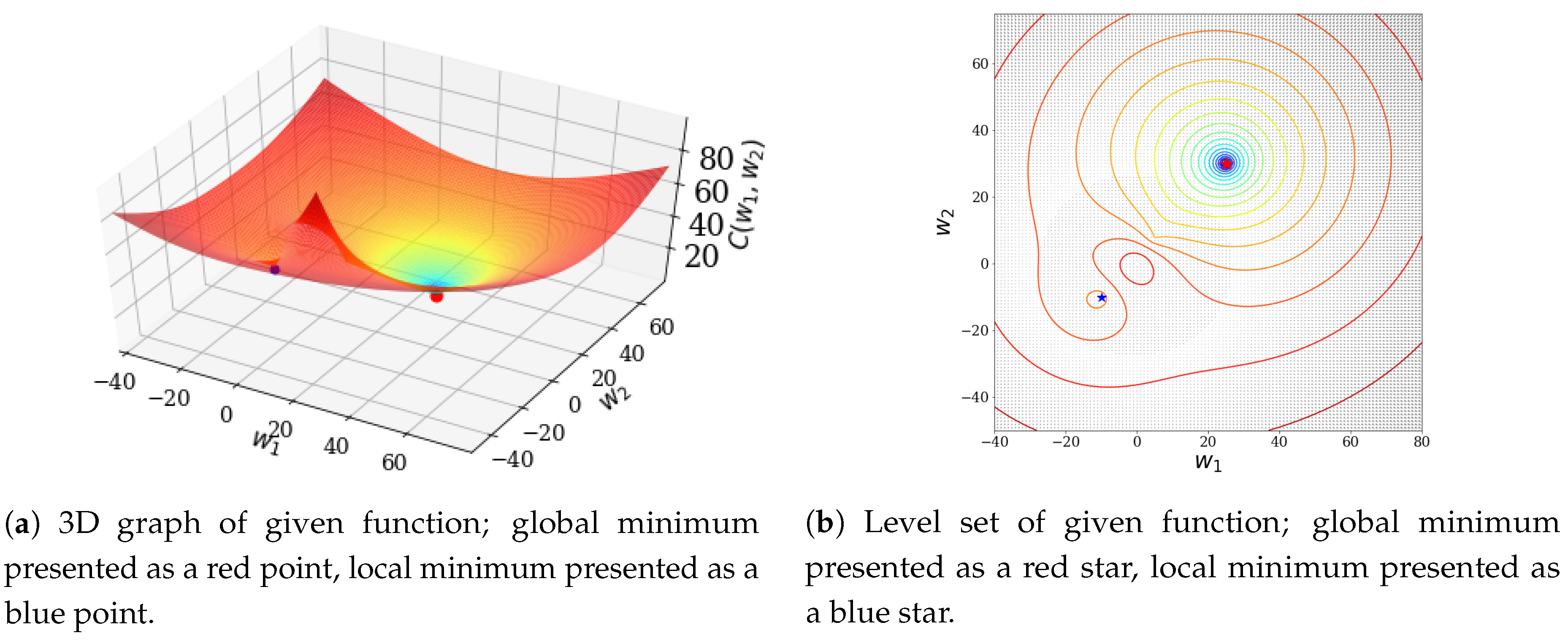

4.1. Two-Variable Function Test Using Weber’s Function

4.1.1. Case 1: Weber’s Function with Three Terms Added

4.1.2. Case 2: Weber’s Function with Four Terms Added

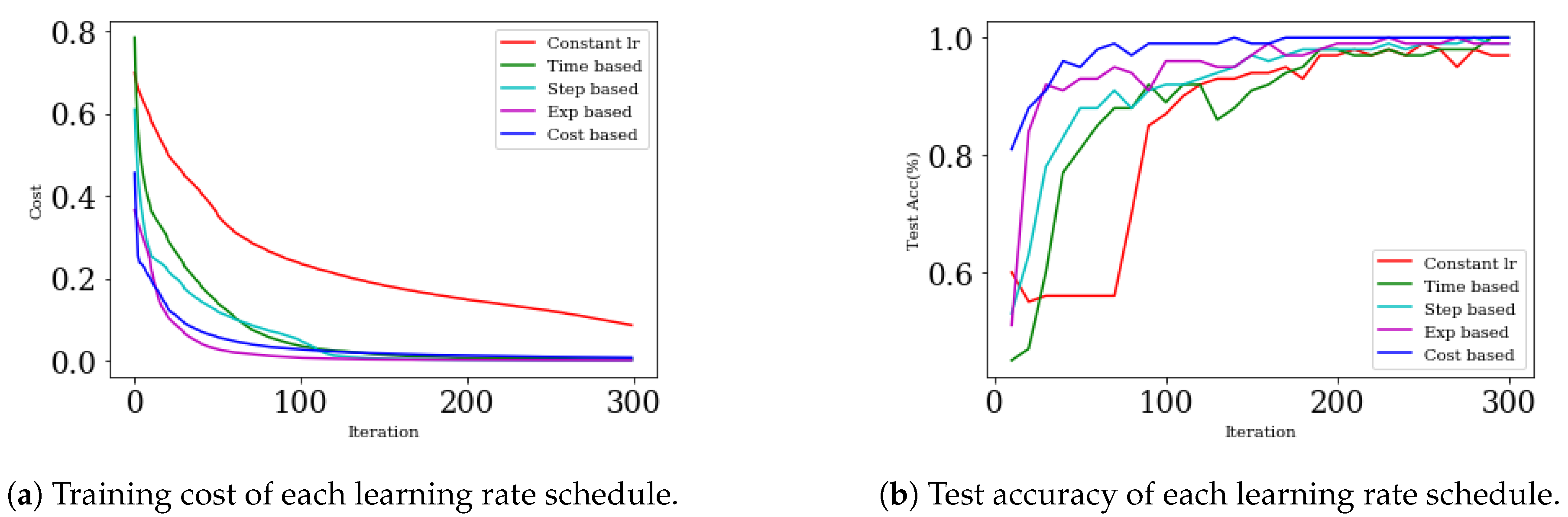

4.2. Binary Classification with Multilayer Perceptron (MLP)

4.3. MNIST with MLP

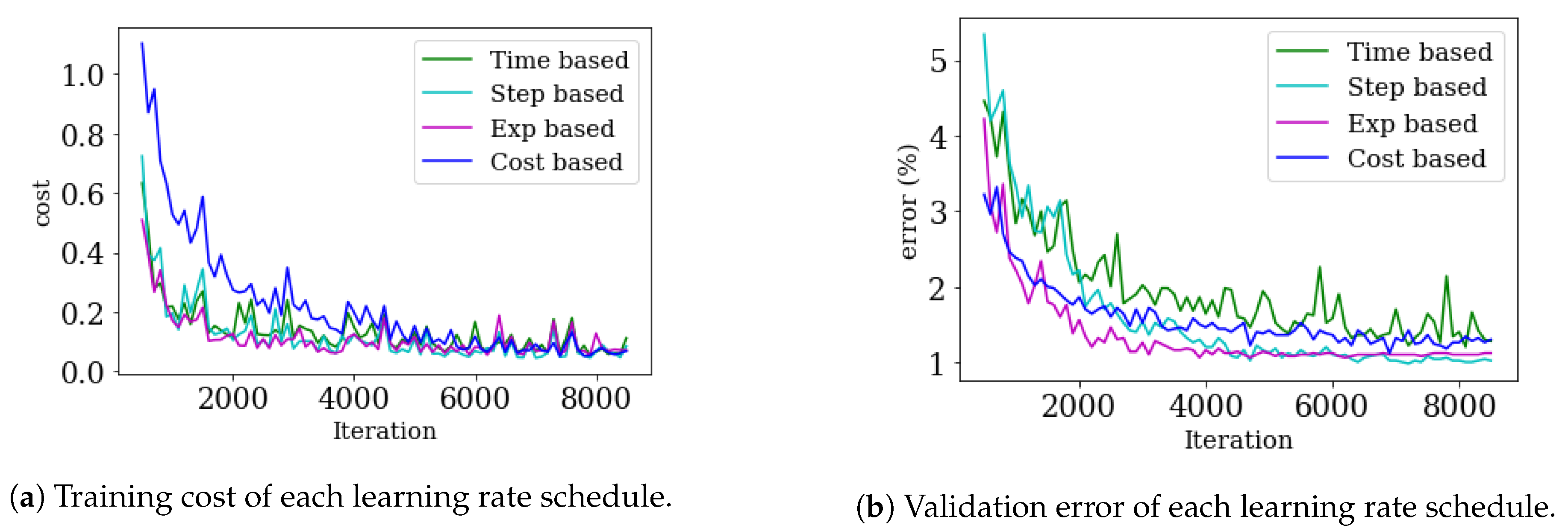

4.4. MNIST with Convolutional Neural Network (CNN)

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

Appendix A.2


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, J.; Yi, D.; Ji, S. A Novel Learning Rate Schedule in Optimization for Neural Networks and It’s Convergence. Symmetry 2020, 12, 660. https://doi.org/10.3390/sym12040660
