Proof. is constructed by induction on . For the base case, let . Then is constructed by Constructions 5 and 7. Here, Claim (i) holds by Lemma 20(ii), Lemma 18(i) and Lemma 13(vi); Claim (ii) holds by Lemma 18(i); Claim (iii) is clear; and Claim (iv) holds by Lemma 18(iv).

For the induction case, let . Let , where . Then is divided into two subsets which occur in the left subtree and right subtree of , respectively. Then . Let . Suppose that derivations of and of are constructed such that Claims (i) to (iv) hold. There are three cases to consider.

Case 1. for all . Then and .

For Claim (i), let and . By the induction hypothesis, for all . Since then . Thus by Lemmas 11 and 12. Then . Thus by Proposition 2(i). Hence, for all , by . Then for all . Claims (ii) and (iii) follow directly from the induction hypothesis.

For Claim (iv), let . It follows from the induction hypothesis that for all and, for some or for some . Then by .

If for some then for all by the definition of branches to I. Thus we assume that for some in the following. If then for all thus for all . Thus let in the following. By the proof of Claim (i) above, . Then by and . Thus . Hence for all .

Case 2. for all . Then and . The proof of this case is similar to that of Case 1 and is omitted.

Case 3. for some and for some .

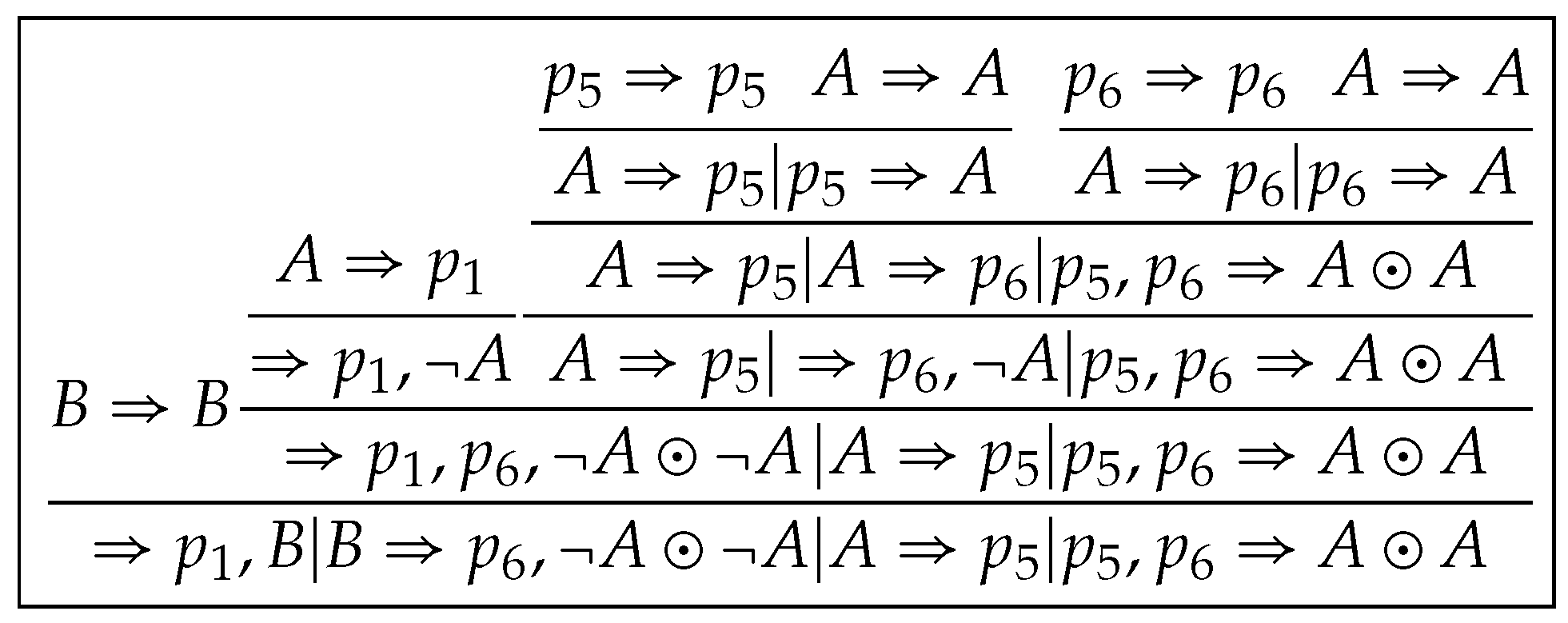

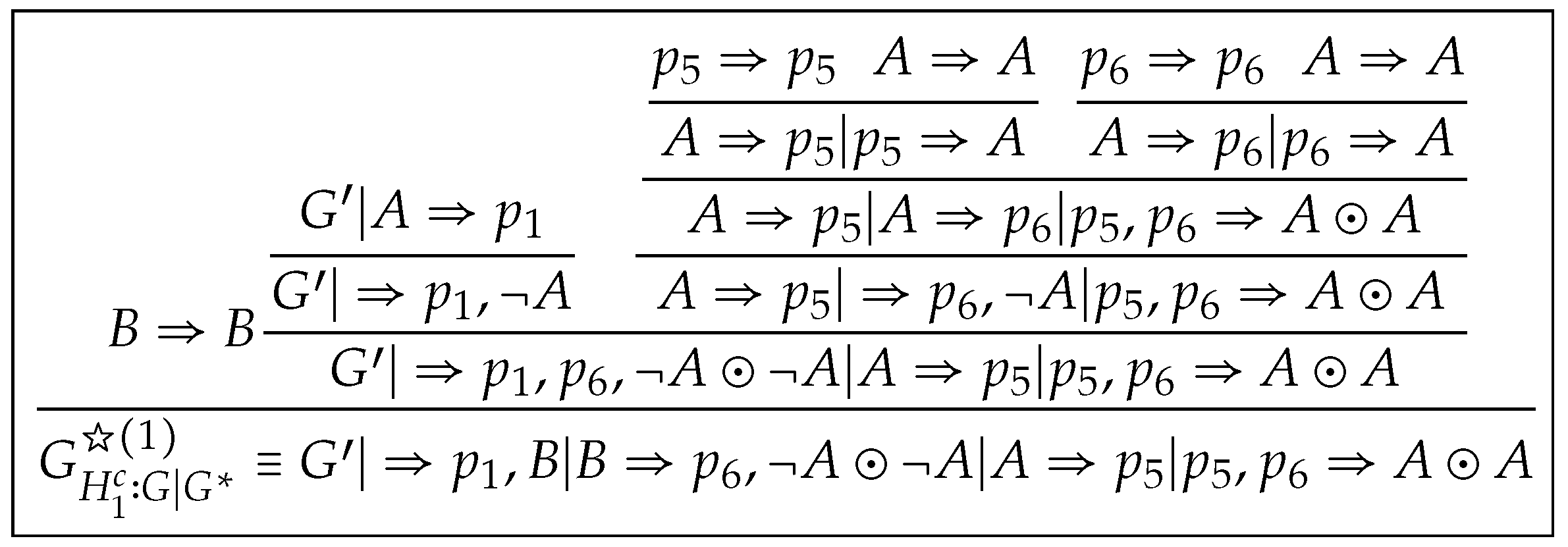

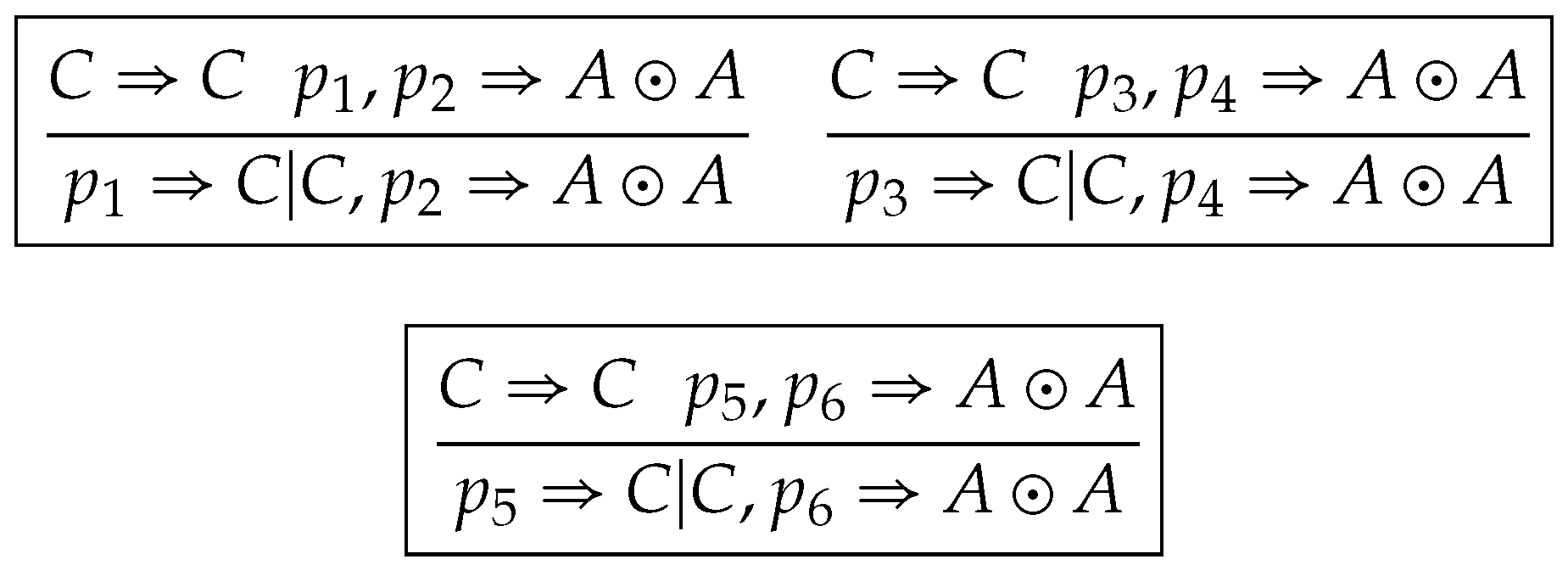

Given such that and for all , where , is closed for all , then by and for all . Thus for all and by and Construction 4.

For each above, we construct a derivation in Stage 1 below, in which may be regarded as a subroutine and as its input. Then a derivation is constructed by calling in Stage 2, in which may be regarded as a routine and as its subroutine.

Before proceeding to deal with Case 3, we present the following properties of , which are derived from Claims (i) to (iv) and are applicable to or under the induction hypothesis.

Notation 9. Let be two closed hypersequents, for some and for some . Generally, is a copy of , i.e., eigenvariables in have different identification numbers from those in , and likewise for .

Lemma 24. implies .

Proof. Let . Then by Lemma 19(i). Thus or . If then by and Proposition 1(ii). If then by Proposition 2(i). Thus by Lemma 11 and Lemma 14(i). Hence by , . □

Lemma 25. (1) is an m-ary tree and is a binary tree;

(2) Let then for some ;

(3) Let then ;

(4) Let in then for all ;

(5) Let , for some . Then .

Proof. (1) is immediate from Claim (i). (2) holds by and for some by . (3) holds by Proposition 1(iii), (2) and .

For (4), let . Then for each , and for some by (2). Thus and by Proposition 1(ii). Hence by , and by . Thus and by (3), . Hence for all . (5) is from (4). □

Lemma 26. Let for all such that and . Then and for all .

Proof. The proof is by induction on n. Let then by Lemma 25(5) and . For the induction step, let for some then by Lemma 25(5). Since then for some by Claim (ii). Then by . Thus by Lemma 25(5). □

Definition 30. Let . The module of at , which we denote by , is defined as follows: (1) ; (2) if ; (3) if , .

Each node of is determined bottom-up, starting with , whose root is and whose leaves may be branches, leaves of or lower hypersequents of -applications. By contrast, each node of is determined top-down, starting with , whose root is a subset of and whose leaves contain and some leaves of .

Lemma 27. (1) is a derivation without in .

(2) Let and . Then for all and .

Proof. Part (1) is clear and (2) immediately follows from Lemma 26. □

Now, we continue to deal with Case 3 in the following.

Stage 1. Construction of subroutine . Roughly speaking, is constructed by replacing some nodes with in post-order. However, the ordinary post-order traversal algorithm cannot be used to construct because the tree structure of is generally different from that of at some nodes satisfying . Thus we construct a sequence of trees for all inductively as follows.

For the base case, we mark all -applications in as unprocessed and define such marked derivation to be . For the induction case, let be constructed. If all applications of in are marked as processed, we first delete the root of the tree resulting from the procedure, then apply to the root of the resulting derivation if it is applicable (otherwise we add an -application to it), and finally terminate the procedure. Otherwise, we select one of the outermost unprocessed -applications in , say , and perform the following steps to construct , in which is revised as such that

(a) is constructed by locally revising and leaving other nodes of unchanged, particularly including ;

(b) is a derivation in ;

(c) if for all ; otherwise for some .

Remark 3. The two superscripts ∘ and · in or indicate the unprocessed state and the processed state, respectively. This procedure determines an ordering for all -applications in , and the subscript indicates that it is the -th application of in a post-order traversal of . and ( and ) are the premise and conclusion of (), respectively.
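The selection order described above can be illustrated with a minimal sketch. It assumes that "outermost" means that no unprocessed marked application lies above the selected one (toward the leaves), and that ties are broken left to right; both are assumptions, as are the names `Node`, `is_target`, `pick_outermost` and `process_all`, none of which come from the paper. The per-application Steps 1 to 5 are abstracted into simply marking the node as processed, so the sketch shows only why repeated selection yields a post-order enumeration of the marked applications.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A node of a derivation tree; children are the premises (hypothetical model)."""
    label: str
    is_target: bool = False   # assumption: node is one of the marked rule applications
    processed: bool = False   # unprocessed / processed state of a marked application
    children: List["Node"] = field(default_factory=list)


def has_unprocessed(node: Node) -> bool:
    """True if node, or any node above it, is an unprocessed marked application."""
    if node.is_target and not node.processed:
        return True
    return any(has_unprocessed(child) for child in node.children)


def pick_outermost(node: Node) -> Optional[Node]:
    """Return the leftmost unprocessed marked application with none above it."""
    for child in node.children:
        if has_unprocessed(child):
            return pick_outermost(child)
    if node.is_target and not node.processed:
        return node
    return None


def process_all(root: Node) -> List[str]:
    """Repeatedly select and mark applications; return the order of selection."""
    order = []
    while True:
        chosen = pick_outermost(root)
        if chosen is None:
            break                  # every marked application is processed
        chosen.processed = True    # stands in for Steps 1 to 5 of the construction
        order.append(chosen.label)
    return order


# Example tree: R has premises A and B; A has premises C and D (D is not marked).
example = Node("R", True, children=[
    Node("A", True, children=[Node("C", True), Node("D")]),
    Node("B", True),
])
selection_order = process_all(example)
```

Under these assumptions the applications are selected in the order C, A, B, R, which is the post-order enumeration of the marked nodes. In the actual construction each step may also change the shape of the tree, which is why the paper builds a sequence of trees instead of running a single traversal.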

Step 1 (Delete). Take the module out of . Since is the unique unprocessed -application in by its choice criteria, is the same as by Claim (a). Thus it is a derivation. If for all , delete all internal nodes of . Otherwise there exists such that for all and by Lemma 27(2) and , then delete all , . We denote the structure resulting from the deletion operation above by . Since then is a tree by Lemma 26. Thus it is also a derivation.

Step 2 (Update). For each which satisfies and for some , we replace H with for each , .

Since and is the outermost unprocessed -application in then and has been processed. Thus Claims (b) and (c) hold for by the induction hypothesis. Then is a valid -application since , and are valid, where , .

Lemma 28. Let . Then .

Proof. Since then for some . If then . Otherwise all applications between and H are one-premise rules by Lemma 26. Then by Claim (ii). Thus by , for some by Claim (i). □

Since by Lemma 28 and for each by Lemma 24, then as side-hypersequent of H. Thus this step updates the revision of downward to .

Let be the number of satisfying the above conditions, , and for all be updated as , , , respectively. Then is a derivation and .

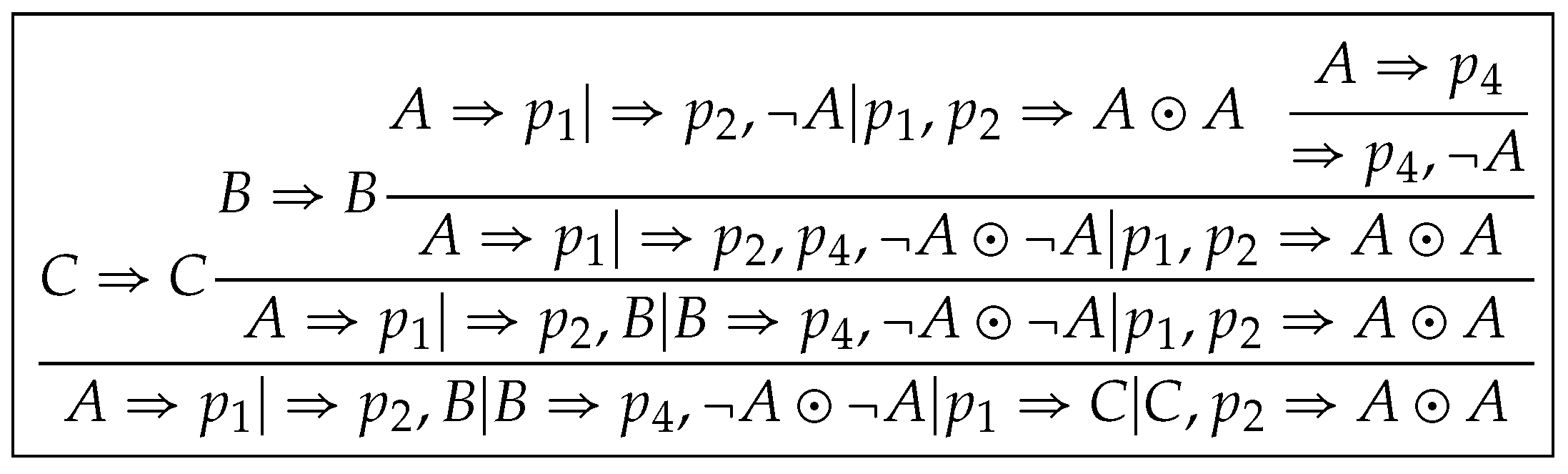

Step 3 (Replace). All are processed in post-order. If for all and , it proceeds by the following procedure; otherwise it remains unchanged. Let be in the form

Then for all by Lemma 28, .

Firstly, replace with . We may rewrite the roots of and as respectively.

Let . By Lemma 28, . By Lemma 14, or for all . Thus . Secondly, we replace H with for all . Let be the number of satisfying the replacement conditions above, , and for all be updated as , , , respectively. Then is a derivation of and

Step 4 (Separation along ). Apply the separation algorithm along to and denote the resulting derivation by whose root is labeled by . Then all in are transformed into in . Since , and are separable in by a procedure similar to that of Lemma 21. Let and be separated into and , respectively. By Claim (iii), where .

Step 5 (Put back). Replace in with and mark as processed, i.e., revise as . Among the leaves of , all are updated as and the others remain unchanged in . Thus this replacement is feasible; in particular, is replaced with . Define the tree resulting from Step 5 to be . Then Claims (a), (b) and (c) hold for by the above construction.

Finally, we construct a derivation of from , in , which we denote by .

Remark 4. All elimination rules used in constructing are extracted from . Since is a derivation in without , we may extract elimination rules from , which we may use to construct by a procedure similar to that of constructing with minor revision at every node H that . Note that the updates and replacements in Steps 2 and 3 are essentially inductive operations, but we omit the details for simplicity.

We may also think of constructing as grafting in by adding to some . Since the rootstock of the grafting process is invariant in Stage 2, we encapsulate as an rule in whose premises are and whose conclusion is , i.e., where is closed.

Stage 2. Construction of routine . A sequence of trees for all is constructed inductively as follows. , , are defined as those of Stage 1. Then we perform the following steps to construct , in which is revised as such that Claims (a) and (b) are the same as those of Stage 1 and (c) if for all ; otherwise for some .

Step 1 (Delete). and are defined as before. satisfies for all and .

Step 2 (Update). For all which satisfy and for some , we replace H with for all , . Then Claims (a) and (b) are proved by a procedure as before. Let be the number of satisfying the above conditions. , and for all be updated as , , , respectively. Then is a derivation and .

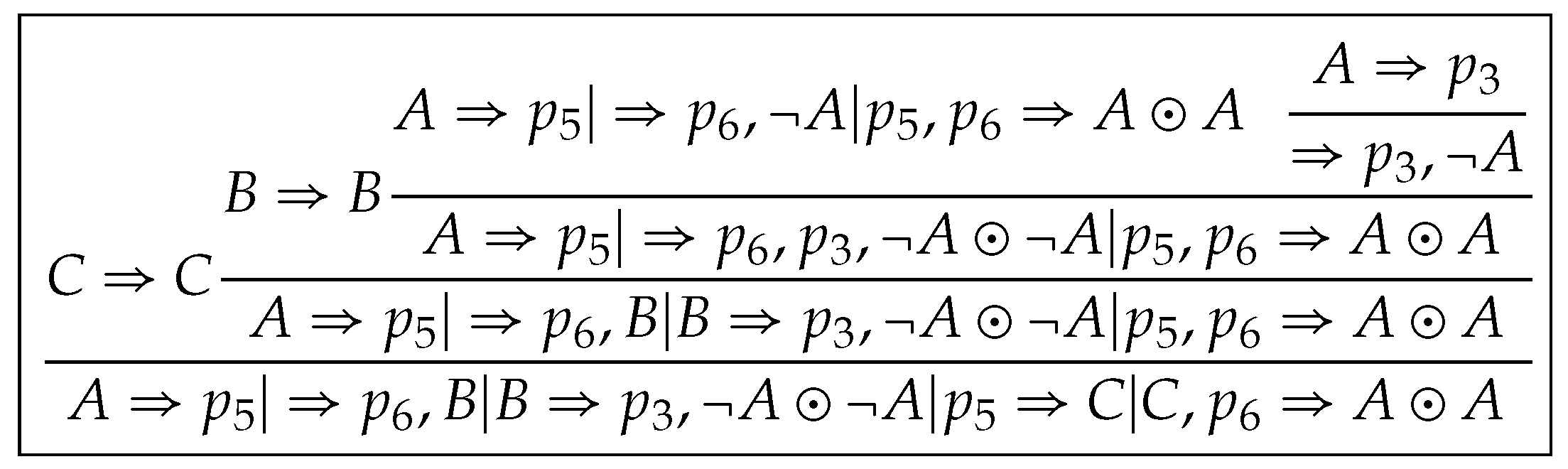

Step 3 (Replace). All are processed in post-order. If for all and , it proceeds by the following procedure; otherwise it remains unchanged. Let be in the form

Then there exists the unique such that .

Firstly, we replace with . We may rewrite the roots of , as respectively.

Let . Then by Lemma 28. Thus . Define .

Then we replace H with for all . Let be the number of satisfying the replacement conditions as above, , and for all be updated as , , , respectively. Then is a derivation and , where

Step 4 (Separation along ). Apply the separation algorithm along to and denote the resulting derivation by whose root is labeled by .

By Claim (iii), . Then where .

Step 5 (Put back). Replace in with and revise as . Define the tree resulting from Step 5 to be . Then Claims (a), (b) and (c) hold for by the above construction.

Finally, we construct a derivation of from , …, in . Since the major operation of Stage 2 is to replace with for all satisfying , then we denote the resulting derivation from Stage 2 by .

In the following, we prove that the claims from (i) to (iv) hold if and .

For Claims (i) and (ii): Let and . Then for all by Lemma 17(iv).

If for some , then for all by . Thus Claim (i) holds and Claim (ii) holds by Lemma 25(5) and Lemma 19(i). Note that Lemma 25(5) is independent of Claims from (ii) to (iv).

Otherwise, is built up from , or by keeping their focus and principal sequents unchanged while possibly modifying their side-hypersequents. This modification has no effect on Claim (ii), so Claim (ii) holds for by the induction hypothesis on Claim (ii) of or .

If is from then and by the choice of and at Stage 1. By the induction hypothesis, for all and for all . Then for all , by , .

If is from then by Step 3 at Stage 1. Then . Thus . Hence . Therefore for all by . Thus for all by and the induction hypothesis from . The case of built up from is proved similarly and is omitted.

Claim (iii) holds by Step 4 at Stages 1 and 2. Note that throughout Stage 1 we treat as a side-hypersequent. However, it is possible that there exists such that . Since we have not applied the separation algorithm to in Step 4 at Stage 1, this could make Claim (iii) invalid. It is not difficult to see, however, that we have merely moved the separation of such to Step 4 at Stage 2. Of course, we could move it to Step 4 at Stage 1, but this would complicate the discussion.

For Claim (iv), we prove (1) for all and , (2) for all and . We prove only (1); the proof of (2) is similar and is omitted.

Let . Then and by the definition of . By a procedure similar to that of Claim (iv) in Case 1, we get and assume that for some and let in the following.

Suppose that . Then and by . Hence by . Therefore , a contradiction; thus . Then by and . Thus . Hence for all . This completes the proof of Theorem 2. □