A Machine Learning Approach for the Estimation of Total Dissolved Solids Concentration in Lake Mead Using Electrical Conductivity and Temperature

Abstract

1. Introduction

- What is the strength of the relationship between total dissolved solids (TDS), electrical conductivity (EC), and temperature in Lake Mead?

- How does the accuracy of TDS estimation from EC and temperature using ensemble models compare with that of standalone models?

- Which machine learning (ML) model offers the best predictive accuracy for estimating TDS from EC and temperature?

2. Materials and Methods

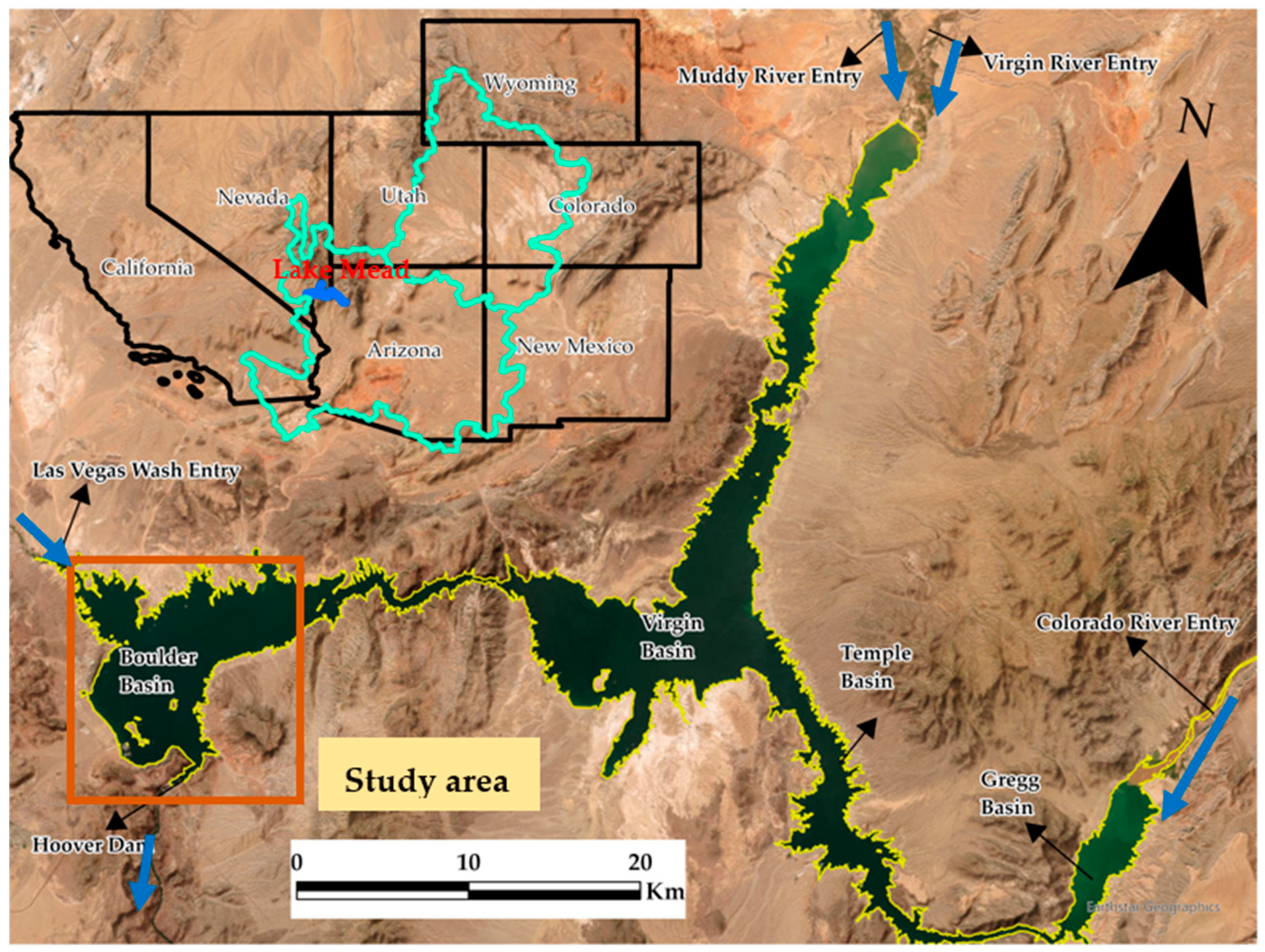

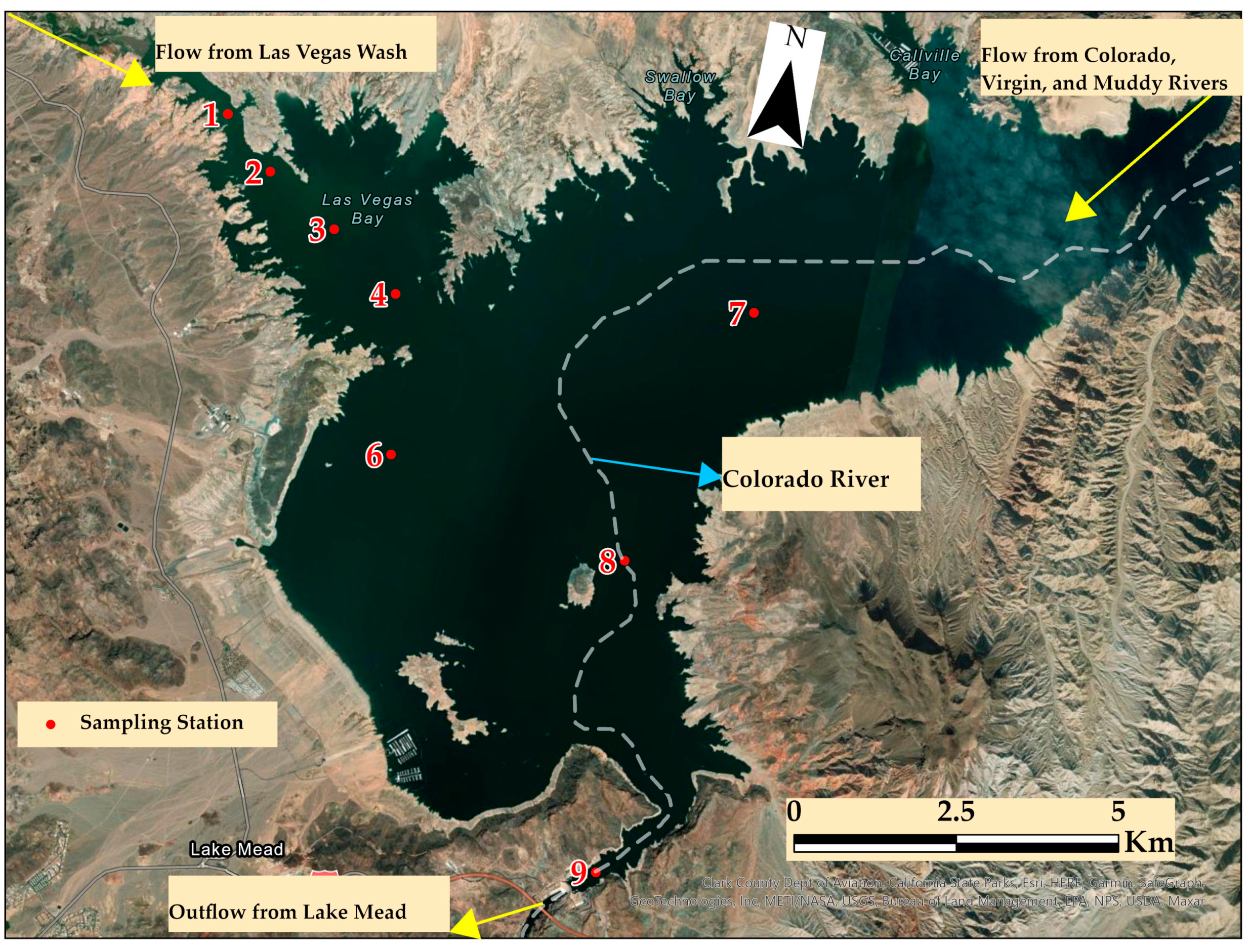

2.1. Study Area Descriptions

2.2. Data Collection

2.3. Modeling and Analysis

2.3.1. Standalone ML Techniques

2.3.2. Ensemble ML Techniques

2.3.3. ML Model Hyperparameter Optimization

2.3.4. Model Evaluation Metrics

3. Results and Discussion

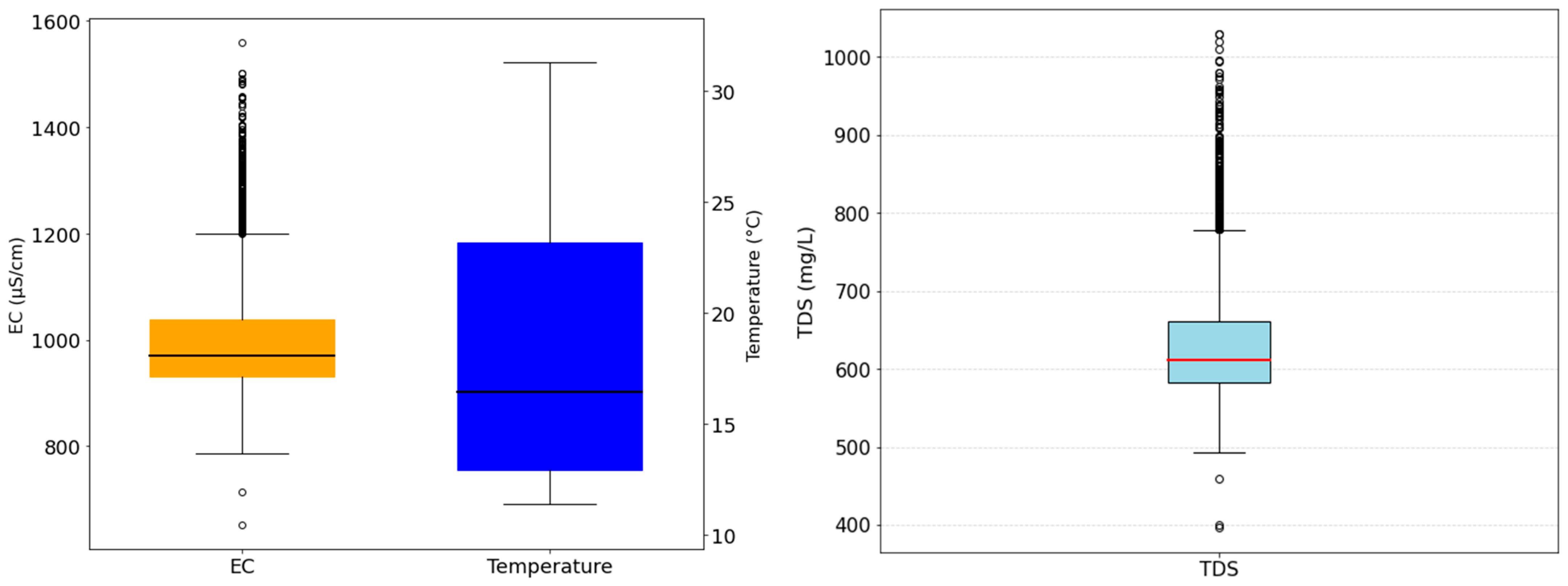

3.1. Statistical Summary

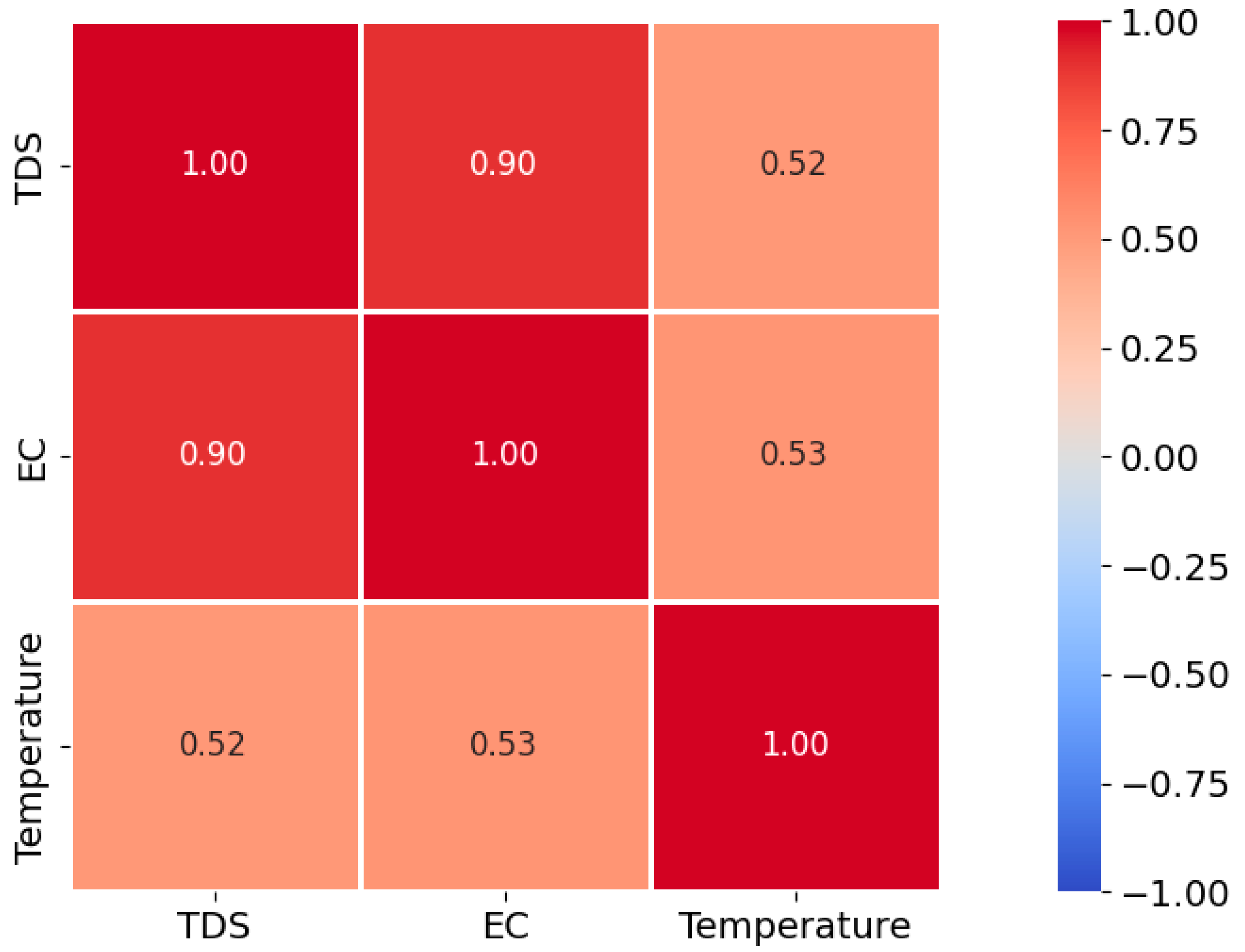

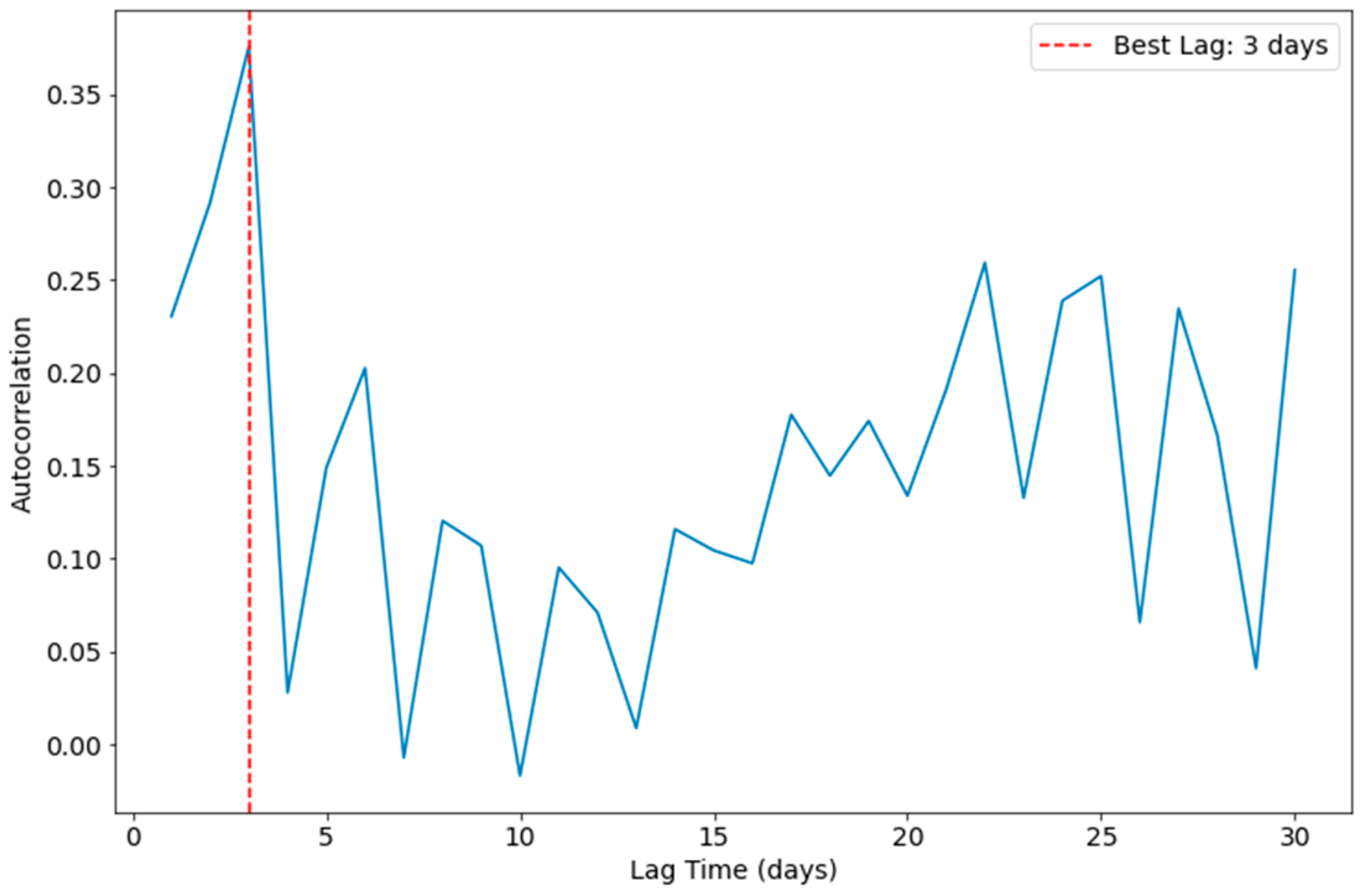

3.2. Correlation Analysis

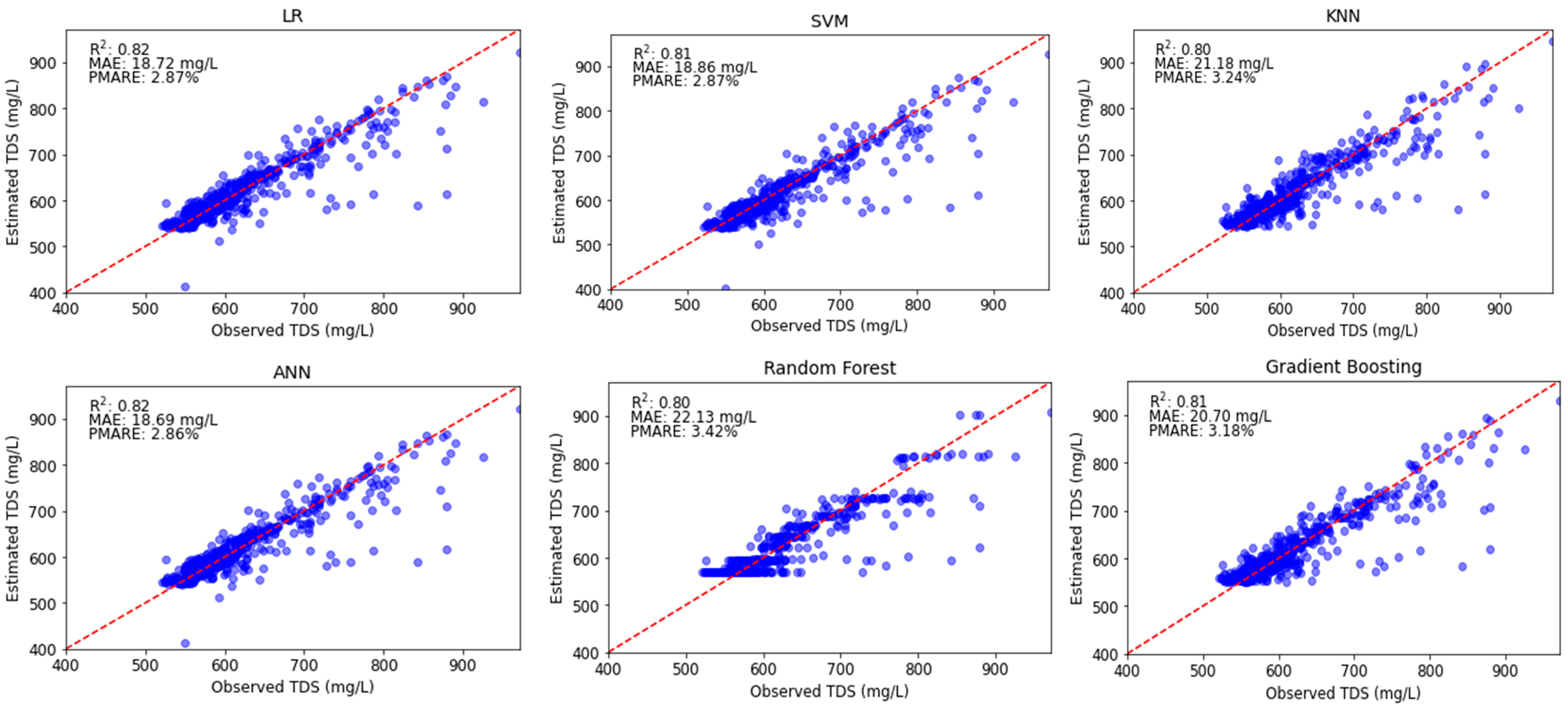

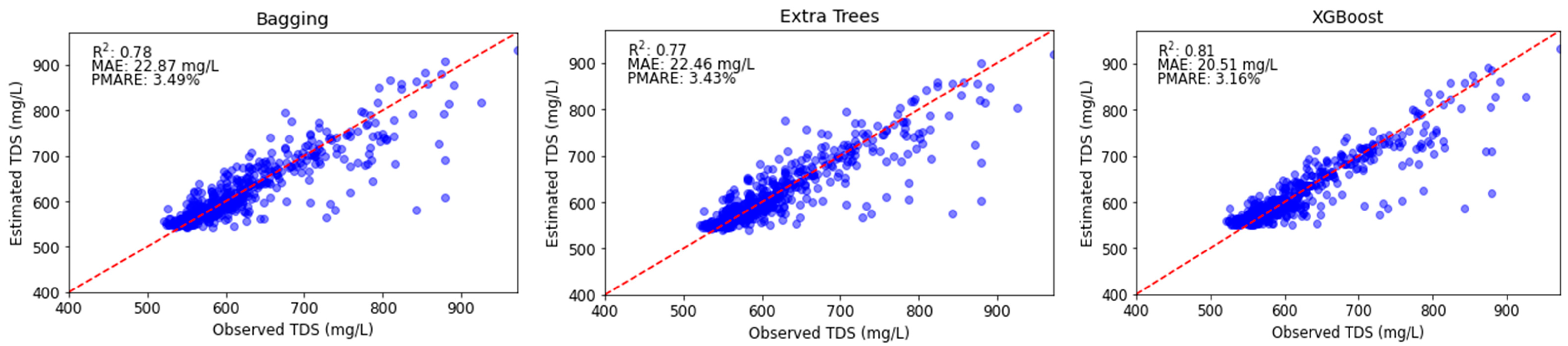

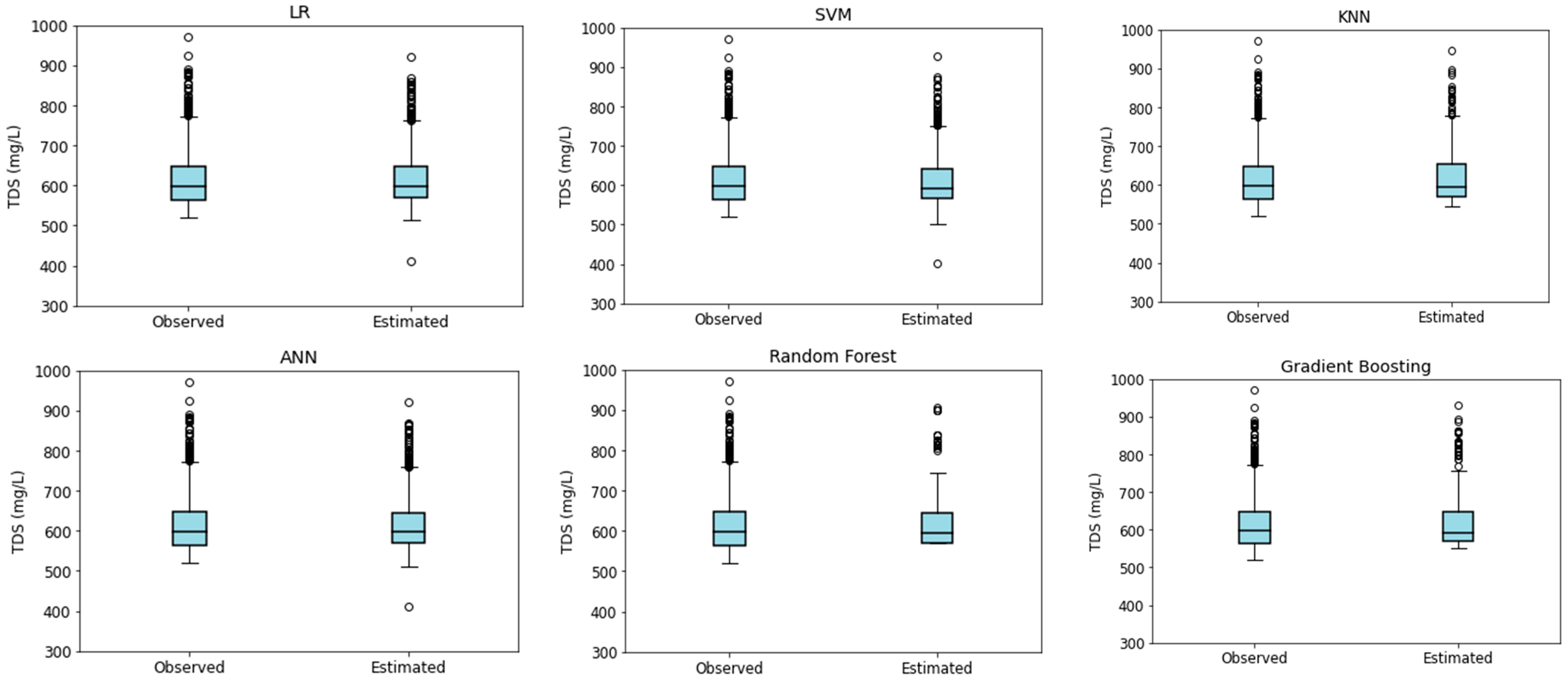

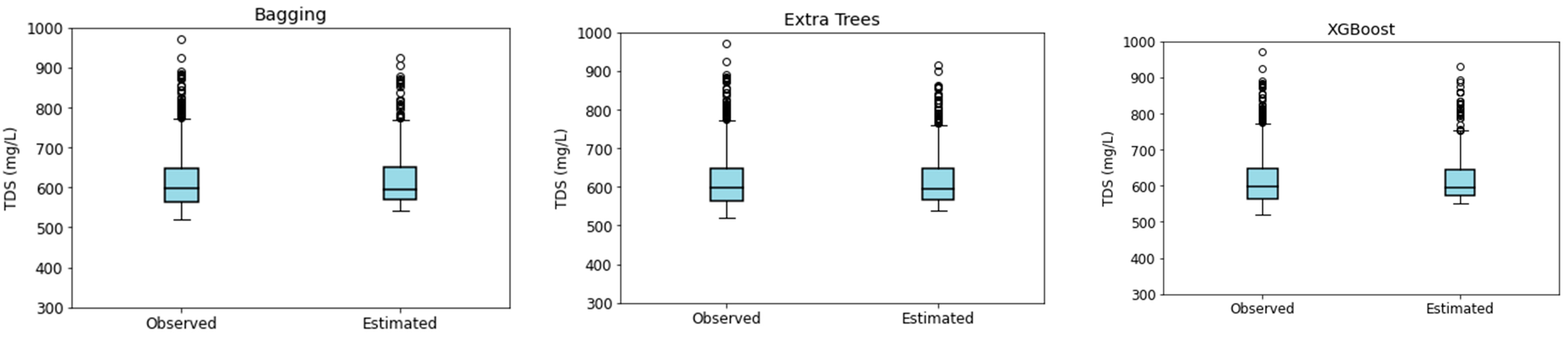

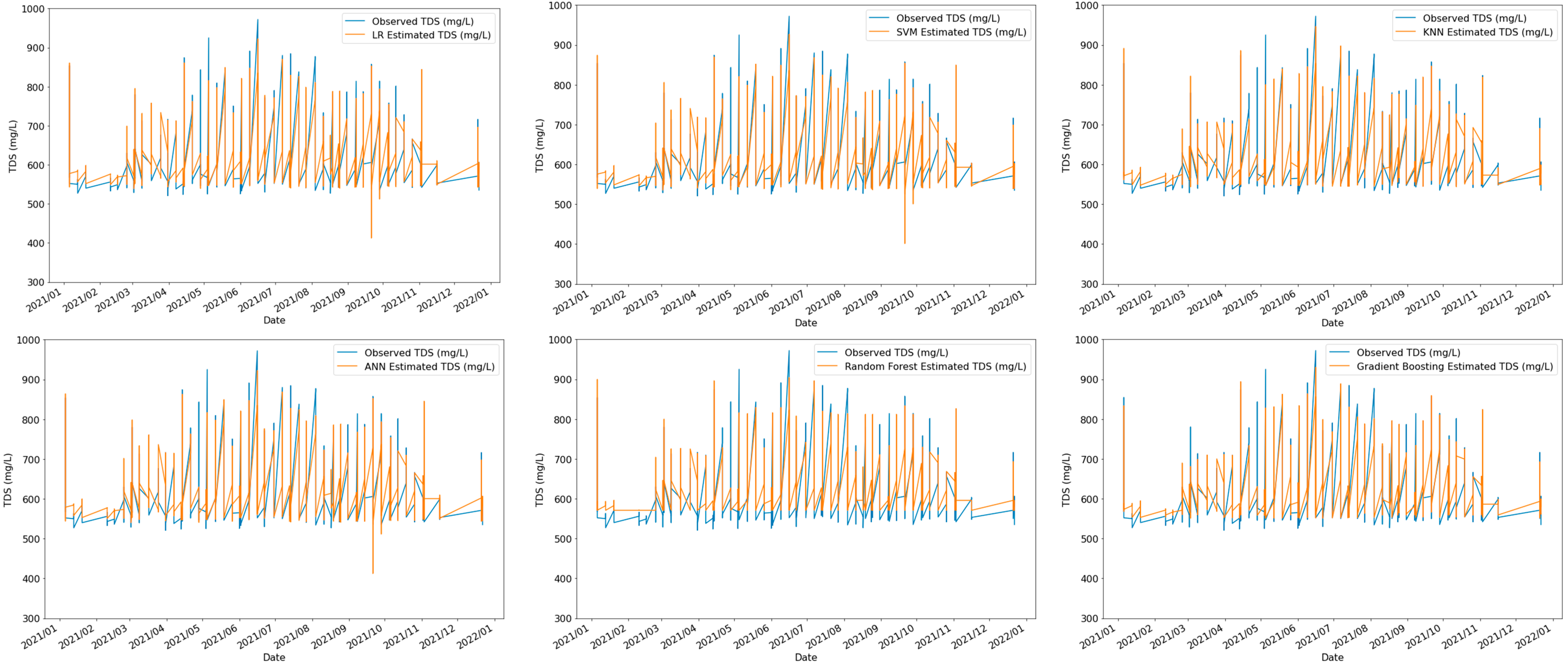

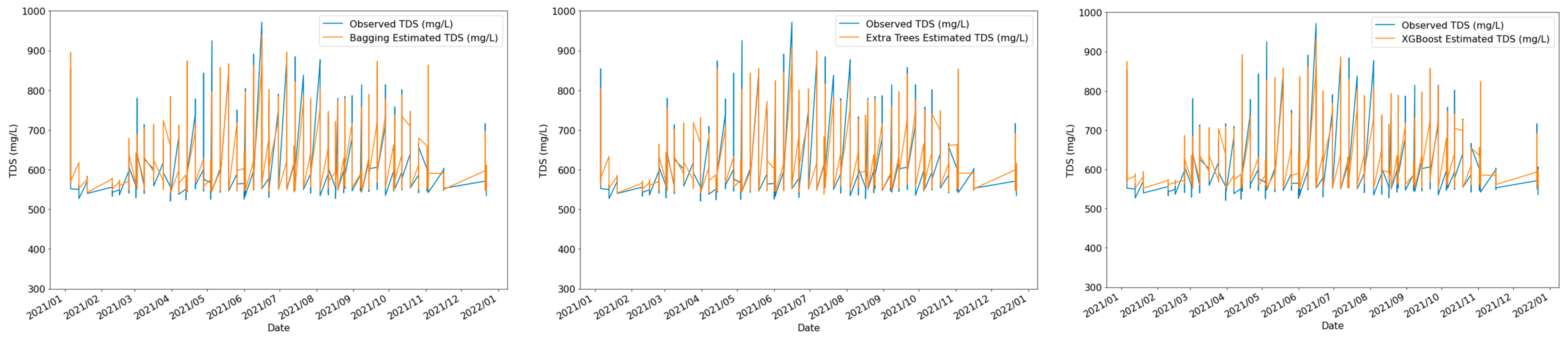

3.3. ML Predictions and Analysis

3.3.1. ML Model Hyperparameter Optimization

3.3.2. Model Performance Assessment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Station | Location | Lat./Long. | Sampling Frequency |
|---|---|---|---|---|
| 1 | LWLVB1.2 | In channel 1.2 miles from the confluence of the Las Vegas Wash and Las Vegas Bay. | Movable | Weekly (March–October); monthly (November–February) |
| 2 | LWLVB1.85 | In channel 1.85 miles from the confluence of the Las Vegas Wash and Las Vegas Bay. | Movable | Weekly (March–October); monthly (November–February) |
| 3 | LWLVB2.7 | In channel 2.7 miles from the confluence of the Las Vegas Wash and Las Vegas Bay. | Movable | Biweekly (March–October); monthly (November–February) |
| 4 | LWLVB3.5 | In channel 3.5 miles from the confluence of the Las Vegas Wash and Las Vegas Bay. | Movable | Biweekly (March–October); monthly (November–February) |
| 5 | IPS3 | In Boulder Basin on the northeast side of the mouth of Las Vegas Bay. | 36.0896° N 114.7662° W | Monthly year-round |
| 6 | BB3 | In Boulder Basin on the northeast side of Saddle Island. | 36.0715° N 114.7832° W | Monthly year-round |
| 7 | CR350.0SE0.55 | Between Battleship Rock and Burro Point. | 36.0985° N 114.7257° W | Monthly year-round |
| 8 | CR346.4 | In Boulder Basin between Sentinel Island and the shoreline of Castle Cove. | 36.0617° N 114.7392° W | Monthly year-round |
| 9 | CR342.5 | In Boulder Basin in the middle of Black Canyon, near Hoover Dam. | 36.01910° N 114.7333° W | Monthly year-round |

| No. | Station | Max. TDS (mg/L) | Avg. TDS (mg/L) | Min. TDS (mg/L) | Std. TDS (mg/L) | Time Range |
|---|---|---|---|---|---|---|
| 1 | LWLVB1.2 | 1030 | 716 | 555 | 107 | 2016–2021 |
| 2 | LWLVB1.85 | 957 | 670 | 523 | 75 | 2016–2021 |
| 3 | LWLVB2.7 | 803 | 626 | 459 | 51 | 2016–2021 |
| 4 | LWLVB3.5 | 784 | 605 | 493 | 41 | 2016–2021 |
| 5 | IPS3 | 661 | 581 | 521 | 24 | 2017–2021 |
| 6 | BB3 | 789 | 598 | 536 | 33 | 2016–2021 |
| 7 | CR350.0SE0.55 | 700 | 585 | 532 | 29 | 2016–2021 |
| 8 | CR346.4 | 667 | 588 | 397 | 35 | 2016–2021 |
| 9 | CR342.5 | 667 | 588 | 524 | 29 | 2016–2021 |

| Type | Model | Hyperparameter | Optimal Value |
|---|---|---|---|
| Standalone | LR | Fit intercept | False |
| Standalone | SVM | Kernel | Linear |
| Standalone | SVM | C | 1 |
| Standalone | SVM | Gamma | 0.1 |
| Standalone | KNN | Number of neighbors | 10 |
| Standalone | KNN | Weights | Uniform |
| Standalone | ANN | Hidden layer sizes | 100 |
| Standalone | ANN | Activation | ReLU |
| Ensemble | Bagging | Number of estimators | 20 |
| Ensemble | GBM | Learning rate | 0.1 |
| Ensemble | GBM | Number of estimators | 100 |
| Ensemble | ET | Number of estimators | 100 |
| Ensemble | RF | Number of estimators | 100 |
| Ensemble | RF | Maximum depth | 3 |
| Ensemble | XGBoost | Learning rate | 0.1 |
| Ensemble | XGBoost | Maximum depth | 3 |
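The settings in the table above can be written down as model constructors. What follows is a minimal sketch, assuming that scikit-learn regressors stand in for the listed models; the mapping from LR, SVM, KNN, ANN, Bagging, GBM, ET, and RF to these classes is an assumption and is not confirmed by the source. XGBoost is left out because it is distributed as a separate package. The helper name `build_models` and the `random_state` argument are hypothetical, and every argument not listed in the table stays at the library default.

```python
from sklearn.ensemble import (
    BaggingRegressor,
    ExtraTreesRegressor,
    GradientBoostingRegressor,
    RandomForestRegressor,
)
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor
from sklearn.neural_network import MLPRegressor
from sklearn.svm import SVR


def build_models(random_state=0):
    """Return regressors configured with the tabulated hyperparameters.

    random_state is an illustrative assumption added for repeatability;
    the table does not report a seed.
    """
    return {
        # Standalone models
        "LR": LinearRegression(fit_intercept=False),
        # gamma is accepted by SVR but has no effect with a linear kernel
        "SVM": SVR(kernel="linear", C=1.0, gamma=0.1),
        "KNN": KNeighborsRegressor(n_neighbors=10, weights="uniform"),
        # "Hidden layer sizes = 100" is read here as one hidden layer of 100 units
        "ANN": MLPRegressor(
            hidden_layer_sizes=(100,), activation="relu", random_state=random_state
        ),
        # Ensemble models
        "Bagging": BaggingRegressor(n_estimators=20, random_state=random_state),
        "GBM": GradientBoostingRegressor(
            learning_rate=0.1, n_estimators=100, random_state=random_state
        ),
        "ET": ExtraTreesRegressor(n_estimators=100, random_state=random_state),
        "RF": RandomForestRegressor(
            n_estimators=100, max_depth=3, random_state=random_state
        ),
    }
```

Each value in the returned dictionary exposes the usual `fit(X, y)` and `predict(X)` methods, so the same loop can train every model on an array with one EC column and one temperature column.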

| Training (Sample Size =1928) | Testing (Sample Size = 483) | External Validation (Unseen Data) (Sample Size = 553) | |||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model | R2 | RMSE (mg/L) | MAE (mg/L) | PMARE (%) | SI | NSE | PBIAS (%) | R2 | RMSE (mg/L) | MAE (mg/L) | PMARE (%) | SI | NSE | PBIAS (%) | R2 | RMSE (mg/L) | MAE (mg/L) | PMARE (%) | SI | NSE | PBIAS (%) |

| LR | 0.80 | 34.77 | 20.40 | 3.06 | 0.05 | 0.80 | −0.25 | 0.83 | 34.28 | 20.18 | 3.04 | 0.05 | 0.83 | −0.09 | 0.82 | 33.09 | 18.72 | 2.87 | 0.05 | 0.82 | 0.11 |

| SVM | 0.80 | 35.35 | 19.50 | 2.90 | 0.06 | 0.80 | 0.40 | 0.83 | 34.61 | 19.01 | 2.83 | 0.05 | 0.83 | 0.62 | 0.81 | 34.40 | 18.86 | 2.87 | 0.05 | 0.81 | 0.97 |

| KNN | 0.84 | 31.76 | 19.21 | 2.88 | 0.05 | 0.84 | −0.23 | 0.81 | 36.98 | 22.51 | 3.38 | 0.06 | 0.81 | −0.07 | 0.80 | 35.12 | 21.18 | 3.24 | 0.06 | 0.80 | 0.09 |

| ANN | 0.80 | 34.82 | 20.18 | 3.03 | 0.05 | 0.80 | −0.27 | 0.83 | 34.35 | 19.70 | 2.95 | 0.05 | 0.83 | 0.06 | 0.82 | 33.33 | 18.80 | 2.88 | 0.05 | 0.82 | 0.09 |

| Bagging | 0.97 | 14.57 | 8.47 | 1.27 | 0.02 | 0.97 | −0.11 | 0.81 | 36.41 | 22.67 | 3.42 | 0.06 | 0.81 | −0.06 | 0.78 | 36.68 | 22.19 | 3.39 | 0.06 | 0.78 | −0.03 |

| GBM | 0.87 | 28.08 | 17.28 | 2.61 | 0.04 | 0.87 | −0.19 | 0.84 | 33.88 | 20.14 | 3.02 | 0.05 | 0.84 | | 0.81 | 34.52 | 20.71 | 3.19 | 0.06 | 0.81 | 0.02 |

| ET | 1.00 | 2.28 | 0.14 | 0.02 | 0.00 | 1.00 | 0.00 | 0.80 | 37.35 | 22.67 | 3.42 | 0.06 | 0.80 | −0.08 | 0.77 | 37.68 | 22.26 | 3.40 | 0.06 | 0.77 | 0.06 |

| RF | 0.82 | 33.70 | 20.44 | 3.07 | 0.05 | 0.82 | −0.28 | 0.81 | 36.84 | 21.79 | 3.24 | 0.06 | 0.81 | 0.06 | 0.80 | 35.25 | 22.13 | 3.42 | 0.06 | 0.80 | −0.30 |

| XGBoost | 0.87 | 28.87 | 17.54 | 2.64 | 0.05 | 0.87 | −0.20 | 0.84 | 33.98 | 20.19 | 3.02 | 0.05 | 0.84 | 0.08 | 0.81 | 34.19 | 20.51 | 3.16 | 0.05 | 0.81 | −0.01 |
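For readers who want to compute comparable metrics on their own predictions, the sketch below uses commonly cited forms of the seven measures in the table above. These forms are assumptions: the paper's exact definitions are given in Section 2.3.4, and the sign convention for PBIAS in particular varies between sources. R² is taken here as the squared Pearson correlation, and SI as RMSE divided by the mean observed value. The function name `evaluation_metrics` is hypothetical.

```python
import math


def evaluation_metrics(observed, predicted):
    """Return common goodness-of-fit metrics for paired observations.

    Assumed forms (not confirmed against the paper's definitions):
      PMARE = 100/n * sum(|obs - pred| / obs)
      SI    = RMSE / mean(obs)
      NSE   = 1 - SSE / SST
      PBIAS = 100 * sum(obs - pred) / sum(obs)
    """
    n = len(observed)
    mean_obs = sum(observed) / n
    mean_pred = sum(predicted) / n
    residuals = [o - p for o, p in zip(observed, predicted)]

    sse = sum(r * r for r in residuals)                     # sum of squared errors
    sst = sum((o - mean_obs) ** 2 for o in observed)        # variability of observations
    spp = sum((p - mean_pred) ** 2 for p in predicted)      # variability of predictions
    cov = sum((o - mean_obs) * (p - mean_pred)
              for o, p in zip(observed, predicted))

    rmse = math.sqrt(sse / n)
    return {
        "R2": cov ** 2 / (sst * spp),                       # squared Pearson correlation
        "RMSE": rmse,
        "MAE": sum(abs(r) for r in residuals) / n,
        "PMARE": 100 / n * sum(abs(r) / o for r, o in zip(residuals, observed)),
        "SI": rmse / mean_obs,
        "NSE": 1 - sse / sst,
        "PBIAS": 100 * sum(residuals) / sum(observed),
    }


# Illustrative TDS values in mg/L (made up for the example, not from the study).
example = evaluation_metrics([600.0, 620.0, 580.0, 640.0],
                             [610.0, 615.0, 590.0, 630.0])
```

RMSE and MAE carry the units of the input (mg/L here), PMARE and PBIAS are percentages, and the remaining metrics are dimensionless.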

| | Training Ranks (Sample Size = 1928) | | | | | | | | Testing Ranks (Sample Size = 483) | | | | | | | | External Validation Ranks (Unseen Data) (Sample Size = 553) | | | | | | | | Overall Avg. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model | R2 | RMSE | MAE | PMARE | SI | NSE | PBIAS | Avg. | R2 | RMSE | MAE | PMARE | SI | NSE | PBIAS | Avg. | R2 | RMSE | MAE | PMARE | SI | NSE | PBIAS | Avg. | |

| LR | 7 | 7 | 8 | 8 | 7 | 7 | 6 | 7.1 | 3 | 3 | 4 | 5 | 4 | 3 | 8 | 4.3 | 1 | 1 | 1 | 2 | 1 | 1 | 7 | 2.0 | 4.5 |

| SVM | 9 | 9 | 6 | 6 | 9 | 9 | 9 | 8.1 | 5 | 5 | 1 | 1 | 3 | 5 | 9 | 4.1 | 4 | 4 | 3 | 1 | 3 | 4 | 9 | 4.0 | 5.4 |

| KNN | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5.0 | 8 | 8 | 7 | 7 | 8 | 8 | 5 | 7.3 | 6 | 6 | 6 | 6 | 6 | 6 | 5 | 5.9 | 6.0 |

| ANN | 8 | 8 | 7 | 7 | 8 | 8 | 7 | 7.6 | 4 | 4 | 2 | 2 | 5 | 4 | 2 | 3.3 | 2 | 2 | 2 | 3 | 2 | 2 | 6 | 2.7 | 4.5 |

| Bagging | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2.0 | 6 | 6 | 8 | 8 | 6 | 6 | 3 | 6.1 | 8 | 8 | 8 | 7 | 8 | 8 | 3 | 7.1 | 5.1 |

| GBM | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3.0 | 1 | 1 | 3 | 4 | 1 | 1 | 1 | 1.7 | 5 | 5 | 5 | 5 | 5 | 5 | 2 | 4.6 | 3.1 |

| ET | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1.0 | 9 | 9 | 9 | 9 | 9 | 9 | 7 | 8.7 | 9 | 9 | 9 | 8 | 9 | 9 | 4 | 8.1 | 6.0 |

| RF | 6 | 6 | 9 | 9 | 6 | 6 | 8 | 7.1 | 7 | 7 | 6 | 6 | 7 | 7 | 4 | 6.3 | 7 | 7 | 7 | 9 | 7 | 7 | 8 | 7.4 | 7.0 |

| XGBoost | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4.0 | 2 | 2 | 5 | 3 | 2 | 2 | 6 | 3.1 | 3 | 3 | 4 | 4 | 4 | 3 | 1 | 3.1 | 3.4 |
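The averaged ranks in the table above can be reproduced with a few lines of arithmetic. The sketch below assumes that each "Avg." column is the mean of the seven metric ranks for that data split and that the overall average is the mean of the three split averages; the rank values are copied from the table for three of the nine models, and the helper name `average_ranks` is hypothetical.

```python
# Ranks copied from the table above, in the order
# R2, RMSE, MAE, PMARE, SI, NSE, PBIAS (1 = best of nine models).
RANKS = {
    "LR": {
        "training": [7, 7, 8, 8, 7, 7, 6],
        "testing": [3, 3, 4, 5, 4, 3, 8],
        "external": [1, 1, 1, 2, 1, 1, 7],
    },
    "GBM": {
        "training": [3, 3, 3, 3, 3, 3, 3],
        "testing": [1, 1, 3, 4, 1, 1, 1],
        "external": [5, 5, 5, 5, 5, 5, 2],
    },
    "XGBoost": {
        "training": [4, 4, 4, 4, 4, 4, 4],
        "testing": [2, 2, 5, 3, 2, 2, 6],
        "external": [3, 3, 4, 4, 4, 3, 1],
    },
}


def average_ranks(model_ranks):
    """Return (per-split mean rank, mean of the split means)."""
    split_avg = {split: sum(r) / len(r) for split, r in model_ranks.items()}
    overall = sum(split_avg.values()) / len(split_avg)
    return split_avg, overall


summary = {}
for model, model_ranks in RANKS.items():
    split_avg, overall = average_ranks(model_ranks)
    # Round only for display, after averaging the unrounded split means.
    summary[model] = {split: round(v, 1) for split, v in split_avg.items()}
    summary[model]["overall"] = round(overall, 1)

# The model with the lowest overall average rank among those listed.
best = min(summary, key=lambda name: summary[name]["overall"])
```

Under these assumptions the rounded values match the table for the three models shown, with GBM having the lowest overall average rank.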

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Adjovu, G.E.; Stephen, H.; Ahmad, S. A Machine Learning Approach for the Estimation of Total Dissolved Solids Concentration in Lake Mead Using Electrical Conductivity and Temperature. Water 2023, 15, 2439. https://doi.org/10.3390/w15132439