The Use of Three-Dimensional Images and Food Descriptions from a Smartphone Device Is Feasible and Accurate for Dietary Assessment

Abstract

1. Introduction

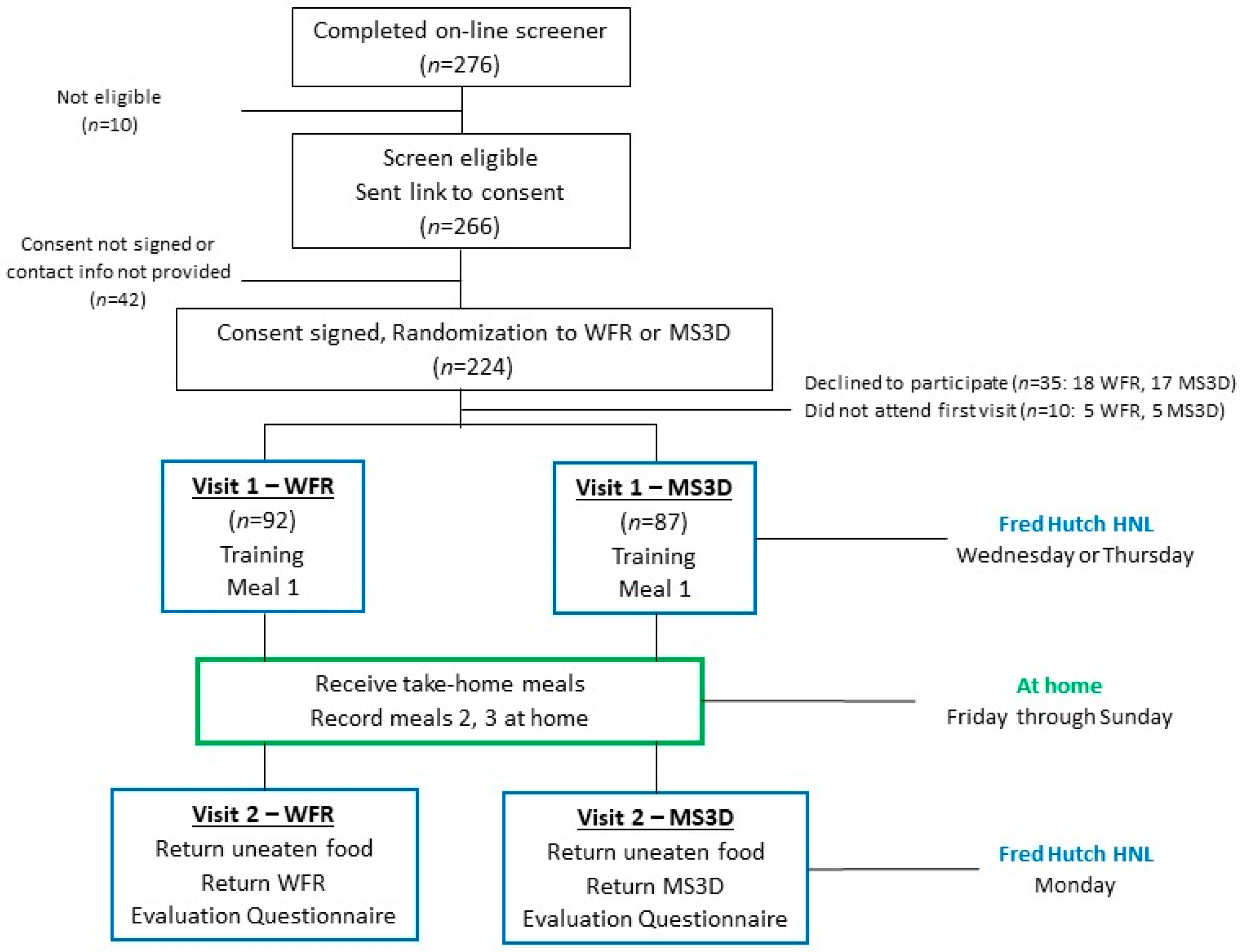

2. Materials and Methods

2.1. Study Participants

2.2. Participant Activities

2.3. MealScan3D System

2.4. Written Food Record

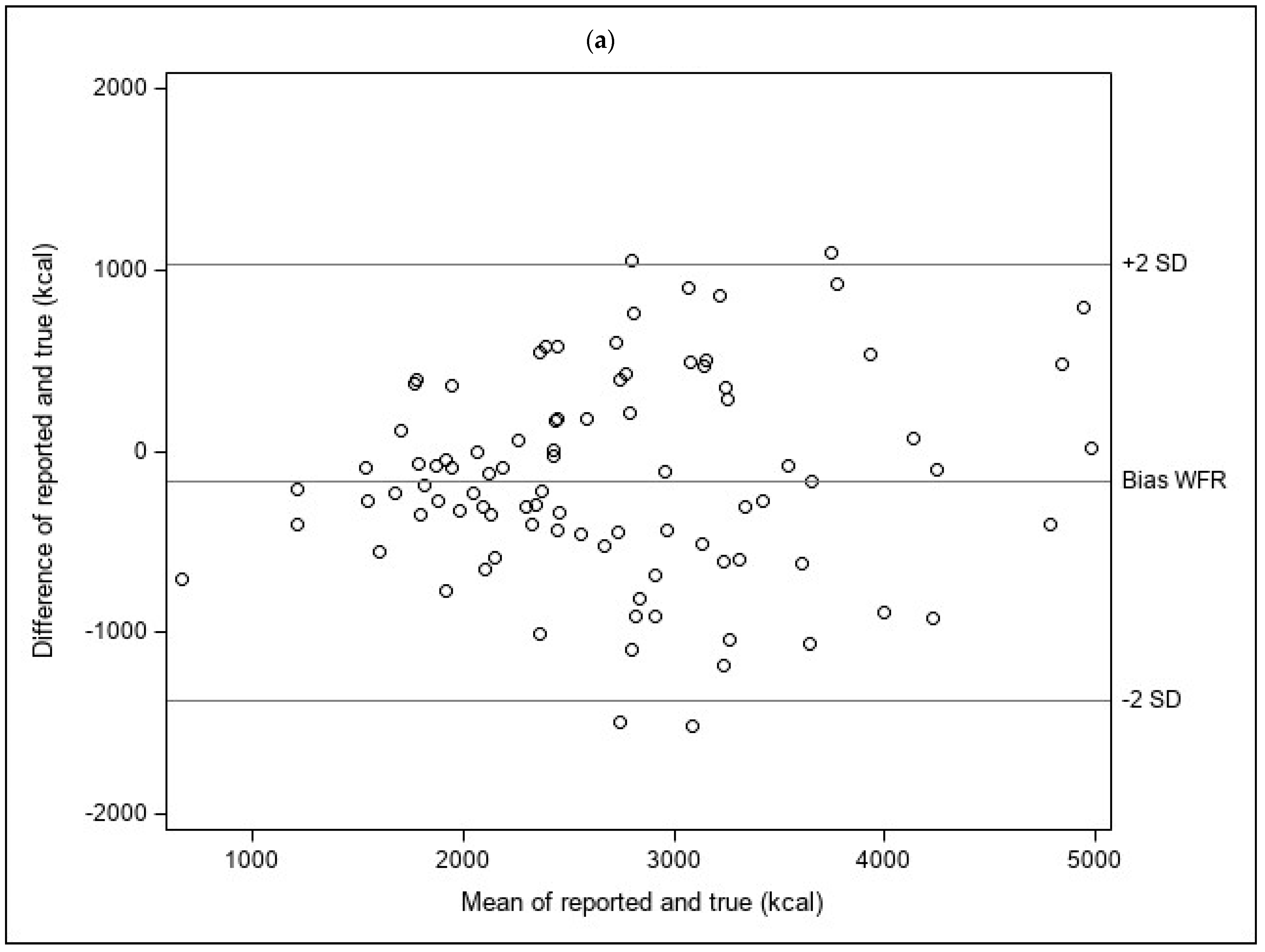

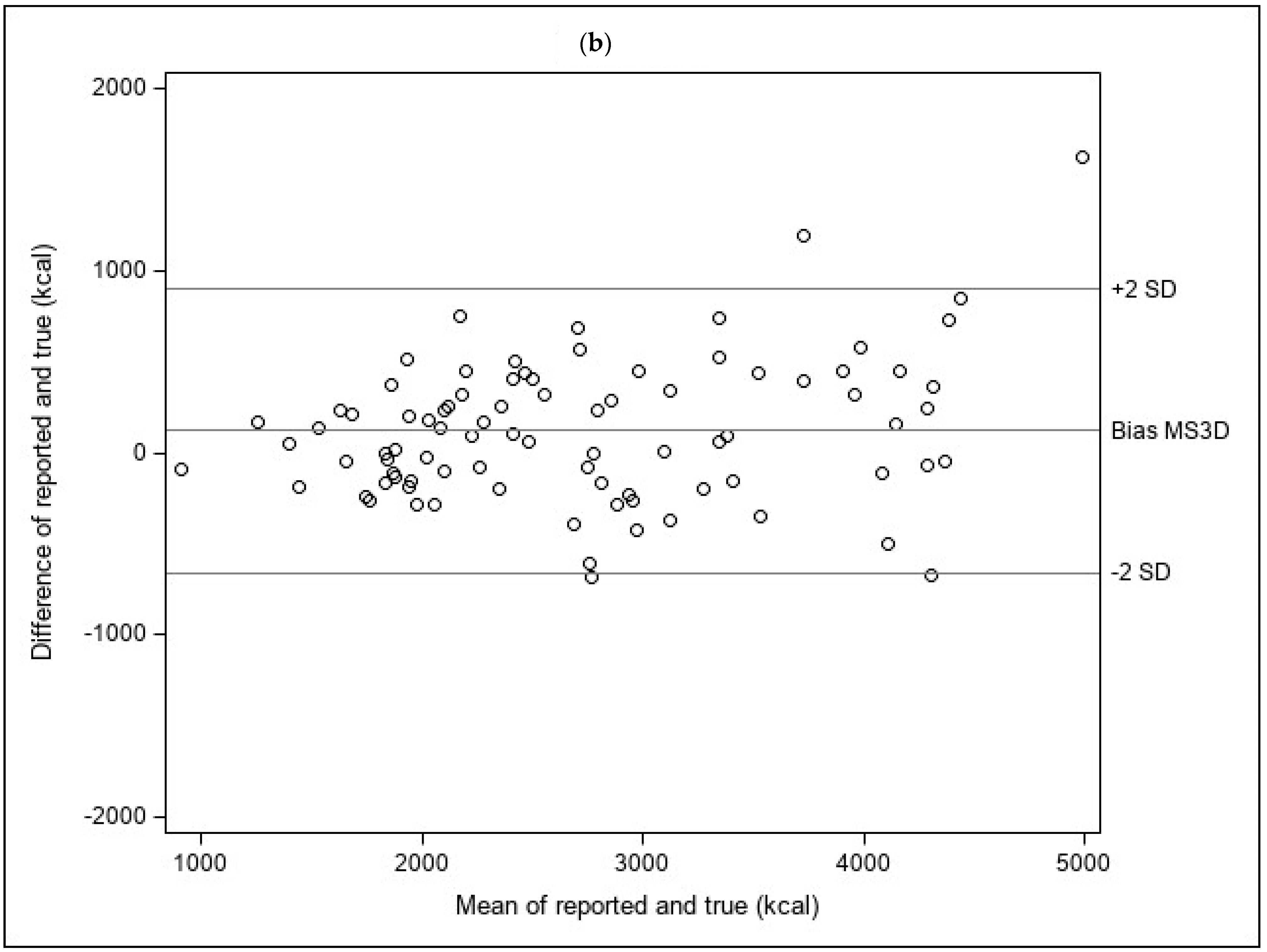

2.5. MealScan3D Data Processing

2.6. True Intake

2.7. Energy

2.8. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- MealScan3D food volume estimation methods

- 3D Scanning

- Segmentation

- Reconstruction

- Volume Estimation

| | MS3D (n = 87) | WFR (n = 92) | p-Value | Total (n = 179) |
|---|---|---|---|---|
| | Mean ± SD | Mean ± SD | | Mean ± SD |
| Age (yrs) | 33.8 ± 11.0 | 35.1 ± 12.2 | 0.31 | 33.6 ± 11.5 |
| | n (%) | n (%) | | n (%) |
| Sex | | | | |
| Male | 18 (20.7) | 31 (33.7) | 0.05 | 49 (27.4) |
| Female | 69 (79.3) | 61 (66.3) | | 130 (72.6) |
| Education | | | | |
| High School or Less | 12 (13.8) | 12 (13.0) | 0.14 | 24 (13.4) |
| College | 57 (65.5) | 49 (53.3) | | 106 (59.6) |
| Graduate/Professional | 18 (20.7) | 31 (33.7) | | 49 (27.4) |
| Responsible for Food Shopping | | | | |
| All or most | 61 (70.1) | 64 (70.0) | 0.98 | 125 (69.8) |
| Some or None | 26 (29.9) | 24 (30.0) | | 54 (31.2) |
| Responsible for Food Preparation | | | | |
| All or most | 57 (66.3) | 60 (65.9) | 0.96 | 117 (66.1) |
| Some or None | 29 (33.7) | 31 (34.1) | | 60 (33.9) |
| Responsible for Meal Planning | | | | |
| All or most | 60 (69.8) | 61 (67.0) | 0.70 | 121 (68.4) |
| Some or None | 26 (30.2) | 30 (33.0) | | 56 (31.6) |
| Ethnicity | | | | |
| Hispanic or Latino | 5 (5.8) | 11 (12.0) | 0.15 | 16 (9.0) |
| Not Hispanic or Latino | 81 (94.2) | 81 (88.0) | | 162 (91.0) |
| Race | | | | |
| Asian | 20 (23.0) | 18 (19.6) | 0.34 | 38 (21.6) |
| White | 53 (60.9) | 61 (66.3) | | 114 (64.8) |
| Other | 12 (16.1) | 10 (12.1) | | 24 (13.6) |

| | MS3D: n | MS3D: True Mean ± SD | MS3D: Reported Mean ± SD | MS3D: Difference Mean ± SE | WFR: n | WFR: True Mean ± SD | WFR: Reported Mean ± SD | WFR: Difference Mean ± SE | p-Value for Absolute Difference between Arms |
|---|---|---|---|---|---|---|---|---|---|
| Total Energy a (kcal) | 87 | 2671 ± 879 | 2791 ± 981 | 120 ± 42 c | 92 | 2800 ± 884 | 2628 ± 928 | −171 ± 63 c | <0.0001 |
| Meal 1 | | | | | | | | | |
| Lasagna (g) | 87 | 221.9 ± 93.4 | 300.7 ± 139.5 | 78.7 ± 6.3 d | 92 | 240.7 ± 97.3 | 290.5 ± 155.8 | 49.8 ± 14.8 c | 0.07 |
| Broccoli (g) | 86 | 78.4 ± 36.4 | 83.5 ± 38.9 | 5.1 ± 1.8 c | 91 | 75.7 ± 31.7 | 164.0 ± 85.2 | 88.3 ± 7.1 d | <0.0001 |
| Garlic Bread (g) | 86 | 77.3 ± 39.2 | 64.8 ± 34.9 | −12.5 ± 1.8 d | 89 | 75.7 ± 34.6 | 55.9 ± 36.8 | −19.7 ± 4.0 d | 0.10 |
| Meal 2 | | | | | | | | | |
| Chicken (g) | 85 | 129.5 ± 63.0 | 174.3 ± 91.4 | 44.8 ± 5.4 d | 91 | 139.2 ± 62.0 | 154.5 ± 70.5 | 15.3 ± 5.9 b | <0.001 |
| BBQ Sauce (g) | 53 | 43.9 ± 28.9 | 59.7 ± 34.3 | 15.7 ± 2.9 d | 80 | 49.4 ± 53.9 | 52.1 ± 61.6 | 2.7 ± 5.1 | 0.03 |
| Green Beans (g) | 83 | 123.9 ± 65.9 | 127.3 ± 71.3 | 3.4 ± 3.5 | 90 | 128.6 ± 68.1 | 165.6 ± 99.8 | 37.0 ± 6.2 d | <0.0001 |
| Mashed Potatoes (g) | 85 | 172.6 ± 80.7 | 200.7 ± 95.9 | 28.1 ± 4.0 d | 90 | 177.3 ± 82.1 | 252.7 ± 163.1 | 75.4 ± 11.5 d | <0.001 |
| Butter (g) | 41 | 6.5 ± 4.9 | 19.4 ± 12.2 | 12.9 ± 1.6 d | 61 | 8.8 ± 6.5 | 12.9 ± 10.8 | 4.1 ± 1.1 d | <0.0001 |
| Cookies (g) | 76 | 68.4 ± 22.3 | 96.5 ± 40.4 | 28.0 ± 3.4 d | 86 | 63.8 ± 22.7 | 39.2 ± 39.7 | −24.6 ± 4.2 d | 0.52 |
| Meal 3 | | | | | | | | | |
| Pork Loin (g) | 83 | 127.9 ± 61.5 | 177.4 ± 89.9 | 49.5 ± 4.5 d | 91 | 134.8 ± 65.2 | 147.1 ± 76.0 | 12.3 ± 5.8 b | <0.0001 |
| Apple cider glaze (g) | 51 | 37.4 ± 24.0 | 38.2 ± 25.2 | 0.8 ± 2.9 | 83 | 34.6 ± 26.3 | 37.2 ± 37.3 | 2.6 ± 3.2 | 0.69 |
| Roasted Carrots (g) | 85 | 138.9 ± 76.4 | 134.3 ± 83.3 | −4.6 ± 3.1 | 91 | 138.7 ± 74.8 | 164.6 ± 101.3 | 25.9 ± 6.8 d | 0.005 |
| Orzo (g) | 86 | 126.4 ± 61.8 | 123.5 ± 64.3 | −2.9 ± 3.7 | 91 | 135.6 ± 66.2 | 160.3 ± 90.7 | 24.7 ± 6.2 d | 0.003 |
| Butter (g) | 25 | 7.2 ± 5.1 | 19.4 ± 12.5 | 12.2 ± 2.1 d | 34 | 7.2 ± 6.2 | 10.1 ± 7.2 | 2.9 ± 1.3 b | <0.001 |
| Rice Pudding (g) | 64 | 133.7 ± 87.1 | 140.2 ± 91.5 | 6.5 ± 4.0 | 83 | 117.4 ± 80.4 | 161.2 ± 160.0 | 43.9 ± 10.1 d | <0.001 |
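The "Difference" columns in the intake table are reported intake minus true intake, so a positive value means over-reporting. The short Python sketch below recomputes that difference from the group means for a few rows, and adds a relative bias in percent. The relative bias is an illustrative quantity that this document does not report, and the names `ROWS`, `mean_difference`, `relative_bias_pct`, and `summarize` are hypothetical. Because the inputs are rounded table means, a recomputed difference can differ from the tabulated one in the last digit (for example, −172 rather than −171 kcal for WFR total energy).

```python
# Group means copied from the intake table: (true mean, reported mean).
# Energy is in kcal, food weights are in grams.
ROWS = {
    "Total Energy (kcal)": {"MS3D": (2671.0, 2791.0), "WFR": (2800.0, 2628.0)},
    "Broccoli (g)": {"MS3D": (78.4, 83.5), "WFR": (75.7, 164.0)},
    "Green Beans (g)": {"MS3D": (123.9, 127.3), "WFR": (128.6, 165.6)},
}


def mean_difference(true_mean, reported_mean):
    """Reported minus true; positive values indicate over-reporting."""
    return reported_mean - true_mean


def relative_bias_pct(true_mean, reported_mean):
    """Illustrative only (not in the source): difference as a percent of true intake."""
    return 100.0 * (reported_mean - true_mean) / true_mean


def summarize(rows):
    """Return {(item, arm): (difference, relative bias %)}, rounded to 1 decimal."""
    summary = {}
    for item, arms in rows.items():
        for arm, (true_mean, reported_mean) in arms.items():
            summary[(item, arm)] = (
                round(mean_difference(true_mean, reported_mean), 1),
                round(relative_bias_pct(true_mean, reported_mean), 1),
            )
    return summary


if __name__ == "__main__":
    for (item, arm), (diff, pct) in summarize(ROWS).items():
        print(f"{item:22s} {arm:5s} diff={diff:+8.1f}  bias={pct:+6.1f}%")
```

Read this way, the MS3D arm over-reports total energy by roughly 4 to 5 percent of true intake, while the WFR arm under-reports by about 6 percent.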

| | Validity (Pearson Correlation) b: MS3D | Validity (Pearson Correlation) b: WFR | p-Value for Difference | Regression c Intercept d ± SE: MS3D | Regression c Intercept d ± SE: WFR | Regression c Slope ± SE: MS3D | Regression c Slope ± SE: WFR | p-Value for Difference f |
|---|---|---|---|---|---|---|---|---|
| Total Energy e (kcal) | 0.92 | 0.77 | <0.0001 | 2613 ± 50 | 2869 ± 49 | 0.82 ± 0.05 | 0.74 ± 0.05 | 0.28 |
| Meal 1 | | | | | | | | |
| Lasagna (g) | 0.95 | 0.45 | <0.0001 | 218.6 ± 7.1 | 242.1 ± 6.9 | 0.63 ± 0.05 | 0.28 ± 0.04 | <0.0001 |
| Broccoli (g) | 0.91 | 0.68 | <0.0001 | 113.5 ± 3.1 | 65.8 ± 2.3 | 0.85 ± 0.06 | 0.25 ± 0.02 | <0.0001 |
| Garlic Bread (g) | 0.90 | 0.44 | <0.0001 | 72.7 ± 2.7 | 77.5 ± 2.7 | 1.01 ± 0.08 | 0.42 ± 0.07 | <0.0001 |
| Meal 2 | | | | | | | | |
| Chicken (g) | 0.86 | 0.64 | <0.001 | 123.4 ± 4.5 | 144.6 ± 4.3 | 0.59 ± 0.05 | 0.57 ± 0.06 | 0.75 |
| BBQ Sauce (g) | 0.79 | 0.69 | 0.22 | 40.6 ± 3.2 | 50.4 ± 2.6 | 0.66 ± 0.09 | 0.40 ± 0.04 | 0.01 |
| Green Beans (g) | 0.90 | 0.82 | 0.04 | 140.1 ± 4.0 | 118.1 ± 3.7 | 0.83 ± 0.05 | 0.56 ± 0.04 | <0.0001 |
| Mashed Potatoes (g) | 0.93 | 0.80 | <0.001 | 193.4 ± 4.7 | 167.0 ± 4.4 | 0.78 ± 0.05 | 0.40 ± 0.03 | <0.0001 |
| Butter (g) | 0.58 | 0.64 | 0.66 | 5.6 ± 0.8 | 9.8 ± 0.6 | 0.24 ± 0.06 | 0.39 ± 0.06 | 0.07 |
| Cookies (g) | 0.71 | 0.30 | <0.001 | 56.6 ± 2.8 | 68.4 ± 2.5 | 0.39 ± 0.06 | 0.17 ± 0.05 | <0.01 |
| Meal 3 | | | | | | | | |
| Pork Loin (g) | 0.92 | 0.70 | <0.0001 | 117.9 ± 4.2 | 143.4 ± 4.1 | 0.63 ± 0.05 | 0.60 ± 0.05 | 0.68 |
| Apple cider glaze (g) | 0.65 | 0.64 | 0.92 | 37.7 ± 2.7 | 35.3 ± 2.2 | 0.62 ± 0.13 | 0.45 ± 0.06 | 0.18 |
| Roasted Carrots (g) | 0.94 | 0.76 | <0.0001 | 152.4 ± 4.3 | 130.5 ± 4.2 | 0.86 ± 0.05 | 0.56 ± 0.04 | <0.0001 |
| Orzo (g) | 0.85 | 0.76 | 0.08 | 141.8 ± 4.3 | 125.7 ± 4.1 | 0.82 ± 0.06 | 0.55 ± 0.04 | 0.001 |
| Butter (g) | 0.54 | 0.51 | 0.87 | 6.0 ± 1.1 | 8.7 ± 1.0 | 0.22 ± 0.08 | 0.38 ± 0.11 | 0.25 |
| Rice Pudding (g) | 0.94 | 0.92 | 0.41 | 144.3 ± 4.0 | 113.1 ± 3.5 | 0.89 ± 0.04 | 0.46 ± 0.02 | <0.0001 |
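The "p-Value for Difference" column next to the Pearson correlations compares validity between the two study arms. One standard way to compare correlations from two independent groups is the Fisher z-transformation test, sketched below in Python. This excerpt does not state which test the authors used, so treat the sketch as an assumption. The function name `fisher_z_test` is hypothetical, and the per-arm sample sizes come from the `n` columns of the intake table earlier in this document. Because the inputs are correlations rounded to two decimals, the computed p-values are approximate.

```python
import math


def fisher_z_test(r1, n1, r2, n2):
    """Two-sided test that two independent Pearson correlations are equal.

    Each correlation is mapped to z = atanh(r), which is approximately
    normal with variance 1 / (n - 3). Returns (z statistic, p-value).
    """
    z1 = math.atanh(r1)
    z2 = math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z_stat = (z1 - z2) / se
    # Two-sided normal tail probability: P(|Z| > |z_stat|).
    p_value = math.erfc(abs(z_stat) / math.sqrt(2.0))
    return z_stat, p_value


if __name__ == "__main__":
    # (label, r_MS3D, n_MS3D, r_WFR, n_WFR) taken from the tables in this document.
    rows = [
        ("Total Energy", 0.92, 87, 0.77, 92),
        ("BBQ Sauce", 0.79, 53, 0.69, 80),
        ("Butter (Meal 2)", 0.58, 41, 0.64, 61),
    ]
    for label, r1, n1, r2, n2 in rows:
        z_stat, p_value = fisher_z_test(r1, n1, r2, n2)
        print(f"{label:16s} z={z_stat:+.2f}  p={p_value:.4f}")
```

Under these assumptions the test gives a clearly significant difference for total energy and non-significant differences for BBQ sauce and butter, which matches the pattern in the table.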

| | Pearson Correlation b: Scanner-Measured | Pearson Correlation b: Participant-Estimated | p-Value for Difference | Regression c Intercept d ± SE: Scanner-Measured | Regression c Intercept d ± SE: Participant-Estimated | Regression c Slope ± SE: Scanner-Measured | Regression c Slope ± SE: Participant-Estimated | p-Value for Difference f |
|---|---|---|---|---|---|---|---|---|
| Total Energy e (kcal) | 0.92 | 0.81 | <0.001 | 2619 ± 38 | 2821 ± 57 | 0.72 ± 0.04 | 0.63 ± 0.05 | 0.06 |
| Meal 1 | | | | | | | | |
| Lasagna (g) | 0.95 | 0.71 | <0.001 | 218.1 ± 3.2 | 224.5 ± 7.1 | 0.60 ± 0.02 | 0.41 ± 0.05 | <0.001 |
| Broccoli (g) | 0.91 | 0.73 | <0.001 | 80.7 ± 1.7 | 76.6 ± 2.7 | 0.74 ± 0.04 | 0.58 ± 0.07 | 0.02 |
| Garlic Bread (g) | 0.90 | 0.74 | <0.001 | 73.7 ± 1.8 | 80.4 ± 2.9 | 0.87 ± 0.05 | 0.75 ± 0.09 | 0.15 |
| Meal 2 | | | | | | | | |
| Chicken (g) | 0.86 | 0.73 | <0.01 | 121.9 ± 3.6 | 134.5 ± 4.8 | 0.53 ± 0.04 | 0.47 ± 0.06 | 0.36 |
| BBQ Sauce (g) | 0.79 | 0.42 | <0.001 | 49.7 ± 2.6 | 45.8 ± 3.4 | 0.47 ± 0.06 | 0.18 ± 0.06 | <0.001 |
| Green Beans (g) | 0.90 | 0.79 | 0.001 | 138.4 ± 3.3 | 113.8 ± 4.6 | 0.73 ± 0.04 | 0.44 ± 0.04 | <0.001 |
| Mashed Potatoes (g) | 0.93 | 0.81 | <0.001 | 185.3 ± 3.3 | 164.1 ± 5.3 | 0.71 ± 0.04 | 0.44 ± 0.04 | <0.001 |
| Butter (g) | 0.58 | 0.71 | 0.26 | 6.0 ± 0.7 | 6.7 ± 0.6 | 0.11 ± 0.04 | 0.11 ± 0.04 | 0.83 |
| Cookies (g) | 0.71 | 0.42 | <0.001 | 59.8 ± 2.1 | 78.7 ± 5.2 | 0.24 ± 0.03 | 0.29 ± 0.12 | 0.59 |
| Meal 3 | | | | | | | | |
| Pork Loin (g) | 0.92 | 0.77 | <0.001 | 119.5 ± 2.7 | 136.4 ± 4.3 | 0.59 ± 0.03 | 0.58 ± 0.05 | 0.79 |
| Apple cider glaze (g) | 0.65 | 0.39 | 0.06 | 41.1 ± 2.5 | 37.2 ± 3.2 | 0.35 ± 0.07 | 0.07 ± 0.06 | <0.001 |
| Roasted Carrots (g) | 0.94 | 0.85 | <0.001 | 154.4 ± 2.9 | 123.9 ± 4.6 | 0.81 ± 0.03 | 0.63 ± 0.05 | <0.001 |
| Orzo (g) | 0.85 | 0.79 | 0.09 | 138.4 ± 3.6 | 117.1 ± 4.3 | 0.64 ± 0.05 | 0.43 ± 0.04 | <0.001 |
| Butter (g) | 0.54 | 0.77 | 0.11 | 6.9 ± 0.9 | 8.0 ± 0.8 | 0.10 ± 0.05 | 0.19 ± 0.06 | 0.12 |
| Rice Pudding (g) | 0.94 | 0.74 | <0.001 | 146.3 ± 3.9 | 122.1 ± 7.7 | 0.78 ± 0.04 | 0.46 ± 0.06 | <0.001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Schenk, J.M.; Boynton, A.; Kulik, P.; Zyuzin, A.; Neuhouser, M.L.; Kristal, A.R. The Use of Three-Dimensional Images and Food Descriptions from a Smartphone Device Is Feasible and Accurate for Dietary Assessment. Nutrients 2024, 16, 828. https://doi.org/10.3390/nu16060828
