5.2. Comparison with State-of-the-Art Methods

We conduct comprehensive comparisons between our proposed MSSA approach and five established cross-modal retrieval methods (MTFN [43], SCAN [36], VSE++ [44], CAMERA [45], and CAMP [46]), as well as 16 specialized RSITR methods (AMFMN [13], SWAN [12], HyperMatch [47], GaLR [15], SMLGN [48], MCRN [49], CABIR [10], HVSA [50], SSJDN [14], LW-MCR [51], KAMCL [52], MGRM [53], MSITA [54], MSA [17], GHSCN [38], and PGRN [19]).

SCAN infers image–text similarity by assigning variable weights to different image regions and words, which provides context for matching. VSE++ improves image–text retrieval by emphasizing hard negative samples through the max-of-hinges (MH) loss. CAMP fuses text and image features through adaptive cross-modal message passing, improving retrieval accuracy. MTFN employs a multi-modal tensor fusion network to project the features of different modalities into multiple tensor spaces. CAMERA adaptively captures the flow of inter-modal context information from different perspectives for multi-modal retrieval. AMFMN is an RSITR method built on fine-grained, multi-scale features. GaLR is an RSITR method that combines global and local information. MCRN addresses multi-source (image, text, and audio) cross-modal retrieval. CABIR addresses a limitation of existing work: interference caused by overlapping semantic attributes in images whose multi-scene semantics are complex. LW-MCR is a lightweight multi-scale RSITR method. SWAN proposes a context-sensitive integration framework that mitigates semantic ambiguity by strengthening contextual awareness. HVSA accounts for the large variance among images and the strong similarity among textual descriptions, addressing the differing levels of matching difficulty across samples. HyperMatch models the relationships among RS image features to improve retrieval accuracy. KAMCL proposes knowledge-assisted momentum contrastive learning for RSITR. SSJDN emphasizes scale and semantic decoupling during matching. MGRM tackles information redundancy and precision degradation in existing methods. MSITA improves retrieval performance by capturing multi-scale salient information.
SMLGN employs dual guidance strategies: intra-modal fusion of hierarchical features and inter-modal bidirectional fine-grained interaction, facilitating the development of a unified semantic representation space. MSA presents a single-scale alignment method to enhance performance based on the previous multi-scale fusion features. GHSCN employs a graph-based hierarchical semantic consistency network, which enhances intra-modal associations and cross-modal interactions between RS images and text through unimodal and cross-modal graph aggregation modules (UGA/CGA). PGRN introduces an end-to-end Prompt-based Granularity-unified Representation Network designed to mitigate cross-modal semantic granularity discrepancies.

From Table 1, it is evident that MSSA performs strongly on the RSITR task on the UCM Caption dataset. In image-to-text retrieval, MSSA achieved an R@1 of 22.38, an R@5 of 60.95, and an R@10 of 79.52. In text-to-image retrieval, it achieved an R@1 of 15.80, an R@5 of 64.76, and an R@10 of 93.52. These results indicate that MSSA retrieves robustly in both directions, whether textual queries are used to locate images or visual content is used to identify the corresponding text. Notably, compared with the classical RSITR method AMFMN, MSSA improves the comprehensive evaluation metric mR by 10.08%. Compared with the lightweight LW-MCR, MSSA achieves a performance increase of 10.81%. SSJDN jointly optimizes scale decoupling and semantic decoupling, yet our method still achieves an improvement of 4.57%. Although SMLGN employs a more complex backbone, our approach still outperforms it, increasing retrieval accuracy by 7.86%. MSA proposes a multi-scale alignment strategy, but our method achieves an improvement of 4.05% over it and surpasses the latest method, PGRN, by 4.1%. These gains underscore the superiority and stability of MSSA and confirm the advantage of the multi-scale semantic guidance proposed in this paper. They also indicate that MSSA effectively mitigates semantic confusion, resulting in improved retrieval accuracy.
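Assuming mR follows the usual RSITR convention (the arithmetic mean of R@1, R@5, and R@10 in both retrieval directions; the definition is not restated in this section), the six UCM Caption recalls above can be combined as in the following Python sketch. The dictionary and function names are illustrative only. The mean comes to about 56.155, which rounds to the 56.16 reported for the full model in the ablation studies.

```python
# MSSA recalls on UCM Caption as quoted in the text (Table 1).
image_to_text = {"R@1": 22.38, "R@5": 60.95, "R@10": 79.52}
text_to_image = {"R@1": 15.80, "R@5": 64.76, "R@10": 93.52}


def mean_recall(i2t: dict, t2i: dict) -> float:
    """mR (assumed definition): mean of the three recalls in each of the two directions."""
    values = list(i2t.values()) + list(t2i.values())
    return sum(values) / len(values)


mr = mean_recall(image_to_text, text_to_image)
print(f"mR = {mr:.3f}")
```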

Table 2 reveals that MSSA achieves the best performance among all compared approaches on the RSITMD dataset on every evaluation metric except R@1 and R@5 in text-to-image retrieval. Even there, its R@1 of 15.09 is only 0.44 lower than that of MSA, placing MSSA second, and its R@5 of 47.21 is only 0.22 lower than that of the most recent method. In terms of the comprehensive metric mR, our method improves on the classical remote sensing image–text retrieval method AMFMN by 15.68%. Compared with GaLR, which is based on global and local information, our method achieves an improvement of 13.99%. MSITA captures multi-scale salient features, but MSSA still improves retrieval accuracy by 10.92%. Compared with the graph-based hierarchical semantic consistency network GHSCN, the mR value increases by 6.39%, and compared with the latest PGRN, MSSA’s mR value is 6.08% higher. These outcomes emphasize the contribution of cross-modal multi-scale feature learning to the improved performance. By effectively fusing features at various levels, MSSA captures deeper relationships between visual and textual features, providing a more comprehensive understanding of their interconnections. This, in turn, strengthens the model’s recognition capability and retrieval accuracy. Consequently, the approach proves both feasible and effective in addressing information asymmetry and feature sparsity in cross-modal retrieval, further validating the necessity and efficacy of multi-scale semantic perception.

On the RSICD dataset, the results of MSSA compared with other methods are presented in Table 3. HyperMatch models the relationships between RS image features to improve retrieval accuracy, and our method achieves a gain of 12.68 over it. The mR value of MSSA is 11.82% higher than that of SWAN. Among recent related methods, MSSA improves on MSA by nearly 10%, demonstrating outstanding performance. Compared with the latest method, PGRN, MSSA achieves gains of 6.31, 6.58, and 10.43 in image-to-text R@1, R@5, and R@10, respectively. In text-to-image retrieval, MSSA outperforms existing methods by 2.29 and 4.1 in R@5 and R@10, respectively. Furthermore, the mR value increases by nearly 5%, highlighting the overall improvement in MSSA’s retrieval performance. These results indicate the significance of incorporating multi-scale semantic information, which enables the model to discern both fine-grained and high-level relationships between visual and textual data and thus to better comprehend and distill complex cross-modal features. The improved retrieval accuracy also demonstrates MSSA’s strong generalization in information-rich, complex RS scenarios.

5.3. Ablation Studies

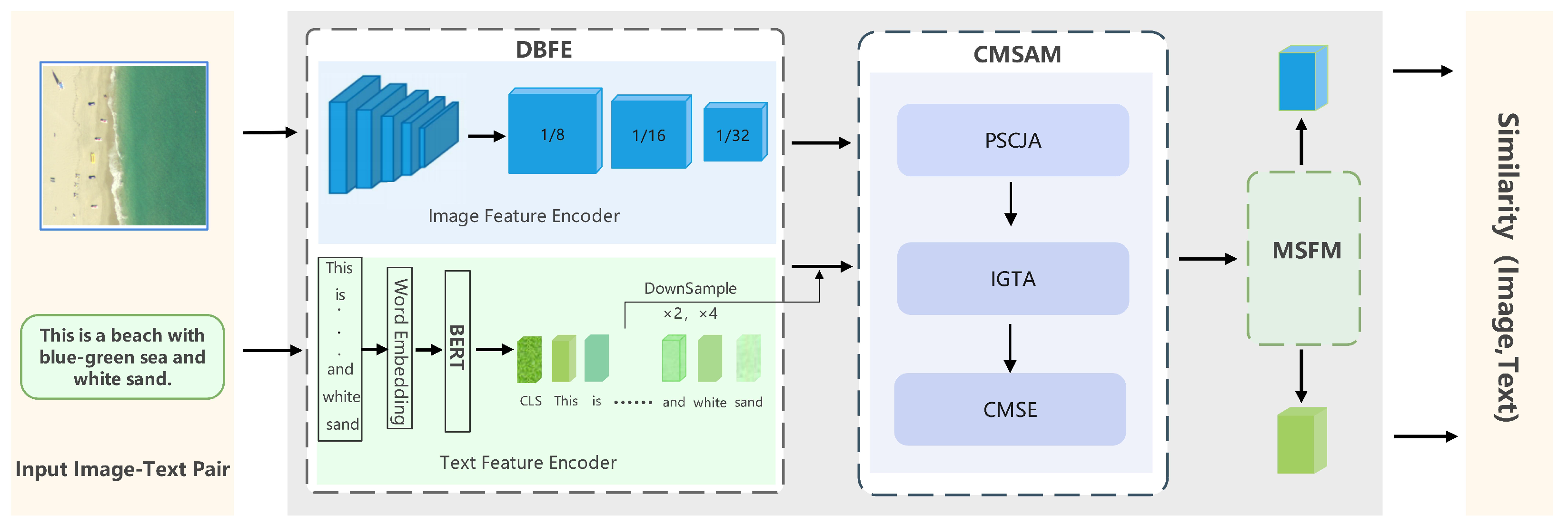

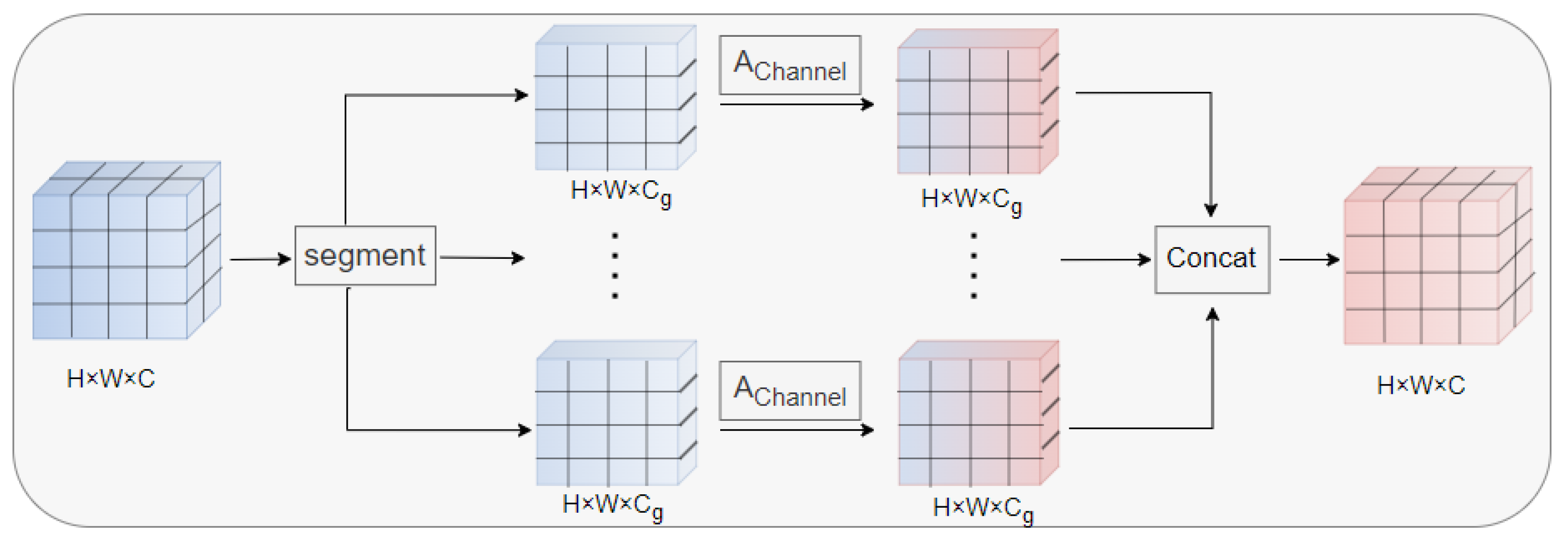

Using the UCM Caption dataset, we performed extensive ablation studies to quantitatively assess the impact of the key components of MSSA. First, we examined the feasibility and effectiveness of the CMSAM module. Next, we compared PSCJA with three alternative attention mechanisms to verify that it effectively captures RS image features, transitioning from local to global context. Furthermore, we assessed the necessity of multi-scale alignment within MSSA and investigated how the number of learnable semantic tokens affects retrieval performance. Lastly, to exclude interference from the text feature encoder, we evaluated various visual backbones, including ResNet models (ResNet18, ResNet50, and ResNet101) and ViT, as image feature extractors.

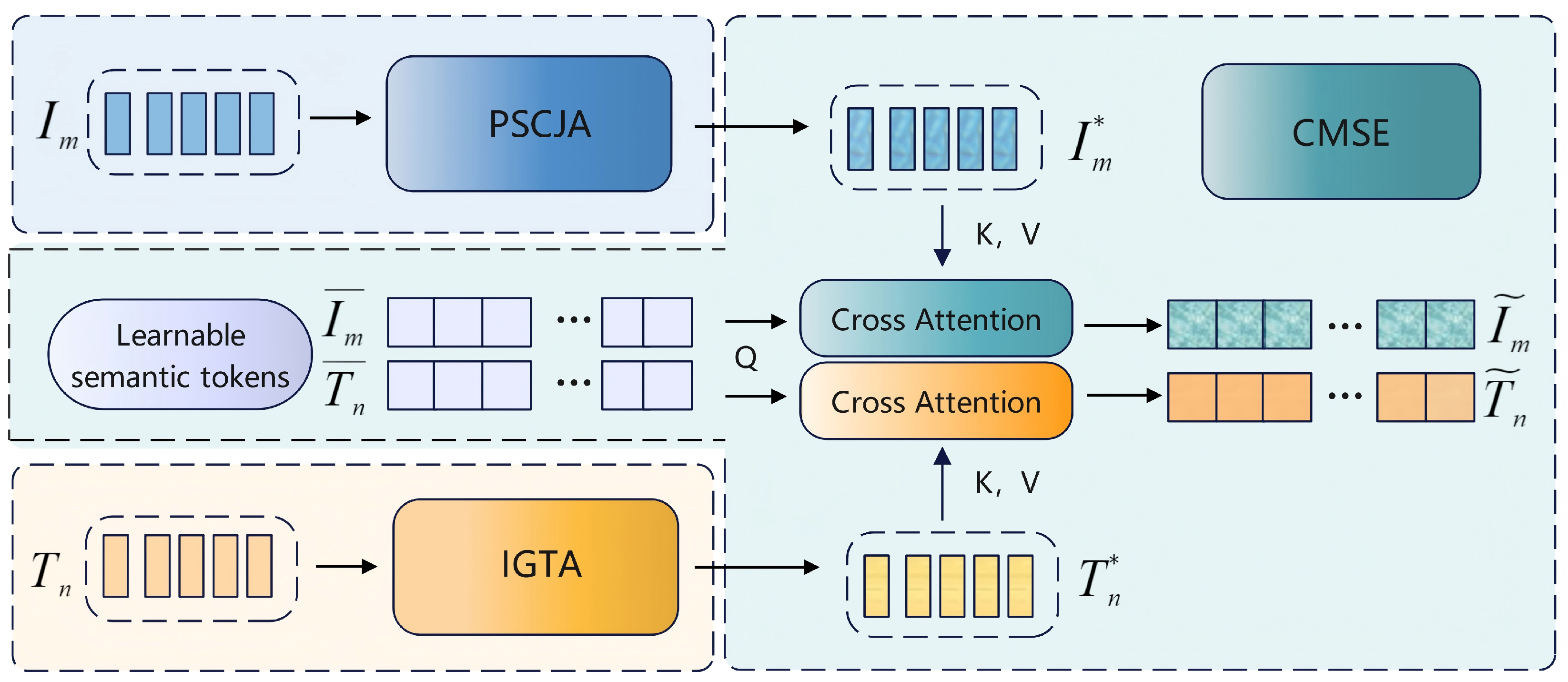

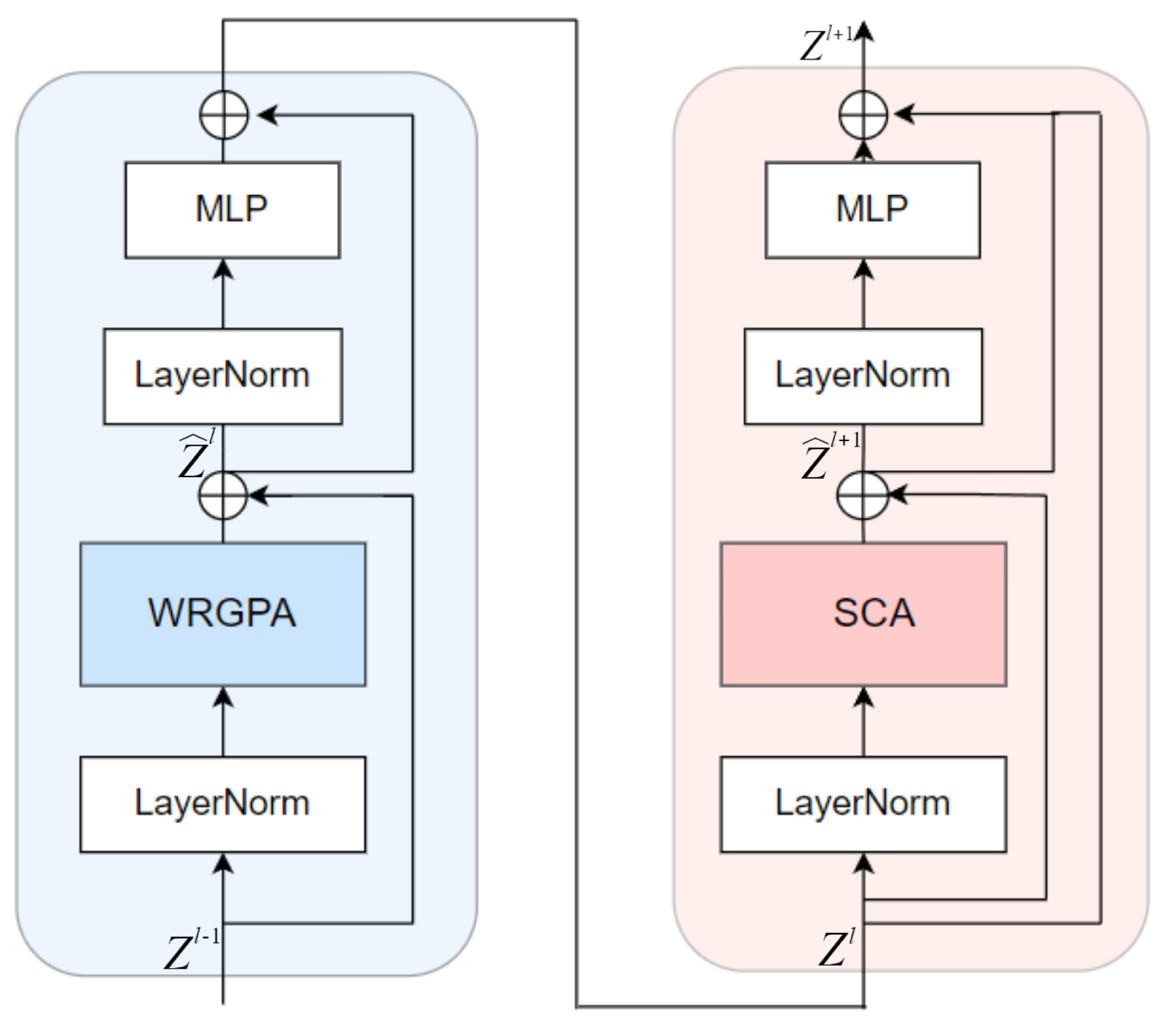

CMSAM is a key module in MSSA and consists of three components: PSCJA, IGTA, and CMSE. To verify how these three components jointly contribute to retrieval performance, we conducted ablation experiments by removing modules in different combinations. In Table 4, a check mark under PSCJA, IGTA, or CMSE indicates that the module is retained; otherwise, the module is removed.

From the results in Table 4, it is evident that when only one module is retained, the overall performance of the model (measured by mR) is limited. Specifically, the mR value is 53.43 with only PSCJA, 53.25 with only IGTA, and 52.69 with only CMSE. When both PSCJA and IGTA are retained to enhance image and text features, the mR value improves by 1.67, 1.85, and 2.41 over these single-module variants, respectively. This indicates that PSCJA and IGTA effectively capture multi-scale RS image and text features, providing key feature representations for cross-modal retrieval. The other two combinations behave differently. PSCJA with CMSE achieves an mR of 55.3 but is limited on text-to-image metrics (e.g., text-to-image R@1), because without IGTA the text features receive no visual guidance. IGTA with CMSE attains an mR of 54.43; it benefits from text optimization but shows insufficient image-to-text precision (e.g., image-to-text R@1), as the image features are not enhanced by PSCJA. When all three modules work together, the mR value reaches 56.16, and image-to-text R@1 and R@5, as well as text-to-image R@1 and R@10, also achieve their best values. Based on this, we speculate that PSCJA and IGTA provide high-quality cross-modal inputs for CMSE, while CMSE further captures deep semantic associations not resolved by the preceding modules. In addition, comparing R@1, R@5, and R@10 across all combinations shows that omitting any module degrades performance, which highlights the necessity of the joint design of the three modules.
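The mR gains quoted above can be cross-checked with a few lines of Python. Adding each reported gain to the corresponding single-module mR should give the same value for the PSCJA + IGTA variant; the sketch below does this and then ranks the configurations whose mR is quoted in the text (other Table 4 columns are not reproduced here).

```python
# mR values quoted in the text for Table 4, keyed by the retained modules.
mr_by_config = {
    ("PSCJA",): 53.43,
    ("IGTA",): 53.25,
    ("CMSE",): 52.69,
    ("PSCJA", "CMSE"): 55.3,
    ("IGTA", "CMSE"): 54.43,
    ("PSCJA", "IGTA", "CMSE"): 56.16,
}
# Reported mR gains of the PSCJA + IGTA variant over each single-module variant.
pair_gain = {"PSCJA": 1.67, "IGTA": 1.85, "CMSE": 2.41}

# Each single-module mR plus its gain should recover the same PSCJA + IGTA value.
implied = {m: round(mr_by_config[(m,)] + g, 2) for m, g in pair_gain.items()}
print("implied PSCJA + IGTA mR:", implied)
mr_by_config[("PSCJA", "IGTA")] = implied["PSCJA"]

# List every configuration from lowest to highest mR.
for modules, mr in sorted(mr_by_config.items(), key=lambda item: item[1]):
    print(" + ".join(modules), f"{mr:.2f}")
```

All three sums agree on 55.10, so the full model's 56.16 is a further gain of 1.06 over the best two-module variant that shares its image and text enhancement.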

The above analysis indicates a significant synergistic effect between intra-modal feature enhancement and cross-modal semantic extraction. The two operate at different levels and scales to perceive and fuse cross-modal semantic information, substantially improving the model’s retrieval accuracy and generalization capability. In other words, relying solely on either intra-modal feature enhancement or cross-modal semantic extraction cannot fully unleash the model’s potential; the combination of all three components is crucial to retrieval effectiveness.

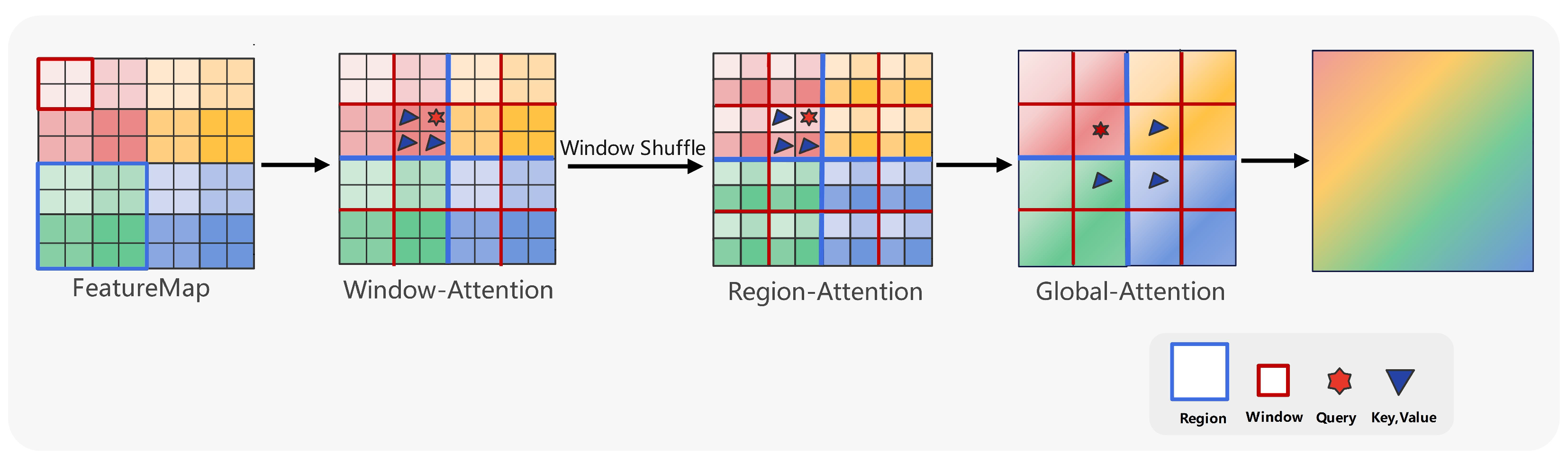

Table 5 presents the results of replacing PSCJA with three other attention mechanisms under the same dataset and configuration, to assess the benefit of PSCJA’s progressive feature extraction along the spatial and channel dimensions. The three mechanisms compared are (1) Vanilla MSA [2], which computes attention between every token in the input sequence and every other token, capturing global long-range dependencies; (2) SW-MSA [25], composed of window attention and shifted-window attention, which restricts attention to within a window and across adjacent windows, balancing the receptive field and computational efficiency; and (3) Focal Self-Attention (FSA) [55], which mimics the observation mechanism of the human eye, letting each token attend to nearby tokens at fine granularity and to distant tokens at coarse granularity, thereby representing both local and global visual relationships.
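The difference in attention scope between Vanilla MSA and the window attention used in SW-MSA can be made concrete with a small NumPy sketch. It is a single-head illustration on a toy 8 × 8 token grid, assuming non-overlapping 4 × 4 windows; the shifted-window step of SW-MSA and the focal aggregation of FSA are omitted, and none of this is the implementation used in the experiments.

```python
import numpy as np


def attention(q, k, v, mask=None):
    """Single-head scaled dot-product attention; mask[i, j] = True lets query i see key j."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # blocked pairs get zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def window_mask(h, w, win):
    """True where two tokens of an h x w grid share a non-overlapping win x win window.

    Assumes h and w are divisible by win.
    """
    rows, cols = np.divmod(np.arange(h * w), w)
    window_id = (rows // win) * (w // win) + (cols // win)
    return window_id[:, None] == window_id[None, :]


h = w = 8
win, d = 4, 16
rng = np.random.default_rng(0)
x = rng.standard_normal((h * w, d))  # toy token features

full = np.ones((h * w, h * w), dtype=bool)  # Vanilla MSA: every token sees every token
local = window_mask(h, w, win)              # window attention: same-window tokens only
print("query-key pairs, global:", int(full.sum()))
print("query-key pairs, window:", int(local.sum()))

out_global = attention(x, x, x, full)
out_local = attention(x, x, x, local)
```

With 64 tokens, global attention evaluates 4,096 query–key pairs, whereas 4 × 4 windows evaluate 1,024. In general the global cost grows with (HW)² and the windowed cost with HW·M² for window size M, which is the efficiency trade-off described for SW-MSA.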

As shown in

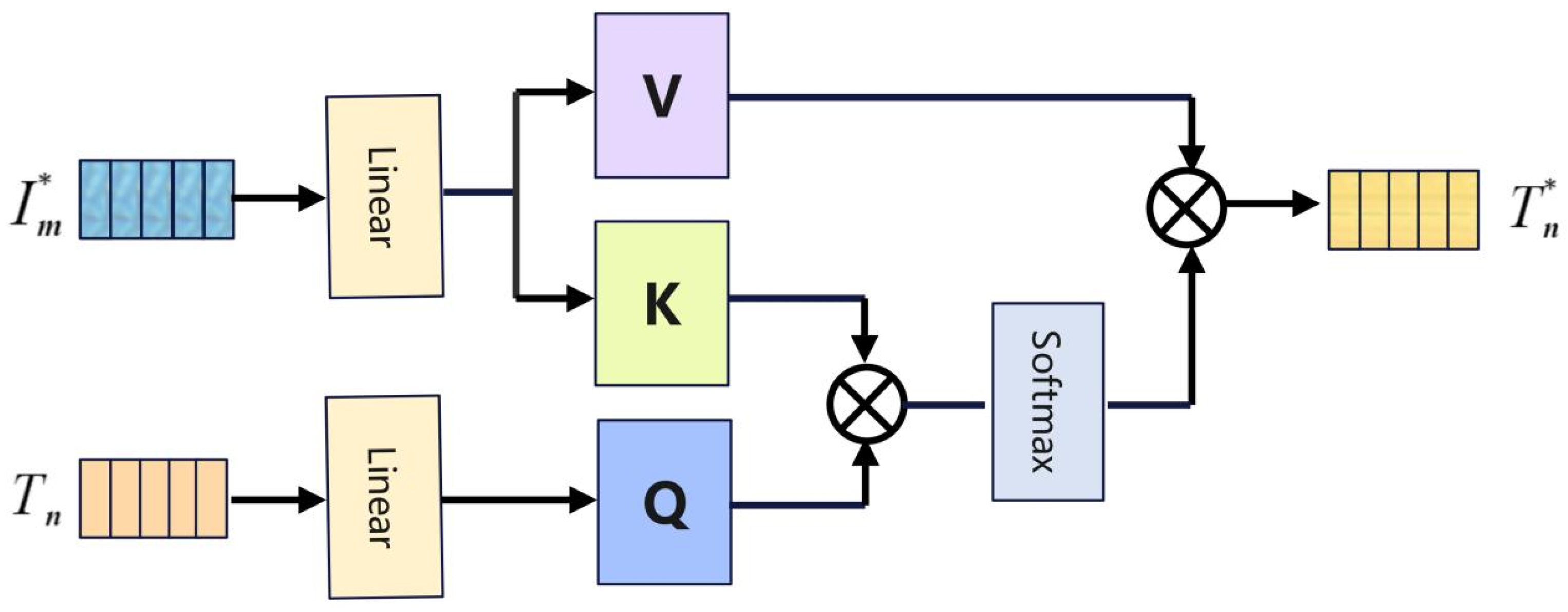

Table 5, although Vanilla MSA can provide global, fine-grained attention for each token, it incurs excessive “meaningless” computations because of the substantial redundancy in RS images, resulting in suboptimal performance. SW-MSA, on the other hand, captures local area details effectively and achieves an R@1 precision of 22.53 in image-to-text retrieval, which is 0.15 higher than PSCJA. However, owing to the existence of various scale-varied objects (buildings, roads, vegetation, etc.) in RS images, this mechanism struggles to adapt well to these multi-scale features within a fixed-scale window. It also fails to fully capture the contextual information over a large range (e.g., urban areas, forests, rivers), resulting in an mR value that is 1.59 lower than PSCJA. FSA introduces a mechanism to selectively focus on important information, achieving R@1 and R@5 values of 19.80 and 70.75 in the text-to-image retrieval, which are 3.99 and 5.99 higher than PSCJA, respectively. However, due to the irregularly shaped targets present in RS images, a fixed attention range is inadequate to capture these target features well, leading to the overall mR value not surpass that of PSCJA. For WRGPA, its R@1 metric in image-to-text reaches 24.29, which is the highest value among all attention mechanisms. This indicates that WRGPA can accurately capture local fine-grained features in RS images through the progressive spatial attention design. However, other indicators are lower than PSCJA due to the lack of integration of channel semantic information. SCA performs well on R@5 (70.95) and R@10 (88.67) in text-to-image, indicating that SCA can selectively activate image channel features related to text semantics. However, its modeling of spatial multi-scale features is insufficient, resulting in poor overall performance. 
The PSCJA presented in our study takes into account the multi-scale features of RS images and effectively captures important visual information from local to global through a hierarchical feature learning mechanism. The experimental results further confirm the superiority of PSCJA in RS image feature extraction.

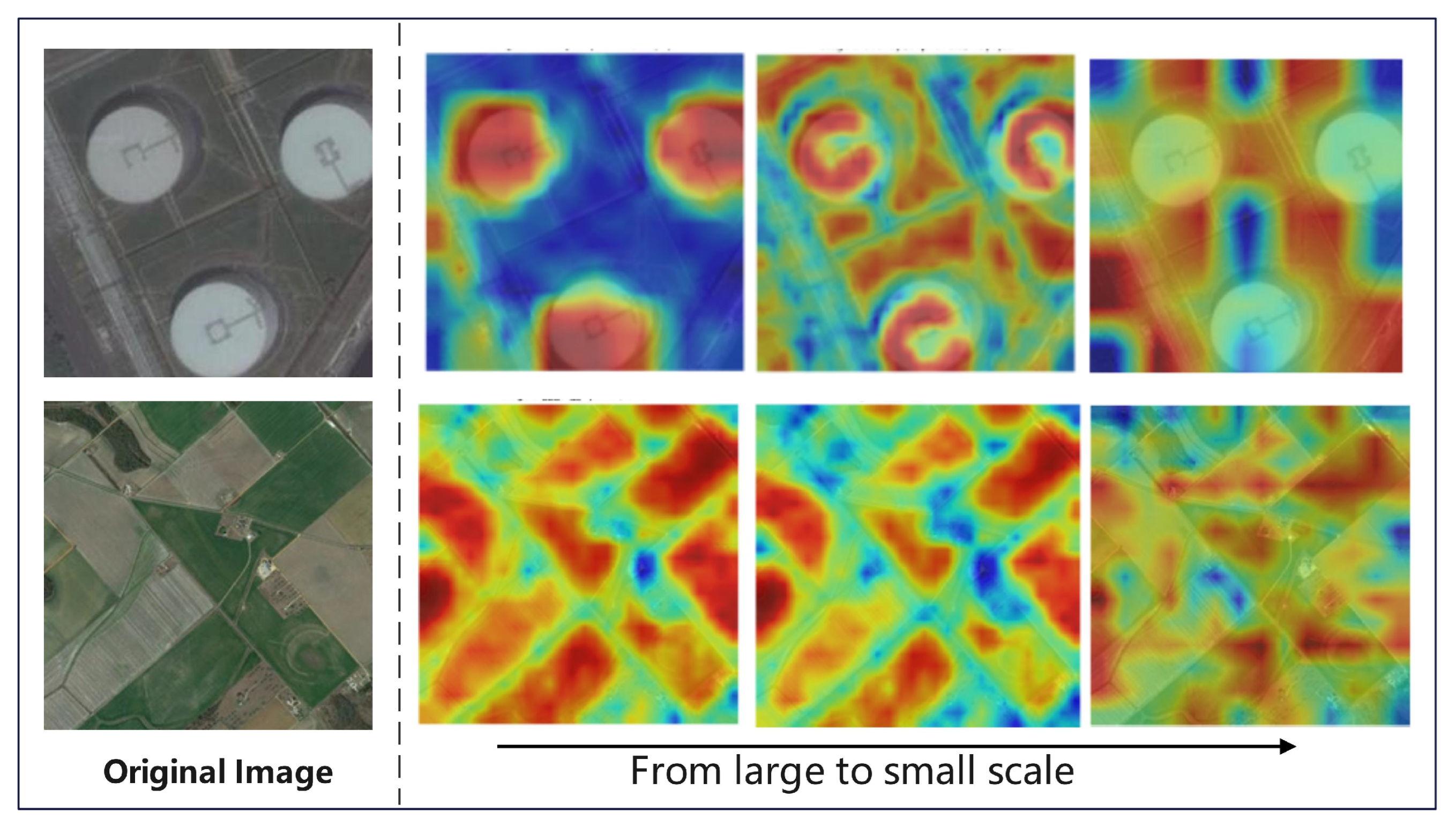

As shown in Figure 8, visualizations of PSCJA at multiple scales intuitively validate its hierarchical semantic perception and scale-adaptive representation capabilities. In the industrial scene of oil tanks, the larger-scale feature maps clearly capture multiple targets and their boundaries. As the feature-map scale decreases, the focus gradually expands from the individual oil tanks to their spatial relationships with the surrounding facilities. In the agricultural scene, PSCJA captures the global semantic structure of the scene, which corresponds closely to the salient visual information in the original image. This indicates that our joint spatial–channel attention mechanism, which progresses from local to global, aligns well with the characteristics of RS images.

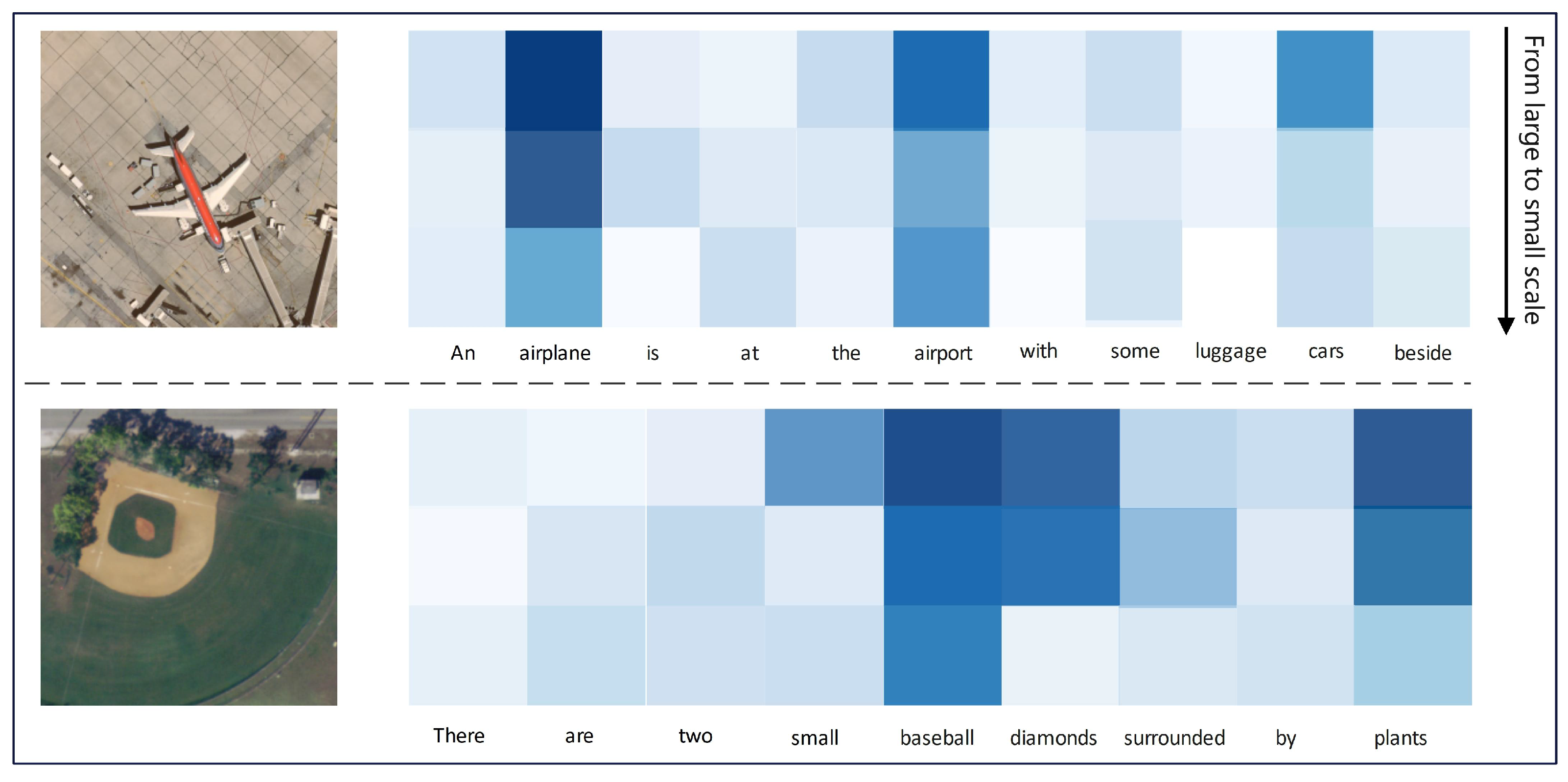

To verify the effectiveness of IGTA, we visualized its attention weights. Figure 9 presents two cases, with the horizontal axis representing each word in the sentence and the vertical axis representing features at different scales, which decrease from top to bottom. Color depth reflects each word’s attention weight: the darker the color, the higher the weight. In the first example, an airport RS image is paired with the text “An airplane is stopped at the airport with some luggage cars nearby”; words such as “airplane”, “airport”, and “luggage cars” receive higher attention weights, matching visual content such as the airplane and airport facilities in the image. In the second example, the RS image shows a baseball field and the description is “Two small baseball diamonds surrounded by plants”. The attention weights of words such as “baseball diamonds” and “plants” increase markedly and correlate strongly with visual elements such as the baseball fields and surrounding vegetation. This indicates that IGTA, through cross-modal attention, focuses text features on image-related semantic information, verifying its design goal of guiding text toward image-related content and improving the interpretability of cross-modal semantic alignment.
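The words × scales heatmap in Figure 9 can be pictured with the toy readout below. It is a hypothetical stand-in, not the IGTA formulation from this paper: each word acts as a query over the image features of one scale, its strongest scaled dot-product match is taken as its score, and a softmax over words turns the scores into one heatmap row per scale. One-hot word embeddings and hand-set region features are assumed so that the result is easy to trace.

```python
import numpy as np


def word_weights(words, regions):
    """Toy image-guided word weights (hypothetical, not the paper's IGTA).

    words:   (num_words, d) text token features, used as queries
    regions: (num_regions, d) image features at one scale, used as keys
    Returns one non-negative weight per word, summing to 1.
    """
    scores = words @ regions.T / np.sqrt(words.shape[1])  # word-region affinities
    best = scores.max(axis=1)                              # best-matching region per word
    e = np.exp(best - best.max())                          # stable softmax over words
    return e / e.sum()


tokens = ["an", "airplane", "is", "stopped", "at", "the", "airport"]
d = len(tokens)
words = np.eye(d)  # one-hot toy embeddings so affinities are easy to read

# Hand-set image features for three scales (fine -> coarse); each row is one region.
scales = {
    "1/8": np.array([2.0 * words[1], 0.5 * words[6]]),
    "1/16": np.array([1.5 * words[1] + 1.0 * words[6]]),
    "1/32": np.array([1.0 * words[6], 0.2 * words[1]]),
}
heatmap = np.stack([word_weights(words, r) for r in scales.values()])  # (3, num_words)
for name, row in zip(scales, heatmap):
    print(name, " ".join(f"{t}:{w:.2f}" for t, w in zip(tokens, row)))
```

In this toy setting, “airplane” dominates the two finer rows and “airport” the coarsest row, while function words such as “is” and “the” share the lowest weight.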

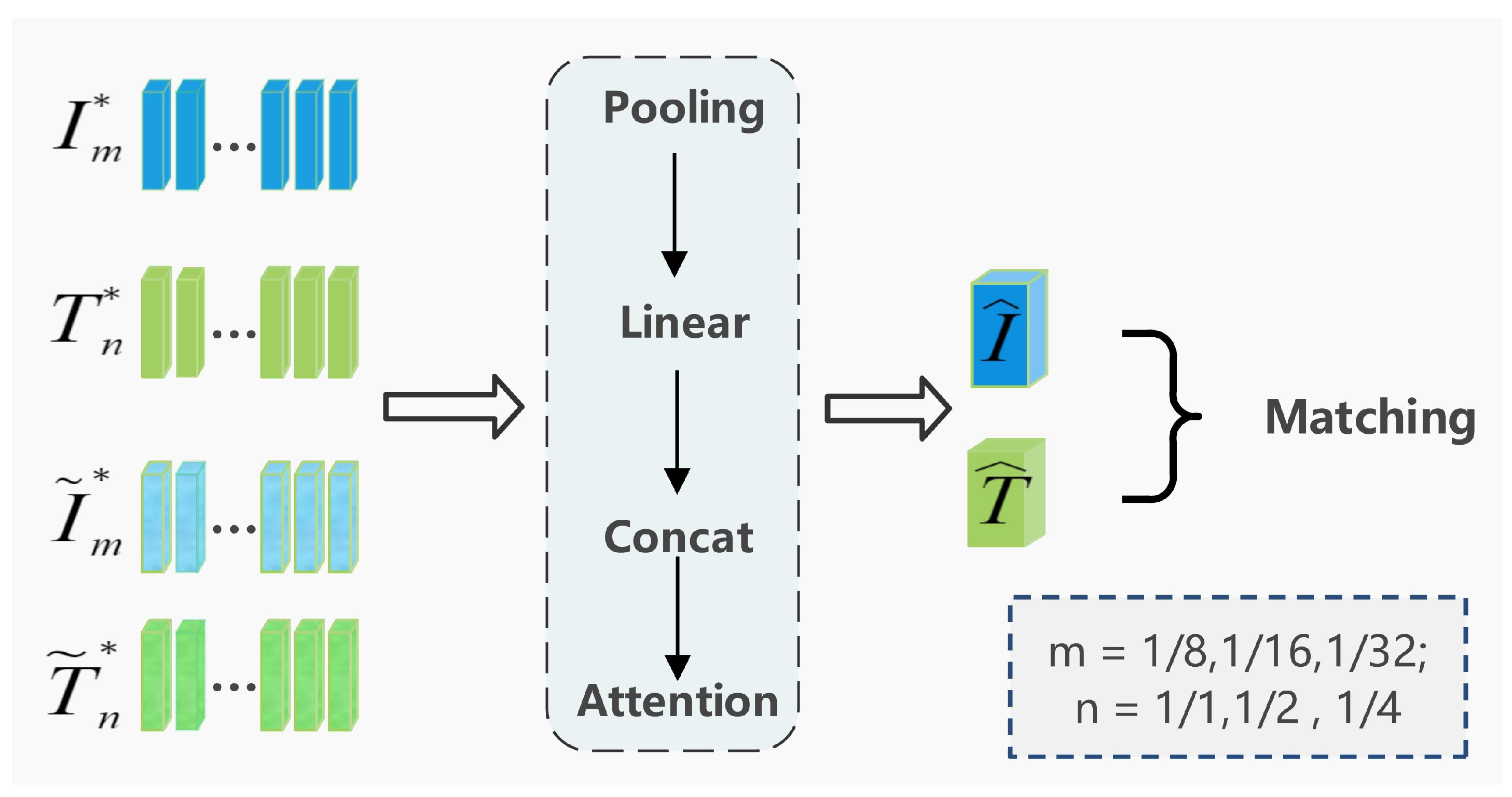

The cross-modal RSITR task requires combining information from multiple levels of representation, ranging from basic visual attributes (e.g., color, shape, and surface patterns) to intermediate features (such as key objects and their interactions), as well as high-level semantics and rich contextual information, to accurately understand and match images with text. MSSA is designed to extract multi-scale features and performs cross-modal semantic perception across three distinct hierarchical levels. With the aim of evaluating the rationality and necessity of this multi-scale strategy, we conducted ablation experiments on feature maps generated from the original images at scales of 1/8, 1/16, and 1/32, as well as various combinations of these scales, and compared their retrieval accuracy and inference time.

As illustrated in Table 6, the 1/8-resolution feature map attained an mR of 52.91, which is 0.47 and 1.70 higher than the lower-resolution 1/16 and 1/32 scales, respectively. Although 1/8-resolution feature maps preserve more useful information and improve performance, they incur higher processing overhead; conversely, 1/32-resolution feature maps are the fastest to process but lose many key tokens. This reflects a clear trade-off between performance and processing speed as feature-map resolution increases. In addition, combinations of scales produced better results, with mR values of 54.94, 54.42, and 53.49, clearly exceeding the single-scale results while keeping processing speed within an acceptable range. This demonstrates the synergy of multi-scale features in improving performance while balancing efficiency. Based on this analysis, we ultimately chose the 1/8, 1/16, and 1/32 feature maps and perform intra- and cross-modal feature enhancement and semantic perception at each scale. This strategy overcomes the limitations of single-scale features (slow speed or poor performance) and achieves more robust performance improvements at a reasonable processing speed.
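The speed differences between scales follow from token counts. The sketch below assumes a 256 × 256 input (the native UCM Caption image size; the resized input actually fed to MSSA may differ) and counts the spatial tokens at strides 8, 16, and 32, together with the query–key pairs a global self-attention layer over each map would evaluate. Which layers in MSSA operate on all tokens of a map is not specified here, so the numbers indicate relative scaling only.

```python
# Assumed input size (native UCM Caption resolution); MSSA's actual input may differ.
INPUT_SIZE = 256
STRIDES = (8, 16, 32)  # feature maps at 1/8, 1/16, and 1/32 of the input resolution

tokens = {}
for stride in STRIDES:
    side = INPUT_SIZE // stride
    tokens[stride] = side * side  # spatial positions in the feature map

for stride, n in tokens.items():
    # A global self-attention layer compares every token with every other token.
    print(f"1/{stride}: {n:5d} tokens, {n * n:9d} query-key pairs")

ratio = tokens[8] // tokens[32]
print(f"1/8 has {ratio}x the tokens of 1/32 and {ratio ** 2}x the attention pairs")
```

Under this assumption the 1/8 map holds 1,024 tokens against 64 at 1/32, so any per-token cost is 16 times larger and any all-pairs cost 256 times larger, consistent with the speed ordering described above.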

CMSAM introduces learnable semantic tokens at three scales to extract rich semantic information from visual and textual features, strengthening the association between the two modalities and improving the final matching performance. Generally, more tokens can capture richer and more diverse semantic information, as each token can learn different patterns and details. However, more tokens also increase the model’s computational complexity and training difficulty. To examine this trade-off, we varied the number of learnable semantic tokens and evaluated its impact on model performance.

As shown in Table 7, as the number of learnable semantic tokens increases up to 20, the mR value rises steadily, indicating effective learning of semantic information. With 25 and 30 tokens, the mR values are 56.22 and 56.33, respectively, which are 0.05 and 0.17 higher than with 20 tokens, i.e., only minimal fluctuations. This suggests that beyond 20 tokens, additional tokens bring no substantial information gain and may introduce redundancy or noise. For semantic extraction, 20 tokens suffice to encompass the vast majority of useful semantic information, achieving the best balance between performance and cost.
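A common way to realize learnable semantic tokens is a cross-attention readout in which K learned query vectors attend over the N features of one scale. The NumPy sketch below shows that generic pattern; it is an assumption for illustration, not the CMSE implementation, and random vectors stand in for trained parameters (the names `semantic_readout`, `d`, and `n` are hypothetical). It makes the cost side of the trade-off explicit: the score matrix has K × N entries, so cost grows linearly with the number of tokens.

```python
import numpy as np


def semantic_readout(queries: np.ndarray, features: np.ndarray) -> np.ndarray:
    """Pool N feature tokens into K semantic tokens via cross-attention.

    queries:  (K, d) learnable semantic tokens (random here, trained in practice)
    features: (N, d) image-region or word features at one scale
    returns:  (K, d) one pooled vector per semantic token
    """
    scores = queries @ features.T / np.sqrt(queries.shape[1])  # (K, N) affinities
    scores -= scores.max(axis=1, keepdims=True)                 # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)               # softmax over the N features
    return weights @ features


rng = np.random.default_rng(0)
d, n = 64, 256  # hypothetical feature width and token count at one scale
features = rng.standard_normal((n, d))

outputs = {}
for k in (10, 20, 30):
    queries = 0.02 * rng.standard_normal((k, d))
    outputs[k] = semantic_readout(queries, features)
    print(f"K={k}: output {outputs[k].shape}, score entries {k * n}")
```

Because each output row is a softmax-weighted average of the input features, extra tokens add capacity only if they learn distinct attention patterns; otherwise they duplicate one another, which matches the redundancy observed beyond 20 tokens.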

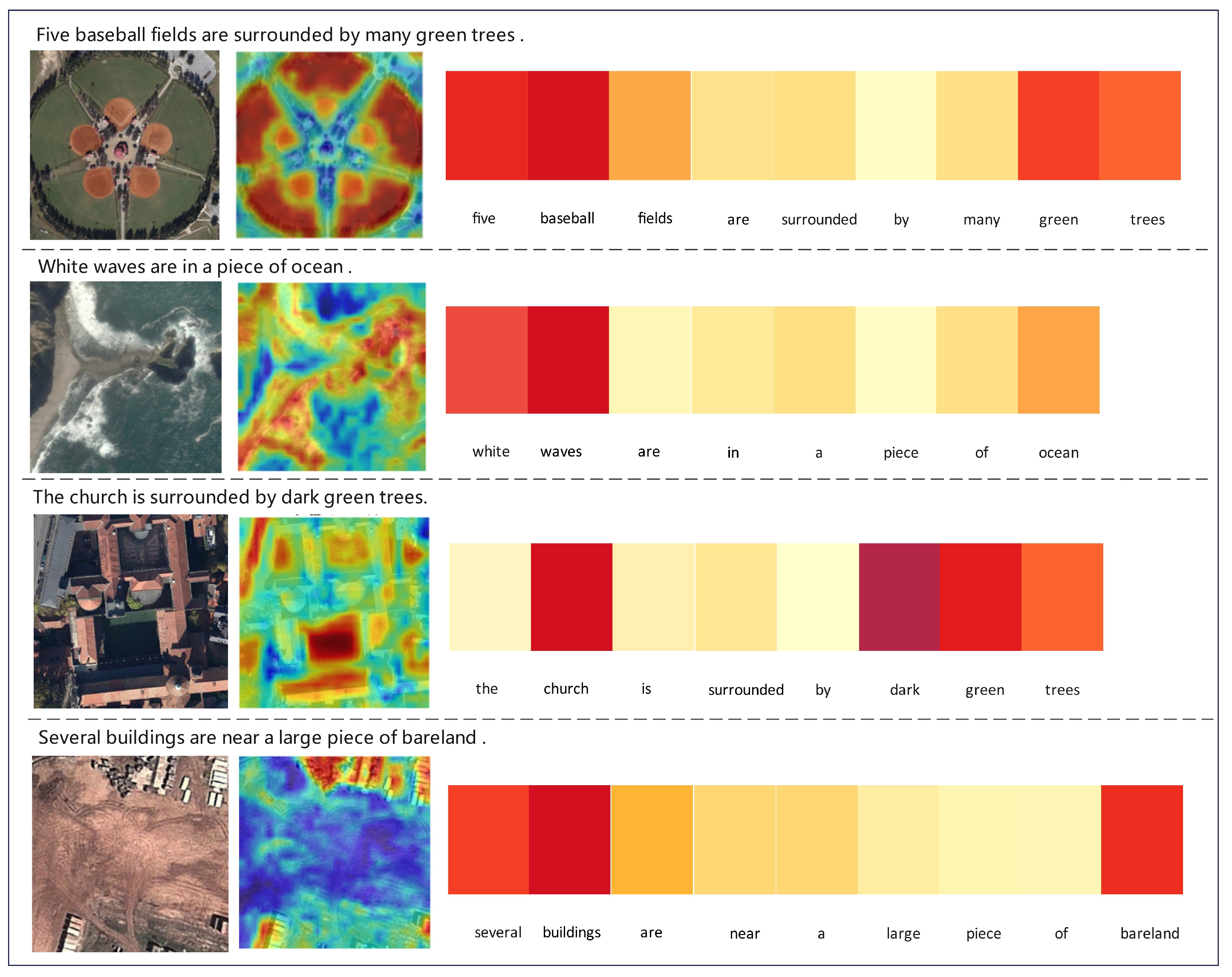

We visualized the learnable semantic tokens of the CMSE module, as shown in Figure 10. The left side displays the original image along with its corresponding textual description, while the right side shows visualizations of the semantics extracted from the image and text. The results show that the learnable semantic tokens accurately capture salient information in both modalities across various scenes, such as baseball fields, oceans, and barren land. On the image side, the token heatmaps precisely locate the core visual regions (e.g., baseball fields, white waves, church buildings, bareland, and surrounding structures). On the text side, the attention intensity closely follows the semantic significance of the words (e.g., the core meanings of “five/baseball fields”, “white/waves”, “church”, “dark green trees”, and “several buildings/bareland”). The results on quantity, target extent, color, and spatial relationships demonstrate precise cross-modal alignment, validating that the two sets of learnable semantic tokens capture the core semantics of both images and texts and achieve cross-modal semantic matching.

We compared the parameter counts (Params) and FLOPs of PSCJA with those of three Transformer-based attention mechanisms, as shown in Table 8. Vanilla MSA is the standard multi-head self-attention mechanism and serves as a baseline for traditional self-attention, despite its high computational complexity. SW-MSA adopts shifted windows and has a relatively low cost of only 16.83 M parameters and 41.29 G FLOPs, indicating that it can substantially reduce computational overhead on long sequences. PSCJA accounts for both local and global salient features across the spatial and channel dimensions, with 17.46 M parameters and 43.31 G FLOPs. Its complexity is slightly higher than that of SW-MSA, yet significantly lower than that of FSA.
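The relative overhead of PSCJA over SW-MSA can be computed directly from the Table 8 figures quoted above; the sketch also pairs it with the 1.59 mR gap reported for these two mechanisms in the Table 5 discussion.

```python
# Params (M) and FLOPs (G) as quoted in the text for Table 8.
cost = {"SW-MSA": {"params_M": 16.83, "flops_G": 41.29},
        "PSCJA": {"params_M": 17.46, "flops_G": 43.31}}
MR_GAP = 1.59  # mR advantage of PSCJA over SW-MSA, as quoted for Table 5


def pct_increase(base: float, new: float) -> float:
    """Relative increase of `new` over `base`, in percent."""
    return 100.0 * (new - base) / base


params_pct = pct_increase(cost["SW-MSA"]["params_M"], cost["PSCJA"]["params_M"])
flops_pct = pct_increase(cost["SW-MSA"]["flops_G"], cost["PSCJA"]["flops_G"])
print(f"PSCJA vs SW-MSA: +{params_pct:.1f}% params, +{flops_pct:.1f}% FLOPs, +{MR_GAP} mR")
```

This gives roughly 3.7% more parameters and 4.9% more FLOPs in exchange for the 1.59-point mR gain.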

To exclude interference from the text feature encoder, we systematically compared several image feature extraction networks, including the ResNet series (ResNet18, ResNet50, and ResNet101) and ViT. As can be seen from Table 9, ViT is the best-performing image feature extractor, with an mR of 56.45. Among the ResNet series, ResNet50 delivered the best result, securing second place overall with an mR of 56.16, only 0.29 lower than ViT, and outperforming ResNet18 and ResNet101 by 0.36 and 2.48, respectively. Although ViT achieves slightly better retrieval accuracy than ResNet50, its computational cost is much higher: ViT has 86.3 M parameters, approximately 3.3 times that of ResNet50 (26.28 M), and its inference time is 38.2 ms per image versus 28.64 ms for ResNet50. This small accuracy gain does not justify the additional computational cost. Therefore, we chose ResNet50 as the image feature extractor to achieve a more reasonable balance between performance and efficiency.
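The efficiency argument can be quantified from the Table 9 figures quoted above. The sketch computes ViT’s parameter and latency ratios relative to ResNet50 alongside its mR gain; the exact ratio of parameter counts is about 3.28.

```python
# Backbone figures as quoted in the text for Table 9.
vit = {"mR": 56.45, "params_M": 86.3, "latency_ms": 38.2}
resnet50 = {"mR": 56.16, "params_M": 26.28, "latency_ms": 28.64}

param_ratio = vit["params_M"] / resnet50["params_M"]
latency_ratio = vit["latency_ms"] / resnet50["latency_ms"]
mr_gain = vit["mR"] - resnet50["mR"]
extra_ms = vit["latency_ms"] - resnet50["latency_ms"]

print(f"ViT vs ResNet50: {param_ratio:.2f}x params, {latency_ratio:.2f}x latency, +{mr_gain:.2f} mR")
print(f"extra latency per 0.01 mR: {extra_ms / (mr_gain * 100):.2f} ms")
```

About 3.28 times the parameters and 1.33 times the per-image latency buy 0.29 mR, which is the basis for choosing ResNet50.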

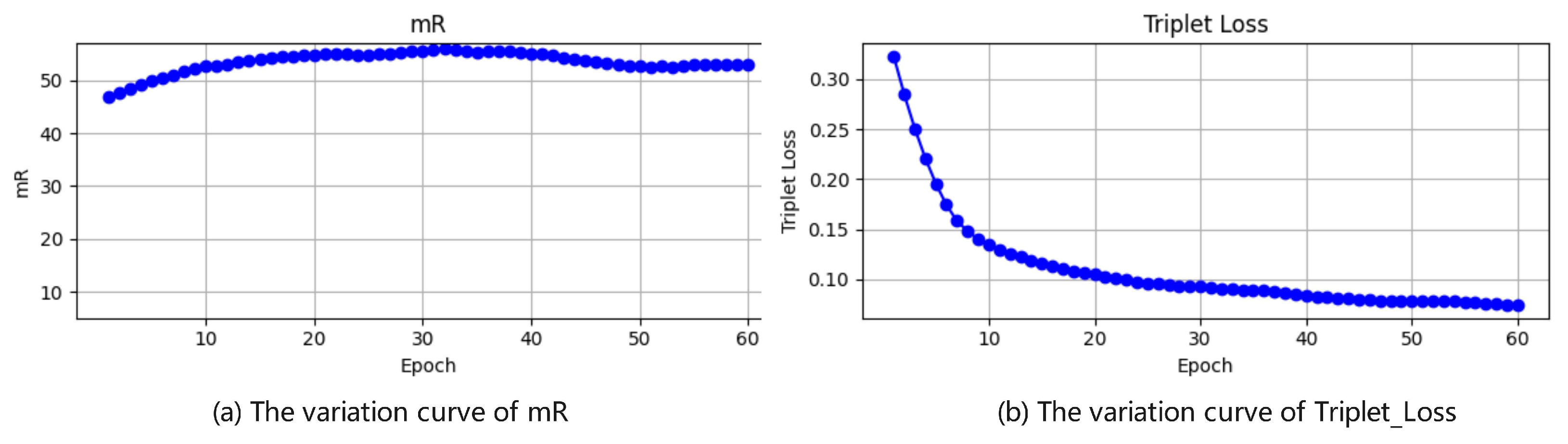

As shown in Figure 11a, the mR value on the UCM Caption dataset gradually increases during training, reaching its maximum at the 32nd epoch, and then fluctuates downward.

Figure 11b presents the loss curve: the loss decreases steadily during training, dropping sharply before the 30th epoch and more slowly thereafter. These results indicate that the proposed algorithm converges reliably.

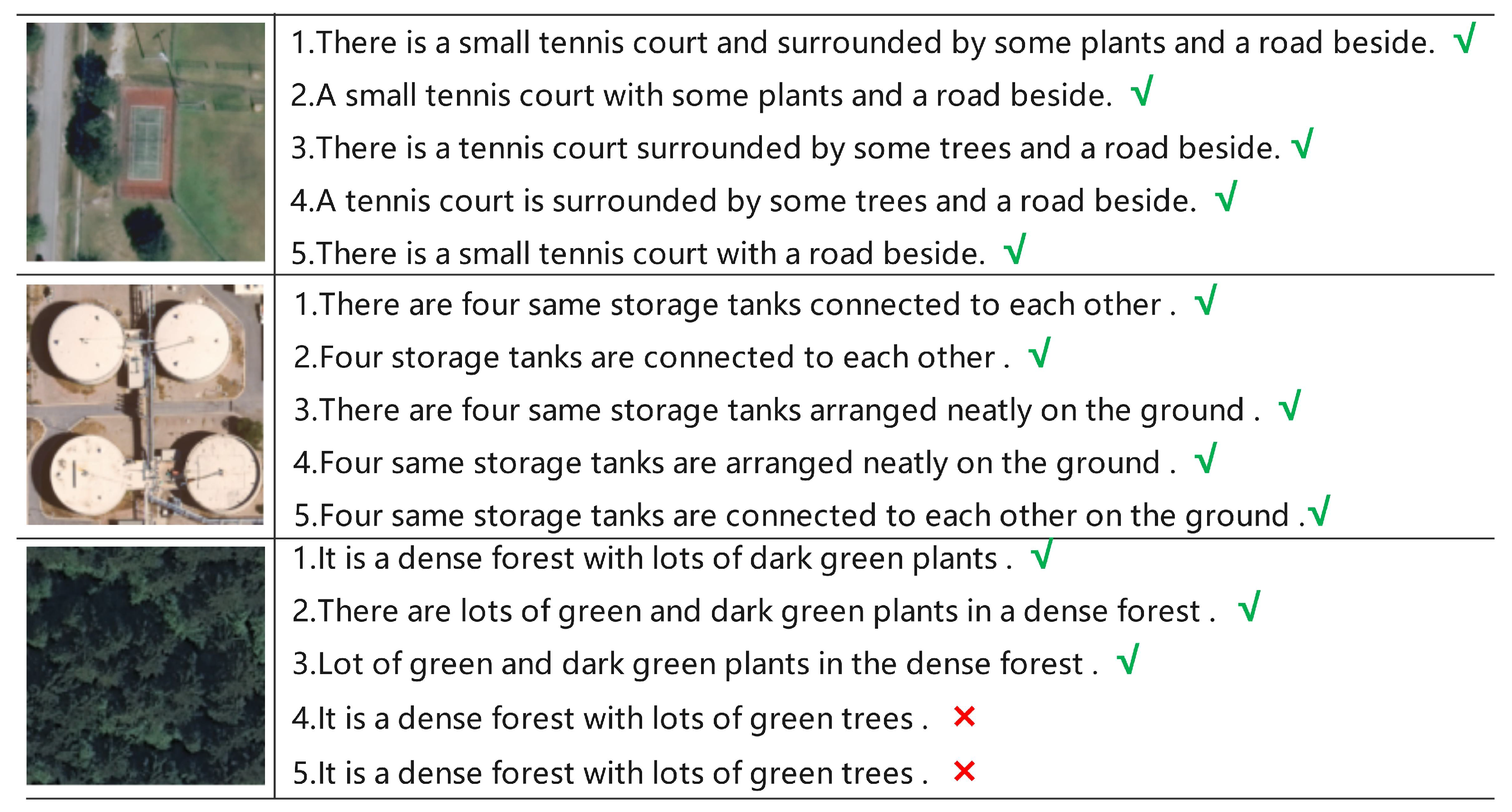

To intuitively verify the cross-modal retrieval performance of the proposed method, we conducted image-to-text and text-to-image retrieval visualization experiments on the UCM Caption dataset and display the top five retrieved results for each query. Green markers indicate ground-truth matches, while red markers indicate incorrect retrieval results. In Figure 12, for the first query image, the top five text descriptions accurately capture core information such as “tennis court”, “surrounding plants”, and “adjacent road”, and details such as “small” and “trees/plants” also match the image. For the second query image, the retrieved descriptions accurately cover key semantics such as “four storage tanks” and “connected/arranged neatly”. For the third query image, the first three descriptions correctly express “dense forest” and “dark green plants”, but the fourth and fifth descriptions mistakenly refer to “plants” as “trees”, reflecting the model’s difficulty in distinguishing fine-grained semantics such as “general vegetation” and “trees”.
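Top-5 lists such as those in Figures 12 and 13 come from ranking a query’s similarity row, and the R@K metrics used throughout count a query as a hit when at least one ground-truth item appears in its top K. The NumPy sketch below shows both on a hand-made similarity matrix with two query images and ten captions, five per image (the UCM Caption convention); it is an illustration, not the evaluation code used for the reported results.

```python
import numpy as np


def top_k_with_hits(similarity, query, ground_truth, k=5):
    """Rank gallery items for one query; flag each top-k item as correct (green) or not (red)."""
    order = np.argsort(-similarity[query])[:k]
    return [(int(j), int(j) in ground_truth) for j in order]


def recall_at_k(similarity, ground_truths, k):
    """Percentage of queries with at least one ground-truth item among their top-k results."""
    hits = 0
    for query, gt in enumerate(ground_truths):
        top = np.argsort(-similarity[query])[:k]
        hits += any(int(j) in gt for j in top)
    return 100.0 * hits / len(ground_truths)


# Rows: query images; columns: captions 0-4 describe image 0, captions 5-9 describe image 1.
sim = np.array([
    [0.80, 0.70, 0.30, 0.65, 0.60, 0.90, 0.10, 0.20, 0.10, 0.00],
    [0.20, 0.10, 0.30, 0.10, 0.20, 0.40, 0.90, 0.35, 0.80, 0.70],
])
gt = [set(range(0, 5)), set(range(5, 10))]

print(top_k_with_hits(sim, 0, gt[0]))
print({f"R@{k}": recall_at_k(sim, gt, k) for k in (1, 5)})
```

Query 0’s top-ranked caption belongs to the other image, so it counts as a miss at R@1 but a hit at R@5, giving R@1 = 50 and R@5 = 100 for this toy matrix.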

In the visualization results shown in Figure 13, for QueryText1, the top three retrieved images accurately match the intersection shape, and the ground-truth result marked in green ranks second, indicating that the model understands the spatial relationships expressed by “intersection” and “roads vertical”. The first three results for QueryText2 accurately present diamond-shaped field features, while the fourth image is marked red because its field shape deviates, showing that the model still has room for improvement in judging precise geometric shapes such as a “regular baseball diamond”. For QueryText3, the first and second images contain buildings and roads but lack prominent vehicle features, and the third and fourth images are marked red because they lack the core element “cars parked neatly”, indicating that the semantic alignment of fine-grained objects needs further strengthening. These shortcomings motivate our future work.