Sensor Synergy in Bathymetric Mapping: Integrating Optical, LiDAR, and Echosounder Data Using Machine Learning

Abstract

1. Introduction

- Investigating bathymetric modeling performance when ICESat-2 LiDAR data serve as the training dataset for Sentinel-2, Göktürk-1, and UAV imagery of different spatial resolutions, each evaluated independently over the study sub-region.

- Examining the impact of using ICESat-2 LiDAR data alone, and fused with single-beam echosounder data, for training and validating bathymetric models over a large region.

- Exploring the effect of brightness values derived from the Tasseled Cap Transformation (TCT), used in addition to the spectral bands of the imagery, on bathymetric modeling performance.

- Investigating the performance of machine learning-based bathymetric modeling algorithms for this region.

2. Study Area and Data

2.1. Study Area

2.2. Data

2.2.1. Sentinel-2

2.2.2. Göktürk-1

2.2.3. Aerial Imagery

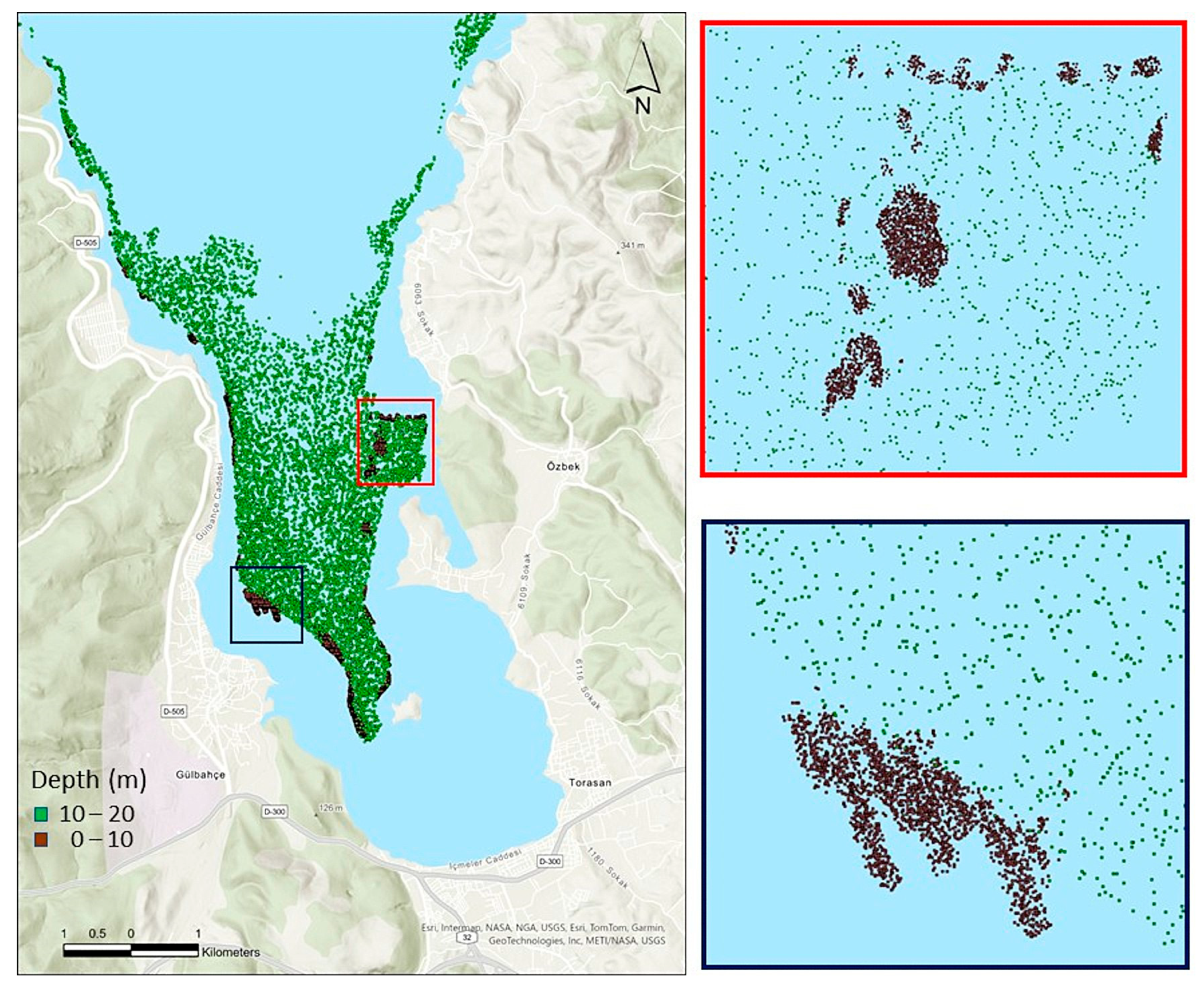

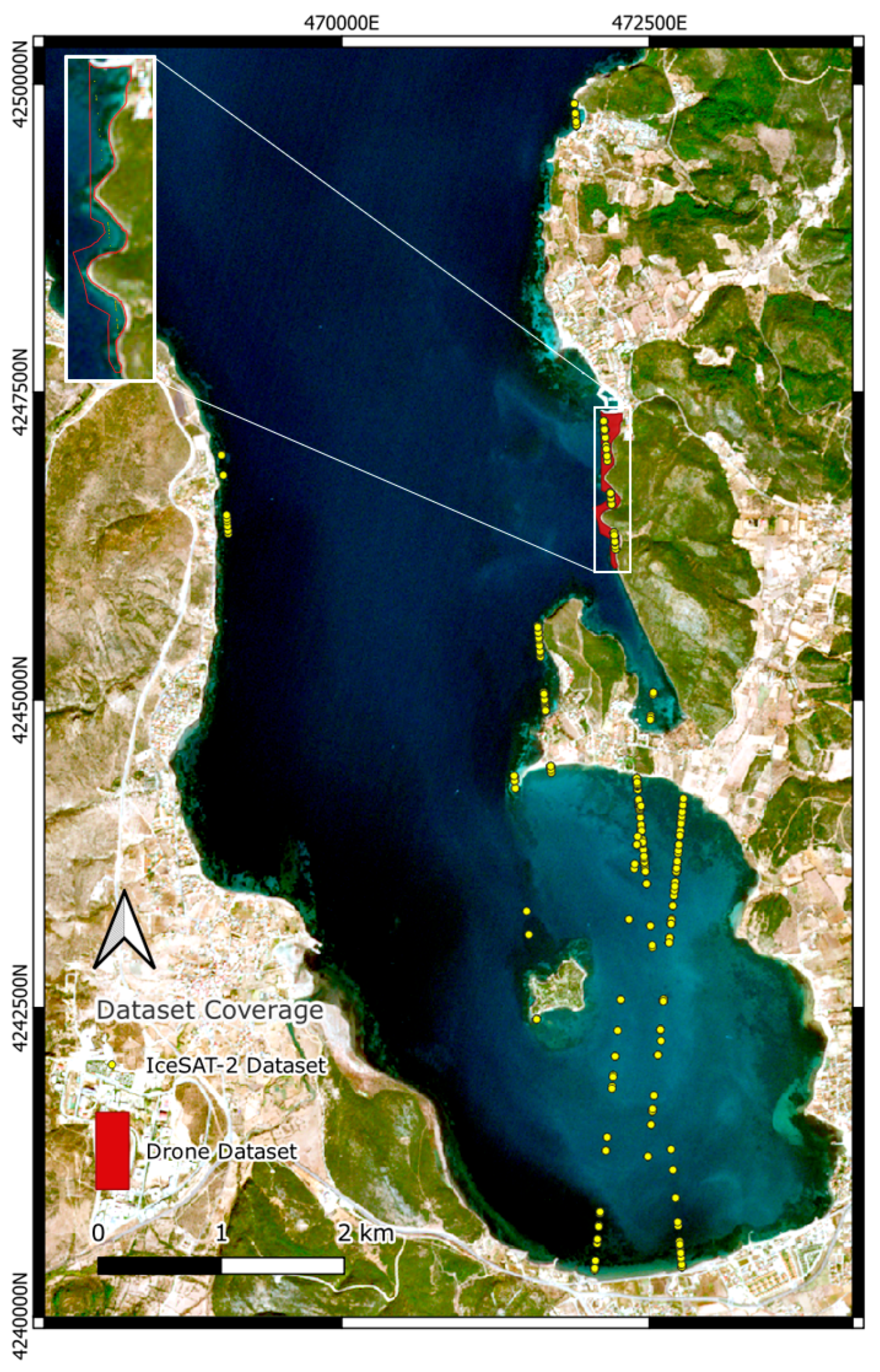

2.2.4. ICESat-2

2.2.5. Single-Beam Echosounder

3. Methodology

3.1. Atmospheric Correction

3.2. ICESat-2 Preprocessing

3.3. Tasseled Cap Transformation
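
The study objectives name TCT-derived brightness as a predictor used alongside the spectral bands. The sketch below shows one way such a feature vector could be assembled for a single pixel. Brightness in a tasseled cap transformation is a linear combination of band values, but the weights here are hypothetical placeholders, not the sensor-specific coefficients used in the study, and the names `brightness` and `feature_vector` are illustrative only.

```python
from typing import Dict, List, Tuple

# HYPOTHETICAL weights for illustration only. Real TCT brightness
# coefficients are sensor-specific and are not given in this text.
PLACEHOLDER_BRIGHTNESS_WEIGHTS: Dict[str, float] = {
    "blue": 0.3,
    "green": 0.3,
    "red": 0.4,
}


def brightness(pixel: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of band reflectances (the linear form of TCT brightness)."""
    return sum(weights[band] * pixel[band] for band in weights)


def feature_vector(
    pixel: Dict[str, float],
    band_pair: Tuple[str, str],
    weights: Dict[str, float],
) -> List[float]:
    """Return the two band values of the pair followed by the brightness value."""
    first, second = band_pair
    return [pixel[first], pixel[second], brightness(pixel, weights)]


# Example pixel with made-up reflectance values.
pixel = {"blue": 0.08, "green": 0.06, "red": 0.02}
features = feature_vector(pixel, ("blue", "green"), PLACEHOLDER_BRIGHTNESS_WEIGHTS)
print(features)
```

Dropping the third element gives the band-only variant, so both configurations can be compared on the same training points.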

3.4. Machine Learning-Based Bathymetry Extraction Models

3.4.1. Random Forest

3.4.2. Extreme Gradient Boosting

3.4.3. Hyperparameter Tuning

4. Results

4.1. Sub-Region Analysis with ICESat-2 LiDAR

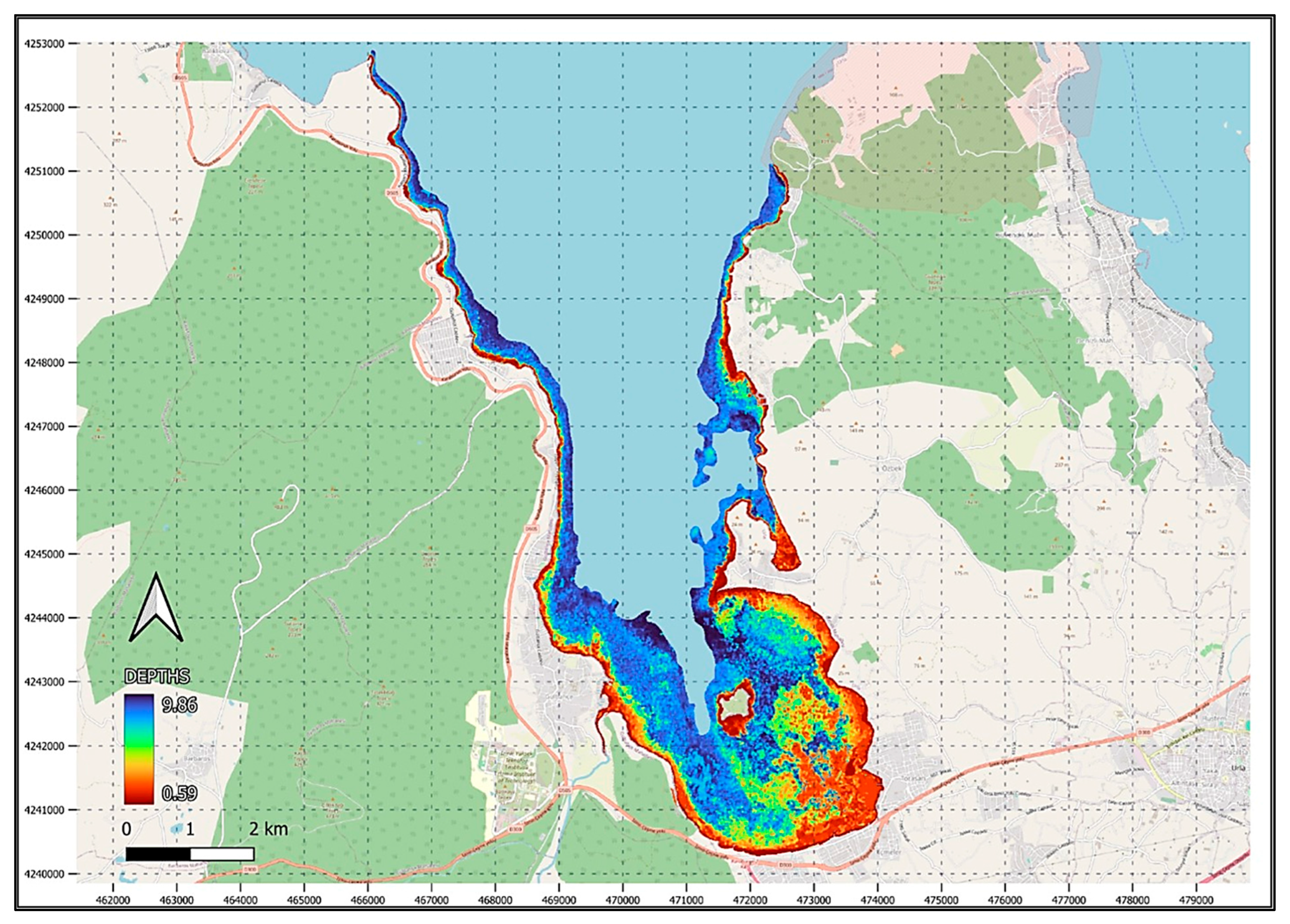

4.2. Full Region Analysis with Fusion Approach

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| RF | XGBoost |
|---|---|
| bootstrap: True | objective: 'reg:squarederror' |
| ccp_alpha: 0.32 | base_score: 0.5 |
| criterion: squared_error | booster: 'gbtree' |
| max_features: 1.0 | tree_method: 'exact' |
| min_samples_leaf: 2 | colsample_bynode: 2 |
| min_samples_split: 4 | colsample_bytree: 2 |
| n_estimators: 200 | learning_rate: 0.3201 |
| oob_score: False | n_estimators: 200 |
| random_state: 45 | num_parallel_tree: 2 |
| warm_start: True | predictor: 'auto' |
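
As a rough illustration, the tabulated settings can be written as keyword dictionaries. The RF names match constructor arguments of scikit-learn's `RandomForestRegressor`. Using that class is an assumption here, since the text does not name the implementation. XGBoost documents the `colsample_*` parameters as ratios in (0, 1], so the listed value of 2 falls outside that range and may be a reporting or extraction artifact. The sketch keeps the values exactly as listed and only flags them. The helper names are hypothetical.

```python
# Values copied from the hyperparameter table; nothing here is tuned or verified.
RF_PARAMS = {
    "bootstrap": True,
    "ccp_alpha": 0.32,
    "criterion": "squared_error",
    "max_features": 1.0,
    "min_samples_leaf": 2,
    "min_samples_split": 4,
    "n_estimators": 200,
    "oob_score": False,
    "random_state": 45,
    "warm_start": True,
}

XGB_PARAMS = {
    "objective": "reg:squarederror",
    "base_score": 0.5,
    "booster": "gbtree",
    "tree_method": "exact",
    "colsample_bynode": 2,
    "colsample_bytree": 2,
    "learning_rate": 0.3201,
    "n_estimators": 200,
    "num_parallel_tree": 2,
    "predictor": "auto",
}


def out_of_range_fractions(params):
    """Return colsample_* entries outside (0, 1], the range XGBoost documents."""
    return {
        name: value
        for name, value in params.items()
        if name.startswith("colsample_") and not (0 < value <= 1)
    }


def build_random_forest(params=None):
    """Construct the regressor (assumes scikit-learn is installed).

    The import is done lazily so the dictionaries stay usable without it.
    """
    from sklearn.ensemble import RandomForestRegressor

    return RandomForestRegressor(**(RF_PARAMS if params is None else params))


suspect = out_of_range_fractions(XGB_PARAMS)
print("Values to double-check before training:", suspect)
```

Keeping the settings in plain dictionaries makes it easy to log them with each run and to check them against the library documentation before a model is built.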

| Model | Imagery | Band Pair | RMSE (m) | RMSE (B) (m) | MAE (m) | MAE (B) (m) | R² | R² (B) |
|---|---|---|---|---|---|---|---|---|
| Random Forest | Gkt-1 | Blue-Green | 0.58 | 0.21 | 0.27 | 0.13 | 0.88 | 0.94 |
| Random Forest | Gkt-1 | Green-Red | 0.71 | 0.21 | 0.45 | 0.13 | 0.89 | 0.94 |
| Random Forest | Gkt-1 | Blue-Red | 0.72 | 0.23 | 0.47 | 0.14 | 0.86 | 0.93 |
| Random Forest | S-2 | Blue-Green | 0.59 | 0.35 | 0.32 | 0.28 | 0.85 | 0.92 |
| Random Forest | S-2 | Green-Red | 0.75 | 0.42 | 0.48 | 0.28 | 0.86 | 0.91 |
| Random Forest | S-2 | Blue-Red | 0.78 | 0.42 | 0.47 | 0.28 | 0.84 | 0.91 |
| Random Forest | Aerial | Blue-Green | 0.17 | 0.16 | 0.06 | 0.05 | 0.96 | 0.96 |
| Random Forest | Aerial | Green-Red | 0.18 | 0.16 | 0.07 | 0.05 | 0.96 | 0.96 |
| Random Forest | Aerial | Blue-Red | 0.18 | 0.16 | 0.05 | 0.05 | 0.96 | 0.96 |
| XGBoost | Gkt-1 | Blue-Green | 0.58 | 0.21 | 0.27 | 0.13 | 0.87 | 0.94 |
| XGBoost | Gkt-1 | Green-Red | 0.71 | 0.22 | 0.46 | 0.13 | 0.89 | 0.94 |
| XGBoost | Gkt-1 | Blue-Red | 0.72 | 0.24 | 0.47 | 0.14 | 0.88 | 0.93 |
| XGBoost | S-2 | Blue-Green | 0.59 | 0.35 | 0.32 | 0.28 | 0.85 | 0.91 |
| XGBoost | S-2 | Green-Red | 0.75 | 0.42 | 0.48 | 0.28 | 0.86 | 0.91 |
| XGBoost | S-2 | Blue-Red | 0.78 | 0.42 | 0.47 | 0.28 | 0.84 | 0.91 |
| XGBoost | Aerial | Blue-Green | 0.17 | 0.16 | 0.06 | 0.05 | 0.96 | 0.96 |
| XGBoost | Aerial | Green-Red | 0.18 | 0.16 | 0.07 | 0.05 | 0.96 | 0.96 |
| XGBoost | Aerial | Blue-Red | 0.18 | 0.16 | 0.05 | 0.05 | 0.96 | 0.96 |
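
To make the gap between the plain and (B) columns easier to read, the short sketch below converts the Random Forest Blue-Green RMSE values from the table into relative reductions. It assumes that (B) marks the runs that include the brightness feature, which the table itself does not state. The function name `relative_reduction` is illustrative.

```python
# Random Forest, Blue-Green band pair: RMSE in metres copied from the table,
# stored as (without (B), with (B)).
RMSE_BLUE_GREEN = {
    "Gkt-1": (0.58, 0.21),
    "S-2": (0.59, 0.35),
    "Aerial": (0.17, 0.16),
}


def relative_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    return 100.0 * (before - after) / before


reductions = {
    sensor: round(relative_reduction(before, after), 1)
    for sensor, (before, after) in RMSE_BLUE_GREEN.items()
}

for sensor, pct in reductions.items():
    print(f"{sensor}: RMSE lower by {pct}%")
# Gkt-1: RMSE lower by 63.8%
# S-2: RMSE lower by 40.7%
# Aerial: RMSE lower by 5.9%
```

On these numbers the reduction is largest for the Göktürk-1 imagery and smallest for the aerial imagery, whose RMSE is already low without (B).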

| Information/Depth Range (m) | 0–10 (a) | 0–10 (b) | 0–15 (c) | 0–20 (d) |
|---|---|---|---|---|
| Training Data Amount | 10 K | 10 K | 11 K | 12 K |
| Training Data Source | SBE Only | SBE and LiDAR | SBE and LiDAR | SBE and LiDAR |
| RMSE (m) | 0.77 | 0.67 | 1.41 | 2.6 |
| MAE (m) | 0.53 | 0.32 | 0.88 | 1.73 |
| R² | 0.46 | 0.95 | 0.88 | 0.73 |
| Pearson-R | 0.68 | 0.97 | 0.97 | 0.85 |
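
The four accuracy measures reported in the tables can be computed as in the minimal sketch below, using their standard textbook definitions. Whether the authors used exactly these definitions (in particular R² as one minus the ratio of residual to total sum of squares) is an assumption. The depth values are made up for illustration.

```python
import math
from typing import Sequence


def rmse(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Root mean square error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


def mae(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(o - p) for o, p in zip(obs, pred)) / len(obs)


def r_squared(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_obs = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - ss_res / ss_tot


def pearson_r(obs: Sequence[float], pred: Sequence[float]) -> float:
    """Pearson correlation coefficient."""
    mean_obs = sum(obs) / len(obs)
    mean_pred = sum(pred) / len(pred)
    cov = sum((o - mean_obs) * (p - mean_pred) for o, p in zip(obs, pred))
    var_obs = sum((o - mean_obs) ** 2 for o in obs)
    var_pred = sum((p - mean_pred) ** 2 for p in pred)
    return cov / math.sqrt(var_obs * var_pred)


# Made-up depths (m): every prediction is 0.5 m too deep.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.5, 3.5, 4.5]

print(rmse(observed, predicted))       # 0.5
print(mae(observed, predicted))        # 0.5
print(r_squared(observed, predicted))  # approximately 0.8
print(pearson_r(observed, predicted))  # 1.0
```

In this toy case a constant bias lowers R² while leaving Pearson's R at 1, which is one reason the two measures are reported side by side.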

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gülher, E.; Alganci, U. Sensor Synergy in Bathymetric Mapping: Integrating Optical, LiDAR, and Echosounder Data Using Machine Learning. Remote Sens. 2025, 17, 2912. https://doi.org/10.3390/rs17162912
