Abstract

Hyperspectral image classification (HSIC) is a key task in remote sensing, but the complex nature of hyperspectral data poses serious challenges to traditional methods. Although deep learning significantly improves classification performance through automatic feature extraction, manually designed network architectures depend heavily on expert experience and lack flexibility. Neural architecture search (NAS) offers a new approach to HSIC by automating network structure optimization. This article systematically reviews the progress of NAS in HSIC. First, the core components of NAS are analyzed, and the characteristics of various methods are compared in terms of search space, search strategy, and performance evaluation. Then, the review focuses on NAS techniques based on convolutional neural networks, covering 1D, 2D, and 3D convolutional architectures and their integration with other techniques, and highlights the advantages of NAS in HSIC. However, NAS still faces challenges such as high computational resource requirements and insufficient interpretability. As the first systematic review of NAS applications in HSIC, this article helps readers quickly understand the development of NAS in HSIC and the strengths and weaknesses of various techniques, and it proposes possible future research directions.

1. Introduction

Hyperspectral imaging technology is an important imaging method in the field of remote sensing. It acquires continuous electromagnetic spectral data ranging from visible light to near-infrared and even short-wave infrared bands, providing richer information for characterizing surface objects. Compared to traditional multispectral imaging systems [1], hyperspectral imaging can collect hundreds of narrow spectral bands over the same area, with each band typically having a bandwidth of only a few to several tens of nanometers. This capability allows hyperspectral imaging to capture more detailed and precise spectral features. In hyperspectral images (HSIs), each pixel contains not only conventional spatial information but also rich spectral information. Specifically, the spectrum of each pixel can be represented as a high-dimensional vector, where each element corresponds to the spectral reflectance at a particular wavelength. This high-dimensional spectral information allows the spectral characteristics of each pixel to be precisely compared with those of other pixels, enabling accurate discrimination of different materials or objects in the image. Since hyperspectral imaging can capture extremely subtle spectral differences, it has significant advantages in many applications that require precise identification and classification [2].
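To make the idea of comparing pixel spectra concrete, the following minimal Python sketch treats each pixel as a vector of per-band reflectances and measures their similarity with the spectral angle. The spectral angle is our choice of example measure (it is not named in the text above), and the 5-band spectra are made-up illustrative values, not measured data.

```python
import math


def spectral_angle(a, b):
    """Spectral angle (radians) between two pixel spectra a and b.

    Each spectrum is a sequence of reflectance values, one per band.
    A smaller angle means more similar spectral shapes; the angle is
    unaffected by uniformly scaling either spectrum (e.g., brightness).
    """
    if len(a) != len(b):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_theta = dot / (norm_a * norm_b)
    # Clamp to [-1, 1] to guard against floating-point round-off.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


# Hypothetical 5-band spectra (illustrative values only).
vegetation = [0.05, 0.08, 0.06, 0.45, 0.50]
brighter_vegetation = [2 * v for v in vegetation]
soil = [0.15, 0.20, 0.25, 0.30, 0.33]

print(spectral_angle(vegetation, brighter_vegetation))
print(spectral_angle(vegetation, soil))
```

Because the angle depends only on the direction of the vectors, a uniformly brighter copy of a spectrum has an angle of essentially zero to the original, whereas spectra with different shapes yield a clearly larger angle.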

For instance, in environmental science [3], hyperspectral imaging can be used to monitor vegetation health [4], soil conditions [5], and pollutants [6], identify water pollution sources [7], and assess changes in forest cover [8]. In agriculture, it enables fine-grained monitoring of crop growth [9] and provides real-time information on soil moisture, plant diseases, and pests, supporting precision agriculture and helping farmers increase crop yields and reduce pesticide use. In the mining industry, hyperspectral imaging can be used to identify mineral types and distributions and to assess the mining potential of resources, aiding mineral exploration and extraction [10]. Furthermore, hyperspectral imaging is widely applied in military reconnaissance, urban planning, disaster monitoring, and ocean observation. In these fields, by capturing and analyzing subtle spectral features, HSIs provide higher precision in land cover classification, change detection, and environmental assessment than traditional remote sensing techniques.

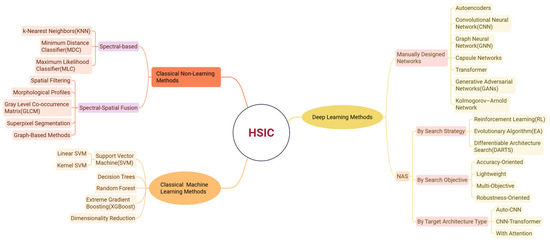

Hyperspectral image classification (HSIC) is one of the core tasks of hyperspectral remote sensing technology, aimed at assigning a unique land cover class label to each pixel in the image [11]. However, classification tasks face numerous challenges due to the high-dimensional nature of hyperspectral data, strong inter-band correlations, and the presence of mixed pixels. A mind map of the HSIC method is shown in Figure 1.

Figure 1.

Mind map of HSIC method.

First, a significant characteristic of HSIs is the extremely high dimensionality of spectral information. Each pixel contains hundreds of reflectance values across different bands, covering wavelengths from visible light to near-infrared and even short-wave infrared regions. Due to the large number of bands and the narrow intervals between adjacent bands, directly processing these high-dimensional data results in a substantial computational burden. Additionally, there are often strong correlations between the bands in HSIs, leading to information redundancy. This redundancy not only increases the computational load but also introduces the risk of overfitting in classification models, ultimately affecting the classification performance.

Secondly, HSIs exhibit the phenomena of “different objects with the same spectrum” and “the same object with different spectra.” That is, the spectral features of different objects may be highly similar within certain bands, while the same object may exhibit different spectral signatures under different conditions (e.g., varying illumination or growth stage). These characteristics lead to highly nonlinear relationships in the data, making it difficult for traditional statistical pattern recognition methods to handle such data effectively and thereby increasing the difficulty of classification. Furthermore, due to the relatively low spatial resolution of HSIs, a single pixel often covers a mixture of multiple land cover types. This mixed pixel phenomenon implies that the spectrum of a single pixel may combine contributions from different land covers, making accurate classification challenging. For example, a pixel may contain spectral features of vegetation, soil, and water, a situation that is quite common in HSIs. Mixed pixels therefore present an additional challenge for accurate classification and require more sophisticated models and methods to handle effectively.
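The mixed-pixel problem is often described with a linear mixing model, in which an observed pixel spectrum is an abundance-weighted sum of the spectra of the pure materials (endmembers) it covers. The model choice, the function name `mix_spectra`, and the endmember values below are illustrative assumptions, not part of the reviewed methods.

```python
def mix_spectra(endmembers, abundances):
    """Linear mixing model: a mixed pixel as an abundance-weighted sum of endmember spectra.

    endmembers: list of spectra (one list of per-band values per material).
    abundances: fraction of the pixel covered by each material; must be
    non-negative and sum to 1.
    """
    if len(endmembers) != len(abundances):
        raise ValueError("need exactly one abundance per endmember")
    if any(a < 0 for a in abundances) or abs(sum(abundances) - 1.0) > 1e-9:
        raise ValueError("abundances must be non-negative and sum to 1")
    n_bands = len(endmembers[0])
    return [sum(a * e[b] for a, e in zip(abundances, endmembers)) for b in range(n_bands)]


# Hypothetical 5-band endmember spectra (illustrative values only).
vegetation = [0.05, 0.08, 0.06, 0.45, 0.50]
soil = [0.15, 0.20, 0.25, 0.30, 0.33]
water = [0.06, 0.05, 0.03, 0.01, 0.01]

# A pixel covering 50% vegetation, 30% soil, and 20% water.
pixel = mix_spectra([vegetation, soil, water], [0.5, 0.3, 0.2])
print([round(v, 3) for v in pixel])
```

The resulting spectrum matches none of the three endmembers, which is why a classifier that must assign a single label to such a pixel faces an inherently ambiguous decision.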

Another common challenge in supervised HSIC is the scarcity of training samples. Labeling each pixel with its land cover class requires significant manual effort, and acquiring training samples is time-consuming and labor-intensive, resulting in small labeled datasets. The lack of sufficient labeled samples limits the effectiveness of classification model training. Insufficient samples may prevent classifiers from effectively learning the diversity and complexity of land cover types, thereby affecting classification accuracy and generalization capabilities. Additionally, because spectral differences between different land covers can be quite small—especially for land covers with similar spectral characteristics—the limited number of labeled samples may not fully represent the variation in different land covers, further reducing classification accuracy.

In the early stages of HSIC research, the focus was primarily on utilizing the spectral information of HSIs in combination with traditional pattern recognition techniques for pixel-level classification [12]. For instance, the K-nearest neighbor classifier [13,14] has been widely applied in HSIC tasks owing to its simple theory and procedure, while support vector machines [15,16] have also achieved satisfactory results in HSIC. Additionally, methods such as logistic regression [17], sparse representation-based classifiers [18], and maximum likelihood classifiers [19] have been extensively used and have shown promising performance in practice. However, for HSIs with complex land cover distributions, relying solely on spectral information often fails to accurately distinguish between different land cover classes [20]. Therefore, many researchers have started to incorporate spatial information into HSIC methods. Such approaches are generally referred to as spectral–spatial feature-based classification methods. For example, the Markov random field model [21] is commonly used to extract spatial information from HSIs and has achieved some success. In addition to the Markov random field model, researchers have proposed morphology-based methods to effectively integrate spatial and spectral information in HSIs [22,23]. Similarly, texture feature descriptors and Gabor filters have been employed to extract joint spatial–spectral information from HSIs [24,25]. Most of the methods mentioned above rely heavily on manually extracted spatial and spectral features, which depend largely on the expertise and intuition of domain experts. While these approaches have achieved a certain level of success, they involve a cumbersome feature engineering process. Fortunately, deep learning techniques provide a more effective solution for feature extraction from hyperspectral images [26].
Specifically, deep learning methods are capable of automatically learning abstract, high-level feature representations directly from raw data, without the need for complex and time-consuming manual feature design. By progressively aggregating low-level features, deep learning models can effectively capture both spatial and spectral information from images, thereby reducing the reliance on expert knowledge that traditional methods often require [27,28]. Lin et al. [29] were among the first to apply deep learning techniques to HSIC, achieving significant improvements in classification performance. Following this, Chen et al. [30] proposed a stacked autoencoder model for extracting high-level features from HSIs, further enhancing the classification results. Additionally, Mou et al. [31] leveraged recurrent neural networks to tackle HSIC problems.

In recent years, convolutional neural networks have become one of the most powerful tools for HSIC, with many convolutional neural network-based methods outperforming traditional support vector machine-based methods in classification accuracy [32,33,34,35,36,37]. For instance, Makantasis et al. [38] utilized a convolutional neural network to jointly encode spatial and spectral information from HSIs, employing a multi-layer perceptron for pixel classification with promising results. Moreover, Lee et al. [34] designed a contextual deep convolutional neural network that extracts contextual information by exploring the spatial–spectral relationships between neighboring pixels, thus improving classification accuracy. Compared to traditional hand-crafted feature extraction methods, deep learning-based models can exploit the deep features within HSIs more effectively, offering stronger feature representation ability and robustness [39]. As a result, deep learning-based approaches have become the mainstream in HSIC [40]. These approaches include models such as deep belief networks [41], capsule networks [42], and graph neural networks [43,44], which have shown excellent performance across a variety of applications. Among them, graph neural networks model image pixels or superpixels as graph nodes and construct edges using the spatial–spectral similarity between nodes [45]; in this way, they can explicitly capture the complex irregular spatial structures and long-range dependencies in hyperspectral data [46], overcoming the limited local receptive field of traditional convolutional neural networks (CNNs) [47]. Transformer-based models [48] further enhance global context modeling through self-attention mechanisms, learning spectral–spatial relationships without predefined receptive fields.
Recently, lightweight and hybrid variants have been proposed specifically for hyperspectral tasks. Mamba-based architectures [49] provide efficient long-range dependency modeling with linear complexity, making them well suited to high-dimensional spectral sequences. Capsule networks [50] improve robustness to spatial transformations by preserving hierarchical part–whole relationships through a process called dynamic routing, in which lower-level capsules dynamically agree on which higher-level capsules to activate. This mechanism improves classification consistency under variations such as rotation or viewpoint changes in complex scenes. Kolmogorov–Arnold Networks (KANs) [51] offer a novel function-based representation, modeling highly nonlinear spectral responses through compositions of learned univariate functions.

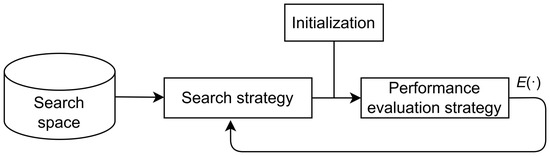

However, despite the significant success of deep learning techniques in HSIC, the models mentioned above typically rely on manually designed network architectures. In practical applications, designing an optimal network architecture is a complex and time-consuming task. It requires not only deep expertise from researchers but also extensive experimentation and iterative fine-tuning to validate the effectiveness of each design decision. Researchers often need to adjust multiple factors, such as the number of layers, the number of neurons in each layer, and the connection patterns between layers, to find the most suitable architecture for a specific task. There is no single universally optimal architecture; different tasks and datasets require architectures tailored to their specific characteristics. This process relies heavily on the researchers’ experience and understanding of the data, which introduces subjectivity and uncertainty into the design and often makes it time-consuming and computationally intensive [52]. As research in this field progresses, an increasing number of researchers recognize the limitations of manually designed architectures and are exploring more efficient, automated methods for architecture design [53]. Since Zoph et al. [54] successfully applied reinforcement learning to neural architecture search, this line of research has attracted widespread attention. The main goal of neural architecture search is to identify the optimal neural network architecture for a specific task and dataset by optimizing the network structure [52]. Neural architecture search methods generally consist of three key components: the search space, the search strategy, and the performance evaluation strategy [55].
The overall framework is shown in Figure 2.

Figure 2.

The overall framework of neural architecture search. E(·) denotes the expectation operator.

The search space refers to the predefined set of selectable network architecture components, including layer types, the number of layers, and the number of neurons in each layer. Search strategies are responsible for selecting and optimizing the most appropriate combination of these components to achieve optimal performance. Performance evaluation strategies are used to assess the performance of different architectures through training and testing, thereby validating their effectiveness.
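The interplay of the three components can be illustrated with a deliberately simple Python sketch. It assumes a tiny hypothetical search space, uses plain random search as the search strategy (a baseline, not one of the specific strategies reviewed later), and replaces the performance evaluation strategy with a made-up scoring function `evaluate`; in a real NAS pipeline, that step would train each candidate on the hyperspectral data and return its validation accuracy.

```python
import random

# Hypothetical search space: each key is an architectural choice, each list its candidates.
SEARCH_SPACE = {
    "num_layers": [2, 4, 6],
    "kernel_size": [3, 5, 7],
    "channels": [16, 32, 64],
}


def sample_architecture(space, rng):
    """Search strategy (here: plain random search) picks one candidate per choice."""
    return {name: rng.choice(options) for name, options in space.items()}


def evaluate(arch):
    """Stand-in for the performance evaluation strategy.

    A real pipeline would train `arch` and return validation accuracy; this
    made-up deterministic score only keeps the sketch fast and runnable.
    """
    return (-abs(arch["num_layers"] - 4)
            - abs(arch["kernel_size"] - 5) / 2
            + arch["channels"] / 64)


def random_search(space, n_trials=20, seed=0):
    """Sample n_trials architectures and keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_trials):
        arch = sample_architecture(space, rng)
        score = evaluate(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


best, score = random_search(SEARCH_SPACE)
print(best, score)
```

Swapping `sample_architecture` for an evolutionary, reinforcement learning, or gradient-based strategy, or `evaluate` for a cheaper proxy, changes only one component while the overall loop stays the same.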

Although there are excellent review articles in both the HSIC field [56,57,58,59,60] and the neural architecture search field [53,61,62,63], there are relatively few reviews specifically addressing the application of neural architecture search in HSIC. Therefore, this paper aims to provide a comprehensive overview of neural architecture search-based HSIC methods, helping readers interested in this field to quickly and thoroughly understand the latest research developments.

1.1. Literature Selection Criteria

To ensure a comprehensive and systematic review, a rigorous literature search and selection process was employed. The selection criteria are detailed as follows:

Keyword Search: Primary search queries included combinations of core terms such as “neural architecture search”, “NAS”, “automated architecture design”, “hyperspectral image classification”, and “HSIC”.

Database Sources: We systematically queried major scientific databases, prioritizing those with high relevance to this research domain.

IEEE Xplore: This served as the primary source, encompassing leading publication venues such as IEEE Transactions on Geoscience and Remote Sensing, IEEE Geoscience and Remote Sensing Letters, and other IEEE journals.

Remote Sensing: This was included as a key journal focusing on hyperspectral image analysis and related applications.

arXiv: We searched arXiv to capture cutting-edge preprints and emerging trends, while acknowledging their preliminary nature.

Conference Portals: We targeted top-tier AI and computer vision conferences—such as CVPR, ECCV, ICML, and AAAI—where interdisciplinary NAS-HSIC studies are increasingly being reported.

Timeframe: This review primarily focused on literature published between 2018 and 2024. The starting year marks the period when differentiable NAS began gaining prominence and NAS applications in remote sensing emerged, and the end year captures the latest advancements. Foundational earlier studies on NAS or HSIC were also included when relevant.

Screening Process: The selection followed a multi-stage screening procedure:

Initial Screening (Title/Abstract): Articles were first screened for relevance to NAS specifically applied to HSIC. Studies focusing exclusively on general NAS without HSIC applications, or solely on HSIC without NAS, were excluded.

Full-Text Assessment: Potentially relevant articles underwent detailed evaluation, with particular emphasis on methodological innovations in NAS design or adaptations addressing HSIC-specific challenges (e.g., high dimensionality, mixed pixels). Only studies providing empirical evaluations on standard HSI datasets were considered, while those limited to incremental parameter tuning without true architectural search were excluded.

Quality Filtering: Priority was given to peer-reviewed journal articles and conference proceedings. Although arXiv preprints were included, they were critically evaluated for technical soundness. Studies lacking sufficient methodological detail, empirical validation, or clear relevance to NAS-HSIC were excluded. Literature that focuses solely on HSIC or solely on NAS without addressing their intersection was excluded from the core analysis of this review; however, it still provides theoretical support for the background section of this paper.

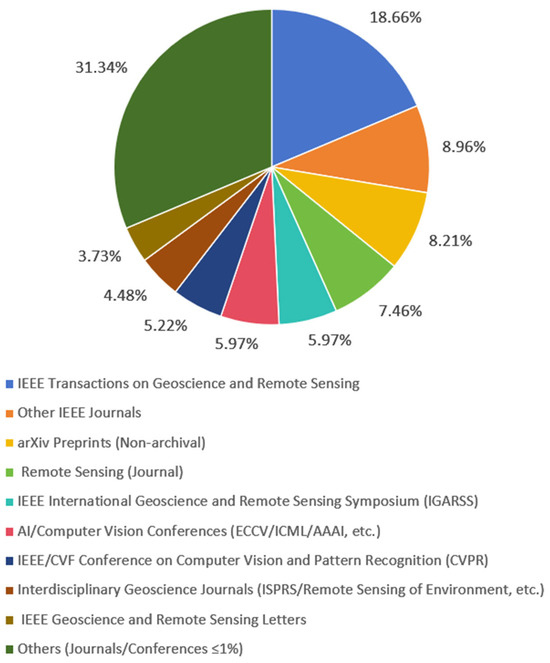

Figure 3 provides a pie chart summarizing the distribution of publication venues for the relevant literature over the past five years. IEEE Transactions on Geoscience and Remote Sensing is the core venue, with a significantly higher share than any other; overall, IEEE journals dominate, reflecting technological continuity in the traditional field of geoscience and remote sensing. Notably, the shares of the preprint platform arXiv and the top computer vision conference CVPR indicate a growing trend toward open sharing and interdisciplinary technology integration.

Figure 3.

Distribution map of literature sources.

1.2. Main Contributions

This comprehensive review paper makes the following significant contributions to the field of NAS for HSIC:

First Systematic Review Focused on NAS-HSIC: To the best of our knowledge, this work presents the first dedicated and systematic review specifically addressing the application and progress of NAS techniques in the domain of HSIC. While excellent reviews exist separately for HSIC and NAS, this paper bridges the gap by providing a focused examination of their intersection.

In-Depth Analysis of NAS Components in HSIC Context: We provide a detailed dissection of the core NAS components—search space, search strategy (including evolutionary algorithms, reinforcement learning, and gradient descent), and performance evaluation strategies—specifically analyzing their characteristics, adaptations, and implications within the unique challenges of hyperspectral data processing.

Structured Taxonomy and Comprehensive Coverage of CNN-based NAS-HSIC Methods: This paper offers a structured taxonomy and thorough examination of prevailing NAS approaches for HSIC, with a particular emphasis on Convolutional Neural Network (CNN) architectures. We meticulously categorize and analyze key developments in:

1D-CNN-based NAS: Focusing on spectral feature extraction.

2D-CNN-based NAS: Balancing spatial–spectral processing with efficiency.

3D-CNN-based NAS: Integrating joint spatial–spectral feature learning and recent innovations (e.g., asymmetric convolutions, attention mechanisms, Transformers).

Critical Analysis of Challenges and Forward-Looking Future Directions: Moving beyond summarizing existing work, we critically analyze the persistent challenges hindering wider NAS adoption in HSIC, namely search efficiency limitations, prohibitive computational costs, and the interpretability dilemma of NAS-generated models. Based on this analysis, we propose concrete and promising future research directions.

Collectively, these contributions provide researchers and practitioners with a valuable resource to quickly grasp the state-of-the-art, understand the strengths and weaknesses of various NAS techniques for HSIC, identify critical research gaps, and guide future advancements in automating efficient and accurate HSI analysis.

The remainder of this paper is organized as follows. Section 2 provides a comprehensive analysis of core NAS methodologies (search space design, search strategies, performance evaluation). Section 3 critically examines specific algorithmic advancements in NAS tailored for HSIC, categorizing them by their underlying network paradigm and highlighting key innovations. Performance results on representative hyperspectral datasets are discussed within this section to validate the effectiveness of the reviewed algorithms. Section 4 discusses the persistent challenges and limitations of applying NAS in the HSIC domain. Finally, Section 5 concludes this paper and outlines promising future research directions focused on advancing NAS algorithms for hyperspectral analysis.

2. Neural Architecture Search

2.1. Search Space

The search space defines the set of neural architectures that a neural architecture search algorithm can potentially discover. Its design plays a crucial role in the ultimate performance of the algorithm: it not only defines the degrees of freedom of the search process but also largely determines the upper bound of the performance that the algorithm can achieve.

A search space with too many degrees of freedom may consume excessive computational resources, while one that is too small may exclude potentially optimal architectures. Therefore, the search space must be designed carefully. For a given task, prior knowledge, such as typical network architectures and established network construction rules, is often used to narrow down the search space and speed up the search process. However, this approach can also limit the algorithm’s ability to discover novel architectures that go beyond current human knowledge.

More specifically, the search space is typically composed of a predefined set of operations, such as convolution operations, transposed convolutions, pooling, and fully connected layers, as well as neural network architecture configurations, such as architecture templates, connection methods, and the number of convolutional channels in the initial stages for feature extraction. These operations and architectural configurations define the set of neural architectures that the neural architecture search algorithm can explore within its search space.

The fundamental operations in neural architecture search still originate from artificial neural networks. Convolutional neural networks consist of a series of operations such as convolution, pooling, and fully connected layers, making these operations a basic component of the neural architecture search operation set. Typically, the hyperparameters of these operations, such as the size of convolutional kernels, the number of channels, the stride, the size of pooling filters, and the number of neurons in each layer of a fully connected network, are also part of the search space. Neural architecture search discretizes these parameters into a list of candidate settings, which includes hyperparameter configurations commonly used in manually designed neural networks.
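As a small illustration of this discretization, the sketch below lists hypothetical candidate values for three hyperparameters of a single convolutional layer and enumerates every resulting configuration with `itertools.product`. The specific values are assumptions chosen for illustration.

```python
from itertools import product

# Hypothetical candidate lists for one convolutional layer's hyperparameters.
CONV_CHOICES = {
    "kernel_size": [1, 3, 5],
    "channels": [16, 32, 64],
    "stride": [1, 2],
}


def enumerate_layer_configs(choices):
    """Yield every discrete configuration of a single layer as a dict."""
    names = list(choices)
    for values in product(*(choices[n] for n in names)):
        yield dict(zip(names, values))


configs = list(enumerate_layer_configs(CONV_CHOICES))
print(len(configs))  # 3 * 3 * 2 = 18
print(configs[0])
```

Even this toy example yields 18 options for one layer; the count multiplies with every additional hyperparameter, candidate value, and layer, which is why the structure of the search space matters so much.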

By combining the above operation set space with the network architecture, we can fully define a neural architecture search space. This comprehensive encoding of both operations and architectures allows neural architecture search to explore the vast space of possible configurations, efficiently searching for the optimal network architecture. The use of discrete search spaces and encoding schemes enables the algorithm to handle a wide variety of architectures and operations, making it more flexible and scalable.

2.1.1. Global Search Space

When manually designing a layer in an artificial neural network, the parameters that need to be determined include the size and number of convolutional kernels, the type and stride of convolution, the number of fully connected layers, and residual connections. In neural architecture search, determining these hyperparameters layer by layer is equivalent to directly searching for the entire neural network structure. This search space is referred to as the global search space. Depending on the network shape, the global search space can be divided into chain structures and multi-branch structures.

In a chain structure, each hidden layer of the neural network is connected only to its immediately preceding and following layers, with no cross-layer connections. The entire network is a stack of convolutional, pooling, and fully connected layers, forming a chain. LeNet [64] and VGG [65] are typical examples of chain-structured networks. However, chain structures are limited in scale and learning capability and are prone to vanishing or exploding gradients as depth increases. To address these challenges, multi-branch network structures emerged. These structures add branches to the chain structure, allowing cross-layer connections. GoogLeNet’s Inception module [66], ResNet’s residual module [67], and DenseNet’s dense connections [68] all feature multi-branch structures.

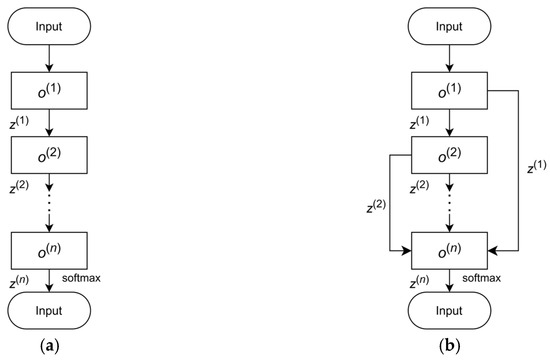

Multi-branch structures enable the fusion of features from different layers and alleviate the problems of vanishing and exploding gradients. As a result, they have become the dominant network architecture in current deep learning research and applications. Whether chain-structured or multi-branch, a neural network can be viewed as a directed acyclic graph (DAG) between the input and output nodes. The structural diagram is shown in Figure 4.

Figure 4.

Directed acyclic schematic diagrams of neural networks. (a) Simple chained directed acyclic graph; (b) residual network directed acyclic graph.

Therefore, the computation of a neural network can be expressed as:

$$x_i = o_i\left(x_{i-1}\right) \quad \text{(chain structure)}, \qquad x_i = o_i\Big(\bigoplus_{j \in \mathcal{P}(i)} x_j\Big) \quad \text{(multi-branch structure)}$$

where $o_i$ is an operation from the candidate set, namely the $i$-th operation in the chain structure or the operation of the $i$-th node in the branch structure; $x_i$ is the result of the operation $o_i$; $\mathcal{P}(i)$ is the set of earlier nodes with an edge into node $i$; and $\bigoplus$ represents a summation or merging operation. The global search space is equivalent to searching all the operators in the directed acyclic graph of the neural network. Most deep neural networks consist of dozens of hidden layers, which typically comprise tens to hundreds of such operators. Exhaustively traversing the global search space formed by these operators would therefore be computationally infeasible.
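A network viewed as a DAG can be evaluated by computing each node's output from the merged outputs of its predecessor nodes. In the minimal sketch below, scalars stand in for feature maps, summation plays the role of the merging operation, and the operations `inc` and `double` as well as the connection lists are hypothetical. A chain corresponds to every node taking only its immediate predecessor; a residual (multi-branch) connection corresponds to a node merging several earlier outputs.

```python
def run_dag(x0, ops, preds, merge=sum):
    """Evaluate a neural network represented as a directed acyclic graph.

    Node 0 holds the input x0. For node i (i = 1..n), ops[i - 1] is its
    operation and preds[i - 1] lists the indices of earlier nodes whose
    outputs are merged (summed by default) before the operation is applied.
    Returns the output of the last node.
    """
    outputs = [x0]
    for op, p in zip(ops, preds):
        i = len(outputs)  # index of the node being computed
        if not p or any(j < 0 or j >= i for j in p):
            raise ValueError(f"node {i} must take inputs from earlier nodes only")
        outputs.append(op(merge(outputs[j] for j in p)))
    return outputs[-1]


def inc(x):
    return x + 1


def double(x):
    return 2 * x


ops = [inc, double, inc]
chain = run_dag(1, ops, preds=[[0], [1], [2]])        # inc(double(inc(1)))
residual = run_dag(1, ops, preds=[[0], [1], [1, 2]])  # node 3 also receives node 1
print(chain, residual)  # 5 7
```

Because the nodes are listed in topological order, each node's inputs are available when it is evaluated; searching the global space amounts to choosing every entry of `ops` (and, for multi-branch networks, of `preds`).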

2.1.2. Local Search Space

To save computational resources, and based on the experience that manually designed neural network structures often reuse the same modules, Zoph [69] proposed a search space based on the cell structure, where the search process for the neural architecture is performed within the cell. Once the cell structure is determined, cells are stacked according to predefined rules to form the final network structure. Zoph [69] classified cells into two types: normal cells and reduction cells. The normal cell returns feature maps of the same size as the input feature maps, while the reduction cell performs downsampling and doubles the number of channels. Since the internal structure of the same type of cell is identical, the neural architecture search is simplified to searching within the two types of cell structures, significantly improving the search efficiency.
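A rough count shows why this restriction helps. The sketch below assumes a simplified setting (our assumption, not a formula from the cited work): both the global network and each cell are chains, every layer picks one of `num_ops` candidate operations, and only two cell types, normal and reduction, are searched.

```python
def global_space_size(num_ops, num_layers):
    """Chain-structured global space: each layer independently picks one operation."""
    return num_ops ** num_layers


def cell_space_size(num_ops, layers_per_cell, num_cell_types=2):
    """Cell-based space: only the cell types are searched and then reused when
    stacking, so the total network depth does not enter the count."""
    return num_ops ** (layers_per_cell * num_cell_types)


print(global_space_size(8, 20))  # 1152921504606846976 (about 1.2e18)
print(cell_space_size(8, 5))     # 1073741824 (about 1.1e9)
```

With 8 candidate operations, searching a 20-layer network layer by layer gives roughly 1.2 × 10^18 candidates, whereas searching two 5-layer cell types gives roughly 1.1 × 10^9, regardless of how many times the cells are stacked.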

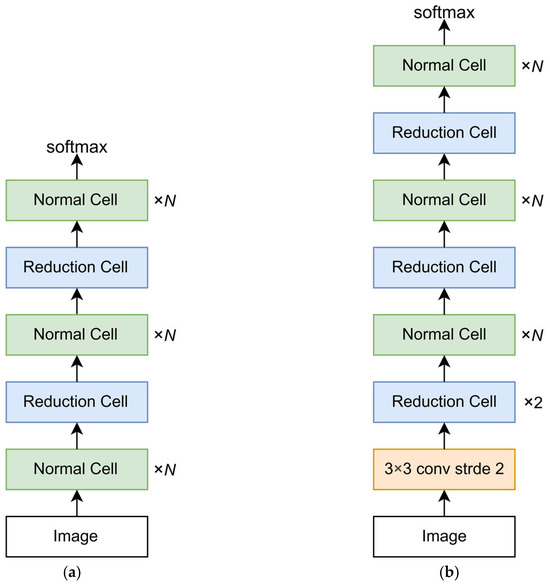

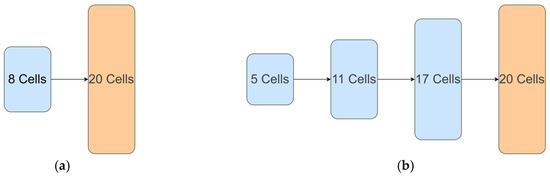

Based on experience in manually designing neural network structures, it is common to stack several normal cells consecutively before adding a reduction cell. This approach helps control the computational cost and extract progressively higher-level features. On this basis, two cell-based network frameworks have been proposed for the CIFAR-10 and ImageNet datasets, as shown in Figure 5.

Figure 5.

Cell-based search space neural network architecture. (a) CIFAR-10; (b) ImageNet.

When designing a cell-based search space, classical experience from manually designed neural networks can also serve as a reference, and more flexible and effective structures can be incorporated into the cell to improve its performance. Cai et al. [70] introduced the dense connections of DenseNet [68] into the cell search space. Rawal et al. [71] fixed the number of repetitions of the two cell types, while Liu et al. [72] placed reduction cells at one-third and two-thirds of the network depth. Huang et al. [73] proposed a differentiable neural architecture search algorithm operating at both the cell and network levels to search for a lightweight single-image super-resolution model, aiming to construct a lighter and more accurate super-resolution structure.
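The stacking rules described above can be sketched as a small helper that lays out a cell-based network. It assumes the placement rule attributed to Liu et al. [72] (reduction cells at one-third and two-thirds of the depth), the channel doubling of reduction cells described earlier, and a hypothetical initial width of 16 channels; spatial sizes are not tracked.

```python
def cell_layout(num_cells, init_channels=16):
    """Return a list of (cell_type, channels) for a stacked cell network.

    Reduction cells sit at one-third and two-thirds of the depth; every other
    position holds a normal cell. A reduction cell doubles the channel count
    (and would halve spatial resolution, which is not modeled here); a normal
    cell keeps the channel count unchanged.
    """
    reduction_positions = {num_cells // 3, 2 * num_cells // 3}
    channels = init_channels
    layout = []
    for i in range(num_cells):
        if i in reduction_positions:
            channels *= 2
            layout.append(("reduction", channels))
        else:
            layout.append(("normal", channels))
    return layout


layout = cell_layout(8)
for cell in layout:
    print(cell)
```

For eight cells this yields two normal cells, a reduction cell, two more normal cells, a second reduction cell, and two final normal cells, so several normal cells are stacked between consecutive reductions.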

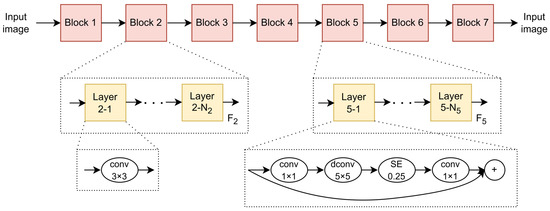

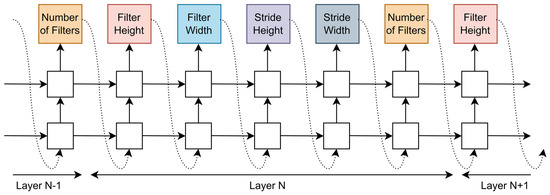

MNASNet [74] (Platform-Aware Neural Architecture Search for Mobile) proposes a hierarchical search space built on the cell-based search space. In this approach, cells are further subdivided into smaller structural components called blocks. Each block can have a different internal layer structure, such as Layer 2-1 and Layer 5-1. Within the same block, the layers generally share the same structure; the key difference is that the first layer (such as Layer 5-1) has a stride of 2 for downsampling, while the other layers (such as Layer 5-2 to Layer 5-N5) have a stride of 1. During the search process, MNASNet needs to determine the operations and connections for each block, as shown in Figure 6. The search space within each block thus still follows the cell idea, with identical layers stacked together, so the block-based search space can be viewed as a further encapsulation of the cell-based one.

Figure 6.

The overall framework of MNASNet.
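The block-to-layer relationship described for MNASNet can be sketched as follows. Each hypothetical block is given as an operation name, a number of layers, and the stride of its first layer; the helper expands it into individual layers, giving the first layer the block's stride and the remaining layers stride 1. The operation names and block settings are placeholders, not the actual MNASNet search results.

```python
def expand_blocks(blocks):
    """Expand a block-level description into individual layers.

    Each block is (op_name, num_layers, first_stride). All layers in a block
    share the same operation; only the first layer uses the block's stride
    (e.g., 2 for downsampling), and the remaining layers use stride 1.
    Layers are named "Layer b-j" (block b, layer j).
    """
    layers = []
    for b, (op, n, first_stride) in enumerate(blocks, start=1):
        for j in range(1, n + 1):
            stride = first_stride if j == 1 else 1
            layers.append((f"Layer {b}-{j}", op, stride))
    return layers


# Hypothetical per-block choices of the kind a block-level search would decide.
blocks = [("conv3x3", 1, 1), ("mbconv3_k3", 2, 2), ("mbconv6_k5", 3, 2)]
layers = expand_blocks(blocks)
for layer in layers:
    print(layer)
```

The search only has to decide one entry per block rather than one per layer, which is the source of the efficiency gain over a fully layer-wise global search.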

Both the global search space and the local search space incorporate prior knowledge from manually designed architectures. As the network depth increases, the number of channels in the feature maps also grows. While a global search space makes it easier to obtain deep networks that extract high-level features, it also increases design and search complexity. Stacking cells is a key operation for balancing network depth against search difficulty, and the cell-based search space has proven to be an effective approach to neural network architecture design. The block-based search space strikes a balance between the diversity of the global search space and the lightweight nature of the cell-based structure. Currently, the predominant search space designs for neural architecture search are based on cells and blocks.

2.1.3. Addressing HSIC Challenges Through Search Space Design

The design of the NAS search space is strongly shaped by the unique characteristics of hyperspectral data:

High Dimensionality: HSIs consist of hundreds of spectral bands, resulting in extremely high input dimensionality. Performing a global search over all possible network layer configurations across the entire spectral depth becomes computationally prohibitive. This challenge strongly motivates the adoption of localized search spaces. By constraining the search to reusable modules—such as cells or blocks—and stacking them hierarchically, NAS significantly reduces the complexity of the search while still capturing essential spatial–spectral patterns. Furthermore, search spaces are often enriched with operations specifically designed for spectral processing, such as 1D convolutions operating exclusively along the spectral dimension, or factorized 3D convolutions that decouple spatial and spectral processing. These strategies mitigate the parameter explosion typically associated with full 3D convolutional kernels.

Spatial–Spectral Correlations and Mixed Pixels: Accurate classification of mixed pixels requires effective modeling of the complex interactions among neighboring pixels (spatial context) and across spectral bands. The search space must therefore be flexible enough to contain architectures capable of such fusion. This entails including diverse operations such as 2D convolutions (focusing on spatial features), 3D convolutions (joint spatial–spectral modeling), recurrent connections (modeling spectral sequences), attention mechanisms (highlighting informative bands or spatial regions), and skip connections (alleviating vanishing gradients in deeper architectures). The choice between chain-structured and multi-branch architectures, whether at the level of the global search space or within cell-level designs, directly affects the model’s ability to learn complex spatial–spectral relationships. Multi-branch structures, which are common in successful NAS designs for HSIC, inherently promote feature fusion, which is critical for handling mixed pixels.

Limited Labeled Samples: Excessively large or complex search spaces increase the risk of overfitting, especially under limited training data. Therefore, principled search space design, guided by domain knowledge—for example, favoring smaller convolutional kernels in early layers or incorporating regularization operations such as dropout—can constrain the search towards architectures with better generalization ability. This is essential for achieving robust performance in small-sample HSIC tasks.
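To make the parameter argument in the High Dimensionality point concrete, the following sketch counts weights for a full 3D kernel, a factorized spatial-then-spectral pair, and a purely spectral 1D convolution. The layer sizes (32 channels, a 3 × 3 spatial window, a 7-band spectral window) are illustrative assumptions, biases are ignored, and the factorized variant assumes an intermediate width equal to the output width.

```python
def full_3d_params(c_in: int, c_out: int, k_spatial: int, k_spectral: int) -> int:
    """Weights of a full 3D kernel spanning k_spatial x k_spatial pixels and k_spectral bands."""
    return c_in * c_out * k_spatial * k_spatial * k_spectral


def factorized_3d_params(c_in: int, c_out: int, k_spatial: int, k_spectral: int) -> int:
    """A 1 x k x k spatial convolution followed by a k_spectral x 1 x 1 spectral convolution.
    The intermediate width is assumed to equal c_out."""
    spatial = c_in * c_out * k_spatial * k_spatial
    spectral = c_out * c_out * k_spectral
    return spatial + spectral


def spectral_1d_params(c_in: int, c_out: int, k_spectral: int) -> int:
    """A 1D convolution that slides only along the spectral axis."""
    return c_in * c_out * k_spectral


print(full_3d_params(32, 32, 3, 7))        # 64512
print(factorized_3d_params(32, 32, 3, 7))  # 16384
print(spectral_1d_params(32, 32, 7))       # 7168
```

In this configuration the factorized pair needs roughly a quarter of the weights of the full 3D kernel, because the spatial and spectral extents are added rather than multiplied.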

2.2. Search Strategy

After determining the search space, we need to find the architecture that performs optimally, a process known as architecture optimization. Currently, there are various search strategies available for finding the optimal neural architecture. In the following, we will provide a detailed introduction to the search strategies commonly used in the hyperspectral domain.

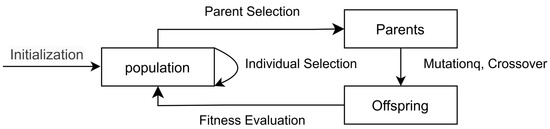

2.2.1. Evolutionary Algorithm

Evolutionary algorithms (EAs) [75] have been widely applied as a metaheuristic optimization method for neural architecture search. They are population-based search strategies inspired by the process of natural evolution, designed to optimize network architectures, as shown in Figure 7.

Figure 7.

Schematic diagram of evolutionary algorithm.

Population initialization is the starting point of an evolutionary algorithm and directly affects both search efficiency and final performance. Early studies tended to construct simple, small-scale populations to reduce computational cost, but as the search space expands, balancing diversity against complexity becomes a key issue. NasNet [69] introduced evolutionary algorithms into NAS for the first time. Its initial population consists of 1000 minimal networks containing only global pooling layers, and network depth is gradually extended by eliminating low-accuracy individuals generation by generation while retaining the parents' weights. Although this reduces the initial computational overhead, the lack of structural diversity may trap the search in local optima. The subsequent MNASNet [73] improves on this by randomly generating 20 architectural units as the initial population, increasing diversity through genetic recombination, and setting an accuracy threshold to eliminate poor-quality offspring, thereby balancing exploration and exploitation. To address the slow evolution of large-scale networks, Chen [76] proposed the Net2Net framework, which accelerates the search through knowledge transfer: small networks are trained first, and their weights are then used as prior knowledge to initialize the corresponding parts of larger networks. Experiments show that this method saves about 30% of the training time on ImageNet.
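The generic loop behind these methods (random initialization, parent selection, mutation, elimination of the weakest individual) can be sketched in a few lines. This is a minimal illustration: `fitness` is a hypothetical stand-in for validation accuracy (a real search would train each network), the operation list is invented, and tournament selection is one common choice rather than the exact scheme of any method cited above.

```python
import random

OPS = ["conv3x3", "conv5x5", "sep_conv3x3", "max_pool", "skip"]

# Hypothetical per-operation scores standing in for trained validation accuracy.
OP_SCORE = {"sep_conv3x3": 1.0, "conv3x3": 0.8, "conv5x5": 0.6, "skip": 0.2, "max_pool": 0.0}


def fitness(arch):
    return sum(OP_SCORE[op] for op in arch)


def mutate(arch, rng):
    """Point mutation: replace the operation at one random position."""
    child = list(arch)
    pos = rng.randrange(len(child))
    child[pos] = rng.choice([op for op in OPS if op != child[pos]])
    return child


def evolve(pop_size=20, arch_len=6, generations=200, tournament=5, seed=0):
    rng = random.Random(seed)
    # 1. Random initialization of the population.
    population = [[rng.choice(OPS) for _ in range(arch_len)] for _ in range(pop_size)]
    scored = [(fitness(a), a) for a in population]
    initial_best = max(f for f, _ in scored)
    for _ in range(generations):
        # 2. Tournament selection: the fittest of a random sample becomes the parent.
        parent = max(rng.sample(scored, tournament), key=lambda s: s[0])[1]
        # 3. Mutation produces one offspring, which is evaluated and added.
        child = mutate(parent, rng)
        scored.append((fitness(child), child))
        # 4. Elimination: drop the lowest-fitness individual to keep the size fixed.
        scored.remove(min(scored, key=lambda s: s[0]))
    best_fitness, best_arch = max(scored, key=lambda s: s[0])
    return initial_best, best_fitness, best_arch


print(evolve())
```

Removing only the worst individual each generation guarantees that the best fitness never decreases, but it can also erode diversity, which is the trade-off the initialization and diversity strategies discussed here try to manage.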

Mutation operations are at the heart of evolutionary algorithms and directly affect the effectiveness of architecture exploration. Conventional random mutations may cause performance fluctuations and redundant training, so researchers have focused on function-preserving mutations and efficient encoding strategies. Suganuma [77] proposed a ternary-encoded genotype representation that decomposes the network structure into active and inactive parts. Inactive genes participate in mutation but do not affect the current network phenotype; they are activated only during offspring evaluation. By decoupling structure from training, this method reduces mutation cost by more than 60%. Elsken [78] further designed function-preserving transformation operations that keep the network output identical before and after mutation, so that some offspring can be evaluated without retraining. Xie [79] used a fixed-length encoding to represent layer types and connectivity, improving cross-task generalization by enforcing transfer consistency; in CIFAR-10-to-ImageNet transfer experiments, the searched architecture improves accuracy by 4.2% over random search. Real [80], on the other hand, introduced diversity constraints that prevent high-accuracy individuals from being repeatedly selected as parents, thus avoiding premature convergence of the population. The resulting AmoebaNet family achieves 83.9% Top-1 accuracy on ImageNet, outperforming contemporaneous manually designed models (e.g., ResNet-152), and its search runs three times faster than reinforcement learning.

Early evolutionary algorithms were computationally expensive because every offspring had to be fully trained; recent research has achieved efficiency breakthroughs through proxy evaluation and hierarchical pruning. Wistuba [81] proposed partial-order pruning, which dynamically removes redundant operations according to the contribution of each network layer. Its core idea is to construct an inter-layer partial-order relationship graph and retain only the connections whose impact on accuracy exceeds a threshold, reducing the search time on CIFAR-10 to 0.3 GPU-days. Li [82], on the other hand, uses a weight-sharing strategy that reuses the parent's weights to initialize the offspring in a block-level search space, reducing the search cost to less than 1 GPU-day. Chu [83] proposed the MoreMNAS algorithm, which introduced multi-objective evolution into mobile NAS for the first time and balances exploration and exploitation by jointly optimizing accuracy, inference latency, and energy consumption. In a Pixel 3 deployment test, its searched architecture runs 22% faster than MNASNet at the same accuracy.
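Multi-objective evolution of the kind used by MoreMNAS keeps candidates that are not dominated on any objective. The sketch below shows only the Pareto-dominance filter, with made-up accuracy, latency, and energy values for four hypothetical architectures; the selection, crossover, and mutation machinery of the actual algorithm is not reproduced.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better
    energy_mj: float   # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on every objective and strictly better on at least one."""
    no_worse = (a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
                and a.energy_mj <= b.energy_mj)
    strictly_better = (a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
                       or a.energy_mj < b.energy_mj)
    return no_worse and strictly_better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep the candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


# Made-up measurements for four hypothetical architectures.
pool = [
    Candidate("A", 0.95, 20.0, 5.0),
    Candidate("B", 0.93, 10.0, 3.0),
    Candidate("C", 0.92, 15.0, 4.0),  # dominated by B
    Candidate("D", 0.96, 30.0, 8.0),
]
print([c.name for c in pareto_front(pool)])  # ['A', 'B', 'D']
```

Rather than collapsing the objectives into a single weighted score, the front keeps every trade-off (fast B, balanced A, accurate D), leaving the final choice to the deployment constraints.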

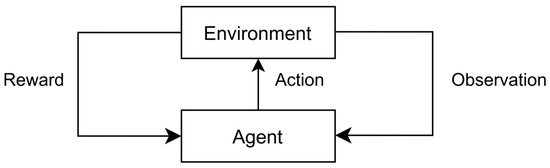

2.2.2. Reinforcement Learning

Reinforcement learning is a key branch of machine learning in which an agent interacts with an environment and receives rewards that guide its decision-making for subsequent states. The goal is to maximize cumulative rewards through repeated iterations, as shown in Figure 8. In the context of NAS, the agent continuously modifies the neural network structure. The state represents the constructed network, while the reward is the accuracy of the network on a validation set. Common RL-based NAS strategies include those based on Q-learning and policy gradient methods.

Figure 8.

Reinforcement learning framework.

Zhong [84] applied Q-learning-based RL to NAS in the MetaQNN and BlockQNN methods. MetaQNN combines an ε-greedy exploration strategy with experience replay in Q-learning to search for optimal network architectures. BlockQNN, by contrast, encodes the layers of a neural network as a network structure code (NSC) and uses this NSC to search within a block-based search space. It achieved 97.35% accuracy on CIFAR-10, but at a high computational cost.

Zoph et al. [54] introduced a reinforcement learning approach in which a recurrent neural network (RNN) serves as the controller for neural architecture search. The controller represents each network structure as a variable-length encoding, the maximum number of layers is constrained, and the controller's parameters are optimized with a policy gradient method. Because the controller is autoregressive, each architectural decision is conditioned on the previous ones, which allows it to navigate the architecture space efficiently, as illustrated in Figure 9.

Figure 9.

Autoregressive controller structure.

Reinforcement learning as a NAS search strategy has a major drawback: every newly generated architecture must be trained to obtain its reward, which is very time-consuming.
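The policy-gradient update used to train the controller can be illustrated with REINFORCE. This is a simplified sketch, not Zoph et al.'s method: the autoregressive RNN controller is replaced by independent per-layer softmax policies, `toy_reward` is a hypothetical stand-in for validation accuracy (so no network is actually trained), and the operation list and hyperparameters are assumptions.

```python
import numpy as np

OPS = ["conv3x3", "conv5x5", "sep_conv3x3", "max_pool"]


def toy_reward(arch):
    """Hypothetical stand-in for validation accuracy: fraction of layers using sep_conv3x3."""
    return sum(op == "sep_conv3x3" for op in arch) / len(arch)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def reinforce(num_layers=4, steps=1000, lr=0.2, seed=0):
    rng = np.random.default_rng(seed)
    logits = np.zeros((num_layers, len(OPS)))  # one categorical policy per layer
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        # Sample one architecture: one operation index per layer.
        choices = np.array([rng.choice(len(OPS), p=p) for p in probs])
        reward = toy_reward([OPS[c] for c in choices])
        # Subtracting a moving-average baseline reduces gradient variance.
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # Gradient of log pi(choices) w.r.t. the logits is one_hot(choices) - probs.
        grad_log_pi = -probs
        grad_log_pi[np.arange(num_layers), choices] += 1.0
        logits += lr * advantage * grad_log_pi  # gradient ascent on expected reward
    return softmax(logits)


final_probs = reinforce()
print(np.round(final_probs, 2))
```

Each sampled architecture costs one reward evaluation; in a real search that evaluation is a full training run, which is exactly the expense noted above.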

2.2.3. Gradient Descent

The search spaces used with reinforcement learning and evolutionary algorithms are discrete and non-differentiable, which rules out gradient-based optimization during the search. As a result, a large number of network structures must be evaluated, consuming substantial computational resources. Gradient-based search strategies instead construct a differentiable search space to improve search efficiency. DARTS [72] is a pioneering algorithm and the first to employ gradient descent for this purpose. It relaxes the discrete choice among candidate operations with the softmax function, enabling the search for neural architectures in a continuous, differentiable space. The specific formula is as follows:

$$\bar{o}^{(i,j)}(x) = \sum_{k=1}^{K} \frac{\exp\left(\alpha_k^{(i,j)}\right)}{\sum_{k'=1}^{K} \exp\left(\alpha_{k'}^{(i,j)}\right)} \, o_k(x)$$

Here, $o_k(x)$ denotes the $k$-th candidate operation applied to the input $x$, $\alpha_k^{(i,j)}$ denotes the weight assigned to operation $o_k$ between the node pair $(i,j)$, and $K$ is the number of predefined candidate operations. After relaxation, architecture search becomes the joint optimization of the architecture parameters $\alpha$ and the network weights $w$. These two sets of parameters are optimized alternately, forming a bi-level optimization problem in which the architecture is optimized on the validation set and the weights on the training set. Denoting the training and validation losses by $\mathcal{L}_{\mathrm{train}}$ and $\mathcal{L}_{\mathrm{val}}$, the overall objective can be expressed as follows:

$$\min_{\alpha} \; \mathcal{L}_{\mathrm{val}}\left(w^{*}(\alpha), \alpha\right) \quad \text{s.t.} \quad w^{*}(\alpha) = \arg\min_{w} \; \mathcal{L}_{\mathrm{train}}(w, \alpha)$$

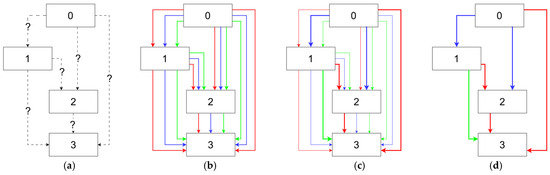

Figure 10 provides an overview of DARTS. Specifically, the entire search space is treated as a directed acyclic graph (DAG), where each node represents the output of the current layer, and multiple edges exist between nodes. Each edge represents a candidate operation for the network architecture. The softmax function is used to integrate the multiple edges between two nodes into a unified output, thus transforming discrete operations into continuous ones. Subsequently, gradient descent is applied to update the parameters of the entire DAG. While DARTS introduced efficient gradient-based optimization by formulating each edge’s output as a weighted summation of candidate operations, this design inherently creates computational bottlenecks. Specifically, maintaining simultaneous activation of all operators during search causes GPU memory consumption to scale linearly with the number of candidate operations. To address this critical limitation, recent advancements [85,86,87,88,89] have shifted toward stochastic architecture sampling through differentiable relaxations.

Figure 10.

Overview of DARTS. (a) The data can only flow from lower-level nodes to higher-level nodes, and the operations on edges are initially unknown. (b) The initial operation on each edge is a mixture of candidate operations, each having equal weight. (c) The weight of each operation is learnable and ranges from 0 to 1. (d) The final neural architecture is constructed by preserving the maximum weight-value operation on each edge.
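The mixed operation on one DARTS edge and its final discretization can be sketched with NumPy. The three candidate operations are hypothetical placeholders (`scale2` stands in for a parametric operation such as a convolution); the point is only that every candidate is evaluated and blended by softmax weights during the search, and that the edge collapses to its highest-weight operation afterwards.

```python
import numpy as np

# Hypothetical candidate operations on one edge (K = 3).
CANDIDATE_OPS = {
    "skip": lambda x: x,
    "zero": lambda x: np.zeros_like(x),
    "scale2": lambda x: 2.0 * x,  # stand-in for a parametric operation
}


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


def mixed_op(x, alpha):
    """Continuous relaxation of one edge: softmax(alpha)-weighted sum of ALL candidates.
    Every candidate output is computed, so memory grows with K."""
    weights = softmax(alpha)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))


def discretize(alpha):
    """After the search, keep only the operation with the largest weight on the edge."""
    return list(CANDIDATE_OPS)[int(np.argmax(alpha))]


x = np.array([1.0, -2.0])
print(mixed_op(x, np.zeros(3)))                # equal weights: (x + 0 + 2x) / 3 = x
print(discretize(np.array([0.1, -1.0, 2.0])))  # scale2
```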

At the end of the search, only the highest-weight operation on each edge is retained, and the directed acyclic graph formed by these edges represents the optimal network architecture. However, because the supernet covers the entire search space, all of its parameters must be kept in memory, which requires hardware with large memory resources [90]. To mitigate GPU memory constraints during architecture exploration, conventional cell-based NAS implementations typically adopt a two-phase paradigm: a compact search configuration followed by an expanded evaluation model. As illustrated in Figure 11a, the DARTS framework [72] exemplifies this approach by searching with an 8-layer prototype, which is then scaled to 20 layers for final evaluation by directly replicating the discovered cells. Although this methodology identifies good substructures for shallow configurations, its implicit assumption that they remain optimal when scaled up is not theoretically justified. Empirical observations reveal performance degradation (1.2–3.8% accuracy drop on ImageNet [72]) when the discovered modules are naively extended to deeper networks. This phenomenon stems from a fundamental discrepancy between the gradient dynamics of deep architectures and the optimization objective of the shallow search process, manifesting as either a breakdown of representational consistency or training instability in the expanded configuration. To address this depth gap, Chen [91] developed Progressive Differentiable Architecture Search (P-DARTS), a multi-phase optimization framework that systematically bridges the configuration gap between the search and evaluation phases.
The method progressively increases network depth, adding layers at each phase transition while preserving architectural continuity by inheriting the differentiable architecture parameters. This phased approach mitigates depth-induced optimization divergence but introduces computational cost that scales quadratically with the number of added layers. To resolve this efficiency–quality trade-off, P-DARTS integrates an adaptive pruning strategy that shrinks the candidate operation set from 5 to 3 to 2 candidates via entropy-guided elimination, as visualized in Figure 11b. The resulting hierarchical optimization scheme reduces computational overhead by 47% relative to depth-naive implementations. Benchmark evaluations on CIFAR-10 demonstrate the superiority of P-DARTS, which yields a 2.50% test error rate versus 2.83% for DARTS, attributable to its depth-aware architecture distillation mechanism.

Figure 11.

Difference between DARTS and P-DARTS: (a) DARTS; (b) P-DARTS.
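The progressive schedule of deepening the supernet while shrinking the candidate set per edge can be sketched as follows. This is a structural illustration only: the learned architecture weights are replaced by random numbers, candidates are pruned simply by lowest weight (a simplification of the entropy-guided criterion described above), and the operation names and cell counts are illustrative assumptions.

```python
import numpy as np


def prune_edge(ops, alpha, keep):
    """Keep the `keep` candidate operations with the largest architecture weights."""
    top = np.argsort(alpha)[::-1][:keep]
    return [ops[i] for i in sorted(top)]


def progressive_search(initial_ops, stages, num_edges=4, seed=0):
    """stages: list of (num_cells, ops_kept_after_stage).

    Each stage would train a supernet with `num_cells` cells; here the learned
    architecture weights are replaced by random numbers for illustration."""
    rng = np.random.default_rng(seed)
    edge_ops = [list(initial_ops) for _ in range(num_edges)]
    history = []
    for num_cells, keep in stages:
        alphas = [rng.normal(size=len(ops)) for ops in edge_ops]  # placeholder for search
        edge_ops = [prune_edge(ops, a, keep) for ops, a in zip(edge_ops, alphas)]
        history.append((num_cells, [len(ops) for ops in edge_ops]))
    return edge_ops, history


OPS = ["sep_conv3x3", "sep_conv5x5", "dil_conv3x3", "max_pool", "skip"]
# Depth grows while each edge's candidate set shrinks 5 -> 3 -> 2 -> 1 (final derivation).
final_ops, history = progressive_search(OPS, stages=[(5, 3), (11, 2), (17, 1)])
print(history)
print(final_ops)
```

Shrinking the candidate set as depth grows keeps the number of active operations per edge, and hence supernet memory, roughly in check across stages.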

2.2.4. Efficiency Considerations Under HSIC Challenges

The inherently high computational cost of HSIC tasks significantly influences the selection and adaptation of NAS strategies. Given the high dimensionality of hyperspectral data, training and evaluating even a single architecture can be computationally expensive. Therefore, search strategies must demonstrate high sample efficiency to be practical for HSIC.

Evolutionary Algorithms (EAs): EAs have shown strong potential in exploring diverse architectures suitable for the complex nature of mixed pixels in HSIC. To alleviate the computational burden, EA frameworks often incorporate techniques such as weight inheritance, function-preserving mutations, low-fidelity evaluations (e.g., using reduced training epochs or smaller data subsets), and early stopping. Additionally, diversity maintenance strategies are critical to prevent premature convergence in the high-dimensional and complex architecture search space.

Reinforcement Learning (RL): While RL offers powerful policy exploration capabilities, it typically suffers from high sample complexity, requiring a large number of architecture evaluations. Consequently, RL is applied to HSIC less frequently than gradient-based or evolutionary methods, unless it is augmented with extensive weight-sharing mechanisms or performance-prediction modules to reduce computational costs.

Gradient-Based Methods: Gradient-based NAS methods, such as DARTS, are widely adopted due to their lower search cost relative to RL and most EA approaches. However, the need to maintain a supernet containing all candidate operations results in considerable memory consumption, which can become prohibitive when handling large HSI patches or deep architectures. To mitigate this issue, techniques such as input downsampling or constraining the search space are often employed.

Handling Complexity: The strategies themselves must navigate the complex relationship between spatial and spectral features inherent in mixed pixels. EAs with diverse populations and RL with exploration mechanisms aim to avoid local optima that might miss effective fusion strategies. Gradient methods rely on continuous relaxation to smoothly explore combinations.

2.3. Performance Evaluation Strategy

Performance evaluation strategies are primarily used to assess the performance of the network architectures discovered through neural architecture search, providing the feedback that guides the search strategy. Neural architecture search algorithms need to evaluate a large number of candidate architectures; if the conventional deep learning evaluation process (training every candidate to convergence) were used directly, the computational and time costs would be enormous [80]. To improve evaluation efficiency and reduce search time, more efficient performance evaluation strategies are needed.

In the training process of neural networks, factors such as dataset size, number of training epochs, network structure complexity, and input tensor size all directly impact training time. To accelerate the evaluation of network performance, scholars have proposed various methods to address these time-consuming factors, including low-fidelity evaluation, early stopping, surrogate models, and weight sharing.

2.3.1. Low-Fidelity Evaluation

To evaluate network performance, a performance evaluation strategy typically trains each network produced by the search strategy until convergence, which is the most time-consuming step in neural architecture search. Low-fidelity methods accelerate this step by simplifying the dataset and the network structure: they shorten training time, reduce the size of the training dataset [92], decrease the number of network layers [72], or use lower-resolution images. By evaluating simplified networks (for example, with fewer filters or fewer blocks), they approximate network performance at a fraction of the cost. For the same number of iterations, simplifying the network structure is preferable to shrinking the dataset, because training on the full dataset yields more accurate gradient estimates. Tan [93] further explored model scaling and proposed EfficientNet, which effectively balances depth, width, and resolution.

Although low-fidelity methods reduce computational costs and speed up model convergence, they also have drawbacks. Chrabaszcz [94] showed experimentally that low-fidelity evaluation can produce a discrepancy between the evaluated and converged results, leading to an underestimation of network performance. In most cases, as long as the relative ranking of the architectures remains stable, this underestimation does not significantly affect which architectures are selected. However, when low-fidelity and full evaluations differ too much, the relative ranking may change substantially, and the fidelity must then be increased gradually. Klein [95] proposed a generative model that predicts the validation error as a function of training set size; during optimization, it explores configurations on subsets of the data and extrapolates their performance to the full dataset. Combined with Bayesian optimization, this approach addresses, at very low cost, the large biases that low-fidelity evaluation can introduce.
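Whether a low-fidelity proxy preserves the relative ranking of architectures is commonly quantified with a rank correlation such as Kendall's tau. The sketch below implements the tie-free tau-a variant in pure Python; the two accuracy lists are hypothetical values for five architectures, not measurements from any cited study.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall's tau-a between two score lists (assumes no tied scores).

    +1 means both evaluations order every pair the same way; -1 means every pair flips."""
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        sign = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical accuracies of five architectures under two evaluation budgets.
low_fidelity = [0.61, 0.58, 0.66, 0.52, 0.63]   # e.g. a few epochs on a data subset
full_fidelity = [0.91, 0.89, 0.94, 0.85, 0.90]  # full training
print(kendall_tau(low_fidelity, full_fidelity))  # 0.8: one of ten pairs is swapped
```

A value close to 1 suggests the proxy is safe to rank with, even if it underestimates absolute accuracy; a falling value is the signal to raise the fidelity.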

2.3.2. Early Stopping

In deep learning, early stopping refers to halting training when accuracy on the validation set no longer improves or, in NAS, to deliberately stopping before the network has converged. This technique effectively prevents overfitting and helps achieve the best generalization performance. Applying early stopping in neural architecture search can significantly reduce search time [95,96] by limiting the number of training epochs. Experience in network design suggests that the accuracies of different models at the same point in training reflect, to some extent, their relative accuracies after convergence. Zheng [97] proposed and validated the hypothesis that the performance ranking of different networks remains consistent across training epochs. Therefore, a small number of epochs can be used when training networks for evaluation; if a network has not converged by the end of this budget, its accuracy at that point serves as the evaluation metric. The learning curve records the error on the training and test sets during training [98,99]; at convergence, the test error is typically slightly higher than the training error. Domhan [96] proposed extrapolating the learning curve after initial training to predict a network's accuracy at convergence, and terminating architectures with low predicted accuracy. Other methods additionally use architecture and hyperparameter information to predict learning curves [79,100,101]. Rawal [71] used the learning curve of the first 10 epochs to predict model accuracy after 40 epochs, and the Seq2seq models they found were comparable to manually designed architectures. Mills [102] proposed GENNAPE (Generalized Neural Architecture Performance Estimator).
By representing a given neural network as a computational graph of atomic operations, GENNAPE can model arbitrary architectures. It first learns a graph encoder through contrastive learning, using topological features to encourage separation between networks. It then trains multiple predictor heads and obtains the final performance prediction by softly aggregating their outputs according to the network's fuzzy membership.
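Learning-curve-based early termination can be sketched with a single parametric fit. This is a simplified stand-in, not Domhan's method (which combines an ensemble of parametric curve families): it assumes accuracy grows roughly as a + b·log(t), fits that model to the observed epochs with least squares, and prunes a candidate whose extrapolated accuracy falls below the best accuracy seen so far. The curve values and thresholds are hypothetical; the 10-to-40-epoch horizon mirrors Rawal's setting.

```python
import numpy as np


def extrapolate_accuracy(partial_curve, target_epoch):
    """Fit acc(t) = a + b * log(t) to the observed epochs and evaluate it at target_epoch."""
    epochs = np.arange(1, len(partial_curve) + 1)
    b, a = np.polyfit(np.log(epochs), partial_curve, deg=1)  # polyfit returns [slope, intercept]
    return float(min(a + b * np.log(target_epoch), 1.0))     # accuracy cannot exceed 1


def should_terminate(partial_curve, target_epoch, best_so_far):
    """Stop training a candidate whose predicted final accuracy cannot beat the incumbent."""
    return extrapolate_accuracy(partial_curve, target_epoch) < best_so_far


# Hypothetical validation accuracies for the first 10 epochs of one candidate.
observed = 0.5 + 0.1 * np.log(np.arange(1, 11))
print(round(extrapolate_accuracy(observed, 40), 3))      # predicted accuracy at epoch 40
print(should_terminate(observed, 40, best_so_far=0.90))  # True: prune this candidate
```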

2.3.3. Surrogate Model

Surrogate-model-based methods start from the task itself: a simplified version of the actual task is used to train surrogate models and evaluate their performance, and the best surrogate model is finally transferred to the actual task [103]. The task can be simplified by reducing the scale and resolution of the dataset. For example, MNASNet [74] combines surrogate models with early stopping: it first trains 8000 models for 5 epochs on the CIFAR-10 training set and then trains the 15 best-performing models on the full dataset.

Some studies [104,105] predict network performance with trained accuracy-prediction models, bypassing evaluation on the validation set altogether. This is one of the fastest performance estimation strategies available, but it requires training a surrogate predictor whose predictions must be highly accurate. In addition, TransNAS-Bench-101 [106] records network performance across seven tasks: it evaluates 7352 backbones on each task, providing 51,464 trained models with detailed training information.
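An accuracy predictor of the kind described above can be as simple as a regression model on an architecture encoding. The sketch assumes fixed-length architectures encoded as concatenated one-hot operation choices and uses closed-form ridge regression; the operation list, the additive "true" accuracy, and the noise level are all synthetic assumptions used only to show the workflow of fitting on evaluated architectures and ranking unseen ones without training them.

```python
import numpy as np

OPS = ["conv3x3", "conv5x5", "sep_conv3x3", "skip"]


def encode(arch):
    """One-hot encode each layer's operation and concatenate (fixed-length architectures)."""
    x = np.zeros(len(arch) * len(OPS))
    for layer, op in enumerate(arch):
        x[layer * len(OPS) + OPS.index(op)] = 1.0
    return x


def fit_ridge(X, y, lam=1e-2):
    """Closed-form ridge regression; the appended bias column is not penalized."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    penalty = lam * np.eye(Xb.shape[1])
    penalty[-1, -1] = 0.0
    return np.linalg.solve(Xb.T @ Xb + penalty, Xb.T @ y)


def predict(w, X):
    return np.hstack([X, np.ones((len(X), 1))]) @ w


# Synthetic data: a hypothetical "true" accuracy that depends additively on the ops.
rng = np.random.default_rng(0)
gain = {"conv3x3": 0.02, "conv5x5": 0.01, "sep_conv3x3": 0.03, "skip": 0.0}
archs = [[OPS[i] for i in rng.integers(0, len(OPS), size=5)] for _ in range(60)]
X = np.stack([encode(a) for a in archs])
y = np.array([0.8 + sum(gain[op] for op in a) for a in archs]) + rng.normal(0, 0.002, 60)

# Fit on 40 "evaluated" architectures, then score the other 20 without training them.
w = fit_ridge(X[:40], y[:40])
pred = predict(w, X[40:])
corr = np.corrcoef(pred, y[40:])[0, 1]
print(f"correlation on unseen architectures: {corr:.3f}")
```

Real predictors face far less additive accuracy landscapes, so richer encoders (graph networks, for instance) are typically needed; the one-hot ridge model only illustrates where the savings come from.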

Beyond designing surrogate models, researchers have also explored efficient parameter-tuning strategies for transferring surrogate models to downstream tasks. For instance, a neural architecture search algorithm [107] that uses structured and unstructured pruning to learn a parameter-efficient tuning architecture has been proposed and shown to be effective.

2.3.4. Weight Sharing

Weight-sharing-based performance evaluation reuses training already performed on the networks being evaluated: the same weights are used to evaluate different search results, avoiding the time overhead of repeated training. There are two main weight-sharing approaches: network morphisms and one-shot methods.

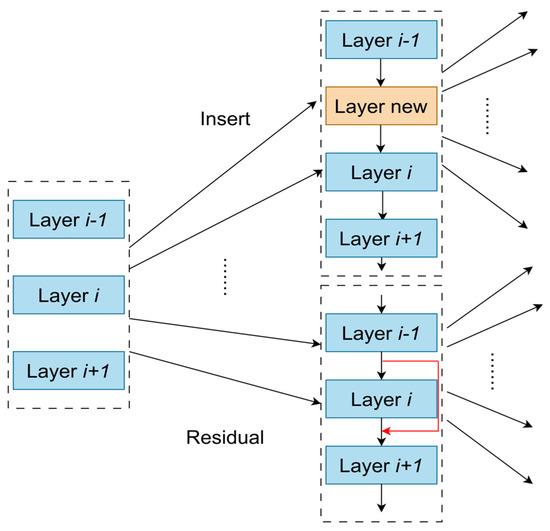

One approach, proposed by Wei [108], uses network morphisms, which modify the architecture of a trained initial network while preserving its function, as shown in Figure 12. For example, new layers may be inserted or convolution kernel sizes changed, progressively expanding the initial network into new neural network architectures. Because the new network inherits the knowledge of the initial network, it does not need to be trained from scratch and can converge within a few epochs. The LEMONADE [107] algorithm introduced by Elsken et al. also uses the network morphism approach.

Figure 12.

Schematic diagram of network morphism.
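A function-preserving morphism can be demonstrated on a small ReLU network. The sketch below shows one simple case in the style of Net2Net's deepening operator: an identity layer is inserted after a hidden ReLU layer, and because the ReLU output is non-negative, the new layer passes it through unchanged. The fully connected toy network and its sizes are assumptions; morphisms for convolutions (such as changing kernel sizes) follow the same principle but are not shown.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def forward(layers, x):
    """layers: list of (W, b); ReLU follows every layer except the last."""
    for i, (W, b) in enumerate(layers):
        x = x @ W + b
        if i < len(layers) - 1:
            x = relu(x)
    return x


def deepen(layers, position):
    """Insert an identity layer right after hidden layer `position`.

    The inserted layer sees a ReLU output h >= 0, so ReLU(h @ I + 0) == h and the
    network computes exactly the same function as before."""
    if position >= len(layers) - 1:
        raise ValueError("can only insert after a hidden (ReLU) layer")
    width = layers[position][0].shape[1]
    identity = (np.eye(width), np.zeros(width))
    return layers[: position + 1] + [identity] + layers[position + 1:]


rng = np.random.default_rng(0)
parent = [(rng.normal(size=(4, 8)), rng.normal(size=8)),  # hidden layer, 4 -> 8
          (rng.normal(size=(8, 3)), rng.normal(size=3))]  # output layer, 8 -> 3
child = deepen(parent, 0)
x = rng.normal(size=(5, 4))
print(len(child), np.allclose(forward(parent, x), forward(child, x)))  # 3 True
```

The child starts from exactly the parent's function, so further training only needs to improve on it rather than relearn it from scratch.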

Network morphisms continuously increase the capacity of the neural network architecture, producing increasingly complex networks. To simplify the architecture, Liu [62] proposed Net2Net, an approximate network morphism algorithm that uses a knowledge-transfer mechanism to increase the depth of the initial network. Jin [109] used Bayesian optimization to guide network morphisms and built the Auto-Keras system to improve search effectiveness.
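Besides deepening, Net2Net also provides a widening operator, sketched below for one hidden layer. New hidden units copy randomly chosen existing units, and the outgoing weights of every copy are divided by the number of times its source unit now appears, so the widened network computes the same outputs. The two-layer ReLU network, its sizes, and the helper names are illustrative assumptions.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def two_layer(x, W1, b1, W2, b2):
    return relu(x @ W1 + b1) @ W2 + b2


def widen(W1, b1, W2, new_width, rng):
    """Widen the hidden layer between W1 (in x n) and W2 (n x out) to new_width units.

    New units replicate randomly chosen existing units; outgoing weights are divided
    by each source unit's replication count so that the output is unchanged."""
    n = W1.shape[1]
    mapping = np.concatenate([np.arange(n), rng.integers(0, n, size=new_width - n)])
    counts = np.bincount(mapping, minlength=n)
    W1_new = W1[:, mapping]
    b1_new = b1[mapping]
    W2_new = W2[mapping, :] / counts[mapping][:, None]
    return W1_new, b1_new, W2_new


rng = np.random.default_rng(1)
W1, b1 = rng.normal(size=(4, 6)), rng.normal(size=6)
W2, b2 = rng.normal(size=(6, 3)), rng.normal(size=3)
W1w, b1w, W2w = widen(W1, b1, W2, new_width=10, rng=rng)
x = rng.normal(size=(5, 4))
print(W1w.shape, np.allclose(two_layer(x, W1, b1, W2, b2), two_layer(x, W1w, b1w, W2w, b2)))
```

In practice a small amount of noise is often added to the copied weights so that replicated units can diverge during subsequent training; it is omitted here to keep the output check exact.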

The one-shot method constructs a super-network that corresponds to the entire search space, eliminating the need to train each candidate architecture separately. The super-network contains all candidate operations between nodes, and every searchable architecture is a sub-network of the super-network that reuses the weights of the operations it selects. Therefore, only the super-network itself needs to be trained.
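The weight-sharing idea can be illustrated with a toy one-shot super-network. In this sketch each (layer, operation) pair owns exactly one weight matrix, and any sampled sub-network (one operation per layer) reads from that shared table instead of holding its own copy. The operation names, the chain topology, and the `tanh` nonlinearity are hypothetical choices, and the super-network training loop itself is omitted.

```python
import numpy as np

OPS = ["conv_a", "conv_b", "skip"]  # hypothetical candidate ops per layer
PARAM_OPS = ["conv_a", "conv_b"]    # ops that own weights ("skip" has none)


class SuperNet:
    """Toy one-shot super-network over a chain of layers.

    Each (layer, op) pair owns ONE weight matrix; every sub-network is a choice of
    one op per layer and reuses those shared matrices instead of its own copy."""

    def __init__(self, num_layers, width, seed=0):
        rng = np.random.default_rng(seed)
        self.num_layers = num_layers
        self.weights = {
            (layer, op): rng.normal(scale=0.1, size=(width, width))
            for layer in range(num_layers)
            for op in PARAM_OPS
        }

    def forward(self, x, arch):
        for layer, op in enumerate(arch):
            if op != "skip":
                x = np.tanh(x @ self.weights[(layer, op)])
        return x

    def sample_arch(self, rng):
        return [OPS[i] for i in rng.integers(0, len(OPS), size=self.num_layers)]


net = SuperNet(num_layers=4, width=8)
rng = np.random.default_rng(1)
x = rng.normal(size=(2, 8))
print(f"{len(net.weights)} weight tensors cover {len(OPS) ** net.num_layers} sub-networks")
for _ in range(3):
    arch = net.sample_arch(rng)
    print(arch, net.forward(x, arch).mean())
```

Because parameters grow with the number of (layer, operation) pairs rather than with the number of sub-networks, evaluating an extra candidate costs only a forward pass; the price is that all candidate weights must be stored at once.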

Efficient Neural Architecture Search (ENAS), proposed by Pham et al. [110], implements weight sharing among sub-networks and trains the one-shot framework with reinforcement learning using an approximate gradient method. Differentiable architecture search (DARTS) [72] jointly optimizes all parameters of the one-shot model, including the architecture weights, by gradient descent. Another approach, proposed by Gaier et al. [111], introduces a weight-agnostic architecture search algorithm. It shares weights across the network but further trains the searched network to evaluate its performance, improving the accuracy of performance evaluation while maintaining search efficiency.

2.3.5. Analysis of Evaluation Methods Tailored for HSIC

Performance evaluation strategies are critical in NAS for HSIC due to the substantial computational cost of training models on hyperspectral data. The core motivation behind all efficient evaluation approaches is to directly address the heavy computational burden inherent to HSIC.

Low-fidelity evaluation techniques—such as downsampling spectral or spatial dimensions, reducing the number of training epochs, or using smaller image patches—can significantly accelerate the evaluation of individual architectures. However, it is essential to ensure that the reduction in fidelity does not distort the relative performance rankings of architectures or eliminate information crucial for distinguishing challenging classes or mixed pixels.

Early stopping methods, which predict final performance from early learning curves or terminate training of poorly performing architectures prematurely, are key to improving efficiency. This strategy is especially valuable given the long training times per architecture typical in HSIC tasks. Nevertheless, the assumption of consistent ranking throughout training requires careful validation in light of HSIC’s complex task characteristics.

Surrogate models, trained on architectural features or performance on small proxy tasks, aim to completely bypass the costly training on full hyperspectral datasets. The main challenge here is how to construct surrogate models that can generalize accurately from proxy tasks or datasets to the target HSIC data and task, given the significant domain gap.

Among all strategies, weight sharing stands out as one of the most influential in HSIC-NAS. By training a supernet that covers the entire search space, multiple sub-networks can be evaluated at minimal additional cost, greatly reducing the total computational expense. However, concerns remain about fairness during supernet training, such as the “rich-get-richer” phenomenon, in which operations that perform well early dominate the rest of the search. In addition, there may be a retraining gap between the performance estimated via weight sharing and the true performance obtained when a child architecture is retrained from scratch. Methods such as Noisy-DARTS have been proposed to improve fairness in supernet training. Finally, the memory footprint of the supernet itself is a significant practical limitation, one that is particularly pronounced in HSIC because the supernet must support the diverse operation types needed for spatial–spectral fusion.

3. Algorithmic Advancements of NAS in HSIC

In the previous chapter, we introduced the fundamental concepts of NAS, explaining how it optimizes deep learning model design by automating the search for network architectures. NAS leverages optimization techniques such as reinforcement learning, evolutionary algorithms, and gradient-based methods to explore suitable architectures for different tasks. This approach has garnered widespread attention because it can significantly improve model performance and computational efficiency while also reducing the complexity of manual design. Building upon this theoretical foundation, this chapter focuses on the algorithmic advancements of NAS in HSIC. It explores classic research outcomes on NAS in HSIC, discussing how NAS addresses the unique challenges of hyperspectral data, the various strategies applied, and the research progress and experimental results achieved in this field.

3.1. CNN-Based NAS for HSIC

In HSIC, Convolutional Neural Networks (CNNs) are widely used due to their excellent ability to extract spatial and spectral features [112]. Convolutional neural networks extract spatial features from images through convolutional layers, and they reduce the dimensionality of feature maps using pooling layers to obtain more abstract features [113]. Fully connected layers then transform these features into classification decisions. Neural architecture search can automate the optimization of the network structure, enabling the network to process the high-dimensional features of HSIs more efficiently. Through NAS, the system automatically explores and discovers optimal neural architectures—including layer connectivity, operator types, and overall topology—rather than simply tuning predefined parameters. This process can yield configurations such as depthwise separable convolutions, residual connections, and attention mechanisms, thereby enhancing the network’s representational capacity. In contrast, conventional hyperparameter tuning adjusts preset variables (e.g., learning rate, batch size) within a fixed architecture. NAS-based approaches can significantly improve classification performance [114].

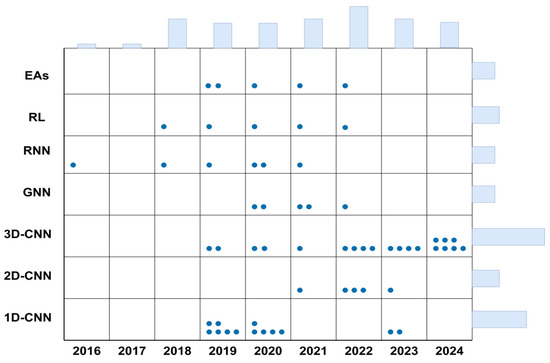

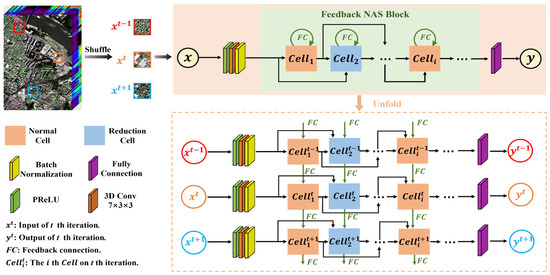

Figure 13 summarizes how the research popularity of different algorithmic methods in this field changed from 2016 to 2024. The horizontal axis represents the year, and the vertical axis lists seven typical methods: evolutionary algorithms (EAs), reinforcement learning (RL), recurrent neural networks (RNNs), graph neural networks (GNNs), and convolutional neural networks (CNNs) of different dimensions. The distribution shows that CNNs continue to dominate this field; therefore, this article focuses mainly on CNN-based NAS methods.

Figure 13. Algorithmic method statistical chart.

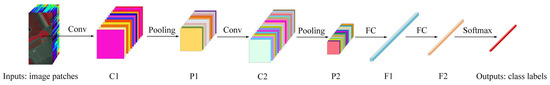

3.1.1. The General Structure of CNNs

The typical structure of a CNN consists of alternating convolutional and pooling layers followed by fully connected layers. In a convolutional layer, image patches are convolved with convolutional kernels to extract features containing spatial contextual information. The pooling layer then reduces the dimensionality of the resulting feature maps, refining them into more general and abstract representations. Finally, fully connected layers transform these feature maps into feature vectors that are used for subsequent classification or decision-making tasks [115,116]. The architecture of a CNN is shown in Figure 14.

Figure 14. The architecture of a CNN [40].

Convolutional layers are the core components of CNNs. In each convolutional layer, the input data are convolved with multiple learnable filters to generate multiple feature maps. Let the input data be a cube of size $m \times n \times d$, where $m \times n$ is the spatial size and $d$ is the number of channels, and let $x_i$ denote the $i$-th feature map. Suppose the convolutional layer has $k$ filters, the $j$-th of which is characterized by weight $w_j$ and bias $b_j$. The $j$-th output of the convolutional layer is given as follows:

$$y_j = f\left(\sum_{i=1}^{d} x_i * w_j + b_j\right), \qquad j = 1, \dots, k$$

Here, $*$ represents the convolution operator, and $f(\cdot)$ is the activation function, which introduces nonlinearity into the network. The ReLU activation function has recently been widely used. Besides adding nonlinearity, ReLU has two main advantages: fast convergence and robustness against the vanishing gradient problem. ReLU is defined as:

$$f(x) = \max(0, x)$$
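A minimal pure-Python sketch of one convolutional filter computing y_j = f(Σ_i x_i ∗ w_j + b_j) with ReLU, f(x) = max(0, x), as the activation. Assumptions: "valid" positions only and stride 1; w_j is stored as one 2D slice per input channel, as in common frameworks; and, as in most deep learning libraries, the sliding-window product is implemented as cross-correlation (the kernel is not flipped). The function names are illustrative.

```python
def relu(v):
    """ReLU activation: f(x) = max(0, x)."""
    return max(0.0, float(v))


def conv2d_valid(channel, kernel):
    """'Valid' 2D sliding-window product (cross-correlation, as in DL libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(channel) - kh + 1, len(channel[0]) - kw + 1
    return [
        [
            sum(channel[r + a][c + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def conv_layer_output(x, w_j, b_j, f=relu):
    """Output of filter j: y_j = f(sum_i x_i * w_j[i] + b_j).

    x   -- list of d input feature maps, each a 2D list of size m x n
    w_j -- list of d 2D kernels (one slice of filter j per input channel)
    b_j -- scalar bias of filter j
    """
    maps = [conv2d_valid(x_i, w_ji) for x_i, w_ji in zip(x, w_j)]
    h, w = len(maps[0]), len(maps[0][0])
    return [[f(sum(m[r][c] for m in maps) + b_j) for c in range(w)] for r in range(h)]


# Two 3 x 3 input channels, one filter with two 2 x 2 slices, bias -9 (toy values).
x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[0, 1, 0], [1, 0, 1], [0, 1, 0]]]
w_j = [[[1, 0], [0, 1]], [[1, 1], [1, 1]]]
print(conv_layer_output(x, w_j, -9))  # [[0.0, 1.0], [5.0, 7.0]]
```

The top-left pre-activation is 6 + 2 - 9 = -1, which ReLU clips to 0; the other positions stay positive.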

Pooling layers are typically inserted after several convolutional layers to reduce the spatial dimensions of the feature maps, which also lowers the computational cost and the number of parameters. Pooling removes redundant information, allowing the network to extract more abstract features. Common pooling operations include max pooling and average pooling. For average pooling with a $p \times p$ pooling window, the operation can be expressed as:

$$y = \frac{1}{F} \sum_{(i,j) \in \text{window}} x_{ij}$$

where $F = p \times p$ is the number of elements in the pooling window and $x_{ij}$ is the activation value at position $(i, j)$ within the window.
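The following sketch implements non-overlapping $p \times p$ pooling (stride $p$) on a single feature map: average pooling divides each window sum by F = p × p, and max pooling is included for comparison. Dropping rows and columns that do not fill a complete window is an assumption of this sketch; frameworks also offer padded variants.

```python
def pool2d(fmap, p, mode="avg"):
    """Non-overlapping p x p pooling with stride p on one feature map.

    Rows/columns that do not fill a complete window are dropped (an assumption).
    Average pooling divides each window sum by F = p * p.
    """
    if mode not in ("avg", "max"):
        raise ValueError("mode must be 'avg' or 'max'")
    out_h, out_w = len(fmap) // p, len(fmap[0]) // p
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            window = [fmap[r * p + a][c * p + b] for a in range(p) for b in range(p)]
            row.append(sum(window) / len(window) if mode == "avg" else max(window))
        out.append(row)
    return out


fmap = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(pool2d(fmap, 2))         # [[3.5, 5.5], [11.5, 13.5]]
print(pool2d(fmap, 2, "max"))  # [[6, 8], [14, 16]]
```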

After the pooling layers, the feature maps are typically flattened and passed to fully connected layers. In traditional neural networks, fully connected layers are used to extract deeper and more abstract features: each fully connected layer maps the flattened input to an $n$-dimensional vector (for example, $n = 4096$ in AlexNet). A fully connected layer can be expressed as:

$$Y = f(W \cdot X + b)$$

where $X$, $Y$, $W$, and $b$ refer to the input, output, weight, and bias of the fully connected layer, respectively, and $f(\cdot)$ is the activation function.
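A minimal sketch of the flattening step and of a fully connected layer Y = f(W · X + b), with the activation defaulting to the identity. The helper `fc_param_count` (a name introduced here for illustration) shows why such layers dominate the parameter budget: AlexNet's first fully connected layer maps a flattened 256 × 6 × 6 = 9216-dimensional input to 4096 units.

```python
def flatten(maps):
    """Flatten a list of 2D feature maps into one vector X."""
    return [v for fmap in maps for row in fmap for v in row]


def fully_connected(x, W, b, f=lambda v: v):
    """Y = f(W X + b); W has one row per output unit, each of length len(x)."""
    return [f(sum(w * xi for w, xi in zip(row, x)) + bi) for row, bi in zip(W, b)]


def fc_param_count(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


X = flatten([[[1, 2], [3, 4]]])
print(X)  # [1, 2, 3, 4]
print(fully_connected(X, [[1, 0, 0, 0], [0.25, 0.25, 0.25, 0.25]], [0, 1]))  # [1, 3.5]
print(fc_param_count(256 * 6 * 6, 4096))  # 37752832
```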

Compared with traditional methods that rely on handcrafted features, DL can automatically learn high-level discriminative features directly from complex hyperspectral data. Leveraging these features, DL-based methods can effectively cope with the significant spatial variability of spectral characteristics. Capitalizing on this advantage, researchers have developed a variety of deep network architectures for feature extraction and achieved excellent classification performance. Networks of different depths or types, however, may extract distinct features, namely spectral features, spatial features, or spectral–spatial joint features. The subsequent sections of this chapter therefore summarize NAS methods according to which of these three feature types the searched networks extract.

However, training deep networks typically requires a large number of labeled samples to learn the network parameters. In remote sensing, labeled data are often scarce because collecting them is costly and time-consuming. This data scarcity problem in HSIC has motivated research into few-shot classification [117], and several effective methods have recently been proposed to mitigate it to some extent.

There are various methods in HSIC to cope with the data scarcity problem, such as data augmentation, transfer learning, semi-supervised learning, and network optimization methods.

(1) Data augmentation [118,119] is the most intuitive way to address the data scarcity problem: it generates additional virtual samples by applying transformation functions to known samples. The method is simple and efficient and was commonly used in earlier work [120].

(2) Transfer learning [121] introduces useful information learned from source data into the target data. In data-scarce HSIC scenarios, transfer learning works in three main ways. The first, and the most commonly used, is fine-tuning a pre-trained model [122]: a deep neural network is first trained on a large-scale source dataset so that it learns a strong base feature extraction capability, and this pre-trained model is then used as the starting point for fine-tuning on the limited target dataset in the HSIC domain. In this way, the model does not need to learn all low-level features from scratch, which greatly reduces the amount of target data required. The second treats the pre-trained model as a fixed feature extractor [123]: the HSIC target data are forward propagated through the pre-trained model to obtain high-dimensional feature vectors, which are then fed into a new, relatively simple classifier for training. The new classifier only needs to learn task-specific decisions based on these generalized features, which requires much less target data. The third is domain adaptation [124], in which specialized techniques align the feature distributions of the source and target domains when the two distributions differ (domain shift), so that source-domain knowledge can be transferred to the target domain more effectively. These approaches can be further divided into unsupervised and supervised learning. The main objective of unsupervised feature learning is to extract useful features from a large amount of unlabeled data; deep networks are designed in an encoder–decoder paradigm so that they can learn without label information. Classification performance can then be improved by fine-tuning the trained network on the labeled dataset.
Transfer learning has been used in cross-scenario HSIC, and in recent years, with the increase in computing power, cross-scenario learning and NAS have gradually been combined to improve classification performance and robustness. This combination has become a popular research direction, and we discuss it further in the final section on future research directions.

(3) The main objective of network optimization [125] is to further improve network performance by adopting more efficient modules or improved training strategies, thereby enhancing the learning efficiency and generalization ability of the model under limited data. In data-scarce HSIC tasks, well-designed network structures and optimization strategies are particularly important: they help models extract more robust and discriminative features from a small number of samples and effectively mitigate the risk of overfitting. Specific methods include, but are not limited to, lightweight and efficient architecture design, attention mechanisms, knowledge distillation, optimized training strategies, and regularization techniques. Compared with data augmentation and transfer learning, network optimization focuses on the design of the model structure itself and on fine-tuning the training process, aiming to build networks that learn efficiently in small-sample settings and generalize better. It is an indispensable technical component when NAS is used to address the HSIC data scarcity problem [117].
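As an illustration of transformation-based data augmentation, strategy (1) above, the sketch below creates virtual samples from an HSI patch stored as rows of per-pixel spectra (m × n × d). Spatial flips and 90° rotations rearrange pixels while leaving each spectrum intact, and additive Gaussian noise perturbs band values. This particular set of transforms, the noise level `sigma`, and the function names are assumptions for illustration, not the specific schemes of [118,119,120].

```python
import random


def hflip(patch):
    """Mirror the patch left-right; every pixel keeps its full spectrum."""
    return [row[::-1] for row in patch]


def rot90(patch):
    """Rotate the spatial grid 90 degrees clockwise; spectra are unchanged."""
    return [list(col) for col in zip(*patch[::-1])]


def add_noise(patch, sigma, rng):
    """Add zero-mean Gaussian noise (std sigma) to every band value."""
    return [[[v + rng.gauss(0.0, sigma) for v in spectrum] for spectrum in row] for row in patch]


def augment(patch, label, rng, sigma=0.01):
    """Return the original sample plus virtual samples that share its label."""
    virtual = [hflip(patch), rot90(patch), add_noise(patch, sigma, rng)]
    return [(patch, label)] + [(v, label) for v in virtual]


# A 2 x 2 patch with 2 bands per pixel (toy values).
patch = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
samples = augment(patch, label=3, rng=random.Random(0))
print(len(samples))   # 4
print(rot90(patch))   # [[[5, 6], [1, 2]], [[7, 8], [3, 4]]]
```

Each labeled patch thus yields several training samples, which is why augmentation is attractive when only a few labeled pixels are available.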

1D Auto-CNN-Based Methods

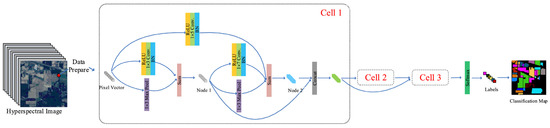

Among CNN-based NAS methods for HSIC, the 1D Auto-CNN is a convolutional neural network designed with NAS specifically for spectral feature extraction in HSIs [126]. In 1D convolution, the spectrum of each pixel is treated as a one-dimensional vector in which each element corresponds to a spectral band, and the 1D convolutional kernel slides across these bands to capture the features of, and relationships between, the bands. Consequently, the 1D Auto-CNN relies primarily on spectral data for classification and neglects the spatial structure of HSIs.

In HSIC, the 1D convolutional layer helps to extract key patterns from the spectral information of each pixel. This architecture is particularly suitable for tasks where spatial information is not required or is less important. The overall algorithmic framework is illustrated in Figure 15.

Figure 15. Framework of 1D Auto-CNN for HSIC [126].
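To illustrate how a 1D kernel slides along the spectral bands of a single pixel, the sketch below applies one kernel to a spectrum, followed by ReLU. The toy spectrum and the first-difference kernel [-1, 1], which responds where reflectance rises from one band to the next, are hypothetical; in the 1D Auto-CNN the kernels are learned and the layer types are selected by the architecture search.

```python
def conv1d_spectral(spectrum, kernel, bias=0.0, stride=1):
    """Slide a 1D kernel along the band axis of one pixel ('valid' positions), then ReLU."""
    k = len(kernel)
    return [
        max(0.0, sum(spectrum[i + t] * kernel[t] for t in range(k)) + bias)
        for i in range(0, len(spectrum) - k + 1, stride)
    ]


# Toy 8-band pixel spectrum (reflectance x 100) and a first-difference kernel.
spectrum = [10, 20, 40, 40, 30, 50, 60, 60]
print(conv1d_spectral(spectrum, [-1, 1]))            # [10.0, 20.0, 0.0, 0.0, 20.0, 10.0, 0.0]
print(conv1d_spectral(spectrum, [-1, 1], stride=2))  # [10.0, 0.0, 20.0, 0.0]
```

No neighboring pixels enter the computation, which is exactly why a purely 1D model ignores spatial structure.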

In recent years, the rapid development of NAS techniques has introduced a new automated paradigm for designing 1D CNNs for HSIC. According to the experimental results reported by Paoletti et al. [127], the 1D Auto-CNN, or AAtt-CNN1D, shows significant advantages in HSIC, particularly when the number of training samples is limited. Because the CNN architecture is designed automatically, no manual design is needed, which saves considerable time and effort and reduces the potential for human error. In the experiments, the 1D Auto-CNN outperformed methods such as SVM, RBF-SVM, and 1D DCNN on the Salinas, Pavia, KSC, and Indian Pines datasets, achieving higher overall accuracy (OA), average accuracy (AA), and kappa coefficient (K). The training process, including the architecture search phase, takes approximately 12 min, which is relatively fast compared with manual architecture design. However, because training samples are limited, overfitting remains a challenge despite the use of L2 regularization and other techniques, and it is more pronounced on hyperspectral datasets with fewer labeled samples. Thus, although the architecture search itself is efficient, classification performance can still suffer when training data are scarce. Overall, the 1D Auto-CNN offers a promising solution for HSIC with limited training data, and its ability to automatically design architectures tailored to specific datasets highlights its potential for achieving high classification accuracy.
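The three metrics used in these comparisons can be computed from a confusion matrix: OA is the fraction of correctly classified samples, AA is the mean of the per-class accuracies, and the kappa coefficient corrects OA for chance agreement, κ = (p_o - p_e) / (1 - p_e), where p_o = OA and p_e is the agreement expected from the row and column totals. The sketch assumes that rows index true classes, columns index predicted classes, and every class has at least one test sample; the confusion matrix in the example is made up.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa); confusion[t][p] counts true class t predicted as p."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n_classes))
    oa = correct / total
    # Per-class accuracy (recall); assumes no class has zero samples.
    per_class = [confusion[i][i] / sum(confusion[i]) for i in range(n_classes)]
    aa = sum(per_class) / n_classes
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[t][p] for t in range(n_classes)) for p in range(n_classes)]
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa


cm = [[45, 5], [10, 40]]  # hypothetical two-class result
print(classification_metrics(cm))  # approximately (0.85, 0.85, 0.7)
```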

2D Auto-CNN-Based Methods

The 2D convolutional network simultaneously processes spatial and spectral information, but it typically focuses more on spatial features. Unlike 1D convolution, the 2D convolutional network uses a 2D convolutional kernel to process the spatial dimensions (width and height) of the image, and it is commonly applied for learning the spatial structure of the image. In HSIC, the 2D convolutional layer helps to extract spatial patterns from the image while retaining spectral information, making it suitable for tasks where spatial structure is more important [128]. Compared to 3D convolution, it only performs convolution operations on the spatial dimensions, with fewer trainable parameters and lower computational cost, making it more practical in resource-constrained scenarios [129].
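The cost argument can be made concrete by counting weights and multiply-accumulate operations (MACs) for a single layer. The sketch assumes stride 1, no padding, and the same numbers of input and output feature channels for both layer types; under these assumptions a 3D kernel of spectral depth k_s has k_s times as many weights as its 2D counterpart, and its MAC count additionally grows with the number of spectral output positions. The patch size, channel counts, and spectral depth of 30 used in the example are hypothetical.

```python
def conv2d_cost(h, w, c_in, c_out, k):
    """Weights (incl. biases) and MACs of a k x k 2D convolution, stride 1, no padding."""
    params = k * k * c_in * c_out + c_out
    out_h, out_w = h - k + 1, w - k + 1
    macs = out_h * out_w * c_out * k * k * c_in
    return params, macs


def conv3d_cost(h, w, depth, c_in, c_out, k, k_s):
    """Same for a k x k x k_s 3D convolution that also slides along the spectral axis."""
    params = k * k * k_s * c_in * c_out + c_out
    out_h, out_w, out_d = h - k + 1, w - k + 1, depth - k_s + 1
    macs = out_h * out_w * out_d * c_out * k * k * k_s * c_in
    return params, macs


# 11 x 11 patch, 32 -> 64 feature channels; the 3D layer sees 30 spectral positions.
print(conv2d_cost(11, 11, 32, 64, 3))         # (18496, 1492992)
print(conv3d_cost(11, 11, 30, 32, 64, 3, 7))  # (129088, 250822656)
```

In this configuration the 3D layer has about 7 times as many weights and roughly 168 times as many MACs, which illustrates why 2D convolution is attractive in resource-constrained settings.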

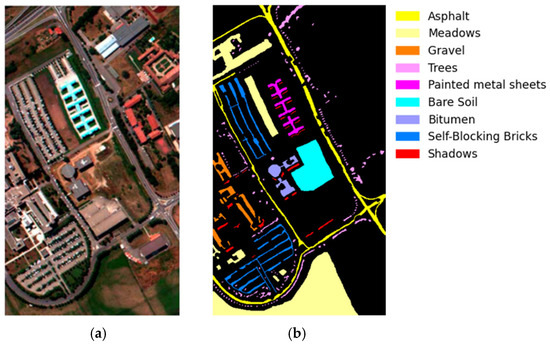

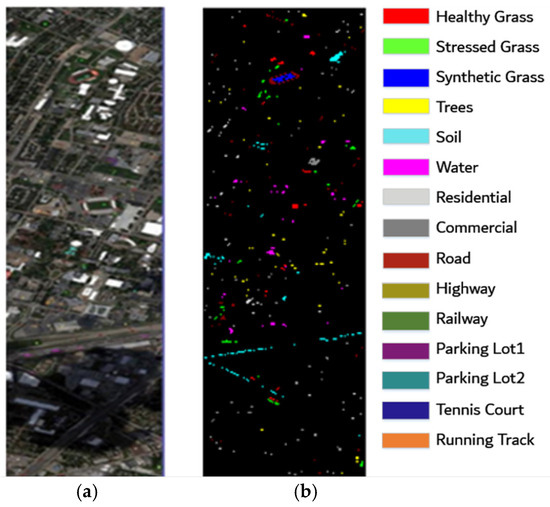

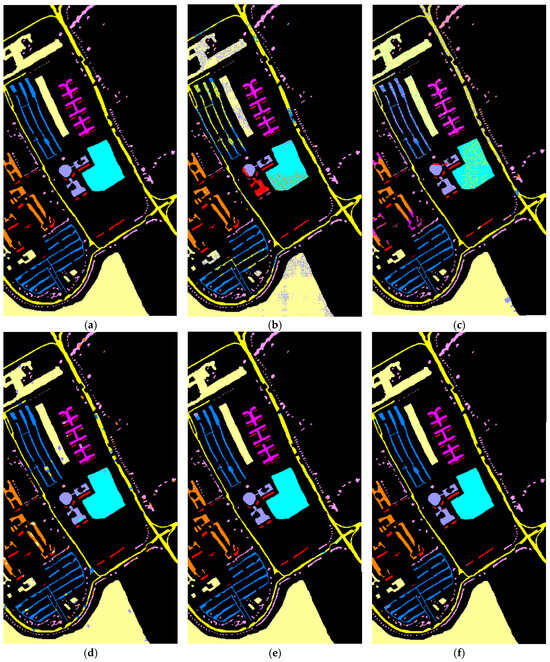

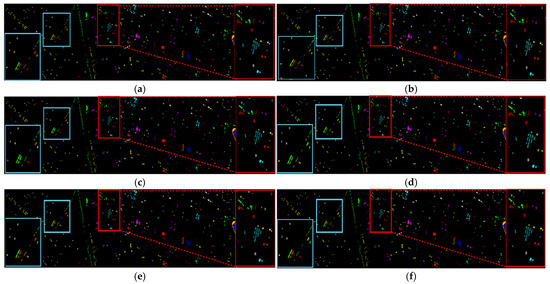

In the AutoNAS framework reported by Han et al. [130] for hyperspectral unmixing, 2D convolution operations of several sizes are employed: 1 × 1, 3 × 3, 5 × 5, and 7 × 7. These kernels allow the network to flexibly extract features at different spatial scales, thereby improving the accuracy and effectiveness of unmixing. The experiments show that AutoNAS can automatically optimize the network architecture while searching for convolution kernel configurations, eliminating the need for manual intervention and avoiding the architecture design and tuning issues inherent in traditional methods. Across multiple hyperspectral datasets, AutoNAS outperformed other deep learning methods that rely on manually designed architectures. Although automatic architecture search is computationally expensive, AutoNAS achieves higher unmixing accuracy than manually designed methods while maintaining computational efficiency. By combining 2D convolution with automatic architecture search, AutoNAS therefore shows significant potential for HSI unmixing, especially in complex and nonlinear scenarios [131].
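One generic way to search over such kernel sizes is the continuous relaxation popularized by DARTS: every candidate operation is applied in parallel, the outputs are mixed with softmax weights over architecture parameters alpha, and after the search only the operation with the largest alpha is kept. The sketch below shows just this mixing and selection step on a single-channel map; it does not claim that AutoNAS uses this strategy. Box (mean) kernels stand in for learned filters, zero padding keeps the spatial size, and in an actual search the alphas would be learned by gradient descent on a validation loss rather than set by hand.

```python
import math

KERNEL_SIZES = [1, 3, 5, 7]  # candidate 2D kernel sizes listed for AutoNAS [130]


def box_kernel(k):
    """Illustrative k x k mean filter standing in for a learned kernel."""
    return [[1.0 / (k * k)] * k for _ in range(k)]


def conv2d_same(x, kernel):
    """2D sliding-window product with zero padding so the output keeps x's size."""
    k, pad = len(kernel), len(kernel) // 2
    h, w = len(x), len(x[0])

    def px(r, c):
        return x[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    return [
        [sum(px(r + a - pad, c + b - pad) * kernel[a][b] for a in range(k) for b in range(k))
         for c in range(w)]
        for r in range(h)
    ]


def softmax(v):
    """Numerically stable softmax over architecture parameters."""
    m = max(v)
    e = [math.exp(t - m) for t in v]
    s = sum(e)
    return [t / s for t in e]


def mixed_op(x, alphas):
    """Softmax(alphas)-weighted sum of all candidate convolutions (DARTS-style relaxation)."""
    weights = softmax(alphas)
    outs = [conv2d_same(x, box_kernel(k)) for k in KERNEL_SIZES]
    h, w = len(x), len(x[0])
    return [[sum(wt * o[r][c] for wt, o in zip(weights, outs)) for c in range(w)] for r in range(h)]


def derive_kernel_size(alphas):
    """After the search, keep only the candidate with the largest architecture weight."""
    return KERNEL_SIZES[max(range(len(alphas)), key=lambda i: alphas[i])]


print(derive_kernel_size([0.1, 2.0, 0.3, -1.0]))  # 3
```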