STCD-EffV2T Unet: Semi Transfer Learning EfficientNetV2 T-Unet Network for Urban/Land Cover Change Detection Using Sentinel-2 Satellite Images

Abstract

1. Introduction

2. Materials and Datasets

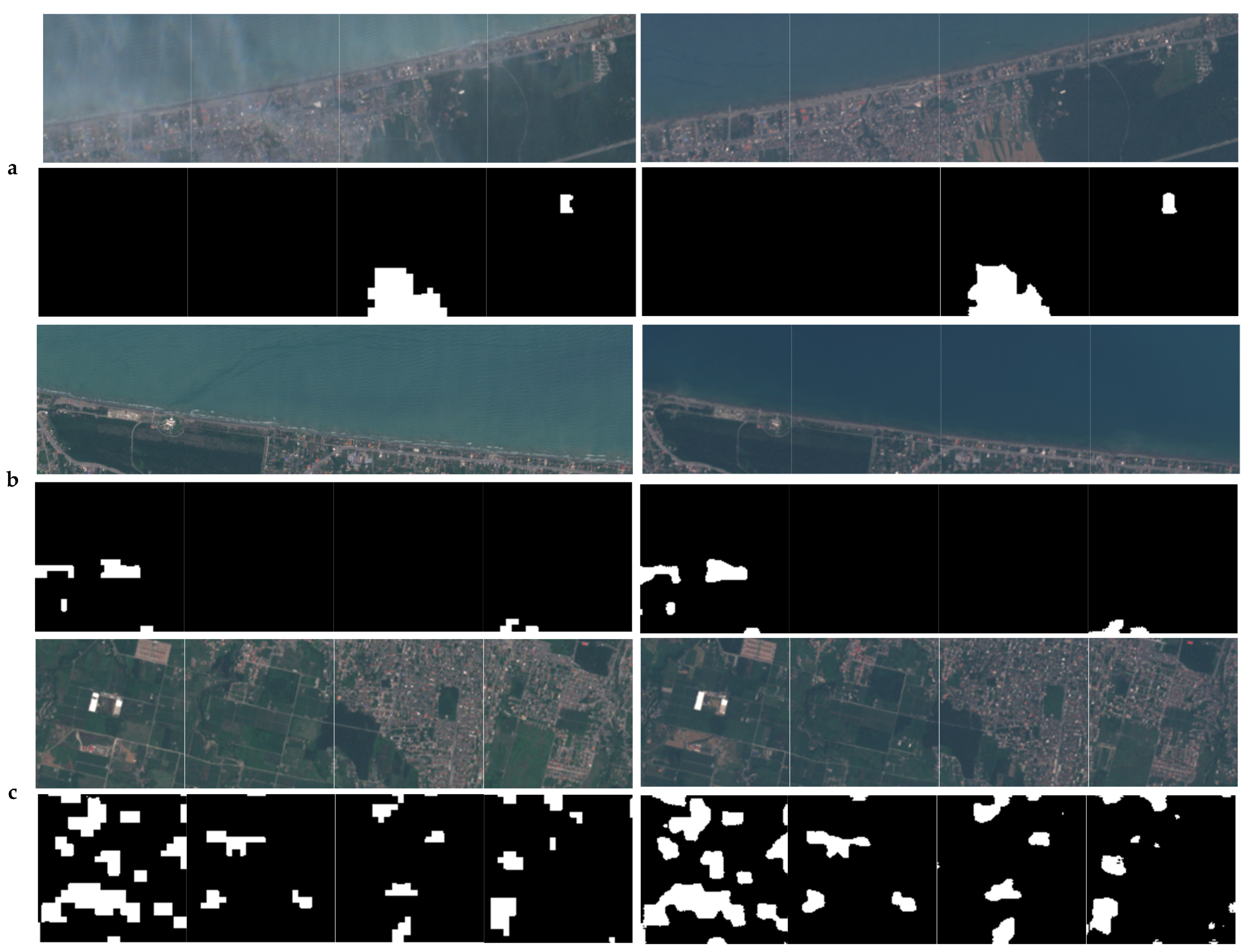

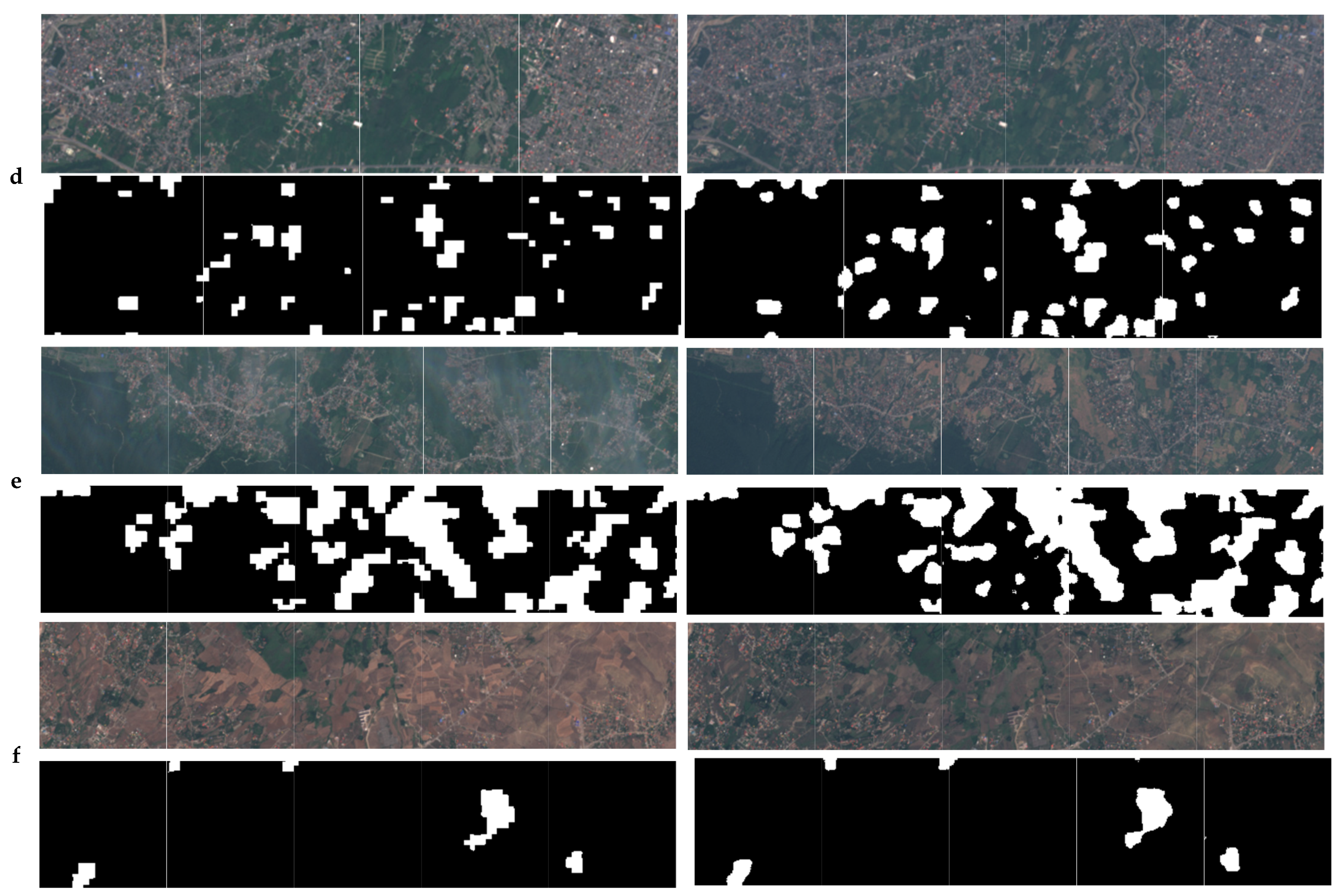

2.1. Sentinel-2 Satellite Image of the Northern Iran Dataset

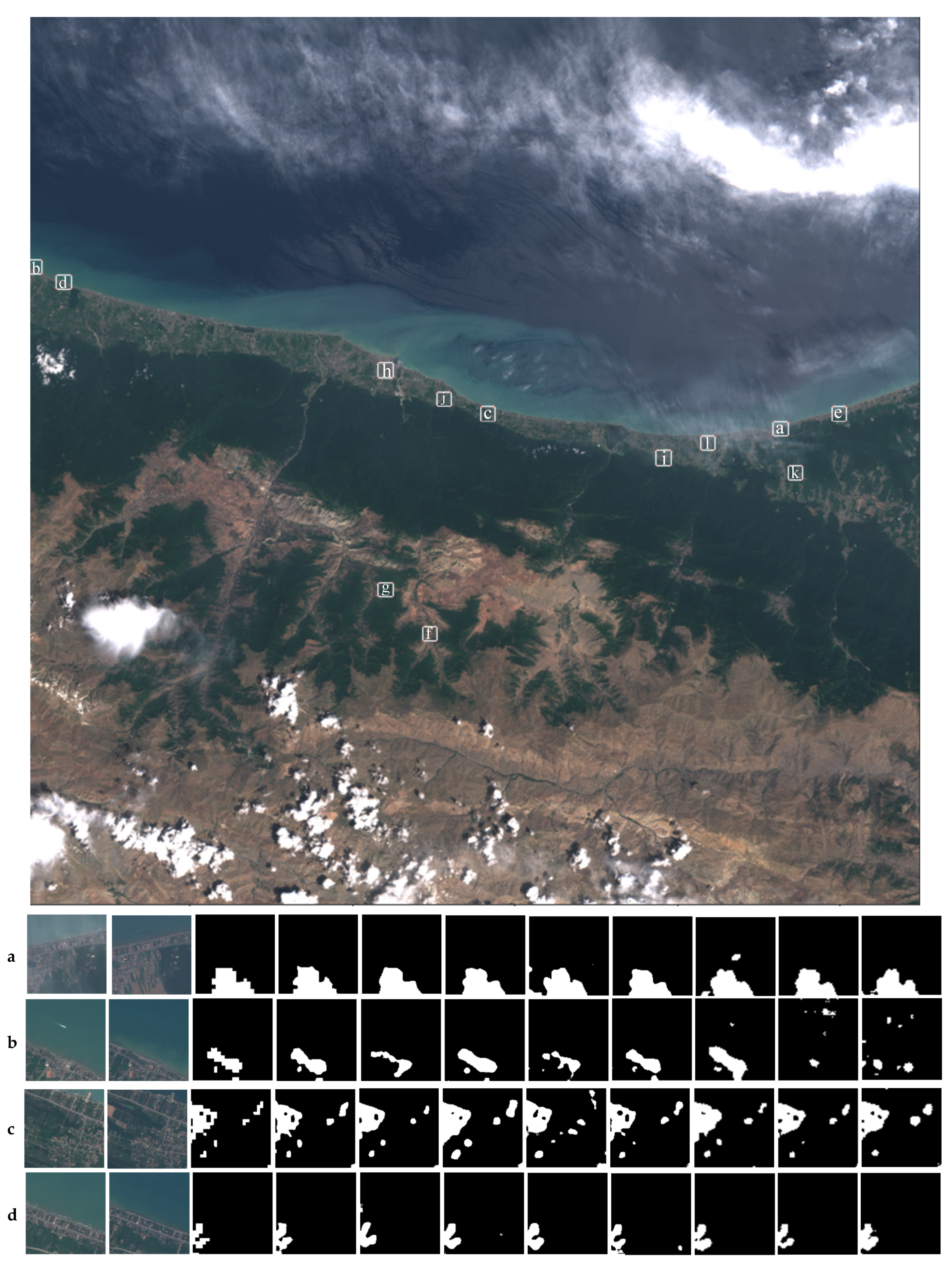

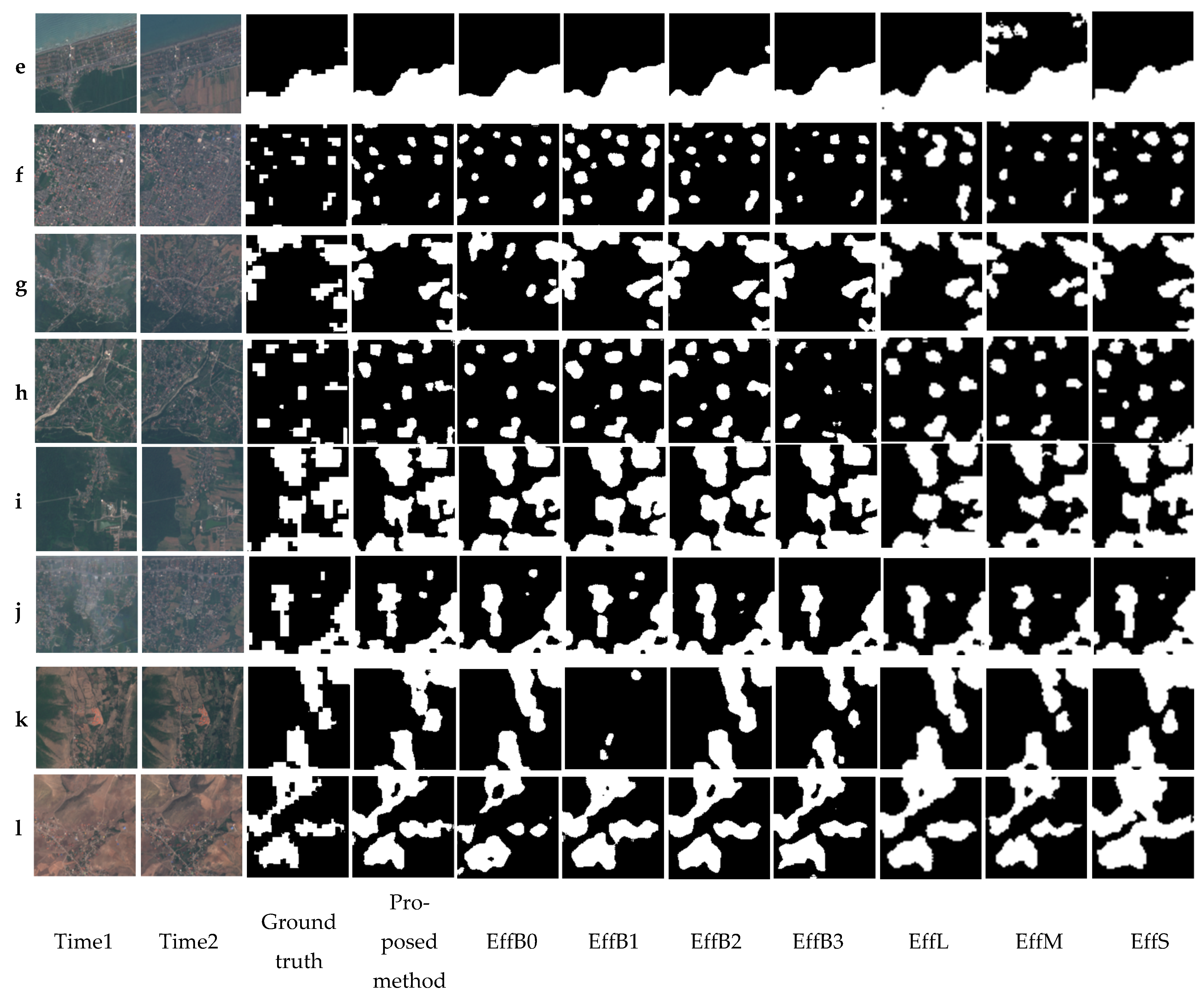

2.2. Onera Sentinel-2 Satellite Change Detection (OSCD) Dataset

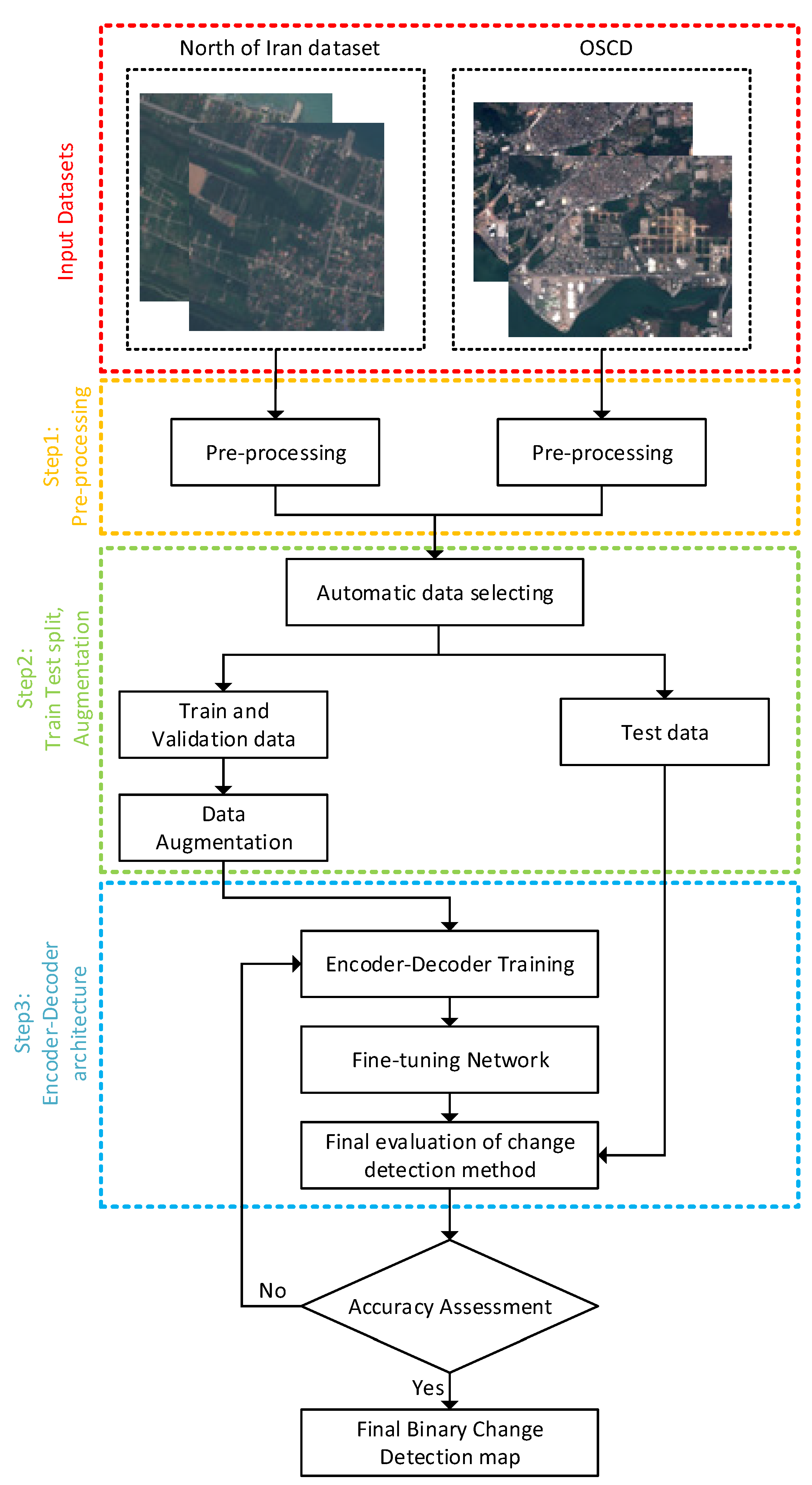

3. Proposed Method

3.1. Pre-Processing

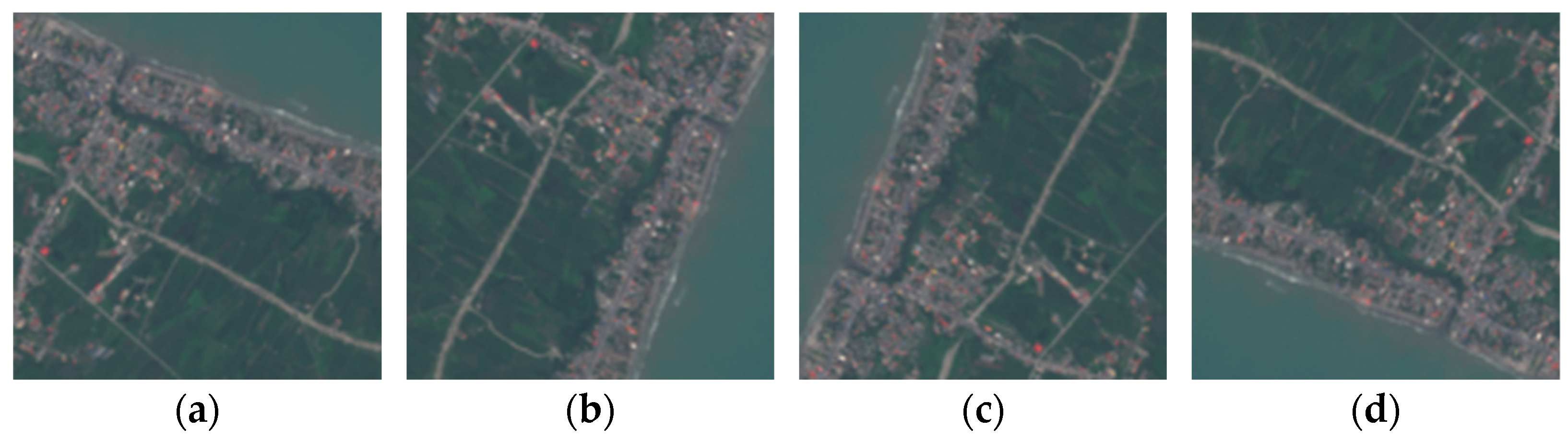

3.2. Train and Test Split and Augmentation

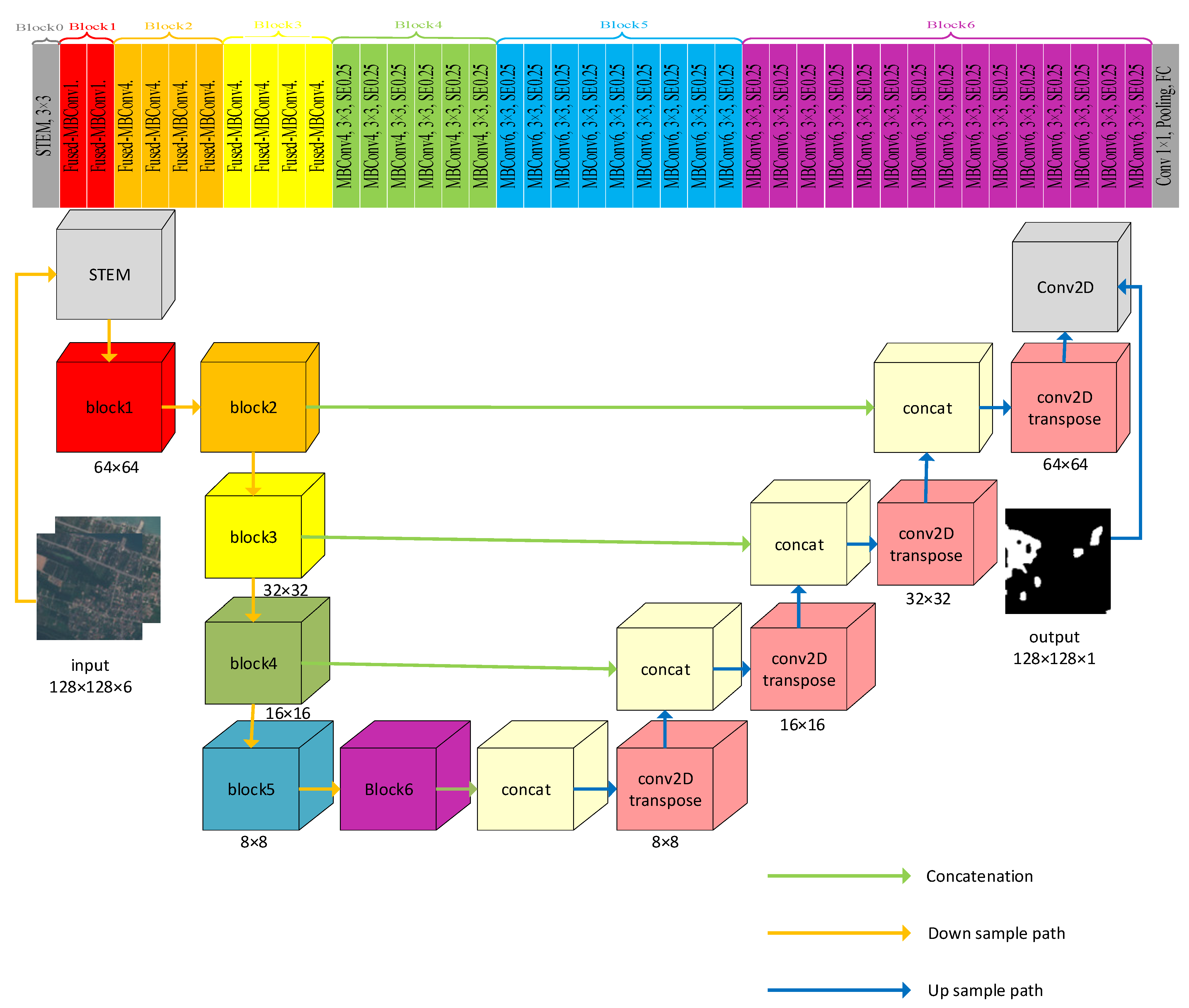

3.3. Encoder-Decoder Architecture

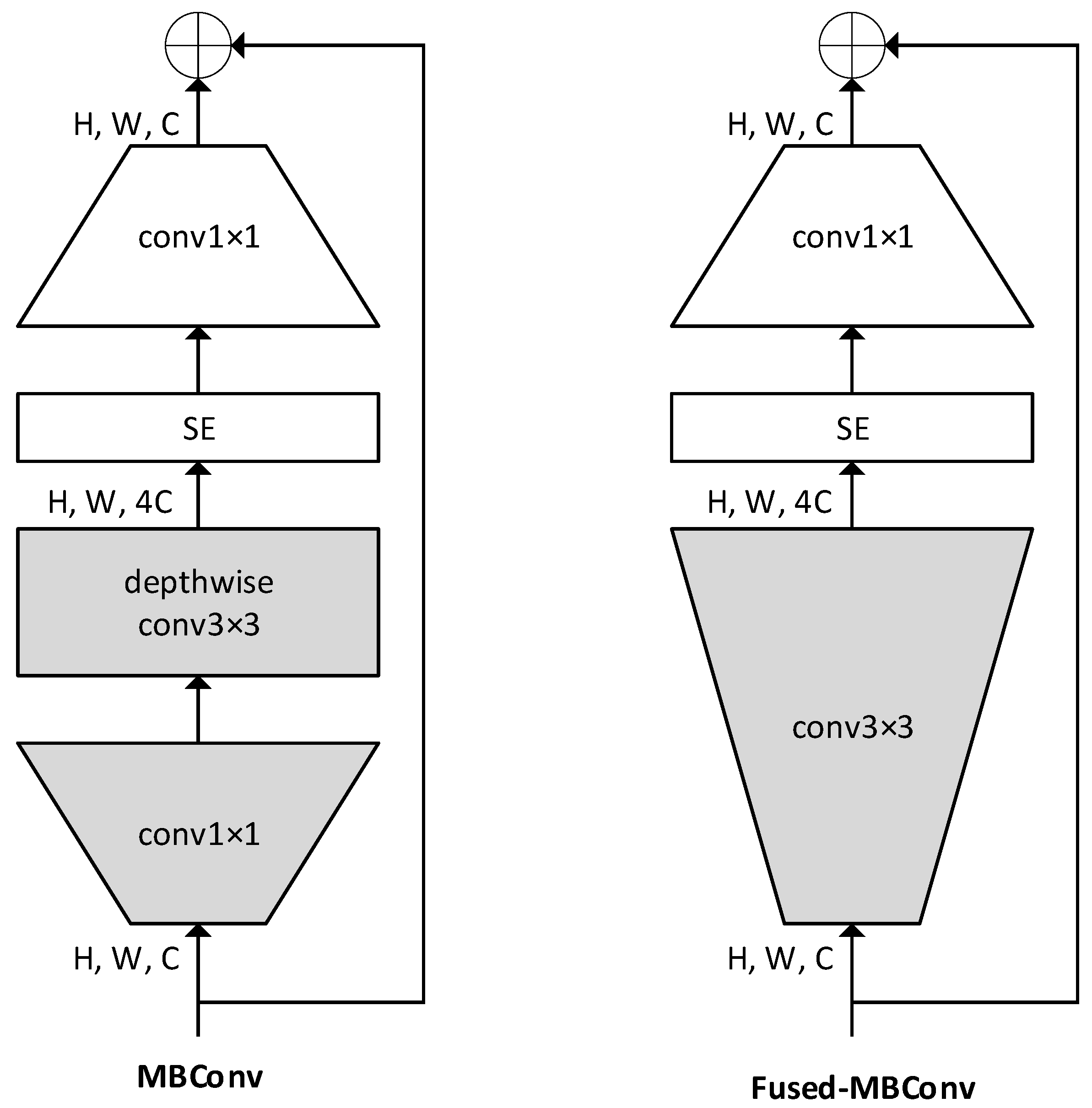

3.3.1. EfficientNet Encoder

3.3.2. Transfer Learning with Different Input Channels

3.3.3. Unet Decoder

3.3.4. Loss Function

3.3.5. Accuracy Assessment

3.3.6. Comparative Methods

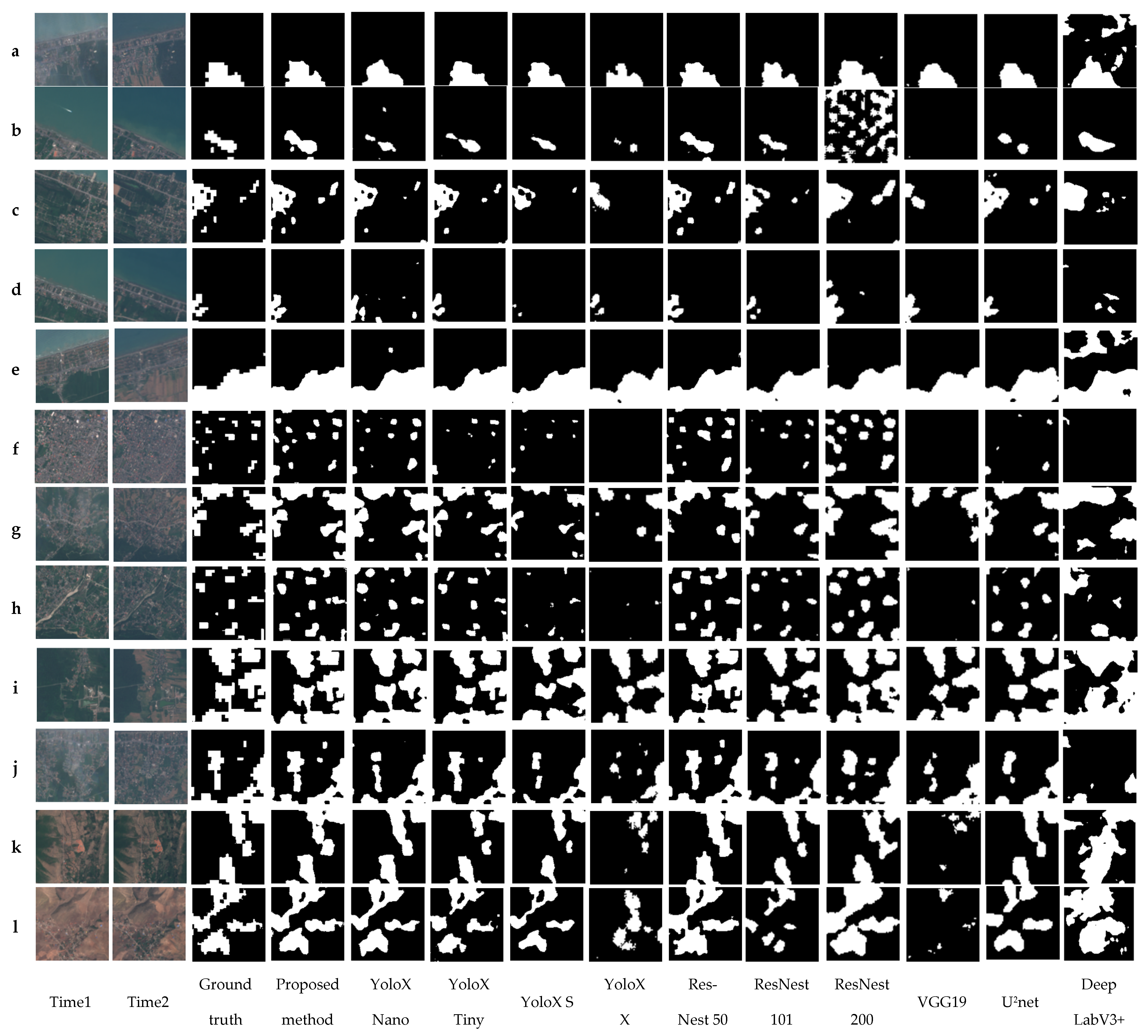

- YoloX [61]: the Yolo family of networks is generally used for object detection, and these networks are both fast and accurate. In this study, we use the YoloX Nano, Tiny, S, and X variants, pre-trained on the COCO dataset, as the encoder and the convolutional layers of Unet as the decoder.

- ResNest [62]: this split-attention network comes in four variants, ResNest50, ResNest101, ResNest200, and ResNest269, whose number of parameters increases with depth. In this study, we use ResNest50, ResNest101, and ResNest200, pre-trained on the ImageNet dataset, as the encoder and the convolutional layers of Unet as the decoder.

- VGG19 [63]: this is one of the most widely used networks in remote sensing tasks. In this study, we use VGG19 pre-trained on ImageNet as the encoder path and the convolutional layers of Unet as the decoder path.

- DeepLabV3+ [64]: DeepLabV3+ is the most recent version of the DeepLab network, and an implementation is available at http://keras.io. This network was designed for multiclass semantic segmentation, and its backbone is a ResNet50 pre-trained on ImageNet. In this study, we use DeepLabV3+ for binary change detection: we change the number of input channels to six and share the pre-trained weights using the third method described in Section 3.3.2.

- U2Net [65]: this network is one of the most recent U-Net variants and was proposed for salient object detection. U2Net is a two-level nested U-structure built from residual U-blocks (RSU) of different heights. We compare the performance of this approach with that of our proposed method.
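Most of the comparison encoders start from ImageNet or COCO weights whose first convolution expects three (RGB) channels, whereas the bi-temporal input has six. The sketch below shows one common way to reuse such weights: the pre-trained first-layer kernel is repeated across the extra input channels and rescaled. It is an assumed illustration, not necessarily the weight-sharing scheme used in this study; the function name `adapt_first_conv` is hypothetical, and the kernel layout `(kh, kw, in_ch, out_ch)` follows the Keras convention.

```python
import numpy as np


def adapt_first_conv(kernel: np.ndarray, new_in_ch: int) -> np.ndarray:
    """Expand a pre-trained first-layer kernel to a new number of input channels.

    kernel: array of shape (kh, kw, in_ch, out_ch), Keras-style layout.
    Input-channel slices are repeated cyclically (0, 1, 2, 0, 1, 2, ...) and the
    result is scaled by in_ch / new_in_ch. When new_in_ch is a multiple of in_ch,
    a spatially uniform input produces the same response as before.
    """
    kh, kw, in_ch, out_ch = kernel.shape
    # For each new channel, pick which pre-trained channel it copies.
    idx = np.arange(new_in_ch) % in_ch
    expanded = kernel[:, :, idx, :]
    return expanded * (in_ch / new_in_ch)


# Hypothetical 3x3 kernel with 3 input (RGB) channels and 16 filters.
rng = np.random.default_rng(0)
rgb_kernel = rng.normal(size=(3, 3, 3, 16)).astype(np.float32)
six_ch_kernel = adapt_first_conv(rgb_kernel, 6)
print(six_ch_kernel.shape)  # (3, 3, 6, 16)
```

If the six channels are ordered as the time-1 RGB bands followed by the time-2 RGB bands, each new input channel inherits the pre-trained filter of the matching color band, and halving the weights keeps the overall first-layer activation scale roughly unchanged.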

4. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jia, L.; Wang, J.; Ai, J.; Jiang, Y. A hierarchical spatial-temporal graph-kernel for high-resolution SAR image change detection. Int. J. Remote Sens. 2020, 41, 3866–3885.

2. Du, P.; Wang, X.; Chen, D.; Liu, S.; Lin, C.; Meng, Y. An improved change detection approach using tri-temporal logic-verified change vector analysis. ISPRS J. Photogramm. Remote Sens. 2020, 161, 278–293.

3. Goswami, A.; Sharma, D.; Mathuku, H.; Gangadharan, S.M.P.; Yadav, C.S.; Sahu, S.K.; Pradhan, M.K.; Singh, J.; Imran, H. Change Detection in Remote Sensing Image Data Comparing Algebraic and Machine Learning Methods. Electronics 2022, 11, 431.

4. Shafique, A.; Cao, G.; Khan, Z.; Asad, M.; Aslam, M. Deep learning-based change detection in remote sensing images: A review. Remote Sens. 2022, 14, 871.

5. Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change detection based on artificial intelligence: State-of-the-art and challenges. Remote Sens. 2020, 12, 1688.

6. Bai, T.; Wang, L.; Yin, D.; Sun, K.; Chen, Y.; Li, W.; Li, D. Deep learning for change detection in remote sensing: A review. Geo-Spat. Inf. Sci. 2022, 1–27.

7. Bruzzone, L.; Prieto, D.F. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

8. Chen, J.; Gong, P.; He, C.; Pu, R.; Shi, P. Land-use/land-cover change detection using improved change-vector analysis. Photogramm. Eng. Remote Sens. 2003, 69, 369–379.

9. Gong, P. Change detection using principal component analysis and fuzzy set theory. Can. J. Remote Sens. 1993, 19, 22–29.

10. Celik, T. Unsupervised change detection in satellite images using principal component analysis and k-means clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

11. Silván-Cárdenas, J.L.; Wang, L. On quantifying post-classification subpixel landcover changes. ISPRS J. Photogramm. Remote Sens. 2014, 98, 94–105.

12. Yuan, F.; Sawaya, K.E.; Loeffelholz, B.C.; Bauer, M.E. Land cover classification and change analysis of the Twin Cities (Minnesota) Metropolitan Area by multitemporal Landsat remote sensing. Remote Sens. Environ. 2005, 98, 317–328.

13. Bovolo, F.; Bruzzone, L.; Marconcini, M. A novel approach to unsupervised change detection based on a semisupervised SVM and a similarity measure. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2070–2082.

14. Healey, S.P.; Cohen, W.B.; Yang, Z.; Brewer, C.K.; Brooks, E.B.; Gorelick, N.; Hernandez, A.J.; Huang, C.; Hughes, M.J.; Kennedy, R.E. Mapping forest change using stacked generalization: An ensemble approach. Remote Sens. Environ. 2018, 204, 717–728.

15. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

16. Khelifi, L.; Mignotte, M. Deep learning for change detection in remote sensing images: Comprehensive review and meta-analysis. IEEE Access 2020, 8, 126385–126400.

17. Cao, G.; Wang, B.; Xavier, H.-C.; Yang, D.; Southworth, J. A new difference image creation method based on deep neural networks for change detection in remote-sensing images. Int. J. Remote Sens. 2017, 38, 7161–7175.

18. Daudt, R.C.; Le Saux, B.; Boulch, A. Fully convolutional siamese networks for change detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP); IEEE: New York, NY, USA, 2018; pp. 4063–4067.

19. Zhang, W.; Lu, X. The spectral-spatial joint learning for change detection in multispectral imagery. Remote Sens. 2019, 11, 240.

20. Wang, M.; Tan, K.; Jia, X.; Wang, X.; Chen, Y. A deep siamese network with hybrid convolutional feature extraction module for change detection based on multi-sensor remote sensing images. Remote Sens. 2020, 12, 205.

21. Lin, Y.; Li, S.; Fang, L.; Ghamisi, P. Multispectral change detection with bilinear convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1757–1761.

22. Seydi, S.T.; Hasanlou, M.; Amani, M. A new end-to-end multi-dimensional CNN framework for land cover/land use change detection in multi-source remote sensing datasets. Remote Sens. 2020, 12, 2010.

23. Zhang, M.; Shi, W. A feature difference convolutional neural network-based change detection method. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7232–7246.

24. Yang, L.; Chen, Y.; Song, S.; Li, F.; Huang, G. Deep Siamese networks based change detection with remote sensing images. Remote Sens. 2021, 13, 3394.

25. Jiang, X.; Li, G.; Zhang, X.-P.; He, Y. A semisupervised Siamese network for efficient change detection in heterogeneous remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–18.

26. Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

27. Wang, D.; Chen, X.; Jiang, M.; Du, S.; Xu, B.; Wang, J. ADS-Net: An Attention-Based deeply supervised network for remote sensing image change detection. Int. J. Appl. Earth Obs. Geoinf. 2021, 101, 102348.

28. Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

29. Ma, J.; Shi, G.; Li, Y.; Zhao, Z. MAFF-Net: Multi-Attention Guided Feature Fusion Network for Change Detection in Remote Sensing Images. Sensors 2022, 22, 888.

30. Papadomanolaki, M.; Verma, S.; Vakalopoulou, M.; Gupta, S.; Karantzalos, K. Detecting urban changes with recurrent neural networks from multitemporal Sentinel-2 data. In Proceedings of the IGARSS 2019 – 2019 IEEE International Geoscience and Remote Sensing Symposium; IEEE: New York, NY, USA, 2019; pp. 214–217.

31. Wiratama, W.; Lee, J.; Sim, D. Change detection on multi-spectral images based on feature-level U-Net. IEEE Access 2020, 8, 12279–12289.

32. Chen, H.; Wu, C.; Du, B. Towards deep and efficient: A deep Siamese self-attention fully efficient convolutional network for change detection in VHR images. arXiv 2021, arXiv:2108.08157.

33. Zhang, H.; Wang, M.; Wang, F.; Yang, G.; Zhang, Y.; Jia, J.; Wang, S. A novel squeeze-and-excitation W-net for 2D and 3D building change detection with multi-source and multi-feature remote sensing data. Remote Sens. 2021, 13, 440.

34. Ding, Q.; Shao, Z.; Huang, X.; Altan, O. DSA-Net: A novel deeply supervised attention-guided network for building change detection in high-resolution remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2021, 105, 102591.

35. Wang, Y.; Gao, L.; Hong, D.; Sha, J.; Liu, L.; Zhang, B.; Rong, X.; Zhang, Y. Mask DeepLab: End-to-end image segmentation for change detection in high-resolution remote sensing images. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102582.

36. Wei, H.; Chen, R.; Yu, C.; Yang, H.; An, S. BASNet: A Boundary-Aware Siamese Network for Accurate Remote-Sensing Change Detection. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5.

37. Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A deeply supervised image fusion network for change detection in high resolution bi-temporal remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

38. Raza, A.; Huo, H.; Fang, T. EUNet-CD: Efficient UNet++ for change detection of very high-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

39. Papadomanolaki, M.; Vakalopoulou, M.; Karantzalos, K. A deep multitask learning framework coupling semantic segmentation and fully convolutional LSTM networks for urban change detection. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7651–7668.

40. Ahangarha, M.; Shah-Hosseini, R.; Saadatseresht, M. Deep learning-based change detection method for environmental change monitoring using sentinel-2 datasets. Environ. Sci. Proc. 2020, 5, 15.

41. Mou, L.; Zhu, X.X. A recurrent convolutional neural network for land cover change detection in multispectral images. In Proceedings of the IGARSS 2018 – 2018 IEEE International Geoscience and Remote Sensing Symposium; IEEE: New York, NY, USA, 2018; pp. 4363–4366.

42. Gong, M.; Yang, Y.; Zhan, T.; Niu, X.; Li, S. A generative discriminatory classified network for change detection in multispectral imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 321–333.

43. Luo, X.; Li, X.; Wu, Y.; Hou, W.; Wang, M.; Jin, Y.; Xu, W. Research on change detection method of high-resolution remote sensing images based on subpixel convolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1447–1457.

44. Alvarez, J.L.H.; Ravanbakhsh, M.; Demir, B. S2-cGAN: Self-supervised adversarial representation learning for binary change detection in multispectral images. In Proceedings of the IGARSS 2020 – 2020 IEEE International Geoscience and Remote Sensing Symposium; IEEE: New York, NY, USA, 2020; pp. 2515–2518.

45. Zhang, Y.; Deng, M.; He, F.; Guo, Y.; Sun, G.; Chen, J. FODA: Building change detection in high-resolution remote sensing images based on feature–output space dual-alignment. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8125–8134.

46. Saha, S.; Mou, L.; Qiu, C.; Zhu, X.X.; Bovolo, F.; Bruzzone, L. Unsupervised deep joint segmentation of multitemporal high-resolution images. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8780–8792.

47. Tan, M.; Le, Q. Efficientnetv2: Smaller models and faster training. In Proceedings of the 38th International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 10096–10106.

48. Bigdeli, B.; Gomroki, M.; Pahlavani, P. Generation of digital terrain model for forest areas using a new particle swarm optimization on LiDAR data. Surv. Rev. 2020, 52, 115–125.

49. Gomroki, M.; Jafari, M.; Sadeghian, S.; Azizi, Z. Application of intelligent interpolation methods for DTM generation of forest areas based on LiDAR data. PFG-J. Photogramm. Remote Sens. Geoinform. Sci. 2017, 85, 227–241.

50. Khoshboresh-Masouleh, M.; Alidoost, F.; Arefi, H. Multiscale building segmentation based on deep learning for remote sensing RGB images from different sensors. J. Appl. Remote Sens. 2020, 14, 034503.

51. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

52. Baheti, B.; Innani, S.; Gajre, S.; Talbar, S. Eff-unet: A novel architecture for semantic segmentation in unstructured environment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Virtual, 14–19 June 2020; pp. 358–359.

53. Niu, H.; Lin, Z.; Zhang, X.; Jia, T. Image Segmentation for pneumothorax disease Based on based on Nested Unet Model. In Proceedings of the 2022 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications (CVIDL & ICCEA), Changchun, China, 20–22 May 2022; IEEE: New York, NY, USA, 2022; pp. 756–759.

54. Thanh, N.C.; Long, T.Q. CRF-EfficientUNet: An Improved UNet Framework for Polyp Segmentation in Colonoscopy Images With Combined Asymmetric Loss Function and CRF-RNN Layer. IEEE Access 2021, 9, 156987–157001.

55. Üzen, H.; Turkoglu, M.; Aslan, M.; Hanbay, D. Depth-wise Squeeze and Excitation Block-based Efficient-Unet model for surface defect detection. Vis. Comput. 2022, 1–20.

56. Mathews, M.R.; Anzar, S.M.; Krishnan, R.K.; Panthakkan, A. EfficientNet for retinal blood vessel segmentation. In Proceedings of the 2020 3rd International Conference on Signal Processing and Information Security (ICSPIS), Dubai, United Arab Emirates, 25–26 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–4.

57. Wu, H.; Souedet, N.; Jan, C.; Clouchoux, C.; Delzescaux, T. A general deep learning framework for neuron instance segmentation based on efficient UNet and morphological post-processing. Comput. Biol. Med. 2022, 150, 106180.

58. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

59. Peng, D.; Zhang, Y.; Guan, H. End-to-end change detection for high resolution satellite images using improved UNet++. Remote Sens. 2019, 11, 1382.

60. Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE international Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

61. Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

62. Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R. Resnest: Split-attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2736–2746.

63. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

64. Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

65. Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

| Category | Subcategory | Definition | Mode | Advantages | Limitations | Applications |
|---|---|---|---|---|---|---|
| Visual analysis | – | A change map is generated by visual interpretation | Supervised | Highly reliable results | Difficult to update; time-consuming and labor-intensive for large applications | Used in various fields [3] |
| Algebra-based methods | Image differencing; image regression; image ratioing; change vector analysis; vegetation index differencing | A change map is generated by performing algebraic operations or transformations | Unsupervised | Simple and easy to implement and interpret; reduces the impact of sun angle and topographic shadow | Difficult to choose a proper threshold for the change map; difficult to choose appropriate image bands; no "from-to" change information; the distribution of results is not normal | Urban land use [7]; urban land use and land cover [8] |
| Transformation | Principal component analysis (PCA); tasseled cap; chi-square; Gram-Schmidt | A change map is produced with transformation methods, which suppress correlated information and highlight variance | Unsupervised | Reduces redundancy between bands; emphasizes different information in the derived components | Detailed change information cannot be extracted | Rural-urban land cover [9]; land cover [10] |
| Classification methods | Post-classification comparison; spectral-temporal combined analysis; EM (expectation maximization) transformation; unsupervised change detection methods; hybrid change detection; artificial neural networks | A change map is generated by a classification method | Supervised, unsupervised, hybrid | Provides a change information matrix; no atmospheric correction needed | Selecting training data is challenging | Land cover [11]; urban land cover [12,13]; forest change detection [14] |
| Advanced methods | Li-Strahler reflectance model; spectral mixture model; biophysical parameter method | Spectral reflectance values are transformed into physically based parameters | Hybrid | More straightforward to interpret than the spectral signature; can derive vegetation information | Complicated and time-consuming; developing a proper model is challenging | Land cover [3] |
| GIS techniques | Integration of GIS and RS methods; GIS method | Different data sources are used for change detection | Hybrid | Land use information can be updated directly in the GIS database | The quality of the change map depends on the different data types used | Forest change detection [15] |
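To make the algebra-based category concrete, the sketch below implements change vector analysis (CVA) on two co-registered multiband images: the per-pixel magnitude of the spectral difference vector is computed and then thresholded. The threshold rule (mean plus k standard deviations of the magnitude, with k = 2) and the function name `cva_change_map` are illustrative assumptions; as the table notes, choosing a proper threshold is the main difficulty of these methods.

```python
import numpy as np


def cva_change_map(img_t1: np.ndarray, img_t2: np.ndarray, k: float = 2.0) -> np.ndarray:
    """Binary change map by change vector analysis.

    img_t1, img_t2: co-registered arrays of shape (H, W, bands).
    Returns a boolean (H, W) array that is True where change is detected.
    """
    diff = img_t2.astype(np.float64) - img_t1.astype(np.float64)
    # Euclidean length of the spectral change vector at each pixel.
    magnitude = np.sqrt(np.sum(diff ** 2, axis=-1))
    # Assumed global threshold: mean + k * standard deviation of the magnitudes.
    threshold = magnitude.mean() + k * magnitude.std()
    return magnitude > threshold


# Toy example: a 4x4, 3-band scene in which a single pixel changes strongly.
t1 = np.zeros((4, 4, 3))
t2 = t1.copy()
t2[1, 2] = [0.3, 0.4, 0.0]  # change vector of length 0.5
change = cva_change_map(t1, t2)
print(np.argwhere(change))  # [[1 2]]
```

With a single band, the same code reduces to thresholded image differencing.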

| Category | Sub-Category | Mode | Data | Advantages | Limitations | Applications |
|---|---|---|---|---|---|---|
| CNN | Deep belief network | Supervised | Multispectral SPOT-5 and Landsat images, Google Earth images | High accuracy | Processing time | Urban land use and vegetation [17] |
| CNN | Fully convolutional Siamese network | Supervised | Multispectral Onera Sentinel-2 Satellite Change Detection (OSCD) images | Trained end to end | Requires a large amount of training data | Urban land use [18] |
| CNN | Spectral-spatial joint learning network | Supervised | Multispectral Taizhou and Kunshan datasets | High performance | Requires a large amount of training data | Urban land use [19] |
| CNN | Deep Siamese network with hybrid convolutional feature extraction module | Supervised | Multispectral ZY-3 and GF-2 satellite images | Extracts robust deep features | Cannot separate a pixel from its neighbors during classification | Urban, rural-urban, and non-urban land use [20] |
| CNN | Bilinear convolutional neural network | Supervised | Multispectral Landsat-8 satellite images | End-to-end training | Generating labeled data is challenging | River and water land use [21] |
| CNN | Multidimensional CNN | Unsupervised | OSCD | End to end | Time-consuming | Urban land use/land cover [22] |
| CNN | Feature difference convolutional neural network | Pre-trained | Multispectral WorldView-3, QuickBird, and Ziyuan-3 satellite images | Strong robustness and generalization ability | Requires a large number of pixel-level training samples | Urban land use [23] |
| CNN | Deep Siamese semantic segmentation network | Supervised | RGB building images | Mitigates the training-sample issue | Poor performance in detecting exact building boundaries | Urban construction [24] |
| CNN | Semi-supervised Siamese network based on transfer learning | Pre-trained | Haiti earthquake QuickBird satellite images | Reduces computational cost | Error map | Urban land cover [25] |
| CNN | Attention mechanism-based deep supervision network | Supervised | Multispectral LEVIR-CD dataset [26] | High performance | Complex model | Urban land cover [27] |
| CNN | Multi-attention guided feature fusion network | Supervised | LEVIR-CD [26], WHU-CD [28] | Enhances deep feature extraction and fusion | Large number of parameters | Building change detection [29] |
| AE | Multispectral Unet | Supervised | OSCD | End to end | Low performance | Urban land use [4] |
| AE and RNN | Combination of Unet and robust recurrent networks such as LSTM | Supervised | OSCD | End to end | Requires a large amount of training data | Urban land use [30] |
| AE | Unet | Supervised | Multispectral KOMPSAT-3 satellite images | Solves the spectral distortion issue | Computational complexity | Urban land use and forest [31] |
| AE | Deep self-attention fully efficient convolutional Siamese network | Supervised | Google Earth multispectral season-varying dataset | End to end | Model complexity | Urban land use [32] |
| AE | Developed from Unet and SENet | Supervised | Multispectral Wuhan dataset from IKONOS | End to end | Requires a large amount of training data | 2D and 3D building change detection [33] |
| AE | Deeply supervised attention-guided network | Supervised | LEVIR-CD [26] | Extracts deep features efficiently | Time-consuming | Building change detection [34] |
| AE | Hierarchical self-attention augmented Laplacian pyramid | Supervised | Higres satellite images | Extracts deep features | Complex model | Urban land use [4] |
| AE | Feature-regularized mask DeepLab | Supervised | LEVIR-CD [26], GF-1 satellite images | End to end | Cannot detect edges properly | Building change detection [35] |
| AE | Boundary-aware Siamese network | Supervised | LEVIR-CD [26] | End to end, sharp boundaries | Complex model | Urban land use [36] |
| AE | Efficient Unet++ | Supervised | LEVIR-CD [26], CD dataset [37] | Minimizes computational parameters | Time-consuming | Urban land use [38] |
| AE | Multitask learning framework L-Unet | Supervised | OSCD | Addresses illumination differences and registration errors | Poor performance, especially in preserving object shape | Urban change detection [39] |
| AE | Unet | Supervised | OSCD | Simple model, easy to implement | Cannot recognize small changes | Urban change detection [40] |
| RNN | Recurrent convolutional neural network | Supervised | Multispectral Taizhou dataset | End to end | Cannot extract all deep features | Urban land use [41] |
| GAN | Generative discriminatory classified network | Supervised | Multispectral WorldView-2 and GF-1 satellite images | Mitigates the training-sample issue | Model complexity | Urban land use and water [42] |
| GAN | Deep GAN with improved DeepLabV3+ | Unsupervised | OSCD, Landsat-8, and Google Earth satellite images | High performance | Requires a large amount of training data | Urban land use [43] |
| GAN | Self-supervised conditional GAN | Semi-supervised | Multispectral WorldView-2 satellite images | Extracts features at multiple resolutions | Model complexity | Urban land use [44] |
| GAN | Feature–output space dual alignment | Supervised | LEVIR-CD [26], WHU-CD [28] | Addresses the problem of pseudo-changes | Tuning the hyperparameters α and β | Building change detection [45] |
| DBN | Deep joint segmentation | Unsupervised | Multispectral Sentinel-2 and Pleiades images | No labeled training pixels required | Time-consuming | Urban land use [46] |

| Dataset | Time 1 | Time 2 | Band | Spectral Range (µm) | Spatial Resolution (m) |
|---|---|---|---|---|---|
| Onera Sentinel-2 Satellite Change Detection (OSCD) | 2015 | 2018 | Blue | 0.45–0.52 | 10 |
| | | | Green | 0.52–0.59 | 10 |
| | | | Red | 0.63–0.69 | 10 |
| North of Iran Sentinel-2 satellite images | 2017 | 2021 | Blue | 0.45–0.52 | 10 |
| | | | Green | 0.52–0.59 | 10 |
| | | | Red | 0.63–0.69 | 10 |
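Both datasets provide the same three 10 m bands (blue, green, red) at two dates; stacking the two acquisitions gives the six-channel input mentioned for the comparison networks. The sketch below shows one way to build that input and cut it into non-overlapping training patches. The function names, the patch size of 64 pixels, and the choice to drop incomplete border patches are illustrative assumptions, not values taken from this paper.

```python
import numpy as np


def stack_bitemporal(rgb_t1: np.ndarray, rgb_t2: np.ndarray) -> np.ndarray:
    """Concatenate two (H, W, 3) acquisitions into one (H, W, 6) array."""
    if rgb_t1.shape != rgb_t2.shape:
        raise ValueError("the two dates must be co-registered and equally sized")
    return np.concatenate([rgb_t1, rgb_t2], axis=-1)


def to_patches(image: np.ndarray, size: int = 64) -> np.ndarray:
    """Split (H, W, C) into non-overlapping (size, size, C) patches in row-major order.

    Rows and columns that do not fill a whole patch are discarded (assumption).
    """
    h, w, c = image.shape
    nh, nw = h // size, w // size
    cropped = image[: nh * size, : nw * size]
    # (nh, size, nw, size, c) -> (nh, nw, size, size, c) -> (nh * nw, size, size, c)
    patches = cropped.reshape(nh, size, nw, size, c).swapaxes(1, 2)
    return patches.reshape(nh * nw, size, size, c)


# Hypothetical 200 x 130 pixel scene observed at both dates.
t1 = np.random.rand(200, 130, 3).astype(np.float32)
t2 = np.random.rand(200, 130, 3).astype(np.float32)
stacked = stack_bitemporal(t1, t2)
patches = to_patches(stacked, size=64)
print(stacked.shape, patches.shape)  # (200, 130, 6) (6, 64, 64, 6)
```

The same patch grid must be applied to the reference change map so that each six-channel patch stays aligned with its label.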

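| Metric | Formula |
|---|---|
| Precision | $\frac{TP}{TP+FP}$ |
| F1-score | $\frac{2TP}{2TP+FP+FN}$ |
| IoU | $\frac{TP}{TP+FP+FN}$ |
| Accuracy | $\frac{TP+TN}{TP+TN+FP+FN}$ |
| Kappa Coefficient (KC) | $\frac{p_o-p_e}{1-p_e}$ |

TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives; $p_o$ is the overall accuracy and $p_e = \frac{(TP+FP)(TP+FN)+(FN+TN)(FP+TN)}{(TP+TN+FP+FN)^2}$ is the agreement expected by chance.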
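The listed metrics can be computed from a binary predicted change map and its reference map via the confusion-matrix counts TP, FP, FN, and TN. The sketch below uses the standard definitions, with Cohen's kappa for KC; it is an illustration under that assumption, the function name `change_metrics` is hypothetical, and the exact formulas used in this study may differ in detail.

```python
import numpy as np


def change_metrics(pred: np.ndarray, ref: np.ndarray) -> dict:
    """Precision, F1, IoU, overall accuracy and Cohen's kappa for binary change maps.

    pred, ref: arrays of equal shape; nonzero/True marks a changed pixel.
    Degenerate cases (e.g. no predicted changes) give nan, and numpy warns on 0/0.
    """
    pred = pred.astype(bool).ravel()
    ref = ref.astype(bool).ravel()
    tp = np.sum(pred & ref)
    fp = np.sum(pred & ~ref)
    fn = np.sum(~pred & ref)
    tn = np.sum(~pred & ~ref)
    n = tp + fp + fn + tn

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    accuracy = (tp + tn) / n
    # Chance agreement from the marginal totals of the confusion matrix.
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n**2
    kappa = (accuracy - p_e) / (1 - p_e)
    return {"precision": precision, "f1": f1, "iou": iou,
            "accuracy": accuracy, "kappa": kappa}


pred = np.array([[1, 1, 0, 0], [1, 0, 0, 0]], dtype=bool)
ref = np.array([[1, 0, 0, 0], [1, 1, 0, 0]], dtype=bool)
m = change_metrics(pred, ref)
print(m)
```

For this toy pair (TP = 2, FP = 1, FN = 1, TN = 4), precision and F1 are 2/3, IoU is 0.5, accuracy is 0.75, and kappa is 7/15 (about 0.467).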

| Method | Accuracy (%) | Precision (%) | F1-Score (%) | IoU (%) | Kappa Coefficient (KC) | Training Time (h min s) | Parameters (Million) |
|---|---|---|---|---|---|---|---|
| STCD-EffV2T Unet (proposed method), North of Iran dataset | 97.66 | 99.61 | 98.79 | 97.60 | 0.67 | 2 h 10 min 34 s | 6.6 M |
| STCD-EffV2T Unet (proposed method), OSCD | 97.32 | 98.44 | 97.05 | 96.34 | 0.59 | 5 min 0 s | 6.6 M |
| EffV2 B0 Unet | 97.45 | 99.60 | 98.68 | 97.40 | 0.59 | 1 h 15 min 26 s | 4.3 M |
| EffV2 B1 Unet | 97.54 | 99.29 | 98.72 | 97.48 | 0.63 | 1 h 50 min 30 s | 4.8 M |
| EffV2 B2 Unet | 97.31 | 98.98 | 98.60 | 97.25 | 0.63 | 1 h 56 min 3 s | 5.2 M |
| EffV2 B3 Unet | 97.23 | 98.89 | 98.56 | 97.16 | 0.62 | 2 h 5 min 32 s | 6.2 M |
| EffV2 L Unet | 97.17 | 99.03 | 98.53 | 97.10 | 0.60 | 3 h 30 min 45 s | 26.0 M |
| EffV2 M Unet | 97.03 | 99.14 | 98.46 | 96.97 | 0.56 | 3 h 38 min 56 s | 13.7 M |
| EffV2 S Unet | 97.23 | 99.30 | 98.56 | 97.17 | 0.58 | 2 h 38 min 44 s | 8.8 M |
| YoloXNano Unet | 97.20 | 99.00 | 98.54 | 97.13 | 0.60 | 1 h 17 min 3 s | 2.1 M |
| YoloXTiny Unet | 97.37 | 99.04 | 98.66 | 97.24 | 0.61 | 1 h 15 min 22 s | 2.9 M |
| YoloXX Unet | 97.17 | 99.07 | 98.45 | 97.13 | 0.51 | 3 h 53 min 46 s | 20.4 M |
| YoloXS Unet | 97.38 | 99.08 | 98.65 | 97.34 | 0.55 | 1 h 18 min 41 s | 3.5 M |
| VGG19 Unet | 97.04 | 99.07 | 98.62 | 97.24 | 0.58 | 1 h 28 min 34 s | 18.2 M |
| ResNest50 Unet | 97.05 | 99.05 | 98.47 | 97.15 | 0.62 | 3 h 21 min 26 s | 16.5 M |
| ResNest101 Unet | 97.27 | 99.09 | 98.58 | 97.21 | 0.61 | 3 h 41 min 0 s | 34.8 M |
| ResNest200 Unet | 95.57 | 97.15 | 97.67 | 95.46 | 0.50 | 9 h 36 min 34 s | 56.8 M |
| DeepLabV3+ | 92.82 | 95.03 | 96.21 | 92.70 | 0.29 | 2 h 30 min 2 s | 17.0 M |
| U2Net | 97.37 | 99.03 | 98.60 | 97.30 | 0.60 | 6 h 2 min 18 s | 44.0 M |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gomroki, M.; Hasanlou, M.; Reinartz, P. STCD-EffV2T Unet: Semi Transfer Learning EfficientNetV2 T-Unet Network for Urban/Land Cover Change Detection Using Sentinel-2 Satellite Images. Remote Sens. 2023, 15, 1232. https://doi.org/10.3390/rs15051232
