1. Introduction

Advanced Driver Assistance Systems (ADAS) are increasingly being considered in the context of research towards autonomous vehicles. By and large, ADAS require abundant and reliable data acquired by various sensors, such as cameras, LiDARs, and radars. Automotive radars, in particular, have proven to be excellent tools for sensing the surrounding environment thanks to their day-and-night imaging capability and their ability to measure distances and velocities with great accuracy. Radars are already used in ADAS, and interest in the development of this technology for the automotive scenario has grown considerably over the last decades [1,2,3,4,5].

Current automotive radars generally provide coarser resolution than optical and LiDAR sensors, which is perceived as an intrinsic limitation of this technology. Indeed, vehicle-based radar imaging is typically implemented using Multiple-Input Multiple-Output (MIMO) devices, whose angular resolution is bounded by the number of Tx/Rx channels forming the MIMO array. For this reason, achieving fine angular resolution requires more complex, larger, and more expensive devices. Nowadays, radars used for automotive purposes operate in the W-band [6] (above 76 GHz) and are mostly used for crash avoidance and object detection at short, medium, or long range, depending on their operating sub-band and configuration.

To overcome such limitations, recent research has focused on the concept of synthetic aperture radar (SAR), where an arbitrarily long array is formed by exploiting the natural motion of the vehicle [1,2,3,4]. Still, a necessary condition for any conventional SAR imaging algorithm is that the radar pulses are acquired continuously, meaning that the device is constantly operating. Unfortunately, this is not the case in current automotive scenarios: due to radio-frequency regulations and hardware requirements, radar devices are typically designed to operate on a non-continuous basis, transmitting bursts of a few hundred pulses alternated with periods of silence. This acquisition mode creates gaps in the spatial spectrum of the data, which manifest themselves as grating lobes in SAR images, leading to false target detections. This problem is typical of SAR imaging, and it has been widely covered in the literature [7,8].

The aim of this paper is to design, implement, and test a routine able to remove grating lobes from SAR images generated by processing data acquired in bursts. The goal is achieved in several steps. First, a space-invariant reference system is derived, in which the spectrum of the data depends only on the position of the sensor and not on the position of the target. Unlike more common reference systems, such as the Cartesian or the polar ones, this reference frame makes the gaps in the spectrum of the data visible for all targets at once. The high-resolution SAR image (still showing grating lobes) is the coherent sum of the bursts. Each burst, however, must be properly focused using autofocusing techniques [3] in order to correct for possible trajectory errors. Then, each burst must be phase calibrated [9,10] to allow for a fully coherent summation. Both steps are described in detail in this paper. Finally, grating lobes are removed with the CLEAN algorithm, developed in [7] for speckle and grating lobe suppression in astronomy and widely used also in telecommunications [11,12,13,14].

This paper is organized as follows. Section 2 covers the geometry and the signal model and deals with the basic notions of automotive SAR. Section 3 addresses the wavenumber domain in SAR imaging, covering the theory of the spatial spectral characteristics of a signal acquired in bursts. In Section 4, the new mathematical framework, which is the cornerstone of this work and provides a space-invariant descriptor of the spatial spectrum, is developed; the autofocus, the phase calibration technique, and the grating lobe suppression algorithm are also described in this section. Section 5 shows the real-data results used to validate this work and, finally, Section 6 draws the conclusions.

2. Geometry, Signal Model and SAR Processing

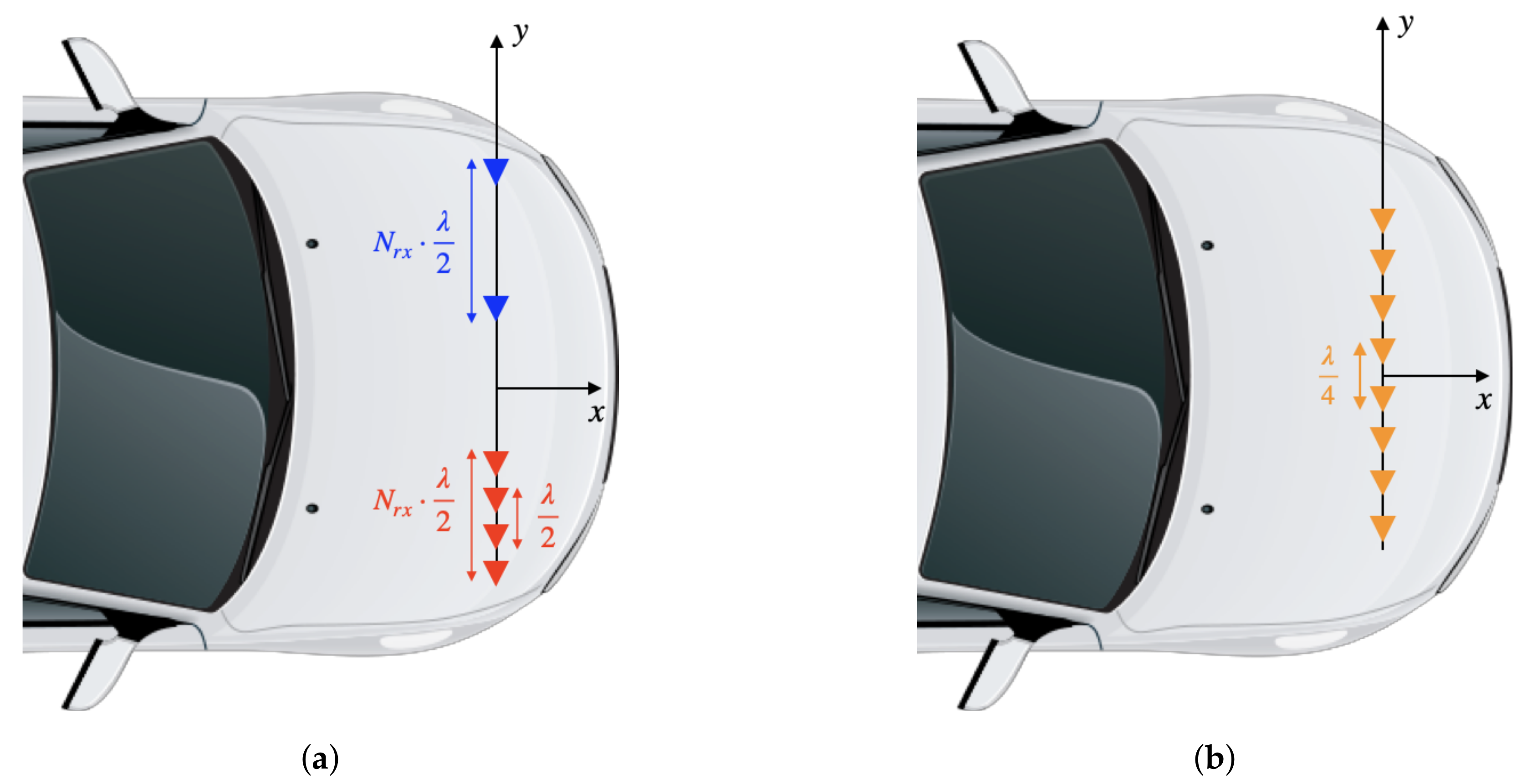

In this paper, we consider a MIMO radar having $N_{Tx}$ transmitting antennas and $N_{Rx}$ receiving ones, forming an equivalent monostatic virtual array of $N = N_{Tx}N_{Rx}$ elements. The elements and their spatial locations are represented in Figure 1.

The radar can be mounted on the car with an arbitrary installation angle. A forward-looking geometry is suited to target detection and navigation assistance; a side-looking geometry is, instead, more suited to parking detection, while a squinted geometry can be useful for collision avoidance. From now on, we consider a forward-looking geometry with the virtual elements displaced along the y direction.

It is well known that such a system provides a slant range resolution equal to [15]:

$$\rho_r = \frac{c}{2B} \tag{1}$$

where c is the speed of light and B is the bandwidth of the radar pulse. The angular resolution, instead, is related to the length of the virtual array as:

$$\rho_\psi^{\mathrm{MIMO}} = \frac{\lambda}{2L_{\mathrm{MIMO}}\cos\psi} = \frac{\lambda}{2Nd_y\cos\psi} \tag{2}$$

where λ is the wavelength, $d_y$ is the distance between elements of the virtual array, $L_{\mathrm{MIMO}} = Nd_y$ is the total length of the virtual array, and ψ is the off-boresight angle as depicted in Figure 1.

In order to avoid ambiguities, the distance between the virtual elements should be equal to $d_y = \lambda/4$ [16]. With this spacing, taking as an example a radar with eight virtual channels working at 77 GHz, the finest achievable resolution is roughly 15 deg.

One way to improve angular resolution is by exploiting the well-known SAR concept. While the MIMO array is transmitting pulses, the vehicle on which it is mounted moves, generating the so-called synthetic aperture. While the resolution of the MIMO radar was bounded by the length of the virtual array, now the resolution is bounded only by the length of the synthetic aperture as

$$\rho_\psi^{\mathrm{SAR}} = \frac{\lambda}{2L_s\sin\psi} \tag{3}$$

where $L_s$ is the length of the synthetic aperture. Notice that now there is a sine function in the denominator. While the MIMO array provides the best resolution at its boresight (in front of the car), the SAR image has its best resolution in the direction orthogonal to the motion (i.e., to the left or right of the car).
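As a quick numerical check of Equations (1)-(3), the sketch below evaluates the three resolutions at 77 GHz. The bandwidth B = 1 GHz is an assumption (it is the value for which c/(2B) gives the ~15 cm range resolution quoted in Section 5), and the function names are hypothetical helpers, not part of any library.

```python
import math

C = 299_792_458.0  # speed of light [m/s]


def range_resolution(bandwidth_hz):
    """Slant-range resolution c / (2B), Equation (1)."""
    return C / (2.0 * bandwidth_hz)


def mimo_angular_resolution(wavelength, n_virtual, psi_rad=0.0):
    """lambda / (2 L cos psi) for a monostatic-equivalent array with lambda/4 spacing."""
    length = n_virtual * wavelength / 4.0
    return wavelength / (2.0 * length * math.cos(psi_rad))


def sar_angular_resolution(wavelength, aperture_m, psi_rad=math.pi / 2):
    """lambda / (2 L_s sin psi); best at psi = 90 deg (side of the car)."""
    return wavelength / (2.0 * aperture_m * math.sin(psi_rad))


lam = C / 77e9  # wavelength at 77 GHz [m]
print(f"range: {range_resolution(1e9):.3f} m")                              # assumed B = 1 GHz
print(f"MIMO:  {math.degrees(mimo_angular_resolution(lam, 8)):.1f} deg")    # 8 virtual channels
print(f"SAR:   {math.degrees(sar_angular_resolution(lam, 0.30)):.2f} deg")  # 30 cm aperture
```

With these inputs the sketch reproduces the roughly 15 cm, 14 deg, and 0.37 deg figures reported in the text for the range, the eight-channel MIMO array, and a single 30 cm burst, respectively.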

It is now useful to go deeper into the signal model and in SAR processing (also called SAR focusing). Without loss of generality, we assume that the vehicle travels in a rectilinear trajectory along

x. We remark that this is not a necessary condition for SAR imaging to work, but it simplifies the following derivations. The car travels with a velocity equal to

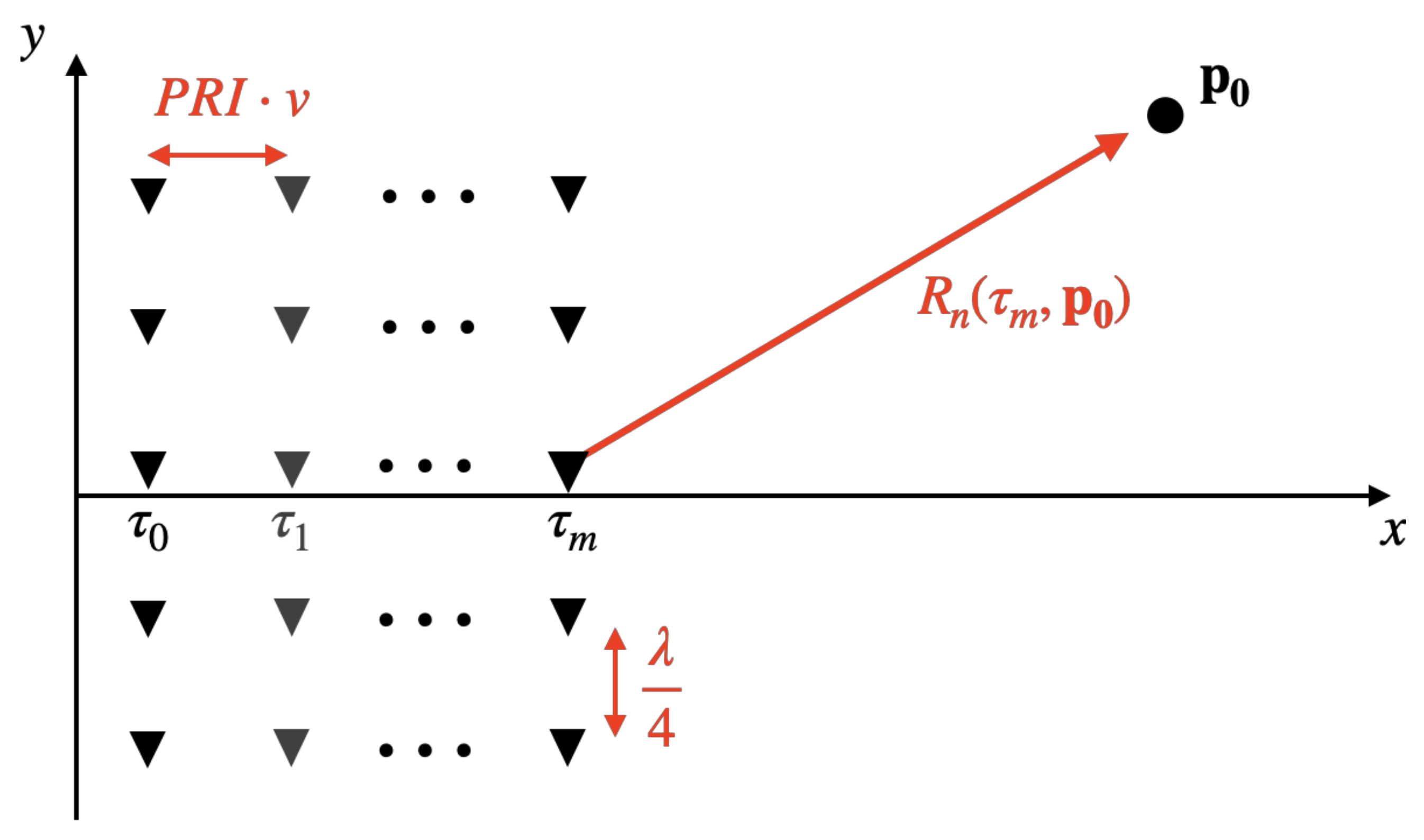

and the MIMO radar transmits a linearly frequency modulated chirp every Pulse Repetition Interval (PRI) second. Given the velocity, the radar system adapts its PRI in order to mantain a spatial sempling distance of [

16]

where

is the number of MIMO channels. A visual representation is given in

Figure 2.

After the reception of the echo, the signal is range compressed. The model of the range-compressed signal is [17]

$$s_{rc}(r,\tau;n) = \operatorname{sinc}\left[\frac{2B}{c}\left(r - R_n(\tau;\mathbf{x}_0)\right)\right]\exp\left(-j\frac{4\pi}{\lambda}R_n(\tau;\mathbf{x}_0)\right) \tag{5}$$

where r is the slant range; τ is the slow time, sampled every PRI seconds; n is the index of the virtual element; $\mathbf{x}_0$ is the geometrical position of the target; and $R_n(\tau;\mathbf{x}_0)$ is the distance from the n-th virtual element to the target at time τ.

Notice that in Equation (5), we have neglected an amplitude factor and all the bistatic effects (i.e., we are considering an ideal monostatic equivalent of the MIMO TX/RX scheme).

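We can now exploit a set of samples to form a SAR image by using the simple yet effective time domain back projection (TDBP) algorithm [16]:

$$I(\mathbf{p}) = \sum_{\tau}\sum_{n=1}^{N} s_{rc}\left(R_n(\tau;\mathbf{p}),\tau;n\right)\exp\left(+j\frac{4\pi}{\lambda}R_n(\tau;\mathbf{p})\right) \tag{6}$$

where $I(\mathbf{p})$ is the SAR image at pixel $\mathbf{p}$, and the expression $s_{rc}\left(R_n(\tau;\mathbf{p}),\tau;n\right)$ indicates the range-compressed signal evaluated at the range $R_n(\tau;\mathbf{p})$, which is the distance between the n-th virtual phase center and the generic pixel $\mathbf{p}$ at time τ.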

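We highlight that Equation (6) can be split into two separate summations. First, we form a set of low-resolution snapshots by coherently summing over the channels:

$$I_\tau(\mathbf{p}) = \sum_{n=1}^{N} s_{rc}\left(R_n(\tau;\mathbf{p}),\tau;n\right)\exp\left(+j\frac{4\pi}{\lambda}R_n(\tau;\mathbf{p})\right) \tag{7}$$

where $I_\tau(\mathbf{p})$ is the low-resolution image generated by the MIMO radar at time τ. We then sum over all slow times, obtaining the final SAR image:

$$I(\mathbf{p}) = \sum_{\tau} I_\tau(\mathbf{p}) \tag{8}$$

The two-step summation opens the possibility for advanced and efficient focusing schemes [4] and for autofocusing algorithms that refine the vehicle trajectory [3,18].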
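The two-step TDBP of Equations (6)-(8) can be sketched with NumPy on a simulated point target. This is a minimal illustration under stated assumptions, not the authors' implementation: the ideal sinc-times-phase model of Equation (5), an assumed bandwidth of 1 GHz, eight virtual elements spaced λ/4 along y, 256 hypothetical slow-time positions spaced λ/4 along x, and hypothetical helper names (`distance`, `simulate`, `tdbp`).

```python
import numpy as np

C = 299_792_458.0                   # speed of light [m/s]
LAM = C / 77e9                      # wavelength at 77 GHz [m]
B = 1e9                             # assumed chirp bandwidth [Hz]
N_VIRT = 8                          # virtual channels, array along y
Y_VIRT = (np.arange(N_VIRT) - (N_VIRT - 1) / 2) * LAM / 4   # lambda/4 spacing
X_SLOW = (np.arange(256) - 128) * LAM / 4                   # assumed along-track samples
R_AXIS = np.arange(4.0, 8.0, C / (2 * B) / 16)              # oversampled range axis [m]


def distance(xs, ys, px, py):
    """Distance between a virtual phase center (xs, ys) and point(s) (px, py)."""
    return np.hypot(px - xs, py - ys)


def simulate(target):
    """Ideal range-compressed data s[tau, n, r] for a single point target (Eq. (5))."""
    data = np.empty((X_SLOW.size, N_VIRT, R_AXIS.size), dtype=complex)
    for t, xs in enumerate(X_SLOW):
        for n, ys in enumerate(Y_VIRT):
            R = distance(xs, ys, *target)
            data[t, n] = np.sinc(2 * B / C * (R_AXIS - R)) * np.exp(-4j * np.pi * R / LAM)
    return data


def tdbp(data, px, py):
    """Two-step TDBP: sum over channels (snapshot, Eq. (7)), then over slow time (Eq. (8))."""
    image = np.zeros(px.shape, dtype=complex)
    for t, xs in enumerate(X_SLOW):
        snapshot = np.zeros(px.shape, dtype=complex)        # low-resolution MIMO image
        for n, ys in enumerate(Y_VIRT):
            R = distance(xs, ys, px, py)
            line = data[t, n]
            # linear interpolation of the complex range-compressed line at range R
            s = np.interp(R, R_AXIS, line.real) + 1j * np.interp(R, R_AXIS, line.imag)
            snapshot += s * np.exp(4j * np.pi * R / LAM)    # phase compensation
        image += snapshot                                   # coherent sum over slow time
    return image


px, py = np.meshgrid(np.linspace(4.8, 5.2, 41), np.linspace(2.8, 3.2, 41), indexing="ij")
img = tdbp(simulate((5.0, 3.0)), px, py)
i, j = np.unravel_index(np.argmax(np.abs(img)), img.shape)
print(px[i, j], py[i, j])   # peak location on the 1 cm grid
```

Keeping the per-snapshot image explicit mirrors the split of Equation (6): each `snapshot` is the coarse MIMO image $I_\tau$, which is where an autofocus step could correct the phase center positions before the coherent sum.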

3. Wavenumbers in SAR Images

In this section, we recall the concept of wavenumber, which is widely used in geophysics, radar, and SAR imaging. Any SAR experiment can be described in two conjugate domains: the direct domain, usually referred to as the time or space domain, and the frequency or wavenumber domain. This representation is useful since any SAR experiment can be regarded as the illumination of the spatial frequency components of a target, as extensively explained in [19].

If we focus for a moment on a simple 3D Cartesian reference system, the transformation from the spatial domain to the wavenumber domain can be performed by a 3D Fourier transform, that is, by projecting the spatial signal onto the basis functions:

$$e^{j\varphi(\mathbf{x})} = e^{j\mathbf{k}^T\mathbf{x}}, \qquad \mathbf{x} = [x, y, z]^T \tag{9}$$

where $\mathbf{k} = [k_x, k_y, k_z]^T$ is the so-called wavevector, and its components are called wavenumbers. The wavenumbers can be computed by taking the partial derivative of the phase $\varphi(\mathbf{x})$ with respect to each spatial variable. Using the gradient notation:

$$\mathbf{k} = \nabla\varphi(\mathbf{x}) \tag{10}$$

where ∇ is the gradient operator and $\varphi(\mathbf{x})$ is the phase in Equation (9). The transmitted signal can be represented in the spatial domain (in the far-field approximation) as a plane wave illuminating the target. The direction of propagation of such a wave is defined by the wavevector $\mathbf{k}$, which is parallel to the line joining the sensor and the target. It follows that a movement of the sensor corresponds to a change in the illuminated wavenumber or, in other words, to an expansion of the bandwidth of the signal in the wavenumber domain.
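The statement that the wavevector is the gradient of the phase and points along the sensor-target line can be verified numerically. The sketch below assumes the two-way phase $4\pi R/\lambda$ at 77 GHz and a hypothetical 2D geometry; `two_way_phase` and `wavevector` are illustrative helpers that take a central finite-difference gradient with respect to the target coordinates.

```python
import numpy as np

LAM = 299_792_458.0 / 77e9  # wavelength at 77 GHz [m]


def two_way_phase(target, sensor):
    """Two-way propagation phase 4*pi*R/lambda between sensor and target."""
    return 4 * np.pi / LAM * np.linalg.norm(np.asarray(target) - np.asarray(sensor))


def wavevector(target, sensor, h=1e-6):
    """k = grad(phase) w.r.t. the target coordinates, by central differences."""
    target = np.asarray(target, dtype=float)
    k = np.zeros_like(target)
    for i in range(target.size):
        step = np.zeros_like(target)
        step[i] = h
        k[i] = (two_way_phase(target + step, sensor) - two_way_phase(target - step, sensor)) / (2 * h)
    return k


target = np.array([5.0, 3.0])
for xs in (0.0, 0.5):                        # two sensor positions along the trajectory
    k = wavevector(target, np.array([xs, 0.0]))
    print(xs, k, np.linalg.norm(k) * LAM / (4 * np.pi))   # normalized |k| stays ~1
```

The magnitude of $\mathbf{k}$ stays at $4\pi/\lambda$, while its direction rotates as the sensor moves: this rotation is what sweeps the illuminated wavenumbers and builds up the synthetic bandwidth.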

To clarify the need for a custom reference frame in place of a Cartesian or polar one, we must highlight a few characteristics of the signal in the wavenumber domain, such as the dependence of the illuminated wavenumber on both the position of the sensor and that of the target in the scene. This issue is discussed in more detail in the next section.

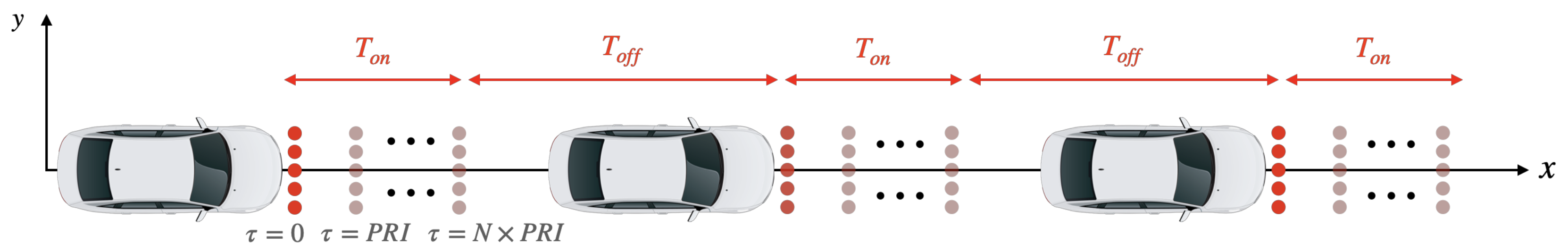

4. Imaging with Bursted Data

This section contains the core of this work. First, we describe the burst acquisition mode, analyze the spectral coverage of the most common reference systems, and derive a proper reference system in which the spectral space-invariance property holds. Second, the residual motion compensation and burst phase calibration procedures are provided and, finally, the CLEAN algorithm is described. A visual representation of our workflow is given in Figure 3.

4.1. Burst Data Acquisitions

As described in Section 3, the relative position of radar and target determines the illuminated wavenumbers and thus the SAR angular resolution. Usually, the processed synthetic aperture is uniformly sampled; therefore, the coverage in the wavenumber domain is uniform (without “holes”).

In some cases, and in particular in automotive radar, SAR acquisitions may be performed on a non-continuous basis. The reason lies in the regulations governing the coexistence of different telecommunication devices [20] and in battery power saving. In practice, this acquisition mode, called burst mode, consists of periodically turning the radar on and off along the traveled trajectory. Figure 4 illustrates the concept.

The result of this non-continuous acquisition is that some wavenumbers are not covered in the spatial frequency domain, generating gaps in the spectrum. In the focused image, these gaps manifest themselves as artifacts commonly known as grating lobes. We recall once again that, in a Cartesian reference frame, the positions of the covered areas (from now on called tiles) and the positions of the gaps are a function of both the radar position and the target position. For an artificial scene composed of a single target, the gaps are visible in the spectrum, but this is not the case if the scene is composed of many targets distributed anywhere in the field of view.
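The link between spectral gaps and grating lobes can be illustrated with a 1D azimuth response. The sketch below is an assumption-laden toy: it uses a linear phase model $\exp(j4\pi x u/\lambda)$ in a direction-cosine offset u, a hypothetical burst period of 0.6 m with 0.3 m on-time, three bursts, and λ/4 along-track sampling; `aperture` and `psf` are illustrative names. It compares the response at the predicted grating-lobe position $u = \lambda/(2P)$ for a burst aperture and for a continuous aperture of the same extent.

```python
import numpy as np

LAM = 299_792_458.0 / 77e9   # wavelength at 77 GHz [m]
DX = LAM / 4                 # assumed along-track sampling step [m]


def aperture(period, on_length, n_bursts):
    """Along-track positions x where the burst indicator w(x) equals 1."""
    x = np.arange(0.0, period * n_bursts, DX)
    w = (x % period) < on_length          # indicator function: radar on/off
    return x[w]


def psf(x, u):
    """Normalized azimuth response |sum_x exp(j 4 pi x u / lambda)| / (number of samples)."""
    phase = 4 * np.pi / LAM * np.outer(u, x)
    return np.abs(np.exp(1j * phase).sum(axis=1)) / x.size


P, ON = 0.6, 0.3                           # hypothetical burst period and on-time [m]
u_gl = np.array([LAM / (2 * P)])           # predicted grating-lobe position
print("burst:     ", psf(aperture(P, ON, 3), u_gl)[0])
print("continuous:", psf(aperture(P, P, 3), u_gl)[0])
```

With a 50% duty cycle, the burst aperture keeps a response of about 2/π (roughly -4 dB) at the grating-lobe position, whereas the continuous aperture has a null there: the periodic holes in the sampled aperture, i.e., the gaps in the spectrum, are what create the replica.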

The need therefore arises for a suitable data representation that provides a common spectral description for all the targets in the image, so that standard signal processing can be applied. In the following section, a mathematical framework is developed to overcome this problem.

4.2. 2D Tessellation

In this subsection, we analyze the spectral behavior of the most common reference systems: we start from the simplest one, the Cartesian; then, we proceed with a frequency description of the signal in polar coordinates and, finally, in a custom reference frame appropriate for our needs.

4.2.1. Cartesian Reference System

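Given a 2D Cartesian reference system as in Figure 5 and a target located in $(x_0, y_0)$, the range equation for a given time instant τ is

$$R(\tau) = \sqrt{\left(x_0 - x_s(\tau)\right)^2 + \left(y_0 - y_s(\tau)\right)^2} \tag{11}$$

where $x_s(\tau)$ is the position of the radar along the x axis at time τ; $y_s(\tau)$ is the position of the radar along the y axis at time τ; and $(x_0, y_0)$ are the coordinates of the target.

The spectral contributions $(k_x, k_y)$ can be computed as the partial derivatives of the two-way phase $\frac{4\pi}{\lambda}R(\tau)$ with respect to each variable:

$$k_x = \frac{4\pi}{\lambda}\frac{\partial R}{\partial x_0} = \frac{4\pi}{\lambda}\frac{x_0 - x_s(\tau)}{R(\tau)} = \frac{4\pi}{\lambda}\cos\psi \tag{12}$$

$$k_y = \frac{4\pi}{\lambda}\frac{\partial R}{\partial y_0} = \frac{4\pi}{\lambda}\frac{y_0 - y_s(\tau)}{R(\tau)} = \frac{4\pi}{\lambda}\sin\psi \tag{13}$$

where ψ is the look angle from the boresight of the radar to the target. The above equations are clearly space variant, i.e., they depend on both the position of the target $(x_0, y_0)$ and the position of the radar.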

4.2.2. The Polar Reference System

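Another common reference system is the polar one, depicted in Figure 6. The polar and the Cartesian systems are related by

$$x_0 = r\cos\theta, \qquad y_0 = r\sin\theta \tag{14}$$

where r is the range and θ the angle.

Thanks to the chain rule, it is rather easy to compute the spectral contributions $(k_r, k_\theta)$ in this reference system as

$$k_r = \frac{4\pi}{\lambda}\frac{\partial R}{\partial r} = \frac{4\pi}{\lambda}\cos(\psi - \theta), \qquad k_\theta = \frac{4\pi}{\lambda}\frac{\partial R}{\partial \theta} = \frac{4\pi}{\lambda}\,r\sin(\psi - \theta) \tag{15}$$

where ψ is the angle between the sensor and the target and θ is the angular position of the target in the polar reference frame. If we place the origin of the polar grid at the center of the aperture and assume a conveniently small aperture, where ψ ≈ θ, we can expand Equation (15) in a Taylor series around the point ψ = θ, obtaining

$$k_r \simeq \frac{4\pi}{\lambda}\left[1 - \frac{(\psi - \theta)^2}{2}\right] \simeq \frac{4\pi}{\lambda} \tag{16}$$

which can be considered almost constant for short enough apertures. For the angular wavenumber, instead, we have:

$$k_\theta \simeq \frac{4\pi}{\lambda}\,r\,(\psi - \theta) \tag{17}$$

which is not constant. Since the dependence on the position of the radar is not explicit, let us use relation (14) to express the differential dθ as a function of $dx_0$ and $dy_0$:

$$d\theta = \frac{\cos\theta\,dy_0 - \sin\theta\,dx_0}{r} \tag{18}$$

In order to refer Equation (17) explicitly to the radar position, we can regard the differentials $dx_0$ and $dy_0$ as the displacement of the sensor position along the trajectory, with opposite sign (moving the sensor by $(x_s, y_s)$ is equivalent to moving the target by $(-x_s, -y_s)$), so that ψ − θ ≃ dθ and

$$k_\theta \simeq \frac{4\pi}{\lambda}\left(x_s\sin\theta - y_s\cos\theta\right) \tag{19}$$

which depends on both the sensor position and the position of the target. Again, the polar reference frame is space variant and therefore not suitable for our purpose.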

4.2.3. The (r,e) Reference System

In this subsection, we develop a reference system such that the illuminated wavenumbers depend only on the position of the sensor and not on the position of the targets in the scene. We call this system (r, e), since one variable remains the range (which is space invariant) and the other is a transformation e(θ) of the angle θ, chosen such that the resulting wavenumber is independent of the target position. Using the exact expression of $k_\theta$ in Equation (15) and the identity $R\sin(\psi - \theta) = x_s\sin\theta - y_s\cos\theta$, the chain rule gives

$$k_e = k_\theta\frac{\partial\theta}{\partial e} = \frac{4\pi}{\lambda}\frac{r}{R}\left(x_s\sin\theta - y_s\cos\theta\right)\frac{\partial\theta}{\partial e} \tag{20}$$

There is no particular solution e(θ) allowing a total independence of $k_e$ from the target position. However, if we assume that the radar trajectory is along only one direction, let us say x, we can discard the other term by forcing $y_s = 0$. Therefore, (20) becomes

$$k_e = \frac{4\pi}{\lambda}\frac{r}{R}\,x_s\sin\theta\,\frac{\partial\theta}{\partial e} \tag{21}$$

It follows straightforwardly that, in order to have space invariance, the ratio ∂θ/∂e must be equal to 1/sin θ, and therefore:

$$\frac{\partial\theta}{\partial e} = \frac{1}{\sin\theta} \tag{22}$$

from which we can derive the change of variable needed:

$$de = \sin\theta\,d\theta \tag{23}$$

$$e = 1 - \cos\theta \tag{24}$$

The reference system built upon this assumption is ambiguous at boresight, since the function cos θ is even. One possible solution is to process the two sides of the scene, left and right, separately. However, this solution can cause some problems when merging the two images. Another possible solution is to modify Equation (22), taking the ambiguity into consideration. We then consider

$$\frac{\partial\theta}{\partial e} = \frac{1}{|\sin\theta|} \tag{25}$$

which leads to

$$e = \operatorname{sign}(\theta)\left(1 - \cos\theta\right) \tag{26}$$

Thanks to Equation (26), it is possible to compute the spectral coverage in the (r, e) domain using the chain rule:

$$k_e = \frac{\partial\varphi}{\partial\theta}\frac{\partial\theta}{\partial e} = \frac{4\pi}{\lambda}\frac{r}{R}\,x_s\sin\theta\,\frac{\partial\theta}{\partial e} \tag{27}$$

Plugging Equation (25) into Equation (27), one gets

$$k_e = \frac{4\pi}{\lambda}\frac{r}{R}\,x_s\operatorname{sign}(\theta) \tag{28}$$

The spectral descriptor has a sudden change of sign around θ = 0. This can become an issue for the space invariance property that we require from our custom reference system. In particular, due to the dependence on sign(θ) in Equation (28), the spatial spectral descriptor loses its space invariance property. For instance, let us assume a target at a positive angle θ > 0 and only positive values of the radar position $x_s$. The covered tile in the spatial spectral domain will be purely positive. On the other hand, for a target at a negative angle θ < 0, only negative wavenumbers will be covered, which is not the space invariance property we would like to have. Therefore, in order to make the spectral contributions of all the targets in the scene sum up in the same spectral portion, the synthetic aperture must be centered around $x_s = 0$; in addition, an odd number of bursts must be processed. This exploits the odd symmetry of the spatial spectral descriptor, so that the spatial spectral components of all targets sum up in the same spectral portion.
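The space invariance claimed for the (r, e) frame can be checked numerically. The sketch below assumes the change of variable e = sign(θ)(1 - cos θ) (one mapping satisfying de = |sin θ| dθ), the two-way phase $4\pi R/\lambda$, a radar at $(x_s, 0)$, and a far target (r = 50 m, so that r/R ≈ 1); the helper names are hypothetical. It differentiates the phase with respect to e at fixed range for targets at different angles.

```python
import numpy as np

LAM = 299_792_458.0 / 77e9  # wavelength at 77 GHz [m]


def theta_of_e(e):
    """Inverse of the assumed mapping e = sign(theta) * (1 - cos(theta))."""
    return np.sign(e) * np.arccos(1.0 - np.abs(e))


def two_way_phase(r, e, xs):
    """4*pi*R/lambda for a target at polar (r, theta(e)) and a radar at (xs, 0)."""
    th = theta_of_e(e)
    return 4 * np.pi / LAM * np.hypot(r * np.cos(th) - xs, r * np.sin(th))


def k_e(r, e, xs, h=1e-7):
    """Wavenumber along e (range r fixed) by central finite differences."""
    return (two_way_phase(r, e + h, xs) - two_way_phase(r, e - h, xs)) / (2 * h)


# Targets at different angles, same radar position: k_e only flips sign with theta
k0 = 4 * np.pi / LAM * 0.1
for e in (-0.5, -0.2, 0.05, 0.2, 0.5):
    ratio = k_e(50.0, e, 0.1) / k0
    print(f"e = {e:+.2f}  theta = {np.degrees(theta_of_e(e)):+6.1f} deg  k_e/k0 = {ratio:+.4f}")
```

For angles from about 18 deg to 60 deg, the ratio $k_e/(4\pi x_s/\lambda)$ stays within a few parts per thousand of ±1, the residual being the r/R factor. Only the sign of θ matters, which is why a centered aperture is needed for all targets to share the same tiles.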

4.3. Constant Spectrum Approximation and Validity Limits

In order to achieve a spatial spectral invariance property in Equation (28), we consider as a first approximation

$$\frac{r}{R} \simeq 1 \tag{29}$$

therefore, we define a validity region for this approximation, where the range r is much larger than the total synthetic aperture length. In addition, in order to take into account the burst acquisition mode, we introduce an indicator function $w(x_s)$, which takes the value 1 if the radar is acquiring data and 0 otherwise.

Taking into account the above considerations, Equation (28) becomes

$$k_e(x_s) \simeq \frac{4\pi}{\lambda}\,x_s\operatorname{sign}(\theta), \qquad \text{for } x_s \text{ such that } w(x_s) = 1 \tag{30}$$

Using Equation (30), the spatial spectral bandwidth and the central wavenumber can be computed in a straightforward manner. The spectral bandwidth can be computed by considering the displacement of the vehicle within one burst:

$$\Delta k_e^{(b)} = \frac{4\pi}{\lambda}\Delta x^{(b)}, \qquad \Delta k_e^{(\mathrm{tot})} = \frac{4\pi}{\lambda}\Delta x^{(\mathrm{tot})} \tag{31}$$

where $\Delta x^{(b)}$ is the displacement along the direction of motion within one burst, and the superscript (tot) refers to the total synthetic aperture. In addition, the previous equation can be used to state the resolution in the (r, e) reference system; indeed:

$$\rho_e = \frac{2\pi}{\Delta k_e^{(\mathrm{tot})}} = \frac{\lambda}{2\,\Delta x^{(\mathrm{tot})}} \tag{32}$$

where $\rho_e$ is the resolution along the e variable. Similarly, it is possible to define the central wavenumber by using the midpoint $x_c$ of the synthetic aperture, as

$$k_{e,c} = \frac{4\pi}{\lambda}\,x_c\operatorname{sign}(\theta) \tag{33}$$

We refer to the above equations as the constant spectrum approximation (CSA). The CSA has some limits, in particular outside the validity region defined by Equation (29), i.e., in the near-range zone, where the range becomes comparable to the synthetic aperture length. However, within its validity region, the CSA makes it possible to accurately predict the image spectral components according to the two laws expressed in Equations (31) and (33).

4.4. Residual Motion Compensation and Phase Calibration

This subsection describes at a high level the autofocus and calibration procedures performed on each single burst after focusing. We remark that these procedures are mandatory to remove the residual trajectory error and to be able to jointly exploit all the bursts, thus achieving extremely fine resolutions.

4.4.1. Residual Motion Compensation

An important aspect of accurate SAR imaging is the knowledge of the platform trajectory, which should be known with an accuracy of a fraction of a wavelength [10]. A threshold can be set by simply requiring that the velocity error be smaller than the velocity resolution of the system:

$$\Delta v < \frac{\lambda}{2T_s} \tag{34}$$

where $T_s$ is the synthetic aperture time, i.e., the time taken by the vehicle to travel along the synthetic aperture. An error larger than $\lambda/(2T_s)$ results in a mislocalization of the target by more than a resolution cell. Small wavelengths and long integration times call for a very high accuracy in the knowledge of the trajectory, which is typically not achieved by standard navigation units. This calls for a dedicated procedure to refine the platform motion directly from radar data. The residual motion compensation procedure used in this work has been fully developed and explained in [18]. In this paper, we briefly recall the main concepts.

The availability of a multichannel device greatly simplifies the task of residual velocity estimation, which can be carried out with sufficient accuracy by analyzing the Doppler frequency at different off-boresight angles; see, for example, [3]. The most common model in the literature is the constant velocity error. This model is generally valid over short synthetic apertures [3,21,22,23]. This hypothesis leads to a simple expression of the residual Doppler frequency over each target in the scene:

$$f_D(\psi) = \frac{2}{\lambda}v_r(\psi) = \frac{2}{\lambda}\left(\Delta v_x\cos\psi + \Delta v_y\sin\psi\right) \tag{35}$$

where $v_r(\psi)$ is the residual radial velocity as seen by a target at angular position ψ, $\Delta v_x$ is the residual velocity along x, while $\Delta v_y$ is the one along y. The parameters of interest ($\Delta v_x$ and $\Delta v_y$) can be estimated by detecting a set of N fixed Ground Control Points (GCPs) in the scene and by retrieving the residual Doppler frequency over each of them. In this way, we obtain a linear system of equations:

$$\begin{bmatrix} f_D(\psi_1) \\ \vdots \\ f_D(\psi_N) \end{bmatrix} = \frac{2}{\lambda}\begin{bmatrix} \cos\psi_1 & \sin\psi_1 \\ \vdots & \vdots \\ \cos\psi_N & \sin\psi_N \end{bmatrix}\begin{bmatrix} \Delta v_x \\ \Delta v_y \end{bmatrix} \tag{36}$$

Notice that the measurement vector containing all the residual Doppler frequencies can be obtained by a simple Fourier transform along slow time over each detected GCP in the scene. This is possible thanks to the assumption of a constant velocity error, which in turn yields a constant residual Doppler frequency. The design matrix is built upon the knowledge of the off-boresight position of each GCP. In this setting, a multichannel device is essential to provide a priori knowledge of the angular positions of the GCPs. The system of Equation (36) can be inverted using a simple least-squares (LS) approach, obtaining an estimate of the residual velocities.
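The LS inversion of the residual-Doppler system can be sketched with `numpy.linalg.lstsq`. The model rows $(2/\lambda)[\cos\psi_i, \sin\psi_i]$ follow the constant-velocity-error form of the Doppler equation above; the sign convention, the six GCP angles, the true residual velocity of (5, -2) cm/s, and the 1 Hz measurement noise are all hypothetical values chosen for illustration, and the function names are not from the source.

```python
import numpy as np

LAM = 299_792_458.0 / 77e9  # wavelength at 77 GHz [m]


def design_matrix(psi):
    """Rows (2/lambda) [cos(psi_i), sin(psi_i)] of the assumed residual-Doppler model."""
    psi = np.asarray(psi, dtype=float)
    return 2.0 / LAM * np.column_stack([np.cos(psi), np.sin(psi)])


def estimate_residual_velocity(f_doppler, psi):
    """Least-squares solution of f = A [dv_x, dv_y]^T over all detected GCPs."""
    sol, *_ = np.linalg.lstsq(design_matrix(psi), np.asarray(f_doppler, dtype=float), rcond=None)
    return sol


# Hypothetical example: 6 GCPs and a residual velocity error of (5, -2) cm/s
psi = np.deg2rad([-40.0, -15.0, 5.0, 20.0, 45.0, 60.0])
true_dv = np.array([0.05, -0.02])
rng = np.random.default_rng(0)
f_meas = design_matrix(psi) @ true_dv + rng.normal(0.0, 1.0, psi.size)  # 1 Hz noise
print(estimate_residual_velocity(f_meas, psi))
```

The spread of GCP angles matters: if all GCPs sat at similar ψ, the two columns of the design matrix would be nearly collinear and $\Delta v_y$ would be poorly determined, which is why the angular information provided by the multichannel device is essential.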

4.4.2. Phase Calibration

Once the residual motion compensation procedure has been completed, each burst is properly focused. A further calibration step is now necessary to compensate for the displacement between the apertures of the single bursts. Let us assume that we have a set of M SAR images that are not aligned with each other. The displacement can be modeled as a phase shift between bursts as

$$I_m(\mathbf{x}) \rightarrow I_m(\mathbf{x})\,e^{j\mathbf{k}(\mathbf{x})^T\Delta\mathbf{x}_m} \tag{37}$$

where $\mathbf{k}(\mathbf{x})$ is the wavevector associated with the spatial position $\mathbf{x}$ and $\Delta\mathbf{x}_m$ is the residual displacement between two bursts. In order to explain the phase calibration procedure, let us consider M = 2, i.e., a pair of SAR images, one as the master image and the other as the slave. Let us also consider a set of GCPs shared between the two; more precisely, we refer to $a^{(m)}_i$ as the complex amplitude of the i-th GCP of the master image, and to $a^{(s)}_i$ as the complex amplitude of the i-th GCP of the slave one. The procedure consists in maximizing the following expression with respect to $\Delta\mathbf{x}$:

$$J(\Delta\mathbf{x}) = \left|\sum_i a^{(m)}_i\left(a^{(s)}_i\right)^{*}e^{j\mathbf{k}_i^T\Delta\mathbf{x}}\right| \tag{38}$$

where $\mathbf{k}_i$ is the wavevector associated with the spatial position $\mathbf{x}_i$ of the i-th GCP.

In practice, this procedure consists of an exhaustive search carried out for each dimension of the images. Once the optimum value of $\Delta\mathbf{x}$ has been found, a phase field is applied to the slave image in order to compensate for the residual phase shift. Finally, the final image is computed as the sum of the two images.
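A one-dimensional version of the exhaustive search can be sketched as follows. The assumptions are: the slave GCP amplitudes equal the master ones times $e^{jk_i\Delta x}$ (no common absolute phase offset), the along-x wavenumbers are $k_i = (4\pi/\lambda)\cos\psi_i$ for nine hypothetical GCPs between -60 deg and 60 deg, the true shift is 0.3 mm, and the search grid spans ±λ/2. `calibration_cost` and `calibrate` are illustrative names; the magnitude-based cost follows the maximization described above.

```python
import numpy as np

LAM = 299_792_458.0 / 77e9  # wavelength at 77 GHz [m]


def calibration_cost(a_master, a_slave, k, shifts):
    """|sum_i a_m,i conj(a_s,i) exp(j k_i d)| for every candidate shift d."""
    cross = a_master * np.conj(a_slave)                  # one term per GCP
    return np.abs(np.exp(1j * np.outer(shifts, k)) @ cross)


def calibrate(a_master, a_slave, k, shifts):
    """Exhaustive 1D search of the best shift, then phase correction of the slave."""
    d_hat = shifts[np.argmax(calibration_cost(a_master, a_slave, k, shifts))]
    return d_hat, a_slave * np.exp(-1j * k * d_hat)


rng = np.random.default_rng(1)
psi = np.deg2rad(np.linspace(-60.0, 60.0, 9))            # hypothetical GCP angles
k = 4 * np.pi / LAM * np.cos(psi)                         # along-x wavenumber of each GCP
a_m = rng.normal(size=9) + 1j * rng.normal(size=9)        # master GCP amplitudes
d_true = 3e-4                                             # hypothetical residual shift [m]
a_s = a_m * np.exp(1j * k * d_true)                       # slave: shifted master
shifts = np.linspace(-LAM / 2, LAM / 2, 2001)
d_hat, a_corr = calibrate(a_m, a_s, k, shifts)
print(d_hat)
```

Because the cost is a magnitude, any phase common to all GCPs is ignored; the spread of $k_i$ across GCPs is what makes the maximum unique within the search window. A 2D search would repeat the same idea over both image dimensions.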

4.5. The CLEAN Algorithm

The CLEAN algorithm was first introduced for removing grating-lobe-induced artifacts in astronomy [7] and is also widely adopted in radar imaging [11,12,13,14]. It consists in successively selecting bright targets and removing their sidelobe responses by subtracting the impulse response of each target individually, in an iterative manner. Our use case belongs to a class of techniques called compressive sensing, which aim at reconstructing a signal from an underdetermined system of equations; such techniques are commonly used to tackle unevenly sampled, periodically recurring, gapped, and arbitrarily sparse data problems, which are common in SAR data processing and in astronomy [7,8,11,12,13,14,24,25]. We chose CLEAN as the target estimation algorithm for our work due to its low computational cost, which makes it suitable for the real-time implementation required by the automotive application. This algorithm works under the assumption that the sources to be estimated are impulsive. Generally speaking, given a generic discrete signal s[i], CLEAN performs a peak estimation, i.e., it selects the maximum value of s[i] as a signal source and then returns a new signal $\hat{s}[i]$ made up of the highest peaks of s[i].

More precisely, let us define a generic discrete signal s[i] of N elements and its sparse spectrum x[i] of N elements, and assume that there exists an impulse response filter h[i], known a priori, that perfectly describes the unitary impulse response of the system. The signal s[i] can be described as the sum of scaled impulse responses, namely:

$$s[i] = \sum_{k} x[k]\,h[i - k] \tag{39}$$

where x[k] is a complex value describing the amplitude of the signal. The CLEAN algorithm performs a peak estimation by taking the position of the maximum of |s[i]| and its value, as

$$\hat{k} = \arg\max_i |s[i]|, \qquad \hat{x} = s[\hat{k}] \tag{40}$$

where $\hat{k}$ is the position of the maximum and $\hat{x}$ its value. A new impulsive discrete signal is then formed by considering a single peak at the position of the maximum ($\hat{k}$) with the value defined above:

$$\hat{s}[i] = \hat{x}\,\delta[i - \hat{k}] \tag{41}$$

Then, the impulse response of the estimated source is computed by a convolution as

$$y[i] = \left(\hat{s} * h\right)[i] \tag{42}$$

where h[i] is the filter describing the unitary system impulse response. The next step of the CLEAN algorithm involves the computation of an error sequence ε[i], as

$$\varepsilon[i] = s[i] - y[i] \tag{43}$$

After that, the CLEAN procedure is iteratively applied to ε[i] until the stopping criteria are met. In our work, we used a threshold under which the signal is considered pure noise and a maximum number of peaks to be found; as a rule of thumb, 20 targets were selected. The advantage of CLEAN is that it performs both the sidelobe removal and a denoising procedure since, as said before, $\hat{s}[i]$ is an impulsive signal made up of the estimated peaks of s[i].
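The iteration above can be sketched in a few lines of NumPy on a 1D signal. This is an illustrative implementation under stated assumptions, not the authors' code: the point spread function `h` is a hypothetical odd-length, unit-peak response with artificial grating lobes at ±8 samples, the three complex sources are made up, and `numpy.convolve(..., mode="same")` is used so that an impulse at index k yields `h` centered at k.

```python
import numpy as np


def clean(signal, h, threshold, max_peaks):
    """Greedy CLEAN: pick the strongest sample, subtract its scaled PSF, repeat."""
    residual = np.asarray(signal, dtype=complex).copy()
    h = np.asarray(h, dtype=complex)
    h0 = h[h.size // 2]                        # PSF peak (center sample, odd length)
    components = np.zeros_like(residual)       # sparse estimate built from the peaks
    for _ in range(max_peaks):
        k = int(np.argmax(np.abs(residual)))   # peak position, Eq. (40)
        if np.abs(residual[k]) < threshold:    # remaining energy treated as noise
            break
        amp = residual[k] / h0
        impulse = np.zeros_like(residual)      # single-peak signal, Eq. (41)
        impulse[k] = amp
        components[k] += amp
        residual -= np.convolve(impulse, h, mode="same")   # Eqs. (42)-(43)
    return components, residual


# Hypothetical PSF: unit main lobe, small sidelobes, grating lobes at +/-8 samples
h = np.zeros(33)
h[16] = 1.0
h[[8, 24]] = 0.6
h[[15, 17]] = 0.3
x_true = np.zeros(140, dtype=complex)
x_true[40], x_true[70], x_true[100] = 1.0, 0.7j, 0.5
s = np.convolve(x_true, h, mode="same")        # observed signal, Eq. (39)
comp, res = clean(s, h, threshold=1e-6, max_peaks=20)
print(np.flatnonzero(np.abs(comp) > 1e-9))
```

Note that the grating lobes of the strongest source (0.6) are brighter than the weakest true source (0.5): a simple peak detector on `s` would report them as targets, while CLEAN removes them before reaching the weaker source. In the paper the same logic is applied row by row to the image with a maximum number of peaks per row.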

5. Results

In this section, the results of our work are shown. The data were acquired during an acquisition campaign performed with an eight-channel radar called ScanBrick® developed by Aresys® and working at 77 GHz. The ScanBrick® used a bandwidth

GHz, which provides a range resolution of about 15 cm. Table 1 summarizes the system parameters. For a more detailed explanation of the hardware setup of the experiment, see [16].

The radar data are accompanied by IMU data, which provide the location of the vehicle with respect to an external Cartesian reference system. The vehicle is also equipped with a camera mounted on the car rooftop to provide the optical ground truth of the scene.

The radar is mounted in a forward-looking configuration; therefore, the radar boresight points in the direction of motion. The eight-channel radar is implemented with two transmitting antennas and four receiving ones, leading to eight virtual monostatic antennas spaced by λ/4. The angular resolution provided by an eight-channel radar is about 14 deg. Each burst is a portion of the trajectory made of slow time samples. In each burst, the car travels as little as 30 cm, which would lead to a maximum resolution of 0.37 deg (to the right or left of the car).

On the other hand, the whole aperture, including both on and off times (as in Figure 4), is about

m, which leads to an extremely fine angular resolution of

deg: five times finer than the angular resolution provided by one burst.

The radar uses a multiplexing scheme known as time division multiplexing (TDM), which consists of delaying the signal transmission from the second Tx antenna so as not to create interference. The delay is given by the PRI. For this reason, there is a spatial shift between the two virtual antenna clusters: since the vehicle moves at a given velocity and the transmission is delayed, the second antenna cluster is shifted in space. This shift can be computed since both the velocity and the PRI are known by the system. This was not an issue, since the TDBP algorithm we used for focusing the images takes into account the positions of the antenna phase centers and exactly compensates for the antenna shift. Thus, no artifacts due to the TDM arose.

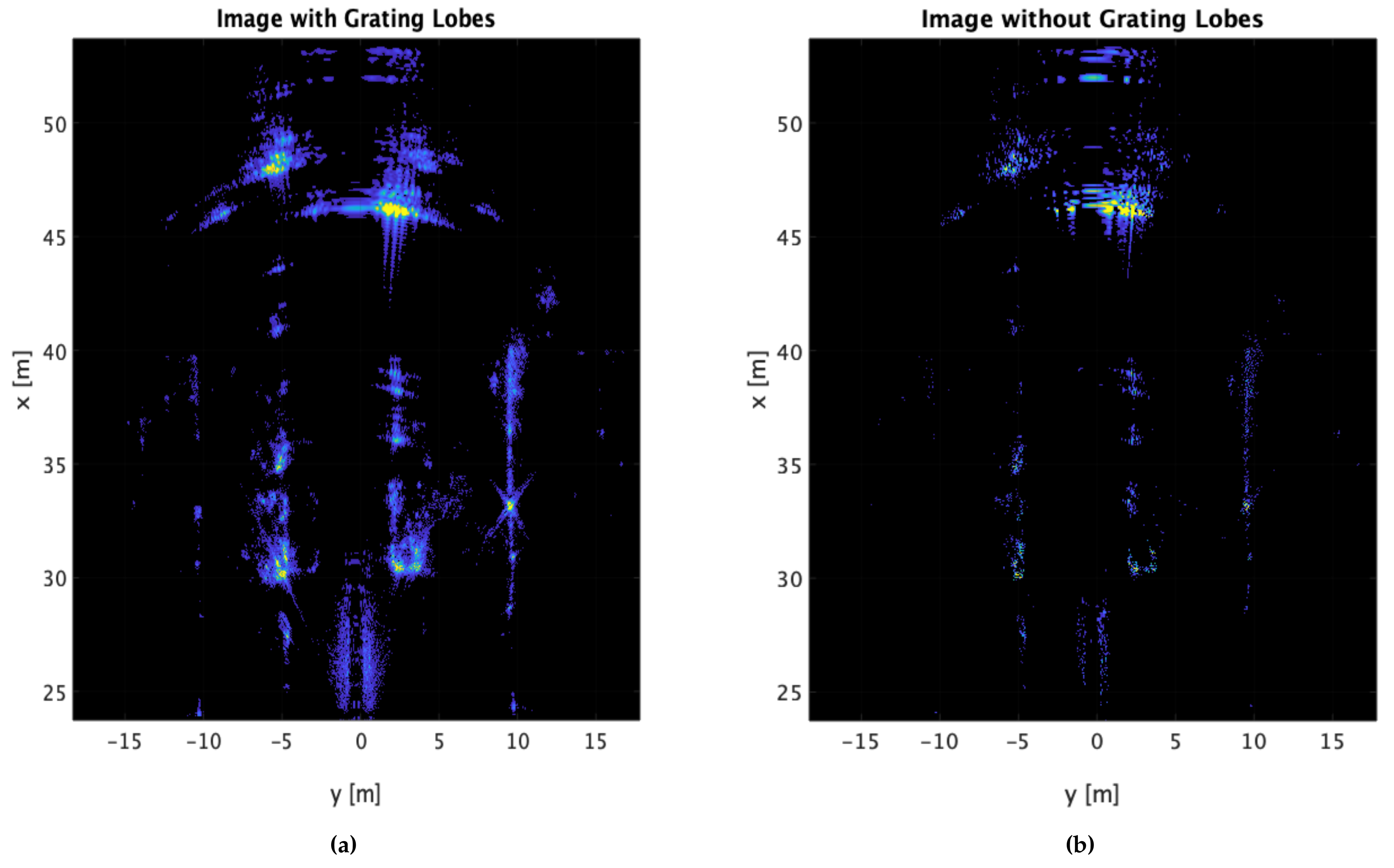

The SAR image after the phase calibration procedure, considering the whole aperture, is depicted in Figure 7a. The image is dense with peaks due to the aberrations caused by the burst acquisition mode. After the grating lobe suppression procedure using

as the maximum number of targets per row, the image shown in Figure 7b preserves the brightest targets. Notice that, thanks to the CLEAN algorithm, a point target reaches one-pixel resolution.

The spectrum of the image corrupted by grating lobes is depicted in Figure 7c. The spatial spectrum is consistent with the theory developed in the previous sections. The spectral estimate, as said before, clearly shows a validity zone where the gaps are observable. The size of each tile is proportional to the length of the synthetic aperture exploited by each burst, as expected from Equation (30). In the near range, instead, the CSA is no longer valid, and the three tiles in the spectrum merge around

.

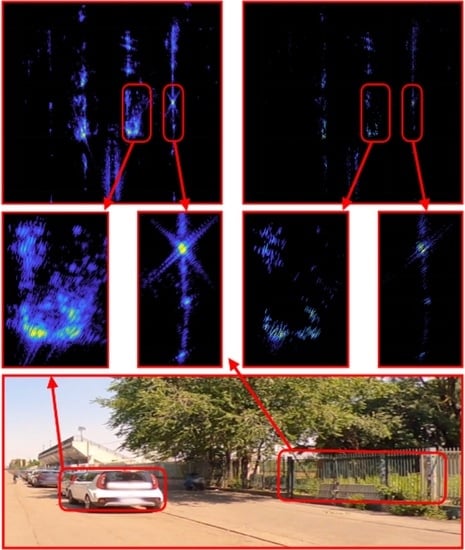

Both Figure 7a and Figure 7b have been projected into the Cartesian domain, in Figure 8a,b, respectively. In the former, it is difficult to distinguish the shapes of the objects due to the presence of grating lobes and the lack of sharp details; in the latter, instead, the details are sharper and the resolution is increased. A rough comparison can be performed with the optical image in order to identify the shapes of the objects in the scene. The optical image is presented in Figure 9.

We would like to draw attention to two particular objects in the images. The gate in Figure 10c has been zoomed in the SAR images and can be found in Figure 10a,b. In the former, the grating lobes can be clearly seen, since their effect is very similar to blurring. In Figure 10b, instead, the bright backscattering points have a sharper shape.

The other notable detail is depicted in Figure 11c, whose SAR equivalents are Figure 11a,b. In the latter, the shape of the parked car is much clearer, since details are recovered and grating lobes are suppressed.

In

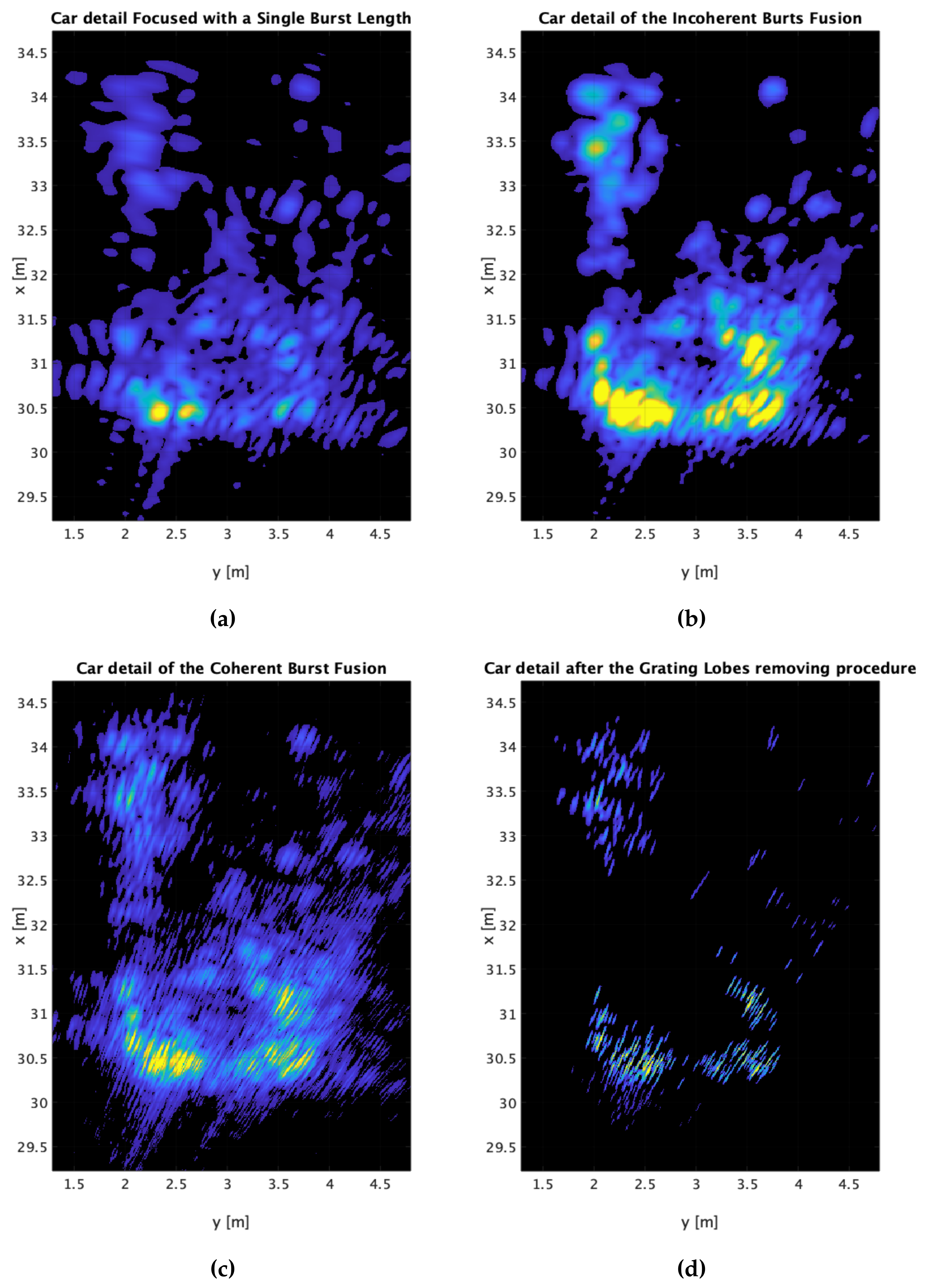

Figure 12, we propose a comparison between different processing with one or multiple bursts.

Figure 12a represents the car focused using a single burst. The resolution is rather poor, but at least no grating lobes are visible. Figure 12b shows the incoherent sum of the three bursts. In this case, noise is reduced by the incoherent averaging, but there is no significant resolution improvement. A better imaging capability can be found in Figure 12c, which is the coherent sum of the three SAR images. Some details start to emerge but, on the other hand, the grating lobe artifacts are clearly visible. The last image, Figure 12d, shows the result of our processing; details are reconstructed and the shape of the car is much more defined. Sidelobes from strongly reflecting surfaces are also suppressed (see the bottom left of the image).

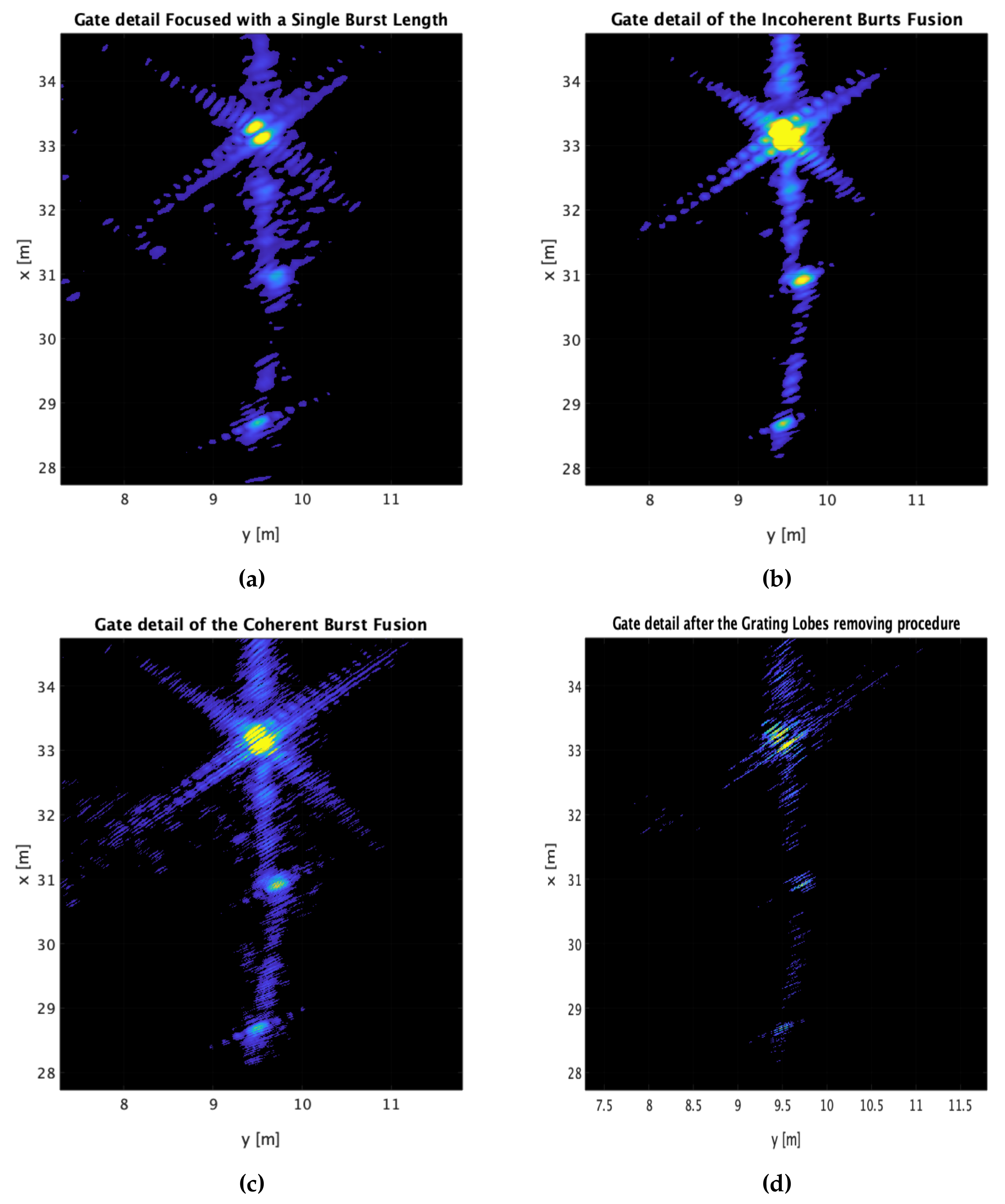

The previous comments also apply to Figure 13. In Figure 13d, the single poles can be spotted in the image as point scattering targets after the grating lobe removal procedure. The information retrieved from the obtained images can be merged with that coming from cameras and LiDARs as a further step towards autonomous driving.

6. Conclusions

In this paper, we have presented an algorithm to remove grating lobes from vehicle-based SAR images. Automotive radars are required by law to operate in a non-continuous manner. While this condition is not an issue in classical automotive radar imaging, it is for automotive SAR. Indeed, SAR processing is performed by exploiting a set of pulses acquired continuously from different spatial positions. If the transmission is not continuous, there are gaps in the illuminated spatial spectrum of the scene; therefore, the reconstructed image contains aberrations called grating lobes.

This paper proposes a complete mathematical description of the spatial spectrum of a SAR image focused using burst data. A workflow to correct grating lobes is then presented. We start by detailing why a Cartesian or polar reference system cannot be used to solve this problem. We then derive a suitable reference system, which is a transformation of the polar one allowing for a space-invariant spectral representation. In other words, the spectrum of the image depends only on the position of the sensor and not on that of the target. The workflow continues by exploiting a compressive sensing algorithm called CLEAN, which allows the recovery of an extremely fine-resolution image without grating lobes.

The whole process has been validated on a real dataset acquired with an automotive radar operating in W-band, mounted on a vehicle in a forward-looking configuration. The proposed procedure has proven able to remove the aberrations in the image, leading to a clear recognition of the targets and their details.