AEFormer: Zoom Camera Enables Remote Sensing Super-Resolution via Aligned and Enhanced Attention

Abstract

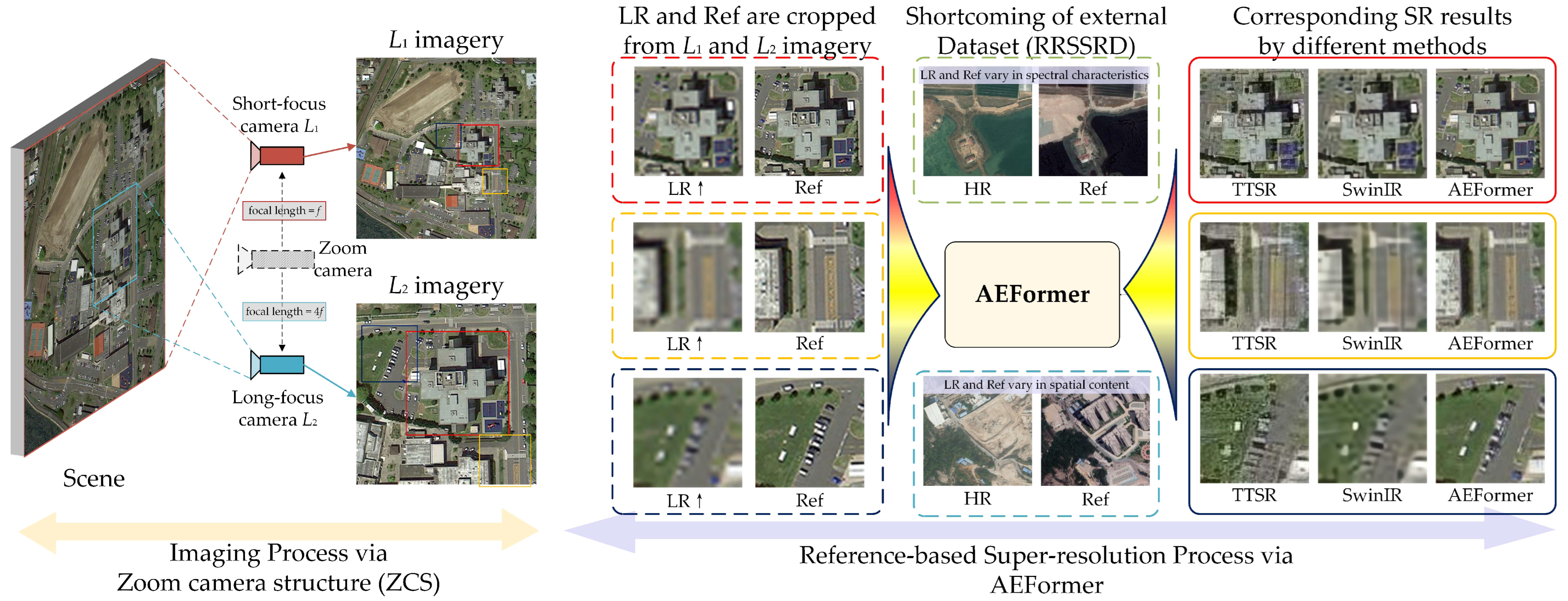

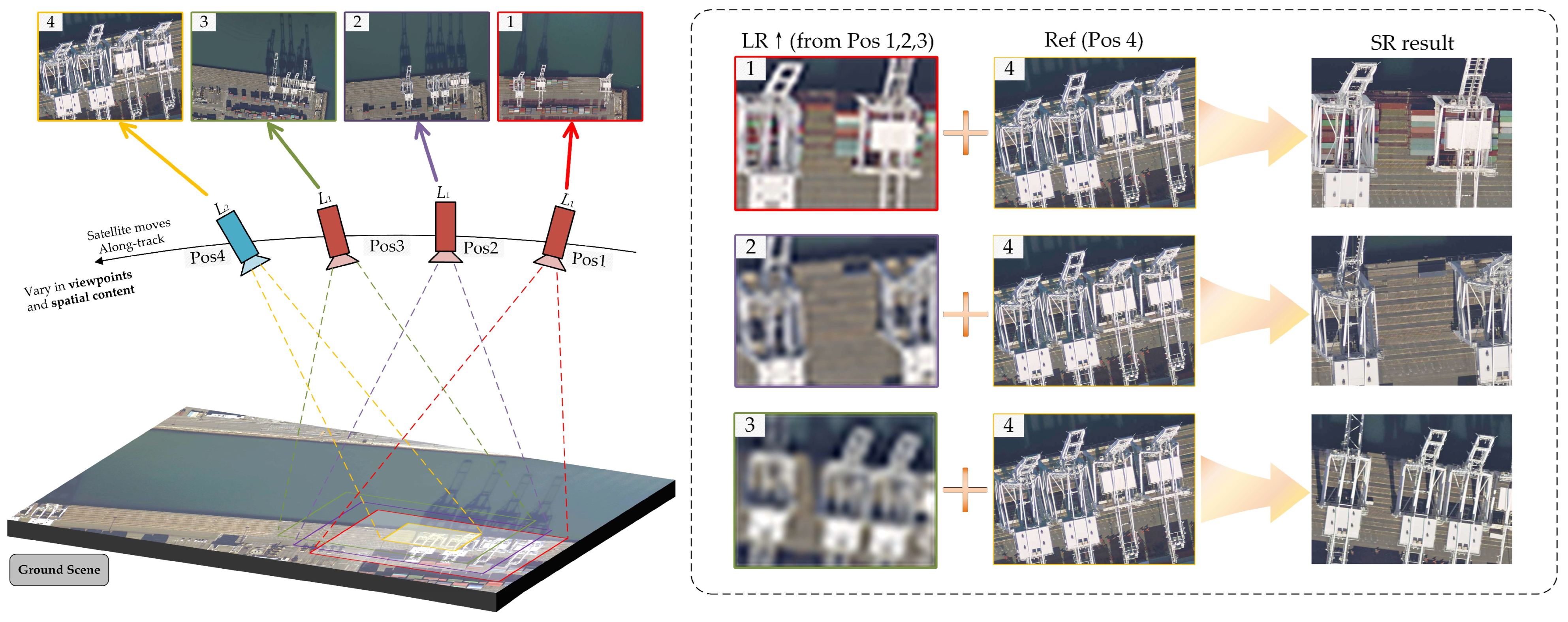

:1. Introduction

- (1)

- This study proposes a novel network for super-resolving remote sensing imagery, namely AEFormer. To the best of our knowledge, AEFormer is one of the first ViT-based RefSR networks in the field of remote sensing. Compared with existing SR networks, especially CNN-based ones, AEFormer exhibits extraordinary performance both qualitatively and quantitatively;

- (2)

- The core advantage of AEFormer lies in the proposed aligned and enhanced attention. Due to the strong representation capability of ViT, aligned and enhanced attention represents a significant improvement to existing RefSR frameworks;

- (3)

- The proposed ZCS is capable of enhancing the efficiency and quality of remote sensing imagery in both temporal and spatial dimensions. To the best of our knowledge, ZCS is pioneering in the field of remote sensing, which may provide insights for future satellite camera design.

2. Related Works

2.1. Single Image Super Resolution (SISR)

2.2. Reference-Based Super Resolution (RefSR)

2.3. Vision Transformer (ViT)

2.4. Dual Camera for Super Resolution

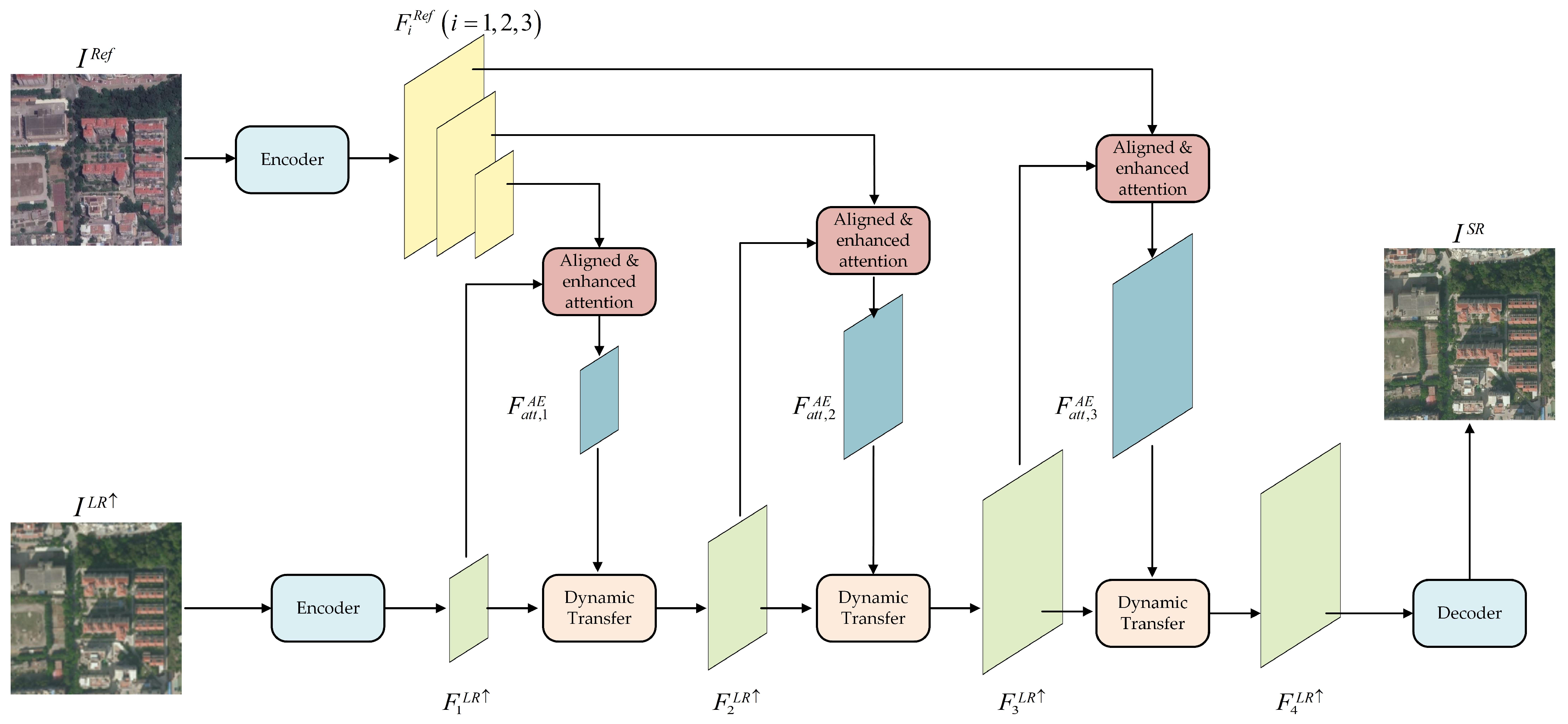

3. Methodology

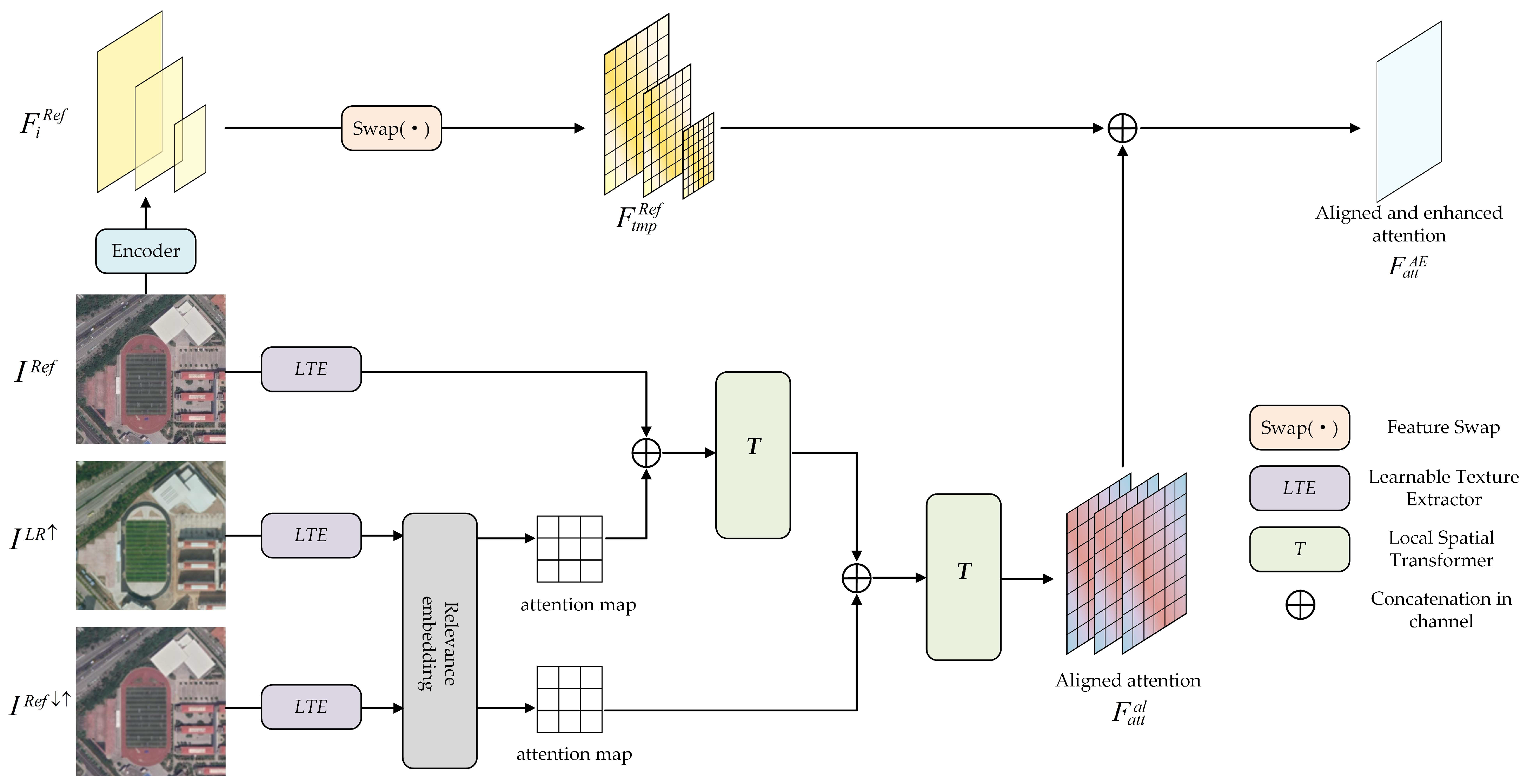

3.1. Feature Alignment Based on Aligned and Enhanced Attention

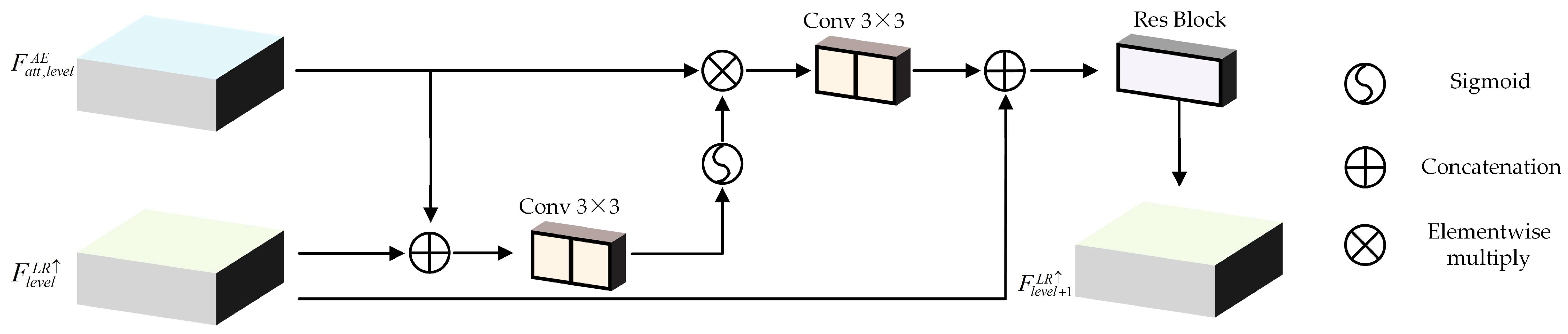

3.2. Feature Transfer Based on the Dynamic Transfer Module

3.3. Loss Function

4. Experiment

4.1. Dataset and Implementation Details

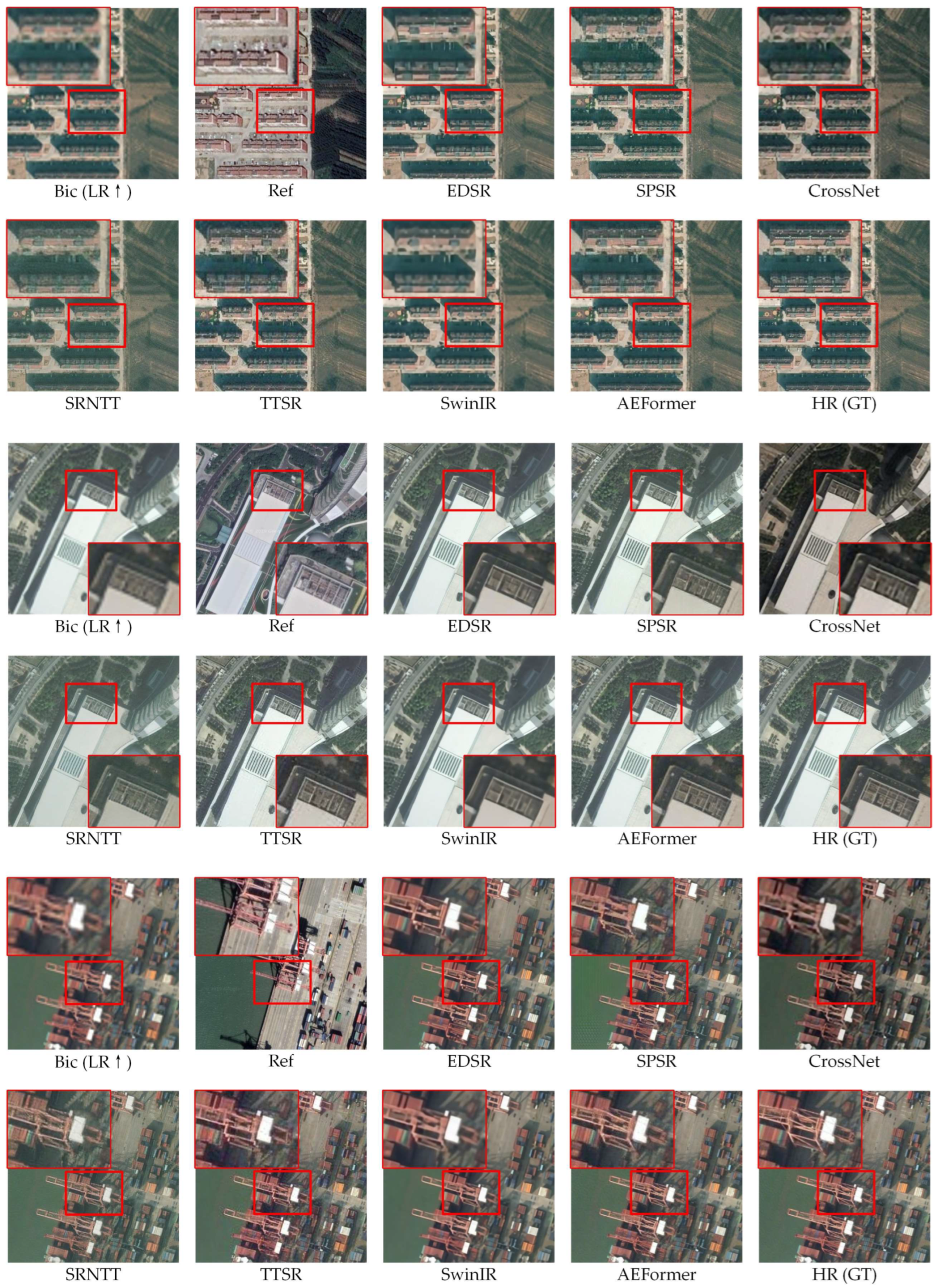

4.2. Comparison with State-of-the-Art Methods

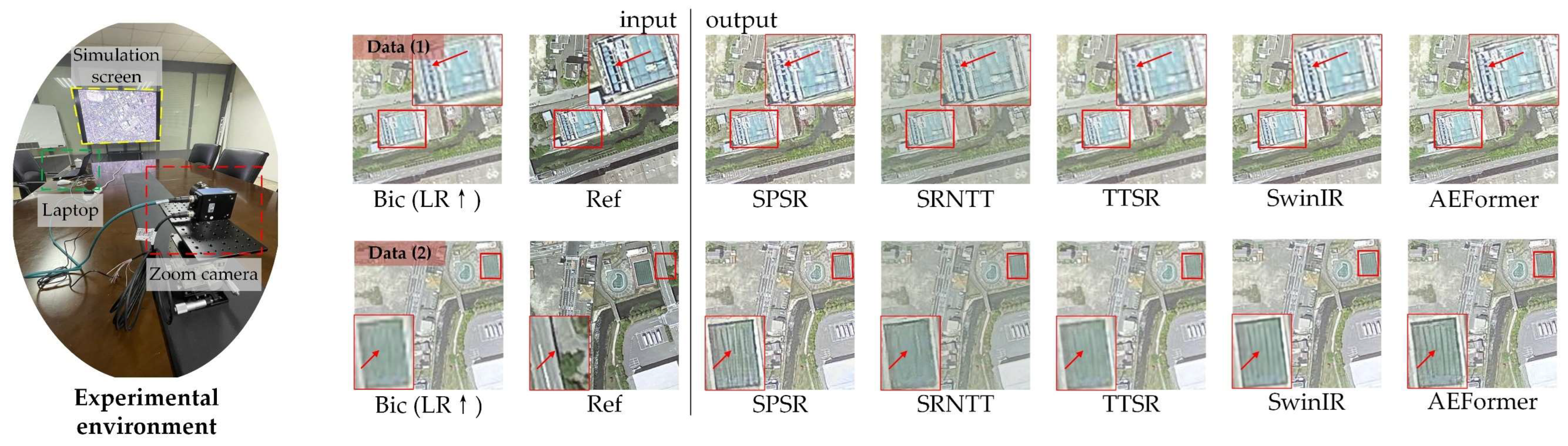

4.3. ZCS for Real-World Super Resolution

5. Discussion

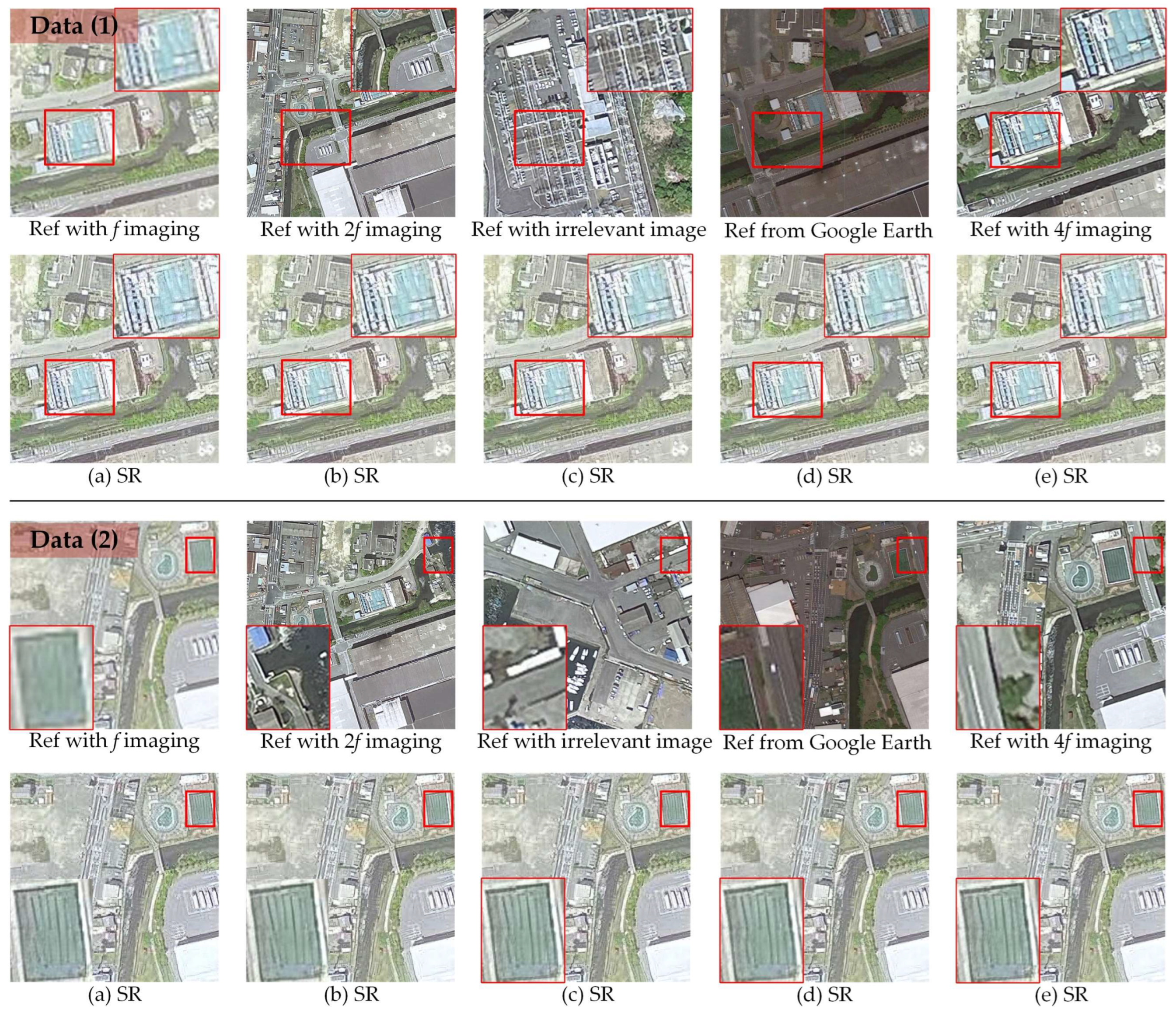

5.1. Effectiveness and Limitation of ZCS

5.2. Effectiveness of Aligned and Enhanced Attention

5.3. Effectiveness of Dynamic Transfer Module

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |

| SR | Super resolution |

| LR | Low resolution |

| Ref | Reference (image) |

| HR | High resolution |

| GT | Ground truth |

| ViT | Vision transformer |

| LTE | Learnable texture extractor |

| ZCS | Zoom camera structure |

| FOV | Field of view |

| SISR | Single-image super resolution |

| Ref-SR | Reference-based super resolution |

| CNN | Convolutional neural network |

| GAN | Generative adversarial network |

| AEFormer | Reference-based super-resolution network via aligned and enhanced attention |

References

- Tsai, R.Y.; Huang, T.S. Multiframe image restoration and registration. Multiframe Image Restor. Regist. 1984, 1, 317–339. [Google Scholar]

- Zhang, H.; Yang, Z.; Zhang, L.; Shen, H. Super-Resolution Reconstruction for Multi-Angle Remote Sensing Images Considering Resolution Differences. Remote Sens. 2014, 6, 637–657. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.G.; He, K.M.; Tang, X.O. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 184–199. [Google Scholar]

- Dong, C.; Loy, C.C.; He, K.M.; Tang, X.O. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307. [Google Scholar] [CrossRef] [PubMed]

- Zhao, M.H.; Ning, J.W.; Hu, J.; Li, T.T. Hyperspectral Image Super-Resolution under the Guidance of Deep Gradient Information. Remote Sens. 2021, 13, 2382. [Google Scholar] [CrossRef]

- Xu, Y.Y.; Luo, W.; Hu, A.N.; Xie, Z.; Xie, X.J.; Tao, L.F. TE-SAGAN: An Improved Generative Adversarial Network for Remote Sensing Super-Resolution Images. Remote Sens. 2022, 14, 2425. [Google Scholar] [CrossRef]

- Guo, M.Q.; Zhang, Z.Y.; Liu, H.; Huang, Y. NDSRGAN: A Novel Dense Generative Adversarial Network for Real Aerial Imagery Super-Resolution Reconstruction. Remote Sens. 2022, 14, 1574. [Google Scholar] [CrossRef]

- Wang, P.J.; Bayram, B.; Sertel, E. A comprehensive review on deep learning based remote sensing image super-resolution methods. Earth-Sci. Rev. 2022, 232, 25. [Google Scholar] [CrossRef]

- Singla, K.; Pandey, R.; Ghanekar, U. A review on Single Image Super Resolution techniques using generative adversarial network. Optik 2022, 266, 31. [Google Scholar] [CrossRef]

- Qiao, C.; Li, D.; Guo, Y.T.; Liu, C.; Jiang, T.; Dai, Q.H.; Li, D. Evaluation and development of deep neural networks for image super-resolution in optical microscopy. Nat. Methods 2021, 18, 194–202. [Google Scholar] [CrossRef]

- Dong, R.; Zhang, L.; Fu, H. RRSGAN: Reference-Based Super-Resolution for Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5601117. [Google Scholar] [CrossRef]

- Zheng, H.T.; Ji, M.Q.; Wang, H.Q.; Liu, Y.B.; Fang, L. CrossNet: An End-to-End Reference-Based Super Resolution Network Using Cross-Scale Warping. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 87–104. [Google Scholar]

- Zhang, Z.F.; Wang, Z.W.; Lin, Z.; Qi, H.R. Image Super-Resolution by Neural Texture Transfer. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 7974–7983. [Google Scholar]

- Yang, F.Z.; Yang, H.; Fu, J.L.; Lu, H.T.; Guo, B.N. Learning Texture Transformer Network for Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electr Network, Seattle, WA, USA, 14–19 June 2020; pp. 5790–5799. [Google Scholar]

- Wang, T.; Xie, J.; Sun, W.; Yan, Q.; Chen, Q. Dual-camera super-resolution with aligned attention modules. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 2001–2010. [Google Scholar]

- Zhang, Y.F.; Li, T.R.; Zhang, Y.; Chen, P.R.; Qu, Y.F.; Wei, Z.Z. Computational Super-Resolution Imaging with a Sparse Rotational Camera Array. IEEE Trans. Comput. Imaging 2023, 9, 425–434. [Google Scholar] [CrossRef]

- Liu, S.-B.; Xie, B.-K.; Yuan, R.-Y.; Zhang, M.-X.; Xu, J.-C.; Li, L.; Wang, Q.-H. Deep learning enables parallel camera with enhanced- resolution and computational zoom imaging. PhotoniX 2023, 4, 17. [Google Scholar] [CrossRef]

- Zhu, X.Z.; Hu, H.; Lin, S.; Dai, J.F. Deformable ConvNets v2: More Deformable, Better Results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 9300–9308. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.H.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114. [Google Scholar]

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140. [Google Scholar]

- Blau, Y.; Mechrez, R.; Timofte, R.; Michaeli, T.; Zelnik-Manor, L. The 2018 PIRM Challenge on Perceptual Image Super-Resolution. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 334–355. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Ma, C.; Rao, Y.M.; Lu, J.W.; Zhou, J. Structure-Preserving Image Super-Resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7898–7911. [Google Scholar] [CrossRef]

- Liu, J.; Yuan, Z.; Pan, Z.; Fu, Y.; Liu, L.; Lu, B. Diffusion Model with Detail Complement for Super-Resolution of Remote Sensing. Remote Sens. 2022, 14, 4834. [Google Scholar] [CrossRef]

- Han, L.; Zhao, Y.; Lv, H.; Zhang, Y.; Liu, H.; Bi, G.; Han, Q. Enhancing Remote Sensing Image Super-Resolution with Efficient Hybrid Conditional Diffusion Model. Remote Sens. 2023, 15, 3452. [Google Scholar] [CrossRef]

- Yuan, Z.; Hao, C.; Zhou, R.; Chen, J.; Yu, M.; Zhang, W.; Wang, H.; Sun, X. Efficient and Controllable Remote Sensing Fake Sample Generation Based on Diffusion Model. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12. [Google Scholar] [CrossRef]

- Jiang, Y.M.; Chan, K.C.K.; Wang, X.T.; Loy, C.C.; Liu, Z.W. Robust Reference-based Super-Resolution via C-2-Matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electr Network, Virtual, 19–25 June 2021; pp. 2103–2112. [Google Scholar]

- Shim, G.; Park, J.; Kweon, I.S. Robust reference-based super-resolution with similarity-aware deformable convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8425–8434. [Google Scholar]

- Zhang, J.Y.; Zhang, W.X.; Jiang, B.; Tong, X.D.; Chai, K.Y.; Yin, Y.C.; Wang, L.; Jia, J.H.; Chen, X.X. Reference-Based Super-Resolution Method for Remote Sensing Images with Feature Compression Module. Remote Sens. 2023, 15, 1103. [Google Scholar] [CrossRef]

- Lu, L.Y.; Li, W.B.; Tao, X.; Lu, J.B.; Jia, J.Y. MASA-SR: Matching Acceleration and Spatial Adaptation for Reference-Based Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electr Network, Virtual, 19–25 June 2021; pp. 6364–6373. [Google Scholar]

- Chen, T.Q.; Schmidt, M. Fast patch-based style transfer of arbitrary style. arXiv 2016, arXiv:1612.04337. [Google Scholar]

- Barnes, C.; Shechtman, E.; Finkelstein, A.; Goldman, D.B. PatchMatch: A Randomized Correspondence Algorithm for Structural Image Editing. ACM Trans. Graph. 2009, 28, 11. [Google Scholar] [CrossRef]

- Cao, J.Z.; Liang, J.Y.; Zhang, K.; Li, Y.W.; Zhang, Y.L.; Wang, W.G.; Van Gool, L. Reference-Based Image Super-Resolution with Deformable Attention Transformer. In Proceedings of the 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 325–342. [Google Scholar]

- Zhou, R.; Zhang, W.; Yuan, Z.; Rong, X.; Liu, W.; Fu, K.; Sun, X. Weakly Supervised Semantic Segmentation in Aerial Imagery via Explicit Pixel-Level Constraints. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–17. [Google Scholar] [CrossRef]

- Yuan, Z.; Zhang, W.; Tian, C.; Mao, Y.; Zhou, R.; Wang, H.; Fu, K.; Sun, X. MCRN: A Multi-source Cross-modal Retrieval Network for remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2022, 115, 103071. [Google Scholar] [CrossRef]

- Yuan, Z.; Zhang, W.; Tian, C.; Rong, X.; Zhang, Z.; Wang, H.; Fu, K.; Sun, X. Remote Sensing Cross-Modal Text-Image Retrieval Based on Global and Local Information. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; van der Smagt, P.; Cremers, D.; Brox, T. FlowNet: Learning Optical Flow with Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 2758–2766. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. [Google Scholar]

- Liang, J.Y.; Cao, J.Z.; Sun, G.L.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the 18th IEEE/CVF International Conference on Computer Vision (ICCV), Electr Network, Montreal, BC, Canada, 11–17 October 2021; pp. 1833–1844. [Google Scholar]

- Lu, Z.S.; Li, J.C.; Liu, H.; Huang, C.Y.; Zhang, L.L.; Zeng, T.Y. Transformer for Single Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 456–465. [Google Scholar]

- Wu, H.P.; Xiao, B.; Codella, N.; Liu, M.C.; Dai, X.Y.; Yuan, L.; Zhang, L. CvT: Introducing Convolutions to Vision Transformers. In Proceedings of the 18th IEEE/CVF International Conference on Computer Vision (ICCV), Electr Network, Montreal, BC, Canada, 11–17 October 2021; pp. 22–31. [Google Scholar]

- Chen, X.; Wang, X.; Zhou, J.; Dong, C. Activating More Pixels in Image Super-Resolution Transformer. arXiv 2022, arXiv:2205.04437v3. [Google Scholar]

- Grosche, S.; Regensky, A.; Seiler, J.; Kaup, A. Image Super-Resolution Using T-Tetromino Pixels. arXiv 2023, arXiv:2111.09013. [Google Scholar]

- Ma, J.Y.; Tang, L.F.; Fan, F.; Huang, J.; Mei, X.G.; Ma, Y. SwinFusion: Cross-domain Long-range Learning for General Image Fusion via Swin Transformer. IEEE-CAA J. Autom. Sin. 2022, 9, 1200–1217. [Google Scholar] [CrossRef]

- Wilburn, B.; Joshi, N.; Vaish, V.; Talvala, E.V.; Antunez, E.; Barth, A.; Adams, A.; Horowitz, M.; Levoy, M. High performance imaging using large camera arrays. ACM Trans. Graph. 2005, 24, 765–776. [Google Scholar] [CrossRef]

- Yu, S.; Moon, B.; Kim, D.; Kim, S.; Choe, W.; Lee, S.; Paik, J. Continuous digital zooming of asymmetric dual camera images using registration and variational image restoration. Multidimens. Syst. Signal Process. 2018, 29, 1959–1987. [Google Scholar] [CrossRef]

- Manne, S.K.R.; Prasad, B.H.P.; Rosh, K.S.G. Asymmetric Wide Tele Camera Fusion for High Fidelity Digital Zoom; Springer: Singapore, 2020; pp. 39–50. [Google Scholar]

- Chen, C.; Xiong, Z.W.; Tian, X.M.; Zha, Z.J.; Wu, F. Camera Lens Super-Resolution. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1652–1660. [Google Scholar]

- Guo, P.Y.; Asif, M.S.; Ma, Z. Low-Light Color Imaging via Cross-Camera Synthesis. IEEE J. Sel. Top. Signal Process. 2022, 16, 828–842. [Google Scholar] [CrossRef]

- Wang, X.T.; Chan, K.C.K.; Yu, K.; Dong, C.; Loy, C.C.G. EDVR: Video Restoration with Enhanced Deformable Convolutional Networks. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1954–1963. [Google Scholar]

- Jaderberg, M.; Simonyan, K.; Zisserman, A. Spatial transformer networks. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2015; p. 28. [Google Scholar]

- Zhang, S.; Yuan, Q.Q.; Li, J.; Sun, J.; Zhang, X.G. Scene-Adaptive Remote Sensing Image Super-Resolution Using a Multiscale Attention Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 4764–4779. [Google Scholar] [CrossRef]

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A. Improved Training of Wasserstein GANs. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Bustince, H.; Montero, J.; Mesiar, R. Migrativity of aggregation functions. Fuzzy Sets Syst. 2009, 160, 766–777. [Google Scholar] [CrossRef]

- Johnson, J.; Alahi, A.; Li, F.F. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Proceedings of the 14th European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 694–711. [Google Scholar]

- Sajjadi, M.S.M.; Scholkopf, B.; Hirsch, M. EnhanceNet: Single Image Super-Resolution Through Automated Texture Synthesis. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4501–4510. [Google Scholar]

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in Vision: A Survey. ACM Comput. Surv. 2022, 54, 41. [Google Scholar] [CrossRef]

- Wang, X.T.; Yu, K.; Wu, S.X.; Gu, J.J.; Liu, Y.H.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 63–79. [Google Scholar]

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595. [Google Scholar]

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. In Proceedings of the Advances in Neural Information Processing Systems 29 (NIPS 2016), Barcelona, Spain, 5–10 December 2016. [Google Scholar]

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Lucic, M.; Kurach, K.; Michalski, M.; Gelly, S.; Bousquet, O. Are GANs Created Equal? A Large-Scale Study. In Proceedings of the Advances in Neural Information Processing Systems 31 (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018. [Google Scholar]

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212. [Google Scholar] [CrossRef]

- Liu, L.X.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014, 29, 856–863. [Google Scholar] [CrossRef]

- Ma, C.; Yang, C.Y.; Yang, X.K.; Yang, M.H. Learning a no-reference quality metric for single-image super-resolution. Comput. Vis. Image Underst. 2017, 158, 1–16. [Google Scholar] [CrossRef]

- Tu, Z.; Yang, X.; Fu, Z.; Gao, S.; Yang, G.; Jiang, L.; Wu, M.; Wang, S. Concatenating wide-parallax satellite orthoimages for simplified regional mapping via utilizing line-point consistency. Int. J. Remote Sens. 2023, 44, 4857–4882. [Google Scholar] [CrossRef]

- Wadduwage, D.N.; Singh, V.R.; Choi, H.; Yaqoob, Z.; Heemskerk, H.; Matsudaira, P.; So, P.T.C. Near-common-path interferometer for imaging Fourier-transform spectroscopy in wide-field microscopy. Optica 2017, 4, 546–556. [Google Scholar] [CrossRef]

- Aleman-Castaneda, L.A.; Piccirillo, B.; Santamato, E.; Marrucci, L.; Alonso, M.A. Shearing interferometry via geometric phase. Optica 2019, 6, 396–399. [Google Scholar] [CrossRef]

- Zeng, Y.; Fu, J.; Chao, H.; Guo, B. Learning pyramid-context encoder network for high-quality image inpainting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1486–1494. [Google Scholar]

| Test Set | Metrics | Bicubic | EDSR [22] | SPSR [25] | CrossNet [12] | SRNTT [13] | TTSR [14] | TTSR_rec [14] | SwinIR [41] | AEFormer (Ours) | AEFormer _rec (Ours) |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | LPIPS | 0.3667 | 0.1688 | 0.1723 | 0.2897 | 0.2004 | 0.1944 | 0.2323 | 0.2351 | 0.1035 | 0.1639 |

| PI | 7.1020 | 5.1290 | 3.2918 | 5.3949 | 3.7471 | 3.4192 | 5.7505 | 6.2364 | 3.2000 | 5.5191 | |

| NIQE | 7.7933 | 5.6877 | 4.2980 | 6.0194 | 4.1205 | 4.1673 | 6.2667 | 6.9530 | 4.2309 | 6.0150 | |

| FID | 126.0413 | 89.9880 | 75.6160 | 90.0235 | 89.4061 | 89.2271 | 97.1016 | 123.0567 | 39.1042 | 79.7634 | |

| IS | 1.9299 | 2.0919 | 2.0071 | 1.9316 | 2.0796 | 1.9836 | 2.0115 | 2.0883 | 1.9882 | 2.0982 | |

| PSNR | 29.6840 | 32.2835 | 30.4216 | 31.0801 | 29.1221 | 30.9589 | 32.8617 | 33.4122 | 32.4519 | 34.2224 | |

| SSIM | 0.7914 | 0.8750 | 0.7908 | 0.8590 | 0.7977 | 0.7957 | 0.8558 | 0.8758 | 0.8470 | 0.8915 | |

| 2 | LPIPS | 0.3920 | 0.2704 | 0.2105 | 0.3093 | 0.2348 | 0.2110 | 0.2400 | 0.2681 | 0.1340 | 0.1960 |

| PI | 7.0139 | 4.9010 | 3.0975 | 5.2704 | 3.7498 | 3.1980 | 5.8804 | 6.2632 | 3.1927 | 5.6102 | |

| NIQE | 7.6505 | 5.5122 | 4.0160 | 5.9580 | 4.1104 | 4.0012 | 6.5053 | 6.9457 | 4.1917 | 6.2035 | |

| FID | 127.7582 | 104.1339 | 98.8441 | 113.6950 | 115.4572 | 99.1046 | 107.9276 | 125.6784 | 47.9718 | 83.3458 | |

| IS | 2.0822 | 2.0836 | 2.0543 | 1.9980 | 2.1643 | 2.0933 | 2.1141 | 2.1376 | 2.1200 | 2.1272 | |

| PSNR | 29.5621 | 31.1646 | 29.2977 | 31.0895 | 27.9063 | 29.9981 | 32.1126 | 32.5679 | 31.6659 | 33.2675 | |

| SSIM | 0.7638 | 0.8319 | 0.7446 | 0.8295 | 0.7727 | 0.7543 | 0.8275 | 0.8406 | 0.8119 | 0.8615 | |

| 3 | LPIPS | 0.4748 | 0.2712 | 0.2040 | 0.3266 | 0.2426 | 0.2212 | 0.3210 | 0.3491 | 0.1414 | 0.2874 |

| PI | 7.0493 | 5.0103 | 2.8630 | 5.1190 | 3.9161 | 3.0213 | 5.8568 | 6.5596 | 2.8314 | 5.7783 | |

| NIQE | 7.6804 | 5.4921 | 3.9324 | 5.1493 | 4.3254 | 3.9501 | 6.3419 | 7.3428 | 3.9168 | 6.2950 | |

| FID | 138.0656 | 101.5913 | 73.8934 | 106.9849 | 80.1084 | 75.8917 | 99.1774 | 127.0837 | 43.2799 | 98.4504 | |

| IS | 1.9127 | 1.9825 | 2.0001 | 1.9197 | 2.1088 | 2.0276 | 2.0170 | 1.9656 | 2.0359 | 1.9612 | |

| PSNR | 27.7658 | 29.4900 | 28.0084 | 28.9832 | 27.8890 | 28.7439 | 30.5491 | 30.9032 | 29.6163 | 31.3204 | |

| SSIM | 0.7275 | 0.8071 | 0.7082 | 0.7897 | 0.7472 | 0.7281 | 0.7997 | 0.8117 | 0.7646 | 0.8282 | |

| 4 | LPIPS | 0.3749 | 0.2807 | 0.2427 | 0.3216 | 0.2574 | 0.2326 | 0.2470 | 0.2817 | 0.1509 | 0.2143 |

| PI | 7.1793 | 5.3996 | 3.3195 | 5.4073 | 4.2947 | 3.4277 | 6.3508 | 6.5094 | 3.5329 | 6.1760 | |

| NIQE | 7.7796 | 5.6910 | 4.1089 | 5.8788 | 4.5599 | 4.0359 | 6.8673 | 7.2425 | 4.2829 | 6.6927 | |

| FID | 123.8509 | 98.8013 | 104.6603 | 112.0716 | 112.9005 | 99.0680 | 100.5237 | 124.4605 | 57.2116 | 88.4482 | |

| IS | 2.1570 | 2.1563 | 2.1753 | 2.0994 | 2.1225 | 2.1860 | 2.1172 | 2.1140 | 2.1787 | 2.1158 | |

| PSNR | 30.0465 | 31.5246 | 29.1996 | 30.1077 | 29.2247 | 30.1338 | 32.4450 | 32.9487 | 31.9228 | 33.5955 | |

| SSIM | 0.7593 | 0.8233 | 0.7231 | 0.7690 | 0.7687 | 0.7394 | 0.8184 | 0.8310 | 0.7962 | 0.8496 | |

| Average | LPIPS | 0.4021 | 0.2478 | 0.2074 | 0.3118 | 0.2338 | 0.2148 | 0.2601 | 0.2835 | 0.1325 | 0.2154 |

| PI | 7.0861 | 5.1100 | 3.1430 | 5.2979 | 3.9269 | 3.2666 | 5.9596 | 6.3922 | 3.1893 | 5.7709 | |

| NIQE | 7.7260 | 5.5958 | 4.0888 | 5.7514 | 4.2791 | 4.0386 | 6.4953 | 7.1210 | 4.1556 | 6.3016 | |

| FID | 128.9290 | 98.6286 | 88.2535 | 105.6938 | 99.4681 | 90.8229 | 101.1826 | 125.0698 | 46.8919 | 87.5020 | |

| IS | 2.0205 | 2.0786 | 2.0592 | 1.9872 | 2.1188 | 2.0726 | 2.0650 | 2.0764 | 2.0807 | 2.0756 | |

| PSNR | 29.2646 | 31.1157 | 29.2318 | 30.3151 | 28.5355 | 29.9587 | 31.9921 | 32.4580 | 31.4142 | 33.1015 | |

| SSIM | 0.7605 | 0.8343 | 0.7417 | 0.8118 | 0.7716 | 0.7544 | 0.8254 | 0.8398 | 0.8049 | 0.8577 |

| Methods | Data (1) | Data (2) | ||

|---|---|---|---|---|

| PI | NIQE | PI | NIQE | |

| SPSR [25] | 3.508 | 3.3052 | 3.0197 | 3.1995 |

| SRNTT [13] | 3.5142 | 3.9132 | 3.1793 | 3.8485 |

| TTSR [14] | 5.6652 | 5.8128 | 5.3023 | 5.5889 |

| SwinIR [41] | 6.4644 | 6.9157 | 6.4344 | 7.0353 |

| AEFormer | 2.8890 | 3.5367 | 2.9647 | 3.6203 |

| ZCS Configuration | Data (1) | Data (2) | ||

|---|---|---|---|---|

| PI | NIQE | PI | NIQE | |

| Irrelevant image as Ref | 2.9043 | 3.5912 | 2.9829 | 3.6536 |

| Google Earth as Ref | 2.9959 | 3.7749 | 2.8806 | 3.5593 |

| Ref with focal length = f | 3.1877 | 3.7340 | 2.9960 | 3.6807 |

| Ref with focal length = 2f | 2.9189 | 3.5839 | 2.9810 | 3.6476 |

| Ref with focal length = 4f | 2.8890 | 3.5367 | 2.9647 | 3.6203 |

| Module | Evaluation Metrics | ||||

|---|---|---|---|---|---|

| Ref↓↑ Branch | Aligned Attention | Enhanced Attention | LPIPS | PSNR | SSIM |

| × | × | × | 0.1580 | 30.9693 | 0.8171 |

| × | √ | × | 0.1362 | 31.9833 | 0.8392 |

| √ | √ | × | 0.1139 | 32.4702 | 0.8396 |

| √ | √ | √ | 0.1035 | 32.4519 | 0.8470 |

| Module within Transfer Module | Evaluation Metrics | ||||

|---|---|---|---|---|---|

| Sigmoid Module | Convolution Module | LPIPS | PI | PSNR | SSIM |

| × | × | 0.2495 | 6.2780 | 32.3798 | 0.8320 |

| × | √ | 0.1896 | 6.1993 | 32.7164 | 0.8426 |

| √ | × | 0.2098 | 6.3817 | 32.9354 | 0.8371 |

| √ | √ | 0.1639 | 5.5191 | 34.2224 | 0.8915 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tu, Z.; Yang, X.; Tang, X.; Xu, T.; He, X.; Liu, P.; Jiang, L.; Fu, Z. AEFormer: Zoom Camera Enables Remote Sensing Super-Resolution via Aligned and Enhanced Attention. Remote Sens. 2023, 15, 5409. https://doi.org/10.3390/rs15225409

Tu Z, Yang X, Tang X, Xu T, He X, Liu P, Jiang L, Fu Z. AEFormer: Zoom Camera Enables Remote Sensing Super-Resolution via Aligned and Enhanced Attention. Remote Sensing. 2023; 15(22):5409. https://doi.org/10.3390/rs15225409

Chicago/Turabian StyleTu, Ziming, Xiubin Yang, Xingyu Tang, Tingting Xu, Xi He, Penglin Liu, Li Jiang, and Zongqiang Fu. 2023. "AEFormer: Zoom Camera Enables Remote Sensing Super-Resolution via Aligned and Enhanced Attention" Remote Sensing 15, no. 22: 5409. https://doi.org/10.3390/rs15225409

APA StyleTu, Z., Yang, X., Tang, X., Xu, T., He, X., Liu, P., Jiang, L., & Fu, Z. (2023). AEFormer: Zoom Camera Enables Remote Sensing Super-Resolution via Aligned and Enhanced Attention. Remote Sensing, 15(22), 5409. https://doi.org/10.3390/rs15225409