Abstract

Deep learning has been demonstrated to be a powerful nonlinear modeling method with end-to-end optimization capabilities for hyperspectral images (HSIs). However, in real classification tasks, obtaining labeled samples is often time-consuming and labor-intensive, so only a few training samples are available. To address this issue, a multipath and multiscale Siamese network based on spatial-spectral features for few-shot hyperspectral image classification (MMSN) is proposed. To perform classification with few-shot training samples, a Siamese network framework with low dependence on sample information is adopted. In each subnetwork, a spatial attention module, the dilation-cosine attention module (DCAM), which combines dilated convolution and cosine similarity to comprehensively account for spatial-spectral weights, is designed first. Then, we propose a residual-dense hybrid module (RDHM), which merges three paths of features: grouped convolution-based local residual features, global residual features and global dense features. The RDHM effectively propagates and utilizes features from different layers and enhances their expressive ability. Finally, we construct a multikernel depth feature extraction module (MDFE) that performs multiscale convolutions with multiple kernels and hierarchical skip connections across feature scales to improve the ability of the network to capture details. Experiments on three benchmark datasets show that the proposed MMSN performs well with few-shot training samples and achieves better classification results than other state-of-the-art methods.

1. Introduction

Hyperspectral images (HSIs) contain hundreds of contiguous bands. Unlike ordinary three-channel images, they provide not only spatial information but also rich spectral information that can be used to distinguish land cover types. Therefore, HSIs have been widely used in forest vegetation cover monitoring [1], land use classification [2,3], change detection [4], anomaly detection [5], environmental protection [6] and other fields. The purpose of HSI classification is to assign a predefined label to each pixel of an HSI. To achieve high accuracy and strong generalization in this task, deep convolutional neural networks (CNNs) have attracted much attention because of their excellent classification performance. However, labeled data scarcity is a challenging issue in HSI classification, as obtaining labeled samples is often time-consuming and labor-intensive.

With the development of deep learning technology, various approaches have been explored for HSI classification. At present, CNNs are the most popular methods in this field. CNNs, including the 1DCNN, the 2DCNN and the 3DCNN, as well as some hybrid networks [7], have achieved excellent performance in HSI classification tasks. The 1DCNN is mainly applied to the spectral dimension of HSIs [8]. Since an HSI contains continuous spectral information, the 1DCNN takes the spectral curve of each pixel as input and performs convolution along the spectral dimension. It extracts spectral features but does not take spatial features into account. The 2DCNN is mainly applied to the spatial dimensions of HSIs [9]. Its convolution is carried out along the spatial dimensions to extract spatial features, such as texture and shape, but this approach does not consider spectral features. The 3DCNN considers spectral and spatial information simultaneously [10,11], integrating the two to capture more comprehensive HSI features. Although traditional CNNs have achieved significant success in HSI classification, they are prone to overfitting when the number of available training samples is insufficient. With too few samples, the model cannot adequately learn the features of the input data; instead, it tends to over-rely on the noise and local features of the training samples. As a result, although the model may perform well on the training set, it generalizes poorly to new, unseen data.

Building on basic CNNs, more complex models tailored to HSI classification have been proposed. For example, Zilong Zhong et al. used spectral and spatial residual blocks to learn rich spectral features and discriminative spatial context features from HSIs [12]. To reduce the required training time and improve HSI classification accuracy, Wenju Wang et al. proposed a fast dense spectral-spatial convolution (FDSSC) framework that uses different convolution kernel sizes to extract spectral and spatial features [13]. In addition, considering that traditional CNNs do not make full use of the complementary and interrelated information between different layers of deep convolutional models, Weiwei Song et al. adopted a fusion mechanism to build a very deep network that makes full use of multilayer features [14]. To overcome the challenges of high dimensionality, the spatial variability of spectral features and the scarcity of labeled data, Anirban Santara et al. extracted spectral-spatial features from specific bands for classification [15]. Xiangrong Zhang et al. incorporated spectral partitioning and feature extraction into an end-to-end network by utilizing grouped convolution [16].

In many practical situations, because of the lack of training samples, HSI classification can be regarded as few-shot classification, in which the training set contains only a very limited number of samples per class. In this setting, the above CNN-based models still easily overfit. The Siamese network is a high-performance architecture that can be used for few-shot classification tasks. It consists of two identical or similar subnetworks that share the same weights and parameters. The two subnetworks receive two instances (an image pair) as input, and the network performs classification by learning the similarities or differences between the two instances. Recently, Siamese networks have begun to be applied to HSI classification. For example, Shizhi Zhao et al. proposed a Siamese neural network based on a 1DCNN and a 2DCNN that uses pixel pairs to significantly expand the training set and better characterize spectral-spatial features [17]. B. Gowthama et al. integrated principal component analysis (PCA)-based dimensionality reduction, the Siamese network framework and a CNN to improve HSI classification performance with small samples [18]. Zeyu Cao proposed a Siamese network based on a 3DCNN that combines contrastive information and label information to handle small-sample classification tasks [19]. Zhaohui Xue et al. utilized a spectral-spatial Siamese network consisting of a 1DCNN and a 2DCNN to extract spectral-spatial features [20]. Mengbin Rao et al. constructed a Siamese 3DCNN with an adaptive spatial-spectral pyramid pooling layer to improve generalization and classification performance [21]. Weiquan Wang et al. proposed a Siamese CNN method based on CutMix data augmentation and the Softloss function [22]. Zhi He et al. utilized a 3D Siamese network with superpixel segmentation and incorporated a 3D spatial pyramid pooling layer to flexibly extract the multiscale features of each target pixel [23]. Although numerous studies have applied Siamese networks to HSI classification, further exploration is needed to improve classification performance with few-shot samples. The above analysis shows that existing Siamese-network-based HSI classification methods still face the following problems: (1) the differing importance of neighborhood pixels is not fully considered during classification; (2) the extracted features are relatively limited, so the rich information in HSIs is not well utilized; (3) only a single convolutional kernel scale is used, so multiscale information cannot be captured.

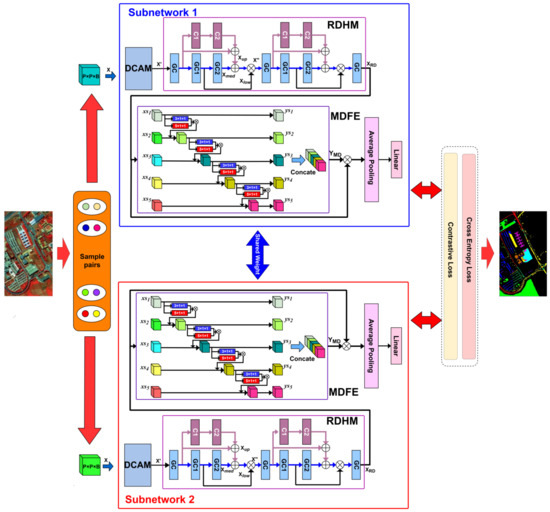

To solve the above problems, and inspired by 3DCSN [19] and Res2Net, which increases the receptive field by constructing hierarchical residual-like connections [24], we propose a multipath and multiscale Siamese network based on spatial-spectral features for few-shot hyperspectral image classification (MMSN). The MMSN is based on the Siamese network framework, which has low dependence on sample information, and consists of two subnetworks with the same structure and shared weights. In each subnetwork, a dilation-cosine attention module (DCAM) is first designed to measure the spatial-spectral weights in each patch. Then, a three-path residual-dense hybrid multipath (RDHM) module composed of residual connections, dense connections and grouped convolutions is proposed to effectively propagate and utilize features from different layers. Finally, a multikernel depth feature extraction (MDFE) module is constructed to extract multiscale semantic features.

The main contributions of this paper are summarized as follows.

- (1) A multipath and multiscale Siamese network based on spatial-spectral features for few-shot hyperspectral image classification (MMSN) is proposed. The MMSN is based on the Siamese network framework, and each subnetwork consists of three modules: a dilation-cosine attention module (DCAM), a residual-dense hybrid multipath (RDHM) module and a multikernel depth feature extraction (MDFE) module. The MMSN effectively extracts and utilizes the spatial-spectral features of HSIs and achieves promising classification results with few-shot training samples.

- (2) A spatial attention mechanism called the dilation-cosine attention module (DCAM) is designed, which combines dilated convolution and cosine similarity to comprehensively measure the spatial-spectral weights of each patch. Dilated convolution expands the receptive field in the spectral dimension, and cosine similarity quantifies the importance of different pixels in the spatial dimension.

- (3) A multipath spectral feature extraction module called the residual-dense hybrid multipath (RDHM) module is constructed. The RDHM module integrates three types of features: grouped convolution-based local residual features, global residual features and global dense features. By combining them, it effectively propagates and utilizes features from different layers, retaining the original features while continuously exploring new ones. Combining local and global features enhances the expressiveness of the comprehensive features.

- (4) A multikernel depth feature extraction (MDFE) module is constructed to explore the deep semantic features of the input image. By using multiscale convolutions with multiple kernels and hierarchical skip connections, the MDFE better retains and utilizes the semantic information of the image and improves the ability of the network to capture details.

The rest of this article is structured as follows. Section 2 briefly reviews related techniques. Section 3 presents the proposed MMSN method and the details of each module. Section 4 reports the classification results. Section 5 discusses the parameters. Finally, Section 6 concludes the paper.

2. Related Works

In this section, we briefly review related works, including Siamese networks, residual and dense networks and the Res2Net module.

2.1. Siamese Network

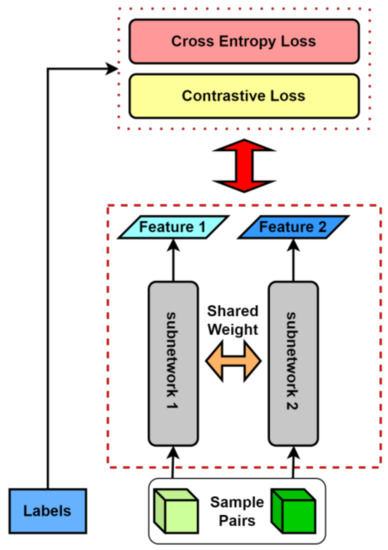

A Siamese network has a dual-branch structure composed of two identical or similar subnetworks that share weights. It takes paired data as inputs and extracts their feature representations through the subnetworks, as shown in Figure 1. These feature representations are compared through fully connected layers, and the network outputs the similarity between the two inputs of each pair. By introducing labels during training, the network learns the similarity relationships between input pairs, enabling the classification of new input data [18]. An important characteristic of Siamese networks is that they depend less on explicit class labels: instead of learning directly from the label of each sample, they learn from the relative relationships between the input data [20]. This makes Siamese networks widely applicable in many practical scenarios.
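As a minimal sketch of the weight sharing described above, the pure-Python code below passes both inputs of a pair through one encoder with one set of weights and compares the resulting features. The toy linear encoder `embed`, the weight matrix `W` and the use of the Euclidean distance as the comparison are illustrative assumptions, not the MMSN subnetwork.

```python
import math

def embed(x, weights):
    """Shared encoder: a toy linear projection (hypothetical stand-in for a subnetwork)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def siamese_distance(x1, x2, weights):
    """Both branches call the SAME encoder with the SAME weights (weight sharing)."""
    f1 = embed(x1, weights)
    f2 = embed(x2, weights)
    # Euclidean distance between the two feature representations
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(f1, f2)))

W = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # hypothetical shared weights
print(siamese_distance([1.0, 2.0, 3.0], [1.0, 2.0, 3.0], W))  # 0.0 (identical inputs)
print(siamese_distance([0.0, 0.0, 0.0], [3.0, 2.0, 2.0], W))  # 5.0
```

Because both branches call the same function with the same parameters, the distance is symmetric in its two inputs and is zero for identical inputs.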

Figure 1.

Structure of a Siamese network.

2.2. Residual and Dense Networks

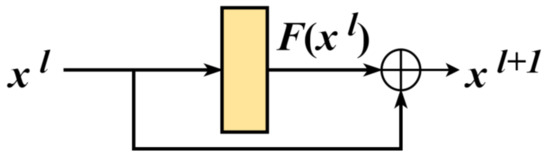

The core idea of the residual network (ResNet) is to introduce skip connections so that the network can learn identity mappings. This allows the gradients from higher layers to propagate back to lower layers more efficiently, thereby facilitating and regularizing the model training process [25]. ResNet is composed of a series of residual blocks, and each residual block has a skip connection, as shown in Figure 2.

Figure 2.

Residual block.

These skip connections allow information to be transmitted directly between network layers, which mitigates the vanishing gradient problem that can occur during gradient propagation in deep networks and makes deep architectures easier to train. The skip connection can be expressed mathematically as Formula (1):

$$x_{l+1} = h(x_l) + \mathcal{F}(x_l, W_l) \tag{1}$$

Here, $x_l$ and $x_{l+1}$ are the input and output of the $l$-th residual block. Each residual block is divided into two parts: a direct mapping part and a residual part. $h(x_l) = x_l$ is the direct mapping, shown as the lower curve in Figure 2, and $\mathcal{F}(x_l, W_l)$ represents the residual part, which typically consists of two or three convolutional operations, as depicted in the upper part of Figure 2.
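The identity shortcut of a residual block can be sketched in a few lines of pure Python. Feature maps are simplified to flat lists, and `toy_residual` is a hypothetical two-step residual branch; the sketch only shows how the shortcut adds the block input back to the residual output.

```python
def residual_block(x, residual_fn):
    """Identity shortcut: x_{l+1} = x_l + F(x_l), with the direct mapping h(x_l) = x_l."""
    fx = residual_fn(x)
    return [a + b for a, b in zip(x, fx)]

def toy_residual(x, w=0.5):
    """Hypothetical residual branch F: scale, then scale again and apply ReLU."""
    hidden = [w * v for v in x]
    return [max(0.0, w * h) for h in hidden]

x = [1.0, -2.0, 4.0]
print(residual_block(x, toy_residual))                # [1.25, -2.0, 5.0]
print(residual_block(x, lambda v: [0.0] * len(v)))    # zero residual gives the identity: [1.0, -2.0, 4.0]
```

If the residual branch outputs zeros, the block reduces to the identity, which is why identity mappings are easy for residual networks to learn.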

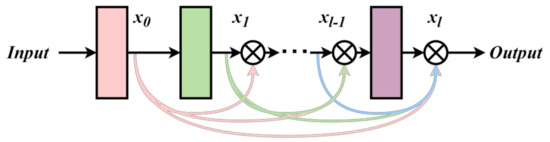

DenseNet, on the other hand, is characterized by establishing dense connections between each pair of layers in the network [26], as shown in Figure 3.

Figure 3.

Dense block.

In DenseNet, each layer is connected to all previous layers, so each layer can directly access the information from all preceding layers. Thanks to this feature reuse mechanism, DenseNet is a compact model that is easy to train and parameter-efficient. DenseNet is expressed mathematically as Formula (2):

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}]) \tag{2}$$

where $[x_0, x_1, \ldots, x_{l-1}]$ refers to the concatenation of the feature maps generated in layers $0, 1, \ldots, l-1$, and $H_l(\cdot)$ denotes the transformation performed by layer $l$.
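The following sketch mimics dense connectivity: every layer receives the concatenation of all earlier feature maps. Each channel is reduced to a single number and `make_layer` is a hypothetical stand-in for a layer's transformation, so only the wiring and the channel growth are meaningful.

```python
def dense_block(x0, layers):
    """Dense connectivity: layer l receives the concatenation [x_0, x_1, ..., x_{l-1}].
    Feature maps are lists of channels (one number per channel, for brevity)."""
    features = [x0]
    for H in layers:
        concat = [ch for f in features for ch in f]  # concatenate all earlier maps
        features.append(H(concat))
    return [ch for f in features for ch in f]  # block output: all maps concatenated

def make_layer(growth_rate):
    """Hypothetical layer: emits `growth_rate` channels, each the mean of its inputs."""
    def H(channels):
        m = sum(channels) / len(channels)
        return [m] * growth_rate
    return H

out = dense_block([1.0, 2.0, 3.0, 6.0], [make_layer(2) for _ in range(3)])
print(len(out))  # 4 + 3 * 2 = 10 channels
```

With $k_0$ input channels and $L$ layers that each add $k$ channels (the growth rate), the block outputs $k_0 + Lk$ channels, here $4 + 3 \times 2 = 10$.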

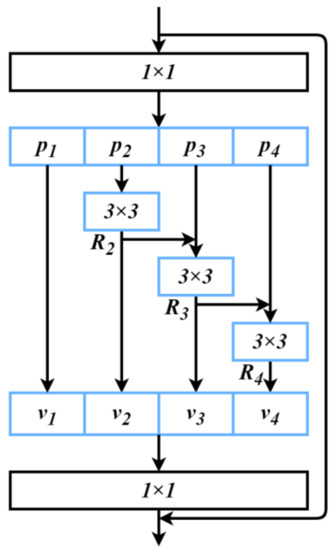

2.3. Res2Net

Res2Net is an improved residual network architecture, as shown in Figure 4. It introduces hierarchical residual connections and filter groups to extract features [24]. The filter groups increase both the capacity and the flexibility of the network and improve the efficiency of feature extraction. The hierarchical residual connections link features from different feature groups in a residual manner, promoting information flow and propagation [24]. This connectivity scheme captures information at different scales and integrates features better, which improves the expressive ability of the network.

Figure 4.

Architecture of Res2Net.

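After the $1\times1$ convolution, the feature map is evenly divided into $Q$ feature scales, denoted as $x_i$, where $i \in \{1, 2, \ldots, Q\}$. Except for $x_1$, which is kept as it is, each $x_i$ passes through a $3\times3$ convolution, denoted as $K_i(\cdot)$, and $y_i$ represents the output of $K_i(\cdot)$. For $i > 2$, $x_i$ is added to $y_{i-1}$, and the sum is used as the input of $K_i(\cdot)$. Therefore, $y_i$ can be expressed as Formula (3):

$$y_i = \begin{cases} x_i, & i = 1 \\ K_i(x_i), & i = 2 \\ K_i(x_i + y_{i-1}), & 2 < i \le Q \end{cases} \tag{3}$$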
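To illustrate how these hierarchical connections enlarge the receptive field, the sketch below counts the receptive field of each output subset under two assumptions: stride-1 $3\times3$ convolutions, and the split rule described above (the first subset passes through unchanged, the second is convolved once, and each later subset is convolved after adding the previous output). It is a counting aid, not an implementation of Res2Net.

```python
def res2net_receptive_fields(num_scales, kernel=3):
    """Receptive field (side length, stride-1 convolutions) of each output subset y_i.

    y_1 = x_1                 -> no convolution
    y_2 = K_2(x_2)            -> one kernel x kernel convolution
    y_i = K_i(x_i + y_{i-1})  -> one more convolution stacked on y_{i-1}
    """
    fields = []
    for i in range(1, num_scales + 1):
        if i == 1:
            fields.append(1)
        elif i == 2:
            fields.append(1 + (kernel - 1))
        else:
            # the sum x_i + y_{i-1} inherits the larger field, i.e. that of y_{i-1}
            fields.append(fields[-1] + (kernel - 1))
    return fields

print(res2net_receptive_fields(4))  # [1, 3, 5, 7]
```

Each additional subset therefore sees a larger neighborhood, so a single block mixes several receptive-field sizes.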

3. The Proposed MMSN

In this section, the proposed MMSN method and the details of each module will be presented.

Inspired by 3DCSN [19] and Res2Net [24], the MMSN is proposed for HSI classification with few-shot training samples. The MMSN is based on the Siamese network framework, and each subnetwork branch mainly consists of a dilation-cosine attention module (DCAM), a residual-dense hybrid multipath (RDHM) module and a multikernel depth feature extraction (MDFE) module, as illustrated in the flowchart in Figure 5.

Figure 5.

Flowchart of the proposed MMSN. Each of the two identical Siamese subnetworks consists of a DCAM module, an RDHM module and an MDFE module.

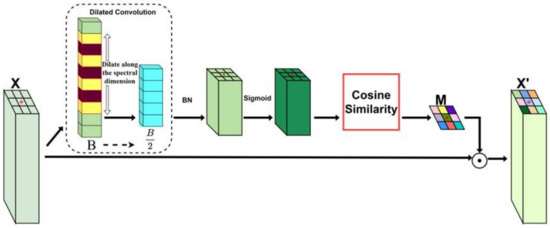

3.1. Dilation-Cosine Attention Module (DCAM)

The DCAM expands the receptive field of the spectral dimension through dilated convolution and uses cosine similarity to quantify the importance of different pixels in the spatial dimension, thereby comprehensively measuring the spatial-spectral weight of each patch. The diagram of the DCAM is shown in Figure 6. The input patch $X$ is first passed through a dilated convolution to obtain a feature map with reduced channels. The dilated convolution expands the receptive field along the spectral dimension, effectively preserving spectral information while reducing computational complexity by halving the spectral dimension. Then, the sigmoid activation function restricts the values to (0, 1). Next, the cosine similarity between the central pixel and each neighboring pixel is computed to obtain the spatial weight mask $M$. Finally, the weight mask is multiplied elementwise with the original input to obtain the weighted output $\hat{X}$, as in Formula (4):

$$\hat{X} = M \odot X \tag{4}$$

where $\odot$ represents the elementwise multiplication operation.

Figure 6.

Diagram of the DCAM. The input patch is $X$, and the weighted output is $\hat{X}$. B represents the number of channels.

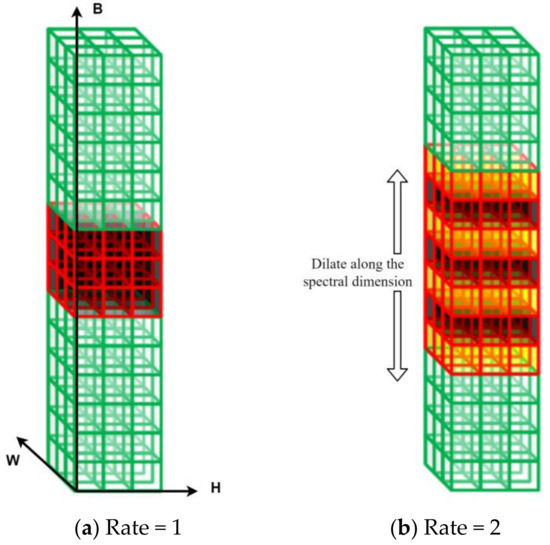

Because HSIs have high spectral dimensionality, dilated convolution is used to expand the receptive field along the spectral dimension and thus extract spectral information effectively [27]. The dilated convolution process is illustrated in Figure 7a, where the black cuboid represents a convolutional kernel and the red lines represent the covered receptive field. When the dilation rate along the spectral dimension is 1 (Rate = 1, where Rate denotes the dilation rate along the spectral dimension), the kernel is the same as a regular 3D convolutional kernel. In Figure 7b, when the dilation rate along the spectral dimension is 2 (Rate = 2), the receptive field along the spectral dimension expands; in general, a kernel spanning $k$ bands with a dilation rate of 2 covers $2k - 1$ bands. The yellow part indicates the dilated region.

Figure 7.

Illustration of the dilated convolution process. (a) Dilated convolution with dilation rate 1, (b) Dilated convolution with dilation rate 2. The red lines represent the covered receptive field, the black cuboid represents the original convolution kernel, and the yellow part represents the dilated region. B represents the channel dimension, W represents the width dimension, and H represents the height dimension.
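The effect of the dilation rate can be checked with a 1D sketch along the spectral axis. The kernel length of 3 and the toy 10-band spectrum are assumptions for illustration (the spectral kernel size used in the DCAM is not recoverable here); the sketch uses stride 1 and no padding.

```python
def receptive_field(k, rate):
    """Number of bands covered by a k-tap kernel with the given dilation rate."""
    return (k - 1) * rate + 1

def dilated_conv1d(signal, kernel, rate):
    """'Valid' 1D dilated cross-correlation along the spectral axis.
    Tap t of the kernel reads signal[i + t * rate]."""
    k = len(kernel)
    span = receptive_field(k, rate)
    return [
        sum(kernel[t] * signal[i + t * rate] for t in range(k))
        for i in range(len(signal) - span + 1)
    ]

spectrum = [float(b) for b in range(10)]  # toy 10-band spectrum: 0, 1, ..., 9
print(receptive_field(3, 1), receptive_field(3, 2))   # 3 5
print(dilated_conv1d(spectrum, [1.0, 1.0, 1.0], 1))   # [3.0, 6.0, 9.0, 12.0, 15.0, 18.0, 21.0, 24.0]
print(dilated_conv1d(spectrum, [1.0, 1.0, 1.0], 2))   # [6.0, 9.0, 12.0, 15.0, 18.0, 21.0]
```

A kernel with $k$ taps and dilation rate $r$ covers $(k-1)r + 1$ bands, so $r = 2$ enlarges the spectral receptive field without adding parameters.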

The cosine similarity measure is used to quantify the importance of different pixels in a patch. It is simple to compute and is less affected by noise and outliers. The cosine weight mask $M$ of the input patch is obtained through Formula (5):

$$M_{i,j} = \frac{F_{i,j} \cdot F_c}{\lVert F_{i,j} \rVert_2 \, \lVert F_c \rVert_2} \tag{5}$$

where $F_{i,j}$ represents the spectral values of the pixel in row $i$ and column $j$ of $F$, and $F_c$ represents the center pixel of $F$. $M_{i,j}$ is the attention weight of the pixel in row $i$ and column $j$ of $M$, $\lVert \cdot \rVert_2$ is the two-norm operator, and $F$ is defined in Formula (6):

$$F = \sigma\big(\mathrm{DConv}(X)\big) \tag{6}$$

In Formula (6), $\mathrm{DConv}(\cdot)$ represents a dilated convolution with a dilation rate of 2 in the spectral dimension, and $\sigma(\cdot)$ is the sigmoid activation function.
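The computation in Formulas (4)–(6) can be sketched in pure Python as follows. Two simplifications are assumptions of this sketch, not details from the paper: the dilated convolution in Formula (6) is replaced by the identity, so the features are just the sigmoid of the input, and the spatial mask is applied by scaling every band of a pixel by that pixel's weight.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def dcam_weights(features):
    """Cosine weight of every pixel with respect to the center pixel.
    `features` is an H x W grid of spectral vectors (sigmoid outputs, never all zero)."""
    h, w = len(features), len(features[0])
    center = features[h // 2][w // 2]
    return [[cosine(features[i][j], center) for j in range(w)] for i in range(h)]

def apply_mask(x, mask):
    """Elementwise weighting; each pixel's weight is repeated over all of its bands."""
    return [[[mask[i][j] * v for v in x[i][j]] for j in range(len(x[0]))]
            for i in range(len(x))]

# Toy 3x3 patch with 2 bands. ASSUMPTION: the dilated convolution is replaced by
# the identity, so the features are simply sigmoid(X).
X = [[[2.0, -2.0], [1.0, 1.0], [1.0, 1.0]],
     [[1.0, 1.0], [1.0, 1.0], [-2.0, 2.0]],
     [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]]
F = [[[sigmoid(v) for v in px] for px in row] for row in X]
M = dcam_weights(F)
X_hat = apply_mask(X, M)
print([[round(m, 3) for m in row] for row in M])
```

Because the sigmoid outputs are positive, every cosine weight lies in (0, 1]; pixels whose feature vectors point in the same direction as the center pixel receive weight 1 and are passed through unchanged.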

The DCAM realizes the comprehensive measurement of the spatial-spectral patch weights by performing dilated convolution on the spectral dimension and utilizing the cosine similarities of the spatial pixels.

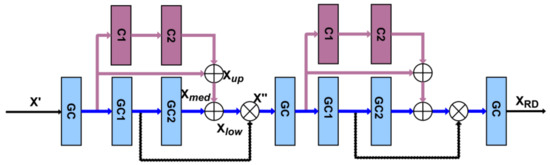

3.2. Residual-Dense Hybrid Multipath (RDHM)

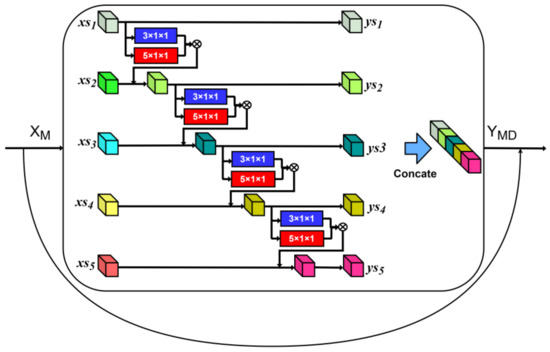

To extract comprehensive information from the input image, a residual-dense hybrid multipath (RDHM) module is constructed, as shown in Figure 8. This module integrates three paths of features, including grouped convolution-based local residual features, global residual features and global dense features. It preserves the original features while continuously exploring new features, effectively propagating and utilizing the features from different levels. By combining the local and global features, the expressive power of the comprehensive features is enhanced.

Figure 8.

Diagram of the RDHM module. The upper purple branch is the global residual branch, the lower black skip branch is the global dense branch, and the intermediate blue branch is the local residual branch.

The input of the RDHM is the weighted output of the DCAM. The three GC steps are grouped convolutions that perform dimensional transformation so that the overall feature dimensionality remains consistent. GC1 and GC2 in the intermediate blue branch are grouped convolutions that extract local residual features. C1 and C2 in the upper purple branch are regular convolutions that extract global residual features. The lower black skip branch directly uses hierarchical skip connections to extract global dense features. The local residual branch, built from parallel grouped convolutions, produces the local residual output; the global residual branch produces the global residual output; and the global dense branch produces the global dense output. These operations are represented mathematically in Formulas (7)–(10):

The RDHM consists of two cascaded residual-dense hybrid modules, which allows the complex features contained in the spectral data to be explored more thoroughly. Encapsulating Formulas (7)–(10) as a single module operation, the output of the RDHM can be expressed as Formulas (11) and (12):

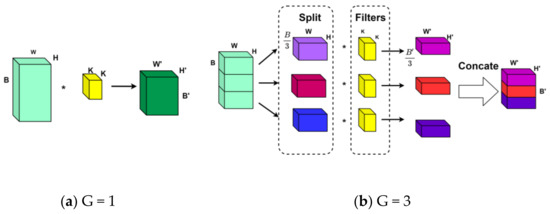

Of the three branches, the local residual branch adopts grouped convolution. Grouped convolution first divides the $B$ spectral bands into $G$ band scales of equal width ($B/G$ bands each), where $G$ represents the number of groups. As shown in Figure 9, when $G = 1$, the process is the same as ordinary convolution; when $G = 3$, the spectral bands are divided into three groups. After grouping, each band scale is convolved separately to generate feature maps. Because each filter only sees the bands of its own group, grouped convolution reduces the number of parameters and the computational cost by a factor of $G$ compared with ordinary convolution with the same input and output sizes, and the groups can be processed as equivalent parallel branches.

Figure 9.

Illustration of grouped convolution. (a) Grouped convolution with grouped number 1, (b) Grouped convolution with grouped number 3. K represents the size of the convolutional kernel, and “*” represents the convolution operation.
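To illustrate Figure 9, the following pure-Python sketch applies a grouped convolution to a toy input in which each channel (band) is a short 1D signal. The channel layout, the kernel shapes and the helper names `grouped_conv` and `num_params` are illustrative assumptions; the MMSN itself uses 3D grouped convolutions whose exact configuration is not given here.

```python
def conv1d_same(signal, kernel):
    """Zero-padded 'same' 1D cross-correlation (odd kernel length)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [sum(kernel[t] * padded[i + t] for t in range(len(kernel)))
            for i in range(len(signal))]

def grouped_conv(x, weights, groups):
    """x: C_in channels (lists of equal length); weights: C_out filters,
    each holding C_in // groups kernels. Output channel o sees only its own group."""
    c_in, c_out = len(x), len(weights)
    assert c_in % groups == 0 and c_out % groups == 0
    in_per_g, out_per_g = c_in // groups, c_out // groups
    assert all(len(f) == in_per_g for f in weights)
    out = []
    for o, filt in enumerate(weights):
        g = o // out_per_g                              # group of this output channel
        chans = x[g * in_per_g:(g + 1) * in_per_g]      # input channels of that group
        acc = [0.0] * len(x[0])
        for ch, ker in zip(chans, filt):
            acc = [a + b for a, b in zip(acc, conv1d_same(ch, ker))]
        out.append(acc)
    return out

def num_params(c_in, c_out, k, groups):
    """Weight count (no bias) of a grouped convolution with kernel length k."""
    return (c_in // groups) * c_out * k

print(num_params(30, 30, 3, 1), num_params(30, 30, 3, 3))  # 2700 900
```

With $G$ groups, each filter sees only $1/G$ of the input channels, so the parameter count drops by a factor of $G$ (2700 versus 900 weights for 30 input and 30 output channels, kernel length 3 and $G = 3$). With $G = 1$ the function reduces to ordinary convolution, where every output channel mixes all input channels.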

3.3. Multikernel Depth Feature Extraction (MDFE) Module

Inspired by the Res2Net model [24], a multikernel depth feature extraction (MDFE) module is proposed. MDFE constructs layered residual-like connections within a single residual block to extract fine-grained features. It utilizes multiscale convolutions with multikernels to increase the receptive field of each network layer. This approach not only retains and utilizes the semantic information of the input image but also enhances the ability of the network to capture details.

First, the feature map is evenly divided into five feature scales along the spectral dimension. Two parallel grouped convolutions with kernel sizes of $3\times1\times1$ and $5\times1\times1$ form a multiscale convolution with multiple kernels. The features of scale 1 are retained unchanged as the fine-grained output of scale 1. The output of scale 1 is passed through the multiscale convolution and then added to the features of scale 2 to generate the fine-grained output of scale 2; this combination forms a cross-scale connection. Similarly, the output of scale 2 is passed through the multiscale convolution and added to the features of scale 3, and the process continues until fine-grained features have been obtained for all five scales. Finally, these fine-grained features are concatenated along the spectral dimension to form the final semantic feature. The entire process is illustrated in Figure 10 and can be expressed mathematically as Formulas (13) and (14):

Figure 10.

Diagram of the MDFE module, showing the input feature map and the output semantic feature; rectangular boxes represent the multiple kernels.

In the above formulas, $x_g$ represents the feature scales, where $g \in \{1, 2, 3, 4, 5\}$; $K_3(\cdot)$ and $K_5(\cdot)$ represent the convolution operations with kernel sizes of $3\times1\times1$ and $5\times1\times1$, respectively; and $y_g$ represents the output of the $g$-th layer.
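The hierarchical multiscale procedure described above can be sketched on a single pixel's spectral vector, because $3\times1\times1$ and $5\times1\times1$ kernels act only along the spectral axis. The sketch assumes a single channel, zero padding, and that the outputs of the two parallel kernels are summed; the actual fusion and the grouped, multichannel convolutions of the MMSN are not specified here, and the function name `mdfe` is hypothetical.

```python
def conv1d_same(signal, kernel):
    """Zero-padded 'same' 1D cross-correlation along the spectral axis (odd kernel length)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [sum(kernel[t] * padded[i + t] for t in range(len(kernel)))
            for i in range(len(signal))]

def mdfe(spectrum, k3, k5, num_scales=5):
    """Hierarchical multiscale sketch of the MDFE on one pixel's spectral vector.

    ASSUMPTION: the outputs of the parallel 3x1x1 and 5x1x1 convolutions are summed.
    """
    assert len(k3) == 3 and len(k5) == 5
    assert len(spectrum) % num_scales == 0, "bands must split evenly into scales"
    width = len(spectrum) // num_scales
    scales = [spectrum[g * width:(g + 1) * width] for g in range(num_scales)]
    outputs = [scales[0]]  # scale 1 is kept as-is
    for g in range(1, num_scales):
        prev = outputs[-1]
        multi = [a + b for a, b in zip(conv1d_same(prev, k3), conv1d_same(prev, k5))]
        outputs.append([m + x for m, x in zip(multi, scales[g])])  # cross-scale connection
    return [v for out in outputs for v in out]  # concatenate along the spectral axis

print(mdfe([1.0] * 10, [0.0, 1.0, 0.0], [0.0] * 5))
# [1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 4.0, 4.0, 5.0, 5.0]
```

With an identity-like $3\times1\times1$ kernel, each scale accumulates the previous output, which shows how later scales see features that have passed through more convolutions. With all-zero kernels, the module returns its input unchanged.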

3.4. Siamese Network Framework

For classification with few-shot training samples, the Siamese network framework with low dependence on sample information is adopted by the proposed MMSN. It consists of two identical subnetworks. Each subnetwork independently processes the input data, extracts the feature representations and uses metric learning strategies to distinguish the similarities among the inputs. The two subnetworks share parameters to ensure the consistency of the predictions.

The HSI dataset has $N$ labeled 3D patches, each composed of a center pixel to be classified and its neighboring pixels, denoted as $\{x_i\}_{i=1}^{N}$; the label of each patch is $y_i \in \{1, 2, \ldots, C\}$, where $C$ is the total number of HSI classes. The input data of the MMSN consist of sample pairs built from the few-shot training samples, and constructing pairs effectively augments the training data, since $n$ labeled samples yield $n(n-1)/2$ distinct pairs. Assuming that $[x_i, x_j]$ is a randomly selected sample pair, its label can be represented as Formula (15):
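Constructing pairs from the labeled samples can be sketched as follows. The pair-label convention (1 for a same-class pair, 0 otherwise) is an assumption, since the exact form of Formula (15) is not recoverable here; the class and sample counts mirror the three-samples-per-class setting of Section 4 for a nine-class dataset such as PU.

```python
from itertools import combinations

def make_pairs(labels):
    """All unordered index pairs (i, j), i < j, with a binary pair label.
    ASSUMPTION: pair label 1 = same class, 0 = different class."""
    return [((i, j), 1 if labels[i] == labels[j] else 0)
            for i, j in combinations(range(len(labels)), 2)]

# 9 classes x 3 labeled samples per class
labels = [c for c in range(9) for _ in range(3)]
pairs = make_pairs(labels)
print(len(labels), len(pairs), sum(y for _, y in pairs))  # 27 351 27
```

Only 27 of the 351 pairs are same-class pairs, so the pair set is strongly imbalanced; whether and how the MMSN balances positive and negative pairs is not stated in this section.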

During contrastive learning, the two subnetworks share weights. The samples $[x_i, x_j]$ are first fed to the DCAM to calculate the importance of the different pixels in each patch, and the weighted outputs are then fed into the subsequent layers of the branch. The parameters $\theta$ of the contrastive learning stage are updated using Formulas (16)–(18):

where $\mathcal{L}_{con}$ represents the contrastive loss function and $f_\theta(\cdot)$ represents the entire subnetwork operation. Minimizing the contrastive loss $\mathcal{L}_{con}$ maps the input samples into a space with smaller intraclass distances and larger interclass distances.

The margin $m$ is a constant, typically set to 1.25, and $d$ represents the Euclidean distance between the two feature vectors. The positive margin maintains a lower bound on the distance between paired inputs with different labels.
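Because the exact Formulas (16)–(18) are not recoverable here, the sketch below uses the classic margin-based contrastive loss (Hadsell, Chopra and LeCun) as an assumed stand-in, with the margin of 1.25 mentioned above and an assumed pair-label convention of 1 for a same-class pair. It is not necessarily the exact loss used by the MMSN.

```python
import math

def euclidean(a, b):
    """Euclidean distance d between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def contrastive_loss(f1, f2, same, margin=1.25):
    """Margin-based contrastive loss for one pair.
    same=1: pull the pair together (loss d^2);
    same=0: push the pair apart until d >= margin (loss max(0, margin - d)^2)."""
    d = euclidean(f1, f2)
    return same * d ** 2 + (1 - same) * max(0.0, margin - d) ** 2

print(contrastive_loss([0.0, 0.0], [0.5, 0.0], same=1))   # 0.25
print(contrastive_loss([0.0, 0.0], [0.25, 0.0], same=0))  # 1.0
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], same=0))   # 0.0 (already beyond the margin)
```

Same-class pairs are penalized by their squared distance, while different-class pairs contribute only when they are closer than the margin, which is the lower-bound role of the margin described above.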

Furthermore, an average pooling operation is used to extract feature vectors from the features. Finally, a fully connected layer generates predictions over the $C$ classes, and the predicted label is the one with the highest probability. During the classification stage, the network is trained with the cross-entropy loss $\mathcal{L}_{ce}$, and its parameters are updated according to Formulas (19) and (20):

where $y_i$ represents the true label of the $i$-th training sample, $\hat{y}_i$ represents the predicted label of the $i$-th training sample, and $x_j$ and $y_j$ represent the $j$-th training sample and its label, respectively.
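The classification stage described above (fully connected outputs, highest-probability prediction and a cross-entropy loss) can be sketched for a single sample. The logits are hypothetical values, and the numerically stable softmax is a standard implementation choice, not a detail taken from the paper.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(logits, true_class):
    """Negative log-probability of the true class."""
    return -math.log(softmax(logits)[true_class])

def predict(logits):
    """Predicted label = index of the class with the highest probability."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)

logits = [2.0, 0.5, -1.0]  # hypothetical fully connected outputs for C = 3 classes
print(predict(logits), round(cross_entropy(logits, 0), 4))
```

Over a training set, the loss is typically averaged over samples; minimizing it raises the probability assigned to each sample's true class.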

4. Experiments and Results

In the experiments, three real HSI datasets are used to verify the classification performance of the proposed approach. All datasets and the corresponding ground truths can be downloaded from http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 1 May 2023). For each dataset, three training samples per class are randomly selected; each experiment is repeated 10 times, and the average value is reported. The proposed MMSN uses a sliding window of size to generate a series of data patches. During the contrastive learning process, the learning rate is set to and the weight decay parameter is set to 0. During the classification process, the learning rate is set to and the weight decay is set to . All experiments were conducted on a PC with 80 GB of memory and an RTX 3080 GPU and implemented in Python.

4.1. Datasets

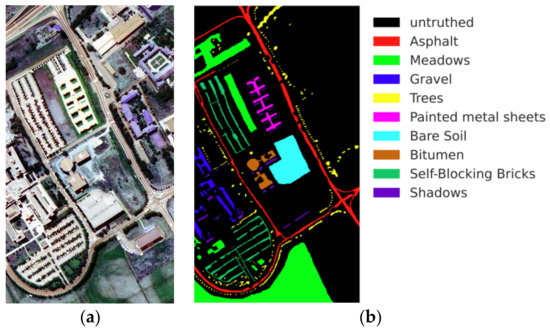

Pavia University (PU) dataset: This dataset was acquired by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor over an urban scene at Pavia University in 2003, with spectral bands in the range of 430 to 860 nm. The dataset has 103 spectral bands with pixels. It includes nine land cover classes; the names and numbers of samples of the classes are listed in Table 1. A pseudocolor image and the corresponding ground truth image of PU are shown in Figure 11.

Table 1.

Number of samples in each class of the PU dataset.

Figure 11.

A pseudocolor image and ground truth image of PU. (a) The pseudocolor image. (b) The ground truth image.

Indian Pines (IP) dataset: The IP dataset, one of the earliest HSI benchmark datasets, was collected by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines test site in northwestern Indiana in 1992. The data have 220 bands; 20 noisy and water absorption bands (, and ) are excluded, leaving 200 bands for the experiments. The dataset has images with pixels. It includes 16 land cover classes, detailed in Table 2. A pseudocolor image and the corresponding ground truth image of IP are shown in Figure 12.

Table 2.

Number of samples in each class of the IP dataset.

Figure 12.

A pseudocolor image and its corresponding ground truth image of the IP dataset. (a) The pseudocolor image. (b) The ground truth image.

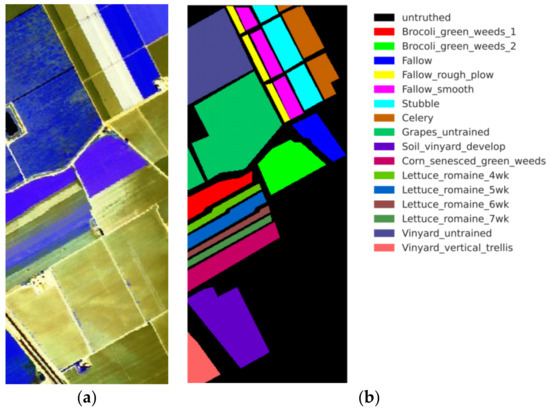

Salinas Valley (SA) dataset: This dataset was captured by the AVIRIS sensor over Salinas Valley, California, USA, in 1998. The data have a spatial resolution of 3.7 m and include 204 spectral bands after excluding 20 water absorption bands. The dataset has images with pixels. There are 16 land cover classes in the scene, listed in Table 3. A pseudocolor image and the corresponding ground truth image of SA are shown in Figure 13.

Table 3.

Number of samples in each class of the SA dataset.

Figure 13.

A pseudocolor image and its corresponding ground truth image of the SA dataset. (a) The pseudocolor image. (b) The ground truth image.

4.2. Evaluation Metrics

We evaluate the classification performance of the tested methods using three commonly used metrics: overall accuracy (OA) [28], average accuracy (AA) [29] and the kappa coefficient (K) [28]. The OA is the ratio of correctly labeled samples to the total number of samples, the AA is the mean of the per-class accuracies, and the kappa coefficient measures the agreement between the predicted labels and the true labels after correcting for agreement expected by chance.
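The three metrics can all be computed from a confusion matrix. The sketch below uses the standard definitions: OA is the trace divided by the number of samples, AA is the mean per-class accuracy (recall), and Cohen's kappa is $(\mathrm{OA} - p_e)/(1 - p_e)$, where $p_e$ is the chance agreement computed from the row and column totals. The example labels are made up.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Return (OA, AA, kappa) computed from integer class labels."""
    cm = [[0] * num_classes for _ in range(num_classes)]  # cm[true][pred]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    n = len(y_true)
    oa = sum(cm[c][c] for c in range(num_classes)) / n
    # per-class accuracy (recall), skipping classes absent from y_true
    per_class = [cm[c][c] / sum(cm[c]) for c in range(num_classes) if sum(cm[c]) > 0]
    aa = sum(per_class) / len(per_class)
    # chance agreement from true (row) and predicted (column) marginals
    pe = sum(sum(cm[c]) * sum(cm[r][c] for r in range(num_classes))
             for c in range(num_classes)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa

y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 0]
print(classification_metrics(y_true, y_pred, 3))  # OA 0.75, AA 0.75, kappa 0.6 (up to rounding)
```

Kappa is undefined when $p_e = 1$, which happens only in degenerate cases where all true and predicted labels fall into a single class.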

4.3. Method Comparison

To further verify the effectiveness of the proposed MMSN, representative state-of-the-art classification methods are selected for comparison, including SSRN [12], FDSSC [13], DFFN [14], BASSNet [15], SPRN [16], 3DCSN [19], Sia-3DCNN and S3Net [20]. Table 4, Table 5 and Table 6 report the classification accuracies obtained on the PU, IP and SA datasets, respectively. On all three datasets, the proposed MMSN outperforms the other methods in terms of OA, AA and kappa. On the PU dataset, the OA of the MMSN reaches 80.73%, which is 5.49 percentage points higher than that of S3Net (75.24%), the best-performing comparison method, and 27.89 percentage points higher than that of DFFN (52.84%). On the IP dataset, the OAs of the MMSN, SPRN (the best-performing comparison method) and DFFN are 72.82%, 69.27% and 41.04%, respectively; that is, the MMSN is 3.55 percentage points higher than SPRN and 31.78 percentage points higher than DFFN. On the SA dataset, the OA of the MMSN reaches 90.81%, which is 1.97 percentage points higher than that of S3Net (88.84%), the best-performing comparison method, and 15.15 percentage points higher than that of BASSNet (75.66%).

Table 4.

Classification accuracies of different methods on the PU dataset (3 training samples per class). The best results are shown in bold.

Table 5.

Classification accuracies of different methods on the IP dataset (3 training samples per class). The best results are shown in bold.

Table 6.

Classification accuracies of different methods on the SA dataset (3 training samples per class). The best results are shown in bold.

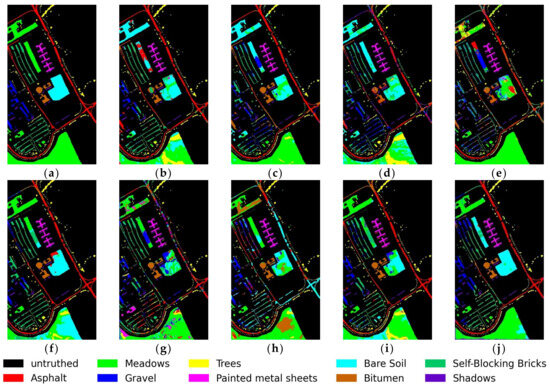

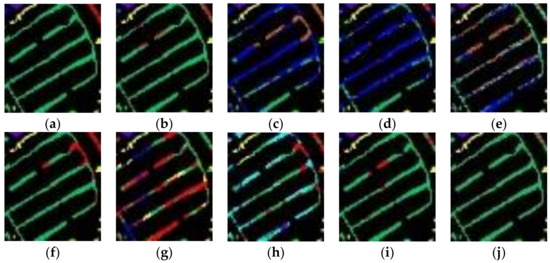

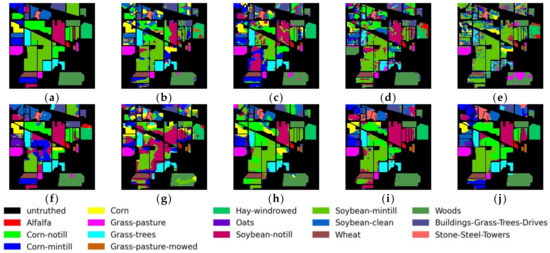

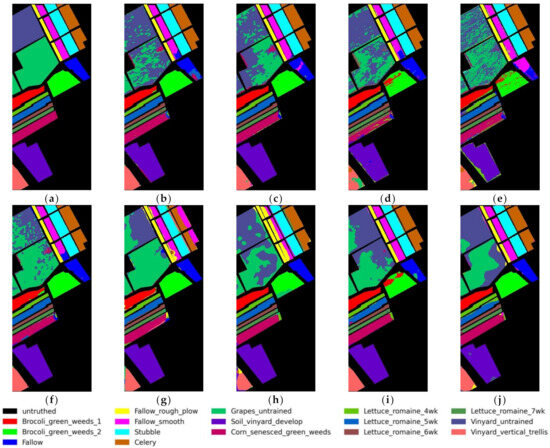

Figure 14, Figure 15, Figure 16 and Figure 17 show the corresponding classification maps. The maps produced by the comparison methods contain more salt-and-pepper noise, whereas the map produced by the proposed MMSN contains the least, which is consistent with its superior classification performance.

Figure 14.

Classification maps produced for the PU dataset: (a) Ground truth, (b) SSRN, (c) FDSSC, (d) DFFN, (e) BASSNet, (f) SPRN, (g) Sia-3DCNN, (h) 3DCSN, (i) S3Net, (j) Proposed MMSN.

Figure 15.

Partial enlarged classification maps obtained for the PU dataset: (a) Ground truth, (b) SSRN, (c) FDSSC, (d) DFFN, (e) BASSNet, (f) SPRN, (g) Sia-3DCNN, (h) 3DCSN, (i) S3Net, (j) Proposed MMSN.

Figure 16.

Classification maps produced for the IP dataset: (a) Ground truth, (b) SSRN, (c) FDSSC, (d) DFFN, (e) BASSNet, (f) SPRN, (g) Sia-3DCNN, (h) 3DCSN, (i) S3Net, (j) Proposed MMSN.

Figure 17.

Classification maps produced for the SA dataset: (a) Ground truth, (b) SSRN, (c) FDSSC, (d) DFFN, (e) BASSNet, (f) SPRN, (g) Sia-3DCNN, (h) 3DCSN, (i) S3Net, (j) Proposed MMSN.

Additionally, Table 4 shows that the proposed MMSN achieves the highest accuracy among all methods, 97.65%, for the “Self-Blocking Bricks” class of the PU dataset. Figure 15, which shows enlarged portions of the PU classification maps, reveals that this class (displayed in green) is distributed in narrow diagonal strips with many edges. The comparison methods misclassify more pixels along these edges, whereas the proposed MMSN classifies them satisfactorily.

The principal reasons for these results are as follows. First, to cope with few-shot training samples, the proposed MMSN adopts a Siamese network framework, which depends less on the amount of labeled sample information. Second, during feature extraction in each subnetwork, the DCAM comprehensively considers the spatial-spectral weights; the RDHM effectively propagates and utilizes features from different levels and enhances their expressive ability; and the MDFE performs multiscale convolutions with multikernels and hierarchical skip connections on the image patches to improve the ability of the network to capture details. Together, these components account for the strong classification performance of the MMSN.
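The DCAM is described in this paper as combining dilated convolution with cosine similarity to weight the spatial positions of a patch. As a loose, hypothetical illustration of the cosine-similarity idea alone (not the DCAM itself: the dilated convolution and all learned parameters are omitted), each pixel of a patch can be weighted by how similar its spectrum is to that of the center pixel, so that spectrally dissimilar neighbors, which may belong to other classes, contribute less. The function names and the clipping of negative similarities to zero are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two spectra (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def cosine_spatial_weights(patch):
    """patch[r][c] is the spectrum (list of band values) of one pixel.

    Returns one weight per pixel: the cosine similarity between that pixel's
    spectrum and the center pixel's spectrum, clipped at 0 (an assumption).
    """
    center = patch[len(patch) // 2][len(patch[0]) // 2]
    return [[max(0.0, cosine(px, center)) for px in row] for row in patch]


def apply_weights(patch, weights):
    """Scale every band of each pixel by that pixel's spatial weight."""
    return [
        [[band * w for band in px] for px, w in zip(row, w_row)]
        for row, w_row in zip(patch, weights)
    ]


# Toy 3 x 3 patch with 2 bands; the center pixel's spectrum is [1, 0].
patch = [
    [[1, 0], [0, 1], [1, 1]],
    [[2, 0], [1, 0], [0, 2]],
    [[1, 0], [-1, 0], [3, 3]],
]
weights = cosine_spatial_weights(patch)
print([[round(w, 3) for w in row] for row in weights])
# [[1.0, 0.0, 0.707], [1.0, 1.0, 0.0], [1.0, 0.0, 0.707]]
weighted = apply_weights(patch, weights)
```

Cosine similarity ignores spectral magnitude, so a pixel such as [2, 0] receives the same weight as the center pixel [1, 0]; this makes the weighting insensitive to brightness differences between pixels of the same material.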

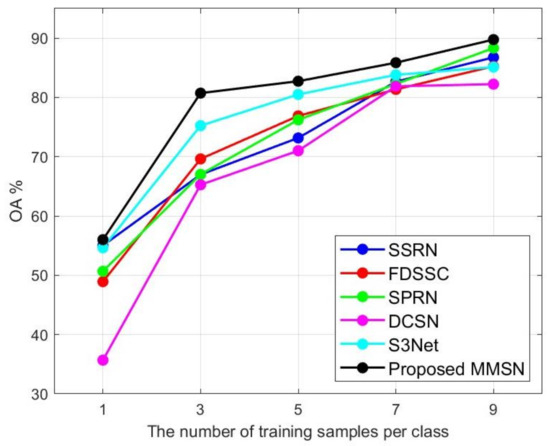

4.4. Effects of Different Number of Training Samples

This section discusses the effects of the number of training samples. Experiments are conducted on the PU dataset using 1, 3, 5, 7 and 9 labeled samples per class for training, and the proposed MMSN is compared with SSRN, FDSSC, SPRN, 3DCSN and S3Net, which were among the stronger methods in the preceding comparison. The OA results obtained with different numbers of training samples are shown in Figure 18. As the number of training samples increases, the OAs of all methods tend to rise because more sample information is available. The proposed MMSN achieves the highest OA under every number of training samples, indicating that its advantage holds across training-set sizes and is particularly valuable in the few-shot setting.
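The per-class protocol (1, 3, 5, 7 or 9 randomly drawn labeled samples per class) can be sketched as follows. This is an illustration under stated assumptions rather than the authors' split code: the helper `sample_k_per_class` is hypothetical, label 0 is treated as unlabeled background (the convention of the PU, IP and SA ground-truth maps), and all remaining labeled pixels are assumed to form the test set.

```python
import random
from collections import defaultdict


def sample_k_per_class(labels, k, seed=0):
    """Randomly choose up to k training pixels per class.

    labels: flat list of ground-truth class ids, with 0 meaning unlabeled
    background. Returns (train_indices, test_indices); every remaining
    labeled pixel is used for testing.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible draw
    by_class = defaultdict(list)
    for idx, c in enumerate(labels):
        if c != 0:
            by_class[c].append(idx)

    train = []
    for c in sorted(by_class):
        pool = by_class[c]
        train.extend(rng.sample(pool, min(k, len(pool))))

    chosen = set(train)
    test = [i for c in sorted(by_class) for i in by_class[c] if i not in chosen]
    return sorted(train), test


# Toy ground truth: class 1 has 4 pixels, class 2 has 3, class 3 has 5.
gt = [0, 1, 1, 1, 1, 2, 2, 2, 0, 3, 3, 3, 3, 3]
for k in (1, 3):
    train, test = sample_k_per_class(gt, k)
    print(k, len(train), len(test))  # "1 3 9", then "3 9 3"
```

Changing `seed` draws a different training set; this is the run-to-run variability that the Conclusions attribute to random sample selection.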

Figure 18.

OA results obtained with different numbers of training samples for the PU dataset.

5. Discussion

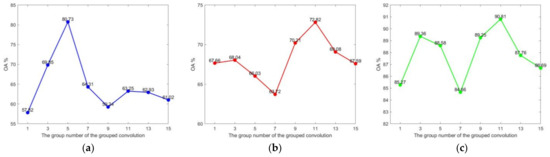

5.1. Parameter Discussion—Number of Groups in the Grouped Convolutions

To examine how the number of groups in the grouped convolutions affects classification performance, we conduct comparative experiments in which the number of groups is varied from 1 to 15 in steps of 2; the OA results are shown in Figure 19. When the number of groups is 1, grouped convolution is not used, and a plain convolution extracts features across all spectral bands at once. On the PU dataset, the OA reaches its maximum of 80.73% with 5 groups, which is 23.21% higher than that obtained without grouped convolution (1 group). On the IP dataset, the OA reaches its maximum of 72.82% with 11 groups, which is 5.16% higher than that obtained without grouped convolution. Similarly, on the SA dataset, the OA reaches its maximum of 90.81%, also with 11 groups, which is 5.54% higher than that obtained without grouped convolution. These results indicate that grouped convolution is effective for classification. However, grouped convolution does not always improve accuracy, because dividing the spectral bands into equal-width segments may break up continuous discriminative information along the spectral dimension. Choosing an appropriate number of groups allows the network to make better use of the available spectral information and thus improves classification performance.
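Grouped convolution splits the input channels into contiguous groups and filters each group separately. The pure-Python sketch below illustrates both points made above on a single pixel spectrum: no filter window crosses a group boundary, and the weight count of a convolution layer drops by a factor equal to the number of groups. All function names are hypothetical, and the way a band count that is not divisible by the number of groups is split is an illustrative choice, not the paper's.

```python
def band_groups(num_bands, groups):
    """Split band indices 0..num_bands-1 into `groups` contiguous segments.

    Widths are equal when num_bands is divisible by groups; otherwise the first
    (num_bands % groups) segments take one extra band (an illustrative choice).
    """
    base, extra = divmod(num_bands, groups)
    segments, start = [], 0
    for g in range(groups):
        width = base + (1 if g < extra else 0)
        segments.append(list(range(start, start + width)))
        start += width
    return segments


def grouped_spectral_conv(spectrum, kernels):
    """Filter each band segment with its own 1-D kernel ('valid' cross-correlation).

    len(kernels) sets the number of groups. No kernel window ever spans two
    segments, which is how grouping can cut a continuous spectral feature.
    """
    outputs = []
    for seg, kern in zip(band_groups(len(spectrum), len(kernels)), kernels):
        x = [spectrum[i] for i in seg]
        k = len(kern)
        outputs.append([sum(x[i + j] * kern[j] for j in range(k))
                        for i in range(len(x) - k + 1)])
    return outputs


def conv_weight_count(c_in, c_out, k, groups=1):
    """Weights (no bias) of a k x k convolution; each output channel sees c_in/groups inputs."""
    assert c_in % groups == 0 and c_out % groups == 0
    return k * k * (c_in // groups) * c_out


print(grouped_spectral_conv([1, 2, 3, 4, 5, 6], [[1, 1], [1, -1]]))  # [[3, 5], [-1, -1]]
print(conv_weight_count(64, 64, 3), conv_weight_count(64, 64, 3, groups=4))  # 36864 9216
```

With two groups, the six bands are filtered as [1, 2, 3] and [4, 5, 6]; an ungrouped filter of the same width would also have produced a window straddling the boundary between bands 3 and 4, which grouping deliberately discards.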

Figure 19.

OA results obtained with different numbers of groups: (a) PU, (b) IP and (c) SA.

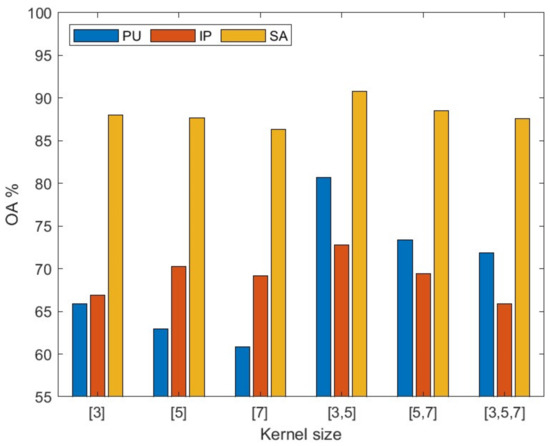

5.2. Parameter Discussion—Multiscale Convolution with Multikernel

To verify the impact of the multiscale convolution process with multikernels in the MDFE module, different single kernel sizes of as well as multiscale convolution with multikernels possessing different kernel size combinations of are compared. means that the kernel size is ; denotes the multiscale convolution process with multikernels constructed by two parallel grouped convolutions with kernel sizes of and ; and represents the multiscale convolution process with multikernels constructed by three parallel grouped convolutions with kernel sizes of , and . The OA results are shown in Figure 20. It can be observed that multiscale convolution with multikernels achieves higher OAs than single-kernel convolutions . This verifies the effectiveness of multiscale convolution with multikernels: smaller kernels better capture local details, whereas larger kernels better capture wider contextual information, and combining them lets the network use information from several scales. At the same time, the kernel size combination must balance local and global information, since a well-chosen combination integrates both types of features effectively and yields better classification results. According to Figure 20, the multiscale convolution with multikernels using the kernel size combination of achieves the highest OA on all three datasets. Therefore, multiscale convolution with multikernels of is adopted in the proposed MMSN.
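The idea of multiscale convolution with multikernels (parallel branches that differ only in kernel size) can be illustrated with a 1-D, pure-Python sketch. The kernel sizes 3 and 5, the fixed box filters standing in for learned weights, and the choice to keep branch outputs side by side (equivalent to concatenation along a channel axis) are all assumptions for illustration; they are not the kernel sizes, weights or fusion used in the MDFE.

```python
def conv1d_same(x, kernel):
    """1-D cross-correlation with zero padding, so len(output) == len(x).

    Only odd kernel sizes are supported, which keeps each output centered on
    its input position.
    """
    k = len(kernel)
    assert k % 2 == 1, "use an odd kernel size"
    pad = k // 2
    xp = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(xp[i + j] * kernel[j] for j in range(k)) for i in range(len(x))]


def multiscale_multikernel(x, kernel_sizes=(3, 5)):
    """Run one parallel branch per kernel size and return all branch outputs.

    Each branch uses a fixed box (averaging) filter as a stand-in for learned
    weights. Returning the list corresponds to concatenating the branches along
    a channel axis, so a following layer can combine every scale.
    """
    return [conv1d_same(x, [1.0 / k] * k) for k in kernel_sizes]


# A single spike: the size-3 branch spreads it over 3 positions, the size-5
# branch over 5, i.e. the larger kernel sees a wider context.
small, large = multiscale_multikernel([0, 0, 0, 3, 0, 0, 0])
print([round(v, 3) for v in small])  # [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
print([round(v, 3) for v in large])  # [0.0, 0.6, 0.6, 0.6, 0.6, 0.6, 0.0]
```

The small-kernel branch keeps the response sharply localized, while the large-kernel branch mixes in a wider neighborhood; a later layer that sees both can trade off local detail against context, which is the balance discussed above.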

Figure 20.

OA results obtained with different kernel sizes.

5.3. Ablation Experiment

To validate the effectiveness of the Siamese network framework and the DCAM, RDHM and MDFE modules, we conducted ablation experiments on the PU dataset. Table 7 shows that the OA drops by 14.12% when the Siamese network framework is not used, indicating that constructing sample pairs and learning the similarity relationships between them improves classification accuracy. When the RDHM is not used, the OA drops by 29.29%, demonstrating that fusing the three types of features (grouped convolution-based local residual features, global residual features and global dense features) enhances the expressive ability of the features. When the MDFE is not used, the accuracy drops by 12.81%, indicating that the MDFE is effective at mining deep semantic features and improving the ability of the network to capture details. Removing the DCAM reduces the accuracy by 15.76%, demonstrating the benefit of comprehensively considering the spatial-spectral weights within the patch. Together, these modules enhance the feature representation, detail capture and discriminative capabilities of the network, and combining them allows the proposed MMSN to achieve good classification performance.
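The ablation attributes part of the gain to training on sample pairs. The sketch below shows why pairing helps when labels are scarce by exhaustively pairing every labeled sample with every other one. Exhaustive pairing and the `build_pairs` helper are assumptions for illustration; the pairing scheme actually used by the MMSN is defined in its method description and may differ, for example by subsampling or balancing pairs.

```python
from itertools import combinations


def build_pairs(samples):
    """samples: list of (sample_id, class_label) tuples.

    Returns (id_a, id_b, same) for every unordered pair, where same = 1 for a
    positive pair (same class) and 0 for a negative pair (different classes).
    """
    return [(a, b, int(ca == cb)) for (a, ca), (b, cb) in combinations(samples, 2)]


# PU has 9 classes; with 3 training samples per class there are 27 samples.
samples = [(f"c{c}_s{i}", c) for c in range(9) for i in range(3)]
pairs = build_pairs(samples)
positives = sum(same for _, _, same in pairs)
print(len(pairs), positives, len(pairs) - positives)  # 351 27 324
```

Because the number of pairs grows quadratically, 27 labeled pixels already yield 351 training pairs. Negative pairs, however, outnumber positive ones twelve to one here, so some form of pair balancing is commonly applied in practice.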

Table 7.

Impacts of different modules on the PU dataset. Y means that the corresponding module is used; N means that the corresponding module is not used.

6. Conclusions

In this paper, we propose the MMSN for few-shot HSI classification. The MMSN employs a Siamese network framework, and in each subnetwork, the DCAM comprehensively considers the spatial-spectral weights; the RDHM effectively propagates and utilizes features from different levels and enhances their expressive ability; and the MDFE performs multiscale convolutions with multikernels and hierarchical skip connections on the image patches to improve the ability of the network to capture details.

The MMSN achieves excellent HSI classification performance with few-shot training samples. However, it selects its training samples at random, so the classification results can vary with the particular samples drawn. In future work, we plan to integrate the MMSN with semisupervised models to select representative training samples, thereby improving the stability of the classification results.

Author Contributions

Investigation, J.Y. and J.Q. (Jia Qin); Methodology, J.Y. and J.Q. (Jia Qin); Writing, J.Q. (Jia Qin); Editing, J.Y., J.Q. (Jinxi Qian) and A.L.; Valuable advice, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 62001434, 62071084).

Data Availability Statement

Publicly available datasets were analyzed in this study. They can be found at http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes (accessed on 1 May 2023).

Acknowledgments

The authors would like to thank the editors and the anonymous reviewers for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Awad, M.; Jomaa, I.; Arab, F. Improved Capability in Stone Pine Forest Mapping and Management in Lebanon Using Hyperspectral CHRIS-Proba Data Relative to Landsat ETM+. Photogramm. Eng. Remote Sens. 2014, 80, 725–731.

2. Liang, H.; Li, Q. Hyperspectral imagery classification using sparse representations of convolutional neural network features. Remote Sens. 2016, 8, 99.

3. Sun, W.; Yang, G.; Du, B.; Zhang, L.; Zhang, L. A sparse and low-rank near-isometric linear embedding method for feature extraction in hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4032–4046.

4. Marinelli, D.; Bovolo, F.; Bruzzone, L. A Novel Change Detection Method for Multitemporal Hyperspectral Images Based on Binary Hyperspectral Change Vectors. IEEE Trans. Geosci. Remote Sens. 2019, 57, 4913–4928.

5. Zhao, C.; Wang, Y.; Qi, B.; Wang, J. Global and local real-time anomaly detectors for hyperspectral remote sensing imagery. Remote Sens. 2015, 7, 3966–3985.

6. Awad, M. Sea water chlorophyll-a estimation using hyperspectral images and supervised Artificial Neural Network. Ecol. Inform. 2014, 24, 60–68.

7. Hu, H. Deep convolutional neural networks for hyperspectral image classification. J. Sens. 2015, 2015 Pt 3, 258619.

8. Yue, J.; Zhao, W.; Mao, S.; Liu, H. Spectral–spatial classification of hyperspectral images using deep convolutional neural networks. Remote Sens. Lett. 2015, 6, 468–477.

9. Yue, J.; Mao, S.; Li, M. A deep learning framework for hyperspectral image classification using spatial pyramid pooling. Remote Sens. Lett. 2016, 7, 875–884.

10. Audebert, N.; Le Saux, B.; Lefevre, S. Deep Learning for Classification of Hyperspectral Data: A Comparative Review. IEEE Geosci. Remote Sens. Mag. 2019, 7, 159–173.

11. Mei, S.; Ji, J.; Geng, Y. Unsupervised Spatial–Spectral Feature Learning by 3D Convolutional Autoencoder for Hyperspectral Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6808–6820.

12. Zhong, Z.; Li, J.; Luo, Z. Spectral-Spatial Residual Network for Hyperspectral Image Classification: A 3-D Deep Learning Framework. IEEE Trans. Geosci. Remote Sens. 2017, 56, 847–858.

13. Wang, W.; Dou, S.; Jiang, Z.; Sun, L. A Fast Dense Spectral–Spatial Convolution Network Framework for Hyperspectral Images Classification. Remote Sens. 2018, 10, 1068.

14. Song, W.; Li, S.; Fang, L. Hyperspectral image classification with deep feature fusion network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3173–3184.

15. Santara, A. BASS net: Band-adaptive spectral-spatial feature learning neural network for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5293–5301.

16. Zhang, X.; Shang, S.; Tang, X. Spectral Partitioning Residual Network With Spatial Attention Mechanism for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5507714.

17. Zhao, S.; Li, W.; Du, Q.; Ran, Q. Hyperspectral Classification Based on Siamese Neural Network Using Spectral-Spatial Feature. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2567–2570.

18. Gowthama, B.; Ajay Kumar Reddy, I.; Subba Reddy, T. Hyperspectral Image Analysis using Principal Component Analysis and Siamese Network. Turk. J. Comput. Math. Educ. 2021, 12, 1191–1198.

19. Cao, Z.; Li, X.; Jianfeng, J. 3D convolutional siamese network for few-shot hyperspectral classification. J. Appl. Remote Sens. 2020, 14, 048504.

20. Xue, Z.; Zhou, Y.; Du, P. S3Net: Spectral–Spatial Siamese Network for Few-Shot Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5531219.

21. Rao, M.; Tang, P.; Zhang, Z. A Developed Siamese CNN with 3D Adaptive Spatial-Spectral Pyramid Pooling for Hyperspectral Image Classification. Remote Sens. 2020, 12, 1964.

22. Wang, W.; Chen, Y.; He, X.; Li, Z. Soft Augmentation-Based Siamese CNN for Hyperspectral Image Classification with Limited Training Samples. IEEE Geosci. Remote Sens. Lett. 2022, 19, 5508505.

23. He, Z.; Shi, Q.; Liu, K. Object-Oriented Mangrove Species Classification Using Hyperspectral Data and 3-D Siamese Residual Network. IEEE Geosci. Remote Sens. Lett. 2020, 17, 2150–2154.

24. Gao, S.H.; Cheng, M.M.; Zhao, K. Res2Net: A New Multi-Scale Backbone Architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 652–662.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

26. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

27. Yu, F.; Koltun, V.; Funkhouser, T. Dilated residual networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 472–480.

28. Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

29. Richards, J.A. Classifier performance and map accuracy. Remote Sens. Environ. 1996, 57, 161–166.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).