Abstract

GLFNet is proposed for detecting and matching local features among remote-sensing images, using existing sparse feature points as guided points. Local feature matching is a crucial step in remote-sensing applications and 3D reconstruction. However, existing methods that detect feature points in each image separately and then match them may fail to establish correct matches between images with significant differences in lighting or perspective. To address this issue, the problem is reformulated as extracting corresponding features in the target image, given guided points from the source image as explicit guidance. The approach encourages the sharing of landmarks by searching the target image for regions whose features are similar to those of the guided points in the source image. For this purpose, GLFNet is developed as a feature extraction and search network. The main challenge lies in searching efficiently for accurate matches given the massive number of guided points. To tackle this problem, the search network is divided into a coarse-level match network based on a guided point transformer, which narrows the search space, and a fine-level regression network, which produces accurate matches. Experimental results on challenging datasets demonstrate that the proposed method provides robust matching and benefits various applications, including remote-sensing image registration, optical flow estimation, visual localization, and reconstruction registration. Overall, this approach offers a promising solution to local feature matching in remote-sensing applications.

1. Introduction

Pairwise image feature matching aims to identify the same or similar content in two or more images and to put it into pixel-level correspondence. It is a fundamental problem in remote sensing, with applications in remote-sensing image registration [1,2,3,4], change detection [5,6], and 3D reconstruction [7]. The most popular solution for image matching is to detect key points separately in the source and target images and then establish matches between them, an approach followed by both traditional algorithms [8,9,10,11,12] and deep-learning-based approaches [13,14,15,16]. Some applications further select a subset of matched points that share the same landmarks in space so that a global registration model can be derived. However, image matching often fails at this stage, particularly for images with substantial differences in lighting or perspective. Such failures can cause tracking loss in camera localization and incomplete results in 3D reconstruction, because landmarks detected separately in different images may not correspond to the same points in 3D space.

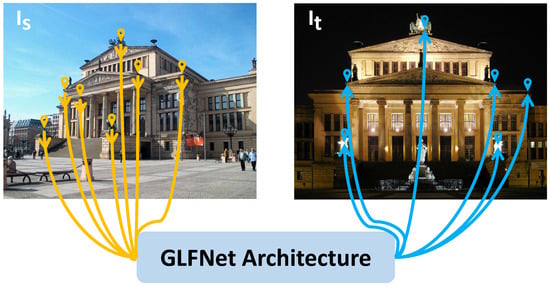

Some works address this challenge by designing detectors to produce more feature points [9,10,17,18], which increases the opportunity for sharing landmarks in both images. However, it also raises the chance of producing false-positive matches and requires additional matching networks [11,12,15] to improve matching accuracy. In scenarios such as 3D reconstruction and visual localization, incremental matching based on existing models is often required. Leveraging the existing feature points can significantly improve efficiency and accuracy in such cases. As shown in Figure 1, the problem is reformulated by additionally considering guided points from the source image as guidance to regress corresponding points in the target image. It is argued that such a formulation encourages corresponding points from the image pair to share landmarks.

Figure 1.

GLFNet is introduced to facilitate local feature matching between images, with guided points as the guidance. Diverging from the conventional approach of independently extracting and matching feature points from both images, the problem is reformulated by utilizing the guided points in the source image to guide the regression of exact coordinates for corresponding points in the target image. By adopting this novel perspective, the sharing of landmarks among matching pairs is fostered, thereby promoting more robust and accurate image matching.

Some existing methods could potentially be applied to this reformulated problem, but they suffer from certain drawbacks. One straightforward approach regresses the corresponding location in the target image for each feature point of the source image using COTR [16]. However, this method iteratively regresses over the entire image, so its runtime grows linearly with the number of guided points and quickly becomes prohibitive. Another, brute-force solution exhaustively checks pixel-wise correspondences in a 4D correlation tensor [14]. Although the 4D correlation tensor fuses features from two resolutions, the receptive field of the 4D convolution remains limited to the neighborhood of each match. Moreover, neither method can embed information from the guided points into the feature representation. To overcome these challenges, GLFNet is proposed to search for corresponding feature points in a coarse-to-fine manner based on the guided point transformer.

In detail, GLFNet consists of a feature extraction network and a search network. Each image is passed through the feature extraction network to produce coarse and fine feature maps. The search network is further split into a coarse-level match network and a fine-level regression network. The coarse-level match network embeds coarse image features into coarse patch features using the guided point transformer and then matches the patches and guided points by solving an optimal transport problem. Once the coarse matches are established, the fine-level regression network regresses the 2D coordinate of each guided point within its matched local patch based on the fine feature maps. Whereas [16] iteratively regresses the coordinate of each feature point over the entire image, the restricted local search space allows the regression network to run only once for all points, with higher accuracy and a 173-fold speedup. As a result, the coarse-to-fine search network efficiently estimates accurate coordinates in the target image corresponding to the guided points in the source image.

The proposed method benefits various applications by introducing guided points. It outperforms the state of the art in remote-sensing image registration, optical flow estimation, and visual localization. When GLFNet is used as the image-matching module, the standard 3D reconstruction pipeline successfully incorporates more images into the camera graph. With guided points, GLFNet also achieves significantly higher accuracy than alternative methods on an image-matching benchmark [19].

Overall, the core contributions of the paper are:

- The image-matching problem is reformulated by considering guided points as input.

- GLFNet is designed with a coarse-to-fine search network to detect and match corresponding points efficiently and accurately. In addition, the guided point transformer is proposed to incorporate guided-point information into the feature representation.

- GLFNet significantly improves performance on the standard image-matching task and benefits various applications.

2. Related Work

2.1. Detector-Based Local Feature Matching

The detector is the key component of detector-based local feature matching. Before the deep learning era, SIFT [8] was the most successful hand-crafted local feature detector and was applied to a wide range of computer vision tasks. ORB [20] combines the advantages of FAST [21] and BRIEF [22] to perform feature extraction and description efficiently. Deep-learning-based methods can significantly improve performance in difficult environments. In D2Net [9], a single convolutional neural network plays a dual role, acting simultaneously as a dense feature descriptor and a feature detector. ASLFeat [17] exploits the inherent feature hierarchy to restore spatial resolution and low-level details for accurate keypoint localization. SuperPoint [10] proposes a self-supervised training method based on homographic adaptation and is currently among the most widely used learned detectors. GMS [11] encapsulates motion smoothness as the statistical likelihood of a certain number of matches in a region, which translates high match numbers into high match quality and lets it perform well under low texture and blur. R2D2 [18] proposes a predictor of local descriptor discriminativeness that can ignore ambiguous areas, leading to reliable keypoint detection and description. The above-mentioned local features use nearest neighbor search to find matches between images. More recently, SuperGlue [12] proposed a flexible attention-based context aggregation mechanism, achieving impressive performance by learning feature matching with graph neural networks. However, these methods rely on a detector and cannot guarantee that the feature points detected in different images share landmarks.

2.2. Detector-Free Local Feature Matching

Detector-free methods do not require a detector; they describe and match features in a unified manner. NCNet [13] takes a different approach by learning dense correspondences directly in an end-to-end manner. It constructs 4D cost volumes to enumerate all possible matches between the images and uses 4D convolutions to regularize the cost volume and enforce neighborhood consensus among all matches. DGC-Net [23] and GLU-Net [24] are based on optical flow estimation and can provide dense matches under large transformations. DualRC-Net [14] obtains pixel-wise correspondences in a coarse-to-fine manner by extracting both coarse- and fine-resolution feature maps. A full but coarse 4D correlation tensor is computed from the coarse-resolution maps and then refined by a learnable neighborhood consensus module. The fine-resolution feature maps are used to obtain the final dense correspondences, guided by the refined coarse 4D correlation tensor. ASpanFormer [25] builds on a hierarchical attention structure and adopts a novel attention operation that adjusts the attention span in a self-adaptive manner. LoFTR [15] describes the images with a transformer, first establishing pixel-wise dense matches at a coarse level and then refining them. COTR [16] first downsamples each image with a CNN and then feeds the result into a transformer together with the query point. This work is inspired by LoFTR [15] and COTR [16] in its use of self- and cross-attention for message passing, but it proposes a fine-level regression to obtain more accurate sub-pixel coordinates and guarantees that the corresponding coordinates share the same 3D points.

2.3. Transformers in Vision

Owing to its parallelism, high computational efficiency, and interpretability, the Transformer has become the most popular model in natural language processing (NLP). Recently, many studies have applied Transformers to vision tasks, including image segmentation [26,27,28,29], object detection [30,31,32,33,34], image classification [35,36,37], and image enhancement [38,39]. Concurrently with our work, some researchers [15,16,40] have also applied Transformers to local feature matching, where they improve matching accuracy by capturing the context information between two images.

3. Method

3.1. Problem Definition

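The image-matching problem is formulated as determining a set of point coordinates $\{\mathbf{q}_i\}_{i=1}^{N}$ in the target image $I_t$ that match the guided points $\{\mathbf{p}_i\}_{i=1}^{N}$ in the source image $I_s$, as described in Equation (1):

$$\mathbf{q}_i = \mathcal{F}(\mathbf{p}_i \mid I_s, I_t), \quad i = 1, \dots, N \tag{1}$$

where $\mathcal{F}$ denotes the mapping to be learned.

While a straightforward solution is to regress $\mathcal{F}$ directly over the whole target image [16], the problem is solved here in two stages, which is more efficient and yields more accurate regression. In the first stage, both $I_s$ and $I_t$ are subdivided into coarse-level patches $\{P^s_j\}$ and $\{P^t_k\}$ at a reduced resolution. The aim is to learn an embedding for each patch such that the angle between the latent feature vectors of two patches measures their similarity. Specifically, the similarity between two patches is defined as:

$$S(P^s_j, P^t_k) = \langle \mathcal{E}(P^s_j), \mathcal{E}(P^t_k) \rangle \tag{2}$$

where $\mathcal{E}(\cdot)$ is the patch embedding that maps a patch to a unit feature vector in the latent space, so the inner product equals the cosine of the angle between the two embeddings.

Once the patch matches are established from the similarity score, the coordinate regression problem is solved as in Equation (1), but locally:

$$\mathbf{q}_i = \mathcal{G}(\mathbf{p}_i \mid P^s_{(i)}, P^t_{(i)}), \qquad P^t_{(i)} = \arg\max_{k} S(P^s_{(i)}, P^t_k) \tag{3}$$

Specifically, $\mathcal{G}$ is learned as a coordinate regression function that maps each guided point $\mathbf{p}_i$ in $P^s_{(i)}$ to a target point coordinate $\mathbf{q}_i$ in $P^t_{(i)}$. Here, $P^s_{(i)}$ is the patch of the source image in which $\mathbf{p}_i$ lies, and $P^t_{(i)}$ is the patch most similar to $P^s_{(i)}$, obtained from Equation (2).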
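The patch-level search behind Equations (2) and (3) can be illustrated with a minimal sketch. It assumes the patch embeddings are already available as plain Python lists (in GLFNet they are produced by the transformer described in Section 3.3), and the helper names `normalize`, `patch_similarity`, and `most_similar_patch` are hypothetical, not part of the original work.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm, mimicking the unit-vector patch embedding."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def patch_similarity(e_src, e_tgt):
    """Inner product of two unit embeddings, i.e. the cosine of the angle between them."""
    return sum(a * b for a, b in zip(e_src, e_tgt))


def most_similar_patch(e_src, tgt_embeddings):
    """Return (index, score) of the target patch that maximizes the similarity."""
    scores = [patch_similarity(e_src, e) for e in tgt_embeddings]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Hypothetical 2D embeddings: the source patch is closest to the third target patch.
src = normalize([1.0, 0.0])
targets = [normalize([0.0, 1.0]), normalize([1.0, 1.0]), normalize([1.0, 0.1])]
print(most_similar_patch(src, targets))
```

Because the embeddings are unit vectors, ranking by inner product is the same as ranking by angle, which is why the argmax in Equation (3) needs no extra normalization.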

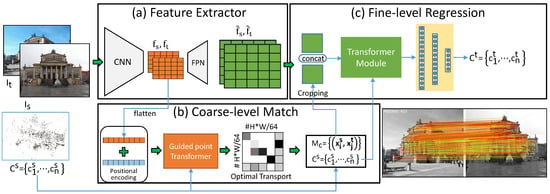

Figure 2 illustrates the GLFNet architecture as the solution to the problem. The feature extraction network (Figure 2a) is introduced in Section 3.2. It serves as the basis for the coarse-level match network (Figure 2b), which implements Equation (2) and is described in Section 3.3. Section 3.4 presents the fine-level regression network (Figure 2c), which implements Equation (3). Further implementation details are given in Section 3.5.

Figure 2.

The GLFNet architecture. The proposed method consists of three components: (a) the feature extractor, which extracts the input features for the coarse-level match network and the fine-level regression; (b) the coarse-level match network, which divides the images into patches and predicts coarse matches; and (c) the fine-level regression, which regresses the fine coordinates.

3.2. Feature Extraction Network

First, the source and target images are passed through the feature extraction network in Figure 2a to derive image features at both low and high resolution. Specifically, each image is downsampled through a CNN [41] to obtain a coarse feature map, in which each feature corresponds to one subdivided patch of the source or target image. Then, the coarse feature map is upsampled to one half of the original resolution using an FPN [42] to derive a fine feature for each pixel. The feature extraction network serves as the image backbone: the coarse features are further processed to match coarse patches, and both the coarse and fine features are sampled during coordinate regression.

3.3. Coarse-Level Match Network

Figure 2b illustrates the coarse-level match network that matches patches in the target image to those in the source image. To implement the patch embedding in Equation (2), a transformer architecture with self-attention and cross-attention layers is used to consider coarse features in both source and target images.

3.3.1. Positional Encoding

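First, the patch coordinate is encoded with a 2D extension of the standard positional encoding [43] as follows:

$$PE^{m}(x, y) = \begin{cases} \sin(\omega_k x), & m = 4k \\ \cos(\omega_k x), & m = 4k + 1 \\ \sin(\omega_k y), & m = 4k + 2 \\ \cos(\omega_k y), & m = 4k + 3 \end{cases} \tag{4}$$

where $\omega_k = 1/10000^{2k/d}$, $(x, y)$ is the coordinate of a patch, $d$ is the number of dimensions of the encoded feature, and $PE^{m}$ is the value of its $m$-th feature dimension. The coarse feature of each patch is added to the positional encoding of that patch, which enriches the image features with spatial information. For each image, all patch features are stacked into a matrix $X^{(0)}$, which is the input to the first transformer module.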
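The 2D positional encoding described above can be sketched in a few lines. This is an illustrative sketch, not the authors' code: it assumes `d` is a multiple of 4, interleaves sine and cosine terms of $x$ and $y$ per frequency, and uses the frequency schedule $\omega_k = 1/10000^{2k/d}$, which is itself an assumption; the function names are hypothetical.

```python
import math


def positional_encoding_2d(x, y, d):
    """2D sinusoidal encoding laid out as [sin(w_k x), cos(w_k x), sin(w_k y), cos(w_k y)] per k.

    Assumes d is divisible by 4 and w_k = 1 / 10000**(2k/d); the frequency
    schedule is an assumption, not taken from the original implementation.
    """
    if d % 4 != 0:
        raise ValueError("d must be divisible by 4")
    pe = []
    for k in range(d // 4):
        w = 1.0 / (10000 ** (2 * k / d))
        pe.extend([math.sin(w * x), math.cos(w * x), math.sin(w * y), math.cos(w * y)])
    return pe


def add_positional_encoding(feature, x, y):
    """Add the encoding of patch coordinate (x, y) to its coarse feature vector."""
    pe = positional_encoding_2d(x, y, len(feature))
    return [f + p for f, p in zip(feature, pe)]


# A hypothetical 8-dimensional coarse feature for the patch at (3, 5).
print(add_positional_encoding([0.0] * 8, 3, 5))
```

Encoding $x$ and $y$ in separate channels keeps the two axes distinguishable, so patches in the same row or column still receive different encodings.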

3.3.2. Guided Point Transformer

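Our transformer module in Figure 2b consists of several attention layers [43]. The $\ell$-th attention layer maps a query and a set of key–value pairs to an output. The query $Q$, key $K$, and value $V$ are computed as follows:

$$Q^{(\ell)} = W_Q^{(\ell)} X^{(\ell-1)} + b_Q^{(\ell)}, \quad K^{(\ell)} = W_K^{(\ell)} Y^{(\ell-1)} + b_K^{(\ell)}, \quad V^{(\ell)} = W_V^{(\ell)} Y^{(\ell-1)} + b_V^{(\ell)} \tag{5}$$

where $W$ and $b$ are learnable parameters, $\ell$ indexes the attention layer, and $X^{(\ell-1)}$ is the output of the $(\ell-1)$-th layer and the input to the $\ell$-th layer; $Y^{(\ell-1)} = X^{(\ell-1)}$ for self-attention, whereas for cross-attention $Y^{(\ell-1)}$ holds the features of the other image. Next, the message is obtained by attention-weighted aggregation of the values:

$$\mathbf{m}_i^{(\ell)} = \sum_{j} \alpha_{ij}\, \mathbf{v}_j^{(\ell)} \tag{6}$$

where the attention weight is $\alpha_{ij} = \mathrm{softmax}_j\big(\mathbf{q}_i^{(\ell)\top} \mathbf{k}_j^{(\ell)}\big)$. Finally, the feature for the next layer is output as:

$$X^{(\ell)} = X^{(\ell-1)} + \mathrm{MLP}\big([X^{(\ell-1)} \,\|\, M^{(\ell)}]\big) \tag{7}$$

where $[\cdot \,\|\, \cdot]$ denotes concatenation and $M^{(\ell)}$ stacks the messages $\mathbf{m}_i^{(\ell)}$.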
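The query/key/value projection, attention-weighted message, and concatenation-based residual update described above can be sketched in pure Python for a single head. This is an illustrative sketch, not the authors' implementation: the function names, the dictionary of weights, and the unscaled dot-product scores are assumptions, and the MLP is passed in as an arbitrary callable.

```python
import math


def linear(W, b, x):
    """Affine map W x + b for a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def softmax(z):
    """Numerically stable softmax over a list of scores."""
    mz = max(z)
    e = [math.exp(v - mz) for v in z]
    s = sum(e)
    return [v / s for v in e]


def attention_layer(X, Y, params, mlp):
    """One residual message-passing layer (single head).

    X holds the query-side features; Y holds the key/value-side features
    (Y is X for self-attention and the other image's features for cross-attention).
    params maps "W_q", "b_q", "W_k", "b_k", "W_v", "b_v" to weights; mlp is any
    callable applied to the concatenation [x || m].
    """
    Q = [linear(params["W_q"], params["b_q"], x) for x in X]
    K = [linear(params["W_k"], params["b_k"], y) for y in Y]
    V = [linear(params["W_v"], params["b_v"], y) for y in Y]
    out = []
    for x, q in zip(X, Q):
        alpha = softmax([sum(a * b for a, b in zip(q, k)) for k in K])
        m = [sum(a * v[c] for a, v in zip(alpha, V)) for c in range(len(V[0]))]
        delta = mlp(x + m)  # list concatenation implements [x || m]
        out.append([xi + di for xi, di in zip(x, delta)])
    return out


def take_message(z):
    """Toy stand-in for the MLP: return the message half of [x || m]."""
    return z[len(z) // 2:]


I2 = [[1.0, 0.0], [0.0, 1.0]]
params = {"W_q": I2, "b_q": [0.0, 0.0], "W_k": I2, "b_k": [0.0, 0.0],
          "W_v": I2, "b_v": [0.0, 0.0]}
print(attention_layer([[0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], params, take_message))
```

Passing the same list as `X` and `Y` gives a self-attention layer, while passing the other image's features as `Y` gives a cross-attention layer, which is how the two layer types alternate in the module.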

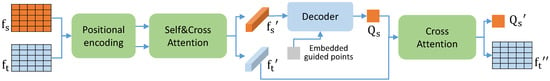

To enhance performance, self-attention and cross-attention are combined over multiple attention layers to capture descriptive patch features, as illustrated in Figure 3. Building on the conventional transformer architecture, a novel guided point transformer is introduced. First, the images are passed through the feature extractor, yielding the coarse source and target feature maps. These maps are flattened into one-dimensional sequences and augmented with positional encoding. Next, self-attention and cross-attention layers exploit high-dimensional information within and between the images, producing updated source and target features. To incorporate information from the guided points, these points are encoded with positional encoding, and a cross-attention layer decodes the guided points' features from the updated source feature map. Finally, another cross-attention layer exchanges information between the guided-point features and the target features, producing the features used in the subsequent optimal transport step.

Figure 3.

The guided point transformer. The coarse source and target feature maps are flattened into one-dimensional vectors with positional encoding and sent to the self-attention and cross-attention layers. Then, the embedded guided points and the updated source features are sent to the decoder to decode the guided-point features. Subsequently, another cross-attention layer exchanges information between the guided-point features and the target features.

3.3.3. Coarse-Level Matching

After the transformer module, the features of the source guided points and of the target image are obtained as $F^g$ and $F^t$, respectively. Following SuperGlue [12], the matches between source and target are established by solving an optimal transport problem with the Sinkhorn algorithm [44]. The pairwise similarity scores are computed as:

$$S(i, j) = \langle F^g_i, F^t_j \rangle \tag{8}$$

where $\langle \cdot, \cdot \rangle$ is the inner product. The optimal transport problem is then solved to obtain an assignment matrix $P$ that maximizes the total score $\sum_{i,j} S(i, j)\, P(i, j)$. Next, mutual nearest neighbor (MNN) selection is applied to $P$, and the coarse-level matches are obtained as:

$$\mathcal{M}_c = \{(i, j) \mid (i, j) \in \mathrm{MNN}(P),\ P(i, j) \geq \theta\} \tag{9}$$

where $\theta$ is the threshold below which low-quality matches are filtered out. To handle patches without valid matches, a dustbin is added for each image [12], so that the optimal transport assigns such patches to the dustbins as pseudo-matches.
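The optimal transport step with dustbins, followed by mutual-nearest-neighbor filtering and thresholding, can be sketched as follows. This is a simplified sketch under stated assumptions: it uses a plain (non-log-domain) Sinkhorn iteration for readability, a single fixed dustbin score, and SuperGlue-style marginals in which every real row and column carries mass 1 and the dustbins absorb the rest. SuperGlue itself iterates in the log domain with a learnable dustbin score, and the function names here are hypothetical.

```python
import math


def sinkhorn_with_dustbin(scores, dustbin_score=0.0, iters=100):
    """Entropic optimal transport on an N x M score matrix augmented with dustbins.

    Real rows/columns get marginal 1; the dustbin row gets M and the dustbin
    column gets N, so total row mass equals total column mass (N + M).
    Returns the (N + 1) x (M + 1) assignment matrix; the last row/column are dustbins.
    A plain-domain iteration is used for readability; large scores can overflow
    math.exp, which is why practical implementations work in the log domain.
    """
    n, m = len(scores), len(scores[0])
    kernel = [[math.exp(s) for s in row] + [math.exp(dustbin_score)] for row in scores]
    kernel.append([math.exp(dustbin_score)] * (m + 1))
    row_marg = [1.0] * n + [float(m)]
    col_marg = [1.0] * m + [float(n)]
    u, v = [1.0] * (n + 1), [1.0] * (m + 1)
    for _ in range(iters):
        u = [row_marg[i] / sum(kernel[i][j] * v[j] for j in range(m + 1)) for i in range(n + 1)]
        v = [col_marg[j] / sum(kernel[i][j] * u[i] for i in range(n + 1)) for j in range(m + 1)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m + 1)] for i in range(n + 1)]


def coarse_matches(P, theta=0.2):
    """Mutual nearest neighbours in P (dustbins excluded) whose confidence is at least theta."""
    n, m = len(P) - 1, len(P[0]) - 1
    matches = []
    for i in range(n):
        j = max(range(m), key=lambda c: P[i][c])
        i_back = max(range(n), key=lambda r: P[r][j])
        if i_back == i and P[i][j] >= theta:
            matches.append((i, j, P[i][j]))
    return matches


# Two guided points and two target patches with a clear diagonal correspondence.
P = sinkhorn_with_dustbin([[5.0, 0.0], [0.0, 5.0]])
print(coarse_matches(P, theta=0.2))
```

With these toy scores, each guided point keeps most of its mass on its matching patch and leaks a small fraction to the dustbin, so both diagonal pairs survive the threshold of 0.2 while a much stricter threshold would reject them.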

3.4. Fine-Level Regression

The guided points are divided by the downsampling factors to obtain their coordinates on the coarse and fine feature maps. From a guided point's coordinates on the coarse feature map, the patch to which it belongs can be determined, and the corresponding patch on the fine feature map is then given by the coarse-level matches $\mathcal{M}_c$. To make the subsequent search window more accurate, the center of each patch is used instead of its top-left corner: the patch coordinates are incremented by 0.5 and multiplied by the downsampling factor, yielding the coarse correspondence $\hat{\mathbf{q}}_i$ on the target image. In the fine-level regression stage, the accurate coordinate is regressed from the context of the neighborhoods at the guided point $\mathbf{p}_i$ in the source feature maps and at $\hat{\mathbf{q}}_i$ in the target feature maps. For each location $\mathbf{x}$ at the original resolution, its neighborhood is represented as a 9 × 9 window centered at $\mathbf{x}$. For each location in the window, bilinear sampling is performed on both the coarse and the fine feature maps from the feature extractor. All features collected from the 9 × 9 locations are aggregated into the point feature of $\mathbf{x}$. The point features of $\mathbf{p}_i$ and of its coarse correspondence $\hat{\mathbf{q}}_i$ are then concatenated to represent the context information of the $i$-th input point.
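The coordinate bookkeeping described above (patch lookup, patch center, 9 × 9 window, bilinear sampling) can be sketched in pure Python. This is a minimal sketch under stated assumptions: feature maps are single-channel lists of rows, out-of-range samples are clamped to the border, and the coarse stride of 8 in the usage line is a hypothetical value (the fine map's stride of 2 follows from its half resolution). All function names are hypothetical.

```python
import math


def patch_index(x, y, stride):
    """Coarse patch (column, row) containing pixel (x, y) for a given downsampling factor."""
    return int(x // stride), int(y // stride)


def patch_center(ix, iy, stride):
    """Center of patch (ix, iy) in original-image pixels: (index + 0.5) * stride."""
    return (ix + 0.5) * stride, (iy + 0.5) * stride


def window_offsets(size=9):
    """Integer (dx, dy) offsets of a size x size window centered on the origin, row by row."""
    r = size // 2
    return [(dx, dy) for dy in range(-r, r + 1) for dx in range(-r, r + 1)]


def bilinear_sample(fmap, x, y):
    """Bilinearly interpolate a 2D scalar map (list of rows) at continuous (x, y).

    Out-of-range coordinates are clamped to the border (an assumption of this sketch).
    """
    h, w = len(fmap), len(fmap[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * fmap[y0][x0] + ax * fmap[y0][x1]
    bottom = (1 - ax) * fmap[y1][x0] + ax * fmap[y1][x1]
    return (1 - ay) * top + ay * bottom


def window_features(fmap, center, map_stride, size=9):
    """Sample one value per window location; pixel coordinates are divided by the map's stride."""
    cx, cy = center
    return [bilinear_sample(fmap, (cx + dx) / map_stride, (cy + dy) / map_stride)
            for dx, dy in window_offsets(size)]


# Hypothetical coarse stride of 8: a guided point at (29.7, 44.1) lies in patch (3, 5).
print(patch_index(29.7, 44.1, 8), patch_center(3, 5, 8))
```

Using the patch center rather than its top-left corner keeps the 9 × 9 window symmetric around the most likely location of the correspondence, which is the motivation given in the text.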

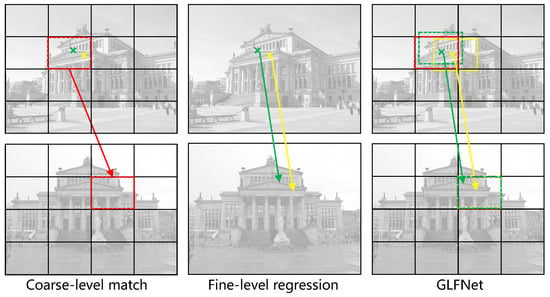

A transformer module containing a self-attention layer and a cross-attention layer is used to further enrich the context information. In detail, the query is the positional encoding of the input point coordinates. The context information of the input point from both images is stacked into a matrix and passed to the network as the key–value pair. The output of the transformer module is finally passed through a 3-layer MLP to predict the offset of the target point relative to its coarse correspondence, and this offset is added to the coarse correspondence to obtain the final correspondence coordinates. As shown in Figure 4, this coarse-to-fine approach combines the advantages of coarse matching and direct regression, providing both efficiency and accuracy.

Figure 4.

Coarse matching alone will lead to fast matching speeds but large errors. Direct global regression such as COTR [16] is very slow and inaccurate due to the large range of regression. GLFNet narrows the search space based on the results of coarse matching and performs regression in a small range, taking into account both accuracy and speed.

3.5. The Implementation Details

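To train the network, two losses are designed to supervise the coarse-level match network and the fine-level regression network, respectively. First, the assignment matrix $P$ should agree with the ground truth; therefore, the coarse-level loss is the negative log-likelihood of the ground-truth assignment, including the assignment of unmatched patches to the dustbins:

$$\mathcal{L}_c = -\sum_{(i,j) \in \mathcal{M}} \log P(i, j) - \sum_{i \in \mathcal{I}} \log P(i, d) - \sum_{j \in \mathcal{J}} \log P(d, j) \tag{10}$$

where $d$ denotes the dustbin, $\mathcal{M}$ is the set of ground-truth correspondences, and $\mathcal{I}$ and $\mathcal{J}$ are the source and target patches that have no match in the ground truth.

The regression network is additionally supervised with the mean squared error between the predicted points $\mathbf{q}_i$ and the ground-truth corresponding points $\mathbf{q}_i^{\mathrm{gt}}$:

$$\mathcal{L}_f = \frac{1}{N} \sum_{i=1}^{N} \left\| \mathbf{q}_i - \mathbf{q}_i^{\mathrm{gt}} \right\|_2^2 \tag{11}$$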
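Both training objectives can be sketched in pure Python under simplifying assumptions: the assignment matrix is given as nested lists with the dustbin in the last row and column, the coarse loss is an unnormalized sum, and the fine loss averages the squared L2 error per point. The function names are hypothetical, and the original implementation may normalize or weight the terms differently.

```python
import math


def coarse_loss(P, gt_matches, unmatched_src, unmatched_tgt):
    """Negative log-likelihood of the ground-truth assignment.

    P is the (N + 1) x (M + 1) assignment matrix whose last row and column are dustbins.
    """
    n, m = len(P) - 1, len(P[0]) - 1
    loss = -sum(math.log(P[i][j]) for i, j in gt_matches)
    loss -= sum(math.log(P[i][m]) for i in unmatched_src)  # unmatched source patch -> dustbin
    loss -= sum(math.log(P[n][j]) for j in unmatched_tgt)  # dustbin -> unmatched target patch
    return loss


def fine_loss(pred, gt):
    """Mean over points of the squared L2 distance between predicted and ground-truth (x, y)."""
    return sum((px - gx) ** 2 + (py - gy) ** 2 for (px, py), (gx, gy) in zip(pred, gt)) / len(pred)


# Toy assignment matrix: one ground-truth match (0, 0); source patch 1 and target patch 1 are unmatched.
P = [[0.5, 0.25, 0.25], [0.25, 0.5, 0.25], [0.25, 0.25, 0.5]]
total = coarse_loss(P, [(0, 0)], [1], [1]) + fine_loss([(1.0, 1.0), (0.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)])
print(total)
```

Supervising the dustbin entries explicitly teaches the coarse network to leave patches unmatched rather than forcing a spurious correspondence.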

The final loss is the sum of both terms: $\mathcal{L} = \mathcal{L}_c + \mathcal{L}_f$. GLFNet is trained on the MegaDepth dataset [45], which provides images together with dense depth maps generated by SfM [46]. The model is trained using the Adam optimizer with an initial learning rate of and a batch size of 8, and it converges after 72 h of training on 8 RTX 3090 GPUs. A modified ResNet-18 [41] is used as the feature extractor, and the entire model is trained end-to-end from randomly initialized weights. The number of coarse match layers is set to 4, the number of adaptive fine regression layers is set to 1, and the threshold θ of the assignment matrix is set to 0.2.

4. Experiments

First, the effectiveness of the two primary components of GLFNet and its parameter sensitivity are evaluated in Section 4.1. Then, the contribution of guided points is evaluated in Section 4.2. Finally, the proposed method is compared with state-of-the-art techniques on multiple tasks: Section 4.3.1 examines remote-sensing image registration, Section 4.3.2 reconstruction registration, Section 4.3.3 motion analysis in the form of optical flow estimation, and Section 4.3.4 visual localization.

4.1. Ablation Study

In order to evaluate the effectiveness of the two main components (coarse-level match network and fine-level regression) in the proposed GLFNet, an ablation study is conducted on HPatches [47]. Furthermore, to gauge the sensitivity of GLFNet to its parameters, a sensitivity analysis is conducted on HPatches [47] focusing on the main parameters.

4.1.1. Ablation Study

Table 1 compares different components of GLFNet. Because the coarse-level match network only matches guided points to patches on the downsampled image, using this module alone yields relatively low accuracy. Furthermore, comparing transformer modules within the coarse-level match network shows a clear advantage of the guided point transformer over the conventional transformer.

Table 1.

Ablation study on HPatches [47].

Fine-level regression regresses coordinates at sub-pixel scale, so using this module alone is already competitive in accuracy. GLFNet, however, performs fine-level regression on top of the coarse-level match network, combining the advantages of the two modules and achieving high accuracy. In terms of time cost, Table 1 shows that the coarse-level match network is fast, whereas fine-level regression alone is slow because of its large regression range. By narrowing the search space with the coarse-level match network, GLFNet maintains high speed while preserving high accuracy.

4.1.2. Parameter Sensitivity Analysis

To investigate the impact of the key parameters on the proposed method, parameter sensitivity experiments are conducted, and the results are presented in Table 2. For the window size of the fine-level regression, 9 × 9 is found to give the best accuracy at a reasonable computational cost. Reducing the size below 9 × 9 causes a significant drop in GLFNet's matching accuracy with only a slight gain in efficiency. Conversely, increasing the size beyond 11 × 11 noticeably reduces both the efficiency and the accuracy of GLFNet. This is because smaller windows shrink the regression area, reducing the running time but lowering the precision of the regressed coordinates, whereas larger windows expand the regression area and increase the running time without a proportional gain in accuracy. For the threshold θ used in coarse-level matching, 0.2 is found to be optimal; changing θ in either direction reduces accuracy, which underlines the importance of this choice. Different θ values make no significant difference in GLFNet's running time, because the matching filter module is fast.

Table 2.

Parameter sensitivity experiment on HPatches [47].

4.2. Experimental Evaluations

4.2.1. The Contribution of Guided Points

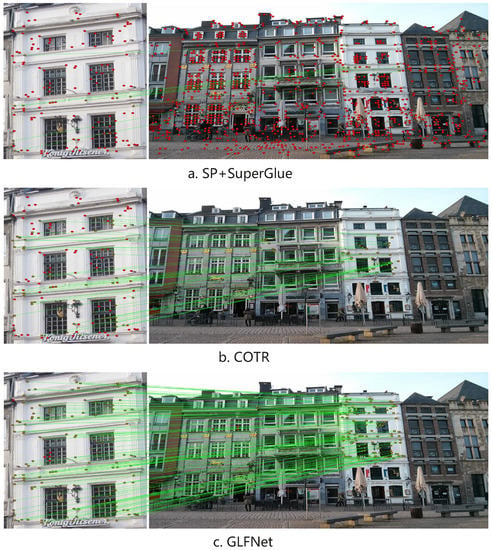

The problem is reformulated as corresponding feature extraction in the target image, given guided points from the source image as explicit guidance. This experiment therefore examines the contribution of guided points as input. Figure 5 shows a scene with large differences in perspective. SuperPoint [10] is used to extract points on the source image as guided points. SuperPoint + SuperGlue [10,12] extracts feature points on the two images separately and then matches the point pairs. COTR [16] performs a global search on the target image for each guided point. GLFNet first matches the patches of the two images with the guided point transformer and then regresses the exact coordinates of the guided points. This qualitative example indicates that guided points are beneficial and that GLFNet uses them efficiently.

Figure 5.

Comparison between the proposed GLFNet, COTR [16], and SuperPoint + SuperGlue [10,12] in the case of scenes with large differences in perspective. The results clearly demonstrate that GLFNet effectively leverages guided points and performs admirably in scenarios characterized by large differences in perspective.

4.2.2. Image Matching with Guided Points

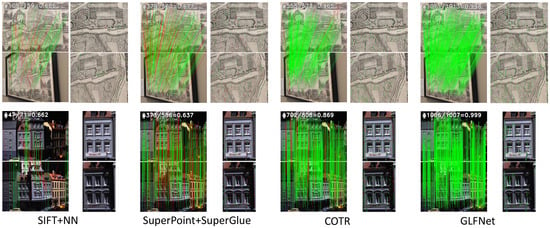

Since the contribution of guided points has been demonstrated, image matching is next evaluated with guided points as input. HPatches [47] is a popular image-matching dataset containing 57 scenes with photometric changes and 59 sequences with significant geometric deformations due to viewpoint changes. Because the problem is reformulated, methods that can solve the reformulated problem are selected for comparison. The baselines fall into two categories: (1) detector-based image-matching methods, namely D2Net + NN [9], R2D2 + NN [18], and SuperPoint + SuperGlue [10,12]; and (2) a detector-free image-matching method, COTR [16]. To compare methods that produce different numbers of matches fairly, the corner error between images is computed. In addition, three different kinds of guided points are used to demonstrate the superiority of the proposed method. LoFTR and ASpanFormer, the state-of-the-art detector-free methods, are also included in the comparison even though they cannot solve the reformulated problem.
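The AUC of the corner error reported for HPatches can be computed from per-pair errors by integrating the cumulative error curve up to each threshold and normalizing by that threshold. The sketch below follows the convention used in public matching evaluation code such as that of SuperGlue and LoFTR (recall interpolated linearly between sorted errors); whether Table 3 uses exactly this convention is an assumption, and the thresholds in the example are hypothetical.

```python
import bisect


def trapezoid(ys, xs):
    """Area under a piecewise-linear curve given by matching x and y lists."""
    return sum((xs[i + 1] - xs[i]) * (ys[i + 1] + ys[i]) / 2.0 for i in range(len(xs) - 1))


def error_auc(errors, thresholds):
    """AUC of the cumulative error curve up to each threshold, normalized to [0, 1]."""
    errs = [0.0] + sorted(errors)
    n = len(errors)
    recall = [i / n for i in range(n + 1)]  # fraction of pairs with error <= errs[i]
    aucs = {}
    for t in thresholds:
        last = bisect.bisect_left(errs, t)  # number of curve points strictly below t
        xs = errs[:last] + [t]
        ys = recall[:last] + [recall[last - 1]]  # curve stays flat up to the threshold
        aucs[t] = trapezoid(ys, xs) / t
    return aucs


# Hypothetical corner errors (pixels) for four image pairs.
print(error_auc([1.0, 2.0, 3.0, 4.0], thresholds=[3, 5, 10]))
```

Normalizing by the threshold makes a perfect method score 1 at every threshold, so AUCs at different thresholds can be reported side by side as percentages.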

As shown in Table 3 and Figure 6, GLFNet significantly improves matching accuracy at all error thresholds, and the smaller the threshold, the larger the improvement. At the same time, GLFNet has a lower time cost than most methods while maintaining high accuracy. In particular, compared with COTR, which can also take guided points as input, GLFNet reduces the time cost by a large margin. GLFNet also outperforms LoFTR and ASpanFormer in both time cost and accuracy.

Table 3.

Image matching on HPatches [47]. The AUC of the corner error in percentage is reported. The time measurement experiments for all algorithms were run on the same machine.

Figure 6.

Qualitative image-matching results on HPatches [47]. The image pair on the left shows the image-matching results, with the correct matching rate (CMR) shown in the upper left. The image pairs on the right show enlarged sub-results, where green feature points denote correct matches and red feature points denote incorrect matches. GLFNet outperforms all compared methods.

4.3. Comparison

4.3.1. Remote-Sensing Image Registration

Remote-sensing image registration is a critical and fundamental step in aligning images of the same scene captured by the same or different sensors, at different times, and/or from different viewpoints. Its primary objective is to align the target and source images accurately. It has broad applications, including change detection, landscape planning, and agricultural monitoring. The registration pipeline typically comprises feature extraction, feature matching, transformation model estimation, and image transformation. Because feature matching is particularly critical among these stages, GLFNet can be applied directly to improve it.

Datasets

The Google Earth dataset [1] is employed to assess the efficacy of GLFNet in remote-sensing image registration. Comprising over 9000 high-resolution satellite images acquired across multiple seasons, the dataset primarily covers the Greater Boston area. The input and template images exhibit notable differences in traffic conditions and vegetation, posing a significant challenge to feature matching.

Comparison

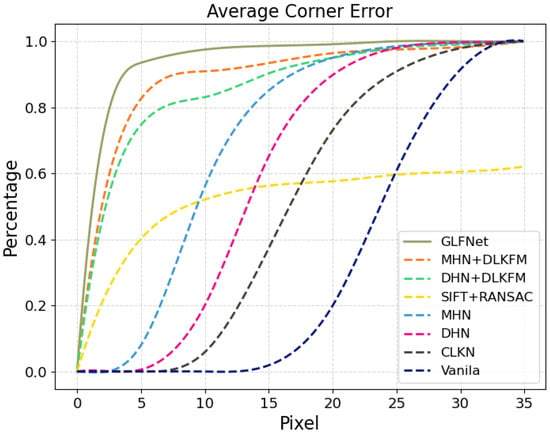

The performance of the proposed method is evaluated by comparing it with two classical methods, SIFT + RANSAC [8] and CLKN [48], and three state-of-the-art methods, DHN [49], MHN [50], and DLKFM [1]. Vanilla is also included as a baseline, which predicts the centers of four corner boxes without any prior knowledge. The evaluation metric used is the average corner error in pixels, which is calculated as the L2 distance between the warped and original corners, following the methodology outlined in [1].
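The average corner error can be sketched as follows, assuming the four image corners are warped once by the estimated homography and once by the ground-truth homography, and the mean L2 distance between the two sets of warped corners is reported. The exact corner convention of [1] (for example, whether corners sit at `width` or `width - 1`) is an assumption here, as are the function names.

```python
import math


def apply_homography(H, x, y):
    """Map pixel (x, y) through a 3x3 homography given as nested lists."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def average_corner_error(H_est, H_gt, width, height):
    """Mean L2 distance between image corners warped by the estimated and ground-truth homographies."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height)]
    total = 0.0
    for x, y in corners:
        ex, ey = apply_homography(H_est, x, y)
        gx, gy = apply_homography(H_gt, x, y)
        total += math.hypot(ex - gx, ey - gy)
    return total / len(corners)


# An estimate that misses a pure (3, 4)-pixel translation has a 5-pixel error at every corner.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
shifted = [[1.0, 0.0, 3.0], [0.0, 1.0, 4.0], [0.0, 0.0, 1.0]]
print(average_corner_error(identity, shifted, 128, 128))
```

Because only four points are compared, the metric depends on the estimated transformation as a whole rather than on how many matches a method returns, which is what makes it fair across methods.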

Results

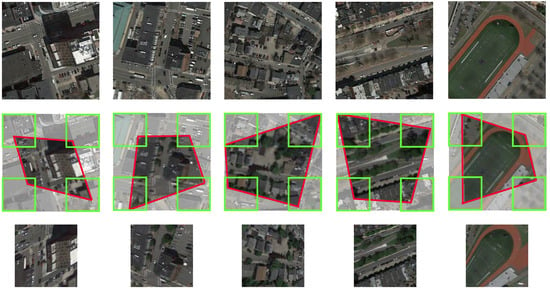

Table 4, Figure 7, and Figure 8 present the comparison between GLFNet and the benchmark methods. The proposed method outperforms state-of-the-art methods at all error scales. Specifically, the proposed method achieves an error below three pixels for most images, whereas the baseline methods do not. Moreover, the stricter the error requirement, the larger the improvement over the baseline methods.

Table 4.

Remote-sensing image registration on Google Earth.

Figure 7.

Qualitative remote-sensing image registration results of Google Earth. The topmost row displays the input image, while the bottommost row depicts the template image. GLFNet is employed to carry out matching between the input image and the template, leading to the computation of the rotation matrix. The middle row represents the region within the input image where the template image corresponds.

Figure 8.

Comparison of average corner error on Google Earth. The x-axis is the average corner error in pixels, and the y-axis is the proportion of images whose average corner error is lower than x. The proposed method outperforms the state-of-the-art methods across all error scales.
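A curve of this kind can be computed from per-image errors as sketched below. The function name is hypothetical, and the strict "lower than" comparison is an assumption that follows the caption's wording.

```python
import bisect


def cumulative_proportion(errors, thresholds):
    """Fraction of images whose average corner error is strictly below each threshold."""
    if not errors:
        raise ValueError("need at least one per-image error")
    ordered = sorted(errors)
    # bisect_left returns how many sorted errors are strictly less than x.
    return [bisect.bisect_left(ordered, x) / len(ordered) for x in thresholds]
```

Sorting once and bisecting keeps the cost at O(n log n) for the whole curve, rather than rescanning every error for each threshold.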

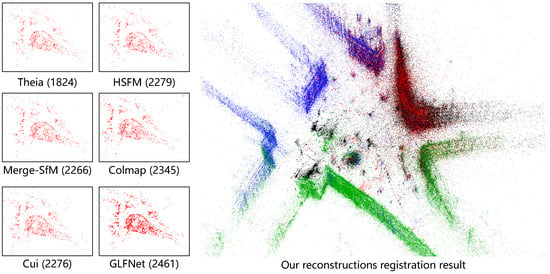

4.3.2. Reconstruction Registration

With the development of 3D reconstruction, an increasing number of 3D models are being built, giving rise to the task of reconstruction registration. This task involves either incremental reconstruction on top of a large-scale 3D model or the merging of multiple 3D models with small overlapping regions. Following the pipeline proposed in [51], reconstruction registration consists of three main steps: image retrieval, image matching, and transformation solving. In this work, GLFNet replaces the conventional image-matching module. Its key advantage is that it can directly use the existing 3D points of the 3D model as guided points, avoiding the errors that re-extracting feature points could introduce. This yields more accurate 3D point correspondences and, in turn, a more reliable transformation estimate in the subsequent step.
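One way to turn the 3D points of an existing model into guided points for a source image is to project them with a pinhole camera model. The sketch below assumes that convention: intrinsics `K` (third row [0, 0, 1]), a world-to-camera rotation `R`, and a translation `t`, with pixel coordinates given by x ~ K(RX + t). A COLMAP model may already store the 2D observation of each 3D point, in which case no projection is needed. The function name is hypothetical.

```python
def project_to_guided_points(points_3d, K, R, t, width, height):
    """Project world points into an image; keep those in front of the camera and inside the frame.

    Returns (point_index, (u, v)) pairs that could serve as guided points.
    Assumes K is upper triangular with third row [0, 0, 1].
    """
    guided = []
    for idx, X in enumerate(points_3d):
        # Camera coordinates: Xc = R @ X + t.
        xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
        if xc[2] <= 0:
            continue  # point lies behind the camera
        # Apply the intrinsics, then divide by depth to get pixel coordinates.
        u = (K[0][0] * xc[0] + K[0][1] * xc[1] + K[0][2] * xc[2]) / xc[2]
        v = (K[1][0] * xc[0] + K[1][1] * xc[1] + K[1][2] * xc[2]) / xc[2]
        if 0 <= u < width and 0 <= v < height:
            guided.append((idx, (u, v)))
    return guided
```

Keeping the point index alongside each pixel location preserves the link back to the 3D point, which is what allows matches found in the target image to be turned into 3D correspondences later.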

Datasets

Following [51], the proposed method is evaluated on the public realistic synthetic scenario datasets published with 1DSfM [52], which comprise twelve medium-scale datasets, the large-scale Piccadilly dataset, and the challenging Gendarmenmarkt dataset with symmetric architecture. Each dataset is first partitioned into subsets with an off-the-shelf community detection algorithm; each subset is then reconstructed with COLMAP [46], and these partial reconstructions serve as the input to the model.

Comparison

The proposed method is compared with four state-of-the-art global structure-from-motion (SfM) methods, two incremental SfM methods, and Merge-SfM [51]. Most of these methods gather all images and re-run SfM from scratch, whereas the proposed method and Merge-SfM [51] instead merge the existing partial reconstructions. In the pipeline of [51], the standard image-matching module is replaced with GLFNet; further implementation details are provided in the supplementary material. Following [52], the median and mean position errors are computed with the results of [53] as a reference to evaluate registration accuracy. The units are approximately meters, because the geotags associated with the images are used to place each sequential SfM reconstruction in an approximate world coordinate frame, and a RANSAC approach [54] is used to compute the absolute orientation between a candidate reconstruction and the sequential SfM solution.
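Once a reconstruction has been aligned to the reference frame (the alignment itself, e.g. the RANSAC-based absolute-orientation fit, is assumed to be done already), the median and mean position errors reduce to simple statistics over per-camera distances. The sketch below assumes camera centers are stored in dictionaries keyed by image name; the function name and data layout are hypothetical.

```python
import math
import statistics


def position_errors(estimated, reference):
    """Median and mean distance between aligned and reference camera centers.

    Only cameras present in both dictionaries are compared; the unit follows the
    reference frame (approximately meters for geotag-aligned reconstructions).
    """
    common = sorted(set(estimated) & set(reference))
    if not common:
        raise ValueError("no cameras in common")
    dists = [math.dist(estimated[name], reference[name]) for name in common]
    return statistics.median(dists), statistics.fmean(dists)
```

Reporting the median alongside the mean is useful because a few badly placed cameras can inflate the mean while leaving the median almost unchanged.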

Results

Table 5 compares the reconstruction results of GLFNet and the other methods. GLFNet preserves all images recovered in the partial reconstructions, and its median and mean position errors are competitive with those of the state-of-the-art methods on most datasets, which indicates that the registration succeeds. Building on this, the proposed method recovers significantly more cameras than the other methods, mainly because GLFNet increases the probability of finding correct matches. A qualitative example of GLFNet is shown in Figure 9.

Table 5.

Reconstruction registration accuracy comparison on the public realistic synthetic scenario datasets [52]. The median and mean position errors are given in meters, taking the result of [53] as a reference; the number of cameras in the largest connected component of the input epipolar-geometry (EG) graph is taken from [55]; and the number of recovered cameras is also reported.

Figure 9.

Qualitative reconstruction registration result on Piccadilly. Piccadilly consists of six partial reconstructions (each color represents a different partial 3D reconstruction). One of them is chosen as the source reconstruction, and GLFNet matches the guided points in the source reconstruction to the other five reconstructions. The numbers in parentheses give the number of recovered cameras.

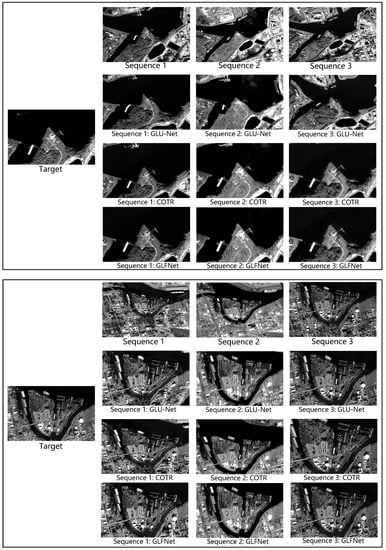

4.3.3. Optical Flow Estimation

Optical flow estimation is a fundamental component of motion analysis: it estimates the motion of pixels between two frames, either for a sparse set of features or for every pixel, and expresses it as motion vectors. It is widely used in object detection and tracking, dominant plane extraction, motion detection, robot navigation, and visual odometry. A crucial step in optical flow estimation is identifying pixel correspondences between the two frames, which GLFNet addresses through image matching.

Datasets

Following [16], the ETH3D dataset [60] is adopted to evaluate the proposed method on optical flow estimation in real 3D scenes. ETH3D is a multi-view dataset containing indoor and outdoor scenes captured with a moving hand-held camera, and it provides a set of sparse, geometrically consistent image correspondences [46].

Comparison

The proposed method is compared with three state-of-the-art image-matching methods, DGC-Net [23], GLU-Net [24], and COTR [16], and with three state-of-the-art optical flow methods, LiteFlowNet [61], PWC-Net [62], and RAFT [63]. Image pairs are sampled from each sequence at different frame intervals to cover different magnitudes of geometric transformation, and the provided points are used as ground-truth correspondences. For each interval, there are approximately 500 image pairs in total. The average end-point error (AEPE), defined as the Euclidean distance between the estimated and ground-truth flow vectors averaged over all ground-truth points, is reported at each interval.
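Because the ground truth here is a sparse set of correspondences, the AEPE can be computed directly on those points. The sketch below assumes aligned lists of source points, predicted target locations, and ground-truth target locations; the function name is hypothetical.

```python
import math


def average_end_point_error(src_points, pred_targets, gt_targets):
    """AEPE over sparse correspondences: mean Euclidean distance between flow vectors."""
    if not src_points or not (len(src_points) == len(pred_targets) == len(gt_targets)):
        raise ValueError("expected non-empty, equally sized point lists")
    total = 0.0
    for (sx, sy), (px, py), (gx, gy) in zip(src_points, pred_targets, gt_targets):
        pred_flow = (px - sx, py - sy)  # estimated motion vector
        gt_flow = (gx - sx, gy - sy)    # ground-truth motion vector
        total += math.dist(pred_flow, gt_flow)
    return total / len(src_points)
```

Since both flow vectors start at the same source point, the end-point error equals the distance between the predicted and ground-truth target locations; the explicit flow vectors are kept only to mirror the definition.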

Results

Table 6 and Figure 10 compare the optical flow estimation results of GLFNet and the other methods. The proposed method outperforms the baseline methods at all intervals, and the improvement is most pronounced at large intervals. A larger interval produces a larger viewpoint difference between the images, which makes matching harder; the proposed method nevertheless matches accurately under such differences. Figure 11 further demonstrates the effectiveness of GLFNet on satellite images.

Table 6.

Quantitative results for optical flow estimation. The average end-point error (AEPE) at different sampling “rates” (frame intervals) is reported. The proposed method performs significantly better as the rate increases and the problem becomes more difficult.

Figure 10.

Qualitative optical flow estimation results on ETH3D for image pairs taken by two different cameras. The proposed method significantly outperforms the state-of-the-art methods.

Figure 11.

Qualitative optical flow estimation results on satellite images. GLFNet, applied to remote-sensing images, achieves superior results.

4.3.4. Visual Localization

The visual localization task is to estimate the 6-DoF poses of target images with respect to a reference 3D scene model, so it relies on highly robust local feature matching, for which GLFNet is also beneficial. In real-world scenarios, the database (base map) has often already been described with a particular descriptor. Traditional methods must re-describe the base map with their own descriptor before localization, which is time-consuming, whereas the proposed method achieves good results without re-describing it.

Datasets

The Aachen Day-Night dataset [64,65] is used to demonstrate the effectiveness of GLFNet for visual localization in outdoor scenes. Its base map is described with SIFT [8], the most common descriptor in industry. The dataset contains 4328 source images taken in the daytime with hand-held cameras over about two years, and 922 target images taken in the daytime and at night with mobile phone cameras. It covers varying conditions, such as day–night changes and changes in scene geometry.

Comparison

GLFNet is compared with state-of-the-art methods without changing the base map descriptor. Following the benchmark protocol, the metric is the percentage of target images localized within the thresholds (0.25 m, 2°)/(0.5 m, 5°)/(5 m, 10°), where each pair bounds the position error in meters and the orientation error in degrees. In practice, GLFNet transfers the sparse points of the original COLMAP [46] model directly into the target images, and the camera pose is recovered by solving PnP with RANSAC [54] on the predicted matches. The same procedure is used for all methods.
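A common way to score localization against threshold pairs such as (0.25 m, 2°) is the fraction of images whose position error and orientation error both fall within the pair. The sketch below assumes that scoring rule and computes the orientation error as the angle of the relative rotation between 3×3 rotation matrices; the helper names are hypothetical, and the code only illustrates the metric.

```python
import math


def rotation_error_deg(r_est, r_gt):
    """Angle (degrees) of the relative rotation r_est^T @ r_gt."""
    # trace(A^T B) equals the sum of element-wise products of A and B.
    trace = sum(r_est[i][j] * r_gt[i][j] for i in range(3) for j in range(3))
    # Clamp so round-off cannot push the cosine outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def localization_recall(errors, thresholds=((0.25, 2.0), (0.5, 5.0), (5.0, 10.0))):
    """Fraction of images with position error <= t_m and rotation error <= t_deg.

    `errors` holds (position_error_m, rotation_error_deg) pairs; a failed
    localization can be passed as (inf, inf) so it never counts as a success.
    """
    n = len(errors)
    return [sum(1 for dp, dr in errors if dp <= t_m and dr <= t_deg) / n
            for t_m, t_deg in thresholds]
```

Because each threshold pair requires both conditions, a pose with a small position error but a large orientation error still counts as a failure.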

Results

The evaluation results of GLFNet and the state-of-the-art methods are given in Table 7. The proposed method improves on the state-of-the-art methods in both daytime and nighttime scenarios. In the daytime scenario, all baseline methods perform well, so the margin is small. In the nighttime scenario, however, the lighting difference between the images is large, and the gap between the proposed method and the baselines widens considerably.

Table 7.

Visual localization evaluation on the Aachen Day-Night benchmark [64,65]. The proposed method outperforms the state-of-the-art methods.

5. Discussion

As detailed in Section 4.1, ablation experiments were conducted to compare the proposed guided point transformer with the traditional transformer on image-matching tasks, and the results demonstrate the significant advantages of the guided point transformer. We observed that using only the coarse-level network yields faster matching but lower precision, whereas relying solely on fine-level regression improves accuracy at the cost of matching speed. The proposed coarse-to-fine approach strikes a balance between the two, combining their advantages to deliver both efficiency and accuracy.
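The speed/accuracy trade-off can be illustrated with a toy coarse-to-fine search. This is a sketch of the general idea only, not GLFNet's network: it assumes a scoring function `score_fn(x, y)` (e.g. the similarity between one guided point's descriptor and the target descriptor at pixel (x, y)), evaluates it only at the centers of a coarse grid, and then refines with a soft-argmax over a small window around the best center. The parameters `stride`, `radius`, and `temperature` are hypothetical.

```python
import math


def coarse_to_fine_match(score_fn, width, height, stride=4, radius=3, temperature=0.1):
    """Two-stage search for the best match of one query in a width x height target.

    Coarse: evaluate score_fn only at the centers of a stride x stride grid and keep
    the best center, which narrows the search space.
    Fine: soft-argmax over the (2*radius+1)^2 window around that center, which gives
    a sub-pixel (x, y) estimate. The number of score evaluations is the number of
    grid centers plus the window size, instead of width * height.
    """
    half = stride // 2
    centers = [(x, y) for y in range(half, height, stride) for x in range(half, width, stride)]
    cx, cy = max(centers, key=lambda p: score_fn(*p))
    window = [(x, y)
              for y in range(max(0, cy - radius), min(height, cy + radius + 1))
              for x in range(max(0, cx - radius), min(width, cx + radius + 1))]
    scores = [score_fn(x, y) for x, y in window]
    peak = max(scores)
    # Subtract the peak before exponentiating for numerical stability.
    weights = [math.exp((s - peak) / temperature) for s in scores]
    total = sum(weights)
    fx = sum(w * x for w, (x, _) in zip(weights, window)) / total
    fy = sum(w * y for w, (_, y) in zip(weights, window)) / total
    return fx, fy
```

On a 16×16 target with default parameters, the search evaluates 16 grid centers plus a 49-pixel window (65 evaluations) instead of all 256 pixels. A larger stride makes the coarse stage cheaper but raises the risk of picking the wrong cell, which mirrors the trade-off described above.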

As detailed in Section 4.2, qualitative assessments in scenarios with large viewpoint differences demonstrate the value of guided points. Moreover, on the standard matching dataset HPatches, GLFNet achieves superior performance, which can be attributed to three key factors. First, introducing guided points into the image-matching problem provides explicit guidance that improves both efficiency and accuracy. Second, the guided point transformer, a core component of GLFNet, incorporates guided points into the feature representation, allowing it to exploit high-dimensional relationships between the guided points and the target image and to extract informative representations for accurate matching. Third, the network architecture of GLFNet matters: the coarse-level network efficiently narrows the search space, reducing computation time, while the fine-level network accurately regresses the coordinates, ensuring high matching precision. In addition, GLFNet is flexible and can be combined with any detector, which broadens its applicability to a wide range of scenarios.

As detailed in Section 4.3, the effectiveness of GLFNet was compared across multiple tasks. GLFNet achieves significant improvements over state-of-the-art methods in remote-sensing image registration. In reconstruction registration, it shows strong robustness across various scenarios. In optical flow estimation, it outperforms all compared matching-based and optical-flow-based methods, with its advantage becoming particularly prominent at larger frame intervals. Finally, GLFNet can effectively leverage the 3D points of existing 3D models, which benefits visual localization.

Despite its strengths, GLFNet has limitations. Dynamic objects change position between the source and target images, which poses significant challenges to matching. In future work, we aim to incorporate semantic information into the matching algorithm to mitigate the impact of dynamic objects on matching performance.

6. Conclusions

This paper presents a novel approach to image matching that reformulates the problem around guided points. This reformulation benefits tasks such as reconstruction registration, visual localization, and incremental reconstruction, because it encourages corresponding points to share landmarks in 3D space. To solve the reformulated problem, GLFNet is proposed: a guided local feature-matching method that efficiently matches local features with the assistance of guided points. GLFNet comprises a coarse-to-fine network based on the guided point transformer, which narrows the search space at the coarse level and regresses precise coordinates at the fine level. Extensive experiments, including ablation studies and parameter sensitivity analyses, validate the effectiveness of each module in GLFNet, and the experimental evaluation demonstrates its superiority in image-matching tasks. GLFNet is also evaluated on various downstream applications, where it proves effective. In the future, we plan to investigate techniques to mitigate the impact of dynamic objects on image matching and to incorporate semantic information into the matching process, which should further enhance the applicability and robustness of GLFNet in real-world scenarios.

Author Contributions

Conceptualization, S.D., Y.X. and J.H.; methodology, S.D., Y.X. and M.L.; software, M.L. and Y.X.; validation, Y.X. and M.L.; formal analysis, J.H. and M.S.; investigation, S.D. and Y.X.; resources, M.S. and M.L.; data curation, M.L. and Y.X.; writing—original draft preparation, S.D. and Y.X.; writing—review and editing, J.H., M.L. and M.S.; visualization, M.S.; supervision, M.S.; project administration, M.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The links to the data sets used in this paper are as follows: HPatches: http://icvl.ee.ic.ac.uk/vbalnt/hpatches/; Google Earth: https://github.com/placeforyiming/CVPR21-Deep-Lucas-Kanade-Homography; 1DSFM: https://www.cs.cornell.edu/projects/1dsfm/; ETH3D: https://www.eth3d.net/datasets; Aachen Day-Night: https://www.visuallocalization.net/datasets/.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhao, Y.; Huang, X.; Zhang, Z. Deep Lucas-Kanade Homography for Multimodal Image Alignment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual Conference, 19–25 June 2021; pp. 15950–15959.

2. Liang, C.; Dong, Y.; Zhao, C.; Sun, Z. A Coarse-to-Fine Feature Match Network Using Transformers for Remote Sensing Image Registration. Remote Sens. 2023, 15, 3243.

3. Cao, L.; Zhuang, S.; Tian, S.; Zhao, Z.; Fu, C.; Guo, Y.; Wang, D. A Global Structure and Adaptive Weight Aware ICP Algorithm for Image Registration. Remote Sens. 2023, 15, 3185.

4. Deng, X.; Mao, S.; Yang, J.; Lu, S.; Gou, S.; Zhou, Y.; Jiao, L. Multi-Class Double-Transformation Network for SAR Image Registration. Remote Sens. 2023, 15, 2927.

5. Qin, R.; Gruen, A. 3D change detection at street level using mobile laser scanning point clouds and terrestrial images. ISPRS J. Photogramm. Remote Sens. 2014, 90, 23–35.

6. Ardila, J.P.; Bijker, W.; Tolpekin, V.A.; Stein, A. Multitemporal change detection of urban trees using localized region-based active contours in VHR images. Remote Sens. Environ. 2012, 124, 413–426.

7. Favalli, M.; Fornaciai, A.; Isola, I.; Tarquini, S.; Nannipieri, L. Multiview 3D reconstruction in geosciences. Comput. Geosci. 2012, 44, 168–176.

8. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

9. Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A Trainable CNN for Joint Description and Detection of Local Features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

10. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018.

11. Bian, J.; Lin, W.Y.; Matsushita, Y.; Yeung, S.K.; Nguyen, T.D.; Cheng, M.M. GMS: Grid-based Motion Statistics for Fast, Ultra-Robust Feature Correspondence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

12. Sarlin, P.E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning Feature Matching with Graph Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

13. Rocco, I.; Cimpoi, M.; Arandjelović, R.; Torii, A.; Pajdla, T.; Sivic, J. NCNet: Neighbourhood Consensus Networks for Estimating Image Correspondences. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1020–1034.

14. Li, X.; Han, K.; Li, S.; Prisacariu, V. Dual-Resolution Correspondence Networks. In The Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: New York, NY, USA, 2020; Volume 33, pp. 17346–17357.

15. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-Free Local Feature Matching with Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual Conference, 19–25 June 2021; pp. 8922–8931.

16. Jiang, W.; Trulls, E.; Hosang, J.; Tagliasacchi, A.; Yi, K.M. COTR: Correspondence Transformer for Matching Across Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 6207–6217.

17. Luo, Z.; Zhou, L.; Bai, X.; Chen, H.; Zhang, J.; Yao, Y.; Li, S.; Fang, T.; Quan, L. ASLFeat: Learning Local Features of Accurate Shape and Localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

18. Revaud, J.; Weinzaepfel, P.; de Souza, C.R.; Humenberger, M. R2D2: Repeatable and Reliable Detector and Descriptor. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 8–14 December 2019.

19. Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Niessner, M. ScanNet: Richly-Annotated 3D Reconstructions of Indoor Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

20. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

21. Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. In Proceedings of the Computer Vision—ECCV 2006, Graz, Austria, 7–13 May 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 430–443.

22. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary Robust Independent Elementary Features. In Proceedings of the Computer Vision—ECCV 2010, Crete, Greece, 5–11 September 2010; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 778–792.

23. Melekhov, I.; Tiulpin, A.; Sattler, T.; Pollefeys, M.; Rahtu, E.; Kannala, J. DGC-Net: Dense geometric correspondence network. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019.

24. Truong, P.; Danelljan, M.; Timofte, R. GLU-Net: Global-Local Universal Network for dense flow and correspondences. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020.

25. Chen, H.; Luo, Z.; Zhou, L.; Tian, Y.; Zhen, M.; Fang, T.; McKinnon, D.; Tsin, Y.; Quan, L. ASpanFormer: Detector-Free Image Matching with Adaptive Span Transformer. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer Nature: Cham, Switzerland, 2022; pp. 20–36.

26. Xu, Z.; Zhang, W.; Zhang, T.; Yang, Z.; Li, J. Efficient Transformer for Remote Sensing Image Segmentation. Remote Sens. 2021, 13, 3585.

27. Ghali, R.; Akhloufi, M.A.; Jmal, M.; Souidene Mseddi, W.; Attia, R. Wildfire Segmentation Using Deep Vision Transformers. Remote Sens. 2021, 13, 3527.

28. Li, Y.; Cheng, Z.; Wang, C.; Zhao, J.; Huang, L. RCCT-ASPPNet: Dual-Encoder Remote Image Segmentation Based on Transformer and ASPP. Remote Sens. 2023, 15, 379.

29. Zhong, B.; Wei, T.; Luo, X.; Du, B.; Hu, L.; Ao, K.; Yang, A.; Wu, J. Multi-Swin Mask Transformer for Instance Segmentation of Agricultural Field Extraction. Remote Sens. 2023, 15, 549.

30. Gong, H.; Mu, T.; Li, Q.; Dai, H.; Li, C.; He, Z.; Wang, W.; Han, F.; Tuniyazi, A.; Li, H.; et al. Swin-Transformer-Enabled YOLOv5 with Attention Mechanism for Small Object Detection on Satellite Images. Remote Sens. 2022, 14, 2861.

31. Li, Q.; Chen, Y.; Zeng, Y. Transformer with Transfer CNN for Remote-Sensing-Image Object Detection. Remote Sens. 2022, 14, 984.

32. Chen, G.; Mao, Z.; Wang, K.; Shen, J. HTDet: A Hybrid Transformer-Based Approach for Underwater Small Object Detection. Remote Sens. 2023, 15, 1076.

33. Xu, X.; Feng, Z.; Cao, C.; Li, M.; Wu, J.; Wu, Z.; Shang, Y.; Ye, S. An Improved Swin Transformer-Based Model for Remote Sensing Object Detection and Instance Segmentation. Remote Sens. 2021, 13, 4779.

34. Zhao, Q.; Liu, B.; Lyu, S.; Wang, C.; Zhang, H. TPH-YOLOv5++: Boosting Object Detection on Drone-Captured Scenarios with Cross-Layer Asymmetric Transformer. Remote Sens. 2023, 15, 1687.

35. Bazi, Y.; Bashmal, L.; Rahhal, M.M.A.; Dayil, R.A.; Ajlan, N.A. Vision Transformers for Remote Sensing Image Classification. Remote Sens. 2021, 13, 516.

36. He, X.; Chen, Y.; Lin, Z. Spatial-Spectral Transformer for Hyperspectral Image Classification. Remote Sens. 2021, 13, 498.

37. Qing, Y.; Liu, W.; Feng, L.; Gao, W. Improved Transformer Net for Hyperspectral Image Classification. Remote Sens. 2021, 13, 2216.

38. Ali, A.M.; Benjdira, B.; Koubaa, A.; Boulila, W.; El-Shafai, W. TESR: Two-Stage Approach for Enhancement and Super-Resolution of Remote Sensing Images. Remote Sens. 2023, 15, 2346.

39. Zheng, X.; Bao, Z.; Yin, Q. Terrain Self-Similarity-Based Transformer for Generating Super Resolution DEMs. Remote Sens. 2023, 15, 1954.

40. Tang, S.; Zhang, J.; Zhu, S.; Tan, P. Quadtree Attention for Vision Transformers. In Proceedings of the International Conference on Learning Representations, Virtual Event, 25–29 April 2022.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

42. Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

43. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

44. Cuturi, M. Sinkhorn Distances: Lightspeed Computation of Optimal Transport. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; Burges, C., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K., Eds.; Curran Associates, Inc.: New York, NY, USA, 2013; Volume 26.

45. Li, Z.; Snavely, N. MegaDepth: Learning Single-View Depth Prediction From Internet Photos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

46. Schonberger, J.L.; Frahm, J.M. Structure-From-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

47. Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A Benchmark and Evaluation of Handcrafted and Learned Local Descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

48. Chang, C.H.; Chou, C.N.; Chang, E.Y. CLKN: Cascaded Lucas-Kanade Networks for Image Alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

49. DeTone, D.; Malisiewicz, T.; Rabinovich, A. Deep Image Homography Estimation. arXiv 2016, arXiv:1606.03798.

50. Le, H.; Liu, F.; Zhang, S.; Agarwala, A. Deep Homography Estimation for Dynamic Scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

51. Fang, M.; Pollok, T.; Qu, C. Merge-SfM: Merging Partial Reconstructions. In Proceedings of the BMVC, Cardiff, UK, 9–12 September 2019.

52. Wilson, K.; Snavely, N. Robust Global Translations with 1DSfM. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 61–75.

53. Snavely, N.; Seitz, S.M.; Szeliski, R. Photo Tourism: Exploring Photo Collections in 3D. In The ACM SIGGRAPH 2006 Papers; Association for Computing Machinery: New York, NY, USA, 2006; pp. 835–846.

54. Fischler, M.A.; Bolles, R.C. Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography. Commun. ACM 1981, 24, 381–395.

55. Ozyesil, O.; Singer, A. Robust Camera Location Estimation by Convex Programming. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

56. Cui, Z.; Tan, P. Global Structure-From-Motion by Similarity Averaging. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

57. Sweeney, C.; Sattler, T.; Hollerer, T.; Turk, M.; Pollefeys, M. Optimizing the Viewing Graph for Structure-From-Motion. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

58. Cui, H.; Gao, X.; Shen, S.; Hu, Z. HSfM: Hybrid Structure-from-Motion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

59. Sweeney, C.; Hollerer, T.; Turk, M. Theia: A Fast and Scalable Structure-from-Motion Library. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 693–696.

60. Schöps, T.; Schönberger, J.L.; Galliani, S.; Sattler, T.; Schindler, K.; Pollefeys, M.; Geiger, A. A Multi-view Stereo Benchmark with High-Resolution Images and Multi-camera Videos. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2538–2547.

61. Hui, T.W.; Tang, X.; Loy, C.C. LiteFlowNet: A Lightweight Convolutional Neural Network for Optical Flow Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 8981–8989.

62. Sun, D.; Yang, X.; Liu, M.Y.; Kautz, J. PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

63. Teed, Z.; Deng, J. RAFT: Recurrent All-Pairs Field Transforms for Optical Flow. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part II. Springer: Berlin/Heidelberg, Germany, 2020; pp. 402–419.

64. Sattler, T.; Weyand, T.; Leibe, B.; Kobbelt, L. Image Retrieval for Image-Based Localization Revisited. In Proceedings of the British Machine Vision Conference, Surrey, UK, 3–7 September 2012; pp. 76.1–76.12.

65. Zhang, Z.; Sattler, T.; Scaramuzza, D. Reference Pose Generation for Long-term Visual Localization via Learned Features and View Synthesis. Int. J. Comput. Vis. 2021, 129, 821–844.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).