Abstract

Mesoscale convective systems (MCSs) and associated hazardous meteorological phenomena cause considerable economic damage and even loss of life in the mid-latitudes. The mechanisms behind the formation and intensification of MCSs are still not well understood due to limited observational data and inaccurate climate models. Improving the prediction and understanding of MCSs is a high-priority area in hydrometeorology. One may study MCSs either by employing high-resolution atmospheric modeling or by analyzing remote sensing images, which are known to reflect some of the characteristics of MCSs, including high cloud-top temperature gradients, specific spatial shapes of temperature patterns, etc. However, research on MCSs using remote sensing data is limited by the insufficient size of databases of satellite-identified MCSs and by poorly equipped automated tools for MCS identification and tracking. In this study, we present (a) the GeoAnnotateAssisted tool for fast and convenient visual identification of MCSs in satellite imagery, which is capable of providing AI-generated suggestions of MCS labels; (b) the Dataset of Mesoscale Convective Systems over the European Territory of Russia (DaMesCoS-ETR), which we created using this tool; and (c) the Deep Convolutional Neural Network for the Identification of Mesoscale Convective Systems (MesCoSNet), constructed following the RetinaNet architecture, which is capable of identifying MCSs in Meteosat MSG/SEVIRI data. We demonstrate that our neural network, optimized in terms of its hyperparameters, provides high MCS identification quality (, true positive rate ) and a well-specified detection uncertainty (false alarm ratio ). Additionally, we demonstrate potential applications of the GeoAnnotateAssisted labeling tool, the DaMesCoS-ETR dataset, and the MesCoSNet neural network in addressing MCS research challenges. 
Specifically, we present the climatology of axisymmetric MCSs over the European territory of Russia from 2014 to 2020 during summer seasons (May to September), obtained using MesCoSNet with Meteosat MSG/SEVIRI data. The automated identification of MCSs by the MesCoSNet artificial neural network opens up new avenues for previously unattainable MCS research topics.

1. Introduction

Over the past thirty years, northern Eurasia, including the territory of Russia, has experienced the warmest temperatures in recorded meteorological history. Air temperatures in Russia are increasing 2.5 times faster than the global average [1]. The observed climate warming has led to an intensification of convective processes in the atmosphere, as well as to an increase in the frequency and intensity of hazardous convective weather events [2,3,4]. In Russia, these events cause significant economic damage and loss of life. The most notable among them are extreme precipitation, including a flash flood in Krymsk in 2012 [5], strong tornadoes in both the European and Asian parts of the country [6,7,8], and destructive linear storms in 2010, 2017, and 2021 [9,10,11]. Hazardous convective events, i.e., heavy convective rainfall, tornadoes, squalls, and large hail, typically occur during the warm season and are generated by mesoscale convective systems (hereafter MCSs). Despite their importance, the characteristics and variability of MCSs over northern Eurasia remain understudied.

An MCS is an organized cluster of cumulonimbus clouds that forms a precipitation region larger than 100 km (in at least one direction) due to deep moist convection [12]. While the majority of MCSs are found in the tropics, a smaller number can be observed in the mid-latitudes during the warm season [13]. The most widely used classification of MCSs, proposed by Maddox [14], is based on their geometry and horizontal dimensions. Specifically, linear and axisymmetric MCSs are distinguished. The former are further subdivided into squall lines (meso-alpha scale) and cumulonimbus ridges (meso-beta scale) based on the Orlanski classification [15]. Axisymmetric MCSs include mesoscale convective complexes (MCCs) (meso-alpha scale systems), as well as cumulonimbus cloud clusters and supercells (meso-beta scale).

Observational and climatological studies of MCSs are based on remote sensing data. In particular, long-term data series are provided by polar-orbiting satellites such as NOAA or Terra/Aqua, and geostationary satellites such as GOES, Meteosat, or Himawari. MCSs can be identified and tracked using infrared satellite images, as they produce contiguous regions of extremely cold cloud-top temperatures [16]. In addition, geostationary satellite imagery has broad spatial and temporal coverage, and the methods for analyzing its data can be applied to any region of the globe. One of the first satellite-based studies of the climatological characteristics of MCSs (focusing on mesoscale convective complexes) was performed by Laing and Fritsch [13] for 1986–1997. Morel and Senesi [17,18] compiled a satellite-derived database of MCSs over Europe for 1993–1997. In subsequent years, many climatological studies of MCSs have been carried out both globally [19] and for macro-regions such as the contiguous United States [19], China [20,21], and West Africa [22]. In addition to tracking MCSs, infrared satellite images are used to evaluate several characteristics of MCSs. In particular, cloud-top signatures such as overshooting tops (OTs) [23], cold rings [24], cold U/V features [25], and above-anvil cirrus plumes [26] indicate strong updrafts and areas with a high potential for severe weather. Long-term databases of these signatures have been established for Europe [27,28], North America [29], and the globe [30].

Along with satellite images, weather radar data are widely used in climatological studies of MCSs [31]. These data have high spatial and temporal resolution and enable the evaluation of MCS characteristics and evolution. Radar-based climatologies of MCSs have been compiled for the U.S. [32] and eastern Europe [33]. In addition to MCSs themselves and their morphological features, weather radar data have been successfully used to estimate the climatological characteristics of hailstorms [34,35]. The main limitation of radar data is their sparse spatial coverage in some regions. This is especially true in northern Eurasia, where the new Doppler weather radar network became operational in the 2010s and still only partially covers western Russia [36], while data from previous radars (MRL-5) are not integrated, are fragmentary, and are often not digitized.

MCS statistics for northern Eurasia are currently fragmented and insufficient. Only a few research studies may be mentioned, including Abdullaev et al. [37], who studied 214 MCSs in the Moscow region in 1990–1995; Sprygin [38], who identified 30 long-lived MCSs over Belarus, Ukraine, and the central part of European Russia for 2009–2019; and Chernokulsky et al. [39], who estimated the characteristics of 128 convective storms and their cloud-tops associated with severe wind and tornado events over the western regions of northern Eurasia for 2006–2021. The main drawback of the aforementioned studies is that MCS selection was secondary and determined by the initial selection of hazardous events (i.e., squall or tornado), which may have biased MCS sampling towards more powerful MCSs. Currently, long-term homogeneous and unbiased statistics of MCSs are lacking not only for the entire northern Eurasia region, but also for its European part.

The identification of MCSs in satellite images can be carried out by experts or with automated tools. The increasing amount of experimental data, especially data on the spatial and temporal distribution of various meteorological parameters, has led to a growing reliance on automated data-processing and analysis techniques. Meanwhile, visual identification and analysis of MCSs in satellite imagery remain time-consuming. Alternatively, to better understand the dynamics of MCSs, experts can use advanced machine learning algorithms capable of identifying patterns and trends in the vast amounts of available data.

Computer vision techniques are frequently employed for the analysis of two-dimensional, visually representable data, alongside the numerical simulation methods mentioned above. These include image-processing techniques adapted for visual pattern recognition, keypoint detection and clustering, and connected component identification. In addition to image-processing methods that analyze color and brightness [40], various other approaches can be utilized, including image analysis with machine learning algorithms [41].

Machine learning (ML) and deep learning (DL) methods have been shown to be useful tools for pattern recognition, visual object detection, and other computer vision tasks [42,43,44,45,46]. Recent studies have demonstrated successful applications of ML methods for identifying extreme weather events in reanalysis data [47,48,49]. Most research groups focus on the identification of synoptic-scale atmospheric phenomena, such as tropical cyclones [50,51] and atmospheric rivers [52], because these events are clearly represented in most observational data and in simulated atmospheric dynamics data. There are some studies on the identification of mesoscale geophysical phenomena using DL techniques: in Huang et al. 2017 [53], the authors identified submesoscale oceanic vortices in SAR satellite data; in Krinitskiy et al. 2018 [54], the authors demonstrated the capabilities of convolutional neural networks for classifying polar lows in infrared and microwave satellite mosaics. Further, in Krinitskiy et al. 2020 [55], the authors applied convolutional neural networks to the identification of polar lows in satellite mosaics over the Southern Ocean.

To the best of our knowledge, there are only a few studies on the identification and tracking of mesoscale convective systems over land in satellite imagery using deep learning techniques [29]. There are several reasons for this gap:

- the signal-to-noise ratio of satellite imagery is relatively low. This is not an issue for major synoptic-scale events; however, it becomes one for mesoscale phenomena;

- one of the most impactful issues for supervised ML methods in the Earth sciences is the lack of labeled datasets. There are a number of labeled benchmarks for classic computer vision tasks, such as Microsoft COCO [56], Cityscapes [57], ImageNet [58], and others [59,60,61]. In the climate sciences, the amount of remote sensing and modeling data is unprecedented today, but most of these datasets are unlabeled with respect to semantically meaningful meteorological events and phenomena. There are rare exceptions, such as the Dataset of All-Sky Imagery over the Ocean (DASIO [41]) or the Southern Ocean Mesocyclones Dataset (SOMC [62]);

- even in the rare cases where labeled datasets exist, the number of labels is insufficient for training a reliable machine learning or deep learning model with good generalization;

- in the case of a labeled dataset, one needs to deal with the uneven fractions of “positively” and “negatively” labeled points (pixels) in a satellite image: the area occupied by the labeled phenomena in an image is much smaller than the phenomena-free area. This problem is referred to as “class imbalance” in machine learning. As a consequence, a deep learning model may lose its ability to generalize, producing either too many false alarms or too many missed events. With an unsuitable neural network configuration and an improper training process, the model may end up in a state where it “prefers” to identify no events at all: this yields a low loss value that is only insignificantly higher than the loss the model would achieve by actually trying to find the phenomena of interest.
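The following minimal sketch illustrates the class-imbalance effect described in the last item. It compares the mean per-pixel binary cross-entropy of a trivial model that assigns a low MCS probability everywhere with that of a model that actually responds to the phenomenon. The image size, the 1% fraction of MCS pixels, and all probability values are arbitrary assumptions chosen for illustration, not statistics of our dataset.

```python
import math


def mean_bce(labels, probs, eps=1e-7):
    """Mean binary cross-entropy over all pixels."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


# Hypothetical image of 10,000 pixels, 1% of which belong to an MCS.
n_pixels, n_pos = 10_000, 100
labels = [1] * n_pos + [0] * (n_pixels - n_pos)

# Trivial model: the same small probability everywhere, i.e., no detections at all.
lazy = [0.01] * n_pixels

# Hypothetical detector: fairly confident on MCS pixels, with some false-alarm noise.
detector = [0.7] * n_pos + [0.05] * (n_pixels - n_pos)

loss_lazy = mean_bce(labels, lazy)
loss_detector = mean_bce(labels, detector)
print(f"trivial model: {loss_lazy:.4f}, detector: {loss_detector:.4f}")
```

With these assumed numbers, the detector's loss is lower than that of the trivial model by less than 0.002, so the plain cross-entropy barely distinguishes a useful detector from a model that detects nothing.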

Most of the abovementioned issues do not affect the quality of the detection of synoptic-scale extreme atmospheric phenomena in reanalyses [47]. However, each one of these issues needs to be addressed in the case of MCS detection in remote sensing imagery.

The primary objective of our study is to determine the frequency of MCS occurrence over a specific geospatial region using remote sensing imagery and deep learning techniques. At the onset of our research, we acknowledged the challenges associated with obtaining a sufficiently large labeled dataset of MCSs in remote sensing imagery due to the costs of expert labeling. Unlike routine visual object detection (VOD), MCSs are not easily recognizable by non-expert human observers, rendering crowd-sourcing an impractical solution for collecting extensive labeled datasets.

Given the limitations in expert resources, we accepted certain trade-offs in the properties of the solution derived from our study. Consequently, we accepted a potential reduction in confidence regarding the detected MCS labels when employing a deep learning model trained on our labeled data. Despite this, our findings may still provide valuable insights into the activity of MCSs within the region of interest.

Our study’s goal can be rephrased as determining the probability of MCS occurrence within a given time frame. While we may not be able to detect MCSs with high precision in every remote sensing image, our approach can still yield accurate estimates of MCS frequency. This information can then be utilized for further research on correlations between MCSs and meteorological indices or for assessing their economic impact.

Thus, in this study, we address the challenge of estimating the frequency of mesoscale convective system occurrence over a specific region of interest, namely the European territory of Russia (ETR). To achieve this objective, we tackle a proxy problem, which involves detecting all MCSs present in each available remote sensing imagery snapshot. Subsequently, we derive the frequency of MCS occurrence from these detections, taking into account the temporal sampling frequency of the satellite imagery. This approach allows one to estimate the prevalence of MCSs within the target region.
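The frequency estimate described above can be sketched as the fraction of available snapshots in which at least one MCS is detected. In this minimal example, the input format (a mapping from snapshot timestamps to lists of detection scores), the function name `occurrence_frequency`, and the score threshold of 0.5 are hypothetical; the actual estimation procedure is described in Section 3.

```python
from datetime import datetime, timedelta


def occurrence_frequency(detections_by_time, score_threshold=0.5):
    """Fraction of snapshots in which at least one MCS is detected.

    detections_by_time maps a snapshot timestamp to a list of detection
    scores (hypothetical format). Snapshots with an empty list count as
    MCS-free, so every available snapshot must be present as a key.
    """
    if not detections_by_time:
        raise ValueError("no snapshots provided")
    n_with_mcs = sum(
        1 for scores in detections_by_time.values()
        if any(s >= score_threshold for s in scores)
    )
    return n_with_mcs / len(detections_by_time)


# Hypothetical 15 min snapshots covering one hour.
t0 = datetime(2018, 7, 1, 12, 0)
snapshots = {
    t0: [0.8],
    t0 + timedelta(minutes=15): [0.9, 0.3],
    t0 + timedelta(minutes=30): [0.2],
    t0 + timedelta(minutes=45): [],
}
freq = occurrence_frequency(snapshots)
print(freq)  # 0.5
```

Because every available snapshot enters the denominator, MCS-free snapshots must be kept in the mapping with an empty list; dropping them would bias the estimate upwards.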

The problem of phenomenon detection in satellite imagery may be reformulated as a VOD problem, with the only exception that satellite imagery is not a visual image. This difference does not prevent one from applying state-of-the-art VOD methods, though. Among them are two approaches: VOD using a locate-and-adjust approach, and VOD using a semantic segmentation approach. In our study, we focused on the locate-and-adjust approach, since our preliminary studies demonstrated that extreme mesoscale cyclones (also known as polar lows) and other extreme mesoscale phenomena are hard to segment with state-of-the-art deep convolutional neural networks given a small labeled dataset [55]. Thus, we solve the problem of MCS detection using the locate-and-adjust approach, which implies two generic steps: (i) detecting the rectangular bounding boxes of the phenomena, and (ii) assigning each box a score (probability) that it represents a phenomenon of interest.
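In locate-and-adjust detectors such as RetinaNet, the raw output is a set of scored boxes, which is typically filtered by a score threshold and by non-maximum suppression (NMS) based on the intersection-over-union (IoU) of boxes. The sketch below illustrates this generic post-processing step. The box format (x_min, y_min, x_max, y_max) in pixels and both thresholds are assumptions, and this is not necessarily the exact procedure used in MesCoSNet.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes, scores, score_threshold=0.5, iou_threshold=0.5):
    """Score thresholding followed by greedy non-maximum suppression.

    Returns the indices of the kept boxes, ordered by decreasing score.
    """
    candidates = sorted(
        (i for i, s in enumerate(scores) if s >= score_threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with a higher-scored kept box.
        if all(iou(boxes[i], boxes[j]) < iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (40, 40, 50, 50)]
scores = [0.9, 0.8, 0.6, 0.3]
print(filter_detections(boxes, scores))  # [0, 2]
```

Here the second box is suppressed as a near-duplicate of the first (IoU of about 0.68), and the last box is discarded by the score threshold.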

In our study, we develop a method based on deep neural networks, which we adapted to handle the issues of class imbalance and a small amount of labeled data. We started with the RetinaNet [63] neural network architecture, which was designed specifically to handle the class imbalance issue in VOD problems.
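RetinaNet handles class imbalance primarily through the focal loss [63], $\mathrm{FL}(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log p_t$, where $p_t$ is the predicted probability of the true class. The sketch below compares the focal loss with the ordinary cross-entropy for an easy negative (background confidently classified as background) and a hard positive (a poorly detected MCS). The values α = 0.25 and γ = 2 are the settings recommended in the original RetinaNet paper; the hyperparameters used in MesCoSNet may differ.

```python
import math


def cross_entropy(p, y, eps=1e-7):
    """Binary cross-entropy for one prediction p of the positive class."""
    p = min(max(p, eps), 1.0 - eps)
    return -math.log(p if y == 1 else 1.0 - p)


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss FL(p_t) = -alpha_t * (1 - p_t)**gamma * log(p_t)."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# The ratio FL/CE shows how strongly each example is down-weighted.
easy_negative = focal_loss(0.01, 0) / cross_entropy(0.01, 0)  # confidently correct background
hard_positive = focal_loss(0.2, 1) / cross_entropy(0.2, 1)    # poorly detected MCS
print(f"easy negative: {easy_negative:.1e}, hard positive: {hard_positive:.2f}")
```

With these settings, the contribution of the easy negative is reduced by a factor of roughly 10^4 relative to cross-entropy, while the hard positive keeps 16% of its cross-entropy value, so the abundant MCS-free background no longer dominates the loss.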

The rest of the paper is organized as follows. In Section 2, we describe the source data of our study, as well as the procedure for collecting the Dataset of Mesoscale Convective Systems over the ETR (DaMesCoS-ETR). In Section 3, we present the method based on deep convolutional neural networks (DCNNs), namely, the Neural Network for the Identification of Mesoscale Convective Systems (MesCoSNet), which we developed following the RetinaNet [63] design for the detection of MCSs in remote sensing imagery; we also describe the method for estimating the frequency of MCSs over the ETR based on the results of MCS detection with MesCoSNet. In Section 4, we present the results of our study regarding the proxy problem of MCS detection along with the problem of MCS frequency estimation over the ETR. In Section 5, we discuss the properties of the solutions we present. In Section 6, we summarize the results and provide an overview of forthcoming studies based on these results.

2. Data

2.1. Remote Sensing Data

In this study, we used remote sensing data from several Meteosat satellites. Meteosat mission imagery has proven useful in meteorological studies since 1977 [64]. Meteosat-1 to Meteosat-7 were first-generation (MFG) satellites equipped with the Meteosat Visible and Infrared Imager (MVIRI). MVIRI provided snapshots in three bands: visible (0.5–0.9 µm) with a central wavelength of 0.7 µm, water vapor (5.7–7.1 µm) with a central wavelength of 6.4 µm, and thermal infrared (10.5–12.5 µm) with a central wavelength of 11.5 µm. First-generation Meteosat satellites ceased operation on 31 March 2017. After the MFG, second-generation satellites were gradually deployed: the Meteosat Second Generation (MSG) satellites Meteosat-8, -9, -10, and -11. According to the Eumetsat web portal [65], MSG satellites are currently employed for meteorological observations. All the MSG satellites are geostationary and operate at an altitude of about 36,000 km. We provide here a brief summary of the MSG satellites to justify our choice of the data source for our study focused on the European territory of Russia.

Meteosat-8 (MSG-1) was deployed in 2002. On 1 February 2018, it was placed in the E position to serve the Indian Ocean Data Coverage (IODC), replacing Meteosat-7. According to the Eumetsat web portal, it was retired on 1 July 2022. Meteosat-9 (MSG-2) was deployed in 2005. It is placed in the E position. It observes the full Earth disk with an imaging period of 15 min and is intended to operate until 2025. On 1 June 2022, it was moved to E to serve the IODC. Meteosat-10 (MSG-3) was deployed in 2012 and is placed in the E position. It also observes the full Earth disk with an imaging period of 15 min; in addition, it scans Europe, Africa, and the adjacent seas every 5 min. This satellite is expected to operate until 2030. Meteosat-11 (MSG-4) was deployed in June 2015. It is placed in the E position. It observes the full Earth disk with an imaging period of 15 min. According to the Eumetsat web portal, its availability lifetime is expected to end in 2033. A summary of these satellites is presented in Table 1.

Table 1.

Meteosat satellites used in this study for identifying mesoscale convective systems over European Russia [65].

Each MSG satellite carries a SEVIRI instrument. SEVIRI stands for “Spinning Enhanced Visible and InfraRed Imager”; it observes the Earth in 12 spectral channels covering the visible, near-infrared, and infrared ranges. The SEVIRI instrument scans every 5 min (MSG-3) or every 15 min (MSG-1, 2, 4). The high-resolution visible (HRV) channel has a sampling distance of 1 km at the sub-satellite point, whereas the other channels have a sampling distance of 3 km.

Following the practices described in recent studies for detecting MCSs using remote sensing imagery [24,66], we employed a limited subset of SEVIRI bands and their combination:

- band-5 (ch5): radio-brightness temperature imagery for the 6.2 µm mean wavelength, which characterizes the water vapor content in the atmospheric column;

- band-9 (ch9): radio-brightness temperature imagery for the 10.8 µm mean wavelength, which characterizes the cloud-top temperature;

- BTD, which is the difference between ch5 and ch9: $BTD = ch5 - ch9$.

We also applied normalization and non-linear re-scaling to these features in order to highlight the patterns in imagery that are characteristic of various MCSs according to studies on the physics of mesoscale convective systems. These transformations are equivalent to those we applied when preprocessing satellite imagery for training and testing our neural network MesCoSNet (see Section 3.2.2). As we mention in Section 3.2.2, these transformations are meant to bring the distribution of the features of the source remote sensing data close to the distribution of the R, G, B features of images in the ImageNet [58] dataset, which includes images of real-world visual scenes that a person observes on an everyday basis.
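Section 3.2.2 specifies the exact transformations; the following sketch only illustrates the general idea under stated assumptions. It computes BTD, rescales the features to [0, 1] within clipping bounds, applies a power-law transform to BTD as one possible non-linear rescaling, and standardizes the three resulting features with the per-channel ImageNet mean and standard deviation, so that they resemble the R, G, B inputs expected by ImageNet-pretrained backbones. The function name `to_network_input`, all clipping bounds, and the exponent are hypothetical placeholders, not the values used for MesCoSNet.

```python
import numpy as np

# Per-channel ImageNet statistics commonly used with pretrained CNN backbones.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])


def minmax(x, lo, hi):
    """Clip x to [lo, hi] and scale it linearly to [0, 1]."""
    return (np.clip(x, lo, hi) - lo) / (hi - lo)


def to_network_input(ch5, ch9, bounds_ch5=(-80.0, -20.0), bounds_ch9=(-90.0, 30.0),
                     bounds_btd=(-60.0, 5.0), power=4.0):
    """Stack ch5, ch9 and BTD = ch5 - ch9 into an ImageNet-like (H, W, 3) array.

    Inputs are brightness temperatures in deg C. All bounds and the power-law
    exponent are illustrative placeholders.
    """
    btd = ch5 - ch9
    f5 = minmax(ch5, *bounds_ch5)
    f9 = minmax(ch9, *bounds_ch9)
    # A power law with power > 1 compresses the lower part of the BTD range
    # and stretches its upper part, where values close to or above zero lie.
    fbtd = minmax(btd, *bounds_btd) ** power
    features = np.stack([f5, f9, fbtd], axis=-1)
    # Standardize each feature with the ImageNet mean/std of the matching RGB channel.
    return (features - IMAGENET_MEAN) / IMAGENET_STD


ch5 = np.full((2, 2), -50.0)
ch9 = np.full((2, 2), -30.0)
x = to_network_input(ch5, ch9)
print(x.shape)  # (2, 2, 3)
```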

2.2. Visual Identification and Tracking of Mesoscale Convective Systems in Remote Sensing Data

When collecting the DaMesCoS-ETR, we used the GeoAnnotateAssisted tool presented in Section 2.3. It transforms the source remote sensing imagery fields as described in Section 3.2.2 and presents them as a conventional color image with auxiliary georeferencing information (not shown to the observer). It provides a toolkit for creating ellipse-shaped labels of MCSs or other events and for linking them into tracks.
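GeoAnnotateAssisted produces ellipse-shaped labels, whereas a locate-and-adjust detector such as RetinaNet is trained on rectangular boxes. One natural conversion, sketched below, takes the axis-aligned bounding box of the (possibly rotated) ellipse. The parameterization (center, semi-axes, and rotation angle in pixel coordinates) and the function name are assumptions for illustration, not the tool's actual label format.

```python
import math


def ellipse_to_bbox(cx, cy, a, b, theta):
    """Axis-aligned bounding box of a rotated ellipse.

    cx, cy: center; a, b: semi-axes; theta: rotation of the a-axis (radians).
    Returns (x_min, y_min, x_max, y_max).
    """
    # Half-extents of the rotated ellipse along the x and y axes.
    half_w = math.sqrt((a * math.cos(theta)) ** 2 + (b * math.sin(theta)) ** 2)
    half_h = math.sqrt((a * math.sin(theta)) ** 2 + (b * math.cos(theta)) ** 2)
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


print(ellipse_to_bbox(100, 50, 30, 10, 0.0))          # (70.0, 40.0, 130.0, 60.0)
print(ellipse_to_bbox(100, 50, 30, 10, math.pi / 2))  # approximately (90, 20, 110, 80)
```

For strongly elongated, obliquely oriented ellipses the box is considerably larger than the ellipse itself, which is a known limitation of box-based detectors for such shapes.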

When labeling MCSs, an expert follows the established methodology of MCS identification. Classic definitions of MCSs and mesoscale convective complexes (MCCs) of various types were proposed by Maddox in 1980 [14]: an MCC has a cloud shield with a cloud-top temperature of ≤ −32 °C and an area of at least 100,000 km²; the interior area with a cloud-top temperature of ≤ −52 °C should be at least 50,000 km². These characteristics should persist for at least 6 hours. The ratio between the minor and major diameters of an MCC candidate should be at least 0.7.
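As a minimal sketch, the Maddox criteria (cloud shield ≤ −32 °C of at least 100,000 km², interior region ≤ −52 °C of at least 50,000 km², both holding for at least 6 h, and an eccentricity of at least 0.7) can be checked for one tracked system as follows. We assume the system is given as a time-ordered list of observations with precomputed areas of contiguous cold-cloud regions; the `Snapshot` fields and the function name are hypothetical. Duration is measured between the first and last consecutive qualifying observations, and, following Maddox [14], the shape criterion is evaluated at the time of maximum extent of the −32 °C cloud shield.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    hour: float          # time since the first observation, h
    area_32: float       # area of the cloud shield with T <= -32 C, km^2
    area_52: float       # area of the interior region with T <= -52 C, km^2
    eccentricity: float  # minor/major axis ratio of the cloud shield


def is_mcc(track, min_duration_h=6.0):
    """Simplified check of the Maddox (1980) MCC criteria for one tracked system."""
    # Longest continuous period during which both size criteria hold.
    best, start = 0.0, None
    for s in track:
        if s.area_32 >= 100_000 and s.area_52 >= 50_000:
            start = s.hour if start is None else start
            best = max(best, s.hour - start)
        else:
            start = None  # the size criteria are broken; restart the count
    # Shape criterion at the time of maximum extent of the -32 C shield.
    peak = max(track, key=lambda s: s.area_32)
    return best >= min_duration_h and peak.eccentricity >= 0.7


track = [Snapshot(h, 120_000, 60_000, 0.8) for h in range(7)]  # hourly, 0 to 6 h
print(is_mcc(track), is_mcc(track[:6]))  # True False
```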

However, some authors have proposed more relaxed MCC criteria. For example, Morel and Senesi (2002) [17,18] proposed using the gradient of the cloud-top temperature for MCC identification. Feidas and Cartalis (2001) [67] used threshold values in several spectral bands. In recent years, the identification of MCSs in satellite data has been based on typical features (also known as signatures or spatial patterns). There are three major signatures:

- Overshooting tops (OTs), which are produced by strong updrafts rising above the anvil of an MCS. OTs can be identified in visible and infrared spectral bands. Since OTs form due to strong updrafts, their presence often points to a mesocyclone within the MCS. OTs are typical signatures of supercell clouds and indicate zones with a high probability of hazardous weather events. A statistically significant relationship between OTs and severe weather was recently shown [68,69,70];

- The ring-shaped and U/V-shaped signatures (cold ring, cold-U/V), which arise when the cold area inside the MCS cloud-top is shielded by a plume of relatively warm cloud particles. These particles are lofted by high-speed updrafts into the lower stratosphere. Ring-shaped and U/V-shaped signatures are typically attributed to supercell clouds and can be used to detect them [24,68];

- The plumes of cirrus clouds above the anvil, the formation of which is related to the ejection of cloud particles by intense updrafts over the central part of an MCS [71,72].

When searching for MCSs, our experts examined the records of severe weather events reported in broadcast news, printed and electronic news feeds, and weather reports, focusing on reports of strong winds, extreme rainfall, and other extreme weather events. An expert then examined the Meteosat imagery matching the report in terms of date and time. The expert focused mainly on the region of the report; however, adjacent territories were also examined.

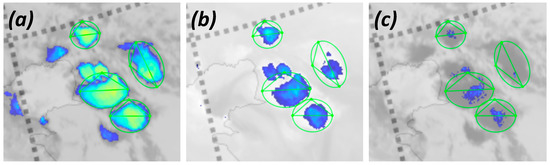

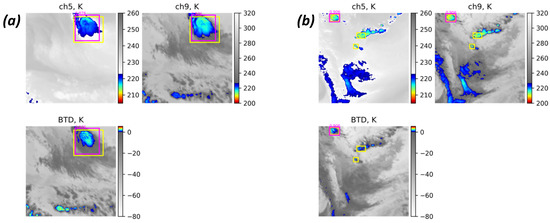

With the transformations of the Meteosat source data described in Section 3.2.2, the data from the SEVIRI instrument of Meteosat are presented in a way that a human expert can perceive comfortably. The fields of transformed features are presented to the expert one by one, and the expert may switch channels using the buttons in the GeoAnnotateAssisted app. We also adjusted the colormaps for each of the channels (namely, and , see Section 3.2.2). With these colormaps, the GeoAnnotateAssisted app produces an image that visually represents one of the channels. The areas of each image that typically correspond to MCS presence are colored, and the brighter the color, the higher the radiobrightness temperature (for and channels). The rest of each image is “shaded” (presented in grayscale). The threshold values employed to shade the irrelevant areas follow the threshold-based MCS identification technique proposed by Maddox [14]: C, C. In the case of , the colored area corresponds to values exceeding a particular threshold value C; the brightness of the color corresponds to the height of the . As we discuss further in Section 3.2.2, the scale of , representing the for visual examination and for our neural network, is non-linear. The non-linearly rescaled highlights colored MCS-relevant areas more expressively than the commonly used linear rescaling. In Figure 1, we demonstrate the resulting representations of the channels as they are shown in the GeoAnnotateAssisted app. In this figure, we also show examples of MCSs labeled by our experts (green ellipses).

Figure 1.

An example of four mesoscale convective systems labeled by our expert in Meteosat imagery using our GeoAnnotateAssisted labeling and tracking tool. Here, the actual representations of transformed channels and are shown (see Section 3.2.2 for details) using the actual color maps employed in GeoAnnotateAssisted. Green ellipses are the labels an expert has placed as a result of the examination of these representations. Here, in panels, the following channels are shown: (a) , (b) and (c) . We do not show temperature color bars, since the values of the re-scaled features , and are unitless.
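The shading scheme described above (MCS-relevant areas in color, the rest of the image in grayscale) can be sketched for a single brightness temperature field as follows. The −32 °C threshold, the clipping range, the red-to-yellow color ramp, and the function name `shade_field` are illustrative assumptions; the actual GeoAnnotateAssisted colormaps are not reproduced here.

```python
import numpy as np


def shade_field(temp_c, threshold_c=-32.0, t_min=-90.0, t_max=30.0):
    """Render a brightness temperature field (deg C) as an RGB image in [0, 1].

    Pixels at or below threshold_c (candidate MCS cloud tops) are colored with a
    red-to-yellow ramp that gets brighter with temperature; all other pixels are
    shown in grayscale.
    """
    t = np.clip(temp_c, t_min, t_max)
    rgb = np.empty(t.shape + (3,))
    # Grayscale for the irrelevant area: warmer pixels are darker, as in IR imagery.
    gray = (t_max - t) / (t_max - t_min)
    rgb[...] = gray[..., None]
    cold = t <= threshold_c
    # Position within the colored range: 0 at t_min, 1 at the threshold.
    v = (t[cold] - t_min) / (threshold_c - t_min)
    rgb[cold] = np.stack([np.ones_like(v), v, np.zeros_like(v)], axis=-1)
    return rgb


field = np.array([[-80.0, 10.0]])
rgb = shade_field(field)
print(rgb.shape)  # (1, 2, 3)
```

Running such a rendering on the server side, as GeoAnnotateAssisted does, keeps the annotator's client free of the array processing.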

2.3. GeoAnnotateAssisted: Client-Server Annotation Tool for Remote Sensing Data

In this section, we describe the software package we developed for the labeling of MCSs in Meteosat data snapshots. We name it GeoAnnotateAssisted.

If there is a need to label spatial patterns in a two-dimensional field for training an artificial neural network (ANN), there are several problems to solve. First, the data should be represented in a human-readable form that helps an expert recognize the events clearly. Second, an expert needs a clear and simple way of labeling an event in this representation. Then, the labels an expert creates should be translated into representation-agnostic event attributes, which are typically (but not necessarily) its geospatial coordinates, physical size, etc. These attributes should then be transformable into labels that are suitable for training an ANN model. Finally, when tracking long-lived natural phenomena, one needs to link the labels of an individual event across several consecutive data snapshots.
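Translating a label drawn on the image into representation-agnostic attributes requires per-pixel geographic coordinates. A minimal sketch, assuming latitude and longitude grids are available for the displayed field (here as hypothetical nested lists `lats` and `lons`) and approximating the Earth as a sphere, converts an ellipse center and one end of its major axis into the center coordinates and the major-axis length in kilometers via the haversine formula.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def label_to_geo(center_px, axis_end_px, lats, lons):
    """Convert a pixel-space ellipse label to (lat, lon, major-axis length in km).

    center_px, axis_end_px: (row, col) of the ellipse center and of one end of
    its major axis; lats, lons: hypothetical per-pixel coordinate grids.
    """
    (r0, c0), (r1, c1) = center_px, axis_end_px
    lat0, lon0 = lats[r0][c0], lons[r0][c0]
    semi_major_km = haversine_km(lat0, lon0, lats[r1][c1], lons[r1][c1])
    return lat0, lon0, 2 * semi_major_km


# Toy grid along the equator with 1 degree spacing in longitude.
lats = [[0.0, 0.0, 0.0]]
lons = [[30.0, 31.0, 32.0]]
lat, lon, length = label_to_geo((0, 1), (0, 2), lats, lons)
print(lat, lon, round(length, 1))  # 0.0 31.0 222.4
```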

In order to be able to solve these problems, the software that is used for labeling should meet several requirements:

- it should be easy enough to install on the annotator’s computer;

- it should be lightweight in terms of the annotator’s computer computational load while preprocessing remote sensing data;

- it should be capable of creating a human-readable representation of remote sensing data;

- it should be fast enough in creating this representation;

- the representation should be physics-related, e.g., demonstrating the anomalies of radio-brightness temperature in a certain band, which can be used to determine certain classes of identified phenomena;

- the representation should be adjustable to some extent, so that an expert can validate an event candidate using several representations;

- the labeling capabilities of the software should match the specific requirements of the task; e.g., labeling with special shapes such as ellipses should be supported, and the labeling procedure should be fast (one should not have to annotate an ellipse-shaped event using a free-form polygon, since this is very time-consuming);

- the labeling tool should provide a way to conveniently link the labels of individual events across multiple consecutive data snapshots.
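Linking labels across consecutive snapshots can be illustrated with a simple greedy nearest-neighbour matching of label centers. This is a hypothetical example of a human-designed linking heuristic, not the algorithm implemented in GeoAnnotateAssisted; the function name, the use of center distance as the only criterion, and the `max_dist` cutoff are assumptions.

```python
import math


def link_labels(prev_centers, next_centers, max_dist):
    """Greedily link labels in two consecutive snapshots by center distance.

    prev_centers, next_centers: lists of (x, y) label centers (e.g., in km).
    Returns sorted (i_prev, j_next) pairs; each label is used at most once,
    and pairs farther apart than max_dist are not linked.
    """
    pairs = sorted(
        (math.dist(p, q), i, j)
        for i, p in enumerate(prev_centers)
        for j, q in enumerate(next_centers)
    )
    used_prev, used_next, links = set(), set(), []
    for d, i, j in pairs:
        if d > max_dist:
            break  # all remaining pairs are even farther apart
        if i not in used_prev and j not in used_next:
            links.append((i, j))
            used_prev.add(i)
            used_next.add(j)
    return sorted(links)


prev = [(0.0, 0.0), (100.0, 100.0)]
nxt = [(10.0, 0.0), (300.0, 300.0), (105.0, 95.0)]
print(link_labels(prev, nxt, max_dist=50.0))  # [(0, 0), (1, 2)]
```

A label in the later snapshot that remains unlinked (here, the one at (300, 300)) can be interpreted as a newly formed system; in an assisted tool, such suggestions would still be confirmed or corrected by the expert.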

There are a number of ready-to-use labeling software packages available. Some of them are free-to-use, others are provided for a fee. They also differ in their installation procedures: some of them are browser-based, others are implemented in a programming language and require installation. Some of the tools are capable of suggesting the labels generated with either classic computer vision techniques or pretrained deep learning tools. However, these pretrained neural networks are not expected to work well in the case of weather phenomena since they were trained on the datasets of visual objects.

The most notable labeling tools are the following:

- V7 [73] (https://www.v7labs.com/, accessed on 20 April 2023)—data labeling and management platform, which is paid or free of charge for educational open data; it is browser-based for an annotator; thus, the data is processed on the server-side. Automatic labels suggestion is implemented;

- SuperAnnotate [74] (https://www.superannotate.com/, accessed on 20 April 2023)—a proprietary annotation tool; paid or free-of-charge for early-stage startups;

- LabelMe [75] (https://github.com/wkentaro/labelme, accessed on 20 April 2023)—a classic graphical image annotation tool written in Python [76] with Qt for the visual interface. It was inspired by the LabelMe web-based annotation tool by MIT (http://labelme.csail.mit.edu/Release3.0/, accessed on 20 April 2023).

- CVAT [77] (https://cvat.org/, accessed on 2 April 2023)—an image annotation tool which is implemented in Python [76] and web-serving languages. The tool may be deployed on a self-hosted server in a number of ways which makes it versatile. The tool is also deployed on the Web at https://cvat.org/ (accessed on 2 April 2023) for public use. There is also a successor to this tool presented at cvat.ai (https://cvat.ai/, accessed on 20 April 2023), which is capable of algorithmic assistance (automated interactive algorithms, like intelligent scissors, histogram equalization, etc.).

- Labelimg [78] (https://github.com/tzutalin/labelImg, accessed on 20 April 2023)—an annotation tool implemented in Python with Qt, similar to LabelMe. It is designed to be deployed on a local annotator’s computer and requires a Python interpreter of a certain version.

- ImgLab [79] (https://imglab.in/, accessed on 20 April 2023)—client-server annotation tool, the client side of which is platform-independent and runs directly in a browser. Thus, it has no specific prerequisites for an annotator’s computer. Its server-side is deployed on a server with some software-specific requirements, however.

- ClimateContours [52]—to the best of our knowledge, is the only annotation tool adapted to the labeling of climate events, e.g., atmospheric rivers. It is essentially a variant of LabelMe by MIT (http://labelme.csail.mit.edu/Release3.0/, accessed on 20 April 2023); thus, in Table 2, we do not list it explicitly. At the moment, the web-deployed instance of ClimateContours (http://labelmegold.services.nersc.gov/climatecontours_gold/tool.html, accessed on 21 March 2021) is unavailable.

Table 2. Available image annotation tools along with GeoAnnotateAssisted (ours, see Section 2.3). Here, RSD stands for remote sensing data. Depends on deployment scheme. Due to unavailable RSD preprocessing feature. Common tools allow free-form polygons only, while in GeoAnnotateAssisted, ellipses and circles are allowed. In addition to the tool for manual tracking, GeoAnnotateAssisted is equipped with the optional capability of suggesting the linking between the labels in consecutive data snapshots using either a human-designed algorithm or an artificial neural network (e.g., the one we present in [80]). With colored text, we highlight the advantages of our GeoAnnotateAssisted labeling tool compared to other tools presented in this table.

Each of these annotation tools has its own advantages and flaws. Almost all of them were developed for annotating ordinary images, and their developers focused mostly on making labeling faster and easier. However, none of them was designed specifically for annotating geophysical phenomena; thus, none of them meets all the requirements listed above, which stem from the specifics of labeling geophysical events in remote sensing data or other geospatially distributed fields. In Table 2, we briefly review the annotation tools listed above with respect to the requirements of our MCS annotation task. In this table, we also present our annotation tool GeoAnnotateAssisted, which we describe further in Section 2.3.

While most of the publicly available annotation tools, including those listed in Table 2, are suitable for labeling ordinary images, they are not suited for labeling MCSs in geospatial data such as remote sensing imagery, because they lack some of the must-have features listed above. Therefore, we developed our own labeling tool, GeoAnnotateAssisted. Satellite imagery preprocessing is computationally expensive: with the previous version of the tool, GeoAnnotate, data preprocessing could take several minutes on an insufficiently powerful workstation. Thus, we split the application into a client-side app and a server-side app. Both are implemented in the Python programming language [76]; the graphical user interface of the client-side app is built with Qt [81], and the server-side app is built with the Flask [82] library for web applications.

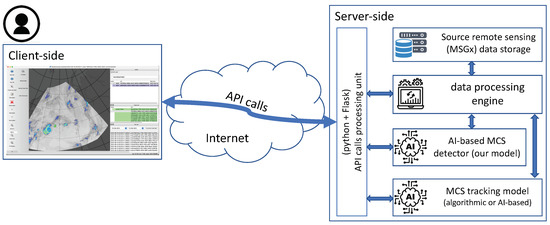

In Figure 2, we present the high-level architecture of our client-server annotation tool GeoAnnotateAssisted. The server-side app processes remote sensing data and creates their visual representations for various channels, including band-6, band-9 and BTD. The data representation is configurable: an expert may create their own colormaps and data preprocessing pipelines for labeling new phenomena. In our study, we created a dedicated colormap for each channel that visually highlights the features characterizing MCSs. The server-side app is equipped with the following optional capabilities:

Figure 2.

High-level architecture of the annotation tool GeoAnnotateAssisted. The pictogram in the top-left corner represents a user annotating MCSs.

- the capability of suggesting candidate labels in new data snapshots. For this purpose, we employ the neural network presented in this study (see Section 3.1), running on the server side. The suggested labels are passed to the client-side app through a JSON-based API, so that an expert may examine them and either decline them or accept them and adjust their locations and shapes. Here, JSON stands for JavaScript Object Notation [83], a text-based format for representing objects exchanged between programs, and API stands for application programming interface, a way to organize communication between applications.

- the capability of suggesting the linking between the labels in consecutive data snapshots, using either a human-designed algorithm or an artificial neural network (e.g., the one we present in [80]).

One may launch the server-side app with both of these features switched on or off.
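The exchange of suggested labels between the server-side and client-side apps can be illustrated with a minimal sketch that uses only the Python standard library. The payload layout (the class `SuggestedLabel` and fields such as `semi_major_km`, `angle_deg` and `score`) is a hypothetical assumption for illustration; it is not the actual GeoAnnotateAssisted API, and the Flask routing on the server side is omitted.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SuggestedLabel:
    """Hypothetical payload of one elliptic label suggested by the server."""
    label_id: str
    center_lon: float
    center_lat: float
    semi_major_km: float
    semi_minor_km: float
    angle_deg: float
    score: float  # network confidence that the ellipse encloses an MCS


def encode_suggestions(labels):
    """Server side: serialize the suggested labels into a JSON document."""
    return json.dumps({"suggestions": [asdict(lbl) for lbl in labels]})


def decode_suggestions(payload):
    """Client side: parse the JSON document back into label objects."""
    return [SuggestedLabel(**item) for item in json.loads(payload)["suggestions"]]


def review(labels, accepted_ids):
    """Client side: keep only the suggestions that the expert accepted."""
    return [lbl for lbl in labels if lbl.label_id in accepted_ids]


if __name__ == "__main__":
    suggestions = [
        SuggestedLabel("s1", 44.5, 52.1, 120.0, 80.0, 30.0, 0.91),
        SuggestedLabel("s2", 39.0, 55.7, 60.0, 45.0, -10.0, 0.42),
    ]
    received = decode_suggestions(encode_suggestions(suggestions))
    print([lbl.label_id for lbl in review(received, accepted_ids={"s1"})])
```

Keeping the payload a plain text document is what allows the client to stay lightweight: it only parses and renders labels, while all projection and inference work remains on the server.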

The client-side app of GeoAnnotateAssisted does not perform the computations required for data projection, since projection and interpolation are performed on the server side. Thus, the client-side computational load is low. In GeoAnnotateAssisted, we also implemented label shapes that are uncommon in most visual annotation tasks: the tool is capable of labeling phenomena of elliptic and circular shapes.

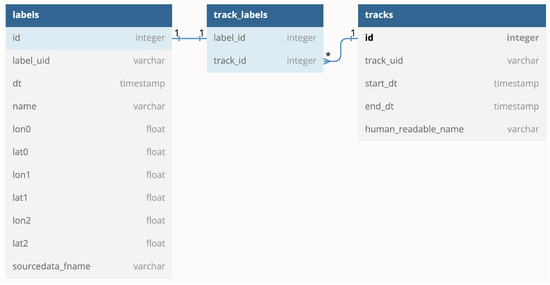

GeoAnnotateAssisted provides features both for labeling (identifying) atmospheric events in remote sensing imagery and for tracking them. The data describing individual labels and tracks are recorded in an SQLite database as soon as a label is created or corrected, or a track attribution is set. The database is kept in the data storage of the expert performing the labeling. The schema of the database is presented in Figure 3. DaMesCoS-ETR is distributed with the same schema, since DaMesCoS-ETR is essentially the database of labels and tracking data collected by our experts. We plan to extend the dataset with labels and tracking data for the eastern territories of Russia.

Figure 3.

Structure of the database used in GeoAnnotateAssisted to store labels and tracking information. It matches the structure of DaMesCoS-ETR exactly. We provide a complete description of this schema in the GitHub repository of DaMesCoS (http://github.com/mkrinitskiy/damescos, accessed on 20 April 2023). Here, the label “1” next to certain ID fields indicates that the corresponding identifiers are unique within the scope of their table (e.g., the field “id” of the table “labels”). The label “*” next to the “track_id” field of the table “track_labels” indicates that this identifier is not unique within the scope of the table.
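The immediate recording of labels and track attributions can be sketched with Python's built-in `sqlite3` module. The schema below is a hypothetical, simplified illustration inspired by the uniqueness markers in Figure 3 (a unique `id` in `labels`, a non-unique `track_id` in `track_labels`); the column set is an assumption, and the authoritative schema is the one documented in the DaMesCoS repository.

```python
import sqlite3

# Hypothetical, simplified schema; not the actual DaMesCoS-ETR schema.
SCHEMA = """
CREATE TABLE labels (
    id          TEXT PRIMARY KEY,  -- unique within the table (marker "1")
    snapshot_dt TEXT NOT NULL,     -- ISO 8601 acquisition time of the snapshot
    center_lon  REAL NOT NULL,
    center_lat  REAL NOT NULL,
    semi_major  REAL NOT NULL,
    semi_minor  REAL NOT NULL,
    angle_deg   REAL NOT NULL
);
CREATE TABLE track_labels (
    track_id TEXT NOT NULL,        -- repeated for every label of a track (marker "*")
    label_id TEXT NOT NULL UNIQUE REFERENCES labels(id)
);
"""


def open_db(path=":memory:"):
    """Open (or create) the label database and apply the schema."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def add_label(conn, label_id, snapshot_dt, lon, lat, a, b, angle, track_id=None):
    """Record a label at once, optionally attributing it to a track."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO labels VALUES (?, ?, ?, ?, ?, ?, ?)",
            (label_id, snapshot_dt, lon, lat, a, b, angle),
        )
        if track_id is not None:
            conn.execute("INSERT INTO track_labels VALUES (?, ?)", (track_id, label_id))


def track_sequence(conn, track_id):
    """Return the label ids of a track ordered by snapshot time."""
    rows = conn.execute(
        "SELECT l.id FROM labels AS l JOIN track_labels AS t ON t.label_id = l.id "
        "WHERE t.track_id = ? ORDER BY l.snapshot_dt",
        (track_id,),
    )
    return [row[0] for row in rows]
```

Storing timestamps as ISO 8601 strings keeps `ORDER BY` chronological, since such strings sort lexicographically in time order.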

2.4. Collecting the Dataset of Mesoscale Convective Systems over the European Territory of Russia (DaMesCoS-ETR) Using the GeoAnnotateAssisted Labeling Tool

The core approach of our study is supervised machine learning, which requires labeled training data. Labeling is the process of creating a set of pairs, each consisting of a data instance and its corresponding target label. In the problem of MCS identification, the data instances are remote sensing images, and the labels are tuples of values characterizing the position, size and shape of MCSs in these images. MCSs are characterized by symmetric shapes, which may be approximated by ellipses. Thus, in our study and in our Dataset of Mesoscale Convective Systems (DaMesCoS), we label MCS instances as ellipses.
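Since RetinaNet operates on rectangular boxes, elliptic labels have to be converted to axis-aligned bounding boxes at some point. Below is a minimal sketch of this conversion in image (pixel) coordinates, assuming an ellipse is given by its center, its semi-axes `a` and `b`, and the rotation angle of the `a` axis relative to the x-axis. The function name is hypothetical, and the conversion used in our code may differ.

```python
import math


def ellipse_to_bbox(cx, cy, a, b, theta_deg):
    """Axis-aligned bounding box (x_min, y_min, x_max, y_max) of a rotated ellipse.

    cx, cy: ellipse center; a, b: semi-axes; theta_deg: rotation of the
    semi-axis a with respect to the x-axis, in degrees.
    """
    t = math.radians(theta_deg)
    # Half-extents of a rotated ellipse: sqrt(a^2 cos^2 t + b^2 sin^2 t), etc.
    half_w = math.hypot(a * math.cos(t), b * math.sin(t))
    half_h = math.hypot(a * math.sin(t), b * math.cos(t))
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)
```

For example, an unrotated ellipse with center (100, 50) and semi-axes 30 and 10 yields the box (70, 40, 130, 60). Rotating the same ellipse by 90° swaps the half-width and half-height.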

To collect our Dataset of Mesoscale Convective Systems over the European Territory of Russia (DaMesCoS-ETR), our experts labeled and tracked MCSs. We used the bands presented in Section 2.1 with linear (for band-6 and band-9) and non-linear (for BTD) re-scaling, resulting in three features (see the visualization in Figure 1 and the description of the transformations in Section 3.2.2). Analyzing the contours of anomalous blobs in these images allows one to detect an MCS, assess its lifecycle stage as a convective storm, and estimate its potential power [24,66].

There is also a classification of MCSs in which the classes are defined by the spatial shape (also known as the signature) of the cloud-top brightness temperature anomalies representing convective storms. A signature is a specific spatial distribution of low brightness temperature anomalies visible in the m channel in MSG imagery. According to the established classification [24,84], two signatures are known for convective storms: cold-U/V and cold-ring. These two signatures are presumably related to the features of convective flows in the regions close to the convective cloud anvil. They are frequently detected in mature storms associated with dangerous events [24,84,85,86,87]. Thus, we used these signatures as indicators of potentially severe storms when identifying MCSs in our study.

To reduce the time required for MCS labeling, we used records from local meteorological stations and experts, as well as messages in various local news channels, both electronic and printed. These records contain reports of severe storms accompanied by strong winds, fallen trees and, in some cases, damaged infrastructure. Using these records, we identified the dates, times and geographical positions of possible MCS occurrences, which we then checked in the satellite imagery. We also inspected spatially adjacent regions and adjacent time frames, since an MCS occurrence indicates atmospheric instability and convection intensity favourable for convective storms.

Having located an MCS, we placed an elliptic label enclosing it. Then we traced the event back in time through the previous satellite imagery snapshots until its generation, and forward through the subsequent snapshots until its dissipation. Sometimes an MCS splits into two or more MCSs. In this case, we started a new track for each of the newly formed systems, except for the one that appeared to inherit the trajectory of the initial MCS, whose track we continued. Alternatively, two MCSs may merge. In this case, we terminated one of the tracks and continued the other, choosing the MCS that was more developed before the merge.
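The bookkeeping rules for splits and merges described above can be sketched as follows. This is a hypothetical illustration: in our procedure, both the heir of a split and the more developed MCS in a merge were chosen visually by the expert, whereas here the latter is approximated, as an assumption, by the larger ellipse area. The label dictionaries with keys `id`, `a` and `b` are also hypothetical.

```python
import math
from itertools import count


def ellipse_area(label):
    """Assumed proxy for how developed an MCS is: the area of its ellipse."""
    return math.pi * label["a"] * label["b"]


def on_split(parent_track, children, heir_index, new_track_id):
    """The heir inherits the parent track; every other child starts a new track."""
    return {
        child["id"]: parent_track if i == heir_index else new_track_id()
        for i, child in enumerate(children)
    }


def on_merge(track_a, label_a, track_b, label_b):
    """Continue the track of the more developed MCS; terminate the other one.

    Returns (continued_track, terminated_track).
    """
    if ellipse_area(label_a) >= ellipse_area(label_b):
        return track_a, track_b
    return track_b, track_a


if __name__ == "__main__":
    fresh_ids = count(100)  # hypothetical source of unused track identifiers
    children = [{"id": "x"}, {"id": "y"}]
    print(on_split(5, children, heir_index=1, new_track_id=lambda: next(fresh_ids)))
```

Passing the identifier factory in explicitly avoids collisions between newly started tracks and track identifiers that already exist in the database.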

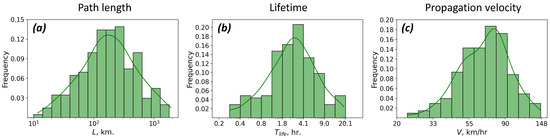

Following the procedure described above, we collected 205 tracks consisting of 3785 MCS labels in total. In Table 3, we present a summary of DaMesCoS-ETR. We also present the basic distributions of MCS lifecycle characteristics based on DaMesCoS-ETR in Figure 4. Since these distributions are displayed on a logarithmic scale, we do not show their moments in the figure for the sake of clarity; instead, we present them in Table 3.

Table 3.

DaMesCoS-ETR summary. Here, MCC stands for mesoscale convective complex [14,88]; SC stands for supercell [89,90]; CS stands for meso-beta convective storms [91,92,93].

Figure 4.

Lifecycle characteristics of MCSs in DaMesCoS-ETR based on expert labeling: (a) the distribution of path length L, km; (b) the distribution of lifetime, h; and (c) the distribution of propagation velocity V, km/h. The distributions are presented on a logarithmic scale of the variables. The lines are kernel density estimates of the distributions.
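Characteristics like those in Figure 4 can be computed from a track's label centers and timestamps. Below is a minimal sketch that assumes great-circle (haversine) distances on a spherical Earth and defines the propagation velocity as path length divided by lifetime. These definitions are assumptions for illustration, and the figure may have been computed differently, for example on a projected grid.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation


def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance between two points given in degrees, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def track_lifecycle(points):
    """Path length L (km), lifetime (h) and mean velocity V = L / lifetime (km/h).

    points: (datetime, lon, lat) tuples of the label centers of one track.
    """
    points = sorted(points)  # chronological order
    path_km = sum(
        haversine_km(p[1], p[2], q[1], q[2]) for p, q in zip(points, points[1:])
    )
    lifetime_h = (points[-1][0] - points[0][0]).total_seconds() / 3600.0
    velocity = path_km / lifetime_h if lifetime_h > 0 else float("nan")
    return path_km, lifetime_h, velocity


if __name__ == "__main__":
    track = [
        (datetime(2019, 6, 1, 12, 0), 40.0, 50.0),
        (datetime(2019, 6, 1, 14, 0), 40.0, 51.0),
    ]
    print(track_lifecycle(track))  # about 111 km over 2 h
```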

The DaMesCoS-ETR dataset is available on the GitHub repository (https://github.com/MKrinitskiy/DaMesCoS, accessed on 20 April 2023).

3. Methods

In this study, we employed a deep convolutional neural network that we designed based on RetinaNet [63]. RetinaNet is a deep convolutional neural network proposed in 2018 for the task of visual object detection (VOD). There are two approaches to VOD with artificial neural networks: one-stage detection [63,94,95,96,97,98,99] and two-stage detection [100,101,102]. In two-stage detectors, the first stage (region proposal) dramatically narrows down the number of candidate object locations. At the second stage (classification and location adjustment), sophisticated heuristics are applied to maintain a manageable ratio between foreground and background examples. In contrast, one-stage detectors process a vast number of object proposals sampled regularly across an image. Within this approach, one needs to tackle the background–foreground imbalance and to tune the network hyperparameters to account for the foreground–background ratio. It was demonstrated that one-stage detectors may deliver accuracy similar to that of two-stage detectors [103]. At the same time, RetinaNet [63] makes it possible to optimize the foreground-focusing capability, which is crucial in the case of strong foreground–background imbalance. This kind of imbalance is not very strong in real-life photographic imagery similar to the images in the MS COCO dataset [56], the KITTI multi-modal sensory dataset [104], the PASCAL VOC dataset [59], etc. However, it is often significant in datasets originating from geophysics [41] and Earth sciences. MCSs may be considered statistical outliers, i.e., rare events [105]. Being mesoscale phenomena, they also have a small footprint compared to synoptic-scale events, such as cyclones. Thus, one may consider MCS labels in remote sensing imagery as having a strong foreground–background imbalance favouring the background (the area where no MCSs are observed) over the foreground (the area occupied by MCSs).

3.1. RetinaNet Neural Network for the Identification of Mesoscale Convective Systems

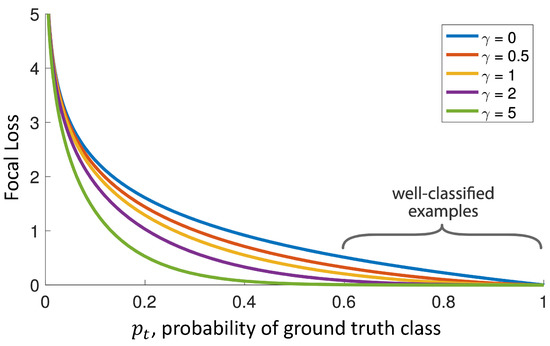

The problem of foreground–background imbalance is specifically addressed in RetinaNet [63] through dynamic scaling of the commonly used cross-entropy loss (CE hereafter), where the scaling factor decays to zero as the confidence in the correct class increases. In the original paper [63], this re-scaled loss is named focal loss (FL hereafter). In particular, one may write down FL in the following way:

$$\mathrm{FL} = -\sum_{k=1}^{K} \alpha_t \left(1 - p_t\right)^{\gamma} \log\left(p_t\right), \qquad p_t = \begin{cases} p_k, & \text{if } y_k = 1,\\ 1 - p_k, & \text{otherwise,} \end{cases}$$

where, for each class $k$, $p_t$ is the probability of the correct class for a rectangular bounding box (i.e., one of the object proposals); $p_k$ is the probability of class $k$ ($k \in \{1, \dots, K\}$) for this bounding box; $K$ is the number of classes; $y_k$ is the true label (either one or zero) for class $k$ on this bounding box; $\alpha_t$ is the balancing coefficient proposed in the original paper [63] ($\alpha_t = \alpha$ if $y_k = 1$ and $\alpha_t = 1 - \alpha$ otherwise); and $\gamma \ge 0$ is the focusing parameter. In the original paper, the default values are $\gamma = 2$ and $\alpha = 0.25$. Note that the closer $\gamma$ is to zero, the closer FL is to conventional CE. The intuition behind FL is the following: during training on the classification sub-task, FL “encourages” the network to pay more attention to hard examples, which are classified with less certainty (low $p_t$). The contribution of easily classified examples with $p_t$ close to 1 to the loss function is downweighted through the dynamic scaling expressed by the coefficient $(1 - p_t)^{\gamma}$. In Figure 5 (from the original paper, Lin et al. 2018 [63]), we demonstrate this feature of FL: compared to conventional CE ($\gamma = 0$), FL downweights well-classified objects relative to hard examples (the ones with low values of $p_t$).

Figure 5.

Focal loss vs. probability of the ground-truth class for several values of the focusing parameter $\gamma$ (from the original paper [63]). Here, we modified the captions for the sake of figure clarity. Note that in the case of $\gamma = 0$, focal loss (FL) is equivalent to cross-entropy (CE).
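For the one-class case considered in this study ($K = 1$), the focal loss of a single bbox proposal reduces to a scalar expression. The sketch below is an illustrative, non-vectorized version of that formula, not the training implementation. The small `eps` clamp guarding against `log(0)` is an added assumption.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary (one-class) focal loss of a single bbox proposal.

    p: predicted probability that the proposal encloses an MCS;
    y: true label (1 for MCS, 0 for background).
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def cross_entropy(p, y, eps=1e-12):
    """Conventional binary cross-entropy of the same proposal, for comparison."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, eps))


if __name__ == "__main__":
    for p in (0.9, 0.1):  # a well-classified and a hard MCS proposal
        print(p, focal_loss(p, 1) / cross_entropy(p, 1))
```

With the default parameters, the ratio FL/CE equals $\alpha (1 - p_t)^{\gamma}$: 0.0025 for a well-classified proposal with $p_t = 0.9$, but 0.2025 for a hard one with $p_t = 0.1$. This is the downweighting of easy examples illustrated in Figure 5.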

The capability of FL to focus a network on under-represented objects is controlled by the $\alpha$ and $\gamma$ hyperparameters. Thus, one may optimize these hyperparameters with respect to a scalar quality measure (mean average precision, or mAP, in the original paper), which is hard to achieve with the heuristics characteristic of two-stage detectors. This capability of targeted hyperparameter tuning of RetinaNet equipped with FL motivated our choice of this architecture for the problem of MCS detection.

The main problem of this study is to infer MCS positions and sizes, i.e., the bounding boxes of MCSs (bboxes hereafter), for each time moment, meaning in each source data snapshot (see examples in Figure 13 shown by yellow rectangles). Thus, in terms of the VOD task, we consider one-class object detection, where the class is MCS. Consequently, in our study, the class probability $p$ is an output of the network modeling a Bernoulli-distributed variable; it is commonly interpreted as an estimate of the probability that an object enclosed by a detected bbox has class “1”, i.e., is an MCS.

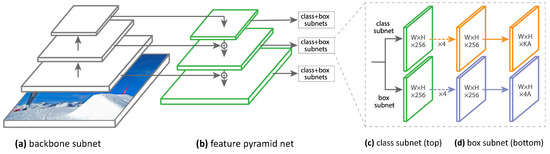

The fundamental approach of RetinaNet is the following: (a) an independent generator places object proposals (also known as anchors), which are rectangular bboxes of various sizes and aspect ratios, on a regular grid so that they densely cover an image; (b) the network itself processes the image with respect to this vast number of generated bboxes, performing two tasks:

- a classification task for each bbox proposal, meaning estimating the probability of the bbox to enclose an MCS, and

- a regression task, meaning adjusting the position and the size of the bbox.
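The dense placement of bbox proposals in step (a) can be sketched for a single feature-pyramid level. The defaults below follow the RetinaNet paper [63]: three aspect ratios times three scales, giving nine anchors per location. The `stride` and `base_size` values in the usage example are hypothetical, and the anchor configuration of MesCoSNet may differ.

```python
import math


def generate_anchors(feat_h, feat_w, stride, base_size,
                     ratios=(0.5, 1.0, 2.0),
                     scales=(2 ** 0, 2 ** (1 / 3), 2 ** (2 / 3))):
    """Dense anchors (x_min, y_min, x_max, y_max) for one feature-pyramid level.

    Each cell of a feat_h x feat_w feature map, whose cells are `stride`
    pixels apart in the input image, receives len(ratios) * len(scales)
    anchors centered at the cell center.
    """
    shapes = []
    for ratio in ratios:  # ratio = height / width
        for scale in scales:
            area = (base_size * scale) ** 2
            w = math.sqrt(area / ratio)
            shapes.append((w, w * ratio))
    anchors = []
    for iy in range(feat_h):
        for ix in range(feat_w):
            cx, cy = (ix + 0.5) * stride, (iy + 0.5) * stride
            for w, h in shapes:
                anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


if __name__ == "__main__":
    anchors = generate_anchors(4, 5, stride=8, base_size=32)
    print(len(anchors))  # 4 * 5 cells * 9 anchors per cell
```

During training, each anchor then receives a classification target (MCS or background) and, if positive, regression targets that describe how to shift and resize it toward the matched ground-truth bbox.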

The network consists of four parts: (a) a feature extractor subnet (see Figure 6a); (b) a feature pyramid subnet (see Figure 6b); (c) a subnet performing the classification task for bbox proposals (see Figure 6c); and (d) a subnet performing the regression task for bbox proposals (see Figure 6d).

Figure 6.

RetinaNet architecture, from the original paper [63]. Here, we renamed the first subnet “backbone subnet”, following the terminology common as of 2023.

It is a common practice in detectors based on convolutional neural networks to exploit the transfer learning technique (TL) [106] using a backbone sub-network trained in advance on a large dataset like ImageNet [58]. In deep learning methods, TL addresses the lack of labeled data. In our study, ResNet-152 [45] was employed as a backbone network (see backbone subnet in Figure 6a).

3.2. Data Preprocessing

3.2.1. Transfer Learning

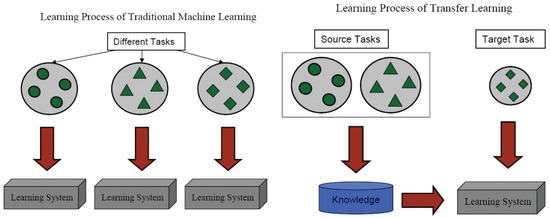

Transfer learning (TL) [107,108,109,110] is a basic approach in deep learning to the problem of insufficient training data. In Earth sciences, and in geophysics in particular, a common situation is a vast amount of unlabeled data describing natural processes with only a small annotated subset. Thus, TL may be helpful in problems similar to MCS detection, as well as in other Earth science problems. When using TL, one tries to transfer knowledge about the distribution of data in a hidden representation space from the source domain to the target domain. The process of knowledge transfer may be expressed in terms of the definition from Tan et al. [109]: given a learning task $\mathcal{T}_t$ based on a dataset $\mathcal{D}_t$, one can get help from a dataset $\mathcal{D}_s$ for a learning task $\mathcal{T}_s$; transfer learning aims to improve the performance of the predictive function $f_{\mathcal{T}}(\cdot)$ for the learning task $\mathcal{T}_t$ by discovering and transferring latent knowledge from $\mathcal{D}_s$ and $\mathcal{T}_s$, where $\mathcal{D}_s \neq \mathcal{D}_t$ and/or $\mathcal{T}_s \neq \mathcal{T}_t$; in addition, typically, the size of $\mathcal{D}_s$ is much larger than the size of $\mathcal{D}_t$: $N_s \gg N_t$.

In our study, the source domain is the ImageNet dataset [58], and the source task is the ImageNet 1000-class classification problem; the target domain is Meteosat remote sensing imagery, and the target task is MCS identification.

To clarify the transfer learning approach, we show a schematic in Figure 7 (from Pan and Yang 2010 [110]) that illustrates the difference between conventional machine learning and machine learning involving transfer learning. Within the traditional ML approach (Figure 7, left), hidden representations and predictive skills are learned by neural networks (namely, “learning systems”) independently, each from the dataset specific to its own task. Datasets are presented in Figure 7 as rounded shapes with markers of different types symbolizing data points of different origins. In contrast, within the transfer learning approach (Figure 7, right), a learning system extracts generalizable knowledge (mostly hidden representations) from source datasets while learning to perform source tasks; then, some of the extracted knowledge may help the system learn to perform a target task. Within the TL approach, one relaxes the hypothesis that the data of the source and target domains are independent and identically distributed. With this requirement relaxed, one does not need to train the network in the target domain from scratch, which may significantly reduce the demand for training data and computational resources. Following the classification given in Tan et al. 2018 [109] and the methodology presented in the RetinaNet paper [63], the network-based TL technique is exploited in RetinaNet, with ResNet employed as a reusable part of the architecture. In particular, ResNet-152 [45] is employed in our study as the backbone (see Figure 6a).

Figure 7.

Difference between knowledge extraction in traditional machine learning (left) and with transfer learning employed (right) (from Pan and Yang 2010 [110]).

3.2.2. Domain Adaptation through Linear and Non-Linear Scaling

Recent studies have demonstrated that, when one exploits the transfer learning technique, the degree of similarity between the source and target domains plays a crucial role in target task performance [111]. Thus, when exploiting the pretrained ResNet-152 network as a feature extractor (also known as a “backbone” in some studies [63]), one needs to harmonize the target domain dataset with the dataset the backbone was pretrained on (ImageNet in our study).

The snapshots of Meteosat remote sensing imagery may be projected onto a plane and thus presented on a regular grid, which makes them somewhat similar to the conventional digital images of ImageNet. At the same time, one may assume a strong covariate shift between projected satellite imagery and ImageNet images due to the fundamentally different origins of the data. To harmonize the target domain dataset with the source domain dataset, one may employ domain adaptation (DA) techniques [112,113]. Within the DA approach, one seeks a transformation of the target domain data that brings their distribution close to that of the source domain. This applies not only to the distributions of the features themselves but also to the distributions of their representations in a hidden representation space. There are a number of DA techniques for computer vision tasks involving CNNs [112,113]. One of the fastest is feature-based adaptation [112]. In feature-based DA, the goal is to map the target data distribution to the source data distribution using either a human-designed or a trainable transformation. In our study, we exploited an expert-designed transformation of remote sensing data, constructed so that, to some extent, it delivers imagery similar to the optical images of ImageNet. To design this transformation, we first normalize the source data according to Equation (3):

where X stands for the band-6 and band-9 brightness temperatures, and the normalizing coefficients are shown in Table 4. As a result, we obtain scaled (also known as normalized) features corresponding to the original ones. Note that the scales of the normalized features are inverted with respect to the original ones. We did this because a labeling expert needs to focus on low cloud-top temperatures: the stronger the convection, the lower the cloud-top temperature. Thus, to highlight the colder pixels, we inverted the scales of both features while normalizing them.

Table 4.

Normalizing coefficients for source data.

We scaled the brightness temperature difference (BTD) in a different way. We normalized it in a manner similar to the band-6 and band-9 temperatures, except that we did not invert the scale:

where X stands for BTD, and the normalizing coefficients are shown in Table 4.

With the normalization procedure described above, 99% of the values of the normalized source data were in the range . All the values outside this range were masked and, thus, did not contribute to the loss function of our detection model.

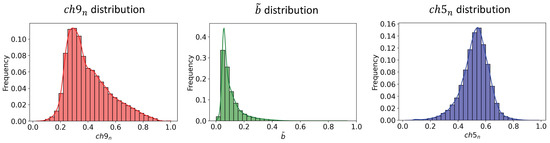

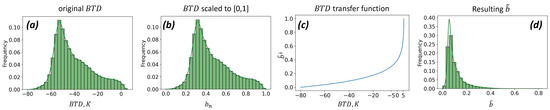

When performing exploratory data analysis for MCS labeling, we found that an expert needs to focus on the range of between and . We also found that the distribution is mainly concentrated in the region of negative values from to (see Figure 8a). In order to (a) harmonize the distribution of transformed with a typical color channel of digital imagery characteristic for ImageNet, and yet (b) preserve the semantics of the most needed range of data, we re-scaled the data non-linearly in the following way:

where are data normalized as expressed in Equation (4), are data re-scaled non-linearly, and are small constants employed for computational stability; typically, we set . In Figure 8, we present the original BTD distribution, its normalized distribution obtained through the transformation in Equation (4), the transfer function presented in Equation (5), and the empirical distribution of the non-linearly re-scaled BTD. The effect of the non-linear re-scaling is the following: instead of highlighting the pixels with the most frequently observed BTD values, we suppress their brightness in the corresponding channel, and yet we extend the variability of the values for the pixels that an observer needs to focus on the most. In this way, the labeling expert is able to distinguish more color tones with higher contrast in the regions relevant to MCS formation and life cycle.

Figure 8.

Stages of data transformations within domain adaptation: (a) the original distribution; (b) its normalized distribution acquired as a result of transformation in Equation (4); (c) plot of the transfer function presented in Equation (5), and (d) the empirical distribution of non-linearly scaled employed as one of the spatially distributed features in our study.

As a result of normalization and non-linear re-scaling (only for ), we constructed the features that one may consider being close to the red, green and blue channels of ImageNet digital imagery, given the digital images being routinely preprocessed through features scaling with the coefficient . In Figure 9, we demonstrate the empirical distributions of the three resulting data features we use in our study: , and . One may consider them analogs of the color channels R,G,B in conventional digital optical imagery.

3.3. Training and Evaluation Procedure

3.3.1. Quality Measures

In our study, MCS identification is formulated as a visual object detection (VOD) problem. That is, given a vector field distributed on a regular spatial grid (with the three features described in Section 3.2.2 as vector components in each pixel), one needs to identify and classify the spatial patterns in this field that represent the objects of interest (MCSs in our study). The patterns are characterized by their locations and sizes; thus, the goal of the VOD task is typically to create rectangular labels that enclose the individual objects of interest.

A typical quality measure for the identification of one object is Intersection over Union (IoU) [114], which is equivalent to the pixel-wise Jaccard score (see Equation (6)):

$$\mathrm{IoU} = \frac{\left|P_{\mathrm{gt}} \cap P_{\mathrm{det}}\right|}{\left|P_{\mathrm{gt}} \cup P_{\mathrm{det}}\right|}, \qquad (6)$$

where $P_{\mathrm{gt}}$ is the set of pixels labeled as MCS (ground truth labels); $P_{\mathrm{det}}$ is the set of pixels detected by an algorithm as MCS (detected labels); and $|M|$ is the cardinality of a set $M$, which is the area (the number of pixels) in the case of VOD in digital imagery or in remote sensing imagery. IoU is commonly used in VOD tasks to determine whether a detected label is a true positive (TP hereafter) or a false positive (FP hereafter). For this purpose, one needs to (a) employ a threshold value $\theta_p$ to classify a label candidate as a detected label (see Equation (7)), and (b) employ a threshold value $\theta_{\mathrm{IoU}}$ to classify a detected label as TP or FP (see Equation (8)):

$$\hat{c}_j = \begin{cases} 1, & p_j \ge \theta_p,\\ 0, & \text{otherwise}, \end{cases} \qquad (7)$$

where $\hat{c}_j$ is the decision of whether the $j$-th label candidate is a label of MCS, based on the probability estimate $p_j$ inferred by the classification subnet of our neural network (see Figure 6c).

$$t_i = \begin{cases} \mathrm{TP}, & \mathrm{IoU}_i \ge \theta_{\mathrm{IoU}},\\ \mathrm{FP}, & \text{otherwise}, \end{cases} \qquad (8)$$

where $t_i$ is the TP/FP class of the $i$-th detected label, and $\mathrm{IoU}_i$ is its IoU score. When multiple ground truth labels intersect the detected one, the IoU score is calculated following one of two alternatives:

- computation of IoU by taking all ground truth labels into account together, without considering the attribution of pixels to specific ground truth labels. That is, the score is based on the overall overlap between the detected label and the ground truth labels, rather than on the individual label assignment of each pixel;

- computation of IoU separately for each ground truth label that has a non-zero intersection with the detected label. The scores for the individual labels are then averaged to obtain the final score. This approach takes into account the contribution of each ground truth label.

In our study, we followed the second approach. IoU takes values from 0 (worst) to 1 (best).
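The second approach can be sketched for axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`. This sketch makes three assumptions: IoU is computed from continuous box areas rather than pixel counts, the function names are hypothetical, and the threshold of 0.5 is merely a common default rather than the value used in our study.

```python
def box_area(b):
    """Area of an axis-aligned box (x_min, y_min, x_max, y_max); 0 if empty."""
    x0, y0, x1, y1 = b
    return max(0.0, x1 - x0) * max(0.0, y1 - y0)


def iou(a, b):
    """Intersection over Union of two axis-aligned boxes."""
    inter = box_area((max(a[0], b[0]), max(a[1], b[1]),
                      min(a[2], b[2]), min(a[3], b[3])))
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def detection_iou(det, gts):
    """IoU of a detected box averaged over every ground-truth box it
    intersects (the second alternative above); 0 if it intersects none."""
    scores = [s for s in (iou(det, g) for g in gts) if s > 0]
    return sum(scores) / len(scores) if scores else 0.0


def is_true_positive(det, gts, iou_threshold=0.5):
    """TP/FP decision of Equation (8) for one detected box."""
    return detection_iou(det, gts) >= iou_threshold
```

For instance, a detection that coincides with one ground-truth box and overlaps a second one with IoU = 1/7 receives the averaged score (1 + 1/7)/2 = 4/7.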

In VOD tasks, true negatives are not defined, since there are no negative labels for any class. Therefore, the performance of a detection algorithm is evaluated using precision and recall, expressed as in Equations (9) and (10). Precision measures the accuracy of positive detections, and recall measures the proportion of actual positive instances that are correctly identified by the model.

$$\mathrm{Precision} = \frac{\left|\{ d \in \mathcal{D} : t_d = \mathrm{TP} \}\right|}{|\mathcal{D}|}, \qquad (9)$$

$$\mathrm{Recall} = \frac{\left|\{ d \in \mathcal{D} : t_d = \mathrm{TP} \}\right|}{|\mathcal{G}|}, \qquad (10)$$

where $\mathcal{D}$ is the set of detected labels; $\mathcal{G}$ is the set of ground truth labels; and $t_d$, the TP/FP class of a detected label $d$, is assessed following the thresholding procedure described in Equation (8). Precision and recall take values from 0 (worst) to 1 (best).

A common quality measure for VOD tasks is mean average precision (mAP) [103,114]. The term “mean” here denotes averaging over classes; thus, it is irrelevant in our study, since only one class is considered. Therefore, we used average precision (AP) as one more quality measure for our model. AP is assessed based on the precision–recall (PR) curve, which is obtained by varying the probability threshold of Equation (7). AP is the area under the PR curve, approximated using either n-point interpolation or all-point piecewise-continuous approximation (e.g., see Padilla et al. 2020 [114]). AP takes values from 0 (worst) to 1 (best).
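All-point interpolated AP can be sketched from a list of detections, each with a confidence score and a TP/FP flag obtained at a fixed IoU threshold. Sorting by decreasing score is equivalent to sweeping the probability threshold from high to low. This is an illustrative sketch in the spirit of the all-point scheme described by Padilla et al. [114], not our evaluation code. It assumes at least one ground-truth label.

```python
def average_precision(scores, is_tp, n_ground_truth):
    """All-point interpolated AP of one class.

    scores: confidence of each detection; is_tp: TP/FP flag of each
    detection at a fixed IoU threshold; n_ground_truth: number of
    ground-truth labels (assumed > 0).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall, precision = [], []
    for i in order:  # lowering the threshold admits one more detection
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_ground_truth)
        precision.append(tp / (tp + fp))
    # Pad the curve and make precision non-increasing with recall
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum the rectangles under the interpolated curve where recall changes
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))
```

For example, three detections ranked TP, FP, TP against two ground-truth labels give AP = 0.5 · 1 + 0.5 · 2/3 = 5/6.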

During the hyperparameter optimization procedure, we optimized AP with respect to the parameters and procedure properties of the stochastic optimization algorithm (see Section 3.3.3), and value.

3.3.2. Reliable Quality Assessment

When a physical process generates an observational time series, successive observations may exhibit strong autocorrelation due to the smooth evolution of natural states. These natural states refer to the underlying physical phenomena that drive the observed features, which are available in the form of remote sensing imagery in our study.

In machine learning, a common approach is to evaluate a model by estimating quality metrics on a testing subset acquired through random sampling from the original set of labeled examples. This approach is correct when the examples are independent and identically distributed. In this case, quality estimates obtained on a testing subset are reliable.

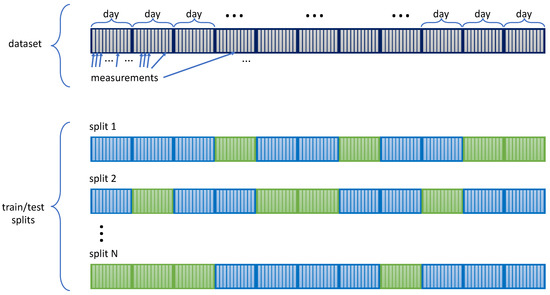

However, since successive images may be strongly correlated, they may be considered the same observation with noise-like perturbations, i.e., one cannot assume that they are independent; on the contrary, they should be considered strongly dependent. Thus, it is important to avoid systematically placing successive examples from a time series obtained by observing a natural process into both the training and testing sets. It was shown that particular sampling methods are needed for validating models trained on time series data [115,116,117,118]. In our study, we address the issue of strongly correlated successive examples using day-wise random sampling. We present the scheme for this day-wise sampling strategy in Figure 10. With this approach, consecutive examples may still end up in different subsets (at the boundaries between days); however, given the 15 min interval of remote sensing imagery acquisition, the probability of this is two orders of magnitude lower than with purely random sampling.
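Day-wise random sampling can be sketched by grouping snapshot timestamps by calendar day and shuffling whole days, not individual snapshots. The test fraction and the seed values are hypothetical parameters, since the proportions of our splits are not specified here. Running the function with seven different seeds yields seven train-test splits, analogous to the evaluation protocol described below.

```python
import random
from collections import defaultdict
from datetime import datetime, timedelta


def day_wise_split(timestamps, test_fraction=0.2, seed=0):
    """Split snapshot timestamps into train/test subsets by whole days."""
    by_day = defaultdict(list)
    for ts in timestamps:
        by_day[ts.date()].append(ts)
    days = sorted(by_day)
    random.Random(seed).shuffle(days)  # shuffle days, not snapshots
    n_test = max(1, round(test_fraction * len(days)))
    test_days = set(days[:n_test])
    train = sorted(ts for d in days if d not in test_days for ts in by_day[d])
    test = sorted(ts for d in days if d in test_days for ts in by_day[d])
    return train, test


if __name__ == "__main__":
    start = datetime(2019, 6, 1)
    snapshots = [start + timedelta(minutes=15 * k) for k in range(96 * 10)]
    splits = [day_wise_split(snapshots, seed=s) for s in range(7)]
    print([len(test) for _, test in splits])
```

Because every day contributes all of its 96 quarter-hourly snapshots to a single subset, only pairs of snapshots straddling midnight can be separated, which is the source of the two-orders-of-magnitude reduction mentioned above.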

Figure 10.

Sampling strategy applied in our study for splitting the dataset into training and testing subsets. Here, we display individual Meteosat snapshots with corresponding MCS labels as “measurements” (thin vertical rectangles); days of observations are shown by thick rectangles; days of labeled observations contributing to a train subset are shown in light blue; and days of labeled observations contributing to a test subset are shown in light green.

Using the presented sampling strategy, we obtained seven different train-test splits. For each split, we trained our network on the training subset and evaluated the quality measures on the testing subset. Then, we estimated the mean of each quality metric and assessed its uncertainty, assuming a normal distribution for each metric. That is, we calculated the uncertainty as a confidence interval with a 95% confidence level, based on the sample estimate of the standard deviation computed over the seven train-test splits.

Due to the high computational cost of training artificial neural networks, we limited the validation of our model to a single train-validation split during hyperparameter optimization. That is, when optimizing the hyperparameters, we trained and validated the model once per hyperparameter set.

3.3.3. Training Procedure

Artificial neural networks are known to be sensitive to the details of the training procedure [119]. The choice of the training algorithm and its hyperparameters may either dramatically degrade or significantly improve the quality of the trained model. Currently, the most stable and commonly used training algorithm is Adam [120,121]. Adam is a first-order gradient-based optimization algorithm that employs exponential moving averages (a momentum approach) to estimate the lower-order moments of the loss function gradients, namely their mean and uncentered variance. We use the Adam optimization procedure in our study.
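For reference, the Adam update rule [120] can be sketched for a single scalar parameter in plain Python. This is an illustrative re-implementation using the default moment coefficients of Adam (β1 = 0.9, β2 = 0.999, ε = 1e-8) and an arbitrary step size, not the optimizer code used to train MesCoSNet; the function name `adam_minimize` and the quadratic test function are hypothetical.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Minimize a scalar function with Adam, given a callable returning its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # moving average of gradients (1st moment)
        v = beta2 * v + (1 - beta2) * g * g    # moving average of squared gradients (2nd moment)
        m_hat = m / (1 - beta1 ** t)           # bias correction for zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# f(x) = (x - 3)^2 has gradient 2 (x - 3) and its minimum at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=-5.0)
print(round(x_min, 4))
```

Deep learning frameworks provide their own Adam implementations (e.g., `torch.optim.Adam` in PyTorch), which apply the same update element-wise to every weight tensor.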

The most important hyperparameters of optimization algorithms are the batch size and the learning rate [119,122]. Due to the large size of remote sensing images, we could not vary the batch size significantly. To reduce the noise in the gradient estimates, we set the largest batch size permitted by our computer hardware (batch_size = 8). Following common best practice, we optimized the learning rate schedule to achieve not only a high AP but also strong generalization. Generalization can be assessed through the gap between the quality estimated on the training and testing subsets: a small gap corresponds to good generalization, whereas a large gap indicates poor generalization.

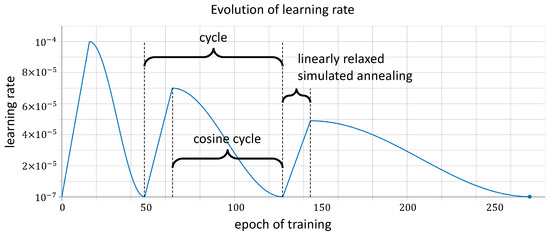

Following best practice proposed in recent studies, we employed the learning rate schedule presented in Loshchilov and Hutter, 2016 [123]. The authors proposed a cyclical learning rate schedule in which the learning rate decreases along a cosine curve over training epochs and is periodically reset to a higher value (warm restarts, referred to here as simulated annealing). A number of modifications to this schedule have been presented in recent studies. We employ a configuration with the following features:

- we increase the period of simulated annealing with each cosine cycle using the multiplicative form $T_i = T_0 \cdot T_{\mathrm{mult}}^{\,i}$ (Equation (11)), where $T_0$ is the first period of simulated annealing, $T_{\mathrm{mult}}$ is the multiplicative coefficient, and $i$ is the cycle number starting from $i = 0$;

- we apply a linear increase in the learning rate prior to each cycle of cosine-shaped learning rate decay to mitigate abrupt changes in the gradient moment estimates that can otherwise occur within the Adam algorithm;

- we also apply an exponential decay of the simulated annealing magnitude with each cosine cycle using the multiplicative form $a_i = a_0 \cdot \gamma^{\,i}$ (Equation (12)), where $a_i$ is the scale of simulated annealing relative to the initial learning rate, $a_0$ is its first value, $\gamma$ is the multiplicative coefficient, and $i$ is the cycle number starting from $i = 0$.
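The three features listed above can be combined into a single function of the epoch number. The following plain-Python sketch is one possible reading of the schedule: the minimum learning rate, the way the annealing scale multiplies the peak learning rate, the length of the linear ramp, and all default values (`base_lr`, `min_lr`, `t0`, `t_mult`, `a0`, `gamma`, `warmup`) are hypothetical placeholders rather than the values tuned in the study.

```python
import math


def lr_schedule(epoch, base_lr=1e-4, min_lr=1e-6, t0=10, t_mult=2.0,
                a0=1.0, gamma=0.5, warmup=2):
    """Cosine schedule with warm restarts, growing periods, and decaying peaks.

    Each cycle i consists of a linear ramp of `warmup` epochs followed by a
    cosine decay lasting t0 * t_mult**i epochs; the peak learning rate of
    cycle i is scaled by a0 * gamma**i. All defaults are hypothetical.
    """
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    cycle, start = 0, 0.0
    # Find the cycle that contains `epoch`.
    while True:
        period = t0 * t_mult ** cycle                 # period grows multiplicatively
        if epoch < start + warmup + period:
            break
        start += warmup + period
        cycle += 1
    scale = a0 * gamma ** cycle                       # restart magnitude decays exponentially
    peak = min_lr + scale * (base_lr - min_lr)
    t = epoch - start
    if t < warmup:
        return min_lr + (peak - min_lr) * t / warmup  # linear ramp before the cosine decay
    t -= warmup
    return min_lr + 0.5 * (peak - min_lr) * (1 + math.cos(math.pi * t / period))


for e in (0, 2, 7, 12, 14, 34, 36):
    print(e, f"{lr_schedule(e):.2e}")
```

Plotting `lr_schedule` over a few hundred epochs yields the qualitative pattern of a cyclical schedule with lengthening cycles and shrinking peaks; the exact shape in Figure 11 depends on the tuned values.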

The resulting learning rate schedule, tuned through hyperparameter optimization, is presented in Figure 11.

Figure 11.

Learning rate schedule employed in our study.

As mentioned in Section 3.2.1, we employed the convolutional subnet of ResNet-152 [45] pre-trained on the ImageNet [58] dataset as a convolutional feature extractor in our network constructed following the RetinaNet [63] scheme (see Figure 6). Since the classifier and regressor subnets are randomly initialized at the beginning of training, their initial weights are far from optimal, which results in poor model performance and high regression and classification losses. Consequently, during the initial epochs of training, the gradients of the composite loss function are large. If the weights of the convolutional backbone were trained at this stage, these large gradients could substantially alter the feature representations learned by the convolutional layers and degrade the performance of the model. To preserve the feature extraction skill of the ResNet backbone, we employed a warm-up step [124,125,126,127] lasting 48 epochs. In transfer learning, the warm-up step is a technique used to harmonize the newly added layers (i.e., the classifier and regressor subnets) with the pretrained convolutional backbone. At this step, the weights of the convolutional layers are frozen (i.e., the optimization algorithm does not update them), and only the weights of the newly added layers are updated. As a result, the new layers adapt to the pretrained features before the backbone is trained, which makes the transfer learning process more effective.
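The warm-up logic amounts to excluding the backbone parameters from optimizer updates during the first 48 epochs. The toy sketch below illustrates this in plain Python, with parameter groups stored as lists of floats and a plain gradient step standing in for Adam. The group names, the functions `trainable_groups` and `sgd_step`, and the assumption that the whole backbone is unfrozen after the warm-up are hypothetical. In PyTorch, the same effect is usually obtained by setting `requires_grad = False` on the backbone parameters; in Keras, by setting `layer.trainable = False`.

```python
WARMUP_EPOCHS = 48  # length of the warm-up step stated in the text


def trainable_groups(epoch, warmup_epochs=WARMUP_EPOCHS):
    """Parameter groups the optimizer may update at a given (0-based) epoch."""
    heads = {"classifier", "regressor"}
    if epoch < warmup_epochs:
        return heads                    # backbone frozen during warm-up
    return heads | {"backbone"}         # assumption: afterwards all layers are trained


def sgd_step(params, grads, epoch, lr=0.01):
    """One plain gradient step that leaves frozen groups untouched (toy stand-in for Adam)."""
    active = trainable_groups(epoch)
    return {
        name: [w - lr * g for w, g in zip(ws, grads[name])] if name in active else list(ws)
        for name, ws in params.items()
    }


params = {"backbone": [1.0, 2.0], "classifier": [0.5], "regressor": [0.0]}
grads = {"backbone": [1.0, 1.0], "classifier": [1.0], "regressor": [-1.0]}
print(sgd_step(params, grads, epoch=0))   # backbone unchanged
print(sgd_step(params, grads, epoch=48))  # backbone updated as well
```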

Due to the small amount of data, the generalization ability of overparameterized neural networks may be suboptimal. The summary of DaMesCoS-ETR presented in Table 3 indicates that the amount of labeled data is insufficient for deep convolutional neural networks of a complexity similar to RetinaNet. To alleviate this issue, one may employ data augmentation. In our study, we applied the following augmentations to the training examples, with random parameters sampled from predefined ranges: random flips in both directions; blurring with a Gaussian kernel of random width (in pixels); random rotation by a uniformly sampled angle; random shift (translation) with a magnitude measured in fractions of the image size; and random shear in both directions with independently sampled angles. We also applied random coarse dropout, i.e., masking out a small number of randomly scattered small rectangular regions of the augmented image. We also attempted to apply computationally demanding elastic deformations to the images; however, this approach significantly slowed down training and did not lead to a substantial improvement in MesCoSNet quality.
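Two of the listed augmentations, random flips and coarse dropout, can be sketched in plain Python for a single-band image stored as a list of rows. The function names, the number and maximum size of the masked rectangles, and the fill value are hypothetical; libraries such as Albumentations provide production implementations (including a `CoarseDropout` transform). In a detection setting, geometric transforms such as flips must also be applied to the MCS bounding boxes, which this sketch omits.

```python
import random


def random_flip(image, rng):
    """Flip a 2-D image (list of rows) left-right and/or up-down, each with p = 0.5."""
    out = [row[:] for row in image]
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]  # left-right flip
    if rng.random() < 0.5:
        out = out[::-1]                   # up-down flip
    return out


def coarse_dropout(image, rng, n_holes=4, max_size=3, fill=0.0):
    """Mask out `n_holes` small rectangles at random positions (hypothetical settings)."""
    out = [row[:] for row in image]
    height, width = len(out), len(out[0])
    for _ in range(n_holes):
        h = rng.randint(1, min(max_size, height))
        w = rng.randint(1, min(max_size, width))
        top = rng.randint(0, height - h)
        left = rng.randint(0, width - w)
        for r in range(top, top + h):
            for c in range(left, left + w):
                out[r][c] = fill
    return out


def augment(image, seed=None):
    """Apply a random flip followed by coarse dropout; the input is not modified."""
    rng = random.Random(seed)
    return coarse_dropout(random_flip(image, rng), rng)


img = [[1.0] * 10 for _ in range(10)]
aug = augment(img, seed=0)
print(sum(v == 0.0 for row in aug for v in row), "pixels masked")
```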

4. Results

Following the procedure described in Section 2.2, we conducted visual identification and tracking of MCSs using our GeoAnnotateAssisted tool (see Section 2.3), resulting in the Dataset of Mesoscale Convective Systems over the European Territory of Russia (DaMesCoS-ETR; see the summary in Section 2.4). We then constructed our deep convolutional neural network MesCoSNet following the method presented in Section 3. Following the sampling procedure described in Section 3.3.2, we generated several (typically seven) splits of the source DaMesCoS-ETR dataset into training and testing subsets. Using the optimization procedure described in Section 3.3.3, we trained and evaluated MesCoSNet on each split. The resulting quality measures and their uncertainties are presented in Table 5.

Table 5.

Quality metrics of MesCoSNet trained on DaMesCoS-ETR in our study.

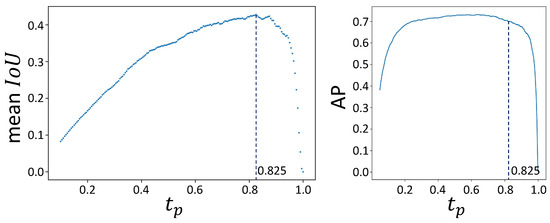

One of the hyperparameters we optimized is the (see Section 3.3.1). It is optimized during evaluation, and it does not impact training of the neural network. By default, . However, this value may not deliver the best quality. In Figure 12, we present the plot of the metric depending on and the plot of the AP metric depending on . The maximum of the mean is achieved at . The landscape of the AP metric is flat with respect to near this value; thus, one may use it without substantial degradation of AP.

Figure 12.

Plots of metrics and AP depending on as the supporting diagram for choosing the .

In Figure 13, we present examples of MesCoSNet application. Qualitatively, MesCoSNet performs the MCS detection task in good agreement with the ground truth. For example, Figure 13a shows an almost perfect match between the detected MCS and the ground truth. In Figure 13b, the location and shape of the identified label are close to the ground truth; however, two of the three ground truth MCSs were missed.

Figure 13.

Examples of MesCoSNet application to the MCS identification problem using Meteosat remote sensing data. Source satellite imagery bands are presented using color maps that deliver the same visual experience as those used in GeoAnnotateAssisted. MCS labels detected by MesCoSNet are shown as pink rectangles; ground truth MCS labels from DaMesCoS-ETR are shown as yellow rectangles. We also display the confidence of MesCoSNet (the MCS class probability) for the detected labels (pink text in the top-left corner of the pink rectangles). The panels show (a) MesCoSNet identifying an MCS close to the ground truth and (b) MesCoSNet identifying one of three ground truth MCSs: two MCSs were missed, whereas the identified one is located close to the ground truth.

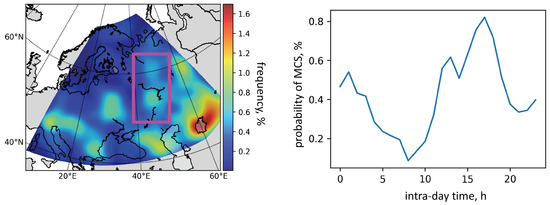

As a demonstration of potential applications of MesCoSNet trained on DaMesCoS-ETR, we present a map of the frequency (probability estimate) of MCS occurrence over the European territory of Russia. We applied MesCoSNet to the full set of Meteosat SEVIRI data for the summer seasons (May to September) from 2014 to 2017. We identified all MCSs using the hyperparameter value . We then calculated the frequency of MCS occurrence in each pixel of the map covered by Meteosat imagery. The resulting frequency map is presented in Figure 14. Note that we did not filter out overlapping occurrences of MCSs that may be attributed to one track; thus, the MCS frequency in this result is most probably overestimated. Additional research is needed to estimate the MCS occurrence frequency accurately while accounting for the track attribution of the identified labels.

Figure 14.

Frequency of MCS occurrence over ETR in summer (May to September) obtained by applying MesCoSNet to Meteosat SEVIRI imagery from 2014 to 2017: (left) frequency map; (right) diurnal variation of the MCS occurrence probability averaged over the area marked by the pink rectangle.
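The frequency estimate described above can be sketched as counting, for each pixel, the share of snapshots in which that pixel falls inside at least one detected MCS box. In this plain-Python sketch, the box format (row and column pixel indices, half-open on the end), the rule of counting a pixel at most once per snapshot, the hourly grouping for the diurnal cycle, and the function names are assumptions. Like the map in Figure 14, it does not merge detections belonging to one track, so a long-lived MCS contributes once per snapshot.

```python
from collections import defaultdict


def occurrence_frequency(detections, height, width):
    """Per-pixel MCS occurrence frequency.

    detections: one entry per satellite snapshot; each entry is a list of
    boxes (row0, col0, row1, col1) in pixel indices, half-open on the end.
    """
    counts = [[0] * width for _ in range(height)]
    for boxes in detections:
        covered = set()  # count each pixel at most once per snapshot
        for r0, c0, r1, c1 in boxes:
            for r in range(max(r0, 0), min(r1, height)):
                for c in range(max(c0, 0), min(c1, width)):
                    covered.add((r, c))
        for r, c in covered:
            counts[r][c] += 1
    n = len(detections)
    return [[k / n for k in row] for row in counts]


def diurnal_cycle(detections, hours, height, width, region):
    """Occurrence probability averaged over `region` for each hour of day.

    hours: hour of day of each snapshot; region: (row0, col0, row1, col1).
    """
    by_hour = defaultdict(list)
    for boxes, hour in zip(detections, hours):
        by_hour[hour].append(boxes)
    r0, c0, r1, c1 = region
    cycle = {}
    for hour, snaps in sorted(by_hour.items()):
        freq = occurrence_frequency(snaps, height, width)
        cells = [freq[r][c] for r in range(r0, r1) for c in range(c0, c1)]
        cycle[hour] = sum(cells) / len(cells)
    return cycle


# Two toy snapshots on a 3 x 3 grid.
dets = [[(0, 0, 2, 2)], [(1, 1, 3, 3)]]
print(occurrence_frequency(dets, 3, 3))
print(diurnal_cycle(dets, [0, 12], 3, 3, (0, 0, 3, 3)))
```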

5. Discussion

In this paper, we presented the results of our comprehensive study on the identification of mesoscale convective systems in satellite imagery over the European territory of Russia. We started by developing our own tool, GeoAnnotateAssisted, for labeling and tracking MCSs in Meteosat remote sensing imagery. Using it, we collected the DaMesCoS-ETR dataset of labeled and tracked MCS instances. We then constructed MesCoSNet, a deep convolutional neural network based on the RetinaNet architecture, and trained it on Meteosat SEVIRI images along with the labels from DaMesCoS-ETR. Finally, we demonstrated some potential applications of our approach for identifying MCSs in satellite imagery.

The quality achieved in our study does not reach the typical quality of contemporary detection models in visual object detection problems, for which state-of-the-art mAP values are reported, e.g., for the SNIPER neural network [128] and the ATSS neural network [129] on the MS COCO dataset [56]. Possible causes of this gap include:

- a small amount of labeled data. In our study, we applied several approaches that may alleviate the negative impact of a small dataset: data augmentation and transfer learning, along with domain adaptation of satellite imagery. However, other techniques may further improve identification quality. For example, one may pretrain the convolutional subnet on Meteosat SEVIRI data within a convolutional autoencoder approach, so that the backbone learns features more informative for this domain than those learned from ImageNet;

- a low signal-to-noise ratio in satellite imagery, which is evident in the images in Figure 1 and Figure 13. Some of the data preprocessing techniques we applied during MesCoSNet training, e.g., Gaussian blur, are meant to reduce the impact of noise in satellite bands. Here, one may further improve the source data by adding relevant features characterized by a high signal-to-noise ratio, e.g., CAPE (convective available potential energy) or CIN (convective inhibition).

In Section 2.4, we described how we searched for MCS events while labeling the Meteosat imagery. In particular, our experts studied records from local meteorological stations, expert assessments, and messages in various local news channels. The goal was to find signs of severe weather events that may indicate the passage of an MCS. This search strategy may introduce bias. In particular, the collected sample of labels and tracks may be spatially biased towards populated territories. Also, the search procedure may bias DaMesCoS-ETR towards extremely strong MCSs, owing to the natural human tendency to focus on hazardous events that may cause economic damage and even loss of human life.

Recently, a number of studies presented at conferences have demonstrated the capabilities of deep learning methods for identifying mesoscale atmospheric phenomena. Some of them report high quality measures. In particular, one research group reported an approach exploiting a convolutional neural network constructed following the U-net [130] architecture; another group reported the use of a feedforward artificial neural network (also known as a fully connected neural network or multilayer perceptron). Both reports evaluate the models using pixel-wise quality measures. In this discussion, we argue that pixel-wise quality measures that do not account for strong class imbalance are not reliable for the MCS identification problem. Indeed, MCSs are extremely rare events (e.g., see Figure 14); thus, the pixel-wise class imbalance may be characterized by a pixel class ratio of approximately 1:200. If one insists on pixel-wise quality assessment, it is strongly advised to employ quality measures that account for strong class imbalance. One potential choice is the $F_\beta$ score, with $\beta$ tuned carefully according to the researcher's needs. In our study, we used object-wise quality metrics; thus, our evaluation is not affected by this metric formulation issue.
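The effect of a 1:200 pixel class ratio on naive pixel-wise scores can be shown with a short calculation. In this plain-Python sketch, the pixel counts are synthetic and the function names are hypothetical; the F_β score is computed from true positives, false positives, and false negatives using its standard definition, F_β = (1 + β²)·TP / ((1 + β²)·TP + β²·FN + FP), where β > 1 weights recall more heavily than precision.

```python
def pixel_accuracy(tp, fp, fn, tn):
    """Share of correctly classified pixels."""
    return (tp + tn) / (tp + fp + fn + tn)


def f_beta(tp, fp, fn, beta=1.0):
    """F-beta score from confusion counts; returns 0.0 when it is undefined."""
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


# Synthetic scene: 1,000 MCS pixels and 200,000 background pixels (1:200).
n_pos, n_neg = 1_000, 200_000
# A trivial "detector" that labels every pixel as background:
acc = pixel_accuracy(tp=0, fp=0, fn=n_pos, tn=n_neg)
f1 = f_beta(tp=0, fp=0, fn=n_pos)
print(f"accuracy = {acc:.3f}, F1 = {f1:.3f}")  # accuracy = 0.995, F1 = 0.000
```

The trivial detector scores almost perfectly on pixel accuracy while detecting no MCS at all, which the F_β score exposes immediately.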

Note also that MesCoSNet closely follows the RetinaNet architecture, in which the labels are rectangular. In contrast, MCS labels in DaMesCoS-ETR are elliptic, in accordance with the methodology of visual MCS identification proposed in Maddox, 1980 [14]. Thus, one may test the approach of approximating the coordinates of three keypoints that define the elliptic form of an MCS instead of two tuples (i.e., location and size). Alternatively, a regression subnet may be trained to approximate the location of the ellipse center, its size, the azimuth angle of its main axis, and its eccentricity. In this case, the number of approximated values increases from four to five, since an ellipse in the plane has five degrees of freedom.
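Converting an elliptic label into the rectangular box used by RetinaNet-type detectors requires the axis-aligned bounding box of a rotated ellipse, whose half-width and half-height are $\sqrt{a^2\cos^2\theta + b^2\sin^2\theta}$ and $\sqrt{a^2\sin^2\theta + b^2\cos^2\theta}$. The plain-Python sketch below implements this closed form and a semi-minor axis helper based on the eccentricity $e = \sqrt{1 - b^2/a^2}$. The parametrization (center, semi-axes, rotation angle measured from the image x-axis in radians) and the function names are assumptions; this is one possible mapping, not necessarily the one used for MesCoSNet training.

```python
import math


def ellipse_to_box(cx, cy, a, b, theta):
    """Axis-aligned bounding box (x_min, y_min, x_max, y_max) of a rotated ellipse.

    (cx, cy): center; a, b: semi-axes; theta: angle of the `a` axis from the
    image x-axis, in radians (hypothetical convention).
    """
    half_w = math.hypot(a * math.cos(theta), b * math.sin(theta))
    half_h = math.hypot(a * math.sin(theta), b * math.cos(theta))
    return cx - half_w, cy - half_h, cx + half_w, cy + half_h


def semi_minor(a, e):
    """Semi-minor axis from the semi-major axis a and eccentricity e (0 <= e < 1)."""
    return a * math.sqrt(1.0 - e * e)


print(ellipse_to_box(0.0, 0.0, 2.0, 1.0, 0.0))
print(ellipse_to_box(0.0, 0.0, 2.0, 1.0, math.pi / 4))
```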

6. Conclusions