Abstract

The rapid development of LiDAR technology has brought major changes to forest resource surveys. Airborne LiDAR point clouds provide precise tree heights and detailed tree structure, and they can be used to estimate key forest resource indicators, such as forest stock volume, diameter at breast height, and forest biomass, at a large scale. By establishing relationship models between the forest parameters measured in sample plots and the parameters calculated from LiDAR data, these models may eventually be extended to large-scale forest resource surveys of entire regions. In this study, eight sample plots in northeast China were used to verify and update plot information using point clouds acquired with a RIEGL VQ-1560i LiDAR scanner. First, tree crowns were segmented using the rotating profile algorithm, and tree positions were registered based on dominant tree heights. Second, considering the correlation between crown shape and tree species, a deep belief network (DBN) classifier was used to identify species from crown features extracted as one-dimensional arrays. Third, once the tree species was known, parameters such as height, crown width, diameter at breast height, biomass, and stock volume were extracted for each tree, enabling accurate large-scale forest surveys based on LiDAR data. The experimental results show that the F-score of tree segmentation exceeds 0.95 in all eight plots, the accuracy of tree species correction exceeds 90%, and the R² values for tree height, east–west crown width, north–south crown width, diameter at breast height, aboveground biomass, and stock volume are 0.893, 0.757, 0.694, 0.840, 0.896, and 0.891, respectively. These results indicate that LiDAR-based surveys are practical and can be widely applied in forest resource monitoring.

1. Introduction

Forests are the largest terrestrial carbon reservoir and ecosystem, providing not only vital ecological services but also enormous economic benefits for human development [1]. The acquisition of forestry parameters, such as tree height, crown width, species, and biomass, is critical for forest investigation and monitoring. Forest resource monitoring provides an effective scientific basis for offground density estimation, change trend analysis, forest growth detection, harvest prediction, and other tasks [2,3,4]. Traditional forest resource monitoring relies on manual field collection, which is time-consuming and labor-intensive and therefore unsuitable for large-scale research. In addition, its accuracy is limited by factors such as measurement instrument errors [5], positional errors caused by GPS signal obstruction, and gross errors in manual measurements. Nevertheless, manual measurement remains the most commonly used method in forestry surveys. There is therefore a need to explore new approaches for rapid and reliable data validation that can meet the requirements of forestry production and ecological construction [6]. Because remote sensing offers wide monitoring coverage, rapid data acquisition, and low cost, applying it to the rapid, large-scale validation and updating of forestry parameters is of both theoretical and practical importance.

Passive optical remote sensing, including multispectral, hyperspectral, and high-resolution imagery, has been widely used to estimate forest parameters, with notable progress. The visible to near-infrared spectral information in passive optical data reflects the physical structure of the forest, from which features such as vegetation indices and texture measures can be derived. Ouma [7] used semivariance functions on QuickBird images to investigate the relationship between forest biomass and spectral variables in Kenya. Marshall and Thenkabail [8] compared EO-1 Hyperion hyperspectral data with multispectral data for biomass estimation and found that the hyperspectral data performed better. Mohammadi et al. [9] developed a model for forest stock estimation in northern Iraq using Landsat ETM+ data. Franklin et al. [10] estimated the canopy density of spruce from TM data with an accuracy of 80%. These studies show that passive optical remote sensing data are mostly used to invert the horizontal structural parameters of forests and are rarely used to estimate vertical structure (e.g., tree height). This is mainly because optical signals penetrate the canopy poorly, which makes it difficult to obtain information in the vertical direction. Some researchers, such as Brown et al. [11], have used high-resolution overlapping stereo images to estimate crown height, but the elevation accuracy of the ground surface beneath the canopy still does not meet the requirements.

Synthetic Aperture Radar (SAR), an active remote sensing technology, can penetrate forest crowns and observe the ground in all weather conditions. SAR signals also interact with treetops and trunks, which allows the vertical structure of forests to be captured. Cloude and Papathanassiou [12] used polarization coherence tomography to reconstruct low-frequency three-dimensional (3D) images and provided a method for selecting the optimal interferometric baseline to estimate forest vertical structure. Blomberg et al. [13] used L-band SAR data from Argentina’s SAOCOM observation satellite to accurately invert forest biomass in northern Europe. Matasci et al. [14] estimated forest aboveground biomass with a root mean square deviation (RMSD) of less than 20% using European Space Agency (ESA) P-band radar data. Although SAR is sensitive to forest vertical structure, the backscatter signal often saturates when forest biomass is high. For example, Luckman et al. [15] used JERS-1 SAR data to estimate tropical forest biomass and found that the backscatter coefficient saturated once biomass reached 6 kg/m², which limited the accuracy of biomass estimation.

Light Detection and Ranging (LiDAR) offers high angular resolution, high range resolution, and strong resistance to interference, which make it possible to acquire high-precision three-dimensional surface information while avoiding signal saturation in high-biomass areas [16]. In forestry survey applications in particular, LiDAR has significant advantages over other remote sensing technologies for measuring forest height and acquiring the vertical structure of forest stands. Depending on the sampling method and configuration, LiDAR can provide highly accurate horizontal and vertical information about forests, whereas optical sensors mainly provide detailed information on the horizontal distribution of forests. Therefore, this study uses airborne LiDAR data to estimate the key forest resource indicators of the sample area.

Depending on the survey scale, LiDAR-based forestry surveys can be divided into the area-based approach (ABA) and individual tree detection (ITD). ABA uses statistical models to estimate forest characteristics from metrics derived from the point clouds within a predefined plot or grid cell [17]. Lefsky et al. [18] laid the theoretical foundation for the ABA method and applied it to estimate plot-level mean diameter at breast height (DBH). The strong correlation between tree height and DBH [19,20] is widely used in forest inventories to estimate forest parameters such as stock volume and biomass [21,22].

This study focuses on individual tree detection, i.e., detecting and delineating individual trees from point clouds based on geometric features and estimating their parameters. Accurate segmentation of tree point clouds is the basis for estimating forestry parameters. Tree crown segmentation methods based on LiDAR data fall into two main categories: raster-based tree segmentation and direct point cloud segmentation. Raster-based segmentation first interpolates the three-dimensional point cloud into a digital surface model (DSM) and then derives a canopy height model (CHM) by normalizing the heights. Next, based on the height undulations in the CHM, fixed-window local maximum filtering [23,24] or variable windows [25,26] are used to search for local maxima as initial treetop locations, and finally edge detection or feature extraction methods are used to delineate tree crowns. Watershed segmentation [27,28,29] and flow tracking [30] are two examples of raster-based tree segmentation algorithms. CHM-based segmentation is fast and effective, but it is prone to erroneous segments and loses detail. Liu et al. [31] partially addressed this problem with a Fishing Net Dragging (FiND) method, which achieved an overall accuracy of 82.4%. However, segmentation accuracy remains sensitive to CHM resolution, and a CHM represents only the crown surface without describing the crown’s vertical structure. As the density and accuracy of LiDAR point clouds have improved rapidly, many researchers now segment tree crowns directly from the point cloud [32,33]. Wang et al. [34] first proposed voxel segmentation of raw point cloud data that accounts for the vertical crown structure of the forest, dividing crown areas at different heights based on the elevation distribution within the voxels and then performing tree segmentation. Morsdorf et al. [35] used local maxima as seed points for k-means clustering of three-dimensional point clouds. Li et al. [36] proposed a top-to-bottom region growing algorithm based on the relative spacing between trees, which achieved 90% segmentation accuracy for coniferous forests but did not transfer well to dense forest areas with overlapping crowns. Compared with traditional raster-based segmentation, direct segmentation of point cloud data reflects the three-dimensional structure of trees more accurately. Unfortunately, most tree segmentation studies using LiDAR data focus on low-density stands, and most methods perform poorly in complex forest environments with overlapping crowns and multiple tree species. In addition, no single segmentation method generalizes well, and most are difficult to apply to trees of different sizes. Chen et al. [37] used the PointNet algorithm to segment point cloud data directly; however, the results depend strongly on the choice of voxel size, which limits its performance for multiscale tree segmentation over large areas. Yan et al. [38] proposed a self-adaptive bandwidth estimation method that estimates the optimal kernel bandwidth automatically without any prior knowledge of crown size, but it has not yet been applied to complex natural forests. Building on our previous work [39], this study employs a rotating profile algorithm for tree crown segmentation, which dynamically captures the contour of the point cloud profile to determine the edges of each tree crown.

For tree species classification based on LiDAR data, Holmgren and Persson [40] used a supervised classification method to distinguish Norway spruce from Scots pine with 95% accuracy. Othmani et al. [41] used terrestrial laser scanning (TLS) data and a wavelet transform to distinguish five tree species with an overall accuracy of 88%. Lin and Hyyppä [42] classified tree species with a support vector machine using point distribution, crown-internal, and tree-external features, achieving an overall accuracy of 85%. Kim et al. [43] extracted crown structure parameters for tree species classification from leaf-on and leaf-off LiDAR data acquired in the growing and leafless seasons, and found that using both datasets identified species better than single-season data. Other researchers have made full use of point cloud intensity information in tree species classification. For example, Ørka et al. [44] combined structural and intensity features to classify Norway spruce and birch and showed that the combination was more accurate than structural or intensity features alone. LiDAR intensity is primarily related to the reflectance of surface features, but it is also affected by several confounding variables, such as the target’s environment, the sensor hardware, and the acquisition geometry [45]. As a result, parametric models based on intensity information are usually limited to a single site. Qin et al. [46] combined structural, spectral, and textural information to achieve an overall accuracy of 91.8% in tree species classification. However, vegetation spectral and textural information often varies over time, which makes such approaches less transferable across seasons.
As the studies above suggest, accurate crown structure information is the most reliable feature for tree species classification. In this paper, a deep belief network (DBN) is used to learn the crown profile shapes of known tree species in the sample plots. The method is suitable for tree species with different crown shapes and can be applied in dense forests.

LiDAR has long been applied successfully to forestry parameter extraction. Solodukhin et al. [47] used LiDAR point cloud data to extract tree height, and the RMSE between their estimated tree heights and photogrammetric results was 14 cm. Some parameters can be derived directly from the segmented tree crowns: tree height, crown width, and crown height are easy to obtain, but DBH and tree species cannot be obtained directly. Although LiDAR data cannot measure DBH directly, several studies have used field measurements to establish relationships that allow DBH to be inferred indirectly from LiDAR data. For example, Shrestha and Wynne [48] estimated the DBH of trees in urban areas of central Oklahoma, USA, using the Optech ALTM 2050 system, with an R² of 0.89. Crown structure parameters, derived from LiDAR coordinate information, are widely used in forest biomass inversion. They are usually calculated from the vegetation returns after elevation normalization and include the 25th, 50th, and 75th percentile heights, maximum tree height, mean tree height, and forest canopy height. Bortolot and Wynne [49] established regression models between the 25th, 50th, and 75th percentile heights and biomass and obtained correlation coefficients between predicted and measured values ranging from 0.59 to 0.82, with RMSE ranging from 13.6 to 140.4 t/ha. Wang et al. [50] estimated aboveground biomass with an Unmanned Aerial Vehicle (UAV) LiDAR system, and their results showed that mean tree height was the most suitable predictor of aboveground biomass. Several researchers have recognized the value of LiDAR intensity data and applied it to biomass inversion. For example, García et al. [51] estimated biomass in a Mediterranean forest in central Spain using height parameters derived from airborne LiDAR point clouds together with distance-corrected intensity parameters, and showed that intensity correction could improve the accuracy of forest biomass estimation. Numerous studies have shown that parameter estimation is more accurate when tree species are taken into account. Donoghue et al. [52] found that LiDAR-based tree height and biomass estimation algorithms developed for coniferous forests were not applicable to mixed forests. Jin et al. [53] introduced tree species as a dummy variable into a point cloud feature regression model to estimate stock volume at the peak forest site in Guangxi, which increased the coefficient of determination (R²) of the model. Pang and Li [54] divided the temperate forests of the Xiaoxing’an Mountains into coniferous, broadleaf, and mixed forests for biomass inversion and found that species-differentiated biomass modeling further improved estimation accuracy. Therefore, in this paper we use the identified tree species to verify and correct erroneous tree species records in the sample plots, and then correct the DBH, aboveground biomass, stock volume, and other parameters of the trees in the sample plots on the basis of the corrected species information.
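The percentile height metrics and the species dummy variable mentioned above can be illustrated with a short Python sketch. It assumes heights are already elevation-normalized; the 2 m vegetation cutoff, the function names `height_metrics` and `fit_with_species_dummy`, and the two-class conifer/broadleaf coding are illustrative assumptions, not settings taken from the cited studies.

```python
import numpy as np


def height_metrics(z_norm, min_height=2.0):
    """Height metrics from elevation-normalized returns.

    Returns at or below `min_height` (an assumed ground/low-vegetation
    cutoff) are ignored before the metrics are computed."""
    veg = np.asarray(z_norm, dtype=float)
    veg = veg[veg > min_height]
    p25, p50, p75 = np.percentile(veg, [25, 50, 75])
    return {"h25": p25, "h50": p50, "h75": p75,
            "hmax": veg.max(), "hmean": veg.mean()}


def fit_with_species_dummy(h, is_conifer, y):
    """Ordinary least squares y ~ b0 + b1*h + b2*is_conifer.

    `is_conifer` is a 0/1 dummy variable, so b2 shifts the intercept for
    one species group while the height slope is shared."""
    h = np.asarray(h, dtype=float)
    X = np.column_stack([np.ones_like(h), h, np.asarray(is_conifer, dtype=float)])
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef
```

The dummy-variable form keeps a single model for all trees while letting each species group have its own intercept; separate per-species models, as in Pang and Li [54], are the other common option.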

In summary, this paper addresses the pressing needs of current forestry surveys and uses LiDAR point cloud data to verify and correct errors in manually collected data. It addresses the following issues: (1) To segment interlaced crowns under the complex growing conditions of the northeastern primeval forest, the rotating profile segmentation method from our previous work is used to obtain crown edge points and segmented point clouds. (2) Tree species are identified from the geometric structure of tree crowns. Because crown structure differs markedly among species, this paper uses the shape of crown profiles extracted from the segmented trees. The DBN method is used to build the tree species recognition model and to correct erroneous species records of the sample trees. The expected contribution of this study is to extract profile information as one-dimensional arrays, rather than fitting geometric shapes, for classification; in addition, applying a DBN enables high-precision tree species identification in complex natural forest areas. (3) Finally, forest parameters are estimated and updated based on the corrected tree species information, and the advantages of LiDAR data for forest parameter extraction are verified to demonstrate its feasibility for large-scale forest resource surveys.

This paper is organized as follows: Section 1 discusses the significance and advantages of LiDAR point cloud data in forest resource surveys, as well as the current status and limitations of existing methods, which motivate the method proposed in this paper. Section 2 describes the location and characteristics of the study area, introduces the experimental data, and describes the research methods and workflow. Section 3 presents the results of tree crown segmentation, species identification, and parameter extraction, and Section 4 analyzes and discusses these findings. Section 5 summarizes the research findings and provides an outlook on future work.

2. Materials and Methods

2.1. Materials

2.1.1. Study Area

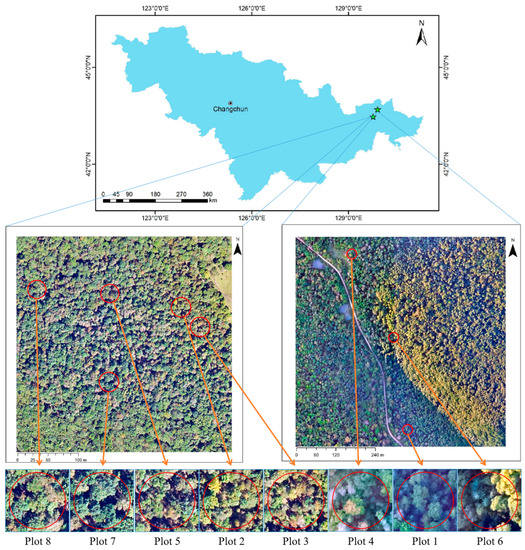

The Northeast China Hupao National Park is located in northeastern China, along the border between Jilin Province and Heilongjiang Province, at 42°31′06″–44°14′49″N, 129°5′0″–131°18′48″E (Figure 1). The park lies in the southern part of the Laoyeling branch of the Changbai Mountain Range; the terrain consists mainly of middle and low mountains, canyons, and hills, and reaches an elevation of 1477.4 m at Laoyeling. The soils are predominantly dark brown soil and marsh soil. The park lies at the heart of the temperate coniferous and broad-leaved mixed forest ecosystem of Asia, with a continental humid monsoon climate and an extremely rich variety of temperate forest plant species. Forest coverage is 93.32%, and the main vegetation type is temperate coniferous and broad-leaved mixed forest.

Figure 1.

Images of the eight sample plots in Northeast China Hupao National Park. The plot positions and IDs are shown in the figure, and the red circles mark the plot areas.

2.1.2. Sample Plot Data

In the study area, eight circular sample plots with a diameter of 30 m were established, and various forest parameters were measured for each tree within the plots. These parameters included tree height, north–south crown width, east–west crown width, height under branches (HUB), DBH, tree species, tree location, and three indirectly derived parameters: stock volume, aboveground biomass (AGB), and belowground biomass (BGB). For each sample plot, the mean or cumulative value of each measured parameter was recorded, as shown in Table 1 (cumulative stock volume and cumulative AGB are the total tree volume and aboveground biomass within a sample plot). Because these are natural forests, tree heights vary and crowns overlap. The terrain, tree species distribution, and tree growth conditions differ among the eight sample plots, which meets the requirements for testing sample plot verification and updating.

Table 1.

Parameters for the eight sample plots.

The sample plot data were provided by the local forestry bureau. The direct parameters were obtained through measurements using instruments such as total stations and altimeters. The indirect parameters were derived through the relevant calculation formulas. These formulas have been validated in practice and widely applied. The sample plot data were collected in September 2018, the same year as the acquisition of the LiDAR data.

To assess the accuracy of the estimated results, the manually measured data were further validated in the field to obtain reference data: professionals remeasured the trees with instruments such as total stations and ultrasonic altimeters. These measurements were compared with the plot survey data to check their consistency, and any differences were investigated further to identify their causes. The field-validated data were therefore regarded as true and reliable and were used as the reference for assessing the accuracy of the estimated results.

2.1.3. LiDAR Data

The LiDAR data were acquired with an airborne LiDAR scanning system combined with IMU/DGPS-assisted surveying. Specifically, a Cessna 208B aircraft carried a RIEGL VQ-1560i LiDAR payload with an integrated Inertial Measurement Unit (IMU) and global navigation satellite system (GNSS) receiver. Data were observed jointly with ground-based Continuously Operating Reference Stations (CORS) and manually deployed base stations. The average flight altitude was 1000 m above ground level (AGL), and the laser recorder captured four echoes per laser pulse. With a flight line overlap of 20%, the average point density was 20 points per square meter (pts/m²). According to the project’s task specifications, the horizontal accuracy was between 15 and 25 cm and the vertical accuracy was approximately 15 cm. The properties are listed in Table 2.

Table 2.

Properties of the point cloud data.

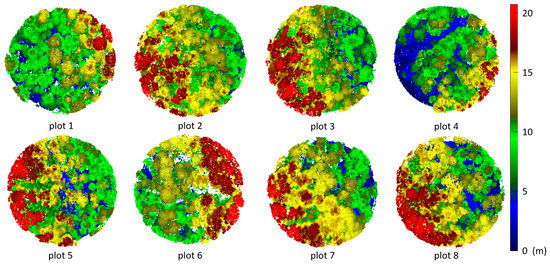

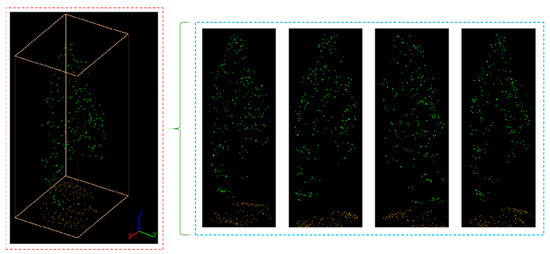

For the experiment, the point cloud data were clipped using the vector boundaries of the sample plots, with an additional buffer zone of 30 m radius retained. The clipped point clouds were used in the experiment (Figure 2).
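A minimal sketch of the clipping step, assuming each plot is a circle described by its center and the 15 m radius implied by the 30 m plot diameter. Here the 30 m buffer is read as a distance added to the plot radius; if it instead denotes the radius of the clipping circle itself, call the function with `plot_radius=0.0, buffer=30.0`. `clip_plot` is a hypothetical helper.

```python
import numpy as np


def clip_plot(xyz, center, plot_radius=15.0, buffer=30.0):
    """Keep points whose horizontal distance to the plot center is at most
    plot_radius + buffer (circular plot geometry is an assumption)."""
    d = np.hypot(xyz[:, 0] - center[0], xyz[:, 1] - center[1])
    return xyz[d <= plot_radius + buffer]
```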

Figure 2.

Point cloud data of the eight sample plots with buffer zone after clipping and normalization.

2.2. Methods

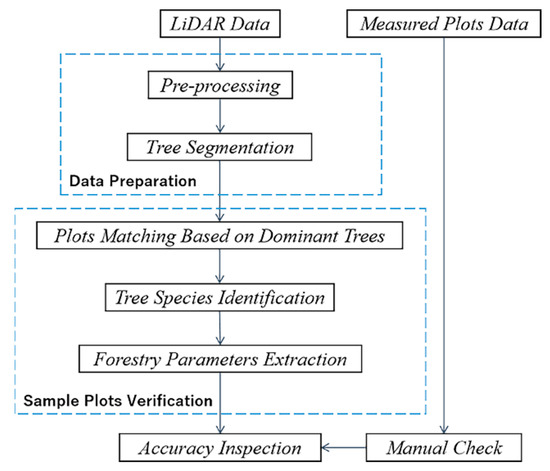

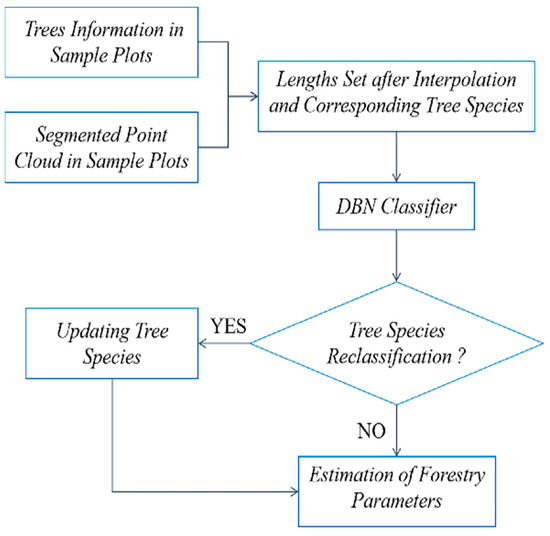

Figure 3 illustrates the workflow of this experiment, which consisted of two parts: data preparation and sample plot verification. The data preparation included the preprocessing of the point cloud data and the segmentation of trees in the plots. The sample plot verification involved plot matching, tree species identification, and the extraction of forestry parameters.

Figure 3.

Workflow of the overall method.

2.2.1. Point Cloud Preprocessing

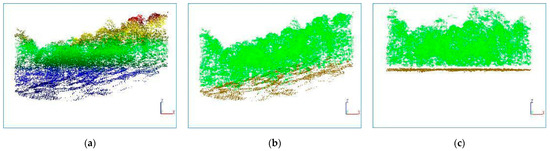

Preprocessing included filtering and normalization of point cloud data, aiming to obtain a point cloud that eliminated terrain effects and reflected the real horizontal and vertical structure of the forest.

Point cloud filtering consisted of two parts: noise removal and ground point separation. Noise refers to points with unreasonable elevation values; in this study, these points were removed with the radius outlier removal (ROR) filter [55]. Ground point separation divides the point cloud into ground and nonground points (mainly tree points in this study), and the resulting ground points can be used to generate a digital elevation model (DEM). Ground point separation was performed in the LiDARForest software (version 1.0) using the progressive triangulated irregular network (TIN) densification (PTD) algorithm proposed by Axelsson [56]. The PTD thresholds were set according to the terrain variability and point cloud density.
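The ROR filter can be sketched in a few lines with SciPy's `cKDTree`: a point is kept only if enough other points lie within a fixed radius. The radius and minimum neighbor count below are illustrative assumptions, since the text does not report the values used; PTD ground separation is not reproduced here.

```python
import numpy as np
from scipy.spatial import cKDTree


def radius_outlier_removal(xyz, radius=1.0, min_neighbors=5):
    """Drop points that have fewer than `min_neighbors` other points within
    `radius`. Returns the filtered points and the boolean keep mask."""
    tree = cKDTree(xyz)
    neighbors = tree.query_ball_point(xyz, r=radius)
    # Each neighbor list includes the query point itself, so subtract one.
    counts = np.array([len(n) - 1 for n in neighbors])
    keep = counts >= min_neighbors
    return xyz[keep], keep
```

Isolated high or low returns (birds, multipath echoes) have few neighbors and are removed, while points inside dense crowns are kept.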

Before further analysis, the point cloud is normalized. Point cloud normalization subtracts the DEM elevation at each point’s planimetric location from the point’s elevation. The resulting normalized tree point cloud, CNTP, is free of terrain effects and reflects the true horizontal and vertical distribution of trees. The preprocessing procedure is shown in Figure 4.
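Height normalization can be sketched as follows. The text does not state how the DEM is interpolated, so this version assumes a TIN surface, i.e., SciPy's `LinearNDInterpolator` over a Delaunay triangulation of the ground points, with a nearest-ground-point fallback (also an assumption) for points outside the ground points' convex hull.

```python
import numpy as np
from scipy.interpolate import LinearNDInterpolator, NearestNDInterpolator


def normalize_heights(points, ground):
    """Return a copy of `points` (n x 3) with the terrain height under each
    point subtracted from its z value. `ground` holds ground points (k x 3)."""
    tin = LinearNDInterpolator(ground[:, :2], ground[:, 2])
    z_ground = tin(points[:, :2])
    outside = np.isnan(z_ground)            # beyond the TIN's convex hull
    if outside.any():
        nearest = NearestNDInterpolator(ground[:, :2], ground[:, 2])
        z_ground[outside] = nearest(points[outside, :2])
    out = points.copy()
    out[:, 2] = points[:, 2] - z_ground
    return out
```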

Figure 4.

Point cloud preprocessing procedure. (a) Original point cloud; (b) Filtered point cloud; (c) Normalized point cloud. Points in (a) are colored by elevation. Ground points are shown in brown and tree points in green in (b,c).

The following indicators were used to evaluate the accuracy of ground point separation. A precise, manually identified DEM was used as the reference data.

Separation accuracy: the ratio of correctly classified points to the total number of points in the plot;

Type I error: nonground points classified as ground points after separation;

Type II error: ground points classified as nonground points after separation.
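These indicators can be computed from per-point labels as sketched below. The definitions are used as written in this section. Normalizing each error by the number of reference points in the misclassified class is an assumption, because the text does not give denominators. Some filter comparisons, such as the ISPRS test of Sithole and Vosselman, assign Type I and Type II the other way round.

```python
import numpy as np


def separation_accuracy(ref_ground, pred_ground):
    """Accuracy, Type I and Type II error rates for ground point separation.

    Both inputs are boolean arrays with True meaning "ground point"."""
    ref = np.asarray(ref_ground, dtype=bool)
    pred = np.asarray(pred_ground, dtype=bool)
    accuracy = np.mean(ref == pred)
    type_i = np.sum(~ref & pred) / np.sum(~ref)   # nonground labeled as ground
    type_ii = np.sum(ref & ~pred) / np.sum(ref)   # ground labeled as nonground
    return accuracy, type_i, type_ii
```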

2.2.2. Tree Segmentation

To implement tree segmentation, the positions of the trees within the plots must first be determined. Given the structure of trees, the highest point within an individual tree’s extent can be taken as the tree center. Therefore, the local highest points in CNTP were selected as the initial treetop points.

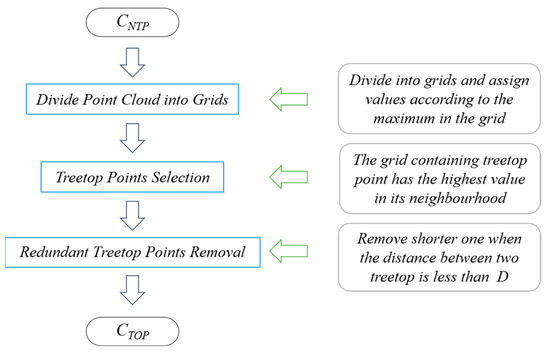

The CNTP was partitioned into grid cells of size d, chosen according to the average point spacing. Each cell was assigned the elevation Z of the highest tree point it contained, or 0 if it contained no tree points. Treetop points were selected from CNTP according to the following criteria: the cell containing the treetop point must have the maximum value within its neighborhood, and it must not be adjacent to any cell that contains no tree points. To remove redundant treetop points, a distance threshold D was defined: when two treetop points were closer than D, the lower one was removed. D served only as an initial criterion for redundant treetops, so it was not set too large, to avoid discarding genuine treetops as redundant. Finally, all selected treetop points formed the initial treetop point set CTOP. The workflow for obtaining initial treetop points is shown in Figure 5.
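The selection rules above can be sketched in Python. The 3 × 3 neighborhood, the treatment of cells outside the grid as empty, and the values of d and D are assumptions made for illustration; `initial_treetops` is a hypothetical name, and the pure-Python loops favor readability over speed.

```python
import numpy as np


def initial_treetops(xyz, d=0.5, D=2.0):
    """Select initial treetop indices (CTOP) from a normalized tree point
    cloud `xyz` (n x 3, heights > 0) using a grid of cell size d and a
    redundancy distance threshold D."""
    ij = np.floor((xyz[:, :2] - xyz[:, :2].min(axis=0)) / d).astype(int)
    nx, ny = ij.max(axis=0) + 1
    grid = np.zeros((nx, ny))            # 0 = no tree points in the cell
    top = np.full((nx, ny), -1)          # index of the highest point per cell
    for k, (i, j) in enumerate(ij):
        if xyz[k, 2] > grid[i, j]:
            grid[i, j], top[i, j] = xyz[k, 2], k
    padded = np.pad(grid, 1)             # zero border: outside counts as empty
    cands = []
    for i in range(nx):
        for j in range(ny):
            win = padded[i:i + 3, j:j + 3]   # 3 x 3 neighborhood of cell (i, j)
            # Local maximum with no empty cell in its neighborhood.
            if grid[i, j] > 0 and grid[i, j] >= win.max() and (win > 0).all():
                cands.append(top[i, j])
    # Visit candidates from highest to lowest; drop a lower one closer than D.
    cands.sort(key=lambda k: -xyz[k, 2])
    kept = []
    for k in cands:
        if all(np.hypot(*(xyz[k, :2] - xyz[q, :2])) >= D for q in kept):
            kept.append(k)
    return np.array(kept, dtype=int)
```

Visiting candidates from highest to lowest ensures that, within any pair closer than D, the lower point is the one discarded, as the text requires.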

Figure 5.

Workflow for obtaining initial treetop points. CNTP: normalized tree point cloud; CTOP: treetop points set; D: distance threshold.

After the initial treetop points are obtained, many studies segment crowns with clustering methods. This study instead focused on the vertical profile structure around the initial treetop points and used rotating profiles to search for the edges of individual tree crowns, thereby segmenting the trees and refining their positions.

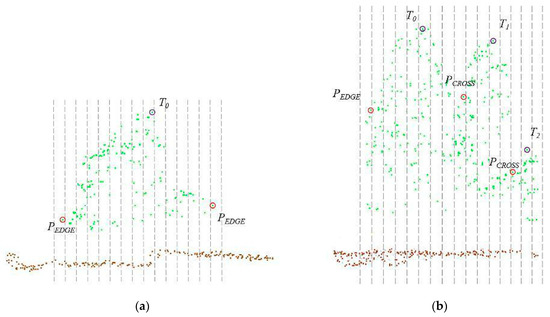

As shown in Figure 6, the vertical profile through a treetop point T0 at a given angle can take one of two forms: (a) the profile contains only the crown of T0; or (b) the profile contains N other treetop points Ti (1 ≤ i ≤ N) in addition to T0, which is the general case. The profile was divided into M subsegments Sj (1 ≤ j ≤ M) of equal length; Zj is the maximum Z value of the tree points in subsegment Sj, and Pj is the corresponding tree point. The edge point PEDGE and the intersection point PCROSS were searched for among the Pj. In case (a), only PEDGE needs to be found, and the edge points lie at the two ends of the profile (Figure 6). In case (b), in addition to the edge points, the intersection point PCROSS between the crown of T0 and neighboring crowns must be found. Where crowns overlap, the intersection point appears as a valley point in the profile. Equation (1) for obtaining PEDGE and PCROSS was derived on this basis.

where Pj: the vertex of subsegment Sj of the profile (1 ≤ j ≤ M − 1);

Zj: Z value of Pj.

Figure 6.

Subsegments and the location of PEDGE and PCROSS. (a) Single crown with treetop T0; (b) Multiple crowns with treetops Ti (i = 0, 1, 2). Ground points are shown in brown and tree points in green.
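Equation (1) itself is not reproduced in this section, so the following is only a sketch of the rule described in the text, not the authors’ formula. Starting from the subsegment that holds T0, it walks outward while the heights Zj do not rise. A rise marks a valley, which is taken as PCROSS; reaching the end of the profile or an empty subsegment is taken as PEDGE. The 0-based indices and the use of NaN for empty subsegments are assumptions.

```python
import math


def crown_bounds(z, k0):
    """Locate the crown boundary on each side of a treetop in one profile.

    z  : list of Zj values (highest tree point per subsegment Sj),
         math.nan where a subsegment holds no tree points.
    k0 : index of the subsegment containing the treetop T0.
    Returns ((left_index, kind), (right_index, kind)), kind being
    "PEDGE" or "PCROSS"."""
    def walk(step):
        j = k0
        while 0 <= j + step < len(z) and not math.isnan(z[j + step]):
            if z[j + step] > z[j]:      # heights rise again: j is a valley
                return j, "PCROSS"
            j += step
        return j, "PEDGE"               # profile end or empty subsegment
    return walk(-1), walk(+1)
```

For example, `crown_bounds([1, 3, 5, 7, 5, 3, 4, 6, 8, 6, 2], 3)` returns an edge at index 0 and a valley (PCROSS) at index 5, where the neighboring crown begins to rise.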

The profile differs from one direction to another. To identify individual trees accurately, crown edges are extracted by analyzing a series of rotating profiles taken at fixed angular intervals. The steps are as follows:

(1) Sort CTOP in descending order of elevation to obtain the sequence of treetop points Ti.

(2) Take a profile at the given angle through treetop T1. Let Zj be the maximum Z value of the tree points in subsegment Sj, and let Pj be the corresponding tree point. Find PEDGE and PCROSS among the Pj (Figure 6 and Equation (1)) to obtain the crown extent in this profile.

(3) Rotate the profile around T1 to the next angle and obtain the new PEDGE and PCROSS. Repeat the rotation until the rotation angle reaches 180°; this yields all crown edges and the rough range Rt of the tree.

(4) Iterate over the remaining treetop points and remove from CTOP any point that lies within Rt. All high tree points within Rt are grouped into the tree’s crown.

(5) Select the next treetop in descending order of elevation and repeat steps (2)–(4) until all treetop points have been processed.

(6) Group high points whose distance to a tree’s axis is less than DMAX into the corresponding crown set. The tree’s axis is the line from the crown’s center of gravity down to the ground; this step excludes some isolated high points.
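Steps (2)–(4) can be sketched as below for a single treetop. This is a simplified illustration under stated assumptions, not the authors’ implementation. The profile is a strip of assumed width around a line through the treetop, with an assumed half-length, number of subsegments m (odd, so that the treetop sits at the center of a subsegment), and angular step. The rough range Rt is approximated by one boundary radius per sampled direction, and the membership test of step (4) uses the nearest sampled direction instead of a polygon.

```python
import numpy as np


def crown_radii(xyz, top, half_len=6.0, width=0.5, m=25, step_deg=15.0):
    """Rotate a vertical profile around a treetop and return, per direction,
    the horizontal distance from the treetop to the crown boundary.

    Each profile (0 <= angle < 180 deg) gives two boundaries, one on each
    side of the treetop, so the returned directions cover the full circle."""
    assert m % 2 == 1, "odd m centers the treetop in subsegment m // 2"
    seg = 2.0 * half_len / m                  # length of one subsegment Sj
    k0 = m // 2                               # subsegment containing the treetop
    d = xyz[:, :2] - np.asarray(top[:2], dtype=float)
    dirs, radii = [], []
    for th in np.deg2rad(np.arange(0.0, 180.0, step_deg)):
        u = np.array([np.cos(th), np.sin(th)])
        s = d @ u                                        # position along the profile
        perp = np.abs(d[:, 0] * u[1] - d[:, 1] * u[0])   # offset from the profile line
        keep = (perp <= width / 2) & (np.abs(s) <= half_len)
        idx = np.minimum(np.floor((s[keep] + half_len) / seg).astype(int), m - 1)
        zk = xyz[keep, 2]
        zmax = np.full(m, np.nan)             # Zj; NaN marks an empty subsegment
        for j in np.unique(idx):
            zmax[j] = zk[idx == j].max()
        # Walk outward while heights do not rise; comparisons with NaN are
        # False, so empty subsegments and the profile ends stop the walk too.
        lo = k0
        while lo > 0 and zmax[lo - 1] <= zmax[lo]:
            lo -= 1
        hi = k0
        while hi < m - 1 and zmax[hi + 1] <= zmax[hi]:
            hi += 1
        dirs += [th, th + np.pi]
        radii += [abs(-half_len + (hi + 0.5) * seg), abs(-half_len + (lo + 0.5) * seg)]
    return np.array(dirs), np.array(radii)


def inside_crown(point, top, dirs, radii):
    """Approximate step (4): is `point` inside the rough range Rt of `top`?
    Compares its distance with the radius of the nearest sampled direction."""
    v = np.asarray(point[:2], dtype=float) - np.asarray(top[:2], dtype=float)
    phi = np.arctan2(v[1], v[0])
    gap = np.abs((dirs - phi + np.pi) % (2 * np.pi) - np.pi)
    return bool(np.hypot(v[0], v[1]) <= radii[np.argmin(gap)])
```

In a full loop, treetops would be processed in descending order of height, and any remaining treetop for which `inside_crown` returns True would be removed from CTOP, as in steps (4) and (5).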

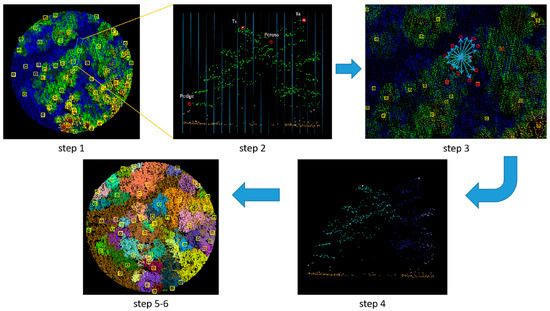

The workflow of the rotational profile algorithm is shown in Figure 7, and Figure 8 shows the segmentation result.

Figure 7.

Workflow of the rotational profile algorithm. Unsegmented tree points are colored by elevation, and segmented individual trees are shown in different colors.

Figure 8.

Treetop point locations after segmentation. (a) Normalized point cloud; (b) Segmented point cloud; (c) A partial profile of (b). Treetop points are marked with rectangles or circles in (b,c), and segmented individual trees are shown in different colors in (b,c).

To evaluate the accuracy of individual tree segmentation, recall, precision, F-score [57], and balanced accuracy [58] were used as indicators.

where r, p, F, and BA are recall, precision, F-score, and balanced accuracy, respectively;

TP: number of correctly segmented trees;

FN: number of undersegmented trees;

FP: number of oversegmented trees;

TN: number of non-treetop points that are correctly excluded (i.e., the number of points in the initial CTOP − (TP + FP + FN)).
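The equations for these indicators are not shown in this section. The sketch below assumes the standard definitions: recall r = TP/(TP + FN), precision p = TP/(TP + FP), F = 2rp/(r + p), and balanced accuracy as the mean of recall and specificity TN/(TN + FP).

```python
def segmentation_scores(tp, fn, fp, tn):
    """Recall, precision, F-score and balanced accuracy from tree counts
    (standard definitions, assumed to match the paper's equations)."""
    r = tp / (tp + fn)
    p = tp / (tp + fp)
    f = 2 * r * p / (r + p)
    ba = (r + tn / (tn + fp)) / 2
    return r, p, f, ba
```

Balanced accuracy complements the F-score because it also credits the correct rejection of spurious treetop candidates (TN), which the F-score ignores.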

2.2.3. Plot Matching Based on Dominant Trees

The spatial accuracy of the measured data was limited by the measurement environment, whereas the point clouds were more accurate (see Table 2). Because the measured data and the point cloud data did not match in spatial position, plot matching was required to register the measured data to the point cloud data and achieve spatial consistency between the two datasets before further processing.

The concept of matching degree R was proposed for plot matching. Let Δh be the difference between the measured height of a tree and its point cloud height. When |Δh| was less than the threshold DT, the position of the sample tree was considered correct. The matching degree of a plot was defined as the ratio of the number of trees satisfying |Δh| < DT to the total number of trees, i.e., R = n/N × 100%. When the matching degree of a plot was greater than 90%, its spatial position was considered accurate.

where R: the matching degree;

N: the number of trees in the plot;

n: the number of trees in the plot that satisfy |Δh| < DT.
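The matching degree follows directly from these definitions. A minimal sketch, in which the height lists and the DT value in the usage line are hypothetical (the paper's DT is not given in this section):

```python
def matching_degree(measured_heights, lidar_heights, dt):
    """R = n / N: share of trees whose |measured - LiDAR| height difference is below dt."""
    n = sum(1 for hm, hl in zip(measured_heights, lidar_heights) if abs(hm - hl) < dt)
    return n / len(measured_heights)


# Hypothetical plot of four trees with DT = 1.5 m: three trees match,
# so R = 0.75, below the 90% requirement, and dominant-tree matching is needed.
r = matching_degree([20.0, 18.0, 15.0, 12.0], [20.5, 17.0, 15.2, 16.0], 1.5)
```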

When the matching degree did not meet this requirement, plot matching based on dominant trees was performed. In forestry, dominant trees are the largest trees in a stand in terms of diameter, tree height, and crown height; they occupy the most space, receive the most sunlight, and experience almost no suppression from neighbors. Based on the positive correlation between tree height, diameter, and crown height, we defined the trees in the top 10% of heights in the plot (or the top 5% when the plot contains many trees) as dominant trees. Using the positions of the dominant trees, a coordinate transformation matrix (rotation and translation) was established between the plot and the LiDAR point cloud. The plot was rotated and translated so that the positions of its dominant trees matched those in the segmented point cloud, and the plot attributes were updated once the matching degree exceeded 90%.
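The paper does not state how the rotation and translation are estimated. One common choice, shown here as a swapped-in technique and not as the authors' method, is the SVD-based least-squares (Kabsch/Procrustes) solution for a 2D rigid transform. The sketch assumes that each measured dominant tree has already been paired with its counterpart in the segmented point cloud; the function names are hypothetical.

```python
import numpy as np


def fit_rigid_2d(src, dst):
    """Least-squares rotation r and translation t with dst ≈ src @ r.T + t.

    src: (k, 2) measured dominant-tree positions; dst: (k, 2) matched
    treetop positions from the segmented point cloud (pairing assumed known).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    h = (src - cs).T @ (dst - cd)                # 2 x 2 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))       # guard against a reflection
    r = vt.T @ np.diag([1.0, d]) @ u.T
    t = cd - r @ cs
    return r, t


def apply_rigid_2d(points, r, t):
    """Rotate and translate plot positions into the point cloud frame."""
    return np.asarray(points, dtype=float) @ r.T + t
```

A rigid (rotation plus translation) model keeps distances between trees unchanged, which matches the description of rotating and translating the plot; after applying it to all plot trees, the matching degree R can be recomputed.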

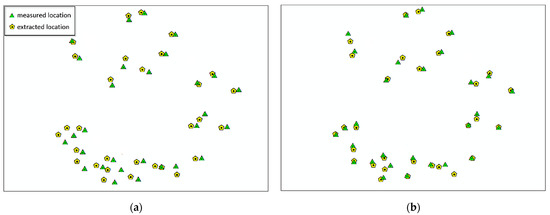

In practice, the positions of the dominant trees in the measured data and in the segmented point cloud cannot be made fully consistent, because terrain slope and crown shape mean that the treetop position does not exactly represent the tree center. Therefore, a position deviation value Dp (Equation (7)) was proposed to quantify the deviation between the positions of the dominant trees in the measured data and in the segmented point cloud. Matching was considered complete, and the data ready for subsequent parameter extraction, when the matching degree R was greater than 90% and Dp was smaller than the set threshold. The plot matching results are shown in Figure 9.

where Dp: position deviation value;

n: the number of dominant trees in the plot;

Δdi: deviation between the positions of the ith dominant tree in the measured data and in the point cloud (1 ≤ i ≤ n).

Figure 9.

Plot matching based on dominant trees. (a) Locations of the measured trees and extracted trees (from the segmented point cloud) before matching; (b) Locations after matching.

2.2.4. Tree Species Identification and Verification

Traditional forestry surveys remain the most reliable method for obtaining tree species information, providing relatively accurate information about the species within the sample plots. However, it is undeniable that errors in judgment or recording can occur during manual measurements, making it necessary to further verify and update the tree species information. In this study, by selecting typical individuals of each tree species within the plots and obtaining their cross-sectional features, a DBN classifier was built to identify the tree species.

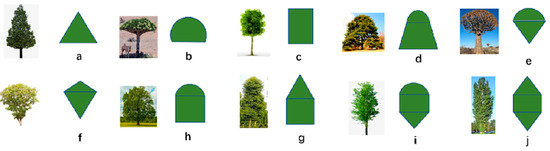

Currently, many researchers combine crown point clouds with spectral information to identify tree species [59,60]. However, the spectral information of a given species changes with season, time, and leaf age. Therefore, apart from occasional deformation caused by lightning strikes or rockfall, the geometric shape of the tree crown is more reliable information for species identification. Different tree species often have different crown shapes, as shown in Figure 10. In this paper, we sampled the crown width of typical trees of each species at different heights to represent the geometric shape of the crown profile, and the resulting width sets were used as samples to train the DBN classifier.

Figure 10.

The shape of the tree crowns and their simplified fitted shapes. (a) triangle. (b) sector. (c) rectangle. (d) The combination of sector and trapezoid from top to bottom. (e) The combination of sector and triangle from top to bottom. (f) The combination of two triangles from top to bottom. (g) The combination of sector and rectangle from top to bottom. (h) The combination of triangle and rectangle from top to bottom. (i) The combination of sector, rectangle and triangle from top to bottom. (j) The combination of triangle, rectangle and triangle from top to bottom.

In this study, seven species were involved in tree species identification; they belong to different categories with large morphological differences, so the simplified fitted shapes were sufficient. For more challenging identification tasks (e.g., species with similar morphological characteristics, or subspecies), trees can first be categorized by fitted shape, and morphological parameters such as angle and aspect ratio can then be introduced into the identification.

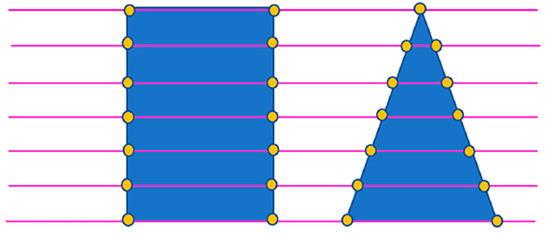

(1) Extraction of key points based on parallel lines

For the segmented points of a single tree, draw a vertical line L from the treetop to the ground. Select several profiles of width DW centered on the treetop, keeping only profiles that are not connected to other tree crowns (as shown in Figure 11). DW depends on the average point spacing dis of the point cloud and is generally set to DW = 2dis. On each profile, draw parallel lines along L at a spacing Δd and record the positions of the crown endpoints between the parallel lines. After all parallel lines have been scanned, these endpoints are taken as the key points of the crown in that profile (as shown in Figure 12).
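A minimal sketch of the key-point step for one profile follows. It rests on our reading of the text, which is an assumption: the parallel lines are horizontal, spaced Δd apart along L, and in each band between adjacent lines the leftmost and rightmost crown points are the key points, so their separation is the crown width at that height. The function name and the band-centre height convention are hypothetical.

```python
import numpy as np


def profile_key_points(u, z, dd):
    """Key points of one crown profile, band by band.

    u: horizontal coordinate of each point along the profile (m)
    z: normalized height of each point (m)
    dd: spacing between the parallel lines (m)
    Returns (band_heights, left_u, right_u); empty bands are skipped.
    """
    u = np.asarray(u, dtype=float)
    z = np.asarray(z, dtype=float)
    band = np.floor((z - z.min()) / dd).astype(int)
    heights, left, right = [], [], []
    for b in np.unique(band):                       # ascending height order
        in_band = band == b
        heights.append(z.min() + (b + 0.5) * dd)    # band-centre height
        left.append(u[in_band].min())               # left endpoint
        right.append(u[in_band].max())              # right endpoint
    return np.array(heights), np.array(left), np.array(right)
```

The widths used later are then `right - left`, paired with the band heights as the positions li.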

Figure 11.

Different profiles of the segmented point cloud from a single tree. Ground points are shown in brown and tree points in green.

Figure 12.

Extraction of key points based on parallel lines. The yellow points are the key points and the blue objects are the fitting shapes.

(2) Extraction of geometric morphological features of crown profiles

Connect the key points on the same parallel line and record the position li and length gi of the resulting intersection segment (1 ≤ i ≤ n, where the number of lines is n + 1). The positions and lengths of these segments summarize the shape of the tree crown, but the number of segments differs from tree to tree because of differences in tree height. Forcing the same number of segments on every tree would reduce the accuracy of the lengths, given the limited point cloud density. Therefore, to provide inputs of equal length for DBN training, cubic spline interpolation is used to bring every tree to the same number of segments. Cubic spline interpolation is accurate and avoids the Runge phenomenon, i.e., the violent oscillation of the interpolating curve that can occur with polynomial interpolation.

Let S(l) be the interpolation function. On each subinterval, S(l) is a cubic polynomial, as shown in Equations (8) and (9):

S(l) must take the value gi at each node li, as shown in Equation (10):

At all interior nodes (i.e., excluding the first and last nodes), the first and second derivatives of S(l) are continuous, so that adjacent pieces share the same slope and curvature at each node, as shown in Equation (11):

The natural spline condition sets the second derivative of S(l) to 0 at the first and last nodes, so that no curvature is imposed at the ends, which limits oscillation of the interpolating curve near the endpoints (Equation (12)):
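Equations (8)–(12) are not reproduced in this text. For reference, the conditions they describe correspond to the standard natural cubic spline, written below with nodes l1 < l2 < … < ln and values gi; this indexing is our assumption and may differ from the original equations.

$$
\begin{aligned}
S(l) &= S_i(l), \quad l \in [l_i, l_{i+1}], \quad i = 1, \dots, n-1 \\
S_i(l) &= a_i + b_i (l - l_i) + c_i (l - l_i)^2 + d_i (l - l_i)^3 \\
S(l_i) &= g_i, \quad i = 1, \dots, n \\
S_{i-1}(l_i) &= S_i(l_i), \quad S'_{i-1}(l_i) = S'_i(l_i), \quad S''_{i-1}(l_i) = S''_i(l_i), \quad i = 2, \dots, n-1 \\
S''(l_1) &= S''(l_n) = 0
\end{aligned}
$$

Counting conditions shows why the coefficients are determined: the n − 1 cubic pieces have 4(n − 1) coefficients; interpolation at both ends of every piece gives 2(n − 1) equations, continuity of S′ and S″ at the n − 2 interior nodes gives 2(n − 2), and the natural condition adds 2, for 4(n − 1) equations in total. Because S_i(l_i) = a_i, the constant terms are simply a_i = g_i.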

Based on Equations (10)–(12), the coefficients ai, bi, ci, and di in Equation (9) can be solved to determine the interpolation function S(l). Then, by selecting new positions l’i and substituting them into S(l), the corresponding intersection lengths g’i are obtained. This yields a set of lengths of the same dimension for every profile, which serves as a feature vector describing the geometric characteristics of the profile.
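The resampling step can be sketched with a self-contained NumPy natural cubic spline (SciPy's `scipy.interpolate.CubicSpline` with `bc_type='natural'` provides the same interpolant). The sketch solves for the second derivatives at the nodes, which is equivalent to solving for ai, bi, ci, di; the number k of resampled positions and the equal spacing of the new positions l’i are hypothetical choices, since the paper does not state them here.

```python
import numpy as np


def natural_cubic_spline(l, g):
    """Natural cubic spline through (l_i, g_i); l must be strictly increasing."""
    l = np.asarray(l, dtype=float)
    g = np.asarray(g, dtype=float)
    n = len(l)
    h = np.diff(l)
    # Tridiagonal system for the second derivatives m_i at the nodes.
    a = np.zeros((n, n))
    rhs = np.zeros(n)
    a[0, 0] = a[-1, -1] = 1.0  # natural condition: S'' = 0 at both end nodes
    for i in range(1, n - 1):
        a[i, i - 1] = h[i - 1]
        a[i, i] = 2.0 * (h[i - 1] + h[i])
        a[i, i + 1] = h[i]
        rhs[i] = 6.0 * ((g[i + 1] - g[i]) / h[i] - (g[i] - g[i - 1]) / h[i - 1])
    m = np.linalg.solve(a, rhs)

    def s(x):
        x = np.asarray(x, dtype=float)
        # Index of the interval [l_i, l_(i+1)] containing each x.
        i = np.clip(np.searchsorted(l, x) - 1, 0, n - 2)
        hi = h[i]
        left, right = l[i + 1] - x, x - l[i]
        return (m[i] * left**3 / (6 * hi) + m[i + 1] * right**3 / (6 * hi)
                + (g[i] / hi - m[i] * hi / 6) * left
                + (g[i + 1] / hi - m[i + 1] * hi / 6) * right)

    return s


def resample_widths(l, g, k):
    """Fixed-length feature vector g'_i at k equally spaced positions l'_i."""
    s = natural_cubic_spline(l, g)
    l_new = np.linspace(l[0], l[-1], k)
    return s(l_new)
```

Every profile resampled with the same k yields a feature vector of identical length, whatever the tree's height or the number of original segments.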

(3) Learning and updating of tree species by DBN.

A deep belief network (DBN) [61] was chosen for learning and updating tree species. Because a DBN is pretrained layer by layer before its output layer is trained, it can converge well on small training sets, which makes it applicable in forest environments where sample sizes are limited.

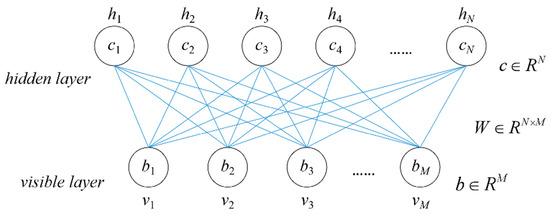

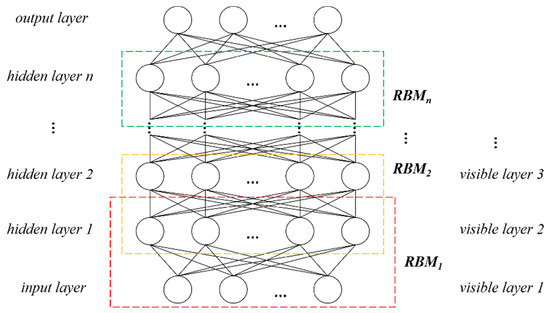

The DBN is formed by stacking multiple Restricted Boltzmann Machines (RBMs), each of which consists of two layers: a hidden layer and a visible layer (as shown in Figure 13). When RBMs are stacked into a deep network, the output (hidden) layer of one RBM serves as the input (visible) layer of the next, and so on. Finally, an output layer is added to form the basic structure of the DBN.

Figure 13.

The structure of RBM.

An RBM has full connections between its visible and hidden layers and no connections within a layer. Taking v as the network input, the neuron likelihood functions of the visible and hidden layers can be expressed as follows:

where v = (v1, v2, …, vM) is the visible layer input vector;

h = (h1, h2, …, hN) is the hidden layer vector;

M and N are the number of neurons in the visible and hidden layers, respectively.

The energy function between visible layer and hidden layer is:

where wij: weight between the ith visible layer neuron and the jth hidden layer neuron;

ai: bias of the ith visible layer neuron;

bj: bias of the jth hidden layer neuron;

wij, ai, and bj are the network parameters, obtained by training.

The activation functions of visible layer and hidden layer neurons are:
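The energy, likelihood, and activation equations referred to above are missing from this text. For reference, the standard binary RBM formulation is given below in the notation wij, ai, bj with the logistic function σ; this notation is our own and may differ from the original equations.

$$
\begin{aligned}
E(\mathbf{v}, \mathbf{h} \mid \theta) &= -\sum_{i=1}^{M} a_i v_i - \sum_{j=1}^{N} b_j h_j - \sum_{i=1}^{M} \sum_{j=1}^{N} v_i w_{ij} h_j \\
P(\mathbf{v}, \mathbf{h} \mid \theta) &= \frac{e^{-E(\mathbf{v}, \mathbf{h} \mid \theta)}}{Z(\theta)}, \qquad Z(\theta) = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h} \mid \theta)} \\
P(h_j = 1 \mid \mathbf{v}) &= \sigma\Big(b_j + \sum_{i=1}^{M} v_i w_{ij}\Big), \qquad
P(v_i = 1 \mid \mathbf{h}) = \sigma\Big(a_i + \sum_{j=1}^{N} w_{ij} h_j\Big) \\
\sigma(x) &= \frac{1}{1 + e^{-x}}
\end{aligned}
$$

Because there are no connections within a layer, the hidden units are conditionally independent given v (and vice versa), which is what allows each layer to be sampled in one step during training.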

Figure 14 shows the DBN structure. During the training process, the previous layer’s RBM is fully trained before the current layer’s RBM is trained, repeating until the final layer where a Softmax classifier is added to form a complete DBN.

Figure 14.

The structure of DBN.

In this study, the interpolated cubic spline intersection lengths g’i were used as input and the corresponding tree species as the target output to train the DBN for profile-based tree species identification. Based on experiments, a DBN with three stacked RBMs was used, as it showed the fastest convergence and the highest accuracy among the configurations tested. The whole tree species identification process is shown in Figure 15.
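The NumPy sketch below illustrates the structure described above: three Bernoulli RBMs pretrained greedily with one-step contrastive divergence (CD-1), followed by a softmax layer. It is a simplified illustration under our own assumptions, not the authors' configuration: layer sizes, learning rates, and epoch counts are hypothetical, inputs are assumed to be scaled to [0, 1], and the softmax layer is trained on frozen RBM features without the end-to-end fine-tuning a full DBN would normally include.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Bernoulli RBM trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible, n_hidden):
        self.w = rng.normal(0.0, 0.01, (n_visible, n_hidden))
        self.a = np.zeros(n_visible)  # visible biases
        self.b = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.w + self.b)

    def fit(self, v0, epochs=50, lr=0.1):
        n = len(v0)
        for _ in range(epochs):
            ph0 = self.hidden_probs(v0)                       # positive phase
            h0 = (rng.random(ph0.shape) < ph0).astype(float)  # sample hidden states
            pv1 = sigmoid(h0 @ self.w.T + self.a)             # reconstruction
            ph1 = self.hidden_probs(pv1)                      # negative phase
            self.w += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
            self.a += lr * (v0 - pv1).mean(axis=0)
            self.b += lr * (ph0 - ph1).mean(axis=0)
        return self


def pretrain_dbn(x, layer_sizes):
    """Greedy layer-wise pretraining: each RBM's hidden output feeds the next."""
    rbms, v = [], x
    for size in layer_sizes:
        rbm = RBM(v.shape[1], size).fit(v)
        rbms.append(rbm)
        v = rbm.hidden_probs(v)
    return rbms, v


def train_softmax(features, labels, n_classes, epochs=500, lr=0.5):
    """Multinomial logistic regression (softmax) on the top-layer features."""
    w = np.zeros((features.shape[1], n_classes))
    bias = np.zeros(n_classes)
    y = np.eye(n_classes)[labels]  # one-hot targets
    for _ in range(epochs):
        z = features @ w + bias
        p = np.exp(z - z.max(axis=1, keepdims=True))
        p /= p.sum(axis=1, keepdims=True)
        w -= lr * features.T @ (p - y) / len(labels)
        bias -= lr * (p - y).mean(axis=0)
    return w, bias


def predict(rbms, w, bias, x):
    for rbm in rbms:
        x = rbm.hidden_probs(x)
    return np.argmax(x @ w + bias, axis=1)
```

In this setting, x would hold one resampled width vector g’i per profile (e.g., divided by the largest width so that values lie in [0, 1]) and labels the integer-coded species; `pretrain_dbn(x, [h1, h2, h3])` builds the three RBM layers.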

Figure 15.

Tree species information extraction and checking process.

2.2.5. Forestry Parameter Extraction

The segmented point cloud reflects the structure of individual tree crowns, and some forestry parameters can be read from it directly. For example, the normalized elevation of the treetop point can be used as the tree height. The east–west crown width is the maximum extent of an individual tree's crown points in the east–west direction, and the north–south crown width is obtained similarly. Parameters that cannot be obtained directly can be calculated indirectly through estimation equations, using the known tree species, tree height, crown width, and other information extracted from the segmented point cloud as inputs. The methods for obtaining forestry parameters and the estimation equation factors are shown in Table 3.
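The direct parameters can be sketched in a few lines, assuming a height-normalized crown point set in a projected coordinate system where x points east, y points north, and z is height above ground, all in meters (assumptions about the data layout, not stated in the text):

```python
import numpy as np


def direct_parameters(crown_xyz):
    """Tree height and crown widths from one segmented, normalized crown.

    crown_xyz: (k, 3) array; x = easting, y = northing, z = height above ground.
    """
    p = np.asarray(crown_xyz, dtype=float)
    height = p[:, 2].max()                    # normalized treetop elevation
    ew_width = p[:, 0].max() - p[:, 0].min()  # east-west extent of the crown
    ns_width = p[:, 1].max() - p[:, 1].min()  # north-south extent of the crown
    return height, ew_width, ns_width
```

Because the widths are extents of the segmented crown, any part of the crown assigned to a neighbor during segmentation directly shrinks the estimate.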

Table 3.

Forestry parameters available from LiDAR data.

DBH refers to the diameter of a tree trunk at a height of 1.3 m above the ground and can be estimated by inputting tree height into a model. Forest biomass is the energy foundation and material source of the forest ecosystem; it is divided into aboveground biomass (AGB) and belowground biomass (BGB) and is usually expressed as dry matter or energy accumulated per unit area or per unit time. AGB can be estimated from tree height and DBH. The stock volume is the total wood volume of a living tree, expressed in cubic meters, and can be estimated from DBH. Because the estimation equations depend closely on tree species, models for different species are not interchangeable. After tree species identification and updating, the species information can be considered accurate and used to select the estimation models. The estimation models used in this study were provided by the local forestry bureau.

The estimation factor of the DBH estimation model is the tree height (H, in m), and the output is the DBH (DBH, in cm). The specific equations and application conditions are shown in Table 4.

Table 4.

DBH estimation models.

The factors of the AGB models are tree height (H, in m) and DBH (DBH, in cm), and the output is AGB (AGB, in t/ha). The specific equations are shown in Table 5.

Table 5.

AGB estimation models.

The same type of equation was used for the calculation of the stock volume of different tree species.

where V: stock volume, in m³;

D: DBH, in cm.

The rest of the parameters are shown in the Table 6.

Table 6.

Parameters of stock volume estimation models.

To quantitatively describe the estimation results of each parameter, the coefficient of determination (R²), the mean absolute error (MAE), and the root mean square error (RMSE) were chosen.

where yi: the measured value (1 ≤ i ≤ n, where n is the number of measured values);

ŷi: the estimated value;

ȳ: the average of the measured values.
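The equations for these metrics are not reproduced here. A sketch using the standard definitions, R² = 1 − SS_res/SS_tot, MAE = mean |yi − ŷi|, and RMSE = sqrt(mean (yi − ŷi)²), which we assume match the paper's. Note that if R² is instead taken from a linear regression of measured on estimated values (as in Figure 18), it equals the squared Pearson correlation and can differ from 1 − SS_res/SS_tot when the estimates are biased.

```python
import math


def accuracy_metrics(measured, estimated):
    """R², MAE and RMSE of estimated values against measured values."""
    n = len(measured)
    mean_y = sum(measured) / n
    ss_res = sum((y - f) ** 2 for y, f in zip(measured, estimated))
    ss_tot = sum((y - mean_y) ** 2 for y in measured)
    r2 = 1 - ss_res / ss_tot
    mae = sum(abs(y - f) for y, f in zip(measured, estimated)) / n
    rmse = math.sqrt(ss_res / n)
    return r2, mae, rmse
```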

3. Results

Eight sample plots containing a total of 318 trees were selected in the Northeast Tiger and Leopard National Park to test the plot verification and update method proposed in this paper. The results derived from the LiDAR data are evaluated against field-verified data.

3.1. Ground Point Extraction

This study uses the PTD algorithm to separate ground and nonground points in the point cloud data. The eight plots used in this study cover diverse terrain conditions, including flat areas and slopes.

The ground point separation accuracy of the eight study plots is shown in Table 7.

Table 7.

The accuracy of the separation in sample plots.

As shown in Table 7, the separation accuracy for each plot is above 95%, indicating that the PTD algorithm performs well across the terrain conditions of these plots. Given this accuracy, the separated ground points can be used to generate a DEM for normalizing the point cloud data.
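Normalization subtracts the ground elevation beneath each point from its elevation. The sketch below uses the nearest ground point in the horizontal plane as a simple stand-in for the DEM; this is our simplification, as the paper's DEM interpolation method is not described in this section, and the brute-force search only suits small examples.

```python
import numpy as np


def normalize_heights(points, ground):
    """Height above ground of each point (nearest-ground-point stand-in for a DEM).

    points: (k, 3) array of x, y, z; ground: (g, 3) array of classified ground points.
    """
    pts = np.asarray(points, dtype=float)
    gnd = np.asarray(ground, dtype=float)
    # Squared horizontal distance from every point to every ground point.
    d2 = ((pts[:, None, :2] - gnd[None, :, :2]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    return pts[:, 2] - gnd[nearest, 2]
```

On sloping plots this step matters: without it, a tree on the upslope side would appear taller than an identical tree downslope.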

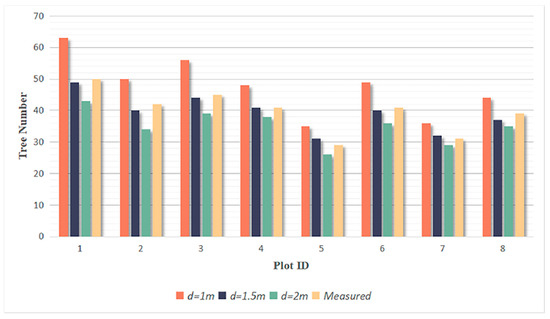

3.2. Tree Segmentation

The grid size d is set according to the size of the tree crowns in the sample plots, whose diameters are mostly 2–4 m. The value of d should be close to the crown radius so that as many tree positions as possible are identified. In the experiment, d was set to 1 m, 1.5 m, and 2 m to segment the eight sample plots. The numbers of trees segmented in the eight plots are shown in Figure 16, and the detailed metrics are given in Tables 8 and 9.
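How d is used to produce the initial treetop candidates is defined outside this section. Assuming (our assumption) that the highest point in each d × d grid cell is taken as a candidate, the sketch below shows why a smaller d yields more candidates, and hence more oversegmentation to clean up, while a larger d can merge neighboring trees into one candidate.

```python
import numpy as np


def grid_treetops(points, d):
    """Highest point in each d x d grid cell, as candidate treetops (assumed rule)."""
    pts = np.asarray(points, dtype=float)
    cells = np.floor(pts[:, :2] / d).astype(int)
    best = {}
    for idx, key in enumerate(map(tuple, cells)):
        if key not in best or pts[idx, 2] > pts[best[key], 2]:
            best[key] = idx
    return pts[sorted(best.values())]


# Hypothetical points: with d = 1.5 m three candidates remain;
# with d = 2 m the tree at (1.6, 0.1) is absorbed by its taller neighbor.
pts = [(0.2, 0.2, 10.0), (0.8, 0.9, 15.0), (1.6, 0.1, 12.0), (3.1, 3.1, 5.0)]
```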

Figure 16.

The number of trees segmented from each sample plot.

Table 8.

The TP, FN, FP, and TN of different grid sizes d.

Table 9.

The recall, precision, F-score, and balanced accuracy of different grid sizes d.

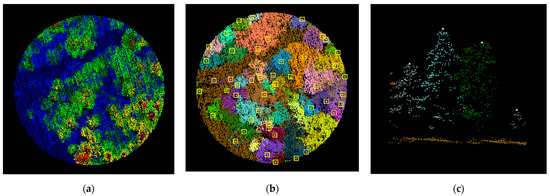

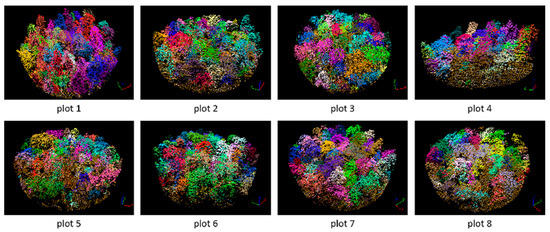

Among the three grid sizes tested, d = 1.5 m gives the lowest total number of undersegmented and oversegmented trees. The number of correctly segmented trees in each plot is close to the actual number, with 308 correctly segmented trees in total against 318 actual trees. The recall, precision, F-score, and balanced accuracy (BA) are given in Table 9: with d = 1.5 m, the F-scores of all eight plots exceed 0.95, and the overall F-score (0.976) and overall BA (0.933) are the highest of the three grid sizes, indicating that the rotational profile algorithm achieves good segmentation results. Therefore, the correctly segmented trees obtained with d = 1.5 m were used for subsequent species identification and parameter extraction. The segmentation results of the eight plots are shown in Figure 17.

Figure 17.

Segmented point clouds of the eight plots. Segmented individual trees are shown in different colors.

3.3. Plot Matching and Tree Species Identification

The main purpose of plot matching is to resolve positional differences between the measured data and the point clouds. To this end, this paper proposes the concept of matching degree and uses dominant trees for plot matching. Because real stands are complex, complete consistency between the two datasets is difficult to achieve; therefore, the positional deviation of each dominant tree was recorded and the deviation values were calculated, as shown in Table 10. Plot matching is considered complete when the matching degrees of all eight plots are greater than 90% and the deviation values are less than the threshold of 8 m.

Table 10.

Matching degrees and position deviation values of eight plots.

In this study, the three-dimensional crown point clouds are summarized as feature vectors, i.e., one-dimensional arrays of crown widths (intersection lengths) at sampled heights. This representation preserves detailed shape information and uses the spatial structure to summarize the geometric morphology of each tree, enabling tree species identification, classification, and updating. The main tree species in the plots include pine, oak, birch, elm, poplar, maple, and other species. Several typical profiles of each species are selected as training samples to capture its morphological structure and parameters. Table 11 lists the number of training samples; when a species has too few sample trees, more samples can be obtained by selecting profiles in multiple directions.

Table 11.

The number of training samples.

The trained model is then applied for classification. To evaluate classification performance, the correct rate is defined as the ratio of the number of correctly classified trees to the total number of classified trees. The proposed method is used to update the tree species information of the trees in the eight plots, and the results are shown in Table 12. “Other” in the table refers to the miscellaneous trees in the plots, which are very diverse, sparsely distributed, and generally small. The overall correct rate reaches 90.9%, providing a reliable tree species classification method and model for forest surveys.

Table 12.

Accuracy of the tree identification.

The details are listed in the form of a confusion matrix in Table 13, which can more accurately indicate the error correction information for each tree species.

Table 13.

Confusion matrix of tree species misinformation correction.

Tables 12 and 13 show that most tree species are corrected with 100% accuracy; the few misclassifications are due to varying degrees of crown damage or to inaccurate profile information for trees that were close to their neighbors during segmentation. Overall, correcting tree species information with LiDAR data is a reliable and accurate method for most normally growing tree species.
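The correct rate and the confusion matrix of Tables 12 and 13 can be computed as sketched below. The species labels in the usage line are hypothetical; by our convention, rows are the reference species and columns the predicted species.

```python
def correct_rate(true_species, predicted_species):
    """Correctly classified trees divided by all classified trees."""
    correct = sum(t == p for t, p in zip(true_species, predicted_species))
    return correct / len(true_species)


def confusion_matrix(true_species, predicted_species, classes):
    """Counts per (reference, predicted) species pair."""
    index = {c: k for k, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_species, predicted_species):
        m[index[t]][index[p]] += 1  # row: reference species, column: predicted
    return m


# Hypothetical example: one elm misread as maple gives a correct rate of 0.75.
rate = correct_rate(["pine", "oak", "elm", "maple"], ["pine", "oak", "maple", "maple"])
```

Off-diagonal cells such as (elm, maple) show which species pairs are confused, which is the kind of error discussed for elm and maple in Section 4.2.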

3.4. Forestry Parameters Extraction

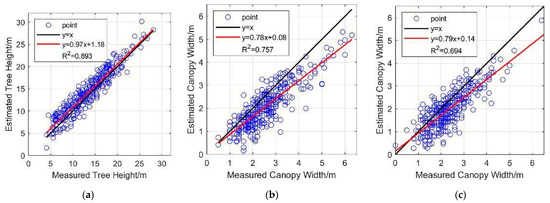

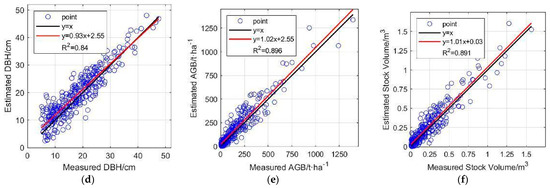

After tree species identification and updating, the segmented point clouds of the major tree species are used to estimate forestry parameters. Linear regressions between the measured and estimated parameters of 265 trees (excluding “Other”) are shown in Figure 18, and the accuracy metrics of each parameter are listed in Table 14.

Figure 18.

Linear regression plots of six parameters. Red lines are linear regression lines. (a) Tree height; (b) East–west crown width; (c) North–south crown width; (d) DBH; (e) AGB; (f) Stock volume.

Table 14.

Estimation accuracy of forestry parameters.

The estimates of four forestry parameters, namely tree height, DBH, AGB, and stock volume, show strong consistency with the measured values, with R² exceeding 0.8. Tree height is more consistent in the 20–30 m range; in the 5–20 m range, the estimated heights are generally slightly higher than the measured heights, and the same pattern appears in the DBH estimates. Crown width is estimated less accurately (R² = 0.757 for east–west and R² = 0.694 for north–south), and the estimates are generally smaller than the measured values. Overall, these results suggest that LiDAR data can become a powerful tool for future forestry surveys.

4. Discussion

4.1. Tree Segmentation

Tree segmentation results depend mainly on point cloud density and forest growth conditions. Some small or closely spaced trees will inevitably be missed, so that several trees are recognized as one after segmentation. Conversely, when a tree is very large or its crown shape is incomplete, it is easily split into several trees. Starting from the selected initial treetop points, the method in this paper reduces undersegmentation and oversegmentation of tree crowns by examining profiles in multiple directions. In addition, trees located within the crown range of a taller tree are identified as redundant parts of that tree and merged, further improving segmentation accuracy.

The segmentation results are closely related to the grid size d. With d = 1 m, the largest number of trees is segmented, but the computational cost increases. The redundant initial treetop points also increase, producing 65 oversegmented trees that would require considerable effort to remove in practice; this also leaves correctly segmented crowns incomplete, which affects subsequent species identification and parameter extraction. Conversely, if d is increased to 2 m, some short trees are missed, and trees closer than 2 m to one another are merged into one tree. The optimal value depends on point cloud density, crown size, and crown shape [39].

The watershed algorithm (WA) operates on the Canopy Height Model (CHM), which is generated by projecting and interpolating the point cloud into a two-dimensional raster; as a result, its segmentation performance is poor [62,63]. To address the limitations of the CHM, Ma et al. [64] developed a Vegetation Point Cloud Density Model (VPCDM) by analyzing the spatial density distribution of classified vegetation points in planar projection; applied to three study sites, it yielded F-scores of 0.97, 0.91, and 0.80, respectively. However, VPCDM is still a two-dimensional model and is constrained by its resolution. Qin et al. [46] proposed a watershed–spectral–texture-controlled normalized cut (WST-Ncut) algorithm; however, vegetation spectral and textural information often vary over time. Liu et al. [65] developed a multiround comparative shortest-path algorithm (M-CSP) to segment trees and shrubs in urban environments. Lu et al. [33] proposed a bottom-up approach to segment trees from LiDAR data and achieved F-scores exceeding 0.9 in leaf-off forests, but the bottom-up algorithm is susceptible to understory vegetation, such as shrubs, and requires additional removal steps. In addition, many three-dimensional segmentation algorithms require multiple preset thresholds to segment trees of different sizes, whereas the rotating profiles analysis algorithm (RPAA) only requires the grid size to be determined to segment trees of different sizes within a plot. Furthermore, the complete profiles obtained during segmentation can be reused in subsequent steps. The results of each method are shown in Table 15.

Table 15.

Comparison of different segmentation methods.

4.2. Tree Species Identification

Unlike plantation forests, natural forests in Northeast China show substantial overlap between tree crowns. As a result, the crown point clouds obtained by tree segmentation are incomplete and cannot accurately reflect tree structure. This is an important factor limiting the application of LiDAR data in natural forest areas.

Zou et al. [66] applied a DBN to ground-based LiDAR point clouds, using tree projection images as low-level features, and validated the feasibility of DBN for small-sample tree species classification, achieving accuracies of 93.1% and 95.6% on two datasets. In this study, tree species features were abstracted further by fitting the crown to basic shapes and representing it as an array of crown widths. This reduces the dimensionality of the input (from three dimensions to one) while preserving contour features, and reduces the influence of random errors caused by irregularly scattered points.

The classification accuracy for the seven tree species in this study reached 90.9%. Zhong et al. [67] employed SVM/RF classifiers based on fused data, which performed well for general macroclasses but were less suitable for individual tree species, achieving a classification accuracy of 76.5% for natural forests in Northeast China. Onishi et al. [68] used a CNN to combine LiDAR data and RGB imagery, achieving an accuracy of over 90%, but their results were affected by seasonal variation. Liu et al. [69] proposed a point-based deep neural network, LayerNet, that classifies three-dimensional point clouds with an accuracy of 93.2%, but it was applied only to birch and larch.

The method used in this paper relies entirely on the geometric shape of the tree crown and does not involve any spectral information. Therefore, if the tree crown of a tree is damaged naturally or artificially, or if the tree crowns are too close to one another, it will affect the reliability of the geometric shape of the section. This can cause the tree species classification model to be unable to identify incorrect tree species information or to classify tree species information incorrectly. For example, in this experiment the elm and maple both show a combination of triangular and rectangular shapes in their sections, making them more prone to misclassification. However, as the spectral characteristics of the leaves and flowering periods of elm and maple are quite distinct, in future studies, we will try to integrate spectral information based on the distribution characteristics of tree species in the study area to achieve high precision tree species classification.

4.3. Forestry Parameters Extraction

Once tree species are known, tree height, crown width, DBH, AGB, and stock volume can be estimated, and the estimated values show strong consistency with the measured values.

The accuracy of forestry parameter estimation depends largely on the tree segmentation result; for example, crown width is directly determined by the size of the segmented crown. The study area is a natural forest, where crown overlap is more pronounced than in plantation forests, so the segmented crowns are smaller than the actual crowns and the estimated crown widths are smaller than the measured values. This effect is more obvious for taller trees. Therefore, although both are direct parameters, crown width is estimated less accurately than tree height (R² of 0.893 for tree height versus 0.757 for east–west and 0.694 for north–south crown width).

Indirect forest parameters such as AGB use tree height and DBH as independent variables in their estimation equations, and DBH estimation itself uses tree height as input. The accuracy of tree height is therefore particularly important for the indirect parameters. Tree height is more consistent in the 20–30 m range, whereas in the 5–20 m range the estimated heights are on average slightly higher than the measured heights, which we attribute to the uneven height distribution and overlapping of trees in the natural forest. Correspondingly, for DBH, which uses tree height as its only input, the estimates for larger trees agree better with the measured values (a larger diameter usually corresponds to a greater height), and the estimates for smaller trees are slightly higher than the measured values. AGB and stock volume are obtained by substituting tree height and DBH (for AGB) or DBH alone (for stock volume) into the estimation equations, and their accuracy (AGB R² = 0.896, stock volume R² = 0.891) is very close to that of tree height and DBH (R² = 0.893 and 0.840, respectively).

5. Conclusions

In this study, based on LiDAR point cloud data and measured data from the Northeast Tiger and Leopard National Park, we verified and updated sample plot information through a complete workflow from data preprocessing to forestry parameter estimation, and draw the following conclusions:

(1) The PTD algorithm separated ground and nonground points with an accuracy above 95%, providing a sound basis for the subsequent steps;

(2) The rotating profile algorithm was applied to tree segmentation, and oversegmentation and undersegmentation were lowest with grid size d = 1.5 m among the sizes tested. Under this setting, the F-scores of the eight sample plots exceed 0.94, the overall F-score is 0.975, and the overall BA is 0.933;

(3) Using tree species information from the plot samples, the correspondence between tree species and segmented crown geometry was established, enabling LiDAR-based tree species recognition and information correction with an overall correct rate of 90.9%;

(4) Based on the updated tree species, tree height, east–west crown width, north–south crown width, DBH, AGB, and stock volume were extracted for the sample plots. The R² values of these estimated parameters are 0.893, 0.757, 0.694, 0.840, 0.896, and 0.891, respectively, indicating strong correlation with the measured values.

In the future, combined use of LiDAR point clouds and image data, guided by the species composition and distribution of the forest, is expected to enable more accurate estimation of forestry parameters. Building tree species morphology databases, spectral databases, regional tree species classification model databases, and similar resources will help establish a comprehensive remote sensing evaluation system and make it an important means for future forestry surveys.

Author Contributions

C.Y. conceived and designed the experiments. C.Y. and J.W. performed the experiments. J.X. and C.Q. analyzed the data. J.W. and H.M. wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded and supported by the National Key R&D Program of China (grant number 2018YFB0504500), the National Natural Science Foundation of China (grant number 41101417), and the National High Resolution Earth Observations Foundation (grant number 11-H37B02-9001-19/22).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest regarding the publication of this paper. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Eckert, S. Improved Forest Biomass and Carbon Estimations Using Texture Measures from WorldView-2 Satellite Data. Remote Sens. 2012, 4, 810–829.

- He, X.; Ren, C.; Chen, L.; Wang, Z.; Zheng, H. The Progress of Forest Ecosystems Monitoring with Remote Sensing Techniques. Sci. Geogr. Sin. 2018, 38, 97–1011.

- Malhi, Y.; Baldocchi, D.D.; Jarvis, P.G. The carbon balance of tropical, temperate and boreal forests. Plant Cell Environ. 1999, 22, 715–740.

- Dong, X.; Li, J.; Chen, H.; Zhao, L.; Zhang, L.; Xing, S. Extraction of individual tree information based on remote sensing images from an Unmanned Aerial Vehicle. J. Remote Sens. 2019, 23, 1269–1280.

- Wang, L.; Yin, H. An Application of New Portable Tree Altimeter DZH-30 in Forest Resources Inventory. For. Resour. Manag. 2019, 06, 132–136.

- Liu, F.; Tan, C.; Zhang, G.; Liu, J. Estimation of Forest Parameter and Biomass for Individual Pine Trees Using Airborne LiDAR. Trans. Chin. Soc. Agric. Mach. 2013, 44, 219–224, 242.

- Ouma, Y.O. Optimization of Second-Order Grey-Level Texture in High-Resolution Imagery for Statistical Estimation of Above-Ground Biomass. J. Environ. Inform. 2006, 8, 70–85.

- Marshall, M.; Thenkabail, P. Advantage of hyperspectral EO-1 Hyperion over multispectral IKONOS, GeoEye-1, WorldView-2, Landsat ETM+, and MODIS vegetation indices in crop biomass estimation. ISPRS J. Photogramm. Remote Sens. 2015, 108, 205–218.

- Mohammadi, J.; Shataee, S.; Babanezhad, M. Estimation of forest stand volume, tree density and biodiversity using Landsat ETM+ data, comparison of linear and regression tree analyses. Procedia Environ. Sci. 2011, 7, 299–304.

- Franklin, S.E.; Hall, R.J.; Smith, L.; Gerylo, G.R. Discrimination of conifer height, age and crown closure classes using Landsat-5 TM imagery in the Canadian Northwest Territories. Int. J. Remote Sens. 2003, 24, 1823–1834.

- Brown, S.; Pearson, T.; Slaymaker, D.; Ambagis, S.; Moore, N.; Novelo, D.; Sabido, W. Creating a virtual tropical forest from three-dimensional aerial imagery to estimate carbon stocks. Ecol. Appl. 2005, 15, 1083–1095.

- Cloude, S.R.; Papathanassiou, K.P. Forest Vertical Structure Estimation using Coherence Tomography. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 6–11 July 2008.

- Blomberg, E.; Ferro-Famil, L.; Soja, M.J.; Ulander, L.M.H.; Tebaldini, S. Forest Biomass Retrieval From L-Band SAR Using Tomographic Ground Backscatter Removal. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1030–1034.

- Matasci, G.; Hermosilla, T.; Wulder, M.A.; White, J.C.; Coops, N.C.; Hobart, G.W.; Zald, H.S.J. Large-area mapping of Canadian boreal forest cover, height, biomass and other structural attributes using Landsat composites and lidar plots. Remote Sens. Environ. 2018, 209, 90–106.

- Luckman, A.; Baker, J.; Honzák, M.; Lucas, R. Tropical Forest Biomass Density Estimation Using JERS-1 SAR: Seasonal Variation, Confidence Limits, and Application to Image Mosaics. Remote Sens. Environ. 1998, 63, 126–139.

- Means, J. Use of Large-Footprint Scanning Airborne Lidar to Estimate Forest Stand Characteristics in the Western Cascades of Oregon. Remote Sens. Environ. 1999, 67, 298–308.

- White, J.C.; Wulder, M.A.; Varhola, A.; Vastaranta, M.; Coops, N.C.; Cook, B.D.; Pitt, D.; Woods, M. A best practices guide for generating forest inventory attributes from airborne laser scanning data using an area-based approach. For. Chron. 2013, 89, 722–723.

- Lefsky, M.A.; Cohen, W.B.; Acker, S.A.; Parker, G.G.; Spies, T.A.; Harding, D. Lidar Remote Sensing of the Canopy Structure and Biophysical Properties of Douglas-Fir Western Hemlock Forests. Remote Sens. Environ. 1999, 70, 339–361.

- Temesgen, H.; Zhang, C.H.; Zhao, X.H. Modelling tree height–diameter relationships in multi-species and multi-layered forests: A large observational study from Northeast China. For. Ecol. Manag. 2014, 316, 78–89.

- Schmidt, M.; Kiviste, A.; von Gadow, K. A spatially explicit height–diameter model for Scots pine in Estonia. Eur. J. For. Res. 2010, 130, 303–315.

- Zheng, J.; Zang, H.; Yin, S.; Sun, N.; Zhu, P.; Han, Y.; Kang, H.; Liu, C. Modeling height-diameter relationship for artificial monoculture Metasequoia glyptostroboides in sub-tropic coastal megacity Shanghai, China. Urban For. Urban Green. 2018, 34, 226–232.

- Ng’andwe, P.; Chungu, D.; Yambayamba, A.M.; Chilambwe, A. Modeling the height-diameter relationship of planted Pinus kesiya in Zambia. For. Ecol. Manag. 2019, 447, 1–11.

- Hyyppä, J.; Yu, X.; Hyyppä, H.; Vastaranta, M.; Holopainen, M.; Kukko, A.; Kaartinen, H.; Jaakkola, A.; Vaaja, M.; Koskinen, J.; et al. Advances in Forest Inventory Using Airborne Laser Scanning. Remote Sens. 2012, 4, 1190–1207.

- Kaartinen, H.; Hyyppä, J.; Yu, X.; Vastaranta, M.; Hyyppä, H.; Kukko, A.; Holopainen, M.; Heipke, C.; Hirschmugl, M.; Morsdorf, F.; et al. An International Comparison of Individual Tree Detection and Extraction Using Airborne Laser Scanning. Remote Sens. 2012, 4, 950–974.

- Kampa, K.; Slatton, K.C. An adaptive multiscale filter for segmenting vegetation in ALSM data. In Proceedings of the IGARSS 2004. 2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004.

- Lee, H.; Slatton, K.C.; Roth, B.E.; Cropper, W.P. Adaptive clustering of airborne LiDAR data to segment individual tree crowns in managed pine forests. Int. J. Remote Sens. 2010, 31, 117–139.

- Mei, C.; Durrieu, S. Tree crown delineation from digital elevation models and high resolution imagery. Proc. IAPRS 2004, 36, 218–223.

- Chen, Q.; Baldocchi, D.; Gong, P.; Kelly, M. Isolating Individual Trees in a Savanna Woodland Using Small Footprint Lidar Data. Photogramm. Eng. Remote Sens. 2006, 72, 923–932.

- Tang, F.; Zhang, X.; Liu, J. Segmentation of tree crown model with complex structure from airborne LIDAR data. In Geoinformatics 2007: Remotely Sensed Data and Information; SPIE: Bellingham, WA, USA, 2007.

- Leckie, D.; Gougeon, F.; Hill, D.; Quinn, R.; Armstrong, L.; Shreenan, R. Combined high-density lidar and multispectral imagery for individual tree crown analysis. Can. J. Remote Sens. 2003, 29, 633–649.

- Liu, T.; Im, J.; Quackenbush, L.J. A novel transferable individual tree crown delineation model based on Fishing Net Dragging and boundary classification. ISPRS J. Photogramm. Remote Sens. 2015, 110, 34–47.

- Reitberger, J.; Schnörr, C.; Krzystek, P.; Stilla, U. 3D segmentation of single trees exploiting full waveform LIDAR data. ISPRS J. Photogramm. Remote Sens. 2009, 64, 561–574.

- Lu, X.; Guo, Q.; Li, W.; Flanagan, J. A bottom-up approach to segment individual deciduous trees using leaf-off lidar point cloud data. ISPRS J. Photogramm. Remote Sens. 2014, 94, 1–12.

- Wang, Y.; Weinacker, H.; Koch, B. A Lidar Point Cloud Based Procedure for Vertical Canopy Structure Analysis And 3D Single Tree Modelling in Forest. Sensors 2008, 8, 3938–3951.

- Morsdorf, F.; Meier, E.H.; Allgöwer, B.; Nüesch, D. Clustering in airborne laser scanning raw data for segmentation of single trees. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2003, 34, W13.

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A New Method for Segmenting Individual Trees from the Lidar Point Cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

- Chen, X.; Jiang, K.; Zhu, Y.; Wang, X.; Yun, T. Individual Tree Crown Segmentation Directly from UAV-Borne LiDAR Data Using the PointNet of Deep Learning. Forests 2021, 12, 131.

- Yan, W.; Guan, H.; Cao, L.; Yu, Y.; Li, C.; Lu, J. A Self-Adaptive Mean Shift Tree-Segmentation Method Using UAV LiDAR Data. Remote Sens. 2020, 12, 515.

- Qian, C.; Yao, C.; Ma, H.; Xu, J.; Wang, J. Tree Species Classification Using Airborne LiDAR Data Based on Individual Tree Segmentation and Shape Fitting. Remote Sens. 2023, 15, 406.

- Holmgren, J.; Persson, Å. Identifying species of individual trees using airborne laser scanner. Remote Sens. Environ. 2004, 90, 415–423.

- Othmani, A.; Lew Yan Voon, L.F.C.; Stolz, C.; Piboule, A. Single tree species classification from Terrestrial Laser Scanning data for forest inventory. Pattern Recognit. Lett. 2013, 34, 2144–2150.

- Lin, Y.; Hyyppä, J. A comprehensive but efficient framework of proposing and validating feature parameters from airborne LiDAR data for tree species classification. Int. J. Appl. Earth Obs. Geoinf. 2016, 46, 45–55.

- Kim, S.; McGaughey, R.J.; Andersen, H.-E.; Schreuder, G. Tree species differentiation using intensity data derived from leaf-on and leaf-off airborne laser scanner data. Remote Sens. Environ. 2009, 113, 1575–1586.

- Ørka, H.O.; Næsset, E.; Bollandsås, O.M. Classifying species of individual trees by intensity and structure features derived from airborne laser scanner data. Remote Sens. Environ. 2009, 113, 1163–1174.

- Kashani, A.; Olsen, M.; Parrish, C.; Wilson, N. A Review of LIDAR Radiometric Processing: From Ad Hoc Intensity Correction to Rigorous Radiometric Calibration. Sensors 2015, 15, 28099–28128.

- Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual tree segmentation and tree species classification in subtropical broadleaf forests using UAV-based LiDAR, hyperspectral, and ultrahigh-resolution RGB data. Remote Sens. Environ. 2022, 280, 113143.

- Solodukhin, V.I.; Zukov, A.J.; Mazugin, I.N. Laser aerial profiling of a forest. Lew NIILKh Leningr. Lesn. Khozyaistvo 1977, 10, 53–58.

- Shrestha, R.; Wynne, R.H. Estimating Biophysical Parameters of Individual Trees in an Urban Environment Using Small Footprint Discrete-Return Imaging Lidar. Remote Sens. 2012, 4, 484–508.

- Bortolot, Z.J.; Wynne, R.H. Estimating forest biomass using small footprint LiDAR data: An individual tree-based approach that incorporates training data. ISPRS J. Photogramm. Remote Sens. 2005, 59, 342–360.

- Wang, D.; Xin, X.; Shao, Q.; Brolly, M.; Zhu, Z.; Chen, J. Modeling Aboveground Biomass in Hulunber Grassland Ecosystem by Using Unmanned Aerial Vehicle Discrete Lidar. Sensors 2017, 17, 180.

- García, M.; Riaño, D.; Chuvieco, E.; Danson, F.M. Estimating biomass carbon stocks for a Mediterranean forest in central Spain using LiDAR height and intensity data. Remote Sens. Environ. 2010, 114, 816–830.

- Donoghue, D.; Watt, P.; Cox, N.; Wilson, J. Remote sensing of species mixtures in conifer plantations using LiDAR height and intensity data. Remote Sens. Environ. 2007, 110, 509–522.

- Jin, J.; Yue, C.; Li, C.; Gu, L.; Luo, H.; Zhu, B. Estimation on Forest Volume Based on ALS Data and Dummy Variable Technology. For. Resour. Manag. 2021, 01, 77–85.

- Pang, Y.; Li, Z.-Y. Inversion of biomass components of the temperate forest using airborne Lidar technology in Xiaoxing’an Mountains, Northeastern of China. Chin. J. Plant Ecol. 2013, 36, 1095–1105.

- Jia-Jia, J.; Xian-Quan, W.; Fa-Jie, D. An Effective Frequency-Spatial Filter Method to Restrain the Interferences for Active Sensors Gain and Phase Errors Calibration. IEEE Sens. J. 2016, 16, 7713–7719.

- Axelsson, P. Processing of laser scanner data—Algorithms and applications. ISPRS J. Photogramm. Remote Sens. 1999, 54, 138–147.

- Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation. Adv. Inf. Retr. 2005, 3408, 345–359.

- Brodersen, K.H.; Ong, C.S.; Stephan, K.E.; Buhmann, J.M. The Balanced Accuracy and Its Posterior Distribution. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010.

- Goodenough, D.G.; Chen, H.; Dyk, A.; Richardson, A.; Hobart, G. Data Fusion Study Between Polarimetric SAR, Hyperspectral and Lidar Data for Forest Information. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 6–11 July 2008.

- Alonzo, M.; Bookhagen, B.; Roberts, D.A. Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens. Environ. 2014, 148, 70–83.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A Fast Learning Algorithm for Deep Belief Nets. Neural Comput. 2006, 18, 1527–1554.

- Yang, Q.; Su, Y.; Jin, S.; Kelly, M.; Hu, T.; Ma, Q.; Li, Y.; Song, S.; Zhang, J.; Xu, G.; et al. The Influence of Vegetation Characteristics on Individual Tree Segmentation Methods with Airborne LiDAR Data. Remote Sens. 2019, 11, 2880.

- Ma, K.; Chen, Z.; Fu, L.; Tian, W.; Jiang, F.; Yi, J.; Du, Z.; Sun, H. Performance and Sensitivity of Individual Tree Segmentation Methods for UAV-LiDAR in Multiple Forest Types. Remote Sens. 2022, 14, 298.

- Ma, K.; Xiong, Y.; Jiang, F.; Chen, S.; Sun, H. A Novel Vegetation Point Cloud Density Tree-Segmentation Model for Overlapping Crowns Using UAV LiDAR. Remote Sens. 2021, 13, 1442.

- Liu, Y.; Zhang, X.; Ma, Z.; Dong, N.; Xie, D.; Li, R.; Johnston, D.M.; Gao, Y.G.; Li, Y.; Lei, Y. Developing a more accurate method for individual plant segmentation of urban tree and shrub communities using LiDAR technology. Landsc. Res. 2022, 48, 313–330.