Land Use and Land Cover Mapping with VHR and Multi-Temporal Sentinel-2 Imagery

Abstract

1. Introduction

2. Materials and Methods

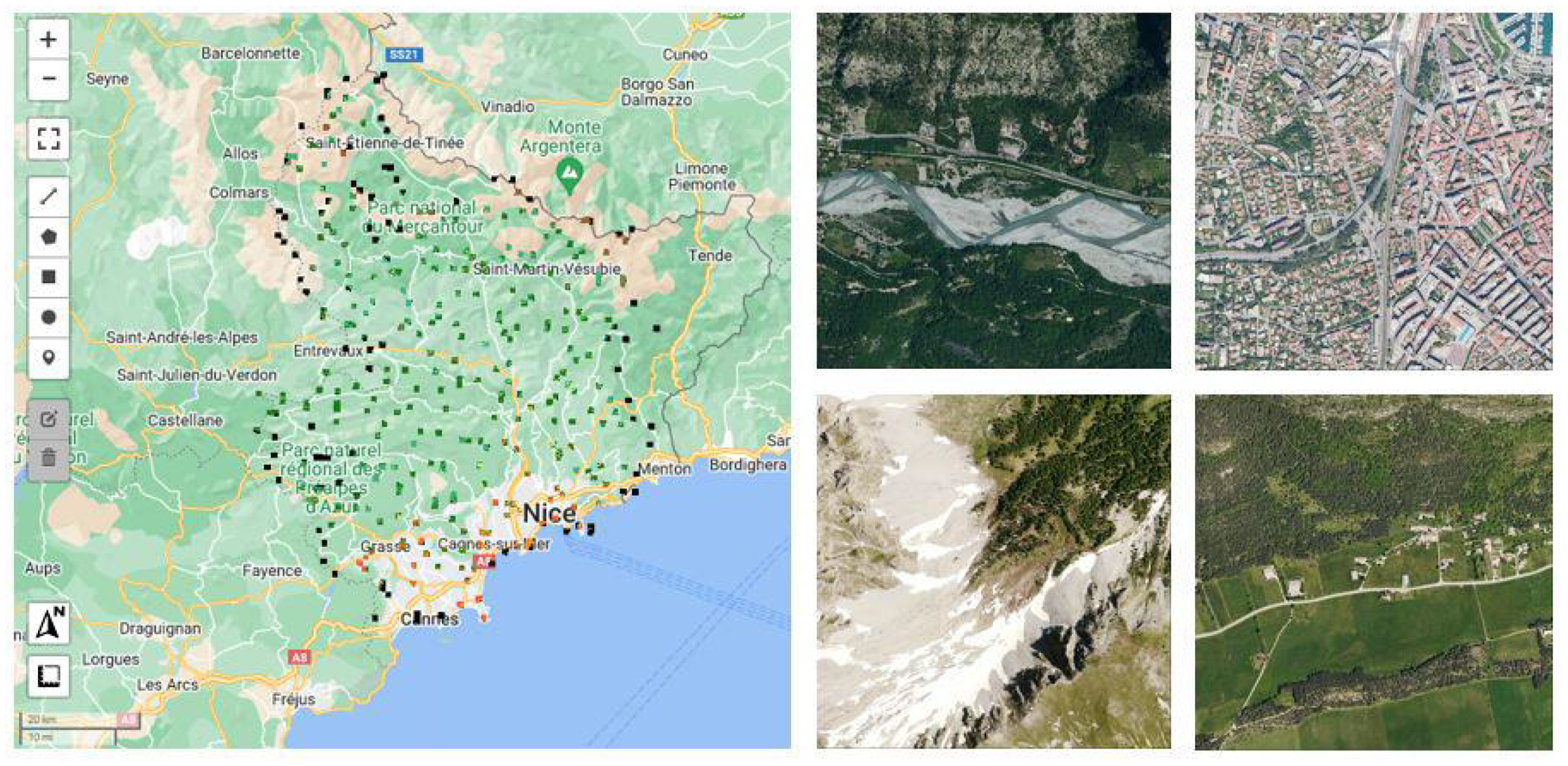

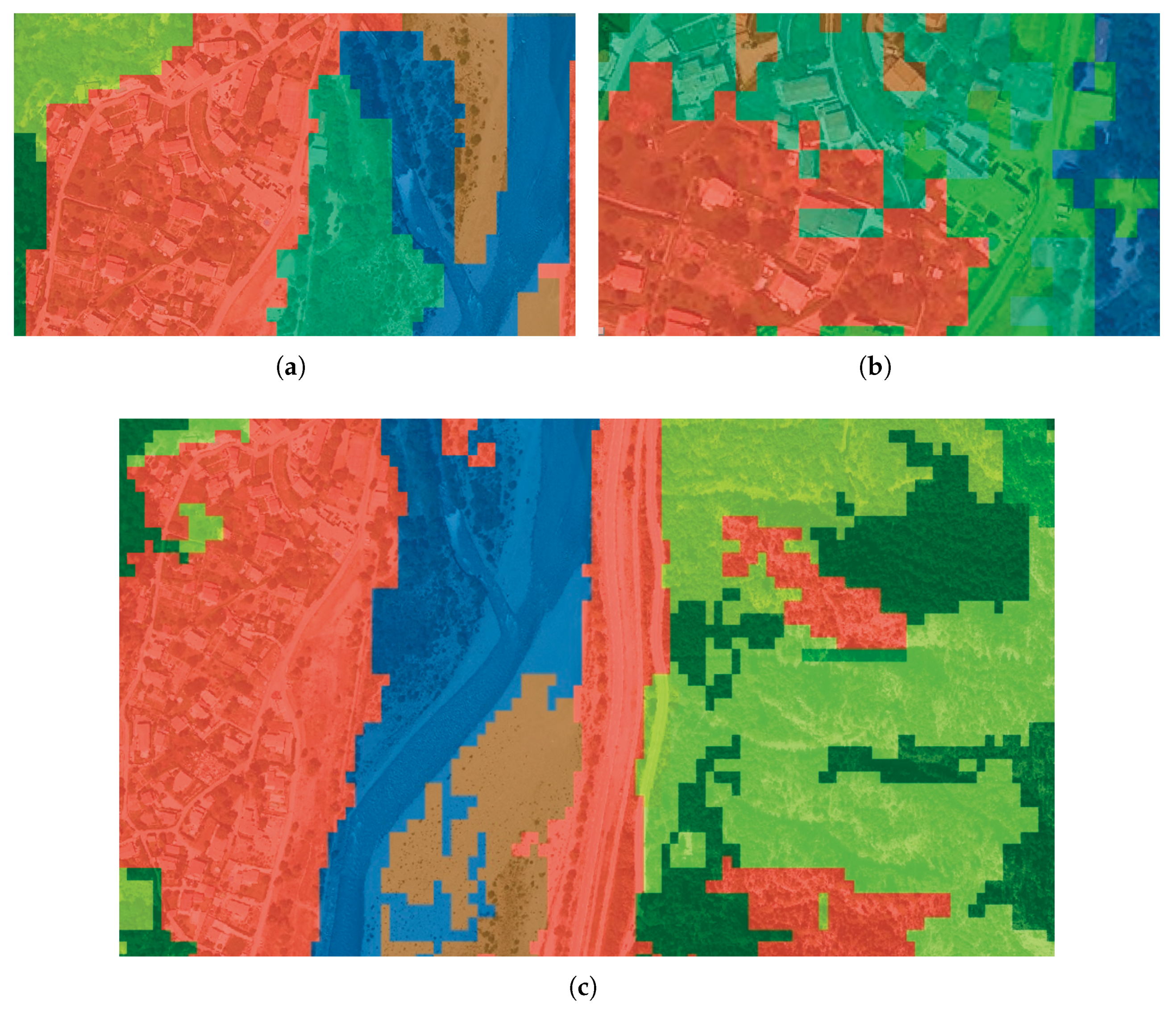

2.1. Study Area

2.2. Training Data

- Sentinel-2 imagery

- Very high resolution optical imagery

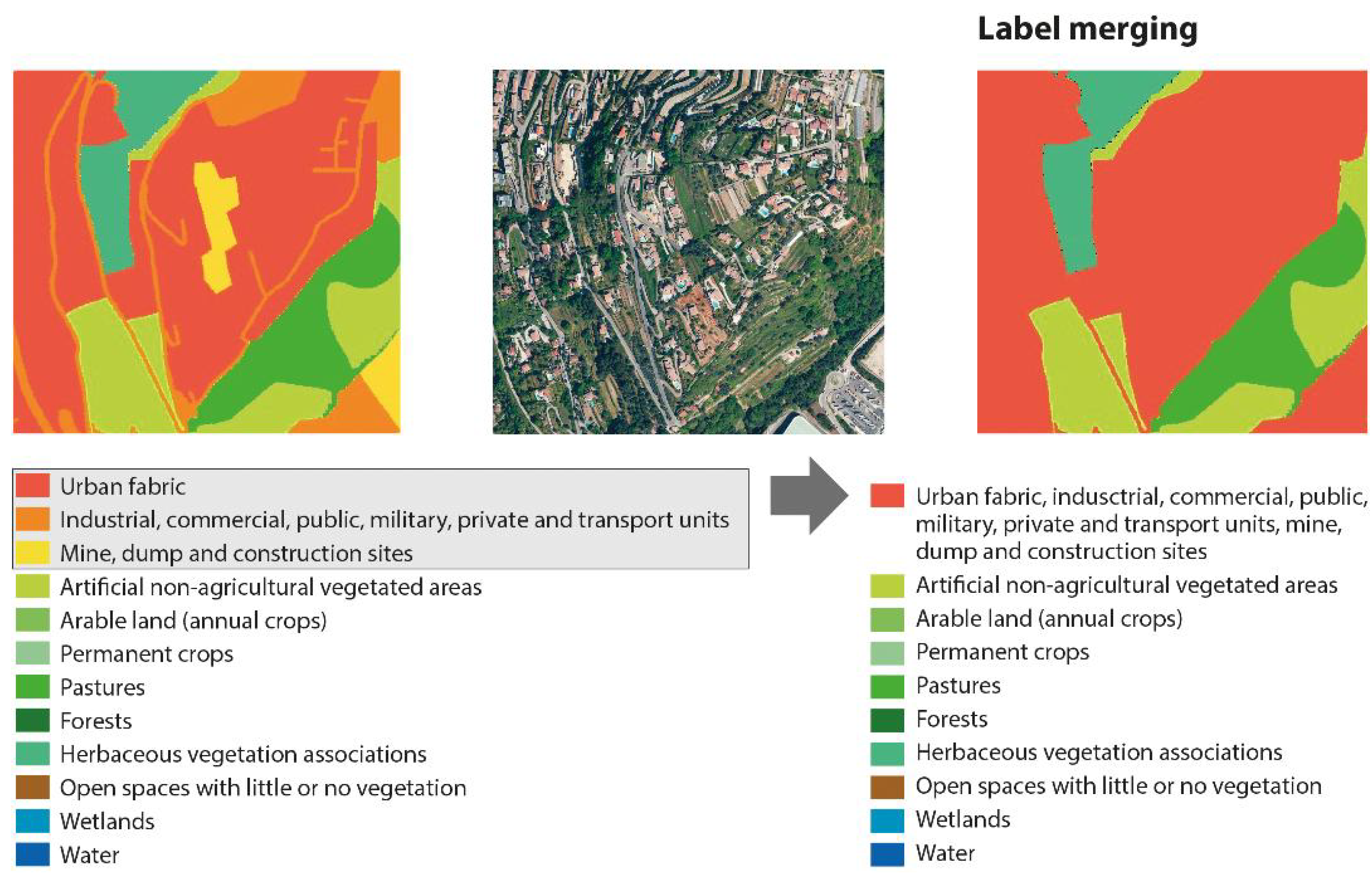

- Land Use/Land Cover labels

2.2.1. Spectral Indices

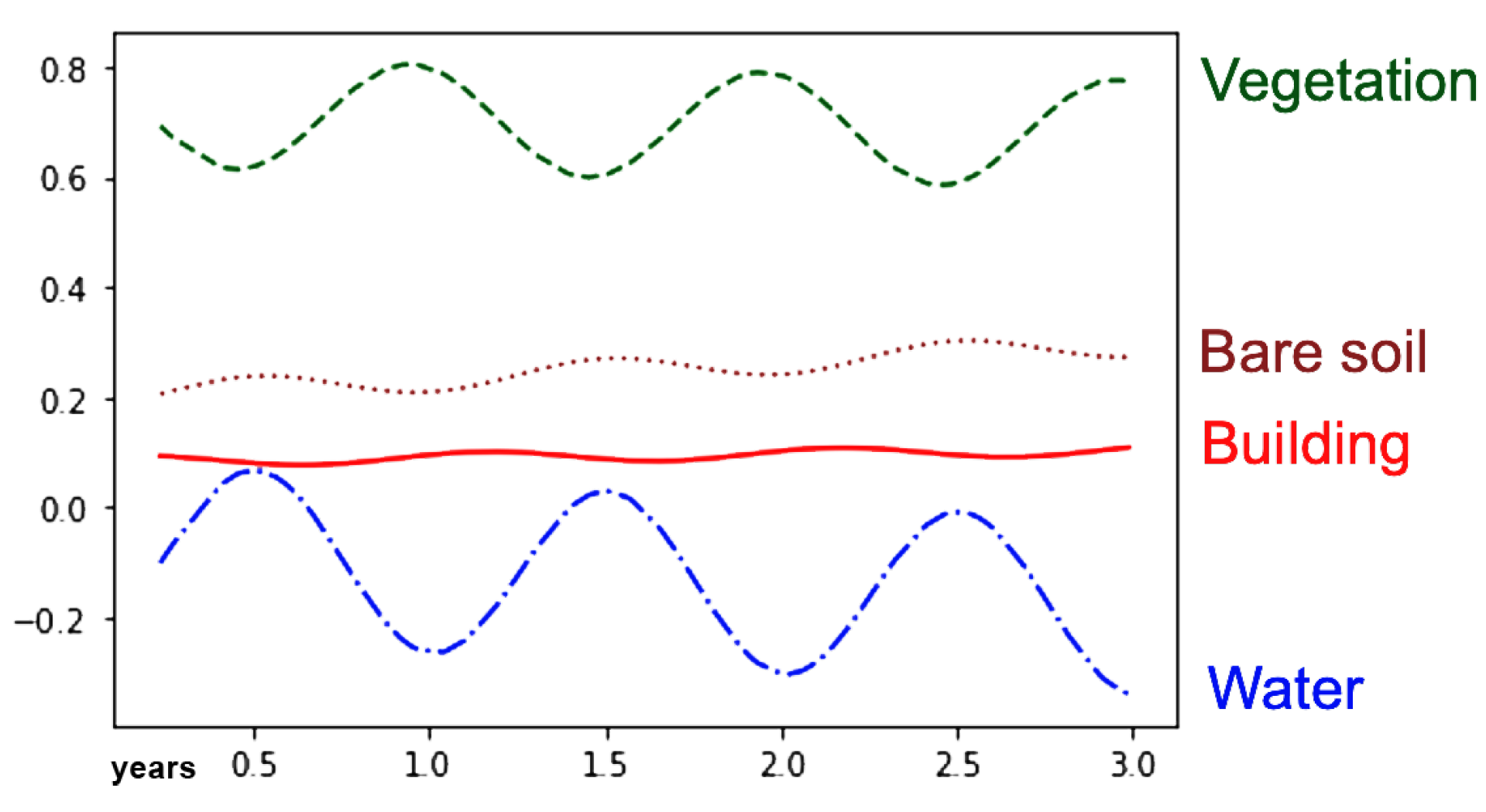

2.2.2. Pixel-Wise Temporal Analysis

2.3. Random Forest Classifier

2.4. GEOBIA: Geographic Object-Based Image Analysis

2.4.1. SNIC

2.4.2. GLCM
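
The grey-level co-occurrence matrix (GLCM) named in this heading underlies Haralick's texture statistics. As a minimal sketch of the general technique, and not the implementation used in this study, the block below counts co-occurring grey levels for one pixel offset and derives the contrast statistic. The function names `glcm` and `contrast`, the offset arguments, and the 4 × 4 test image are illustrative assumptions.

```python
import numpy as np


def glcm(image, levels, dx=1, dy=0, symmetric=True):
    """Normalized grey-level co-occurrence matrix for one offset (dx, dy).

    `image` holds integer grey levels in the range [0, levels).
    """
    image = np.asarray(image, dtype=int)
    rows, cols = image.shape
    counts = np.zeros((levels, levels), dtype=float)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            # Only count pairs whose neighbour lies inside the image.
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r, c], image[r2, c2]] += 1
    if symmetric:
        # Count each pair in both directions.
        counts = counts + counts.T
    total = counts.sum()
    return counts / total if total > 0 else counts


def contrast(p):
    """Contrast texture statistic: sum of p(i, j) * (i - j)^2."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))


# Hypothetical 4 x 4 image with four grey levels (illustration only).
example_image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
example_glcm = glcm(example_image, levels=4)
example_contrast = contrast(example_glcm)
```

In practice the input band is usually quantized to a small number of grey levels first, since the matrix grows with the square of `levels`.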

3. Results

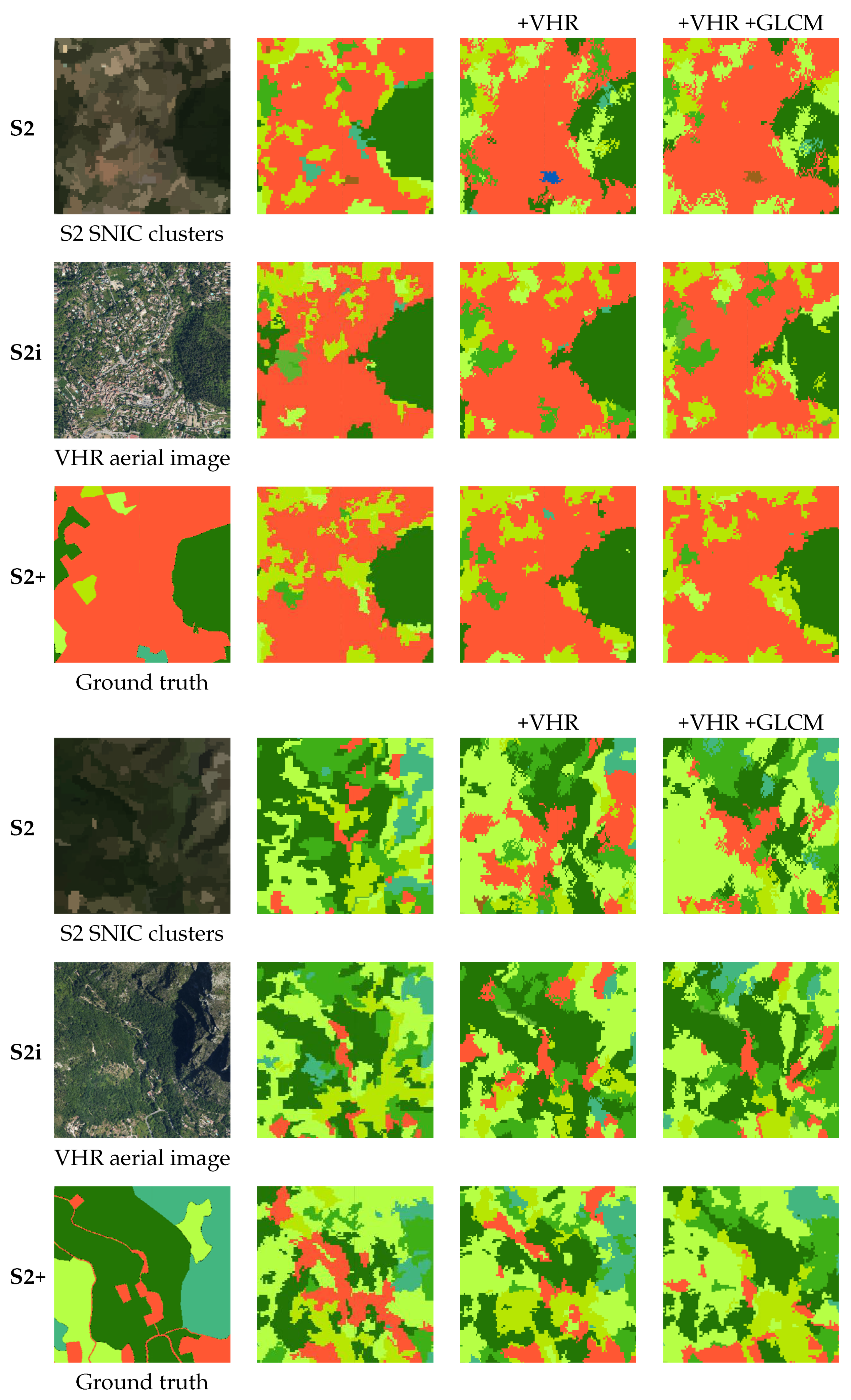

3.1. Improvements Adding the Temporal Analysis

3.2. Improvements Adding One VHR Image

3.3. Improvements Adding the GLCM

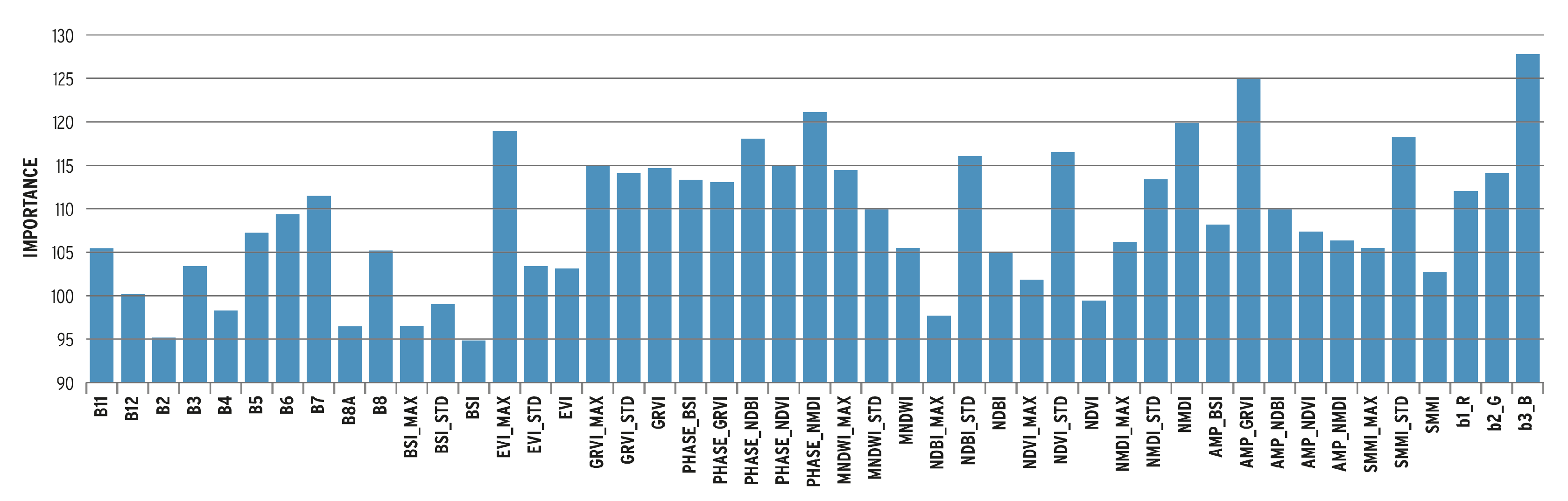

3.4. The Relative Importance Histogram

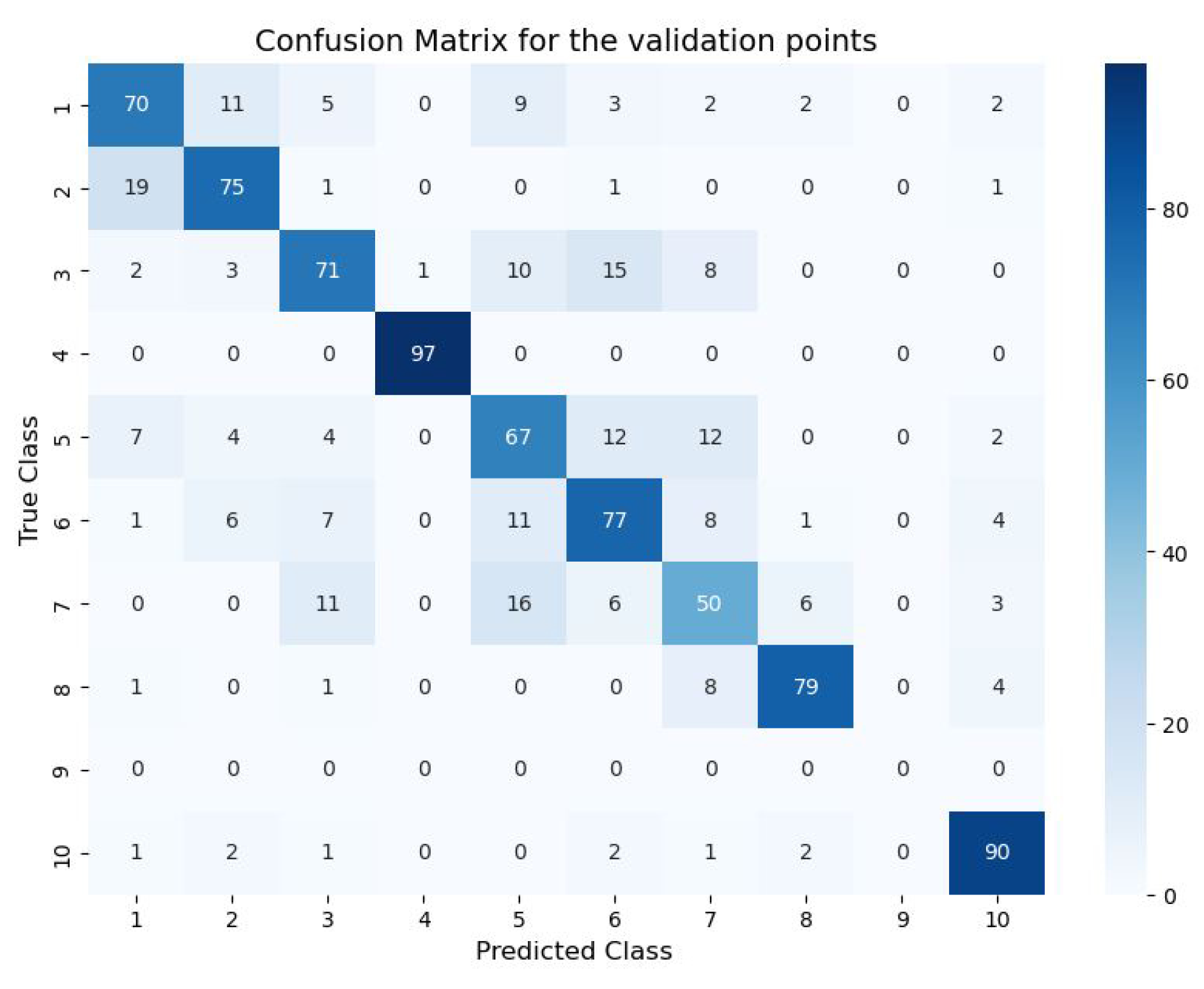

3.5. Confusion Matrix
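
Overall accuracy (OA), the metric reported in the result tables, is the share of correctly classified samples: the trace of the confusion matrix divided by the sum of all its entries. The sketch below illustrates that calculation; the function name and the 3-class counts are hypothetical and are not results from this study.

```python
import numpy as np


def overall_accuracy(confusion):
    """Return trace / total for a square confusion matrix."""
    confusion = np.asarray(confusion, dtype=float)
    if confusion.ndim != 2 or confusion.shape[0] != confusion.shape[1]:
        raise ValueError("confusion matrix must be square")
    total = confusion.sum()
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return float(np.trace(confusion) / total)


# Hypothetical counts: rows are reference classes, columns are predictions.
example_confusion = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
example_oa = overall_accuracy(example_confusion)  # 23 correct out of 30
```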

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Vermeiren, K.; Crols, T.; Uljee, I.; Nocker, L.D.; Beckx, C.; Pisman, A.; Broekx, S.; Poelmans, L. Modelling urban sprawl and assessing its costs in the planning process: A case study in Flanders, Belgium. Land Use Policy 2022, 113, 105902.

- Clerici, N.; Calderón, C.A.V.; Posada, J.M. Fusion of Sentinel-1A and Sentinel-2A data for land cover mapping: A case study in the lower Magdalena region, Colombia. J. Maps 2017, 13, 718–726.

- Luca, G.D.; Silva, J.M.N.; Fazio, S.D.; Modica, G. Integrated use of Sentinel-1 and Sentinel-2 data and open-source machine learning algorithms for land cover mapping in a Mediterranean region. Eur. J. Remote Sens. 2022, 55, 52–70.

- Abdi, A.M. Land cover and land use classification performance of machine learning algorithms in a boreal landscape using Sentinel-2 data. GISci. Remote Sens. 2020, 57, 1–20.

- Erasmi, S.; Twele, A. Regional land cover mapping in the humid tropics using combined optical and SAR satellite data—A case study from Central Sulawesi, Indonesia. Int. J. Remote Sens. 2009, 30, 2465–2478.

- Xu, L.; Herold, M.; Tsendbazar, N.E.; Masiliūnas, D.; Li, L.; Lesiv, M.; Fritz, S.; Verbesselt, J. Time series analysis for global land cover change monitoring: A comparison across sensors. Remote Sens. Environ. 2022, 271, 112905.

- Lawton, M.N.; Martí-Cardona, B.; Hagen-Zanker, A. Urban growth derived from Landsat time series using harmonic analysis: A case study in South England with high levels of cloud cover. Remote Sens. 2021, 13, 3339.

- Padhee, S.K.; Dutta, S. Spatio-Temporal Reconstruction of MODIS NDVI by Regional Land Surface Phenology and Harmonic Analysis of Time-Series. GISci. Remote Sens. 2019, 56, 1261–1288.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Chen, Y.; Ge, Y.; Heuvelink, G.B.; An, R.; Chan, Y. Object-based superresolution land-cover mapping from remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 328–340.

- Sameen, M.I.; Pradhan, B.; Aziz, O.S. Classification of very high resolution aerial photos using spectral-spatial convolutional neural networks. J. Sens. 2018, 2018, 7195432.

- Mboga, N.; Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Wolff, E. Fully convolutional networks and geographic object-based image analysis for the classification of VHR imagery. Remote Sens. 2019, 11, 597.

- Gavankar, N.L.; Ghosh, S.K. Object based building footprint detection from high resolution multispectral satellite image using K-means clustering algorithm and shape parameters. Geocarto Int. 2019, 34, 626–643.

- Praticò, S.; Solano, F.; Fazio, S.D.; Modica, G. Machine learning classification of Mediterranean forest habitats in Google Earth Engine based on seasonal Sentinel-2 time-series and input image composition optimisation. Remote Sens. 2021, 13, 586.

- Gudmann, A.; Csikós, N.; Szilassi, P.; Mucsi, L. Improvement in satellite image-based land cover classification with landscape metrics. Remote Sens. 2020, 12, 3580.

- Polykretis, C.; Grillakis, M.G.; Alexakis, D.D. Exploring the impact of various spectral indices on land cover change detection using change vector analysis: A case study of Crete Island, Greece. Remote Sens. 2020, 12, 319.

- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.

- Numbisi, F.N.; Coillie, F.M.B.V.; Wulf, R.D. Delineation of Cocoa Agroforests Using Multiseason Sentinel-1 SAR Images: A Low Grey Level Range Reduces Uncertainties in GLCM Texture-Based Mapping. ISPRS Int. J. Geo-Inf. 2019, 8, 179.

- Su, W.; Li, J.; Chen, Y.; Liu, Z.; Zhang, J.; Low, T.M.; Suppiah, I.; Hashim, S.A.M. Textural and local spatial statistics for the object-oriented classification of urban areas using high resolution imagery. Int. J. Remote Sens. 2008, 29, 3105–3117.

- Okubo, S.; Muhamad, D.; Harashina, K.; Takeuchi, K.; Umezaki, M. Land use/cover classification of a complex agricultural landscape using single-dated very high spatial resolution satellite-sensed imagery. Can. J. Remote Sens. 2010, 36, 722–736.

- Trias-Sanz, R.; Stamon, G.; Louchet, J. Using colour, texture and hierarchial segmentation for high-resolution remote sensing. ISPRS J. Photogramm. Remote Sens. 2008, 63, 156–168.

- Dieu, T.; Le, H.; Pham, L.H.; Dinh, Q.T.; Thuy, N.T. Rapid method for yearly LULC classification using Random Forest and incorporating time-series NDVI and topography: A case study of Thanh Hoa province, Vietnam. Geocarto Int. 2022, 37, 17200–17215.

- Walker, K.; Moscona, B.; Jack, K.; Jayachandran, S.; Kala, N.; Pande, R.; Xue, J.; Burke, M. Detecting Crop Burning in India using Satellite Data. arXiv 2022, arXiv:2209.10148.

- Tassi, A.; Vizzari, M. Object-oriented LULC classification in Google Earth Engine combining SNIC, GLCM and machine learning algorithms. Remote Sens. 2020, 12, 3776.

- Castillo-Navarro, J.; Saux, B.L.; Boulch, A.; Audebert, N.; Lefèvre, S. Semi-Supervised Semantic Segmentation in Earth Observation: The MiniFrance Suite, Dataset Analysis and Multi-Task Network Study; Springer: New York, NY, USA, 2022; Volume 111, pp. 3125–3160.

- Hänsch, R.; Persello, C.; Vivone, G.; Navarro, J.C.; Boulch, A.; Lefevre, S.; Saux, B.L. The 2022 IEEE GRSS Data Fusion Contest: Semisupervised Learning [Technical Committees]. IEEE Geosci. Remote Sens. Mag. 2022, 10, 334–337.

- Diek, S.; Fornallaz, F.; Schaepman, M.E.; De Jong, R. Barest Pixel Composite for Agricultural Areas Using Landsat Time Series. Remote Sens. 2017, 9, 1245.

- Villamuelas, M.; Fernández, N.; Albanell, E.; Gálvez-Cerón, A.; Bartolomé, J.; Mentaberre, G.; López-Olvera, J.R.; Fernández-Aguilar, X.; Colom-Cadena, A.; López-Martín, J.M.; et al. The Enhanced Vegetation Index (EVI) as a proxy for diet quality and composition in a mountain ungulate. Ecol. Indic. 2016, 61, 658–666.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.

- Zha, Y.; Gao, J.; Ni, S. Use of normalized difference built-up index in automatically mapping urban areas from TM imagery. Int. J. Remote Sens. 2003, 24, 583–594.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

- Wang, L.; Qu, J.J. NMDI: A normalized multi-band drought index for monitoring soil and vegetation moisture with satellite remote sensing. Geophys. Res. Lett. 2007, 34, L20405.

- Liu, Y.; Qian, J.; Yue, H. Comprehensive Evaluation of Sentinel-2 Red Edge and Shortwave-Infrared Bands to Estimate Soil Moisture. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7448–7465.

- Bai, T.; Sun, K.; Deng, S.; Li, D.; Li, W.; Chen, Y. Multi-scale hierarchical sampling change detection using Random Forest for high-resolution satellite imagery. Int. J. Remote Sens. 2018, 39, 7523–7546.

- Lawrence, R.L.; Moran, C.J. The AmericaView classification methods accuracy comparison project: A rigorous approach for model selection. Remote Sens. Environ. 2015, 170, 115–120.

- Achanta, R.; Süsstrunk, S. Superpixels and polygons using simple non-iterative clustering. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 4895–4904.

- Gargees, R.S.; Scott, G.J. Deep Feature Clustering for Remote Sensing Imagery Land Cover Analysis. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1386–1390.

- Hall-Beyer, M. Practical guidelines for choosing GLCM textures to use in landscape classification tasks over a range of moderate spatial scales. Int. J. Remote Sens. 2017, 38, 1312–1338.

- Xu, Z.; Su, C.; Zhang, X. A semantic segmentation method with category boundary for Land Use and Land Cover (LULC) mapping of Very-High Resolution (VHR) remote sensing image. Int. J. Remote Sens. 2021, 42, 3146–3165.

- Xie, G.; Niculescu, S. Mapping and monitoring of land cover/land use (LCLU) changes in the Crozon Peninsula (Brittany, France) from 2007 to 2018 by machine learning algorithms (support vector machine, Random Forest and convolutional neural network) and by post-classification comparison (PCC). Remote Sens. 2021, 13, 3899.

- Sertel, E.; Ekim, B.; Osgouei, P.E.; Kabadayi, M.E. Land Use and Land Cover Mapping Using Deep Learning Based Segmentation Approaches and VHR Worldview-3 Images. Remote Sens. 2022, 14, 4558.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. arXiv 2023, arXiv:2304.02643.

| Index | Full Name | Formula |
|---|---|---|
| BSI [27] | bare soil index | |
| EVI [28] | enhanced vegetation index | |
| GRVI [29] | green-red vegetation index | |
| MNDWI [30] | modified normalized difference water index | |
| NDBI [31] | normalized difference built-up index | |
| NDVI [32] | normalized difference vegetation index | |
| NMDI [33] | normalized multi-band drought index | |
| SMMI [34] | soil moisture monitoring index | |
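
The Formula column of the table is empty in this copy of the article. As a hedged illustration only, the sketch below computes four of the listed indices from their commonly published normalized-difference definitions. It assumes, without confirmation from the source, that the study uses these standard forms. The band argument names (`green`, `red`, `nir`, `swir1`) and the sample reflectances are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), using 0 where the denominator is 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def spectral_indices(green, red, nir, swir1):
    """Commonly published forms (assumed here, not taken from the article)."""
    return {
        "NDVI": normalized_difference(nir, red),
        "GRVI": normalized_difference(green, red),
        "MNDWI": normalized_difference(green, swir1),
        "NDBI": normalized_difference(swir1, nir),
    }


# Two hypothetical pixels: a vegetated one and an all-zero (no-data) one.
example_indices = spectral_indices(
    green=np.array([0.08, 0.0]),
    red=np.array([0.05, 0.0]),
    nir=np.array([0.45, 0.0]),
    swir1=np.array([0.20, 0.0]),
)
```

The zero-denominator guard keeps no-data pixels from producing NaN values that would otherwise propagate into a classifier's feature stack.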

| Input Data | OA | OA Improvement |
|---|---|---|
| S2 | 0.6262 | |
| S2i (S2 + indices) | 0.6732 | +0.0470 |
| S2+ (S2i + temporal analysis) | 0.7290 | +0.0558 |
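
The OA Improvement column is the difference between each row's OA and the OA of the row before it. As a quick arithmetic check, the sketch below recomputes those step gains and the cumulative gain from S2 to S2+ using the values in the table. The variable and function names are illustrative.

```python
# OA values copied from the table above, in the order they are listed.
oa_by_input = {"S2": 0.6262, "S2i": 0.6732, "S2+": 0.7290}


def stepwise_gains(scores):
    """Gain of each entry over the entry listed before it."""
    names = list(scores)
    return {
        curr: round(scores[curr] - scores[prev], 4)
        for prev, curr in zip(names, names[1:])
    }


gains = stepwise_gains(oa_by_input)
total_gain = round(oa_by_input["S2+"] - oa_by_input["S2"], 4)

print(gains)       # step gains for S2i and S2+
print(total_gain)  # cumulative gain from S2 to S2+
```

The two step gains (0.0470 and 0.0558) add up to a cumulative gain of 0.1028 over the S2 baseline.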

| Input Data | Base OA | Improvement | OA with VHR | Clustering on |
|---|---|---|---|---|
| S2 | 0.6262 | +0.0443 | 0.6705 | S2 and VHR bands |
| S2 | 0.6262 | +0.0934 | 0.7196 | VHR bands |
| S2i (S2 + indices) | 0.6732 | +0.0284 | 0.7016 | S2i and VHR bands |
| S2i (S2 + indices) | 0.6732 | +0.0103 | 0.6835 | VHR bands |
| S2+ (S2i + temporal analysis) | 0.7290 | +0.0140 | 0.7430 | S2+ and VHR bands |
| S2+ (S2i + temporal analysis) | 0.7290 | −0.0127 | 0.7163 | VHR bands |

| Input Data | Base OA | Improvement | OA | With | Clustering on |
|---|---|---|---|---|---|
| S2 | 0.6262 | +0.0443 | 0.6705 | VHR | S2 and VHR bands |
| S2 | 0.6262 | +0.0328 | 0.6590 | GLCM | S2 bands |
| S2 | 0.6262 | +0.0414 | 0.6676 | VHR & GLCM | S2 and VHR bands |
| S2i (S2 + indices) | 0.6732 | +0.0284 | 0.7016 | VHR | S2i and VHR bands |
| S2i (S2 + indices) | 0.6732 | +0.0066 | 0.6798 | GLCM | S2i bands |
| S2i (S2 + indices) | 0.6732 | +0.0536 | 0.7268 | VHR & GLCM | S2i and VHR bands |
| S2+ (S2i + temporal analysis) | 0.7290 | +0.0140 | 0.7430 | VHR | S2+ and VHR bands |
| S2+ (S2i + temporal analysis) | 0.7290 | +0.0032 | 0.7322 | GLCM | S2+ bands |
| S2+ (S2i + temporal analysis) | 0.7290 | +0.0076 | 0.7366 | VHR & GLCM | S2+ and VHR bands |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cuypers, S.; Nascetti, A.; Vergauwen, M. Land Use and Land Cover Mapping with VHR and Multi-Temporal Sentinel-2 Imagery. Remote Sens. 2023, 15, 2501. https://doi.org/10.3390/rs15102501
