Designing Unmanned Aerial Survey Monitoring Program to Assess Floating Litter Contamination

Abstract

1. Introduction

2. Materials and Methods

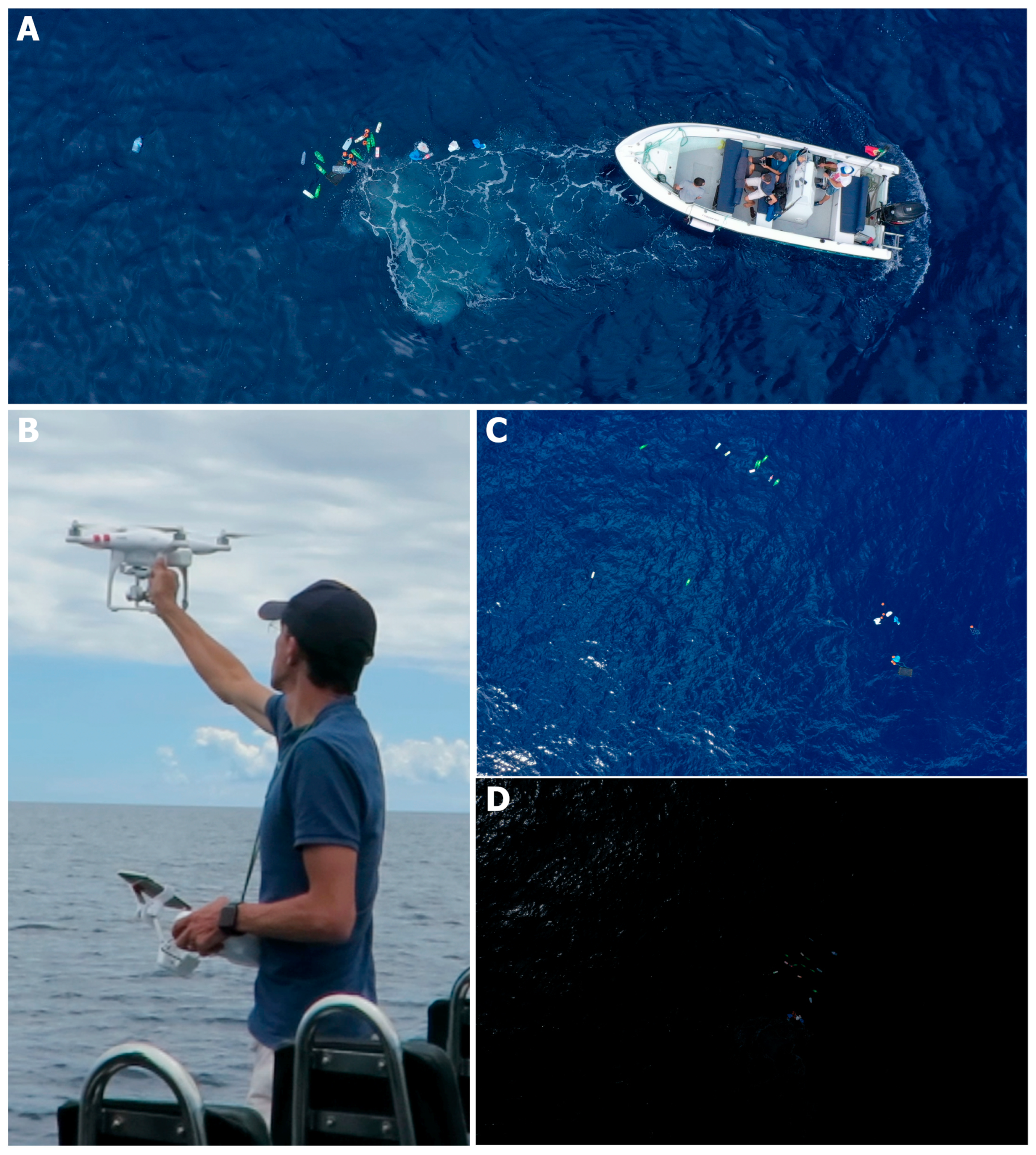

2.1. Data Collection

2.2. Comparison of Analytical Procedures

2.2.1. Visual Inspection and Manual Classification

2.2.2. Color- and Pixel-Based Detection Analysis

2.2.3. Machine Learning for Automated Object Detection and Classification

3. Results

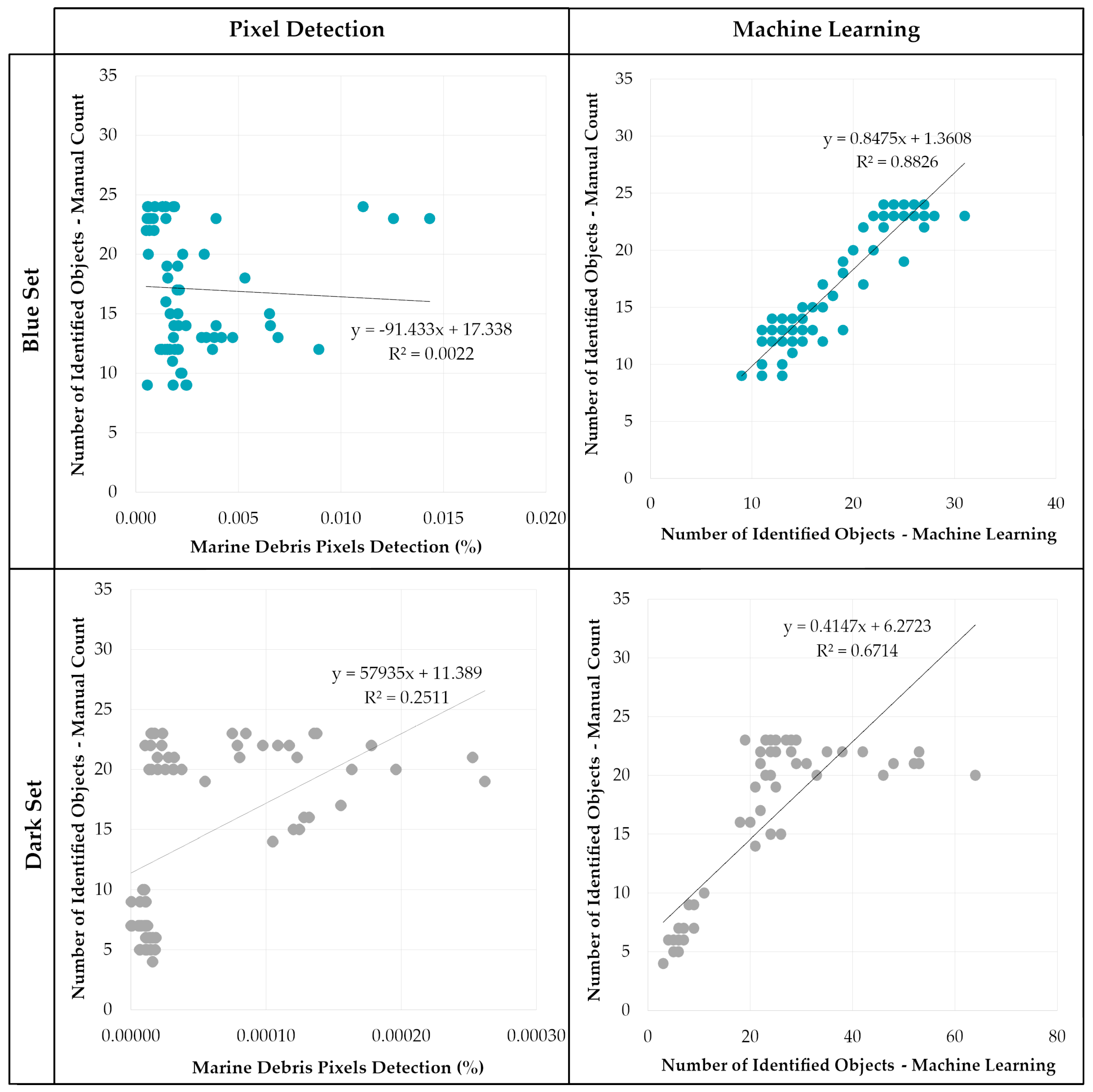

3.1. Performance Assessment

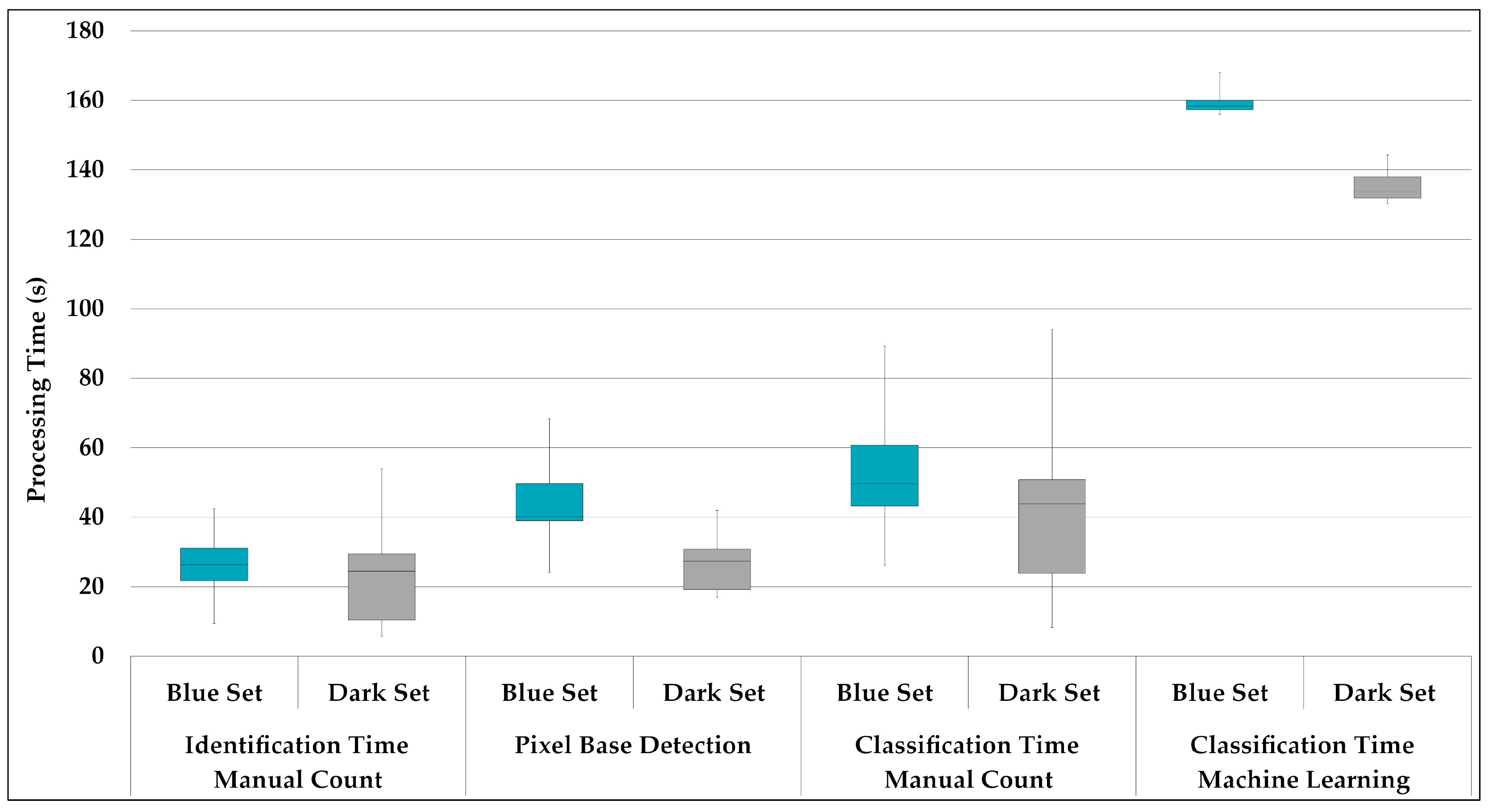

3.2. Comparing Processing Times and Requirements

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Category | Metric | Manual Count (Blue) | Manual Count (Dark) | Pixel-Based Detection (Blue) | Pixel-Based Detection (Dark) | Machine Learning (Blue) | Machine Learning (Dark) |
|---|---|---|---|---|---|---|---|
| Average process time (s) | Identification | 26 s | 22 s | | | | |
| Average process time (s) | Classification | 52 s | 40 s | | | | |
| Average process time (s) | Processing | | | 43 s | 26 s | | |
| Average process time (s) | Object classification | | | | | 159 s | 135 s |
| Number of objects classified | μ | 141 | 117 | | | 152 | 157 |
| Number of objects classified | σ | 112 | 112 | | | 116 | 187 |
| % of pixels detected | | | | 0.0025% | 0.000049% | | |
| Estimated area | | | | 5.31 | 0.089 | | |
| Performance of the ML method | | | | | | P: 63.59%, R: 78.27%, F1: 56.33% | P: 77.62%, R: 77.71%, F1: 66.15% |

Work interface: DotDotGoose; workflow to generate a new algorithm.

Requirements: informatic skills.
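The P, R and F1 entries in the table above are read here as precision, recall and F1 score. As a minimal sketch of how these metrics are conventionally derived from detection counts, the Python below uses hypothetical function names and hypothetical counts; none of them come from the study, and this is not presented as the authors' evaluation code.

```python
def precision(tp: int, fp: int) -> float:
    """Share of detected objects that are real litter items."""
    detected = tp + fp
    return tp / detected if detected else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of real litter items that were detected."""
    actual = tp + fn
    return tp / actual if actual else 0.0


def f1_from_pr(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    total = p + r
    return 2.0 * p * r / total if total else 0.0


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 score computed directly from detection counts."""
    return f1_from_pr(precision(tp, fp), recall(tp, fn))


if __name__ == "__main__":
    # Hypothetical counts for one image: 70 correct detections,
    # 30 false alarms, 20 missed items.
    tp, fp, fn = 70, 30, 20
    print(f"P  = {precision(tp, fp):.4f}")
    print(f"R  = {recall(tp, fn):.4f}")
    print(f"F1 = {f1(tp, fp, fn):.4f}")
```

An F1 averaged per image or per class generally differs from the harmonic mean of the averaged P and R, so recomputing F1 from tabulated P and R values is not guaranteed to reproduce a tabulated F1.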

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Almeida, S.; Radeta, M.; Kataoka, T.; Canning-Clode, J.; Pessanha Pais, M.; Freitas, R.; Monteiro, J.G. Designing Unmanned Aerial Survey Monitoring Program to Assess Floating Litter Contamination. Remote Sens. 2023, 15, 84. https://doi.org/10.3390/rs15010084
