Multimodal and Multitemporal Land Use/Land Cover Semantic Segmentation on Sentinel-1 and Sentinel-2 Imagery: An Application on a MultiSenGE Dataset

Abstract

1. Introduction

2. Materials and Preprocessing Methods

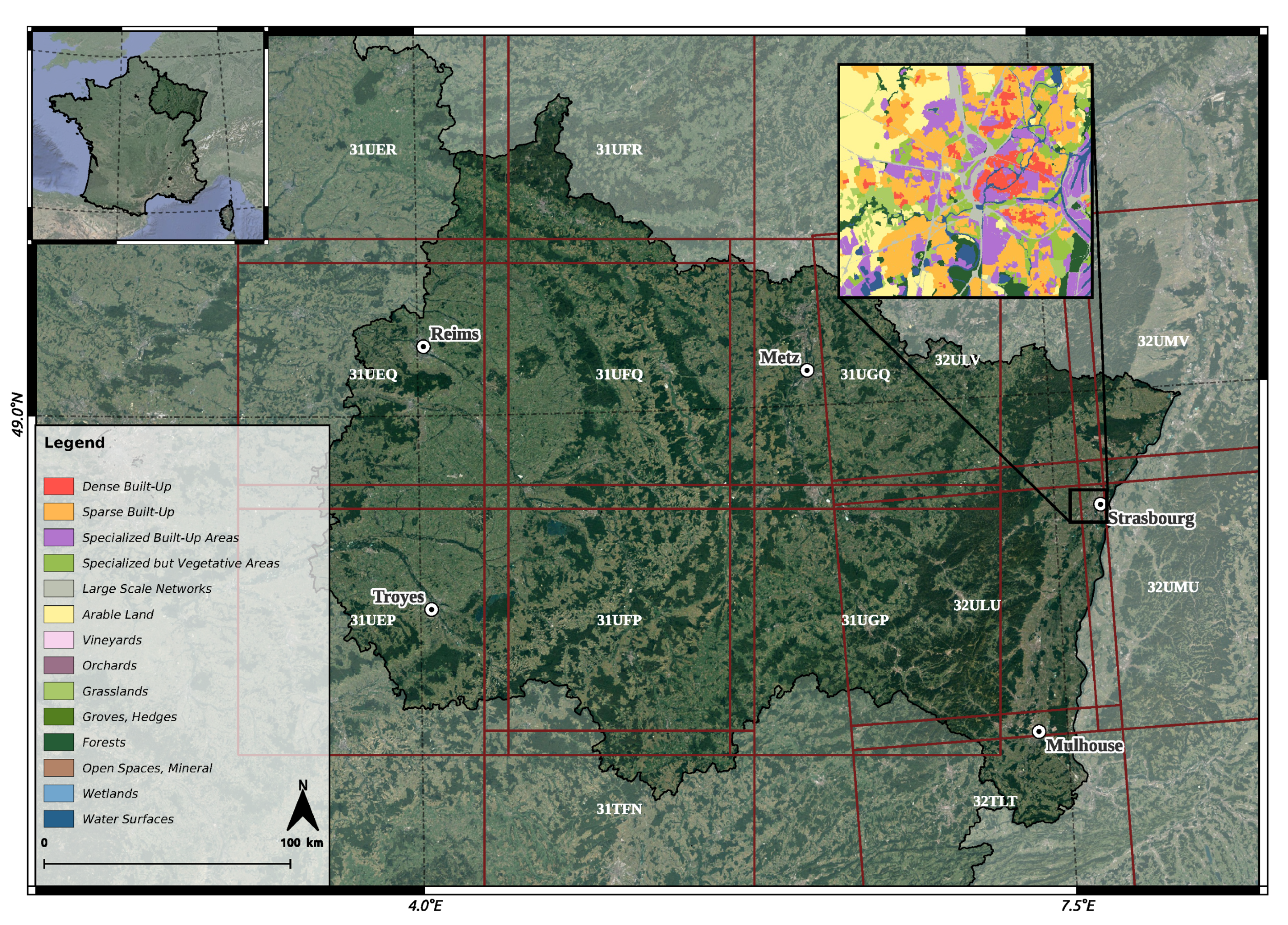

2.1. MultiSenGE dataset

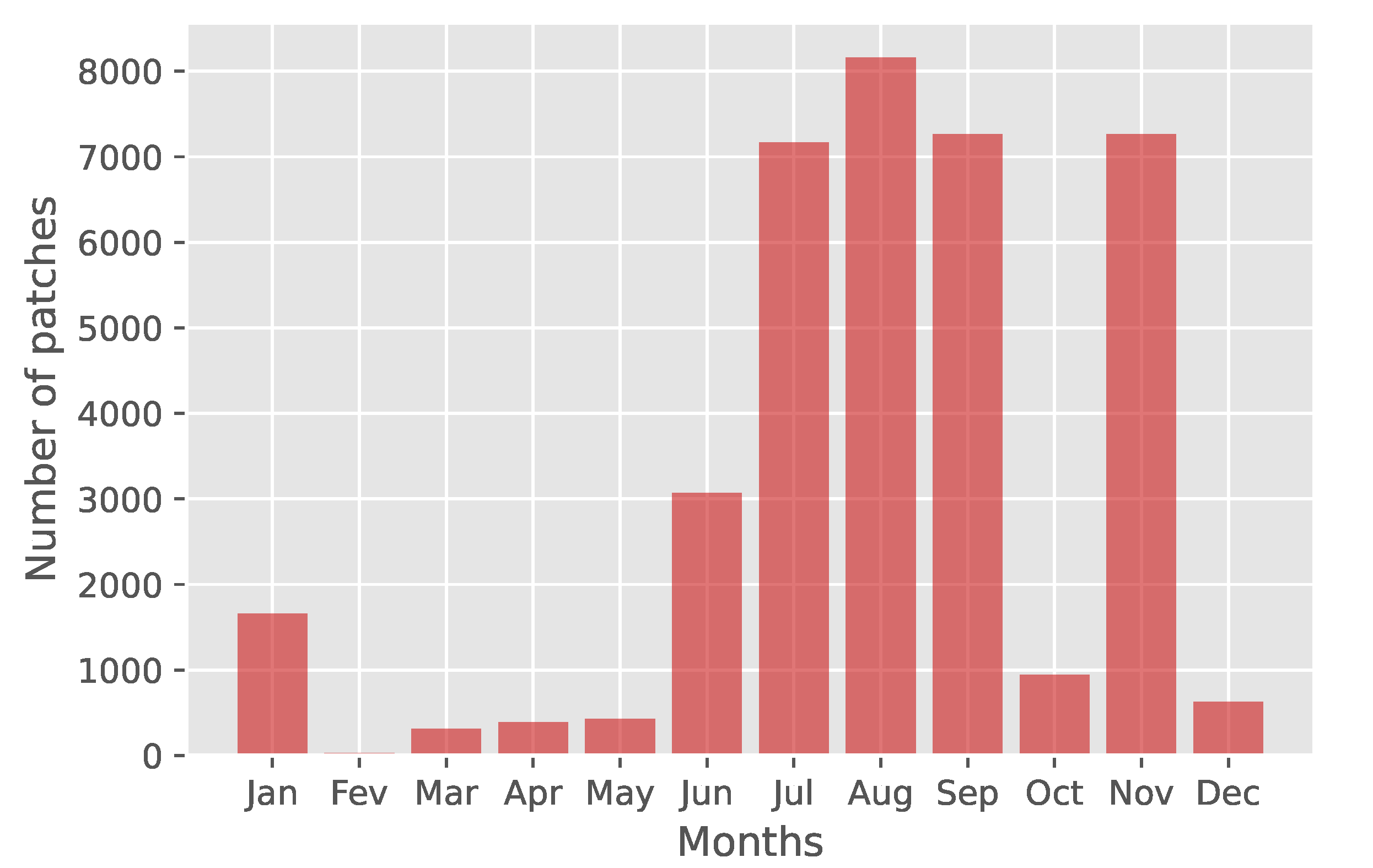

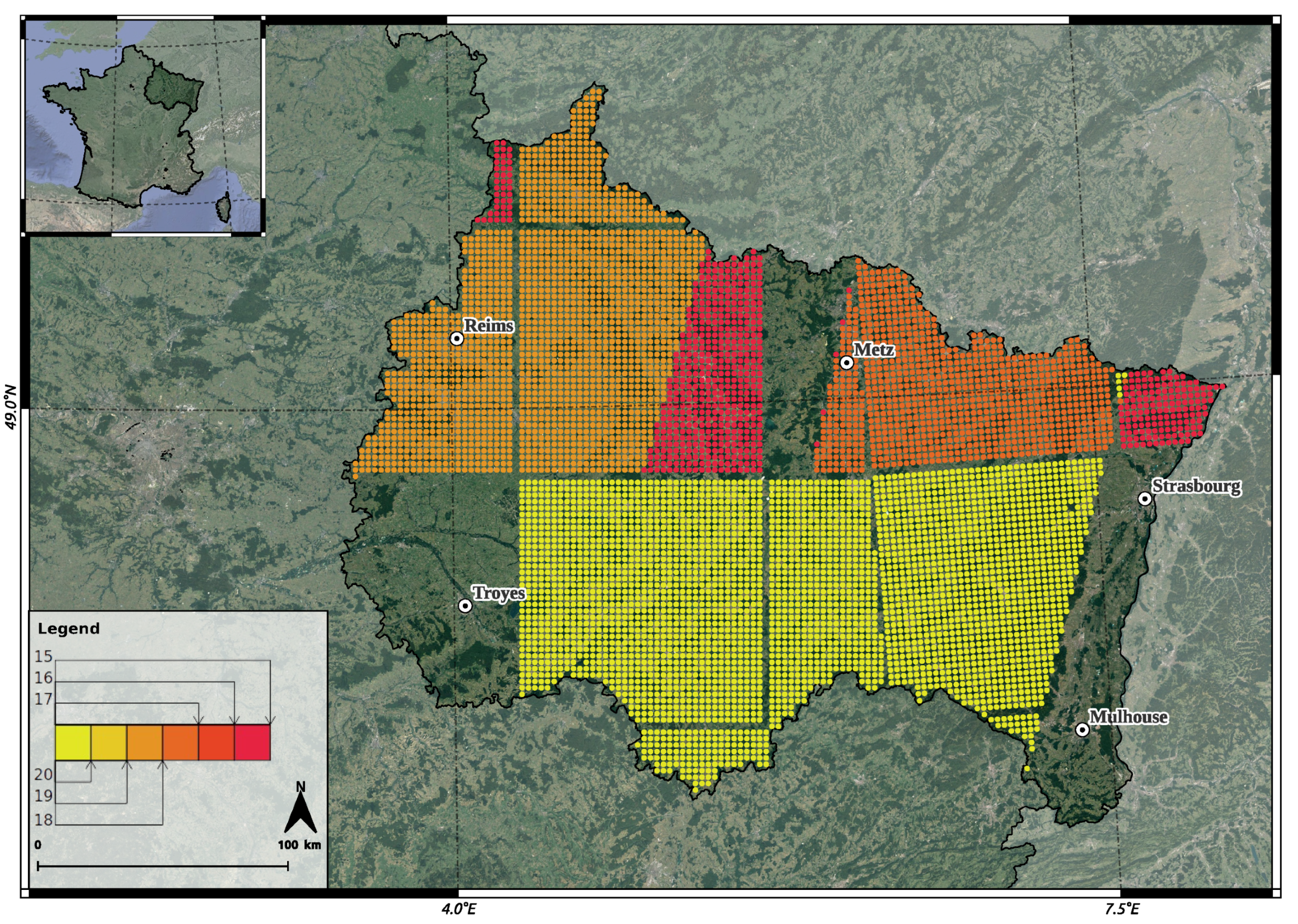

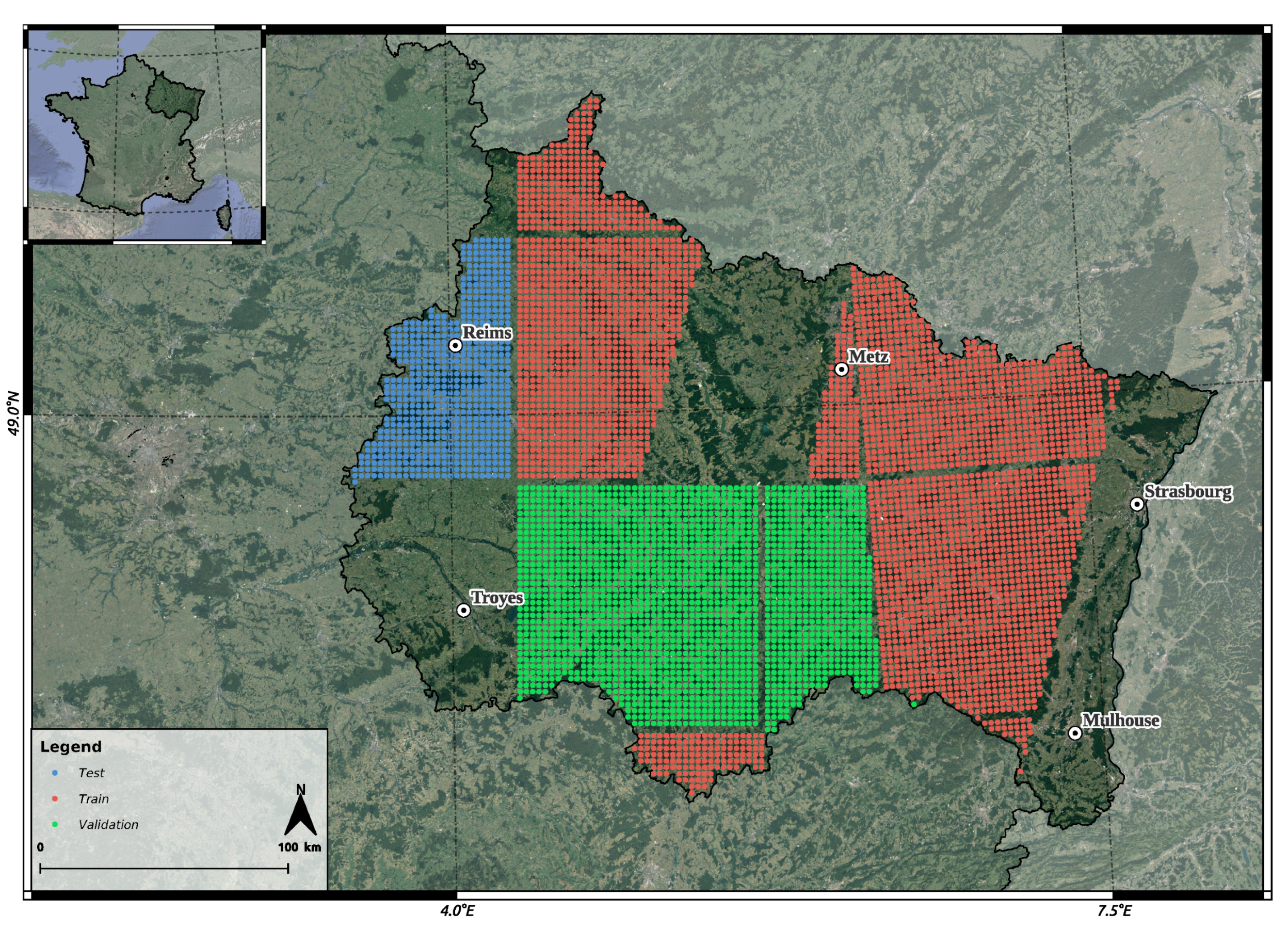

2.2. Optical and SAR Multitemporal Patches Selection

2.3. Reference Data Typology

3. Models

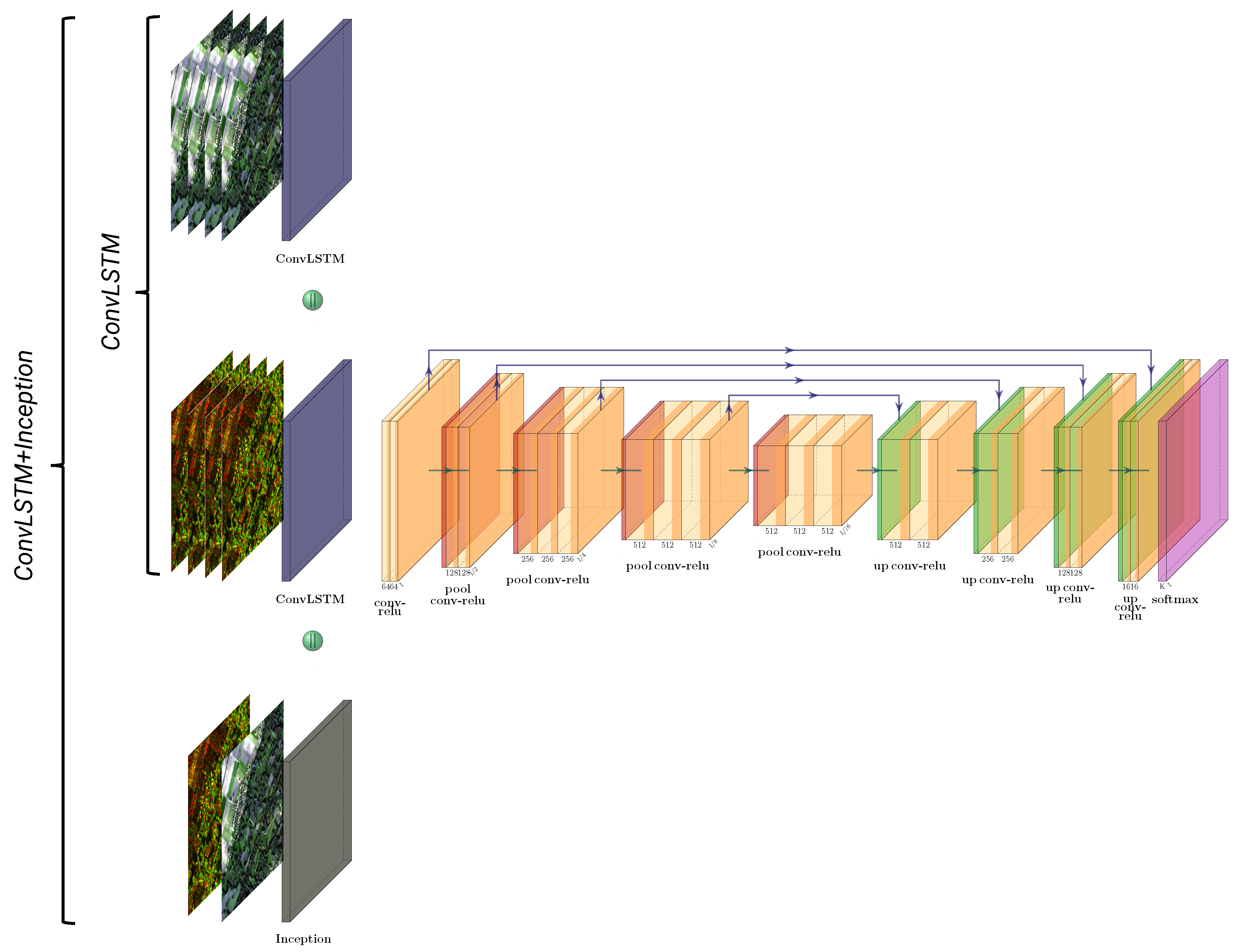

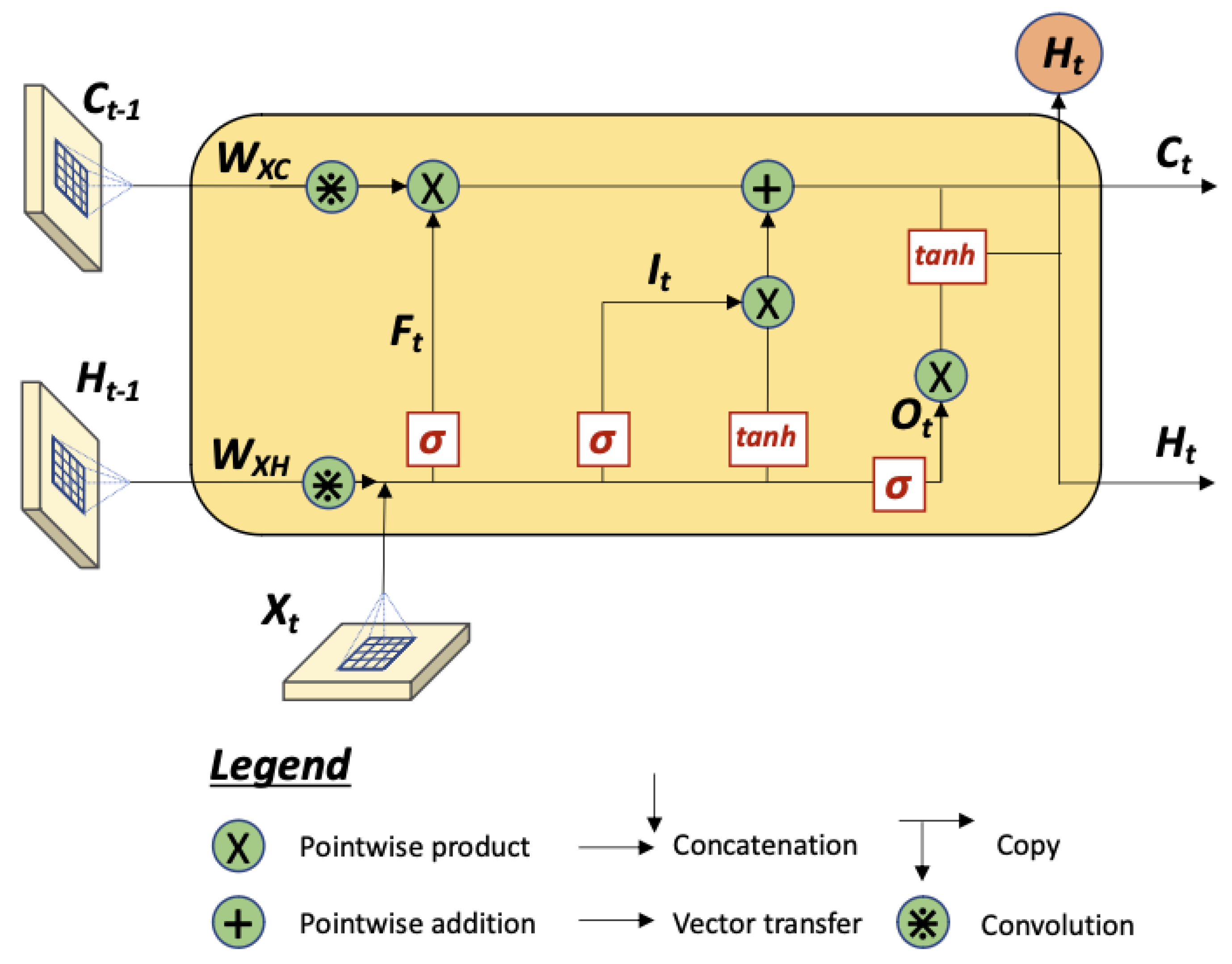

3.1. Spatio-Temporal Feature Extractor: ConvLSTM-S1/S2

3.2. Spatio-Spectral-Temporal Feature Extractor: ConvLSTM+Inception-S1S2

3.3. Experimentation Details

3.4. Implementation Details

3.5. Evaluation Metrics

4. Results

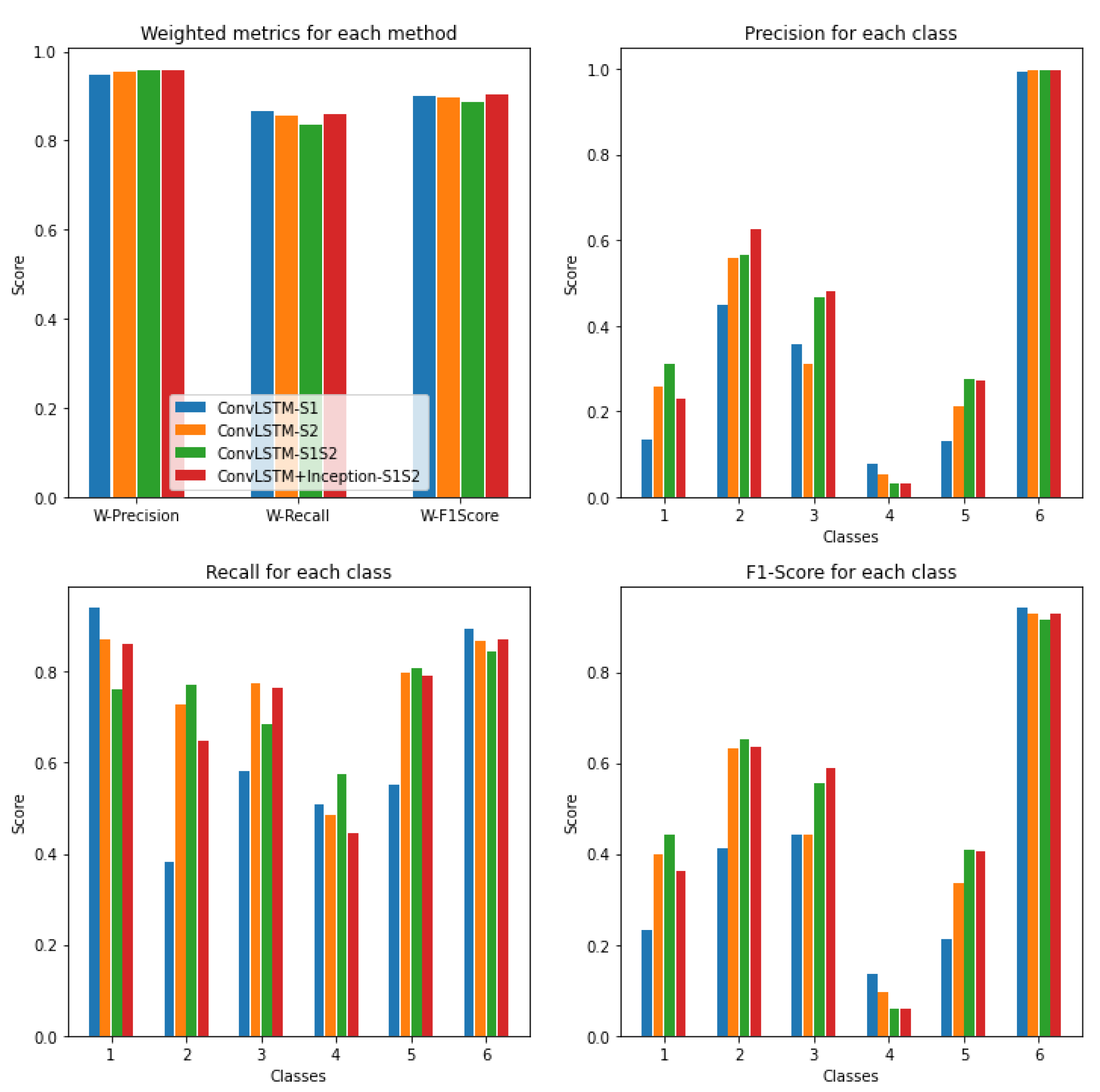

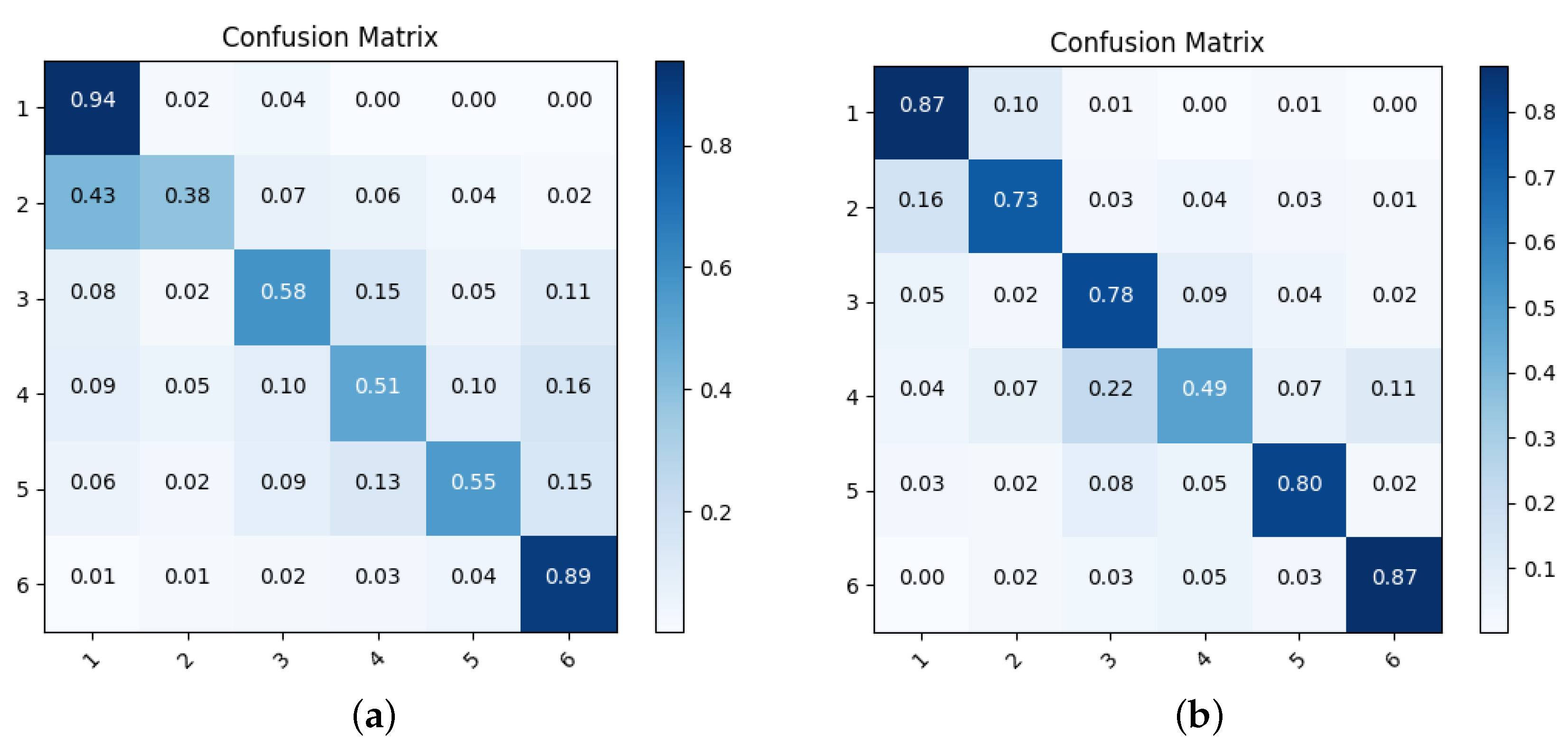

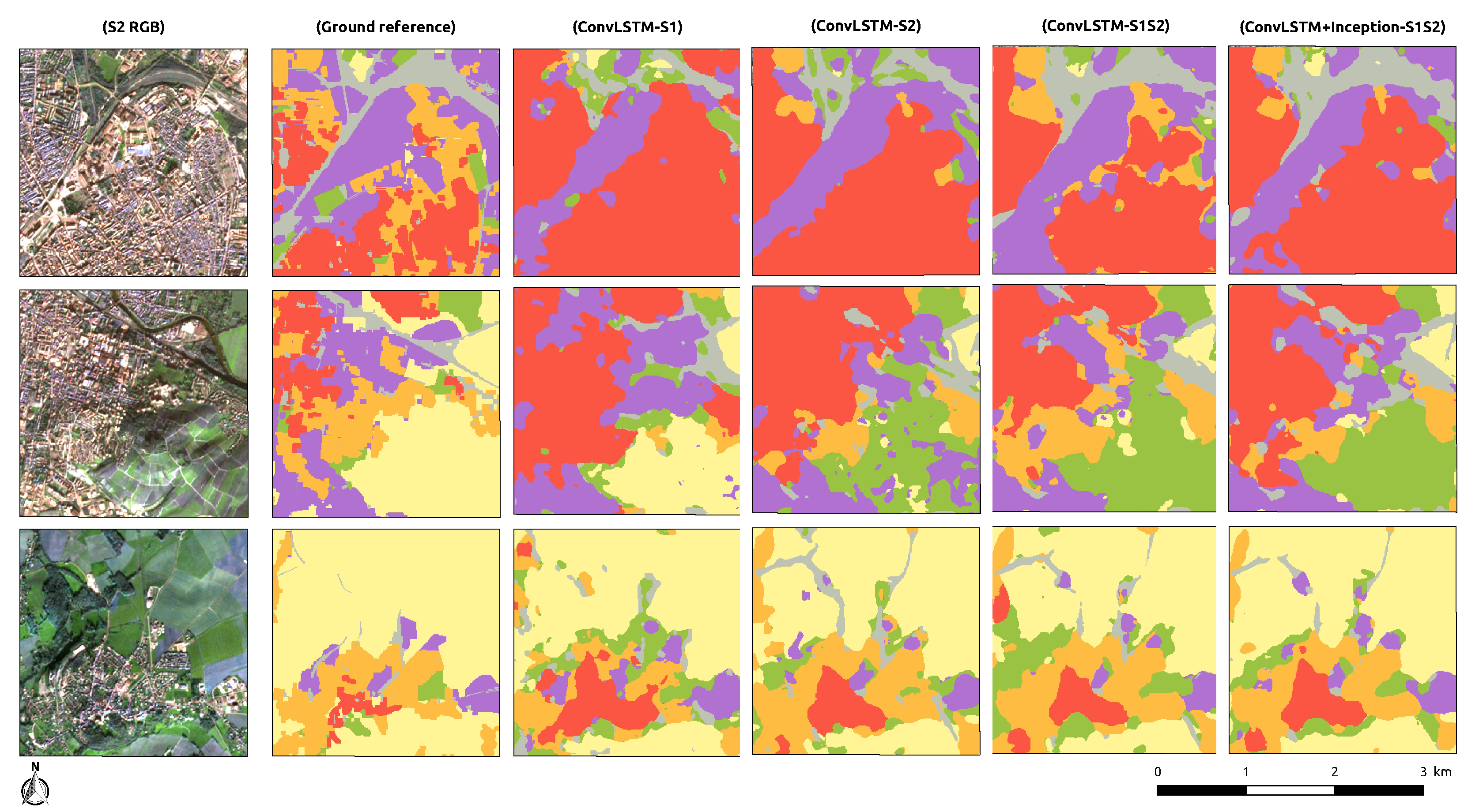

4.1. 6 Classes Results

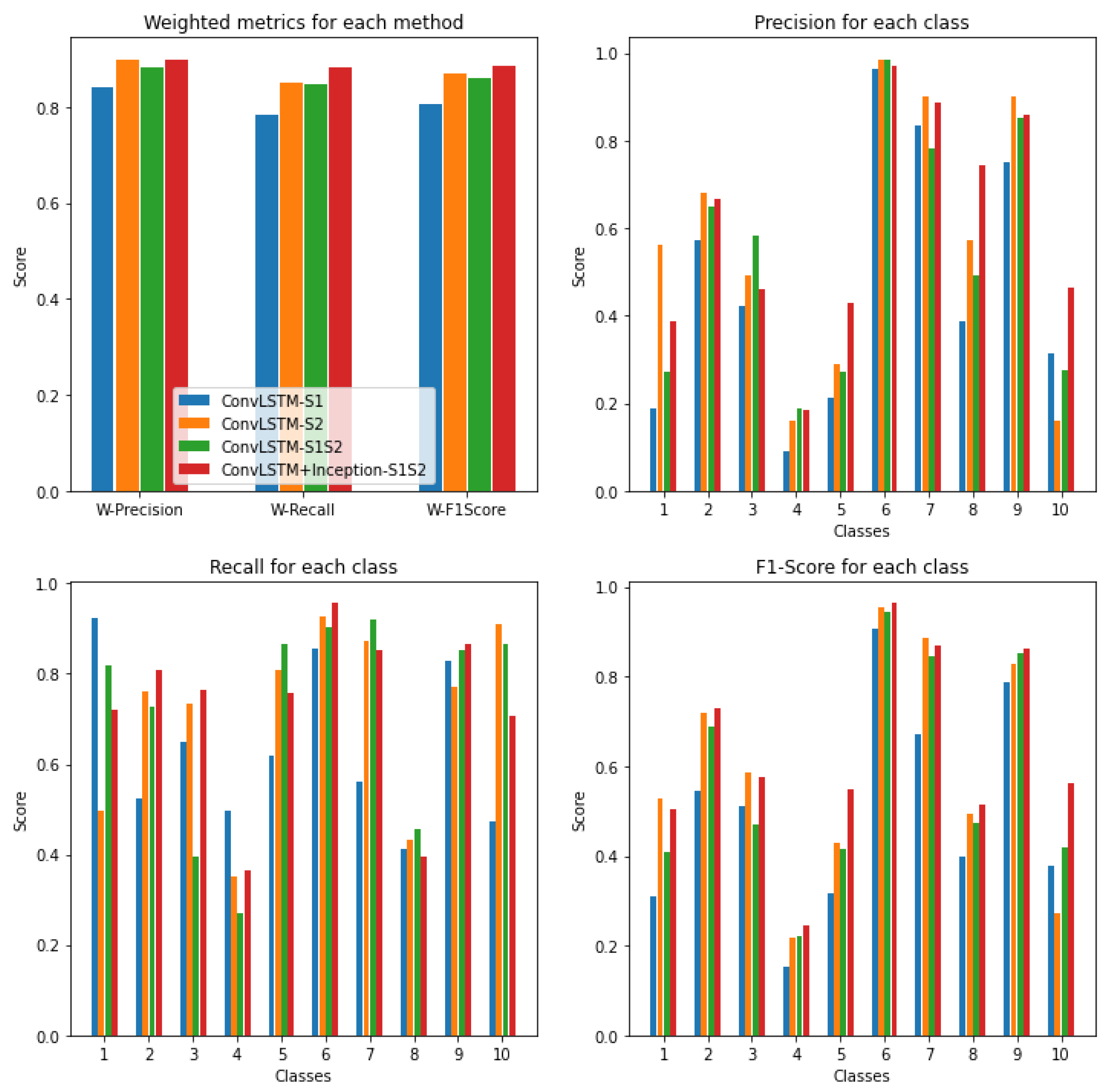

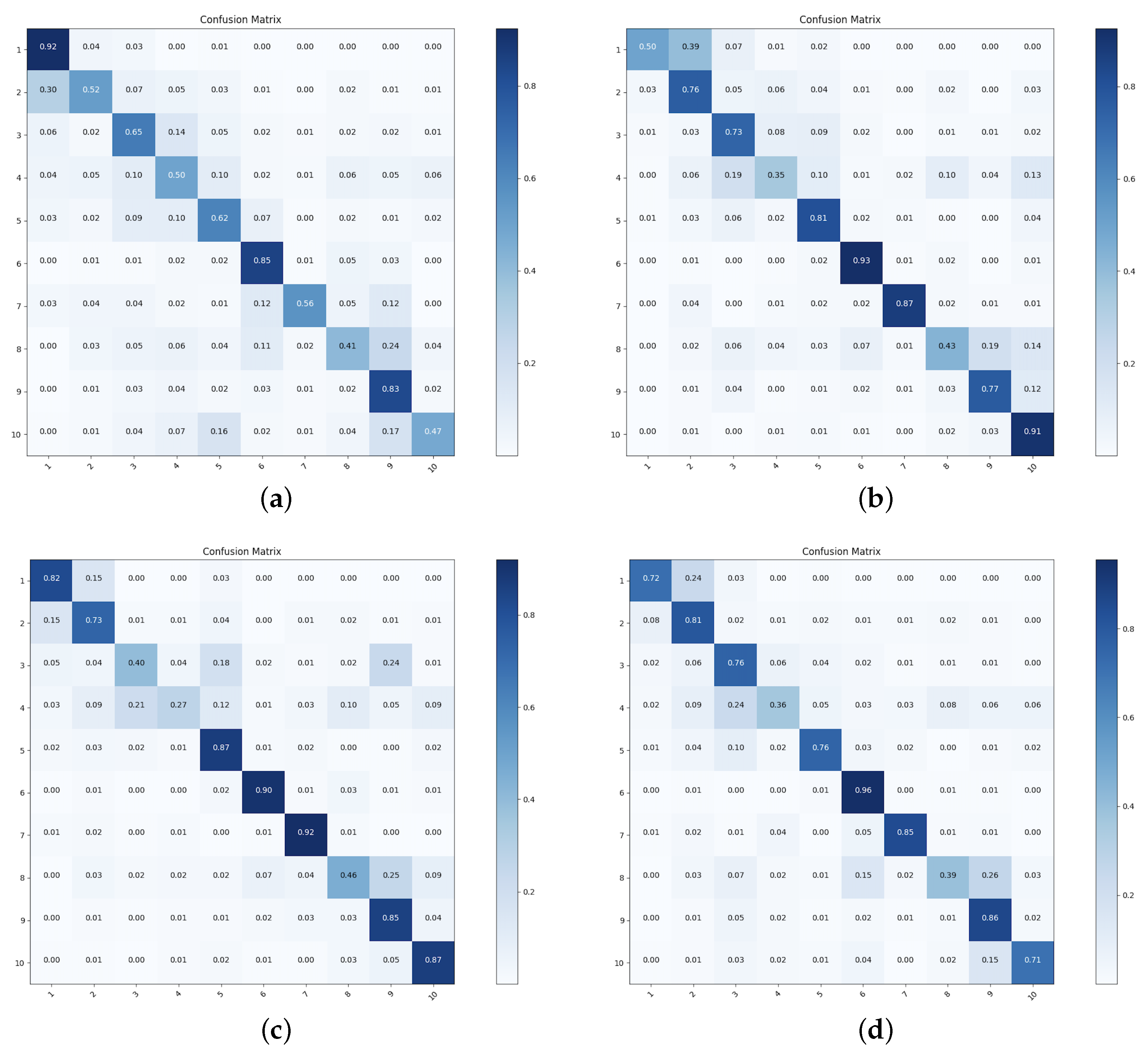

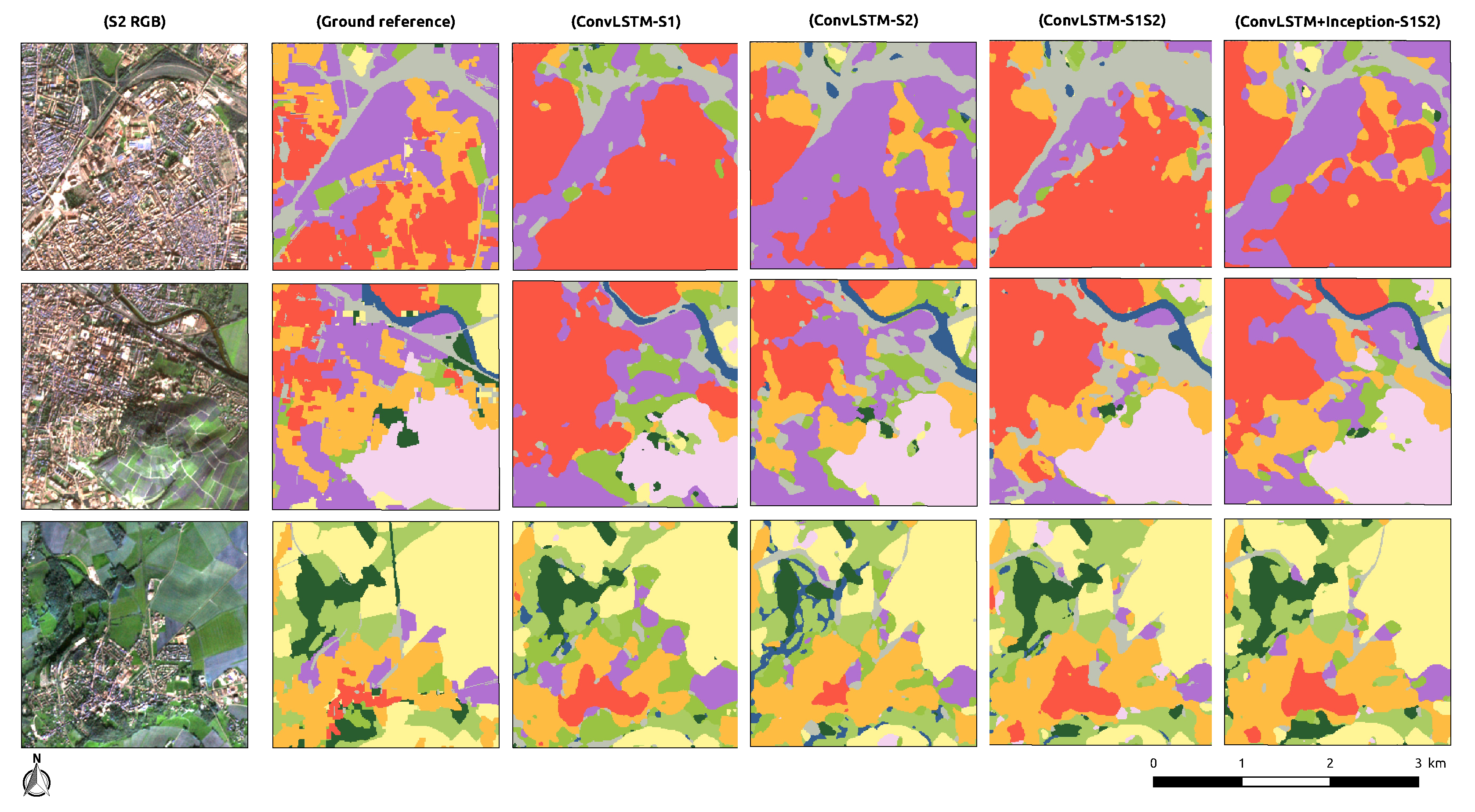

4.2. 10 Classes Results

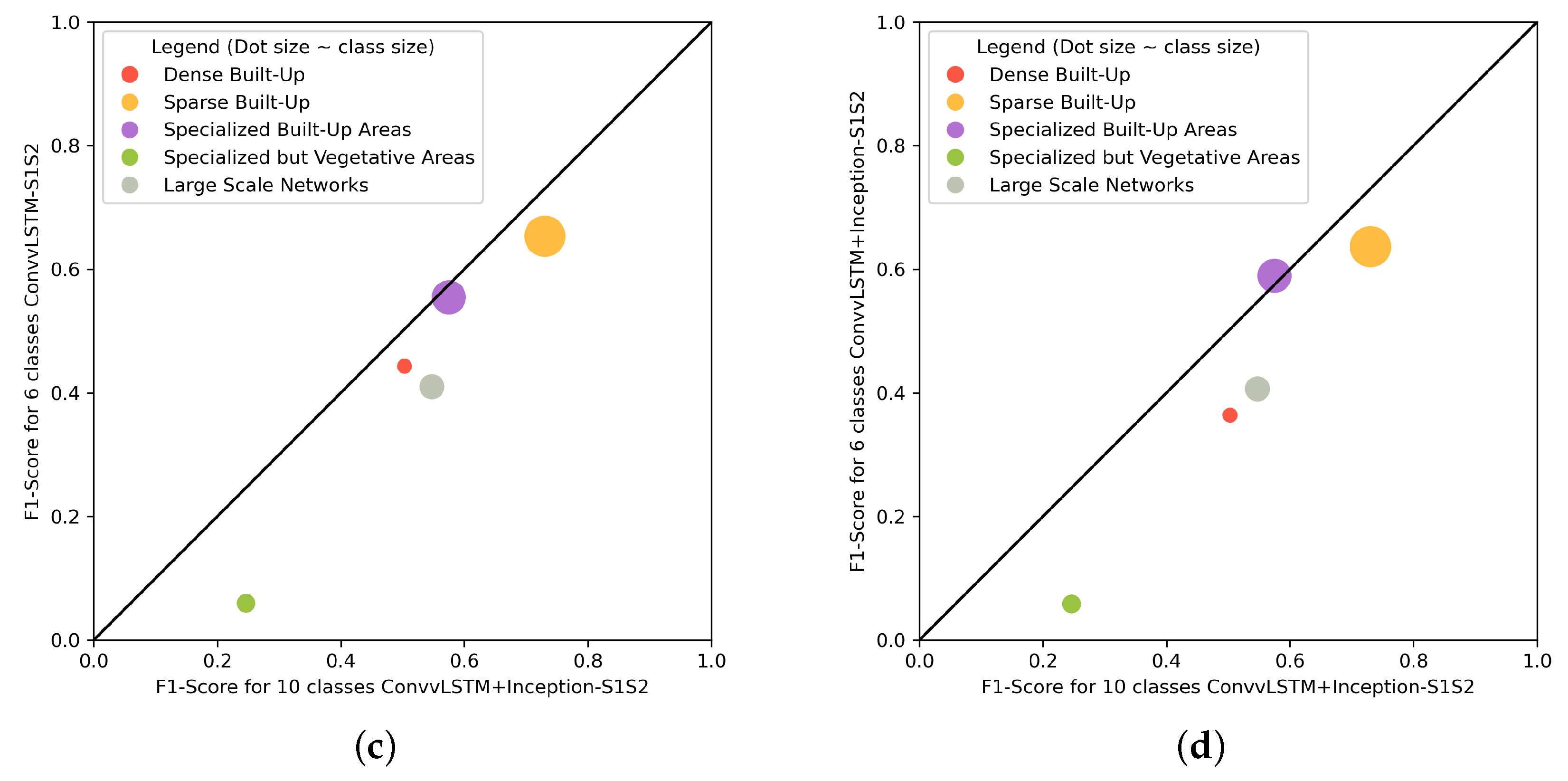

4.3. UFs Analysis

5. Discussion

5.1. Application on UF Mapping

5.2. Comparison with a State-of-the-Art LULC Product

5.3. Network Performance

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| GRD | Ground Range Detected |
| IGN | Institut Géographique National |
| LR | Learning Rate |
| LULC | Land Use/Land Cover |
| SLC | Single Look Complex |
| UF | Urban Fabrics |

Appendix A


| Days Gap for Two Consecutive Months | Number of Patches |
|---|---|
| 15 | 6560 |
| 16 | 5890 |
| 17 | 5890 |
| 18 | 4960 |
| 19 | 3178 |
| 20 | 3178 |

| MultiSenGE Semantic Classes | MultiSenGE Distribution |
|---|---|
| Dense Built-Up (1) | 0.37% |
| Sparse Built-Up (2) | 3.64% |
| Specialized Built-Up Areas (3) | 2.17% |
| Specialized but Vegetative Areas (4) | 0.44% |
| Large Scale Networks (5) | 0.91% |
| Arable Lands (6) | 38.73% |
| Vineyards (7) | 0.98% |
| Orchards (8) | 0.15% |
| Grasslands (9) | 18.87% |
| Groves, Hedges (10) | 0.01% |
| Forests (11) | 32.52% |
| Open Spaces, Mineral (12) | 0.01% |
| Wetlands (13) | 0.31% |
| Water Surfaces (14) | 0.89% |
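The distribution percentages are presumably pixel shares over all labelled patches. The sketch below shows that computation under stated assumptions: label patches are nested Python lists of integer class IDs and there is no no-data value to exclude. The function name `class_distribution` is hypothetical and not part of the MultiSenGE release.

```python
from collections import Counter


def class_distribution(patches):
    """Percentage of pixels per class ID over a collection of label patches.

    Each patch is a list of rows of integer class IDs. Percentages are
    computed over every pixel passed in, so they sum to 100. No no-data
    value is filtered out (an assumption of this sketch).
    """
    counts = Counter(label for patch in patches for row in patch for label in row)
    total = sum(counts.values())
    # Sort by class ID so the output reads like the table above.
    return {label: 100.0 * n / total for label, n in sorted(counts.items())}


if __name__ == "__main__":
    # Two tiny hypothetical 2x3 patches, not real MultiSenGE data.
    toy = [[[6, 6, 11], [9, 6, 11]], [[2, 6, 6], [11, 9, 1]]]
    for label, pct in class_distribution(toy).items():
        print(f"class {label}: {pct:.2f}%")
```

Applied to the table itself, the five urban classes (1 to 5) add up to 7.53% of the labels, against 38.73% for Arable Lands alone, which gives a sense of how imbalanced the urban classes are.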

| MultiSenGE Semantic Classes | 10 Classes | 6 Classes |
|---|---|---|
| Dense Built-Up (1) | Dense Built-Up (1) | Dense Built-Up (1) |
| Sparse Built-Up (2) | Sparse Built-Up (2) | Sparse Built-Up (2) |
| Specialized Built-Up Areas (3) | Specialized Built-Up Areas (3) | Specialized Built-Up Areas (3) |
| Specialized but Vegetative Areas (4) | Specialized but Vegetative Areas (4) | Specialized but Vegetative Areas (4) |
| Large Scale Networks (5) | Large Scale Networks (5) | Large Scale Networks (5) |
| Arable Lands (6) | Arable Lands (6) | Non-urban areas (6) |
| Vineyards (7) | Vineyards and Orchards (7) | Non-urban areas (6) |
| Orchards (8) | Vineyards and Orchards (7) | Non-urban areas (6) |
| Grasslands (9) | Grasslands (8) | Non-urban areas (6) |
| Groves, Hedges (10) | Forests and semi-natural areas (9) | Non-urban areas (6) |
| Forests (11) | Forests and semi-natural areas (9) | Non-urban areas (6) |
| Open Spaces, Mineral (12) | Forests and semi-natural areas (9) | Non-urban areas (6) |
| Wetlands (13) | Water Surfaces (10) | Non-urban areas (6) |
| Water Surfaces (14) | Water Surfaces (10) | Non-urban areas (6) |
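The class grouping above can be expressed as two lookup tables from MultiSenGE IDs (1 to 14) to the 10-class and 6-class schemes. This is a minimal sketch, assuming label patches stored as nested Python lists of integer IDs; the names `TO_10_CLASSES`, `TO_6_CLASSES` and `remap_patch` are hypothetical. Merged cells of the grouping table are read as spanning the rows beneath them (for example, Orchards (8) joins Vineyards and Orchards (7)).

```python
# Hypothetical lookup tables read from the class-grouping table:
# MultiSenGE class ID (1-14) -> class ID in the 10-class scheme.
TO_10_CLASSES = {
    1: 1, 2: 2, 3: 3, 4: 4, 5: 5,  # urban classes kept unchanged
    6: 6,                          # arable lands
    7: 7, 8: 7,                    # vineyards + orchards
    9: 8,                          # grasslands
    10: 9, 11: 9, 12: 9,           # groves/hedges, forests, open spaces -> forests and semi-natural areas
    13: 10, 14: 10,                # wetlands + water surfaces -> water surfaces
}

# In the 6-class scheme, every non-urban class (6-14) collapses into class 6.
TO_6_CLASSES = {c: (c if c <= 5 else 6) for c in range(1, 15)}


def remap_patch(patch, lookup):
    """Return a new label patch (list of rows) with every class ID remapped.

    A KeyError is raised for IDs outside 1-14 (e.g. a no-data value), so
    unexpected labels are not silently merged into another class.
    """
    return [[lookup[label] for label in row] for row in patch]


if __name__ == "__main__":
    patch = [[1, 7, 8], [11, 13, 14]]
    print(remap_patch(patch, TO_10_CLASSES))
    print(remap_patch(patch, TO_6_CLASSES))
```

With NumPy label arrays, the same mapping is usually applied by indexing a lookup array (`lut[labels]`, where `lut[i]` holds the target ID for class `i`), which avoids a Python-level loop over pixels.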

| Name | Sensors | Method | Number of Classes |
|---|---|---|---|
| ConvLSTM-S1 | Sentinel-1 | ConvLSTM | 6 classes |
| ConvLSTM-S2 | Sentinel-2 | ConvLSTM | 6 classes |
| ConvLSTM-S1S2 | Sentinel-1 and Sentinel-2 | ConvLSTM | 6 classes |
| ConvLSTM-S1 | Sentinel-1 | ConvLSTM | 10 classes |
| ConvLSTM-S2 | Sentinel-2 | ConvLSTM | 10 classes |
| ConvLSTM-S1S2 | Sentinel-1 and Sentinel-2 | ConvLSTM | 10 classes |
| ConvLSTM+Inception-S1S2 | Sentinel-1 and Sentinel-2 | ConvLSTM and Inception | 6 classes |
| ConvLSTM+Inception-S1S2 | Sentinel-1 and Sentinel-2 | ConvLSTM and Inception | 10 classes |

| Class | ConvLSTM-S1 Precision | ConvLSTM-S1 Recall | ConvLSTM-S1 F1 | ConvLSTM-S2 Precision | ConvLSTM-S2 Recall | ConvLSTM-S2 F1 |
|---|---|---|---|---|---|---|
| Class 1 | 0.1335 | 0.9397 | 0.2337 | 0.2579 | 0.8704 | 0.3980 |
| Class 2 | 0.4476 | 0.3809 | 0.4116 | 0.5575 | 0.7268 | 0.6310 |
| Class 3 | 0.3560 | 0.5813 | 0.4416 | 0.3100 | 0.7763 | 0.4431 |
| Class 4 | 0.0775 | 0.5072 | 0.1344 | 0.0528 | 0.4858 | 0.0953 |
| Class 5 | 0.1313 | 0.5516 | 0.2122 | 0.2137 | 0.7995 | 0.3372 |
| Class 6 | 0.9937 | 0.8937 | 0.9410 | 0.9979 | 0.8663 | 0.9274 |
| W-Avg | 0.9469 | 0.8661 | 0.9001 | 0.9544 | 0.8574 | 0.8958 |

| Class | ConvLSTM-S1S2 Precision | ConvLSTM-S1S2 Recall | ConvLSTM-S1S2 F1 | ConvLSTM+Inception-S1S2 Precision | ConvLSTM+Inception-S1S2 Recall | ConvLSTM+Inception-S1S2 F1 |
|---|---|---|---|---|---|---|
| Class 1 | 0.3122 | 0.7624 | 0.4430 | 0.2308 | 0.8599 | 0.3639 |
| Class 2 | 0.5671 | 0.7706 | 0.6533 | 0.6260 | 0.6472 | 0.6364 |
| Class 3 | 0.4654 | 0.6859 | 0.5545 | 0.4794 | 0.7647 | 0.5894 |
| Class 4 | 0.0314 | 0.5739 | 0.0595 | 0.0312 | 0.4461 | 0.0584 |
| Class 5 | 0.2745 | 0.8085 | 0.4099 | 0.2736 | 0.7898 | 0.4064 |
| Class 6 | 0.9971 | 0.8446 | 0.9145 | 0.9965 | 0.8719 | 0.9301 |
| W-Avg | 0.9578 | 0.8369 | 0.8875 | 0.9591 | 0.8596 | 0.9018 |
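Each per-class F1 in these tables is the harmonic mean of the corresponding precision and recall, F1 = 2PR/(P + R). For Class 1 of ConvLSTM-S1, 2 × 0.1335 × 0.9397 / (0.1335 + 0.9397) is about 0.234, matching the reported 0.2337 up to the rounding of the inputs. The W-Avg F1 of ConvLSTM-S1 (0.9001) is lower than the harmonic mean of its W-Avg precision and recall (about 0.905), which is consistent with the W-Avg row being a support-weighted mean of per-class scores (the behaviour of scikit-learn's `average="weighted"`). That reading is an inference from the numbers, and the per-class supports are not listed, so the weights below are hypothetical; the function names are also illustrative.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def weighted_average(scores, supports):
    """Support-weighted mean of per-class scores (the assumed 'W-Avg' convention)."""
    total = sum(supports)
    return sum(score * n for score, n in zip(scores, supports)) / total


if __name__ == "__main__":
    # Class 1, ConvLSTM-S1, 6 classes (reported F1 = 0.2337).
    print(f"{f1_from_pr(0.1335, 0.9397):.4f}")
    # Harmonic mean of the ConvLSTM-S1 W-Avg precision and recall
    # (reported W-Avg F1 = 0.9001, so the two averaging orders differ).
    print(f"{f1_from_pr(0.9469, 0.8661):.4f}")
    # Hypothetical supports: a rare class with F1 0.5, a frequent one with F1 0.95.
    print(f"{weighted_average([0.5, 0.95], [100, 900]):.4f}")
```

Because the weights follow class frequency, the W-Avg row is dominated by the non-urban class and can stay high even when the urban classes (1 to 5) score poorly.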

| Method | Cohen’s Kappa |
|---|---|
| ConvLSTM-S1 | 0.3929 |
| ConvLSTM-S2 | 0.4223 |
| ConvLSTM-S1S2 | 0.3852 |
| ConvLSTM+Inception-S1S2 | 0.4186 |
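Cohen's kappa compares the observed agreement p_o with the agreement p_e expected by chance from the class marginals, κ = (p_o - p_e)/(1 - p_e). A minimal sketch from a confusion matrix follows; the function name and both matrices are hypothetical, not taken from the study. The second matrix illustrates one plausible reason why, in the 6-class results, κ stays near 0.4 while the W-Avg F1 is around 0.9: when one class (here Non-urban areas) dominates, chance agreement is high and κ discounts it.

```python
def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix.

    Rows are reference classes, columns are predicted classes. Raises
    ZeroDivisionError in the degenerate case where chance agreement is 1
    (a single class in both reference and prediction).
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    # Observed agreement: share of pixels on the diagonal.
    p_o = sum(confusion[i][i] for i in range(k)) / n
    # Chance agreement from reference (row) and prediction (column) totals.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    balanced = [[20, 5], [10, 15]]  # overall accuracy 0.70
    dominant = [[90, 5], [3, 2]]    # overall accuracy 0.92, one class dominates
    print(round(cohen_kappa(balanced), 3))  # 0.4
    print(round(cohen_kappa(dominant), 3))  # 0.292
```

In the dominant-class example, 92% of pixels are correct, yet κ is only about 0.29 because most of that agreement would be expected by chance.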

| Class | ConvLSTM-S1 Precision | ConvLSTM-S1 Recall | ConvLSTM-S1 F1 | ConvLSTM-S2 Precision | ConvLSTM-S2 Recall | ConvLSTM-S2 F1 |
|---|---|---|---|---|---|---|
| Class 1 | 0.1872 | 0.9247 | 0.3114 | 0.5629 | 0.4968 | 0.5278 |
| Class 2 | 0.5718 | 0.5224 | 0.5460 | 0.6814 | 0.7625 | 0.7197 |
| Class 3 | 0.4208 | 0.6480 | 0.5103 | 0.4909 | 0.7329 | 0.5880 |
| Class 4 | 0.0892 | 0.4973 | 0.1512 | 0.1597 | 0.3499 | 0.2193 |
| Class 5 | 0.2142 | 0.6183 | 0.3182 | 0.2914 | 0.8076 | 0.4283 |
| Class 6 | 0.9649 | 0.8540 | 0.9060 | 0.9838 | 0.9263 | 0.9542 |
| Class 7 | 0.8361 | 0.5625 | 0.6726 | 0.9003 | 0.8737 | 0.8868 |
| Class 8 | 0.3890 | 0.4111 | 0.3997 | 0.5720 | 0.4336 | 0.4933 |
| Class 9 | 0.7515 | 0.8280 | 0.7879 | 0.9002 | 0.7697 | 0.8299 |
| Class 10 | 0.3143 | 0.4748 | 0.3782 | 0.1611 | 0.9106 | 0.2737 |
| W-Avg | 0.8422 | 0.7836 | 0.8055 | 0.9000 | 0.8517 | 0.8696 |

| Class | ConvLSTM-S1S2 Precision | ConvLSTM-S1S2 Recall | ConvLSTM-S1S2 F1 | ConvLSTM+Inception-S1S2 Precision | ConvLSTM+Inception-S1S2 Recall | ConvLSTM+Inception-S1S2 F1 |
|---|---|---|---|---|---|---|
| Class 1 | 0.2736 | 0.8199 | 0.4103 | 0.3870 | 0.7190 | 0.5031 |
| Class 2 | 0.6498 | 0.7287 | 0.6870 | 0.6672 | 0.8066 | 0.7303 |
| Class 3 | 0.5840 | 0.3955 | 0.4716 | 0.4612 | 0.7632 | 0.5749 |
| Class 4 | 0.1885 | 0.2692 | 0.2217 | 0.1863 | 0.3643 | 0.2465 |
| Class 5 | 0.2739 | 0.8666 | 0.4163 | 0.4290 | 0.7560 | 0.5474 |
| Class 6 | 0.9862 | 0.9033 | 0.9430 | 0.9718 | 0.9558 | 0.9637 |
| Class 7 | 0.7822 | 0.9203 | 0.8457 | 0.8869 | 0.8512 | 0.8687 |
| Class 8 | 0.4914 | 0.4555 | 0.4728 | 0.7422 | 0.3949 | 0.5155 |
| Class 9 | 0.8516 | 0.8533 | 0.8524 | 0.8585 | 0.8643 | 0.8614 |
| Class 10 | 0.2759 | 0.8660 | 0.4185 | 0.4654 | 0.7074 | 0.5614 |
| W-Avg | 0.8825 | 0.8482 | 0.8600 | 0.8977 | 0.8831 | 0.8851 |

| Method | Cohen’s Kappa |
|---|---|
| ConvLSTM-S1 | 0.6422 |
| ConvLSTM-S2 | 0.7445 |
| ConvLSTM-S1S2 | 0.7482 |
| ConvLSTM+Inception-S1S2 | 0.7945 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wenger, R.; Puissant, A.; Weber, J.; Idoumghar, L.; Forestier, G. Multimodal and Multitemporal Land Use/Land Cover Semantic Segmentation on Sentinel-1 and Sentinel-2 Imagery: An Application on a MultiSenGE Dataset. Remote Sens. 2023, 15, 151. https://doi.org/10.3390/rs15010151