A TIR-Visible Automatic Registration and Geometric Correction Method for SDGSAT-1 Thermal Infrared Image Based on Modified RIFT

Abstract

1. Introduction

- Homomorphic filtering is applied before the feature point extraction stage to suppress noise and enhance the texture details of TIR images.

- In the descriptor construction stage, a novel binary pattern string is proposed, which is more robust to nonlinear radiation distortion (NRD) than the maximum index map (MIM) of the original RIFT. The binary pattern string can express a log-Gabor convolution sequence while reducing computational complexity.

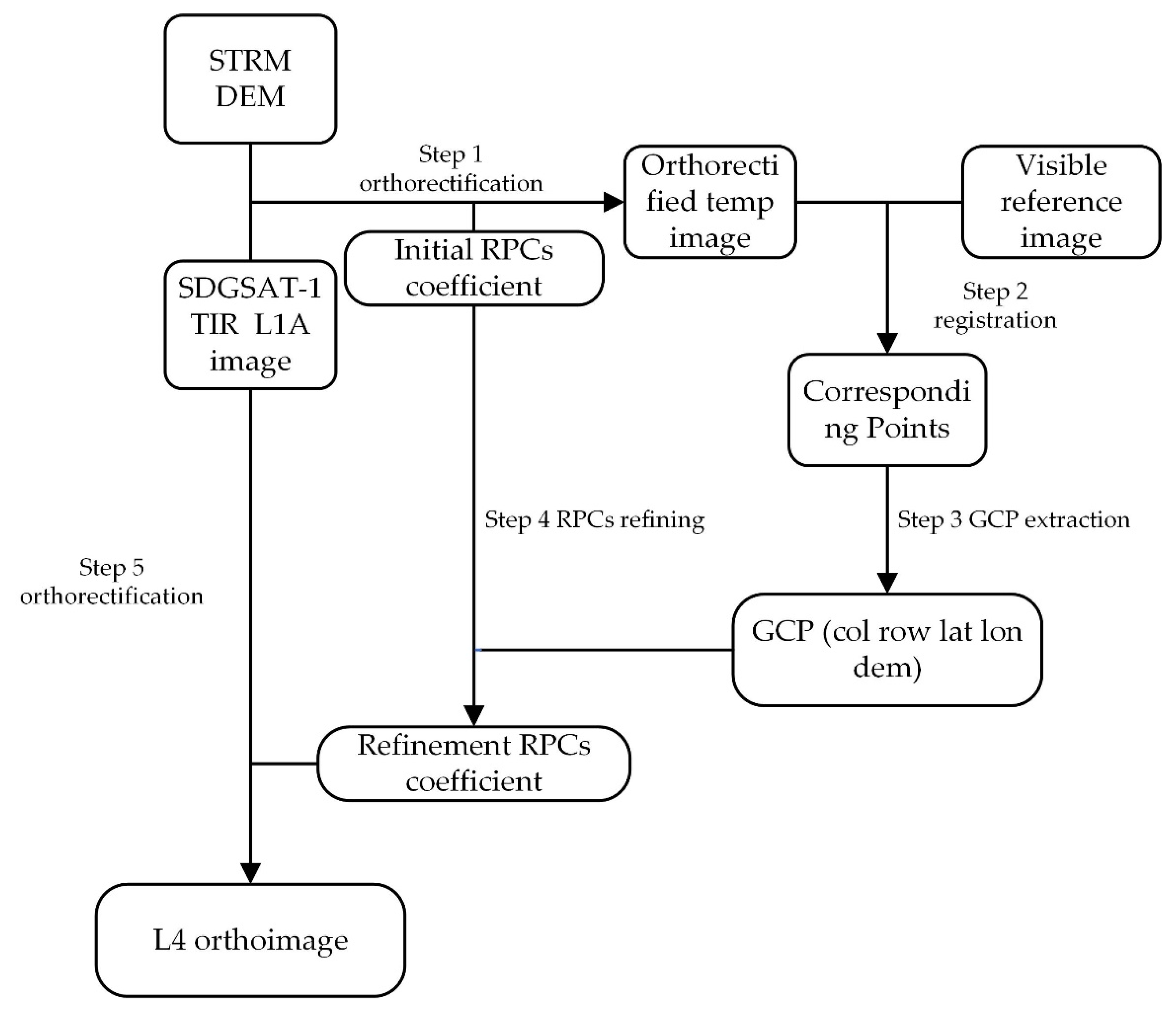

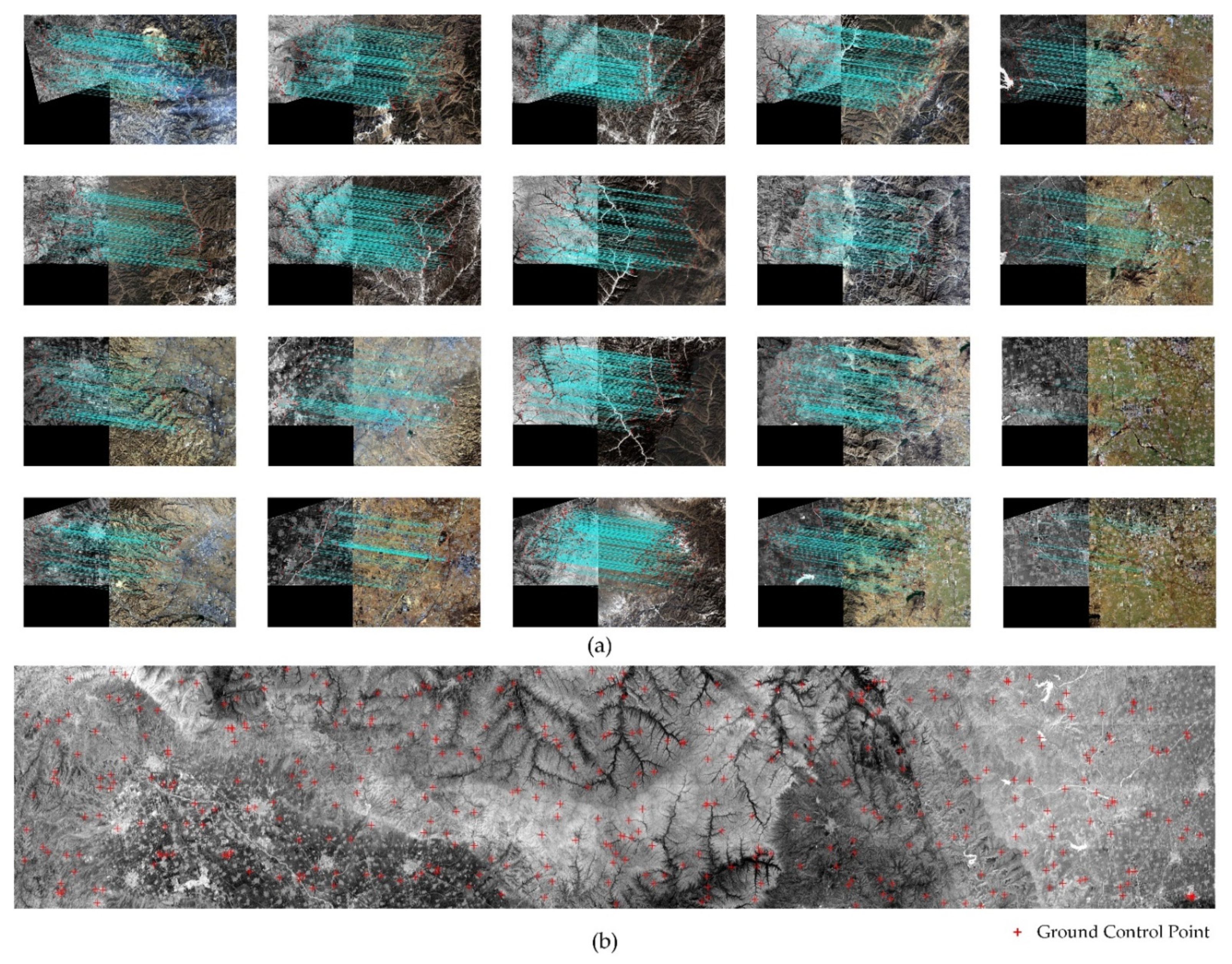

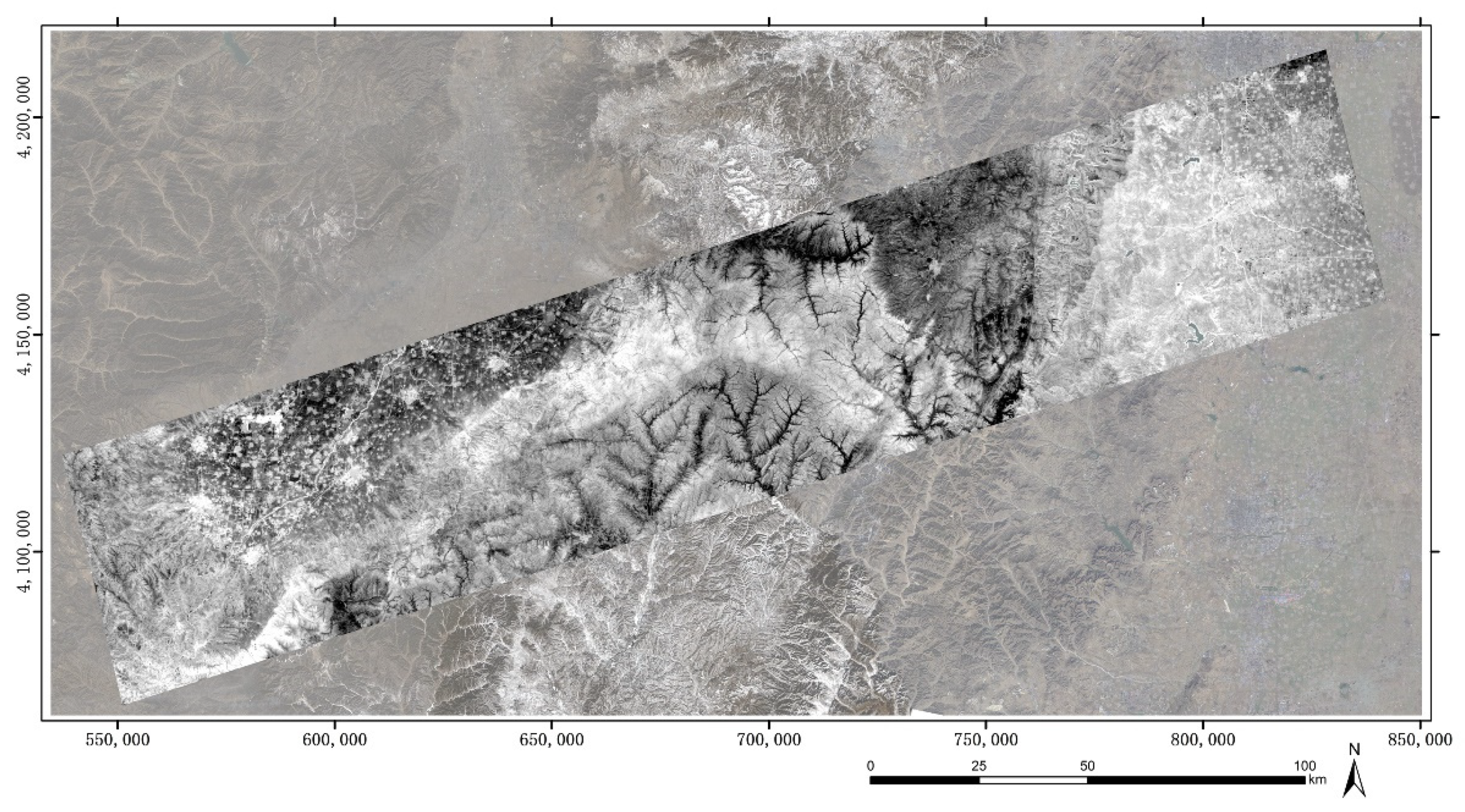

- A two-step orthorectification method from Level 1A (L1A) to L4 is designed. In the first step, the L1A TIR image is orthorectified using its rational polynomial coefficients (RPCs) and a digital elevation model (DEM). The orthorectified image is then registered with the reference visible image using the modified RIFT. To obtain ground control points (GCPs), the matched points in the orthorectified image are remapped back to L1A image coordinates, and the corresponding points in the reference visible image are mapped to geographic coordinates. The RPCs are then refined with these GCPs. In the second step, the L1A TIR image is orthorectified again with the refined RPCs and the DEM, producing the L4 product.
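The homomorphic filtering step in the first contribution can be illustrated with the textbook frequency-domain formulation: take the logarithm so that illumination and reflectance become additive, apply a high-emphasis filter that attenuates low frequencies and boosts high ones, then exponentiate. The Python/NumPy sketch below assumes a Gaussian high-emphasis transfer function; the function name `homomorphic_filter` and the parameters `d0`, `gamma_l`, `gamma_h` and `c` are illustrative choices, not the filter or settings used by the authors.

```python
import numpy as np


def homomorphic_filter(img, d0=30.0, gamma_l=0.5, gamma_h=2.0, c=1.0):
    """Frequency-domain homomorphic filtering (illustrative sketch).

    img      : 2-D array of non-negative intensities (e.g. TIR digital numbers).
    d0       : cut-off distance of the Gaussian high-emphasis filter, in frequency bins.
    gamma_l  : gain applied at zero frequency (<1 suppresses slow background).
    gamma_h  : gain approached at high frequencies (>1 boosts texture).
    c        : steepness of the transition between gamma_l and gamma_h.
    All default values are assumptions, not the paper's settings.
    """
    img = np.asarray(img, dtype=np.float64)
    rows, cols = img.shape

    # 1. Log transform: multiplicative illumination * reflectance becomes additive.
    log_img = np.log1p(img)

    # 2. Centred 2-D spectrum (zero frequency moved to index [rows // 2, cols // 2]).
    spectrum = np.fft.fftshift(np.fft.fft2(log_img))

    # 3. Gaussian high-emphasis transfer function H(u, v) on the same centred grid.
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    V, U = np.meshgrid(v, u)  # both arrays have shape (rows, cols)
    dist_sq = U.astype(np.float64) ** 2 + V.astype(np.float64) ** 2
    h = (gamma_h - gamma_l) * (1.0 - np.exp(-c * dist_sq / d0**2)) + gamma_l

    # 4. Filter, return to the spatial domain and undo the log transform.
    filtered = np.real(np.fft.ifft2(np.fft.ifftshift(h * spectrum)))
    out = np.expm1(filtered)

    # 5. Min-max stretch to [0, 1] so later stages see a fixed range.
    out -= out.min()
    span = out.max()
    return out / span if span > 0 else out
```

Setting `gamma_l = gamma_h = 1` makes the transfer function identically 1, so the output is just the min-max-stretched input, which is a convenient sanity check. With `gamma_l < 1 < gamma_h`, slowly varying radiometric background is damped while fine texture is emphasized.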
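To make the contrast between RIFT's maximum index map and a binary pattern string concrete, the sketch below computes, for every pixel, the log-Gabor amplitude summed over scales for each orientation (the sequence the MIM is built from), takes its argmax as the MIM, and encodes the same sequence as a bit string. The encoding rule (bit o is set when orientation o's amplitude is at least the mean over orientations) and the patch-histogram descriptor are hypothetical stand-ins chosen for illustration; the paper's exact binary pattern definition is not given in this section. The filter-bank defaults follow commonly used phase-congruency settings and are also assumptions.

```python
import numpy as np


def log_gabor_amplitudes(img, n_scales=4, n_orients=6, min_wavelength=3.0,
                         mult=2.1, sigma_onf=0.55):
    """Log-Gabor amplitude summed over scales, one map per orientation.

    Returns an array of shape (n_orients, H, W). Defaults are assumptions.
    """
    img = np.asarray(img, dtype=np.float64)
    rows, cols = img.shape
    spectrum = np.fft.fft2(img)
    fy = np.fft.fftfreq(rows)[:, None]          # vertical frequency (cycles/pixel)
    fx = np.fft.fftfreq(cols)[None, :]          # horizontal frequency (cycles/pixel)
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0                          # avoid log(0); DC is zeroed below
    theta = np.arctan2(fy, fx)
    sigma_theta = np.pi / n_orients / 1.2       # angular spread (assumed)

    amps = np.zeros((n_orients, rows, cols))
    for o in range(n_orients):
        angle = o * np.pi / n_orients
        # Wrapped angular distance: each filter is concentrated around one
        # direction in the frequency plane, so its spatial response is complex.
        d_theta = np.arctan2(np.sin(theta - angle), np.cos(theta - angle))
        angular = np.exp(-d_theta**2 / (2.0 * sigma_theta**2))
        for s in range(n_scales):
            f0 = 1.0 / (min_wavelength * mult**s)   # centre frequency of scale s
            radial = np.exp(-np.log(radius / f0) ** 2 / (2.0 * np.log(sigma_onf) ** 2))
            radial[0, 0] = 0.0                      # log-Gabor filters have no DC gain
            response = np.fft.ifft2(spectrum * radial * angular)
            amps[o] += np.abs(response)             # amplitude of even + odd parts
    return amps


def maximum_index_map(amps):
    """RIFT-style MIM: index of the orientation with the largest summed amplitude."""
    return np.argmax(amps, axis=0)


def binary_pattern_code(amps):
    """Hypothetical binary pattern string packed into one integer per pixel:
    bit o is 1 when orientation o's amplitude is >= the mean over orientations."""
    bits = amps >= amps.mean(axis=0, keepdims=True)
    weights = (1 << np.arange(amps.shape[0]))[:, None, None]
    return (bits * weights).sum(axis=0)


def patch_descriptor(codes, row, col, n_orients=6, half=16):
    """Hypothetical descriptor: L2-normalised histogram of codes around (row, col)."""
    patch = codes[max(row - half, 0):row + half, max(col - half, 0):col + half]
    hist = np.bincount(patch.ravel(), minlength=2**n_orients).astype(np.float64)
    return hist / (np.linalg.norm(hist) + 1e-12)
```

In this sketch the MIM keeps only the index of the strongest orientation, whereas the bit string records which orientations are comparatively strong. It therefore retains more of the convolution sequence while still fitting in one small integer per pixel that can be histogrammed cheaply.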
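The RPC refinement model is not specified further here. One common way to refine RPCs with GCPs is image-space bias compensation: project each GCP's geographic coordinates with the original RPCs, then fit an affine transform from those predicted L1A line/sample coordinates to the measured ones. The sketch below assumes this affine model and takes the RPC-predicted coordinates as input, so it does not evaluate the RPC polynomials itself; the function names `fit_affine_bias`, `apply_affine_bias` and `rmse_pixels` are hypothetical.

```python
import numpy as np


def fit_affine_bias(pred_line, pred_samp, meas_line, meas_samp):
    """Fit an image-space affine bias correction by least squares.

    pred_* : L1A line/sample predicted by projecting each GCP with the original RPCs.
    meas_* : L1A line/sample of the same GCP measured by image matching.
    Model (assumed):  meas_line = a0 + a1*pred_line + a2*pred_samp
                      meas_samp = b0 + b1*pred_line + b2*pred_samp
    """
    pred_line = np.asarray(pred_line, dtype=np.float64)
    pred_samp = np.asarray(pred_samp, dtype=np.float64)
    if pred_line.size < 3:
        raise ValueError("an affine correction needs at least 3 GCPs")
    design = np.column_stack([np.ones_like(pred_line), pred_line, pred_samp])
    a, *_ = np.linalg.lstsq(design, np.asarray(meas_line, dtype=np.float64), rcond=None)
    b, *_ = np.linalg.lstsq(design, np.asarray(meas_samp, dtype=np.float64), rcond=None)
    return a, b


def apply_affine_bias(a, b, line, samp):
    """Map RPC-predicted line/sample to bias-corrected L1A line/sample."""
    line = np.asarray(line, dtype=np.float64)
    samp = np.asarray(samp, dtype=np.float64)
    return a[0] + a[1] * line + a[2] * samp, b[0] + b[1] * line + b[2] * samp


def rmse_pixels(d_line, d_samp):
    """Root-mean-square planar residual, in pixels."""
    return float(np.sqrt(np.mean(np.square(d_line) + np.square(d_samp))))
```

Under this assumption, the second orthorectification pass would project each ground cell with the original RPCs and pass the result through `apply_affine_bias` to locate its L1A pixel. An affine model needs at least three well-distributed GCPs; with more, the least-squares fit averages out matching noise, provided outliers were already removed during matching.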

2. Methodology

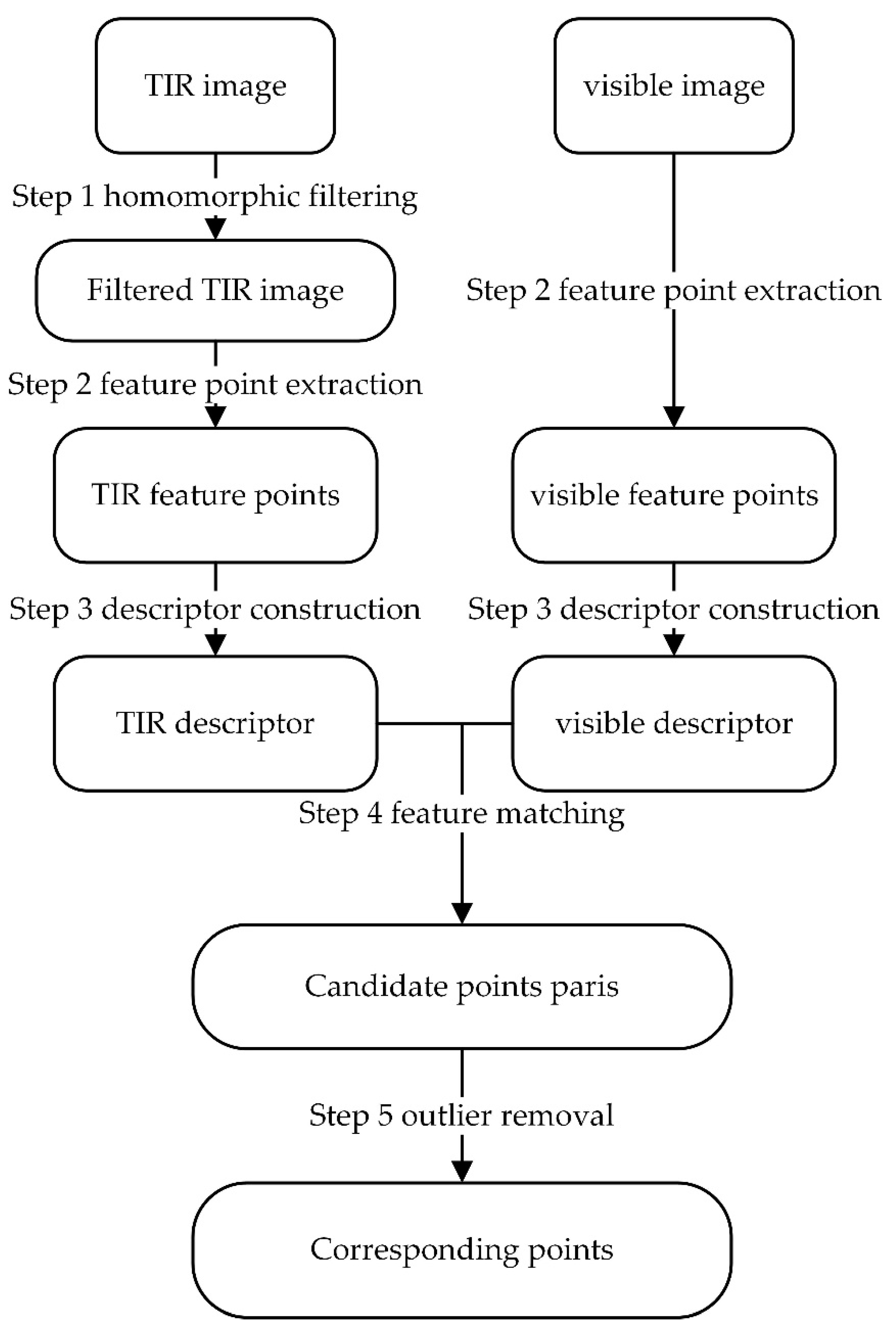

2.1. Modified RIFT

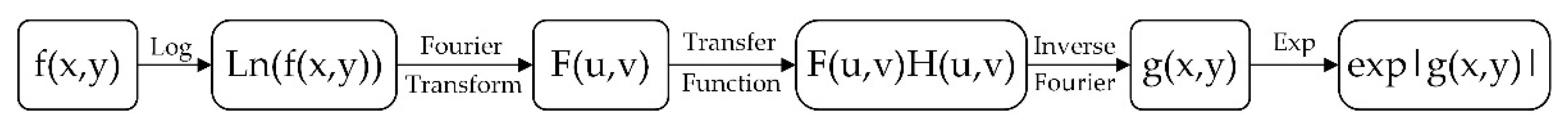

2.1.1. Homomorphic Filtering

2.1.2. Feature Point Extraction

2.1.3. Feature Description

2.1.4. Feature Matching and Outlier Removal

2.2. Orthorectification Framework for SDGSAT-1 TIS Image

3. Experiment and Results

3.1. Experiment Design

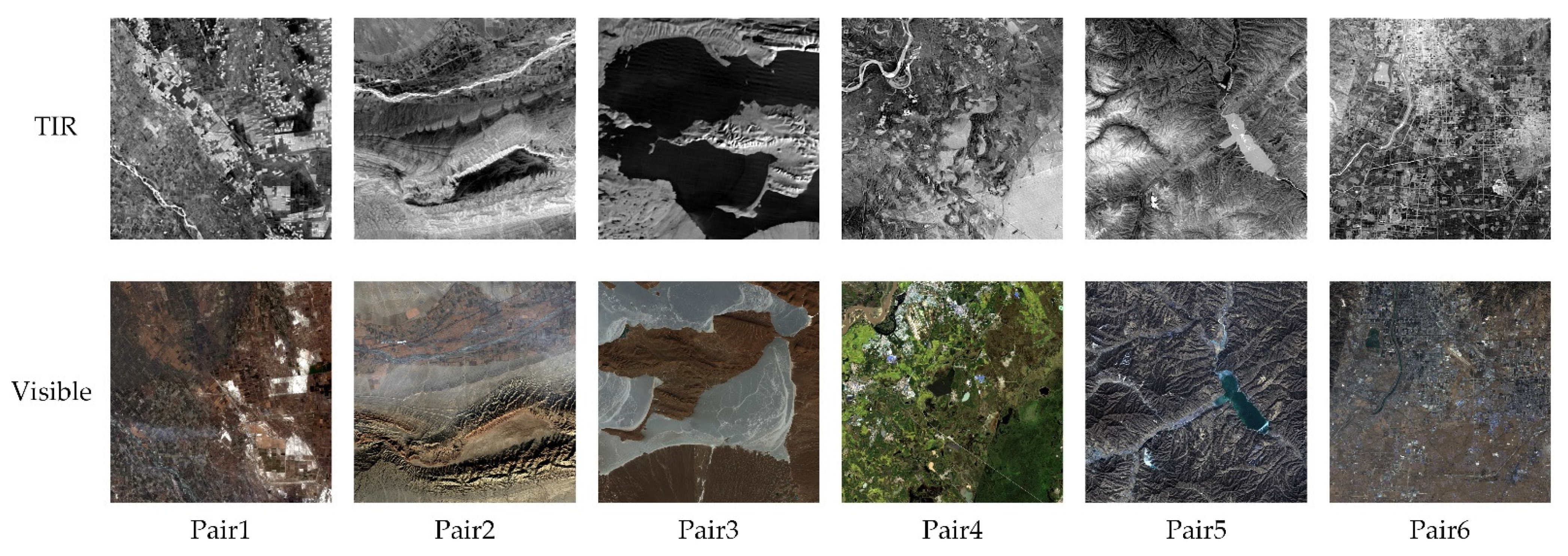

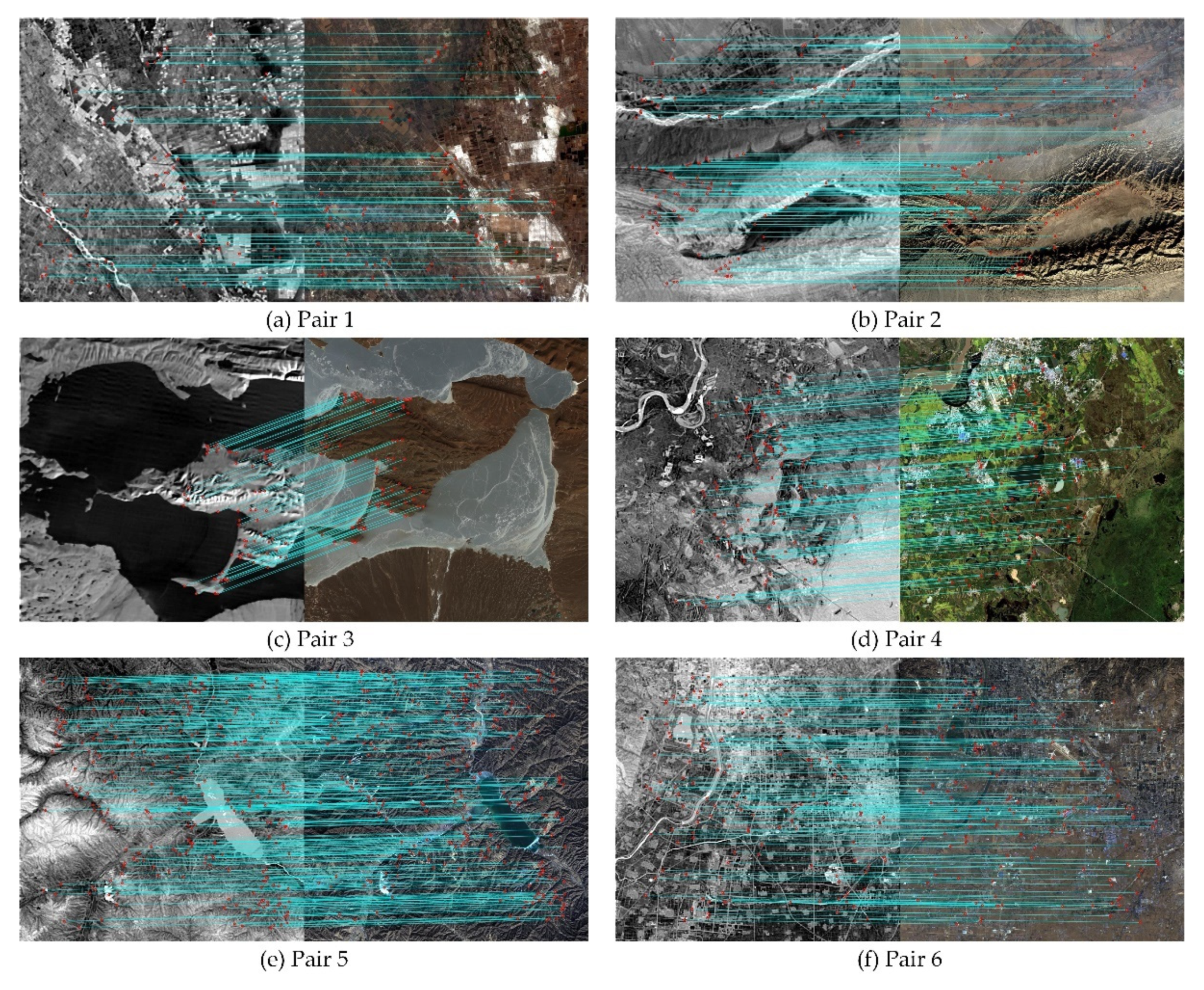

3.1.1. Experiment for Modified RIFT

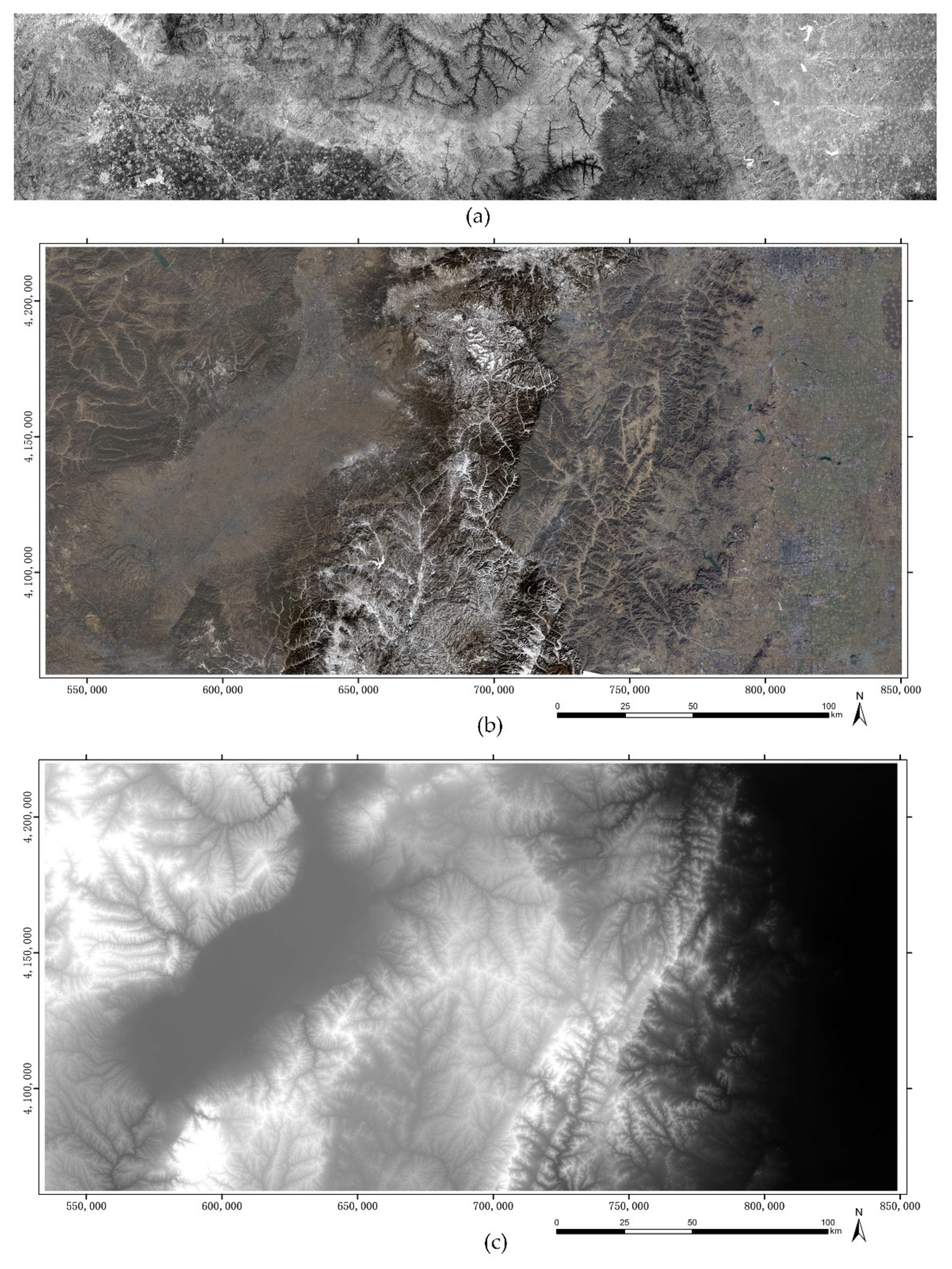

3.1.2. Experiment for Orthorectification of SDGSAT-1 TIS

3.2. Results

3.2.1. Results of Modified RIFT

3.2.2. Results of Orthorectification

4. Discussion

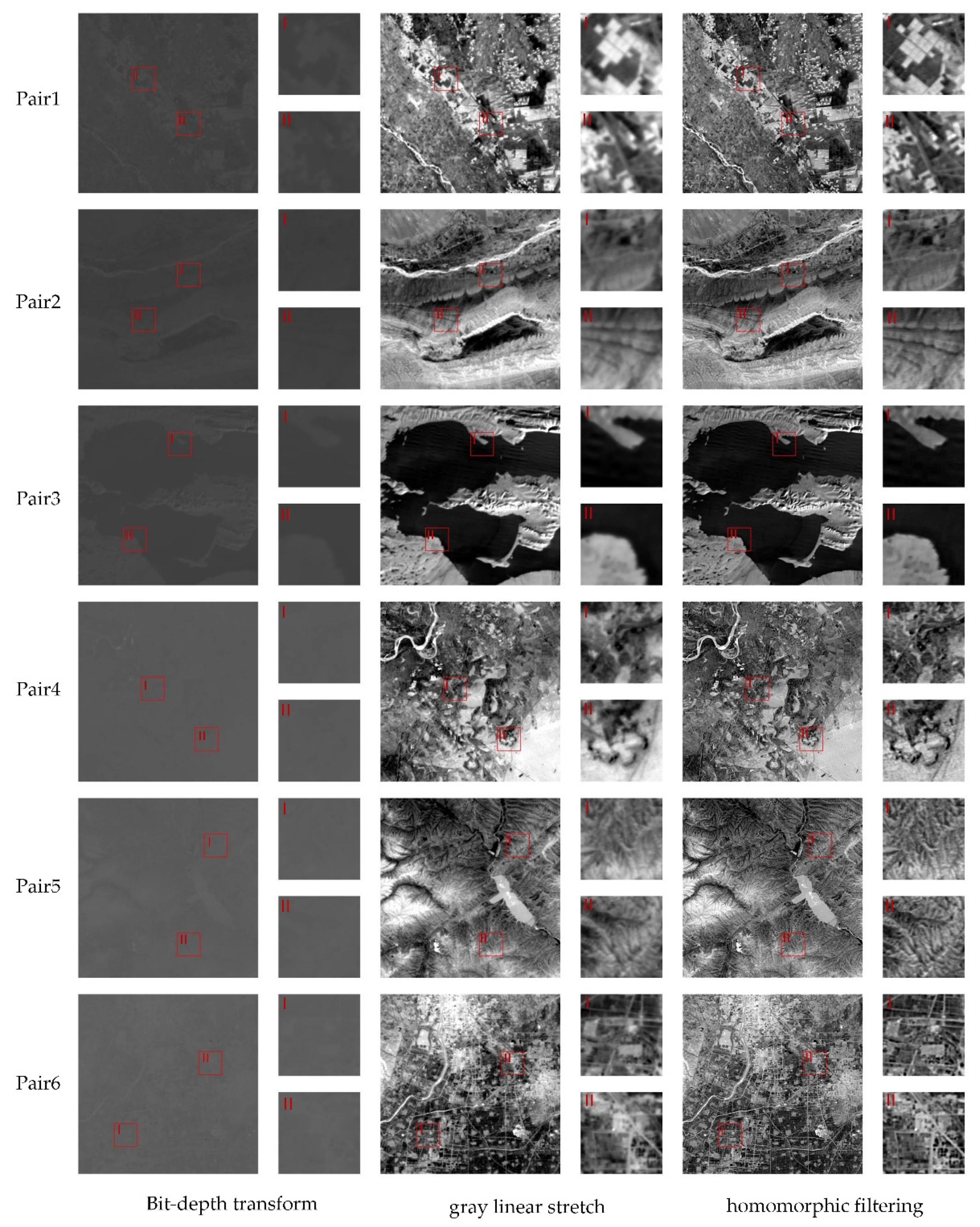

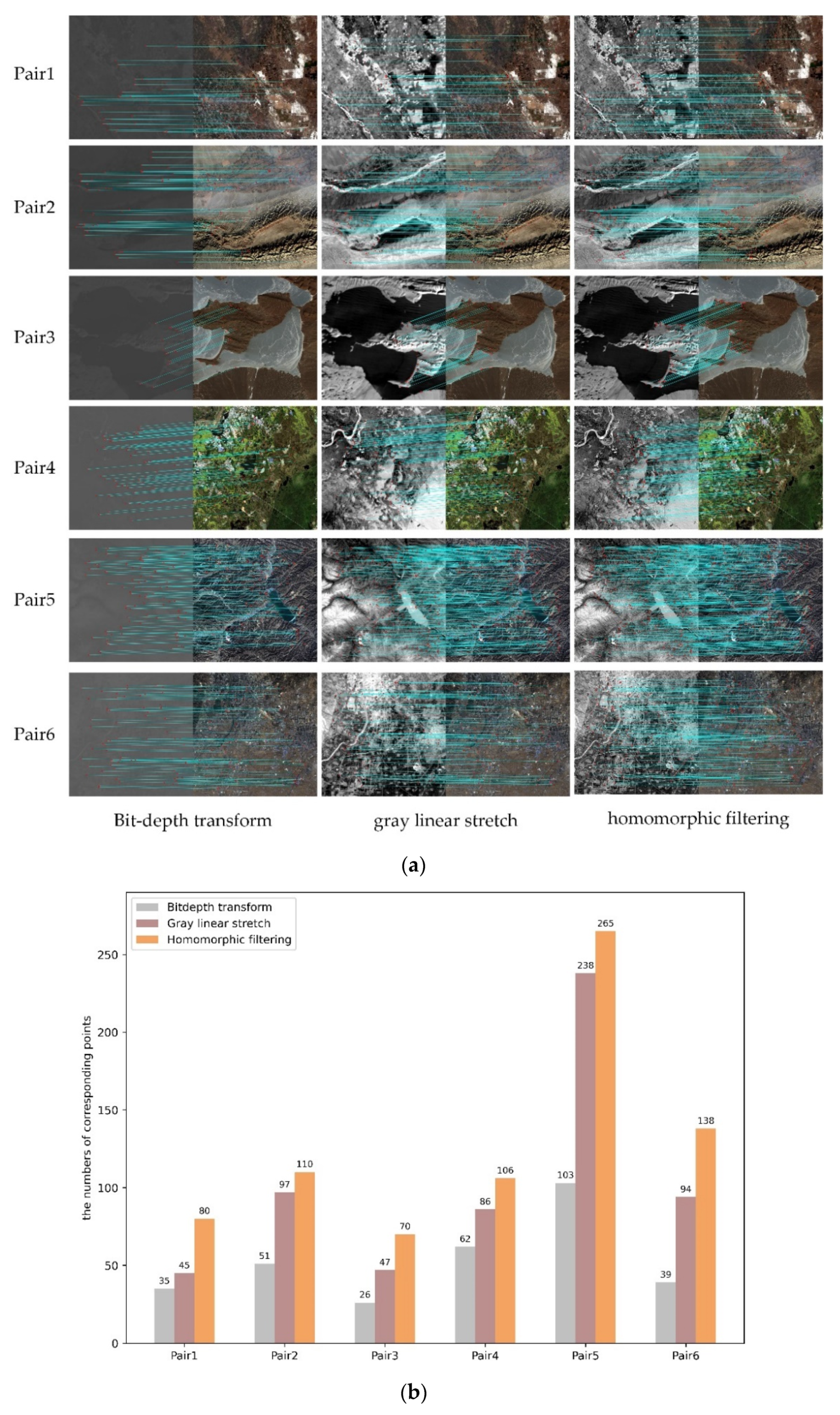

4.1. Performance of Homomorphic Filtering

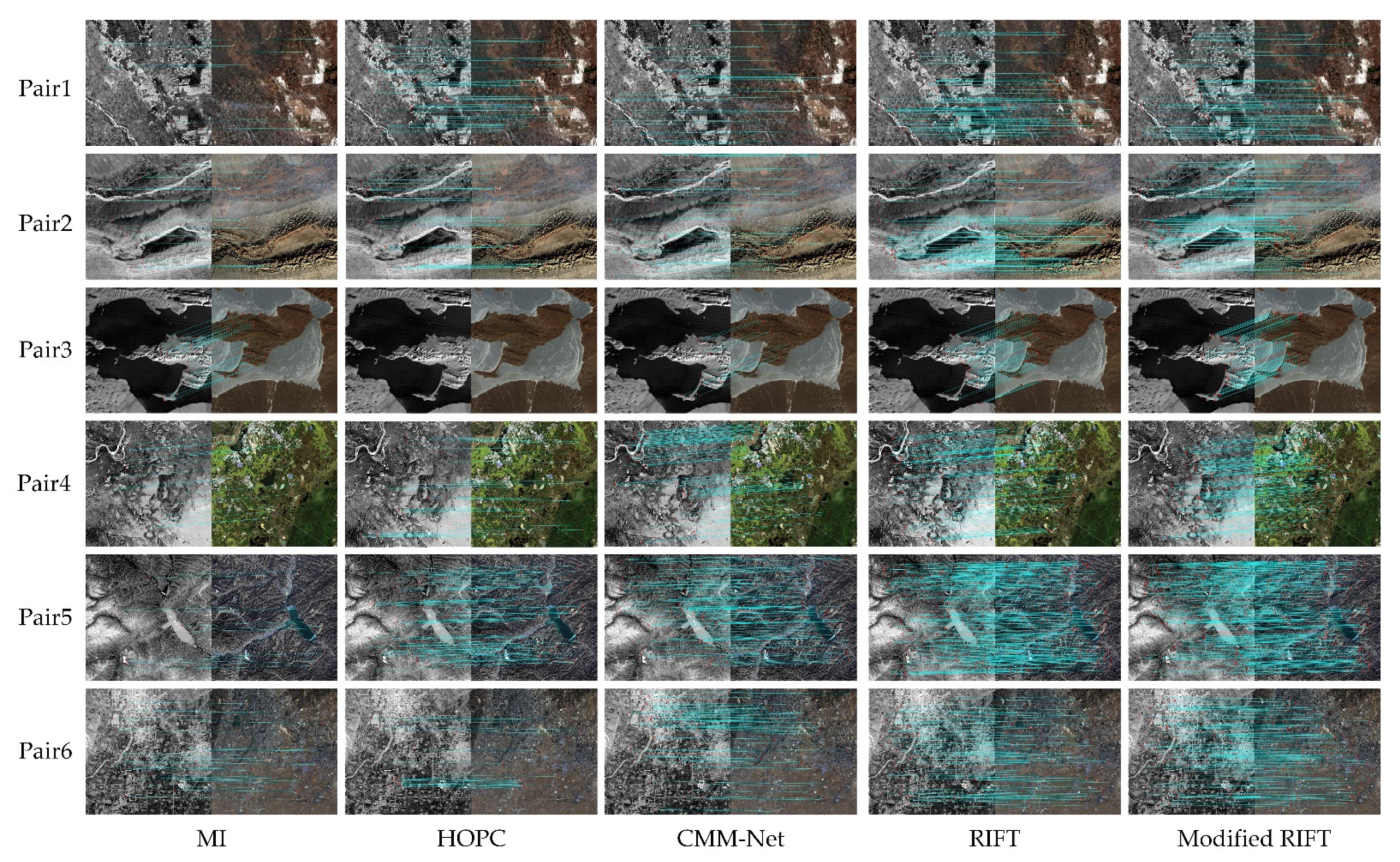

4.2. Performance of the Modified RIFT

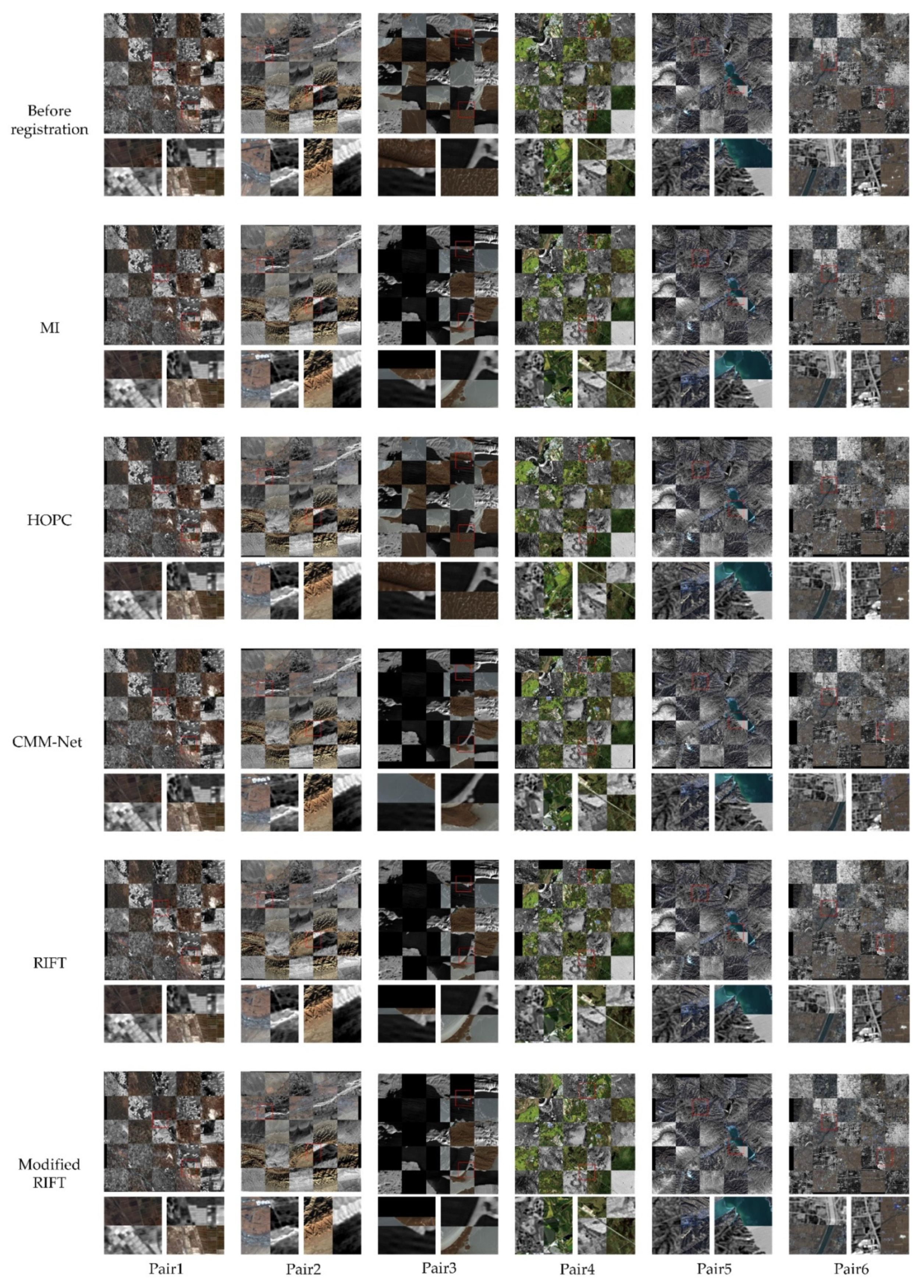

4.3. Qualitative Analysis of the Registration Accuracy

4.4. Quantitative Analysis of the Orthorectification Accuracy

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensor | Platform | Wavelengths (μm) | Resolution (m) | Swath Width (km) | Altitude (km) | Imaging Method |
|---|---|---|---|---|---|---|
| TIS | SDGSAT-1 | 8.0–10.5, 10.3–11.3, 11.5–12.5 | 30 | 300 | 505 | Whisk broom |
| VIMS | GF-5 | 8.01–8.39, 8.42–8.83, 10.3–11.3, 11.4–12.5 | 40 | 60 | 705 | Push broom |
| IRS | HJ-1B | 10.5–12.5 | 300 | 720 | 649 | - |
| IRMSS | CBERS-04 | 10.4–12.5 | 80 | 120 | 778 | Push broom |
| TIRS | Landsat-8/9 | 10.6–11.2, 11.5–12.5 | 100 | 185 | 705 | Push broom |
| ASTER | TERRA | 8.13–8.48, 8.47–8.83, 8.93–9.28, 10.25–10.95, 10.95–11.65 | 90 | 60 | 705 | Push broom |

| Image Pair | Images | Acquired Date | Type | Position |
|---|---|---|---|---|
| Pair1 | TIR (SDGSAT-1); Visible (Landsat-8) | 15 December 2021; 18 November 2021 | Farmland | Aksu, Xinjiang, China |
| Pair2 | TIR (SDGSAT-1); Visible (Landsat-8) | 15 December 2021; 13 December 2021 | Plateau | Aksu, Xinjiang, China |
| Pair3 | TIR (SDGSAT-1); Visible (Landsat-8) | 14 December 2021; 12 December 2021 | Lake | Ulan Ul Lake, Qinghai, China |
| Pair4 | TIR (SDGSAT-1); Visible (Landsat-8) | 20 December 2021; 18 September 2021 | Plain | Northeast Plain, China |
| Pair5 | TIR (SDGSAT-1); Visible (GF1-WFV) | 2 January 2022; 26 December 2021 | Mountain | Taiyuan, Shanxi, China |
| Pair6 | TIR (SDGSAT-1); Visible (GF1-WFV) | 2 January 2022; 16 December 2021 | City | Taiyuan, Shanxi, China |

| Image Pair | MI | HOPC | CMM-Net | RIFT | Modified RIFT |
|---|---|---|---|---|---|
| Pair1 | 8 | 54 | 35 | 82 | 80 |
| Pair2 | 14 | 30 | 44 | 96 | 110 |
| Pair3 | 28 | 0 | 14 | 52 | 70 |
| Pair4 | 16 | 30 | 73 | 89 | 106 |
| Pair5 | 13 | 90 | 122 | 260 | 265 |
| Pair6 | 28 | 23 | 70 | 106 | 138 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, J.; Cheng, B.; Zhang, X.; Long, T.; Chen, B.; Wang, G.; Zhang, D. A TIR-Visible Automatic Registration and Geometric Correction Method for SDGSAT-1 Thermal Infrared Image Based on Modified RIFT. Remote Sens. 2022, 14, 1393. https://doi.org/10.3390/rs14061393
